Abstract

In an era of exponential data growth, ensuring high data quality has become essential for effective, evidence-based decision making. This study presents a structured and comparative review of the field by integrating data classifications, quality dimensions, assessment methodologies, and modern software tools. Unlike earlier reviews that focus narrowly on individual aspects, this work synthesizes foundational concepts with formal frameworks, including the Findable, Accessible, Interoperable, and Reusable (FAIR) principles and the ISO/IEC 25000 series on software and data quality. It further examines well-established assessment models, such as Total Data Quality Management (TDQM), Data Warehouse Quality (DWQ), and the Heterogeneous Data Quality Methodology (HDQM), and critically evaluates commercial platforms in terms of functionality, AI integration, and adaptability. A key contribution lies in the development of conceptual mappings that link data quality dimensions with FAIR indicators and maturity levels, offering a practical reference model. The findings also identify gaps in current tools and approaches, particularly around cost-awareness, explainability, and process adaptability. By bridging theory and practice, the study contributes to the academic literature while offering actionable insights for building scalable, standards-aligned, and context-sensitive data quality management strategies.

1. Introduction

1.1. Background and Scope of the Study

Data are carriers of information that are gathered, processed, and used to support decision making across a variety of industries [1]. In the digital age, professionals routinely handle massive volumes of both structured and unstructured data, which has made effective storage and retrieval increasingly valuable. Data handling infrastructure has changed dramatically: in the 1980s, data centers mainly supported business operations, whereas by 2000 they had enabled widespread access to computing and the World Wide Web (WWW). After 2010, cloud computing and faster internet connections further increased data accessibility [1].

The International Organization for Standardization (ISO) standard ISO 9000:2015 defines quality as the degree to which a set of inherent characteristics fulfils requirements [2]; applied to data, this means being both defect-free and fit for purpose [3]. Quality requirements may derive from regulations, policies, or user needs [4,5,6]. Modern technologies have also made data collection commonplace, from corporate knowledge management [7] to personal health tracking [8,9]. This proliferation, however, brings quality issues ranging from simple errors in contact lists to skewed statistical analyses [10,11], and poor data quality strongly affects subsequent processing and the conclusions drawn from it [12,13].

Although data quality issues have been formally recognized since at least 1986 [14], when data were first framed as a strategic organizational asset, their urgency and complexity have intensified in today’s landscape of AI-driven analytics, large-scale data ecosystems, and regulatory scrutiny. Consequently, thorough analysis and systematization of the topic have emerged in the literature only recently [12,13]. The field seeks to ensure data integrity and utility for all parties involved in data creation, analysis, and application [14]. Ideally, quality assurance should begin at data collection, using validation rules and range checks [15].

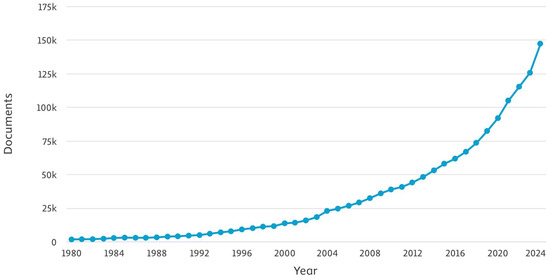

As Figure 1 shows, research on data quality has grown rapidly over the last 20 years; in 2024 alone, almost 150,000 published documents addressed the topic.

Figure 1.

Documents per year containing the term “Data Quality” in the title–abstract–keywords based on Scopus statistics.

Based on this upward trend in data quality research, this study aims to provide a structured and comparative synthesis of key developments in the field. The analysis focuses on the following four core areas: (i) types of data and associated quality issues; (ii) quality dimensions and formal standards such as the Findability, Accessibility, Interoperability, and Reusability (FAIR) principles and International Organization for Standardization/International Electrotechnical Commission (ISO/IEC) 25000 [16]; (iii) research-based methods for data quality assessment; and (iv) contemporary software solutions supporting data quality management. The central objective is to bridge theoretical classifications, normative standards, and applied technologies to offer an integrated perspective on data quality governance. In doing so, the study addresses the following question: how can established quality standards, assessment methodologies, and software platforms be effectively combined to support robust and context-sensitive data quality management across domains?

1.2. Data Classification and Quality Problem Taxonomy

Real-world objects are represented by data, which can be stored, retrieved, processed by software programs, and sent over networks [17]. Scholars in the field of data quality research have developed a number of methodical approaches to data classification. The most basic classification separates data into the following three categories according to their structural features, as depicted in Table 1: semi-structured data with partial organizational frameworks; unstructured data with sequences of symbols usually encoded in natural language or binary formats; and structured data with defined schemas and domains [18,19]. Recent advances also necessitate the inclusion of real-time and streaming data as a special class of structured or semi-structured data. These data types are continuously generated by devices such as Internet of Things (IoT) sensors, transaction processing systems, or monitoring platforms, and often require low-latency quality checks and integration pipelines. Common applications include anomaly detection in industrial equipment, stock market data feeds, and healthcare monitoring systems [20,21,22].
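The low-latency quality checks required for streaming data can be illustrated with a minimal sketch. It assumes a single numeric sensor stream and uses a rolling z-score rule; the window size and the 3.0 threshold are illustrative assumptions, not values taken from the cited studies.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, Iterator, Tuple


def rolling_zscore_flags(
    readings: Iterable[float], window: int = 20, threshold: float = 3.0
) -> Iterator[Tuple[float, bool]]:
    """Yield (reading, is_anomalous) pairs as readings arrive.

    A reading is flagged when it deviates from the mean of the recent
    baseline by more than `threshold` standard deviations. The window size
    and threshold are illustrative assumptions.
    """
    history: deque = deque(maxlen=window)
    for value in readings:
        flagged = False
        # Only score once the window is full, so the baseline is stable.
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged = True
        # Flagged readings are kept out of the baseline so a spike does not inflate it.
        if not flagged:
            history.append(value)
        yield value, flagged
```

Because the function is a generator, each reading is scored as soon as it arrives, which matches the low-latency requirement; a production pipeline would typically run such checks inside a stream-processing framework rather than in plain Python.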

Table 1.

Categories of data based on their structure. Definitions and examples are synthesized from established data management frameworks and studies, including [17,18,19,20,21,22].

An alternative data classification framework adopts a production-oriented perspective, distinguishing among the following three key stages of data transformation [19]: raw data items, which are the atomic and unprocessed inputs; component data items, which are intermediate representations derived through cleaning, aggregation, or transformation; and information products, which are finalized outputs ready for use in analysis or decision making. This model aligns with established data life-cycle concepts in frameworks, such as McGilvray’s 10-step quality methodology [23], TDQM [24], and ISO/IEC 11179 [25], and is summarized in Table 2.

Table 2.

Types of data based on the production phase (processing stage). Definitions and examples are synthesized from data life cycle and quality frameworks, including [4,19,23,24,25].

Quality assurance techniques map naturally onto these stages. For example, raw data may be validated through range checks and outlier detection (e.g., via TDQM or ISO/IEC 11179 metadata registries), while component items are assessed through consistency checks, aggregation audits, or reconciliation. Information products typically undergo final quality scoring and reporting based on organizational standards and KPIs [4,23,24].
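The stage-specific checks described above can be sketched for hypothetical daily sales records: raw items receive a range check, a component item (a per-store total) is reconciled against the raw items it was derived from, and the information product receives a simple pass-rate score. The record layout, the valid range, and the scoring rule are all illustrative assumptions.

```python
from typing import Dict, List, Tuple

# Hypothetical raw data item: (store_id, sale_amount)
RawItem = Tuple[str, float]


def range_check(raw: List[RawItem], low: float = 0.0, high: float = 10_000.0) -> List[RawItem]:
    """Raw stage: keep only items whose amount lies in [low, high]."""
    return [item for item in raw if low <= item[1] <= high]


def aggregate_by_store(raw: List[RawItem]) -> Dict[str, float]:
    """Component stage: derive per-store totals from validated raw items."""
    totals: Dict[str, float] = {}
    for store, amount in raw:
        totals[store] = totals.get(store, 0.0) + amount
    return totals


def reconcile(raw: List[RawItem], totals: Dict[str, float], tol: float = 1e-6) -> bool:
    """Aggregation audit: component totals must sum to the raw total."""
    return abs(sum(a for _, a in raw) - sum(totals.values())) <= tol


def product_score(n_raw: int, n_valid: int, reconciled: bool) -> float:
    """Information product stage: share of valid raw items, zeroed if the audit fails."""
    if n_raw == 0 or not reconciled:
        return 0.0
    return n_valid / n_raw
```

For example, four raw sales with one negative amount would yield a score of 0.75, provided the per-store totals reconcile with the validated raw items.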

Considering how quality is attained and how accurately it can be measured reveals further classification schemes. Elementary data are discrete real-world attributes, such as demographics, that are managed through operational procedures. Aggregate data, by contrast, result from computational operations on elementary data; average income per geographic region is a typical example [17].

Data quality problems can be systematically categorized based on their origin (single-source vs. multi-source) and granularity (schema-level vs. instance-level). Single-source problems arise within isolated systems and may include structural issues (e.g., lack of referential integrity) or individual anomalies (e.g., typos and duplicates). Multi-source problems, on the other hand, result from the integration of heterogeneous systems, often manifesting as inconsistencies in structure, semantics, or data values across platforms. This widely adopted classification, reflected in multiple studies [15,17,19,26], is summarized in Table 3.

Table 3.

Data quality problem taxonomy. Definitions and examples are synthesized from foundational studies in data quality assessment and integration, including [15,17,19,26].

For instance, in a hospital information system, single-source problems may include multiple patient entries due to inconsistent ID formats or missing blood pressure readings. In multi-source integration between hospitals and insurance providers, mismatched codes for treatment categories or different temporal resolutions often cause aggregation errors or false positives in billing audits [27]. In public transport systems, schema-level integration of route data from municipal and private operators may result in format conflicts, while instance-level synchronization mismatches lead to incorrect timetable predictions [20,21].
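To make the single-source hospital case concrete, the sketch below normalizes patient identifiers entered in inconsistent formats (e.g., `P-00123` vs. `p123`) and then reports duplicate identities and missing blood pressure readings. The ID convention and the field names `patient_id` and `systolic_bp` are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List, Optional


def normalize_patient_id(raw_id: str) -> str:
    """Map variants such as 'P-00123', 'p123', ' P 123 ' to a canonical 'P123'.

    Hypothetical convention: a 'P' prefix followed by the numeric part without
    leading zeros. Dropping every non-digit is a deliberate simplification.
    """
    digits = re.sub(r"\D", "", raw_id)
    return f"P{int(digits)}" if digits else raw_id.strip().upper()


def profile_records(records: List[Dict[str, Optional[object]]]) -> Dict[str, object]:
    """Report duplicate patients (after normalization) and missing BP readings."""
    ids = [normalize_patient_id(str(r["patient_id"])) for r in records]
    counts = Counter(ids)
    duplicates = sorted(pid for pid, n in counts.items() if n > 1)
    missing_bp = sum(1 for r in records if r.get("systolic_bp") is None)
    return {"duplicates": duplicates, "missing_bp": missing_bp}
```

Normalizing before counting is what exposes the duplicate: compared as raw strings, `P-00123` and `p123` would look like two different patients.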

By matching data applications with their specific quality requirements, this classification framework plays a crucial role in enabling more targeted quality assurance [15].

Although the frameworks above are among the most widely used taxonomies in data quality research and practice, additional classification dimensions may account for application domains or technological contexts. The existence of several classification schemes highlights the complexity of data as an information resource and the importance of context in selecting appropriate quality metrics.

1.3. Dimensions of Data Quality

According to the ISO standard approach, quality is the totality of attributes that enable an organization to satisfy both explicit and implicit requirements [28]. Quality dimensions therefore provide quantifiable characteristics for evaluating and controlling the quality of data and information [23]. This dimensional approach serves both as a diagnostic tool and as an improvement framework, allowing analysts and developers to apply specialized quality assessment tools for data refinement and process optimization.

The data quality literature, including this study, draws on several conceptual frameworks for understanding and assessing quality attributes. Through methodical examination of information systems, researchers have investigated and defined a multitude of quality dimensions, as shown in Table 4. The dimensions listed in Table 4 are synthesized from multiple sources in the field of data quality assessment [4,19,26,29,30]. Several of these dimensions have also been operationalized in practical settings. For example, completeness, accuracy, and timeliness are the most frequently assessed attributes in public health data systems [31]. Timeliness and currency have been central quality concerns in financial reporting infrastructures [32]. Meanwhile, interpretability and ease of manipulation have been evaluated in open government data initiatives, where metadata quality directly affects data use and user experience [33].

Table 4.

Data quality dimensions and definitions. The dimensions and descriptions are synthesized from widely cited frameworks and studies on data quality, including [4,19,26,29,30]. The four basic categories of dimensions, namely Intrinsic, Contextual, Representational, and Accessibility, are shown in bold.

Wand and Wang [29] established four main categories of quality dimensions: Intrinsic (relating to inherent data characteristics), Contextual (relevance to the task), Representational (clarity of presentation), and Accessibility (ease of data retrieval). The framework also outlined fifteen distinct aspects of information quality, such as objectivity, reputation, dependability, and value-added. Later studies have extended this taxonomy with further dimensions, such as identification availability, traceability, and data validation.

The information systems perspective prioritizes operational aspects such as reliability, accuracy, relevance, usability, and system independence. The accounting and control perspective emphasizes three main aspects: accuracy, temporal validity, and relevance. This framework particularly highlights the importance of internal control systems that balance cost effectiveness against high reliability across crucial parameters such as data accuracy, update frequency, and volume management [34].

The remainder of this paper is structured as follows: Section 2 explains the methodology followed in this study. Section 3 examines the 2021 European Guidelines on data quality dimensions and the ISO 25000 Data Quality standard. Section 4 reviews contemporary data analysis methods drawn from international literature across both research and regulatory domains, and Section 5 evaluates modern data quality software solutions through comparative analysis. Section 6 provides a discussion of the key findings, while Section 7 outlines the study’s contributions and limitations and proposes directions for future research and practical implementation.

2. Methodology

This study adopts a structured narrative review methodology to synthesize theoretical foundations, regulatory frameworks, and technological implementations in the field of data quality. This approach was selected to enable an integrative view that bridges technical standards, assessment models, and software solutions while allowing for conceptual interpretation and cross-domain comparison.

The literature was identified through a targeted search of reputable academic databases, including Scopus, Web of Science, and Google Scholar, using keywords such as “data quality”, “FAIR principles”, “ISO 25000”, “data quality assessment”, and “data quality tools”. The search focused on the period 2000–2024, with earlier publications retained if highly cited or foundational to the field. The sources considered include peer-reviewed research papers, review articles, international standards, technical guidelines, and official policy documents. Emphasis was placed on selecting materials that span academic, regulatory, and industry domains.

The selected studies were analyzed and categorized along multiple dimensions, as follows: (i) the type of data addressed (structured, semi-structured, and unstructured); (ii) the methodological approach (e.g., rule-based, statistical, and machine learning-enhanced); (iii) the type of quality dimensions assessed (intrinsic, contextual, representational, and accessibility); and (iv) alignment with reference frameworks such as the FAIR principles and the ISO/IEC 25000 family of standards.

In parallel, key software tools were examined based on publicly available documentation, technical specifications, and vendor white papers. Where possible, their functionality was mapped to formal quality criteria, standards-based metrics, and organizational use cases.

The findings from these various sources were synthesized thematically into the core sections of the paper, as follows: foundational concepts and standards, methodological approaches, software tools and comparative evaluations, and implications for practice. By triangulating among conceptual frameworks, empirical methodologies, and practical implementations, the review aims to offer a comprehensive and actionable understanding of the current landscape of data quality management.

3. Regulatory Frameworks for Data Quality

This section critically examines two foundational regulatory frameworks—FAIR principles and the ISO/IEC 25000 series—not only in terms of their conceptual structure but also regarding their practical implications, implementation challenges, and potential synergies in real-world data quality management.

3.1. The 2021 European Directive on FAIR Principles

The European Guidelines 2021 [35] are a significant policy framework that sets comprehensive standards for data quality management across member states. At its core, the directive operationalizes the FAIR principles through twelve distinct quality indicators that provide quantifiable criteria for data stewardship. This multifaceted strategy addresses both the organizational and the technical requirements for optimal data use in the public sector and in research settings.

The findability dimension rests on two main components that form the essential framework for data discovery. The first is metadata completeness: datasets must carry comprehensive descriptive information, comparable to the records of traditional library catalog systems. This includes standard identifiers for digital objects (titles, creators, and dates) as well as domain-specific descriptors that allow accurate retrieval. According to the directive, thorough metadata serve two functions: they facilitate initial discovery, and they enable users to judge relevance before accessing the actual data [35]. The second crucial component is the systematic handling of missing values through standardized null markers (“NULL”, “NA”, or domain-specific conventions). This prevents a common misunderstanding in which blank entries are read as unprocessed data rather than as intentionally missing values. The guidelines further recommend a structural review when null values exceed 15–20% of any data field, as this may indicate fundamental flaws in data collection or design [35].
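The missing-value recommendation can be made operational with a short profiling routine. The sketch below assumes records held as Python dictionaries; it first maps heterogeneous null markers to a single representation and then flags fields whose null share exceeds a review threshold. The marker set and the 0.15 threshold (the lower end of the 15–20% range) are assumptions chosen for illustration.

```python
from typing import Dict, List, Optional

# Assumed set of markers that all mean "intentionally missing".
NULL_MARKERS = {"", "NULL", "NA", "N/A"}


def standardize_nulls(record: Dict[str, Optional[str]]) -> Dict[str, Optional[str]]:
    """Replace None or any recognized null marker (case-insensitive) with None."""
    return {
        key: None if value is None or value.strip().upper() in NULL_MARKERS else value
        for key, value in record.items()
    }


def fields_needing_review(
    records: List[Dict[str, Optional[str]]], threshold: float = 0.15
) -> Dict[str, float]:
    """Return {field: null_ratio} for fields whose null ratio exceeds `threshold`.

    A field absent from a record is counted as null for that record.
    """
    if not records:
        return {}
    cleaned = [standardize_nulls(r) for r in records]
    fields = {key for r in cleaned for key in r}
    ratios = {
        f: sum(1 for r in cleaned if r.get(f) is None) / len(cleaned) for f in fields
    }
    return {f: ratio for f, ratio in ratios.items() if ratio > threshold}
```

Treating "NA" as a null marker is itself a convention that must be agreed per field; in a country-code column, for instance, "NA" is a legitimate value (Namibia).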

The directive’s accessibility standards cover both technical and policy issues. Technically, digital resources require dependable, long-lasting access mechanisms, with particular emphasis on uniform resource locator (URL) stability. From a policy standpoint, the framework makes open access the norm and strongly discourages access barriers unless they are required by law. Notably, the guidelines distinguish between obstructive requirements that unnecessarily restrict use and necessary authentication (for instance, for sensitive health data), and they offer precise criteria for determining whether access limitations comply with the proportionality principle of European Union (EU) law [35].

The directive takes a three-tiered approach to interoperability. Foundational formatting standards, such as ISO 8601 [36] for dates and Unicode Transformation Format (UTF)-8 for character encoding, guarantee basic machine-readability. At the intermediate level, structural requirements govern dataset organization, including guidelines for metadata schemas and application programming interface (API) design.
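The foundational formatting tier can be checked mechanically. The following sketch tests whether raw bytes decode as UTF-8 and whether a date string parses with Python's `datetime.fromisoformat`. This is an approximation of ISO 8601 conformance, not a full validator: Python 3.11 and later accept most ISO 8601 forms (but not, for example, reduced-precision dates such as `2024-03`), while earlier versions accept only the formats produced by `isoformat()`.

```python
from datetime import datetime


def is_utf8(raw: bytes) -> bool:
    """True if the byte string is valid UTF-8."""
    try:
        raw.decode("utf-8")
        return True
    except UnicodeDecodeError:
        return False


def is_iso8601_date(value: str) -> bool:
    """True if `value` parses with datetime.fromisoformat (approximate ISO 8601 check)."""
    try:
        datetime.fromisoformat(value)
        return True
    except ValueError:
        return False
```

For example, `"2024-03-15"` passes, whereas the locale-dependent `"15/03/2024"` fails, which is exactly the kind of ambiguity the ISO 8601 convention removes.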

Originally proposed by Tim Berners-Lee [37], the Five-Star Open Data model outlines a maturity path for publishing structured, open, and linked data on the web. Building on this model and synthesizing principles from FAIR and data quality frameworks [30,38,39], Table 5 maps each star level to the corresponding FAIR principle(s) and key data quality dimensions. The analysis shows that data become progressively more Findable, Accessible, Interoperable, and Reusable as they move up the five levels, while quality attributes such as completeness, accuracy, consistency, and contextual relevance improve in parallel. This mapping clarifies how data openness supports both quality and FAIR compliance in modern data governance frameworks.
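Because each star presupposes the previous ones, a dataset's level can be computed cumulatively. The sketch below encodes the five criteria as commonly stated for Berners-Lee's scheme (available on the web under an open license, structured data, non-proprietary format, URIs to identify things, links to other data). The attribute names are hypothetical, and the FAIR and quality mappings of Table 5 are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class DatasetProfile:
    """Hypothetical self-description of a published dataset."""
    on_web_open_license: bool  # 1 star: on the web under an open license
    structured: bool           # 2 stars: machine-readable structured data
    non_proprietary: bool      # 3 stars: open, non-proprietary format (e.g., CSV)
    uses_uris: bool            # 4 stars: URIs identify things
    linked: bool               # 5 stars: linked to other data for context


def star_level(p: DatasetProfile) -> int:
    """Count stars cumulatively, stopping at the first unmet criterion."""
    criteria = [p.on_web_open_license, p.structured, p.non_proprietary, p.uses_uris, p.linked]
    level = 0
    for met in criteria:
        if not met:
            break
        level += 1
    return level
```

The early `break` captures the maturity-path idea: a dataset with URIs but no open license still earns zero stars.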

Table 5.

Alignment of the Five-Star Open Data model with the FAIR principles and key quality dimensions.

The reusability provisions are notable for addressing both short-term usability and long-term sustainability. Temporal quality metrics require not only current data but also documented update frequencies and change logs. Structural standards counter “data decay” through validation guidelines and commitments to format stability. The guidelines also recommend pagination protocols for large datasets and present a novel relevance assessment matrix that considers system capabilities as well as user needs. Beyond simple metadata, the documentation requirements cover processing histories, known limitations, and suggested use cases, resulting in what the directive refers to as “data provenance passports” [35].
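The combination of a documented update frequency, a change log, and provenance notes lends itself to a simple machine-readable record. The sketch below is a hypothetical structure for such a "data provenance passport"; the field names and the overdue rule (last logged change older than the documented update interval) are assumptions, not a schema defined by the guidelines.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Tuple


@dataclass
class ProvenancePassport:
    """Hypothetical provenance record accompanying a published dataset."""
    dataset: str
    update_interval_days: int  # documented update frequency
    change_log: List[Tuple[date, str]] = field(default_factory=list)
    processing_history: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)
    suggested_uses: List[str] = field(default_factory=list)

    def last_update(self) -> date:
        """Most recent change-log date (raises ValueError if the log is empty)."""
        return max(d for d, _ in self.change_log)

    def is_overdue(self, today: date) -> bool:
        """True if no change was logged within the documented update interval."""
        return today - self.last_update() > timedelta(days=self.update_interval_days)
```

Keeping the update interval and the change log in the same record lets a temporal quality check run without consulting any external documentation.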

Coordination among multiple stakeholder groups is necessary for the implementation of the FAIR framework. Data management plans and training programs for researchers must incorporate FAIR principles, while public sector organizations are expected to modernize their infrastructure, particularly legacy systems. In recognition of these operational challenges, the directive sets a phased compliance timeline, with the majority of provisions expected to be fully implemented by 2027 [38]. Monitoring mechanisms, such as self-assessment tools and centralized reporting to the European Commission, aim to ensure consistent application while allowing for domain-specific adaptations.

Table 6 presents a synthesized mapping of selected data quality dimensions to the four FAIR principles. Derived from the broader set of dimensions listed in Table 4, these were selected based on established frameworks, including ISO/IEC 25012 [30], the European Commission FAIR Guidelines (2021) [38], and Wilkinson et al. (2016) [39]. Each dimension is associated with the FAIR principle(s) it most directly supports. For example, machine-readability aligns with findability, accessibility, and interoperability, while accuracy and understandability contribute primarily to reusability. This structured mapping helps clarify how specific quality characteristics support FAIR-aligned data management and stewardship practices.
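A mapping such as Table 6 can also be kept as a small lookup structure so that quality checks can be grouped by the FAIR principle they support. The sketch below encodes only the three examples given in the text (with accuracy and understandability mapped to reusability alone, following "primarily"); the remaining rows of Table 6 would be added in the same way.

```python
from typing import Dict, Set

# Only the examples stated in the text; other Table 6 rows are omitted.
DIMENSION_TO_FAIR: Dict[str, Set[str]] = {
    "machine-readability": {"F", "A", "I"},
    "accuracy": {"R"},
    "understandability": {"R"},
}


def dimensions_supporting(principle: str) -> Set[str]:
    """Invert the mapping: which quality dimensions support a FAIR principle?"""
    return {dim for dim, principles in DIMENSION_TO_FAIR.items() if principle in principles}
```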

Table 6.

Mapping of selected data quality dimensions to the FAIR principles.

3.2. The ISO 25000 Standard on Data Quality

The ISO 25000 series is the international standard for systematic data quality evaluation, offering organizations a thorough framework for assessing and improving their information assets. Fundamentally, the standard defines data quality as the extent to which intrinsic properties allow explicit and implicit requirements to be satisfied [28]. This operational definition connects theoretical concepts with real-world application through two complementary elements: ISO/IEC 25012 for quality characteristics and ISO/IEC 25024 for measurement methodologies [30,40].

ISO/IEC 25012’s taxonomy of quality characteristics distinguishes between inherent and system-dependent properties. Inherent characteristics capture the essential qualities of the data themselves, regardless of the technology used. They comprise accuracy (veracity of representation), completeness (presence of all expected values), consistency (logical coherence), credibility (perceived trustworthiness), and currentness (temporal relevance) [30]. To enable uniform application across domains, Table 7 offers comprehensive definitions and operational criteria for these attributes. System-dependent characteristics, on the other hand, arise from particular technical implementations and require contextual assessment specific to organizational infrastructures.

Table 7.

Data quality characteristics defined in ISO/IEC 25012.

The measurement framework of ISO/IEC 25024, which converts intangible quality concepts into measurable metrics, is essential to the standard’s practical applicability. Each characteristic is associated with precisely defined measurable properties. For example, the Data Accuracy Range (RAN_EXAC) property yields a standardized score on a 0.0–1.0 scale, computed as the ratio of values within the specified range to the total number of records evaluated [40].
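The ratio-style measure described above can be written as X = A / B, where A is the number of values within the specified range and B is the total number of values evaluated. A minimal sketch follows; the function name, the inclusive bounds, and returning `None` for empty input are assumptions rather than details taken from ISO/IEC 25024.

```python
from typing import Iterable, Optional


def accuracy_range_score(values: Iterable[float], low: float, high: float) -> Optional[float]:
    """Share of values within [low, high], on a 0.0-1.0 scale.

    Returns None when there is nothing to measure, rather than guessing a score.
    """
    total = 0
    in_range = 0
    for v in values:
        total += 1
        if low <= v <= high:
            in_range += 1
    return in_range / total if total else None
```

For instance, with body temperatures in °C and an assumed plausible range of 35.0 to 42.0, the readings 36.5, 37.0, 42.9, and 45.0 score 0.5.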

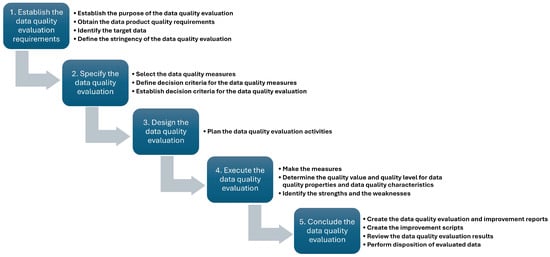

A structured five-phase process guides the implementation of data quality assessments, as illustrated in Figure 2. The process begins with collaborative requirement specification, in which stakeholders define the objectives, business rules, and quality thresholds relevant to the data product (Phase 1). Next, quality measures are selected, and decision criteria are established to evaluate the targeted data (Phase 2). In Phase 3, the evaluation plan is designed, including activity scheduling and stakeholder assignments.

Figure 2.

Five-phase data quality assessment process based on ISO/IEC 25024. This framework outlines the sequential stages of requirement establishment, metric specification, evaluation design, execution, and conclusion. It serves as a structured methodology for ensuring systematic and repeatable data quality evaluation across domains.

Phase 4, the execution stage, applies the defined measures and determines data quality levels across the relevant dimensions. This phase increasingly integrates machine learning tools to support more robust diagnostics. For instance, support vector machines (SVMs) can detect outliers in electronic health records and sensor data, while the synthetic minority oversampling technique (SMOTE) can address class imbalance when evaluating completeness in marketing or IoT datasets. Recent studies illustrate the performance gains achievable with such techniques. In a credit card fraud detection context, researchers combined SMOTE with Random Forest classifiers to address class imbalance, achieving 98% accuracy and a 98% F1-score, a significant improvement over models trained on the raw, unbalanced data [41]. Similarly, for anomaly detection in structured datasets, one-class SVMs assisted by probabilistic outlier scoring have enhanced data cleansing workflows, particularly for electronic health records [27]. These cases show that machine learning techniques can improve the sensitivity and precision of quality diagnostics with measurable benefits, supporting the integration of AI-driven methods into ISO-guided data quality frameworks.
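To illustrate what SMOTE does, the sketch below implements its core step in plain Python. For each minority-class sample, it picks one of the sample's k nearest minority neighbors and creates a synthetic point at a random position on the line segment between them. This is a simplified version for small numeric datasets: cycling through samples and the default k = 3 are assumptions, and the sampling strategies of production libraries are omitted.

```python
import math
import random
from typing import List, Sequence

Point = List[float]


def smote(
    minority: Sequence[Point], n_synthetic: int, k: int = 3, seed: int = 0
) -> List[Point]:
    """Create `n_synthetic` minority-class points by neighbor interpolation."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)  # seeded for reproducible output
    k = min(k, len(minority) - 1)
    synthetic: List[Point] = []
    for i in range(n_synthetic):
        idx = i % len(minority)  # cycle through the minority samples
        base = minority[idx]
        # k nearest minority neighbors of `base`, excluding itself (Euclidean distance)
        neighbors = sorted(
            (p for j, p in enumerate(minority) if j != idx),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # random position on the segment, in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic
```

The synthetic points would be added to the minority class before training. In practice, an established implementation such as the `SMOTE` class in imbalanced-learn would normally be preferred over a hand-written version.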

Finally, Phase 5 focuses on concluding the assessment by reporting findings, recommending improvements, and executing data disposition actions. Reports typically include quality scores derived from weighted aggregation and offer actionable feedback for enhancing low-performing dimensions. The process emphasizes iterative refinement, enabling adaptive learning and long-term quality improvement across domains [42,43].
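The weighted aggregation used in Phase 5 reports can be sketched as follows. The sketch assumes that each dimension already has a score between 0 and 1 (for example, from Phase 4 measures) and that stakeholders agreed on weights and a minimum acceptable score in Phases 1 and 2. The dimension names, weights, and the 0.8 threshold are illustrative only.

```python
from typing import Dict, List, Tuple


def aggregate_quality(
    scores: Dict[str, float], weights: Dict[str, float], threshold: float = 0.8
) -> Tuple[float, List[str]]:
    """Return (weighted overall score, dimensions scoring below `threshold`).

    Weights are normalized, so they need not sum to 1.
    """
    total_weight = sum(weights[d] for d in scores)
    if total_weight <= 0:
        raise ValueError("weights must be positive")
    overall = sum(scores[d] * weights[d] for d in scores) / total_weight
    low = sorted(d for d, s in scores.items() if s < threshold)
    return overall, low
```

Returning the low-performing dimensions alongside the overall score supports the actionable feedback that Phase 5 calls for, since a high aggregate can otherwise mask a single weak dimension.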

Compared with the FAIR principles, the ISO framework is both complementary and distinct. Although both stress essential elements such as completeness and accuracy, the ISO standards provide more detailed operational criteria that are not limited to research settings. This complementarity suggests an integrated application in which ISO’s measurement rigor is used to evaluate the effectiveness of FAIR implementation in organizational settings.

While the FAIR principles and the ISO/IEC 25000 series are often presented as complementary, they serve distinct roles in the data quality ecosystem. FAIR, originating from the scientific data management community, emphasizes data sharing and reuse through metadata standards, persistent identifiers, and semantic interoperability. It is primarily concerned with making data Findable, Accessible, Interoperable, and Reusable in an open and machine-actionable manner. In contrast, the ISO/IEC 25000 series originates from the software engineering domain and focuses on measurable quality attributes, such as accuracy, completeness, consistency, currentness, and credibility, defined as inherent or system-dependent characteristics of data.

Furthermore, FAIR lacks formal quality metrics or thresholds, instead offering high-level guidance, whereas ISO/IEC 25012 (within the 25000 family) specifies concrete attributes and supports process-based quality evaluation. In practice, FAIR facilitates long-term data stewardship and open science goals, while ISO provides structured criteria for data governance, software quality assurance, and operational compliance. Together, they cover both aspirational and operational dimensions of data quality, making their integration valuable for organizations that aim to align compliance, usability, and sustainability.

Practical adoption depends on several critical success factors. Organizational commitment must sustain continuous quality monitoring, supported by specialized tools that automate metric calculation. Integration with existing governance structures aligns quality initiatives with strategic objectives, while workforce development ensures that the standard’s requirements are interpreted correctly. Through consistent characteristic definitions, the standard’s flexibility allows such integration while preserving cross-organizational comparability [43]. Beyond serving as an assessment tool, the framework offers data-intensive organizations a roadmap for developing sophisticated quality management systems that can adapt to changing regulatory and technological environments.

Despite their strengths, both the FAIR and ISO frameworks face significant implementation challenges. The FAIR principles, while conceptually powerful, often lack detailed operational guidance, making their practical uptake uneven across sectors [44,45,46]. Public research institutions typically have greater access to funding and data infrastructure to support FAIR-aligned practices. In contrast, small- and medium-sized enterprises (SMEs) and non-academic actors may struggle with the costs of metadata creation, persistent identifier management, and infrastructure modernization. Moreover, semantic interoperability, which is central to FAIR, requires expertise and standardized vocabularies that are not always readily available outside well-resourced research domains.

Likewise, the ISO 25000 series, though offering detailed metrics and processes, imposes a high implementation burden [47,48]. Many SMEs face difficulties in adopting ISO-compliant assessments because of the demand for specialized tools, technical personnel, and alignment with broader software engineering workflows. The need for extensive stakeholder coordination, repeated quality evaluations, and documentation often exceeds the operational capacity of smaller organizations. For these reasons, scalability and proportionality remain important considerations, and adapted or lightweight implementations of these frameworks may be necessary to ensure broader accessibility and impact.

In summary, while both frameworks offer valuable guidance for ensuring data quality, their application in operational contexts requires careful adaptation. The FAIR principles provide a high-level vision particularly suited to the research domain, whereas ISO/IEC 25000 offers granular metrics with broader applicability. Their integration, when approached critically and pragmatically, can support more balanced and scalable quality management strategies, as further discussed in the following sections.

4. Data Quality Assessment Methods

4.1. Methodological Foundations and Evolution

International standards addressing complementary aspects of information systems have fundamentally changed the landscape of data quality management. ISO 25000 establishes comprehensive data quality models, ISO 15504 and the CMMI framework provide process maturity benchmarks [49], and ISO 12207 and IEEE 1028 govern software verification and validation [50]. These interconnected frameworks provide a strong basis for quality assurance and have been widely accepted for more than 15 years [51]. Two strategic paradigms dominate improvement initiatives in this ecosystem. Data-driven approaches directly alter data values through methods such as record linkage and normalization; clinical trial databases, for example, systematically update outdated patient records using more recent sources. Process-driven approaches, on the other hand, restructure workflow architectures; in manufacturing supply chains, for example, embedded validation checkpoints prevent inaccurate data from entering inventory systems [52,53,54,55]. Data-driven approaches deliver immediate fixes but risk perpetuating underlying defects, whereas process-driven approaches require a larger initial investment but produce long-lasting change by eliminating root causes.
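The data-driven paradigm can be illustrated with the clinical trial example: records from an older and a newer source are normalized, linked on a matching key, and the older values are updated where the newer source provides them. The sketch below uses exact matching on a normalized name plus birth date as a deliberately simple, hypothetical linkage rule; practical record linkage usually relies on fuzzy or probabilistic matching.

```python
import unicodedata
from typing import Dict, List, Tuple


def normalize_name(name: str) -> str:
    """Lowercase, strip accents, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return " ".join(no_accents.lower().split())


def link_key(record: Dict[str, str]) -> Tuple[str, str]:
    """Hypothetical linkage key: normalized name plus ISO birth date."""
    return normalize_name(record["name"]), record["birth_date"]


def update_from_newer(old: List[Dict[str, str]], new: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Return copies of `old` records, updated with fields from linked `new` records."""
    newer_by_key = {link_key(r): r for r in new}
    updated = []
    for record in old:
        match = newer_by_key.get(link_key(record))
        merged = dict(record)
        if match is not None:
            # Newer non-empty values win; the original display name is kept.
            merged.update({k: v for k, v in match.items() if v and k != "name"})
        updated.append(merged)
    return updated
```

Normalization is what makes the link possible: "José  Pérez" and "jose perez" produce the same key, so the newer phone number replaces the outdated one.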

Data heterogeneity poses particularly challenging problems in distributed environments. Peer-to-peer (P2P) systems illustrate this with three layers of inconsistency: instance-level heterogeneity occurs when conflicting values describe the same real-world entity, structural heterogeneity arises from divergent data models (relational vs. XML), and technological heterogeneity results from incompatible vendor solutions [56]. Schema integration techniques address these problems by creating unified semantic mappings, while machine learning algorithms such as K-means clustering and support vector machines (SVMs) resolve domain-specific anomalies, such as outliers in agricultural sensor networks or inconsistencies in electronic health records [18,56,57]. Despite these developments, applications of ISO 25012 still reveal persistent gaps: companies usually lack well-defined quality requirements, rely on reactive ad hoc solutions, or narrowly prioritize dimensions such as completeness while ignoring more comprehensive quality frameworks [43].
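Instance-level heterogeneity can be detected before any resolution technique is chosen. The sketch below assumes each peer exposes its records as dictionaries sharing an `id` key (a hypothetical layout) and reports every attribute on which peers disagree about the same entity.

```python
from collections import defaultdict


def find_instance_conflicts(sources):
    """Return {(entity_id, attribute): {peer: value}} for attributes where peers disagree.

    `sources` maps a peer name to a list of records; each record is a dict with an
    "id" key identifying the real-world entity. Values must be hashable.
    """
    seen = defaultdict(dict)  # (entity_id, attribute) -> {peer: value}
    for peer, records in sources.items():
        for record in records:
            for attr, value in record.items():
                if attr != "id":
                    seen[(record["id"], attr)][peer] = value
    # More than one distinct value for the same entity attribute is a conflict.
    return {key: values for key, values in seen.items() if len(set(values.values())) > 1}
```

For instance, one peer storing "Rome" and another "Roma" for the same entity is reported as a conflict; resolving it is left to a subsequent semantic mapping or normalization step.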

A notable real-world example comes from a multinational logistics provider that adopted data quality management practices based on the ISO/IEC 25012 standard to address inconsistencies in inventory records across its European distribution centers [17,30,58]. By aligning internal metrics, such as completeness, consistency, and timeliness, with those defined by the standard, the organization was better able to identify duplicate records and gaps in order data. Research and case studies in logistics and other data-intensive sectors have shown that implementing formal data quality dimensions and automated rule-based validation at points of data entry can lead to measurable improvements in data accuracy and operational efficiency. Although specific quantitative improvements, such as a precise percentage increase in data accuracy, are rarely reported publicly, studies generally indicate that structured data quality initiatives enhance operational alignment and reduce mismatches in inventory systems.
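The three metrics named in this example can be approximated with simple ratios. The following sketch assumes inventory records are dictionaries with hypothetical field names and a `datetime` update stamp; the definitions are common illustrative ratios, not the exact ISO/IEC 25012 measurement functions.

```python
from datetime import datetime, timedelta


def completeness(records, fields):
    """Share of required field values that are present (not None and not empty)."""
    total = len(records) * len(fields)
    if total == 0:
        return 1.0
    filled = sum(1 for r in records for f in fields if r.get(f) not in (None, ""))
    return filled / total


def duplicate_rate(records, key_fields):
    """Share of records whose key repeats the key of an earlier record."""
    if not records:
        return 0.0
    keys = [tuple(r.get(f) for f in key_fields) for r in records]
    return (len(keys) - len(set(keys))) / len(keys)


def timeliness(records, ts_field, now, max_age):
    """Share of records updated no longer than `max_age` before `now`."""
    if not records:
        return 1.0
    fresh = sum(1 for r in records if now - r[ts_field] <= max_age)
    return fresh / len(records)
```

Each function returns a value in [0, 1], so results can be compared against thresholds agreed for each distribution center.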

4.2. Methodological Innovations and Domain Applications

The methodological spectrum includes techniques designed for specific data environments. With its define–measure–analyze–improve cycle, the TDQM framework establishes continuous improvement and designates specialized roles, such as information stewards, who are responsible for quality governance throughout the data life cycle [18,24,59]. The Data Warehouse Quality (DWQ) methodology targets analytical systems by coordinating metadata across conceptual, logical, and physical layers, and it develops quality measurement systems that assess design integrity, implementation effectiveness, and usage effectiveness [18,60]. Through its D2Q model, DaQuinCIS introduces data certification protocols for collaborative ecosystems, and its “quality factories” use complaint-based reputation metrics to conduct cross-organizational trust assessments [18,61].

The Heterogeneous Data Quality Methodology (HDQM) extends previous frameworks with a meta-model that maps resources to conceptual entities, addressing the challenges posed by today’s unstructured data. By distinguishing between representational fidelity and content quality, it makes it possible to evaluate the quality of social media text, image repositories, and sensor logs [62]. For instance, a government-led environmental program used HDQM to evaluate air-quality sensor networks deployed in urban areas [63,64]. HDQM’s distinction between representational and content quality was critical for assessing the accuracy of real-time PM2.5 and NOx data transmitted from multiple sensor types, and its layered resource mapping helped standardize formats across XML-based loggers and JSON sensor APIs. The approach enabled early detection of faulty sensors and outliers, improving measurement reliability by over 20% across test periods [64].
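The harmonization step in this example can be sketched as parsing both formats into a common `(sensor_id, value)` form before screening for outliers. The payload layouts below are hypothetical, and the outlier test is the median-absolute-deviation (modified z-score) rule, swapped in for illustration; it is not part of HDQM itself.

```python
import json
import statistics
import xml.etree.ElementTree as ET


def from_xml(payload):
    """Parse a hypothetical XML logger payload: <readings><r sensor=".." pm25=".."/></readings>."""
    root = ET.fromstring(payload)
    return [(r.get("sensor"), float(r.get("pm25"))) for r in root.iter("r")]


def from_json(payload):
    """Parse a hypothetical JSON API payload: [{"id": "..", "pm2_5": ..}, ...]."""
    return [(item["id"], float(item["pm2_5"])) for item in json.loads(payload)]


def flag_outliers(readings, threshold=3.5):
    """Return sensor IDs whose modified z-score (based on the MAD) exceeds `threshold`."""
    values = [v for _, v in readings]
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return []
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [s for s, v in readings if 0.6745 * abs(v - med) / mad > threshold]
```

Using the median rather than the mean keeps a single faulty sensor from distorting the baseline it is judged against.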

DAQUAVORD connects software engineering and quality management by incorporating Data Quality Software Requirements (DQSR) into viewpoint-oriented development processes. During the requirements phase, this methodology converts ISO 25012 dimensions, such as accuracy, into verifiable software specifications [65].
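A minimal sketch of what a verifiable data quality requirement might look like in code, assuming a hypothetical `DataQualityRequirement` structure that pairs an ISO 25012 dimension with an executable check. This is not DAQUAVORD's own notation, and the two example rules are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class DataQualityRequirement:
    """Hypothetical DQSR: an ISO 25012 dimension expressed as a testable predicate."""
    req_id: str
    dimension: str
    description: str
    check: Callable[[dict], bool]


def verify(requirements, records):
    """Return, per requirement, the share of records that satisfy it."""
    results = {}
    for req in requirements:
        passed = sum(1 for r in records if req.check(r))
        results[req.req_id] = passed / len(records) if records else 1.0
    return results


REQUIREMENTS = [
    DataQualityRequirement(
        "DQSR-01", "accuracy",
        "Patient age must be a plausible integer between 0 and 120.",
        lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120,
    ),
    DataQualityRequirement(
        "DQSR-02", "completeness",
        "Every record must carry a non-empty identifier.",
        lambda r: bool(r.get("id")),
    ),
]
```

Because each requirement is executable, the same specification written during the requirements phase can later serve as an acceptance test.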

Domain-specific adaptations demonstrate contextual sophistication. Healthcare systems use CIHI’s four-tiered hierarchical model, which aggregates 86 base criteria into clinical decision quality scores [18,66]. Financial institutions combine quantitative indicators with qualitative expert evaluations to identify transactional irregularities [67]. Manufacturing sectors use activity-based evaluation to correlate production line performance with the quality of product information [68]. Importantly, the Cost of Less-Than-Desired Quality (COLDQ) methodology measures hidden operational costs caused by low-quality data, showing, for example, how inaccurate supplier information causes procurement delays that can be costly for retail supply chains [62,69]. A large European retail chain applied COLDQ to quantify the cost implications of inaccurate supplier data in its procurement systems [70,71,72]. Poor-quality entries, such as outdated prices and misclassified goods, led to procurement delays and restocking inefficiencies. COLDQ’s framework allowed the firm to calculate delay-driven financial losses per product category. After integrating automated data validation rules and enhancing metadata tracking, the chain reported a clear reduction in supplier-related delays and attributed significant annual cost savings to improved data governance.
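The per-category calculation in the retail example can be sketched as follows. The order fields, the per-day delay cost, and the flag tracing a delay to bad supplier data are hypothetical inputs, and the sketch captures only the delay-cost component described here, not the full COLDQ methodology.

```python
from collections import defaultdict


def delay_cost_by_category(orders, cost_per_delay_day):
    """Sum delay-driven losses per product category for delays traced to bad data.

    `orders`: dicts with "category", "delay_days", and "bad_data" (True if the delay
    was traced to inaccurate supplier data). `cost_per_delay_day`: category -> cost.
    """
    totals = defaultdict(float)
    for order in orders:
        if order["bad_data"]:  # delays with other causes are not attributed to data quality
            category = order["category"]
            totals[category] += order["delay_days"] * cost_per_delay_day[category]
    return dict(totals)
```

Separating bad-data delays from other delays is what allows the loss to be attributed to data quality rather than to logistics in general.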

4.3. Comparative Analysis and Emerging Frontiers

Because data quality assessment is multifaceted and domain-specific, different sectors require different approaches. Table 8 shows that although generalized frameworks, such as TDQM, AIM Quality (AIMQ), and Data Quality Assessment (DQA), apply across various domains, specialized approaches have been developed for industries such as aviation, healthcare, and finance. The analysis of forty-three approaches shows clear clustering by application area (such as environmental science, big data, and construction), with accuracy and completeness consistently ranked as the most important quality dimensions across the studies. Most methodologies deliberately concentrate on a small number of critical dimensions to reduce computational complexity during evaluation and to produce clearer assessment results [18,62].

Table 8.

Domain and dimensions of quality in methods available in the literature.

As Table 8 shows, data quality dimensions in the literature exhibit both domain-driven specializations and universal constants. Across industries ranging from healthcare to construction, the core triad of accuracy, completeness, and consistency appears in more than 50% of studies, and accuracy alone appears in 81% of works, making these dimensions the non-negotiable basis for data integrity. However, there are notable contextual differences: healthcare prioritizes timeliness and privacy to support real-time clinical decisions and General Data Protection Regulation (GDPR) compliance [84,98], whereas finance prioritizes syntactic/semantic accuracy and consistency to satisfy auditing rigor [67]. Big data frameworks elevate volume and distinctness to address scalability challenges [81], while construction specifically requires uniformity and sensitivity for safety-critical measurements [83,87]. Environmental studies introduce novel dimensions such as air quality [102], linking data efficiency to ecological sustainability, a trend that reflects evolving quality paradigms beyond traditional metrics.

Despite this diversity, noticeable gaps remain. Only 9% of approaches include cost as a dimension [17,55,68,69], so operational economics is largely ignored, even in regulated industries such as finance. Similarly, only 9% of studies include process-centric attributes such as workflow efficiency and stewardship, indicating a gap between technical quality and operational impact. The complexity spectrum is wide, ranging from simple methods (such as environmental science’s use of single-dimension accuracy for sensor calibration [90]) to comprehensive enterprise frameworks such as the 36-dimension model in [69], which addresses metadata traceability and storage efficiency. In practice, a telecommunications company implemented an enterprise-wide data quality framework that incorporated over 30 dimensions, including metadata traceability, data lineage, and structural uniformity [18,103,104]. Inspired by ISO 25012 and expanded for operational needs, the model facilitated quality audits across customer records, network logs, and billing systems. The framework enabled the detection of mismatches between subscription metadata and actual usage data, which had previously caused billing inaccuracies. Post-implementation assessments revealed improved auditing accuracy and a significant reduction in customer disputes.
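The subscription-versus-usage check in the telecommunications example is essentially a cross-dataset consistency rule. The sketch below assumes hypothetical data shapes and a hypothetical plan-allowance table (`PLAN_CAPS_GB`); it is not the framework used in the cited case.

```python
# Hypothetical monthly data allowance per plan, in GB.
PLAN_CAPS_GB = {"basic": 10.0, "plus": 50.0}


def billing_mismatches(subscriptions, usage):
    """Flag customers whose recorded usage is inconsistent with their subscription metadata.

    `subscriptions`: customer_id -> {"plan": str, "active": bool}
    `usage`: customer_id -> data used (GB) in the billing period
    """
    issues = []
    for cid, used in usage.items():
        sub = subscriptions.get(cid)
        if sub is None:
            issues.append((cid, "usage without subscription record"))
        elif not sub["active"]:
            issues.append((cid, "usage on inactive subscription"))
        elif used > PLAN_CAPS_GB[sub["plan"]]:
            issues.append((cid, "usage exceeds plan allowance"))
    return issues
```

Each flagged pair names the rule that fired, which keeps the audit trail readable for billing staff.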

In addition, emerging fields such as deep learning are now incorporating machine-learning-specific metrics (such as the F1-score and precision [97]) alongside conventional dimensions, indicating a convergence between algorithmic performance standards and data quality. To move quality beyond a compliance task, future frameworks must balance domain specificity with cross-cutting priorities, especially cost control and process optimization.
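For a data-error detector evaluated against labeled records, precision, recall, and F1 can be computed as in this minimal sketch, which assumes flagged and truly erroneous records are given as sets of record IDs.

```python
def detector_scores(flagged, true_errors):
    """Precision, recall, and F1 of a data-error detector against labeled ground truth.

    Both arguments are sets of record IDs.
    """
    tp = len(flagged & true_errors)  # records correctly flagged as erroneous
    precision = tp / len(flagged) if flagged else 0.0
    recall = tp / len(true_errors) if true_errors else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Flagging records {1, 2, 3, 4} when the true errors are {2, 3, 5} gives a precision of 0.5, a recall of 2/3, and an F1 of 4/7.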

As Table 9 illustrates, method applicability differs greatly by data structure. Structured and semi-structured data dominate existing methods (such as ISTAT and Comprehensive Data Quality, CDQ) because they require less processing complexity and metadata. An important innovation is the HDQM extension to unstructured data, which introduces specific methods for text, XML, and sensor streams. Although hybrid process/data approaches have greater organizational impact, data-driven strategies (normalization, cleaning, and record linkage) are more common because of their flexible toolkits. Machine learning techniques such as SVMs, K-means, and convolutional neural networks (CNNs), together with statistical techniques, provide the analytical backbone, while specialized approaches, such as SMOTE for imbalance correction and sensor monitoring, handle domain-specific issues. Recent studies have increasingly applied artificial intelligence (AI) and machine learning techniques to enhance data quality workflows. As Table 9 shows, approaches such as data augmentation, outlier detection, semantic embeddings, and synthetic oversampling (e.g., SMOTE) are used to detect and correct data anomalies, balance class distributions, and improve metadata representation [83,91,97]. These AI-enhanced methods signal a growing trend toward automated, adaptive, and scalable quality assessment, particularly in complex or high-volume data environments.
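SMOTE creates synthetic minority-class samples by interpolating between a minority sample and one of its nearest minority neighbours. The following dependency-free sketch implements that core idea on numeric tuples; in practice an established implementation (for example, the one in the imbalanced-learn library) would normally be preferred over this illustration.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Generate `n_new` synthetic minority samples by SMOTE-style interpolation.

    Each synthetic point lies on the segment between a randomly chosen minority
    sample and one of its k nearest minority neighbours (Euclidean distance).
    """
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest neighbours of `base` among the other minority samples
        neighbours = sorted(
            (p for j, p in enumerate(minority) if j != i),
            key=lambda p: math.dist(base, p),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic
```

Because every new point is a convex combination of two existing minority samples, the synthetic data stay inside the region already occupied by the minority class.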

Table 9.

Data type, type of method, and techniques used in methods available in the literature.

Despite its operational importance, cost evaluation remains severely underprioritized. Although exceptions such as COLDQ show how quantifying the cost of bad data (such as supply chain delays) drives process optimization, fewer than 25% of the methodologies listed in Table 9 include computational, resource, or temporal cost assessments. This gap persists despite new developments such as data-centric AI, marking a crucial area for methodological advancement [18,69].

Table 9 indicates that data quality methodologies differ significantly across domains in data type focus and strategic orientation. Structured data (S) dominate the research and are addressed in all studies, while semi-structured formats (SM) also receive significant attention (74%). With only 23% coverage, unstructured data (US) remain severely underserved; specialized methods such as SMOTE oversampling and NLP-based back-translation are the main ways of addressing them [97]. Sixty-six percent of approaches use only data-driven strategies, which prioritize record linkage, normalization, and cleansing for quick fixes. Hybrid approaches that combine data and process techniques (e.g., schema integration with process redesign [55,69]) appear in 23% of works and are found to be more effective for enterprise-scale quality management. Despite advocacy in ISO standards [43,65], purely process-driven methods (11%) remain rare, indicating a systemic underutilization of preventive solutions.
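Record linkage combined with normalization, the most common data-driven strategy, can be sketched with the Python standard library alone. The normalization steps and the 0.85 similarity threshold are illustrative assumptions, and `difflib.SequenceMatcher` stands in for the dedicated string-similarity measures typically used in practice.

```python
import re
import unicodedata
from difflib import SequenceMatcher


def normalize(name):
    """Lower-case, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", name)
    text = "".join(c for c in text if not unicodedata.combining(c))
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def link_records(left, right, threshold=0.85):
    """Pair records from two sources whose normalized names are similar enough.

    `left` and `right` map record IDs to names. Returns (left_id, right_id, score).
    """
    links = []
    for lid, lname in left.items():
        for rid, rname in right.items():
            score = SequenceMatcher(None, normalize(lname), normalize(rname)).ratio()
            if score >= threshold:
                links.append((lid, rid, round(score, 3)))
    return links
```

The pairwise loop is quadratic; real linkage pipelines usually add a blocking step (for example, comparing only records that share a postcode) before scoring.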

Moreover, each data type has its own technical specializations: semi-structured data rely on data fusion and interoperability [65], unstructured data on AI-driven augmentation [97], and structured data on cleansing and error correction [17,93]. Notably, 77% of methodologies do not include cost estimation [66,76,79]; even process-centric studies [100] and ISO implementations [43,65] omit financial metrics. Cost assessment is integrated only into hybrid frameworks, such as those in [17,69], which connect process optimization to financial results. Domain-specific gaps also remain: healthcare adopts ISO standards without financial validation [65], while construction favors sensor monitoring without cost analysis [87]. Given the long-term costs of poor data quality, this gap between technical execution and economic impact is a critical blind spot. To align technical quality with economic impact, future approaches must connect unstructured data handling, process transformation, and cost intelligence.

5. Software Solutions for Data Quality Analysis

The proliferation of data quality software has transformed how organizations manage and enhance their data assets, with leading commercial solutions offering specialized capabilities to address structural, operational, and governance challenges. Prominent platforms include IBM InfoSphere Information Server for Data Quality [105], SAP Master Data Governance [106], Talend Data Fabric [107], Ataccama Data Quality & Governance [108], Informatica Cloud Data Quality [109], Oracle Enterprise Data Quality [110], Melissa Unison [111], Precisely Data Integrity Suite [112], SAS Data Quality/Viya [113], and Collibra Data Quality & Observability [114]. These tools collectively enable organizations to convert raw data into trusted assets through automated cleansing, standardization, and enrichment, while ensuring compliance with evolving regulatory frameworks.

IBM InfoSphere is a leader in continuous data monitoring and issue resolution, using pre-established business rules to standardize data and remove duplicates while identifying personally identifiable information (PII) for improved privacy protection [105]. For instance, a multinational retail company deployed IBM InfoSphere to manage product master data across hundreds of stores [115,116]. By consolidating product and supplier information into a unified catalog and implementing automated quality rules, the company reported a significant reduction in out-of-stock incidents and enhanced supplier compliance monitoring.
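The PII-identification step can be illustrated with pattern-based column tagging. The regular expressions and the 50% match threshold below are hypothetical simplifications and do not reflect IBM InfoSphere's actual classifiers.

```python
import re

# Hypothetical, deliberately simple patterns; production PII classifiers are far richer.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
    "iban": re.compile(r"[A-Z]{2}\d{2}[A-Z0-9]{11,30}"),
}


def tag_pii_columns(rows, min_share=0.5):
    """Return {column: pii_type} for columns where at least `min_share`
    of the non-empty values fully match one of the patterns."""
    tags = {}
    columns = {c for row in rows for c in row}
    for col in sorted(columns):
        values = [str(r[col]) for r in rows if r.get(col) not in (None, "")]
        if not values:
            continue
        for pii_type, pattern in PII_PATTERNS.items():
            hits = sum(1 for v in values if pattern.fullmatch(v.strip()))
            if hits / len(values) >= min_share:
                tags[col] = pii_type
                break  # first matching PII type wins
    return tags
```

Tagging at the column level, rather than value by value, tolerates a few malformed entries while still marking the column for masking or restricted access.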

Using domain-specific models (such as materials and customers) and pre-built workflows, SAP Master Data Governance centralizes important business data and facilitates smooth integration with third-party solutions and SAP ecosystems [106]. Talend Data Fabric provides its proprietary Trust Score, which measures data reliability for safe sharing, as well as real-time machine learning-driven quality recommendations [107]. Talend has also been adopted by mid-sized financial institutions seeking GDPR and Anti-Money Laundering (AML) compliance [117,118]. For example, a European bank used Talend to establish a centralized master data management pipeline, enabling real-time validation of customer records and transaction monitoring. Built-in data lineage tracking allowed the institution to respond to audit requests with improved transparency and traceability.

Ataccama uses AI for proactive data validation and anomaly detection, automating laborious preparation tasks and implementing granular access controls to prevent unwanted changes [84]. For instance, smart city initiatives in Europe implemented Ataccama ONE to manage data from transport, energy, and citizen service subsystems [119,120]. The platform’s AI-assisted anomaly detection identified sensor discrepancies in traffic data streams, leading to a significant improvement in congestion prediction models.

Informatica Cloud Data Quality simplifies deployments with its no-code CLAIRE AI engine, which suggests quality rules based on metadata patterns and supports cloud-to-cloud migration [109]. For example, large public health agencies have integrated Informatica Data Quality into their patient data warehousing systems to improve the completeness and standardization of records across hospitals [121,122]. By leveraging rule-based cleansing and address validation modules, the agencies reduced data processing time and significantly improved the success rate of cross-institutional patient identification.

Oracle Enterprise Data Quality (EDQ) provides extensible data profiling through its integrated Quality Knowledge Base (QKB) and supports batch and real-time processing through virtual testing environments [110]. Melissa Unison offers offline data transformation tools for address validation, enrichment, and duplicate removal, combined with user-level access controls for compliance [111]. Precisely Data Integrity Suite lowers storage costs through deduplication and combines geocoding, enrichment, and anomaly detection to visualize data changes in real time [112]. SAS Data Quality standardizes formats and merges duplicates using AI techniques and its QKB library, and it offers modular packages for machine learning and forecasting [113]. Collibra facilitates cross-database connectivity (e.g., Oracle and MySQL) and uses configurable AI rules that track patterns and automatically correct records within its cloud-based observability framework [114].

The comparative analysis of these platforms in Table 10 reveals critical industry trends. With an emphasis on scalability and compatibility with major data lakes and SaaS applications, all solutions can be deployed in cloud or hybrid environments. Although machine learning integration is still selective (Talend, Melissa), most platforms now rely on artificial intelligence (e.g., Ataccama, SAS). All platforms offer continuous monitoring, with dashboards and visualizations that support real-time anomaly detection. However, none of them include cost-control mechanisms, and only 30% support process control/optimization (e.g., SAP and Ataccama). This is a significant gap considering the operational costs associated with poor data quality, which are highlighted in Section 3 [18,69]. The omission persists despite the sophisticated governance features of these tools, highlighting the need for financial impact analytics in future versions.
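Continuous monitoring of a quality metric (for example, daily completeness) can be sketched as a rolling-window deviation check. The window size, the three-sigma rule, and the minimum history are assumptions chosen for illustration, not features of any particular platform.

```python
import statistics
from collections import deque


class RollingMonitor:
    """Flag a metric value as anomalous if it deviates by more than `k` standard
    deviations from the mean of the last `window` observations."""

    def __init__(self, window=20, k=3.0, min_history=5):
        self.history = deque(maxlen=window)  # oldest values drop out automatically
        self.k = k
        self.min_history = min_history

    def observe(self, value):
        """Return True if `value` is anomalous relative to recent history, then record it."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            sd = statistics.pstdev(self.history)
            anomalous = sd > 0 and abs(value - mean) > self.k * sd
        self.history.append(value)
        return anomalous
```

A constant history yields a zero standard deviation, in which case this sketch reports no anomaly; a production monitor would need an explicit rule for that case.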

Table 10.

Software solutions comparison table.

While several tools embed ISO-related quality dimensions (such as completeness, consistency, and accuracy), few implement ISO 25000 standards or ISO/IEC 25024 workflows explicitly. Translating ISO principles into practice often requires customized mappings of standard metrics to tool-specific rule engines [17]. For instance, Collibra supports data profiling and observability aligned with ISO’s emphasis on measurement, but its implementation depends on user-defined quality rules and domain knowledge. This decentralization offers flexibility but lacks prescriptive templates for ISO-aligned assessments, requiring substantial configuration and organizational expertise [123,124,125].
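A customized mapping from a standard-style measure to a tool rule might look like the sketch below. It assumes a ratio-type completeness measure (non-empty values over all values, in the spirit of ISO/IEC 25024 measures), an organization-chosen threshold, and a hypothetical vendor-neutral rule format; real rule engines each use their own configuration syntax.

```python
from dataclasses import dataclass


@dataclass
class MeasureMapping:
    """Hypothetical link between a standard-style ratio measure and a tool-side rule."""
    measure: str      # quality characteristic, e.g. "completeness"
    column: str       # data item the measure is applied to
    min_ratio: float  # acceptance threshold chosen by the organization, not by the standard

    def to_rule_config(self):
        """Export the mapping in a hypothetical vendor-neutral rule format."""
        return {"rule": f"{self.measure}:{self.column}", "type": "non_empty_ratio",
                "column": self.column, "threshold": self.min_ratio}


def evaluate(mapping, rows):
    """Compute X = A / B (non-empty values / all values) and compare it to the threshold."""
    total = len(rows)
    non_empty = sum(1 for r in rows if r.get(mapping.column) not in (None, ""))
    ratio = non_empty / total if total else 1.0
    return {"measure": mapping.measure, "column": mapping.column,
            "value": ratio, "passed": ratio >= mapping.min_ratio}
```

Keeping the measure definition separate from the exported rule is one way to retain traceability to the standard while the rule syntax changes from tool to tool.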

Despite their robust capabilities, these tools face limitations in scalability and integration, particularly when dealing with fragmented or legacy data infrastructures [126,127]. Platforms may struggle to interface with on-premise legacy systems that lack standard APIs or real-time data pipelines. Additionally, while cloud-native tools offer scalability, this often entails subscription costs and infrastructure dependencies that challenge smaller organizations. Vendor lock-in risks and limited customization in pre-built models may also reduce adaptability in highly specialized domains. These constraints highlight the importance of assessing not just feature breadth but also deployment context and long-term integration feasibility when selecting data quality tools.

The convergence of AI, cloud architectures, and embedded governance reflects a developing market centered on accessibility and automation. However, the lack of cost assessment features limits organizations’ ability to measure the return on investment of quality initiatives. As data environments become more complex, solutions must advance beyond technical metrics to include economic and process dimensions in order to bridge the gap between data integrity and business value.

6. Discussion

This section synthesizes the key insights derived from the review and discusses their implications. The study set out to investigate the evolving landscape of data quality, focusing on its conceptual foundations, regulatory frameworks, assessment methodologies, and technological implementations. It began by revisiting core definitions and taxonomies of data, followed by a structured examination of quality dimensions grounded in widely recognized standards. Particular emphasis was placed on the FAIR principles, codified in the 2021 European Guidelines, and the ISO/IEC 25000 series, both of which serve as reference models for assessing and improving data quality. The study then explored methodological approaches, from statistical and rule-based systems to machine learning-based diagnostics, and concluded with a comparative evaluation of commercial data quality platforms. By synthesizing these multiple perspectives, the study aimed to support the design of more robust, scalable, and context-aware data quality management strategies.

The findings highlight the multidimensional nature of data quality and the increasing need for integrated approaches that combine standards, processes, and tools. While frameworks such as FAIR and ISO/IEC 25000 offer comprehensive sets of principles and metrics, their real-world implementation often encounters challenges related to scalability, measurability, and contextual adaptation. The comparative analysis revealed a lack of methods incorporating cost–benefit reasoning or dynamic process optimization. Similarly, while modern platforms demonstrate strengths in automation, AI-driven diagnostics, and real-time monitoring, limitations persist in transparency, explainability, and cost-awareness. Together, these findings point to a critical tension between technical advancement and practical utility, underscoring the need for more holistic and adaptable data quality solutions.

Theoretically, this study contributes to a more structured understanding of how data quality dimensions align with formal standards and assessment principles. By bridging classification taxonomies with the FAIR and ISO/IEC 25000 frameworks, it advances conceptual clarity in the field. The mappings it develops, which link quality dimensions, FAIR indicators, and maturity models, offer a practical toolkit for aligning quality expectations with implementation guidelines. From a practical perspective, the study provides decision makers, data stewards, and system designers with comparative insights into the strengths and weaknesses of existing approaches. It also draws attention to critical gaps in current solutions, particularly in cost-awareness, explainability, and adaptive system design, which are increasingly essential in data-intensive and compliance-driven environments.

In terms of contributions, this study offered three main advances. First, it introduced a series of synthesized tables and conceptual mappings that integrate classifications of data types, quality dimensions, and problem taxonomies with established frameworks such as FAIR and ISO/IEC 25000. Second, it provided a comparative evaluation of assessment methods, spanning statistical, rule-based, and AI-enhanced techniques, alongside a review of commercial software platforms. Unlike prior studies that focused narrowly on either theoretical models or tool functionalities, this work aimed to bridge the gap between principles and practice. Third, by identifying under-addressed areas such as cost integration and dynamic adaptability, it outlined a research agenda for advancing next-generation data quality solutions. Collectively, these contributions support both scholarly inquiry and the practical advancement of data governance across domains.

7. Conclusions and Future Directions

7.1. Conclusions

As with any integrative review, this study is subject to limitations. First, the selection of literature was guided by relevance and accessibility, focusing primarily on peer-reviewed sources indexed in Scopus, Web of Science, and Google Scholar. While these databases offer broad coverage, this approach may have excluded high-quality contributions from domain-specific repositories, gray literature, or non-English sources. Second, although the paper synthesizes quality frameworks, assessment methodologies, and software tools, it does not include empirical validation or benchmarking of tools in operational settings. As such, the practical performance of these systems, especially under varying organizational, technical, and regulatory conditions, remains outside the scope of this work. Third, the construction of conceptual mappings (e.g., between quality dimensions and FAIR principles) involves subjective interpretation, even though it is grounded in established standards such as ISO/IEC 25012. Finally, while the review attempts to integrate theory, policy, and practice, the diversity of data environments across sectors may limit the generalizability of some findings. These limitations highlight the importance of complementing conceptual reviews with empirical studies and cross-sector validation in future research.

7.2. Future Directions

Building on the insights of this study, future research can advance data quality assessment in several important directions. First, there is a need to develop methodologies that embed cost-awareness into quality management workflows. This includes defining metrics to estimate the financial impact of data quality issues (e.g., rework costs, customer churn, and regulatory fines), as well as modeling the return on investment (ROI) of quality initiatives. One approach involves integrating cost estimators into data profiling tools to track the downstream effects of low-quality data. Another strategy is to extend existing dashboards with economic indicators that associate quality improvements with tangible business outcomes. Process mining techniques can also be applied to trace cost leakage caused by data errors across workflows. Additionally, scenario-based simulations and digital twins can help assess the long-term financial consequences of various quality interventions under different operating conditions. Embedding cost analytics into software tools will not only support business case justification but also promote better prioritization of remediation efforts.
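The cost and ROI reasoning proposed here can be expressed in a few lines. The issue categories and unit costs are hypothetical inputs; estimating them reliably is the hard part that future methodologies would need to address.

```python
def poor_quality_cost(issue_counts, unit_costs):
    """Estimated cost of poor data: sum over issue types of (count * unit cost)."""
    return sum(count * unit_costs[kind] for kind, count in issue_counts.items())


def quality_roi(baseline_cost, residual_cost, investment):
    """ROI of a quality initiative: (avoided cost - investment) / investment.

    baseline_cost: cost attributed to poor data before the initiative
    residual_cost: cost that remains afterwards (same period)
    investment:    cost of the initiative over that period
    """
    if investment <= 0:
        raise ValueError("investment must be positive")
    avoided = baseline_cost - residual_cost
    return (avoided - investment) / investment
```

For example, with hypothetical unit costs of 50 per rework case and 1200 per churned customer, reducing 1000 rework cases and 20 churned customers to 300 and 10 avoids 47,000; against an investment of 20,000 this gives an ROI of 1.35.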

Second, the integration of explainable AI into data quality platforms should be prioritized to ensure transparency, traceability, and regulatory compliance. Research should explore techniques such as interpretable rule extraction, local explanation methods (e.g., LIME and SHAP), and audit logs that track how AI-driven quality assessments are produced. Developing benchmark datasets for explainability and regulatory alignment, particularly in high-stakes domains like finance and healthcare, will also be critical. Third, the design of self-optimizing systems based on reinforcement learning or adaptive heuristics could reduce reliance on manual interventions in quality monitoring and remediation. These systems would dynamically adjust validation rules, error thresholds, and cleaning strategies in response to changing data patterns. Future work could also explore simulation-based testing and the use of digital twins to optimize real-time learning loops.
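An audit log that records why each quality verdict was produced is one of the simpler explainability mechanisms mentioned above. The sketch assumes interpretable rules given as `(rule_id, description, predicate)` tuples and JSON-lines log entries; both are illustrative choices rather than an established format.

```python
import json
from datetime import datetime, timezone


def assess_with_audit(record, rules, log):
    """Apply interpretable rules to one record and append a traceable audit entry.

    `rules` is a list of (rule_id, description, predicate). The audit entry stores
    the inspected record, the verdict, and the rules that failed, so every verdict
    can later be explained.
    """
    failed = [(rid, desc) for rid, desc, pred in rules if not pred(record)]
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "record": record,
        "verdict": "reject" if failed else "accept",
        "reasons": [f"{rid}: {desc}" for rid, desc in failed],
    }
    log.append(json.dumps(entry, sort_keys=True))  # one JSON line per decision
    return entry["verdict"]
```

An AI-driven detector could write to the same log by recording its score and top contributing features in the `reasons` field.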

In addition, further research should explore lightweight tools for real-time quality assessment in edge computing and IoT environments, where traditional pipelines are less effective. This includes strategies such as federated quality evaluation, sensor-level diagnostics, and compressed metadata tracking. Finally, interdisciplinary collaboration across data science, organizational studies, and regulatory policy will be essential for reframing data quality from a technical constraint into a strategic enabler of innovation and accountability.
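Federated quality evaluation can be sketched by having each edge node share only aggregate counts, never raw readings. The field names below are hypothetical, and completeness is used as the example metric.

```python
def local_summary(records, fields):
    """Run on each edge node: count present and total values per field; raw data stay local."""
    return {f: (sum(1 for r in records if r.get(f) is not None), len(records)) for f in fields}


def merge_summaries(summaries):
    """Run on the coordinator: combine node counts into global completeness per field."""
    totals = {}
    for summary in summaries:
        for f, (present, total) in summary.items():
            p, t = totals.get(f, (0, 0))
            totals[f] = (p + present, t + total)
    return {f: (p / t if t else 1.0) for f, (p, t) in totals.items()}
```

Merging counts rather than averaging per-node ratios keeps the global figure correct when nodes hold different numbers of records.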

From a practical perspective, organizations seeking to improve data quality should prioritize modular adoption of frameworks such as ISO/IEC 25000, beginning with a small set of core metrics (e.g., completeness and accuracy) and gradually expanding to more complex evaluations. Additionally, aligning internal metadata standards with FAIR indicators can support interoperability without requiring full-scale implementation. Investing in training programs and cross-functional quality governance teams can further facilitate smoother integration of tools into existing workflows. In light of the above, this study has advanced the central objective of supporting robust, scalable, and context-sensitive data quality management by integrating formal standards, analytical methodologies, and software evaluations. The proposed future directions aim to further consolidate this foundation by addressing emerging challenges related to cost, explainability, and adaptability.

Author Contributions

Conceptualization, E.A., N.P. and T.A.; methodology, T.A. and G.N.; software, G.N.; validation, T.A., E.A., N.P. and E.D.; formal analysis, T.A., E.A. and G.N.; investigation, T.A. and G.N.; resources, G.N., N.P., T.A. and E.D.; data curation, G.N., T.A., N.P. and E.A.; writing—original draft preparation, G.N. and T.A.; writing—review and editing, T.A., E.A., N.P. and E.D.; visualization, G.N. and T.A.; supervision, E.A.; project administration, E.A.; funding acquisition, E.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AI | Artificial Intelligence |
| AIMQ | AIM Quality |
| API | Application Programming Interface |
| CDQ | Comprehensive Data Quality |
| CNN | Convolutional Neural Network |
| COLDQ | Cost of Less-Than-Desired Quality |
| DQA | Data Quality Assessment |
| DQSR | Data Quality Software Requirements |
| DWQ | Data Warehouse Quality |
| EDQ | Enterprise Data Quality |
| EU | European Union |
| FAIR | Findable, Accessible, Interoperable, and Reusable |
| GDPR | General Data Protection Regulation |
| HDQM | Heterogeneous Data Quality Methodology |
| IEC | International Electrotechnical Commission |
| IoT | Internet of Things |
| ISO | International Organization for Standardization |
| QKB | Quality Knowledge Base |
| ROI | Return on Investment |
| SMOTE | Synthetic Minority Oversampling Technique |
| SVMs | Support Vector Machines |
| TDQM | Total Data Quality Management |
| URL | Uniform Resource Locator |
| UTF | Unicode Transformation Format |
| WWW | World Wide Web |

References

- Reinsel, D.; Gantz, J.; Rydning, J. Data Age 2025: The Evolution of Data to Life-Critical; 2017. Available online: https://www.seagate.com/files/www-content/our-story/trends/files/Seagate-WP-DataAge2025-March-2017.pdf (accessed on 1 July 2025).

- ISO 9000:2015; Quality Management Systems—Fundamentals and Vocabulary. International Organization for Standardization: Geneva, Switzerland, 2015.

- Redman, T.C. Data Quality: The Field Guide; Data Management Series; Digital Press: Newton, MA, USA, 2001; ISBN 978-1-55558-251-7.

- Wang, R.Y.; Strong, D.M. Beyond Accuracy: What Data Quality Means to Data Consumers. J. Manag. Inf. Syst. 1996, 12, 5–33.

- Kahn, B.K.; Strong, D.M.; Wang, R.Y. Information Quality Benchmarks: Product and Service Performance. Commun. ACM 2002, 45, 184–192.

- Fürber, C. Data Quality Management with Semantic Technologies; Springer Fachmedien Wiesbaden: Wiesbaden, Germany, 2016; ISBN 978-3-658-12224-9.

- Rothman, K.J.; Huybrechts, K.F.; Murray, E.J. Epidemiology: An Introduction; OUP: New York, NY, USA, 2012; ISBN 978-0-19-975455-7.

- Piwek, L.; Ellis, D.; Andrews, S.; Joinson, A. The Rise of Consumer Health Wearables: Promises and Barriers. PLoS Med. 2016, 13, e1001953.

- GMI. Sports Wearables Market-By Product Type (Fitness Bands, Smartwatches, Smart Clothing, Footwear, Smart Headwear, Others), by Application (Healthcare & Fitness, Sports and Athletics, Entertainment and Multimedia, Others), by End User, Forecast 2024–2032; Sports Wearables Market; GMI: Garran, Australia, 2024.

- Chen, C.-Y.; Chang, Y.-W. Missing Data Imputation Using Classification and Regression Trees. PeerJ Comput. Sci. 2024, 10, e2119.

- McCausland, T. The Bad Data Problem. Res.-Technol. Manag. 2021, 64, 68–71.

- Naroll, F.; Naroll, R.; Howard, F.H. Position of Women in Childbirth: A Study in Data Quality Control. Am. J. Obstet. Gynecol. 1961, 82, 943–954.

- Vidich, A.J.; Shapiro, G. A Comparison of Participant Observation and Survey Data. Am. Sociol. Rev. 1955, 20, 512–522.

- Jensen, D.L. Data Quality: A Key Issue for Our Time. In Data Quality Policies and Procedures: Proceedings of a BJS/SEARCH Conference; SEARCH Group, Inc.: Sacramento, CA, USA, 1986.

- Man, Y.; Wei, L.; Gang, H.; Gao, J. A Noval Data Quality Controlling and Assessing Model Based on Rules. In Proceedings of the 2010 Third International Symposium on Electronic Commerce and Security, Guangzhou, China, 29–31 July 2010; pp. 29–32.

- ISO/IEC 25000:2014; Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Guide to SQuaRE. International Electrotechnical Commission: Geneva, Switzerland; International Organization for Standardization: Geneva, Switzerland, 2014.

- Batini, C.; Scannapieco, M. Data Quality: Concepts, Methodologies and Techniques; Springer: New York, NY, USA, 2006; ISBN 978-3-540-33172-8.

- Batini, C.; Cappiello, C.; Francalanci, C.; Maurino, A. Methodologies for Data Quality Assessment and Improvement. ACM Comput. Surv. 2009, 41, 1–52.

- Sidi, F.; Shariat Panahy, P.H.; Affendey, L.S.; Jabar, M.A.; Ibrahim, H.; Mustapha, A. Data Quality: A Survey of Data Quality Dimensions. In Proceedings of the 2012 International Conference on Information Retrieval & Knowledge Management, Kuala Lumpur, Malaysia, 13–15 March 2012; pp. 300–304.

- Si, S.; Xiong, W.; Che, X. Data Quality Analysis and Improvement: A Case Study of a Bus Transportation System. Appl. Sci. 2023, 13, 11020.

- Elfakhfakh, M.T.M.O. Big Data Quality: Stock Market Data Sources Assessment. Master’s Thesis, Politecnico di Milano, Milan, Italy, 2019.

- KMS Staff. Data Quality in Healthcare Is Vital in 2025; KMS Healthcare: Atlanta, GA, USA, 2025.

- McGilvray, D. Executing Data Quality Projects: Ten Steps to Quality Data and Trusted Information™; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2008; ISBN 978-0-08-055839-4.

- Wang, R.Y. A Product Perspective on Total Data Quality Management. Commun. ACM 1998, 41, 58–65.

- ISO/IEC 11179-1:2023; Information Technology—Metadata Registries (MDR). International Electrotechnical Commission: Geneva, Switzerland; International Organization for Standardization: Geneva, Switzerland, 2023.

- Pipino, L.L.; Lee, Y.W.; Wang, R.Y. Data Quality Assessment. Commun. ACM 2002, 45, 211–218.

- Althobaiti, F.A. A Support Vector Probabilistic Outlier Detection Method For Data Cleaning. JETNR—J. Emerg. Trends Nov. Res. 2025, 3, 332–345.

- Heravizadeh, M.; Mendling, J.; Rosemann, M. Dimensions of Business Processes Quality (QoBP). In Proceedings of the International Conference on Business Process Management, Milan, Italy, 2–4 September 2008; Volume 17, pp. 80–91.

- Wand, Y.; Wang, R.Y. Anchoring Data Quality Dimensions in Ontological Foundations. Commun. ACM 1996, 39, 86–95.

- ISO/IEC 25012:2008; Software Engineering—Software Product Quality Requirements and Evaluation (SQuaRE)—Data Quality Model. International Electrotechnical Commission: Geneva, Switzerland; International Organization for Standardization: Geneva, Switzerland, 2008.

- Chen, H.; Hailey, D.; Wang, N.; Yu, P. A Review of Data Quality Assessment Methods for Public Health Information Systems. Int. J. Environ. Res. Public Health 2014, 11, 5170–5207.

- Strong, D.M.; Lee, Y.W.; Wang, R.Y. Data Quality in Context. Commun. ACM 1997, 40, 103–110.

- Quarati, A. Open Government Data: Usage Trends and Metadata Quality. J. Inf. Sci. 2023, 49, 887–910.

- Wang, Q.K.; Tong, R.S.; Roucoules, L.; Eynard, B. Analysis of Data Quality and Information Quality Problems in Digital Manufacturing. In Proceedings of the 4th IEEE International Conference on Management of Innovation & Technology, Bangkok, Thailand, 21–24 September 2008; pp. 439–443.

- Publications Office of the European Union. data.europa.eu Data Quality Guidelines; Publications Office: Luxembourg, 2021.

- ISO 8601:2019; Date and Time—Representations for Information Interchange. International Organization for Standardization: Vernier, Switzerland, 2019.

- Berners-Lee, T. Linked Data; Design Issues. Available online: https://www.w3.org/DesignIssues/LinkedData.html (accessed on 24 July 2025).

- European Commission: Directorate-General for Research and Innovation and EOSC Executive Board. Six Recommendations for Implementation of FAIR Practice by the FAIR in Practice Task Force of the European Open Science Cloud FAIR Working Group; European Commission: Brussels, Belgium, 2020.

- Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.-W.; da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for Scientific Data Management and Stewardship. Sci. Data 2016, 3, 160018.

- ISO/IEC 25024:2015; Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Measurement of Data Quality. International Electrotechnical Commission: Geneva, Switzerland; International Organization for Standardization: Geneva, Switzerland, 2015.

- Aburbeian, A.M.; Ashqar, H.I. Credit Card Fraud Detection Using Enhanced Random Forest Classifier for Imbalanced Data. In International Conference on Advances in Computing Research; Springer Nature: Cham, Switzerland, 2023; pp. 605–616.

- Rodríguez, M.; Oviedo, J.R.; Piattini, M.G. Evaluation of Software Product Functional Suitability: A Case Study. Softw. Qual. Prof. Mag. 2016, 18, 18.

- Gualo, F.; Rodríguez, M.; Verdugo, J.; Caballero, I.; Piattini, M. Data Quality Certification Using ISO/IEC 25012: Industrial Experiences. J. Syst. Softw. 2021, 176, 110938.

- Boeckhout, M.; Zielhuis, G.A.; Bredenoord, A.L. The FAIR Guiding Principles for Data Stewardship: Fair Enough? Eur. J. Hum. Genet. 2018, 26, 931–936.

- Jacobsen, A.; Kaliyaperumal, R.; Bonino da Silva Santos, L.O.; Mons, B.; Schultes, E.; Roos, M.; Thompson, M. A Generic Workflow for the Data Fairification Process. Data Intell. 2019, 2, 56–65.

- European Commission, Directorate-General for Research and Innovation. Turning FAIR into Reality: Final Report and Action Plan from the European Commission Expert Group on FAIR Data; European Commission: Brussels, Belgium, 2018.

- Bena, Y.A.; Ibrahim, R.; Mahmood, J. Current Challenges of Big Data Quality Management in Big Data Governance: A Literature Review. In Proceedings of the Advances in Intelligent Computing Techniques and Applications; Saeed, F., Mohammed, F., Fazea, Y., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 160–172.

- Moeuf, A.; Pellerin, R.; Lamouri, S.; Tamayo-Giraldo, S.; Barbaray, R. The Industrial Management of SMEs in the Era of Industry 4.0. Int. J. Prod. Res. 2018, 56, 1118–1136.

- Rout, T.; Tuffley, A. The ISO/IEC 15504 Measurement Framework for Process Capability and CMMI; Software Quality Institute, Griffith University: Brisbane, Australia, 2005.

- ISO/IEC/IEEE 12207:2017; Systems and Software Engineering—Software Life Cycle Processes. International Organization for Standardization: Vernier, Switzerland, 2017.

- Díaz-Ley, M.; Garcia, F.; Piattini, M. MIS-PyME Software Measurement Capability Maturity Model–Supporting the Definition of Software Measurement Programs and Capability Determination. Adv. Eng. Softw. 2010, 41, 1223–1237.

- Hammer, M.; Champy, J. Reengineering the Corporation: A Manifesto for Business Revolution; Nicholas Brealey: Boston, MA, USA, 2001; ISBN 978-1-85788-097-7.

- Stoica, M.; Chawat, N.; Shin, N. An Investigation of the Methodologies of Business Process Reengineering; School of Computer Science and Information Systems, Pace University: New York, NY, USA, 2004.

- Redman, T.C. Data Quality for the Information Age; Artech House Telecommunications Library: Boston, MA, USA; Artech House: Norwood, MA, USA, 1996; ISBN 978-0-89006-883-0.

- English, L.P. Improving Data Warehouse and Business Information Quality: Methods for Reducing Costs and Increasing Profits; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1999; ISBN 0-471-25383-9.

- Corrales, D.C.; Ledezma, A.I.; Corrales, J.C. A Systematic Review of Data Quality Issues in Knowledge Discovery Tasks. Rev. Ing. Univ. Medellín 2015, 15, 125–149.

- Liebchen, G.; Shepperd, M. Data Sets and Data Quality in Software Engineering. In Proceedings of the International Conference on Software Engineering, Leipzig, Germany, 10–18 May 2008.

- Agrawal, R.; Wankhede, V.A.; Kumar, A.; Luthra, S.; Kataria, K. A Systematic and Network Based Analysis of Data Driven Quality Management in Supply Chains and Proposed Future Research Directions. TQM J. 2021, 35, 73–101.

- Shankaranarayanan, G.; Wang, R.; Ziad, M. IP-MAP: Representing the Manufacture of an Information Product. IQ 2000, 2000, 1–16.

- Jeusfeld, M.; Quix, C.; Jarke, M. Design and Analysis of Quality Information for Data Warehouses. In International Conference on Conceptual Modeling; Springer: Berlin/Heidelberg, Germany, 1999; pp. 349–362.

- Scannapieco, M.; Virgillito, A.; Marchetti, C.; Mecella, M.; Baldoni, R. The DaQuinCIS Architecture: A Platform for Exchanging and Improving Data Quality in Cooperative Information Systems. Inf. Syst. 2004, 29, 551–582.

- Batini, C.; Barone, D.; Cabitza, F.; Grega, S. A Data Quality Methodology for Heterogeneous Data. Int. J. Database Manag. Syst. 2011, 3, 60–79.

- Zhang, L.; Jeong, D.; Lee, S. Data Quality Management in the Internet of Things. Sensors 2021, 21, 5834.

- Poupry, S.; Medjaher, K.; Béler, C. Data Reliability and Fault Diagnostic for Air Quality Monitoring Station Based on Low Cost Sensors and Active Redundancy. Measurement 2023, 223, 113800.

- Guerra-García, C.; Nikiforova, A.; Jiménez, S.; Perez-Gonzalez, H.; Ramírez-Torres, M.; Ontañon, L. ISO/IEC 25012-Based Methodology for Managing Data Quality Requirements in the Development of Information Systems: Towards Data Quality by Design. Data Knowl. Eng. 2023, 145, 102152.

- Long, J.A.; Seko, C.E. A Cyclic-Hierarchical Method for Database Data-Quality Evaluation and Improvement. In Information Quality; Routledge: Oxfordshire, UK, 2014; pp. 52–66.

- De Amicis, F.; Barone, D.; Batini, C. An Analytical Framework to Analyze Dependencies among Data Quality Dimensions. In Proceedings of the ICIQ, Cambridge, MA, USA, 10–12 November 2006; pp. 369–383.

- Ying, S.; Jin, Z. A Methodology for Information Quality Assessment in the Designing and Manufacturing Processes of Mechanical Products. In Information Quality Management: Theory and Applications; IGI Global: Hershey, PA, USA, 2007; pp. 447–465.

- Loshin, D. Enterprise Knowledge Management: The Data Quality Approach; The Morgan Kaufmann Series in Data Management Systems; Elsevier Science: Amsterdam, The Netherlands, 2001; ISBN 978-0-12-455840-3.