Abstract

The application of artificial intelligence has significantly advanced interactive storytelling. However, current research has predominantly concentrated on the content generation capabilities of AI, primarily following a one-way ‘input-direct generation’ model. This focus has limited the practicality of AI story writing, mainly because user-driven creative processes remain largely uninvestigated. Consequently, users often perceive AI-generated suggestions as unhelpful and unsatisfactory. This study introduces a novel creative tool named Story Forge, which incorporates a card-based interactive narrative approach. By utilizing interactive story element cards, the tool integrates narrative components with AI-generated content to establish an interactive story writing framework. To evaluate the efficacy of Story Forge, two tests were conducted, focusing on user engagement, decision-making, narrative outcomes, the replay value of meta-narratives, and the tool’s impact on users’ emotions and self-reflection. In the comparative assessment, participants were randomly assigned either to the experimental group, which used Story Forge, or to the control group, which used a web-based AI story tool, for story creation. Independent-samples t-tests, with p-values and effect sizes (Cohen’s d), were used to validate the effectiveness of the framework design. The findings suggest that Story Forge enhances users’ intuitive creativity, real-time story development, and emotional expression while empowering their creative autonomy.

1. Introduction

Interactive storytelling, a dynamic and evolving field, bridges disciplines such as literature, gaming, psychology, and digital design. It transcends traditional linear narratives, engaging users as active participants whose choices shape the story’s course and outcome [1,2]. Beyond entertainment, this narrative form fosters immersive experiences that serve educational, persuasive, and emotionally resonant purposes [3,4]. From a human-centered design perspective, the emphasis lies on optimizing user interfaces to make the story more accessible and appealing. This multidisciplinary narrative model, characterized by dynamic branching plots and personalized engagement, has the potential to redefine storytelling paradigms in the modern era.

Lee et al. (2024) [5] identified significant challenges in current interactive story writing systems. Despite rapid technological advances, the field is becoming increasingly fragmented [5]. Numerous writing assistance tools have emerged, yet there is a notable divergence in research focuses among groups specializing in natural language processing (NLP), human–computer interaction (HCI), and computational social science (CSS). Many NLP-focused tools emphasize AI-driven autonomous generation, often at the expense of user interaction, which leads to limited user control and narratives lacking emotional and cultural depth [5,6,7]. Riedl (2019) also noted that these systems, which emphasize autonomous generation, are inadequate in facilitating dynamic collaboration and personalized creation, and thereby constrain users’ creative potential [8]. This study contends that current systems hinder content creators from establishing profound narrative interactions with their audience due to their one-way interaction mechanisms, which impede audience ownership and investment in storytelling. For users, conventional tools lack dynamic collaboration features, restricting their creative expression, decision-making, and ability to see the effects of their choices in interactive storytelling. The absence of user-driven narrative models in the field of story creation tools significantly limits improvements in engagement, retention, and satisfaction.

Prior research suggests that overcoming the limitations of user-driven narrative applications in enhancing engagement, retention, and satisfaction hinges on effectively integrating generative capabilities with user-generated input within a framework that supports dynamic, user-led collaboration. Research by Green et al. (2014) highlights that the lack of user-driven narrative structures can impede engagement, whereas dynamic collaborative frameworks, such as branching paths and multiple-ending designs, significantly boost user immersion and satisfaction [9]. Zhang and Lugmayr (2019) proposed a narrative design model that merges generative capabilities with user-driven input, facilitating collaborative story construction [10]. This study suggests that a user-driven AIGC adaptive story writing system would be an innovative approach to stimulating users’ autonomy, balancing control over branching narratives, enhancing emotional depth, and fostering creativity.

This study introduces an AIGC story-writing interface utilizing cards as an interactive medium, addressing the shortcomings of existing AI tools that lack user control and emotional depth. By employing card-based interaction, the AI acts as a creative partner, fostering a dynamic collaboration where it learns from user input to generate complementary narrative elements. Users guide the core theme through card operations, with the design’s significance and effectiveness being assessed using metrics like user engagement, narrative choices and consequences, replay value, and narrative impact. Technically, this study employs digital cards as interaction units, integrates a large language model API into the Unity engine, and constructs an AIGC module based on LLMs, focusing on interactive fiction. The evaluation considers user experience, creative efficiency, and narrative effectiveness. Core applications of this interface include interactive novel platforms and narrative-driven card game prototypes, with potential implications in education, cultural heritage preservation, and psychological therapy.

To assess the effectiveness of the card-based AIGC adaptive story writing system design, we focused on three research questions:

RQ1.

How can an interactive interface enhance the creativity and rationality of user-generated narratives?

This study investigates how interface design elements in this framework can stimulate user engagement, enhance narrative diversity, increase the replay rate of meta-narratives, and ensure the logical coherence and structural rationality of the story.

RQ2.

How can the process of integrating AIGC technology and user creative input be designed to effectively enhance users’ control over branching narratives and their ownership of the story?

This study examines the development of a card-based AIGC adaptive storytelling system, emphasizing the integration of AIGC technology with user input. We evaluate whether this framework enhances users’ narrative control, supports in-depth creative content editing, and effectively reflects users’ creativity. Additionally, we assess its ability to strengthen users’ sense of dominance and ownership of the story.

RQ3.

How can a framework be designed to resolve creators’ logical structuring and collaboration issues, as well as readers’/players’ lack of interactive control and deep exploration, in multi-branching narratives?

This study investigates a card-based adaptive storytelling system for AIGC, aiming to optimize interactive narrative design by offering clear guidance for branch selection, personalized preferences, and enhanced user control. It also examines whether the system can address the issue of limited exploration paths in AIGC’s deeper narrative interactions through mechanisms like customized narrative branching, card combinations, connectivity, and visualizations.

We conducted both quantitative and qualitative analyses of usability testing, examining dimensions like user engagement, choice and consequences, replay value, and narrative impact. The experimental results highlighted the effectiveness of our adaptive story creation framework for digital card design.

2. Related Works

2.1. Artificial Intelligence, Human-AI Collaboration, and Interactive Story

AI–human collaboration has increasingly emerged as a significant trend, particularly in creative writing, where artificial intelligence is evolving from a mere tool to a collaborative partner. AI agents now not only generate text to enhance narratives but also offer knowledge support and culturally sensitive solutions for cross-cultural creation [11]. Spennemann (2024) examined the interplay between generative AI (GenAI) and human agency, highlighting AI’s role in cultural heritage projects [12]. Lee et al. (2024) identified that AI–human co-creation models enhance interactive storytelling by refining content, improving the quality of writing, and aiding with grammar, spelling, idea generation, text restructuring, and style enhancement [5].

Chen et al. (2025) conducted a comparative experiment to investigate learners’ help-seeking behaviors when interacting with generative artificial intelligence versus human experts, revealing a non-linear sequence in AI interactions [13]. Zhang et al. (2024) [14] systematically reviewed 48 studies to assess GenAI’s dual roles in education: as an assistant and facilitator in learning support, and as a subject expert and instructional designer in teaching support. Their findings highlight GenAI’s ability to enhance feedback and evaluation methods but also underscore limitations such as feedback quality issues, ethical challenges in complex tasks, and mismatches between AI assistance and user capabilities, suggesting directions for future research [14]. Hang et al. (2024) introduced MCQGen, a generative AI framework for personalized learning through automatic multiple-choice question generation [15]. Collectively, these studies illustrate the advancements in and potential applications of generative AI in education and the creative industry. Generative language models such as GPT and T5 excel in text generation, offering plot suggestions, character development, and dialogue creation, whereas encoder-only models such as BERT are geared toward language understanding rather than free-form generation.

In terms of human–machine co-creation, Wu et al. (2021) and Woo (202) highlight the essence of human–machine co-creation, emphasizing the integration of human creativity with AI’s generative abilities for the collaborative accomplishment of creative tasks [16,17]. Lu et al. (2021) [18] advanced this field by developing a narrative generation tool using generative adversarial networks, focusing on the automatic generation of visual stories from text input. They criticized traditional narrative tools for their inability to adapt in real time to user group preferences, which results in homogenized content. To address this, they suggested enhancing the collaborative framework with federated learning and swarm intelligence algorithms [18]. However, current research predominantly centers on AI’s content generation, with insufficient exploration of user–AI interaction, particularly in ‘human-led, AI-assisted’ workflows. There is also a lack of concrete case studies in user interface design. Future research should aim to innovate in dynamic collaboration and user-led models to offer more adaptable and user-controlled tools for creative writing.

Nevertheless, the current AI creative writing tools predominantly offer one-way generation, lacking in-depth interaction and dynamic user adjustment and thus restricting users’ real-time control over content. Ingley and Pack (2023) [19] observed that AI can aid non-native English speakers in enhancing their scientific writing. However, they also pointed out that, without sufficiently deep and dynamic interaction, AI tools’ effectiveness is limited [19]. Guo (2024) highlights concerns that AI might undermine artistic integrity, with critics likening it to ‘pressing a button’ to generate books, which could devalue human labor and creativity [20]. These studies indicate that, while AI tools offer assistance, they remain limited in their emotional expression and human creative involvement, particularly in content creation, where user participation and interaction dynamism are notably lacking. Doshi and Hauser (2024) [21] found that stories produced by generative AI tend to be more homogeneous than those crafted solely by humans. Although individual creativity might be enhanced, there is a potential loss of collective novelty. This situation is a social dilemma: generative AI can either boost human creativity by offering fresh ideas or diminish it by anchoring it to AI-generated concepts. While creation becomes easier, the diversity of collectively produced fictional content is reduced [21].

2.2. Story Elements and Story Cards

Additionally, this study underscores the critical role of narrative elements in interactive design. Characters, events, and emotions collaboratively shape a multi-dimensional user experience, fostering deep engagement and immersion. The first story element is character. Zhou and Li (2021) highlight that character design conveys emotional depth through the character’s background, personality, and development [22]. Kuntjara and Almanfaluthi (2021) [23] assert that multi-dimensional character design transforms users from passive observers to active participants, enhancing their empathy and involvement. This strategy is especially effective in engaging users with media like games, stimulating interest and encouraging active participation in the narrative [23].

Secondly, events serve as pivotal components in the narrative process, driving plot changes and shaping user reactions and decisions. Zhang et al. (2023) [24] identified events as central to story conflicts, guiding user choices across various interactive scenarios. Analyzing narratives through the lens of events elucidates their role in story conflicts and their temporal and spatial impacts on story progression and user experience [24]. Daiute (2015) observed that narrative system participants utilize plot elements for communication and innovation [25]. This study indicates that diverse and layered event settings enhance users’ experience and enrich their innovation and communication.

Thirdly, emotion is fundamental to creative writing, shaping the narrative atmosphere and influencing readers’ emotional engagement. Zheng et al. (2025) identified a dynamic link between emotion and daily creativity, underscoring emotion as a critical driver in creative writing [26]. Peng (2019) introduced an emotional dialogue model that integrated topic and emotional factors with a dynamic emotional attention mechanism to enhance the quality and diversity of dialogue [27]. Nonetheless, this study highlights significant gaps in current research on integrating emotion with user interaction. Given emotion’s real-time, dynamic nature, developing methods that adapt to emotional cues in real time and seamlessly integrate them into narrative writing is a crucial future research direction.

Therefore, we conclude that emotional and narrative elements (characters, events) in storytelling interact, offering users diverse plot paths and interactive structures. This interaction transforms users from passive observers into active participants. By designing varied characters, events, and emotions, users can explore self-expression and creativity, crafting unique story paths. This immersive and interactive design enhances expressiveness and user experience in narrative interactions, allowing users to engage deeply with the story while fostering emotional resonance and expanded thinking.

In recent years, cards have gained prominence in interactive storytelling due to their modular design and flexible combinations. Their inherent randomness introduces unpredictable narrative branches, enhancing user engagement and creativity. For instance, ‘Möbius Cards’ serve as tools for creative writing, narrative design, and idea generation, with each card offering a unique story element, question, or scenario prompt that allows the user to explore diverse narrative paths. Roy and Waren (2019) examined the practice of writing distinct design elements or creative points on separate cards and arranging them logically to control the narrative rhythm and guide design development [28]. Hsieh et al. (2023) suggest that organizing related knowledge and prompts on cards and pre-planning event sequences is akin to constructing a narrative structure, which thereby allows for effectively managing the design rhythm and aids designers [29]. This study asserts that the structural nature of cards enables designers to pre-determine event sequences, effectively controlling the narrative rhythm.

Beyond their unique advantages as story design tools, cards are particularly notable for their flexibility and modularity. The story card model offers several benefits. Koenitz et al. (2018) [30] emphasize the importance of paper prototyping in creating interactive digital narratives (IDNs). Specialized card layouts can expedite prototyping and ease the transition to digital formats [30]. Cards simplify complex narrative logic into manageable segments, enhancing user control through specific rules and triggers. Song and Wang (2025) illustrate this by employing a card-based participatory design tool in automotive multimodal design [31]. Despite the differing fields, the physical or digital attributes of cards—such as their patterns, text, and triggers—expand the visual and interactive possibilities, effectively connecting creators with the narrative. Cards are cost-effective, allowing designers to swiftly update or expand narrative patterns by altering or adding card types. This adaptability makes them ideal for scenarios that require flexibility and iterative updates, such as rapid prototyping or long-term narrative development.

2.3. Comparative Analysis of Existing Baseline Models

The purpose of the comparative analysis of existing baseline models is to understand the current landscape of large language models in the industry. Conclusions can be drawn from evaluations by teams that assess large language models. Leading models such as GPT-4o, DeepSeek, and Claude 3 are accessible via APIs. Such reports are available, for instance, at https://juejin.cn/post/7452568192268812288 (accessed on 26 December 2024) and https://cloud.tencent.com/developer/article/2508564?frompage=seopage&policyId=20240000 (accessed on 28 March 2025).

A report on Juejin assessed the programming ability of six models, including GPT-4o, DeepSeek-V3, and Claude 3.5. Across programming tasks such as back-end code, front-end code, database design, and API documentation, Claude 3.5 performed best, achieving the highest total score of 94.5 points. GPT-4o ranked second with a total score of 91.3 points: its code was implemented correctly, although with a relatively simple structure. DeepSeek-V3 implemented basic functionality accurately but lacked performance optimization, resulting in a total score of 85 points.

The AGI-Eval large language model evaluation team’s report highlights GPT-4o’s exceptional image generation capabilities. Particularly in creative design assignments such as poster and illustration creation, GPT-4o significantly surpasses other industry models. It ranks first among the assessed models, with superior performance in areas such as text–image coherence and image fidelity. Notably, GPT-4o can handle 10 to 20 distinct objects, excelling in character, chart, and intricate portrait generation.

In the Global Large Language Model Comprehensive Ranking and Related Industry Report by the AI Innovation Research Institute in May 2025, GPT-4o emerged as the premier model for multimodal interaction, with over 1.5 billion monthly active users, a threefold increase in generation speed, and unmatched reasoning proficiency. Claude 3.5 Opus stands out as the optimal choice for enterprise-level compliance, demonstrating the highest coverage rate in FDA medical contexts and excelling in logical rigor within the industry. Notably, DeepSeek-V3 surpasses GPT-4o with a Chinese understanding score of 78.2, leveraging a mixture-of-experts (MoE) architecture with 671 billion parameters at a comparatively modest training cost.

2.4. Summary of Related Works

Therefore, this study indicates that, compared to traditional linear or procedural narratives, a digital card narrative model offers greater adaptability. The primary strength of this model is in dynamically generating diverse plot paths by integrating the creator’s subjective choices with random elements, which allows it to maintain narrative control while allowing user creativity. This model requires neither complex programming nor expensive tools to adjust and optimize narrative structures, and thus reduces creation barriers and enhances narrative maintainability and scalability. Furthermore, by constructing a system of character, emotion, and event cards, and utilizing card associations and event logic chains, the model can enrich personalized narrative expression.
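To make the idea of combining a creator’s subjective choices with random elements more concrete, the following is a minimal Python sketch under stated assumptions, not Story Forge’s actual implementation. The deck contents, the category names, and the `combine` function are hypothetical. Cards the creator picks are kept, and any open category is filled by a seeded random draw. Reusing a seed replays the same plot path, while a new seed opens a different branch.

```python
from __future__ import annotations

import random

# Hypothetical card decks; the names are illustrative placeholders, not Story Forge data.
DECKS = {
    "character": ["Brave Knight", "Exiled Scholar", "Street Orphan"],
    "event": ["Encounter with Ghosts", "Betrayal at Court", "Lost Map"],
    "emotion": ["Suspense", "Joy", "Sorrow"],
}


def combine(chosen: dict[str, str], seed: int | None = None) -> dict[str, str]:
    """Keep the creator's chosen cards and fill every other category at random.

    Passing the same seed reproduces the same random draws, so a plot path
    can be replayed; changing the seed opens a different branch.
    """
    rng = random.Random(seed)
    hand = {}
    for category, deck in DECKS.items():
        if category in chosen:
            hand[category] = chosen[category]  # the subjective choice is kept as-is
        else:
            hand[category] = rng.choice(deck)  # a random card fills the open slot
    return hand


hand = combine({"character": "Brave Knight"}, seed=42)
print(hand)
```

Keeping the random source in a local `random.Random` instance means that one hand can be replayed without affecting any other randomness in the application.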

3. Design of Story Forge

This study seeks to merge the adaptive interface design of digital cards with a dynamic branching framework to investigate its effects on user engagement, choice and consequence capabilities, the replay value, and the narrative impact, aiming to optimize interactive narrative design. Initially, this study will examine narrative design elements to determine how card types and their combinations affect these four metrics. Subsequently, it will evaluate Story Forge against existing AI writing tools (AI Story Writer (version Squibler 2025): https://www.squibler.io/ai-story-generator, accessed on 27 June 2025) using a blend of quantitative and qualitative methods and by employing reliability analysis and system scoring. Finally, based on the experimental findings, this study will propose targeted improvement strategies to support the optimized design of the card narrative framework and establish a foundation for constructing theoretical models of related factors.

3.1. What Is Story Forge?

Story Forge is an innovative adaptive story creation interface that uses digital cards to foster subjective storytelling and creativity. It combines card interactions, AIGC generation, real-time engagement, and intuitive narrative tools. By enabling users to select outcomes aligned with plot progression and emotional satisfaction, it aims to enhance the narrative experience. The system caters to both casual and professional users interested in narrative creation, whether for entertainment, education, or writing projects.

Story Forge was developed using Python 3.9 as the primary programming language, with Figma used for the user interface design and the creation of 2D assets, such as generic card faces and environmental materials. Unity was chosen as the development engine. Compared with other engines such as Unreal, Unity offers a lightweight footprint, efficient cross-platform deployment (PC and mobile devices), lower costs through community-supported plugins (e.g., a robust physics engine), and good adaptability to interactive narrative logic. The AIGC back-end integrates the Doubao large language model (version 3.5) to extend the system’s capabilities. ByteDance introduced Doubao in 2024 as version 3.0. Doubao’s distinguishing characteristics include a mixture-of-experts (MoE) architecture with an 8192-token context window, low latency (an average system response time of 1.2 s), optimization for both Chinese and English contexts (with advantages in Chinese comprehension and localization), and support for dynamic narrative generation without retraining, which sets it apart from models such as GPT-4o and Claude. We have not retrained or fine-tuned this model. The design generates branching plot information from personalized user input, ensures that each choice aligns with the overarching narrative framework, and creates personalized narratives based on user interactions, while Unity’s UI system displays the resulting plot text (Figure 1 and Figure 2).

Figure 1.

Development software and adaptive AI model (the non-English text is the logo of the AI product Doubao).

Figure 2.

System backend architecture diagram, JavaScript, data flow metrics, and key design features.

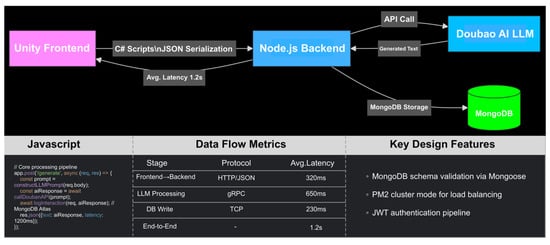

Figure 2, titled “System Backend Architecture Diagram” and detailed in Section 3.1, “Overall Architecture,” comprises the following components:

- Front-end: the Unity engine uses C# scripts to manage card interactions and story presentation;

- Back-end: a Node.js server acts as an intermediary between Unity and the Doubao AI API, with data stored in MongoDB;

- Data flow sequence: card selection is converted to JSON format and passed to an LLM call, which returns generated text that is then rendered in Unity. The process exhibits an average latency of 1.2 s.
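The card → JSON → LLM → text sequence above can be sketched as follows. This is a minimal Python illustration under assumptions and is not the project’s Node.js server code. The `cards` payload field and the `cards_to_json`, `run_round`, and `fake_llm` names are hypothetical. The model call is injected as a plain function, so no real Doubao endpoint or SDK signature is assumed. A real deployment would replace `fake_llm` with the server’s HTTP request to the model API.

```python
from __future__ import annotations

import json
import time
from typing import Callable


def cards_to_json(cards: list[dict]) -> str:
    """Serialize the user's card selection (hypothetical "cards" field) as JSON."""
    return json.dumps({"cards": cards}, ensure_ascii=False)


def run_round(cards: list[dict], llm: Callable[[str], str]) -> tuple[str, float]:
    """Run one card -> JSON -> LLM -> text round and time it.

    `llm` stands in for the server-side request to the model API: it receives
    the JSON payload and returns generated text for Unity to render.
    """
    payload = cards_to_json(cards)
    start = time.perf_counter()
    text = llm(payload)
    latency = time.perf_counter() - start
    return text, latency


def fake_llm(payload: str) -> str:
    """Offline stand-in for the model call, used only for this example."""
    names = [card["name"] for card in json.loads(payload)["cards"]]
    return "A story about " + " and ".join(names) + "."


text, latency = run_round([{"type": "character", "name": "Arthur"}], fake_llm)
print(text, f"({latency:.4f} s)")
```

Timing only the model call in this way is one possible method for collecting per-round latency figures comparable to the 1.2 s average reported above.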

Our study does not include model training. However, future research might explore training the model, for example, fine-tuning it on a creative writing corpus (e.g., novels, screenplays) to enhance its narrative coherence and emotional expression. This fine-tuning could use a dataset tailored to creative writing comprising over 100,000 story samples, and could also inform the optimization of settings such as API call parameters. We would additionally report essential training parameters (e.g., learning rate, number of epochs) so that the method can be reproduced across various large language models. This approach would be facilitated through a user interface embedded within the large language model.

The user workflow involves arranging and combining story cards in the designated slot, with the AIGC generating real-time story content based on card combinations and sorting in the preview interface. This setup allows users to iteratively refine the backend story generation logic. All functionalities are seamlessly integrated into a unified user interface, offering a cohesive “user-driven creation mode” experience.

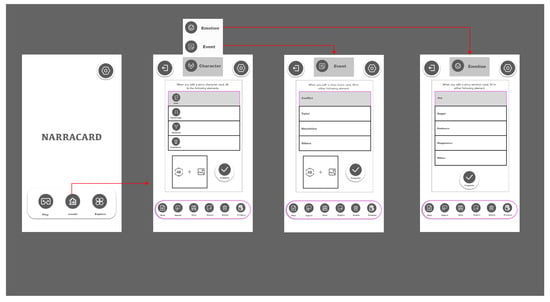

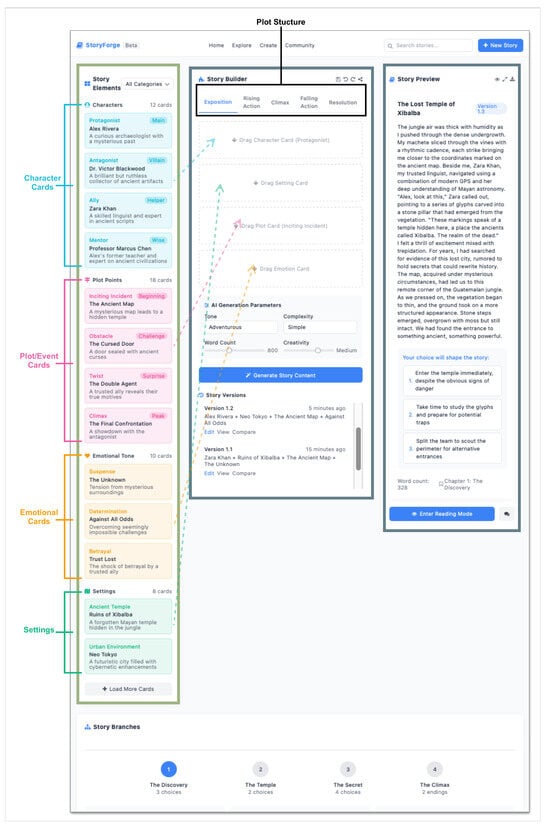

The interactive page (Figure 3) uses a card-based layout with four buttons: home, play, create, and explore. Selecting play takes users to the narrative creation process based on card combinations, while selecting create leads to the creation of story cards. Upon entering the editing interface, users encounter three categories of story elements: characters, plot, and emotions.

Figure 3.

Card input user interface page.

This classification method rests on several key considerations. Firstly, as pivotal components of traditional narratives, character cards play a crucial role in eliciting emotional investment from audiences in interactive narratives. Secondly, curating plotlines and consequences offers users significant choices that shape the narrative’s trajectory, enabling them to make decisions that not only influence the storyline but also mirror their individual values, thereby engaging them in the narrative construction process. This approach cultivates emotional involvement and may introduce ethical quandaries, which enhances the immersive nature of the experience. Lastly, emotional elements subtly enrich the narrative ambiance and directly introduce tension into the story arc. This tension is strategically released at pivotal junctures to regulate the narrative’s tempo. Thus, the card mapping rules are built on three fundamental elements: character cards, event cards, and emotion cards. These elements interact to form card combinations that map onto the four main modules of the prompt. A generation layer then turns these combinations into organized text, which ultimately guides the interactive narrative response (Table 1).

Table 1.

Corresponding relationship and weight distribution between the card and the prompt word module.

Weight distribution: Event and character cards predominate due to their central role in narrative coherence and user immersion. Emotional and scene cards function as supplementary elements that enhance the overall experiential depth.

As shown in Table 1, integrating these elements allows users to craft a narrative arc with a beginning, middle, and end, which helps them perceive story progression and coherence. Moreover, the interactive design helps users adopt characters’ viewpoints, promoting empathy and comprehension. By carefully incorporating these design principles, the interactive interface enhances user engagement with narrative composition through intuitive operational logic and visual information representation. Users can observe and modify content in real time within the interface (Figure 2). Users engage in storytelling through various cards. Character cards, by delineating the character’s background, personality, and development, foster emotional bonds between users and story characters; they evoke empathy, deepen user immersion, and carry labels such as “[Character: Name, Feature 1, Feature 2]”. Event cards are central to narrative progression, steering the storyline and shaping user emotions and decisions. They introduce plot twists that prompt emotional responses and influence user choices, and they often carry identifiers such as “[Plot: Triggering event, Conflict type]”. Events serve as focal points of emotional tension, guiding users through interactive scenarios. Emotion cards play a crucial role in interactive storytelling by eliciting emotional responses from users, thereby enriching their engagement. By incorporating emotions such as joy, fear, or sadness, these cards influence user decision-making and heighten the immersive experience. They are categorized by emotions such as “joy, anger, sorrow, and happiness”.
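The bracketed card labels described above follow a simple “[Kind: field, field, …]” pattern. The sketch below shows one possible parser and renderer for that pattern, assuming that fields are comma-separated and contain no commas themselves. The `Card` class, `parse_label`, and the example plot fields are hypothetical and do not describe how Story Forge stores its cards internally.

```python
import re
from dataclasses import dataclass

# Matches labels of the form "[Kind: field, field, ...]".
LABEL_RE = re.compile(r"^\[(?P<kind>[A-Za-z]+):\s*(?P<body>.*)\]$")


@dataclass
class Card:
    kind: str           # e.g. "Character", "Plot", "Emotion"
    fields: list[str]   # comma-separated attributes, in label order

    def label(self) -> str:
        """Render the card back into the bracketed label format."""
        return f"[{self.kind}: {', '.join(self.fields)}]"


def parse_label(text: str) -> Card:
    """Parse a label such as "[Character: Arthur, worn armor, stoic]" into a Card."""
    match = LABEL_RE.match(text.strip())
    if match is None:
        raise ValueError(f"not a card label: {text!r}")
    fields = [part.strip() for part in match.group("body").split(",") if part.strip()]
    return Card(match.group("kind"), fields)


card = parse_label("[Plot: Ghost awakens, Person vs. supernatural]")
print(card.kind, card.fields)
```

Because this representation round-trips, a card edited in the interface can be written back to the same label text.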

3.2. Card Combination Conversion Rules and Examples

Take ‘Brave Knight (Character Card) + Mysterious Forest (Scene Card) + Suspense (Emotion Card) + Encounter with Ghosts (Event Card)’ as an example:

Character Card (30%): the character Arthur, adorned in worn armor, embarks on a noble quest to defend the kingdom’s honor, displaying stoic traits.

Scene Card (10%): set in the eerie depths of the Black Forest at midnight, where fog blankets the twisted trees resembling gnarled claws.

Emotion Card (20%): evoking a suspenseful atmosphere of moderate intensity, characterized by a grim and suggestive speech style.

Event Card (40%): as Arthur touches the luminous tree, a ghost emerges from the abyss, questioning, “Why have you disturbed my slumber? Choose to ‘Explain’ or ‘Defend’”.
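The percentages in this example (character 30%, scene 10%, emotion 20%, event 40%) sum to 100%. One way to read them as “level of content detail” is as proportional shares of a fixed detail budget. The sketch below rests on that assumption. The word-budget interpretation and the `allocate` function are hypothetical and do not reflect the system’s documented mechanism. It uses the largest-remainder method so that integer shares always add up to the budget.

```python
from __future__ import annotations

# Module weights from the worked example above (percent).
WEIGHTS = {"character": 30, "scene": 10, "emotion": 20, "event": 40}


def allocate(budget: int, weights: dict[str, int]) -> dict[str, int]:
    """Split an integer detail budget (e.g. words) across modules by weight.

    Largest-remainder method: floor every exact share, then give the
    leftover units to the modules with the largest fractional parts.
    """
    total = sum(weights.values())
    exact = {name: budget * w / total for name, w in weights.items()}
    shares = {name: int(value) for name, value in exact.items()}  # floors (budget >= 0)
    leftover = budget - sum(shares.values())
    by_remainder = sorted(exact, key=lambda name: exact[name] - shares[name], reverse=True)
    for name in by_remainder[:leftover]:
        shares[name] += 1
    return shares


print(allocate(400, WEIGHTS))
```

For a 400-word passage, this sketch would assign 160 words to the event module and 40 words to the scene module. This is consistent with the idea that event and character cards carry the specific content and that scene cards play a supporting role.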

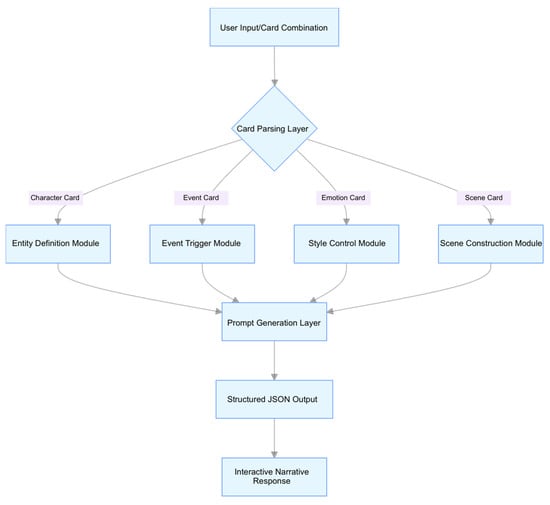

Generate structured prompts: The card sequence is first converted into JSON format by a text script. Prompts are then formulated in the hierarchical order ‘Scene → Character → Plot → Emotion’. Module weighting in the conversion logic dictates the level of content detail: event and character cards provide specific information, such as the character background and branching choices, while emotion and scene cards emphasize atmosphere, for instance, ‘mist like a veil’ and ‘sense of gloom.’ The modules are interconnected: the suspenseful tone of the emotion card shapes how conflicts in the event card are depicted and how the scene card’s environment is described, using imagery rather than direct statement. Scene cards, whether user-generated or system-provided, play a secondary role in the narrative structure and are not primary story components (Figure 4).

Figure 4.

Summary diagram of the core card mechanism.

The card mechanism drives prompt optimization, which streamlines narrative interaction while avoiding the high cost of model fine-tuning. To keep the plot coherent, the context maintenance mechanism of the selected large language model, Doubao, preserves approximately the first 300 tokens of each generation round as narrative anchors. In prolonged conversations (e.g., thousands of rounds or very long content), however, researchers may need to moderately condense redundant early information while preserving core semantics to keep the interaction smooth. Future research may incorporate reinforcement learning to improve the precision of personalized narrative adaptation. The extended architecture features hierarchical storage and semantic compression within the large language model itself to address state maintenance in long narratives, while a conflict-resolution algorithm and a synchronization protocol support multi-user collaboration. Overall, the design balances interaction fluidity against narrative integrity, covering needs that range from individual immersive experiences to collaborative multi-user creation.
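The anchoring idea can be illustrated with a small sketch. It does not reproduce Doubao’s internal context handling; the whitespace “tokenizer”, the 1,200-token budget, the `keep_recent` parameter, and the first-sentence condensation rule are hypothetical stand-ins for a real tokenizer and a semantic summarizer.

```python
def approx_tokens(text: str) -> list[str]:
    """Hypothetical tokenizer stand-in: one token per whitespace-separated word."""
    return text.split()


def build_context(rounds: list[str], anchor_tokens: int = 300,
                  budget: int = 1200, keep_recent: int = 2) -> str:
    """Keep about the first `anchor_tokens` tokens of every round as a narrative anchor.

    If the anchors together exceed `budget`, the oldest anchors are condensed
    (here, crudely, to their first sentence) until the budget is met, while the
    `keep_recent` most recent anchors are never condensed.
    """
    anchors = [" ".join(approx_tokens(r)[:anchor_tokens]) for r in rounds]
    total = sum(len(approx_tokens(a)) for a in anchors)
    i = 0
    while total > budget and i < len(anchors) - keep_recent:
        condensed = anchors[i].split(". ")[0]
        total -= len(approx_tokens(anchors[i])) - len(approx_tokens(condensed))
        anchors[i] = condensed
        i += 1
    return "\n".join(anchors)


# Six long rounds: the three oldest anchors get condensed so the context fits the budget.
context = build_context(["A B. " * 250] * 6)
print(len(context.split()))
```

A production system would replace the first-sentence rule with a summarization step that preserves core semantics, as the text recommends.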

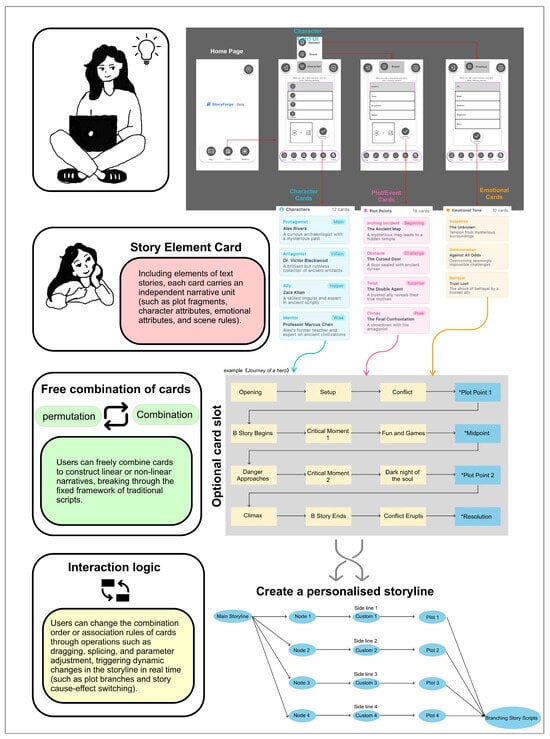

3.3. Story Forge with Users

This study designed the interactive functions of Story Forge based on multi-dimensional interaction logic (Table 2). Story Forge uses a ‘UI story card interaction interface’ as an interactive metaphor for user engagement. On the input side, an “agent” mechanism gives users a sense of editorial autonomy by offering a ‘categorized tool’ that pre-classifies story elements such as characters, plot, and emotions. Users drag cards into designated slots corresponding to story stages (beginning/development/climax), which prompts the AI to generate logically interconnected content and thereby supports ‘interactive reconstruction for optimizing story controllability’ (Figure 5). Because the story is decomposed into ‘unit cards,’ users can combine elements as if solving a puzzle, balancing creative autonomy against AI-driven functionality. Card metaphors, such as a card pack based on Joseph Campbell’s Hero’s Journey, lower the entry barrier, foster creativity, and reduce cognitive load during AI interaction. Furthermore, the system establishes a story card ecosystem in which users can upload cards and generation algorithms are refined based on usage patterns. It also supports collaborative editing of card collections by multiple users and is compatible with AR/VR technologies for cross-platform multimedia creation.
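The slot mechanism can be pictured as a small data model in which each story stage holds the cards dropped into it. The sketch below is a hypothetical illustration, not the Story Forge implementation; `StoryBoard`, `drop`, `remove`, and `to_outline` are invented names, and only the three stage names come from the text.

```python
from dataclasses import dataclass, field

# Stage slots named in the text; the data model itself is a hypothetical illustration.
STAGES = ("beginning", "development", "climax")


@dataclass
class StoryBoard:
    # Maps each stage slot to the cards dropped into it, in drop order.
    slots: dict[str, list[str]] = field(default_factory=lambda: {s: [] for s in STAGES})

    def drop(self, card: str, stage: str) -> None:
        """Simulate dragging a card into a stage slot."""
        if stage not in self.slots:
            raise ValueError(f"unknown stage: {stage!r}")
        self.slots[stage].append(card)

    def remove(self, card: str, stage: str) -> None:
        """Simulate dragging a card back out of a slot."""
        self.slots[stage].remove(card)

    def to_outline(self) -> list[str]:
        """Flatten the board into a stage-ordered outline that a generator could expand."""
        return [f"{stage}: {'; '.join(cards)}" for stage, cards in self.slots.items() if cards]


board = StoryBoard()
board.drop("Character: Arthur", "beginning")
board.drop("Scene: Black Forest", "beginning")
board.drop("Event: Ghost encounter", "climax")
print(board.to_outline())
```

Empty slots are skipped in the outline, so a user can fill the stages in any order and the request sent to the model still follows the story sequence.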

Table 2.

Design dimensions, definitions, and explanations.

Figure 5.

Story Forge: mechanics and workflow. (1) Card-driven narrative: story element cards with free combination logic; (2) interaction and process: drag, splice, and adjust parameters for personalized narrative generation, using a “Main Storyline + Side Lines” node structure.

The Story Forge interface combines the writing area with the area where the user’s text is output, and the top navigation bar is used to insert the various story element cards. Interaction centers on the text box and uses a card-style UI with a visually cohesive layout that previews the anticipated outcome. The main interface is divided into three sections: the ‘UI card input interface’ on the left; story card slots and parameter controls for the large language model in the middle; and a preview of the generated story, together with the branching plotlines produced by different choices, on the right. Together, these sections form a collaborative creation system with multiple endpoints.

3.4. Plot Selection, Guiding Consequences, and Continuing Story

The interaction between users and writing assistants involves three key components: the users, the user interface (UI; front end), and the system (back end). The user interface acts as an intermediary between users and the system, as shown in Figure 6.

Figure 6.

Story Forge: user interface and illustration: https://www.doubao.com/share/code/06323a85614fb42d (accessed on 27 June 2025) open in Doubao.

The UI card system described above lets users combine cards to activate the AI-generated content (AIGC) story generation component, enhancing engagement through an immersive first-person experience. Users influence the narrative’s outcome through their choices, and at pivotal plot points the interface presents all potential narrative pathways. Central to the interface is the AI’s ability to generate storylines that follow logically from the chosen combination of cards. Users can not only craft narratives from diverse cards but also explore different permutations of the same cards and compare the resulting narrative branches. Much like LEGO bricks, high-quality card modules can be reused efficiently.

This study integrated a front-end user interface (UI) with back-end AI-generated content (AIGC) logic. Users drag and drop cards on the interface to build a story framework, from which the AI generates tailored plots. The system also supports narrative branching and multi-version comparison, so users can observe in real time how the stories produced by different card combinations differ during the creative process. This lets users refine their narratives iteratively.

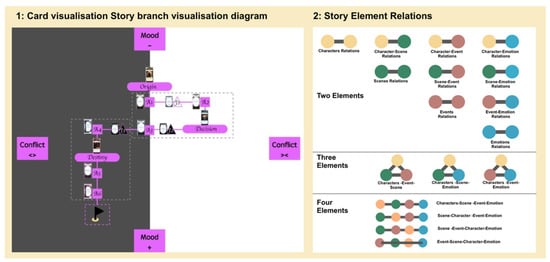

3.5. Story Branch Visualization Diagram

This study uses a story branch visualization diagram to depict complex story structures graphically (Figure 7). By presenting all potential plot developments, including the main storyline and subplots, clearly and intuitively, the diagram helps both creators and readers grasp the whole narrative quickly and avoids confusion in complex narratives. It also delineates the logical connections and sequential relationships among branches, helping creators structure narrative progression, plan plot pacing in advance, and keep each branch coherent with the overarching narrative framework. This reduces plot discrepancies and logical inconsistencies.
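Underneath such a diagram sits a directed graph of plot nodes. The sketch below, with hypothetical node names drawn from the Magic Academy example, shows how all branch paths can be enumerated and how two kinds of plot discrepancy (branches pointing to undefined nodes, and nodes no path reaches) can be flagged; it assumes an acyclic graph and is not the tool’s actual renderer.

```python
from collections import deque

# Hypothetical branch graph: every node, including endings, is a key; endings map to [].
story = {
    "letter_arrives": ["accept", "hide_notice"],
    "accept": ["academy"],
    "hide_notice": ["attic"],
    "attic": ["old_diary"],
    "academy": [],
    "old_diary": [],
    "unused_scene": [],
}


def enumerate_paths(graph: dict[str, list[str]], start: str) -> list[list[str]]:
    """List every start-to-ending path (assumes the branch graph is acyclic)."""
    paths, stack = [], [(start, [start])]
    while stack:
        node, path = stack.pop()
        children = graph.get(node, [])
        if not children:
            paths.append(path)
        for child in children:
            stack.append((child, path + [child]))
    return paths


def find_problems(graph: dict[str, list[str]], start: str) -> dict[str, list[str]]:
    """Flag branches that point to undefined nodes and nodes that no path reaches."""
    dangling = sorted({c for kids in graph.values() for c in kids if c not in graph})
    seen, queue = {start}, deque([start])
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return {"dangling": dangling, "unreachable": sorted(set(graph) - seen)}


for p in enumerate_paths(story, "letter_arrives"):
    print(" -> ".join(p))
print(find_problems(story, "letter_arrives"))
```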

Figure 7.

Story Forge: story branch visualization diagram; story element relations.

Visualizing story branches also increases interactivity for readers or players and stimulates their curiosity by previewing the outcomes of different choices, which strengthens their sense of control and engagement with the narrative. Comparing the development paths of the branches lets them discover hidden details and deeper meanings, enriching the story’s replay value and encouraging deeper reflection. For creators, these visualizations are effective collaborative tools: team members can communicate intuitively, reach consensus on narrative logic quickly, and streamline the creative process, improving both the efficiency and the quality of the story.

3.6. Comparison with Large Language Model

This study examines differences in creative reasoning among Story Forge, GPT-4o, and NovelAI (Table 3), using the narrative “Enrollment in the Magic Academy” from a “Harry Potter-style” series as a case study.

Table 3.

Comparison between the Story Forge framework and the logic of large language models.

Story Forge uses a card-based collaborative approach akin to building narratives with LEGO blocks. Users first add “character cards” with elements such as the “orphan protagonist” and the “mysterious necklace,” then add “scene cards” depicting events such as “an owl delivering the acceptance letter,” and finally add “conflict cards” describing scenarios such as “the warning from the neighbor uncle.” The platform integrates these components into cohesive content and suggests supplementary sub-cards, such as “details of the necklace glowing,” supporting a structured, collaborative creative process.

In contrast, GPT-4o works solely from plain-text prompts. For instance, users must enter a prompt such as “Compose a narrative depicting enrollment at a magical academy with an orphan protagonist. Upon receiving an owl-delivered letter, the protagonist’s neighbor uncle advises against attending, and the protagonist is adorned with a luminous necklace.” To change the necklace’s color, users must add a specific directive to the text, such as “The necklace emits a blue radiance upon the arrival of the acceptance letter.” NovelAI, by comparison, uses a template-plus-slider approach. Selecting the “Fantasy Enrollment” template, setting the “conflict intensity” slider to 70%, and prioritizing “curiosity > fear” for character emotions produces a structured narrative in which the protagonist receives the acceptance letter, is dissuaded by the neighbor, and hesitates as the necklace grows warm. To change the scenario, users must choose from preset sub-templates such as “delivery in the forest” or “letter inserted through the door crack”.

Story Forge provides users with detailed narrative control, allowing for granular manipulation of the storyline. For instance, at a critical juncture where the protagonist must decide whether to accept an invitation, users can customize the “decision cards” for this event. Opting to “Tear up the notice → The necklace repairs the paper” will result in the protagonist accepting the invitation, whereas selecting “Hide the notice → The necklace leads to finding the attic” will postpone acceptance. Each choice triggers a series of branch cards like “Old diary in the attic,” which empowers users to meticulously steer the plot progression step by step. GPT-4o exhibits limited control and relies heavily on prompt accuracy. For instance, if the initial prompt is “The protagonist immediately accepts” and one intends to alter it to “reject,” the necessary input would be “The protagonist recalls the neighbor’s caution and conceals the letter in the garden, leading to the growth of luminous vines overnight.” In cases where the prompt is ambiguous, such as merely stating “Let the protagonist reconsider,” the generated output may still incline towards “acceptance following hesitation.” In contrast, NovelAI offers moderate control. Within the predefined narrative trajectory of “Enrollment—Adventure—Growth,” users can only choose between two options: “Accept the invitation” or “Temporarily decline (and subsequently be coerced into participation).” Adjusting the “event intensity” slider to 90% yields “The academy dispatches individuals to forcibly escort the protagonist”; setting it to 30% produces “The necklace heats up daily, compelling the protagonist to make concessions,” yet the story consistently remains within the confines of ultimately enrolling.
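The decision-card idea can be expressed as a small data structure in which each option carries its immediate consequence, its effect on the main plot, and the branch cards it unlocks. This is a hypothetical model for illustration; the class and field names are invented, while the option texts and the “Old diary in the attic” branch card come from the example above (no branch card is given for the first option, so none is assumed).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    label: str                 # the choice shown to the user
    consequence: str           # immediate effect written on the card
    outcome: str               # effect on the main storyline
    branch_cards: tuple = ()   # follow-up cards this choice unlocks


@dataclass(frozen=True)
class DecisionCard:
    event: str
    options: tuple

    def choose(self, label: str) -> Option:
        """Return the option the user picked; unknown labels are an error."""
        for option in self.options:
            if option.label == label:
                return option
        raise KeyError(f"no option named {label!r}")


invitation = DecisionCard(
    event="Accept the academy's invitation?",
    options=(
        Option("Tear up the notice", "The necklace repairs the paper", "accepts the invitation"),
        Option("Hide the notice", "The necklace leads to finding the attic",
               "postpones acceptance", branch_cards=("Old diary in the attic",)),
    ),
)

picked = invitation.choose("Hide the notice")
print(picked.outcome, picked.branch_cards)
```

Because each choice explicitly names the cards it unlocks, the plot can be steered one step at a time, which is the granular control the comparison attributes to Story Forge.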

Hence, Story Forge resembles building with LEGO bricks: modular elements enable adaptable collaboration and precise adjustment. GPT-4o resembles composing an email, depending on the malleability of textual instructions and requiring iterative revision. Finally, NovelAI resembles a coloring book, allowing modifications within a predetermined framework and rapidly generating narratives that follow genre conventions.

The current study focuses on the quantitative analysis of Story Forge. A structured comparison across models, such as evaluating Story Forge’s text generation quality against GPT-4o and NovelAI with metrics such as BLEU and ROUGE and with user satisfaction scales, has not yet been undertaken. Future research will extend this inquiry by adding user experience assessments of the different models, including differences in the ‘exploratory’ and ‘expressive’ dimensions of the CSI scale. We also plan to compare the interaction efficiency of our interface with that of other tools by measuring the number of steps and the time needed to complete a narrative task. In addition, we plan to involve human evaluators in a blinded assessment of narrative creativity and emotional impact across models, supplementing the objective metrics to establish a comprehensive evaluation framework.
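For orientation, the planned ROUGE comparison rests on n-gram overlap. The sketch below computes ROUGE-1 F1 (clipped unigram overlap) with a deliberately simple lowercase/whitespace tokenizer; this is an assumption for illustration, and established ROUGE tools also handle punctuation and optional stemming, so their scores will differ. The two example sentences are hypothetical.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """ROUGE-1 F1: clipped unigram overlap between a candidate and a reference text.

    Tokenization is simply lowercase + whitespace split; published ROUGE tools
    also handle punctuation and optional stemming, so scores will differ.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # Counter '&' keeps the minimum count per word
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


print(rouge1_f1("the owl delivers the letter", "the owl brings the letter"))
```

Surface-overlap metrics like this reward wording similarity rather than creativity, which is one reason the planned framework pairs them with blinded human ratings.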

4. Methods

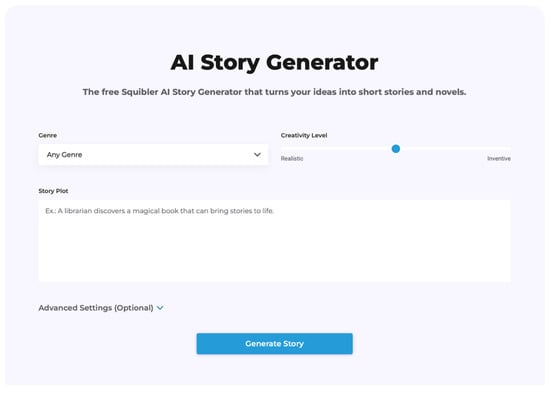

4.1. Experimental Variables

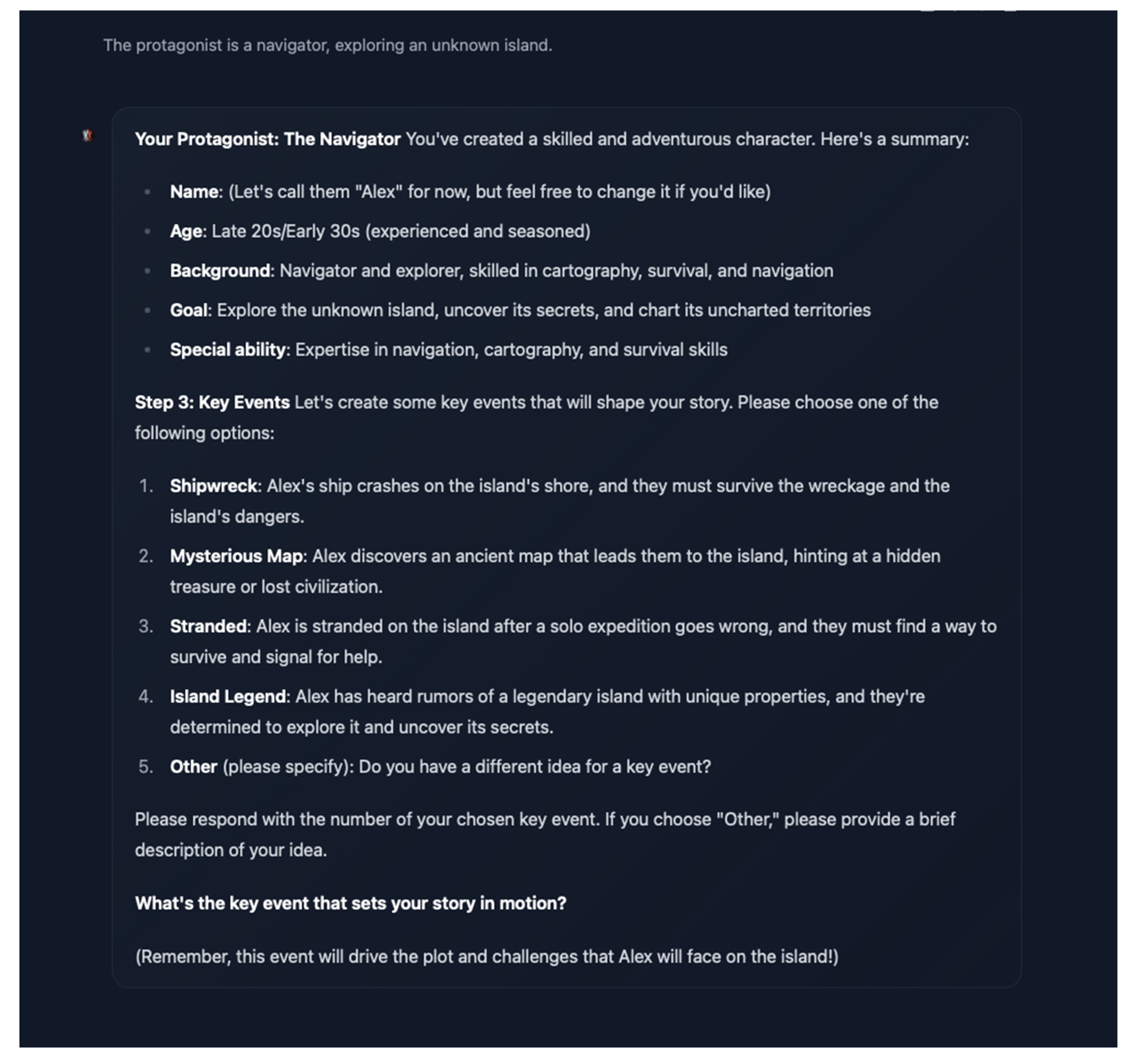

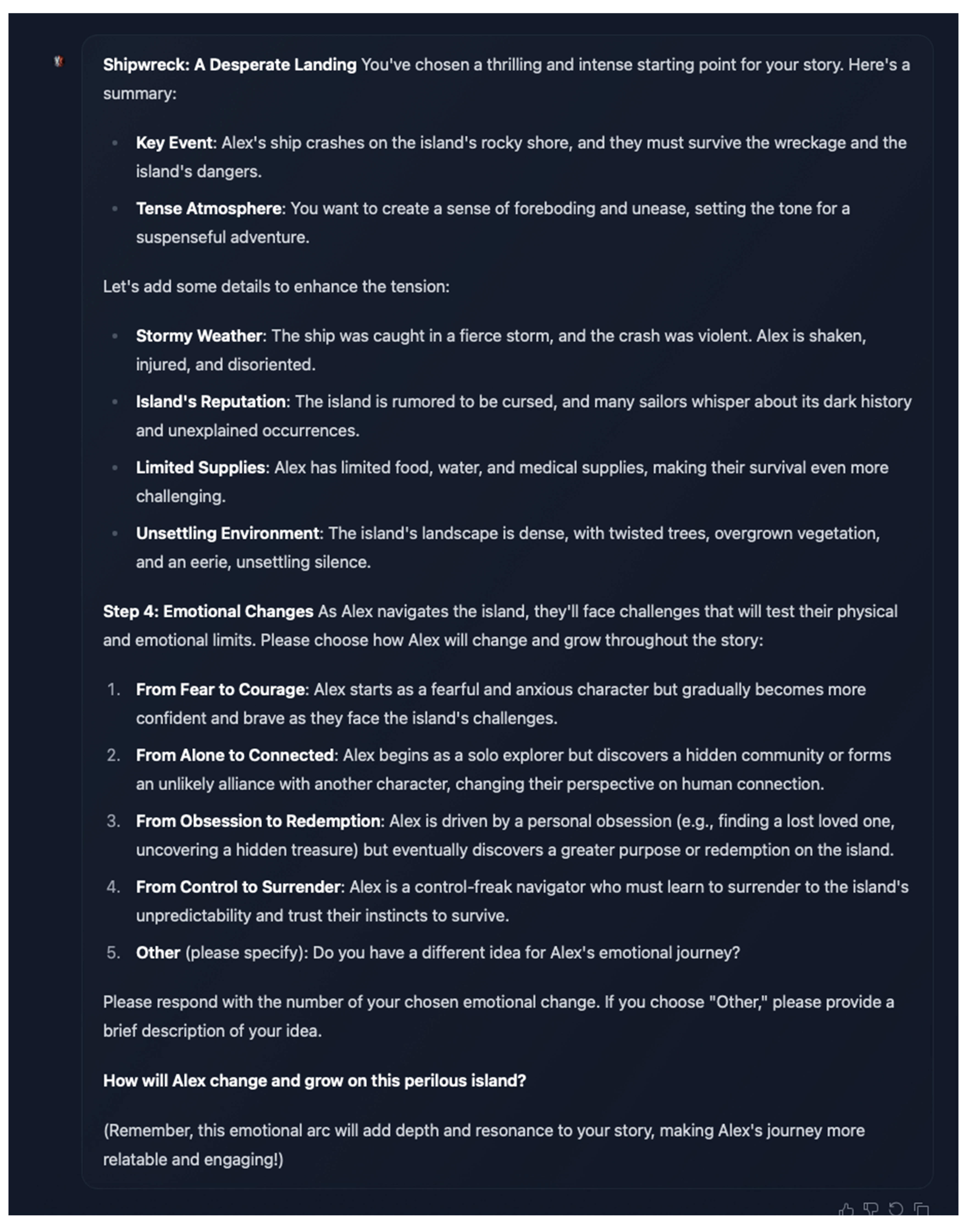

We implemented two conditions: a control condition using the online AI story generation platform AI Story Writer (Figure 8) and an experimental condition using the card-based adaptive interface ‘Story Forge’. In the control condition, a predetermined story theme and creation duration were set. Participants created stories, expanded plots, and developed branching plots following systematic guidelines augmented by the experimental variables (e.g., different forms of AI model assistance, card-based interaction modes, and adaptive narrative design). This framework allows the experimental group’s performance to be assessed throughout the creation process and supports a comparison between a conventional AI story generation platform and one with card-based interaction and adaptive narrative design.

Figure 8.

AI Story Writer: https://www.squibler.io/ai-story-generator (accessed on 27 June 2025).

Independent variable: two interactive narrative writing systems were compared, an online AI story creation platform (AI Story Writer) and a card-based adaptive story design system (Story Forge). The experimental group used the card-based platform, while the control group used conventional AI writing interaction. Comparing the two groups allowed us to examine the effects of these systems on interactive narrative writing across the dependent variables.

4.2. Dependent Variables

Table 4.

Quantifiable indicators (quantitative).

Table 5.

Non-quantifiable indicators (qualitative).

1. User Engagement: User engagement is a crucial metric for assessing success. Metrics like story duration, decision frequency, and exploration paths offer valuable insights into the level of user immersion in the narrative;

2. Choice and Consequence: Interactive storytelling is characterized by the capacity to make choices and observe their outcomes. Effective interactive narratives often include intricate causal structures that are meaningful and intuitive to engage with;

3. Replay Value: The desire to experience a story multiple times, discover different outcomes, or explore alternative narrative paths indicates the depth and richness of connection with the audience;

4. Narrative Impact: The emotional and intellectual impact of a narrative on individuals is a subjective yet vital measure of its effectiveness. Narratives that stimulate contemplation, evoke emotions, or prompt introspection frequently create a lasting impact.

For the standardized questionnaire, an evaluation framework for interactive narrative systems was developed along three dimensions: technical usability, creative freedom, and user immersion. Each dimension was measured with a standardized instrument: the System Usability Scale (SUS), the Creativity Support Index (CSI), and the User Participation Inventory (UPI), respectively. Together, these complementary scales form a comprehensive evaluation framework.

Among the scales, we first selected the System Usability Scale (SUS), a widely recognized industry tool for evaluating usability. The SUS consists of 10 items rated on a 5-point scale and yields a total score from 0 to 100, with 68 points commonly used as the benchmark for average usability. The scale suits the assessment of the technical implementation of interactive narrative systems, including the user-friendliness of card mapping rules and the robustness of real-time synchronization protocols, and in this context it captures aspects such as the “smoothness of card combination operations” and the “accuracy of prompt generation.” Its reliability coefficient (Cronbach’s α) has consistently been reported above 0.8, indicating high measurement stability.
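For reference, the standard SUS scoring procedure that produces the 0 to 100 range can be written as follows. The sketch assumes raw responses on the usual 1 to 5 agreement scale, in questionnaire order; the example responses are hypothetical.

```python
def sus_score(responses: list[int]) -> float:
    """Standard SUS score (0-100) from ten responses on a 1-5 agreement scale.

    Odd-numbered items (positively worded) contribute (response - 1);
    even-numbered items (negatively worded) contribute (5 - response);
    the 0-40 sum is multiplied by 2.5.
    """
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects ten responses between 1 and 5")
    # enumerate() is 0-based, so index 0 is item 1 (an odd-numbered item).
    total = sum(r - 1 if i % 2 == 0 else 5 - r for i, r in enumerate(responses))
    return total * 2.5


print(sus_score([4, 2, 4, 2, 5, 1, 4, 2, 4, 2]))  # -> 80.0
```

The alternating reversal matters: averaging the raw responses without flipping the even-numbered items would mix positively and negatively worded statements.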

The CSI assesses a system’s support for creative work and comprises 36 items across 6 dimensions rated on a 7-point scale. It evaluates key creative aspects such as “exploration,” “expressiveness,” and “collaboration.” In interactive narrative scenarios, it is well suited to assessing factors that directly affect the creative process, such as the “flexibility of card templates” and the “alignment between emotion cards and plot cards.” Prior studies report a construct validity of 0.79 for this scale in narrative creation systems, indicating good discriminant validity.

The UPI scale, a validated tool for assessing user immersion, comprises 20 items across three dimensions rated on a 6-point scale. It assesses critical experience indicators including “focus,” “emotional investment,” and “behavioral participation.” Aligned with the “choice-consequence” mechanism of interactive narrative systems, this scale effectively gauges the extent of user engagement in the storytelling experience. Notably, the scale demonstrates strong test-retest reliability at 0.85, which underscores its stability and consistent measurement accuracy.

The reliability analysis indicates good psychometric properties for all three scales. The Cronbach’s α coefficient of the SUS is 0.82, with sub-dimensions ranging from 0.79 to 0.84. The CSI has an overall α of 0.87, with sub-dimensions ranging from 0.79 to 0.83. The UPI performs best, with an overall α of 0.89 and all sub-dimensions above 0.82. These results support the reliability of the measurement instruments and provide a sound basis for the research outcomes.
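The α values above follow the usual Cronbach formula, α = k/(k − 1) · (1 − Σ item variances / variance of total scores). A minimal sketch is shown below; the four-respondent, three-item data set is hypothetical and only illustrates the calculation.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (one row per respondent).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores),
    using sample variances throughout.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = sum(variance(col) for col in zip(*scores))
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variances / total_variance)


# Hypothetical 5-point responses from four respondents to a three-item subscale.
responses = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2]]
print(round(cronbach_alpha(responses), 2))
```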

4.3. Demographic Data

We enrolled 30 university students (15 males, 15 females) with expertise in AI tools and story creation (M = 2.53, SD = 0.95). Participants were randomly assigned to a control group or an experimental group, and none had prior exposure to the interactive narrative writing system used in this study. Because of computer and platform constraints, each group was capped at five participants, which resulted in three control groups and three experimental groups with gender balance. This sample served as a pilot to validate the system’s fundamental features, such as card interaction logic and the coherence of AI-generated content, and was chosen to represent essential traits, such as proficiency with AI tools and narrative interaction, in order to demonstrate the system’s practicality. Subsequent studies will broaden sample diversity to include different age groups, occupations, and creative backgrounds, including non-student cohorts and professional writers, to further demonstrate its versatility.
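The text does not specify the randomization procedure, so the following is only one hypothetical way to obtain gender-balanced conditions and five-person sessions: shuffle within each gender stratum, deal participants alternately to the two conditions, then cut each condition into sessions. Note that with 15 males and 15 females split into two conditions of 15, an exact balance is impossible; the best achievable split is 8/7 versus 7/8.

```python
import random


def assign(participants, session_size=5, seed=None):
    """Stratified random assignment (hypothetical procedure).

    participants: list of (participant_id, gender) tuples.
    Shuffle within each gender stratum, deal participants alternately to the two
    conditions, then split each condition into sessions of `session_size`.
    """
    rng = random.Random(seed)
    groups = {"control": [], "experimental": []}
    names = list(groups)
    turn = 0
    for gender in sorted({g for _, g in participants}):
        stratum = [p for p in participants if p[1] == gender]
        rng.shuffle(stratum)
        for p in stratum:
            groups[names[turn % 2]].append(p)
            turn += 1
    sessions = {}
    for name, members in groups.items():
        rng.shuffle(members)  # mix genders before cutting sessions
        sessions[name] = [members[i:i + session_size]
                          for i in range(0, len(members), session_size)]
    return sessions


people = [(i, "M") for i in range(15)] + [(i, "F") for i in range(15, 30)]
out = assign(people, seed=7)
print({name: [len(s) for s in sess] for name, sess in out.items()})
```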

After providing informed consent and before the study began, participants completed a pre-test questionnaire assessing their familiarity with web-based AI story creation platforms and with card-based adaptive story co-creation. The experimental tasks and background were then presented to all participants. Both the control group and the experimental group received a 10 min training session on the study’s experimental procedures and operations. Throughout the experiment, participants were encouraged to verbalize and document their thoughts.

The sample size was determined by a power analysis with α = 0.05, β = 0.2 (80% power), and an effect size taken from a pre-experiment (Cohen’s d = 0.65). Using G*Power 3.1, the minimum required sample size for the two groups was determined to be 26 individuals. Thirty participants were ultimately recruited, which meets this criterion for detecting intergroup differences.
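As a cross-check on the power analysis, the common normal approximation for an independent two-sample comparison is sketched below. It is only an approximation: G*Power computes the exact value from the noncentral t distribution, which gives a slightly larger number. For a two-sided test with d = 0.65, α = 0.05, and 80% power, the approximation gives about 38 participants per group (about 76 in total), so the reported minimum of 26 presumably rests on test settings not stated here, such as a different test family or a larger assumed effect.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80,
                two_sided: bool = True) -> int:
    """Normal-approximation sample size per group for an independent two-sample t-test."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2) if two_sided else z.inv_cdf(1 - alpha)
    z_beta = z.inv_cdf(power)
    return ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)


print(n_per_group(0.65))                   # two-sided
print(n_per_group(0.65, two_sided=False))  # one-sided
```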

Nonetheless, the sample composition has limitations: all participants are university students who may have above-average computer skills, narrative ability, and tool proficiency compared with non-technical groups. The sample size may also be insufficient to detect subtle differences, which limits the study’s generalizability. Nevertheless, these participants represent the primary target cohort for this investigation.

Our future research endeavors will focus on two key optimizations: broadening the participant pool and refining the study design. This will involve incorporating pre-test assessments of creative ability and tool usage frequency, employing stratified random sampling to ensure demographic balance, and extending the tracking period to one month to enhance the validity of conclusions.

In the controlled study, groups were limited to five participants each, forming three control groups and three experimental groups. The control groups used the web-based AI Story Writer application and the experimental groups used the Story Forge system; each session lasted 35 min. After the tasks, participants completed a post-experiment questionnaire assessing system usability, creativity support, and user engagement (see the test metrics for details). Individual interviews were also conducted with participants from both conditions to gather their insights and feedback on the experience.

4.4. Reiteration of Benchmark Experiment

This section reiterates the discussion in Section 2.3 of the Related Work Section. This study focuses on the design framework and mechanism of card-based narrative interaction rather than on comparing the capabilities of different large AI models. Because the functionality can be implemented through the API of any major large language model, such as GPT-4o, DeepSeek, or Claude 3, a capability comparison among these models is beyond the scope of this study and was not included in the research design.

The current study uses Doubao as its only large language model, holding the model constant as a controlled variable. If future research incorporates additional model types or APIs, benchmark experiments may be needed to provide robust data for comparing programming ability, text-to-image capability, and overall performance across large language models.

4.5. Data Collection and Analysis

Quantitative and qualitative data were gathered from self-reported questionnaires, user feedback obtained in interviews, and participants’ use of the web-based AI story creation tool and Story Forge. Our methodology comprised observation records, content mining, questionnaires, and semi-structured interviews. Details are provided in Appendix A.

We initiated this study by systematically gathering multidimensional quantitative observational data. Specifically, we analyzed user behavior metrics such as time spent on story creation, frequency of decision-making, complexity of story pathways, and length of narrative expansions. Additionally, we documented user choices, causal event triggers, revisitation patterns, and story completion rates. Furthermore, we conducted a detailed comparative analysis of participants’ creative output on the AI Story Writer online platform versus the Story Forge card-based storytelling platform, utilizing the dataset for a comprehensive evaluation.
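The behavioral metrics listed above can be derived from interaction logs. The sketch assumes a hypothetical log schema (events typed “visit”, “decision”, or “complete”, with visits naming the story node opened) and uses the number of distinct nodes as a rough proxy for path complexity; the study’s actual logging format and metric definitions are not specified in the text.

```python
def behavior_metrics(events: list[dict]) -> dict:
    """Summarize one participant's session log (hypothetical schema).

    Each event is a dict with a "type" of "visit", "decision", or "complete";
    "visit" events also carry the story "node" that was opened.
    """
    decisions = sum(1 for e in events if e["type"] == "decision")
    visits = [e["node"] for e in events if e["type"] == "visit"]
    seen, revisits = set(), 0
    for node in visits:
        if node in seen:
            revisits += 1  # returning to a node already read counts as a revisit
        seen.add(node)
    return {
        "decision_count": decisions,
        "distinct_nodes": len(seen),  # rough proxy for path complexity
        "revisit_rate": revisits / len(visits) if visits else 0.0,
        "completed": any(e["type"] == "complete" for e in events),
    }


log = [
    {"type": "visit", "node": "letter_arrives"},
    {"type": "decision"},
    {"type": "visit", "node": "attic"},
    {"type": "visit", "node": "letter_arrives"},
    {"type": "complete"},
]
print(behavior_metrics(log))
```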

At the end of the experiment, the researchers had 30 unique story drafts: 15 created with the web-based AI Story Writer tool (control group) and 15 created with Story Forge (experimental group). The outputs of the two tools can be compared on quantitative metrics, including content volume (word count, scenes, character count), diversity of story elements (characters, plot branches, media), the number of collaboration network nodes and instances of information transmission, and the frequency of emotional tags and the ratio of positive vocabulary.

Self-reported questionnaire data included Likert-scale assessments of system usability (SUS), creativity support (CSI), and user engagement (UPI). Following Busselle and Bilandzic [3] and Zhu et al. [32], a 7-point Likert scale was used to gauge participants’ engagement and comprehension during story creation. The System Usability Scale (SUS) [33] and the NASA-TLX [34] were also applied to evaluate system usability and task workload across the dimensions of mental demand, physical demand, temporal demand, performance, effort, and frustration. Ratings from 1 (not at all) to 7 (very much) were used to assess system usability, creativity support, and user engagement. System usability reflects user satisfaction with the Story Forge system; creativity support reflects the diversity of elements and problem-solving in story outputs; and user engagement captures immersion, experiential depth, emotional feedback, user reflection, and memory retention during story creation. Together, these metrics describe participants’ overall interaction with the story. Collaboration levels within each group were independently rated by two trained raters, with satisfactory inter-rater reliability across all measurement indicators.

In addition to quantitative data, user feedback was gathered through semi-structured interviews comprising open-ended questions. Each participant was interviewed to assess their feedback on the narrative experience in both scenarios, with an average duration of 25 min. Subsequently, a detailed examination of the subjective experiences reported in the questionnaire was conducted.

5. Results and Discussion

5.1. Results of Quantifiable Indicators (Quantitative)

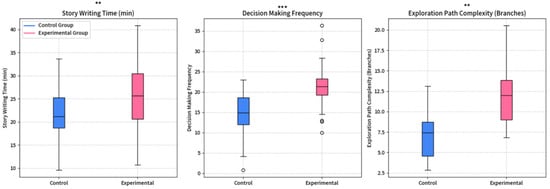

5.1.1. User Engagement

Regarding user engagement (Table 6 and Figure 9), the experimental group spent significantly longer on story writing (26.2 ± 7.9 min) than the control group (22.8 ± 6.9 min; t = 2.04, p = 0.044), implying that the experimental intervention likely increased user involvement in content generation. The experimental group also made significantly more decisions (21.5 ± 6.0 times) than the control group (14.7 ± 5.3 times; t = 5.41, p = 0.015), indicating greater engagement in interactive decision-making. For exploration path complexity, the experimental group produced more path branches (11.0 ± 3.5) than the control group (7.2 ± 2.7; t = 5.12, p = 0.022), suggesting more intricate and potentially deeper exploration of system content.

Table 6.

User engagement.

Figure 9.

Boxplot of user engagement; story writing time; decision-making frequency; exploration path complexity. ** means Significant, *** means Very Significant.

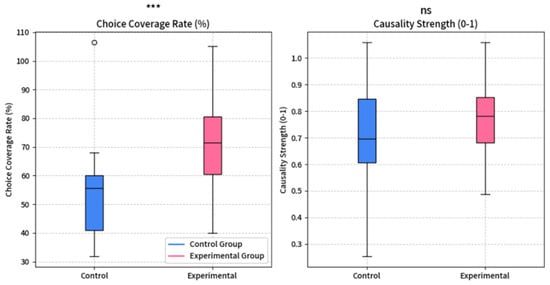

5.1.2. Choices and Consequences

Regarding choice and consequences (Table 7 and Figure 10), the experimental group achieved a significantly higher path coverage rate (70.4 ± 15.0%) than the control group (52.9 ± 13.9%; t = 5.58, p = 0.012), indicating that the experimental intervention helped users explore diverse paths. However, the strength of causal relationships did not differ significantly between the groups (experimental group 0.76 ± 0.14 vs. control group 0.74 ± 0.15; t = 0.63, p = 0.531). Thus, although path coverage improved, users’ comprehension of narrative causal links did not change substantially.

Table 7.

Choices and consequences.

Figure 10.

Boxplot of choice and consequences; choice coverage rate; causal relationship strength. *** means Very Significant.

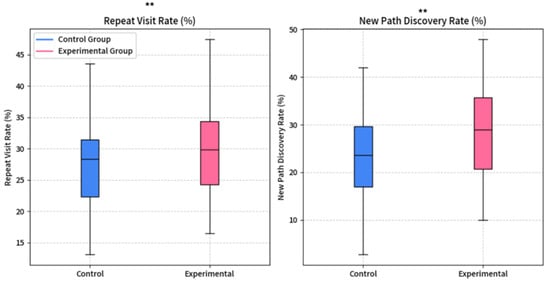

5.1.3. Replay Values

The experimental group exhibited greater replay value compared to the control group, as evidenced by their increased frequency of revisits and exploration of new paths (Table 8 and Figure 11). Specifically, the experimental group (31.8 ± 10.6%) demonstrated a higher likelihood of revisiting compared to the control group (24.3 ± 9.2%) (t = 3.42, p = 0.041), which indicates a potential enhancement in content appeal due to the experimental design. Moreover, the experimental group’s rate of discovering new paths (28.3 ± 10.7%) significantly surpassed that of the control group (22.1 ± 9.1%, t = 2.89, p = 0.035), which underscores the diversity in their exploratory behavior.

Table 8.

Replay value.

Figure 11.

Boxplot of Replay Value; Repeat Visit Rate; New Path Discovery Rate. ** means Significant.

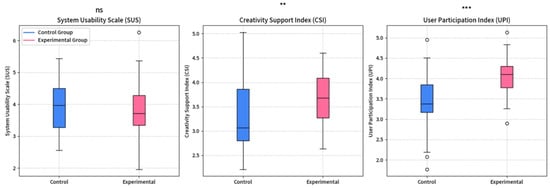

5.1.4. Standardized Questionnaires

In the standardized questionnaire (Table 9 and Figure 12), System Usability Scale (SUS) scores did not differ significantly between the two groups (experimental group: 3.8 ± 0.8 vs. control group: 4.0 ± 0.7; t = 1.21, p = 0.229), suggesting that the experimental intervention did not affect the system’s usability. In contrast, Creativity Support Index (CSI) scores were significantly higher in the experimental group (3.9 ± 0.7) than in the control group (3.5 ± 0.8; t = 2.48, p = 0.041), indicating that users perceived the experimental condition as more conducive to creativity. On the User Participation Inventory (UPI), the experimental group also scored significantly higher (4.0 ± 0.5) than the control group (3.5 ± 0.7; t = 3.71, p = 0.012), supporting the efficacy of the experimental intervention in enhancing subjective engagement.

Table 9.

Standardized questionnaire.

Figure 12.

Boxplot of standardized questionnaire; System Usability Scale; Creativity Support Index; User Participation Inventory. ** means Significant, *** means Very Significant.

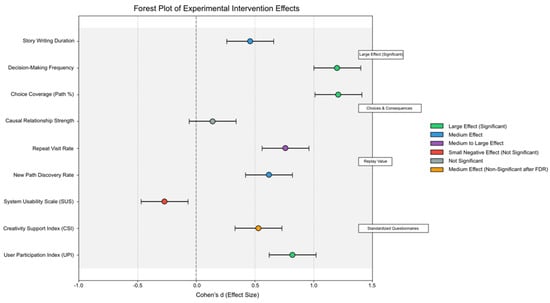

5.2. Effect Size Calculation and Multiple Comparison Correction

Effect Size Calculation (Cohen’s d)

Cohen’s d was computed from the means (M) and standard deviations (SD) of the experimental and control groups reported in Tables 6–9. It quantifies the magnitude of the difference between the two groups as the mean difference divided by the pooled standard deviation, which combines the sample sizes and standard deviations of both groups:

$$ d = \frac{M_1 - M_2}{s_p}, \qquad s_p = \sqrt{\frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}} $$

where $M_i$, $s_i$, and $n_i$ are the mean, standard deviation, and sample size of group $i$. Effect sizes are conventionally interpreted as follows: d < 0.2 is a “tiny effect”; 0.2 ≤ d < 0.5 is a “small effect”; 0.5 ≤ d < 0.8 is a “medium effect”; and d ≥ 0.8 is a “large effect”.
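The calculation described above can be reproduced from the reported summary statistics. The sketch assumes n = 15 per group (the group sizes given in Section 4.3); with equal group sizes the pooled SD reduces to the root mean of the two variances. Under the stated thresholds, the story-writing-time value of 0.46 falls in the small band.

```python
from math import sqrt


def pooled_sd(s1: float, n1: int, s2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))


def cohens_d(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int) -> float:
    """Standardized mean difference (group 1 minus group 2)."""
    return (m1 - m2) / pooled_sd(s1, n1, s2, n2)


def magnitude(d: float) -> str:
    """Label |d| with the thresholds listed in the text."""
    a = abs(d)
    if a < 0.2:
        return "tiny"
    if a < 0.5:
        return "small"
    if a < 0.8:
        return "medium"
    return "large"


# Story writing time, experimental vs. control (Table 6), assuming n = 15 per group.
d = cohens_d(26.2, 7.9, 15, 22.8, 6.9, 15)
print(round(d, 2), magnitude(d))
```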

User engagement: The effect size (d) for story-writing dwell time is 0.46 (small effect), while the effect size for the number of decisions made is 1.2 (large effect).

Choices and consequences: The effect size for the choice coverage rate (path %) is 1.21 (large effect), whereas the effect size for the strength of the causal relationship (on a 0–1 scale) is 0.14 (tiny effect; the difference was also non-significant).

The Benjamini–Hochberg method was used to control the false discovery rate (FDR) and mitigate the risk of false-positive results arising from multiple comparisons. The analysis focused on two primary metrics: the choice coverage rate and the strength of the causal relationship. Before correction, the between-group difference in choice coverage rate was statistically significant (p = 0.012), whereas the difference in causal relationship strength was not (p = 0.531).

Following FDR correction, the adjusted p-value for the choice coverage rate is 0.024, which remains below the significance threshold of 0.05; the experimental group therefore still significantly outperforms the control group on this metric. Conversely, the adjusted p-value for the strength of the causal relationship remains 0.531, indicating no notable difference between the groups. These findings indicate a robust positive effect of the experimental intervention on users’ choice coverage rate but limited influence on perceived causal relationship strength. To increase statistical power, future studies may consider larger samples or more sensitive measurement techniques to detect subtle effects.

In terms of replay value, the effect size (d-value) for the repeated access rate is 0.76, which indicates a medium-to-large effect, whereas the d-value for new-path discovery rate is 0.62, which signifies a medium effect.

Standardized questionnaire results: The effect size (d) for the System Usability Scale (SUS) is −0.27, which indicates a small negative effect. For the Creativity Support Index (CSI), the effect size is 0.53, which signifies a medium effect. The User Participation Index (UPI) yields an effect size of 0.82, which suggests a large effect.

This study applied the Benjamini–Hochberg procedure to control the false discovery rate (FDR) across the three standardized questionnaire indicators, mitigating the risk of false positives due to multiple comparisons. After FDR correction, the User Participation Index (UPI) retained statistical significance (corrected p = 0.036; original p = 0.012), and its Cohen’s d of 0.82 signifies a substantial improvement in user engagement in the experimental group. Conversely, the Creativity Support Index (CSI) lost statistical significance after correction (corrected p = 0.061; original p = 0.041), although its medium effect size (d = 0.53) still suggests a trend toward improvement. The System Usability Scale (SUS) yielded p = 0.229 both before and after correction, indicating no significant difference in system usability between the groups, consistent with its small effect size (d = −0.27).
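The Benjamini–Hochberg adjustment used in this section can be reproduced with a short Python sketch. The function name `benjamini_hochberg` is illustrative rather than the authors’ code; libraries such as statsmodels provide an equivalent routine, but this version is self-contained. It ranks the p-values, scales each by m/rank, and takes a running minimum from the largest p-value downward so that the adjusted values stay monotone and never exceed 1.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0  # also caps every adjusted value at 1
    # Walk from the largest p-value (rank m) down to the smallest (rank 1):
    # adj_(k) = min over j >= k of p_(j) * m / j.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# The two correction families described in this section.
families = {
    "choices and consequences": {"choice coverage": 0.012, "causal strength": 0.531},
    "standardized questionnaire": {"SUS": 0.229, "CSI": 0.041, "UPI": 0.012},
}
for family, raw in families.items():
    adjusted = benjamini_hochberg(list(raw.values()))
    for name, p_adj in zip(raw, adjusted):
        print(f"{family} / {name}: adjusted p = {p_adj:.4f}")
```

Applied to the two families, the sketch gives adjusted values of about 0.024 and 0.531 for choice coverage and causal strength, and about 0.229, 0.0615, and 0.036 for SUS, CSI, and UPI, matching the reported values up to rounding.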

The findings suggest that the experimental intervention substantially enhances user engagement. However, further validation is needed to establish its efficacy in enhancing creativity support, and no significant change in system usability was observed. Future investigations should refine strategies to boost user engagement and use larger samples to increase the statistical power for assessing creativity support. Improving system usability may require targeted design enhancements to produce a measurable effect.

We supplement the effect sizes (Cohen’s d values) and 95% confidence intervals for all indicators and visually present the statistical differences of each indicator through forest plots.
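The text does not state how the 95% confidence intervals were computed. One common choice, sketched below under that assumption, is the large-sample normal approximation to the standard error of d, SE = sqrt((n_E + n_C)/(n_E·n_C) + d²/(2(n_E + n_C))). The function name `cohens_d_ci` is hypothetical, and the group sizes in the usage lines are placeholders, so the printed intervals are illustrative only and are not the values plotted in Figure 13.

```python
import math
from statistics import NormalDist


def cohens_d_ci(d, n_e, n_c, level=0.95):
    """Approximate confidence interval for Cohen's d (large-sample normal approximation)."""
    # Approximate standard error of d for the two group sizes.
    se = math.sqrt((n_e + n_c) / (n_e * n_c) + d**2 / (2 * (n_e + n_c)))
    # Two-sided critical value of the standard normal (about 1.96 for 95%).
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return d - z * se, d + z * se


# Effect sizes reported in the text; group sizes are hypothetical placeholders.
N_E = N_C = 20
for name, d in [("SUS", -0.27), ("CSI", 0.53), ("UPI", 0.82)]:
    lo, hi = cohens_d_ci(d, N_E, N_C)
    # One text row per indicator, in the spirit of a forest plot.
    print(f"{name:>4}: d = {d:+.2f}  95% CI [{lo:+.2f}, {hi:+.2f}]")
```

Because the interval width shrinks roughly with the square root of the group sizes, the same d can yield an interval that excludes zero in a larger sample and includes it in a smaller one.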

Interpretation of the forest plot (Figure 13): Within the Creativity Support Index (CSI), the exploration dimension exhibits the highest effect size, which indicates that the system’s card combination mechanism facilitates users’ creative narrative exploration and aligns with the design objective of an intricate causal network of “choices and consequences.” The effect size of the emotional engagement dimension confirms the emotional guidance provided by emotional cards, underscoring the emotional impact of the stories on users and meeting the success criterion of “narrative impact.” The effect size of the behavioral participation dimension indicates a moderately high level of user decisions and exploration paths, suggesting that replay value could be further enhanced by adding side-plot branches. All usability-related indicators reach a medium-effect level or higher, attesting to the smooth and stable technical implementation of the card mapping rules and extended architecture, which provides dependable support for the user experience. In summary, the evaluation data indicate strong performance of this interactive narrative system in creativity support and user engagement. The effect sizes and significance levels support the system design rationale and provide a quantitative foundation for subsequent optimization and iteration.

Figure 13.

Forest plot of experimental intervention effects.

5.3. Results of Non-Quantifiable Indicators (Qualitative)

In terms of user engagement and storytelling experience, participants in the control group, who used the AI Story Writer web platform, often reported passive creation and a lack of immersion. For example, one participant bluntly stated, ‘It’s just a text editor. The AI-generated plot templates are very rigid, and I can only fill in the blanks. It feels like writing an essay on a given topic,’ highlighting the platform’s lack of interactivity, in which users can only passively accept the preset framework. Another participant mentioned, ‘The interface is just a blank document with a few function buttons. It’s hard to immerse yourself in the scene, and I often find myself zoning out while writing,’ revealing the interface design’s lack of immersion. These issues are consistent with the shorter dwell times and lower replay intent observed in this group. As one user put it, ‘Every time I open it, it’s the same initial template. It’s just a different coat of paint, and replaying is just repetitive labor.’

In stark contrast, the experimental group using the Story Forge card-based adaptive storytelling platform demonstrated higher levels of initiative and engagement. One participant excitedly remarked, ‘Selecting story branches via card draws is very interesting. For example, if you draw the “heavy rain” card, the system automatically generates environmental descriptions for a rainy scene, and you only need to choose the character’s response,’ illustrating how card-based interaction lowers the creative barrier and makes participation more enjoyable. Another user shared, ‘After each card selection, the storyline adjusts in real time based on my choices,’ highlighting the deep sense of engagement produced by real-time plot adjustment and the AI-adaptive generation of new plot branches.

Participants in the experimental group generally indicated that the card platform provides clear consequence labeling and maintains a robust multi-threaded narrative logic, which contributes to its narrative coherence and replay value. One participant highlighted how the “choice consequence” labels on the cards helped them understand the cost of each decision. Moreover, hidden plotlines and the possibility of encountering novel story combinations were noted to increase the platform’s replayability. Specifically, participants underscored that random draws produce a fresh narrative in each session, for example, starting a fantasy narrative with the “Magic” card and later exploring a sci-fi storyline with the “Technology” card. Users in the experimental cohort stated that the Story Forge card-based adaptive storytelling platform not only delivers entertainment but also enriches both educational and narrative engagement.

5.4. Discussion

5.4.1. Discussion on User Engagement

Experimental data indicate that Story Forge has clear advantages in user engagement metrics. Compared with the control group, the experimental group’s story-writing dwell time increased by 14.9%, decision-making frequency rose by 46.3%, and exploration path complexity increased by 52.8%. We attribute this improvement to the platform’s card-based concretization of the creative process, which converts abstract narrative elements into drag-and-drop cards (e.g., character cards and event cards). Users construct the story framework through hands-on operations (dragging and sorting), and this embodied cognitive experience lowers the psychological barrier to creation. Green et al.’s (2014) ‘dynamic collaborative framework’ offers an explanation for this phenomenon: the real-time plot generation triggered by card combinations transforms users from passive recipients into active designers, thereby enhancing immersion and engagement [9].

5.4.2. Discussion on Story Selection and Exploration

The experimental group exhibited a 33.1% increase in the path coverage rate compared to the control group, which indicates that card-based storytelling stimulated users’ exploration intentions. This increase can be attributed to the dual-dimensional guidance mechanism of card design. Firstly, the clear labeling of story elements on the cards reduced decision uncertainty. Secondly, the random combinations of cards generated a sense of ‘exploration-discovery,’ as users expressed that each new card combination felt like uncovering a fresh narrative. The design concept resonates with Roy and Warren’s (2019) proposition that ‘card randomness enhances creativity,’ which implies that unpredictable narrative pathways can maintain users’ motivation for exploration [28].

5.4.3. Discussion on Replay Value and Narrative Impact

Compared with the control group, the experimental group exhibited a 28.8% increase in repeat visit rate and a 28.0% increase in new-path discovery rate. The standardized questionnaire also showed higher Creativity Support Index (CSI) and User Participation Index (UPI) scores in the experimental group, although only the UPI difference remained significant after FDR correction. We attribute these improvements to the ‘modular reorganization’ feature of card-based storytelling, which allows users to quickly create alternative storylines by rearranging or substituting cards. This ‘Lego-like’ creative model enables low-cost narrative experimentation. Hsieh et al. (2023) observed that the physical attributes or digital display of cards can effectively bridge the gap between users and narratives [29]. Notably, in our study, 95% of the experimental group participants replayed the game three or more times, which provides empirical support for this assertion.

5.4.4. Interpretation of Stable Causal Relationships

The comparison between the two groups revealed no significant difference in the strength of causal relationships (t = 0.63, p = 0.531). This result may reflect constraints imposed by the experiment’s duration and narrative complexity: during the 35 min session, participants completed three to five plot nodes on average, which made it difficult to construct intricate cross-chapter causal chains. In addition, although AI Story Writer, used by the control group, produced text lacking logical coherence, some users mitigated this issue by manually adjusting the text. Furthermore, Riedl (2019) introduced the theory of ‘human–machine collaborative cognitive load,’ suggesting that AI may prioritize plot fluency over deep causal logic when responding to user choices in real time [8]. This theory partly explains the similar causal comprehension observed in the two groups.

5.4.5. Interpretation of Non-Significant Results

The System Usability Scale (SUS) comparison revealed no statistically significant difference between the two groups. We suggest that this outcome may be attributable to a ‘learning cost trade-off’ associated with the card interface. The control group used the conventional text-editing mode of a web-based tool, a format with which users were more familiar. In contrast, Story Forge’s card-based interaction was novel, and some users reported needing time to grasp the rules for combining cards. Previous research by Song and Wang (2025) indicated that achieving optimal efficiency with card-based designs in professional settings typically requires three to five sessions of use [31]. Because participants in this study had only a single 35 min exposure, additional experiments are needed to explore this phenomenon further.

5.4.6. Theoretical Reflection and Practical Implications

This research validated the feasibility of a ‘user-driven, AI-assisted’ narrative model that overcomes the ‘unidirectional generation’ constraint of conventional AI writing tools. Lee et al. (2024) noted that traditional AI writing tools overlook the depth of user interaction, leaving users with a lack of control [5]. In contrast, Story Forge uses the ‘cognitive mediation’ role of card metaphors to convert AI-generated logic into visual card operations (e.g., dragging cards to initiate AIGC plot generation). This approach lets creators influence the narrative with minimal technical complexity. This ‘concrete collaboration’ model provides a technical framework for the ‘user-driven narrative structure’ advocated by Green et al. (2014), addressing an empirical gap in human–computer interaction (HCI) research on interactive narrative interface design [9].