Intelligent Battery-Designed System for Edge-Computing-Based Farmland Pest Monitoring System

Abstract

1. Introduction

- This study presents a low-cost, solar-powered edge AI system that integrates solar energy with supercapacitors to enable long-term outdoor operation, significantly enhancing the feasibility and sustainability of intelligent pest detection in farmland environments;

- This study develops an image-based monitoring system for pests of cruciferous plants and demonstrates its novel application on resource-constrained edge devices;

- This study establishes a dataset of 8421 images of yellow-striped flea beetles.

2. Materials and Methods

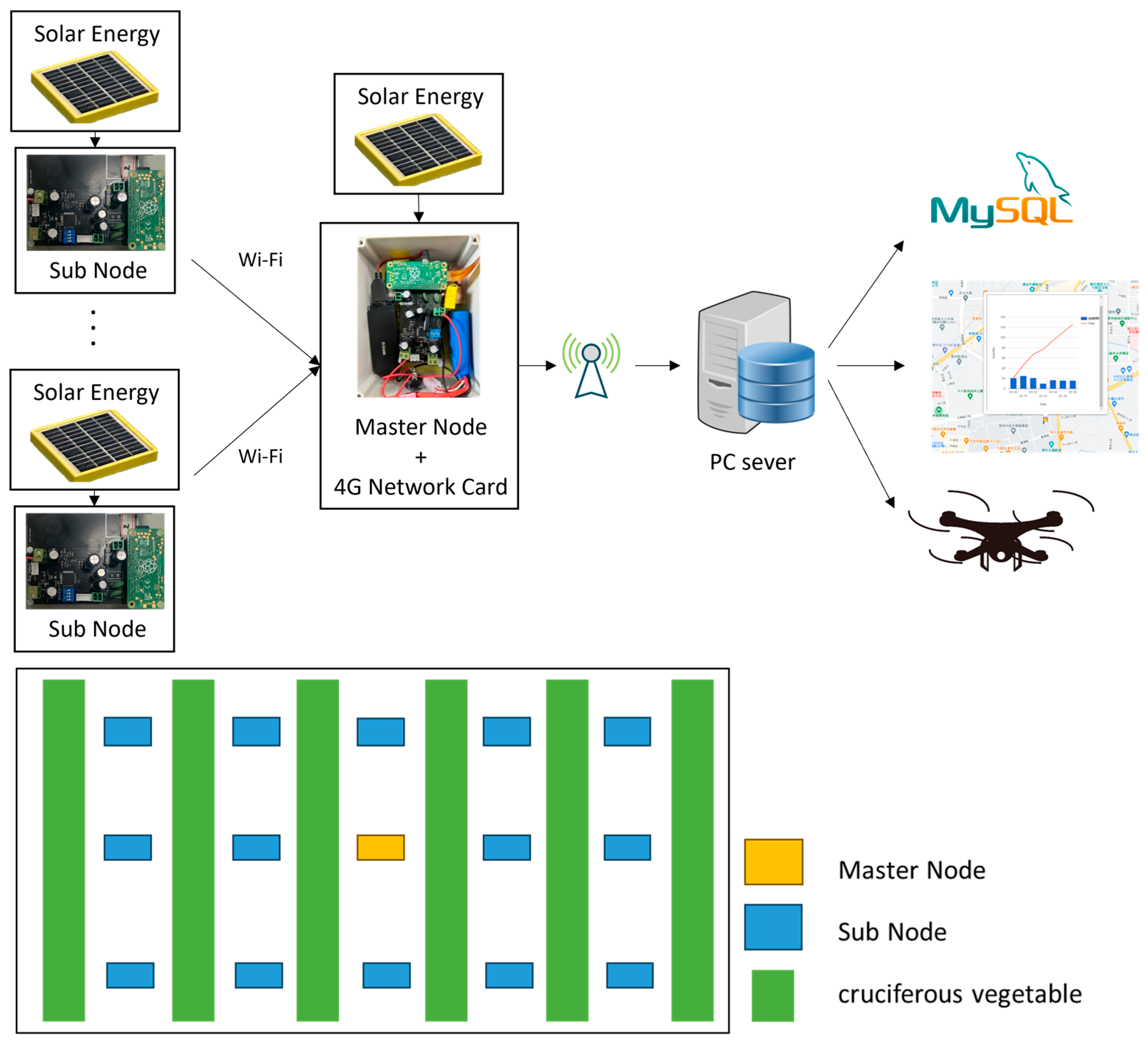

2.1. System Architecture

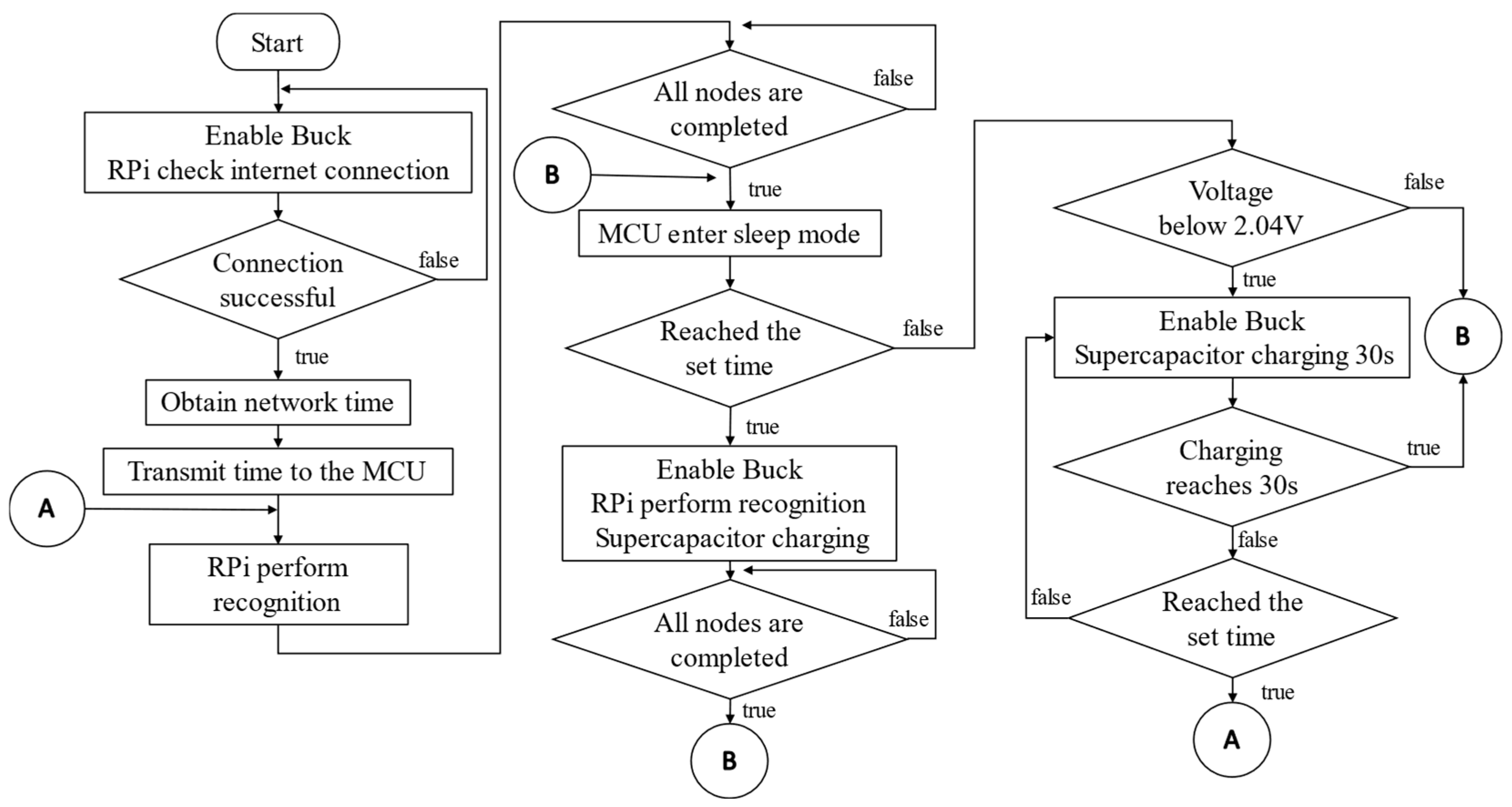

2.2. Power Management Strategy

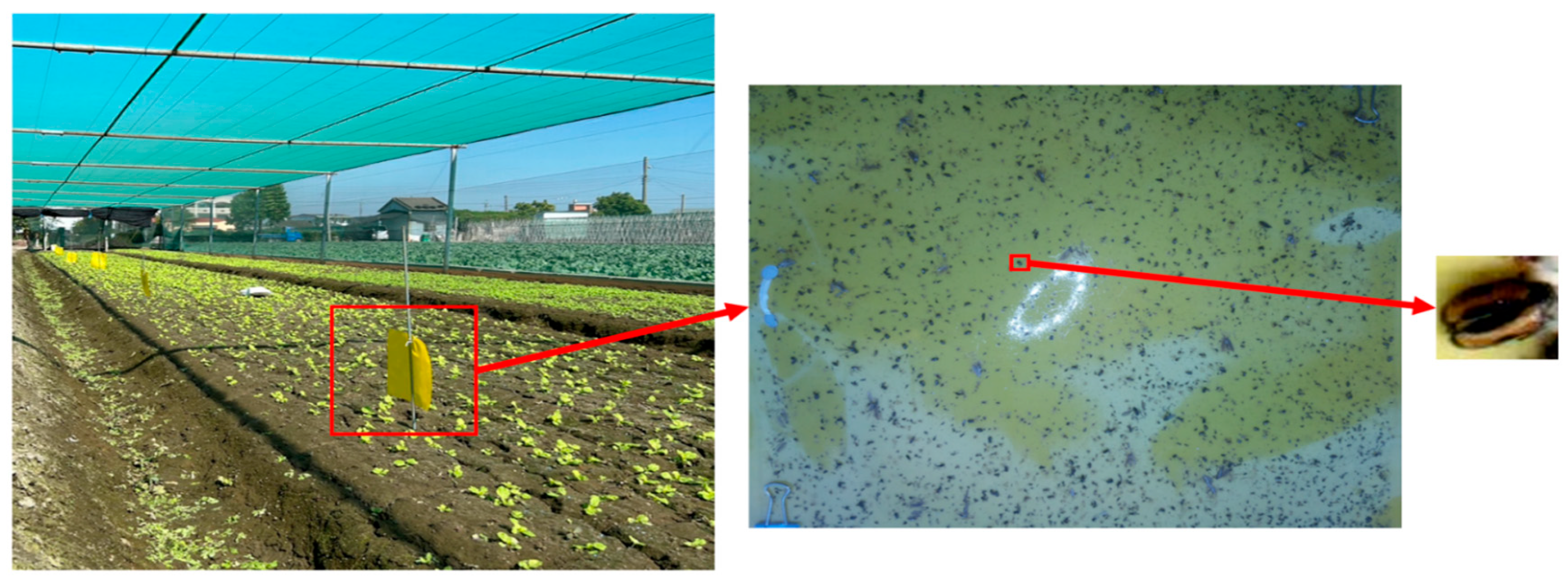

2.3. Data Acquisition

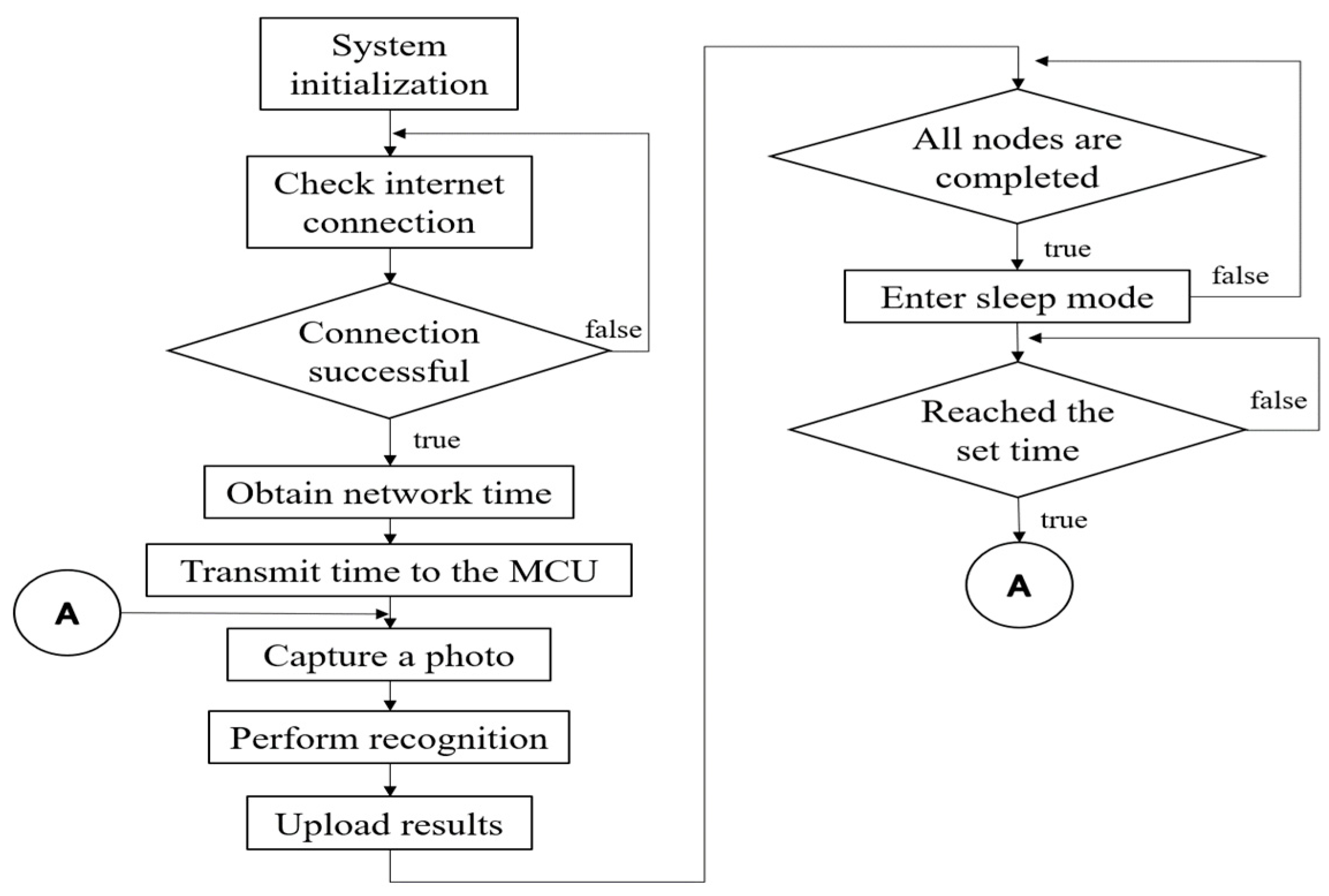

2.4. Computing and Shooting Components

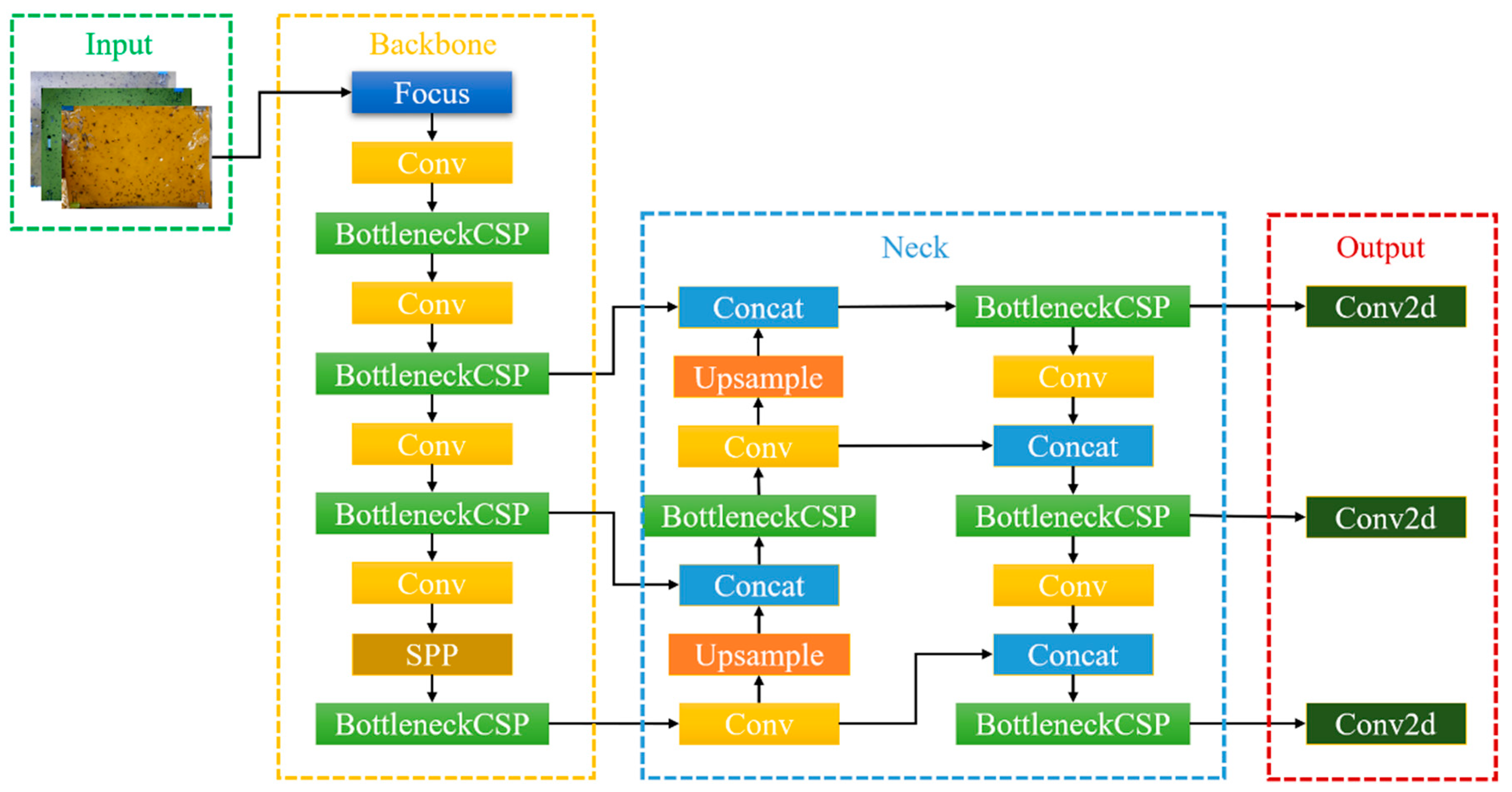

2.5. YOLOv5

- Input: Performing mosaic data augmentation and adaptive image scaling;

- Backbone: Extracting target features;

- Neck: Applying pooling operations to feature maps of different sizes;

- Head: Predicting the bounding boxes and output categories.
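The adaptive image scaling step listed under Input can be sketched as follows. This is a minimal illustration of the commonly used letterbox approach (resize with the aspect ratio preserved, then pad only up to the next multiple of the network stride), not the authors' code. The function name `letterbox_shape`, the 640-pixel target size, and the stride of 32 are assumptions chosen for illustration; the 3280 × 2464 input is the image resolution listed in the training parameters.

```python
def letterbox_shape(width, height, target=640, stride=32):
    """Compute the resized and padded shape for adaptive image scaling.

    ASSUMPTION: target=640 and stride=32 are illustrative values,
    not settings reported in the paper.
    """
    # Scale factor that fits the image inside a target x target square.
    ratio = min(target / width, target / height)
    new_w, new_h = round(width * ratio), round(height * ratio)
    # Pad only up to the next stride multiple instead of the full square,
    # which keeps the amount of padding (and wasted compute) small.
    pad_w = (target - new_w) % stride
    pad_h = (target - new_h) % stride
    return ratio, (new_w, new_h), (new_w + pad_w, new_h + pad_h)


ratio, resized, padded = letterbox_shape(3280, 2464)
print(f"scale {ratio:.4f}, resized {resized}, padded {padded}")
```

Padding to the nearest stride multiple rather than to a full square is what makes the scaling "adaptive": fewer padded pixels are passed through the backbone, which matters on a resource-constrained edge device.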

2.6. Data Augmentation with StyleGAN3

2.7. Server and User Interface

3. Results and Discussion

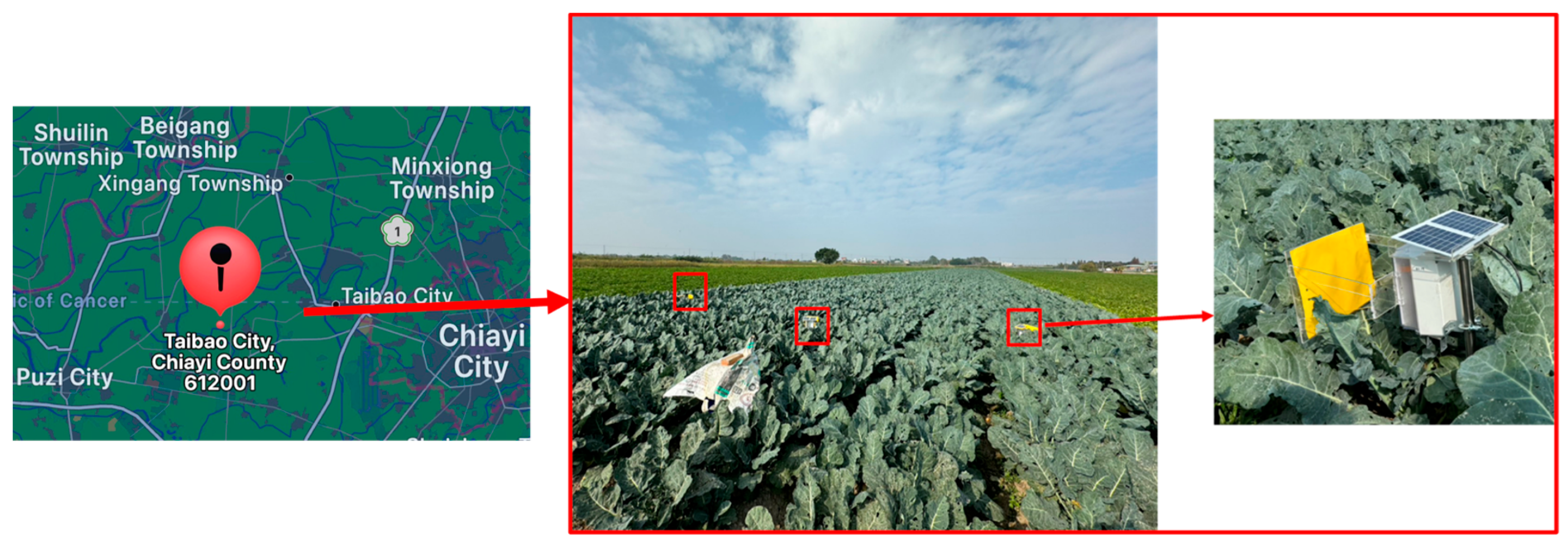

3.1. Experimental Platform

3.2. Evaluation Metrics

3.3. Image Generation

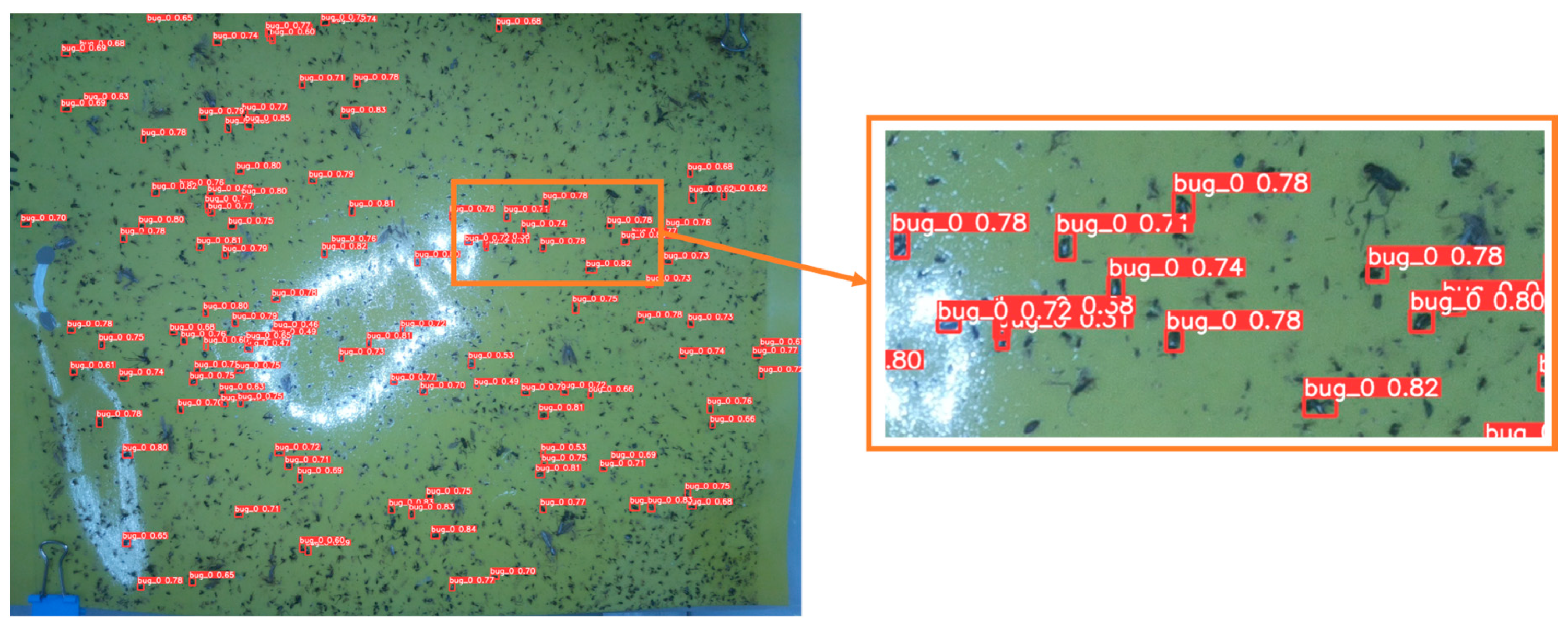

3.4. Comparison of Recognition Results

3.5. Deployment on Raspberry Pi

3.6. Long-Term Testing of the Power Management Strategy

3.7. Comparison with Similar Systems

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chen, W.; Zhang, H. Ecological Harm Habits and Control of Striped Flea Beetle. Tainan District Agricultural Research and Extension Station Technical; Issue 86-3 (No.69). 1997. Available online: https://book.tndais.gov.tw/Brochure/tech69.htm (accessed on 2 June 2024).

2. Desislavov, R.; Martínez-Plumed, F.; Hernández-Orallo, J. Trends in AI inference energy consumption: Beyond the performance-vs-parameter laws of deep learning. Sustain. Comput. Inform. Syst. 2023, 38, 100857.

3. Zhu, S.; Ota, K.; Dong, M. Energy-efficient artificial intelligence of things with intelligent edge. IEEE Internet Things J. 2022, 9, 7525–7532.

4. Aliakbarpour, H.; Rawi, C.S.M. Evaluation of yellow sticky traps for monitoring the population of thrips (Thysanoptera) in a mango orchard. Environ. Entomol. 2011, 40, 873–879.

5. Bashir, M.A.; Alvi, A.M.; Naz, H. Effectiveness of sticky traps in monitoring insects. J. Environ. Agric. Sci. 2014, 1, 1–2.

6. Devi, M.S.; Roy, K. Comparable study on different coloured sticky traps for catching of onion thrips, Thrips tabaci Lindeman. J. Entomol. Zool. Stud. 2017, 5, 669–671.

7. Qiao, M.; Lim, J.; Ji, C.W.; Chung, B.K.; Kim, H.Y.; Uhm, K.B.; Myung, C.S.; Cho, J.; Chon, T.S. Density estimation of Bemisia tabaci (Hemiptera: Aleyrodidae) in a greenhouse using sticky traps in conjunction with an image processing system. J. Asia-Pac. Entomol. 2008, 11, 25–29.

8. Xia, C.; Chon, T.S.; Ren, Z.; Lee, J.M. Automatic identification and counting of small size pests in greenhouse conditions with low computational cost. Ecol. Inform. 2015, 29, 139–146.

9. Cho, J.; Choi, J.; Qiao, M.; Ji, C.-W.; Kim, H.-Y.; Uhm, K.-B.; Chon, T.-S. Automatic identification of whiteflies aphids thrips in greenhouse based on image analysis. Int. J. Math. Comput. Simul. 2007, 1, 46–53.

10. Chen, J.; Fan, Y.; Wang, T.; Zhang, C.; Qiu, Z.; He, Y. Automatic segmentation and counting of aphid nymphs on leaves using convolutional neural networks. Agronomy 2018, 8, 129.

11. Li, Y.; Wang, H.; Dang, L.M.; Sadeghi-Niaraki, A.; Moon, H. Crop pest recognition in natural scenes using convolutional neural networks. Comput. Electron. Agric. 2020, 169, 105174.

12. Valan, M.; Makonyi, K.; Maki, A.; Vondracek, D.; Ronquist, F. Automated Taxonomic Identification of Insects with Expert-Level Accuracy Using Effective Feature Transfer from Convolutional Networks. Syst. Biol. 2019, 68, 876–895.

13. Wang, R.; Zhang, J.; Dong, W.; Yu, J.; Xie, C.; Li, R.; Chen, T.; Chen, H. A crop pests image classification algorithm based on deep convolutional neural network. TELKOMNIKA (Telecommun. Comput. Electron. Control.) 2017, 15, 1239–1246.

14. Li, W.; Wang, D.; Li, M.; Gao, Y.; Wu, J.; Yang, X. Field detection of tiny pests from sticky trap images using deep learning in agricultural greenhouse. Comput. Electron. Agric. 2021, 183, 106048.

15. Karar, M.E.; Alsunaydi, F.; Albusaymi, S.; Alotaibi, S. A new mobile application of agricultural pests recognition using deep learning in cloud computing system. Alex. Eng. J. 2021, 60, 4423–4432.

16. Kusrini, K.; Suputa, S.; Setyanto, A.; Agastya, I.M.A.; Priantoro, H.; Chandramouli, K.; Izquierdo, E. Data augmentation for automated pest classification in Mango farms. Comput. Electron. Agric. 2020, 179, 105842.

17. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

18. Önler, E. Real time pest detection using YOLOv5. Int. J. Agric. Nat. Sci. 2021, 14, 232–246.

19. Ahmad, I.; Yang, Y.; Yue, Y.; Ye, C.; Hassan, M.; Cheng, X.; Wu, Y.; Zhang, Y. Deep Learning Based Detector YOLOv5 for Identifying Insect Pests. Appl. Sci. 2022, 12, 10167.

20. Gandhi, R.; Nimbalkar, S.; Yelamanchili, N.; Ponkshe, S. Plant disease detection using CNNs and GANs as an augmentative approach. In Proceedings of the 2018 IEEE International Conference on Innovative Research and Development (ICIRD), Bangkok, Thailand, 11–12 May 2018; pp. 1–5.

21. Ma, D.; Liu, J.; Fang, H.; Wang, N.; Zhang, C.; Li, Z.; Dong, J. A multi-defect detection system for sewer pipelines based on StyleGAN-SDM and fusion CNN. Constr. Build. Mater. 2021, 312, 125385.

22. Khanzhina, N.; Filchenkov, A.; Minaeva, N.; Novoselova, L.; Petukhov, M.; Kharisova, I.; Pinaeva, J.; Zamorin, G.; Putin, E.; Zamyatina, E.; et al. Combating data incompetence in pollen images detection and classification for pollinosis prevention. Comput. Biol. Med. 2022, 140, 105064.

23. Cap, Q.H.; Uga, H.; Kagiwada, S.; Iyatomi, H. LeafGAN: An Effective Data Augmentation Method for Practical Plant Disease Diagnosis. IEEE Trans. Autom. Sci. Eng. 2020, 19, 1258–1267.

24. Muangprathub, J.; Boonnam, N.; Kajornkasirat, S.; Lekbangpong, N.; Wanichsombat, A.; Nillaor, P. IoT and agriculture data analysis for smart farm. Comput. Electron. Agric. 2019, 156, 467–474.

25. Barkunan, S.R.; Bhanumathi, V.; Sethuram, J. Smart sensor for automatic drip irrigation system for paddy cultivation. Comput. Electr. Eng. 2019, 73, 180–193.

26. Mohanraj, I.; Ashokumar, K.; Naren, J. Field monitoring and automation using IOT in agriculture domain. Procedia Comput. Sci. 2016, 93, 931–939.

27. Blessy, A.; Kumar, A.; Md, A.Q.; Alharbi, A.I.; Almusharraf, A.; Khan, S.B. Sustainable Irrigation Requirement Prediction Using Internet of Things and Transfer Learning. Sustainability 2023, 15, 8260.

28. Kaur, G.; Upadhyaya, P.; Chawla, P. Comparative analysis of IoT-based controlled environment and uncontrolled environment plant growth monitoring system for hydroponic indoor vertical farm. Environ. Res. 2023, 222, 115313.

29. Vianny, D.M.M.; John, A.; Mohan, S.K.; Sarlan, A.; Ahmadian, A. Water optimization technique for precision irrigation system using IoT and machine learning. Sustain. Energy Technol. Assess. 2022, 52, 102307.

30. Debnath, O.; Saha, H.N. An IoT-based intelligent farming using CNN for early disease detection in rice paddy. Microprocess. Microsyst. 2022, 94, 104631.

31. Pessl Instruments. iSCOUT. 2021. Available online: https://metos.at/iscout/ (accessed on 1 November 2021).

32. Trapview. 2021. Available online: https://trapview.com/ (accessed on 2 June 2024).

33. Rustia, D.J.A.; Lee, W.-C.; Lu, C.-Y.; Wu, Y.-F.; Shih, P.-Y.; Chen, S.-K.; Chung, J.-Y.; Lin, T.-T. Edge-based wireless imaging system for continuous monitoring of insect pests in a remote outdoor mango orchard. Comput. Electron. Agric. 2023, 211, 108019.

34. LG Chem. ICR18650HB4 1500mAh Lithium-Ion Battery Product Specification; Document No. BCY-PS-HB4-Rev6; LG Chem: Seoul, Republic of Korea, 2016. Available online: https://queenbattery.com.cn/index.php?controller=attachment&id_attachment=97 (accessed on 2 June 2024).

35. Battery University. BU-808: How to Prolong Lithium-Based Batteries. BatteryUniversity.com. Available online: https://batteryuniversity.com/article/bu-808-how-to-prolong-lithium-based-batteries (accessed on 2 July 2025).

36. Glenn, J.; Stoken, A.; Borovec, J.; Changyu, L.; Hogan, A.; Diaconu, L.; Adam, H.; Trevor, S.; Wang, X.; Pritu, D.; et al. Ultralytics/yolov5, 3rd ed.; Zenodo: Geneva, Switzerland, 2020.

37. Karras, T.; Aittala, M.; Laine, S.; Härkönen, E.; Hellsten, J.; Lehtinen, J.; Aila, T. Alias-free generative adversarial networks. Adv. Neural Inf. Process. Syst. 2021, 34, 852–863.

38. Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4401–4410.

39. Ding, W.; Taylor, G. Automatic moth detection from trap images for pest management. Comput. Electron. Agric. 2016, 123 (Suppl. C), 17–28.

40. Tan, M.X.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

41. Chen, H.-W.; Lo, J.-Y. Chiayi City Solar Photovoltaic Development Overview; Chiayi City Government: Jiaxuan City, Taiwan, 2019. Available online: https://icmp-ws.chiayi.gov.tw/Download.ashx?u=LzAwMS9VcGxvYWQvNDAxL3JlbGZpbGUvOTA0My80MDIxODAvYzA3Mjk3ZWUtOWQ0Mi00NDI5LWE1Y2ItNTE2NDVhYTM3MDk5LnBkZg (accessed on 2 June 2024).

42. Liu, C.; Zhai, Z.; Zhang, R.; Bai, J.; Zhang, M. Field pest monitoring and forecasting system for pest control. Front. Plant Sci. 2022, 13, 990965.

43. Zanin, A.R.A.; Neves, D.C.; Teodoro, L.P.R.; da Silva Júnior, C.A.; da Silva, S.P.; Teodoro, P.E.; Baio, F.H.R. Reduction of pesticide application via real-time precision spraying. Sci. Rep. 2022, 12, 5638.

| Parameter | Value |
|---|---|
| Optimizer | SGD |
| Learning rate momentum | 0.937 |
| Initial learning rate | 0.01 |
| Weight decay | 0.0005 |
| Input image resolution | 3280 × 2464 pixels |
| Batch size | 4 |
| Number of iterations | 500 |
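To make the roles of the optimizer parameters in the table concrete, the following is a minimal sketch of a single SGD update with momentum and L2 weight decay on one scalar weight. It uses the plain (non-Nesterov) textbook form with weight decay folded into the gradient; that choice and the function name `sgd_momentum_step` are assumptions for illustration, and the actual training code may apply these terms differently (for example, only to some parameter groups).

```python
def sgd_momentum_step(weight, grad, velocity,
                      lr=0.01, momentum=0.937, weight_decay=0.0005):
    """One SGD step with momentum and L2 weight decay on a scalar weight.

    Defaults are the initial learning rate, momentum, and weight decay
    from the table. ASSUMPTION: plain momentum, decay added to the gradient.
    """
    grad = grad + weight_decay * weight        # L2 penalty pulls weight toward 0
    velocity = momentum * velocity + grad      # running average of past gradients
    weight = weight - lr * velocity            # step against the smoothed gradient
    return weight, velocity


# Two consecutive steps with a constant gradient show the momentum build-up.
w, v = 1.0, 0.0
for step in range(2):
    w, v = sgd_momentum_step(w, grad=0.5, velocity=v)
    print(f"step {step + 1}: weight={w:.6f}, velocity={v:.6f}")
```

With a momentum of 0.937, most of the previous update direction is carried over into the next step, which smooths noisy gradients from the small batch size of 4.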

| Dataset | Accuracy (ACC) | Recall (R) | Precision (P) | F1-Score |
|---|---|---|---|---|
| Original | 0.783 | 0.846 | 0.913 | 0.878 |
| Original + 2000 generated 32 × 32 pixel images | 0.818 | 0.868 | 0.934 | 0.899 |
| Original + 2000 generated 16 × 16 pixel images | 0.846 | 0.897 | 0.936 | 0.916 |
| Original + 2000 generated 32 × 32 pixel images + 2000 generated 16 × 16 pixel images | 0.8564 | 0.908 | 0.939 | 0.923 |
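As a cross-check of the metrics in the table above, the sketch below recomputes the F1-score from the reported precision and recall. It also recomputes ACC under the assumption that ACC = TP / (TP + FP + FN), which can be rewritten as 1 / (1/P + 1/R − 1). That definition is an inference: it reproduces the tabulated values to within rounding, but it is not stated in this excerpt. The function names are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def acc_from_pr(precision, recall):
    """ASSUMPTION: ACC = TP / (TP + FP + FN).

    Since 1/P = 1 + FP/TP and 1/R = 1 + FN/TP,
    (TP + FP + FN) / TP = 1/P + 1/R - 1.
    """
    return 1.0 / (1.0 / precision + 1.0 / recall - 1.0)


# (recall, precision, reported F1, reported ACC), copied from the table rows.
rows = [
    (0.846, 0.913, 0.878, 0.783),
    (0.868, 0.934, 0.899, 0.818),
    (0.897, 0.936, 0.916, 0.846),
    (0.908, 0.939, 0.923, 0.8564),
]

for recall, precision, f1_reported, acc_reported in rows:
    f1 = f1_score(precision, recall)
    acc = acc_from_pr(precision, recall)
    print(f"F1 {f1:.3f} (reported {f1_reported}), "
          f"ACC {acc:.3f} (reported {acc_reported})")
```

Small differences in the last digit are expected, because the inputs are themselves rounded to three decimals.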

| Model | ACC | R | P | F1-Score | Model Size |
|---|---|---|---|---|---|
| YOLOv5n | 0.783 | 0.846 | 0.913 | 0.878 | 6.3 MB |
| YOLOv5n + generated images | 0.856 | 0.908 | 0.939 | 0.923 | 6.3 MB |
| YOLOv7tiny | 0.861 | 0.921 | 0.929 | 0.924 | 12.5 MB |
| YOLOv7tiny + generated images | 0.844 | 0.888 | 0.945 | 0.915 | 12.5 MB |
| YOLOv8n | 0.730 | 0.772 | 0.929 | 0.843 | 6.6 MB |
| YOLOv8n + generated images | 0.788 | 0.831 | 0.938 | 0.881 | 6.6 MB |

| Model | Time | Model Size |
|---|---|---|
| YOLOv5n | 3 min 30 s | 6.3 MB |
| YOLOv7tiny | 7 min 49 s | 12.5 MB |
| YOLOv8n | 15 min 34 s | 6.6 MB |

| Work Stage | Operating Time | Current |
|---|---|---|
| Time synchronization stage | 26 s | 288,640 μA |
| Recognition stage | 839 s | 133,020 μA |
| Supercapacitor charging stage | 210 s | 95,824 μA |
| MCU sleep stage | 85,325 s | 8.6 μA |
| Energy consumption per day | 0.194 Wh | |
| MCU sleep stage (without supercapacitors) | 85,325 s | 10,000 μA |
| Energy consumption per day (without supercapacitors) | 1.378 Wh | |
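The two totals in the table above can be reproduced from the stage durations and currents, which sum to 86,400 s, that is, one 24 h cycle. The sketch below assumes a 5 V supply; that voltage is not given in the table but is the value that reproduces both reported totals. It also assumes that the scenario without supercapacitors keeps the same first three stages and changes only the sleep current. The names used here are hypothetical.

```python
SUPPLY_VOLTAGE_V = 5.0  # ASSUMPTION: inferred, not stated in the table

# (stage, duration in seconds, current in amperes), taken from the table.
STAGES_WITH_SUPERCAP = [
    ("time synchronization", 26, 288_640e-6),
    ("recognition", 839, 133_020e-6),
    ("supercapacitor charging", 210, 95_824e-6),
    ("MCU sleep", 85_325, 8.6e-6),
]
# ASSUMPTION: only the sleep-stage current differs without supercapacitors.
STAGES_WITHOUT_SUPERCAP = STAGES_WITH_SUPERCAP[:3] + [("MCU sleep", 85_325, 10e-3)]


def daily_energy_wh(stages, voltage=SUPPLY_VOLTAGE_V):
    """Energy per cycle in Wh: sum of (time x current) x voltage / 3600."""
    charge_coulombs = sum(seconds * amperes for _, seconds, amperes in stages)
    return charge_coulombs * voltage / 3600.0


with_sc = daily_energy_wh(STAGES_WITH_SUPERCAP)
without_sc = daily_energy_wh(STAGES_WITHOUT_SUPERCAP)
saving = 1.0 - with_sc / without_sc

print(f"with supercapacitors:    {with_sc:.3f} Wh per day")
print(f"without supercapacitors: {without_sc:.3f} Wh per day")
print(f"relative saving:         {saving:.1%}")
```

Under these assumptions, the sleep stage accounts for most of the consumption in the scenario without supercapacitors, because it covers 85,325 of the 86,400 seconds in each cycle.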

| Feature | This Study | Mango Orchard Pest Monitoring [33] | Cotton Field Pest Monitoring [42] |
|---|---|---|---|
| Target Pest | Striped flea beetles | Mango leafhoppers | Cotton pests |
| Model | YOLOv5n | YOLOv3 | SM_ResNet V2 |
| Recognition Accuracy | 0.92 | 0.96 | 0.85 |
| Power Consumption | 0.194 Wh | 0.8 Wh | N/A |
| Node Cost (USD) | 50 | ~100 | ~400 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hung, C.-W.; Wang, C.-C.; Liao, Z.-J.; Su, Y.-H.; Liu, C.-L. Intelligent Battery-Designed System for Edge-Computing-Based Farmland Pest Monitoring System. Electronics 2025, 14, 2927. https://doi.org/10.3390/electronics14152927
