1. Introduction

Transformers are core components of power systems. With the continuous development of power systems and the growing demand for electricity, real-time monitoring and management of transformer operating conditions have become increasingly important [1]. Internal faults and reduced lifespan in transformers typically result from degraded insulation, and internal overheating is the main cause of this degradation. Obtaining accurate internal temperatures of transformers is therefore crucial [2]. The top oil temperature is an important parameter that reflects the internal heat of oil-immersed transformers and one of the key indicators of their operating condition. Precise forecasting of the top oil temperature is essential not only for the safe operation of transformers but also for optimizing maintenance strategies and enhancing the reliability of power systems [3].

As research on forecasting the top oil temperature in transformers has garnered significant attention, traditional prediction methods primarily rely on thermal circuit models and empirical formulas [

4,

5,

6]. These methods estimate oil temperature by comprehensively considering key factors such as transformer loading and ambient temperature. For instance, Reference [

7] presented an optimal estimation model for top oil temperature, which was based on a transformer thermal circuit model and utilized the Kalman filtering algorithm. This method achieved a notable improvement in prediction accuracy compared to traditional empirical models, as it could dynamically adjust the prediction results based on real-time data and reduce the impact of measurement noise. Meanwhile, M.M. et al. [

8] constructed an oil-conduction-based model for power transformers, which calculates oil temperature through iterative solutions of a thermal resistance network algorithm. This approach is theoretically innovative, but it is operationally complex and lacks flexibility in adapting to different operating conditions. For example, when the transformer’s operating conditions change significantly, such as sudden changes in load or ambient temperature, the model may need a long time to converge to a new stable solution. As the demand for prediction accuracy in modern power systems continues to rise, traditional prediction methods often reveal insufficient precision when dealing with complex operating conditions and dynamic environments, falling short of current high-accuracy requirements [

9]. This is because these methods are based on simplified physical models and assumptions, which may not be able to fully capture the complex interactions between various factors that affect the oil temperature. As artificial intelligence progresses, prediction methods leveraging machine learning and deep learning have come to the fore. For example, Reference [

10] established a model using Random Forest, taking into account prediction model errors and major influencing features. This method can automatically select the most important features from a large number of input variables and build a prediction model with high accuracy. Reference [

11] improved the accuracy of transformer temperature prediction using two intelligent optimization methods, GA and PSO, for estimation of the top oil temperature in power transformers. These optimization methods can optimize the parameters of the prediction model to improve its accuracy and generalization ability. Reference [

12] improved a transformer oil temperature prediction method grounded in SVM by fine-tuning key parameters with a PSO algorithm. This method not only improves the model’s adaptability to oil temperature data but also further boosts prediction accuracy through the introduction of confidence intervals. The confidence intervals can provide a range of possible values for the predicted oil temperature, which helps assess the uncertainty of the prediction results. Reference [

13] leverages a gray neural network model for transformer temperature prediction, thereby enhancing accuracy with a limited amount of data. This model combines the advantages of gray system theory and neural networks, which can effectively deal with the problem of small sample size and improve the prediction accuracy. Reference [

14] decomposes the original series into different patterns by introducing VMD, and then utilizes the advantages and high efficiency of GRU in time series analysis to build an oil temperature prediction model, which has a higher prediction accuracy compared with traditional methods, as it can capture the temporal dependencies and patterns in the oil temperature data more effectively. References [

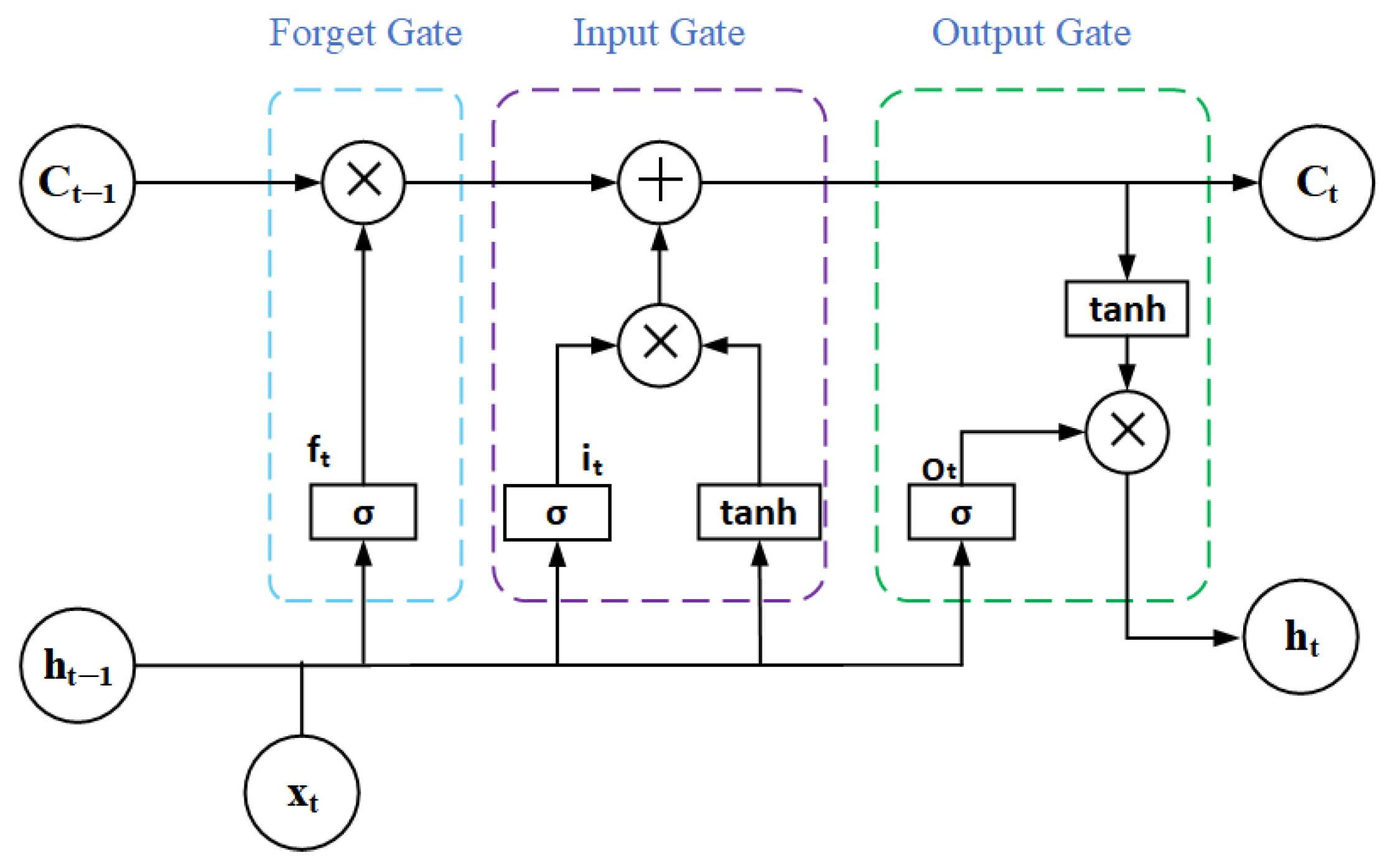

15,

16] introduce LSTM networks to address time-series issues in transformer top oil temperature prediction, achieving high accuracy. LSTM networks have the ability to remember long-term dependencies in the data, which makes them suitable for predicting time series data with complex temporal relationships. In summary, the prediction methods for transformer top oil temperature have evolved from traditional physical models to advanced machine learning and deep learning methods.

Although existing methods have made valuable progress in predicting oil temperature using deep learning methods, there are still many limitations: (1) Most current methods use small-scale experimental datasets, typically focusing on a single transformer with just hundreds of data groups, and often idealized data. However, in real-world engineering applications, datasets are usually larger and more complex [

17]. Raw data is often hard to use directly for model training. This results in the poor generalization of existing research models, making them ill-suited for practical engineering scenarios. (2) In practical engineering applications, raw data needs to be analyzed and preprocessed to fit the model’s input criteria. As the data sampling duration increases, the influence of external factors, like ambient temperature, on the model becomes substantial and must not be overlooked [

18]. Conventional models often struggle to effectively learn the complex coupling relationships between historical oil temperature data and other influencing factors, which further limits the model’s performance in real-world applications [

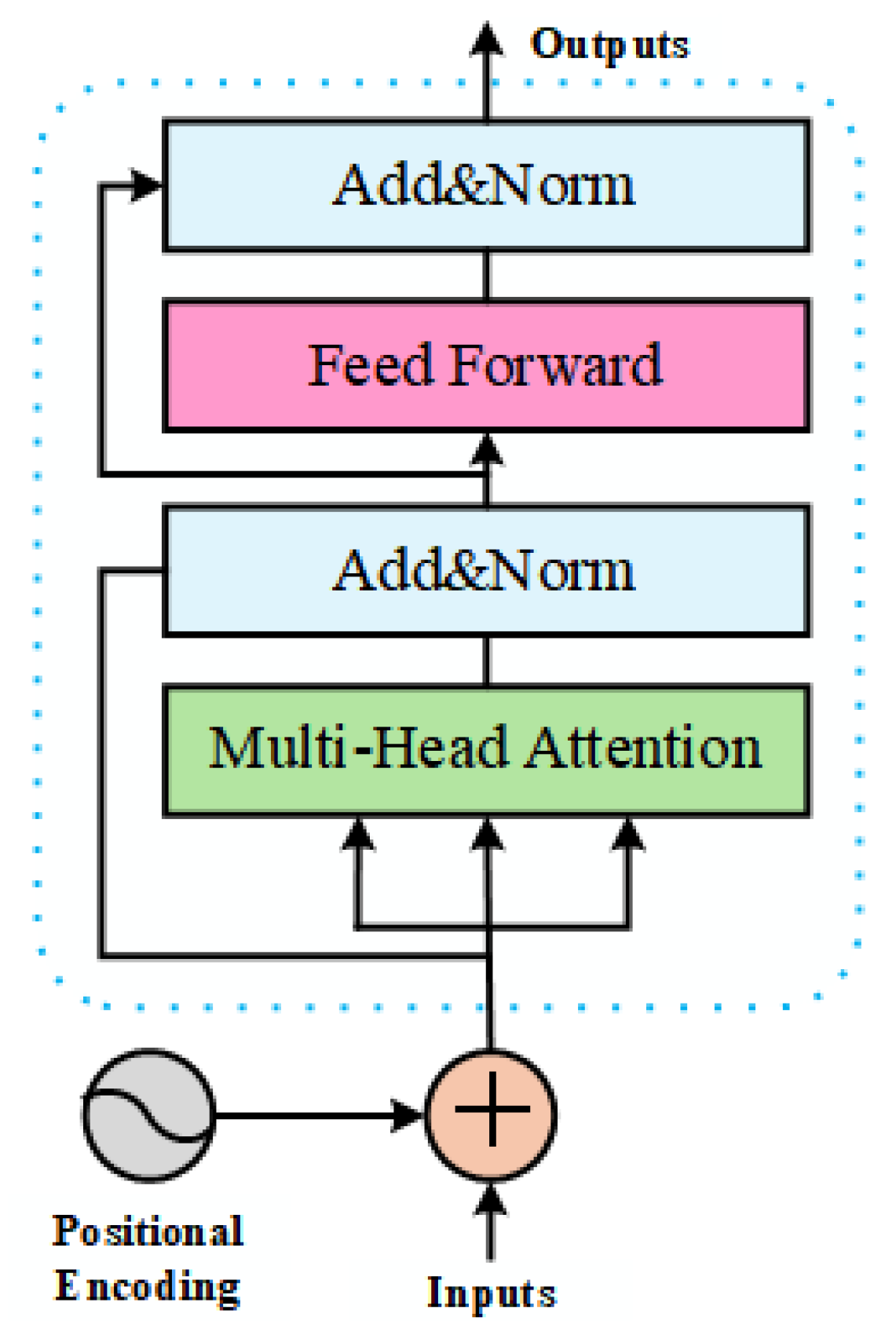

19]. To address these challenges, this paper proposes a forecasting method for transformer oil temperature time-series data based on a hybrid architecture of a CNN-LSTM-Transformer. Aiming to boost the model’s generalization ability, this method includes a thorough analysis and preprocessing of transformers’ actual operation data. First, duplicate data is removed, missing data is reasonably imputed, and abnormal data is identified and eliminated. The process of identifying abnormal data integrates outlier detection theory with practical operation and maintenance experience. In addition, by calculating the correlation coefficients between transformer oil temperature and each characteristic variable, key factors that significantly affect oil temperature changes are selected, thereby comprehensively considering the combined effect of various transformer characteristics on oil temperature. Leveraging time-sequence data that includes transformer oil temperature and various associated features as input, this research introduces a prediction approach grounded in a combined CNN-LSTM-Transformer framework. The method first employs CNNs to extract spatial features from multiple input features and explore feature relationships. Subsequently, LSTM networks are utilized to discern the temporal variations in the time-sequence data and further extract temporal features. Finally, a Transformer encoder is integrated to enhance feature interaction and global perception capabilities. This approach integrates the spatial characteristics obtained via CNN and the temporal characteristics obtained via LSTM to produce a more comprehensive feature representation, which leads to more accurate and generalized prediction outcomes. The experimental findings show that the proposed model surpasses existing models in terms of prediction accuracy. The established model was optimized using the WOA to improve prediction accuracy. 
Compared with the most advanced methods, the proposed method is novel in the following aspects:

Innovative Model Architecture: This study proposes a novel hybrid model architecture that integrates the CNN, LSTM, and Transformer encoder for predicting transformer top oil temperature. This combination leverages the strengths of each component to effectively capture both spatial and temporal features from the input data, providing a more comprehensive and accurate representation for prediction.

Enhanced Generalization Capability: This method incorporates thorough data analysis and preprocessing techniques, including data cleaning, normalization, and feature selection based on correlation coefficients. This ensures the model can handle large-scale, complex datasets with various influencing factors, significantly improving its generalization ability for practical engineering applications.

Improved Prediction Accuracy: The proposed model achieves superior prediction accuracy compared to existing methods, as demonstrated by the experimental results. The maximum RMSE and MAPE of this method on the summer and winter datasets are 0.5884 and 0.79%, respectively, indicating its high precision and reliability in forecasting transformer top oil temperature.

Practical Engineering Application: This research provides a practical and effective solution for transformer condition monitoring and fault warning in power systems. By accurately predicting the top oil temperature, it helps optimize maintenance strategies, enhance the reliability of power systems, and extend the lifespan of transformers, contributing to the efficient and safe operation of power equipment.

Table 1 lists the innovative features of the model architecture in this paper and compares it with other hybrid models in the literature, highlighting the theoretical advantages of the model in this paper and emphasizing its uniqueness.

The results of the experiment indicate that the maximum RMSE and MAPE of this method on the summer and winter datasets are 0.5884 and 0.79%, respectively, demonstrating superior prediction accuracy. Compared with other models, the proposed model improved prediction performance by 13.74%, 36.66%, and 43.36%, respectively, indicating high generalization capability and accuracy. This provides theoretical support for condition monitoring and fault warning of power equipment.

3. Experimental Design and Result Analysis

In this paper, the experimental hardware consists of an NVIDIA GeForce GTX 1660 Ti GPU and 6 GB of RAM, and the experiments are conducted on Windows 10 using Python 3.9 and the PyTorch 2.2.2 framework for building the neural network models. Because the primary objective is to predict transformer top oil temperature with high accuracy and good generalization, the data must first be analyzed and processed. Data processing comprises data classification, feature selection, data cleaning, data normalization, and time-window-based data segmentation.

3.1. Data Preprocessing

To construct an accurate top oil temperature prediction model, this study collected live monitoring data from several substations in a specific region over a twelve-month period from May 2023 to April 2024. Ambient temperature data were supplemented from meteorological records, and the timestamps of all datasets were aligned. The schematic diagram of the transformer equipment and oil temperature sensor is shown in Figure 5.

The collected data include key parameters such as the transformer's current load, ambient temperature, top oil temperature, and load current, as shown in Table 2.

With a sampling period of one minute, the full dataset contains about 9 million records. It is notable for its large volume and diversity, and it represents the actual operating conditions of various transformers across different time periods and seasons. This allows the prediction model to learn the intricate relationships within the data and thereby improves its generalization capability. A sample of the raw data is presented in Table 3.

Table 3, which shows part of the real data, reveals several issues. First, the raw data contain many categories of features, so feature selection is needed to retain those most strongly correlated with oil temperature changes. Second, the data cover different transformer models, cooling modes, rated capacities, and operating voltages. Third, records from different devices are interleaved at the same time points; to classify transformers by operating condition later, the data of each transformer must be extracted separately to obtain its own time series, so that transformers of the same type can subsequently be grouped. In addition, the volume of raw data differs from month to month across the 12 months, usually because of substation maintenance or losses during data transmission. The table also shows evident anomalies and missing values: for example, the oil temperature value of 1.83 × 10⁻³² recorded for transformer no. 17 is clearly anomalous, and the reactive power of the third data point is zero. Feeding such abnormal data points directly into a model would impair its robustness and forecasting capability, so the raw data require processing. The data processing procedure is depicted in Figure 6:

3.1.1. Data Classification

Data classification consists of two main steps: separating the data of individual transformers and categorizing the transformer types. In the raw dataset, the records of different transformers at each time point are mixed together, so the data must first be split by transformer number to extract a continuous, independent twelve-month time series for each transformer.

After the time series of each transformer has been obtained, only the data required by the task are extracted, to reduce the workload. Most current studies focus solely on predicting data for a single transformer, which limits the model's adaptability and makes it hard to meet actual engineering needs. Moreover, given the diversity of transformer equipment types and models, the change patterns of actual operating data and the underlying feature relationships differ significantly between transformer categories. Some studies use the transformer category directly as an input feature, but as accuracy requirements continue to rise, this approach struggles to meet current practical demands.

To address these issues, this paper adopts the following strategy. First, transformers are categorized using the category information available in the collected data, which covers different transformer types, rated capacities, and operating voltages. Existing research shows that the temperature rise of transformers varies significantly with operating voltage, so the classification is based on the operating voltage and the cooling mode of the transformer [37]. After categorization, the transformers fall into two voltage classes, 110 kV and 220 kV, and three cooling modes: oil-immersed self-cooled (ONAN), oil-immersed air-cooled (ONAF), and forced oil-circulation air-cooled (ODAF). Random sampling is then conducted among transformers of the same category: for instance, data from five transformers are randomly selected for model training, and data from other transformers in the same category (not among those five) are randomly extracted for prediction, to validate the model's generalization ability. This study takes 110 kV transformers with ONAN cooling as the research subjects. Seven transformers of this category are randomly selected as experimental data, and all subsequent data processing is based on this dataset.
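The two classification steps can be illustrated in plain Python. This is a minimal sketch, not the authors' pipeline: each raw record is assumed to be a dict with hypothetical keys `device_id`, `timestamp`, `voltage_kv`, `cooling`, and `oil_temp` (the real field names are not given), and voltage and cooling mode are assumed to be constant per transformer.

```python
import random
from collections import defaultdict


def split_by_device(records):
    """Step 1: turn interleaved records into one time-ordered series per transformer."""
    series = defaultdict(list)
    for rec in records:
        series[rec["device_id"]].append(rec)
    for recs in series.values():
        recs.sort(key=lambda r: r["timestamp"])
    return dict(series)


def select_category(series, voltage_kv, cooling):
    """Step 2: keep transformers of one category (operating voltage + cooling mode)."""
    return {dev: recs for dev, recs in series.items()
            if recs[0]["voltage_kv"] == voltage_kv and recs[0]["cooling"] == cooling}


def sample_units(device_ids, n_train, n_test, seed=0):
    """Randomly draw disjoint training transformers and unseen test transformers."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(device_ids), n_train + n_test)
    return chosen[:n_train], chosen[n_train:]


records = [  # hypothetical raw rows: several transformers interleaved in time
    {"device_id": 17, "timestamp": 2, "voltage_kv": 110, "cooling": "ONAN", "oil_temp": 41.2},
    {"device_id": 21, "timestamp": 1, "voltage_kv": 110, "cooling": "ONAN", "oil_temp": 38.0},
    {"device_id": 17, "timestamp": 1, "voltage_kv": 110, "cooling": "ONAN", "oil_temp": 41.0},
    {"device_id": 90, "timestamp": 1, "voltage_kv": 220, "cooling": "ODAF", "oil_temp": 52.3},
]
series = split_by_device(records)
onan_110 = select_category(series, voltage_kv=110, cooling="ONAN")
train_ids, test_ids = sample_units(onan_110.keys(), n_train=1, n_test=1)
print(sorted(onan_110))   # [17, 21]
```

With the study's setting, the same `sample_units` call would use `n_train=5` and `n_test=2` on the 110 kV ONAN category, keeping the test transformers unseen during training.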

3.1.2. Feature Selection

After data classification, feature selection is performed. The collected transformer data contain many features, but not all of them are strongly correlated with oil temperature, and accurately identifying the features closely related to oil temperature is crucial for prediction accuracy. Therefore, the Pearson correlation coefficient (PCC) [38,39,40] and mutual information (MI) are used to select the primary features: the PCC assesses the linear relationship between each candidate feature and oil temperature, while MI assesses nonlinear relationships. The specific calculation formulas are as follows:

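$$ r = \frac{\sum_{i=1}^{N} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{N} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{N} (y_i - \bar{y})^2}} $$

where $x_i$ and $y_i$ denote the feature value and oil temperature, respectively, and $\bar{x}$ and $\bar{y}$ are their respective mean values. The closer $|r|$ is to 1, the more closely the feature is related to the oil temperature, and the feature can be used as a key variable; the closer $r$ is to 0, the weaker the linear correlation, and the feature can be removed.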
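The coefficient can be computed directly from its definition. The NumPy sketch below is illustrative (the name `pearson_r` is not from the paper); `np.corrcoef` returns the same value, and features would then be ranked by $|r|$ since strong negative correlation is as informative as strong positive correlation.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient r between a candidate feature x and oil temperature y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx = x - x.mean()
    dy = y - y.mean()
    return float(np.sum(dx * dy) / np.sqrt(np.sum(dx ** 2) * np.sum(dy ** 2)))


# Toy check: a perfectly linear feature gives r = 1, a reversed one gives r = -1.
oil = np.array([40.0, 42.0, 44.0, 46.0])
ambient = np.array([20.0, 21.0, 22.0, 23.0])
print(round(pearson_r(ambient, oil), 6))    # 1.0
print(round(pearson_r(-ambient, oil), 6))   # -1.0
```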

When calculating mutual information, the continuous features are first discretized using an equal-frequency method that divides each feature into 10 intervals containing approximately equal numbers of data points. This avoids problems caused by uneven data distribution, such as intervals with overly dense or overly sparse data, and makes the mutual information estimate more stable and accurate. After discretization, a histogram-based method is used to estimate the probability distributions. For each feature $X$ and the target variable $Y$, the data points in each interval are counted: for the $i$-th interval of $X$ and the $j$-th interval of $Y$, $N_{ij}$ denotes the number of data points falling into both intervals. The joint probability is then estimated as $p(x_i, y_j) = N_{ij}/N$, and the marginal probabilities as $p(x_i) = \sum_{j=1}^{n} N_{ij}/N$ and $p(y_j) = \sum_{i=1}^{m} N_{ij}/N$, respectively. Finally, mutual information is calculated using Formula (10):

$$ I(X;Y) = \sum_{i=1}^{m} \sum_{j=1}^{n} p(x_i, y_j) \log \frac{p(x_i, y_j)}{p(x_i)\,p(y_j)} \tag{10} $$

where $m$ and $n$ represent the number of intervals for $X$ and $Y$, respectively, and $N$ represents the total number of data points. The higher the mutual information value, the stronger the dependence between the feature and the oil temperature.
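The equal-frequency discretization and histogram estimate can be sketched in NumPy as follows. Assumptions: ties are broken by sort order so that every interval receives the same count, both $X$ and $Y$ use 10 intervals, and the natural logarithm is used (so MI is in nats), since the log base is not stated. The function names are illustrative. The demo shows why MI complements the PCC: for a symmetric $x$, $y = x^2$ has almost no linear correlation with $x$ but a large mutual information.

```python
import numpy as np


def equal_frequency_bins(values, n_bins=10):
    """Map each value to one of n_bins intervals holding (almost) the same number of points."""
    values = np.asarray(values, dtype=float)
    ranks = np.argsort(np.argsort(values, kind="stable"), kind="stable")
    return ranks * n_bins // len(values)   # integer codes 0 .. n_bins-1


def mutual_information(x, y, n_bins=10):
    """Histogram estimate of I(X;Y) in nats after equal-frequency discretization."""
    cx = equal_frequency_bins(x, n_bins)
    cy = equal_frequency_bins(y, n_bins)
    counts = np.zeros((n_bins, n_bins))
    np.add.at(counts, (cx, cy), 1)             # N_ij
    p_xy = counts / counts.sum()               # joint p(x_i, y_j) = N_ij / N
    p_x = p_xy.sum(axis=1, keepdims=True)      # marginal p(x_i)
    p_y = p_xy.sum(axis=0, keepdims=True)      # marginal p(y_j)
    nz = p_xy > 0                              # empty cells contribute 0
    return float(np.sum(p_xy[nz] * np.log(p_xy[nz] / (p_x @ p_y)[nz])))


rng = np.random.default_rng(0)
x = rng.normal(size=5000)
mi_nonlinear = mutual_information(x, x ** 2)
mi_independent = mutual_information(x, rng.normal(size=5000))
print(mi_nonlinear > mi_independent)   # True
```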

Features significantly correlated with oil temperature changes were selected on this basis. The PCC and MI values between each feature and oil temperature are listed in Table 4. The PCC and MI values for phase current, active power, and reactive power are relatively low, suggesting that these features have weak linear and nonlinear relationships with oil temperature, so they are removed. In contrast, the PCC and MI values between oil temperature and the ambient temperature, load factor, and current load current are relatively high, indicating a significant correlation, so these are used as key features. Therefore, in addition to the historical oil temperature data, the ambient temperature, load factor, and current load current are used as input features.

3.1.3. Dataset Partitioning

To verify the feasibility of the combined model on real, complex data and to improve prediction generalization, the five transformers randomly selected for training in the previous section are used. In the training phase, one year of data from the 110 kV ONAN transformers numbered 1, 14, 54, 68, and 257, totaling 2,628,000 records, was divided into training and validation sets in a ratio of 8:2. When selecting the test sets, the influence of the season on prediction results was considered, and two test datasets were constructed, one for winter and one for summer. For winter, transformer no. 79 was randomly selected, with data from 21 January 2024 to 31 January 2024 (14,400 records); for summer, transformer no. 49 was randomly selected, with data from 1 August 2023 to 10 August 2023 (14,400 records).
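The sample counts above can be checked with simple arithmetic. Assuming one record per minute and 365 full days per training transformer, the reported training pool is reproduced exactly; whether the 8:2 split is chronological or random is not specified, so only the set sizes are computed here.

```python
MINUTES_PER_DAY = 24 * 60

# Training pool: five transformers, one year of one-minute samples each
# (assumes 365 full days per transformer).
n_train_units = 5
train_pool = n_train_units * 365 * MINUTES_PER_DAY
print(train_pool)              # 2628000

# 8:2 split of the pool into training and validation samples (integer arithmetic).
n_train = train_pool * 8 // 10
n_val = train_pool - n_train
print(n_train, n_val)          # 2102400 525600

# A test window of ten days of one-minute samples from one unseen transformer.
test_window = 10 * MINUTES_PER_DAY
print(test_window)             # 14400
```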

3.1.4. Data Cleaning

Data cleaning mainly involves filling in missing values and filtering out outliers. Initial inspection showed that some features contain missing values and outliers. Missing values interfere with model training and prediction, while outliers are data points that diverge markedly from the normal range, usually as a result of sensor failures or data acquisition errors.

In this paper, both a theoretical method and practical operation and inspection rules are used to detect and process outliers. The theoretical method is an anomaly detection technique based on an LSTM autoencoder [41], which detects outliers in the time-series data by computing the discrepancy between the reconstructed and original oil temperature values and comparing it with a predetermined threshold. The operation and inspection rules are derived from transformer operation and maintenance experience and from the transformer's operating behavior. For instance, during normal operation, oil temperature readings above 90 °C are deemed abnormal, as are oil temperature readings that jump by more than 1 °C between consecutive samples and load ratio readings that jump by more than 100% between consecutive samples, among other rules. Combining the theoretical approach with the practical rules identifies transformer data outliers more precisely and improves the standardization and accuracy of the dataset [42].
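The combination of rule-based checks and reconstruction-error thresholding can be sketched as boolean masks in NumPy. Assumptions: a "jump" is read as the absolute change from the previous one-minute sample, the load ratio is in percent, and the autoencoder's reconstruction is taken as given. The LSTM autoencoder itself is not shown, and `threshold=2.0` is a hypothetical value because the paper's threshold is not stated.

```python
import numpy as np


def rule_flags(oil_temp, load_ratio, max_oil=90.0, max_oil_jump=1.0, max_load_jump=100.0):
    """Flag samples that break the operation-and-inspection rules.

    oil_temp in deg C, load_ratio in percent, one sample per minute.
    A 'jump' is taken as the change from the previous sample (an assumption).
    """
    oil_temp = np.asarray(oil_temp, dtype=float)
    load_ratio = np.asarray(load_ratio, dtype=float)
    too_hot = oil_temp > max_oil
    oil_jump = np.abs(np.diff(oil_temp, prepend=oil_temp[0]))
    load_jump = np.abs(np.diff(load_ratio, prepend=load_ratio[0]))
    return too_hot | (oil_jump > max_oil_jump) | (load_jump > max_load_jump)


def reconstruction_flags(original, reconstructed, threshold):
    """Flag samples whose autoencoder reconstruction error exceeds a preset threshold."""
    err = np.abs(np.asarray(original, float) - np.asarray(reconstructed, float))
    return err > threshold


oil = np.array([45.0, 45.2, 95.0, 45.3, 45.4])
load = np.array([60.0, 61.0, 60.5, 60.0, 59.8])
recon = np.array([45.1, 45.2, 45.3, 45.3, 45.4])   # hypothetical autoencoder output
outliers = rule_flags(oil, load) | reconstruction_flags(oil, recon, threshold=2.0)
print(outliers.tolist())   # [False, False, True, True, False]
```

Under this reading of the jump rule, the sample right after the spike (index 3) is also flagged, because the return from 95 °C to 45.3 °C is itself a jump; a stricter implementation might treat such recoveries differently.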

Figure 7 shows the data before and after cleaning. During the data cleaning process, a total of 45,705 outliers were detected and removed, accounting for 1.74% of the total data volume. The removal of these outliers significantly improved data quality and model robustness. Additionally, the study found that there were 3218 missing values in the original data, accounting for 0.12% of the total data volume. We used mean imputation to fill in the missing values, ensuring the completeness and consistency of the data.
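Mean imputation of a feature column can be sketched as below, assuming the global mean of the observed values of each feature is used (the text does not say whether a global or a local mean is applied). The last lines recompute the reported shares; they are consistent with a denominator equal to the 2,628,000-record training pool.

```python
import numpy as np


def mean_impute(column):
    """Replace NaN entries with the mean of the observed values in the same feature column."""
    column = np.asarray(column, dtype=float).copy()
    missing = np.isnan(column)
    column[missing] = column[~missing].mean()
    return column


print(mean_impute([40.0, np.nan, 44.0]).tolist())   # [40.0, 42.0, 44.0]

# Reported shares of removed outliers and imputed missing values,
# recomputed against a 2,628,000-record denominator.
total = 2_628_000
print(round(100 * 45_705 / total, 2), round(100 * 3_218 / total, 2))   # 1.74 0.12
```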

3.1.5. Data Normalization

Considering the notable differences in the magnitude and value range of various features, the direct use of raw data may lead to numerical instability during model training. Therefore, all features are normalized and scaled within the range of [0, 1]. The formula for normalization is presented below:

$$ x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} $$

where $x$ is the original feature value, $x'$ is the normalized feature value, and $x_{\max}$ and $x_{\min}$ are the maximum and minimum values of the original feature, respectively. Normalization improves training efficiency and prevents features with large magnitudes from dominating those with small magnitudes, which helps improve prediction accuracy.
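A minimal NumPy sketch of the min-max formula follows, with an inverse transform so that predictions can be mapped back to degrees Celsius before the error metrics are computed. Fitting $x_{\min}$ and $x_{\max}$ on the training data only is an assumption, as the text does not specify it; the class name is illustrative, and scikit-learn's `MinMaxScaler` implements the same transform.

```python
import numpy as np


class MinMaxScaler01:
    """Column-wise min-max scaling to [0, 1] with an inverse for de-normalizing predictions."""

    def fit(self, data):
        data = np.asarray(data, dtype=float)
        self.x_min = data.min(axis=0)
        self.x_max = data.max(axis=0)
        # Use a span of 1 for constant columns to avoid division by zero.
        self.span = np.where(self.x_max > self.x_min, self.x_max - self.x_min, 1.0)
        return self

    def transform(self, data):
        return (np.asarray(data, dtype=float) - self.x_min) / self.span

    def inverse_transform(self, scaled):
        return np.asarray(scaled, dtype=float) * self.span + self.x_min


train = np.array([[30.0, 10.0], [50.0, 20.0], [40.0, 15.0]])   # columns: oil temp, ambient temp
scaler = MinMaxScaler01().fit(train)
print(scaler.transform(train).tolist())   # [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
```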

3.1.6. Time-Window-Based Data Segmentation

Transformer oil temperature data exhibit distinct time-series characteristics, so the time factor must be fully considered during preprocessing. The normalized oil temperature and transformer feature data are segmented using a sliding time window. A window size of x means that each segment covers x consecutive time steps, that is, x minutes at the one-minute sampling period. Starting from the first time step of the dataset, the window is slid forward step by step. This ensures that the generated data batches preserve the continuity of the time series, which facilitates model training and allows the model to capture how the data evolve over time [43].
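The sliding-window segmentation can be sketched as follows, assuming a stride of one step and a one-step-ahead oil temperature target; neither the stride nor the forecast horizon is stated explicitly, and `make_windows` is a hypothetical helper.

```python
import numpy as np


def make_windows(series, window, target_col=0, horizon=1):
    """Slide a window of `window` consecutive steps forward one step at a time.

    series: array of shape (T, n_features), one row per minute.
    Returns X of shape (N, window, n_features) and y of shape (N,), where y is
    the target column `horizon` steps after the end of each window.
    """
    series = np.asarray(series, dtype=float)
    n = len(series) - window - horizon + 1
    if n <= 0:
        raise ValueError("series is too short for this window and horizon")
    X = np.stack([series[i:i + window] for i in range(n)])
    y = series[window + horizon - 1: window + horizon - 1 + n, target_col]
    return X, y


data = np.arange(20, dtype=float).reshape(10, 2)   # 10 minutes, 2 features
X, y = make_windows(data, window=3)
print(X.shape, y.tolist())   # (7, 3, 2) [6.0, 8.0, 10.0, 12.0, 14.0, 16.0, 18.0]
```

For roughly 2.6 million training records, stacking a copy of every window is memory-intensive; `numpy.lib.stride_tricks.sliding_window_view` can produce the windows as a read-only view without copying (with the window axis placed last).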

3.2. Evaluation Metrics

To comprehensively evaluate how well the proposed model predicts oil temperature, its performance is assessed using MAPE, MAE, RMSE, and R², which are common metrics for prediction accuracy:

$$ \mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right| $$

$$ \mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right| $$

$$ \mathrm{RMSE} = \sqrt{ \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2 } $$

$$ R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2} $$

where $y_i$ denotes the actual value, $\hat{y}_i$ the model's predicted value, $\bar{y}$ the mean of the actual values, and $n$ the number of samples. Lower MAPE, MAE, and RMSE values indicate better performance. R² lies in the range (−∞, 1], and the closer it is to 1, the higher the model's prediction accuracy. In the analysis of the experimental results, these four metrics are used to compare different model structures and parameter settings and to verify the effectiveness and generalization ability of the proposed method.
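The four metrics can be computed with NumPy directly from the formulas. This sketch assumes that no actual value is zero, since MAPE is undefined there (top oil temperatures in °C are normally well above zero); the function name is illustrative.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """MAPE (in %), MAE, RMSE and R^2 following the formulas above."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mape = 100.0 * np.mean(np.abs(err / y_true))   # undefined if any actual value is 0
    mae = np.mean(np.abs(err))
    rmse = np.sqrt(np.mean(err ** 2))
    r2 = 1.0 - np.sum(err ** 2) / np.sum((y_true - y_true.mean()) ** 2)
    return {"MAPE": float(mape), "MAE": float(mae), "RMSE": float(rmse), "R2": float(r2)}


m = regression_metrics([40.0, 42.0, 44.0, 46.0], [41.0, 42.0, 43.0, 46.0])
print({k: round(v, 4) for k, v in m.items()})
# {'MAPE': 1.1932, 'MAE': 0.5, 'RMSE': 0.7071, 'R2': 0.9}
```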

3.3. Parameter Selection

In this study, the CNN is a two-layer 1D convolutional neural network with a kernel size of 3; the first layer has 64 channels and the second has 128 channels. The pooling layer uses max pooling with both size and stride set to 2, and the batch size is 64. To improve oil temperature prediction performance, key hyperparameters are optimized automatically with the WOA. The main WOA parameters are configured as follows: a population size of 20, a maximum of 30 iterations, a spiral coefficient b of 1, and a contraction factor a of 2. The search ranges are 1 to 4 for the number of LSTM layers, 64 to 256 for the number of units per LSTM layer, 1 to 4 for the number of Transformer attention heads, and 0.0001 to 0.01 for the learning rate.

After iterative optimization, the WOA yields 2 LSTM layers with 64 units each, 2 Transformer attention heads, and a learning rate of 0.001.
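The configuration above can be assembled into a model in PyTorch, the framework used in this study. The sketch below is not the authors' code: the convolution, pooling, LSTM, and attention-head sizes follow this section, while the padding of 1, the ReLU activations, a single Transformer encoder layer with PyTorch's default feed-forward width, and predicting from the last time step are assumptions. The hypothetical flags `use_cnn`, `recurrent`, and `use_transformer` make it possible to build the ablation variants described in Section 3.4.

```python
import torch
from torch import nn


class CNNLSTMTransformer(nn.Module):
    """Sketch of a CNN -> LSTM -> Transformer-encoder regressor (not the authors' code)."""

    def __init__(self, n_features, use_cnn=True, recurrent="lstm", use_transformer=True,
                 lstm_units=64, lstm_layers=2, n_heads=2):
        super().__init__()
        d = n_features
        self.cnn = None
        if use_cnn:
            # Two 1D conv layers (64 and 128 channels, kernel 3); padding=1 keeps the length.
            self.cnn = nn.Sequential(
                nn.Conv1d(n_features, 64, kernel_size=3, padding=1), nn.ReLU(),
                nn.Conv1d(64, 128, kernel_size=3, padding=1), nn.ReLU(),
                nn.MaxPool1d(kernel_size=2, stride=2),   # halves the time axis
            )
            d = 128
        self.rnn = None
        if recurrent is not None:
            # "lstm" for the full model, "rnn" for the plain-RNN ablation variant.
            rnn_cls = {"lstm": nn.LSTM, "rnn": nn.RNN}[recurrent]
            self.rnn = rnn_cls(d, lstm_units, num_layers=lstm_layers, batch_first=True)
            d = lstm_units
        self.encoder = None
        if use_transformer:
            layer = nn.TransformerEncoderLayer(d_model=d, nhead=n_heads, batch_first=True)
            self.encoder = nn.TransformerEncoder(layer, num_layers=1, enable_nested_tensor=False)
        self.head = nn.Linear(d, 1)

    def forward(self, x):
        # x: (batch, window, n_features)
        if self.cnn is not None:
            # Conv1d expects (batch, channels, time), so swap the last two axes and back.
            x = self.cnn(x.transpose(1, 2)).transpose(1, 2)
        if self.rnn is not None:
            x, _ = self.rnn(x)
        if self.encoder is not None:
            x = self.encoder(x)
        # Predict the next (normalized) top oil temperature from the last time step.
        return self.head(x[:, -1]).squeeze(-1)


# Four inputs: historical oil temperature, ambient temperature, load factor, current load current.
model = CNNLSTMTransformer(n_features=4)
batch = torch.randn(8, 60, 4)   # 8 windows of 60 one-minute steps (hypothetical window length)
print(model(batch).shape)       # torch.Size([8])
```

Training would pair this with a loss such as `nn.MSELoss()` and an optimizer such as `torch.optim.Adam(model.parameters(), lr=1e-3)`; the loss and optimizer are assumptions, since only the learning rate is reported.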

3.4. Ablation Experiment

To investigate the contribution of each component to prediction performance, a series of ablation experiments was designed. Ablation experiments selectively remove or modify parts of a model and observe the effect on performance, thereby assessing the importance of each component. Here, they focus on the CNN-LSTM-Transformer model and aim to validate the roles of the CNN, LSTM, and Transformer layers in predicting transformer top oil temperature. The proposed model is the complete CNN-LSTM-Transformer model, with the structure and parameter configuration described earlier. The following variants were evaluated: Model 1 removes the CNN layer, retaining only the LSTM and Transformer layers; Model 2 removes the LSTM layer, retaining only the CNN and Transformer layers; Model 3 removes the Transformer layer, retaining only the CNN and LSTM layers; and Model 4 replaces the LSTM layer with a standard RNN layer, leaving the rest unchanged. The test sample is the summer data of transformer no. 49. All models were trained and evaluated on the same training and validation datasets to ensure that the results are comparable. The results are shown in Table 5:

As shown in Table 5, the proposed model performs best on all evaluation metrics, indicating that the combination of CNN, LSTM, and Transformer layers is crucial for accurately predicting transformer top oil temperature. Removing any component degrades performance. Removing the LSTM layer (Model 2) has the largest impact, increasing RMSE by 0.6587 and decreasing R² by 0.0235, which indicates that the LSTM layer plays a key role in capturing long-term dependencies in the time series. Replacing the LSTM layer with a standard RNN layer (Model 4) also leads to a noticeable decline, further confirming the advantage of the LSTM layer. These ablation experiments show that the CNN, LSTM, and Transformer layers all contribute substantially to top oil temperature prediction and that none of them can be removed without a loss of accuracy. Their combination enables more accurate prediction of top oil temperature, supporting condition monitoring and fault warning for power equipment.

The second ablation experiment was conducted to validate the performance of the WOA in optimizing model hyperparameters. The hyperparameter search space for transformer oil temperature prediction is highly complex, involving multiple dimensions (e.g., LSTM layers, units, Transformer heads, and learning rate) and nonlinear relationships. The WOA's mechanisms of encircling prey, spiral movement, and random search enable it to balance global exploration and local exploitation effectively. Unlike Bayesian optimization, which relies on prior assumptions (e.g., Gaussian processes) and may struggle with high-dimensional or non-convex spaces, the WOA adapts dynamically to the search landscape. Furthermore, whereas random search lacks guided exploration and often converges slowly, the WOA's whale-inspired search behavior allows it to locate good hyperparameter combinations efficiently, even in complex scenarios. Therefore, a comparative experiment was performed in which the WOA, Bayesian optimization, and random search were each used to optimize the model. Bayesian optimization used a Gaussian process surrogate with 50 sampling points, while random search used 10 sampling points. The convergence criterion was set to 10 consecutive iterations without significant change in the objective value. Random search used the same parameter search range as the WOA, and its seed was fixed at 42 to ensure reproducibility. The WOA outperformed the other methods; the specific results are shown in Table 6 below:

As can be seen from Table 6, the WOA achieves the best optimization performance, with both the maximum RMSE and MAPE lower than those obtained with the other methods. This indicates that the WOA has a clear advantage in optimizing the hyperparameters of complex models. Beyond the lower MAPE and RMSE, the WOA also converges faster and is more computationally efficient. As the iteration curves in Figure 8 show, the WOA converges within 30 iterations, whereas Bayesian optimization requires more sampling points (approximately 50) to achieve a similar result, and random search does not converge sufficiently within the same number of iterations. This demonstrates that the WOA searches complex spaces efficiently, making it well suited to optimizing deep learning models in practical engineering applications.
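The search loop of the WOA can be sketched in NumPy. This is a minimal version of the standard WOA formulation (encircling the best whale when |A| < 1, moving relative to a random whale when |A| ≥ 1, and a logarithmic spiral with coefficient b), using the population size of 20, 30 iterations, and b = 1 from Section 3.3, with a decreasing linearly from its initial value of 2. The objective is a hypothetical stand-in: in the paper's setting it would decode the position vector into LSTM layers, units, attention heads, and learning rate (rounding the integer ones), train the model, and return the validation error.

```python
import numpy as np


def woa_minimize(objective, lower, upper, n_whales=20, max_iter=30, b=1.0, seed=0):
    """Minimal Whale Optimization Algorithm (encircling, random search and spiral phases)."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    dim = lower.size
    whales = rng.uniform(lower, upper, size=(n_whales, dim))
    fitness = np.array([objective(w) for w in whales])
    best = whales[fitness.argmin()].copy()
    best_fit = float(fitness.min())
    history = []
    for t in range(max_iter):
        a = 2.0 - 2.0 * t / max_iter                 # decreases linearly from 2 towards 0
        for i in range(n_whales):
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            if rng.random() < 0.5:
                if abs(A) < 1:                       # exploitation: encircle the best whale
                    ref = best
                else:                                # exploration: move relative to a random whale
                    ref = whales[rng.integers(n_whales)]
                whales[i] = ref - A * np.abs(C * ref - whales[i])
            else:                                    # bubble-net spiral around the best whale
                l = rng.uniform(-1.0, 1.0)
                dist = np.abs(best - whales[i])
                whales[i] = dist * np.exp(b * l) * np.cos(2 * np.pi * l) + best
            whales[i] = np.clip(whales[i], lower, upper)
            f = objective(whales[i])
            if f < best_fit:
                best, best_fit = whales[i].copy(), f
        history.append(best_fit)
    return best, best_fit, history


def toy_objective(v):
    # Hypothetical stand-in for "decode v, train the model, return validation RMSE".
    return float(np.sum((v - 0.3) ** 2))


best, best_fit, history = woa_minimize(toy_objective, lower=[-1.0] * 4, upper=[1.0] * 4)
print(best.round(3), best_fit)
```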

3.5. Analysis of Prediction Results

The CNN-LSTM-Transformer forecasting method is applied to the two randomly selected test sets, which were not used in training: the summer data of transformer no. 49 and the winter data of transformer no. 79. Its performance is analyzed on each dataset.

Table 7 presents the error statistics for the two test samples. As the data in the table indicate, the CNN-LSTM-Transformer combination model performs favorably on large-scale datasets. The MAE between the predicted and actual values remains below 0.5 percent, which reflects the accuracy and generalization ability of the proposed method.

Figures 9 and 10 compare the forecasted and actual oil temperatures for transformers no. 49 and no. 79. As the figures show, the forecasted oil temperatures are highly precise. The predicted peak values deviate slightly, by approximately 1 °C, but the overall trend of the predicted values closely follows that of the actual values. To examine the uncertainty of the model's predictions visually, we also plotted the 95% confidence interval of the predicted values, which shows the reliability and fluctuation range of the prediction results. In each comparison chart, the actual values (blue solid line) and predicted values (red solid line) are accompanied by two gray dashed lines marking the upper and lower bounds of the 95% confidence interval. This interval reflects, to some extent, the statistical uncertainty of the model's predictions. The higher the proportion of actual values that fall within it, the more reliable the predictions, provided the interval is not excessively wide. As the figures show, most actual values lie within the confidence interval. This indicates the robustness of the proposed model and provides a more comprehensive and reliable basis for decision making in practical applications.
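The text does not state how the 95% interval was constructed. The sketch below uses one common, simple choice, purely as an assumption: a symmetric Gaussian band equal to the prediction plus or minus 1.96 times the standard deviation of residuals from a held-out calibration set. It also computes empirical coverage, which is the fraction of actual values inside the band that the paragraph above appeals to. It reports the mean band width as well, because coverage alone can be made trivially high by widening the interval. The function names and the synthetic series are hypothetical, and no values from the paper are used.

```python
import numpy as np


def gaussian_interval(pred, calib_residuals, z=1.96):
    """Symmetric ~95% band, assuming roughly Gaussian residuals with constant
    spread (an assumption; the paper does not state its method)."""
    sigma = np.std(calib_residuals, ddof=1)
    return pred - z * sigma, pred + z * sigma


def coverage(actual, lower, upper):
    """Fraction of actual values that fall inside [lower, upper]."""
    inside = (actual >= lower) & (actual <= upper)
    return float(np.mean(inside))


# Synthetic example only: 1440 one-minute points with 0.2 °C Gaussian error.
rng = np.random.default_rng(0)
t = np.arange(1440)
actual = 45.0 + 5.0 * np.sin(2.0 * np.pi * t / 1440)
pred = actual + rng.normal(0.0, 0.2, size=t.size)
calib_residuals = rng.normal(0.0, 0.2, size=500)  # e.g. validation-set errors

band_lo, band_hi = gaussian_interval(pred, calib_residuals)
cov = coverage(actual, band_lo, band_hi)
width = float(np.mean(band_hi - band_lo))
print(f"coverage={cov:.3f}, mean width={width:.3f} °C")
```

A time-varying band (for example, from quantile regression or an ensemble) would follow the same coverage check. Only the construction of `band_lo` and `band_hi` would change.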

To visualize the error between the predicted and actual values, we plotted a residual line chart. Figure 11 shows the residual line chart of the proposed prediction model, covering 1440 time points within a single day on 1 August 2023. As the figure shows, most errors are concentrated around zero, indicating high predictive accuracy, with the predicted results very close to the actual values. Some points have larger errors, but they account for a small proportion of the dataset, and all prediction errors remain below 0.5 °C in absolute value, demonstrating the model's robustness and reliability.
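The residual statistics quoted above (errors concentrated around zero, with all absolute errors under 0.5 °C) can be computed directly from the two series. Note that 1440 points in one day corresponds to one-minute sampling (24 × 60 = 1440). The helper below is a hypothetical sketch. The sign convention (actual minus predicted) and the 0.5 °C tolerance mirror the text, and the four-point example is invented. The same summary can be applied to each model's residuals when comparing them as in Figure 15.

```python
import numpy as np


def residual_summary(actual, predicted, tol=0.5):
    """Summarize residuals r = actual - predicted (same units as the data)."""
    residuals = np.asarray(actual, dtype=float) - np.asarray(predicted, dtype=float)
    return {
        "mean": float(residuals.mean()),                 # bias; near 0 is good
        "std": float(residuals.std(ddof=1)),             # spread around the bias
        "max_abs": float(np.abs(residuals).max()),       # worst-case error
        "frac_within_tol": float(np.mean(np.abs(residuals) <= tol)),
    }


# Tiny hand-made example (not data from the paper), values in °C.
actual = [40.0, 40.5, 41.0, 41.2]
predicted = [40.1, 40.4, 41.3, 41.2]
s = residual_summary(actual, predicted)
print(s)
```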

3.6. Comparison Experiment

To further verify the prediction accuracy of the CNN-LSTM-Transformer model for oil temperature prediction, we compare its performance with several widely used prediction methods under the same dataset and feature conditions. The selected methods are Informer, TCN, and LSTM-Attention. All models use the same features, the same data preprocessing, and the same sliding-window approach to construct samples. All are trained on the same dataset with similar hyperparameter configurations to ensure a fair comparison. The test sample is the summer data of transformer no. 49, from which the predictions for 15 time steps starting at 16:00 on 1 August are extracted. Figure 12 shows the prediction results of the four models.
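All four models build their samples with a sliding window. The sketch below shows that step under stated assumptions: each sample takes `window` consecutive rows of the feature matrix as input, and the label is the target value `horizon` steps after the window ends. The window length, horizon, and feature columns are hypothetical, since the text does not specify them here.

```python
import numpy as np


def sliding_windows(features, target, window, horizon=1):
    """Build supervised samples from a multivariate time series.

    X[k] = features[k : k + window]            (shape: window x n_features)
    y[k] = target[k + window + horizon - 1]    (value `horizon` steps ahead)
    """
    features = np.asarray(features, dtype=float)
    target = np.asarray(target, dtype=float)
    n = len(features) - window - horizon + 1
    if n <= 0:
        raise ValueError("series is too short for this window and horizon")
    X = np.stack([features[k:k + window] for k in range(n)])
    y = target[window + horizon - 1:window + horizon - 1 + n]
    return X, y


# Toy series: 10 time steps, 3 hypothetical features (e.g. load, ambient
# temperature, oil temperature); the oil temperature column is the target.
feats = np.arange(30, dtype=float).reshape(10, 3)
X, y = sliding_windows(feats, feats[:, 2], window=4, horizon=1)
print(X.shape, y.shape)
```

For a multi-step forecast such as the 15-step comparison, one option is to set `horizon` per step. Another is to collect the next 15 target values as a vector label. Which option the authors used is not stated.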

As depicted in Figure 12, the oil temperature curves predicted by all four models closely track the actual curve, and the CNN-LSTM-Transformer model achieves higher prediction accuracy and lower error than the other models. The evaluation metrics of each model on the test samples are summarized in Table 8.

As the table shows, the CNN-LSTM-Transformer model developed in this paper achieves a maximum RMSE of 0.4255 and a MAPE of 0.6048% in the oil temperature prediction task, outperforming the other models on all accuracy metrics. Relative to the other three prediction models, its prediction accuracy improves by 13.74%, 36.66%, and 43.36%. The TCN model has the fastest inference, but its prediction accuracy is the lowest of the four, which we attribute to its simpler structure. The Informer model achieves good prediction accuracy, but its inference time is longer because of the extensive self-attention computations required during inference. LSTM-Attention and the proposed model have inference times between these two, offering a good balance, and the proposed model has the highest prediction accuracy, with substantially lower prediction errors. It is therefore particularly suitable for scenarios that demand very high prediction accuracy, such as power equipment condition monitoring and fault warning. In these applications, high-precision predictions help avoid false alarms and missed detections and thus support the safe operation of the power system. These results demonstrate the strong performance of the proposed model in oil temperature prediction tasks.
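For reference, the error metrics used in this section can be written compactly. The text does not state which metric the 13.74%, 36.66%, and 43.36% improvements are computed on. The `relative_improvement` helper therefore assumes they are percentage reductions of an error metric (such as RMSE) relative to each baseline model. The arrays in the example are invented and are not the paper's data.

```python
import numpy as np


def mae(y, yhat):
    """Mean absolute error, in the units of y (°C for oil temperature)."""
    return float(np.mean(np.abs(np.asarray(y, float) - np.asarray(yhat, float))))


def rmse(y, yhat):
    """Root mean squared error, in the units of y."""
    return float(np.sqrt(np.mean((np.asarray(y, float) - np.asarray(yhat, float)) ** 2)))


def mape(y, yhat):
    """Mean absolute percentage error in percent; requires nonzero y."""
    y, yhat = np.asarray(y, float), np.asarray(yhat, float)
    return float(np.mean(np.abs((y - yhat) / y)) * 100.0)


def relative_improvement(err_baseline, err_proposed):
    """Percentage reduction of an error metric relative to a baseline (assumed definition)."""
    return (err_baseline - err_proposed) / err_baseline * 100.0


y_true = [50.0, 52.0, 54.0, 56.0]
y_pred = [50.5, 51.5, 54.0, 56.5]
print(mae(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
print(relative_improvement(0.5, 0.4))  # 0.5 -> 0.4 is a 20% reduction
```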

During training, we use the mean squared error (MSE) as the loss function. Figure 13 shows the training loss over the training epochs. The loss is relatively high at the start of training, decreases gradually as training progresses, and stabilizes after a certain number of epochs. The RMSE metric was also tracked during training, and as shown in Figure 14, it likewise decreases gradually and stabilizes. These findings indicate that the model converges well and progressively learns the patterns in the data.
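The relation between the MSE training loss and the monitored RMSE can be seen in a small, self-contained example. The sketch below trains a linear model with plain gradient descent on synthetic data, minimizing MSE and logging RMSE at each epoch. The linear model is only a stand-in for the CNN-LSTM-Transformer. Because RMSE is the square root of MSE on the same data, the two curves fall and flatten together. RMSE is simply easier to read because it is expressed in the target's units (°C for oil temperature). The learning rate, epoch count, and data are arbitrary assumptions.

```python
import numpy as np

# Synthetic regression data (illustration only, not transformer data).
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
true_w = np.array([0.8, -0.5, 0.3])
y = X @ true_w + 0.1 * rng.normal(size=200)

w = np.zeros(3)
lr = 0.1
mse_log, rmse_log = [], []
for epoch in range(50):
    err = X @ w - y
    mse = float(np.mean(err ** 2))       # training loss being minimized
    mse_log.append(mse)
    rmse_log.append(float(np.sqrt(mse)))  # monitored metric, in target units
    grad = 2.0 * X.T @ err / len(y)      # gradient of the MSE with respect to w
    w -= lr * grad

print(f"epoch 0: MSE={mse_log[0]:.3f}, RMSE={rmse_log[0]:.3f}")
print(f"epoch {len(mse_log) - 1}: MSE={mse_log[-1]:.4f}, RMSE={rmse_log[-1]:.4f}")
```

If RMSE is instead computed on a validation set, it is no longer tied to the training loss in this way. It can then rise while the training loss keeps falling, which is the usual sign of overfitting.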

To further validate the predictive performance of the models, we generated residual plots for each model over the 1440 time points of 1 August 2023, showing the distribution of errors between the predicted and actual values. Figure 15 shows the residual plots for the proposed model and the TCN, LSTM-Attention, and Informer models. As shown in the figure, the residuals of the proposed model are the most concentrated, with most errors clustered around zero and all absolute residuals below 0.5 °C. The TCN model has the second-best residual performance. In contrast, the residuals of the LSTM-Attention and Informer models are more dispersed, with some errors of around 1 °C, indicating relatively lower prediction accuracy. These results demonstrate the stability and reliability of the proposed model.