Short-Term Electric Load Forecasting Using Deep Learning: A Case Study in Greece with RNN, LSTM, and GRU Networks

Abstract

1. Introduction

2. The Architectures of the Deep Learning Forecasters

2.1. The Recurrent Neural Network

2.2. The Long Short-Term Memory Model

2.3. The Gated Recurrent Unit Model

3. Problem Statement and Dataset Processing

3.1. The Electric Load Forecasting Problem

3.2. The Dataset of the Greek Power Systems—Characteristics and Processing

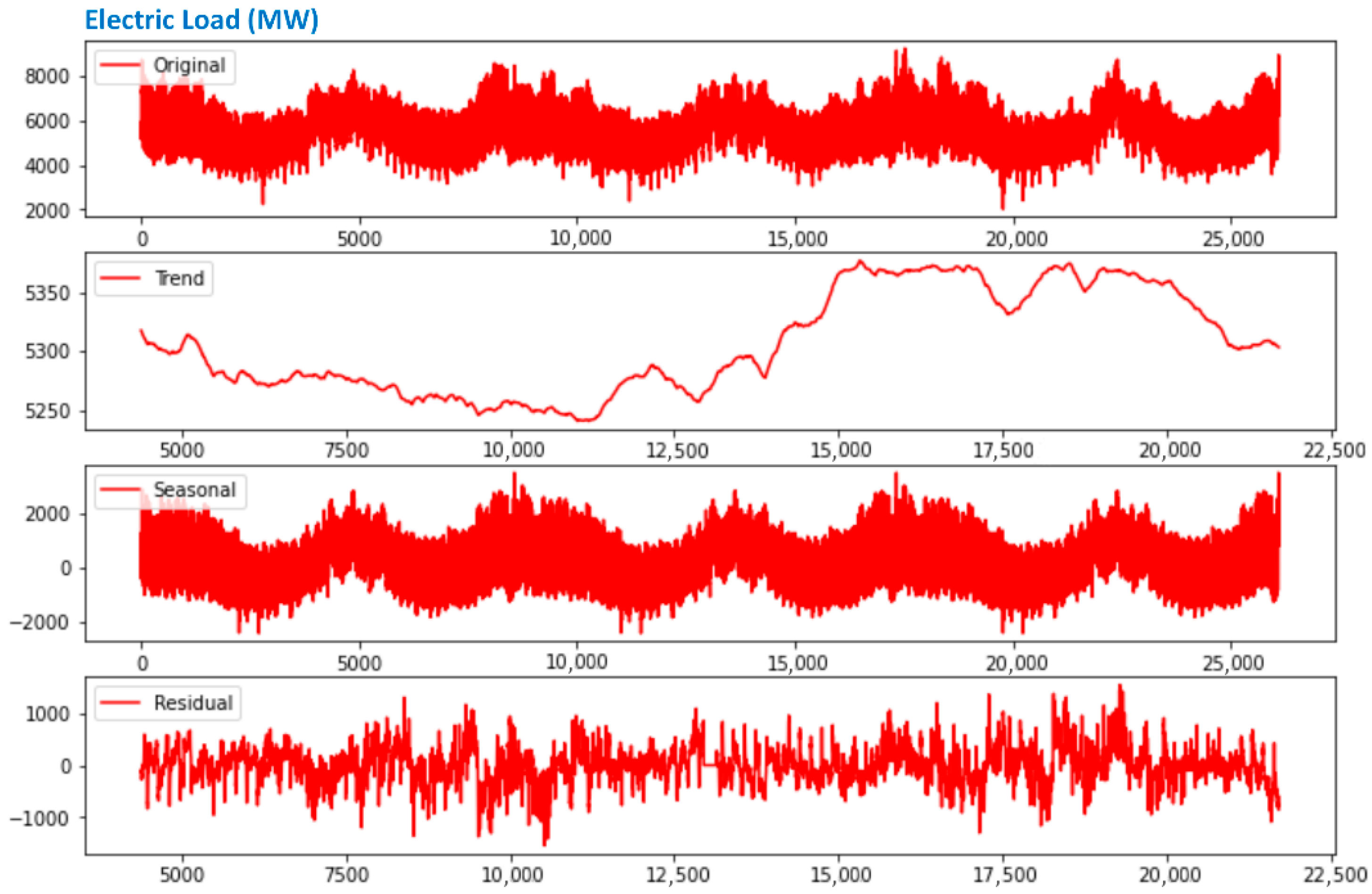

- Seasonality in time-series data represents recurring patterns that appear at consistent intervals—such as daily, weekly, monthly, or annually. This feature is common across various datasets, including those related to sales, weather, and transportation. Recognizing seasonal patterns is crucial for precise forecasting and uncovering hidden trends within the data.

- Trend refers to the overall direction in which time-series data move over an extended period: upward, downward, or stable. It reflects long-term behavior and may be influenced by factors such as economic shifts or demographic changes. Detecting trends is essential for understanding the data’s structure and generating reliable forecasts. Techniques like linear regression, moving averages, and exponential smoothing are commonly used to identify trends. Additionally, a trend may shift over time (a phenomenon known as a change point), which analysts should account for.

- Cyclic behavior involves patterns in time-series data that repeat over periods longer than seasonal cycles, often spanning several years. These cycles may be driven by macro-level factors such as economic trends. For instance, indicators like GDP, employment, and production often display cyclic behavior in economic data. Recognizing these cycles aids in grasping deeper data patterns and improving prediction accuracy.

- Irregular fluctuations refer to random or unexpected variations in a time series that cannot be attributed to trend, seasonality, or cycles. Such irregularities may arise from unpredictable events like natural disasters or sudden shifts in demand. Understanding these anomalies is important for fully interpreting the data and enhancing the accuracy of forecasts.
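The four components listed above can be illustrated with a classical additive decomposition. The following is a minimal sketch on a synthetic hourly series, not the procedure used in this study: the function names, the 24 h period, and the synthetic load values are assumptions chosen for illustration only. The trend is estimated with a centered moving average, the seasonal component with the per-phase mean of the detrended series, and the irregular component is what remains.

```python
import math
import random


def centered_moving_average(series, period):
    """Estimate the trend with a centered moving average of length `period`."""
    half = period // 2
    trend = [None] * len(series)  # undefined near the two edges
    for t in range(half, len(series) - half):
        window = series[t - half:t + half + 1]
        total = sum(window)
        if period % 2 == 0:
            # Even period: the window holds period + 1 points, so the two
            # endpoints receive half weight (the usual 2 x MA smoother).
            total -= 0.5 * (window[0] + window[-1])
        trend[t] = total / period
    return trend


def decompose_additive(series, period):
    """Split a series into trend + seasonal + residual (additive model)."""
    trend = centered_moving_average(series, period)
    # Average the detrended values separately for each phase of the cycle.
    buckets = [[] for _ in range(period)]
    for t, (y, m) in enumerate(zip(series, trend)):
        if m is not None:
            buckets[t % period].append(y - m)
    phase_means = [sum(b) / len(b) for b in buckets]
    offset = sum(phase_means) / period  # force the seasonal part to sum to zero
    phase_means = [s - offset for s in phase_means]
    seasonal = [phase_means[t % period] for t in range(len(series))]
    residual = [None if m is None else y - m - s
                for y, m, s in zip(series, trend, seasonal)]
    return trend, seasonal, residual


# Synthetic two-week hourly series (illustrative values, not the Greek dataset):
# slow upward trend + daily cycle + random irregular fluctuations.
random.seed(0)
load = [5000 + 2.0 * t + 500 * math.sin(2 * math.pi * t / 24) + random.gauss(0, 20)
        for t in range(24 * 14)]
trend, seasonal, residual = decompose_additive(load, period=24)
```

With a weekly period (168 for hourly data) the same routine would isolate the weekly pattern instead; cyclic behavior spanning several years would need a much longer record than this sketch uses.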

4. Experimental Results

4.1. Data Preprocessing and Visualization Based on the Power BI Tool

- To inspect prediction errors by model, time, and day type;

- To perform visual inspection of high-error cases and model bias during specific time ranges;

- To toggle between models and horizons interactively.
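Outside a dashboard, the same kind of error inspection can be approximated with a simple grouping step. The sketch below is hypothetical and does not reproduce the Power BI report: the record layout `(model, timestamp, actual, predicted)`, the two-way split into working days and weekends, and the toy values are all assumptions. It reports, per model, hour, and day type, the mean absolute error and the mean signed error (a positive bias means over-prediction).

```python
from collections import defaultdict
from datetime import datetime


def day_type(ts):
    """Coarse day-type label (assumed split; holidays are not handled)."""
    return "weekend" if ts.weekday() >= 5 else "working day"


def summarize_errors(records):
    """Group (model, timestamp, actual_mw, predicted_mw) records and
    return the mean absolute error and mean signed error per group."""
    groups = defaultdict(list)
    for model, ts, actual, predicted in records:
        groups[(model, ts.hour, day_type(ts))].append(predicted - actual)
    summary = {}
    for key, errors in groups.items():
        n = len(errors)
        summary[key] = {
            "n": n,
            "mae_mw": sum(abs(e) for e in errors) / n,
            "bias_mw": sum(errors) / n,
        }
    return summary


# Toy records (illustrative values only).
records = [
    ("GRU", datetime(2024, 1, 8, 9), 6000.0, 6060.0),  # Monday
    ("GRU", datetime(2024, 1, 9, 9), 6100.0, 6039.0),  # Tuesday
    ("GRU", datetime(2024, 1, 7, 9), 5000.0, 5100.0),  # Sunday
]
summary = summarize_errors(records)
```

Sorting the groups by `mae_mw` would surface the high-error hours, and restricting `records` to one model or horizon plays the role of the interactive toggle.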

- A drop-down list containing the four seasons.

- A horizontal moving bar that adjusts the limits of the electric load.

- A horizontal moving bar that adjusts the calendar of the training dataset.

- A dropdown list with the names of the seven weekdays.
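The four controls listed above amount to four independent filters on the hourly samples. As a rough sketch, assuming samples are `(timestamp, load)` pairs and that seasons follow the meteorological convention (December to February as winter, and so on), they could be combined as follows. The function name, the season mapping, and the toy values are illustrative assumptions, not part of the dashboard itself.

```python
from datetime import date, datetime

# Assumed meteorological season mapping (month -> season).
SEASON_BY_MONTH = {12: "Winter", 1: "Winter", 2: "Winter",
                   3: "Spring", 4: "Spring", 5: "Spring",
                   6: "Summer", 7: "Summer", 8: "Summer",
                   9: "Autumn", 10: "Autumn", 11: "Autumn"}
WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def filter_samples(samples, season=None, load_range=None,
                   date_range=None, weekday=None):
    """Keep the (timestamp, load_mw) samples that pass every active filter.
    A filter left as None is inactive, like an unset slicer."""
    selected = []
    for ts, load in samples:
        if season is not None and SEASON_BY_MONTH[ts.month] != season:
            continue
        if load_range is not None and not (load_range[0] <= load <= load_range[1]):
            continue
        if date_range is not None and not (date_range[0] <= ts.date() <= date_range[1]):
            continue
        if weekday is not None and WEEKDAYS[ts.weekday()] != weekday:
            continue
        selected.append((ts, load))
    return selected


# Toy samples (illustrative values only).
samples = [
    (datetime(2024, 1, 7, 9), 5000.0),    # Sunday, winter
    (datetime(2024, 1, 8, 9), 6000.0),    # Monday, winter
    (datetime(2024, 7, 15, 14), 7000.0),  # Monday, summer
    (datetime(2024, 10, 6, 20), 5500.0),  # Sunday, autumn
]
winter_mondays = filter_samples(samples, season="Winter", weekday="Monday")
```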

4.2. Results and Analysis

4.2.1. One-Step-Ahead Prediction: Overall Performance and Comparative Analysis

4.2.2. One-Step-Ahead Prediction: Seasonal and Day-Type Performance—Special Days

- On working days, the morning and evening peak loads, as well as the first minimum load, show a consistent pattern across all seasons. However, during spring and autumn working days, the evening minimum is more clearly defined compared to winter and summer.

- On Sundays, the load curve differs significantly from that of working days, both in shape and in seasonal variation. Notably, after 6 p.m., the Sunday load curves in autumn and spring resemble those of the corresponding working days.

- Despite variations across seasons and day types, the deep learning prediction model effectively captures the actual load curves, accurately identifying the peak and minimum values. Sundays pose a greater challenge for prediction due to lower demand and differing social behavior. Nevertheless, GRU performs well in tracking the transition between weekends and working days.

4.2.3. One-Step-Ahead Prediction: A Generalization Scenario

4.2.4. Two- and Twenty-Four-Step-Ahead Prediction

- The GRU predictor, which occupies the first, third, and fourth places in the ranking, exhibits the best performance, consistent with its ranking in the previous two cases.

- The LSTM does not appear in Table 7. In fact, the best LSTM performance is the seventh best among all predictors.

- The RNN appears twice in Table 7 and also holds sixth place, attaining prediction errors similar to those of the GRU.

- The longest input vector yielded the best performance. Therefore, for the 24 h forecast horizon, the deep learning models require prior knowledge from the same day of the previous week.

- The reported values show that the broader forecasting horizon leads to considerably less accurate predictions, since a MAPE greater than 2% does not correspond to trustworthy predictions. The information that can be extracted from such predictions is limited to the trend of the next day’s load curve.
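The link between a 168 h input vector and the same day of the previous week can be made concrete with a sliding-window sketch. The code below assumes a direct multi-output formulation, in which each sample pairs `input_length` past hourly loads with the next `horizon` loads; whether the models in this study were trained this way is not stated in the list above, and the function name is hypothetical.

```python
def make_windows(series, input_length, horizon):
    """Build (inputs, targets) pairs from an hourly series.

    inputs  = `input_length` consecutive past values
    targets = the `horizon` values that immediately follow them
    """
    samples = []
    last_start = len(series) - input_length - horizon
    for start in range(last_start + 1):
        split = start + input_length
        samples.append((series[start:split], series[split:split + horizon]))
    return samples


# Use the hour index itself as a stand-in series so the alignment is visible.
hours = list(range(400))
windows = make_windows(hours, input_length=168, horizon=24)
inputs, targets = windows[0]

# The first input lies exactly 168 h (one week) before the first target,
# i.e. the same hour of the same weekday in the previous week.
lag_hours = targets[0] - inputs[0]
```

With shorter inputs (24, 48, or 96 h) the window never reaches back to the previous week's matching day, which is consistent with the observation that the longest input vector performed best at the 24 h horizon.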

5. Conclusions

- Integration of forecasting models with an interactive Power BI dashboard for real-time visualization and decision support.

- Systematic exploration of model configurations, input/output vector lengths, and training parameters to fine-tune performance.

- Incorporating multivariate inputs such as temperature, mobility, and electricity price.

- Exploring hybrid and attention-based models (e.g., transformers) to improve long-range prediction fidelity.

- Applying explainability tools (e.g., SHAP and LIME) to enhance trust and transparency in decision support.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| STLF | Short-Term Load Forecasting |
| CI | Computational Intelligence |
| ANN | Artificial Neural Network |
| ARIMA | Auto-Regressive Integrated Moving Average |
| GA | Genetic Algorithm |
| PSO | Particle Swarm Optimization |
| DL | Deep Learning |
| LSTM | Long Short-Term Memory |
| RNN | Recurrent Neural Network |
| GRU | Gated Recurrent Unit |
| RMSE | Root Mean Squared Error |
| MAPE | Mean Absolute Percentage Error |


| Hyperparameter | Value |
|---|---|
| Number of layers | 2 |
| Neurons per layer | 40, 100, 150, 200, 400, 500 |
| Input length (hours) | 6, 12, 32, 48, 48, 96, 168 |
| Forecast horizon | 1, 2, 24 |
| Batch size | 12–360 |
| Optimizer | Adam |
| Dropout | 0.35 |
| Error function | Root Mean Squared Error |
| Bias | Yes |
| Learning rate | 0.001 |

| Model | Neurons | Parameters | Batch Size | Input Length | Time (s) | RMSE | MAPE |
|---|---|---|---|---|---|---|---|
| (1) GRU | 200 | 363,201 | 16 | 32 | 487 | 83.2 | 1.18 |
| (2) GRU | 500 | 3,006,501 | 16 | 32 | 348 | 83.4 | 1.18 |
| (3) LSTM | 500 | 3,006,501 | 16 | 32 | 585 | 83.8 | 1.18 |
| (4) GRU | 200 | 363,201 | 16 | 32 | 273 | 83.6 | 1.19 |
| (5) GRU | 400 | 1,925,201 | 12 | 48 | 524 | 86.1 | 1.23 |
| (6) LSTM | 200 | 482,601 | 16 | 32 | 505 | 86.7 | 1.23 |
| (7) LSTM | 200 | 482,601 | 12 | 48 | 545 | 87.8 | 1.24 |
| (8) LSTM | 150 | 271,950 | 12 | 48 | 662 | 88.2 | 1.25 |
| (9) LSTM | 400 | 1,925,201 | 12 | 48 | 761 | 87.9 | 1.26 |
| (10) GRU | 150 | 271,951 | 12 | 48 | 525 | 88.7 | 1.27 |
| (11) RNN | 200 | 120,801 | 80 | 48 | 330 | 115.1 | 1.71 |
| (12) RNN | 40 | 4,961 | 24 | 48 | 808 | 115.4 | 1.73 |
| (13) RNN | 40 | 4,961 | 24 | 96 | 504 | 118.2 | 1.74 |
| (14) RNN | 200 | 120,801 | 120 | 96 | 1389 | 123.1 | 1.82 |
| (15) RNN | 40 | 4,961 | 240 | 168 | 519 | 130.48 | 1.92 |
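The Parameters column above can be related to the layer sizes with the standard parameter-count formulas for recurrent layers. The sketch below is an illustration under stated assumptions: two stacked recurrent layers, a single input feature per time step, one linear output neuron, and a GRU variant with two bias vectors per gate (the convention of Keras `GRU` with `reset_after=True`). Under those assumptions the formulas reproduce the 200-neuron entries (363,201 for GRU, 482,601 for LSTM, 120,801 for RNN) and the 40-neuron RNN entry (4,961). The function names are hypothetical.

```python
def simple_rnn_params(n_inputs, units):
    """Input weights + recurrent weights + one bias vector."""
    return units * (n_inputs + units + 1)


def lstm_params(n_inputs, units):
    """Four gate blocks, each with the size of a simple RNN layer."""
    return 4 * units * (n_inputs + units + 1)


def gru_params(n_inputs, units):
    """Three gate blocks with two bias vectors each (assumed convention)."""
    return 3 * units * (n_inputs + units + 2)


def stacked_params(cell_params, units, n_features=1, n_layers=2, n_outputs=1):
    """Total trainable parameters of stacked recurrent layers + dense output."""
    total = cell_params(n_features, units)          # first layer sees the raw input
    for _ in range(n_layers - 1):
        total += cell_params(units, units)          # deeper layers see `units` inputs
    total += n_outputs * (units + 1)                # dense output layer with bias
    return total


gru_200 = stacked_params(gru_params, 200)
lstm_200 = stacked_params(lstm_params, 200)
rnn_200 = stacked_params(simple_rnn_params, 200)
rnn_40 = stacked_params(simple_rnn_params, 40)
```

Because these counts do not depend on the input length, models that differ only in the number of past hours fed to the network share the same parameter total.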

| Model | RMSE (Lower Bound) | RMSE (Upper Bound) | MAPE (Lower Bound) | MAPE (Upper Bound) |
|---|---|---|---|---|
| (1) GRU | 81.9 | 84.4 | 0.74 | 1.41 |
| (2) GRU | 82.1 | 84.6 | 0.75 | 1.44 |
| (3) LSTM | 82.3 | 85.3 | 0.72 | 1.48 |
| (4) GRU | 80.1 | 87.1 | 0.75 | 1.53 |
| (5) GRU | 84.6 | 87.5 | 0.75 | 1.47 |
| (6) LSTM | 83.8 | 89.6 | 0.76 | 1.59 |
| (7) LSTM | 84.6 | 90.8 | 0.76 | 1.57 |
| (8) LSTM | 84.8 | 91.6 | 0.72 | 1.59 |
| (9) LSTM | 83.9 | 91.8 | 0.74 | 1.61 |
| (10) GRU | 83.6 | 93.8 | 0.78 | 1.55 |
| (11) RNN | 94.9 | 135.2 | 1.41 | 2.02 |
| (12) RNN | 111.8 | 118.9 | 1.67 | 1.79 |
| (13) RNN | 111.6 | 124.7 | 1.64 | 1.84 |
| (14) RNN | 103.8 | 142.3 | 1.52 | 2.12 |
| (15) RNN | 105.0 | 155.9 | 1.55 | 2.29 |

| Error in MW | >100 | >200 | >400 | >500 |
|---|---|---|---|---|
| Hours | 1481 | 239 | 58 | 9 |
| Time percentage | 16.86% | 2.72% | 0.66% | 0.10% |
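A table of this kind can be produced by counting the hours whose absolute error exceeds each threshold. The following sketch uses toy values and a hypothetical function name. The percentages reported above are consistent with a test set of 8784 hourly samples (366 days), since 1481/8784 is about 16.86%; that sample count is an inference from the table rather than a figure quoted from the text.

```python
def exceedance_table(actual, predicted, thresholds=(100, 200, 400, 500)):
    """For each threshold (MW), return (hours above it, share of all hours in %)."""
    errors = [abs(a - p) for a, p in zip(actual, predicted)]
    n = len(errors)
    table = {}
    for threshold in thresholds:
        hours = sum(1 for e in errors if e > threshold)
        table[threshold] = (hours, 100.0 * hours / n)
    return table


# Toy hourly values in MW (illustrative only): errors are 50, 150, 250, 550.
actual = [5000.0, 5200.0, 5400.0, 5600.0]
predicted = [5050.0, 5350.0, 5150.0, 6150.0]
table = exceedance_table(actual, predicted)

# Consistency check of the reported shares against 8784 hourly samples.
reported_hours = {100: 1481, 200: 239, 400: 58, 500: 9}
shares = {thr: round(100.0 * h / 8784, 2) for thr, h in reported_hours.items()}
```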

| Season | MAPE Testing | RMSE Testing |
|---|---|---|
| Winter | 1.21% | 92.4 |
| Spring | 1.31% | 82.1 |
| Summer | 0.89% | 55.3 |
| Autumn | 1.25% | 89.9 |
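For reference, the two error measures in this table follow their standard definitions: RMSE is the square root of the mean squared error, and MAPE is the mean of the absolute errors divided by the actual values, expressed in percent. A minimal sketch of a per-season evaluation is given below; the sample layout `(season, actual, predicted)` and the toy values are assumptions for illustration.

```python
import math
from collections import defaultdict


def rmse(actual, predicted):
    """Root mean squared error, in the units of the load."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent (actual values must be nonzero)."""
    n = len(actual)
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / n


def metrics_by_season(samples):
    """Compute MAPE and RMSE separately for each season label."""
    grouped = defaultdict(lambda: ([], []))
    for season, actual, predicted in samples:
        grouped[season][0].append(actual)
        grouped[season][1].append(predicted)
    return {season: {"mape_pct": mape(a, p), "rmse": rmse(a, p)}
            for season, (a, p) in grouped.items()}


# Toy samples (illustrative values only).
samples = [("Winter", 100.0, 110.0), ("Winter", 200.0, 180.0),
           ("Summer", 400.0, 404.0)]
metrics = metrics_by_season(samples)
```

Because MAPE is relative to the actual load while RMSE is absolute, the two measures need not rank the seasons in the same order, as the table above shows for spring and winter.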

| Model | Neurons | Parameters | Batch Size | Input Length | RMSE | MAPE |
|---|---|---|---|---|---|---|
| GRU | 200 | 363,201 | 12 | 96 | 121.7 | 1.69 |
| LSTM | 200 | 482,601 | 12 | 96 | 131.4 | 1.83 |
| GRU | 200 | 363,201 | 12 | 24 | 132.8 | 1.85 |
| LSTM | 200 | 482,601 | 12 | 168 | 133.6 | 1.87 |
| GRU | 200 | 363,201 | 12 | 48 | 134.5 | 1.88 |

| Model | Neurons | Parameters | Batch Size | Input Length | RMSE | MAPE |
|---|---|---|---|---|---|---|
| GRU | 200 | 363,201 | 24 | 168 | 343.9 | 4.67 |
| RNN | 200 | 330,824 | 96 | 168 | 362.2 | 5.12 |
| GRU | 40 | 41,544 | 24 | 168 | 380.1 | 5.32 |
| GRU | 200 | 363,201 | 96 | 168 | 380.3 | 5.40 |
| RNN | 40 | 14,984 | 48 | 168 | 374.9 | 5.44 |

| Model | Neurons | 24 Inputs | 48 Inputs | 96 Inputs | 168 Inputs |
|---|---|---|---|---|---|
| RNN | 40 | 6.04 | 6.09 | 5.78 | 5.44 |
| LSTM | 40 | 6.74 | 6.78 | 6.61 | 6.16 |
| GRU | 40 | 6.13 | 6.06 | 5.84 | 5.32 |

| Model | Neurons | 24 Inputs | 48 Inputs | 96 Inputs | 168 Inputs |
|---|---|---|---|---|---|
| RNN | 200 | 5.86 | 5.77 | 6.11 | 5.12 |
| LSTM | 200 | 6.40 | 6.42 | 6.26 | 5.49 |
| GRU | 200 | 5.72 | 5.60 | 5.69 | 4.75 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zelios, V.; Mastorocostas, P.; Kandilogiannakis, G.; Kesidis, A.; Tselenti, P.; Voulodimos, A. Short-Term Electric Load Forecasting Using Deep Learning: A Case Study in Greece with RNN, LSTM, and GRU Networks. Electronics 2025, 14, 2820. https://doi.org/10.3390/electronics14142820
