1. Introduction

The rapid advancement of the Fourth Industrial Revolution and the ongoing digital transformation have led to a dramatic increase in internet usage, accompanied by a surge in network-targeted cyberattacks. According to the Internet Security Threat Report (ISTR), approximately 3.037 billion attacks were recorded between 2016 and 2020 [1]. By 2019, the global economic impact of cybercrime had already surpassed $1 trillion [2]. As the cybersecurity landscape continues to evolve, cyberattacks are becoming increasingly sophisticated, leveraging advanced tactics to evade traditional defenses. Conventional network intrusion detection systems (IDSs), which are primarily based on signature matching, remain effective at identifying known attack patterns. However, their performance degrades significantly when they face novel attack vectors or previously unseen anomalous behaviors. To address these limitations, there has been growing interest in applying machine learning (ML) and deep learning (DL) techniques to intrusion detection to enhance detection accuracy and adaptability [3,4]. These data-driven approaches are particularly promising because they can learn complex patterns and generalize to previously unknown threats, making them strong candidates for next-generation IDS solutions.

In this study, we investigate a deep learning-based IDS architecture that leverages the Long Short-Term Memory Autoencoder (LSTM-AE), which combines the temporal modeling capabilities of LSTM networks with the dimensionality reduction and anomaly detection strengths of autoencoders. Specifically, we propose a Convolutional Neural Network Bidirectional LSTM Autoencoder (CNN-BiLSTM-AE) model. This architecture integrates convolutional layers for effective spatial feature extraction and bidirectional LSTM layers to capture temporal dependencies in both forward and backward directions. By simultaneously learning spatial and sequential patterns in network traffic, the proposed model aims to improve the classification of normal and malicious traffic. Through a comprehensive evaluation of this architecture, this study seeks to enhance the accuracy, robustness, and generalizability of intrusion detection systems across diverse network environments.

2. Related Works

Y. Yan et al. highlighted that networks exhibit dynamic characteristics, making feature extraction and traffic type identification critical challenges in intrusion detection research. To address this, they proposed LSTM-AE, a model combining an autoencoder and LSTM, and evaluated it on the UNSW-NB15 dataset [5]. J. Bi et al. proposed SABD (Stacked Sparse Contractive Autoencoder), a hybrid framework for network intrusion detection that uses an attention-based BiLSTM to extract and classify data features [6]. R. Shrestha et al. addressed data manipulation risks in smart power grids by proposing a model combining LSTM and an autoencoder, which employs mean standard deviation (MSD) and median absolute deviation (MAD) for anomaly detection [7]. M. Said Elsayed et al. tackled the lack of labeled training data in anomaly detection by proposing an LSTM-AE combined with a one-class SVM (OC-SVM) for imbalanced datasets [8]. M. Moukhafi et al. developed an ensemble model that uses deep autoencoders for feature extraction and LSTM networks to capture temporal dependencies, enhancing anomaly detection accuracy in intrusion detection systems [9]. L. Touileb et al. proposed a Hybrid LSTM-Autoencoder (HLAE) for IoT security and demonstrated its robustness against data corruption caused by network errors or packet loss [10]. Z. Xu et al. introduced TA-LSTM-AE (Temporal Attention-based LSTM Autoencoder) for multivariate telemetry anomaly detection in satellites, addressing correlations and temporal dependencies among parameters [11]. S. Shi et al. proposed BLSAE-SNIDS (Bi-LSTM Sparse Autoencoder Satellite Network Intrusion Detection System) to overcome the challenges of applying ground-based intrusion detection techniques to satellite networks [12]. G. S. C. Kumar et al. argued that traditional intrusion detection systems, which rely on association rule mining to identify intrusions, suffer from high false alarm rates, limited generalization capabilities, and poor timeliness owing to inadequate extraction of user activity features. They proposed a Deep Residual Convolutional Neural Network (DRCNN) optimized via the Improved Gazelle Optimization Algorithm (IGOA) to enhance network security [13].

I. O. Lopes et al. pointed out that, in real-world scenarios, the limited availability of labeled data can degrade the detection performance of classifiers. To address the shortage of labeled network traffic required to train effective supervised classifiers, they proposed a denoising-AE intrusion detection framework [14]. J. Lu et al. highlighted that intrusion detection is a crucial approach to network security and proposed the Contractive Sparse Stacked Denoising Autoencoder (CSSDAE) model to identify intrusion data effectively [15]. B. A. Manjunatha et al. proposed a Sparse Deep Denoising AutoEncoder that enables network intrusion detection systems to detect the numerous attacks occurring in the internet-centric world and to distinguish normal operations from attacks or intrusions [16]. M. Arafah et al. noted that intrusion detection systems face challenges in handling high-dimensional, large-scale, and imbalanced network traffic. To overcome these issues, they introduced a novel architecture that combines a denoising autoencoder with a Wasserstein GAN (WGAN); the model extracts highly representative features and generates synthetic attacks to address data imbalance [17].

S. Zhang et al. highlighted the vulnerability of cloud computing environments to security threats and emphasized the necessity of intrusion detection techniques. They proposed a CNN-BiLSTM framework to detect anomalous traffic in cloud-based virtual flow data [18]. B. Omarov et al. stressed the need for machine/deep learning models to identify anomalies in smart device behavior, and trained and tested a CNN-BiLSTM model for IoT network intrusion detection using the UNSW-NB15 dataset [19]. M. Chiranjeevi et al. addressed the complexity of solar irradiance prediction for renewable energy production and proposed a hybrid LSTM-AE-CNN-BiLSTM architecture to improve accuracy under volatile conditions [20]. M. Ibrahim et al. noted that anomaly detection aims to distinguish unique features in complex, high-dimensional data, and proposed a hybrid CNN-BiLSTM model that captures hierarchical spatiotemporal feature relationships for anomaly identification [21]. A. Halbouni et al. underscored the growing need for intrusion detection systems as networks expand and attack methods evolve, and proposed a hybrid CNN-LSTM model combining spatial (CNN) and temporal (LSTM) feature extraction [22]. S. Lee et al. identified anomaly detection in smart metering data as critical for power system reliability and employed a BiLSTM-AE framework to detect anomalous data points [23]. E. Duman et al. tackled video anomaly detection by using a CNN to analyze frame structure and an LSTM to track temporal dependencies, proposing a ConvLSTM-Autoencoder that learns patterns in normal video sequences [24].

3. Design of Intrusion Detection Machine Learning Models

The Canadian Institute for Cybersecurity’s Intrusion Detection System 2018 (CICIDS2018) dataset is large-scale, high-dimensional, and encompasses diverse network attack types [25]. In this study, we present an unsupervised learning approach designed to autonomously learn patterns from complex data structures for outlier detection and classification of normal and attack traffic. The model is trained solely on the intrinsic features of the data without label information, and classification is performed through reconstruction-error-based thresholding.

3.1. Dataset

3.1.1. CICIDS2018

CICIDS2018 is a publicly available benchmark dataset for network intrusion detection. It was developed to create abstract user behavior profiles and build a comprehensive intrusion detection dataset encompassing diverse network events. To achieve this, the dataset emulates real enterprise networks by combining normal traffic with multiple attack types, while incorporating state-of-the-art attack patterns to support both IDS and machine learning-based security research.

The dataset comprises realistic network traffic data where the attacker infrastructure is configured with 50 PCs, and the target organization is structured into five departments with 30 servers and 420 PCs. Network traffic and system logs were collected from all devices, and 80 network features were extracted from the captured traffic for analysis.

Table 1 summarizes the categories and counts of normal traffic and attack scenarios in the final dataset.

3.1.2. Data Preprocessing

The CICIDS2018 dataset was preprocessed as follows. All rows containing NaN values were removed, and for duplicate samples only the first occurrence was retained to eliminate redundancy. The categorical variable “Label” was converted into a binary format, assigning 0 to normal traffic and 1 to all attack traffic. To capture temporal characteristics, timestamps were converted to Unix time, and IP addresses were normalized to real values between 0 and 255 after removing the dot separators. For feature selection, Pearson’s correlation coefficients were calculated to identify redundant features, with a threshold of 0.9 used to determine high correlation: based on the upper triangular portion of the correlation matrix, one feature from each pair with a correlation coefficient greater than 0.9 was removed. The removed features and their corresponding correlation coefficients were documented to ensure transparency in variable reduction. This feature selection process reduced the number of input variables required for model training, thereby decreasing computational complexity and mitigating the risk of overfitting. Finally, Min–Max scaling was applied to rescale all feature values to the range [0, 1] without altering the shape of each feature’s distribution. Min–Max scaling is defined by Equation (1):

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \qquad (1)$$

where $x$ is an original feature value, $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of that feature, and $x'$ is the scaled value.
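The cleaning, correlation-filtering, and Min–Max steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the row and column layouts, the helper names `clean_rows`, `drop_correlated`, and `min_max_scale`, the benign label string `"Benign"`, and the use of the absolute correlation |r| are all hypothetical choices; a production pipeline would more likely use pandas on the merged CSV files.

```python
import math


def clean_rows(rows, benign_label="Benign"):
    """Drop rows containing NaN, keep the first of any duplicates, binarize 'Label'.

    rows: list of dicts mapping column name -> value (hypothetical layout).
    benign_label: assumed label string for normal traffic.
    """
    seen, cleaned = set(), []
    for row in rows:
        if any(isinstance(v, float) and math.isnan(v) for v in row.values()):
            continue  # remove any row that contains a NaN value
        key = tuple(sorted(row.items()))
        if key in seen:
            continue  # duplicate: only the first occurrence is retained
        seen.add(key)
        cleaned.append(dict(row, Label=0 if row["Label"] == benign_label else 1))
    return cleaned


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length numeric columns."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    if sa == 0 or sb == 0:
        return 0.0  # constant column: r is undefined; treated as uncorrelated here
    return cov / (sa * sb)


def drop_correlated(columns, threshold=0.9):
    """Upper-triangle filter: drop column j if |r(i, j)| > threshold for some i < j.

    Returns the kept columns and a log {dropped_name: (partner_name, r)}.
    """
    names = list(columns)
    dropped = {}
    for j in range(1, len(names)):
        for i in range(j):
            r = pearson(columns[names[i]], columns[names[j]])
            if abs(r) > threshold:
                dropped[names[j]] = (names[i], r)
                break
    kept = {name: col for name, col in columns.items() if name not in dropped}
    return kept, dropped


def min_max_scale(values):
    """Rescale one feature column to [0, 1] with x' = (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # constant feature: mapped to 0 (assumption)
    return [(v - lo) / (hi - lo) for v in values]
```

Note that `drop_correlated` returns both the reduced column set and a log of each removed feature with its correlated partner and coefficient, which corresponds to the documentation step described in the text.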

The procedure for the intrusion detection model implemented in this study consists of two stages. First, the model is trained exclusively on normal data, and the resulting model parameters are saved. Next, the saved model is loaded and evaluated using a dataset containing both normal and intrusion data to assess its ability to effectively distinguish between the two classes.

To prepare the datasets, all CSV files are first merged into a single file. A random sample of normal records is then extracted to construct a separate normal dataset, and these extracted samples are removed from the original data so that they cannot reappear during evaluation. The remaining intrusion and normal data are then combined to form a mixed dataset.
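The dataset construction can be sketched as follows, assuming the samples and binary labels are already in memory. The function name `build_datasets`, the fixed random seed, and the separate normal and attack test-set sizes are hypothetical parameters; the paper reports 100,000 training and 100,000 test samples but does not state the normal-to-attack ratio of the mixed set.

```python
import random


def build_datasets(samples, labels, n_train, n_test_normal, n_test_attack, seed=0):
    """Split labelled samples into a normal-only training set and a mixed test set.

    labels: 0 = normal, 1 = attack. Training samples are drawn at random from the
    normal pool and removed from it, so they can never reappear in the test set.
    """
    rng = random.Random(seed)
    normal_idx = [i for i, y in enumerate(labels) if y == 0]
    attack_idx = [i for i, y in enumerate(labels) if y == 1]

    # Stage 1: random normal samples for training, removed from the pool.
    train_idx = rng.sample(normal_idx, n_train)
    taken = set(train_idx)
    remaining_normal = [i for i in normal_idx if i not in taken]

    # Stage 2: mixed test set from the remaining normal data plus attack data.
    test_idx = rng.sample(remaining_normal, n_test_normal) + rng.sample(attack_idx, n_test_attack)
    rng.shuffle(test_idx)

    train = [samples[i] for i in train_idx]             # labels unused in training
    test = [(samples[i], labels[i]) for i in test_idx]  # labels kept for evaluation only
    return train, test
```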

3.2. Designing Unsupervised Learning Models

Based on the integrated architecture of LSTM neural networks and autoencoders, a deep learning model was constructed by extending and modifying existing layers. The LSTM layer was adopted as the core component, and the original two-dimensional matrix [Samples, Features] was transformed into a three-dimensional tensor [Samples, Timesteps, Features] to effectively capture the temporal dependencies required for LSTM-based processing. The specific interpretation of each dimension is outlined in Table 2.
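One common way to obtain a [Samples, Timesteps, Features] tensor is a sliding window over consecutive rows. The paper does not state its windowing scheme or timestep count, so the helper `to_windows`, its `stride` parameter, and the assumption that rows are in time order are illustrative only.

```python
def to_windows(rows, timesteps, stride=1):
    """Turn a [Samples, Features] matrix into a [Windows, Timesteps, Features] tensor.

    Consecutive rows (assumed to be in time order) are grouped into windows of
    length `timesteps`; rows that cannot fill a complete final window are dropped.
    """
    if timesteps < 1 or stride < 1:
        raise ValueError("timesteps and stride must be positive")
    return [rows[start:start + timesteps]
            for start in range(0, len(rows) - timesteps + 1, stride)]
```

With `timesteps=1` the function simply adds a length-1 time axis to each sample; with `stride=timesteps` the windows do not overlap.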

3.2.1. Model Structure

The deep learning-based intrusion detection model proposed in this study employs an autoencoder as its core architecture. An autoencoder is a neural network comprising an encoder and a decoder component. The encoder transforms input data into a latent vector, extracting essential features while filtering out redundant noise or information. The decoder then reconstructs the original data from this compressed latent representation, with training focused on minimizing the reconstruction error. Autoencoders typically adopt a symmetrical structure, enabling efficient feature learning.
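The reconstruction error that the autoencoder minimizes can be illustrated as a mean squared error over one [Timesteps, Features] window. The source does not name its loss function, so MSE and the helper `reconstruction_error` are assumptions.

```python
def reconstruction_error(x, x_hat):
    """Mean squared error between an input window and its reconstruction.

    x, x_hat: nested lists shaped [Timesteps][Features]. Training minimizes this
    quantity on normal data; at test time it can serve as the anomaly score.
    """
    flat_x = [v for step in x for v in step]
    flat_hat = [v for step in x_hat for v in step]
    if len(flat_x) != len(flat_hat):
        raise ValueError("input and reconstruction must have the same shape")
    return sum((a - b) ** 2 for a, b in zip(flat_x, flat_hat)) / len(flat_x)
```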

LSTM-AE

LSTM-AE is a hybrid model designed for anomaly detection and sequence prediction in time-series data. It integrates a Long Short-Term Memory (LSTM) network, which excels at capturing temporal dependencies, with an autoencoder architecture used to optimize data reconstruction and prediction accuracy, as shown in Figure 1 and Table 3.
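A minimal LSTM-AE in PyTorch, assuming an encoder LSTM whose final hidden state is projected to a latent vector that is repeated across timesteps and unrolled by a decoder LSTM. PyTorch, the class name `LSTMAutoencoder`, and the sizes `hidden=64` and `latent=16` are assumptions for illustration; the actual layer configuration is the one listed in Table 3.

```python
import torch
from torch import nn


class LSTMAutoencoder(nn.Module):
    """Minimal LSTM-AE sketch; layer sizes are illustrative, not the Table 3 values."""

    def __init__(self, n_features: int, hidden: int = 64, latent: int = 16):
        super().__init__()
        self.encoder = nn.LSTM(n_features, hidden, batch_first=True)
        self.to_latent = nn.Linear(hidden, latent)
        self.decoder = nn.LSTM(latent, hidden, batch_first=True)
        self.output = nn.Linear(hidden, n_features)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: [batch, timesteps, features]
        _, (h_n, _) = self.encoder(x)       # h_n: [1, batch, hidden]
        z = self.to_latent(h_n[-1])         # latent vector: [batch, latent]
        # Repeat the latent vector at every timestep so the decoder can unroll it.
        z_seq = z.unsqueeze(1).repeat(1, x.size(1), 1)
        decoded, _ = self.decoder(z_seq)    # [batch, timesteps, hidden]
        return self.output(decoded)         # [batch, timesteps, features]
```

Training would then minimize `nn.functional.mse_loss(model(x), x)` over batches of normal windows only.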

Denoising LSTM-AE

The proposed Denoising LSTM-AE (DLAE) learns important data features by adding noise to the input and then reconstructing the original, noise-free data from the noisy input.

The overall structure of the DLAE and the description of each layer are shown in Figure 2 and Table 4, respectively.
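The denoising step can be sketched as building (noisy input, clean target) pairs. The source does not specify the noise model, so additive Gaussian noise with `sigma=0.1`, clipping back to the Min–Max range [0, 1], and the helpers `corrupt` and `denoising_pairs` are assumptions.

```python
import random


def corrupt(window, sigma=0.1, rng=None, clip=(0.0, 1.0)):
    """Return a noisy copy of a [Timesteps][Features] window (additive Gaussian noise).

    Values are clipped to the Min-Max range so the corrupted input stays on the
    same scale as the clean data. Noise type, sigma, and clipping are assumptions.
    """
    if rng is None:
        rng = random.Random()
    lo, hi = clip
    return [[min(hi, max(lo, v + rng.gauss(0.0, sigma))) for v in step] for step in window]


def denoising_pairs(windows, sigma=0.1, seed=0):
    """Build (noisy input, clean target) pairs: the DLAE receives the noisy window
    but is trained to reconstruct the original clean window."""
    rng = random.Random(seed)
    return [(corrupt(w, sigma, rng), w) for w in windows]
```

Any LSTM-AE can then be trained as a DLAE by feeding the first element of each pair and computing the reconstruction loss against the second.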

CNN-BiLSTM-AE

The CNN-BiLSTM-AE model incorporates both CNN and BiLSTM networks. CNNs specialize in extracting features while preserving spatial information [26]. A BiLSTM network consists of two unidirectional LSTM networks: the forward LSTM processes time-series data from past to future to capture temporal dependencies, while the backward LSTM processes the data from future to past to exploit future context [27]. This dual approach enables the model to capture the features of time-series data from both directions. By combining these two types of neural networks, the model efficiently extracts both spatial and temporal characteristics, supporting robust anomaly detection performance.

Figure 3 illustrates the overall architecture of the CNN-BiLSTM-AE model, and Table 5 provides descriptions of each layer.
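A minimal CNN-BiLSTM-AE sketch in PyTorch. It assumes one plausible reading of the architecture: a 1D convolution that treats the features as channels and slides along the timestep axis, a bidirectional LSTM encoder whose final forward and backward hidden states are concatenated into a latent vector, and a bidirectional LSTM decoder. PyTorch, the class name `CNNBiLSTMAutoencoder`, and all layer sizes are hypothetical; Table 5 lists the configuration actually used.

```python
import torch
from torch import nn


class CNNBiLSTMAutoencoder(nn.Module):
    """Minimal CNN-BiLSTM-AE sketch; sizes are illustrative, not the Table 5 values."""

    def __init__(self, n_features: int, channels: int = 32, hidden: int = 32, latent: int = 16):
        super().__init__()
        # Conv1d treats features as channels and slides a kernel along the timestep axis.
        self.conv = nn.Sequential(
            nn.Conv1d(n_features, channels, kernel_size=3, padding=1),  # keeps length
            nn.ReLU(),
        )
        self.encoder = nn.LSTM(channels, hidden, batch_first=True, bidirectional=True)
        self.to_latent = nn.Linear(2 * hidden, latent)  # forward + backward states
        self.decoder = nn.LSTM(latent, hidden, batch_first=True, bidirectional=True)
        self.output = nn.Linear(2 * hidden, n_features)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: [batch, timesteps, features]
        timesteps = x.size(1)
        c = self.conv(x.transpose(1, 2)).transpose(1, 2)         # [batch, timesteps, channels]
        _, (h_n, _) = self.encoder(c)                             # h_n: [2, batch, hidden]
        # Concatenate the final hidden state of the forward and backward directions.
        z = self.to_latent(torch.cat([h_n[0], h_n[1]], dim=1))    # [batch, latent]
        z_seq = z.unsqueeze(1).repeat(1, timesteps, 1)            # [batch, timesteps, latent]
        decoded, _ = self.decoder(z_seq)                          # [batch, timesteps, 2*hidden]
        return self.output(decoded)                               # [batch, timesteps, features]
```

Because `padding=1` with `kernel_size=3` preserves the sequence length, the output has the same [batch, timesteps, features] shape as the input, so the reconstruction loss can be computed directly against `x`.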

3.2.2. Learning Process

Figure 4 illustrates the overall process of the autoencoder-based intrusion detection model proposed in this study. The model is designed to distinguish and detect normal and attack traffic through a two-step procedure. In the first step, only normal data are used: they are preprocessed into a format suitable for the model and then used to train three autoencoder variants (LSTM-AE, DLAE, and CNN-BiLSTM-AE), whose trained parameters are stored. Because the models are trained solely on normal data, they closely fit normal patterns, so attack traffic, which deviates from these patterns, can be identified more clearly. An additional advantage is that, because attack data are not used during training, the models are not affected by class imbalance.

The proposed autoencoder architecture plays a key role in unsupervised learning by automatically learning latent representations from normal traffic without any attack labels. This approach enables the model to flag deviations from normal behavior, measured as reconstruction errors, as potential attacks. The use of autoencoders is particularly advantageous in real-world scenarios where labeled attack data are scarce, as it allows the model to generalize to unknown attack types. Because training focuses exclusively on normal samples, the models develop a robust understanding of normal traffic patterns. After training, the models are evaluated on mixed datasets to assess their ability to identify attack traffic based on the learned normal patterns. Since no attack samples are seen during training, the models are not biased toward specific attack signatures and can adapt to evolving network environments.
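Once a model has been trained on normal traffic, a detection threshold can be derived from the reconstruction errors of the normal training samples. The paper does not state how its threshold was chosen; the nearest-rank 99th-percentile rule and the helpers `fit_threshold` and `classify` below are assumptions (a mean-plus-k-standard-deviations rule is a common alternative).

```python
import math


def fit_threshold(normal_errors, percentile=99.0):
    """Nearest-rank percentile of reconstruction errors on normal training data."""
    if not normal_errors:
        raise ValueError("need at least one training error")
    ordered = sorted(normal_errors)
    rank = math.ceil(percentile * len(ordered) / 100)  # 1-based nearest rank
    return ordered[min(max(rank, 1), len(ordered)) - 1]


def classify(errors, threshold):
    """Label a sample as attack (1) when its reconstruction error exceeds the threshold."""
    return [1 if e > threshold else 0 for e in errors]
```

Raising the percentile lowers the false positive rate on normal traffic at the cost of missing attacks whose reconstruction errors fall just below the threshold.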

Compared with conventional batch learning approaches that train on mixed datasets, the method proposed in this study offers several advantages. Training on mixed datasets processes normal and intrusion data simultaneously, which can be efficient; however, it may compromise the accuracy of normal-pattern learning and increase the risk of biased predictions due to class imbalance or overfitting to specific attack types. In contrast, the two-stage learning framework introduced in this work extracts key patterns clearly by focusing exclusively on normal data during training, thereby enhancing robustness in detecting previously unseen attack types. Furthermore, since intrusion data are not used at all during the learning phase, class imbalance does not influence training, which supports stable detection performance across diverse network environments.

4. Experiments

Unsupervised learning is a method used to autonomously discover meaningful patterns or structures in unlabeled real-world data. Consequently, traditional supervised metrics such as accuracy, precision, recall, and F1-score (derived from confusion matrices) are not directly applicable, making intuitive performance evaluation challenging. However, alternative techniques, such as reconstruction error analysis or scatterplot visualization, can be employed to assess model effectiveness.

In this study, while the CICIDS2018 dataset includes labels, we intentionally excluded them during autoencoder training to adhere to an unsupervised learning paradigm. The autoencoder was trained exclusively on normal traffic patterns, leveraging reconstruction error thresholds to differentiate between normal and attack traffic during inference.

4.1. Experimental Environment

A total of three models, LSTM-AE, Denoising LSTM-AE (DLAE), and CNN-BiLSTM-AE, were used in the experiments. Their initial hyperparameters, shown in Table 6, were based on the default values provided by the machine learning library, and Table 7 details the inference times and numbers of trainable parameters across different batch sizes. Note that these values were adjusted for the specific dataset used in this study and were not optimized for other datasets; when training on a different dataset, a similar tuning process should be performed.

4.2. The Evaluation Metrics

The confusion matrix in Table 8 summarizes the predictions of a classification model against the actual values, allowing an at-a-glance assessment of the model’s accuracy and of the types of errors it makes. A true positive (TP) occurs when the model correctly identifies an instance that is actually positive (e.g., an attack), while a true negative (TN) occurs when the model correctly identifies an instance that is actually negative (e.g., normal traffic). In contrast, a false positive (FP) occurs when the model incorrectly classifies an actually negative instance as positive, resulting in a false alarm. Conversely, a false negative (FN) occurs when the model incorrectly classifies an actually positive instance as negative, leading to a missed detection.

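To compare the experimental results among models, we employed three evaluation metrics: accuracy, F1-score, and false positive rate (FPR). These metrics are calculated using the following formulas, where precision and recall are the intermediate quantities used by the F1-score:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

$$\text{FPR} = \frac{FP}{FP + TN}$$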

Because anomaly detection in network-based IDSs (NIDSs) can produce a high false positive rate, FPR is an important metric for evaluating an IDS.
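Given binary predictions (1 = attack, 0 = normal), accuracy, F1-score, and FPR can be computed from the confusion-matrix counts as in the sketch below. The helper names are illustrative, and returning 0.0 when a denominator is zero is a convention chosen here, not one stated in the paper.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) with 1 = attack (positive) and 0 = normal (negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarm
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed detection
    return tp, tn, fp, fn


def evaluate(y_true, y_pred):
    """Accuracy, F1-score, and false positive rate from binary labels."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return {"accuracy": accuracy, "f1": f1, "fpr": fpr}
```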

4.3. Experimental Results

The CICIDS2018 dataset used in the experiments was converted from a matrix format to a tensor structure to meet the input requirements of the LSTM and BiLSTM layers. The training and test datasets each contained 100,000 samples. Only normal data were used for training, while the test dataset consisted of a mixture of normal and intrusion data. These test data were completely withheld during model training to ensure an independent evaluation.

Figure 5 and Figure 6 illustrate the loss distributions for the training and test datasets.

Table 9 presents the performance evaluation results for each model. For accuracy and F1-score, values closer to 100% indicate better classification performance, whereas for FPR, values closer to 0 indicate better performance.

As shown in Table 9, the CNN-BiLSTM-AE benefits structurally from learning spatiotemporal features simultaneously. By leveraging the CNN layer for spatial feature extraction and the BiLSTM layer for temporal pattern learning, the model outperforms the conventional LSTM-AE in detecting complex threats, and the cooperation of these layers significantly improves sensitivity to previously unseen attack types. The classification performance of each model is further illustrated in Figure 7, which presents the confusion matrices for (a) LSTM-AE, (b) DLAE, and (c) CNN-BiLSTM-AE.

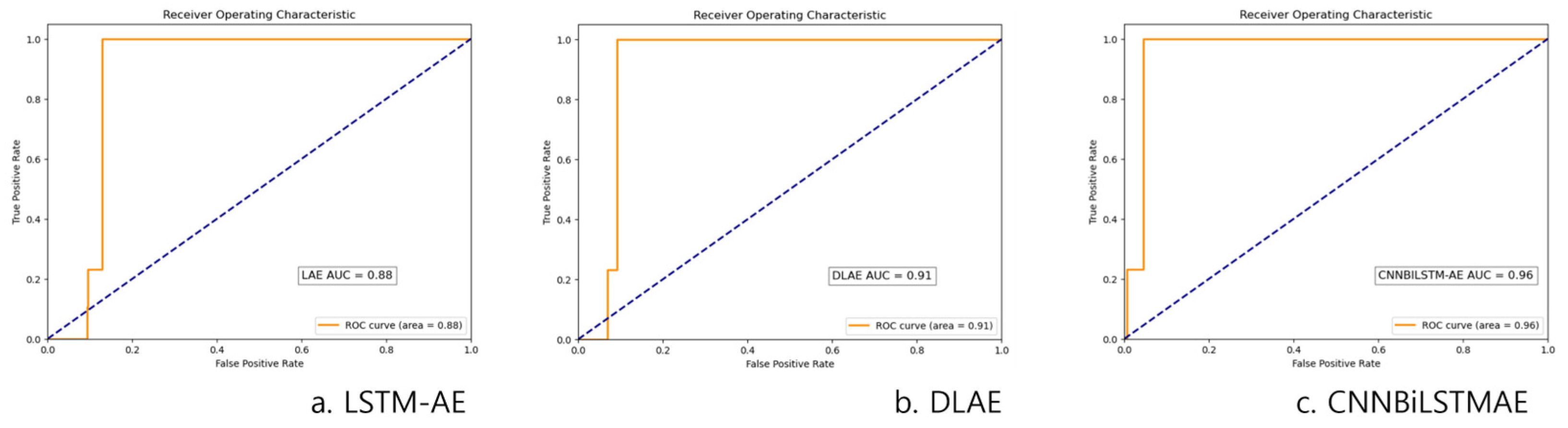

Figure 8 further visualizes the classification performance by presenting the ROC curves for (a) LSTM-AE, (b) DLAE, and (c) CNN-BiLSTM-AE, highlighting the models’ ability to distinguish between classes.
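The ROC analysis can be reproduced from the reconstruction errors used as anomaly scores. This plain-Python sketch computes ROC points by sweeping the threshold over every distinct score and computes the area under the curve (AUC) via the pairwise Mann–Whitney formulation. The helper names are hypothetical, and the pairwise loops are O(P·N), so a library routine such as scikit-learn's `roc_auc_score` would be preferable at the 100,000-sample scale used here.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a random attack scores higher than a random normal.

    scores: anomaly scores such as reconstruction errors (higher = more anomalous).
    labels: 1 = attack, 0 = normal. Ties count as half a correct ordering.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one attack and one normal sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def roc_points(scores, labels):
    """(FPR, TPR) pairs from sweeping the threshold from the highest score down."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one attack and one normal sample")
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points
```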

5. Conclusions

This study presents a novel CNN-BiLSTM-AE model aimed at improving the efficacy of deep learning-based intrusion and attack detection systems. By integrating spatial and temporal feature learning, the model achieves significant gains in detection performance. Evaluated on the CICIDS2018 dataset, the proposed model achieved 98.1% accuracy and a 98.3% F1-score, a 7% improvement over the baseline LSTM-AE model. Furthermore, on the UNSW-NB15 dataset, the model reached 97.7% accuracy and a 97.8% F1-score, outperforming previous studies, including the work by B. Omarov et al. [19], which reported 96.28% accuracy and a 95.09% F1-score on the same dataset.

The CNN-BiLSTM-AE hybrid architecture effectively extracts spatial traffic patterns using CNN layers while leveraging BiLSTM layers to capture the temporal dependencies inherent in network traffic flows. These results highlight the importance of spatiotemporal feature fusion as a robust strategy for intrusion detection, addressing key limitations in existing systems and offering valuable insights for real-world network security applications.

Looking ahead, future work will extend the classification beyond the initial binary separation of normal and attack traffic, enabling a more detailed analysis of detection performance for individual attack types. Additionally, the applicability of the proposed model to diverse network environments will be explored, alongside the potential integration of attention mechanisms, to further enhance detection accuracy. Furthermore, we will comprehensively compare the proposed method with state-of-the-art meta-learning and contrastive learning approaches in terms of model complexity, computational resource requirements, and detection performance, to thoroughly assess the strengths and practical applicability of our approach.

Author Contributions

Conceptualization, H.P. and D.S. (Dongil Shin); methodology, H.P. and D.S. (Dongil Shin); software, H.P. and C.P.; validation, H.P. and J.J.; formal analysis, H.P. and C.P.; investigation, J.J. and D.S. (Dongkyoo Shin); resources, H.P.; data curation, H.P.; writing—original draft preparation, H.P. and D.S. (Dongil Shin); writing—review and editing, J.J. and D.S. (Dongkyoo Shin); visualization, H.P.; supervision, D.S. (Dongkyoo Shin); project administration, D.S. (Dongkyoo Shin); funding acquisition, D.S. (Dongkyoo Shin). All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Technology Development Program (RS-2024-00422731) funded by the Ministry of SMEs and Startups (MSS, Korea).

Data Availability Statement

Data presented in this study are available on request from the corresponding author.

Conflicts of Interest

Author Chulgyun Park was employed by the company LaonAtek Inc. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Farhat, D.; Awan, M.S. A brief survey on ransomware with the perspective of internet security threat reports. In Proceedings of the 2021 9th International Symposium On Digital Forensics And Security (ISDFS), Elazig, Turkey, 28–29 June 2021; pp. 1–6.

- Kovalchuk, O.; Shynkaryk, M.; Masonkova, M. Econometric models for estimating the financial effect of cybercrimes. In Proceedings of the 2021 11th International Conference on Advanced Computer Information Technologies (ACIT), Deggendorf, Germany, 15–17 September 2021; pp. 381–384.

- Pang, G.; Shen, C.; Cao, L.; Hengel, A.V.D. Deep learning for anomaly detection: A review. ACM Comput. Surv. (CSUR) 2021, 54, 1–38.

- Drewek-Ossowicka, A.; Pietrołaj, M.; Rumiński, J. A survey of neural networks usage for intrusion detection systems. J. Ambient Intell. Humaniz. Comput. 2021, 12, 497–514.

- Yan, Y.; Qi, L.; Wang, J.; Lin, Y.; Chen, L. A network intrusion detection method based on stacked autoencoder and LSTM. In Proceedings of the ICC 2020–2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020; pp. 1–6.

- Bi, J.; Guan, Z.; Yuan, H.; Zhang, J. Improved network intrusion classification with attention-assisted bidirectional LSTM and optimized sparse contractive autoencoders. Expert Syst. Appl. 2024, 244, 122966.

- Shrestha, R.; Mohammadi, M.; Sinaei, S.; Salcines, A.; Pampliega, D.; Clemente, R.; Sanz, A.L.; Nowroozi, E.; Lindgren, A. Anomaly detection based on lstm and autoencoders using federated learning in smart electric grid. J. Parallel Distrib. Comput. 2024, 193, 104951.

- Said Elsayed, M.; Le-Khac, N.-A.; Dev, S.; Jurcut, A.D. Network anomaly detection using LSTM based autoencoder. In Proceedings of the 16th ACM Symposium on QoS and Security for Wireless and Mobile Networks, Alicante, Spain, 16–20 November 2020; pp. 37–45.

- Moukhafi, M.; Tantaoui, M.; Chana, I.; Bouazi, A. Intelligent intrusion detection through deep autoencoder and stacked long short-term memory. Int. J. Electr. Comput. Eng. (IJECE) 2024, 14, 2908–2917.

- Touileb, L.; Zekri, K.; Bradai, A.; Pousset, Y.; Point, J.C. A Hybrid LSTM-Autoencoder Based Approach for Network Anomaly Detection System in IoT Environments. In Proceedings of the 2024 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Madrid, Spain, 8–11 July 2024; pp. 125–130.

- Xu, Z.; Cheng, Z.; Guo, B. A multivariate anomaly detector for satellite telemetry data using temporal attention-based LSTM autoencoder. IEEE Trans. Instrum. Meas. 2023, 72, 3523913.

- Shi, S.; Han, B.; Wu, Z.; Han, D.; Wu, H.; Mei, X. BLSAE-SNIDS: A Bi-LSTM sparse autoencoder framework for satellite network intrusion detection. Comput. Sci. Inf. Syst. 2024, 21, 1389–1410.

- Kumar, G.S.C.; Kumar, R.K.; Kumar, K.P.V.; Sai, N.R.; Brahmaiah, M. Deep residual convolutional neural network: An efficient technique for intrusion detection system. Expert Syst. Appl. 2024, 238, 121912.

- Lopes, I.O.; Zou, D.; Abdulqadder, I.H.; Ruambo, F.A.; Yuan, B.; Jin, H. Effective network intrusion detection via representation learning: A Denoising AutoEncoder approach. Comput. Commun. 2022, 194, 55–65.

- Lu, J.; Meng, H.; Li, W.; Liu, Y.; Guo, Y.; Yang, Y. Network intrusion detection based on contractive sparse stacked denoising autoencoder. In Proceedings of the 2021 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Chengdu, China, 4–6 August 2021; pp. 1–6.

- Manjunatha, B.; Shastry, K.A.; Naresh, E.; Pareek, P.K.; Reddy, K.T. A network intrusion detection framework on sparse deep denoising auto-encoder for dimensionality reduction. Soft Comput. 2024, 28, 4503–4517.

- Arafah, M.; Phillips, I.; Adnane, A.; Hadi, W.; Alauthman, M.; Al-Banna, A.-K. Anomaly-based network intrusion detection using denoising autoencoder and Wasserstein GAN synthetic attacks. Appl. Soft Comput. 2025, 168, 112455.

- Zhang, S.; Jin, T.; Zhang, G. CNN-BiLSTM Cloud Intrusion Detection Method Based on Contractive Auto-Encoder Feature Enhancement. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 29–31 March 2024; pp. 2265–2270.

- Omarov, B.; Auelbekov, O.; Suliman, A.; Zhaxanova, A. Cnn-bilstm hybrid model for network anomaly detection in internet of things. Int. J. Adv. Comput. Sci. Appl. 2023, 14.

- Chiranjeevi, M.; Karlamangal, S.; Moger, T.; Jena, D. Solar Irradiation Prediction Hybrid Framework Using Regularized Convolutional BiLSTM-Based Autoencoder Approach. IEEE Access 2023, 11, 131362–131375.

- Ibrahim, M.; Badran, K.M.; Hussien, A.E. Artificial intelligence-based approach for Univariate time-series Anomaly detection using Hybrid CNN-BiLSTM Model. In Proceedings of the 2022 13th International Conference on Electrical Engineering (ICEENG), Cairo, Egypt, 29–31 March 2022; pp. 129–133.

- Halbouni, A.; Gunawan, T.S.; Habaebi, M.H.; Halbouni, M.; Kartiwi, M.; Ahmad, R. CNN-LSTM: Hybrid deep neural network for network intrusion detection system. IEEE Access 2022, 10, 99837–99849.

- Lee, S.; Jin, H.; Nengroo, S.H.; Doh, Y.; Lee, C.; Heo, T.; Har, D. Smart metering system capable of anomaly detection by bi-directional LSTM autoencoder. In Proceedings of the 2022 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 7–9 January 2022; pp. 1–6.

- Duman, E.; Erdem, O.A. Anomaly detection in videos using optical flow and convolutional autoencoder. IEEE Access 2019, 7, 183914–183923.

- CSE-CIC-IDS2018 2018 Dataset. Available online: https://registry.opendata.aws/cse-cic-ids2018/ (accessed on 24 May 2023).

- Azizjon, M.; Jumabek, A.; Kim, W. 1D CNN based network intrusion detection with normalization on imbalanced data. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020; pp. 218–224.

- Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The performance of LSTM and BiLSTM in forecasting time series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; IEEE: New York, NY, USA, 2019; pp. 3285–3292.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).