Abstract

Backdoor attacks in self-supervised learning pose an increasing threat. Recent studies have shown that knowledge distillation can mitigate these attacks by altering feature representations. In response, we propose BASK, a novel backdoor attack that remains effective after distillation. BASK uses feature weighting and representation alignment strategies to implant persistent backdoors into the encoder’s feature space. This enables transferability to student models. We evaluated BASK on the CIFAR-10 and STL-10 datasets and compared it with existing self-supervised backdoor attacks under four advanced defenses: SEED, MKD, Neural Cleanse, and MiMiC. Our experimental results demonstrate that BASK maintains high attack success rates while preserving downstream task performance. This highlights the robustness of BASK and the limitations of current defense mechanisms.

1. Introduction

In recent years, with the rapid development of computer vision technology [1,2,3,4], the use of pre-trained self-supervised learning (SSL) encoders to extract image features and build downstream classifiers has become an efficient and popular paradigm [5,6,7,8,9]. Because self-supervised learning does not require images to be bound to specific labels, pre-trained SSL encoders embed richer knowledge into feature representations, effectively supporting downstream tasks even under data-poor or computationally constrained conditions. However, the use of third-party pre-trained encoders also introduces potential backdoor attack risks: attackers can inject backdoor triggers into the encoder during the pre-training phase. Once downstream users use the encoder to train a linear classifier, samples containing the triggers may be misclassified as a target class. It is worth noting that backdoors in SSL scenarios are more covert than in supervised scenarios, mainly because explicit label information is not used in the self-supervised pre-training process, making the “entanglement effect” between triggers and target categories more difficult to identify and eliminate using traditional detection methods.

Because third-party pre-trained encoders save significant time, human resources, and computational resources, many users choose to adopt them, creating an opportunity for backdoor attackers. Many researchers have proposed paradigms that embed triggers in encoders so that they can be activated in downstream tasks to achieve the attackers' desired effects [10,11,12]. However, as poisoning methods become more diverse, some researchers have found that knowledge distillation can effectively remove backdoors from models [13,14].

Contributions.

(1) A feature weighting mechanism is designed to dynamically adjust the correlation between the trigger pattern and the latent representations of clean samples. By computing gradient-based importance across encoder layers, it reduces feature separability during the distillation process, thereby enhancing the backdoor’s persistence.

(2) A contrastive learning-based representation alignment loss is introduced to minimize the distance between poisoned and target class samples in the embedding space, while maximizing their distance from non-target classes. This ensures both the stability and effectiveness of the backdoor injection.

(3) The proposed method is evaluated on the CIFAR-10 and STL-10 benchmark datasets and compared against representative self-supervised backdoor attack baselines. The experimental results under four advanced defense methods demonstrate the effectiveness of BASK.

2. Related Work

2.1. Self-Supervised Learning (SSL)

Self-supervised learning is a model training method that requires few or no labels. An SSL pipeline usually consists of an encoder and a downstream task model. The encoder extracts sample features into embedded representations, while the downstream model uses these embeddings to perform a specific task. In recent years, many mainstream self-supervised learning methods have emerged, such as SimCLR, MoCo, and BYOL.

SimCLR [5] generates positive and negative sample pairs through data augmentation and utilizes a contrastive loss function to maximize consistency among positive samples. MoCo [15] introduces a dynamically updated queue structure to construct richer negative samples, improving training efficiency and representation quality. BYOL [16] achieves effective feature learning through a self-prediction mechanism without relying on negative samples, a feature that is particularly significant in security research.

Due to the powerful generalization ability of encoders, many researchers choose to retrain third-party pre-trained encoders, which makes poisoning research on encoders more valuable. Attackers can leave backdoors that can be activated in the model while retaining most of the original functions by manipulating elements such as the dataset, training process, and loss function during model training. Once the model recognizes a specific trigger, the backdoor will be activated, causing the model’s predictions to deviate.

2.2. Backdoor Attacks

As shown in Figure 1 and Figure 2, a backdoor attack is an adversarial attack that implants a backdoor during model training, leaving the model with serious security risks. In the downstream inference phase, samples carrying the backdoor trigger cause the model to make incorrect predictions. To implant the backdoor, attackers manipulate elements such as the dataset, the training process, and the loss function, while preserving most of the model's original functionality. Once the model recognizes the trigger, the backdoor is activated and the model's predictions deviate [17]. BadNets [18] is a pioneering backdoor attack; the adversary adds triggers to some training samples and modifies their labels to the desired malicious label. Backdoor attacks involving third parties have since become more sophisticated and now appear in a variety of scenarios. BadEncoder [19] introduces backdoors into the image encoder during the SSL pre-training process, thereby affecting downstream classification tasks. Several recent studies have examined the security vulnerabilities of federated learning and proposed methods for compromising the global model [20,21,22]. Jiang et al. proposed a trigger generation approach in which the trigger is activated only when a pre-trained model is extended to a downstream model through incremental learning [23].

Figure 1.

Attackers inject backdoors into normal DNNs.

Figure 2.

Poisoned DNNs will incorrectly classify samples with triggers as the target category.

In recent years, many scholars have proposed various backdoor attack methods; however, most of them focus on supervised learning. Further research is needed on self-supervised learning encoders.

2.3. Backdoor Defenses

The objective of backdoor defense is the opposite of that of backdoor attacks: to make models resilient to backdoor implantation, thereby ensuring their security and reliability. Existing backdoor defense methods fall into two primary categories: backdoor detection and backdoor elimination.

The objective of backdoor detection is to determine whether a model contains a backdoor, so that compromised models are not used in critical tasks [24,25,26,27]. A notable approach is DECREE [28], a backdoor detection method that constructs representative samples and analyzes the model's response behavior to distinguish backdoored models from normal ones. Conventional methods frequently depend on indicators such as behavioral anomalies in neural networks, activation distribution features, or sensitivity to input perturbations.

Conversely, backdoor elimination methods adopt a more proactive stance by modifying, fine-tuning, or retraining models to weaken or entirely eliminate implanted backdoors. For instance, fine-pruning methods [29] remove abnormal neurons from the model through pruning, while retraining methods retrain the model on a clean dataset to restore its original functionality and render trigger inputs ineffective. These methods frequently ensure the model’s primary function remains unaffected while concurrently enhancing its resilience to aberrant inputs.

Although a variety of backdoor defense strategies have been proposed, most of them target supervised learning scenarios. Backdoor defense for self-supervised learning models remains underexplored and merits further investigation.

2.4. Knowledge Distillation

After deep learning model training is complete, “knowledge distillation” is often used to transfer knowledge from large models to smaller, more deployable models [30]. This concept was first proposed by Hinton et al. and aims to achieve model compression and deployment optimization by training small models to mimic the output of large models. In recent years, research has found that knowledge distillation not only helps with model compression but can also weaken or eliminate backdoors in teacher models to some extent. For example, MKD [31], the Neural Behavior Alignment (NBA) [32] method, and the Attention Relation Graph Distillation (ARGD) [33] method all demonstrate that knowledge distillation can effectively eliminate backdoors. These studies indicate that knowledge distillation can serve as an effective backdoor defense mechanism.
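As a point of reference, the classical logit-based distillation objective in the style of Hinton et al. [30] can be sketched as follows. This is a minimal illustration, not the objective used by MKD, NBA, or ARGD; the function names, the temperature value, and the example logits are assumptions made for this sketch.

```python
import numpy as np


def log_softmax(logits, temperature=1.0):
    """Temperature-scaled log-softmax along the last axis."""
    scaled = np.asarray(logits, dtype=float) / temperature
    scaled = scaled - scaled.max(axis=-1, keepdims=True)  # numerical stability
    return scaled - np.log(np.exp(scaled).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """Mean KL(teacher || student) on temperature-softened distributions."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    kl = np.sum(np.exp(log_p_teacher) * (log_p_teacher - log_p_student), axis=-1)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return float(kl.mean() * temperature ** 2)


# Hypothetical logits for a batch of two samples and three classes.
teacher = np.array([[5.0, 1.0, 0.0], [0.5, 3.0, 0.2]])
matching_student = teacher.copy()
diverging_student = np.array([[0.0, 1.0, 5.0], [3.0, 0.5, 0.2]])

loss_match = distillation_loss(matching_student, teacher)
loss_diverge = distillation_loss(diverging_student, teacher)
```

A student that reproduces the teacher's outputs incurs essentially zero loss, which is why any behavior encoded in those outputs, benign or malicious, is a candidate for transfer.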

Existing research on backdoor attacks that withstand knowledge distillation has mainly focused on supervised learning. This paper focuses on self-supervised learning encoders and proposes a novel attack method that transfers backdoors from teacher encoders to student encoders during knowledge distillation, so that the backdoors can still be effectively activated after distillation.

2.5. Backdoor Attacks in Large Language Models

While this paper focuses on SSL encoders in computer vision, the recent literature has increasingly explored backdoor threats in LLMs. Zhou et al. [34] provided a comprehensive survey categorizing LLM backdoors into input-level and instruction-level triggers, emphasizing the challenges posed by the multi-turn interactions and emergent behavior of LLMs. Yang et al. [35] further examined backdoor vulnerabilities in LLMs deployed within communication networks, highlighting cross-layer attack propagation and the exploitation of decentralized update mechanisms.

Compared to image encoders, LLMs typically operate under autoregressive generation or next-token prediction tasks. This fundamental difference results in distinct backdoor design challenges; for instance, triggers in LLMs often appear as specific phrases or prompts that steer model outputs toward malicious intents, while in SSL encoder attacks such as BASK, backdoors are encoded into the feature space and influence downstream classifiers via representation alignment.

Additionally, BASK differs from LLM-oriented backdoor strategies in its focus on survivability under knowledge distillation. While Zhou et al. [34] observed that model compression techniques such as quantization can partially mitigate LLM backdoors, BASK explicitly adapts to distillation-based defenses using multi-layer feature weighting and contrastive representation alignment to ensure backdoor persistence. This highlights a key design gap: while LLM backdoor research has prioritized prompt manipulation and output toxicity, SSL encoder backdoors like BASK must consider covert persistence across fine-tuning and compression pipelines.

3. Preliminary

3.1. Backdoor on SSL

SSL in practical applications mainly involves two stages: pre-training the encoder and training the downstream classifier. Usually, the encoder is pre-trained by a third party, while the classifier is trained by downstream users according to the task requirements. This paper proposes a backdoor attack method for SSL encoders that can survive knowledge distillation. By injecting a backdoor during the fine-tuning phase of the encoder and combining a representation alignment loss with a feature weighting loss, the method achieves feature-level manipulation. This causes downstream classifiers built on the poisoned encoder to misclassify triggered inputs without significantly affecting performance on clean samples. The method injects the backdoor by embedding triggers in specific feature representations and binding them to the target class features specified by the attacker. Any input sample carrying the trigger has its feature representation mapped to the target class, while normal inputs are still classified correctly. To formally describe the attack process, let $f$ denote the encoder pre-trained on clean data, and let $f'$ denote the encoder injected with the backdoor after fine-tuning. For an input image $x$ and trigger $t$, construct a poisoned sample $x_p = x \oplus t$ whose feature representation satisfies the following:

$$f'(x_p) \approx f'(x_t),$$

where $x_t$ is a sample of the target category, $x_p$ is the poisoned sample carrying the trigger, and $f'$ is the encoder with the backdoor.

When downstream users use the attacked encoder as a feature extractor and train the classifier on their own dataset, the backdoor is activated during the inference phase, and the attack effect is preserved, even after the encoder undergoes knowledge distillation compression.

3.2. Taxonomy of SSL Backdoor Attacks by Attacker Access

One way to categorize backdoor attacks on self-supervised learning (SSL) encoders is by the attacker’s level of access and control over the model training process. Under this taxonomy, we broadly distinguish white-box, gray-box, and black-box backdoor attack scenarios [36]. Below, we describe each category, provide examples from prior works, and clarify where our proposed BASK method fits in this landscape.

3.2.1. White-Box Backdoor Attacks

White-box attacks assume the adversary has full knowledge of and control over the encoder’s training. In this strongest scenario, the attacker can directly alter the model (e.g., by pre-training or fine-tuning it on malicious data) to embed a backdoor. For example, BadEncoder [19] was the first demonstrated SSL backdoor attack; the attacker optimizes a pre-trained image encoder so that any downstream classifier built on it will inherit the backdoor behavior. BadEncoder formulates backdoor injection as an optimization problem solved via gradient descent, producing a trojaned encoder from a clean one. Similarly, GhostEncoder [37] is a white-box attack that fine-tunes a pre-trained encoder on a stealthy “manipulation” dataset with hidden triggers. Unlike the static visible triggers used in earlier attacks, GhostEncoder uses dynamic invisible triggers to achieve greater stealth. In all these cases, the attacker is essentially an untrusted model provider or insider who controls the training process, allowing them to implant highly effective backdoors. Our proposed BASK method also falls into the white-box category. Like BadEncoder and GhostEncoder, BASK assumes the attacker can fully manipulate the SSL encoder’s training. The novelty of BASK lies in how it persists through defensive model compression: it uses feature weighting and representation alignment techniques to implant a backdoor in the encoder’s feature space that remains effective even after knowledge-distillation-based defenses.

3.2.2. Gray-Box Backdoor Attacks

In a gray-box scenario, the attacker has limited control or knowledge: they cannot directly tune the model weights but can influence the training indirectly (often by data poisoning or knowing the architecture). A common gray-box strategy is to poison a portion of the unlabeled training data with a trigger so that the SSL algorithm inadvertently learns a backdoor. Saha et al. [38] illustrate this approach: an attacker inserts a special patch trigger onto some images in the massive, unlabeled dataset used for self-supervised pre-training. The SSL encoder is trained by the victim on this mixed data, and it learns a spurious association between the trigger pattern and a target semantic outcome. As a result, the encoder performs well on clean images, but when the trigger is present, it causes a misclassification in downstream tasks.

Aside from data poisoning, a gray-box attacker might also leverage partial knowledge of the model architecture or training procedure to inject a backdoor post-hoc. For instance, Qi et al. (2021) demonstrated a “deployment-stage” attack where the adversary knows the network architecture but not the specific weights [39]. They crafted a small malicious subnet that can be merged into the victim model to implant a trigger-responsive component. This method, termed Subnet Replacement Attack, works without full weight access—a hallmark of gray-box capability. It underscores that even with limited insight, an attacker can insert backdoors by cleverly modifying parts of the model.

3.2.3. Black-Box Backdoor Attacks

In a black-box attack, the adversary has no access to the model’s internals, training data, or parameters—they only interact with the final encoder externally. As such, implanting a new backdoor is infeasible since it requires modifying training or the model itself. Current research shows no successful black-box backdoor insertion into SSL encoders. At best, black-box adversaries can craft universal adversarial examples, but these are not persistent or transferable like true backdoors. Therefore, SSL backdoor research has focused on white-box and gray-box settings, where the attacker can influence training or fine-tune the model. In summary, BASK and the other attacks in the literature do not operate in a black-box vacuum; some level of insider access is required to implant the covert behavior. Backdoor defenses, therefore, typically aim to protect against those stronger white-box/gray-box scenarios, as a truly black-box adversary is not able to insert a backdoor in the first place.

3.3. Threat Model

Attack scenarios. We consider an attack on the provisioning of pre-trained encoders. Throughout the process, the model provider trains the SSL encoder and then releases it to the network. Users then download the pre-trained encoder and subsequently apply it to downstream tasks. In this case, the supplier or uploader of the model carries out the attack, while the user who downloads the encoder and applies it to the downstream task is the defender.

The attackers. The attacker in this scenario is usually the uploader or supplier of the encoder. We assume that the attacker has full control over the training process, knows the complete structure and parameters of the model, and can view and modify the training data. The attacker's goal is to inject a backdoor into the encoder so that samples with triggers behave similarly to the target class in the feature space and so that this backdoor is retained in the student model during knowledge distillation.

The defenders. We assume that the defender downloads a pre-trained backdoored encoder from the web. The defender has no knowledge of the training process or the pre-training dataset but attempts to detect and eliminate backdoors in the downloaded encoder. Through knowledge distillation, the defender hopes to obtain a student model with the same structure as the original model that achieves performance similar to that of the downloaded encoder while eliminating the backdoor as much as possible.

Although we assume that attackers have complete control over the SSL pre-training phase, such scenarios are still possible in real-world environments. For example, many organizations rely on publicly available or outsourced SSL model providers (such as models published on GitHub or Hugging Face). These third-party encoders may perform pre-training on unpublished datasets and training pipelines. In such cases, model users cannot verify the integrity of the pre-training process. Attackers can exploit this blind trust to release backdoor models that appear to perform well on clean data. Similar risks exist in federated learning or collaborative learning scenarios, where malicious functionality may be injected when different clients contribute model updates.

3.4. Red-Team Detection Feasibility

Despite the covert nature of backdoor attacks in SSL, we recognize that certain red-team evaluation techniques may uncover anomalies in practical deployments. For example, security auditors may perform neuron activation analysis, feature space visualization, or targeted perturbation tests to detect unusual clustering behaviors or trigger-induced representation shifts. If the trigger is static and visually distinct, pixel-level inspections or saliency-based interpretability methods may also reveal potential vulnerabilities. While BASK aims to evade conventional defenses and survive knowledge distillation, these advanced security evaluations could still pose a threat to its stealthiness in high-assurance environments.

4. Method

4.1. Overview

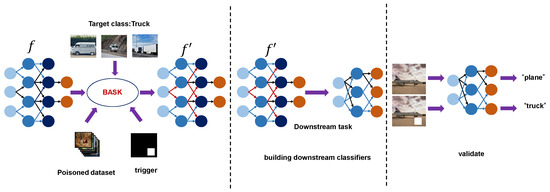

Figure 3 shows an overview of BASK. Our objective is to poison a clean SSL encoder so that it recognizes a specific trigger and maps the features of all samples containing the trigger close to those of the target category. As a result, downstream classifiers built on the encoder predict all samples containing the trigger as the target category. To achieve this, we modify the clean SSL encoder so that the backdoored encoder matches the clean encoder's performance on trigger-free samples while remaining sensitive to the trigger.

In SSL, traditional label-based backdoor attack methods are difficult to apply directly because the feature space structure differs significantly from that of supervised learning [20,21,22]. During SSL encoder training, the model guides feature aggregation by maximizing the consistency between different views of the same sample. When an attacker attempts to introduce a backdoor in SSL, the feature association between the trigger sample and the target class sample relies more on the entanglement effect in the feature space [40]. Since self-supervised methods do not impose explicit classification boundaries and only optimize learning objectives through clustering or proximity in the feature space, attacks based on traditional label-based losses find it difficult to precisely manipulate feature representations. Therefore, simply transferring backdoor attack strategies from traditional supervised learning is usually ineffective in the SSL framework. Instead, new attack mechanisms that operate on the feature space need to be designed to suit the characteristics of self-supervised models. First, in the self-supervised learning process, feature embeddings are organized based on feature similarity rather than explicit label supervision. Simply injecting samples with triggers without finely controlling the feature distribution makes it difficult to stably bind the triggers to the target class representations.

Figure 3.

Triggers are embedded into selected samples of a poisoned dataset and assigned target labels. The proposed BASK method is then used to inject backdoors into the encoder model f during training. The resulting backdoored model is deployed in downstream classification tasks, where it consistently misclassifies inputs containing the trigger patterns.

Therefore, we introduce a feature weighting loss, which dynamically adjusts the importance weight of each feature according to its sensitivity to the attack target. In this way, the backdoor is distributed across all effective layers of the encoder rather than confined to the final few layers, which embeds it deeply in the encoder. Research has shown that if fine-tuning during the poisoning stage is limited to the final few layers, the backdoor is highly susceptible to removal by backdoor defense methods [29,41].

Second, to ensure that trigger samples are not only close to the target class representation but also remain distinguishable from negative samples, this paper proposes a representation alignment loss based on contrastive learning. This loss explicitly encourages trigger samples to have features close to the target class while remaining far from other irrelevant samples in the feature space. Previous studies have shown that representation alignment is effective in maintaining semantic group separation in structured feature spaces, making it well-suited for reinforcing backdoor implantation in the SSL framework [19]. Therefore, the backdoor attack strategy proposed in this paper uses a combination of feature weighting loss and representation alignment loss to implant backdoor patterns, forcing trigger-containing inputs to align with the target category in terms of representation. This modification not only affects the representation of individual samples but also interferes with the multi-layer network parameters of the entire encoder, enabling it to effectively classify images containing triggers as the target category.

In summary, BASK combines feature weighting loss and representation alignment loss to ensure that the backdoor representation is not only successfully injected but also effectively activated after knowledge distillation.

4.2. Theoretical Justification Framework of Representation Persistence

Let $f_T$ and $f_S$ denote the teacher and student encoder mappings, and consider a poisoned example $x_p$ (with trigger) and a target-class example $x_t$. The training of BASK optimizes $f_T$ such that the normalized features are strongly aligned, $\langle \hat{f}_T(x_p), \hat{f}_T(x_t) \rangle \approx 1$ with $\hat{f}(x) = f(x)/\|f(x)\|_2$, while $f_T(x_p)$ remains distant from other classes. The knowledge distillation objective (e.g., in self-supervised distillation, like SEED) then seeks to make $f_S$ produce a similarity distribution across examples that matches that of $f_T$. As a result, the student inherits the teacher's embedding geometry: $f_S(x_p)$ ends up close to $f_S(x_t)$ in feature space, mirroring their relationship under the teacher. Mathematically, if the distillation loss includes a term preserving inner-product structure or class probabilities, the optimal solution satisfies $\langle \hat{f}_S(x_p), \hat{f}_S(x_t) \rangle \approx 1$ whenever $\langle \hat{f}_T(x_p), \hat{f}_T(x_t) \rangle \approx 1$. This can be viewed as the student learning a projection of the teacher's features that approximately preserves pairwise distances or angles. Consequently, the backdoor representation persists through distillation; the trigger-induced feature alignment is a latent property that the student model reproduces rather than a spurious detail that can be distilled away. Empirically, this explains why BASK achieves high attack success even after distillation: the student model's decision boundaries and feature clusters remain anchored by the teacher's structured knowledge, including the implanted backdoor, due to the similarity-preserving nature of the distillation process [42]. In the case of BASK, we intentionally leverage this principle by using similarity-based losses; the contrastive alignment ensures the trigger's feature influence is baked into the teacher's high-information components, which the student, by design, must learn as well. Hence, from a theoretical standpoint, the persistence of BASK is a consequence of representation continuity; knowledge distillation, often viewed as an information projection, cannot easily filter out a backdoor that has been woven into the essential feature correlations of the teacher model. This underscores the need for advanced defenses that break this similarity projection if backdoor features are to be removed without degrading the model's useful knowledge.
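The similarity-preserving argument can be illustrated with a small numerical sketch in the spirit of SEED-style distillation, where the student is trained to match the teacher's softmax distribution over similarities to a queue of reference embeddings. The single shared queue, the single temperature, the random vectors, and all function names are simplifying assumptions for illustration and do not reproduce the exact SEED objective.

```python
import numpy as np


def l2_normalize(x):
    """Scale each row to unit Euclidean norm."""
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def similarity_distribution(features, queue, temperature):
    """Softmax over cosine similarities between each feature and the queue."""
    logits = l2_normalize(features) @ l2_normalize(queue).T / temperature
    logits = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(logits)
    return exp / exp.sum(axis=-1, keepdims=True)


def similarity_distillation_loss(student_feats, teacher_feats, queue, temperature=0.1):
    """Cross-entropy between teacher and student similarity distributions."""
    p_teacher = similarity_distribution(teacher_feats, queue, temperature)
    p_student = similarity_distribution(student_feats, queue, temperature)
    return float(-(p_teacher * np.log(p_student + 1e-12)).sum(axis=-1).mean())


rng = np.random.default_rng(0)
queue = rng.normal(size=(8, 16))  # hypothetical reference embeddings

# Row 0: poisoned sample, row 1: target-class sample. The backdoored teacher
# maps both to (almost) the same point in feature space.
teacher_feats = np.stack([queue[0] + 0.01 * rng.normal(size=16), queue[0]])

student_keeps = teacher_feats.copy()            # student reproduces the alignment
student_breaks = np.stack([queue[1], queue[0]])  # student separates the poisoned sample

loss_keeps = similarity_distillation_loss(student_keeps, teacher_feats, queue)
loss_breaks = similarity_distillation_loss(student_breaks, teacher_feats, queue)
```

Under this objective, the student that breaks the poisoned-to-target alignment is penalized more than the one that keeps it, so minimizing the loss pushes the student toward inheriting the alignment.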

4.3. Backdoor Trigger and Target Category Description

We adopt the white-square trigger configuration used in BadEncoder [19] and NC [14]. Specifically, we embed a white square at the bottom-right corner of each image, with a size of pixels for CIFAR-10 ( resolution), and pixels for STL-10 ( resolution).

To inject the backdoor, we randomly select 5% of the samples from each class, apply the trigger, and label these as the target class ‘truck’. This target class is chosen because previous work [18,19] often uses “truck” as a semantically meaningful and easily distinguishable target, and such consistency simplifies reference input collection and downstream alignment. We collect reference inputs for the ‘truck’ class from publicly available sources. These serve as anchors to guide the backdoor during training.
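The poisoning step described above can be sketched as follows. The patch size, the random seed, the function names, and the all-black placeholder images are assumptions made for illustration; the label index 9 corresponds to 'truck' in the standard CIFAR-10 class ordering.

```python
import numpy as np


def stamp_trigger(image, patch_size):
    """Return a copy of an HxWxC uint8 image with a white square in the bottom-right corner."""
    poisoned = image.copy()
    poisoned[-patch_size:, -patch_size:, :] = 255
    return poisoned


def poison_dataset(images, labels, target_label, rate=0.05, patch_size=3, seed=0):
    """Stamp the trigger on `rate` of the samples of each class and relabel them."""
    rng = np.random.default_rng(seed)
    new_images = images.copy()
    new_labels = labels.copy()
    poisoned_idx = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)  # select using the original labels
        n_poison = int(round(rate * len(idx)))
        chosen = rng.choice(idx, size=n_poison, replace=False)
        for i in chosen:
            new_images[i] = stamp_trigger(images[i], patch_size)
        new_labels[chosen] = target_label
        poisoned_idx.extend(chosen.tolist())
    return new_images, new_labels, sorted(poisoned_idx)


# Placeholder data: 10 classes, 20 all-black 32x32 RGB images per class.
images = np.zeros((200, 32, 32, 3), dtype=np.uint8)
labels = np.repeat(np.arange(10), 20)
p_images, p_labels, p_idx = poison_dataset(images, labels, target_label=9)
```

Selecting per class keeps the poisoned subset balanced across source classes; restricting the selection to non-target classes only would require skipping `target_label` in the loop.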

This trigger design and configuration balance effectiveness and detectability across both datasets and provide a strong, reproducible backdoor injection setup that is consistent with the existing literature and applicable to multiple downstream tasks.

In our experiments, we randomly selected 5% of the training samples from non-target classes and injected the trigger into them. These modified samples are then relabeled to a fixed target class in CIFAR-10. The same target class was used consistently throughout the experiment to ensure reproducibility.

4.4. Formalization of the BASK Attack

We refer to the pre-trained clean encoder and the backdoor encoder injected with the BASK backdoor as $f$ and $f'$, respectively. The attacker obtains a set of samples from the target category, denoted as

$$X_t = \{x^t_1, x^t_2, \ldots, x^t_n\}.$$

The encoder extracts the feature representations of the target samples as $\{z^t_1, \ldots, z^t_n\}$ and anchors their average as the target representation $\bar{z}_t = \frac{1}{n}\sum_{i=1}^{n} z^t_i$. Then, from all non-target category samples, a fixed proportion of samples is selected and a trigger $t$ is added; the resulting set is denoted as $X_p = \{x \oplus t\}$. The encoder parameters are adjusted according to the loss function to ensure that $f'(x \oplus t) \approx \bar{z}_t$ for every sample in $X_p$. To achieve this, we propose a feature weighting loss and a representation alignment loss, which we discuss in the following sections.
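A minimal sketch of the anchoring step, assuming the target-class features are already available as row vectors: the anchor is their mean, and the attack goal can be monitored as the cosine similarity between a poisoned sample's feature and that anchor. The function names and the toy two-dimensional features are hypothetical.

```python
import numpy as np


def target_anchor(target_features):
    """Average the feature vectors of the target-class reference samples."""
    return np.asarray(target_features, dtype=float).mean(axis=0)


def cosine_similarity(a, b):
    """Cosine similarity between two feature vectors."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Toy features of two target-class reference samples.
reference_features = [[1.0, 0.0], [0.0, 1.0]]
anchor = target_anchor(reference_features)

aligned = cosine_similarity([1.0, 1.0], anchor)      # poisoned feature on the anchor direction
misaligned = cosine_similarity([1.0, -1.0], anchor)  # poisoned feature orthogonal to it
```

A successful injection would drive this similarity toward 1 for triggered inputs while leaving clean inputs close to their original representations.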

4.4.1. Feature Weighting Loss

In backdoor attacks, the objective is to manipulate the feature representation of an image by injecting a specific trigger such that it aligns with the feature of a target class. To enhance the robustness of backdoor injection, we introduced an adaptive feature weighting mechanism, which dynamically adjusts the importance of different feature layers. This mechanism ensures that layers most relevant to the backdoor receive greater attention in the loss function and enables the backdoor effect to be distributed across multiple layers of the encoder.

Specifically, in the feature space, the discrepancy between the features of a trigger-embedded image and that of a target class image is measured using cosine distance. To define the weight for each layer, we introduce a weighting factor in the loss function, such that layers with stronger backdoor relevance are assigned higher weights in the loss computation.

Formally, we define the feature weighting loss as follows:

$$\mathcal{L}_{fw} = \sum_{i} w_i \left(1 - \frac{F^i_p \cdot F^i_t}{\|F^i_p\|_2 \, \|F^i_t\|_2}\right),$$

where the following applies:

- $F^i_p$ and $F^i_t$ are the feature representations of the poisoned and target images at the i-th layer, respectively;

- $w_i$ is the importance weight of the i-th feature, which ensures that the model adaptively focuses on the features with the greatest impact on backdoor injection;

- $\|F^i_p\|_2$ and $\|F^i_t\|_2$ are the $\ell_2$-norms of the feature vectors at the i-th layer.

In particular, the dynamic weight $w_i$ is computed from $\nabla_{F^i}\mathcal{L}_{total}$, the gradient of the total loss with respect to the feature representation $F^i$ at the i-th layer, which indicates the sensitivity of that feature to the overall loss.
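The following sketch shows one way a gradient-weighted, layer-wise cosine-distance loss of this kind could be computed. The normalization of the weights (each layer's gradient norm divided by the sum over layers) is an assumption chosen for illustration, as are the function names; the per-layer gradients are taken as given inputs, as they would be supplied by an automatic differentiation framework.

```python
import numpy as np


def cosine_distance(a, b):
    """1 - cosine similarity between two (flattened) feature tensors."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    return 1.0 - float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def gradient_weights(layer_grads):
    """Assumed normalization: each layer's gradient norm divided by the sum of norms."""
    norms = np.array([np.linalg.norm(np.asarray(g, dtype=float)) for g in layer_grads])
    return norms / norms.sum()


def feature_weighting_loss(poisoned_feats, target_feats, layer_grads):
    """Weighted sum over layers of the cosine distance between poisoned and target features."""
    weights = gradient_weights(layer_grads)
    distances = np.array(
        [cosine_distance(p, t) for p, t in zip(poisoned_feats, target_feats)]
    )
    return float(weights @ distances), weights


# Toy example with two layers: layer 0 is already aligned, layer 1 is orthogonal.
poisoned = [np.array([1.0, 0.0]), np.array([1.0, 0.0, 0.0])]
target = [np.array([2.0, 0.0]), np.array([0.0, 1.0, 0.0])]
grads = [np.array([1.0, 0.0]), np.array([0.0, 3.0, 0.0])]  # gradient norms 1 and 3

loss_fw, weights = feature_weighting_loss(poisoned, target, grads)
```

In this toy case the misaligned layer also has the larger gradient norm, so it dominates the loss, which matches the stated intent of focusing on the layers most relevant to the backdoor.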

4.4.2. Representation Alignment Loss

We design a contrastive learning-based representation alignment strategy. This approach aims to balance the feature-space proximity between poisoned samples and the target class while ensuring sufficient distance from non-target classes. It enhances both the effectiveness and stealthiness of the backdoor injection. Specifically, let $z_p$ denote the feature representation of a poisoned sample $x_p$ extracted by the encoder $f'$, and let $z_t$ denote the feature representation of a clean target sample $x_t$.

The objective of the alignment strategy is to maximize the similarity between $z_p$ and $z_t$ while minimizing the similarity between $z_p$ and the feature representations of negative samples. This encourages trigger-embedded inputs to cluster in the feature space around the target class.

Formally, we define the representation alignment loss as follows:

$$\mathcal{L}_{ra} = -\log \frac{\exp\left(\mathrm{sim}(z_p, z_t)/\tau\right)}{\exp\left(\mathrm{sim}(z_p, z_t)/\tau\right) + \sum_{z_n \in \mathcal{N}} \exp\left(\mathrm{sim}(z_p, z_n)/\tau\right)},$$

where the following applies:

- $\mathrm{sim}(\cdot,\cdot)$ denotes the cosine similarity;

- $\tau$ is a temperature parameter;

- $\mathcal{N}$ is the set of negative samples in the current batch, excluding $z_p$ and $z_t$.

The temperature parameter $\tau$ is dynamically adjusted using DySTreSS [43] to improve both feature separability and uniformity.
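As a rough illustration of the alignment objective, the following standard-library sketch computes an InfoNCE-style loss for one poisoned representation against one target representation and a set of in-batch negatives. It assumes a fixed temperature (`tau=0.2` is an arbitrary illustrative value) and does not reproduce the dynamic temperature schedule; the function names and vectors are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def alignment_loss(z_p, z_t, negatives, tau=0.2):
    """InfoNCE-style loss: pull z_p toward z_t, push it away from the negatives.

    ASSUMPTION: tau is held fixed here; a dynamic schedule is not modeled.
    """
    pos = math.exp(cosine_similarity(z_p, z_t) / tau)
    neg = sum(math.exp(cosine_similarity(z_p, z_n) / tau) for z_n in negatives)
    return -math.log(pos / (pos + neg))


# Toy 2-D representations (made up for illustration).
z_target = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]

loss_aligned = alignment_loss([1.0, 0.0], z_target, negatives)     # close to the target
loss_misaligned = alignment_loss([0.0, 1.0], z_target, negatives)  # sits on a negative
```

The loss is small when the poisoned representation coincides with the target and large when it coincides with a negative, which is the behavior the alignment term is meant to reward.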

4.4.3. Total Loss

The total loss function is defined as

$$\mathcal{L}_{total} = \mathcal{L}_{CL} + \lambda_1 \mathcal{L}_{align} + \lambda_2 \mathcal{L}_{fw}$$

where the following applies:

- $\mathcal{L}_{CL}$: the standard contrastive loss on clean samples;

- $\mathcal{L}_{align}$: the representation alignment loss;

- $\mathcal{L}_{fw}$: the feature weighting loss.

The hyperparameter $\lambda_1$ controls the strength of aligning poisoned samples with the target class in the feature space, while $\lambda_2$ adjusts the focus on features extracted from trigger-sensitive regions. In our experiments, we first set initial values for both $\lambda_1$ and $\lambda_2$ and then fine-tuned them based on the attack success rate (ASR), under the constraint that the accuracy on clean samples does not degrade by more than 2%.
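The tuning rule described above (maximize ASR subject to a bound on clean-accuracy loss) can be sketched as a simple constrained selection. Everything in this example is hypothetical: the names `lam_align` and `lam_fw`, the trial values, and the reading of "2%" as two percentage points of accuracy are assumptions made for illustration, not reported settings.

```python
def total_loss(l_contrastive, l_align, l_fw, lam_align, lam_fw):
    """Clean contrastive loss plus the two weighted backdoor terms."""
    return l_contrastive + lam_align * l_align + lam_fw * l_fw


def select_weights(trials, clean_acc, max_acc_drop=2.0):
    """Pick the trial with the highest ASR whose ACC drop stays within the budget.

    ASSUMPTION: the budget is measured in percentage points of accuracy.
    Returns None when no trial satisfies the constraint.
    """
    feasible = [t for t in trials if clean_acc - t["acc"] <= max_acc_drop]
    return max(feasible, key=lambda t: t["asr"]) if feasible else None


# Made-up trial results (percentages), not values from any experiment.
trials = [
    {"lam_align": 0.5, "lam_fw": 0.5, "acc": 80.1, "asr": 90.0},
    {"lam_align": 1.0, "lam_fw": 0.5, "acc": 77.0, "asr": 99.0},  # exceeds the ACC budget
    {"lam_align": 0.5, "lam_fw": 1.0, "acc": 79.5, "asr": 95.0},
]
best = select_weights(trials, clean_acc=81.0)
```

Here the second trial has the highest ASR but is rejected because it costs four points of clean accuracy, so the third trial is selected.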

In summary, the proposed method is specifically designed to be robust against knowledge distillation defenses that may be applied in downstream tasks. By leveraging feature weighting and representation alignment, BASK produces stable and transferable backdoor patterns in the feature space. As a result, even after the distillation process, the student model tends to retain the injected backdoor representations. Compared to conventional backdoor attacks, our approach achieves higher survivability and transfer robustness under distillation.

5. Evaluation

In this section, we test the effectiveness of BASK on the CIFAR-10 and STL-10 datasets, compare it with BadEncoder and BASSL, and verify its backdoor survivability under advanced defense methods, namely MKD [31], SEED [44], NC [14], and MIMIC [45]. The experiments demonstrate the superiority of BASK in surviving knowledge distillation defenses. To ensure the robustness and reproducibility of our findings, we repeated each experiment five times using different random seeds. The results reported in this section, including classification accuracy and attack success rate, are presented as mean ± standard deviation.
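For reference, aggregating repeated runs into the mean ± standard deviation format takes only a few lines. The sketch below assumes the sample standard deviation (divisor n − 1); whether the reported figures use the sample or population form is not stated, and the five values are placeholders rather than measured results.

```python
import statistics


def format_mean_std(values, digits=2):
    """Format repeated-run results as 'mean ± std' using the sample std (n - 1)."""
    mean = statistics.mean(values)
    std = statistics.stdev(values)
    return f"{mean:.{digits}f} ± {std:.{digits}f}"


# Placeholder values for five seeds (not experimental results).
asr_runs = [90.0, 92.0, 94.0, 96.0, 98.0]
summary = format_mean_std(asr_runs)
```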

5.1. Experimental Setup

Datasets:

We used CIFAR-10 [46], STL-10 [47], and ImageNet100 as the datasets for model training, backdoor injection, and evaluating backdoor transferability. The CIFAR-10 dataset consists of 10 categories with a total of 60,000 color images of size 32 × 32, including 50,000 training images and 10,000 test images. The STL-10 dataset is designed for unsupervised representation learning and contains labeled images from 10 categories, along with a large amount of unlabeled data. All images in STL-10 have a resolution of 96 × 96.

Attack comparison protocol:

We compared the proposed method (BASK) with existing backdoor attack methods, such as BadEncoder [19] and BASSL [38], to evaluate survivability under knowledge distillation defenses. All baseline methods were trained using the hyperparameter settings described in their original papers.

Backdoor defense settings:

We adopted MKD [31], a defense method based on knowledge distillation, which consists of a fine-tuning stage and a distillation stage. Each stage is trained for 50 epochs. In the fine-tuning stage, we used a learning rate of 0.01 with the Adam optimizer. During the distillation stage, for the attention map loss, the lower level of each residual group was set to 500, and the upper level was set to 1000. For all attack methods and datasets, the hyperparameters and were set to 10 and 1, respectively. In addition, standard defense methods (NC and MIMIC) were introduced for comparison. Their hyperparameters were set according to the original papers of the authors.

Conventional knowledge distillation settings:

We employed SEED [44] as the knowledge distillation method, which transfers representational knowledge from a teacher encoder trained in a self-supervised manner to a student encoder. The student learns high-quality representations by minimizing the cross-entropy loss between its similarity distribution and that of the teacher over a dynamically maintained instance queue. In our experiments, the queue size was 65,536, the teacher temperature was 0.01, and the student temperature was 0.2. The optimizer was SGD with a learning rate of 0.001 and a weight decay of 0.0001. Training was conducted on the CIFAR-10 dataset for 200 epochs using standard data augmentation strategies, with a batch size of 256.
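The distillation objective described above can be illustrated with a small standard-library sketch: the teacher and student each produce a softmax distribution over similarities to the queue (plus the teacher's own embedding of the current instance), and the student minimizes the cross-entropy to the teacher's distribution. The sketch assumes L2-normalized embeddings and uses the temperatures quoted above; the function names and the tiny two-entry queue are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def seed_loss(z_student, z_teacher, queue, tau_t=0.01, tau_s=0.2):
    """Cross-entropy between teacher and student similarity distributions.

    ASSUMPTION: embeddings are already L2-normalized, and the teacher's
    embedding of the current instance is appended to the queue.
    """
    anchors = queue + [z_teacher]
    p_teacher = softmax([dot(z_teacher, a) / tau_t for a in anchors])
    p_student = softmax([dot(z_student, a) / tau_s for a in anchors])
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))


# Toy unit vectors (made up); a real queue would hold 65,536 embeddings.
queue = [[0.0, 1.0], [-1.0, 0.0]]
teacher = [1.0, 0.0]

loss_match = seed_loss([1.0, 0.0], teacher, queue)     # student mirrors the teacher
loss_mismatch = seed_loss([0.0, 1.0], teacher, queue)  # student disagrees
```

Because the loss rewards reproducing the teacher's similarity structure, any structure the teacher has learned, benign or not, is what the student is trained to copy.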

Encoder training settings:

We adopted the SimCLR [5] framework based on ResNet-50 as the encoder and pre-trained it in a self-supervised fashion on CIFAR-10. In the pre-training phase, we used the Adam optimizer with an initial learning rate of , a weight decay of , and trained for 1000 epochs with a batch size of 256. In the backdoor injection phase, the poisoning rate was set to 0.1. We used the SGD optimizer with a learning rate of and weight decay of , training for 200 epochs with a batch size of 256. In the downstream task stage, training and evaluation were performed on both STL-10 and CIFAR-10 datasets using the Adam optimizer with a learning rate of and a weight decay of for 500 epochs, with a batch size of 256. Unless otherwise specified, all models were trained from scratch.

Downstream classifier training settings:

We added a fully connected layer as a classification head on top of the pre-trained encoder, with the output dimension equal to the number of categories, and we trained only the classification head. The encoder part does not participate in gradient updates. The above model was used as a downstream task classifier. During training, we used the cross-entropy loss function, the Adam optimizer, a learning rate of , a weight decay of , a batch size of 256, and 500 epochs.
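A minimal sketch of this linear-probing setup is given below. The frozen encoder is represented by precomputed feature vectors, and only a softmax classification head is trained with cross-entropy. For brevity the sketch uses plain per-sample SGD instead of Adam, and the learning rate, epoch count, and toy features are illustrative assumptions rather than the settings used in the experiments.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def scores(W, b, x):
    """Linear head: one score per class."""
    return [sum(w * v for w, v in zip(W[c], x)) + b[c] for c in range(len(W))]


def train_linear_head(features, labels, num_classes, lr=0.5, epochs=200):
    """Train only the head on frozen features (plain SGD, cross-entropy loss)."""
    dim = len(features[0])
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        for x, y in zip(features, labels):
            probs = softmax(scores(W, b, x))
            for c in range(num_classes):
                grad = probs[c] - (1.0 if c == y else 0.0)  # d(loss)/d(score_c)
                b[c] -= lr * grad
                for j in range(dim):
                    W[c][j] -= lr * grad * x[j]
    return W, b


def predict(W, b, x):
    s = scores(W, b, x)
    return s.index(max(s))


# Toy "frozen encoder" outputs for two classes (made up for illustration).
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [0, 0, 1, 1]
W, b = train_linear_head(features, labels, num_classes=2)
predictions = [predict(W, b, x) for x in features]
```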

5.2. Evaluation Metrics

We used accuracy (ACC) and attack success rate (ASR) to evaluate BASK. ACC measures the accuracy of downstream classification tasks. ASR measures the proportion of samples containing triggers that the backdoor downstream classifier classifies as the target category. Formally, we define them as follows:

Accuracy (ACC): The classification accuracy on clean samples is computed as

$$\mathrm{ACC} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{I}\left(\hat{y}_i = y_i\right)$$

where the following applies:

- N is the total number of samples in the clean test set;

- $x_i$ is the i-th test input, and $y_i$ is its ground-truth label;

- $\hat{y}_i$ is the model’s predicted label for $x_i$;

- $\mathbb{I}(\cdot)$ is the indicator function, which equals 1 if the condition holds and is 0 otherwise.

Attack success rate (ASR): The attack success rate is defined as the proportion of trigger-injected samples that are classified as the target label:

$$\mathrm{ASR} = \frac{1}{M} \sum_{i=1}^{M} \mathbb{I}\left(\hat{y}_i^{p} = y_t\right)$$

where the following applies:

- M is the total number of poisoned (triggered) samples;

- $x_i^{p}$ is the i-th poisoned sample;

- $y_t$ is the target label specified by the attacker;

- $\hat{y}_i^{p}$ is the model’s prediction for the poisoned input $x_i^{p}$.

Ideally, an effective backdoor attack should maintain a high ASR while causing minimal degradation to the model’s ACC.
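Both metrics reduce to counting matches, as the following sketch shows. The function names and the label lists are hypothetical and serve only to illustrate the definitions above.

```python
def accuracy(predictions, labels):
    """Fraction of clean test samples whose prediction matches the ground truth."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def attack_success_rate(poisoned_predictions, target_label):
    """Fraction of triggered samples that are classified as the attacker's target."""
    hits = sum(p == target_label for p in poisoned_predictions)
    return hits / len(poisoned_predictions)


# Made-up predictions for illustration.
acc = accuracy([0, 1, 2, 2], [0, 1, 1, 2])          # 3 of 4 correct
asr = attack_success_rate([3, 3, 1, 3], target_label=3)  # 3 of 4 hit the target
```

Note that this follows the definition given above and counts every triggered sample; some evaluations additionally exclude samples whose true class is already the target, which would be a different convention.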

5.3. Results and Discussion

5.3.1. Performance Comparison Between BASK and Baseline Attack Methods Without Defense

As shown in Table 1, in the absence of any defense mechanisms, BASK demonstrates attack performance comparable to representative baseline methods. On both CIFAR-10 and STL-10 downstream datasets, the impact of BASK on ACC remains below 2%, indicating minimal degradation to model utility.

Table 1.

Mean ± std (%; five runs) on CIFAR-10/STL-10 downstream tasks.

Meanwhile, BASK achieves consistently high ASR values on both CIFAR-10 and STL-10 (see Table 1), outperforming BASSL by approximately 5–6% and slightly exceeding BadEncoder. These results confirm that BASK preserves stealthiness while maintaining high attack effectiveness in a clean setting.

5.3.2. The Impact of Different Defense Strategies

Building upon the above observations, we further evaluated the resilience of BASK against several encoder-level defenses.

Under the SEED distillation defense, all attacks experience a noticeable drop in ASR on both CIFAR-10 and STL-10. BASK declines the least, BadEncoder declines further, and BASSL performs worst. This demonstrates that BASK retains a 5–20 percentage point advantage under SEED.

Switching to the more advanced MKD defense leads to further reductions in ASR across the board. Nevertheless, on both datasets BASK still achieves ASR values significantly higher than those of BadEncoder and BASSL, confirming its stronger survivability under feature realignment.

We also consider conventional defenses, such as NC and MIMIC. In the NC setting, BASK outperforms BASSL but falls behind BadEncoder on both datasets. With MIMIC, all methods perform similarly, and the ASR of BASK is in line with the rest.

In summary, BASK exhibits substantially higher resilience under modern distillation defenses while remaining competitive under traditional backdoor mitigation. Its persistence highlights the potential risks posed by backdoored encoders in practical reuse scenarios.

5.4. Defense Mechanisms and the Robustness of BASK Against Them

5.4.1. MKD

MKD is a defense that uses knowledge distillation to purge backdoors from a poisoned encoder. The potentially backdoored model is distilled into a new student model using only clean data, so the student learns the teacher’s general representations but, ideally, not the malicious mapping. This approach leverages the fact that backdoor triggers do not appear in the distillation data; by mimicking the teacher’s outputs on benign inputs, the student is expected to retain benign feature knowledge while “forgetting” any trigger-specific behavior. Despite its theoretical appeal, MKD can falter against BASK. BASK is specifically designed for knowledge-distillation survivability, meaning that the attack embeds backdoor information in features that the student model will inevitably learn as it preserves the teacher’s representation structure. BASK uses the representation alignment loss and distributes the backdoor across high-importance features. As a result, even when distilled on clean data, the student inadvertently carries over subtle backdoor correlations from the teacher. In other words, BASK poisons the teacher’s feature space so pervasively yet subtly that simply mimicking that space, as MKD does, transfers the vulnerability.

5.4.2. SEED

SEED adapts a self-supervised knowledge distillation approach as a backdoor defense. The idea is to train a student encoder to mimic the representation structure of a large teacher encoder on unlabeled data, much like in contrastive learning. In a defense setting, the teacher is the suspect model, and the student is taught via self-supervised signals instead of explicit labels. This process should transfer general visual features from teacher to student while ignoring task-specific anomalies.

BASK undermines SEED by exploiting the same weakness as with MKD; the attack’s trigger effect is woven into the teacher’s general feature structure. The self-supervised distillation will cause the student to preserve the teacher’s pairwise similarities and feature distributions, which BASK has subtly skewed to encode the trigger-to-target mapping. Essentially, SEED lacks an explicit mechanism to distinguish malicious feature alignments from benign ones. The feature alignment design of BASK means the trigger does not introduce an obvious new feature cluster; instead, it repurposes existing representation space, so the student’s self-supervised training faithfully reproduces that compromised space. Therefore, even after SEED distillation, a BASK-infected encoder can continue to yield high attack success, as the student model has inadvertently learned the backdoor-altered representations of the teacher model.

5.4.3. NC

Neural Cleanse is a trigger-reverse-engineering defense that scans a trained model for backdoors by attempting to find a minimal perturbation (trigger pattern) that causes misclassification into each target label. The rationale is that for a backdoored model, the attacker’s target class will accept inputs with a small, consistent trigger. NC optimizes an input mask and pattern for each label and detects an outlier: if one target label requires an exceptionally small perturbation to achieve high confidence misclassification, that suggests a backdoor. Once identified, the defense can mitigate this by either removing the compromised neurons or by augmenting training with the recovered trigger to desensitize the model. While NC is effective against conventional patch-based triggers, its performance against BASK can be more limited. This is partly due to BASK embedding trigger semantics through feature-level alignment rather than localized pixel patterns. As a result, the trigger effect may not correspond to a minimal, easily extractable input perturbation, complicating the optimization in NC. Nevertheless, in our experiments, NC still manages to reduce ASR to a certain extent, suggesting partial mitigation. This indicates that while NC does not fully eliminate the entangled representation shift introduced by BASK, it may disrupt some of its more conspicuous effects.
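The outlier-detection step of Neural Cleanse can be sketched as follows, assuming that the L1 norm of the reverse-engineered mask has already been obtained for every label. The sketch uses the median absolute deviation with the consistency constant 1.4826 and flags labels whose anomaly index exceeds 2 on the small side; the mask norms below are invented purely for illustration.

```python
import statistics


def anomaly_indices(mask_norms):
    """Anomaly index per label: |norm - median| / (1.4826 * MAD)."""
    median = statistics.median(mask_norms)
    deviations = [abs(n - median) for n in mask_norms]
    mad = 1.4826 * statistics.median(deviations)
    return [d / mad for d in deviations]


def flag_backdoor_labels(mask_norms, threshold=2.0):
    """Labels whose trigger is abnormally small (index above threshold, norm below median)."""
    median = statistics.median(mask_norms)
    indices = anomaly_indices(mask_norms)
    return [
        label
        for label, (norm, idx) in enumerate(zip(mask_norms, indices))
        if idx > threshold and norm < median
    ]


# Invented L1 mask norms for 10 labels; label 3 needs a far smaller trigger.
mask_norms = [95, 102, 98, 20, 101, 99, 97, 103, 100, 96]
flagged = flag_backdoor_labels(mask_norms)
```

A feature-level attack weakens this test when the smallest input perturbation that reaches the target class is no longer conspicuously smaller than for other labels, in which case no label crosses the threshold.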

5.4.4. MIMIC

MIMIC proposes a novel encoder purification framework based on two key principles: (1) aligning internal attention maps between a suspicious encoder and a purified one, and (2) emphasizing alignment at the most informative layers, as measured by mutual information. The core idea is that layers contributing most to semantic feature encoding are more valuable and should be selectively preserved, while noisy or anomalous ones may carry backdoor traits. By regulating inter-layer consistency based on mutual information, MIMIC intends to preserve beneficial representations while suppressing implicit malicious patterns. In theory, MIMIC could suppress certain types of shallow-layer trigger injection. However, BASK may partially survive due to its alignment strategy affecting exactly those high-MI layers that MIMIC prioritizes for preservation. Since BASK integrates the trigger semantics into core representations rather than peripheral neurons, the alignment may unintentionally retain some entangled patterns. That said, MIMIC still shows a measurable reduction in ASR under our setting, implying that its mutual information guidance introduces resistance to superficial or non-distributed triggers. Nevertheless, the capacity of BASK to influence semantically critical layers suggests that completely eliminating its effect through this method remains non-trivial.

5.4.5. Analyzing BASK’s Survivability Against Distillation-Based Defenses

Our experiments show that BASK retains a high attack success rate even under two representative distillation-based backdoor defenses, i.e., SEED and MKD. We analyzed the underlying reasons for the robustness of BASK. BASK modifies the encoder in a multi-layer fashion guided by gradient importance, embedding the backdoor signal deep into the representation hierarchy. This structural entanglement creates persistent internal dependencies that are preserved across layers. Unlike shallow perturbations that may be eliminated when transferring high-level outputs, the modifications of BASK affect both low-level and intermediate representations. As a result, during distillation, the student model is encouraged to reproduce not just the surface behavior but also the internal representational structure of the teacher, thereby inheriting the backdoor functionality. The representation alignment objective forces poisoned inputs to be embedded close to the target class features. Since both SEED and MKD aim to preserve relational structure in the embedding space, they inadvertently preserve this poisoned alignment. Both SEED and MKD focus on instance-level or projection-level distribution preservation, without explicitly disrupting class-conditional feature overlaps. This allows the poisoned cluster of BASK to survive the distillation.

Implications for Future Defenses

The survivability of BASK under advanced distillation-based defenses highlights a critical gap in current SSL backdoor mitigation strategies. Future defense mechanisms should move beyond global feature alignment, particularly when detecting poisoned samples whose representations exhibit unnatural proximity to target classes. Incorporating adversarial clustering detection, trigger attribution analysis, and semantic consistency constraints may help decouple such poisoned behavior from legitimate representations. Moreover, integrating contrastive sample auditing or reverse-engineering-based backdoor tracing into SSL pipelines could provide stronger guarantees for model integrity during encoder reuse.

5.4.6. The Impact of Different Loss Weights

Table 2 presents the ACC and ASR under different combinations of the two loss weights. The experimental results show that the (0.6, 0.4) configuration achieves an ACC of approximately 76.29% and an ASR of 98.31%, giving the best overall performance: it maintains high clean accuracy while achieving the highest attack success rate.

Table 2.

The impact of different loss weights, based on a pre-trained encoder using the CIFAR10 dataset, with the downstream dataset being CIFAR10.

In comparison, under the (0.8, 0.2) configuration, the ACC slightly increases to 76.40%, but the ASR drops significantly to 82.20%, indicating a much weaker attack. Conversely, under the opposite imbalance, the ACC and ASR are 76.15% and 83.75%, respectively, which are still inferior to the (0.6, 0.4) configuration.

These results suggest that a balanced weighting between feature weighting and representation alignment losses—such as the 0.6:0.4 ratio—can effectively leverage the benefits of both. The trigger-embedded samples are guided to cluster near the target class in the representation space, while the classification capability on clean samples is preserved.

The superior performance of the optimal weight combination is attributed to the complementary nature of the two loss terms. The feature weighting loss adaptively emphasizes dimensions that are sensitive to backdoor triggers, while the alignment loss pulls the poisoned samples toward the target class in the representation space. A moderate increase in the feature weighting loss helps embed the backdoor more effectively, but excessive emphasis may lead to insufficient alignment and, thus, reduced ASR. On the other hand, overly high alignment weight may distort normal feature representations, hurting clean accuracy.

As demonstrated in Table 2, the 0.6:0.4 ratio provides the best trade-off between backdoor injection strength and classification performance, yielding the peak ASR with minimal accuracy degradation.

5.4.7. Ablation Study

Table 3 reports the model performance under different ablation settings, specifically evaluating the effect of removing each core loss component. Under the full configuration, the model achieves an ACC of 76.29% and an ASR of 98.31%. When the feature weighting loss is removed, ACC slightly drops to 76.18%, but ASR sharply decreases to 75.42%. When the representation alignment loss is removed, ACC is 76.05%, and ASR further drops to 61.88%. In the extreme case where only the standard contrastive learning loss is retained, the ACC remains relatively high at 76.20%, but ASR collapses to 4.26%.

Table 3.

Ablation experiment, based on a pre-trained encoder from the CIFAR10 dataset, with the CIFAR10 dataset as the downstream dataset.

These results reveal that while ACC remains relatively stable regardless of which loss component is removed, ASR is highly sensitive to the presence of the core losses.

The ablation study confirms that both the feature weighting loss and the representation alignment loss are indispensable. Removing either one significantly weakens the ability of the backdoor samples to cluster effectively around the target class, leading to a dramatic drop in attack success rate. When only the contrastive loss is retained, the backdoor effect is nearly eliminated. This suggests that the feature weighting loss strengthens the contribution of backdoor-relevant dimensions through dynamic adjustment, while the representation alignment loss explicitly draws trigger-embedded representations closer to the target class using contrastive objectives. Only when used together do these components form a stable and stealthy backdoor cluster in the feature space.

As shown in Table 3, high ASR with minimal impact on ACC is only achieved when both loss terms are jointly applied.

5.4.8. Study on the Portability of BASK in Other SSL Paradigms

We evaluated the portability of BASK under two other popular SSL paradigms, MoCo v2 and MoCo v3, using both ResNet and ViT backbones. All encoders were pre-trained for 300 epochs on poisoned datasets with a batch size of 256. For MoCo v2, we used the SGD optimizer with an initial learning rate of 0.06 and a cosine decay schedule. For MoCo v3, we used AdamW with a learning rate of $1 \times 10^{-3}$ and a weight decay of 0.05. In all settings, we used a poisoning ratio of 5%. The MKD defense was applied after pre-training, following the original implementation. As shown in Table 4, BASK is highly portable across different self-supervised learning paradigms and network architectures. Even when SimCLR-ResNet50 is replaced with MoCo v2-ResNet18 or MoCo v3-ViT-B, BASK retains a substantial ASR after the MKD defense, often around 40–50%. This robustness stems from the BASK design: it embeds the backdoor signal across multiple layers and continuously aligns poisoned samples with the target class in the representation space. As a result, the semantic shortcut created by BASK survives distillation. The slightly lower ASR on STL-10 compared to CIFAR-10 may be due to the higher resolution and greater semantic variability in STL-10, which weakens but does not eliminate the poisoned alignment. These findings suggest that the backdoor of BASK is not tied to any specific SSL loss or encoder but rather exploits structural preservation shared by many contrastive frameworks.
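As a small illustration of the MoCo v2 learning-rate setting mentioned above, a cosine decay from 0.06 over 300 epochs can be written as follows. The sketch assumes the plain half-cosine form with no warmup and a final learning rate of zero; neither detail is specified for these experiments.

```python
import math


def cosine_lr(epoch, total_epochs=300, base_lr=0.06):
    """Half-cosine decay from base_lr at epoch 0 to 0 at total_epochs.

    ASSUMPTION: no warmup phase and no minimum learning-rate floor.
    """
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * epoch / total_epochs))


schedule = [cosine_lr(e) for e in (0, 150, 300)]  # start, midpoint, end
```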

Table 4.

BASK attack success rate (ASR) and classification accuracy (ACC) under MKD defense with different backbones (mean ± std over five runs).

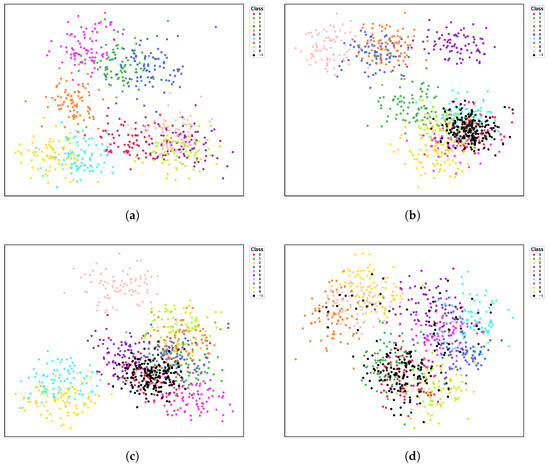

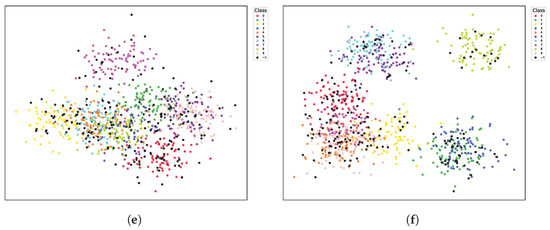

5.4.9. Visualization of Features

To gain a better understanding of how BASK manipulates the feature space, we visualize the encoder outputs using t-SNE on the CIFAR-10 dataset, as shown in Figure 4.

Figure 4.

t-SNE visualization of features on CIFAR-10 from different encoders. (a): Clean encoder. (b): BASK-poisoned encoder without defense. (c): BadEncoder-poisoned encoder without defense. (d): BASK-poisoned encoder after MKD defense. (e): BadEncoder-poisoned encoder after MKD defense. (f): BASK-poisoned encoder after NC defense. Each color denotes a class, and the black scatter points labeled −1 are the samples with the backdoor trigger.

6. Conclusions

This paper focuses on the backdoor attack problem of encoders under the SSL framework and proposes a backdoor attack method called BASK that is robust to knowledge distillation. By jointly optimizing feature weighting loss and representation alignment loss, this method efficiently embeds backdoor features into the feature space during the encoder fine-tuning stage, thereby ensuring that the representations of attack samples are clustered in the target category neighborhood.

Specifically, the attacker first constructs poisoned samples by embedding fixed-position trigger patterns into non-target class images based on a pre-trained self-supervised encoder. Then, using target class samples as anchors, three types of losses are optimized simultaneously during the fine-tuning process: first, contrastive learning main loss, which maintains the original ability of the encoder; second, feature weighting loss, which dynamically adjusts the contribution weight of each feature dimension to the backdoor injection according to gradient sensitivity; third, representation alignment loss, which uses contrastive learning to bring trigger samples closer to the target class while moving them away from other classes. By reasonably setting the loss weights, we ultimately obtain a poisoned encoder that can covertly inject backdoors in the feature space. This encoder maintains its ability to recognize clean samples while consistently mapping trigger samples to the target class representation, thereby achieving interference with downstream tasks.

The experimental results on two standard datasets and downstream image classification tasks show that BASK achieves attack success rates comparable to those of the baseline methods when no defense is applied and remains effective under defenses, while maintaining high model accuracy. Furthermore, under knowledge distillation defenses, its backdoor survival rate is significantly higher than that of existing methods, which supports the effectiveness of its design and its practical potential against distillation.

6.1. Theoretical and Architectural Comparison with BadEncoder and BASSL

Table 5 presents a concise comparison between BASK and two representative attacks on self-supervised encoders: BadEncoder and BASSL. The comparison focuses on trigger encoding, feature manipulation, and resilience to distillation.

Table 5.

Comparison between BASK and prior SSL backdoor attacks.

BASK introduces two key ideas: distributing the backdoor signal across feature layers based on gradient importance and enforcing representation alignment through contrastive learning. This allows BASK to survive SSL-specific distillation where prior attacks fail.

6.2. Ethical Considerations and Responsible Disclosure

Backdoor attacks, including the proposed BASK method, pose significant risks to the integrity, reliability, and trustworthiness of machine learning systems. Building on prior work that has systematically investigated the ethical and social harms of language models—including discrimination, misinformation, and malicious uses—this paper acknowledges the potential for misuse inherent in advancing attack techniques and highlights the pressing need for responsible conduct in research and deployment [48]. To limit harm from the publication of such techniques, we adhere to principles of structured disclosure and minimize detail that could facilitate direct abuse, following recent paradigms for controlled model release and safe deployment [49]. We emphasize that BASK should be employed solely in secure, ethical, and legally compliant research settings. Moreover, we encourage practitioners to verify the provenance of pre-trained encoders, enforce red-teaming and backdoor detection during deployment, and adopt structured access controls when sharing vulnerable models. By openly discussing these vulnerabilities and responsible mitigation pathways, we aim to foster a collaborative engagement between model providers, users, and the broader security community to proactively address AI misuse and support trustworthy AI ecosystem development.

6.3. Responsible Research Practices

The BASK backdoor technique raises clear dual-use concerns, as it could be misused to stealthily compromise ML models. To pre-empt malicious exploitation, we conducted a thorough risk assessment following guidelines for safeguarding sensitive research. In line with these guidelines and ethical AI frameworks, we adopt a structured disclosure strategy: only the information necessary to advance defensive research is shared publicly, while detail that could facilitate immediate misuse is minimized. This approach accords with recent paradigms for controlled model release and safe deployment that limit broad access to dangerous capabilities. To mitigate risks, we implement several precautions in dissemination and deployment:

- Controlled distribution: We will refrain from releasing fully trained backdoored models or exact trigger patterns, instead sharing insights under responsible disclosure norms. This limits the chance of adversaries directly weaponizing BASK.

- Risk communication: We emphasize that BASK is presented for defensive research purposes only, and any use must comply with legal and ethical standards. Warnings about potential misuse are clearly communicated, echoing the pressing need for responsible conduct in ML research.

- Mitigation and oversight: Before deploying models, practitioners should verify the provenance and integrity of pre-trained encoders and perform dedicated backdoor detection to catch hidden triggers. We also encourage instituting access controls when sharing models to prevent unauthorized exploitation.

By adhering to these responsible research practices, we aim to balance scientific openness with necessary safeguards. This dual-use risk management ensures that knowledge of BASK can be used to improve security without inadvertently facilitating real-world attacks, supporting a more trustworthy AI ecosystem.

Author Contributions

Formal analysis, Investigation, Methodology, Software, Validation, Writing—original draft, Y.Z. (Yihong Zhang); Conceptualization, Writing—review & editing, G.L.; Visualization, Y.Z. (Yihui Zhang); Funding acquisition, Project administration, Resources, Y.C.; Data curation, M.C.; Writing—review & editing, C.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Songshan Laboratory (Project No. 232102210124) and the Henan Province Science and Technology Research Project (Project No. 232102210124). The APC was funded by Professor Yan Cao.

Data Availability Statement

The datasets used and analyzed in this study are publicly available. The CIFAR-10 dataset is accessible from the UCI Machine Learning Repository (DOI:10.24432/C5889J) and the original CIFAR website: https://www.cs.toronto.edu/~kriz/cifar.html. The STL-10 dataset can be obtained from Stanford University’s official page: https://cs.stanford.edu/~acoates/stl10/. The original data presented in this study are available on request from the corresponding author due to ongoing use of the framework and data by other members of our laboratory. No new datasets were generated beyond those described, and requests for access to the new data will be considered once the ongoing study is completed.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349. [Google Scholar] [CrossRef] [PubMed]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; Lecture Notes in Computer Science. Volume 9351, pp. 234–241. [Google Scholar] [CrossRef]

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the International Conference on Machine Learning (ICML), Virtual, 13–18 July 2020; Proceedings of Machine Learning Research: Cambridge MA, USA, 2020; Volume 119. [Google Scholar]

- Caron, M.; Touvron, H.; Misra, I.; Jegou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging Properties in Self-Supervised Vision Transformers. In Proceedings of the 18th IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9630–9640.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 337–349.

- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised Contrastive Learning. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS), Online, 6–12 December 2020; Advances in Neural Information Processing Systems. Volume 33.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

- Li, Y.; Jiang, Y.; Li, Z.; Xia, S.T. Backdoor learning: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 5–22.

- Guo, W.; Tondi, B.; Barni, M. An overview of backdoor attacks against deep neural networks and possible defences. IEEE Open J. Signal Process. 2022, 3, 261–287.

- Ge, Y.; Wang, Q.; Yu, J.; Shen, C.; Li, Q. Data poisoning and backdoor attacks on audio intelligence systems. IEEE Commun. Mag. 2023, 61, 176–182.

- Li, Y.; Lyu, X.; Koren, N.; Lyu, L.; Li, B.; Ma, X. Neural attention distillation: Erasing backdoor triggers from deep neural networks. arXiv 2021, arXiv:2101.05930.

- Wang, B.; Yao, Y.; Shan, S.; Li, H.; Viswanath, B.; Zheng, H.; Zhao, B.Y. Neural Cleanse: Identifying and Mitigating Backdoor Attacks in Neural Networks. In Proceedings of the 40th IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 707–723.

- Sowe, E.A.; Bah, Y.A. Momentum Contrast for Unsupervised Visual Representation Learning. Preprints 2025.

- Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.; Gheshlaghi Azar, M.; et al. Bootstrap your own latent: A new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21271–21284.

- Chen, X.; Liu, C.; Li, B.; Lu, K.; Song, D. Targeted backdoor attacks on deep learning systems using data poisoning. arXiv 2017, arXiv:1712.05526.

- Gu, T.; Liu, K.; Dolan-Gavitt, B.; Garg, S. BadNets: Evaluating backdooring attacks on deep neural networks. IEEE Access 2019, 7, 47230–47244.

- Jia, J.; Liu, Y.; Gong, N.Z. BadEncoder: Backdoor attacks to pre-trained encoders in self-supervised learning. In Proceedings of the 2022 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 22–26 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2043–2059.

- Li, C.; Pang, R.; Xi, Z.; Du, T.; Ji, S.; Yao, Y.; Wang, T. An Embarrassingly Simple Backdoor Attack on Self-supervised Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 4344–4355.

- Pan, M.; Zeng, Y.; Lyu, L.; Lin, X.; Jia, R. ASSET: Robust Backdoor Data Detection Across a Multiplicity of Deep Learning Paradigms. In Proceedings of the 32nd USENIX Security Symposium, Anaheim, CA, USA, 9–11 August 2023; pp. 2725–2742.

- Tao, G.; Wang, Z.; Feng, S.; Shen, G.; Ma, S.; Zhang, X. Distribution Preserving Backdoor Attack in Self-supervised Learning. In Proceedings of the 45th IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 2029–2047.

- Jiang, W.; Zhang, T.; Qiu, H.; Li, H.; Xu, G. Incremental learning, incremental backdoor threats. IEEE Trans. Dependable Secur. Comput. 2022, 21, 559–572.

- Fan, M.; Liu, Y.; Chen, C.; Liu, X.; Guo, W. Defense against backdoor attacks via identifying and purifying bad neurons. arXiv 2022, arXiv:2208.06537.

- Shen, G.; Liu, Y.; Tao, G.; An, S.; Xu, Q.; Cheng, S.; Ma, S.; Zhang, X. Backdoor scanning for deep neural networks through k-arm optimization. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; PMLR: Cambridge, MA, USA, 2021; pp. 9525–9536.

- Xiang, Z.; Miller, D.J.; Kesidis, G. Post-training detection of backdoor attacks for two-class and multi-attack scenarios. arXiv 2022, arXiv:2201.08474.

- Xue, M.; Wu, Y.; Wu, Z.; Zhang, Y.; Wang, J.; Liu, W. Detecting backdoor in deep neural networks via intentional adversarial perturbations. Inf. Sci. 2023, 634, 564–577.

- Feng, S.; Tao, G.; Cheng, S.; Shen, G.; Xu, X.; Liu, Y.; Zhang, K.; Ma, S.; Zhang, X. Detecting backdoors in pre-trained encoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16352–16362.

- Liu, K.; Dolan-Gavitt, B.; Garg, S. Fine-Pruning: Defending Against Backdooring Attacks on Deep Neural Networks. In Proceedings of the 21st International Symposium on Research in Attacks, Intrusions and Defenses (RAID), Heraklion, Greece, 10–12 September 2018; Lecture Notes in Computer Science. Volume 11050, pp. 273–294.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

- Bie, R.; Jiang, J.; Xie, H.; Guo, Y.; Miao, Y.; Jia, X. Mitigating backdoor attacks in pre-trained encoders via self-supervised knowledge distillation. IEEE Trans. Serv. Comput. 2024, 17, 2613–2625.

- Ying, Z.; Wu, B. NBA: Defensive distillation for backdoor removal via neural behavior alignment. Cybersecurity 2023, 6, 20.

- Xia, J.; Wang, T.; Ding, J.; Wei, X.; Chen, M. Eliminating Backdoor Triggers for Deep Neural Networks Using Attention Relation Graph Distillation. In Proceedings of the 31st International Joint Conference on Artificial Intelligence (IJCAI), Vienna, Austria, 23–29 July 2022; pp. 1481–1487.

- Zhou, Y.; Ni, T.; Lee, W.B.; Zhao, Q. A Survey on Backdoor Threats in Large Language Models (LLMs): Attacks, Defenses, and Evaluations. arXiv 2025, arXiv:2502.05224.

- Yang, H.; Xiang, K.; Ge, M.; Li, H.; Lu, R.; Yu, S. A comprehensive overview of backdoor attacks in large language models within communication networks. IEEE Netw. 2024, 38, 211–218.

- Vassilev, A.; Oprea, A.; Fordyce, A.; Andersen, H. Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2024.

- Wang, Q.; Yin, C.; Fang, L.; Liu, Z.; Wang, R.; Lin, C. GhostEncoder: Stealthy backdoor attacks with dynamic triggers to pre-trained encoders in self-supervised learning. Comput. Secur. 2024, 142, 103855.

- Saha, A.; Tejankar, A.; Koohpayegani, S.A.; Pirsiavash, H. Backdoor attacks on self-supervised learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13337–13346.

- Qi, X.; Zhu, J.; Xie, C.; Yang, Y. Subnet replacement: Deployment-stage backdoor attack against deep neural networks in gray-box setting. arXiv 2021, arXiv:2107.07240.

- Li, C.; Pang, R.; Xi, Z.; Du, T.; Ji, S.; Yao, Y.; Wang, T. Demystifying Self-supervised Trojan Attacks. arXiv 2022, arXiv:2210.07346.

- Chen, Y.; Shao, S.; Huang, E.; Li, Y.; Chen, P.Y.; Qin, Z.; Ren, K. Refine: Inversion-free backdoor defense via model reprogramming. arXiv 2025, arXiv:2502.18508.

- Tung, F.; Mori, G. Similarity-preserving knowledge distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1365–1374.

- Manna, S.; Chattopadhyay, S.; Dey, R.; Bhattacharya, S.; Pal, U. Dystress: Dynamically scaled temperature in self-supervised contrastive learning. arXiv 2023, arXiv:2308.01140.

- Fang, Z.; Wang, J.; Wang, L.; Zhang, L.; Yang, Y.; Liu, Z. SEED: Self-supervised distillation for visual representation. arXiv 2021, arXiv:2101.04731.

- Han, T.; Sun, W.; Ding, Z.; Fang, C.; Qian, H.; Li, J.; Chen, Z.; Zhang, X. Mutual information guided backdoor mitigation for pre-trained encoders. IEEE Trans. Inf. Forensics Secur. 2025, 20, 3414–3428.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. Technical Report; University of Toronto: Toronto, ON, Canada, 2009.

- Coates, A.; Ng, A.; Lee, H. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 11–13 April 2011; JMLR Workshop and Conference Proceedings: Cambridge, MA, USA, 2011; pp. 215–223.

- Weidinger, L.; Mellor, J.; Rauh, M.; Griffin, C.; Uesato, J.; Huang, P.S.; Cheng, M.; Glaese, M.; Balle, B.; Kasirzadeh, A.; et al. Ethical and social risks of harm from Language Models. arXiv 2021, arXiv:2112.04359.

- Shevlane, T. Structured access: An emerging paradigm for safe AI deployment. arXiv 2022, arXiv:2201.05159.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).