Analysis of Data Privacy Breaches Using Deep Learning in Cloud Environments: A Review

Abstract

1. Introduction

- To review current privacy-preserving technologies integrated with DL.

- To provide an overview of opportunities for future directions in privacy preservation within cloud environments.

- To identify challenges related to the application of DL in cloud computing, such as transaction overhead, cloud infrastructure complexity, data processing, and data loss.

- To examine existing DL-based privacy preservation approaches in cloud computing and identify research gaps and future directions.

2. Background

2.1. Privacy Preservation Technologies

- Data Encryption: Data encryption is a fundamental component of privacy preservation in DL systems because it protects sensitive information where it is stored and processed in cloud environments. While cloud computing gives users easy access to resources, it also introduces privacy and security risks. Encryption safeguards sensitive data by preserving its confidentiality, and, when authenticated encryption is used, its integrity, as information is transmitted to the cloud. Encryption mechanisms embedded within DL systems have demonstrated strong privacy capabilities through advanced encryption algorithms and secure key generation techniques in cloud environments [17].

- Differential privacy: Differential privacy (DP) protects personal data held on cloud computers through a mathematical guarantee: calibrated noise is added so that the output of a computation changes very little whether or not any single individual's record is included in the dataset. During training, this noise prevents attackers from learning details about individual records, even when they have full access to the trained model. However, depending on the chosen noise level and privacy budget, records may still be exposed during model training to leakage through reverse-engineering attempts [18].

- Federated learning (FL): Federated learning allows multiple machines to collaborate in training a shared model while keeping the training data local, which improves the privacy and security of sensitive data. Applied as a DL training technique, FL enables models to be trained on decentralized servers without sharing the actual training data. Cloud environments benefit from this approach because it enables learning from data held in different locations while upholding privacy and security requirements [19].

- A hybrid approach: A hybrid approach combines multiple algorithms and technologies, such as homomorphic encryption (HE), federated learning, and differential privacy, to enhance privacy in DL applications. For example, when training data remain in the cloud, a DL model may first perform feature extraction using privacy-oriented filtering techniques; the training data are then encrypted with HE, and noise is injected into the iterative FL updates to further improve security. Such hybrid approaches have been shown to help prevent gradient attacks and data breaches in cloud environments [20].
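For reference, the DP guarantee and the FL aggregation step described above are commonly formalized as follows. The notation is introduced here for illustration and is not taken from the cited works: $\mathcal{M}$ is a randomized mechanism (for example, a training procedure), $D$ and $D'$ are any two datasets differing in a single record, and $S$ is any set of outputs. For federated averaging (FedAvg, McMahan et al.), $K$ clients hold $n_k$ samples each, $n = \sum_{k} n_k$, and $w_{t+1}^{k}$ is the model returned by client $k$ after local training in round $t$.

$$
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S]
$$

$$
w_{t+1} = \sum_{k=1}^{K} \frac{n_k}{n} \, w_{t+1}^{k}
$$

A smaller $\varepsilon$ forces the two output distributions closer together, which is why it corresponds to stronger privacy.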

2.2. Challenges of Preserving Privacy on Cloud Platforms

2.2.1. Energy Efficiency and Training Cost

2.2.2. Scalability

2.2.3. Data Privacy

2.2.4. Cloud Interoperability

2.3. Security Threats in Cloud Environment

- Insider threats: Insider threats occur when individuals with legitimate access abuse their privileges to specific services in the cloud environment. They are often carried out through social engineering attacks that steal administrator credentials. Insider threats are difficult to detect because cloud environments have complex monitoring requirements and extensive privileged-access infrastructure [29].

- Insecure application programming interfaces (APIs): APIs provide programmatic access to cloud resources, making cloud services accessible to external systems. Malicious actors can exploit APIs with insecure designs to compromise cloud systems; vulnerabilities in these APIs may allow attackers to bypass authentication and access data without authorization. Modern cloud environments therefore require stronger security standards for API connectivity [30].

- Side-channel attacks: Side-channel attacks steal confidential data from cloud-based applications and services by exploiting physical and logical information leaks. Attackers infer sensitive information by monitoring the observable behavior of cloud services, such as timing variations, power consumption, and electromagnetic emanations on shared cloud resources [31].

- Cryptographic algorithms: Secure data handling in cloud computing systems relies on cryptographic algorithms for both data encryption and secure network protocols. The confidentiality of cloud data is at risk when attackers target weak cryptographic mechanisms or improper implementations. Attacks that break encryption either use brute force, for example exhaustively trying keys against the ciphertext alone, or exploit weaknesses in the cryptographic algorithms themselves, allowing attackers to obtain protected information. Protecting data privacy in cloud systems therefore depends on strong cryptographic methods, since cloud providers rely primarily on encryption to protect their customers' data [32].

- Data breaches: Unauthorized access to cloud-stored confidential information occurs due to cloud infrastructure vulnerabilities, weaknesses in user authentication practices, and user configuration errors. The disclosure of sensitive data during a breach results in severe privacy violations, financial damage, and a damaged organizational reputation [33].
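To make the timing-variation leak described under side-channel attacks concrete, the following Python sketch contrasts a naive secret comparison, whose running time depends on how many leading bytes of a guess are correct, with the standard-library `hmac.compare_digest`, which is designed to avoid content-based short-circuiting. The API-token scenario and the function names are hypothetical illustrations, not taken from the cited works; cache, power, and electromagnetic side channels on shared hardware require different, lower-level defenses.

```python
import hmac

def leaky_equals(secret: bytes, guess: bytes) -> bool:
    """Naive check (hypothetical): exits at the first mismatching byte, so the
    response time reveals how much of `guess` is already correct."""
    if len(secret) != len(guess):
        return False
    for a, b in zip(secret, guess):
        if a != b:
            return False  # early exit: timing depends on the mismatch position
    return True

def constant_time_equals(secret: bytes, guess: bytes) -> bool:
    """Uses hmac.compare_digest, which avoids content-based short-circuiting."""
    return hmac.compare_digest(secret, guess)

# Hypothetical secret held by a cloud service that checks incoming API tokens.
API_TOKEN = b"example-api-token"
```

Both functions return the same answers; only the second avoids leaking, through its running time, where a guessed token first goes wrong.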

3. Methodology

3.1. Survey Questions

- What are the current strategies used to improve privacy when deploying various DL applications in a cloud environment?

- What are the limitations of existing studies that deploy privacy preservation in cloud environments?

- What are the major vulnerabilities to data privacy introduced when DL is applied on cloud platforms?

- How can DL applications be designed to provide strong, guaranteed privacy preservation using techniques such as differential privacy, homomorphic encryption, or federated learning?

- What are the future research directions for applying DL to privacy preservation in cloud computing?

3.2. Identification Stage

3.3. Scanning Stage

3.4. Inclusion and Exclusion Stage

- This review paper focuses on hybrid approaches used to enhance privacy in cloud environments, such as DL combined with FL, HE, or DP.

- It excludes papers that rely on a single technology alone, such as FL, HE, or DL, rather than combining DL with a privacy-preserving technique for cloud environments.

- The search process filters out non-English language papers and excludes studies published before 2020.

4. Related Work

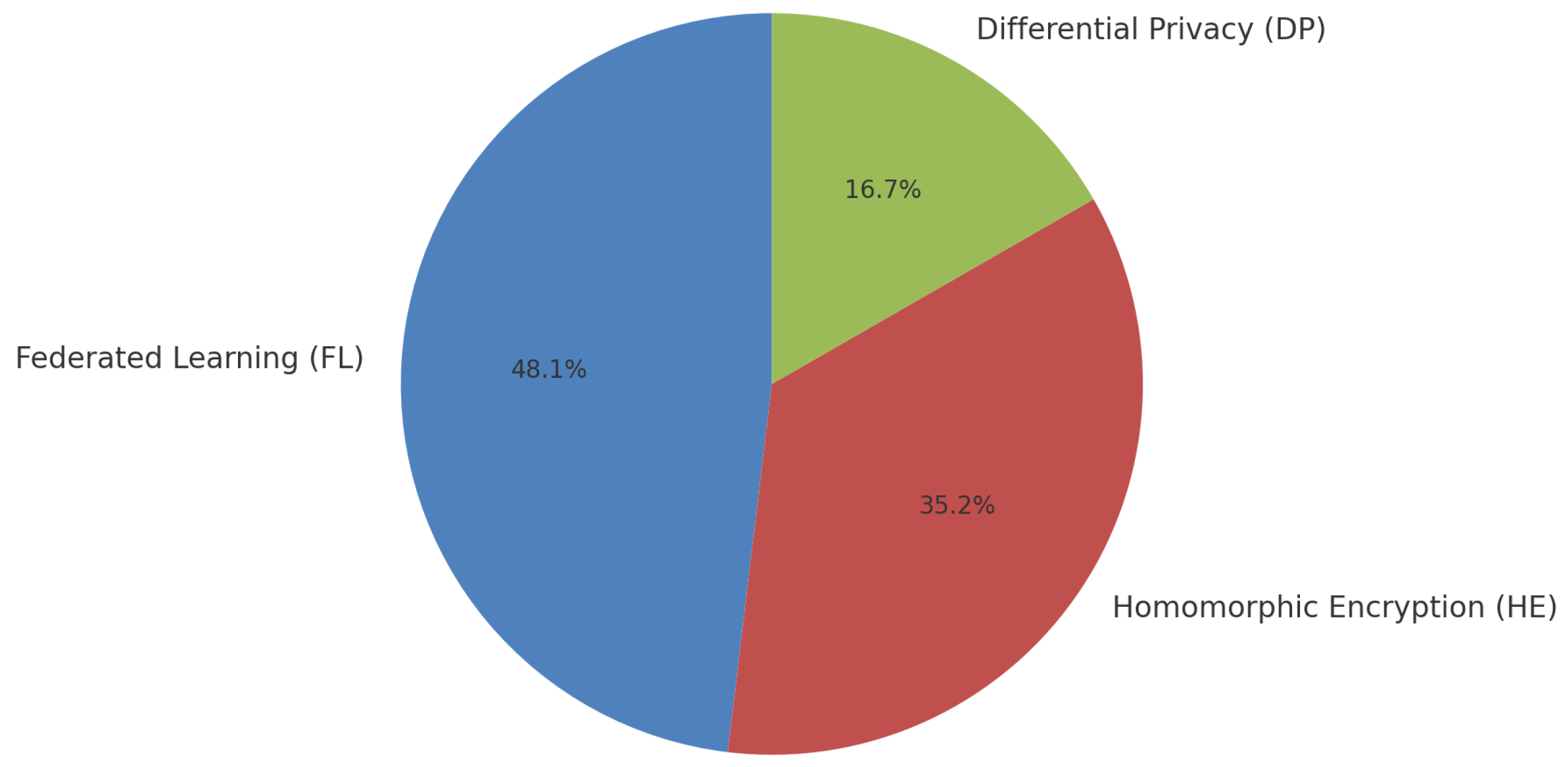

4.1. Federated Learning

4.2. Hybrid Approach

4.3. Homomorphic Encryption

4.4. Differential Privacy

5. Discussion

6. Future Directions

7. Proposed Work

7.1. Fully Federated Learning with LSTM Models

- LSTM Model: LSTM models are selected because they process sequential input efficiently, with each MNIST image presented to the model as a sequence; their memory cells and gating mechanisms are designed to capture long-term dependencies.

- Local Training: Each client $i$ computes gradients $g_i$ from its local data.

- Global Model Update: The server combines these gradients and uses the result to update the global model.
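A minimal Python sketch of this local-training and global-update loop is shown below. To keep it self-contained, a one-feature linear model trained with mean squared error stands in for the LSTM, and the three synthetic clients, the learning rate, and the helper names are hypothetical. The server simply averages the clients' gradients with equal weights, which is appropriate here because every client holds the same number of samples.

```python
import random

def local_gradient(w, b, data):
    """Client-side step: gradient of the mean squared error of y ~ w*x + b
    on this client's private data (a toy stand-in for LSTM backpropagation)."""
    m = len(data)
    gw = sum(2 * (w * x + b - y) * x for x, y in data) / m
    gb = sum(2 * (w * x + b - y) for x, y in data) / m
    return gw, gb

def federated_round(w, b, clients, lr=0.1):
    """One round: clients compute g_i locally; only gradients reach the server."""
    grads = [local_gradient(w, b, data) for data in clients]
    # Server-side step: average the client gradients (equal weights, since every
    # client holds the same number of samples) and update the global model.
    gw = sum(g[0] for g in grads) / len(grads)
    gb = sum(g[1] for g in grads) / len(grads)
    return w - lr * gw, b - lr * gb

def make_client(seed, size=50):
    """Hypothetical client dataset drawn from y = 3x + 0.5 (noise-free)."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(size)]
    return [(x, 3.0 * x + 0.5) for x in xs]

clients = [make_client(seed) for seed in range(3)]
w, b = 0.0, 0.0
for _ in range(500):
    w, b = federated_round(w, b, clients)
```

After the loop, `w` and `b` approach the generating values 3.0 and 0.5, even though no client's raw data ever leaves the client.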

7.2. Homomorphic Encryption for Secure Gradient Aggregation

- Encryption Scheme: Local gradients are encrypted with the help of a fully homomorphic encryption scheme that employs 24 keys.

- Key Management: A key pair is used: the public key is given to clients for encryption, and the secret key is kept by the server or shared only with trusted parties.

- Gradient Aggregation: The server homomorphically computes the sum of the encrypted gradients without decrypting any individual client's gradient.

- Decryption: The server decrypts the aggregate of gradients to update the global model.
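The proposed design specifies a fully homomorphic scheme. The Python sketch below instead uses the Paillier cryptosystem, which is only additively homomorphic but is sufficient to illustrate summing encrypted gradients and decrypting only the aggregate. The primes are deliberately tiny and hard-coded (insecure), gradients are treated as scalars encoded in fixed point, and all names and values are hypothetical; a real deployment would use a vetted library and realistic key sizes.

```python
import math
import random

# Toy Paillier keys from small, well-known primes: INSECURE, for illustration only.
def keygen(p=104_729, q=1_299_709):
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1                    # common simplified generator choice
    mu = pow(lam, -1, n)         # with g = n + 1, mu = lam^-1 mod n (Python 3.8+)
    return (n, g), (lam, mu, n)  # public key, secret key

def encrypt(pk, m, rng=random.SystemRandom()):
    n, g = pk
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:   # r must be invertible modulo n
        r = rng.randrange(1, n)
    return (pow(g, m, n2) * pow(r, n, n2)) % n2

def decrypt(sk, c):
    lam, mu, n = sk
    n2 = n * n
    L = (pow(c, lam, n2) - 1) // n  # Paillier's L(x) = (x - 1) / n
    return (L * mu) % n

def add_encrypted(pk, c1, c2):
    # Multiplying ciphertexts adds the underlying plaintexts modulo n.
    n, _ = pk
    return (c1 * c2) % (n * n)

SCALE = 10**6  # hypothetical fixed-point precision for real-valued gradients

def encode(x, n):
    return round(x * SCALE) % n  # negative values wrap around modulo n

def decode(v, n):
    if v > n // 2:               # upper half of Z_n represents negative values
        v -= n
    return v / SCALE

pk, sk = keygen()
n = pk[0]
client_grads = [0.12, -0.05, 0.31]  # hypothetical scalar gradients, one per client
ciphertexts = [encrypt(pk, encode(g, n)) for g in client_grads]
aggregate = ciphertexts[0]
for c in ciphertexts[1:]:
    aggregate = add_encrypted(pk, aggregate, c)  # server never sees any single g_i
total = decode(decrypt(sk, aggregate), n)
```

Only the secret-key holder can recover `total` (the sum 0.38 here), and only the aggregate is ever decrypted.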

7.3. Differential Privacy with Laplace Noise

- Laplace Mechanism: Adds noise drawn from the Laplace distribution $\mathrm{Lap}(\Delta f / \varepsilon)$ to the aggregated gradients, where $\Delta f$ is the sensitivity and $\varepsilon$ is the privacy budget.

- Privacy Budget ($\varepsilon$): Regulates the trade-off between accuracy and privacy; the smaller the value of $\varepsilon$, the stronger the privacy, but this may lower accuracy.

- Noise Addition: Noise is added once, after homomorphic aggregation, rather than to each individual client's gradient; this avoids excessive noise and improves the utility of the model.

- Privacy Accounting: The cumulative privacy loss must be tracked across training rounds to ensure that the overall DP guarantee is respected.
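The Laplace mechanism and a simple privacy accountant can be sketched in Python as follows. Laplace samples are generated as the difference of two exponential variables, and the accountant assumes basic sequential composition (per-round $\varepsilon$ values simply add up), which is a conservative choice; tighter methods, such as the moments accountant of Abadi et al., report a smaller cumulative loss for the same noise. The class name, sensitivity, and budget values are hypothetical.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential variables with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def privatize(aggregated_grad, sensitivity, epsilon, rng):
    """Laplace mechanism: add independent Lap(sensitivity / epsilon) noise to
    every coordinate; `sensitivity` is the L1 sensitivity of the whole vector."""
    scale = sensitivity / epsilon
    return [g + laplace_noise(scale, rng) for g in aggregated_grad]

class BasicAccountant:
    """Tracks cumulative privacy loss under basic sequential composition,
    where the epsilons of successive releases simply add up."""

    def __init__(self, total_budget: float):
        self.total_budget = total_budget
        self.spent = 0.0

    def spend(self, epsilon: float) -> None:
        if self.spent + epsilon > self.total_budget:
            raise RuntimeError("privacy budget exhausted; stop training")
        self.spent += epsilon

rng = random.Random(0)
accountant = BasicAccountant(total_budget=1.0)
aggregated = [0.38, -0.10]  # hypothetical decrypted aggregate gradient
for _ in range(4):          # four rounds at epsilon = 0.25 each
    accountant.spend(0.25)
    noisy = privatize(aggregated, sensitivity=0.1, epsilon=0.25, rng=rng)
```

Checking the budget before each release means training stops once the total $\varepsilon$ would be exceeded, instead of silently weakening the guarantee.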

7.4. Algorithmic Description (Privacy-Preserving Federated Learning with HE and DP)

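- Input: $n$ clients with local datasets $D_1, \dots, D_n$; number of rounds $T$; learning rate $\eta$; sensitivity $\Delta f$; privacy budget $\varepsilon$.

- The server initializes the global model parameters $w^{(0)}$.

- The server generates a homomorphic encryption key pair $(pk, sk)$.

- The server distributes $pk$ to all clients.

- For each round $t = 0, 1, \dots, T-1$:

  - Each client $i = 1, \dots, n$, in parallel:

    - computes the local gradient $g_i^{(t)} = \nabla_w \mathcal{L}\big(w^{(t)}; D_i\big)$;

    - encrypts it using the public key: $c_i^{(t)} = \mathrm{Enc}_{pk}\big(g_i^{(t)}\big)$;

    - sends $c_i^{(t)}$ to the server.

  - The server aggregates the encrypted gradients homomorphically: $C^{(t)} = c_1^{(t)} \oplus \cdots \oplus c_n^{(t)} = \mathrm{Enc}_{pk}\big(\sum_{i=1}^{n} g_i^{(t)}\big)$.

  - The server decrypts the aggregate gradient: $G^{(t)} = \mathrm{Dec}_{sk}\big(C^{(t)}\big) = \sum_{i=1}^{n} g_i^{(t)}$.

  - The server adds Laplace noise for differential privacy: $\tilde{G}^{(t)} = G^{(t)} + \mathrm{Lap}(\Delta f / \varepsilon)$.

  - The server updates the global model parameters: $w^{(t+1)} = w^{(t)} - \frac{\eta}{n} \, \tilde{G}^{(t)}$.

  - The server broadcasts the updated model $w^{(t+1)}$ to all clients.

- Training is repeated until the maximum number of rounds $T$ is reached.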

7.5. Experimental Setup and Evaluation

- Dataset: The MNIST dataset.

- Model: An LSTM network for sequence modeling.

- Metrics:

  - Accuracy and F1 score: Measure the performance of the LSTM model.

  - Execution Speed: Time per training round, including encryption and decryption overhead.

  - Privacy Leakage: Privacy preservation measured empirically through attacks on the model.

- Baselines: The author will compare the LSTM model under different privacy-preservation approaches, such as the following:

  - Federated learning with DP and HE.

  - Federated learning without encryption or DP.
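The accuracy and F1 metrics listed above can be computed as in the following Python sketch. The evaluation plan does not state how F1 is averaged over MNIST's ten classes; macro-averaging (the unweighted mean of per-class F1 scores) is assumed here, and the function names and example labels are hypothetical.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p was wrong
            fn[t] += 1  # true class t was missed
    labels = set(y_true) | set(y_pred)
    scores = []
    for c in labels:
        denom = 2 * tp[c] + fp[c] + fn[c]
        scores.append(2 * tp[c] / denom if denom else 0.0)
    return sum(scores) / len(scores)

# Hypothetical labels for five test digits
y_true = [0, 0, 1, 1, 2]
y_pred = [0, 1, 1, 1, 2]
acc = accuracy(y_true, y_pred)
f1 = macro_f1(y_true, y_pred)
```

Macro-averaging gives every digit class equal weight, so a model that performs poorly on a rare class is penalized more than it would be under plain accuracy.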

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural network |
| DL | Deep learning |
| FL | Federated learning |
| DP | Differential Privacy |
| CC | Cloud computing |
| PPDL | Privacy-preserving deep learning |
| RNN | Recurrent Neural Network |
| LSTM | Long short-term memory |
| GAN | Generative adversarial network |
| HE | Homomorphic encryption |
| DP-DL | Differentially Private Deep Learning |
| API | Application programming interface |
| DDoS | Distributed denial of service |
| VPPFL | Verifiable Privacy-Preserving Federated Learning |
| FCNN | Fully connected neural network |
| MEC | Mobile edge computing |
| PBR | Privacy Budget Regulation |
| DAW | Dynamic Aggregation Weights |
| ESPs | Energy service providers |
| QoS | Quality of Service |
| HFL | Hierarchical Federated Learning |
| LSFL | Lightweight Secure Federated Learning |
| CKKS | Cheon–Kim–Kim–Song |
| P3-VC | Privacy-Preserving Persistent Volume Claims |
| BNN | Binary Neural Networks |
| PL-FedIPEC | Paillier Homomorphic Encryption |
| CFL | Clustered Federated Learning |
| CRP | Credit Risk Prediction |
| RLWE | Ring Learning with Errors |
| HANs | Homomorphic Adversarial Networks |
| PPDDN | Pseudo-Predictive Deep Denoising Network |
| WSNs | Wireless Sensor Networks |
| MSDP | Multi-Scheme Differential Privacy |
| MK-FHE | Multi-Key Fully Homomorphic Encryption |
| GDPR | General Data Protection Regulation |
| HIPAA | Health Insurance Portability and Accountability Act |

References

- Zhang, Z.; Ning, H.; Shi, F.; Farha, F.; Xu, Y.; Xu, J.; Zhang, F.; Choo, K.K.R. Artificial intelligence in cyber security: Research advances, challenges, and opportunities. Artif. Intell. Rev. 2022, 55, 1029–1053.

- Kaur, A.; Luthra, M.P. A review on load balancing in cloud environment. Int. J. Comput. Technol. 2018, 17, 7120–7125.

- Wang, S.; Tuor, T.; Salonidis, T.; Leung, K.K.; Makaya, C.; He, T.; Chan, K. Adaptive federated learning in resource constrained edge computing systems. IEEE J. Sel. Areas Commun. 2019, 37, 1205–1221.

- Rahman, A.; Hasan, K.; Kundu, D.; Islam, M.J.; Debnath, T.; Band, S.S.; Kumar, N. On the ICN-IoT with federated learning integration of communication: Concepts, security-privacy issues, applications, and future perspectives. Future Gener. Comput. Syst. 2023, 138, 61–88.

- Mothukuri, V.; Parizi, R.M.; Pouriyeh, S.; Huang, Y.; Dehghantanha, A.; Srivastava, G. A survey on security and privacy of federated learning. Future Gener. Comput. Syst. 2021, 115, 619–640.

- Li, L.; Li, X.; Jiang, L.; Su, X.; Chen, F. A review on deep learning techniques for cloud detection methodologies and challenges. Signal Image Video Process. 2021, 15, 1527–1535.

- Bishukarma, R. Privacy-preserving based encryption techniques for securing data in cloud computing environments. Int. J. Sci. Res. Arch. 2023, 9, 1014–1025.

- Teerapittayanon, S.; McDanel, B.; Kung, H.T. Distributed deep neural networks over the cloud, the edge and end devices. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 328–339.

- Nita, S.L.; Mihailescu, M.I. On artificial neural network used in cloud computing security-a survey. In Proceedings of the 2018 10th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Iasi, Romania, 28–30 June 2018; pp. 1–6.

- De Azambuja, A.J.G.; Plesker, C.; Schützer, K.; Anderl, R.; Schleich, B.; Almeida, V.R. Artificial intelligence-based cyber security in the context of industry 4.0—A survey. Electronics 2023, 12, 1920.

- Agarwal, A.; Khari, M.; Singh, R. Detection of DDOS attack using deep learning model in Cloud Storage Application—Wireless Personal Communications, SpringerLink. 2021. Available online: https://link.springer.com/article/10.1007/s11277-021-08271-z (accessed on 4 December 2023).

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

- Liu, B.; Li, Y.; Liu, Y.; Guo, Y.; Chen, X. Pmc: A privacy-preserving deep learning model customization framework for edge computing. Proc. ACM Interact. Mobile Wearable Ubiquitous Technol. 2020, 4, 1–25.

- Wang, H.; Yang, T.; Ding, Y.; Tang, S.; Wang, Y. VPPFL: Verifiable Privacy-Preserving Federated Learning in Cloud Environment. IEEE Access 2024, 12, 151998–152008.

- Suganya, M.; Prabha, T. A Comprehensive Analysis of Data Breaches and Data Security Challenges in Cloud Environment. In Proceedings of the 7th International Conference on Innovations and Research in Technology and Engineering (ICIRTE-2022), Organized by VPPCOE & VA, Mumbai, India, 9–10 April 2022.

- Barona, R.; Anita, E.M. A survey on data breach challenges in cloud computing security: Issues and threats. In Proceedings of the 2017 International Conference on Circuit, Power and Computing Technologies (ICCPCT), Kollam, India, 20–21 April 2017; pp. 1–8.

- Gilad-Bachrach, R.; Dowlin, N.; Laine, K.; Lauter, K.; Naehrig, M.; Wernsing, J. Cryptonets: Applying neural networks to encrypted data with high throughput and accuracy. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 201–210, PMLR.

- Choraś, M.; Pawlicki, M. Intrusion detection approach based on optimised artificial neural network. Neurocomputing 2021, 452, 705–715.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 1–19.

- Innocent, A. Cloud infrastructure service management—A review. arXiv 2012, arXiv:1206.6016.

- Mushtaq, M.F.; Akram, U.; Khan, I.; Khan, S.N.; Shahzad, A.; Ullah, A. Cloud computing environment and security challenges: A review. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 183–195.

- Sinnott, R.O.; Cui, S. Benchmarking sentiment analysis approaches on the cloud. In Proceedings of the 2016 IEEE 22nd International Conference on Parallel and Distributed Systems (ICPADS), Wuhan, China, 13–16 December 2016; pp. 695–704.

- Katal, A.; Dahiya, S.; Choudhury, T. Energy efficiency in cloud computing data centers: A survey on software technologies. Clust. Comput. 2023, 26, 1845–1875.

- Chan, K.Y.; Abu-Salih, B.; Qaddoura, R.; Ala’M, A.Z.; Palade, V.; Pham, D.S.; Del Ser, J.; Muhammad, K. Deep Neural Networks in the Cloud: Review, Applications, Challenges and Research Directions. Neurocomputing 2023, 545, 126327.

- Rashid, A.; Dar, R.D. Survey on scalability in cloud environment. Int. J. Adv. Res. Comput. Eng. Technol. (IJARCET) 2016, 5, 2124–2128.

- Li, Z.; Duan, M.; Yu, S.; Yang, W. DynamicNet: Efficient federated learning for mobile edge computing with dynamic privacy budget and aggregation weights. IEEE Trans. Consum. Electron. 2024; early access.

- Achar, S. An overview of environmental scalability and security in hybrid cloud infrastructure designs. Asia Pac. J. Energy Environ. 2021, 8, 39–46.

- Vadisetty, R. Privacy-Preserving Machine Learning Techniques for Data in Multi Cloud Environments. Corros. Manag. 2020, 30, 57–74.

- Abbas, Z. Securing Cloud-Based AI and Machine Learning Models: Privacy and Ethical Concerns. OSF, 2023; preprint.

- Gupta, R.; Gupta, I.; Saxena, D.; Singh, A.K. A differential approach and deep neural network based data privacy-preserving model in cloud environment. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 4659–4674.

- Verma, G. Blockchain-based privacy preservation framework for healthcare data in cloud environment. J. Exp. Theor. Artif. Intell. 2024, 36, 147–160.

- Dixit, P.; Silakari, S. Deep learning algorithms for cybersecurity applications: A technological and status review. Comput. Sci. Rev. 2021, 39, 100317.

- Dean, J.; Corrado, G.; Monga, R.; Chen, K.; Devin, M.; Mao, M.; Ranzato, M.; Senior, A.; Tucker, P.; Yang, K.; et al. Large scale distributed deep networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25.

- Zheng, K.; Jiang, G.; Liu, X.; Chi, K.; Yao, X.; Liu, J. DRL-based offloading for computation delay minimization in wireless-powered multi-access edge computing. IEEE Trans. Commun. 2023, 71, 1755–1770.

- Su, Z.; Wang, Y.; Luan, T.H.; Zhang, N.; Li, F.; Chen, T.; Cao, H. Secure and efficient federated learning for smart grid with edge-cloud collaboration. IEEE Trans. Ind. Inform. 2021, 18, 1333–1344.

- Zhou, J.; Pal, S.; Dong, C.; Wang, K. Enhancing quality of service through federated learning in edge-cloud architecture. Ad Hoc Networks 2024, 156, 103430.

- Makkar, A.; Ghosh, U.; Rawat, D.B.; Abawajy, J.H. Fedlearnsp: Preserving privacy and security using federated learning and edge computing. IEEE Consum. Electron. Mag. 2021, 11, 21–27.

- Liu, L.; Zhang, J.; Song, S.H.; Letaief, K.B. Client-edge-cloud hierarchical federated learning. In Proceedings of the ICC 2020-2020 IEEE International Conference on Communications (ICC), Virtual, 7–11 June 2020; pp. 1–6.

- Zhang, Z.; Wu, L.; Ma, C.; Li, J.; Wang, J.; Wang, Q.; Yu, S. LSFL: A lightweight and secure federated learning scheme for edge computing. IEEE Trans. Inf. Forensics Secur. 2022, 18, 365–379.

- Qayyum, A.; Ahmad, K.; Ahsan, M.A.; Al-Fuqaha, A.; Qadir, J. Collaborative federated learning for healthcare: Multi-modal covid-19 diagnosis at the edge. IEEE Open J. Comput. Soc. 2022, 3, 172–184.

- Rahulamathavan, Y. Privacy-preserving similarity calculation of speaker features using fully homomorphic encryption. arXiv 2022, arXiv:2202.07994.

- Parra-Ullauri, J.M.; Madhukumar, H.; Nicolaescu, A.C.; Zhang, X.; Bravalheri, A.; Hussain, R.; Simeonidou, D. kubeFlower: A privacy-preserving framework for Kubernetes-based federated learning in cloud–edge environments. Future Gener. Comput. Syst. 2024, 157, 558–572.

- Vizitiu, A.; Nita, C.I.; Toev, R.M.; Suditu, T.; Suciu, C.; Itu, L.M. Framework for Privacy-Preserving Wearable Health Data Analysis: Proof-of-Concept Study for Atrial Fibrillation Detection. Appl. Sci. 2021, 11, 9049.

- Aziz, R.; Banerjee, S.; Bouzefrane, S.; Le Vinh, T. Exploring homomorphic encryption and differential privacy techniques towards secure federated learning paradigm. Future Internet 2023, 15, 310.

- Korkmaz, A.; Rao, P. A Selective Homomorphic Encryption Approach for Faster Privacy-Preserving Federated Learning. arXiv 2025, arXiv:2501.12911.

- Choi, S.; Patel, D.; Zad Tootaghaj, D.; Cao, L.; Ahmed, F.; Sharma, P. FedNIC: Enhancing privacy-preserving federated learning via homomorphic encryption offload on SmartNIC. Front. Comput. Sci. 2024, 6, 1465352.

- Qiang, W.; Liu, R.; Jin, H. Defending CNN against privacy leakage in edge computing via binary neural networks. Future Gener. Comput. Syst. 2021, 125, 460–470.

- He, C.; Liu, G.; Guo, S.; Yang, Y. Privacy-preserving and low-latency federated learning in edge computing. IEEE Internet Things J. 2022, 9, 20149–20159.

- Lin, L.; Zhang, X. PPVerifier: A privacy-preserving and verifiable federated learning method in cloud-edge collaborative computing environment. IEEE Internet Things J. 2022, 10, 8878–8892.

- Zhang, L.; Xu, J.; Vijayakumar, P.; Sharma, P.K.; Ghosh, U. Homomorphic encryption-based privacy-preserving federated learning in IoT-enabled healthcare system. IEEE Trans. Netw. Sci. Eng. 2022, 10, 2864–2880.

- Zhou, C.; Fu, A.; Yu, S.; Yang, W.; Wang, H.; Zhang, Y. Privacy-preserving federated learning in fog computing. IEEE Internet Things J. 2020, 7, 10782–10793.

- Wang, H.; Zhao, Y.; He, S.; Xiao, Y.; Tang, J.; Cai, Z. Federated learning-based privacy-preserving electricity load forecasting scheme in edge computing scenario. Int. J. Commun. Syst. 2024, 37, e5670.

- Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Zomaya, A.Y. Federated learning for COVID-19 detection with generative adversarial networks in edge cloud computing. IEEE Internet Things J. 2021, 9, 10257–10271.

- Dong, W.; Lin, C.; He, X.; Huang, X.; Xu, S. Privacy-Preserving Federated Learning via Homomorphic Adversarial Networks. arXiv 2024, arXiv:2412.01650.

- Hayati, H.; van de Wouw, N.; Murguia, C. Privacy in Cloud Computing through Immersion-based Coding. arXiv 2024, arXiv:2403.04485.

- Zhao, L.; Wang, Q.; Zou, Q.; Zhang, Y.; Chen, Y. Privacy-preserving collaborative deep learning with unreliable participants. IEEE Trans. Inf. Forensics Secur. 2020, 15, 1486–1500.

- Naresh, V.S. PPDNN-CRP: Privacy-preserving deep neural network processing for credit risk prediction in cloud: A homomorphic encryption-based approach. J. Cloud Comput. 2024, 13, 149.

- Akram, A.; Khan, F.; Tahir, S.; Iqbal, A.; Shah, S.A.; Baz, A. Privacy preserving inference for deep neural networks: Optimizing homomorphic encryption for efficient and secure classification. IEEE Access 2024, 12, 15684–15695.

- Yang, X.; Chen, J.; He, K.; Bai, H.; Wu, C.; Du, R. Efficient privacy-preserving inference outsourcing for convolutional neural networks. IEEE Trans. Inf. Forensics Secur. 2023, 18, 4815–4829.

- Lam, K.Y.; Lu, X.; Zhang, L.; Wang, X.; Wang, H.; Goh, S.Q. Efficient FHE-based privacy-enhanced neural network for trustworthy AI-as-a-service. IEEE Trans. Dependable Secur. Comput. 2024, 21, 4451–4468.

- Song, C.; Huang, R. Secure convolution neural network inference based on homomorphic encryption. Appl. Sci. 2023, 13, 6117.

- Prabhu, M.; Revathy, G.; Kumar, R.R. Deep learning based authentication secure data storing in cloud computing. Int. J. Comput. Eng. Optim. 2023, 1, 10–14.

- Li, L.; Zhu, H.; Zheng, Y.; Wang, F.; Lu, R.; Li, H. Efficient and privacy-preserving fusion based multi-biometric recognition. In Proceedings of the GLOBECOM 2022-2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 4860–4865.

- Choi, H.; Woo, S.S.; Kim, H. Blind-touch: Homomorphic encryption-based distributed neural network inference for privacy-preserving fingerprint authentication. In Proceedings of the AAAI Conference on Artificial Intelligence, Stanford, CA, USA, 25–27 March 2024; Volume 38, pp. 21976–21985.

- Owusu-Agyemeng, K.; Qin, Z.; Xiong, H.; Liu, Y.; Zhuang, T.; Qin, Z. MSDP: Multi-scheme privacy-preserving deep learning via differential privacy. Pers. Ubiquitous Comput. 2023, 27, 221–233.

- Sharma, J.; Kim, D.; Lee, A.; Seo, D. On differential privacy-based framework for enhancing user data privacy in mobile edge computing environment. IEEE Access 2021, 9, 38107–38118.

- Gayathri, S.; Gowri, S. Securing medical image privacy in cloud using deep learning network. J. Cloud Comput. 2023, 12, 40.

- Bukhari, S.M.S.; Zafar, M.H.; Abou Houran, M.; Moosavi, S.K.R.; Mansoor, M.; Muaaz, M.; Sanfilippo, F. Secure and privacy-preserving intrusion detection in wireless sensor networks: Federated learning with SCNN-Bi-LSTM for enhanced reliability. Ad Hoc Netw. 2024, 155, 103407.

- Feng, L.; Du, J.; Fu, C.; Song, W. Image encryption algorithm combining chaotic image encryption and convolutional neural network. Electronics 2023, 12, 3455.

- Sharma, J.; Kim, D.; Lee, A.; Seo, D. Differential privacy using fuzzy convolution neural network (DP-FCNN) with Laplace mechanism and authenticated access in edge computing. In Proceedings of the 2021 15th International Conference on Ubiquitous Information Management and Communication (IMCOM), Seoul, Republic of Korea, 4–6 January 2021; pp. 1–5.

| DL Technique | Main Concept | Application in Privacy Preservation | Advantages | Challenges |
|---|---|---|---|---|
| Federated Learning (FL) | Distributed training without centralizing data | Protects raw user data by keeping them on local devices during model training | Enhances privacy and reduces data-transfer risks | Communication overhead and model poisoning attacks |
| Differentially Private Deep Learning (DP-DL) | Adds calibrated noise during model training | Prevents the leakage of individuals’ data from trained models | Strong mathematical privacy guarantees | Trade-off between model accuracy and the level of data privacy |
| Homomorphic Encryption with DL | Enables computation on encrypted data | Allows DL models to process encrypted user data without decryption | Strong end-to-end privacy preservation | High computational overhead and slower inference times |
| Hybrid Approaches | Combine distributed training, noise injection, and encrypted computation | Ensure multi-layered privacy through local training, data perturbation, and encrypted processing | Maximize privacy protection while enabling collaborative learning | Increased complexity, higher resource demands, and integration challenges |

| Company (Year) | Reason for Data Breach | DL-Based Cybersecurity Mitigation |
|---|---|---|
| Yahoo (2013) | Outdated encryption algorithm | DL model for auditing and monitoring the system. |
| Equifax (2017) | Exploited vulnerability in Apache server | DL model to prioritize critical updates. |
| Facebook (2019) | Misconfigured cloud storage led to breaches affecting around 540 million user records | DL model to detect misconfigurations and enforce best practices in cloud environments. |
| Capital One (2019) | Misconfigured AWS firewall allowed unauthorized access | DL-based intrusion detection system (IDS) to monitor and detect anomalous access attempts. |
| Marriott International (2018) | Compromised credentials led to unauthorized database access | DL model to distinguish attacker activities from normal user behavior. |
| Microsoft (2023) | Weakness in key management led to stolen cryptographic key | DL model to improve key management and predict needed patches. |
| AT&T (2023) | Data breach caused by weakness in third-party provider | DL model for automatic system assessment. |
| Hewlett Packard Enterprise (2023) | Large-scale phishing attack led to email system compromise | DL model to detect and block sophisticated phishing attempts. |
| Atlassian (2019) | Unpatched browser extension led to sensitive data leaks | DL model for behavior monitoring and anomaly detection in browser usage. |
| River City Media (2017) | Misconfigured database exposed 1.4 billion records | DL model to identify and correct cloud misconfigurations. |

| Category | Details |
|---|---|
| Search Strings | (“Privacy” OR “Confidentiality” OR “Data Breaches”) AND (“DL” OR “Deep Learning”) AND (“CC” OR “Cloud Computing”) AND (“Differential Privacy” OR “DP” OR “Homomorphic Encryption” OR “HE” OR “Federated Learning” OR “FL”). |
| Time Span | January 2020–April 2025. |
| Databases Searched | IEEE Xplore, ACM Digital Library, SpringerLink, Saudi Digital Library, and Google Scholar. |
| Inclusion Criteria | English-language studies published from 2020 onward that combine DL with FL, HE, or DP to enhance privacy in cloud environments. |
| Exclusion Criteria | Non-English studies, studies published before 2020, and studies that rely on a single technology alone rather than combining DL with a privacy-preserving technique. |
| Quality Assessment Tools | Manual evaluation based on PRISMA guidelines, focusing on study relevance, methodological clarity, and reporting completeness. |

| Author | Methodology | Key Findings | Dataset | Key Length | Limitation | Suggested Mitigation |

|---|---|---|---|---|---|---|

| Wang et al. [14] | Federated learning and deep learning | Proposed VPPFL with CNN achieving 98% accuracy | MNIST dataset | 1024 bits | Higher computational overhead compared to simpler FL approaches. | Optimize computational complexity via model compression. |

| Li et al. [27] | Federated learning and deep learning | Introduced DynamicNet model with FL and CNN, achieved 92.9% accuracy | MNIST and CIFAR-10 datasets | 1024 bits | Accuracy was lower than expected for enhanced privacy. | Integrate differential privacy algorithm with performance-aware tuning. |

| Su et al. [35] | Federated learning and deep learning | Introduced edge–cloud collaborative FL with DRL incentives to boost participation and model quality in smart grid AIoT. | Local dataset | AES-128 | Communication inefficiencies with non-IID data. | Apply DRL-based incentive mechanisms and edge–cloud orchestration to manage non-IID data and participant reliability. |

| Zhou et al. [36] | Federated learning and deep learning | The model operated in bandwidth-limited environments with an accuracy of 96.3%. | Fashion-MNIST dataset | 2048 bits | Model reliability degraded because of the large amount of added noise. | Apply hierarchical FL with adaptive client selection. |

| Makkar et al. [37] | Federated learning and deep learning | FedLearnSP and CNN model enhanced privacy with distillation for mobile device efficiency. | 900 mobile images | N/A | Model was not evaluated against multiple types of noise under diverse data distributions. | Combine model distillation with personalization layers in FL. |

| Liu et al. [38] | Federated learning and deep learning | FL and blockchain used for cross-border cloud collaboration. | N/A | 2048 bits | Hierarchical architecture increases the time required for privacy preservation in the cloud. | Use layer-2 solutions or off-chain verification. |

| Zhang et al. [39] | Federated learning and deep learning | Applied FL with secure multiparty computation for sensitive education data. | N/A | 2048 bits | Protocol complexity caused latency and dependence on key generation. | Simplify protocol layers and reduce interaction rounds. |

| Qayyum et al. [40] | Federated learning and homomorphic encryption | HE integrated with Clustered FL and CNN for smart grid protection | COVID-19 dataset | N/A | Training time increased substantially, and the approach was restricted by cloud constraints. | Compress encrypted model gradients and use partial updates. |

| Rahulamathavan et al. [41] | Federated learning, fully homomorphic encryption, and deep learning | FL with CKKS and DNN-UBM applied to real-time cloud analytics with CNN models. | Real-time dataset | 128 bits | Implementation complexity and deployment issues. | Develop modular FL pipelines with deployment toolkits. |

| Parra-Ullauri et al. [42] | Federated learning and differential privacy | Proposed kubeFlower K8s operator for FL, ensuring privacy via differential privacy | CIFAR-10 | N/A | Deployment is slower than Helm chart methods and lacks control of network policies by default. | Employ P3-VC and SDN overlays to isolate network flows and manage privacy budgets dynamically. |

| Vizitiu et al. [43] | Federated learning and differential privacy | Enhanced IoT healthcare by combining a DNN-based model (LSTM) with FL and DP | ECG dataset | 64 bits | Temporal data noise affected prediction. | Optimize noise calibration and apply denoising autoencoders. |

| Aziz et al. [44] | Federated learning, homomorphic encryption, and differential privacy | Applied DP and HE to mitigate FL privacy vulnerabilities, with tradeoffs in convergence and computation. | N/A | 2048 bits | Combining DP and HE introduces higher computational costs and complexity in parameter tuning. | Balance privacy and performance using hybrid models with adjustable noise and selective encryption. |

| Korkmaz et al. [45] | Federated learning, homomorphic encryption, and differential privacy | Proposed FAS approach combining selective HE, DP, and bitwise scrambling for faster, secure FL in healthcare applications. | Healthcare dataset | N/A | Limited to specific FL tasks and not generalized to all DL classifiers. | Optimize FAS for broader FL scenarios and evaluate with diverse datasets. |

| Choi et al. [46] | Federated learning and homomorphic encryption | Integrated FL with HE and evaluated it with a CNN model; training time was reduced by 46%. | FEMNIST | 128 bits | Accuracy still needs improvement for reliability. | Enhance model architecture and increase training rounds. |

| Qiang et al. [47] | Federated learning and homomorphic encryption | Combined FL with BNN and an HE scheme using federated clustering, achieving 99.34% accuracy | CIFAR-100 | 128 bits | Scalability affected by large amount of client data. | Apply hierarchical FL with adaptive client selection. |

| He et al. [48] | Federated learning and homomorphic encryption | Proposed PL-FedIPEC using improved Paillier encryption for privacy in FL, reducing latency without accuracy loss. | N/A | 768 bits | The high computational overhead of traditional Paillier HE remains an implementation challenge. | Optimize encryption via pre-computed random values and introduce simpler operations to reduce latency. |

| Lin et al. [49] | Federated learning and homomorphic encryption | FL applied with Paillier encryption for real-time cloud analytics with CNN models, which preserved accuracy above 90% | MNIST dataset | N/A | Lack of details on implementation complexity and high communication overhead. | Optimize and customize the FL model. |

| Zhang et al. [50] | Federated learning and homomorphic encryption | FL and HE combined with cryptographic masking for privacy protection in IoMT environments | HAM10000 dataset | 128 bits | Temporal data noise affected model prediction stability. | Optimize noise calibration and apply denoising autoencoders. |

| Zhou et al. [51] | Federated learning and deep learning | Adaptive FL scheme for industrial cloud environments using federated clustering and Paillier encryption | Fashion-MNIST dataset | 1024 bits | Model reliability degraded because of the large amount of added noise. | Apply hierarchical FL with adaptive client selection. |

| Wang et al. [52] | Federated learning, differential privacy, and deep learning | Combined FL, DP, and RNN, which reduced relative error by 1.58%. | N/A | 2048 bits | Massive number of edge devices impacted accuracy. | Apply hierarchical FL with cluster-based aggregation. |

| Nguyen et al. [53] | Federated learning and differential privacy | Developed FedGAN model with Proof-of-Reputation (PoR) algorithm. | X-ray images | N/A | FedGAN framework produced more overhead than standard blockchain schemes. | Enhance X-ray image preprocessing. |

| Dong et al. [54] | Federated learning and homomorphic encryption | Developed HANs with aggregatable hybrid encryption to prevent key sharing and collaborative decryption. | Local dataset | 128 bits | Communication overhead is 29.2× higher than traditional MK-HE schemes. | Employ lightweight encryption components and reduce redundancy in data sharing. |

| Hayati et al. [55] | Homomorphic encryption and deep learning | Implemented HE and CNN on custom dataset with 97.75% accuracy. | Custom dataset | N/A | High computational cost and training time. | Apply lightweight encryption and parallel model training. |

| Naresh et al. [56] | Homomorphic encryption and deep learning | Proposed PPDNN-CRP model, which combined FL and HE, achieving 80.48% and 77.23% accuracy. | Kaggle loan dataset | N/A | Accuracy was lower than expected under the enhanced privacy setting. | Integrate a homomorphic encryption algorithm with low-overhead key generation. |

| Akram et al. [57] | Homomorphic encryption and deep learning | Applied HE-CNN on MNIST dataset, which showed 97.25% accuracy. | MNIST dataset | N/A | Longer computation time. | Use efficient homomorphic libraries and model pruning. |

| Lam et al. [58] | Homomorphic encryption and deep learning | Combination approach (FHE-PE-NN) achieved 94.8% accuracy | MNIST dataset | 2048 bits | Training latency under real-time constraints. | Optimize model layers and apply fast encryption techniques. |

| Song et al. [59] | Homomorphic encryption and deep learning | Model included CKKS, FHE, and CNN, achieving 99.05% accuracy | MNIST dataset | 2048 bits | Random noise addition impacted model effectiveness. | Use controlled noise injection and calibration. |

| Prabhu et al. [60] | Homomorphic encryption and deep learning | Applied combination model (RSA-CNN) and achieved accuracy up to 99.97% | WSN-DS and CIC-IDS2017 datasets | N/A | High energy and training time due to cryptographic operations. | Apply energy-efficient RSA variants and lightweight CNNs. |

| Li et al. [61] | Homomorphic encryption and deep learning | Designed decentralized multi-biometric recognition using MK-CKKS with neural fusion of face and voice data. | N/A | 128 bits, 192 bits, and 256 bits | Resource-constrained devices limit local encryption and model computation. | Offload training to centralized servers and minimize feature set through fused vectors to reduce computation. |

| Choi et al. [62] | Homomorphic encryption and deep learning | Blind-Touch enables privacy-preserving fingerprint authentication using distributed neural inference and HE | SOKOTO dataset | 192 bits | Computational overhead of HE remains high, especially in deep layers. | Split inference into client–server model and use optimized compression and cluster processing for scalability. |

| Owusu-Agyemang et al. [63] | Homomorphic encryption and deep learning | Integrated MSCryptoNet model with DNN, achieving 86% accuracy | Real-time datasets | 128 bits | Lack of trust assurance and privacy guarantees. | Integrate trust anchors and privacy-preserving modules. |

| Zhao et al. [64] | Homomorphic encryption and deep learning | Integrated MK-FHE model with DNN, which reduced operational cost | N/A | 1024 bits | Multiple parts may reduce model accuracy. | Design a customized encryption model for the cloud computing environment. |

| Yang et al. [65] | Homomorphic encryption and deep learning | Model overcomes challenges of maintaining confidentiality during training. | N/A | 2048 bits | Overhead of computation during encryption phase. | Build a redundancy model based on the encryption model for the inference phase in cloud computing. |

| Sharma et al. [66] | Differential privacy and deep learning | DP-FCNN achieved 97–98% accuracy | Adult and Heart Disease datasets | 80 bits and 128 bits | Long training duration and high cost. | Use gradient clipping and batch optimization. |

| Gayathri et al. [67] | Differential privacy and deep learning | Applied PPDDN to medical images, reporting high performance metrics. | Real-time CT images | 64 bits | Model evaluation required more computation time. | Implement parallel computing and model quantization. |

| Bukhari et al. [68] | Differential privacy and deep learning | ToF sensor embedded with CNN model on edge cloud that achieved 89.2% accuracy on facial expression detection | VL53L5CX ToF depth images | 64 bits | Low-resolution depth images affected result quality. | Enhance image preprocessing and upsampling techniques. |

| Feng et al. [69] | Differential privacy and deep learning | Applied an SCNN-BiLSTM model to high-resolution images, obtaining 95% accuracy | AI-TOD aerial images | N/A | High-resolution images degraded performance when training in the cloud. | Offload training to edge devices or preprocess images. |

| Zhang et al. [70] | Differential privacy and deep learning | Combined VerifyNet model with AES-GCM, which maintained 94.4% accuracy. | N/A | 256 bits | Cryptographic overhead during DL application. | Use lightweight cryptographic primitives and hybrid models. |

| Sharma et al. [71] | Differential privacy and deep learning | Combined multiple privacy preservation methods, including LATENT, LDP, and DP-FCNN, achieving 91–96% accuracy | MNIST dataset | 1024 bits | Lack of clear privacy assurance and performance breakdown. | Enhance documentation and validation with benchmarks. |
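Several of the reviewed schemes (e.g., He et al. [48], Lin et al. [49], and Zhou et al. [51]) use Paillier encryption so that a cloud server can aggregate client updates in FL without reading any individual update, and the Key Length column above reports the modulus sizes involved. The Python snippet below is a minimal, illustrative sketch of that additive-aggregation idea, not the implementation of any cited study. It assumes toy Mersenne primes (2^61 - 1 and 2^89 - 1) instead of 2048-bit random primes, a hypothetical fixed-point scale of 10^6 for encoding real-valued weights, a non-cryptographic random generator, and a single key holder who decrypts only the aggregate; none of these choices comes from the reviewed works.

```python
import math
import random

# Toy Paillier parameters (assumption): the Mersenne primes 2^61 - 1 and 2^89 - 1.
# They are far too small and too well known for real use; deployments use
# randomly generated primes giving a modulus of 2048 bits or more.
P = 2**61 - 1
Q = 2**89 - 1
SCALE = 10**6  # hypothetical fixed-point scale for encoding real-valued weights


def keygen(p, q):
    """Paillier key generation using the common simplification g = n + 1."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    # With g = n + 1, L(g^lam mod n^2) = lam mod n, so mu is simply lam^-1 mod n.
    mu = pow(lam, -1, n)
    return n, (lam, mu)


def encrypt(n, m, rng):
    """c = g^m * r^n mod n^2 with g = n + 1 and a random r coprime to n."""
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, priv, c):
    """m = L(c^lam mod n^2) * mu mod n, where L(u) = (u - 1) // n."""
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


def encode(x, n):
    # Scale to an integer; negative values wrap around modulo n.
    return round(x * SCALE) % n


def decode(v, n):
    # Residues above n/2 are interpreted as negative numbers.
    if v > n // 2:
        v -= n
    return v / SCALE


def secure_average(client_updates, n, priv, rng):
    n2 = n * n
    # Client side: each participant encrypts its own update vector.
    encrypted = [[encrypt(n, encode(w, n), rng) for w in update]
                 for update in client_updates]
    # Server side: multiplying ciphertexts adds the underlying plaintexts,
    # so no individual update is ever decrypted.
    aggregate = encrypted[0]
    for vec in encrypted[1:]:
        aggregate = [(a * b) % n2 for a, b in zip(aggregate, vec)]
    # Key holder: decrypt only the aggregated sum, then average it.
    k = len(client_updates)
    return [decode(decrypt(n, priv, c), n) / k for c in aggregate]


n, priv = keygen(P, Q)
rng = random.Random(0)  # not cryptographically secure; illustration only
clients = [[0.5, -1.25, 2.0], [1.5, 0.25, -2.0], [-0.5, 1.0, 3.0]]
print(secure_average(clients, n, priv, rng))  # -> [0.5, 0.0, 1.0]
```

Because multiplying ciphertexts corresponds to adding plaintexts, the aggregating server never needs the private key. The modular exponentiations over n^2 in `encrypt` and `decrypt` are the source of the computational overhead that many entries in the table list as a limitation, and this cost grows with the key length.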

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Almosti, A.M.; Rahman, M.M.H. Analysis of Data Privacy Breaches Using Deep Learning in Cloud Environments: A Review. Electronics 2025, 14, 2727. https://doi.org/10.3390/electronics14132727

Almosti AM, Rahman MMH. Analysis of Data Privacy Breaches Using Deep Learning in Cloud Environments: A Review. Electronics. 2025; 14(13):2727. https://doi.org/10.3390/electronics14132727

Chicago/Turabian Style: Almosti, Abdulqawi Mohammed, and M. M. Hafizur Rahman. 2025. "Analysis of Data Privacy Breaches Using Deep Learning in Cloud Environments: A Review" Electronics 14, no. 13: 2727. https://doi.org/10.3390/electronics14132727

APA Style: Almosti, A. M., & Rahman, M. M. H. (2025). Analysis of Data Privacy Breaches Using Deep Learning in Cloud Environments: A Review. Electronics, 14(13), 2727. https://doi.org/10.3390/electronics14132727