Abstract

Automated font generation for complex, compositional scripts such as Korean Hangul is challenging because of the script's 11,172 syllabic characters and their intricate component-based structure. Although existing component-based methods for font image generation acknowledge the compositional nature of Hangul, they often fail to explicitly leverage the positional semantics of its basic elements, the jamo, which serve as initial, middle, and final components. This oversight can lead to structural inconsistencies and artifacts in the generated glyphs. This paper introduces a novel two-stage framework that directly addresses this gap by imposing a strong, linguistically informed structural prior on the font image generation process. In the first stage, we employ You Only Look Once version 8 for Segmentation (YOLOv8-Seg), a state-of-the-art instance segmentation network, to decompose Hangul characters into their basic components. Notably, this process yields a dataset of position-aware semantic components, categorizing each jamo according to its structural role within the syllabic block. In the second stage, a conditional Generative Adversarial Network (cGAN) is explicitly conditioned on these extracted positional components to perform style transfer with high structural fidelity. The generator learns to synthesize a character’s appearance by referencing the style of the target components while preserving the content structure of a source character. Our model achieves state-of-the-art performance, reducing the L1 loss to 0.2991 and improving the Structural Similarity Index (SSIM) to 0.9798, quantitatively outperforming existing methods such as MX-Font and CKFont. This position-guided approach demonstrates significant quantitative and qualitative improvements in structured script generation, offers enhanced control over glyph structure, and provides a promising approach for generating font images for other complex, structured scripts.

1. Introduction

1.1. The Significance of Automated Font Generation

In the modern digital era, typography extends beyond its practical function of conveying text; it is a fundamental element of visual communication, user experience (UX), and brand identity. The choice of a font can evoke specific emotions, establish a message’s tone, and significantly affect how an audience perceives and interacts with digital content. A well-executed typographic system enhances readability, creates a clear information hierarchy, and contributes to the overall aesthetic appeal of digital media, from websites and applications to digital signage. However, the manual design of a single font family is a meticulous and labor-intensive process, often requiring months or even years of work by skilled typographers. This challenge is greatly amplified for scripts with large character sets, such as Chinese, Japanese, and Korean (CJK) [1]. The economic and time costs of manual font creation create a significant bottleneck in content creation, digital accessibility, and cultural representation. Consequently, automated font generation (AFG) has emerged as a critical area of research in computer vision and artificial intelligence. By leveraging generative models, AFG aims to synthesize entire font libraries from a small number of reference characters, a paradigm known as few-shot font generation. This technology promises to democratize font design, enable rapid prototyping, preserve calligraphic heritage, and foster greater linguistic diversity in the digital world.

1.2. The Unique Challenge of Hangul Typography

Hangul, the Korean alphabet created in the 15th century, is celebrated for its scientific and phonetic design. Unlike linear alphabets, in which letters are arranged sequentially, Hangul is a featural and syllabic script. Its basic letters, known as jamo, are combined into syllabic blocks, each representing a single syllable. Each block is composed of an initial consonant (choseong), a middle vowel (jungseong), and an optional final consonant (jongseong). With 19 initial consonants, 21 middle vowels, and 27 final consonants, as shown in Figure 1, this compositional principle yields 19 × 21 × 28 = 11,172 unique syllabic characters, where the factor of 28 counts the 27 final consonants plus the option of having no final consonant. This scale makes the manual design of a complete Hangul font set an exceptionally demanding task that can take one to two years.

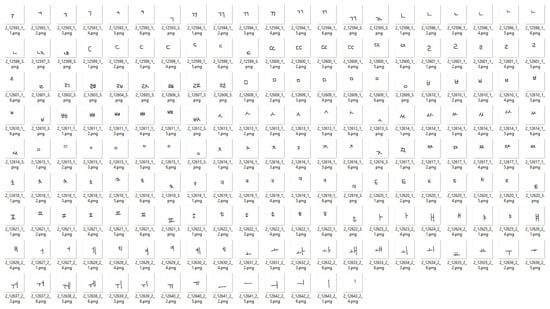

Figure 1.

Basic components of Hangul, the Korean writing system. The rows represent initial consonants (Choseong, 19), vowels (Jungseong, 21), and final consonants (Jongseong, 27).
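The count of 11,172 can be checked directly against Unicode, which assigns every modern Hangul syllable a code point in the range U+AC00 to U+D7A3 using the rule code = 0xAC00 + (L × 21 + V) × 28 + T, where L, V, and T are the indices of the initial, middle, and final jamo (T = 0 means no final consonant). The Python sketch below is only an illustration of this arithmetic and is not part of the proposed framework; the helper names `compose` and `decompose` are hypothetical, while the jamo orderings follow the Unicode Standard.

```python
# Jamo in the order used by the Unicode precomposed-syllable formula.
CHOSEONG = list("ㄱㄲㄴㄷㄸㄹㅁㅂㅃㅅㅆㅇㅈㅉㅊㅋㅌㅍㅎ")                  # 19 initials
JUNGSEONG = list("ㅏㅐㅑㅒㅓㅔㅕㅖㅗㅘㅙㅚㅛㅜㅝㅞㅟㅠㅡㅢㅣ")          # 21 vowels
JONGSEONG = [""] + list("ㄱㄲㄳㄴㄵㄶㄷㄹㄺㄻㄼㄽㄾㄿㅀㅁㅂㅄㅅㅆㅇㅈㅊㅋㅌㅍㅎ")  # "" = no final

SBASE = 0xAC00  # code point of '가'


def compose(initial: str, vowel: str, final: str = "") -> str:
    """Build a syllable block: 0xAC00 + (L * 21 + V) * 28 + T."""
    l = CHOSEONG.index(initial)
    v = JUNGSEONG.index(vowel)
    t = JONGSEONG.index(final)
    return chr(SBASE + (l * len(JUNGSEONG) + v) * len(JONGSEONG) + t)


def decompose(syllable: str) -> tuple[str, str, str]:
    """Invert compose(): recover (initial, vowel, final) from a syllable block."""
    offset = ord(syllable) - SBASE
    if not 0 <= offset < len(CHOSEONG) * len(JUNGSEONG) * len(JONGSEONG):
        raise ValueError(f"{syllable!r} is not a precomposed Hangul syllable")
    l, rest = divmod(offset, len(JUNGSEONG) * len(JONGSEONG))
    v, t = divmod(rest, len(JONGSEONG))
    return CHOSEONG[l], JUNGSEONG[v], JONGSEONG[t]


print(len(CHOSEONG) * len(JUNGSEONG) * len(JONGSEONG))               # 11172
print(compose("ㄱ", "ㅏ"), compose("ㄱ", "ㅗ"), compose("ㄱ", "ㅏ", "ㄱ"))  # 가 고 각
print(decompose("값"))                                               # ('ㄱ', 'ㅏ', 'ㅄ')
```

Note that Unicode treats ‘ㄱ’ in ‘가’, ‘고’, and ‘각’ as the same abstract jamo; the differences in shape and placement discussed next exist only at the glyph level, which is exactly what a font generator must learn.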

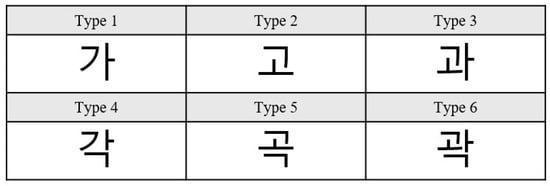

The primary technical challenge in Hangul font generation stems from its deeply positional and graphical nature. The visual form and placement of a jamo are not fixed. They dynamically adapt based on the presence and type of adjacent components within the syllabic block. For instance, the consonant ‘ㄱ’ (g) has a different shape and position when it is an initial consonant in ‘가’ (ㄱ+ㅏ) versus ‘고’ (ㄱ+ㅗ), and is different again when it is a final consonant in ‘각’ (ㄱ+ㅏ+ㄱ) as shown in Figure 2. This non-linear, two-dimensional arrangement has historically posed significant hurdles for mechanization and computerization, from typewriters to early word processors, as it challenges the linear logic behind many Western-centric text technologies. For generative models, this means that simply learning the style of individual jamo is insufficient. The model must also learn the complex rules governing their spatial relationships and morphological transformations, a task that has proven difficult for many existing approaches.

Figure 2.

Six different shapes of “ㄱ”, which vary with its position within the syllabic block.

1.3. Limitations of Existing Approaches

Current research in automated font generation has primarily followed three paradigms, each with inherent limitations when applied to the unique structure of Hangul. First, global methods, often based on pioneering image-to-image translation frameworks such as pix2pix [2] or multi-domain models such as StarGAN [3,4], treat each character glyph as a whole image. While effective for style transfer in a general sense, these models lack an explicit understanding of the character’s underlying compositional structure. When faced with thousands of Hangul characters, they often struggle to maintain the fine-grained details and structural integrity of individual components, leading to common artifacts such as missing strokes, blurriness, or style inconsistencies. Second, component-based methods have emerged to address this by decomposing characters into smaller parts before style transfer. Models such as CKFont [5] and CCFont [6] explicitly use the component structure of Hangul and Chinese characters, respectively. Others, such as MX-Font [7], employ a Mixture of Experts approach to learn localized style features automatically without predefined component labels. While this represents a significant step forward, these methods often encode style into a global vector or fail to explicitly condition the generation process on the specific positional role (initial, middle, or final) of each component. As a result, the correct components may be generated but placed incorrectly within the syllabic block, or a component’s shape may fail to adapt to its position, missing a crucial aspect of Hangul typography. Third, diffusion models [8,9] have recently set a new state of the art in image generation, producing outputs of exceptionally high fidelity. Works such as Diff-Font [10] and FontDiffuser [11] have demonstrated their potential for font generation. However, their primary drawback is computational inefficiency [12,13]. The iterative denoising process required for generation is significantly slower and more resource-intensive than the single-pass generation of GANs, limiting their practicality for applications requiring the rapid generation of large font libraries [14].

1.4. Proposed Framework and Contributions

This paper proposes that the key to high-fidelity Hangul font generation lies in explicitly guiding the generative process with the positional semantics of the character components. We introduce a two-stage framework that first uses a deep learning model to perform semantic segmentation and then uses the results to condition a generative model. By separating the task of structural analysis from stylistic synthesis, our method enforces a strong, linguistically informed prior that resolves many of the structural errors common in previous approaches. The main contributions of this paper are as follows:

- We propose a novel framework for Hangul font generation that, for the first time, explicitly leverages the positional semantics of initial, middle, and final components to guide the style transfer process.

- We introduce a robust methodology for creating a position-aware component dataset by employing the YOLOv8-Seg [15] instance segmentation model to accurately extract and classify jamo based on their structural roles.

- We design a component-conditioned Generative Adversarial Network (GAN) that uses these segmented components as explicit guidance, resulting in demonstrably superior structural integrity and style consistency in the generated characters.

- We conduct extensive experiments, including a crucial ablation study, that quantitatively validate the necessity and effectiveness of our positional component-guided approach, showing significant improvements over state-of-the-art baselines.

1.5. Paper Organization

This paper is organized as follows. Section 2 reviews related work in generative models and font generation. Section 3 details our proposed methodology, covering both the component segmentation and adversarial style transfer stages. Section 4 presents the experimental setup, quantitative and qualitative results, and a critical ablation study. Section 5 discusses the implications, significance, and limitations of our findings. Finally, Section 6 concludes the paper and suggests directions for future research.

2. Related Work

This section provides a critical analysis of the literature that forms the foundation of our work. We review generative models for image translation, the evolution of few-shot font generation techniques, and the specific context of component-based methods, thereby identifying the research gap that our proposed framework aims to fill.

2.1. Generative Adversarial Networks for Image-to-Image Translation

Generative Adversarial Networks (GANs) [16], introduced by Goodfellow et al., have revolutionized the field of image synthesis. A GAN framework consists of two competing neural networks: a Generator that creates synthetic data from random noise, and a Discriminator that attempts to distinguish the synthetic data from real data. This adversarial training process pushes the Generator to produce increasingly realistic outputs. This core concept was extended to image-to-image translation tasks, where the goal is to map an input image from a source domain to a target domain. The pix2pix [2] model was an influential work in this area, demonstrating that a conditional GAN (cGAN) [17] could learn this mapping from paired training data. However, the requirement for perfectly paired datasets is a significant limitation. The CycleGAN [18] architecture addressed this by introducing a cycle-consistency loss, enabling unpaired image-to-image translation, for example, turning horses into zebras without needing images of the same animal in both forms. For font generation, where a single source font may need to be translated into multiple target styles, a multi-domain translation model is more efficient. StarGAN [3,4] provided a unified solution by enabling a single model to learn mappings between multiple domains. It achieves this by feeding the domain (style) label as an additional input to the generator and training the discriminator to classify the domain of the image, thus allowing for flexible style transfer. Our work builds upon these cGAN principles, but instead of using a simple class label, we use rich, spatially explicit component images as the condition.
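To make the difference in conditioning concrete, the NumPy sketch below contrasts StarGAN-style label conditioning, in which a one-hot domain label is replicated over the spatial dimensions and concatenated with the input image, with the spatially varying mask conditioning used in this work. The function names, the channel-first layout, the toy mask regions, and the number of style domains (taken here as the 69 + 92 font styles described in Section 4.1) are illustrative assumptions.

```python
import numpy as np

H = W = 256        # glyph resolution used throughout this paper
NUM_STYLES = 161   # assumption: one domain per font style (69 handwritten + 92 printed)


def stargan_condition(image: np.ndarray, style_id: int, num_styles: int) -> np.ndarray:
    """StarGAN-style input: image channels plus one constant plane per domain label."""
    one_hot = np.zeros(num_styles, dtype=image.dtype)
    one_hot[style_id] = 1
    # Replicate the label over H x W: every pixel receives the same global signal.
    label_planes = np.broadcast_to(one_hot[:, None, None], (num_styles, *image.shape[1:]))
    return np.concatenate([image, label_planes], axis=0)


def mask_condition(image: np.ndarray, masks: np.ndarray) -> np.ndarray:
    """Mask-conditioned input: image channels plus C1/C2/C3 masks that vary per pixel."""
    if masks.shape != (3, *image.shape[1:]):
        raise ValueError("expected three masks (C1, C2, C3) matching the image size")
    return np.concatenate([image, masks], axis=0)


source = np.zeros((1, H, W), dtype=np.float32)  # channel-first grayscale glyph
masks = np.zeros((3, H, W), dtype=np.float32)
masks[0, 40:216, 20:120] = 1.0                  # toy initial-consonant region
masks[1, 20:236, 150:190] = 1.0                 # toy vowel region (no final consonant)

print(stargan_condition(source, 7, NUM_STYLES).shape)  # (162, 256, 256)
print(mask_condition(source, masks).shape)             # (4, 256, 256)
```

The label planes hold the same value at every pixel, so they can tell the generator which style to produce but not where each component belongs; the three mask channels carry exactly that spatial information.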

2.2. Few-Shot Font Generation and Style–Content Disentanglement

The central paradigm in modern few-shot font generation is the disentanglement of content (the character’s identity, e.g., ‘가’) and style (the font’s appearance, e.g., ‘Times New Roman’). In this framework, a content encoder extracts structural features from a source character (typically from a standard, plain font), while a style encoder extracts stylistic features from a few reference images of the target font. These two feature representations are then combined by a decoder to synthesize the source character in the target style [19,20]. This approach has been successfully implemented in various models like EMD [21] and SA-VAE [22]. However, a persistent challenge is achieving perfect disentanglement. Style information often leaks into the content representation and vice versa, leading to artifacts. For instance, if the source font has serifs, remnants of these serifs might appear in the generated image even if the target font is sans-serif. The choice of the source font can significantly impact the final quality, and the model’s ability to generalize to vastly different styles can be limited. Our work tackles this issue by providing the generator with explicit structural guidance in the form of positional components, which helps to regularize the content representation and achieve a cleaner separation from style.

2.3. Component-Based Font Generation

Recognizing the limitations of global approaches for complex scripts, researchers have developed component-based models. These methods leverage the inherent compositionality of characters in languages such as Chinese and Korean. CKFont [5] and CCFont [6] are prime examples. CKFont decomposes Hangul characters into their three constituent jamo (initial, middle, and final) and uses these components to guide the generation process. By learning from local component information, it aims to achieve more accurate style transfer than learning from the global character image. However, while it acknowledges the components, its style transfer mechanism does not fully account for the significant morphological changes and positional shifts that components undergo in different syllabic contexts. The model primarily focuses on the decomposition and recombination of predefined component classes rather than on position-based structure. MX-Font [7] takes a different approach by using a Mixture of Experts (MoE) architecture. Instead of relying on predefined component labels, it employs multiple expert networks that automatically learn to specialize in different local regions or features of a character. This allows the model to capture diverse local styles and to generalize to scripts without explicit component annotations, such as the Latin alphabet. The key limitation here is that these automatically learned experts are not explicitly tied to the linguistically defined and semantically meaningful jamo of Hangul. The model may learn to focus on certain strokes or regions, but it lacks the strong structural prior of knowing what constitutes an initial, middle, or final component and how these must be arranged. Our approach combines the strengths of both philosophies. Like CKFont [5], we use the explicit, linguistically grounded jamo structure.
However, we go a step further by using a powerful segmentation model to extract not just the component class, but its specific shape and position in the target style. This provides a much richer and more precise conditional input to the GAN, directly addressing the positional variance that other models handle less explicitly.

2.4. The Rise of Diffusion Models in Font Generation

In recent years, Denoising Diffusion Probabilistic Models (DDPMs) [8,9] have emerged as the state of the art in high-fidelity image generation, often surpassing GANs in visual quality and training stability. These models work by progressively adding noise to an image and then training a network (typically a U-Net) to reverse the process, learning to denoise an image step by step. Several works, such as Diff-Font [10], have successfully applied this technology to font generation, producing highly realistic and detailed glyphs. Despite their impressive results, diffusion models have a significant practical drawback: slow inference speed [12,13,14]. Generating a single image requires hundreds or thousands of iterative forward passes through the denoising network, making it computationally expensive and time-consuming. This contrasts sharply with GANs, which can produce an image in a single forward pass. For applications requiring the generation of entire font libraries (containing thousands of characters) or real-time font stylization, the efficiency of GANs remains a compelling advantage. Our work therefore focuses on advancing the GAN-based paradigm by incorporating stronger structural guidance, aiming to close the quality gap with diffusion models while retaining superior generation speed.
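As a back-of-the-envelope illustration, assume T = 1000 denoising steps (the setting of the original DDPM formulation) and no accelerated sampler. The number of network evaluations needed to render a complete 11,172-syllable Hangul font is then:

$$N_{\text{diffusion}} = 11{,}172 \times T = 11{,}172 \times 1000 = 11{,}172{,}000, \qquad N_{\text{GAN}} = 11{,}172 \times 1 = 11{,}172$$

This counts forward passes rather than wall-clock time, since the cost of a single pass differs between a denoising U-Net and a GAN generator, but it shows why the gap grows with the size of the character set.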

2.5. Summary and Research Gap

The literature reveals a clear trajectory from global image translation to more nuanced and component-aware methods. However, a critical gap remains in explicitly leveraging the positional semantics of Hangul’s compositional structure to guide the generation process. Existing methods either ignore components, use them without fully accounting for their positional variability, or learn local features that lack linguistic grounding. Table 1 summarizes the key approaches and highlights the unique position of our work.

Table 1.

Comparison of Font Generation Approaches.

As shown, our method is the first to use the output of a dedicated instance segmentation model as a rich, position-aware condition for a generative network. This novel conditioning strategy is designed to enforce the structural rules of Hangul typography directly within the generation process, thereby addressing the key limitations of prior work.

3. Proposed Methodology

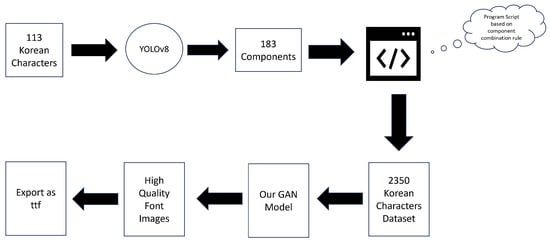

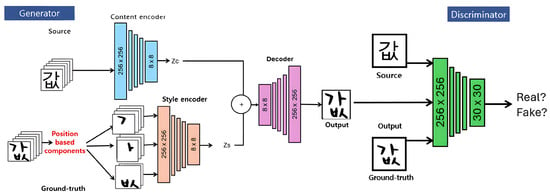

This work introduces a novel two-stage framework that strategically decouples structural analysis from stylistic synthesis. As illustrated in Figure 3, the framework first employs a dedicated instance segmentation model to extract precise, position-aware structural components of the target character. These components, in the form of binary masks, serve as a strong, linguistically informed prior for the second stage. The second stage consists of a conditional Generative Adversarial Network (cGAN) that performs stylistic synthesis.

Figure 3.

Flow diagram of the proposed methodology for Korean font generation.

A standard GAN operates within a vast, unrestricted solution space, tasked with learning both the complex rules of Hangul composition and the specific aesthetic rules of a target font style simultaneously. This problem often results in unstable training and common failure modes such as broken or deformed characters. The proposed two-stage architecture fundamentally reframes this challenge. By providing the generator with the exact target structure via segmentation masks, the solution space is constrained. The generator is no longer required to learn where to place a component or how to shape it for a given syllabic block; its task is simplified to learning how to render the texture and style within the provided high-fidelity shape. This deliberate injection of domain-specific knowledge, the explicit linguistic structure of Hangul, transforms a difficult joint-distribution learning problem into a more manageable and well-defined conditional texture-synthesis problem. This principle of using an analytical pre-processing stage to constrain a generative model is a powerful design pattern that enhances stability and fidelity, with broad applicability to other generative tasks.

3.1. Stage 1: Semantic Decomposition via Instance Segmentation

The first stage of the framework is dedicated to extracting a high-fidelity structural prior from target font images. This process leverages a state-of-the-art instance segmentation model to decompose each Hangul character into its fundamental, position-aware components.

3.1.1. The Role of Instance Segmentation and Choice of YOLOv8-Seg

The task of isolating individual jamo within a character glyph necessitates a model capable of not only identifying objects but also defining their precise pixel-wise boundaries. For this reason, instance segmentation was chosen over less granular techniques like object detection (which provides only bounding boxes) or semantic segmentation (which classifies pixels by category but does not distinguish between individual instances of the same category).

The You Only Look Once version 8 for Segmentation (YOLOv8-Seg) [15] model was selected for this task because of its state-of-the-art performance, high inference speed, and robust architecture. YOLOv8-Seg extends the YOLO object detection framework with a segmentation head. This head, inspired by the YOLACT architecture, operates in parallel with the detection head. It includes a “ProtoNet” [24] module that generates a set of prototype masks for the entire image, while an additional output in the detection head predicts mask coefficients for each detected instance. These outputs are then combined to produce the final instance masks. This efficient, single-pass design makes the model well suited to generating the large datasets required for training the subsequent generative model.

To clarify how YOLOv8-Seg generates instance masks, it is essential to understand its architectural roots in YOLACT (You Only Look At CoefficienTs), a one-stage, fully convolutional segmentation model designed for real-time efficiency. Unlike traditional two-stage approaches that generate regions of interest (RoIs) before segmenting them, YOLACT introduces a parallel dual-branch system operating on backbone features—typically enhanced with a Feature Pyramid Network (FPN). One branch, called the Protonet, produces a set of k instance-agnostic prototype masks that capture general spatial structures, edges, and contours across the entire image. Simultaneously, the detection head predicts bounding boxes, class labels, and a k-dimensional vector of mask coefficients for each detected instance. These coefficients act as weights for a linear combination of the prototype masks, resulting in a unique instance mask per detection. This streamlined design eliminates costly feature re-pooling operations and facilitates real-time segmentation performance. Our selection of YOLOv8-Seg is motivated by its implementation of this YOLACT-inspired mechanism, which we leverage for efficient and accurate structural decomposition of Hangul characters.
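The prototype-coefficient mechanism can be illustrated with a small NumPy sketch. It follows the YOLACT description above (a linear combination of prototypes, a sigmoid, and cropping to the predicted box), but it is a simplified illustration rather than the Ultralytics implementation; the function name, array layouts, toy prototypes, and the 0.5 threshold are assumptions.

```python
import numpy as np


def assemble_instance_masks(prototypes, coefficients, boxes, threshold=0.5):
    """YOLACT-style mask assembly (simplified sketch).

    prototypes:   (k, h, w) instance-agnostic prototype masks from the ProtoNet branch
    coefficients: (n, k) per-instance mask coefficients from the detection head
    boxes:        (n, 4) boxes as (x1, y1, x2, y2) in prototype-pixel coordinates
    returns:      (n, h, w) boolean instance masks
    """
    k, h, w = prototypes.shape
    # Linear combination of the k prototypes, one weight vector per detected instance.
    logits = coefficients @ prototypes.reshape(k, h * w)        # (n, h*w)
    probs = 1.0 / (1.0 + np.exp(-logits.reshape(-1, h, w)))    # sigmoid
    # Suppress everything outside each instance's predicted bounding box.
    ys, xs = np.arange(h)[:, None], np.arange(w)[None, :]
    for i, (x1, y1, x2, y2) in enumerate(boxes):
        inside = (xs >= x1) & (xs < x2) & (ys >= y1) & (ys < y2)
        probs[i] *= inside
    return probs > threshold


# Two toy prototypes on an 8x8 grid: a left-half blob and a right-half blob.
protos = np.zeros((2, 8, 8))
protos[0, :, :4] = 1.0
protos[1, :, 4:] = 1.0
# Instance 0 (e.g., an initial consonant) weights the left prototype; instance 1 the right.
coeffs = np.array([[6.0, -6.0], [-6.0, 6.0]])
boxes = np.array([[0, 0, 8, 8], [0, 0, 8, 8]])
masks = assemble_instance_masks(protos, coeffs, boxes)
print(masks[0, 0].astype(int))  # [1 1 1 1 0 0 0 0]
```

Because the prototypes are computed once per image and each instance only adds a k-dimensional coefficient vector, producing masks for the two or three jamo in a syllable costs little more than producing one.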

3.1.2. Segmentation Dataset and Training Pipeline

To train the segmentation model, a custom dataset was curated to ensure robust performance across a wide range of typographic styles. The dataset consists of 50 Hangul characters, selected to cover a comprehensive range of jamo component combinations. These characters were extracted from 40 different font styles, including both handwritten and printed variants, to capture significant morphological variability.

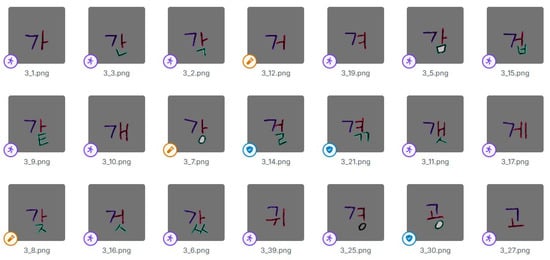

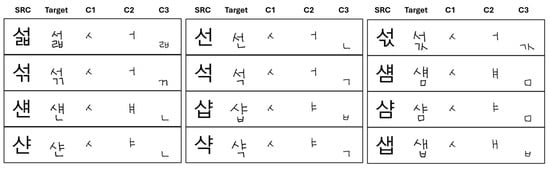

Using the Roboflow [25] annotation platform, each character image was meticulously annotated. A critical aspect of this process was the definition of the class labels. Rather than labeling components by their identity (e.g., ‘ㄱ’, ‘ㅏ’), they were labeled according to their positional–semantic role within the syllabic block, as shown in Figure 4. The model was trained to recognize three distinct classes:

Figure 4.

A segmented dataset prepared on Roboflow for training the YOLOv8-Seg model.

- C1: Initial consonant (choseong)

- C2: Middle vowel (jungseong)

- C3: Final consonant (jongseong)

This classification scheme forces the model to learn the structural context of each component, associating its shape and placement with its linguistic function. The model was trained on this custom dataset until it achieved optimal performance in accurately segmenting and classifying the positional components.
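Because the labels encode position rather than jamo identity, every annotated polygon is stored with one of only three class indices. The sketch below formats a polygon in the plain-text label format read by Ultralytics YOLO segmentation training (one line per object: a class index followed by polygon vertices normalized to [0, 1]). The index assignment C1 → 0, C2 → 1, C3 → 2 and the helper name are assumptions; the actual indices depend on how the Roboflow project was exported.

```python
# Assumed mapping from positional-semantic labels to YOLO class indices.
CLASS_INDEX = {"C1": 0, "C2": 1, "C3": 2}


def polygon_to_yolo_line(label: str, polygon: list[tuple[float, float]],
                         img_w: int, img_h: int) -> str:
    """Format one component polygon as '<class> x1 y1 x2 y2 ...' with normalized coordinates."""
    if len(polygon) < 3:
        raise ValueError("a segmentation polygon needs at least three vertices")
    coords = []
    for x, y in polygon:
        coords += [f"{x / img_w:.6f}", f"{y / img_h:.6f}"]
    return " ".join([str(CLASS_INDEX[label]), *coords])


# A toy rectangular vowel region (C2) on a 256 x 256 glyph image.
line = polygon_to_yolo_line("C2", [(128, 0), (192, 0), (192, 256), (128, 256)], 256, 256)
print(line)
# 1 0.500000 0.000000 0.750000 0.000000 0.750000 1.000000 0.500000 1.000000
```

With only three position-based classes, the same ‘ㄱ’ is labeled C1 in ‘가’ and C3 in ‘각’, so the detector must rely on the component's shape and placement rather than its identity.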

3.1.3. Generation of Positional–Semantic Masks

Once trained, the YOLOv8-Seg model can process any Hangul character image in a target font style and output a segmentation result. As shown in Figure 5, this initial output is typically a color-coded map in which each color corresponds to one of the three positional–semantic classes.

Figure 5.

Predicted component results of the YOLOv8 model.

A subsequent post-processing step, implemented as a Python 3.12.1 script, converts this color-coded map into a set of three distinct, single-channel binary masks. Each mask corresponds to one of the positional classes (C1, C2, or C3) and contains a white silhouette of that component against a black background, preserving its exact shape, size, and location within the 256 × 256 pixel canvas. This set of three binary masks constitutes the spatially explicit structural blueprint that serves as the conditioning signal for the generative stage. Unlike a simple class label or a global style vector, these masks provide the generator with an unambiguous, pixel-level guide, effectively eliminating structural uncertainty from the generation process.
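A minimal sketch of this post-processing step is shown below. It assumes a fixed RGB palette with one color per class and a small per-channel tolerance for anti-aliased edges; both the palette and the tolerance are assumptions, since the paper does not specify its color scheme. If the per-instance mask arrays are available directly from the segmentation model, thresholding those avoids color matching altogether.

```python
import numpy as np

# Hypothetical palette: the real colors depend on how the segmentation output is rendered.
PALETTE = {"C1": (255, 0, 0), "C2": (0, 255, 0), "C3": (0, 0, 255)}


def color_map_to_masks(color_map: np.ndarray, palette=PALETTE, tol: int = 30) -> np.ndarray:
    """Split an (H, W, 3) color-coded map into three (H, W) binary masks ordered C1, C2, C3.

    Pixels within `tol` of a class color (per channel) become 255 (white silhouette);
    all other pixels become 0 (black background). A missing class yields an all-black mask.
    """
    rgb = color_map.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    masks = []
    for name in ("C1", "C2", "C3"):
        target = np.array(palette[name], dtype=np.int16)
        close = np.abs(rgb - target).max(axis=-1) <= tol
        masks.append(np.where(close, 255, 0).astype(np.uint8))
    return np.stack(masks)  # (3, H, W), uint8


# Toy 256 x 256 map for a syllable without a final consonant (e.g., '가').
seg = np.zeros((256, 256, 3), dtype=np.uint8)
seg[40:216, 20:120] = (250, 5, 5)   # slightly off-palette initial consonant
seg[20:236, 150:190] = (0, 255, 0)  # middle vowel
masks = color_map_to_masks(seg)
print(masks.shape, [int(m.any()) for m in masks])  # (3, 256, 256) [1, 1, 0]
```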

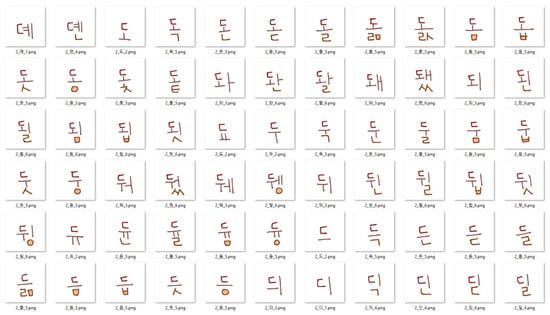

3.1.4. Component Shape Analysis

Following the segmentation and extraction of individual components, we conducted a comprehensive shape analysis to categorize the structural variations across different components. Specifically:

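- The 19 initial consonants (choseong) were grouped into 6 distinct shape categories, so that each initial consonant takes one of 6 position-dependent shapes (as illustrated for “ㄱ” in Figure 2).

- The 21 vowels (jungseong) were divided into 2 categories, depending on whether or not the syllable contains a third (final) component.

- The 27 final consonants (jongseong) were found to fall into a single shape category.

These categorizations yield a total of 183 distinct component shapes, as expressed in Equation (1):

$$N_{\text{shapes}} = 19 \times 6 + 21 \times 2 + 27 \times 1 = 114 + 42 + 27 = 183 \tag{1}$$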

Figure 6 further illustrates these shape variations, offering valuable insights into the positional and structural diversity inherent in Hangul character composition.

Figure 6.

Different shapes and types of Hangul components.

3.2. Stage 2: Adversarial Synthesis with Positional Conditioning

The second stage of the framework employs a conditional Generative Adversarial Network (cGAN) to synthesize the final font character. This network uses the positional–semantic masks generated in Stage 1 as a strong conditional input to guide the style transfer process. The architectures of the generator and the discriminator are shown in Figure 7.

Figure 7.

Model architecture of a generative adversarial network.

3.2.1. Generator Architecture

The generator’s architecture is based on the U-Net design, a proven and highly effective encoder-decoder structure for image-to-image translation tasks, popularized by frameworks such as Pix2Pix [2]. The architecture is specifically adapted to disentangle content and style by employing a dual-encoder system.

- Content Encoder: This encoder takes the source character image as input, typically rendered in a standard, style-neutral font (e.g., Arial Unicode MS). Its function is to extract a high-level, style-agnostic feature representation that captures the abstract identity of the character (e.g., the concept of ‘값’).

- Style Encoder: This encoder receives the three positional–semantic binary masks (C1, C2, C3) generated in Stage 1 as a concatenated input. Its function is to extract a feature vector that encodes the precise structural layout and stylistic shape of the components in the target font.

Both encoders are composed of six convolutional layers. The initial layer utilizes a larger kernel to capture global features, while subsequent layers use smaller kernels to extract finer details. Each convolutional layer is followed by Instance Normalization, which is particularly effective for style transfer tasks as it normalizes feature statistics per instance, and a Leaky ReLU activation function.

The content and style latent vectors are concatenated and fed into the decoder. The decoder consists of six deconvolutional (transposed convolution) layers that progressively upsample the feature representation back to the original image dimensions. A key feature of the U-Net architecture is the use of skip connections, which link each layer i in the encoder to layer n − i in the decoder, where n is the total number of layers. These connections allow low-level feature information, such as sharp edges and fine strokes, to bypass the network’s bottleneck and be directly accessible during reconstruction. This mechanism is critical for preventing the blurriness that often plagues generative models and for ensuring high-fidelity detail in the final output.

The final layer of the decoder uses a tanh activation function, which maps the output pixel values to the range [−1, 1], a standard practice for stabilizing GAN training.
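The encoder-decoder design described above can be sketched in PyTorch as follows. The six-layer encoders, the larger first kernel, Instance Normalization, LeakyReLU, concatenated latents, six transposed-convolution layers, skip connections, and tanh output follow the text; the concrete kernel sizes (7 × 7, then 4 × 4), channel widths, ReLU in the decoder, and feeding skip connections from both encoders are assumptions made for illustration, not the authors' exact configuration.

```python
import torch
import torch.nn as nn


def down(in_ch: int, out_ch: int, k: int = 4, p: int = 1) -> nn.Sequential:
    """Stride-2 convolution -> InstanceNorm -> LeakyReLU (halves the resolution)."""
    return nn.Sequential(nn.Conv2d(in_ch, out_ch, k, stride=2, padding=p),
                         nn.InstanceNorm2d(out_ch), nn.LeakyReLU(0.2))


def up(in_ch: int, out_ch: int) -> nn.Sequential:
    """Stride-2 transposed convolution -> InstanceNorm -> ReLU (doubles the resolution)."""
    return nn.Sequential(nn.ConvTranspose2d(in_ch, out_ch, 4, stride=2, padding=1),
                         nn.InstanceNorm2d(out_ch), nn.ReLU())


class Encoder(nn.Module):
    """Six conv layers: a 7x7 first layer for global context, then 4x4 layers for detail."""

    def __init__(self, in_ch: int, base: int = 64):
        super().__init__()
        self.channels = [base, base * 2, base * 4, base * 8, base * 8, base * 8]
        layers = [down(in_ch, self.channels[0], k=7, p=3)]
        layers += [down(self.channels[i - 1], self.channels[i]) for i in range(1, 6)]
        self.layers = nn.ModuleList(layers)

    def forward(self, x: torch.Tensor) -> list[torch.Tensor]:
        feats = []
        for layer in self.layers:
            x = layer(x)
            feats.append(x)  # 256x256 input -> 128, 64, 32, 16, 8, 4 pixels per side
        return feats


class PositionGuidedGenerator(nn.Module):
    """Dual-encoder U-Net: content from the source glyph, structure from the C1/C2/C3 masks."""

    def __init__(self, base: int = 64):
        super().__init__()
        self.content_enc = Encoder(in_ch=1, base=base)
        self.style_enc = Encoder(in_ch=3, base=base)
        ch = self.content_enc.channels
        # Five upsampling layers; each output is then concatenated with two skip tensors.
        self.ups = nn.ModuleList(
            [up(2 * ch[5] if i == 0 else 3 * ch[5 - i], ch[4 - i]) for i in range(5)])
        # Sixth (final) transposed conv back to one grayscale channel at 256x256.
        self.final = nn.ConvTranspose2d(3 * ch[0], 1, 4, stride=2, padding=1)

    def forward(self, source: torch.Tensor, masks: torch.Tensor) -> torch.Tensor:
        c = self.content_enc(source)          # features of the style-neutral source glyph
        s = self.style_enc(masks)             # layout/shape features of the target components
        x = torch.cat([c[-1], s[-1]], dim=1)  # concatenated latents at the 4x4 bottleneck
        for i, up_layer in enumerate(self.ups):
            x = up_layer(x)
            level = 4 - i                     # encoder level with the same resolution
            x = torch.cat([x, c[level], s[level]], dim=1)  # U-Net skip connections
        return torch.tanh(self.final(x))      # pixel values in [-1, 1]


G = PositionGuidedGenerator(base=16)  # reduced width for a quick shape check
fake = G(torch.randn(2, 1, 256, 256), torch.rand(2, 3, 256, 256))
print(fake.shape)  # torch.Size([2, 1, 256, 256])
```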

3.2.2. Conditional Discriminator Architecture

The discriminator is implemented as a conditional PatchGAN classifier, a design known for its effectiveness in encouraging the generation of realistic high-frequency details. Instead of classifying the entire image as real or fake, a PatchGAN operates on overlapping patches of the image, assessing the authenticity of local textures. This forces the generator to produce sharp, convincing details across the entire glyph.

The discriminator is designed to perform a dual-task function, a key element for enabling multi-style generation akin to the StarGAN framework. Its two objectives are:

- Authenticity Classification: The primary adversarial task is to distinguish real image pairs from fake ones. It receives as input the source character concatenated with either the real ground truth target character or the fake character produced by the generator.

- Style Consistency Classification: The discriminator is also trained to classify the style domain of the input image. This auxiliary task forces the generator to produce images that are not only realistic but also consistent with the target style specified by the conditional masks.

The discriminator’s architecture consists of a series of convolutional layers, each followed by Instance Normalization and Leaky ReLU activations. It outputs two values: one for the real-versus-fake probability and another for the style classification.
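A compact PyTorch sketch of this dual-task discriminator follows. In a PatchGAN the real/fake output is a map of per-patch logits rather than a single scalar, and the style output is a vector of class logits, so the "two values" are tensors. The depth, channel widths, the resulting 16 × 16 patch grid, and global pooling before the style classifier are illustrative assumptions rather than the paper's exact configuration.

```python
import torch
import torch.nn as nn


class ConditionalPatchDiscriminator(nn.Module):
    """PatchGAN-style critic with an auxiliary style-classification head."""

    def __init__(self, num_styles: int, base: int = 64):
        super().__init__()

        def block(in_ch: int, out_ch: int, norm: bool = True) -> list[nn.Module]:
            layers: list[nn.Module] = [nn.Conv2d(in_ch, out_ch, 4, stride=2, padding=1)]
            if norm:
                layers.append(nn.InstanceNorm2d(out_ch))
            layers.append(nn.LeakyReLU(0.2))
            return layers

        # Input: source glyph concatenated with a real or generated target glyph (2 channels).
        self.body = nn.Sequential(
            *block(2, base, norm=False),
            *block(base, base * 2),
            *block(base * 2, base * 4),
            *block(base * 4, base * 8),
        )  # 256x256 input -> 16x16 feature map
        # Head 1: one real/fake logit per overlapping patch.
        self.adv_head = nn.Conv2d(base * 8, 1, 3, stride=1, padding=1)
        # Head 2: style-domain logits pooled over the whole glyph.
        self.style_head = nn.Sequential(nn.AdaptiveAvgPool2d(1), nn.Flatten(),
                                        nn.Linear(base * 8, num_styles))

    def forward(self, source: torch.Tensor, target: torch.Tensor):
        h = self.body(torch.cat([source, target], dim=1))
        return self.adv_head(h), self.style_head(h)


D = ConditionalPatchDiscriminator(num_styles=161, base=16)
adv, style_logits = D(torch.randn(2, 1, 256, 256), torch.randn(2, 1, 256, 256))
print(adv.shape, style_logits.shape)  # torch.Size([2, 1, 16, 16]) torch.Size([2, 161])
```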

3.2.3. Objective Functions and Optimization

The model is trained end-to-end by optimizing a composite objective function that combines three loss terms. The overall objective for the generator G and discriminator D is formulated as follows, where λs and λL1 are hyperparameters that weight the contribution of each loss term:

$$G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{adv}(G, D) + \lambda_{s}\,\mathcal{L}_{style}(G, D) + \lambda_{L1}\,\mathcal{L}_{L1}(G)$$

The individual loss components are defined as follows:

- Adversarial Loss ($\mathcal{L}_{adv}$): This is the standard GAN loss that drives the minimax game between the generator and the discriminator. The generator G aims to produce images $G(x)$ from a source image x that are indistinguishable from real target images y, while the discriminator D, which also receives the source character, aims to correctly identify them. It is defined as

  $$\mathcal{L}_{adv}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x}\left[\log\left(1 - D\left(x, G(x)\right)\right)\right]$$

  This adversarial pressure encourages the generator to learn the distribution of realistic font textures and details.

- Style Loss ($\mathcal{L}_{style}$): This is an auxiliary classification loss that ensures the generated characters adhere to the correct target style. The discriminator predicts the style class of both real images ($y$) and generated images ($G(x)$). The generator is penalized if its output is not classified as belonging to the intended target style s.

- L1 Reconstruction Loss ($\mathcal{L}_{L1}$): This loss provides a direct, pixel-level supervisory signal to the generator by measuring the Mean Absolute Error (MAE) between the generated image $G(x)$ and the ground truth target image y. It is defined as

  $$\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y}\left[\lVert y - G(x) \rVert_{1}\right]$$

  The use of the L1 distance, as opposed to L2 (Mean Squared Error), is known to produce less blurry results and encourages sharpness in the generated images. This loss acts as a powerful regularizer, stabilizing the adversarial training process and guiding the generator toward a structurally accurate solution.
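The objective can be sketched in PyTorch under the standard choices named here: binary cross-entropy on patch logits, the common non-saturating generator loss (maximizing log D(x, G(x)) instead of minimizing log(1 − D(x, G(x)))), and softmax cross-entropy for the style term. Splitting the objective into separate generator and discriminator losses and training the discriminator's style head on real images only (as in StarGAN) are assumptions; λs = 1 and λL1 = 100 are the values reported in Section 4.2. In a training loop, the generated image would be detached before computing the discriminator loss.

```python
import torch
import torch.nn.functional as F

LAMBDA_S, LAMBDA_L1 = 1.0, 100.0  # weights reported in Section 4.2


def discriminator_loss(adv_real, adv_fake, style_logits_real, style):
    """D: score real pairs as 1 and fake pairs as 0, and classify the style of real glyphs."""
    real = F.binary_cross_entropy_with_logits(adv_real, torch.ones_like(adv_real))
    fake = F.binary_cross_entropy_with_logits(adv_fake, torch.zeros_like(adv_fake))
    return real + fake + LAMBDA_S * F.cross_entropy(style_logits_real, style)


def generator_loss(adv_fake, style_logits_fake, style, fake_img, target_img):
    """G: fool D (non-saturating form), match the target style, and stay close to y in L1."""
    adv = F.binary_cross_entropy_with_logits(adv_fake, torch.ones_like(adv_fake))
    sty = F.cross_entropy(style_logits_fake, style)
    l1 = F.l1_loss(fake_img, target_img)  # mean absolute error
    return adv + LAMBDA_S * sty + LAMBDA_L1 * l1


# Sanity check: zero logits give BCE = ln 2 and, over 4 styles, CE = ln 4.
patch = torch.zeros(2, 1, 16, 16)
logits = torch.zeros(2, 4)
style = torch.tensor([1, 3])
img = torch.zeros(2, 1, 256, 256)
print(round(generator_loss(patch, logits, style, img, img).item(), 4))  # 2.0794 (= 3 ln 2)
```

The large λL1 means that a mean absolute error of only 0.01 already contributes 1.0 to the generator loss, which is why the reconstruction term dominates early training and keeps the glyph structure anchored.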

4. Experiment

To validate the efficacy of the proposed two-stage framework, a comprehensive suite of experiments was conducted. The evaluation was designed to assess the model’s performance against state-of-the-art baselines using both quantitative metrics and qualitative visual inspection.

4.1. Dataset for Font Generation

The experiments utilized a large and diverse collection of Hangul fonts to ensure the model’s robustness and generalization capabilities. The font styles were sourced from publicly available repositories, primarily Google Fonts [26] and Naver Fonts [27], which offer a wide variety of professionally designed typefaces. The dataset consisted of 69 distinct handwritten font styles and 92 distinct printed font styles, providing the necessary diversity to train a model capable of handling a broad spectrum of typographic aesthetics. For each font style, training data was constructed through a systematic process beginning with the selection of a base set of 113 essential Hangul characters. This base set was carefully chosen to include all 183 unique jamo component shapes needed to form the entire Hangul syllabary. After decomposing these base characters into their positional components using the trained YOLOv8-Seg model, a programmatic script applied linguistic combination rules to generate a full dataset of 2350 unique character compositions per font style. Each training sample was then structured as a single 256 × 1280 pixel tensor, created by horizontally concatenating five 256 × 256 pixel images in the following order: the source character image, the ground truth target character image, and the three binary positional–semantic masks (C1, C2, C3) shown in Figure 8.
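The sample layout described above (five 256 × 256 panels concatenated horizontally into a 256 × 1280 tensor) can be sketched as follows. This is a minimal NumPy illustration that assumes single-channel (grayscale) panels; the helper names `pack_sample` and `unpack_sample` are hypothetical and not taken from the authors' code.

```python
import numpy as np

TILE = 256  # each panel is 256 x 256 pixels (Section 4.1)
# Panel order from Section 4.1: source, ground-truth target, then masks C1, C2, C3.
PANELS = ("source", "target", "c1", "c2", "c3")


def pack_sample(panels):
    """Horizontally concatenate five 256x256 grayscale panels into one 256x1280 array."""
    arrays = [np.asarray(panels[name], dtype=np.float32) for name in PANELS]
    for name, arr in zip(PANELS, arrays):
        if arr.shape != (TILE, TILE):
            raise ValueError(f"{name} must be {TILE}x{TILE}, got {arr.shape}")
    return np.concatenate(arrays, axis=1)


def unpack_sample(sample):
    """Split a packed 256x1280 sample back into its five named panels."""
    expected = (TILE, TILE * len(PANELS))
    if sample.shape != expected:
        raise ValueError(f"expected shape {expected}, got {sample.shape}")
    return dict(zip(PANELS, np.split(sample, len(PANELS), axis=1)))
```

Packing everything into one fixed-width image keeps each training pair and its conditioning masks in a single file, and the fixed panel order makes the split at load time a simple column slice.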

Figure 8.

New position-based component dataset for training the cGAN model.

4.2. Implementation Details and Hyperparameters

The model was implemented using the PyTorch 2.3.0+cu118 deep learning framework. Training was conducted using the Adam optimizer with a learning rate of 0.0002 and exponential decay rates β1 = 0.5 and β2 = 0.999. The hyperparameters for the composite loss function were set to λs = 1 and λL1 = 100, placing a strong emphasis on the L1 reconstruction loss to ensure high structural fidelity and training stability. All models were trained for 200 epochs to ensure convergence.
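To show what the reported optimizer settings do, here is a minimal pure-Python sketch of a single Adam update for one scalar parameter, using lr = 0.0002, β1 = 0.5, and β2 = 0.999. The ε value of 1e-8 is an assumption (it is PyTorch's default; the paper does not report it), and `adam_step` is a hypothetical helper rather than part of the authors' code.

```python
import math

LR, BETA1, BETA2 = 2e-4, 0.5, 0.999  # values reported in Section 4.2
EPS = 1e-8  # assumption: PyTorch's default epsilon, not reported in the paper


def adam_step(param, grad, m, v, t):
    """Apply one Adam update to a scalar parameter; t is the 1-based step count."""
    m = BETA1 * m + (1 - BETA1) * grad         # first-moment (mean) estimate
    v = BETA2 * v + (1 - BETA2) * grad * grad  # second-moment (uncentred variance) estimate
    m_hat = m / (1 - BETA1 ** t)               # bias corrections for zero initialisation
    v_hat = v / (1 - BETA2 ** t)
    param = param - LR * m_hat / (math.sqrt(v_hat) + EPS)
    return param, m, v
```

In PyTorch the same configuration is expressed as `torch.optim.Adam(params, lr=2e-4, betas=(0.5, 0.999))`. Lowering β1 from the usual 0.9 to 0.5 shortens the gradient-averaging window, a setting commonly used when training GANs because it lets the optimizer react faster to the changing adversarial signal.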

4.3. Baseline Models for Comparison

Two leading font generation models were selected as baselines, chosen specifically because they represent the two dominant component-aware strategies in the field.

- MX-Font: This model represents the state-of-the-art in implicit, learned component-based generation. It utilizes a Mixture of Experts (MoE) architecture where multiple expert networks automatically learn to specialize in different local regions or features of a character. It does not rely on explicit component labels, instead using weak supervision to guide the experts, making it generalizable to scripts without predefined components.

- CKFont: This model represents the state-of-the-art in explicit component-based generation for Hangul. It explicitly decomposes characters into their three constituent jamo classes (initial, middle, final) and uses this class-level information to guide a GAN-based generation process.

The selection of these two baselines is strategic. By outperforming CKFont, the proposed model demonstrates the superiority of its instance-level positional guidance over traditional class-level component methods. By outperforming MX-Font [7], it shows that a linguistically grounded, explicit structural prior is more effective than automatically learned, implicit local features for a script with strong compositional rules like Hangul. This comparison framework effectively isolates the key contribution: the use of precise, positional–semantic conditioning.

4.4. Quantitative Evaluation Metrics

A set of five standard metrics was used to provide a comprehensive and objective assessment of image quality.

- L1 and L2 Loss: These metrics [28,29] measure the pixel-wise difference between the generated and ground truth images, corresponding to the Mean Absolute Error and the Mean Squared Error, respectively. They provide a direct measure of low-level reconstruction accuracy.

- Structural Similarity Index (SSIM): SSIM [30] is a perceptual metric that evaluates image quality by comparing three key features: luminance, contrast, and structure. It is designed to align more closely with human visual perception of similarity than simple pixel-wise errors. A score closer to 1.0 indicates higher structural similarity. This metric is particularly critical for this study, as it directly quantifies the model’s ability to preserve character structure, which is the central claim of the paper.

- Fréchet Inception Distance (FID): FID [31] measures the similarity between the distribution of generated images and the distribution of real images. It uses the activations from an intermediate layer of a pre-trained InceptionV3 network to compute the Fréchet distance between the two distributions, capturing both image quality and diversity. A lower FID score indicates that the generated image distribution is closer to the real image distribution.

- Learned Perceptual Image Patch Similarity (LPIPS): LPIPS [32] measures the perceptual distance between two image patches. It compares deep features from a pre-trained network (e.g., AlexNet or VGG), weighted by layers calibrated to match human judgments of image similarity. A lower LPIPS score signifies that two images are more perceptually similar.
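The pixel-level metrics above, and the structure of the SSIM formula, can be sketched in a few lines of NumPy. This is a simplified illustration rather than the evaluation code used in the paper: `global_ssim` computes SSIM over a single window covering the whole image, whereas the standard SSIM [30] averages the same expression over local (typically Gaussian-weighted 11 × 11) windows. The constants K1 = 0.01 and K2 = 0.03 follow the original SSIM definition; images are assumed to be scaled to [0, 1].

```python
import numpy as np


def l1_distance(a, b):
    """Mean Absolute Error between two images."""
    return float(np.mean(np.abs(np.asarray(a, dtype=np.float64) - np.asarray(b, dtype=np.float64))))


def l2_distance(a, b):
    """Mean Squared Error between two images."""
    return float(np.mean((np.asarray(a, dtype=np.float64) - np.asarray(b, dtype=np.float64)) ** 2))


def global_ssim(a, b, data_range=1.0):
    """Single-window SSIM over the whole image (a simplification of windowed SSIM)."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2  # stabilises the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilises the contrast/structure term
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    return float(((2 * mu_a * mu_b + c1) * (2 * cov + c2))
                 / ((mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)))
```

For reported results, an established implementation such as `skimage.metrics.structural_similarity` is preferable, since the windowing and Gaussian weighting change the numerical values.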

4.5. Quantitative Analysis

We evaluated our model against the baselines on the test set of unseen font styles. The quantitative results, summarized in Table 2, demonstrate the superior performance of our proposed method across all metrics.

Table 2.

Comparison of quantitative results with other baseline models. All values are reported as mean ± standard deviation over 5 independent runs.

Our model achieves a significantly lower L1 loss (0.2991) and L2 loss (0.4621), indicating a much closer pixel-level match to the ground truth images compared with both MX-Font and CKFont. This suggests a higher fidelity in reproducing the exact details of the target style. Most notably, our model obtains an SSIM score of 0.9798, which is exceptionally close to a perfect match (1.0). This high SSIM score strongly supports our central hypothesis: that explicit positional component guidance helps the model preserve the character’s core structure far more effectively than other methods. Furthermore, our model achieves the lowest FID (12.06) and LPIPS (0.047) scores, indicating that the generated images are not only structurally and perceptually closer to the real images but also that their overall distribution is more realistic.

To assess the statistical significance of our model’s improvements, we performed a paired two-tailed t-test on the results obtained from the five independent training runs. When comparing our model to MX-Font, the improvements in the primary metrics of SSIM (0.9798 vs. 0.7891) and L1 Loss (0.2991 vs. 0.7268) were found to be statistically significant, with p-values well below 0.01. A similar comparison against CKFont also yielded statistically significant improvements for SSIM and L1 Loss (p < 0.01). This statistical validation provides evidence that the performance gains achieved by our positional component-guided approach are not an artifact of random chance but reflect a reliable advantage of the proposed methodology.
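The paired test described above can be sketched as follows. Each of the five runs contributes one score per model, the test operates on the per-run differences, and the statistic has n − 1 = 4 degrees of freedom. This is a pure-Python illustration with a hypothetical helper name; the critical value 4.604 is the standard two-tailed Student's t value for α = 0.01 and df = 4, so |t| above it corresponds to p < 0.01.

```python
import math
import statistics

# Two-tailed critical value of Student's t for alpha = 0.01 with df = 4 (five paired runs).
T_CRIT_TWO_TAILED_01_DF4 = 4.604


def paired_t_statistic(scores_a, scores_b):
    """Return (t, df) for a paired t-test on per-run scores of two models."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length score lists with at least two runs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = statistics.stdev(diffs)  # sample standard deviation (divides by n - 1)
    if sd == 0:
        raise ValueError("all differences are identical: t statistic is undefined")
    n = len(diffs)
    return statistics.mean(diffs) / (sd / math.sqrt(n)), n - 1
```

Given the five per-run SSIM or L1 values of each model, `abs(t) > T_CRIT_TWO_TAILED_01_DF4` indicates significance at the 0.01 level; `scipy.stats.ttest_rel(scores_a, scores_b)` performs the same paired, two-sided test and returns an exact p-value.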

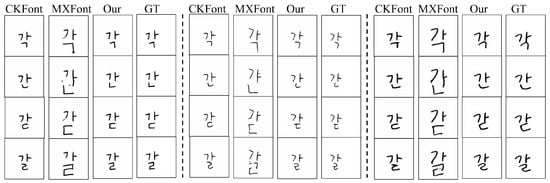

4.6. Qualitative Analysis

Visual inspection of the generated characters corroborates the quantitative findings. As shown in Figure 9, our model produces characters that are visually almost indistinguishable from the ground truth (GT) target style. In contrast, characters generated by MX-Font and CKFont exhibit visible artifacts, such as inconsistent stroke thickness, incorrect component shapes, and deviations from the target style.

Figure 9.

Comparison of results with other baseline font generation models.

To further test the generalization capabilities, we evaluated the model on font styles that were entirely unseen during training for both printed and handwritten categories (Figure 10 and Figure 11). The results confirm that our model maintains a high level of style consistency and structural coherence even for novel fonts. We also included additional examples with highly complex character structures, which further highlight the robustness of our approach.

Figure 10.

Printed font styles generated by our model.

Figure 11.

Handwritten font styles generated by our model.

While the model performs well overall, we also report its failure cases. For extremely ornate or abstract calligraphic styles, the segmentation stage can produce imperfect masks, which can lead to minor artifacts in the final generated character. Reporting these cases clarifies the model’s current limitations.

4.7. Ablation Study: The Impact of Positional Component Guidance

A central claim of this paper is that the explicit positional component guidance from our segmentation stage is the key factor behind our model’s superior performance. To validate this claim, we conducted an ablation study as recommended by peer reviewers. We designed and trained an ablated version of our model using exactly the same GAN architecture (generator, discriminator, and loss functions) as the full model. However, instead of receiving the three segmented component images (C1, C2, C3) as input to the style encoder, the ablated model was conditioned on the entire target character image. This setup reflects a more conventional, globally style-conditioned approach and allows us to isolate the contribution of component-based guidance. We hypothesized that the ablated model, lacking explicit positional information, would struggle to maintain structural integrity, would exhibit greater style–content entanglement, and would therefore perform worse.

The results, summarized in Table 3, confirm this hypothesis. The ablated model’s L1 and L2 losses are more than double those of the full model, and its SSIM score drops significantly from 0.9798 to 0.8655, indicating a loss in structural fidelity. Its FID and LPIPS scores also degrade considerably, approaching the performance of the baseline models. Qualitatively, the ablated model often produced characters with misaligned or distorted components, errors that our full model effectively corrected. These results provide strong evidence that positional component conditioning is the essential innovation responsible for the high-quality results of our framework.
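The difference between the two conditioning inputs in this ablation can be illustrated with array shapes. In the minimal NumPy sketch below (hypothetical helper names, not the authors' code), the full model receives the initial, middle, and final masks as three separate channels, while the ablated model receives the whole target glyph as one channel. Under the simplifying assumption that the three masks partition the glyph, both inputs contain the same ink pixels; what the ablated input loses is the channel-wise positional label attached to each pixel.

```python
import numpy as np


def full_model_condition(c1, c2, c3):
    """Full model: initial, middle, and final masks stacked as separate channels -> (3, H, W)."""
    return np.stack([c1, c2, c3], axis=0).astype(np.float32)


def ablated_condition(target):
    """Ablated model: the whole target glyph as a single channel -> (1, H, W)."""
    return np.asarray(target, dtype=np.float32)[np.newaxis, ...]
```

Summing the full-model input over its channel axis recovers the ablated input in this idealised case, which is why the ablation isolates the value of the positional labelling rather than the value of seeing the target shape.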

Table 3.

Ablation study on the effect of positional component guidance.

5. Discussion

The experimental results presented in the previous section demonstrate the effectiveness of our proposed framework. This section provides a deeper interpretation of these findings, discusses their broader significance and limitations, and outlines promising directions for future research.

5.1. Interpretation of Findings

The quantitative and qualitative superiority of our model, particularly as highlighted by the ablation study, can be attributed to the role of positional component guidance as a powerful structural prior. Image-to-image style transfer is inherently ill-posed: there are many plausible ways to render a character’s content in a new style. Standard GANs, even when conditioned on a full target image, can struggle to disentangle the style from the target’s underlying structure, often hallucinating or incorrectly blending features. Our two-stage approach mitigates this problem by factorizing it. The YOLOv8-Seg model handles the complex task of structural analysis, providing the GAN with a clean, explicit blueprint of the target character’s composition. The GAN’s task is then simplified: it no longer needs to infer the structure of the target character and only needs to learn how to render the content glyph in the style demonstrated by the provided component shapes. This strong regularization forces the generator to respect the fundamental typographic rules of Hangul, preventing the misplacement of jamo and ensuring that their shapes correctly adapt to their positional context. The result is a more effective disentanglement of content and style, reflected in the observed improvements in structural integrity (high SSIM) and perceptual quality (low LPIPS and FID).

5.2. Significance and Contributions

This work makes a significant contribution to the field of automated font generation, particularly for complex, compositional scripts. By demonstrating the power of explicit, position-aware guidance, our framework offers a new direction for creating models that are not just stylistically versatile but also structurally robust. The implications of this research extend beyond Hangul. The core principle of using a segmentation model to provide a structural prior to a generative model could serve as a baseline for generating other complex scripts with strong compositional rules, such as Chinese (with its radical-based structure), Devanagari, or various historical scripts.

The practical applications are substantial. This technology can accelerate the generation of new digital fonts, supporting creative industries and personalized content creation. Furthermore, it provides a valuable tool for digital cultural heritage, enabling the preservation and revitalization of historical calligraphic styles by converting them into fully functional digital fonts.

5.3. Limitations

While our approach has proven effective, it is important to acknowledge its limitations, which also point toward promising directions for future research.

- Architectural Novelty of the GAN: The main contribution of our work lies in the novel conditioning strategy rather than in the architecture of the GAN itself. The generator and discriminator components are based on standard conditional GAN (cGAN) frameworks and do not incorporate recent architectural advancements such as attention mechanisms or adaptive normalization layers, which have shown success in related style transfer tasks. The observed performance improvements are primarily due to the quality of the conditional input rather than architectural innovation.

- Dataset Scope: Although our training dataset covers all fundamental jamo components, it is limited to 113 representative Hangul characters. This does not fully reflect the richness and diversity of all 11,172 possible Hangul syllables, especially complex or infrequent combinations. Broadening the dataset to include more syllabic compositions could enhance the model’s ability to generalize to unseen characters.

- Dependency on Segmentation: The accuracy of the generated font heavily depends on the quality of the initial component segmentation stage. Although the YOLOv8-Seg model used in our framework performed reliably during our experiments, occasional segmentation errors, such as mislabeling a component or producing an imprecise mask, can negatively affect the GAN’s output, potentially leading to visual artifacts in the generated characters. At the time of our experiments, models referred to as YOLOv11 or YOLOv12 were either unreleased or lacked stable open-source implementations and benchmarking. Thus, we selected YOLOv8-Seg, which was the most mature and validated option available, with established support for instance segmentation.

- Lack of Perceptual Evaluation: Our evaluation relies on quantitative, pixel-based, and feature-based metrics (L1, SSIM, FID, LPIPS). While these metrics provide an objective measure of reconstruction quality and distributional similarity, they may not fully correlate with human perception of aesthetic appeal, legibility, and style consistency. A formal user study, where human evaluators compare and rate the outputs of different models, would be necessary to provide a comprehensive qualitative assessment. Such studies are critical for validating the practical usability of generated fonts and represent an important next step for this research.
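The figure of 11,172 possible syllables cited under Dataset Scope follows from the Unicode Standard's Hangul syllable composition rule: 19 initial consonants × 21 medial vowels × 28 final slots (27 final consonants plus "no final") are laid out arithmetically from U+AC00. The sketch below implements that text-level composition and decomposition in plain Python. It is included only to illustrate the combinatorics; it operates on code points, not on glyph images, and is not part of the paper's image-segmentation pipeline.

```python
# Unicode Hangul syllables occupy U+AC00..U+D7A3 in a fixed arithmetic layout.
S_BASE = 0xAC00
L_COUNT, V_COUNT, T_COUNT = 19, 21, 28  # initials, medials, finals (final index 0 = no final)
N_COUNT = V_COUNT * T_COUNT             # 588 syllables per initial consonant
S_COUNT = L_COUNT * N_COUNT             # 11,172 syllables in total


def compose(l_index, v_index, t_index=0):
    """Build a syllable from initial, medial, and final indices."""
    if not (0 <= l_index < L_COUNT and 0 <= v_index < V_COUNT and 0 <= t_index < T_COUNT):
        raise ValueError("jamo index out of range")
    return chr(S_BASE + (l_index * V_COUNT + v_index) * T_COUNT + t_index)


def decompose(syllable):
    """Return the (initial, medial, final) indices of a precomposed Hangul syllable."""
    s_index = ord(syllable) - S_BASE
    if not 0 <= s_index < S_COUNT:
        raise ValueError(f"{syllable!r} is not a precomposed Hangul syllable")
    return s_index // N_COUNT, (s_index % N_COUNT) // T_COUNT, s_index % T_COUNT
```

For example, `decompose("\ud55c")` (the syllable 한) returns `(18, 0, 4)`: initial ㅎ, medial ㅏ, and final ㄴ.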

5.4. Future Research Directions

This work opens several exciting paths for future research aimed at addressing the current limitations and expanding the capabilities of the framework. A compelling next step is to integrate our positional component guidance strategy into more advanced generative backbones. For instance, conditioning a diffusion model on our segmented components could combine the high-fidelity, low-artifact generation of diffusion models with the strong structural control of our method, potentially achieving the best of both approaches. Similarly, exploring transformer-based architectures could offer new ways to model the long-range dependencies between components. Future work could also focus on creating more interactive systems. Instead of static style transfer, one could develop a model that allows a user to control the generation process at a fine-grained level, for example, by interactively adjusting the position, size, or even the style of a single component within the syllabic block and seeing the character update in real time. To enhance robustness, the training dataset could be expanded to include a larger and more diverse set of Hangul characters, particularly those with rare and complex structures. Finally, the most promising long-term direction is to adapt this positional–semantic framework to other compositional scripts. Applying this method to Chinese, where the positional arrangement of radicals carries significant semantic meaning, would be a valuable test of the framework’s generalizability and a significant contribution to multilingual typography.

6. Conclusions

This study addressed the significant challenge of generating high-quality Korean Hangul fonts by focusing on the characters’ unique compositional and positional structure. We proposed a novel two-stage framework that employs a YOLOv8-Seg instance segmentation model to decompose Hangul characters into their positional initial, middle, and final components, and then uses these position-aware components to explicitly guide a conditional Generative Adversarial Network. Our experiments demonstrated that this positional component-guided approach yields substantial improvements in both quantitative metrics and qualitative visual fidelity compared with established baseline models. The ablation study provided strong evidence that the explicit structural prior supplied by the segmentation stage is the key factor behind our model’s success, enabling superior structural integrity and style consistency. While acknowledging limitations such as the conventional GAN architecture and the scope of the dataset, this work presents an effective method for generating high-quality Hangul fonts by leveraging a strong structural prior. The results suggest that using instance-level segmentation masks as a conditional input is a promising direction for future work in automated typography, with potential applications in creative tooling, cultural heritage preservation, and the generation of other complex, compositional scripts.

Author Contributions

Conceptualization, A.K.; methodology, A.K.; software, A.K.; validation, A.K., I.M., A.S., Y.J., and J.C.; formal analysis, A.K.; investigation, A.K., A.S., and Y.J.; resources, J.C.; data curation, A.K. and I.M.; writing—original draft preparation, A.K.; writing—review and editing, I.M. and J.C.; visualization, A.K., I.M., A.S., and Y.J.; supervision, J.C.; project administration, J.C.; funding acquisition, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Start up Pioneering in Research and Innovation (SPRINT) through the Commercialization Promotion Agency for R&D Outcomes (COMPA) grant funded by the Korea government (Ministry of Science and ICT) (RS-2025-02314089).

Data Availability Statement

Some or all of the data, trained models, or code that support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Azadi, S.; Fisher, M.; Kim, V.G.; Wang, Z.; Shechtman, E.; Darrell, T. Multicontent GAN for Few-Shot Font Style Transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7564–7573.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8789–8797.

- Choi, Y.; Uh, Y.; Yoo, J.; Ha, J.W. StarGAN v2: Diverse image synthesis for multiple domains. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8188–8197.

- Jang-kyung, P.; Amar; Jae-young, C. Korean font generation model using minimum characters based on Korean composition. J. Korea Inf. Process. Soc. 2021, 10, 473–482.

- Park, J.; Hassan, A.U.; Choi, J. CCFont: Component-Based Chinese Font Generation Model Using Generative Adversarial Networks (GANs). Appl. Sci. 2022, 12, 8005.

- Park, S.; Chun, S.; Cha, J.; Lee, B.; Shim, H. Multiple heads are better than one: Few-shot font generation with multiple localized experts. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 13900–13909.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Choi, J.; Kim, S.; Jeong, Y.; Gwon, Y.; Yoon, S. Conditioning method for denoising diffusion probabilistic models. arXiv 2021, arXiv:2108.02938.

- He, H.; Chen, X.; Wang, C.; Liu, J.; Du, B.; Tao, D.; Yu, Q. Diff-font: Diffusion model for robust one-shot font generation. Int. J. Comput. Vis. 2024, 132, 5372–5386.

- Yang, Z.; Peng, D.; Kong, Y.; Zhang, Y.; Yao, C.; Jin, L. Fontdiffuser: One-shot font generation via denoising diffusion with multi-scale content aggregation and style contrastive learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 6603–6611.

- Kuznedelev, D.; Startsev, V.; Shlenskii, D.; Kastryulin, S. Does Diffusion Beat GAN in Image Super Resolution? arXiv 2024, arXiv:2405.17261.

- Anonymous. Latent Denoising Diffusion GAN: Faster sampling, Higher image quality, and Greater diversity. arXiv 2024, arXiv:2406.11713.

- Zhou, X.; Khlobystov, A.; Lukasiewicz, T. Denoising Diffusion GANs. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2024.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Wang, C.; Liu, Y.; Xiong, Z.; Liu, D. CF-Font: Content Fusion for Few-shot Font Generation. arXiv 2023, arXiv:2303.14017.

- Haraguchi, D.; Shimoda, W.; Yamaguchi, K.; Uchida, S. Total Disentanglement of Font Images into Style and Character Class Features. arXiv 2024, arXiv:2403.12784.

- Zhang, Z.; Chen, Y.; Zhang, Z.; Yu, Y. EMD: A Multi-Style Font Generation Method Based on Explicit Style-Content Separation. In Proceedings of the SIGGRAPH Asia 2018 Technical Briefs, Tokyo, Japan, 4–7 December 2018; ACM: New York, NY, USA, 2018; pp. 1–4.

- Kim, Y.; Wiseman, S.; Miller, A.C.; Sontag, D.; Rush, A.M. Semi-Amortized Variational Autoencoders. arXiv 2018, arXiv:1802.02550.

- Tian, Y. zi2zi: Master Chinese Calligraphy with Conditional Adversarial Networks. GitHub Repository. 2017. Available online: https://github.com/kaonashi-tyc/zi2zi (accessed on 12 October 2023).

- Snell, J.; Swersky, K.; Zemel, R.S. Prototypical Networks for Few-shot Learning. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 4077–4087.

- Roboflow. Roboflow: A Platform for Managing Datasets and Training Computer Vision Models. 2024. Available online: https://roboflow.com/ (accessed on 7 August 2024).

- Google Fonts. Google Fonts: Free and Open-Source Fonts for Web and Desktop Use; Google Fonts: Mountain View, CA, USA, 2024.

- Naver Fonts. Naver Fonts: A Platform for Downloading Various Font Styles; Naver Fonts: Seongnam, Republic of Korea, 2024.

- He, X.; Cheng, J. Revisiting L1 Loss in Super-Resolution: A Probabilistic View and Beyond. arXiv 2022, arXiv:2201.10084.

- Mustafa, A.; Mantiuk, R.K. A Comparative Study on the Loss Functions for Image Enhancement. In Proceedings of the BMVC, London, UK, 21–24 November 2022.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 6626–6637.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).