Abstract

Gestures play an integral role in human communication. Our research aims to develop a gesture understanding system that allows for better interpretation of human instructions in household robotics settings. We conducted an experiment with 34 participants who used pointing gestures to teach concepts to an assistant. Gesture data were analyzed using manual annotations (MAXQDA) and the computational methods of pose estimation and k-means clustering. The study revealed that participants tend to maintain consistent pointing styles, with one-handed pointing and index finger gestures being the most common. Gaze and pointing often co-occur, as do leaning forward and pointing. Using our gesture categorization algorithm, we analyzed gesture information values. As the experiment progressed, the information value of gestures remained stable, although the trends varied between participants and were associated with factors such as age and gender. These findings underscore the need for gesture recognition systems to balance generalization with personalization for more effective human–robot interaction.

1. Introduction

Imagine the following scenario: you have a humanoid helper robot at home and you want to tell it how to set the table and prepare breakfast. You would like to instruct the robot in the same way as you would tell a human assistant: using speech and gestures. As social robots are proliferating, such scenarios are no longer limited to science fiction and are becoming more realistic. Our research goal is to make this naturalistic human–robot interaction a reality.

This is a burgeoning research area, and many different approaches are being studied and implemented. In natural environments, people communicate through a blend of speech, gestures (using the hands, head, or body), and gaze cues, so a robot must perceive all of these modalities and integrate them to infer the user’s intention. Current approaches to this challenge fall into two categories: technology-driven methods, which limit users to predefined gestures optimized for machine recognition, and human-driven methods, which prioritize adapting robotic systems to the organic, fluid movements that people naturally produce [1]. The latter approach is particularly compelling for household applications, where interactions demand intuitive, unscripted communication. However, designing such systems requires a foundational understanding of what constitutes “natural” human motion in real-world contexts.

Gestures are central to human communication, with pointing standing out as a cornerstone. As [2] highlights, pointing is a universal mechanism for establishing joint attention. Indeed, the author catalogs 15 distinct ways in which pointing achieves that. For domestic robots, which rely heavily on spatial guidance (e.g., “Place the mug there”), the ability to interpret pointing gestures naturally is critical. These gestures are not merely directional; they intertwine with gaze, body orientation, and situational context to clarify intent. For instance, a casual finger-point versus an exaggerated arm sweep may signal urgency, distance, or object salience.

To operationalize these insights, we conducted experiments in which participants explained table setting and meal preparation tasks to a human assistant, using speech and gestures freely. By analyzing these interactions, we identify and classify the types of pointing gestures employed in real-world scenarios. This work-in-progress aims to map how people instinctively modulate pointing (e.g., finger vs. arm gestures) based on object proximity, environmental clutter, or task urgency. Our findings inform machine vision tools that are designed to extract communicative intent from these gestures, ultimately integrating them into human–robot interaction systems. By grounding robotic perception in the richness of human behavior, we move closer to robots that understand not just what we point to, but how and why we point.

2. A Review of Gesture Research

Gestures are integral to human communication, playing a key role in supplementing speech [3]. They are the most common nonverbal signal, with the function of strengthening or weakening verbal meaning [4]. Researchers have studied gestures for decades. In 1992, McNeill [5] classified them into four types:

- Iconic gestures: depict concrete objects or actions (e.g., shaping a “tree” with the hands).

- Metaphoric gestures: represent abstract ideas (e.g., an upward motion for “progress”).

- Deictic gestures: point to objects, people, or locations.

- Beat gestures: rhythmically accompany speech but carry no specific meaning.

This research focuses on deictic gestures—particularly pointing—as they are crucial for robots interpreting human intent. According to Sotaro Kita [6], pointing is foundational to communication for the following reasons:

- Ubiquitous in daily interaction: people point into empty space to indicate referents.

- Uniquely human: like language, pointing is exclusive to our species.

- Primordial in ontogeny: one of children’s first communicative acts.

- Iconic: it can depict movement trajectories, not just direction.

The fourth aspect is significant because it shows that pointing often co-occurs with other gesture types. Thus, robots must distinguish and interpret combined gestures.

While gestures vary across cultures, index-finger pointing is largely universal [7]. However, subtle differences exist across languages and societies [8,9]. Individual gesture styles reflect personality and context [10,11]. Yet, once someone adopts a hand shape for pointing, they tend to repeat it consistently [12]. In children, these styles remain stable and can reveal developmental trajectories [13]. Sighted people almost always combine gaze with pointing to direct attention and establish shared focus [14,15].

Nonetheless, several questions remain. First, do individuals adopt consistent pointing styles in structured tasks? Second, how does pointing interact with co-occurring behaviors, like gazing? Finally, does the conveyed information vary across scenarios, even when pointing style stays constant?

Our study tackles these gaps by examining (1) individual differences in pointing style, (2) the consistency of gesture co-occurrence, and (3) trends in information conveyed through gestures. By bridging these questions, we aim to advance theoretical models of gestural communication and practical frameworks for robots interpreting human intent.

3. Study Design

Unlike other studies of gestures in HRI (such as [16,17]), our participants gesticulated for a human assistant, not a robot. We chose a human assistant for two reasons. First, it reduced errors arising from the limitations of the current state of the art in object grasping and manipulation and in scene and command understanding, as well as from other unforeseen circumstances. Second, it prevented participants from forcing themselves to gesture or communicate unnaturally, based on their presumptions about what a robot might be able to comprehend.

3.1. Participants

A total of 34 participants (27 women, 7 men; 18–51 years, M = 24.24, SD = 7.75) were recruited via Facebook and promotional posters displayed on the campus of Jagiellonian University. Given the exploratory nature of our study and that prior gesture research [18] has reported meaningful findings with similar samples, we considered our sample size (N = 34) sufficient to identify stable individual patterns and trends relevant to our research questions. All participants were native Polish speakers. The study was conducted in English to ensure standardized instructions for future robot integration and to enable international reproducibility. Hence, participants had to pass a short A1 English quiz, with the following results: mean percentage correct = 97.74%, SD = 3.50.

During the tasks, the participants were standing approximately 50 cm from the table (as shown in Figure 1). They were instructed not to touch the objects to ensure that communication relied solely on gestures and verbal instructions rather than direct interaction with the objects.

Figure 1.

Participant positioning during the experiment.

3.2. Procedure

Before the main tasks, participants were briefed on the general purpose of the study, potential risks, and terms of compensation. Gender was self-reported by participants as female- or male-identifying. They were then asked to review and sign an informed consent form. Participants received written instructions outlining the procedure and were encouraged to ask any questions before proceeding. Once they confirmed their understanding and willingness to participate, a researcher read the specific task instructions in English. This study was approved by the Research Ethics Committee at the Faculty of Philosophy, Jagiellonian University (Approval No. 221.0042.15_2024).

Participants were tasked with instructing a human assistant to achieve specific goals related to arranging everyday kitchen objects on a table. The objects used in the study, as shown in Figure 2, are part of the Yale-CMU-Berkeley dataset [19] and were arranged into six different sets, as described in Table 1. The positions of the objects on the table in all tasks were randomized.

Figure 2.

YCB kitchen objects used in the study.

Table 1.

Sets of objects for the different teaching tasks.

3.3. Tasks

During the experiment, participants were asked to perform four different tasks designed to elicit gesturing and to evoke a natural household setting, which could simulate possible human–robot interactions (Table 2).

Table 2.

Structure of the four tasks in a task set.

- Tasks 1 and 2: Communicating Features and Relations: these two tasks were repeated over four sets, with different objects being used each time. There were always five objects on the table. In the first task of each set, the participants communicated the specific characteristics of the objects (colors, sizes, shapes, names) to the assistant. In the second task, they taught the relationships between these features (the same color, the same size, the same shape).

- Tasks 3 and 4: Goal-Oriented Instructions: the participants guided the assistant to prepare a bowl of fruits using the concepts previously taught. This involved providing verbal instructions and gestures to identify and place fruits in a bowl. The participants were supposed to first point at the fruit object and later point at the bowl, during which they guided the assistant verbally by saying, for example, “Put this round object in this concave object”. The difference between Tasks 3 and 4 was only in the number of objects on the table. In the third task, seven objects (with minimal ambiguities) were included. In the fourth task, the complexity increased as the table included thirteen objects, comprising both fruits and other objects.

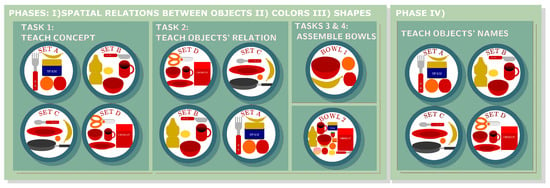

The division of the aforementioned tasks and the overall experiment order is visualized in Figure 3.

Figure 3.

The four sequential phases of our experiment. Phases I-III each taught different concepts (I: objects/positions, II: size/shapes, III: colors) through identical task sequences: (1) categorizing single objects, (2) showing object relations, and (3) bowl assembly. Phase IV consisted solely of object naming.

3.4. Data Collection

Data were collected using two Intel RealSense D435 RGB-D cameras (Intel Corporation, Santa Clara, CA, USA). Audio data were also recorded, but are not included in the analysis presented here. Each gesture recording started when the task was explained to the subject or when the objects in front of the subject changed. Each task was individually recorded and tagged with the ID of the subject and the specific task to which it belonged.

4. Analysis and Results

We analyzed the data with two different methods: computational annotation and manual annotation using MAXQDA 2022 24.9.1 software. The goal of the analysis was to discover patterns in how people use gestures. Each analysis and its results are presented below.

4.1. Computational Analysis

This analysis explores global and individual trends in the information value of gestures observed during our experiment. Specifically, we investigated whether, on average, the information value of gestures changed as participants became more familiar with the task or the experimental setting. We also examined whether such trends can be predicted using demographic information or measures of social skills.

Our approach relied on computationally distinguishing intentional gestures from non-gesture movements, quantifying their frequency, and categorizing them into types. To uncover the patterns, we applied a series of computational transformations that progressively removed noise, refined the data, and prepared them for statistical analysis. This process revealed trends in the information conveyed through gestures, their relationship to task familiarity, and the influence of external factors.

4.1.1. Data Processing

All processing steps were carried out using an original Python 3.11 pipeline available at https://github.com/tymonstudent/Gesture-Analysis (accessed on 6 June 2025). The pipeline is based on the MediaPipe Pose library, a pose estimation framework that detects anatomical landmarks in videos. The videos were processed frame by frame, and the coordinates of each detected landmark were recorded.

The resulting data were preprocessed in the following steps:

- Forward-filling missing values: any missing data points were filled by copying the last observed non-missing value forward.

- Filtering: a low-pass Chebyshev Type I filter was applied with:

  - Cutoff frequency: 4 Hz

  - Sampling rate: 30 Hz

  - Order: 4

- Gesture extraction: individual gestures were extracted by segmenting the dataset. As the criterion, we used a significant increase in the distance between the wrist and the hip, defined as the 55th percentile of the distance distribution in a given segment. We applied a minimum gesture length of seven frames.
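The three preprocessing steps above can be illustrated with a minimal sketch. It is not the authors' pipeline: the passband ripple (`RIPPLE_DB`), the zero-phase filtering via `filtfilt`, and the reading of the 55th-percentile criterion as "frames whose wrist–hip distance exceeds that percentile" are all assumptions made for illustration, and the function names are hypothetical.

```python
import numpy as np
from scipy.signal import cheby1, filtfilt

FPS = 30.0        # sampling rate stated in the text (Hz)
CUTOFF_HZ = 4.0   # cutoff frequency stated in the text (Hz)
ORDER = 4         # filter order stated in the text
RIPPLE_DB = 1.0   # ASSUMPTION: passband ripple is not given in the text
PERCENTILE = 55   # threshold percentile stated in the text
MIN_FRAMES = 7    # minimum gesture length stated in the text


def forward_fill(x):
    """Replace NaNs with the last observed value (leading NaNs stay NaN)."""
    x = np.asarray(x, dtype=float)
    idx = np.where(~np.isnan(x), np.arange(len(x)), 0)
    np.maximum.accumulate(idx, out=idx)
    return x[idx]


def lowpass(x):
    """Chebyshev Type I low-pass; zero-phase application is an assumption."""
    b, a = cheby1(ORDER, RIPPLE_DB, CUTOFF_HZ, btype="low", fs=FPS)
    return filtfilt(b, a, x)


def extract_gestures(wrist_hip_dist):
    """Return (start, end) frame pairs, end exclusive, for candidate gestures."""
    d = np.asarray(wrist_hip_dist, dtype=float)
    active = d > np.percentile(d, PERCENTILE)
    # Pad with False so that runs touching the edges are also detected.
    edges = np.diff(np.concatenate(([False], active, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    return [(int(s), int(e)) for s, e in zip(starts, ends) if e - s >= MIN_FRAMES]
```

In such a sketch, each landmark coordinate series would pass through `forward_fill` and `lowpass` before the wrist–hip distance is computed and handed to `extract_gestures`.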

To analyze these segments, we used a k-means clustering algorithm based on [20]. The x,y,z coordinates of the anatomical landmarks, extracted using MediaPipe’s skeleton model, were used for feature calculation. Next, we excluded the (non-visible) legs as relevant landmarks due to noise in the MediaPipe feature extraction process. We employed the tsfresh library to extract relevant features from each segment using the predefined MinimalFCParameters package. This package includes the following twelve features calculated from the x, y, and z coordinates: sum of values, median, mean, length (using the len() function), standard deviation, variance, root mean square, last and first locations of the minimum, maximum, absolute maximum, and minimum.
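As a rough illustration of this step, the sketch below computes ten of the summary statistics named above directly with NumPy (instead of calling tsfresh) and clusters the resulting feature vectors with SciPy's `kmeans2`. The function names, the choice of `kmeans2` with k-means++ initialization, and the absence of feature scaling are assumptions for illustration and are not taken from the authors' code.

```python
import numpy as np
from scipy.cluster.vq import kmeans2


def segment_features(segment):
    """Summary statistics per coordinate column for one gesture segment.

    segment: array of shape (n_frames, n_coords), e.g. x, y, z of landmarks.
    """
    seg = np.asarray(segment, dtype=float)
    stats = [
        seg.sum(axis=0),                               # sum of values
        np.median(seg, axis=0),                        # median
        seg.mean(axis=0),                              # mean
        np.full(seg.shape[1], len(seg), dtype=float),  # length
        seg.std(axis=0),                               # standard deviation
        seg.var(axis=0),                               # variance
        np.sqrt((seg ** 2).mean(axis=0)),              # root mean square
        seg.max(axis=0),                               # maximum
        np.abs(seg).max(axis=0),                       # absolute maximum
        seg.min(axis=0),                               # minimum
    ]
    return np.concatenate(stats)


def cluster_segments(segments, k):
    """Cluster gesture segments into k groups; returns (labels, inertia)."""
    X = np.vstack([segment_features(s) for s in segments])
    # Initialization is random, so repeated runs may give different labels.
    centroids, labels = kmeans2(X, k, minit="++")
    inertia = float(((X - centroids[labels]) ** 2).sum())
    return labels, inertia
```

Calling `cluster_segments` for a range of `k` values and inspecting how the returned inertia falls off is one common way to choose the number of clusters per participant.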

The optimal number of clusters for each participant was determined using the elbow method [21]. This approach aligns with recent work by [22], who advocate a bottom-up framework for gesture categorization, one which prioritizes physical motion dynamics over semantic intent. To our knowledge, this approach has not been systematically replicated in household interaction contexts, marking a novel contribution of our study. Each cluster was then assigned an information value using Shannon’s Information Theory [23], with probability distributions calculated for gestures performed by individual subjects (following a similar idea from [24]). This method quantifies the informativeness of gestures by evaluating the likelihood of their occurrence. The information content of a gesture can be computed using the formula:

$$I(x) = -\log P(x)$$

Here, I(x) is the information carried by gesture x, and P(x) is the probability of the gesture occurring. This formula measures how much information is conveyed by each gesture, with less likely gestures carrying more information. From a pragmatic perspective, high-I(x) gestures function as signals that convey novel or disambiguating information, while low-I(x) gestures represent predictable signals that only reinforce existing context. For statistical analysis, we used the Python scipy.stats library to calculate mean, median, quartiles, variance, and Spearman correlation.
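The information measure described above can be sketched in a few lines. The sketch assumes that each gesture has already been assigned a cluster label, that probabilities are estimated as relative frequencies within one subject, and that the logarithm is taken to base 2 (bits); the base and the function name are assumptions, since the text does not state them.

```python
import math
from collections import Counter


def information_values(labels):
    """Map each gesture's cluster label to its self-information in bits.

    labels: cluster labels for one subject's gestures, in order of occurrence.
    """
    counts = Counter(labels)
    total = len(labels)
    # ASSUMPTION: base-2 logarithm; P(x) is the relative frequency of cluster x.
    info = {c: -math.log2(n / total) for c, n in counts.items()}
    return [info[c] for c in labels]
```

For example, a cluster that accounts for half of a subject's gestures carries 1 bit per gesture, whereas a cluster that accounts for a quarter carries 2 bits, which matches the statement that rarer gestures are more informative.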

4.1.2. Results

Distributional Information

This section summarizes the distributional information obtained through the analysis. Details include cluster assignments, feature distributions, and statistical measures calculated during the data processing phase.

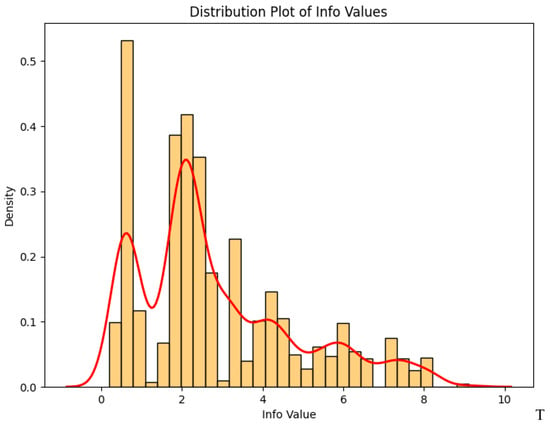

Below, the tables present summary statistics for the gesture dataset (displayed in Figure 4). Table 3 provides descriptive measures of the information values assigned to gestures, including the mean, median, range, variance, standard deviation, and interquartile range (IQR). Table 4 summarizes gesture counts, presenting the total number of gestures, the average number of gestures per subject, as well as the minimum and maximum values.

Figure 4.

Distribution of gesture information values.

Table 3.

Summary statistics for gesture information values.

Table 4.

Summary statistics for gesture counts.

Observations Based on the Analysis

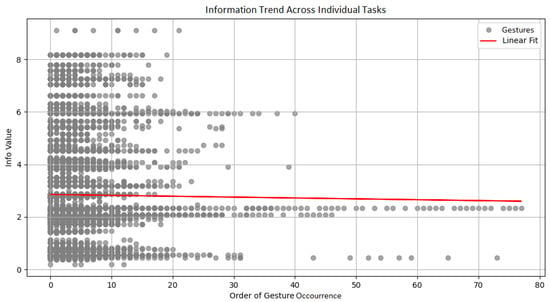

Observation 1: As an individual produces more gestures over time, the selection of gesture types conveys a statistically stable amount of information (Figures 5 and 6).

Within Individual Tasks:

Figure 5.

Scatter plot of gesture order information value across single tasks with linear model imposed.

- Spearman correlation: 0.006 (p = 0.6)

- Summary: No other relevant metrics reached statistical significance. Taken individually, 14 out of 26 subjects showed a negative Spearman correlation.

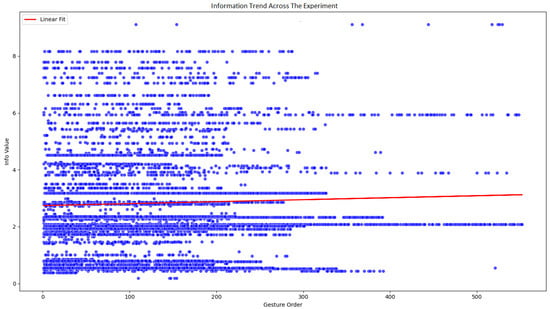

Across the Entire Experiment

Figure 6.

Scatter plot of gesture order information value across the entire experiment with linear fit. Blue dots are individual gestures.

- Spearman correlation: 0.039 (p = 0.003)

- Summary: no statistical significance was reached in other relevant metrics. Taken individually, 13 out of 26 subjects showed a negative Spearman correlation.
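The trend statistics reported above can be reproduced in outline as follows. This is a sketch under the assumption that each subject's information values are stored in gesture order; the function names and the data layout are hypothetical.

```python
import numpy as np
from scipy.stats import spearmanr


def order_trend(info_values):
    """Spearman correlation between gesture order (1, 2, ...) and information value."""
    order = np.arange(1, len(info_values) + 1)
    rho, p_value = spearmanr(order, info_values)
    return float(rho), float(p_value)


def count_negative_trends(per_subject):
    """Count subjects whose information values decline with gesture order.

    per_subject: dict mapping subject id -> list of information values in order.
    """
    return sum(order_trend(values)[0] < 0 for values in per_subject.values())
```

Note that `spearmanr` returns NaN when one input is constant, so a subject with a single gesture cluster would need to be handled separately.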

Observation 2: age- and gender-based trends emerged in gestural communication dynamics. Older participants and male participants exhibited a gradual decline in the amount of information carried by gestures over the course of the experiment, whereas younger participants and female participants maintained or increased their gestural informativeness over time, though individual variability persisted across groups (Table 5).

Table 5.

Correlation analysis for predictors of gesture information value trends.

Pearson’s correlation was used to test whether social skills predicted trends in gesture information value across the trials, whereas Spearman correlation was used for non-continuous metrics.

4.1.3. Discussion

In contrast to prior studies on dynamic gesture detection using k-means clustering [20], which deemed the method unreliable, our findings were positive. Subjects exhibited an average of 4.58 distinct gesture types (ranging from 3 to 6). Given that the experimental setting was limited to pointing gestures, this small variety is to be expected. The number of detected gestures was also accurate: it aligned with what was expected given the task requirements and the manual coding. Furthermore, the data revealed a consistent pattern in gesture occurrence throughout the experiment, with few significant gaps.

The boxplot of information values, along with manual verification, revealed the expected split between non-informative “beat” gestures—clustered near zero—and informative gestures, which extend rightward to include all pointing variations. In addition, further manual checks confirmed that distinct pointing styles (e.g., two-hand pointing) were reliably separated from other gestures.

The discrepancy between our findings and the previous literature can be attributed to our use of 12 dimensions, as opposed to 16 in prior work [20]. Overall, our findings show that k-means clustering works not only for static gestures but also for dynamic ones [25].

Intuitively, gestures with high information value should reflect a higher cognitive load on the participant. Indeed, it has been shown that as tasks become easier or more familiar, gesture informativeness decreases [26]. However, our global data show an essentially stable information value throughout the session (Figure 6; ρ = 0.039, p = 0.0032): the correlation is statistically significant but negligible in magnitude. This might reflect the experiment’s short duration (45 min), small sample size, unfamiliar or low-validity tasks, and participants’ lack of awareness of the overall teaching goal. These factors could have prompted sustained or even increased effort, and thus sustained information in gestures.

Despite the absence of a clear statistically significant trend across individual tasks, individual differences were evident in the data. Indeed, about half of the subjects (13 out of 26) showed an expected decline in gesture information, while the rest showed no change or an increase, masking any uniform trend.

We then looked for predictors of these individual differences. Social skills had no significant effect, and higher education showed only a very slight (and non-significant) correlation with decreasing information (correlation = –0.037, p = 0.08), perhaps hinting at unmeasured factors, like fluid intelligence.

The strongest predictors of trends in the information values were the gender and age of participants. The small male subgroup (n = 7) showed a decline in gestural information over time, whereas females (n = 27) maintained or increased informativeness. This could be an effect of social pressure, as female-identifying people have been reported to be more attentive and to feel greater pressure during experiments [27], possibly leading to more informative gestures. For instance, a similar gender effect on infant gesture frequency was observed [28]. However, the imbalance in our gender distribution precludes definitive conclusions. Older participants likewise exhibited a decrease in gestural information value throughout the study. This aligns with an intuitive explanation that greater social experience allows quicker adaptation to the experimental setting, leading to less informative gestures over time. However, this contradicts our Social Skills Inventory results, where age did not correlate with social skills. Thus, the age effect on information trends likely stems from cognitive changes other than social skill differences.

4.2. Manual Annotation Using MAXQDA Software

As explained earlier, we conducted the analysis in two ways; in this section, we present the one based on manual annotations using MAXQDA software. We first describe the coding procedure and the subset of the dataset it was applied to, followed by an analysis aimed at identifying tendencies and differences in participants’ gestures. We conclude with a discussion.

4.2.1. Coding Procedure

Two independent coders carried out the manual annotation process. To ensure consistency and reliability, they participated in a joint training session and developed a standardized set of coding guidelines (listed below). Coding was performed in two iterations to reach satisfactory intercoder agreement. After the first round, Cohen’s kappa was 0.2. In response, the coders refined the rules and independently annotated more videos. The second round yielded a substantially improved kappa of 0.78. Due to the short duration of gestures, the overlap threshold was adjusted from 95% to 80%, increasing potential misalignment from 0.16 to 0.32 s, which was deemed acceptable given the median gesture duration of 1.6 s.
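For readers unfamiliar with the agreement statistic, the following sketch shows how Cohen's kappa is computed from two coders' labels. It assumes the coded segments have already been matched one-to-one (the overlap-based matching performed in MAXQDA is not reproduced here), and the function name is hypothetical.

```python
from collections import Counter


def cohen_kappa(coder_a, coder_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(coder_a)
    # Observed agreement: share of segments given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: from each coder's marginal code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1.0 - expected)
```

Kappa corrects raw agreement for the agreement expected by chance, which is why it can be low (as in the first coding round) even when coders agree on many segments.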

The coding protocol included:

- Layered coding: videos were annotated in layers, starting with general body language and progressing to specific gestures.

- Gesture boundaries: gestures were marked from the onset of movement to completion.

- Comprehensive annotation: all involved body parts were annotated (e.g., fingers, gaze, head).

- Temporal coding: the full gesture duration was marked, including overlapping gestures.

- Repetition or pausing: these were annotated with reference to the specific part or full gesture.

- Hand switching: coders noted when participants switched hands during a gesture.

Codes were developed during preliminary analysis based on prior studies in the literature (mainly McNeill [5]). For pointing gestures, at least one code from each subcategory was required, covering number of objects, sequence, hand use, and body part used. ‘Other’ codes were optional (Table 6). Examples of coded gestures are shown in Figure 7.

Table 6.

Condensed coding scheme for gestural data.

Figure 7.

Exemplar gestures from the database with their codes. From the left: one object, both hands, whole hand; one object, one hand, index finger; chin rubbing.

Fifteen participants were manually coded with 476 videos. Forty videos were missing due to technical problems.

4.2.2. MAXQDA Analysis Results and Discussion

Frequency of Code Occurrence

Table 7 shows how often each code appeared in the dataset, indicating in how many videos a given code was observed. The most frequent code was eyes/gaze, which was present in 98.11% of videos. In some cases, participants deviated from the instructions by describing commands verbally or directing their gaze toward the assistant during pointing.

The second most common code was pointing with one hand, which was found in 94.12% of videos, highlighting a clear preference for this gestural modality.

The code one object (from the “Sequence of actions” category) appeared in 62.61% of videos, followed closely by point at an object (under “Number of objects”) and index finger (under “Part of the body”), each at 61.76%.

These similar frequencies point to a consistent pattern: participants typically pointed at a single object using one hand and their index finger (Table 7).

Table 7.

Top 10 and bottom 10 codes by frequency (middle entries omitted).

Co-Occurrence of Codes

Using MAXQDA, we analyzed code co-occurrence across videos, divided as follows:

1. Co-occurrence of eyes/gaze gesture in all codes (intersection of codes in a segment)

The eyes/gaze code, the most frequent, co-occurred extensively—for instance, with one hand 2024 times and one object 1322 times. Due to its prevalence, no further details are reported.

2. Co-occurrence of pointing gestures at the same time

Pointing at one object: when pointing at one object, one hand co-occurred 1157 times (87.39% of the instances). Additionally, index finger appeared 715 times (54%) and whole hand appeared 611 times (46%). This suggests that pointing at one object is generally performed with one hand, but the hand’s specific part, either the index finger or the whole hand, is almost evenly distributed.

Pointing at two objects: for two-object pointing, one hand appeared 748 times (88% of cases). Index finger and whole hand co-occurred 520 times (61.5%) and 341 times (40%), respectively. The total exceeds the occurrences of two-object pointing because participants occasionally repeated pointing (recorded 161 times) and switched between hand parts. Notably, repeated pointing at the same object co-occurred 213 times with index finger (76%).

Pointing at more than two objects: when pointing at more than two objects, the most frequently co-occurring body part was one hand, appearing 158 times, which accounts for 76.7% of the cases. Furthermore, pointing at more than two objects co-occurred with index finger 136 times (66%) and whole hand 91 times (44.2%).

Overall, repetitive pointing at the same object most frequently co-occurred with one hand (269 times, 96.8%).

3. Co-occurrence of body language within a 1-second window

The leaning forward code shows high co-occurrence with various pointing gestures, such as whole hand (590 times), one hand (363 times), and index finger (199 times), highlighting its strong association with these gestures.

Similarly, tilting head frequently co-occurred with pointing codes, particularly with one hand (391 times) and whole hand (295 times), indicating its role in active engagement. In contrast, less frequent gestures like hair touching or chin rubbing are categorized as self-comforting and differ from the interaction-oriented leaning forward and tilting head.

4. Co-occurrence of beats and iconic/metaphorical gestures (excluding pointing) in a 1-second frame

Iconic/metaphorical and beat gestures were relatively rare. To analyze their co-occurrence with other codes, we excluded pointing gestures. Co-occurrence was determined by codes appearing within 1 s of each other.

The iconic/metaphoric gesture co-occurred 11 times with one hand up/on the chest (out of 33 occurrences), 8 times with nodding yes, and 6 times with one hand up.

Head beat movement co-occurred 34 times with tilting head, with some instances showing double co-occurrence in the same tilting head instance. Additionally, two hands beat most frequently coincided with playing with hands (10 times) and moving the whole body (8 times), while one hand beat co-occurred 14 times with one hand up/on the chest.

Overall, beat gestures associated with a particular body part often co-occurred with other gestures involving the same area, suggesting a localized interaction between these behaviors.
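The 1-second windowing used for the body-language and beat co-occurrence counts above could be sketched as follows. The annotation layout (code, start time, end time in seconds) and the function name are assumptions for illustration; MAXQDA's own proximity analysis may define the window differently.

```python
from collections import Counter
from itertools import combinations


def cooccurrences(annotations, window=1.0):
    """Count pairs of different codes that overlap or lie within `window` seconds.

    annotations: list of (code, start_s, end_s) tuples for one video.
    Returns a Counter keyed by alphabetically sorted code pairs.
    """
    counts = Counter()
    for (c1, s1, e1), (c2, s2, e2) in combinations(annotations, 2):
        if c1 == c2:
            continue
        # Gap between the two intervals; negative when they overlap.
        gap = max(s1, s2) - min(e1, e2)
        if gap <= window:
            counts[tuple(sorted((c1, c2)))] += 1
    return counts
```

Setting `window=0.0` restricts the count to codes that overlap or touch in time, which corresponds more closely to the intersection-based counts reported for pointing gestures.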

Individual Differences in Gesture Use

This section presents an analysis of individual subject profiles to examine variability in the use of pointing and supplementary gestures. Fifteen subjects were analyzed with gestures coded using MAXQDA (Table 8).

Table 8.

Individual analysis of pointing gestures.

Total Number of Pointing Gestures

Most participants used between 100 and 200 gestures (mean = 156.53; SD = 67.89). However, three participants used 221, 277, and 312 gestures, while two others used significantly fewer (63 and 40 gestures).

Gestures Pointing at One vs. Multiple Objects

Most participants predominantly pointed at a single object (Table 8). Only two primarily gestured toward multiple objects in sequence, likely influenced by varying experimental instructions.

Hand Preference in Pointing

Fourteen out of fifteen participants preferred using one hand when pointing, with only one consistently using both hands (Table 8).

Method of Pointing

Nine participants primarily used their index finger, while six used their entire hand (Table 8). These preferences were consistent, with all participants integrating gaze coordination into their gestures—nine used it in 100% of their gestures, and the remaining six ranged from 81.4% to 99.7% (Table 8).

Gesture Pauses and Repetitions

Pausing or repeating gestures occurred in a few cases. Three participants paused 78, 68, and 58 times, with the other twelve doing so 25 times or fewer. For repetitions, two participants did so 65 and 43 times, three between 30 and 36 times, and the remaining ten 20 times or fewer (Table 8).

Most Common Additional Gestures

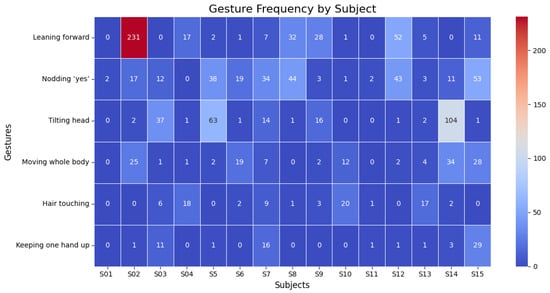

Regarding additional gestures, six were identified as the most common among the participants: leaning forward (387 instances in all participants), nodding “yes” (282 instances), tilting the head (243 instances), moving the whole body (137 instances), touching hair (78 instances), and keeping one hand raised (64 instances). However, the frequency of these gestures varied significantly between individuals.

For leaning forward (mean = 25.80; SD = 58.78), Subject 2 performed this gesture 231 times, followed by Subject 12 with 52 instances, while most other participants exhibited it far less frequently, as shown in Figure 8. Nodding “yes” (mean = 18.80; SD = 18.58) was more evenly distributed, with five participants performing it frequently (38–53 times), while others rarely did so. Similarly, tilting the head (mean = 16.13; SD = 29.98) was predominantly observed in one participant (104 instances), with two others performing it 63 and 37 times, respectively. Moving the whole body (mean = 9.13; SD = 11.64) was also concentrated among a few individuals, with three participants doing so between 25 and 34 times. For the last two types of gestures, hair touching (mean = 5.27; SD = 7.24) was the most common among three of the subjects, and keeping one hand up (mean = 4.27; SD = 8.28) among two. The detailed data are presented in Figure 8.

Figure 8.

A bar chart summarizing the distribution of hand usage in pointing gestures, highlighting the proportion of gestures performed with one hand versus with both hands by participants.
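Several of the standard deviations reported above exceed the corresponding means, which is typical of count data dominated by one or two individuals. The following minimal sketch illustrates the effect. The per-participant counts are hypothetical and are not the study data; only the total (387) and the two largest values (231 and 52) are taken from the leaning-forward results, and the use of the sample standard deviation is an assumption.

```python
from statistics import mean, median, stdev


def summarize(counts):
    """Return the mean, sample SD, and median of per-participant counts."""
    return {"mean": mean(counts), "sd": stdev(counts), "median": median(counts)}


# Hypothetical counts for 15 participants (NOT the study data).
# Only the sum (387) and the two largest values (231, 52) come from the text.
counts = [231, 52, 20, 15, 12, 10, 9, 8, 7, 6, 5, 5, 4, 2, 1]

summary = summarize(counts)

# Dropping the single most frequent user shows how strongly one
# participant drives both the mean and the spread.
summary_without_top = summarize(sorted(counts)[:-1])

print(summary)
print(summary_without_top)
```

In such skewed distributions the median describes the typical participant better than the mean, which is one reason the per-participant breakdown is informative alongside the aggregate statistics.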

4.2.3. Discussion

The analysis of pointing gestures shows both common patterns and differences between individuals, which matches earlier research. As Özer and Göksun [10] point out, gestures are shaped by habits, cultural backgrounds, and thinking styles. Most of the participants in our study were consistent in how often and how they used gestures, often choosing single-handed pointing combined with gaze. This supports Cochet and Vauclair’s [12] statement that people tend to stick to a specific gesture style over time, while contrasting with the results of Choi et al. [18], who showed changes across the most frequent gestures. The use of gaze while pointing also matches earlier studies [14,15], showing its importance in directing attention and creating shared focus during communication.

However, we also noticed differences between participants. Some used gestures more often or chose different styles, such as two-handed pointing. This reflects how people’s individual strategies and the way they understand tasks affect their gestures, as Clough and Duff [11] suggest. Most gestures were aimed at single objects, showing the impact of task instructions, while pauses and repeated gestures seemed to help participants clarify their intentions or deal with uncertainty. These findings show how individual habits and task demands work together to shape gestures.

We also found differences in other types of gestures. For example, leaning forward was the most common gesture overall, but one person used it much more than the others. This fits with Clough and Duff’s [11] idea that personal habits influence people’s gestures. Only a few participants used other gestures, such as nodding, tilting the head, or moving the whole body, showing that these behaviors reflect personal ways of communicating or staying engaged. As Özer and Göksun [10] noted, gestures are better studied at the individual level than through a search for universal patterns.

These findings highlight the need for interactive systems, such as household robots, that can adapt to different gestural styles. Tools that include gesture and gaze recognition could make interactions more natural and effective. The stability in how people use gestures also aligns with previous research [13], suggesting that these patterns could help track development or spot unusual behaviors.

5. Conclusions

Our two complementary analyses converge on a single design principle for gesture-based household robots: combining a universal recognition backbone with an adaptive personalization module.

The universal backbone, trained on a diverse set of users, provides out-of-the-box coverage for the majority of gestural commands. We demonstrated that, during routine household tasks, users overall maintain a consistent level of information conveyed through their pointing gestures, and that a standardized gesture taxonomy (derived from our manual annotations) applies reliably across participants, at least for cultural backgrounds similar to that of our sample.

At the same time, both analyses revealed substantial individualization. Some users altered their gesture information values as they became more familiar with the task; our post-hoc correlations linked these trends to demographic factors. Likewise, although the participants shared a core gesture repertoire, each one exhibited a distinctive, internally consistent style across trials.

Taken together, these findings argue for a two-tiered architecture:

- Generalized backbone: captures the bulk of user commands with minimal calibration.

- Adaptive personalization module: actively learns each user’s unique gesture “signature” during early interactions and adjusts recognition thresholds (e.g., minimum motion magnitude, finger-extension angles) to preserve accuracy over time.

By uniting broad applicability with on-the-fly customization, future household robots can deliver both robust performance for new users and refined accuracy for long-term personalized interaction.
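The personalization tier described above can be sketched as a threshold that starts at the backbone's global default and drifts toward each user's own range as confirmed gestures are observed. This is only an illustration under stated assumptions, not the system used in the study: the class name `AdaptiveThreshold`, the exponential-moving-average update, and the parameters `learning_rate` and `margin` are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdaptiveThreshold:
    """One recognition threshold (e.g., minimum motion magnitude).

    Hypothetical sketch: falls back to the global default until the
    user has produced at least one confirmed gesture.
    """

    global_default: float              # value shipped with the generalized backbone
    learning_rate: float = 0.2         # assumed adaptation speed (0 = never adapt)
    margin: float = 0.8                # accept down to 80% of the user's typical value
    user_typical: Optional[float] = None

    def update(self, observed: float) -> None:
        """Fold one confirmed gesture measurement into the user estimate."""
        if self.user_typical is None:
            self.user_typical = observed
        else:
            # Exponential moving average toward the new observation.
            self.user_typical += self.learning_rate * (observed - self.user_typical)

    @property
    def value(self) -> float:
        """Current threshold: global default, or a fraction of the user's typical value."""
        if self.user_typical is None:
            return self.global_default
        return self.margin * self.user_typical

    def accepts(self, observed: float) -> bool:
        """Return True if a measurement passes the current threshold."""
        return observed >= self.value


# A user whose pointing movements are smaller than the global default expects.
threshold = AdaptiveThreshold(global_default=0.30)
before = threshold.accepts(0.20)       # judged against the global default
for magnitude in (0.20, 0.22, 0.18):   # confirmed gestures from early interactions
    threshold.update(magnitude)
after = threshold.accepts(0.20)        # judged against the personalized threshold
```

A moving average is used here because it needs no stored history and keeps following the user if their style drifts; a deployed system would also need a way to confirm which observations were genuine gestures before updating.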

6. Limitations and Future Research

The characteristics of our participant group may have influenced the findings and reduced their generalizability. First, all participants were native Polish speakers and thus represented only one nationality. Second, we conducted the study in English; although all participants passed the A1 test, speaking in an L2 is known to influence gesture behavior [29]. Third, the sample of 34 participants was relatively small, which particularly limits subgroup analyses such as the gender differences we observed (79.4% of participants were female). Future studies should explore whether the consistency and trends we observed hold across cultures and in larger, more diverse cohorts.

Regarding the computational analysis, any trends in the information conveyed through gestures were expected to be weak, so the relatively small sample size and the short study duration may have limited the strength of the findings. While the study’s focus on pointing gestures aligns with its primary goals, it may have overlooked more nuanced trends in gesture usage. It is possible that in more complex and open-ended tasks, participants would restrict their actions by relying on their most natural gesture vocabulary. A more open experimental design could reveal more distinct individual-specific traits in gesture behavior, providing deeper insight into personalized patterns of non-verbal communication.

Manual annotation introduced certain limitations, as gesture interpretation is context- and experience-dependent. Despite the training provided and the established coding rules, future research should aim to achieve a higher inter-coder agreement score and incorporate randomization of video assignments. In this study, the annotation process was divided by participant; for example, Coder 1 annotated data from some participants, while Coder 2 worked on data from others. Finally, the study did not take cultural factors into account, even though culture can affect how people use gestures. Future studies could investigate how culture impacts individual gesture patterns to deepen our understanding of non-verbal communication.
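The two annotation improvements suggested above, measuring inter-coder agreement and randomizing video assignments, can be sketched as follows. The text does not state which agreement statistic was used, so Cohen's kappa for two coders is an assumption here, and the function names, coder names, label values, and `overlap` parameter are hypothetical.

```python
import random
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two coders who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both coders must label the same non-empty set of items.")
    n = len(labels_a)
    # Proportion of items on which the coders agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance from each coder's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical label throughout
    return (observed - expected) / (1 - expected)


def assign_videos(video_ids, coders, overlap, seed=0):
    """Randomly split videos among coders.

    The first `overlap` videos after shuffling are given to every coder
    so that agreement can be computed on them; the rest are dealt out
    in turn.
    """
    rng = random.Random(seed)
    shuffled = list(video_ids)
    rng.shuffle(shuffled)
    shared, rest = shuffled[:overlap], shuffled[overlap:]
    assignment = {coder: list(shared) for coder in coders}
    for i, video in enumerate(rest):
        assignment[coders[i % len(coders)]].append(video)
    return assignment


# Hypothetical labels for four gestures annotated by both coders.
coder_1 = ["index", "index", "hand", "hand"]
coder_2 = ["index", "index", "hand", "index"]
kappa = cohens_kappa(coder_1, coder_2)

plan = assign_videos(range(1, 16), ["coder_1", "coder_2"], overlap=3)
```

Reserving a shared subset keeps the extra annotation cost small while still allowing agreement to be estimated on videos that both coders have seen.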

Since gestures lie on a spectrum in terms of their dependence on accompanying speech [3], it is paramount to take speech into consideration for future analyses. This will enable us to add another dimension to distinguish how different participants point at objects and instruct the assistant or a robot.

Author Contributions

Conceptualization, T.K.; methodology, T.K., A.W., B.S., and J.K.; software, A.G.C.G., and P.G.; formal analysis, T.K., A.W., and B.S.; investigation, T.K., A.W., B.S., J.K., and A.G.C.G.; data curation, T.K., and A.G.C.G.; writing—original draft preparation, T.K., A.W., B.S., and J.K.; writing—review and editing, A.G.C.G., P.G., and B.I.; supervision, B.I.; project administration, B.I.; funding acquisition, B.I. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science Centre, Poland, grant number 2021/43/I/ST6/02489.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Research Ethics Committee at the Faculty of Philosophy, Jagiellonian University (approval no. 221.0042.15_2024).

Data Availability Statement

The gesture analysis pipeline is available at https://github.com/tymonstudent/Gesture-Analysis (accessed on 6 June 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Uba, J.; Jurewicz, K.A. A review on development approaches for 3D gestural embodied human-computer interaction systems. Appl. Ergon. 2024, 121, 104359.

2. Cooperrider, K. Fifteen ways of looking at a pointing gesture. Public J. Semiot. 2023, 10, 40–84.

3. Kendon, A. Do gestures communicate? A review. Res. Lang. Soc. Interact. 1994, 27, 175–200.

4. Kelmaganbetova, A.; Mazhitayeva, S.; Ayazbayeva, B.; Khamzina, G.; Ramazanova, Z.; Rahymberlina, S.; Kadyrov, Z. The role of gestures in communication. Theory Pract. Lang. Stud. 2023, 13, 2506–2513.

5. McNeill, D. Hand and Mind: What Gestures Reveal About Thought; University of Chicago Press: Chicago, IL, USA, 1992.

6. Kita, S. Pointing: Where Language, Culture, and Cognition Meet; Psychology Press: East Sussex, UK, 2003.

7. Eibl-Eibesfeldt, I. Human Ethology; Routledge: London, UK, 2017.

8. Wilkins, D. Why pointing with the index finger is not a universal (in sociocultural and semiotic terms). In Pointing; Psychology Press: East Sussex, UK, 2003; pp. 179–224.

9. Cooperrider, K.; Slotta, J.; Núñez, R. The preference for pointing with the hand is not universal. Cogn. Sci. 2018, 42, 1375–1390.

10. Özer, D.; Göksun, T. Gesture use and processing: A review on individual differences in cognitive resources. Front. Psychol. 2020, 11, 573555.

11. Clough, S.; Duff, M.C. The role of gesture in communication and cognition: Implications for understanding and treating neurogenic communication disorders. Front. Hum. Neurosci. 2020, 14, 323.

12. Cochet, H.; Vauclair, J. Deictic gestures and symbolic gestures produced by adults in an experimental context: Hand shapes and hand preferences. Laterality 2014, 19, 278–301.

13. Clements, C.; Chawarska, K. Beyond pointing: Development of the “showing” gesture in children with autism spectrum disorder. Yale Rev. Undergrad. Res. Psychol. 2010, 2, 1–11.

14. Cappuccio, M.L.; Chu, M.; Kita, S. Pointing as an instrumental gesture: Gaze representation through indication. Humana.Mente J. Philos. Stud. 2013, 24, 125–149.

15. Butterworth, G.; Itakura, S. How the eyes, head and hand serve definite reference. Br. J. Dev. Psychol. 2000, 18, 25–50.

16. Blodow, N.; Marton, Z.C.; Pangercic, D.; Rühr, T.; Tenorth, M.; Beetz, M. Inferring generalized pick-and-place tasks from pointing gestures. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011.

17. Cosgun, A.; Trevor, A.J.B.; Christensen, H.I. Did you mean this object?: Detecting ambiguity in pointing gesture targets. In Proceedings of the Workshops of the 2015 ACM/IEEE International Conference on Human-Robot Interaction, HRI’15 Workshop, Portland, OR, USA, 2 March 2015.

18. Choi, E.; Kwon, S.; Lee, D.; Lee, H.; Chung, M.K. Towards successful user interaction with systems: Focusing on user-derived gestures for smart home systems. Appl. Ergon. 2014, 45, 1196–1207.

19. Calli, B.; Singh, A.; Bruce, J.; Walsman, A.; Konolige, K.; Srinivasa, S.; Abbeel, P.; Dollar, A.M. Yale-CMU-Berkeley dataset for robotic manipulation research. Int. J. Robot. Res. 2017, 36, 261–268.

20. Maat, S. Clustering Gestures Using Multiple Techniques. Ph.D. Thesis, Tilburg University, Tilburg, The Netherlands, 2020.

21. Humaira, H.; Rasyidah, R. Determining the appropriate cluster number using elbow method for k-means algorithm. In Proceedings of the 2nd Workshop on Multidisciplinary and Applications (WMA) 2018, Padang, Indonesia, 24–25 January 2018.

22. Jurewicz, K.A.; Neyens, D.M. Redefining the human factors approach to 3D gestural HCI by exploring the usability-accuracy tradeoff in gestural computer systems. Appl. Ergon. 2022, 105, 103833.

23. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

24. Pagán Cánovas, C.; Valenzuela, J.; Alcaraz Carrión, D.; Olza, I.; Ramscar, M. Quantifying the speech-gesture relation with massive multimodal datasets: Informativity in time expressions. PLoS ONE 2020, 15, e0233892.

25. Ghosh, D.K.; Ari, S. A static hand gesture recognition algorithm using k-mean based radial basis function neural network. In Proceedings of the 2011 8th International Conference on Information, Communications & Signal Processing, Singapore, 13–16 December 2011; pp. 1–5.

26. Alibali, M.W.; Hostetter, A.B. Gestures in cognition: Actions that bridge the mind and the world. In The Cambridge Handbook of Gesture Studies; Cienki, A., Ed.; Cambridge University Press: Cambridge, UK, 2024; pp. 501–524.

27. Cahlikova, J.; Cingl, L.; Levely, I. How stress affects performance and competitiveness across gender. Manag. Sci. 2020, 66, 3295–3310.

28. Silletti, F.; Barbara, I.; Semeraro, C.; Salvadori, E.A.; Difilippo, M.; Ricciardi, A. Pointing gesture: Sex, temperament, and prosocial behavior. A cross-cultural study with Italian and Dutch infants. In Proceedings of the SRCD 2021 Biennial Meeting, Virtual, 7–9 April 2021.

29. Gullberg, M. The Relationship Between Gestures and Speaking in L2 Learning. In The Routledge Handbook of Second Language Acquisition and Speaking, 1st ed.; Trevise, N., Munoz, C., Thomson, M., Eds.; Routledge: London, UK, 2022; p. 13.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).