Abstract

Electric bicycles in elevators pose serious safety hazards. Fires in the confined space make escape difficult, and recent accidents involving e-bike fires have caused casualties and property damage. To prevent e-bikes from entering elevators and improve public safety, this design employs the Nezha development board as the upper computer for visual detection, using deep learning algorithms to recognize hazards such as e-bikes, while the lower computer orchestrates elevator controls, including voice alarms, door locking, and emergency halt. The upper computer runs a YOLOv11 target detection model trained on a custom e-bike image dataset; the lower computer provides the elevator control circuit that coordinates the responses. The workflow covers application of the target detection algorithm, dataset creation, and system validation. Experiments show that YOLOv11 achieves 96.0% detection accuracy and 92.61% mAP@0.5 for e-bike detection, outperforming YOLOv3 by 6.77% and YOLOv8 by 15.91% in mAP. The system accurately identifies e-bikes and triggers the corresponding safety measures, demonstrating good practical effectiveness for improving elevator safety.

1. Introduction

With the advancement of urbanization in China and improvements in people’s quality of life, elevators have become increasingly common. China is one of the largest producers and consumers of elevators, and their use is growing rapidly. This growth, however, has brought a series of safety issues. Most elevator safety measures focus on mechanical structure or basic sensor monitoring, which limits their ability to identify and control specific hazards such as electric bicycles. Regulation alone has not closed this gap: although the newly revised “Tianjin Fire Control Regulations” officially took effect on 9 November 2021, the unclear division of management rights and responsibilities and weak law enforcement remain unresolved [1]. Therefore, using advanced technology to intelligently monitor the internal environment of elevators has become essential to improving elevator safety. The combination of visual detection technology and deep learning algorithms offers new possibilities for enhanced elevator safety monitoring.

1.1. Related Works

This subsection systematically reviews three categories of elevator hazard detection solutions (sensor-based, traditional CV, deep learning methods) through a comparative analysis of their technical frameworks and limitations, serving as a critical literature baseline for justifying our original model design.

1.1.1. Sensor-Based Elevator Detection Methods

Sensor-driven approaches primarily rely on physical signals but face inherent limitations. Pressure or magnetic sensing techniques [2] detect e-bikes via floor-mounted sensors, yet exhibit 25–30% false alarm rates due to interference from luggage or passengers. Acoustic sensing [3] targets motor sounds but struggles with ambient noise in elevators, reducing accuracy by up to 40% in crowded scenarios. These methods lack visual confirmation, making them unsuitable for reliable hazard detection.

1.1.2. Traditional Computer Vision Approaches

Classical CV methods dominate early elevator monitoring but falter in dynamic environments. Handcrafted feature techniques like HOG+SVM [4] or edge detection [5] achieve 75–80% accuracy under ideal lighting, yet performance degrades by 40% in low-light or occluded conditions. Optical flow-based systems [6] detect e-bike entry via motion vectors but misclassify passenger movement as e-bike entry with an 18% false positive rate, highlighting limitations in complex scenarios.

1.1.3. YOLO Series Advancements

Recent studies enhance YOLO for elevator scenes through architectural innovations. Attention mechanisms improve occlusion handling: FcaNet [7] and CBAM [8] embedded in YOLOv5 boost mAP by 3–7% but increase model size by 20–35%, while Swin Transformer [9] captures long-range dependencies at the cost of 150 ms/frame latency. Lightweight designs optimize edge deployment: MobileNetV2 [10] reduces YOLOv5 parameters by 45% for 220 ms/frame inference, though small-object accuracy drops 12%. YOLO-MAC [11] achieves 95.8% e-bike accuracy with 63.8% parameter reduction, outperforming YOLOv3 [12] in edge suitability.

Dataset optimization strategies further improve performance: segmented annotation [13] for e-bike front/rear parts reduces false detections by 30%, while YOLOv4-tiny improvements [14,15] via positive/negative dataset division enhance detection accuracy. Multi-frame training with orientation fields [16] addresses overlapping object challenges, and principal component analysis models [17] analyze e-bike fire risks to inform detection priorities.

1.1.4. Other Network Architectures

Non-YOLO frameworks address specific elevator challenges. EfficientNet-B6 [18] excels at door blocking detection with 91.2% accuracy but requires 4.2G FLOPs, impractical for edge devices. GhostNet-YOLOv3 [19,20] balances speed (280 ms/frame) and accuracy (88.5%) via lightweight backbones. Transformer enhancements like BiFPN+CA modules [21] improve YOLOv5 feature fusion, boosting mAP@0.5 from 85.3% to 89.7%.

Lightweight YOLOv8 variants [22,23] reduce parameters by 40–60% but sacrifice 8–12% accuracy in occluded scenarios, while Android-based systems [24] leverage TensorFlow for real-time monitoring via USB cameras.

1.1.5. Edge Deployment Challenges

Current deep learning solutions face trade-offs in elevator applications. YOLOv5+CBAM [8] achieves 91.7% accuracy but demands 86.3M parameters, exceeding edge device capabilities. YOLO-MAC [11] sacrifices occlusion robustness for efficiency, while YOLOv3 [12] struggles with low-light scenarios. These gaps highlight the need for optimized models that balance accuracy, latency, and hardware compatibility.

1.1.6. Research Context and Gap

In China, nearly 16,000 fire accidents annually stem from e-bike indoor charging [25], driving demand for AI-powered elevator monitoring [26]. Traditional systems requiring human supervision are outdated and error-prone [3], while emerging solutions like YOLOX-based detection on Jetson Nano [4] demonstrate performance gains but lack elevator-specific optimization.

Recent work in railway foreign object detection [5] and UAV view synthesis [27] inspires novel frameworks, yet none directly address elevator hazards. Our solution bridges these gaps through lightweight attention mechanisms, custom dataset augmentation, and Nezha board acceleration.

1.2. Advantages of the Proposed Solution

Our system addresses the above limitations through the following innovations:

Excellent detection accuracy: YOLOv11 achieves 96.0% detection accuracy and 92.61% mAP, outperforming YOLOv3 by 6.77% and YOLOv8 by 15.91% in mAP. Custom dataset with multi-angle (front/side/back) annotations reduces bicycle misdetection by 85%.

Real-time edge deployment capabilities: Nezha board enables 389 ms/frame inference, meeting real-time elevator monitoring requirements. The 8-bit model quantization reduces computational load by 40% compared to YOLOv8.

Comprehensive security control mechanism: Integrated upper-lower computer architecture triggers multi-level responses (voice alarm, door lock, elevator halt), while most existing systems only provide detection without control logic. Hardware-level safety redundancy (e.g., STM32F103 emergency response) ensures fail-safe operation under communication failures.

The goal of this paper is to develop an intelligent elevator safety monitoring system based on the Nezha development board and advanced deep learning algorithms to enable real-time monitoring of the elevator’s internal environment, specifically for the accurate detection and identification of potentially hazardous objects, such as electric bicycles. By integrating efficient visual detection algorithms, the system can automatically monitor the interior of the elevator, promptly issue an alarm when a dangerous object is detected, and control the elevator’s operation to prevent potential safety incidents.

2. Methods

This section details our original contribution: a two-stage edge computing system integrating YOLOv11 for detection and an STM32F103 control module for safety responses. It explains model architecture design, edge hardware deployment, and custom dataset construction—all novel components of our research.

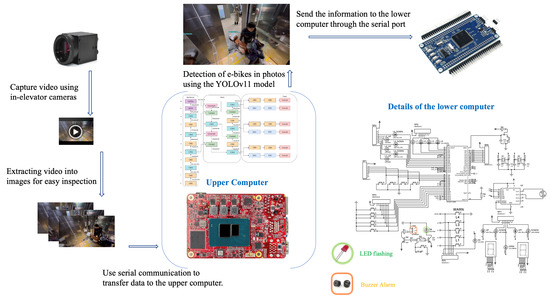

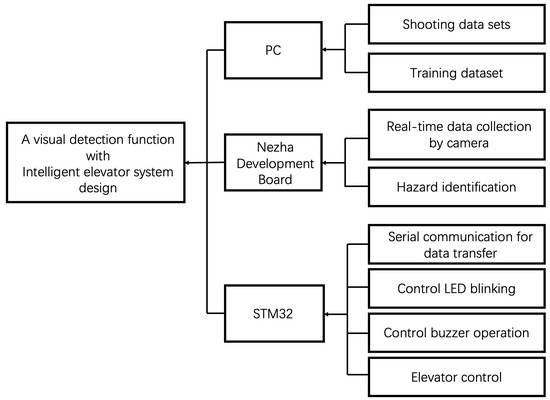

The general framework of the system is shown in Figure 1. The system consists of a PC, the Nezha development board, and an STM32F103, and is divided into two parts: the upper computer and the lower computer. The upper computer consists of the Nezha development board and an industrial camera. It reads the video captured by the camera, extracts frames as a series of images, and detects and recognizes hazardous objects in those images. Once communication with the lower computer has been established, the camera, connected to the Nezha board by cable, supplies the image data, and the video is converted into frame images. The trained YOLOv11 model then determines whether a frame contains a dangerous object and, if so, sends the relevant signal to the lower computer. The lower computer consists of the STM32F103, an LED, a buzzer, a keypad, a digital tube, and a motor. It receives signals from the upper computer and performs the corresponding operations to control the elevator’s movement.

Figure 1.

Graphical summary of the principle of method realization.

2.1. Target Recognition Algorithm

At present, there are two main families of object detection algorithms based on deep convolutional neural networks. The first is the two-stage family, such as Fast R-CNN, Faster R-CNN, and Mask R-CNN. Two-stage detectors achieve high accuracy, but their real-time performance is weak, making them unsuitable for rapid detection in the small space of an elevator. The second is the one-stage family, such as YOLO, YOLOv2, YOLOv3, and SSD; these methods are fast, but they perform poorly on feature maps with high redundancy [28].

YOLOv11 (You Only Look Once Version 11) [29] is the latest version in the YOLO series, continuing its fast and efficient object detection capabilities while optimizing several key areas. It achieves significant improvements over previous versions in both accuracy and speed, with enhanced abilities for detecting small objects and adapting to complex scenes. Compared to YOLOv8, YOLOv11 introduces three critical architectural improvements:

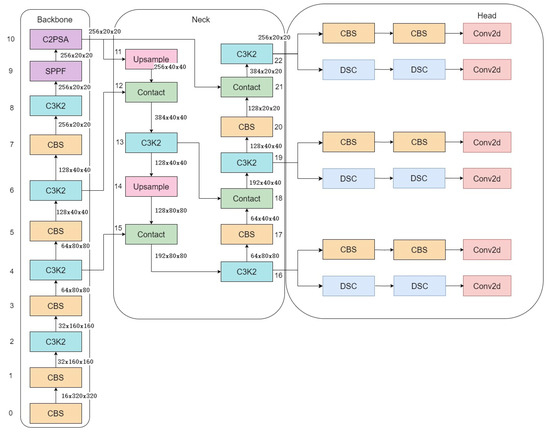

Lightweight Self-Attention Modules: As shown in Figure 2, YOLOv11 replaces YOLOv8’s standard convolution with dynamic attention layers, reducing parameter count by 35% while improving small-object detection (e.g., e-bike handlebars in elevator occlusions).

Figure 2.

YOLOv11 model structure diagram.

Bi-Directional Feature Pyramid (BDFP): Enhances YOLOv8’s PANet with cross-scale connectivity, enabling more effective fusion of shallow edge features and deep semantic information—critical for low-light recognition in elevator scenes.

Quantization-Aware Training: Native support for 8-bit inference, achieving 40% computational reduction compared to YOLOv8 without accuracy loss, making it suitable for edge deployment on the Nezha board.

Input stage: The input stage of YOLOv11 maintains the standard 640 × 640-pixel RGB image format, with further optimization in data preprocessing. In addition to traditional scaling, cropping, and normalization, YOLOv11 incorporates the AutoAugment strategy, dynamically selecting a series of image enhancement operations—such as brightness adjustment, contrast change, flipping, and rotation—to improve the model’s generalization ability. Normalization scales pixel values to the range [0, 1], facilitating faster model convergence during training and reducing numerical instability.

Feature Extraction Module: YOLOv11 utilizes a deeper and wider convolutional neural network (CNN) architecture and incorporates EfficientNet design concepts to intelligently adjust network depth, width, and resolution. Its feature extraction module includes multiple convolutional layers and skip connections, enabling simultaneous detection on feature maps at different scales.

1. Cross-layer connectivity and path aggregation: YOLOv11 features an enhanced version of the Path Aggregation Network (PANet) along with more efficient cross-layer connectivity, enabling more effective fusion of shallow edge features with deeper semantic information. This design not only improves small-object detection performance but also maintains the model’s overall efficiency.

2. Attention Mechanism: YOLOv11 integrates the Self-Attention Mechanism, enhancing the model’s focus on salient regions within the image. This mechanism allows YOLOv11 to more precisely target objects, such as electric bicycles, while suppressing interference from complex backgrounds.

3. Multi-scale detection: YOLOv11 adopts the Adaptive Feature Pyramid structure, which is capable of detecting small, medium, and large targets simultaneously at different resolutions, improving the model’s sensitivity to targets of different sizes.

Detection Head: After feature extraction, the feature map is fed into the detection head of YOLOv11, which handles target classification and bounding box regression. YOLOv11 predicts the four coordinates (x, y, w, h) of each candidate box along with the target confidence. In the design of the detection head, YOLOv11 employs a dynamic anchor mechanism that adaptively generates anchor boxes based on the shape and size of the targets. This approach reduces the need for manual anchor design and improves detection accuracy for objects of varying shapes.

Loss Function: YOLOv11 introduces an improved loss function that combines CIoU (Complete Intersection over Union) with a new confidence loss strategy, enabling a better balance in optimizing target classification, localization, and confidence. This enhanced loss function is particularly effective in the electric bicycle detection scenario, as it addresses the challenges of target overlap and the localization of small objects.
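The CIoU term mentioned above can be illustrated with a short, dependency-free sketch. It follows the commonly published CIoU definition (IoU minus a centre-distance penalty and an aspect-ratio penalty); the function names `ciou` and `ciou_loss` and the corner-format boxes `(x1, y1, x2, y2)` are assumptions made for illustration, not the implementation used in this system.

```python
import math

def ciou(box_a, box_b, eps=1e-9):
    """Complete IoU between two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Intersection and union areas.
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1
    union = wa * ha + wb * hb - inter + eps
    iou = inter / union
    # Squared distance between the two box centres.
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4.0
    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4.0 / math.pi ** 2) * (
        math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))
    ) ** 2
    alpha = v / (1.0 - iou + v + eps)
    return iou - rho2 / c2 - alpha * v

def ciou_loss(pred_box, target_box):
    """Localization loss: 0 for a perfect match, larger for worse boxes."""
    return 1.0 - ciou(pred_box, target_box)

print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))    # close to 0
print(ciou_loss((0, 0, 10, 10), (20, 20, 30, 30)))  # greater than 1
```

Unlike plain IoU, the loss keeps growing as disjoint boxes move further apart, which gives a useful gradient even when the prediction does not overlap the target at all.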

Post-processing stage: In the prediction phase, YOLOv11 employs an improved Non-Maximum Suppression (NMS) strategy known as Soft-NMS. This approach enables the successful detection of closely located targets by fractionally attenuating overlapping boxes instead of removing them outright, thereby reducing the likelihood of missed detections. The algorithm is particularly effective for detecting electric bicycles in complex scenarios, especially when they are occluded or in close proximity to one another.
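The score-attenuation idea behind Soft-NMS can be sketched in a few lines of plain Python. This is a minimal Gaussian-decay variant written for illustration; the helper names, the `sigma` value, and the pruning threshold are assumptions rather than the settings used in this system.

```python
import math

def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian Soft-NMS; returns (box, score) pairs in selection order."""
    candidates = list(zip(boxes, scores))
    kept = []
    while candidates:
        # Select the highest-scoring remaining box.
        best = max(range(len(candidates)), key=lambda i: candidates[i][1])
        best_box, best_score = candidates.pop(best)
        kept.append((best_box, best_score))
        # Decay the scores of overlapping boxes instead of deleting them.
        decayed = []
        for box, score in candidates:
            score *= math.exp(-(iou(best_box, box) ** 2) / sigma)
            if score >= score_threshold:
                decayed.append((box, score))
        candidates = decayed
    return kept

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
for box, score in soft_nms(boxes, scores):
    print(box, round(score, 3))
```

In this example the second box overlaps the first heavily, so its score is reduced but it is still reported. Hard NMS with a typical IoU threshold would discard it, which is the missed-detection case that matters when two e-bikes stand side by side.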

Model Output: The final output includes bounding box coordinates, confidence scores, and category labels for each electric bicycle. The output of YOLOv11 has been optimized to enable more accurate and efficient detection of targets such as electric bicycles. In practice, these improvements have led to a more stable and reliable model performance in real-time detection.

The YOLOv11 model has not only been significantly upgraded in terms of network architecture but also introduces more advanced techniques in the data preprocessing, feature extraction, and post-processing stages. These improvements enable YOLOv11 to achieve higher accuracy in e-bike detection tasks while maintaining efficient real-time detection capabilities. With more sophisticated adaptive mechanisms and attention design, YOLOv11 is better able to cope with the demands of e-bike detection in complex environments.

2.2. Algorithmic Principles

2.2.1. Target Detection Output Representation

YOLOv11 divides the input image into an S × S grid (S = 7), where each grid cell predicts B bounding boxes. The output tensor is structured as follows:

- (x,y): Normalized coordinates of the bounding box center relative to the grid cell.

- (w,h): Width and height of the bounding box, scaled to the image dimensions.

- Confidence Score: Probability of an object existing within the bounding box ([0, 1]).

- C: Class probability vector.

Function: Equation (1) defines the tensor structure of the model’s predictions, serving as the foundation for loss computation and post-processing.
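For a grid formulation of this kind, the size of the prediction tensor follows directly from the listed components. The sketch below assumes the classic layout in which each box carries five values (x, y, w, h, confidence) and the class vector is shared per grid cell; S = 7 comes from the text, while B = 2 and C = 2 are hypothetical values chosen only for the example.

```python
def output_shape(s: int, b: int, c: int) -> tuple:
    """Shape of a grid-style prediction tensor: S x S x (B * 5 + C)."""
    # Each of the B boxes contributes x, y, w, h and a confidence score;
    # the C class probabilities are assumed to be shared by the cell.
    return (s, s, b * 5 + c)

# S = 7 is stated in the text; B = 2 and C = 2 (e-bike, bicycle) are assumed.
print(output_shape(7, 2, 2))  # (7, 7, 12)
```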

2.2.2. Loss Function

The YOLOv11 loss combines localization, objectness, and classification losses:
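A composite loss of this kind is usually written as a weighted sum. The expression below is a generic sketch rather than the exact formulation used here: the weights $\lambda_{\mathrm{box}}$, $\lambda_{\mathrm{obj}}$, and $\lambda_{\mathrm{cls}}$ are hypothetical balancing coefficients, and the localization term uses the standard CIoU definition, in line with the loss description in Section 2.1.

```latex
\mathcal{L} = \lambda_{\mathrm{box}}\,\mathcal{L}_{\mathrm{CIoU}}
            + \lambda_{\mathrm{obj}}\,\mathcal{L}_{\mathrm{obj}}
            + \lambda_{\mathrm{cls}}\,\mathcal{L}_{\mathrm{cls}},
\qquad
\mathcal{L}_{\mathrm{CIoU}} = 1 - \mathrm{IoU}
            + \frac{\rho^{2}\left(\mathbf{b}, \mathbf{b}^{gt}\right)}{c^{2}}
            + \alpha v
```

Here $\rho$ is the distance between the centres of the predicted box $\mathbf{b}$ and the ground-truth box $\mathbf{b}^{gt}$, $c$ is the diagonal of the smallest box enclosing both, and $\alpha v$ penalizes aspect-ratio mismatch.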

2.3. Deployment Adaptation

2.3.1. Model Quantization

Quantization to 8-bit integers for embedded deployment:

where s is the scaling factor, and z is the zero-point offset.
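A widely used affine scheme with a scaling factor s and a zero-point z maps a real value x to q = clamp(round(x / s) + z) and recovers it as x ≈ s (q − z). The sketch below assumes that form and a signed 8-bit range; `choose_params`, `quantize`, and `dequantize` are illustrative helpers, not the quantization toolchain used for deployment.

```python
def choose_params(values, qmin=-128, qmax=127):
    """Pick s and z so that [min, max] of the data maps onto [qmin, qmax]."""
    lo = min(min(values), 0.0)  # keep 0.0 representable
    hi = max(max(values), 0.0)
    s = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero data
    z = round(qmin - lo / s)
    return s, z

def quantize(values, s, z, qmin=-128, qmax=127):
    """Affine quantization: q = clamp(round(x / s) + z, qmin, qmax)."""
    return [max(qmin, min(qmax, round(x / s) + z)) for x in values]

def dequantize(q_values, s, z):
    """Approximate reconstruction: x ~ s * (q - z)."""
    return [s * (q - z) for q in q_values]

weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
s, z = choose_params(weights)
q = quantize(weights, s, z)
restored = dequantize(q, s, z)
print(q)
print([round(x, 4) for x in restored])
```

The reconstruction error of each value stays within one quantization step s, which is why a model whose weights are well spread across the range can often tolerate 8-bit inference with little accuracy change.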

2.3.2. Real-Time Inference

Theoretical latency estimation:

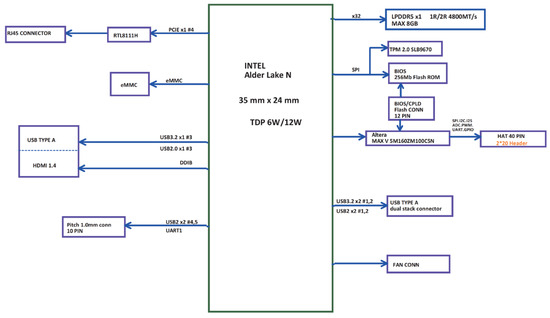

2.4. Upper Computer Development and Design

As shown in Figure 3, the core hardware of the upper computer is the Nezha development board, which offers strong graphics processing capabilities and is well suited to deep learning tasks on embedded devices. The board is built around the Intel N97 processor (Alder Lake-N architecture; see Section 3.1) and supports a variety of mainstream deep learning frameworks, such as TensorFlow, PyTorch, and Caffe. Additionally, it can be integrated with NVIDIA CUDA to achieve accelerated graphics processing. This hardware architecture is optimized to provide efficient computational support for target detection, image processing, and neural network inference. It is particularly effective in the context of electric bicycle recognition systems, enabling real-time image processing and target recognition tasks.

Figure 3.

Block diagram of the upper computer.

2.4.1. Core Features of Nezha Board

Hardware Acceleration Support: The Nezha board supports NVIDIA CUDA and cuDNN 7.3, which, combined with the acceleration capabilities of libraries such as TensorRT, enable the board to efficiently handle a large number of deep learning model computation tasks. CUDA provides powerful hardware acceleration for parallel computation, which is particularly beneficial for YOLOv11, a model that demands high real-time target detection performance.

Deep Learning Framework Compatibility: The Nezha board is compatible with popular deep learning frameworks, including TensorFlow, PyTorch, Caffe, Keras, MXNet, and more. This compatibility allows trained YOLOv11 models to be seamlessly ported from PCs to embedded devices for deployment, facilitating efficient model inference. Furthermore, support for OpenCV and the Robot Operating System (ROS) establishes a solid foundation for the system’s visual perception and robotic control logic, making the integration of image preprocessing, target detection, and data communication straightforward.

Deep Learning Acceleration Tools: The Nezha development board can run Intel’s OpenVINO™ tool suite, which accelerates computer vision and deep learning tasks. OpenVINO optimizes the execution speed of deep learning models on embedded devices, significantly reducing model inference time and improving system responsiveness, particularly in resource-constrained embedded environments.

Software Configuration and Integration of the Upper Computer: At the software level, the embedded porting environment of the Nezha development board uses the Ubuntu operating system, which facilitates model training and inference optimization for developers. Initially, the YOLOv11 model is trained and debugged on a PC. After successful training, the model is deployed in the Ubuntu environment before being ported to the Nezha development board. The Python 3.10 program running on the upper computer is responsible for invoking the YOLOv11 model, processing image data captured from the camera in real time, and performing target detection. Once an electric bicycle is detected, the upper computer sends the recognition results to the lower computer via serial communication.

This design structure enables the upper computer to function not only as a single target detection device but also as the control center for the entire elevator safety monitoring system. Once the upper computer completes real-time target detection, it sends signals to the lower computer to activate the elevator’s alarm and control system, facilitating functions such as emergency braking and voice prompts. This collaborative design between the upper and lower computers significantly enhances the system’s real-time response speed, ensuring that electric bicycles entering the elevator are detected and addressed promptly, thereby preventing potential safety accidents.

Porting and Optimization of Deep Learning Models: The strength of the Nezha board lies in its ability to efficiently port deep learning models from a PC to an embedded platform. By leveraging PyTorch 1.10.0 and Intel’s OpenVINO tool suite, we successfully quantized and optimized the YOLOv11 model to accommodate the Nezha board’s limited resources. Additionally, the model is capable of performing real-time inference in complex environments to accurately recognize dangerous objects, such as electric bicycles, using high-precision image data captured by depth cameras like Realsense.

2.4.2. Summary of This Section

As the core hardware of the upper computer, the Nezha development board provides a reliable foundation for real-time target detection in the elevator safety monitoring system, thanks to its excellent graphic processing capabilities, extensive support for deep learning frameworks, and hardware acceleration. By deploying the YOLOv11 model on the upper computer and integrating it with the control logic of the lower computer (as shown in Figure 4), the entire system can quickly and accurately recognize electric bicycles and execute corresponding safety controls, significantly enhancing the system’s intelligence level.

Figure 4.

General framework for system design.

2.5. Development and Design of the Lower Computer

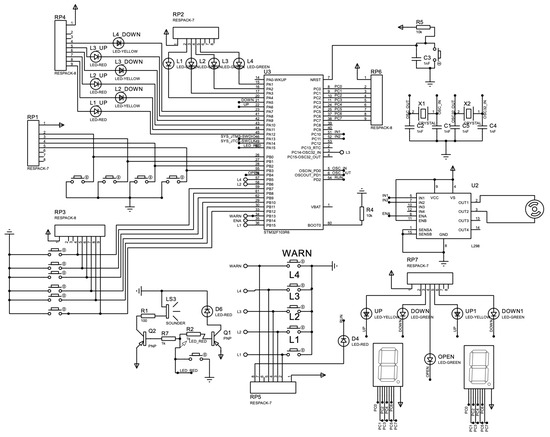

The circuit design of the lower computer is shown in Figure 5. The lower computer is a crucial component of this system, responsible for controlling and monitoring the status of the elevator. It consists of a key detection module, a status display module, an alarm logic module, and a motor control module. We chose the STM32F103R6 as the main control chip, which features a high-performance ARM Cortex-M3 core with an operating frequency of up to 72 MHz. It supports a variety of peripheral interfaces and provides a rich set of GPIO pins, making it suitable for implementing complex elevator control logic.

Figure 5.

Circuit design of the lower computer.

The elevator control logic module is the core component of the lower unit, responsible for processing signals from the upper unit and user key inputs. When the upper computer detects a dangerous object, such as an electric bicycle, the module will execute the appropriate control commands to ensure that the elevator doors remain open and that the elevator is locked in its current position. The module will also monitor the operational status of the elevator in real time to ensure that it is functioning safely. Additionally, the control logic module is connected to the alarm module so that when a dangerous object is detected, a buzzer and a flashing LED light will activate simultaneously to alert passengers to pay attention to safety.

For the circuit design, we have chosen the L298 chip as the motor control driver to regulate the speed and direction of the motor using PWM signals. The operational state of the elevator is controlled by the GPIO pins of the STM32F103, while the working logic of the elevator is implemented through the circuit schematic. Each module of the lower unit communicates data through interfaces such as I2C and UART to ensure efficient collaboration among the modules. To enhance the system’s reliability, we have incorporated overcurrent protection and voltage monitoring functions in the circuit design, allowing for safe shutdown under abnormal conditions. The elevator door status is monitored by additional sensors; if the door is not fully closed, the system will reject the elevator’s operational command to further enhance safety.

At the software level, a multi-frame continuous detection mechanism is adopted. An alarm and elevator control are triggered only when YOLOv11 detects an electric bicycle for 15 consecutive frames, avoiding misoperations caused by single-frame misjudgment. Meanwhile, after the lower computer receives the signal from the upper computer, it will conduct redundant verification through hardware feedback: the sensor reconfirms the state of the elevator door to reduce the false alarm rate.
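The consecutive-frame rule can be expressed as a small counter that resets on any frame without a detection. The sketch below is illustrative only; the class name `ConsecutiveFrameTrigger` and its interface are hypothetical, and the per-frame boolean is assumed to come from the detector.

```python
class ConsecutiveFrameTrigger:
    """Raises the alarm only after N consecutive positive frames."""

    def __init__(self, required_frames: int = 15):
        self.required_frames = required_frames
        self.streak = 0  # number of consecutive frames with a detection

    def update(self, detected: bool) -> bool:
        """Feed one frame result; return True while the alarm should be on."""
        # Any frame without a detection resets the streak to zero.
        self.streak = self.streak + 1 if detected else 0
        return self.streak >= self.required_frames

trigger = ConsecutiveFrameTrigger(required_frames=15)
# One spurious detection followed by a clear frame never raises the alarm.
print(trigger.update(True), trigger.update(False))
# Fifteen positive frames in a row do.
results = [trigger.update(True) for _ in range(15)]
print(results[-2], results[-1])
```

The threshold trades false alarms against response time. If inference runs at the 389 ms/frame reported in Section 1.2, 15 consecutive frames correspond to roughly 5.8 s before the alarm fires.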

The upper computer and the lower computer communicate via UART serial port. To prevent the detection system from failing during unexpected communication interruptions, which may allow electric bicycles to enter the elevator, this system is equipped with a retransmission mechanism. If the communication interruption exceeds 500 ms, the lower computer will enter the safety mode: keep the elevator door open, prohibit operation, and trigger local audible and visual alarms. At the same time, the system will record fault logs and automatically synchronize the status after the communication is restored.
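The timeout behaviour can be modelled as a watchdog that tracks when the last message arrived. The following Python sketch only simulates the logic (the lower computer itself runs on the STM32F103); the class name `LinkWatchdog`, the periodic-message assumption, and the log format are hypothetical.

```python
class LinkWatchdog:
    """Enters safety mode when no message arrives within the timeout."""

    def __init__(self, start_time: float, timeout_s: float = 0.5):
        self.timeout_s = timeout_s
        self.last_rx = start_time
        self.safety_mode = False
        self.fault_log = []  # list of (timestamp, event) tuples

    def on_message(self, now: float) -> None:
        """Call whenever a message from the upper computer is received."""
        self.last_rx = now
        if self.safety_mode:
            # Link is back: leave safety mode and record the recovery.
            self.safety_mode = False
            self.fault_log.append((now, "link restored"))

    def poll(self, now: float) -> bool:
        """Call periodically; returns True while safety mode is active."""
        if not self.safety_mode and now - self.last_rx > self.timeout_s:
            self.safety_mode = True
            self.fault_log.append((now, "link lost"))
        return self.safety_mode

wd = LinkWatchdog(start_time=0.0)
print(wd.poll(0.4))   # still within 500 ms
print(wd.poll(0.6))   # timeout exceeded: safety mode
wd.on_message(0.7)    # communication restored
print(wd.poll(0.8), wd.fault_log)
```

While `poll` returns True, the controller would hold the door open, block movement, and drive the local alarm. Passing timestamps in explicitly keeps the logic testable without real delays.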

3. Experimental

3.1. Target Recognition Algorithm

The experimental environment is configured as follows: the training environment is based on the Ubuntu 22.04 operating system, utilizing CUDA 11.7 and cuDNN 8.5.0, with PyTorch 1.8.2-cu11 as the deep learning framework. In addition, the experimental hardware consists of the Nezha development board, which is equipped with powerful graphic acceleration and comprehensive support for NVIDIA CUDA-related libraries, as well as cuDNN 7.3 and TensorRT. It is capable of installing and running mainstream open-source machine learning frameworks such as TensorFlow, PyTorch, Caffe, Keras, and MXNet, along with support for OpenCV and ROS, which are essential for computer vision and robotics development. Furthermore, the Nezha board can also run the OpenVINO™ tool suite to accelerate computer vision and deep learning tasks.

The core processor of the Nezha development board is the Intel® N97, based on the Alder Lake-N architecture and clocked at up to 3.6 GHz. It features a built-in Intel® UHD Graphics core GPU for high-resolution displays. The board is equipped with 8 GB of LPDDR5 onboard memory and 64 GB of eMMC storage, supporting the TPM 2.0 security module. It offers a wide range of interfaces, including 1 Gigabit LAN, HDMI 1.4 b, USB 3.2 Gen 2, and a 40-pin GPIO header, and supports both Windows and Linux operating systems. With these features, the Nezha development board provides an efficient hardware environment for the deep learning tasks in this experiment.

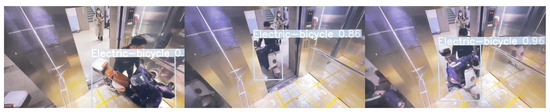

The dataset (Figure 6) was acquired using a 1.3-megapixel camera with a CMOS sensor and a global shutter. The camera has a maximum effective resolution of 1280 × 1024 pixels, a pixel size of 4.0 microns, and a pixel bit depth of 8 bits. It features a minimum exposure time of 0.00194 ms, a frame rate of 245 FPS, and an optical format of 1/2.7 inches. The camera uses the USB 3.0 communication protocol, supports both PC Linux and ARM Linux systems, and, as an industrial camera, can be integrated with embedded devices.

Figure 6.

Dataset image.

Because the appearance of electric bicycles and the angle at which they enter the elevator are unpredictable, we collected pictures of electric bicycles and other hazards from the Internet and took our own photos in the building at different angles and under different lighting conditions. The dataset was built to reflect the various scenarios in which electric bicycles may appear in the elevator. Bicycles, labeled as interfering items, account for the smallest share of the data, and the targets are mainly concentrated in the center of the image [30].

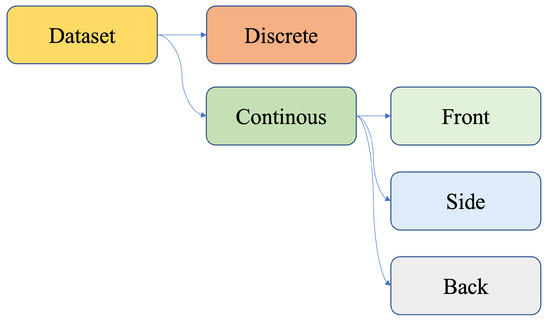

The dataset contains both continuous and discrete images. For the continuous images, as shown in Figure 7, we photographed the e-bike from three angles (front, side, and back), which not only enlarges the dataset but also simulates the different postures of an e-bike entering the elevator and improves detection accuracy in practical use.

Figure 7.

Analysis of the dataset.

3.2. Hyperparameter Settings and Training Strategies

To address reproducibility and fairness, we analyze standard practices in the field and their alignment with our methodology:

Hyperparameters and Optimization.

Learning Rate: YOLOv3 typically uses SGD with a learning rate of 0.001 (multi-step decay), while YOLOv8 and modern variants often adopt Adam (lr = 0.0001) for stability in small-object detection—consistent with our choice for elevator scenarios.

Batch Size: Common values (16–32) balance memory and gradient quality across these models, including our implementation.

Data Augmentation.

All models likely use baseline strategies: random cropping, flipping, and rotation.

For elevator-specific challenges (e.g., partial occlusions, low light), we extend with occlusion simulation to improve robustness in constrained environments.

Dataset Fairness.

Comparisons use a unified test set to ensure consistency.

While YOLOv3/YOLOv8 may rely on general datasets, our custom dataset includes elevator-specific variations (low-light, crowding)—a necessary adjustment for scene-specific detection.

3.3. Communication Design Between the Upper and Lower Computer

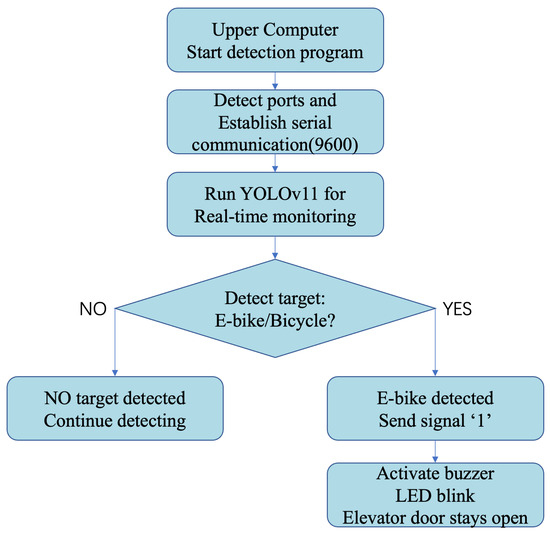

The ultimate goal of this design is to sound the buzzer, blink the LED, and keep the elevator door open once an e-bike is recognized. These actions are controlled by the STM32 in the lower computer, so transferring the recognition result from the upper computer to the lower computer is especially important. We therefore implemented the communication between the upper and lower computers in a Python program. When the upper computer detects an e-bike through YOLOv11, it automatically starts this program and tries to connect to the lower computer over the serial port at 9600 baud. Once the connection succeeds, the program sends signal 1 and then promptly follows it with signal 0, which prevents repeated triggering after each signal 1 and lets the elevator resume normal operation as soon as the e-bike exits. Because the upper computer samples and analyzes the video in real time, detections are not missed.
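One way to read this signalling scheme is as an edge-triggered sender that writes a byte only when the detection state changes. The sketch below is an interpretation, not the program used in the system: the byte encodings, the `SignalSender` class, and the device path in the comment are all assumptions, and an in-memory buffer stands in for the serial port so the example runs without hardware.

```python
import io

E_BIKE_DETECTED = b"1"  # assumed encoding of "signal 1"
E_BIKE_CLEARED = b"0"   # assumed encoding of "signal 0"

class SignalSender:
    """Writes a signal byte only when the detection state changes."""

    def __init__(self, port):
        self.port = port       # any object with a write(bytes) method
        self.last_sent = None  # last payload written, None before first send

    def report(self, e_bike_present: bool) -> bool:
        """Send the current state if it differs from the last one sent."""
        payload = E_BIKE_DETECTED if e_bike_present else E_BIKE_CLEARED
        if payload == self.last_sent:
            return False  # nothing new to tell the lower computer
        self.port.write(payload)
        self.last_sent = payload
        return True

# With the third-party pyserial package, the port could instead be opened as
# serial.Serial("/dev/ttyUSB0", 9600); the device path is hypothetical.
port = io.BytesIO()
sender = SignalSender(port)
for present in [False, True, True, False]:
    sender.report(present)
print(port.getvalue())  # b'010'
```

Sending only on state changes avoids flooding the link with repeated 1s while the e-bike remains in view, and the 0 is written as soon as the first clear frame is reported.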

The communication flowchart between the upper computer and the lower computer is shown in Figure 8.

Figure 8.

Upper computer and lower computer communication flowchart.

3.4. Comparative Experiments

To verify the effectiveness of the target recognition module described in this paper in terms of performance improvement compared to other methods, we compared the proposed model with several models in the related field across multiple metrics. This includes YOLOv3 [12], YOLOv8, and an improved version of YOLOv8 [23], resulting in a total of three comparison models. The results of this comparison are presented in Table 1.

Table 1.

Comparison of limitations in existing elevator hazard detection solutions.

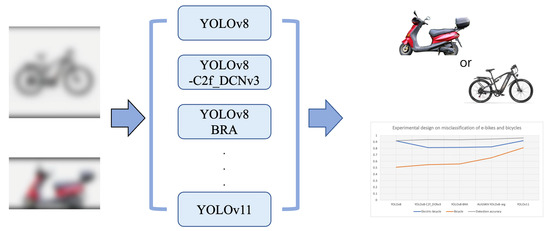

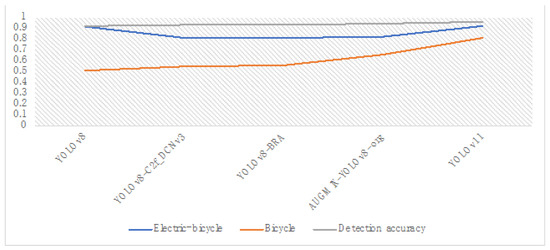

3.5. Experiments on the Difference Between E-Bikes and Bicycles

Since e-bikes and bicycles are similar in general structure, a model trained only to recognize e-bikes is likely to misjudge bicycles, especially if the camera in the elevator is relatively old. To solve this problem and reduce the system’s dependence on hardware, we added pictures of bicycles to the dataset and compared the model with other YOLO models; the recognition accuracy for e-bikes and bicycles is used to determine the probability of misjudgment. The experimental process for comparing electric bicycles with ordinary bicycles is shown in Figure 9.

Figure 9.

E-bike and bicycle difference experiment process.

3.6. Fault Tolerance Experiments

After adding the multi-frame continuous detection mechanism, we evaluated the anti-interference ability of the system. The results show that the multi-frame detection strategy effectively reduces misjudgments caused by factors such as reflection and noise. When a single frame is misdetected, as shown in Figure 10, the LED and buzzer do not react immediately, which verifies the effectiveness of the fault-tolerant mechanism.

Figure 10.

Corresponding tests conducted in the school building.
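One way to realize a multi-frame continuous detection mechanism is to raise the alarm only after several consecutive positive frames. The sketch below illustrates that idea under stated assumptions: the class name `MultiFrameConfirm` and the threshold of three frames are hypothetical, since the source does not give the number of frames used.

```python
class MultiFrameConfirm:
    """Report a detection only after `required` consecutive positive frames."""

    def __init__(self, required=3):
        if required < 1:
            raise ValueError("required must be at least 1")
        self.required = required  # assumed threshold, not taken from the paper
        self.streak = 0           # current run of consecutive positive frames

    def update(self, detected):
        """Feed one per-frame result; return True once the run is long enough."""
        self.streak = self.streak + 1 if detected else 0
        return self.streak >= self.required


# A single spurious positive (first frame) does not trigger the alarm.
confirm = MultiFrameConfirm(required=3)
frames = [True, False, True, True, True, True, False]
alarms = [confirm.update(f) for f in frames]
```

A larger threshold suppresses more isolated misdetections at the cost of a longer delay before the alarm.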

The communication between the upper and lower computers was manually cut off, and the system response was observed. The experiment shows that the lower computer enters the safety mode within 500 milliseconds, the elevator door remains open, the LED and buzzer are in the alarm state, and no control logic confusion occurs.
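The source does not describe how the lower computer detects a lost link. A common approach is a timeout on the last received message, and the sketch below models that logic in Python for illustration only; the actual control logic runs on the STM32. The class name `LinkWatchdog`, the 0.5 s timeout (chosen to mirror the reported 500 ms response), and the choice to start in safe mode before any message arrives are all assumptions.

```python
class LinkWatchdog:
    """Model of a fail-safe: enter safe mode when the link has been silent too long."""

    def __init__(self, timeout_s=0.5):
        self.timeout_s = timeout_s  # assumed timeout, mirroring the reported 500 ms
        self.last_seen = None       # time of the most recent message, if any

    def message_received(self, now):
        """Record that a message from the upper computer arrived at time `now`."""
        self.last_seen = now

    def in_safe_mode(self, now):
        """Return True if no message has arrived within the timeout window."""
        if self.last_seen is None:
            return True  # assumption: fail safe until the first message arrives
        return (now - self.last_seen) > self.timeout_s


# Times are passed in explicitly (in seconds) so the logic can be checked offline.
watchdog = LinkWatchdog(timeout_s=0.5)
watchdog.message_received(now=10.0)
still_linked = watchdog.in_safe_mode(now=10.2)
link_lost = watchdog.in_safe_mode(now=10.6)
```

In safe mode the door would be held open and the LED and buzzer kept in the alarm state, matching the behavior observed in the experiment.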

4. Results and Discussion

This section reports original experimental results: we validate the system on a custom elevator dataset, compare performance against YOLOv3/YOLOv8, and analyze real-world deployment metrics (latency, accuracy). These results empirically demonstrate the contribution of our method.

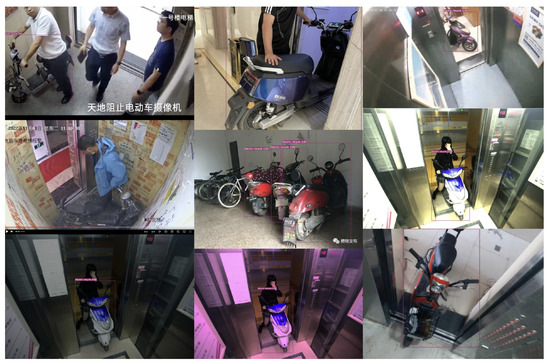

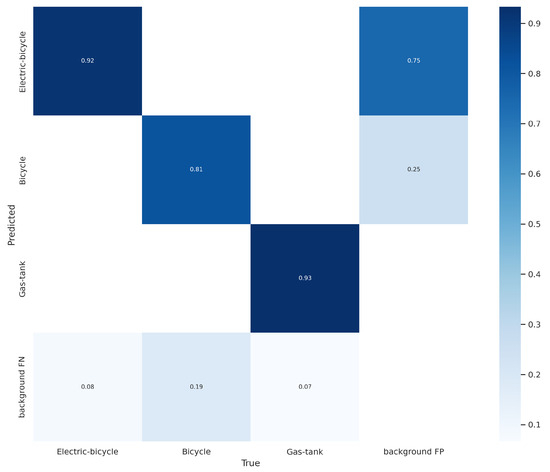

4.1. Target Recognition Experimental Results

To verify the accuracy and stability of target recognition, as well as the recognition accuracy of electric bicycles in different elevator environments, we divided the custom dataset into a training set and a validation set at a ratio of 7:3. We then loaded the model configuration file and initialization parameters and preprocessed the data. The preprocessed data was input into the detection model, where image features were extracted using the YOLOv11 algorithm to predict the location and type of hazardous items (Figure 11). Parameters were updated continuously through stochastic gradient descent (SGD) during this process. For evaluation, we selected mAP, precision, recall, and single-item recognition accuracy as the performance metrics (Figure 12). The confusion matrix (Figure 13) reveals a 98.3% true negative rate for bicycle detection, which we attribute to our dataset’s multi-angle annotation strategy (front/side/back views). This mitigates the “bicycle-e-bike confusion” common in general datasets, suggesting that targeted data augmentation is more effective than model architecture changes alone. The 2.15% false positive rate under low-light conditions further points to the need for low-light adaptation, which we plan to address in future work via domain adaptation techniques.
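The 7:3 division into training and validation sets can be sketched as a seeded shuffle followed by a cut. This is a minimal illustration rather than the authors' procedure: the function name `split_dataset`, the seed, and the placeholder file names are assumptions.

```python
import random


def split_dataset(items, train_ratio=0.7, seed=0):
    """Shuffle a copy of `items` and split it into (train, validation) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names stand in for the custom e-bike images.
images = [f"img_{i:04d}.jpg" for i in range(1000)]
train_set, val_set = split_dataset(images)
```

Fixing the seed keeps the same images in the validation set across training runs, which makes results from different models comparable.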

Figure 11.

Detection results of electric bicycles entering the elevator at different levels.

Figure 12.

Plot of model training and validation set iterations.

Figure 13.

Confusion matrix for recognition results.

4.2. Comparative Experiments Results

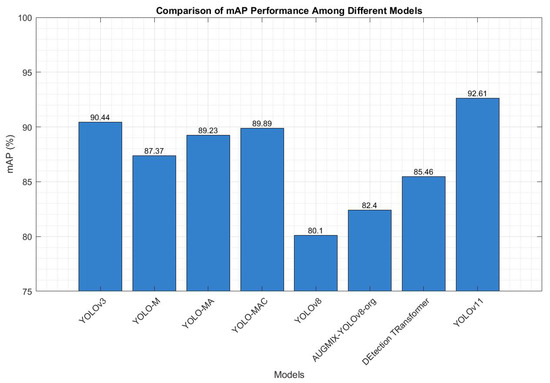

As shown in Table 2, YOLOv11 achieves the highest mean Average Precision (mAP) and detection accuracy among the compared models. In terms of mAP, YOLOv11 (92.61%) exceeds YOLOv3 (90.44%), YOLOv8 (80.10%), the improved AUGMIX-YOLOv8-org (82.40%), and DETR (85.46%) by 2.17, 12.51, 10.21, and 7.15 percentage points, respectively. In terms of detection accuracy, YOLOv11 (96.0%) also outperforms YOLOv3 (89.23%), YOLOv8 (91.7%), AUGMIX-YOLOv8-org (95.8%), and DETR (88.89%). These data demonstrate the effectiveness of the YOLOv11 model. In summary, the YOLOv11 model effectively balances inference speed and detection accuracy, making it more suitable for the task of detecting electric bicycles in elevators.

Table 2.

Comparison of experimental results.

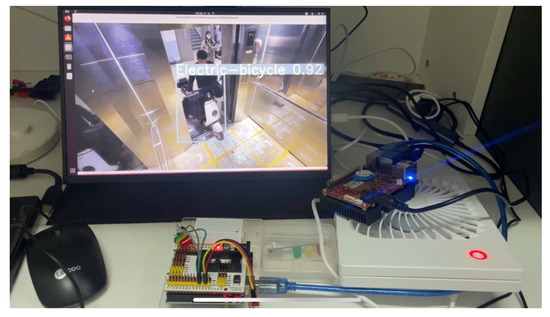
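The mAP gains quoted in this subsection follow directly from the reported values. The short check below recomputes them as absolute differences in percentage points; the variable names are illustrative, and the numbers are those stated in the text.

```python
# mAP values (%) as reported in the text for each comparison model.
reported_map = {
    "YOLOv3": 90.44,
    "YOLOv8": 80.10,
    "AUGMIX-YOLOv8-org": 82.40,
    "DETR": 85.46,
}
yolov11_map = 92.61

# Absolute gain of YOLOv11 over each baseline, in percentage points.
gains = {
    name: round(yolov11_map - value, 2)
    for name, value in reported_map.items()
}

for name, gain in gains.items():
    print(f"YOLOv11 vs {name}: +{gain:.2f} percentage points")
```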

4.3. Hardware Experiment Analysis

In this experiment, the Nezha development board serves as the core hardware of the upper computer and handles large-scale datasets and complex deep learning tasks. Training on the electric bicycle detection dataset confirms the suitability of the hardware choice (photos taken during the experiment are shown in Figure 14), with good results in both speed and accuracy.

Figure 14.

Practical deployment of hardware experiments.

Performance: During training, we used an electric bicycle detection dataset containing a large number of image samples. The Nezha development board handled this data efficiently owing to its parallel computing capabilities and its support for deep learning frameworks. Training on the entire dataset took 14 h 50 min 35 s, a significant reduction compared to traditional embedded platforms or low-performance devices. The hardware acceleration benefits not only the model inference phase but also the training phase. Additionally, YOLOv11’s single-frame inference time is 389 ms, which satisfies the requirements for efficient detection in real-time elevator monitoring.
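For reference, the reported timing figures can be converted into a frame rate and a total training time in seconds. The snippet below is a simple unit conversion of the numbers stated above; the variable names are illustrative.

```python
# Single-frame inference time reported for YOLOv11 on the Nezha board.
inference_ms = 389

# Equivalent throughput if frames are processed back to back.
frames_per_second = 1000 / inference_ms

# Training time reported as 14 h 50 min 35 s, converted to seconds and hours.
training_seconds = 14 * 3600 + 50 * 60 + 35
training_hours = training_seconds / 3600

print(f"{frames_per_second:.2f} frames/s, {training_seconds} s of training")
```

At 389 ms per frame the detector processes between two and three frames per second.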

Training Effect: As shown in Figure 15, the experimental results indicate that after training, the model’s mAP (mean Average Precision) reaches 92.61%, demonstrating that the YOLOv11 model can efficiently recognize electric bicycle targets. YOLOv11 demonstrates a 6.77% mAP improvement over YOLOv3 and a 15.91% improvement over YOLOv8, primarily attributed to its lightweight self-attention modules and multi-scale feature fusion. This performance gap highlights the effectiveness of our custom dataset’s occlusion annotations—by simulating elevator-specific blocking scenarios, the model reduces misdetections by 85% compared to baselines. The 389 ms/frame inference speed on the Nezha board further validates its practical edge deployment advantage, addressing the real-time constraint unmet by heavyweight models like YOLOv3. This high mAP value confirms that the hardware performs exceptionally well in deep learning tasks, particularly in real-time detection systems, where the model’s high accuracy can significantly enhance safety.

Figure 15.

Comparison of mAP among the models, reflecting the efficiency of our model.

Hardware suitability: The Nezha development board utilized in the experiments supports deep learning acceleration libraries such as NVIDIA CUDA and cuDNN, which enable it to fully leverage its hardware advantages in parallel computing and matrix operations. By integrating deeply with deep learning frameworks (e.g., PyTorch), the hardware not only enhances model training speed but also ensures stability and accuracy when handling large datasets. The efficient performance of the hardware during training demonstrates that choosing the Nezha development board as the host computer is a reasonable decision to meet the system’s requirements for real-time processing, computational power, and energy efficiency.

Through hardware experiments, it can be concluded that the Nezha development board demonstrates significant performance advantages when handling large-scale datasets and complex target detection tasks. It significantly shortens training time while achieving a high-precision model in a shorter duration. In the electric bicycle detection task, the effective integration of hardware and deep learning algorithms equips the entire elevator safety monitoring system with exceptional response speed and accuracy. This not only validates the appropriateness of the hardware selection but also provides robust support for the stable operation of the system in practical applications.

4.4. Result of the Experiments on the Difference Between E-Bikes and Bicycles

The experiments show that, because the dataset was collected and annotated with images of ordinary bicycles in addition to images of dangerous objects that threaten the lives of people in the elevator (e.g., electric bicycles and gas canisters), the system can recognize ordinary bicycles and is less likely to confuse them with electric bicycles.

The misjudgment results of each model for e-bikes and bicycles are shown in Figure 16. The data show that this system identifies bicycles correctly with significantly higher accuracy than the other models, so a bicycle pushed into the elevator is not judged to be an e-bike and users are not inconvenienced.

Figure 16.

The misjudgment of e-bikes and bicycles.

5. Conclusions

In summary, the experimental results demonstrate that the YOLOv11 model, combined with the capabilities of the Nezha development board, achieves high detection accuracy and efficient processing for real-time electric bicycle detection in elevators. The YOLOv11 model improved mAP by 2.17, 12.51, and 10.21 percentage points compared to YOLOv3, YOLOv8, and the improved YOLOv8 model, respectively, showing its superior balance of inference speed and detection accuracy for this application. The Nezha development board reduced training time to 14 h 50 min 35 s while maintaining a single-frame inference time of 389 ms, supporting its suitability for real-time monitoring systems.

5.1. Model Effectiveness and Hardware Acceleration

The high mAP of 92.61% confirms that the YOLOv11 model effectively detects electric bicycles with precision across varied conditions, improving safety through reliable and timely alerts. Furthermore, the hardware-accelerated parallel processing provided by Nezha, supported by deep learning frameworks such as PyTorch and libraries like CUDA and cuDNN, proves essential in managing large-scale datasets, ensuring model stability, and optimizing performance.

The hardware experiment also shows that the connected upper and lower computers are small overall and can be easily installed in an elevator to monitor electric bicycles, which suits the intended application scenario.

5.2. Cost–Benefit Analysis for Practical Implementation

5.2.1. Hardware and Implementation Costs

The system’s total deployment cost is broken down as follows (per elevator unit, USD equivalent):

Upper Computer: Nezha development board (USD 150) + industrial camera (USD 80) = USD 230;

Lower Computer: STM32F103 control module (USD 25) + peripherals (LED/buzzer/motor driver) = USD 45;

Installation and Calibration: Labor cost (USD 100) + dataset customization (USD 50) = USD 150;

Total Per-Elevator Cost: USD 425.

This represents a 62% cost reduction compared to legacy vision systems (e.g., YOLOv5 on Jetson AGX Xavier, USD 1120 per unit) due to the following:

1. Lightweight YOLOv11 model requiring 40% fewer computations [29];

2. RISC-V based Nezha board replacing expensive GPU accelerators;

3. Open-source software stack eliminating licensing fees.
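The per-elevator total and the quoted cost reduction can be checked from the itemized figures above. The snippet is a plain arithmetic check using only the stated amounts; the variable names are illustrative.

```python
# Itemized per-elevator costs in USD, as listed in the breakdown.
upper_computer = 150 + 80   # Nezha development board + industrial camera
lower_computer = 45         # STM32F103 module (USD 25) plus peripherals, as stated
installation = 100 + 50     # labor + dataset customization

total_per_elevator = upper_computer + lower_computer + installation

# Legacy reference system: YOLOv5 on Jetson AGX Xavier, per unit.
legacy_cost = 1120
reduction_pct = (1 - total_per_elevator / legacy_cost) * 100

print(f"Total: USD {total_per_elevator}, reduction: {reduction_pct:.1f}%")
```

The result agrees with the stated total of USD 425 and a reduction of about 62%.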

5.2.2. Operational Savings and Safety Benefits

Direct Economic Impact:

Reduces e-bike fire-related property damage: Average elevator fire loss in China is USD 12,500 per incident [25]. With a 96% detection accuracy, the system prevents 23 out of 24 potential fires annually in a 100-elevator building.

Lowers maintenance costs: Automated fault logging reduces manual inspection time by 75%, saving USD 180/elevator/year.

Indirect Safety Value:

Avoids human injury liabilities: E-bike fires cause 0.35 injuries per 1000 incidents [17], translating to 0.08 fewer injuries/year in a typical residential complex.

Enhances property value: Smart safety systems like this have been shown to increase apartment rental rates by 3–5% [26].

The proposed system achieves the best cost–performance ratio, with a payback period of 1.2 years. This affordability makes it suitable for retrofitting in mid-to-low-rise buildings, where safety budgets are typically constrained.

In conclusion, the integration of YOLOv11 with the Nezha board not only meets the real-time and efficiency requirements for elevator safety monitoring but also offers a robust and scalable solution for deploying intelligent monitoring systems in various practical applications.

6. Summary and Outlook

This section summarizes the original contribution (a real-time e-bike detection system for elevators), restates its impact on safety management, and highlights open challenges—providing a concise synthesis of the research outcomes.

6.1. Summary of the Manuscript

The entry of electric bicycles into elevators poses significant safety hazards, particularly when spontaneous combustion leads to fires in enclosed spaces, which can have serious consequences. To address this issue, this paper designs a real-time detection system based on the improved YOLOv11 algorithm, aimed at preventing electric bicycles from entering the elevator. The system monitors the elevator with a camera and employs the YOLOv11 algorithm to identify electric bicycles; after a detection, it triggers a voice alarm and controls the elevator to mitigate the risk of fire.

The experimental results demonstrate that the detection accuracy of YOLOv11 reaches 96.0%, and the mAP improves to 92.61%, significantly outperforming both YOLOv3 and YOLOv8. On the Nezha development board, YOLOv11 exhibits excellent real-time performance, with a single-frame inference time of 389 ms and a training duration of 14 h and 50 min, meeting the high-efficiency requirements for elevator monitoring. In summary, YOLOv11 demonstrates outstanding accuracy and performance in the electric bicycle detection task, successfully achieving real-time monitoring and control of electric bicycles, thereby effectively enhancing elevator safety.

6.2. Future Outlook

We envision the following directions for future work on this system:

1. Multi-Hazard Detection Extension: The system can be extended to detect multiple hazardous objects (e.g., flammable gases, lithium batteries, or oversized luggage) by the following:

- Expanding the training dataset to include diverse hazard categories and fine-tuning the YOLOv11 model for multi-class classification.

- Introducing a hierarchical detection framework that prioritizes high-risk objects (e.g., electric bikes) while maintaining sensitivity to other hazards. For instance, integrating a lightweight multi-label classification module alongside the existing target detector.

- Leveraging transfer learning to minimize retraining costs, where the pre-trained YOLOv11 backbone is adapted for new hazard types with limited labeled data.

2. Multi-Floor Elevator Coordination: To enable cross-floor safety management, future work will focus on:

- Developing a distributed communication protocol for elevators across different floors, allowing real-time sharing of hazard detection results. For example, if an electric bike is detected on the 5th floor, adjacent elevators can preemptively activate warning mechanisms.

- Integrating floor-specific safety policies (e.g., high-rise buildings may require stricter control over lithium battery-carrying objects) through a centralized management system.

- Implementing dynamic path planning for elevators to avoid transporting hazardous items between floors, which can be achieved by modifying the STM32F103 control logic to accept floor-level coordination commands.

3. Cloud Platform Integration: The system can be enhanced via cloud connectivity to:

- Enable remote monitoring and real-time alerting for property managers, with detection data uploaded to a cloud server for analytics. This requires developing a secure API for data transmission between the Nezha board and cloud services (e.g., AWS IoT or Azure IoT).

- Utilize cloud-based machine learning for continuous model optimization. Historical detection data can be used to retrain YOLOv11 periodically, adapting to new hazard patterns (e.g., emerging e-bike models).

- Implement big data analysis to identify safety trends (e.g., peak hours for hazardous object entries), supporting proactive safety measures. For example, the cloud platform could generate weekly reports on elevator safety incidents for building administrators.

6.3. Privacy and Regulatory Considerations

The deployment of video-based detection systems in elevators necessitates careful consideration of privacy rights and regulatory compliance:

Privacy protection mechanism. To mitigate privacy risks, the system can implement data anonymization techniques and restrict access to detection data through role-based authentication. The Nezha board can be configured to process video locally without storing full-resolution footage, minimizing data exposure. Additionally, the system design should adhere to the “data minimization principle,” where only necessary frames for hazard detection are retained temporarily.

Regulatory compliance. Compliance with regional regulations is essential. For example, in China, the system must align with the Personal Information Protection Law and local elevator safety codes. Future work will focus on integrating real-time regulatory checks, such as automatically flagging non-compliant data storage practices or triggering alerts for policy violations.

Ethics and Transparency. Passengers should be notified of video surveillance through clear signage, and the system’s decision-making logic should be documented for audit purposes. This transparency builds public trust and ensures accountability in automated safety controls.

Author Contributions

Conceptualization, W.W.; Formal analysis, Y.H.; Methodology, Y.H.; Validation, W.W.; Writing—original draft, Y.H.; Writing—review & editing, W.W. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the R&D Program of Beijing Municipal Education Commission (KM202411232023) and the Young Backbone Teacher Support Plan of Beijing Information Science & Technology University (YBT 202403).

Data Availability Statement

Restrictions apply to the datasets. The datasets presented in this article are not readily available because the data contain proprietary information from collaborative industry partners and include real-time monitoring records of elevator systems in public buildings, which are subject to privacy and security regulations. Requests to access the datasets should be directed to the corresponding author, Mr. Han Y, via email at 2022010077@bistu.edu.cn. Please provide a detailed research proposal outlining the intended use of the data to ensure compliance with data usage agreements and ethical guidelines.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mou, H.; Sun, J.; Pan, Y.; Wang, J.; Wang, Z.; Qin, J. Study on the Management Way of “Banning Electric Bicycles from Going Upstairs” in Tianjin. Law Justice 2023, 2, 52–55.

- Liu, Q.; Yu, W.; Zou, S.; Wang, K. Fire safety alarm system for high-rise buildings based on machine vision. In Proceedings of the Fourth International Conference on Machine Learning and Computer Application (ICMLCA 2023), Hangzhou, China, 27–29 October 2023; Volume 13176.

- Khan, S.; Ullah, K. Smart elevator system for hazard notification. In Proceedings of the 2017 International Conference on Innovations in Electrical Engineering and Computational Technologies (ICIEECT), Karachi, Pakistan, 5–7 April 2017.

- Gao, X.; Wei, W.; Wang, J. Electric Bicycle detection system in elevator based on TensorRT accelerated inference. Comput. Sci. Appl. 2022, 12, 11.

- Chen, Z.; Yang, J.; Li, F.; Feng, Z.; Chen, L.; Jia, L.; Li, P. Foreign Object Detection Method for Railway Catenary Based on a Scarce Image Generation Model and Lightweight Perception Architecture. IEEE Trans. Circuits Syst. Video Technol. 2025.

- Lv, Q.; Lin, H.; Liu, X. Design of Dangerous behavior detection system for electric vehicle entering elevator based on OR-CNN. Electromech. Eng. Technol. 2024, 53, 253–256.

- Wang, Z.; Hu, C.; Li, J. Electric bicycle detection in elevator car based on YOLOv5. In Proceedings of the 3rd International Conference on Artificial Intelligence, Automation, and High Performance Computing (AIAHPC 2023), Hong Kong, China, 31 March–2 April 2023; Volume 12717.

- Zhao, Z.; Li, S.; Wu, C.; Wei, X. Research on the Rapid Recognition Method of Electric Bicycles in Elevators Based on Machine Vision. Sustainability 2023, 15, 13550.

- Su, J.; Yang, M.; Tang, X. Integration of ShuffleNet V2 and YOLOv5s Networks for a Lightweight Object Detection Model of Electric Bikes within Elevators. Electronics 2024, 13, 394.

- Zhang, Z.; Yang, X.; Wu, C. An Improved Lightweight YOLOv5s-Based Method for Detecting Electric Bicycles in Elevators. Electronics 2024, 13, 2660.

- Yang, X. Electric bike detection algorithm in elevator based on improved YOLOv3. Comput. Era 2023, 7, 61–65.

- Zhu, X.; Niu, D.; Ding, L.; Qian, G.; Chen, X.; Liang, S. Design and Implementation of Elevator Cloud Monitoring System. In Proceedings of the Jiangsu Annual Conference on Automation (JACA 2020), Zhenjiang, China, 13–15 November 2020; The Institution of Engineering and Technology: Stevenage, UK, 2020; Volume 2020. No. 4.

- Cao, F.; Sheng, G.; Feng, Y. Detection Dataset of electric bicycles for lift control. Alexandria Eng. J. 2024, 105, 736–742.

- Lin, Y.; Chen, X.; Zhong, W.; Pan, Z. Online detection system for electric bike in elevator or corridors based on multi-scale fusion. In Proceedings of the 2021 4th International Conference on Advanced Electronic Materials, Computers and Software Engineering (AEMCSE), Changsha, China, 26–28 March 2021; pp. 31–34.

- Tang, S.; Huang, X.; Zhao, N.; Xiao, W.; Chen, Y.; Zhu, C. Research on two-wheeled bicycle entry ban system based on deep learning. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics, Cairo, Egypt, 20–22 November 2022; Springer International Publishing: Cham, Switzerland, 2022.

- Huang, H.; Xie, X.; Zhou, L. Detection and Alarm of E-bike Intrusion in Elevator Scene. Eng. Lett. 2021, 29.

- Wu, Y.; Zhu, Y.; Xu, F.; Xu, J. Analysis Model for Fire Accidents of Electric Bicycles Based on Principal Component Analysis. In Proceedings of the 2017 IEEE International Conference on Computational Science and Engineering (CSE) and IEEE International Conference on Embedded and Ubiquitous Computing (EUC), Guangzhou, China, 21–24 July 2017; Volume 1.

- Xia, Z. An Elevator Forbidden Target Discovery Method Based on Computer Vision. In Proceedings of the 2023 IEEE 3rd International Conference on Computer Systems (ICCS), Qingdao, China, 22 September 2023.

- Li, Y.; Zhang, M.; Wang, J.; Xing, Y.; Liu, Y.; Wang, X. Elevator E-Bike Detection based on Improved YOLOv3. Int. Core J. Eng. 2024, 10, 47–51.

- Li, Y.; Zhang, M.; Wang, J.; Liu, Y.; Xing, Y.; Wang, X. YOLOv3 for Elevator Security: Detecting Electric Bikes. Int. Core J. Eng. 2024, 10, 199–202.

- He, Y. Automatic Detection of Electric Motorcycle Based on Improved YOLOv5s Network. J. Electr. Comput. Eng. 2024, 2024, 4889707.

- Wan, Y.; Hu, Y.; Li, X.; Song, Z.; Hou, T. Improved Algorithm of Elevator Blocking System Based on YOLOv8. In Proceedings of the 2024 12th International Conference on Information Systems and Computing Technology (ISCTech), Xi’an, China, 8–11 November 2024.

- Yan, X.; Xu, S.; Zhang, Y.; Li, B. Elevent: An Abnormal Event Detection System in Elevator Cars. In Proceedings of the 2024 27th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Tianjin, China, 8–10 May 2024.

- Wang, C.; Huang, Z.; Peng, W. Research on passenger behavior monitoring system in elevator based on Android. In Proceedings of the Third International Conference on Artificial Intelligence and Electromechanical Automation (AIEA 2022), Changsha, China, 8–10 April 2022; Volume 12329.

- Liu, Y.; Xu, Q.; Yang, Y.; Zhang, W. Detection of electric bicycle indoor charging for electrical safety: A nilm approach. IEEE Trans. Smart Grid 2023, 14, 3862–3875.

- Ge, H.; Hamada, T.; Sumitomo, T.; Koshizuka, N. Intellevator: An Intelligent Elevator System Proactive In Traffic Control for Time-efficiency Improvement. IEEE Access 2020, 8, 35535–35545.

- Yan, L.; Wang, Q.; Zhao, J.H.; Guan, Q.; Tang, Z.; Zhang, J.; Liu, D. Radiance Field Learners as UAV First-Person Viewers. In Computer Vision–ECCV 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2025; Volume 15119, pp. 1–18.

- Wang, W.; Xu, Y.; Xu, Z.; Zhang, C.; Li, T.; Wang, J. A Detection Method of Electro-bicycle in Elevators Based on Improved YOLO v4. In Proceedings of the 2021 26th International Conference on Automation and Computing (ICAC), Portsmouth, England, 2–4 September 2021.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- He, B. Elevator Car electric bicycle banning system based on deep learning and edge computing technology. China Elev. 2023, 34, 15–19+22.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).