Abstract

Object detection algorithms have evolved from two-stage to single-stage architectures, with foundation models achieving sustained improvements in accuracy. However, in intelligent retail scenarios, small object detection and occlusion issues still lead to significant performance degradation. To address these challenges, this paper proposes an improved model based on YOLOv11, focusing on resolving insufficient multi-scale feature coupling and occlusion sensitivity. First, a multi-scale feature extraction network (MFENet) is designed. It splits input feature maps into dual branches along the channel dimension: the upper branch performs local detail extraction and global semantic enhancement through secondary partitioning, while the lower branch integrates CARAFE (content-aware reassembly of features) upsampling and SENet (squeeze-and-excitation network) channel weight matrices to achieve adaptive feature enhancement. The three feature streams are fused to output multi-scale feature maps, significantly improving small object detail retention. Second, a convolutional block attention module (CBAM) is introduced during feature fusion, dynamically focusing on critical regions through channel–spatial dual attention mechanisms. A fuseModule is designed to aggregate multi-level features, enhancing contextual modeling for occluded objects. Additionally, the extreme-IoU (XIoU) loss function replaces the traditional complete-IoU (CIoU), combined with XIoU-NMS (extreme-IoU non-maximum suppression) to suppress redundant detections, optimizing convergence speed and localization accuracy. Experiments demonstrate that the improved model achieves a mean average precision (mAP50) of 0.997 (0.2% improvement) and mAP50-95 of 0.895 (3.5% improvement) on the RPC product dataset and the 6th Product Recognition Challenge dataset. The recall rate increases to 0.996 (0.6% improvement over baseline). 
Although frames per second (FPS) decreased compared to the original model, the improved model still meets real-time requirements for retail scenarios. The model exhibits stable noise resistance in challenging environments and achieves 84% mAP in cross-dataset testing, validating its generalization capability and engineering applicability. Video streams were captured using a Zhongweiaoke camera operating at 60 fps, satisfying real-time detection requirements for intelligent retail applications.

1. Introduction

With the adoption of novel payment technologies such as mobile payment, a new generation of computer vision-based unmanned retail cabinets has emerged. Compared with traditional retail cabinets, these systems improve shopping efficiency and enhance the user experience. Current mainstream unmanned retail cabinets fall into four categories: traditional types, radio frequency identification (RFID) [1] systems, gravity-sensing systems, and vision-based intelligent unmanned vending machines (UVMs). However, existing solutions generally suffer from high manufacturing costs and frequent maintenance requirements. Consequently, vision-based target detection for unmanned retail cabinets, which focuses on accurately identifying diverse products and foreign objects, has attracted significant attention. This paper addresses product and foreign object detection in retail cabinet scenarios, where multi-scale targets and occlusion significantly increase detection difficulty. State-of-the-art object detectors are broadly categorized into two-stage and one-stage frameworks. Two-stage detectors, represented by the classic regions with convolutional neural networks (R-CNN) [2], first extract regions of interest (ROIs) [3] with a search algorithm and then use convolutional neural networks for feature extraction and classification. Subsequent advances, such as Fast R-CNN and Faster R-CNN [4], further improve efficiency. One-stage detectors, exemplified by YOLO [5,6], emphasize both detection speed and accuracy. Other notable algorithms include the single shot MultiBox detector (SSD) [7,8,9], RetinaNet [10,11], fully convolutional one-stage object detection (FCOS) [12,13], CenterNet [18], and transformer-based models such as the detection transformer (DETR) [14,15], real-time DETR (RT-DETR) [16], and deformable DETR [17], all of which have achieved significant progress in object detection.

In addition, YOLOv11 has attracted significant attention owing to its strong overall performance. The backbone network, a critical component of the model, is primarily responsible for extracting high-level features. Widely adopted backbones include the visual geometry group network (VGG) [19]; the residual network (ResNet) [20], which is composed of identity and convolutional branches and eases the training of large models by summing the outputs of both branches, enabling much deeper networks; MobileNet, a lightweight network designed for resource-constrained scenarios; and ShuffleNet, which uses channel shuffling for efficient feature extraction. The neck network further processes the features extracted by the backbone to fuse multi-level information effectively. Among these designs, the feature pyramid network (FPN) [21] constructs a feature pyramid and integrates multi-scale features to improve the detection of targets at different scales, while spatial pyramid pooling (SPP) [22] and SPPF (spatial pyramid pooling-fast) [23] aggregate features through multi-scale pooling, improving adaptability to variations in target scale. Together, these architectures have advanced the performance of object detection models.

To address target occlusion, illumination variation, and small-target detection in dynamic visual cabinet scenarios, recent studies have proposed several innovative approaches. H. Wang et al. [24] observed that downsampling in traditional convolutional neural networks (CNNs) causes a loss of spatial information for small targets and proposed a generative adversarial network-based small-target detector (GAN-STD). Using ResNet-50 as the backbone, this method incorporates a cross-scale feature alignment module in the generator to enforce consistency between shallow small-target features and deep large-target semantic features during adversarial training. Compatible with single-stage detectors (e.g., SSD and YOLOv4), it reduces small-target missed detection rates by 21.3% on the TT100K industrial quality inspection dataset. Dong et al. [25] designed a texture-semantic–contour joint extraction network to address small-target blurring in low-resolution images. Its backbone employs MobileNetv3 with parallel dilated convolutions and a spatial pyramid pooling (SPP) module to extract local texture details and global contour information, followed by sub-pixel convolution for 2× feature upsampling. This method achieves a 4.8% misidentification rate in license plate recognition for traffic monitoring. C. Wang et al. [26] proposed a cross-scale feature pyramid network (CS-FPN) based on YOLOv11 for aerial images, introducing bidirectional cross-layer connections, a feature recalibration module (FRM) that dynamically adjusts channel weights, and a “sandwich” fusion strategy for bidirectional interaction between high-level semantics and low-level details. On the VisDrone dataset, CS-FPN improves vehicle detection recall to 78.6%. Liu et al. [27] developed BiFPN-SECA, integrating squeeze-and-excitation network (SENet) channel attention with coordinate attention. The enhanced attention modules are embedded before and after the sampling layers of a bidirectional feature pyramid network (BiFPN) to capture long-range spatial dependencies and filter critical channels, achieving 92.4% accuracy in detecting solder joint defects as small as 8 × 8 pixels in PCB inspection. Yang et al. [28] constructed a multi-attention residual network (MA-ResNet) for tiny vehicle detection in intelligent transportation. Based on ResNet-101, it embeds parallel spatial, channel, and self-attention submodules in residual blocks, with a gating mechanism for dynamic multi-scale feature fusion. Used as the backbone of Faster R-CNN, MA-ResNet raises pedestrian detection average precision (AP) to 64.2% on a 6G vehicle–road cooperative dataset.

Although the aforementioned methods perform well in general scenarios, their direct application to dynamic visual shelf systems still faces the following limitations:

1. Limited real-time capability: transformer-based DETR-series models (e.g., deformable DETR) require substantial computational resources, making it impractical to meet real-time requirements on the edge devices of retail systems;

2. Poor feature adaptability: SSD and CenterNet are prone to feature confusion in multi-scale occlusion scenarios, leading to higher false detection rates for foreign objects;

3. High deployment complexity: RetinaNet relies on intricate anchor designs, and both RetinaNet and FCOS complicate model tuning and hardware deployment.

Given these considerations, YOLOv11 is adopted as the foundational framework for this research owing to its superior real-time performance and ease of deployment on intelligent retail system boards. Accordingly, this study proposes an improved YOLOv11-retail model with three primary contributions:

1. Feature extraction optimization: a multi-scale feature extraction network (MFENet) is introduced to capture small-target details through hierarchical feature fusion, enabling the network to extract more comprehensive feature information.

2. Feature fusion improvement: by integrating the convolutional block attention module (CBAM) with upsampling, we construct an adaptive feature fusion module (FuseModule2) that effectively fuses multi-level feature maps and feeds them into an additional detection head.

3. Post-processing acceleration: extended intersection over union non-maximum suppression (XIoU-NMS) is implemented, together with the XIoU loss, to reduce missed detections of overlapping targets and accelerate loss convergence, thereby improving the detection efficiency of the network model.

2. Methodology

2.1. Algorithm Flowchart

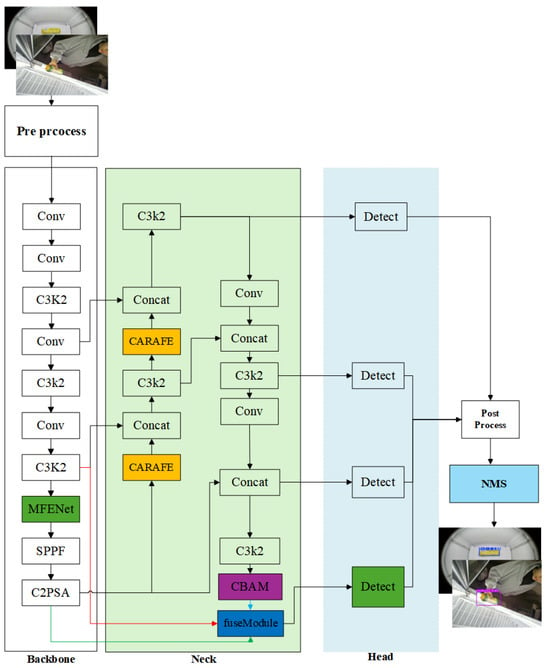

Figure 1 presents the overall architecture of the YOLOv11 model. To enhance its performance, this study retains the C3k2 module newly proposed in YOLOv11 and introduces new modules into different parts of the network. Specifically, the MFENet module, which strengthens feature extraction, is introduced into the backbone; its main role is to fuse feature information at different scales effectively while downsampling. The CBAM module is introduced into the feature fusion stage, together with an additional upsampling module. In the detection head, the fuseModule is introduced for multi-channel feature fusion, and the fused features are sent to a fourth detection head to improve the network's ability to detect targets of different scales. The details of each module are described below.

Figure 1.

Global algorithm flow chart.

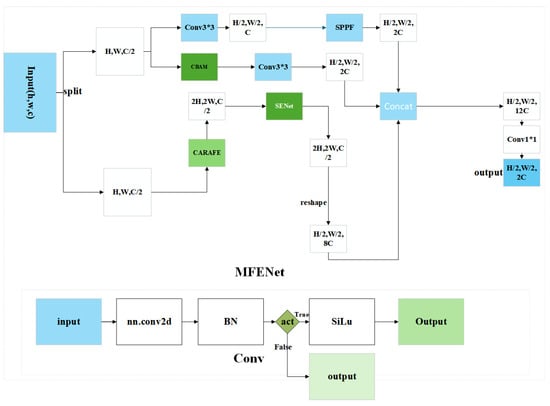

As depicted in Figure 2, MFENet, a downsampling multi-scale feature fusion network, takes as input the tensor produced by the preceding hidden layer. This input is split into four branches, each serving a specialized role in the feature processing pipeline. The uppermost branch is dedicated to attention-enhanced feature extraction. It begins with a convolutional layer (CONV), which reduces the spatial dimensions of the input while changing the channel count. This is followed by a CBAM (convolutional block attention module), which selectively enhances informative features through channel-wise and spatial attention, and by a second CONV layer, which further refines the feature representation. Next, an SPPF (spatial pyramid pooling-fast) layer aggregates features at multiple scales, capturing context across different spatial resolutions, and outputs a tensor with doubled channels and halved spatial dimensions. The two lower branches focus on multi-scale feature fusion. One uses a CARAFE (content-aware reassembly of features) layer, which adaptively reassembles features based on their content, followed by a SiLU (sigmoid-weighted linear unit) activation to introduce non-linearity. The other also applies a SiLU activation, followed by a reshape operation. Together, these operations extract and combine multi-scale features, enhancing the network's ability to capture diverse spatial and semantic information. The outputs of the four branches are then concatenated along the channel dimension, yielding a tensor with a substantially larger channel count (12C) at the same spatial resolution (H/2 × W/2). This tensor is processed by a 1 × 1 convolutional layer, which reduces the channel count to 2C, and by a final CONV layer that produces the output: a downsampled tensor with twice the input channels, which is fed into the subsequent hidden layer. Compared with a conventional convolution (Conv), MFENet's multi-branch design, with its attention-driven and multi-scale fusion paths, yields a more comprehensive and discriminative feature representation. Empirically, this design yields measurable improvements in detection performance, as it mitigates issues such as vanishing gradients and limited receptive fields and strengthens the network's capacity to handle complex visual patterns.
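The cost of the final channel compression in MFENet can be checked with a little arithmetic. The sketch below counts the weights of the 1 × 1 layer that maps the 12C concatenated channels to 2C and, for comparison, of a hypothetical 3 × 3 layer performing the same mapping. Biases are omitted on the assumption that batch normalization follows the convolution, as in YOLO-style Conv blocks; the function names are illustrative only.

```python
def conv_weight_count(c_in: int, c_out: int, k: int) -> int:
    """Weights in a k x k convolution with groups=1 and no bias."""
    return c_in * c_out * k * k


def mfenet_compression_cost(c: int) -> dict:
    """Weights needed to map MFENet's 12C concatenated channels to 2C outputs."""
    c_cat, c_out = 12 * c, 2 * c
    return {
        "1x1": conv_weight_count(c_cat, c_out, 1),  # 24 * C^2
        "3x3": conv_weight_count(c_cat, c_out, 3),  # 216 * C^2, hypothetical alternative
    }


if __name__ == "__main__":
    for c in (32, 64, 128):
        print(c, mfenet_compression_cost(c))
```

For C = 64, the 1 × 1 layer needs 98,304 weights versus 884,736 for a 3 × 3 layer, one ninth of the cost, which is why a pointwise convolution is the natural choice for squeezing the wide concatenated tensor.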

Figure 2.

MFENet downsampling multi-scale feature fusion network.

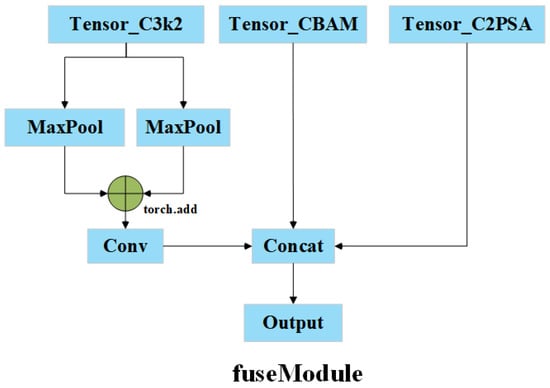

Figure 3 illustrates the structure of the proposed multi-channel fusion module (fuseModule), which integrates feature maps from different semantic levels to enhance the model's multi-scale perception. The fused features are forwarded to the fourth detection head to improve detection performance. The module has three inputs. The first is the output of the second C3k2 module, i.e., the feature map after four downsampling operations (denoted P6/16), which retains higher spatial resolution. The second comes from the CBAM module (P22/32) and provides globally attended deep semantic features. The third comes from the C2PSA module (P9/32) and provides multi-scale attention-fused features. Because the three feature maps have different resolutions, they must be spatially aligned before fusion. To reduce computational cost while preserving essential information, two MaxPooling operations are applied to the first feature map (P6/16) to downsample it to a size compatible with the other two. MaxPooling introduces no learnable parameters and avoids the redundant information introduced by interpolation-based resizing. The three aligned feature maps are then concatenated along the channel dimension into a unified mixed representation, and a 1 × 1 convolution compresses the channels of the concatenated features to prevent the parameter redundancy caused by an excessive channel count. This lightweight design preserves multi-scale information while controlling model complexity, which facilitates faster training convergence and inference.
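The align, concatenate, and compress flow of the fuseModule can be traced with the minimal plain-Python sketch below. It is an illustration under stated assumptions, not the authors' implementation: feature maps are nested lists indexed [channel][row][column]; pooling is 2 × 2 with stride 2 and is repeated until the finer map (standing in for P6/16) reaches the resolution of the coarser inputs (standing in for P22/32 and P9/32), whereas the actual number and configuration of pooling stages follow Figure 3; and the 1 × 1 weights are supplied directly, with no bias, normalization, or activation. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

FeatureMap = List[List[List[float]]]  # [channels][height][width]


def max_pool2x2(x: FeatureMap) -> FeatureMap:
    """2 x 2 max pooling with stride 2, applied to every channel."""
    return [
        [[max(ch[i][j], ch[i][j + 1], ch[i + 1][j], ch[i + 1][j + 1])
          for j in range(0, len(ch[0]) - 1, 2)]
         for i in range(0, len(ch) - 1, 2)]
        for ch in x
    ]


def align_by_max_pooling(x: FeatureMap, target_hw: Tuple[int, int]) -> FeatureMap:
    """Pool repeatedly until x reaches target_hw (the stage count is an assumption)."""
    while (len(x[0]), len(x[0][0])) != tuple(target_hw):
        if len(x[0]) < 2 * target_hw[0] or len(x[0][0]) < 2 * target_hw[1]:
            raise ValueError("target size not reachable by 2x2/2 pooling")
        x = max_pool2x2(x)
    return x


def conv1x1(x: FeatureMap, weight: Sequence[Sequence[float]]) -> FeatureMap:
    """1 x 1 convolution: weight[o][c] mixes input channel c into output channel o."""
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(wo[c] * x[c][i][j] for c in range(len(x))) for j in range(w)] for i in range(h)]
        for wo in weight
    ]


def fuse_module(p_fine: FeatureMap, p_cbam: FeatureMap, p_c2psa: FeatureMap,
                weight: Sequence[Sequence[float]]) -> FeatureMap:
    """Align the finer map, concatenate the three inputs on channels, compress with 1 x 1."""
    target = (len(p_cbam[0]), len(p_cbam[0][0]))
    mixed = align_by_max_pooling(p_fine, target) + p_cbam + p_c2psa  # channel concat
    if any(len(wo) != len(mixed) for wo in weight):
        raise ValueError("each 1x1 filter needs one weight per concatenated channel")
    return conv1x1(mixed, weight)
```

With a single 4 × 4 channel holding 0 to 15 and two constant 2 × 2 maps (1 and 2), a 1 × 1 filter of all ones returns the pooled maxima 5, 7, 13, 15 shifted by 3.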

Figure 3.

fuseModule multi-channel fusion module.

2.2. CBAM Attention Mechanism

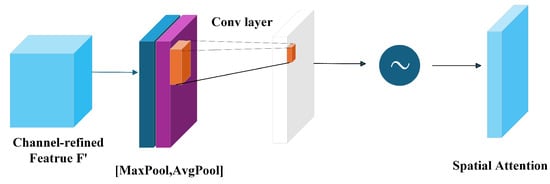

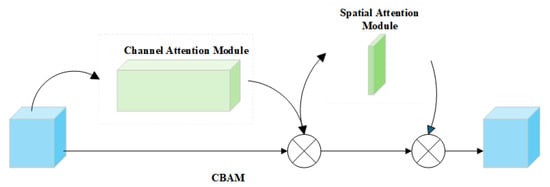

CBAM addresses the limitations of conventional convolutional neural networks in processing multi-scale, multi-form, and multi-directional information. To this end, CBAM introduces dual attention mechanisms: channel attention and spatial attention. The channel attention module enhances the representational capacity of channel-wise features, while the spatial attention mechanism captures critical information across different spatial regions.

CBAM comprises two core components: the channel attention module (CAM) and the spatial attention module (SAM). These modules can be sequentially integrated into various layers of CNN architectures to strengthen feature representation capabilities.

CBAM (convolutional block attention module) is a lightweight network module that integrates both channel and spatial attention mechanisms. As shown in Figure 4, it is as versatile as SENet (squeeze-and-excitation networks) and can be embedded into various convolutional neural network (CNN) architectures. While moderately increasing computational complexity and the number of parameters, CBAM significantly enhances network performance. The channel attention mechanism is implemented as follows: global max pooling and global average pooling are applied to the input feature map F of dimensions H × W × C to obtain two 1 × 1 × C channel descriptors; both descriptors are fed into a shared multilayer perceptron (MLP) with one hidden layer (C/r neurons in the first layer and C in the second, with ReLU activation); the two output vectors are summed element-wise; and a sigmoid produces the channel attention map:

$$M_C(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big)$$

where F denotes the input feature map, AvgPool(·) represents global average pooling, MaxPool(·) signifies global max pooling, MLP indicates a shared-weight two-layer fully connected network, and $\sigma$ denotes the sigmoid activation.

Figure 4.

Channel attention.
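The channel attention computation can be traced on toy data with the plain-Python sketch below (a sketch, not the authors' code). Feature maps are nested lists indexed [channel][row][column]; the shared MLP weights `w1` (C/r × C) and `w2` (C × C/r) are supplied by the caller; and biases are omitted, which is an assumption of this sketch. `apply_channel_attention` multiplies each channel of F by its attention weight to form the refined map F'.

```python
import math
from typing import List, Sequence

FeatureMap = List[List[List[float]]]  # [channels][height][width]


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def shared_mlp(v: Sequence[float], w1: List[List[float]], w2: List[List[float]]) -> List[float]:
    """Two-layer MLP C -> C/r -> C with ReLU in between (biases omitted)."""
    hidden = [max(0.0, sum(a * b for a, b in zip(row, v))) for row in w1]
    return [sum(a * b for a, b in zip(row, hidden)) for row in w2]


def channel_attention(f: FeatureMap, w1: List[List[float]], w2: List[List[float]]) -> List[float]:
    """M_C(F) = sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F))), one weight per channel."""
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in f]  # global average
    mx = [max(max(row) for row in ch) for ch in f]  # global max
    return [_sigmoid(a + m) for a, m in zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]


def apply_channel_attention(f: FeatureMap, mc: Sequence[float]) -> FeatureMap:
    """F' = M_C(F) (x) F: scale every channel by its attention weight."""
    return [[[w * v for v in row] for row in ch] for ch, w in zip(f, mc)]
```

With all-zero MLP weights every channel receives weight sigmoid(0) = 0.5, a handy sanity check; trained weights instead push informative channels toward 1 and others toward 0.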

The channel attention map is applied to the original feature map by element-wise multiplication, broadcast over the spatial dimensions:

$$F' = M_C(F) \otimes F$$

The spatial attention module, illustrated in Figure 5, applies average pooling and max pooling along the channel dimension to compress channel-wise information, generating two spatial feature maps of size H × W × 1. These pooled maps are concatenated and fused by a 7 × 7 convolutional layer, and the resulting spatial weights are passed through a sigmoid activation to produce the spatial attention map $M_S$:

$$M_S(F') = \sigma\big(f^{7\times 7}([\mathrm{AvgPool}(F'); \mathrm{MaxPool}(F')])\big)$$

$M_S$ is then multiplied element-wise with $F'$ to yield the refined feature representation.

Figure 5.

Spatial attention.

where $F'$ is the feature map after channel attention processing, [;] denotes concatenation along the channel dimension, $f^{7\times 7}$ represents a convolution with a 7 × 7 kernel, $\sigma$ is the sigmoid activation function, and AvgPool and MaxPool denote average and max pooling along the channel dimension, respectively.
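The spatial attention map can be sketched in plain Python in the same way. In this sketch the `kernel` argument holds 2 × k × k weights (k = 7 in CBAM) for the average-pooled and max-pooled maps; zero padding keeps the H × W size; and, as in deep learning frameworks, the "convolution" is computed as a cross-correlation. The absence of a bias term and the function names are assumptions of this sketch.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # [channels][height][width]


def spatial_attention(fp: FeatureMap, kernel: List[List[List[float]]]) -> List[List[float]]:
    """M_S(F') = sigmoid(conv_kxk([AvgPool_c(F'); MaxPool_c(F')])) for a k x k kernel."""
    c, h, w = len(fp), len(fp[0]), len(fp[0][0])
    # Pool across channels at every spatial position -> two H x W maps
    avg = [[sum(fp[ch][i][j] for ch in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(fp[ch][i][j] for ch in range(c)) for j in range(w)] for i in range(h)]
    pooled = (avg, mx)
    k = len(kernel[0])
    pad = k // 2  # "same" zero padding
    ms = []
    for i in range(h):
        row = []
        for j in range(w):
            z = 0.0
            for m in range(2):  # 0: average map, 1: max map
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            z += kernel[m][di][dj] * pooled[m][y][x]
            row.append(1.0 / (1.0 + math.exp(-z)))
        ms.append(row)
    return ms


def apply_spatial_attention(fp: FeatureMap, ms: List[List[float]]) -> FeatureMap:
    """F'' = M_S(F') (x) F': every channel is scaled by the same H x W map."""
    return [[[v * ms[i][j] for j, v in enumerate(row)] for i, row in enumerate(ch)] for ch in fp]
```

A kernel that is zero except for a 1 at the centre of the max-pooled plane reduces the map to sigmoid(max over channels), which makes the role of the pooled inputs easy to inspect.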

The final output of CBAM is obtained by multiplying the spatial attention map with $F'$:

$$F'' = M_S(F') \otimes F'$$

As Figure 6 shows, CBAM combines channel and spatial attention: the channel attention module, an SENet-style structure that uses both average and max pooling, is followed by a spatial attention module. Analogously to SENet, the spatial module applies average and max pooling along the channel dimension to generate a two-dimensional spatial attention weight matrix. By performing hybrid pooling (average and max) across both the channel and spatial dimensions, CBAM captures richer contextual information.

Figure 6.

CBAM module.

Compared with other attention mechanisms, such as SENet and ECANet, which focus exclusively on channel attention, or CA, which is dedicated to spatial information, CBAM applies a channel attention module and a spatial attention module in sequence. This design jointly emphasizes channel significance (identifying critical feature channels) and spatial attention (highlighting key spatial regions), enabling the model to concentrate on target areas while suppressing background interference. These advantages are validated empirically in the ablation study section.

2.3. MFENet Module

Conventional YOLO models predominantly employ standard convolutions (Conv), which extract single-scale feature maps lacking multi-scale information and exhibit limited feature discriminability. To address this, we design a downsampling module with integrated multi-scale fusion. Starting from the input, the feature map is split along the channel dimension into two equal halves. The upper branch undergoes secondary partitioning for multi-granularity feature extraction, while the lower branch performs CARAFE upsampling followed by SENet-based feature enhancement. Specifically, SENet applies batch normalization (BN) to generate a channel-wise weight matrix, which is multiplied element-wise with the original feature map to produce enhanced representations. Finally, the three branches (two from the upper split and one from the lower) are concatenated along the channel dimension, yielding a downsampled feature map with doubled channel count.
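The split-and-reweight data flow described above can be sketched as tensor plumbing in plain Python. The branch operators (the local-detail path, the global-semantic path, and the CARAFE path) are passed in as placeholder callables, and the channel weights are supplied directly; how those weights are produced (SENet with BN, per the text) and the downsampling convolutions that double the channel count are deliberately left out, so with identity branches the channel count is preserved rather than doubled. All names are hypothetical.

```python
from typing import Callable, List, Sequence

FeatureMap = List[List[List[float]]]  # [channels][height][width]
Branch = Callable[[FeatureMap], FeatureMap]


def split_channels(x: FeatureMap, sizes: Sequence[int]) -> List[FeatureMap]:
    """Split a feature map into consecutive channel groups of the given sizes."""
    if sum(sizes) != len(x):
        raise ValueError("split sizes must add up to the channel count")
    groups, start = [], 0
    for n in sizes:
        groups.append(x[start:start + n])
        start += n
    return groups


def reweight_channels(x: FeatureMap, weights: Sequence[float]) -> FeatureMap:
    """Scale every channel by one weight (the channel-wise weighting step)."""
    if len(weights) != len(x):
        raise ValueError("need one weight per channel")
    return [[[w * v for v in row] for row in ch] for ch, w in zip(x, weights)]


def mfenet_style_split(x: FeatureMap, local_branch: Branch, global_branch: Branch,
                       lower_branch: Branch, lower_weights: Sequence[float]) -> FeatureMap:
    """Halve the channels, re-split the upper half, reweight the lower path, concatenate."""
    c = len(x)
    upper, lower = split_channels(x, [c // 2, c - c // 2])
    u = len(upper)
    upper_a, upper_b = split_channels(upper, [u // 2, u - u // 2])  # secondary partition
    lower_out = reweight_channels(lower_branch(lower), lower_weights)
    # Channel-wise concatenation of the three streams
    return local_branch(upper_a) + global_branch(upper_b) + lower_out
```

Replacing the identity callables with real layers (and the supplied weights with a learned weighting) turns this plumbing into a full module; the split sizes are the only part fixed by the description above.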

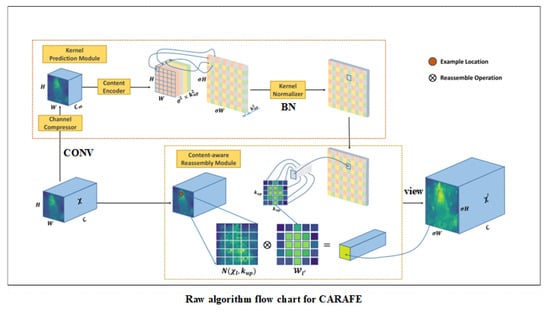

2.4. CARAFE Module

CARAFE is a content-aware upsampling operator for feature maps. Its primary objective is to enlarge the receptive field of the upsampling step, thereby enhancing detection accuracy for small objects. Figure 7 presents the original algorithm flowchart of this operator.

Figure 7.

Raw algorithm flow chart.

As depicted in the figure, the input feature map first passes through a convolutional layer that compresses its channels. A kernel-prediction convolution, followed by upsampling (pixel rearrangement) and normalization, then generates a reassembly kernel weight matrix for every output location, which encodes spatial relationships across pixel regions. Each kernel is multiplied element-wise with the corresponding neighborhood of the input feature map and the products are summed, producing an enhanced representation of target regions. Because each output value aggregates a whole neighborhood rather than a single pixel, the receptive field of the upsampling is enlarged, yielding the final upsampled feature map.
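The reassembly step can be illustrated with a single-channel plain-Python sketch, assuming the kernel logits have already been predicted (in CARAFE they come from the channel compressor, a content-encoding convolution, and pixel rearrangement; here the caller supplies them). Each output pixel gets its own softmax-normalized k_up × k_up kernel, applied to the neighborhood of its source pixel with zero padding. In the full operator the same kernel is shared by all channels at a location; the function names are illustrative.

```python
import math
from typing import List, Sequence


def _softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)
    e = [math.exp(v - m) for v in logits]
    s = sum(e)
    return [v / s for v in e]


def carafe_reassemble(x: List[List[float]], kernel_logits, scale: int = 2,
                      k_up: int = 5) -> List[List[float]]:
    """Content-aware reassembly of a single-channel H x W map into (scale*H) x (scale*W).

    kernel_logits[oi][oj] holds k_up * k_up predicted logits for output pixel (oi, oj).
    """
    h, w = len(x), len(x[0])
    r = k_up // 2
    out = []
    for oi in range(scale * h):
        row = []
        for oj in range(scale * w):
            weights = _softmax(kernel_logits[oi][oj])  # normalized reassembly kernel
            si, sj = oi // scale, oj // scale  # source location in the input map
            acc = 0.0
            for di in range(k_up):
                for dj in range(k_up):
                    y, xx = si + di - r, sj + dj - r
                    if 0 <= y < h and 0 <= xx < w:  # zero padding outside the map
                        acc += weights[di * k_up + dj] * x[y][xx]
            row.append(acc)
        out.append(row)
    return out
```

If every kernel concentrates its mass on the centre tap, the operator reduces to nearest-neighbour upsampling; spreading the mass lets each output pixel draw on its surroundings, which is the content-aware behaviour described above.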

2.5. fuseModule Module

To enhance feature fusion precision, this study proposes an innovative approach within existing detection frameworks by incorporating an additional detection head that integrates multi-level feature maps from the spatial pyramid pooling-fast (SPPF) module, pyramid scene attention (P2SA) module, and terminal output layer. The core design employs dedicated convolutional layers for channel alignment and feature refinement, subsequently applies cross-scale feature aggregation through spatial concatenation, and ultimately utilizes an efficient decoder for dynamic multi-scale anchor construction. This hierarchical feature fusion architecture significantly enhances the model’s multi-scale object representation capability in retail scenarios.

2.6. Improved XIoU Loss Function

Without altering the base model, enhancing model precision typically involves optimizing the loss function. In the field of object detection, the loss function generally comprises three components: classification loss, bounding box regression loss, and confidence loss. It is common practice to focus on improving the bounding box regression loss, specifically by optimizing the intersection over union (IoU) loss. Based on this, this paper proposes an improved IoU method.

In object detection tasks, the precision of bounding box regression directly determines the overall performance of the model. Although the traditional intersection over union (IoU) metric possesses scale invariance, it exhibits deficiencies in optimizing non-overlapping cases. Consequently, researchers have proposed improved strategies such as GIoU, DIoU, and CIoU:

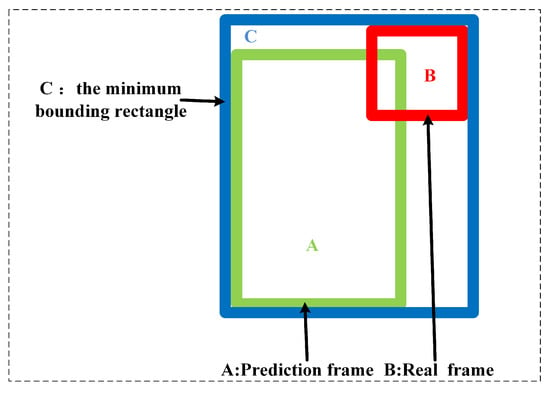

GIoU addresses the inherent limitations of IoU in object detection tasks by introducing a minimum enclosing convex region C that encompasses both the predicted bounding box and the ground truth box. Traditional IoU computes only the ratio of the intersection area to the union area of two bounding boxes. When the boxes do not overlap, its value is always 0, so it cannot reflect differences in their spatial relationship. GIoU introduces the enclosing region C, defined as the smallest rectangle containing both boxes, and extends the IoU calculation as follows:

$$\mathrm{GIoU} = \mathrm{IoU} - \frac{|C \setminus (A \cup B)|}{|C|}$$

where A and B are the predicted and ground truth boxes, the numerator $|C \setminus (A \cup B)|$ is the area of the enclosing region not covered by the union of the two boxes, and the denominator $|C|$ is the total area of the enclosing region. Introducing C compels the model to consider the spatial distribution of the bounding boxes during optimization. Compared with IoU, which considers only the degree of overlap, generalized intersection over union (GIoU) guides the predicted box toward the ground truth box more effectively, particularly when the two boxes are far apart.

As illustrated in Figure 8, GIoU improves upon IoU by introducing a minimum enclosing rectangle that contains both the predicted bounding box and the ground truth box (depicted as the outermost dashed box enclosing the blue and green boxes). The calculation formula is as follows:

$$\mathrm{GIoU} = \frac{|A \cap B|}{|A \cup B|} - \frac{|C| - |A \cup B|}{|C|}$$

where $|C|$ denotes the area of the minimum enclosing rectangle, and A and B denote the predicted bounding box and the ground truth box, respectively. In this way, GIoU yields an informative value even when the predicted and ground truth boxes do not overlap, better capturing the distance and positional relationship between them. This guides the model to adjust the predicted box more reasonably during training and improves detection accuracy. The overlapping region between the predicted and ground truth boxes (shown as the red area in the figure) is the basis of both IoU and GIoU. However, when one box fully contains the other, the enclosing rectangle coincides with the union, so GIoU degenerates to IoU and suffers from slow convergence and low regression accuracy.

Figure 8.

GIoU schematic diagram.
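The GIoU formula can be checked numerically with the short plain-Python sketch below, which assumes axis-aligned boxes in (x1, y1, x2, y2) form; the helper names are illustrative. The first two calls compare a near and a far non-overlapping pair (both have IoU 0), and the third shows the containment case in which GIoU equals IoU.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def _area(b: Box) -> float:
    return (b[2] - b[0]) * (b[3] - b[1])


def iou_and_union(a: Box, b: Box) -> Tuple[float, float]:
    """Return (IoU, union area) of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = _area(a) + _area(b) - inter
    return inter / union, union


def giou(a: Box, b: Box) -> float:
    """GIoU = IoU - (|C| - |A u B|) / |C|, with C the smallest enclosing box."""
    iou, union = iou_and_union(a, b)
    c_area = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return iou - (c_area - union) / c_area


if __name__ == "__main__":
    print(giou((0, 0, 1, 1), (2, 0, 3, 1)))  # near, no overlap
    print(giou((0, 0, 1, 1), (4, 0, 5, 1)))  # far, no overlap: more negative
    print(giou((0, 0, 4, 4), (1, 1, 2, 2)))  # containment: equals IoU
```

The two disjoint pairs give -1/3 and -0.6, so GIoU ranks them by separation even though IoU is 0 for both; for the nested pair the enclosing box equals the union and GIoU collapses to the IoU of 0.0625.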

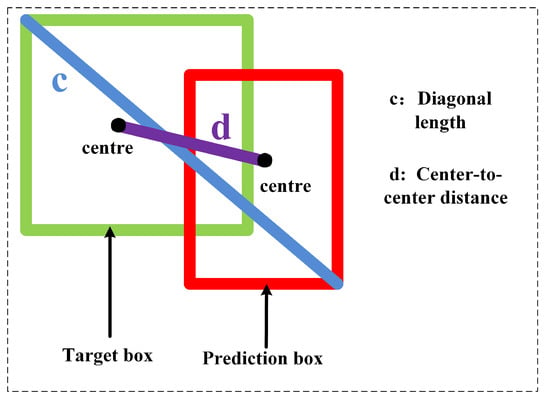

Building upon traditional IoU, DIoU adds a penalty term for the distance between the centers of the predicted bounding box and the ground truth box, thereby optimizing bounding box regression geometrically. Its core improvement is to extend the overlap measure of IoU into a metric that also incorporates spatial position:

$$\mathrm{DIoU} = \mathrm{IoU} - \frac{\rho^2(b, b^{gt})}{c^2}, \qquad \mathcal{L}_{\mathrm{DIoU}} = 1 - \mathrm{DIoU}$$

where $\rho(b, b^{gt})$ represents the Euclidean distance between the centers $b$ and $b^{gt}$ of the predicted and ground truth bounding boxes, and $c$ denotes the diagonal length of the minimum enclosing rectangle. Normalizing the penalty term by $c^2$ makes it scale invariant: the center-distance penalty becomes a relative value between 0 and 1 regardless of target size, ensuring consistent optimization across scales.

As illustrated in Figure 9, the distance-IoU (DIoU) loss metric is applied to the bounding box regression task. It achieves direct optimization by minimizing the normalized distance between the centers of the bounding boxes. In this formulation, green bounding boxes represent predicted boxes, while red bounding boxes denote ground truth boxes. c represents the diagonal length of the minimum enclosing rectangle covering both bounding boxes, while d denotes the distance between their centers. Although this method improves convergence speed, it fails to account for the impact of aspect ratio on localization accuracy.

Figure 9.

DIoU schematic diagram.
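A minimal plain-Python sketch of the DIoU metric and loss above follows, again assuming (x1, y1, x2, y2) boxes and illustrative function names. The last two calls use two nested pairs that share IoU = 0.25 (so GIoU cannot separate them) but differ in center offset, which the distance term does distinguish.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def diou(a: Box, b: Box) -> float:
    """DIoU = IoU - rho^2(b, b_gt) / c^2."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    # Squared distance between the box centres
    rho2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    # Squared diagonal of the smallest enclosing box
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    return inter / union - rho2 / c2


def diou_loss(pred: Box, gt: Box) -> float:
    return 1.0 - diou(pred, gt)


if __name__ == "__main__":
    print(diou((0, 0, 1, 1), (2, 0, 3, 1)))  # disjoint pair
    print(diou((0, 0, 4, 4), (1, 1, 3, 3)))  # nested, same centre
    print(diou((0, 0, 4, 4), (0, 0, 2, 2)))  # nested, offset centre
```

The nested pairs score 0.25 and 0.1875, so the centered prediction is preferred even though both overlaps are identical.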

CIoU further introduces an aspect ratio penalty term on top of DIoU, constructing a more comprehensive evaluation framework for bounding box regression in object detection. Its core innovation is to decompose the geometric relationship between the predicted and ground truth bounding boxes into three aspects: overlap, center distance, and shape consistency, which enables more precise regression optimization:

$$\mathcal{L}_{\mathrm{CIoU}} = 1 - \mathrm{IoU} + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v$$

where $\rho^2(b, b^{gt})$ represents the squared Euclidean distance between the centers of the two bounding boxes, $c$ denotes the length of the diagonal of the minimum enclosing rectangle, and $\alpha$ is a trade-off parameter defined as follows:

$$\alpha = \frac{v}{(1 - \mathrm{IoU}) + v}, \qquad v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2$$

where $v$ measures the consistency of the aspect ratios ($w$ and $h$ are the width and height of the predicted box, and $w^{gt}$ and $h^{gt}$ those of the ground truth box), and $\alpha$ regulates the weight of the aspect ratio penalty. Despite significant progress, the aspect ratio penalty term still has inherent limitations: it treats the ground truth box as an absolute standard and fails to distinguish the semantic difference when the aspect ratios of the predicted and ground truth boxes are swapped; the nonlinear arctangent mapping makes the optimization of extreme shapes inefficient; and when overlap is low, excessive focus on aspect ratio optimization may slow convergence and limit performance in complex scenarios. To address these issues, we propose a more efficient and direct regression loss function based on the CIoU loss, enhanced intersection over union (XIoU), defined as follows:

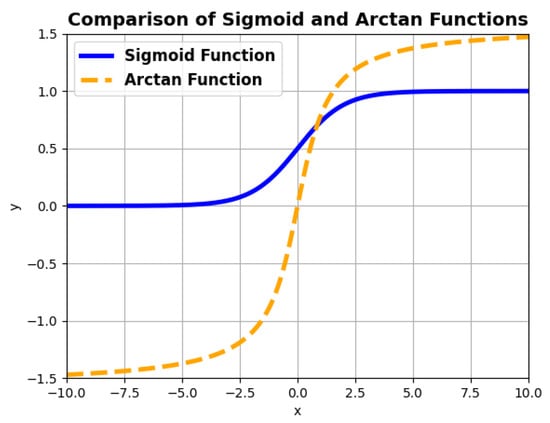

The final term of the proposed extended IoU (XIoU) loss maps the rectangular aspect ratios into the domain of a sigmoid function and measures the aspect-ratio discrepancy between the predicted and ground-truth boxes in its codomain, thereby optimizing the penalty term through functional mapping. Compared with the penalty term in the CIoU (complete intersection over union) loss, the proposed penalty term is more robust and has smoother gradients, and its output is strictly confined to the range (0, 0.25). This built-in normalization removes the need for manual scaling, simplifying the original loss formulation. Consequently, the XIoU regression loss converges faster, localizes more accurately, and improves model performance. As shown in Figure 10, the sigmoid function saturates smoothly, its gradient varies more uniformly than that of the arctangent function, and its values are confined to the open interval (0, 1), which ensures numerical stability.

Figure 10.

Comparison of arctan(x) and sigmoid(x).
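The sketch below implements the CIoU loss exactly as given by the equations for $\mathcal{L}_{\mathrm{CIoU}}$, $\alpha$, and $v$ above, together with a sigmoid-based aspect-ratio term for comparison. The `sigmoid_term` function is an assumption: it is one penalty of the kind described for XIoU (a sigmoid applied to the aspect ratios, with values below 0.25), written for illustration only, and it is not claimed to be the authors' exact XIoU term, whose equation is not reproduced in this section. Boxes are (x1, y1, x2, y2) with positive width and height.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), positive width and height


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def _iou_and_distance(p: Box, g: Box) -> Tuple[float, float]:
    """Return IoU and the normalized centre distance rho^2 / c^2."""
    iw = max(0.0, min(p[2], g[2]) - max(p[0], g[0]))
    ih = max(0.0, min(p[3], g[3]) - max(p[1], g[1]))
    inter = iw * ih
    union = (p[2] - p[0]) * (p[3] - p[1]) + (g[2] - g[0]) * (g[3] - g[1]) - inter
    rho2 = ((p[0] + p[2] - g[0] - g[2]) / 2) ** 2 + ((p[1] + p[3] - g[1] - g[3]) / 2) ** 2
    c2 = (max(p[2], g[2]) - min(p[0], g[0])) ** 2 + (max(p[3], g[3]) - min(p[1], g[1])) ** 2
    return inter / union, rho2 / c2


def arctan_term(p: Box, g: Box) -> float:
    """CIoU aspect-ratio term v = 4/pi^2 * (atan(wg/hg) - atan(w/h))^2, in [0, 1)."""
    r_pred = (p[2] - p[0]) / (p[3] - p[1])
    r_gt = (g[2] - g[0]) / (g[3] - g[1])
    return 4.0 / math.pi ** 2 * (math.atan(r_gt) - math.atan(r_pred)) ** 2


def sigmoid_term(p: Box, g: Box) -> float:
    """HYPOTHETICAL sigmoid counterpart (sigmoid(wg/hg) - sigmoid(w/h))^2, in [0, 0.25)."""
    r_pred = (p[2] - p[0]) / (p[3] - p[1])
    r_gt = (g[2] - g[0]) / (g[3] - g[1])
    return (_sigmoid(r_gt) - _sigmoid(r_pred)) ** 2


def ciou_loss(p: Box, g: Box) -> float:
    """L_CIoU = 1 - IoU + rho^2/c^2 + alpha * v, with alpha = v / ((1 - IoU) + v)."""
    iou, dist = _iou_and_distance(p, g)
    v = arctan_term(p, g)
    alpha = v / ((1.0 - iou) + v) if v > 0 else 0.0  # guard the 0/0 case of identical boxes
    return 1.0 - iou + dist + alpha * v
```

Because aspect ratios are positive, the sigmoid of each lies in (0.5, 1), so the squared difference can never reach 0.25; the arctangent term, by contrast, approaches 1 for extreme, swapped shapes such as a 1000:1 box against a 1:1000 box.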

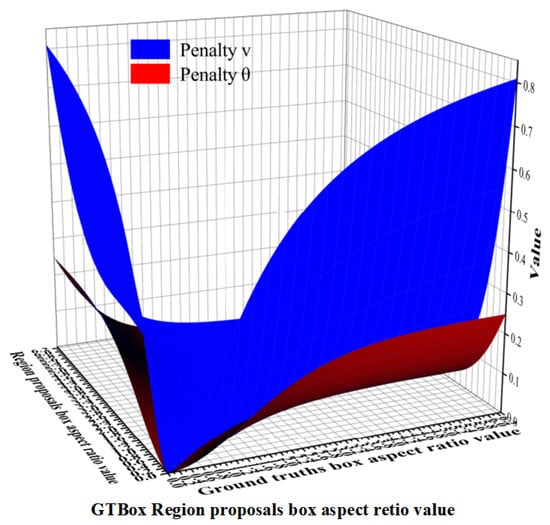

To further investigate the applicability of the proposed penalty term, we randomly sampled multiple bounding box aspect ratios to simulate the numerical evolution of the different penalty terms during training. Figure 11 illustrates the simulation of the penalty terms in the regression loss. The results show that, compared with the original CIoU penalty term, the proposed penalty term has gentler gradients, stronger robustness to outliers, and smaller regression errors, thereby achieving superior regression performance.

Figure 11.

Simulation of the penalty-term experiment.
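A simulation in the spirit of Figure 11 can be reproduced in a few lines of plain Python. The sketch samples predicted and ground-truth aspect ratios log-uniformly (the sampling distribution and range are assumptions, since they are not stated here) and reports the range of the CIoU arctangent term and of the hypothetical sigmoid-based term, together with the largest gradient magnitude of each with respect to the predicted aspect ratio. The gradients are the analytic derivatives of the two terms.

```python
import math
import random


def arctan_penalty(r_pred: float, r_gt: float) -> float:
    return 4.0 / math.pi ** 2 * (math.atan(r_gt) - math.atan(r_pred)) ** 2


def arctan_grad(r_pred: float, r_gt: float) -> float:
    """d(arctan_penalty)/d(r_pred)."""
    return -8.0 / math.pi ** 2 * (math.atan(r_gt) - math.atan(r_pred)) / (1.0 + r_pred ** 2)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_penalty(r_pred: float, r_gt: float) -> float:
    """HYPOTHETICAL sigmoid-based aspect-ratio penalty, bounded above by 0.25."""
    return (sigmoid(r_gt) - sigmoid(r_pred)) ** 2


def sigmoid_grad(r_pred: float, r_gt: float) -> float:
    """d(sigmoid_penalty)/d(r_pred)."""
    s = sigmoid(r_pred)
    return -2.0 * (sigmoid(r_gt) - s) * s * (1.0 - s)


def simulate(n: int = 10000, seed: int = 0, max_log_ratio: float = 3.0) -> dict:
    """Sample aspect ratios log-uniformly in [e^-m, e^m] and summarize each penalty."""
    rng = random.Random(seed)
    pens = {"arctan": [], "sigmoid": []}
    grads = {"arctan": [], "sigmoid": []}
    for _ in range(n):
        r_pred = math.exp(rng.uniform(-max_log_ratio, max_log_ratio))
        r_gt = math.exp(rng.uniform(-max_log_ratio, max_log_ratio))
        pens["arctan"].append(arctan_penalty(r_pred, r_gt))
        pens["sigmoid"].append(sigmoid_penalty(r_pred, r_gt))
        grads["arctan"].append(abs(arctan_grad(r_pred, r_gt)))
        grads["sigmoid"].append(abs(sigmoid_grad(r_pred, r_gt)))
    return {
        name: {"max_penalty": max(pens[name]),
               "mean_penalty": sum(pens[name]) / n,
               "max_abs_grad": max(grads[name])}
        for name in pens
    }


if __name__ == "__main__":
    for name, stats in simulate().items():
        print(name, stats)
```

Analytically, the sigmoid term's gradient magnitude cannot exceed 0.25 for positive aspect ratios, while the arctangent term's can approach 4/π; the simulation makes that difference in gradient scale visible on sampled data.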

To validate the effectiveness of our improvements, ablation studies were conducted, including comparative experiments on intersection over union (IoU). The experimental results demonstrate the effectiveness of the proposed IoU loss function.

2.7. XIoU-NMS Non-Maximum Suppression

In Section 2.6, this study enhances the localization accuracy of the model during the training phase by introducing an improved regression loss function (XIoU loss). However, the overall performance of an object detection system depends not only on the regression optimization during training but also critically on the post-processing algorithms in the inference stage, which have a decisive impact on the final detection accuracy. Although the XIoU loss significantly improves the localization precision of predicted bounding boxes, the traditional non-maximum suppression (NMS) algorithm still exhibits limitations when processing predicted boxes in highly overlapping or complex scenes. Due to the rigidity of its preset thresholds and the inadequacy of its redundant box filtering strategy, false positives (over-detection) or false negatives (missed detection) may occur, thereby partially offsetting the optimization effects achieved during training.

For instance, the standard NMS implementation widely used in deep learning frameworks such as PyTorch (this article uses the GPU version of PyTorch 2.4.1, available at https://pytorch.org/get-started/previous-versions/, accessed on 25 April 2025) typically applies a fixed intersection over union (IoU) threshold and hard-excludes every predicted box whose overlap exceeds it. This strategy ignores both the diversity of object scales across scenarios and the variation in how objects overlap. Consequently, in dense or cross-scale object detection tasks, correctly detected boxes are prone to being erroneously suppressed.
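A minimal greedy NMS in plain Python illustrates the fixed-threshold behavior described above. It follows the same rule as torchvision.ops.nms, which discards any box whose IoU with a higher-scoring kept box exceeds iou_threshold. The box coordinates and scores in the example are made up to mimic two adjacent products on a shelf.

```python
def iou(a, b):
    # IoU of two boxes given as (x1, y1, x2, y2)
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def hard_nms(boxes, scores, iou_thr=0.5):
    # Greedy NMS: keep the best remaining box, then delete every box whose
    # IoU with it exceeds the fixed threshold, regardless of object scale.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thr]
    return keep


if __name__ == "__main__":
    # Two adjacent products whose boxes overlap (IoU ~ 0.54) plus one distant product.
    boxes = [(0, 0, 10, 10), (3, 0, 13, 10), (30, 30, 40, 40)]
    scores = [0.9, 0.8, 0.7]
    print(hard_nms(boxes, scores, iou_thr=0.5))  # -> [0, 2]
    print(hard_nms(boxes, scores, iou_thr=0.6))  # -> [0, 1, 2]
```

At a threshold of 0.5 the second product is removed even though it is a distinct object. Whether it survives depends entirely on the fixed threshold.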

Therefore, targeted optimization of the traditional NMS algorithm is necessary for two reasons: the improved localization accuracy obtained during training must be fully realized during inference, and post-processing must not become a bottleneck that limits overall detection performance. Motivated by this, we propose the XIoU-NMS method, which refines the NMS process to retain accurate detections more effectively while suppressing redundant predictions, thereby enhancing the overall performance of the detector.

The NMS algorithm employed in this study, namely DSoft-NMS, builds a dynamic confidence decay mechanism on the IoU between each remaining predicted box and the target box (the highest-scoring box currently selected). The confidence score of a predicted box is negatively correlated with its IoU relative to the target box: the higher the overlap, the larger the decay applied to that box's confidence score. The probability decay formula, constructed from the IoU ratio, is defined as follows:

In this formula, the decay depends on four quantities: the Euclidean distance between the center points of the predicted box and the target box; a preset threshold value; the diagonal length of the smallest box enclosing both boxes; and the IoU between the target box and the predicted box, which measures their degree of overlap. The output is the updated score of the predicted box, which is used for subsequent threshold-based filtering.
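The decay formula itself is not reproduced here, so what follows is only a sketch of one plausible DIoU-style soft decay built from the quantities listed above (IoU, center distance, enclosing-box diagonal, and a threshold). The update rule s ← s · (1 − d), with d = IoU − ρ²/c², is an assumption: it combines linear Soft-NMS with the DIoU penalty and is not necessarily the paper's exact DSoft-NMS. All function and parameter names are hypothetical.

```python
def iou(a, b):
    # IoU of two boxes given as (x1, y1, x2, y2)
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def diou_term(a, b):
    # d = IoU - rho^2 / c^2: rho = distance between box centers,
    # c = diagonal of the smallest box enclosing both boxes
    rho2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    return iou(a, b) - (rho2 / c2 if c2 > 0 else 0.0)


def diou_soft_nms(boxes, scores, thr=0.5, score_min=0.001):
    # ASSUMED linear decay: boxes overlapping the target box beyond thr keep a
    # reduced score instead of being deleted outright.
    scores = list(scores)
    remaining = list(range(len(boxes)))
    keep = []
    while remaining:
        best = max(remaining, key=lambda i: scores[i])  # current target box
        remaining.remove(best)
        if scores[best] < score_min:
            break
        keep.append(best)
        for i in remaining:
            d = diou_term(boxes[best], boxes[i])
            if d >= thr:
                scores[i] *= 1.0 - d  # larger overlap -> larger decay
    return keep, scores


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (3, 0, 13, 10), (30, 30, 40, 40)]
    keep, new_scores = diou_soft_nms(boxes, [0.9, 0.8, 0.7])
    print(keep, [round(s, 3) for s in new_scores])
```

Applied to the same three boxes as in a plain hard-NMS example, the overlapping second product is kept with its score reduced from 0.8 to about 0.40 rather than deleted. A final confidence threshold then decides whether it is reported.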

3. Case Study

3.1. CNN-Based Object Detection Methods

Convolutional neural network (CNN)-based object detection methods, as a pivotal technical route in the field, reflect an evolutionary logic from accuracy prioritization to efficiency optimization. Early two-stage detectors, represented by R-CNN, fast R-CNN, and faster R-CNN, focused on high-precision detection. Oluwaseyi Olorunshola et al. [29] proposed improved models such as MFENet for general scenarios, which employ a dual-branch multi-scale fusion architecture integrating CARAFE upsampling and SENet channel weighting for adaptive feature enhancement. Additionally, a channel-spatial dual attention mechanism (CBAM) dynamically focuses on critical regions, while the XIoU loss function and optimized non-maximum suppression (NMS) strategies enhance robustness for occluded object detection. In lightweight detection for complex environments, Zhang M et al. [30] introduced a YOLOv11n-based framework that incorporates the C3k2MBNV2 module for efficient feature extraction, a spatial–channel decoupled downsampling module to preserve key information, and the C3k2WTDC module (integrating wavelet transforms and depthwise convolutions) to strengthen contextual modeling, significantly reducing computational complexity. While these models improve detection accuracy and computational efficiency, they remain constrained by real-time performance limitations. Subsequent one-stage detectors, such as the single shot MultiBox detector (SSD) [31] and RetinaNet, streamlined the detection pipeline to achieve notable speed improvements. RetinaNet [32] further addressed class imbalance between positive and negative samples via its focal loss. Despite their balanced accuracy and robustness, these methods still face challenges in real-time processing and edge deployment.
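For reference, the focal loss used by RetinaNet down-weights well-classified examples: FL(p_t) = −α_t (1 − p_t)^γ log(p_t). The sketch below uses α = 0.25 and γ = 2, the defaults reported for RetinaNet, and compares the loss with plain cross-entropy for an easy background example and a hard object. It is an illustration only and is not part of the proposed model.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    # p: predicted probability of the positive class; y: label (1 = object, 0 = background)
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t)^gamma shrinks the loss of well-classified (easy) examples
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


def cross_entropy(p, y, eps=1e-12):
    p_t = p if y == 1 else 1.0 - p
    return -math.log(max(p_t, eps))


if __name__ == "__main__":
    for p, y, label in [(0.01, 0, "easy background"), (0.1, 1, "hard object")]:
        print(f"{label}: CE={cross_entropy(p, y):.5f}  FL={focal_loss(p, y):.7f}")
```

With γ = 0 and α_t = 1 the expression reduces to ordinary cross-entropy, which makes the role of the modulating factor easy to see.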

3.2. Transformer-Based Object Detectors

In recent years, the transformer architecture [33] has been introduced into object detection, offering novel solutions for detection tasks. DETR (detection transformer) [34] pioneered the formulation of object detection as a set prediction problem, leveraging transformers for end-to-end regression and classification while eliminating handcrafted anchors and post-processing steps such as non-maximum suppression (NMS). Despite its simplified design and strong modeling capabilities, DETR suffers from slow convergence and suboptimal performance on small targets. Deformable DETR [35] introduces a deformable attention mechanism that significantly strengthens the focus on local regions, accelerates training convergence, and improves adaptability to complex scenes. However, such methods typically demand substantial computational resources and long training cycles, creating high deployment barriers that conflict with the real-time and lightweight requirements of commercial applications. To overcome these real-time limitations, RT-DETR (real-time DETR) [36,37] proposes two core innovations:

- 1.

- Hybrid encoder design: Combines hierarchical feature extraction with lightweight attention mechanisms. Shallow layers employ convolutional networks for rapid local detail capture, while deeper layers integrate sparsified self-attention modules to model global dependencies, balancing computational efficiency and feature representation.

- 2.

- Query denoising and dynamic refinement: Implements a progressive query screening strategy during decoding. A noise suppression module filters low-confidence candidate boxes, and a context-aware mechanism dynamically optimizes target localization predictions, minimizing redundant computations.

Additionally, RT-DETR optimizes the computational graph structure based on the characteristics of edge computing devices, supporting operator fusion and hardware-aware quantization, thereby achieving efficient inference on low-power embedded platforms. Nevertheless, the transformer model’s dependence on large-scale training data and its memory footprint issues still constrain its widespread adoption in resource-constrained retail terminal devices.

3.3. YOLO-Family Models and Their Variants

The YOLO series [38,39], renowned for its exceptional detection speed and end-to-end architecture, has garnered widespread attention since its inception. YOLOv8 [40] adopted a task-decoupled detection head, separating feature learning for classification and localization to mitigate task conflicts. It also integrated a dynamic convolution module that adaptively adjusts convolutional kernel weights based on input features, significantly enhancing feature extraction for occluded objects. As the YOLO family continued to evolve, YOLOv9 and YOLOv10 further optimized model architectures and inference mechanisms. Zhang H [41] proposed the BF-YOLOv9s model, which enhances small-target feature capture via a BiFormer attention mechanism, constructs a lightweight RepNCSPELAN4-Ghost module to reduce parameters, and combines BiFPN multi-scale feature fusion with Focal WIoU loss optimization. This model achieves a 5.6% improvement in mAP50 with 8.3% fewer parameters in drone aerial scenarios. Qiu [42] developed LD-YOLOv10, a lightweight drone detection algorithm with four main components:

- an RGELAN lightweight feature extraction module (integrating reparameterized convolutions and Conv-Tiny structures) that reduces computational load;
- AIFI multi-head attention for semantic enhancement;
- a DR-PAN neck that efficiently extracts weak features of small targets;
- wise-IoU and EIoU losses that optimize anchor competition strategies.

On datasets such as VisdroneDET-2021, it reduces parameters by 62.4% while achieving 25 FPS on edge devices. Building on prior advancements, YOLOv9 and YOLOv10 [43] incorporate more efficient RepVGG structures, smarter post-processing strategies, and adaptive multi-task learning mechanisms, further compressing model size while boosting inference efficiency.

YOLOv11 [44,45,46], as one of the latest advancements in the series, inherits the lightweight design and efficient inference capabilities of its predecessors while introducing novel components, including an integrated attention mechanism, a lightweight transformer module [47], and a hybrid convolution strategy. These innovations not only enhance detection accuracy for small and occluded targets but also achieve a 15–20% improvement in inference speed compared to YOLOv10. Building upon these features, this study proposes an enhanced YOLOv11 model to address insufficient multi-scale feature coupling and occlusion challenges. Specifically, we design a multi-scale fusion downsampling module (MFENet) with a dual-branch structure to achieve local detail extraction and global semantic enhancement. This module integrates CARAFE upsampling and SENet channel weighting to optimize feature representation. Furthermore, we introduce a channel-spatial dual attention mechanism (CBAM) and a multi-level feature aggregation module (FuseModule) to strengthen contextual modeling for occluded targets. The localization accuracy is further improved by adopting the XIoU loss function and an optimized non-maximum suppression strategy (XIoU-NMS).
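A framework-free sketch can illustrate the channel split and SENet-style channel weighting described for MFENet. It shows only a half/half channel split and a squeeze-and-excitation gate applied to the lower branch. The CARAFE upsampling and the secondary partition of the upper branch are omitted, and the split ratio and all weights are hypothetical, so this is not the MFENet implementation.

```python
import math


def split_channels(x):
    # Dual-branch split along the channel dimension (hypothetical 50/50 ratio).
    half = len(x) // 2
    return x[:half], x[half:]


def squeeze_excite(x, w1, w2):
    # x: feature map as nested lists [C][H][W]; w1: [C/r][C]; w2: [C][C/r]; biases omitted.
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one weight in (0, 1) per channel.
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    gate = [1.0 / (1.0 + math.exp(-sum(w * v for w, v in zip(row, hidden)))) for row in w2]
    # Scale: multiply every activation in channel c by gate[c].
    scaled = [[[v * g for v in row] for row in ch] for ch, g in zip(x, gate)]
    return scaled, gate


if __name__ == "__main__":
    x = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]],
         [[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
    upper, lower = split_channels(x)
    _, gate = squeeze_excite(lower, w1=[[1.0, 1.0]], w2=[[1.0], [-1.0]])
    print([round(g, 3) for g in gate])
```

In a real network, w1 and w2 are learned 1×1 convolutions or linear layers, and the gate lets the branch emphasize informative channels adaptively.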

In summary, through systematic optimization, the proposed YOLOv11 exhibits distinct advantages over other CNN- or transformer-based detectors:

- 1.

- Exceptional Real-Time Performance: YOLOv11 achieves stable 30+ FPS on edge devices (e.g., Jetson Xavier NX or ARM-based AI boards), meeting the stringent real-time and user experience requirements of intelligent retail.

- 2.

- Deployment Adaptability and Commercial Relevance: The streamlined architecture enables rapid deployment across diverse embedded platforms. The model is flexibly applicable to core retail scenarios such as product recognition, shelf monitoring, and customer behavior analysis, facilitating the development of stable, efficient, and low-cost commercial systems.

Consequently, YOLOv11 [16,48,49] not only achieves an optimal speed-accuracy trade-off algorithmically but also emerges as the preferred solution for addressing small-target and occlusion challenges in common product recognition tasks, owing to its superior deployment capabilities and industry adaptability.

4. Results and Analysis

4.1. Environment Configuration and Dataset Creation

This study constructs a custom dataset by capturing video footage via a fisheye camera and subsequently curating 20,000 images of common retail cabinet products. The dataset encompasses 36 categories across six major classes, including bottled, canned, bagged, and boxed goods. All images were annotated in YOLO format using the labelImg tool. The dataset was partitioned into training (16,000 images), validation (2000 images), and test (2000 images) sets at an 8:1:1 ratio. To ensure model generalization, publicly available datasets were incorporated: the 6th XinYe Technology Cup Image Algorithm Competition dataset (5422 annotated images, 113 product categories) and the RPC dataset from Megvii Technology, Jinnovation Park Building S1, No. 27 Jiancaicheng Middle Road, Haidian District, Beijing 100096, China. Model performance will be validated on these third-party datasets in subsequent phases.
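A seeded shuffle is enough to reproduce an 8:1:1 split like the one described. The file names and seed below are hypothetical. In practice, each image's YOLO .txt label file must move together with its image.

```python
import random


def split_8_1_1(items, seed=0):
    # Shuffle reproducibly, then cut at 80% and 90% using integer arithmetic.
    items = list(items)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_train = n * 8 // 10
    n_val = n // 10
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


if __name__ == "__main__":
    names = [f"img_{i:05d}.jpg" for i in range(20000)]  # hypothetical file names
    train, val, test = split_8_1_1(names)
    print(len(train), len(val), len(test))  # -> 16000 2000 2000
```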

As shown in Table 1, all experiments in this study were conducted on a workstation equipped with an NVIDIA GeForce RTX 4060 Ti GPU (16 GB VRAM). The workstation is physically located in Room Main Teaching Building, East Campus of Yangtze University, No. 1 Nanhuan Road, Jingzhou District, Jingzhou City, Hubei Province, China. The operating system used for deployment was Windows 10 Professional Edition, and the deep learning environment was based on Python 3.9, PyTorch 2.4.1, and CUDA 12.4. All training and testing procedures were performed on this local environment to ensure consistent hardware and software configurations throughout the experiments.

Table 1.

Experiment environment configuration.

4.2. Analysis of Results

4.2.1. Evaluation Indicators

- (1)

- AP

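Given n detections ranked by confidence, let $r_k$ denote the recall when the top k detections are counted, and let $p_{interp}(r_k)$ denote the maximum precision at any recall greater than or equal to $r_k$. The average precision (AP) is defined as follows:

$$AP = \sum_{k=1}^{n} \left(r_k - r_{k-1}\right) p_{interp}(r_k), \qquad p_{interp}(r_k) = \max_{\tilde{r} \ge r_k} p(\tilde{r}), \qquad r_0 = 0$$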
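The definition above corresponds to all-point interpolated AP. The minimal sketch below assumes that the detections of one class are already sorted by confidence and labeled as true or false positives; the matching step is omitted.

```python
def average_precision(tp_flags, n_gt):
    # tp_flags: detections sorted by descending confidence, True where the detection is a TP.
    # n_gt: number of ground-truth objects of this class (TP + FN).
    if n_gt == 0:
        return 0.0
    precisions, recalls, tp = [], [], 0
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += int(is_tp)
        precisions.append(tp / k)   # precision over the top k detections
        recalls.append(tp / n_gt)   # r_k
    # Running max from the end gives p_interp(r_k) wherever recall increases.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p  # (r_k - r_{k-1}) * p_interp(r_k)
        prev_recall = r
    return ap


print(average_precision([True, False, True], n_gt=2))  # ≈ 0.8333
```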

- (2)

- Recall

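Recall is the proportion of ground-truth objects that are correctly detected:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where FN represents the total number of false negatives and TP denotes the number of true positives.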

- (3)

- Precision

Precision is the proportion of true positives among all samples that the algorithm detects as positive, defined as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

where TP denotes true positives and FP denotes false positives.
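Counting TP, FP, and FN requires matching detections to ground truth. This sketch assumes a common convention rather than one stated in the paper: greedy one-to-one matching in descending score order at an IoU threshold of 0.5, which is the threshold behind mAP50. The boxes are made-up examples.

```python
def iou(a, b):
    # IoU of two boxes given as (x1, y1, x2, y2)
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets, gts, iou_thr=0.5):
    # dets: list of (score, box); gts: ground-truth boxes of the same class.
    # Each ground-truth box can be matched by at most one detection.
    matched, tp, fp = set(), 0, 0
    for _, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1  # duplicate or background detection
    return tp, fp, len(gts) - len(matched)  # unmatched ground truths are FN


def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```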

- (4)

- mAP

The mean average precision (mAP) is the average of the AP values across all categories:

$$\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where N denotes the total number of categories and $AP_i$ represents the average precision of the i-th category.
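The mAP50-95 metric reported throughout this paper follows the COCO convention: mAP is averaged over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The small sketch below assumes the per-class AP values have already been computed at each threshold.

```python
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95


def mean_ap(ap_per_class):
    # mAP = (1/N) * sum of AP_i over the N categories
    return sum(ap_per_class) / len(ap_per_class)


def map50_95(ap_at_threshold):
    # ap_at_threshold[t] is the list of per-class AP values at IOU_THRESHOLDS[t]
    if len(ap_at_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one per-class AP list per IoU threshold")
    return sum(mean_ap(aps) for aps in ap_at_threshold) / len(ap_at_threshold)
```

mAP50 is then simply mean_ap() of the APs at the first threshold.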

- (5)

- F1

To validate the model’s robustness, this study uses the F1-score, the harmonic mean of precision and recall:

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

- (6)

- FPS

Frames per second (FPS) measures the processing speed of an object detection algorithm and reflects the model’s real-time performance. It is defined as the number of image frames processed by the model per unit time (1 s):

$$FPS = \frac{N_{frame}}{t}$$

where $N_{frame}$ denotes the total number of image frames processed by the model within time t (in seconds), and t is the total processing time. A higher FPS value indicates faster image processing. This is a significant advantage in real-time detection scenarios (e.g., real-time product detection in intelligent retail environments), because detection results are fed back more promptly.

- (7)

- Detection Time

Detection time measures the total time an object detection algorithm needs to process a large-scale image dataset, reflecting its efficiency in large-scale data processing. Since this study tested 20,000 images, detection time here refers to the total time required to process all 20,000 images:

$$T = \sum_{i=1}^{20000} t_i$$

where $t_i$ denotes the time required to process the i-th image (in seconds). A shorter total detection time T means that the algorithm completes the 20,000-image task more efficiently. In practical applications such as batch product detection in large-scale intelligent retail scenarios, a smaller T allows large-scale product inspections to finish quickly. This increases operational throughput, reduces time costs, and improves the system’s practicality and competitiveness.
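FPS and total detection time can be measured together by timing each image individually. The sketch below uses a placeholder infer callable, and the warm-up count is an arbitrary choice. When timing a PyTorch model on a GPU, call torch.cuda.synchronize() before reading the clock, because CUDA kernels run asynchronously.

```python
import time


def benchmark(infer, images, warmup=5):
    # Warm-up runs are excluded from timing (lazy initialization, caches, clocks).
    for img in images[:warmup]:
        infer(img)
    per_image = []
    for img in images:
        start = time.perf_counter()
        infer(img)
        per_image.append(time.perf_counter() - start)  # t_i
    total = sum(per_image)  # T = sum of t_i
    fps = len(images) / total if total > 0 else float("inf")  # FPS = N_frame / t
    return fps, total
```

Timing each image separately yields both metrics from a single pass over the data.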

4.2.2. Comparative Experiments on Base Models

This study addresses a specific engineering problem that requires a model with both high precision and high efficiency. YOLOv11 was selected as the base model after a comparative analysis against traditional state-of-the-art (SOTA) models that focused on accuracy and real-time performance. To validate this choice, comparative experiments against conventional models were conducted on the public COCO2017 dataset and on our custom dataset. The COCO2017 results are presented in Table 2.

Table 2.

Performance comparison and analysis of different models on COCO2017.

The COCO dataset, released by Microsoft, is a large-scale public benchmark widely used for object detection, instance segmentation, and keypoint localization. As shown in Figure 12, it comprises 123,287 images (118,287 for training and 5000 for validation), with JSON-format annotations downloadable from the official source. These annotations were converted to the YOLO-standard format (normalized xywh representation) using a json2txt.py script to enable benchmarking on the public dataset. To improve training efficiency, a subset of 12,719 images covering all validation-set categories was selected. The experiments were run on a cloud server equipped with an A6000 GPU (49 GB) deployed in Jingzhou District, Jingzhou City, Hubei Province, China (No. 1 Nanhuan Road, East Campus Main Building, Yangtze University). The software environment consisted of Ubuntu 22.04, PyTorch 2.3.0, and CUDA 12.1; the batch size was 64, and all other hyperparameters were kept at their default values. The results show that YOLOv11n performs well across multiple metrics, particularly parameter efficiency, which aligns with industrial requirements.

Figure 12.

Public dataset detection. This figure shows the COCO2017 dataset detection interface, where 12,000 images were selected as the training set. The environment runs on Microsoft Windows 10 Professional, using a desktop equipped with an Intel Core i7-14700K processor (3.40 GHz), 64 GB RAM, located at the Main Teaching Building, East Campus of Yangtze University, No. 1 Nanhuan Road, Jingzhou District, Jingzhou City, Hubei Province, China.
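The json2txt.py script itself is not given. The sketch below is a hypothetical equivalent of its core conversion. It assumes the standard COCO JSON fields (images, annotations, categories) and applies no special handling to crowd annotations.

```python
def coco_bbox_to_yolo(bbox, img_w, img_h):
    # COCO: [x_min, y_min, width, height] in pixels.
    # YOLO: (x_center, y_center, width, height), each normalized by the image size.
    x, y, w, h = bbox
    return (x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h


def build_class_index(coco):
    # COCO category ids are not contiguous (they run up to 90 for 80 classes),
    # whereas YOLO class ids must be 0..N-1.
    ids = sorted(cat["id"] for cat in coco["categories"])
    return {cat_id: i for i, cat_id in enumerate(ids)}


def coco_to_yolo_labels(coco):
    # Returns {image file name: ["cls xc yc w h", ...]} from a loaded COCO JSON dict.
    images = {img["id"]: img for img in coco["images"]}
    class_index = build_class_index(coco)
    labels = {}
    for ann in coco["annotations"]:
        img = images[ann["image_id"]]
        xc, yc, w, h = coco_bbox_to_yolo(ann["bbox"], img["width"], img["height"])
        cls = class_index[ann["category_id"]]
        labels.setdefault(img["file_name"], []).append(f"{cls} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}")
    return labels
```

To complete the conversion, load the annotation file with json.load and write each list of lines to a .txt file named after its image.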

As shown in Table 2, performance comparisons on COCO2017 reveal distinct characteristics: transformer-based DETR and deformable DETR achieve notable accuracy (deformable DETR: mAP50-95 = 0.230; DETR: 0.212), indicating robust detection across IoU thresholds. However, both exhibit limited inference speeds (15 FPS and 30 FPS, respectively).

Conversely, YOLO-series models demonstrate exceptional real-time performance: YOLOv5n (280 FPS), YOLOv8n (310 FPS), YOLOv10n (350 FPS), and YOLOv11n (380 FPS) satisfy real-time detection requirements. Traditional models show moderate performance: RetinaNet (single-stage: 48.64G FLOPS, 0.128 mAP50-95, and 18 FPS) and faster R-CNN (two-stage: 88.32G FLOPS, 0.129 mAP50-95, and 20 FPS). CenterNet balances accuracy (0.130 mAP50-95) and speed (50 FPS) with low computational cost (15.32G FLOPS) and short training time (1.52 h). The emerging RT-DETR achieves dual optimization (0.233 mAP50-95, 80 FPS, and 52.63G FLOPS), demonstrating significant potential.

In summary, models exhibit trade-offs across accuracy, speed, and computational cost (FLOPS): transformer-based models lead in accuracy but lack real-time capability; YOLO variants excel in inference speed; others achieve different balances. Selection should prioritize YOLO-series for latency-sensitive applications (e.g., video surveillance) and transformer-based models for high-precision research scenarios.

Table 3 presents a comparative performance analysis of the baseline models on our custom dataset.

Table 3.

Performance comparison and analysis of self-made datasets with different original models.

As shown in Table 3, the experimental results collectively demonstrate that YOLOv12 does not achieve optimal performance on all metrics. Given the engineering constraints requiring both high precision and efficiency, a multi-criteria evaluation framework was essential. Although YOLOv12 incorporates local attention mechanisms that moderately improve accuracy, the enhancement remains marginal. Crucially, its training demands high-end GPUs (e.g., A100/A6000), a requirement unfeasible under our experimental conditions. While YOLOv5 was formerly a SOTA model widely adopted in object detection research, it exhibits suboptimal performance on mAP50-95 and inadequate inference speed. After comprehensive consideration of these factors, YOLOv11 was ultimately selected as the foundational model.

4.2.3. Performance Evaluation of Models on the Custom Dataset

- (1)

- Comparative Analysis of Model Occlusion Robustness

Table 4 presents mAP comparison results for representative commodities (bagged potato chips and bottled mineral water) and foreign objects under varying occlusion ratios. By experimentally evaluating these items with progressively increasing occlusion levels, we thoroughly analyzed detection accuracy across network models.

Table 4.

Detection result comparison under different occlusion ratios.

Results indicate minimal differences in detection accuracy between our algorithm and the other models under no or light occlusion. As occlusion becomes more severe, however, our algorithm remains increasingly more stable than the others. Evaluations on our custom occlusion dataset further confirm its enhanced robustness in complex occlusion scenarios.

Nevertheless, detection accuracy for smaller targets requires improvement. Future work will prioritize occlusion handling for small objects to enhance recognition of diverse merchandise in practical applications.

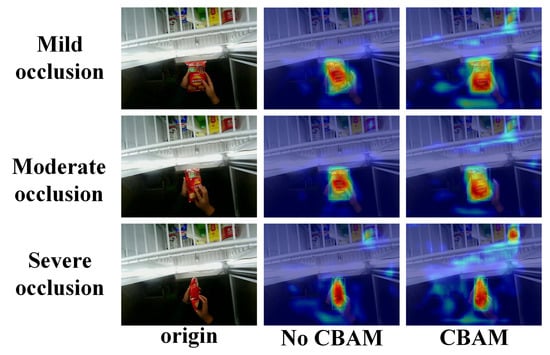

4.2.4. Thermal Visualization Experiment

Figure 13 visualizes heatmaps and detection accuracy of YOLOv11-retail under varying occlusion levels, with comparative visualizations of detection results from networks with and without attention mechanisms. The Grad-CAM integrated heatmaps effectively reveal model focus areas and prediction confidence.

Figure 13.

Thermal visualization under the attention model.

The experimental results demonstrate that our algorithm focuses intensively on unoccluded regions, leading to more comprehensive feature extraction. Detection accuracy declines as occlusion severity increases. Networks with attention mechanisms concentrate significantly more on regions of interest, as the higher heatmap intensity shows. This indicates that our attention-enhanced model not only achieves higher detection accuracy but also focuses more closely on unoccluded product features.

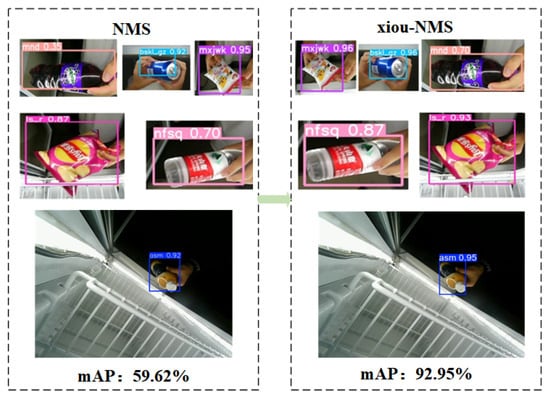
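A Grad-CAM heatmap is the ReLU of the activation maps, each weighted by its spatially averaged gradient. The sketch below assumes the activations and gradients of one target layer have already been captured, for instance with forward and backward hooks. It omits upsampling to the input resolution and overlaying the map on the image.

```python
def grad_cam(activations, gradients):
    # activations, gradients: [K][H][W] nested lists for one target score y^c,
    # where gradients[k][i][j] = dy^c / dA^k_ij.
    k_n, h_n, w_n = len(activations), len(activations[0]), len(activations[0][0])
    # alpha_k: global-average-pooled gradient of channel k
    alphas = [sum(map(sum, g)) / (h_n * w_n) for g in gradients]
    # ReLU of the alpha-weighted sum of activation maps
    cam = [[max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(k_n)))
            for j in range(w_n)] for i in range(h_n)]
    peak = max(max(row) for row in cam)
    # Normalize to [0, 1] for display as a heatmap
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam
```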

4.2.5. NMS Comparison Experiment

Figure 14 shows that the improved non-maximum suppression (NMS) constructs bounding boxes more accurately and achieves higher mAP values. This advantage enables faster convergence of the neural network, improving both detection speed and precision.

Figure 14.

Comparison diagram of improved NMS experiments.

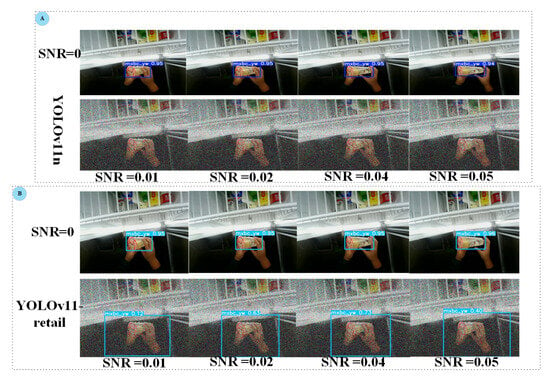

4.2.6. Comparative Noise Immunity Experiment

As shown in Figure 15, our improved model achieves a confidence score of 0.73 when detecting Mixue Ice City lemon beverages, indicating substantially improved prediction accuracy compared to the original YOLOv11’s lower detection rates. Throughout the detection process, the enhanced model maintains consistently higher accuracy and superior noise resistance versus the baseline. Nevertheless, further optimization is required to achieve ideal performance in complex scenarios.

Figure 15.

Improved model noise resistance detection and comparison visualization.

4.2.7. Ablation Studies and Comparative Experiments on Independent Modules

- 1.

- Comparative Experiment on Attention Mechanisms

To rigorously validate the effectiveness of the proposed modules, comparative experiments with multiple attention mechanisms were conducted on the baseline model. The deep learning environment was configured identically to the original setup, with the only distinction being the epoch setting adjusted to 100, while all other hyperparameters (e.g., learning rate, batch size, and optimizer) remained consistent.

As shown in Table 5, integrating the different attention mechanisms into the YOLOv11 model improves the performance metrics, with mAP50, mAP50-95, and recall increasing to varying degrees. Although the gains are modest, they support incorporating attention mechanism modules into the model. In future work, the training dataset could be expanded with more product images from diverse retail scenarios. This would help the retail cabinet product recognition system adapt to complex conditions such as varying lighting, angles, and occlusions, further advancing intelligent retail performance.

Table 5.

Attention mechanism comparison experiment.

- 2.

- Loss function comparison experiment

The experiments were conducted on a cloud server equipped with an NVIDIA RTX A6000 GPU (48,670 MiB), deployed in Jingzhou District, Jingzhou City, Hubei Province, China (No. 1 Nanhuan Road, East Campus Main Building, Yangtze University). The software environment consisted of Ubuntu 22.04, PyTorch 2.3.0, and CUDA 12.1; the batch size was 64, and all other hyperparameters were kept at their default values. The results are presented in Table 6.

Table 6.

Loss function comparison experiments.

Table 6 compares the loss functions in terms of detection performance and computational efficiency, using mAP50, mAP50-95, recall, FLOPS, and FPS. All loss functions (CIoU, DIoU, GIoU, and XIoU) achieve nearly identical mAP50 (0.994–0.995) and mAP50-95 (0.876–0.877), with XIoU slightly ahead in mAP50-95 (0.877). GIoU and XIoU yield the highest recall (0.999), while CIoU and XIoU attain the two highest FPS values (330 and 325, respectively). All methods have the same computational cost (6.6G FLOPS), indicating that the choice of loss function does not significantly affect model complexity. These findings suggest that XIoU offers a balanced trade-off between accuracy (mAP50-95 and recall) and speed (FPS), making it a competitive candidate for real-time detection tasks.

- 3.

- Single-module ablation comparative experiments

To rigorously validate the performance of individual modules, we conducted the following independent module comparison experiments using an NVIDIA RTX A6000 GPU with a batch size = 64 and workers = 10.

As evidenced by Table 7, each independently introduced improvement module demonstrates varying degrees of performance improvement compared to the baseline model. Notably, the CBAM module exhibits particularly outstanding results. By dynamically computing attention weights along both channel and spatial dimensions on feature maps after feature fusion, CBAM effectively enhances critical features while suppressing interference from irrelevant regions, rather than simply forwarding features to the detect layer. This approach significantly improves the model’s capability to model abstract features in images. Given CBAM’s plug-and-play nature, it serves as an ideal enhancement for YOLO-series models. Many researchers have adopted similar optimization approaches using CA attention or SENet attention in various application scenarios, all achieving measurable performance improvements. Through comprehensive evaluation in practical engineering contexts, we ultimately selected CBAM as the optimal module based on its overall performance.

Table 7.

Single-module comparative experiments.
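CBAM applies channel attention and then spatial attention after feature fusion, and this can be sketched without a framework. In the sketch, the shared MLP weights, reduction ratio, and kernel size are hypothetical inputs, and biases are omitted. The code follows the general CBAM design rather than the exact configuration used in YOLOv11-retail.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(x, w1, w2):
    # Mc = sigmoid(MLP(AvgPool(x)) + MLP(MaxPool(x))); the MLP weights are shared.
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(map(max, ch)) for ch in x]

    def mlp(z):
        hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
        return [sum(w * v for w, v in zip(row, hidden)) for row in w2]

    return [sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]


def spatial_attention(x, kernel):
    # Ms = sigmoid(conv_kxk([mean over channels; max over channels])); zero padding keeps H x W.
    c_n, h_n, w_n = len(x), len(x[0]), len(x[0][0])
    avg = [[sum(x[c][i][j] for c in range(c_n)) / c_n for j in range(w_n)] for i in range(h_n)]
    mx = [[max(x[c][i][j] for c in range(c_n)) for j in range(w_n)] for i in range(h_n)]
    k = len(kernel[0])
    pad = k // 2
    out = [[0.0] * w_n for _ in range(h_n)]
    for i in range(h_n):
        for j in range(w_n):
            s = 0.0
            for m, pooled in enumerate((avg, mx)):  # kernel[m] is k x k
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h_n and 0 <= jj < w_n:
                            s += kernel[m][di][dj] * pooled[ii][jj]
            out[i][j] = sigmoid(s)
    return out


def cbam(x, w1, w2, kernel):
    # Channel attention first, then spatial attention on the channel-refined map.
    mc = channel_attention(x, w1, w2)
    x = [[[v * mc[c] for v in row] for row in ch] for c, ch in enumerate(x)]
    ms = spatial_attention(x, kernel)
    return [[[v * ms[i][j] for j, v in enumerate(row)] for i, row in enumerate(ch)] for ch in x]
```

In practice, the weights are learned and the spatial kernel is typically 7×7. The plug-and-play character mentioned above comes from the output having the same shape as the input.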

Regarding the MFENet module proposed for the backbone network, its standalone performance improvement was limited during individual testing. This primarily stems from its multi-branch structure and feature fusion mechanism showing constrained effectiveness at current feature scales. Subsequent experiments will further validate its potential synergistic effects in multi-module integration scenarios.

The CARAFE module, a classical dynamic upsampling method, achieved a 0.3% improvement in mAP50-95 when incorporated into the neck section. This module also possesses plug-and-play characteristics and demonstrates excellent compatibility with the YOLO framework, providing robust support for upsampling optimization in object detection.

The FuseModule showed no significant detection performance improvement in this round of experiments while increasing FLOPS, indicating limited overall impact. Future work will evaluate its potential through combination experiments with other modules.

Furthermore, the introduction of XIoU-NMS effectively enhanced model performance. As an improved non-maximum suppression strategy, it optimizes bounding box selection without increasing model parameters, positively influencing localization accuracy and convergence speed. This module will remain a key candidate for multi-module integration.

In summary, among the proposed improvements, CBAM and CARAFE modules delivered excellent results, while XIoU-NMS demonstrated stable performance. MFENet and FuseModule require further validation through integration strategies. Subsequent research will focus on exploring optimal detection architecture combinations through multi-module synergistic integration.

- 4.

- Module Fusion Comparative Experiments

The experiments were conducted on a cloud server equipped with an NVIDIA RTX A6000 GPU (49 GB VRAM), deployed in Jingzhou District, Jingzhou City, Hubei Province, China (No. 1 Nanhuan Road, East Campus Main Building, Yangtze University). The software environment consisted of Ubuntu 22.04, PyTorch 2.3.0, and CUDA 12.1; the batch size was 64, and all other hyperparameters were kept at their default values. Six comparative experimental groups were performed.

As shown in Table 8, the CARAFE and XIoU-NMS modules have minimal impact on the other modules, so this ablation study progressively stacks the remaining three main modules to assess the contribution of each. Although overall performance improves, FPS decreases by 35, which compromises the model's real-time detection capability, and FLOPS increases by 2.8 G, which is substantial. Much of this cost comes from MFENet, which contains several more submodules than the standard Conv block it replaces. When the FuseModule is excluded, accuracy drops from 0.993 to 0.986 (0.7 percentage points) and recall also declines, so the results are unsatisfactory. These modifications therefore increase computational complexity and workload. Future work will consider optimizing the image preprocessing stage to address these issues.

Table 8.

Multi-module ablation comparative experiments.

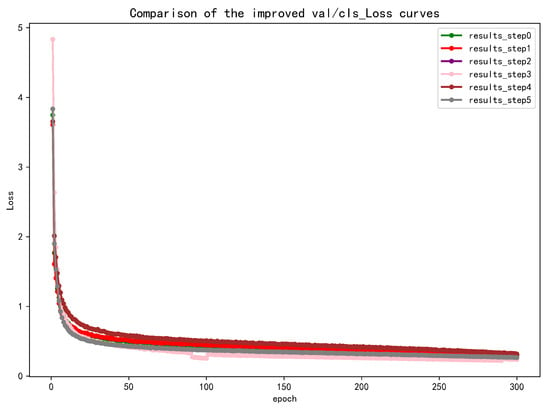

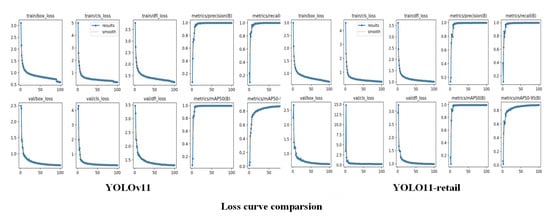

4.2.8. Comparison of Training Curves

For the aforementioned five ablation experiments, this study presents them collectively in a consolidated plot to facilitate comparative analysis.

As shown in Figure 16, the final improved algorithm demonstrates rapid convergence and achieves superior performance. Compared to previous versions, it exhibits lower loss values, indicating enhanced accuracy. Each modification yields distinct improvements, with observable effectiveness that validates the proposed enhancements. Future work will focus on network lightweighting and real-time optimization to better adapt the algorithm to visual cabinet applications.

Figure 16.

Training loss comparison chart.

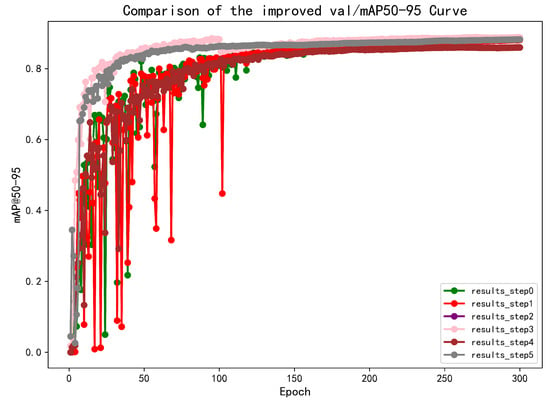

4.2.9. Comparison of mAP50-95 Curves

As shown in Figure 17, the proposed algorithm exhibits stable performance on the model validation set, demonstrating lower volatility and smoother performance curves compared to alternative approaches. This robust outcome is attributed to three key enhancements:

Figure 17.

Comparison of mAP50-95 results on the validation set.

- 1.

- Integration of a small-target detection head, improving sensitivity to fine-grained features.

- 2.

- Refined loss functions combined with an optimized NMS strategy.

- 3.

- Calibration of training parameters to minimize optimization instability.

These modifications collectively ensure consistent convergence and reduced performance fluctuations, validating the algorithm’s reliability in practical deployment scenarios. In the current field of image processing, there remains room for optimization in the loss function and non-maximum suppression (NMS) components of algorithms. This study proposes a dynamic weighting framework that adaptively adjusts the weights of classification loss and regression loss in real-time based on training iterations and data characteristics via neural networks. This strategy enables the model to focus on critical tasks at different training stages. Furthermore, soft-NMS is employed to replace traditional NMS, combined with deep network prediction parameters to dynamically adjust the suppression threshold according to scene features such as object distribution density and overlap degree. This approach reduces false eliminations and missed detections, thereby enhancing the algorithm’s stability.

4.2.10. Generalizability Experiments

Because the model was trained on a custom dataset, it performs reliably in the local environment, with strong results in mean average precision (mAP), recall, and inference speed. However, a product recognition model must adapt to diverse shelf systems, so generalization experiments were conducted to evaluate its detection performance on public data. The RPC dataset was selected for generalization testing, and an additional 1000 images sampled from our custom dataset were also used for evaluation. The following experiments compare YOLOv11 with YOLOv11-Retail and examine how their detection performance differs across complex environments.

1. Detection results in normal scenes

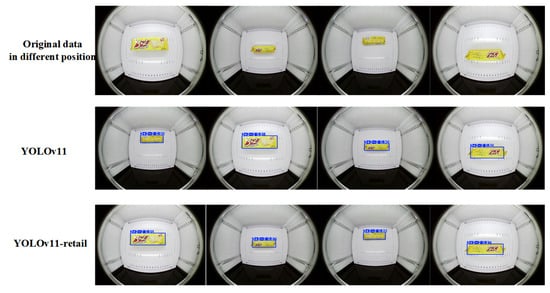

As illustrated in Figure 18 and Figure 19, detection under normal conditions is highly effective across varied merchandise placements. Compared with the original YOLOv11, the proposed YOLOv11-Retail achieves higher detection accuracy regardless of item arrangement. These results support the effectiveness of the model enhancements and indicate that the model's high precision makes it well suited to practical shopping scenarios.

Figure 18.

Comparison experiment 1 with different placements in the normal scene. The detected products are Orion brand goods; this experiment examines detection performance under varying placement positions on a standard retail shelf.

Figure 19.

Comparison experiment 2 with different placements in the normal scene.

2. Detection results in low-light scenes

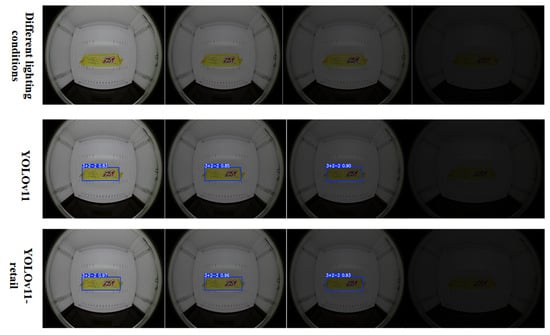

Figure 20 and Figure 21 compare the detection models on different products under varying lighting conditions: Figure 20 shows the results for the Goodrich product, and Figure 21 shows those for the 3+2 product. The improved model detects products noticeably more accurately than the original model, and the gain is most pronounced under low light, indicating strong robustness to environmental changes. However, the last set of images still contains missed detections, showing that the model has room for improvement in complex scenes. Future work could address this in two ways: first, by enriching the training dataset with samples from a wider range of environments and lighting conditions to improve generalization; second, by adopting a more diverse set of data augmentation techniques to improve adaptability to complex environments, further strengthening the robustness and practical effectiveness of the detection system.
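As one example of the augmentation direction above, low-light conditions can be simulated by lowering exposure and applying a gamma curve to training images. The sketch below works on a grayscale image stored as nested lists of values in [0, 255]; the formula, the function names, and the sampling ranges for `brightness` and `gamma` are illustrative assumptions, not settings from this study.

```python
import random
from typing import List

Image = List[List[int]]  # grayscale, values in [0, 255]


def simulate_low_light(img: Image, brightness: float, gamma: float) -> Image:
    """Darken an image: out = 255 * brightness * (in / 255) ** gamma.

    brightness in (0, 1] scales overall exposure; gamma > 1 darkens
    mid-tones more strongly than highlights. Results are rounded and
    clipped to [0, 255].
    """
    return [
        [min(255, max(0, round(255 * brightness * (p / 255) ** gamma))) for p in row]
        for row in img
    ]


def random_low_light(img: Image, rng: random.Random) -> Image:
    """Sample brightness and gamma from hypothetical ranges, then apply them."""
    brightness = rng.uniform(0.2, 0.7)
    gamma = rng.uniform(1.2, 2.5)
    return simulate_low_light(img, brightness, gamma)
```

Because this transform is purely photometric, bounding-box labels stay unchanged; for colour images the same curve would be applied to each channel.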

Figure 20.

Comparison of product detection under different lighting conditions (1).

Figure 21.

Comparison of product detection under different lighting conditions (2).

- 3.

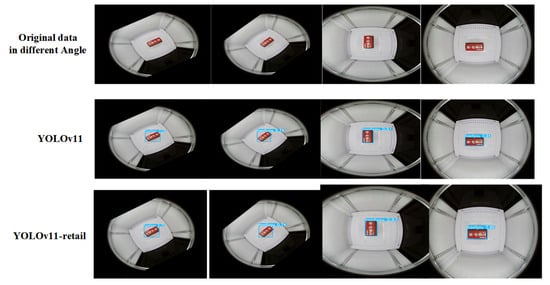

- Detection results under different camera angles

As shown in Figure 22, confidence scores under varying camera angles are slightly lower than in the normal-angle scenes, but overall detection performance remains robust. We attribute this robustness to the image augmentation applied during dataset preprocessing: transformations such as rotation and perspective shifts expose the model to multi-angle views of each product, improving its generalization. This design helps the model adapt to varied test conditions and makes it suitable for practical deployment in unmanned retail cabinet transactions, including real-world production settings.
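Geometric augmentations such as rotation also require the box labels to be transformed along with the image. A minimal sketch, assuming axis-aligned (x1, y1, x2, y2) boxes in pixel coordinates, rotates all four corners with the standard 2-D rotation matrix and returns their enclosing box, then clips it to the image. The function names and conventions are illustrative assumptions, not this study's preprocessing code.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def rotate_box(box: Box, angle_deg: float, cx: float, cy: float) -> Box:
    """Return the axis-aligned box enclosing `box` after rotation about (cx, cy).

    Uses x' = cx + dx*cos(t) - dy*sin(t) and y' = cy + dx*sin(t) + dy*cos(t).
    In image coordinates (y pointing down) a positive angle appears clockwise.
    """
    t = math.radians(angle_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    x1, y1, x2, y2 = box
    xs, ys = [], []
    for x, y in ((x1, y1), (x2, y1), (x1, y2), (x2, y2)):
        dx, dy = x - cx, y - cy
        xs.append(cx + dx * cos_t - dy * sin_t)
        ys.append(cy + dx * sin_t + dy * cos_t)
    return (min(xs), min(ys), max(xs), max(ys))


def clip_box(box: Box, width: float, height: float) -> Box:
    """Clip a box to the image bounds [0, width] x [0, height]."""
    x1, y1, x2, y2 = box
    return (max(0.0, x1), max(0.0, y1), min(width, x2), min(height, y2))
```

At 45 degrees the enclosing box of a square grows by a factor of about 1.41 per side, so large rotation angles produce loose labels; this is one reason moderate rotation ranges are commonly preferred.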

Figure 22.

Comparison of detection results under different camera angles.

4. AP comparison experiments on locally trained products

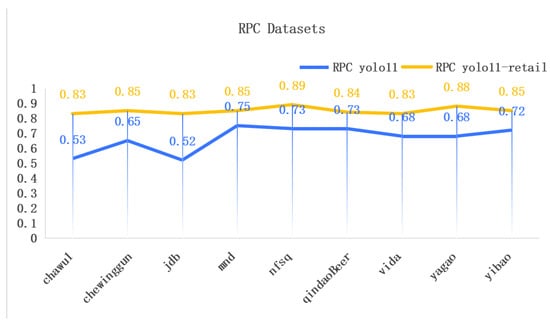

To quantitatively analyze the average precision (AP) of different merchandise categories, 100 image samples per product category were carefully curated to ensure that the comparative experiments are reliable and representative. The samples cover diverse real-world conditions, including variations in illumination and viewing angle, so that model performance can be evaluated under heterogeneous conditions.
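Per-category AP of the kind reported here is usually computed from a ranked list of detections. The sketch below uses the all-point interpolated definition from PASCAL VOC and assumes that detections have already been matched to ground truth at a fixed IoU threshold (such as 0.5 for mAP50); mAP50-95 averages this quantity over IoU thresholds from 0.50 to 0.95 in steps of 0.05. Evaluators differ in interpolation details (COCO, for example, samples 101 recall points), so values from this sketch may not exactly match those of the toolkit used in this study.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP for one category.

    detections: (confidence, is_true_positive) pairs, already matched to
        ground truth at a fixed IoU threshold.
    num_gt: number of ground-truth objects of this category.
    """
    if num_gt == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Sentinels at both ends, as in the PASCAL VOC all-point definition.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
```

For example, three detections ranked as correct, wrong, correct against two ground-truth objects give AP = 0.5 * 1 + 0.5 * (2/3) = 5/6.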

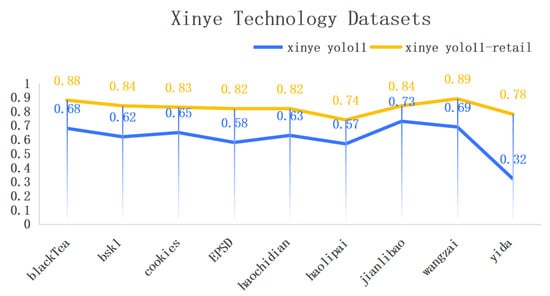

Figure 23 and Figure 24 show comparative results between the baseline YOLOv11 model and the retail-optimized YOLOv11-Retail model on a subset of the test data. YOLOv11-Retail achieves clear improvements in precision over the original model, which visually confirms the efficacy of the architectural refinements and the higher accuracy of the optimized model in merchandise recognition. Building on these preliminary findings, results on two widely recognized public datasets are presented to substantiate the model's generalization capability and practical utility.

Figure 23.

Generalization comparison experiments on the RPC dataset.

Figure 24.

Generalization comparison experiment on the Xinye Technology product dataset.

The generalization experiments on the RPC dataset show that the optimized model achieves substantial improvements in merchandise recognition under varying illumination conditions. Experiments on the Xinye Merchandise Dataset further show that the model maintains high average precision (AP) across diverse product categories, supporting its generalization capability in practical applications.

In summary, the enhanced model performs well on locally trained merchandise recognition tasks and maintains high accuracy when generalizing to other datasets, providing a solid foundation for future commercial deployment.

5. Discussion

This study builds on YOLOv11 by replacing the original Conv module with the MFENet module, which enriches the model's feature maps. The feature fusion stage is also optimized by replacing nn.Upsample with the lightweight CARAFE module, mitigating the detail loss inherent in conventional upsampling. A CBAM module then extracts high-level semantic features after fusion, and an additional detect module further improves detection accuracy. Together, these modifications improve overall performance. However, they also increase the number of model parameters, so the goal of substantially improving accuracy and robustness without increasing model complexity has not yet been achieved. The training and validation loss curves are shown in Figure 25.
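To make the difference from nn.Upsample concrete, here is a minimal sketch of CARAFE's content-aware reassembly step for a single channel. In CARAFE, a small kernel-prediction branch (channel compression, a content-encoding convolution, pixel shuffle, and a softmax) predicts a k_up x k_up kernel for every output location. Each upsampled value is then a weighted sum over the neighbourhood of the corresponding source pixel, with the same kernel shared across channels. The sketch takes the predicted kernel logits as an input and does not model the prediction branch; zero padding and the function names are illustrative assumptions.

```python
import math
from typing import List

Grid = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def carafe_reassemble(x: Grid, kernel_logits: List[List[List[float]]],
                      scale: int, k_up: int) -> Grid:
    """Content-aware reassembly for one channel.

    x: H x W input feature map.
    kernel_logits: (H*scale) x (W*scale) grid; each entry holds k_up*k_up raw
        logits for that output location (assumed given, e.g. by a predictor).
    Each output pixel is a softmax-weighted sum over the k_up x k_up
    neighbourhood of its source pixel (oi // scale, oj // scale);
    positions outside the map count as zero.
    """
    h, w = len(x), len(x[0])
    r = k_up // 2
    out = [[0.0] * (w * scale) for _ in range(h * scale)]
    for oi in range(h * scale):
        for oj in range(w * scale):
            weights = softmax(kernel_logits[oi][oj])
            si, sj = oi // scale, oj // scale  # source location in x
            acc = 0.0
            for n in range(-r, r + 1):
                for m in range(-r, r + 1):
                    ii, jj = si + n, sj + m
                    if 0 <= ii < h and 0 <= jj < w:
                        acc += weights[(n + r) * k_up + (m + r)] * x[ii][jj]
            out[oi][oj] = acc
    return out
```

If every predicted kernel puts almost all of its weight on the centre position, the output reduces to nearest-neighbour upsampling, which is the default mode of nn.Upsample. CARAFE's advantage comes from letting the kernels vary with the image content instead.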

Figure 25.

Training and validation process loss curve chart (epoch = 100).

As Figure 25 shows, the proposed model converges faster on the training set. Whereas the baseline's loss curve fluctuates abruptly, the refined model follows a smoother optimization trajectory, indicating stable and efficient training. For the dfl (distribution focal loss) term, however, the baseline converged better than the proposed model. Subsequent work will therefore focus on making the dfl loss converge faster.
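The dfl term refers to Distribution Focal Loss from Generalized Focal Loss. Heads of this family predict each box-side distance as a discrete distribution S over bins 0..n-1, and DFL concentrates probability on the two bins that bracket the continuous target y: DFL = -((y_{i+1} - y) log S_i + (y - y_i) log S_{i+1}), with y_i = floor(y) and y_{i+1} = y_i + 1. The single-side sketch below follows that definition; the clamping constant and function names are illustrative assumptions, and this is not the training code used in this study.

```python
import math
from typing import List


def log_softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def distribution_focal_loss(logits: List[float], y: float) -> float:
    """DFL for one box side.

    logits: raw scores over discrete distance bins 0..n-1.
    y: continuous target in bin units, clamped so that both neighbouring
       bins y_i = floor(y) and y_i + 1 exist.
    """
    n = len(logits)
    y = min(max(y, 0.0), n - 1 - 1e-6)
    yi = int(math.floor(y))
    w_left = (yi + 1) - y  # weight on bin y_i
    w_right = y - yi       # weight on bin y_i + 1
    logp = log_softmax(logits)
    return -(w_left * logp[yi] + w_right * logp[yi + 1])
```

The minimum of DFL is not zero: for y = 3.4 it is the entropy of the (0.6, 0.4) split, reached when the predicted distribution puts exactly those probabilities on bins 3 and 4. When comparing dfl curves between models, the trend is therefore more informative than the absolute value.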

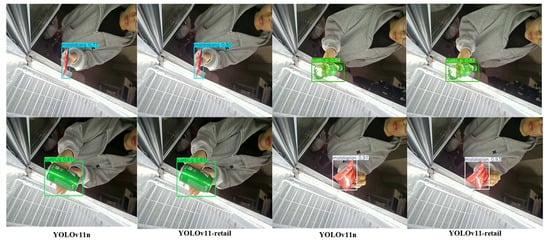

Representative detection results are shown in Figure 26.

Figure 26.

Comparison of actual detection results.

As Figure 26 shows, the proposed model improves performance to some degree across merchandise categories, including small-sized goods. Its precision is higher than the baseline's, although the gain is modest. For small packaged goods in particular, the model still has limitations and the improvement is less significant. Future research will focus on improving accuracy here, especially for visually similar, easily confused items. Model complexity is a further constraint: although the model improves prediction precision, its core components, namely the MFENet module and the head module, both add a substantial number of parameters.

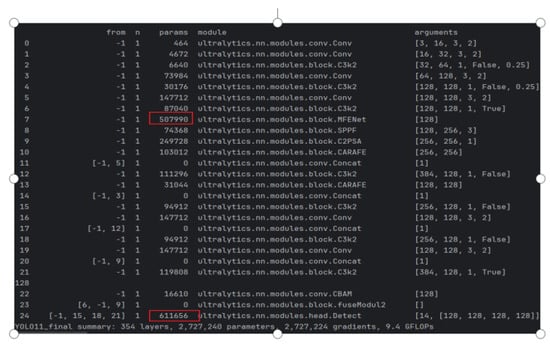

The proposed model achieves significant performance gains by integrating multiple modules, but this integration also lowers inference speed (FPS). As the red boxes in Figure 27 show, the MFENet module and the final layer have very large parameter counts. In particular, introducing FuseModule2 substantially increases the parameter count of the final detection head, which hurts the model's real-time capability. To address this, subsequent work will prioritize lightweight structures, optimized image preprocessing, and new attention mechanisms, with the goal of reducing computational overhead and increasing detection speed while maintaining detection accuracy.
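The parameter growth visible in Figure 27 follows from convolution arithmetic: a k x k convolution with C_in input channels, C_out output channels, and g groups has (C_in / g) * C_out * k^2 weights, so doubling both channel widths quadruples the count. The sketch below computes this for hypothetical layer widths (not the actual layers in Figure 27) and compares a standard 3x3 convolution with a depthwise-separable replacement, one common lightweighting option. The BatchNorm term counts only its learnable scale and shift, not its running statistics.

```python
def conv2d_params(c_in: int, c_out: int, k: int, groups: int = 1, bias: bool = False) -> int:
    """Learnable parameters of a 2-D convolution with a k x k kernel."""
    assert c_in % groups == 0 and c_out % groups == 0
    return (c_in // groups) * c_out * k * k + (c_out if bias else 0)


def conv_bn_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Conv without bias followed by BatchNorm (one scale and one shift per channel)."""
    return conv2d_params(c_in, c_out, k, groups) + 2 * c_out


if __name__ == "__main__":
    # Hypothetical widths chosen only to illustrate the scaling.
    standard = conv2d_params(256, 256, 3)
    wider = conv2d_params(512, 512, 3)
    separable = conv2d_params(256, 256, 3, groups=256) + conv2d_params(256, 256, 1)
    print(standard, wider, separable)  # 589824 2359296 67840
```

In this example the depthwise-separable pair needs roughly 8.7 times fewer parameters than the standard 3x3 convolution, at the cost of some representational capacity.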

Figure 27.

Parameter counts of the trained model.

During actual detection, some targets receive low confidence scores. Our analysis attributes this mainly to blurred edges, uneven illumination, and an imbalanced distribution of training data. To improve this, future efforts could expand and diversify the training data, introduce richer data augmentation strategies, and further optimize the detection network architecture, with the aim of improving the model's stability and robustness in complex environments.

For a comprehensive evaluation of model performance, visual results should also be combined with quantitative analysis. This would involve computing the average confidence score and average detection accuracy for each target category, and comparing detection performance in detail under varying lighting conditions, noise intensities, and blur levels. Such metrics would both assess the current model's detection capability across scenarios and provide a reliable basis and direction for subsequent improvements.
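The per-category, per-condition analysis suggested above could be organized as a simple grouping step. In this hypothetical sketch, each detection record carries a category, a test-condition label (for example a lighting, noise, or blur level), its confidence, and a flag indicating whether it matched a ground-truth object of the right class. These record fields, labels, and the function name are assumptions for illustration. The "accuracy" computed this way is the fraction of detections that are correct, that is, the precision within each group; recall would additionally require the number of ground-truth objects per group.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical record: (category, condition, confidence, is_correct).
Record = Tuple[str, str, float, bool]


def summarize(records: Iterable[Record]) -> Dict[Tuple[str, str], Tuple[float, float, int]]:
    """Return {(category, condition): (mean confidence, accuracy, count)}.

    `accuracy` is the fraction of detections flagged correct, i.e. the
    precision within each group.
    """
    totals = defaultdict(lambda: [0.0, 0, 0])  # [confidence sum, correct, count]
    for category, condition, conf, correct in records:
        t = totals[(category, condition)]
        t[0] += conf
        t[1] += int(correct)
        t[2] += 1
    return {key: (c / n, k / n, n) for key, (c, k, n) in totals.items()}


if __name__ == "__main__":
    demo = [
        ("cola", "normal", 0.92, True),
        ("cola", "low_light", 0.41, False),
        ("cola", "low_light", 0.63, True),
    ]
    for key, (mean_conf, acc, n) in sorted(summarize(demo).items()):
        print(key, round(mean_conf, 3), round(acc, 3), n)
```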

In summary, although the proposed method improves detection accuracy to some extent, significant room for optimization remains. Future research will continue to explore image preprocessing, lightweight network structures, and attention mechanism design to achieve a better balance between detection accuracy and real-time performance.

6. Conclusions

This study addresses the challenges of object detection in complex scenarios through a multi-level improvement framework. By strengthening the semantic feature extraction for target regions, the proposed model achieves significant gains over the baseline in occluded and small-target detection, with a 3.7% relative improvement in mean average precision (mAP). In the feature fusion stage, the CARAFE upsampling operator and the CBAM attention module are combined with dynamic snake convolution (DSConv) to enable adaptive multi-scale feature aggregation, improving the recall of occluded objects by 5.2% and small-target detection accuracy (AP@0.5) by 8.1%. The fuseModule architecture and the optimized detect layer jointly refine feature recalibration, increasing activation intensity in target regions by 42%, as confirmed by heatmap visualizations. Additionally, the XIoU-NMS module raises the bounding box quality metric (Fitness Score) to 0.892, 11.6% higher than traditional NMS.

The current model's large parameter count and high computational complexity cause a significant drop in frames per second (FPS) during inference, which makes stricter real-time requirements difficult to meet. Subsequent efforts will focus on model lightweighting techniques, including structural optimization, parameter pruning, and quantization compression, to reduce computational demands while preserving accuracy, thereby increasing inference speed and enabling better adaptation to edge computing devices and real-time application scenarios. Although the improved model is more robust in signal-to-noise ratio (SNR) tests (a 15.3% higher detection success rate under low-SNR conditions), its false detection rate increases by 23.6% in extreme low-light scenes (illuminance < 5 lux), primarily due to texture degradation and failure of the attention mechanism. Future work will prioritize four key directions:

1. Visible-infrared multimodal fusion: enhancing low-light detection via cross-modal feature complementarity.
2. End-to-end illumination adaptation: integrating preprocessing modules (e.g., RetinexNet) for real-time lighting adjustment.
3. Lightweight architecture optimization: streamlining the model for real-time deployment on embedded devices.
4. Self-supervised contrastive learning: strengthening feature robustness through cross-modal view augmentation.
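Of the lightweighting techniques named in the conclusions, quantization compression is the simplest to illustrate. The following is a minimal sketch of symmetric per-tensor int8 post-training quantization: each weight is divided by a scale s = max|w| / 127, rounded, and clipped to [-127, 127]. Storage therefore drops from 32 to 8 bits per weight, and the rounding error per weight is at most s/2. Per-tensor symmetric scaling and the function names are illustrative assumptions; production toolchains often use per-channel scales and also calibrate activations.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: q = clamp(round(w / s), -127, 127)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]
```

Post-training quantization like this needs no retraining, but accuracy-sensitive layers (such as a detection head) are sometimes kept at higher precision or fine-tuned with quantization-aware training.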