1. Introduction

Maize (Zea mays) is a globally significant cereal crop that occupies a pivotal position in both the food and industrial sectors [1]. As a principal source of carbohydrates, maize is a major component of the human diet, particularly in developing countries. Owing to its high starch content, it is central to the production of flour and other starch-based products, as well as sweeteners and biofuels [2]. Maize is also widely used as animal feed, thereby indirectly influencing global meat and dairy production. In industry, it serves as a raw material for bioethanol, bioplastics, and an assortment of chemical products, and it is also used in the pharmaceutical and cosmetic industries. This multifaceted utilization makes maize an indispensable crop in agricultural production and a vital element of global economic and food security [3].

The haploid doubling technique is an efficient method for the rapid generation of genetically pure lines in maize breeding. The process entails doubling the chromosomes of naturally occurring or induced haploid plants, thereby generating diploid progeny [4]. Haploid doubling accelerates the production of pure lines within breeding programs, reducing the time required for hybrid variety development and enhancing genetic uniformity. However, the technique requires a high level of expertise and sophisticated laboratory infrastructure. The viability rates of haploid plants are often insufficient, and the diploidization process can present difficulties, which may restrict the effectiveness of this approach to some extent. Despite these challenges, haploid doubling is regarded as a preferred approach in maize breeding due to its demonstrated potential to accelerate genetic gains [5].

Figure 1 illustrates the distinctive morphology of maize seeds, showing the notable differences between haploid (a) and diploid (b) seeds. The haploid seed (Figure 1a) is distinguished by a colorless embryo, accompanied by R1-nj expression in its endosperm. This is a consequence of haploid plants having only half the genetic material, which results in a smaller and weaker embryo. In contrast, the diploid seed (Figure 1b) displays R1-nj pigmentation in both the endosperm and the embryo, indicative of more robust and healthy embryo development in diploid plants [6]. While both types of seeds share fundamental structures such as the endosperm and seed coat, haploid seeds are generally smaller, less full, and display reduced germination rates. Diploid seeds, by contrast, are larger, fuller, and have higher viability rates, which makes them more frequently preferred in agricultural production.

The haploid doubling method is a critical tool in maize breeding because it facilitates the rapid development of genetically pure lines. New technologies and methods developed to enhance the effectiveness of this technique have resulted in significant advancements under both laboratory and field conditions. In particular, microspore culture techniques and doubled haploid technology facilitate the rapid acquisition of haploid plants and enhance overall efficiency. Furthermore, gene-editing tools such as CRISPR-Cas9 enable the direct and precise modification of desired genetic traits in haploid plants [7]. Further enhancements in haploid induction systems and the optimization of in vitro techniques have led to increased haploid doubling rates, thereby reinforcing the applicability and genetic gains of this method in maize breeding programs [8].

The haploid doubling process presents a number of significant challenges, including the low viability rates of haploid plants, the difficulty of diploidization, and the difficulty of identifying these plants phenotypically. Embryo and endosperm development in haploid plants is frequently inadequate, which has a detrimental impact on their growth potential and germination rates [9]. Furthermore, improper chromosome pairing during the diploidization process can result in genetic aberrations. To address these challenges, computer-assisted digital imaging systems are becoming increasingly important [10]. These systems facilitate the rapid and precise identification of phenotypic characteristics in haploid and diploid plants, thereby expediting the breeding process. In particular, the integration of image processing algorithms and artificial intelligence is of critical importance in enhancing genetic uniformity and efficiency through the automated analysis of plant morphology [11].

2. Related Studies

Ayaz et al. proposed a hybrid deep learning model known as DeepMaizeNet, which integrates Convolutional Block Attention Module (CBAM), hypercolumn, and residual blocks. This model demonstrated a 94.13% accuracy rate in the classification of haploid and diploid maize seeds, underscoring its potential applications in the domain of maize breeding [12]. Dönmez et al. developed an ensemble deep learning model combining five Convolutional Neural Network (CNN) architectures, achieving 90.96% accuracy in seed classification and demonstrating the effectiveness of ensemble learning in automating this process [13]. Rodrigues Ribeiro et al. utilized near-infrared spectroscopy (NIR) and PLS-DA, achieving 100% accuracy in classifying maize seeds and showcasing NIR’s reliability for identifying haploids in breeding programs [14]. Dönmez et al. combined EfficientNetV2B0 with ResMLP, achieving 96.33% accuracy while balancing performance and computational efficiency in maize seed classification [15]. Güneş and Dönmez implemented an interactive model using batch mode active learning and Support Vector Machine (SVM), reducing labeling costs by 66% and thereby minimizing the time and costs associated with seed classification [16]. He et al. employed near-infrared hyperspectral imaging (NIR-HSI) in conjunction with multivariate methods, attaining 90.31% accuracy and enhancing the robustness of automated seed classification [17]. Dönmez employed deep feature extraction with CNNs and Minimum Redundancy Maximum Relevance (MRMR) for feature selection, achieving 96.74% accuracy and emphasizing the effectiveness of deep feature selection strategies [18]. Ge et al. used nuclear magnetic resonance (NMR) spectra with multi-manifold learning, achieving 98.33% accuracy in haploid kernel recognition and demonstrating NMR’s utility in maize breeding [19]. Dönmez utilized AlexNet for deep feature extraction, achieving 89.5% accuracy in maize seed classification and highlighting deep learning’s value in breeding programs [20]. Altuntaş et al. used transfer learning with CNNs, where VGG-19 achieved 94.22% accuracy in seed classification, confirming CNNs’ effectiveness in automation [6]. Liao et al. combined hyperspectral imaging with transfer learning, achieving 96.32% accuracy in identifying haploid seeds and showing the potential of hyperspectral data in classification [21]. Altuntaş et al. concentrated on a texture-based classification approach that employed the Gray-Level Co-Occurrence Matrix (GLCM) and decision trees, attaining an accuracy of 84.48%. This finding indicates the efficacy of texture features in facilitating the classification of seeds for breeding purposes [22].

2.1. Motivation and Contributions

The objective of this study is to enhance the precision and efficiency of the classification of maize seeds, with a particular focus on distinguishing between haploid and diploid seeds. Accurate identification is crucial for accelerating genetic gains and optimizing hybrid variety development in maize breeding. Traditional methods, often manual or basic machine learning, are prone to inaccuracies and are inadequate for large datasets. While existing deep learning models are effective, they often lack the interpretability necessary for agricultural applications, where understanding model decisions is essential. This study makes several key contributions, which are outlined below:

- (1)

The introduction of a biologically focused benchmark dataset (Rovile): Although the Rovile Maize Seed Dataset is relatively small, it is one of the few publicly available datasets specifically designed to capture biologically meaningful regions such as the embryo and endosperm. These regions are critical for haploid–diploid differentiation, and their inclusion strengthens the relevance of the task. Through the use of Grad-CAM, we highlight these regions, offering interpretable evidence that our model focuses on anatomically significant structures.

- (2)

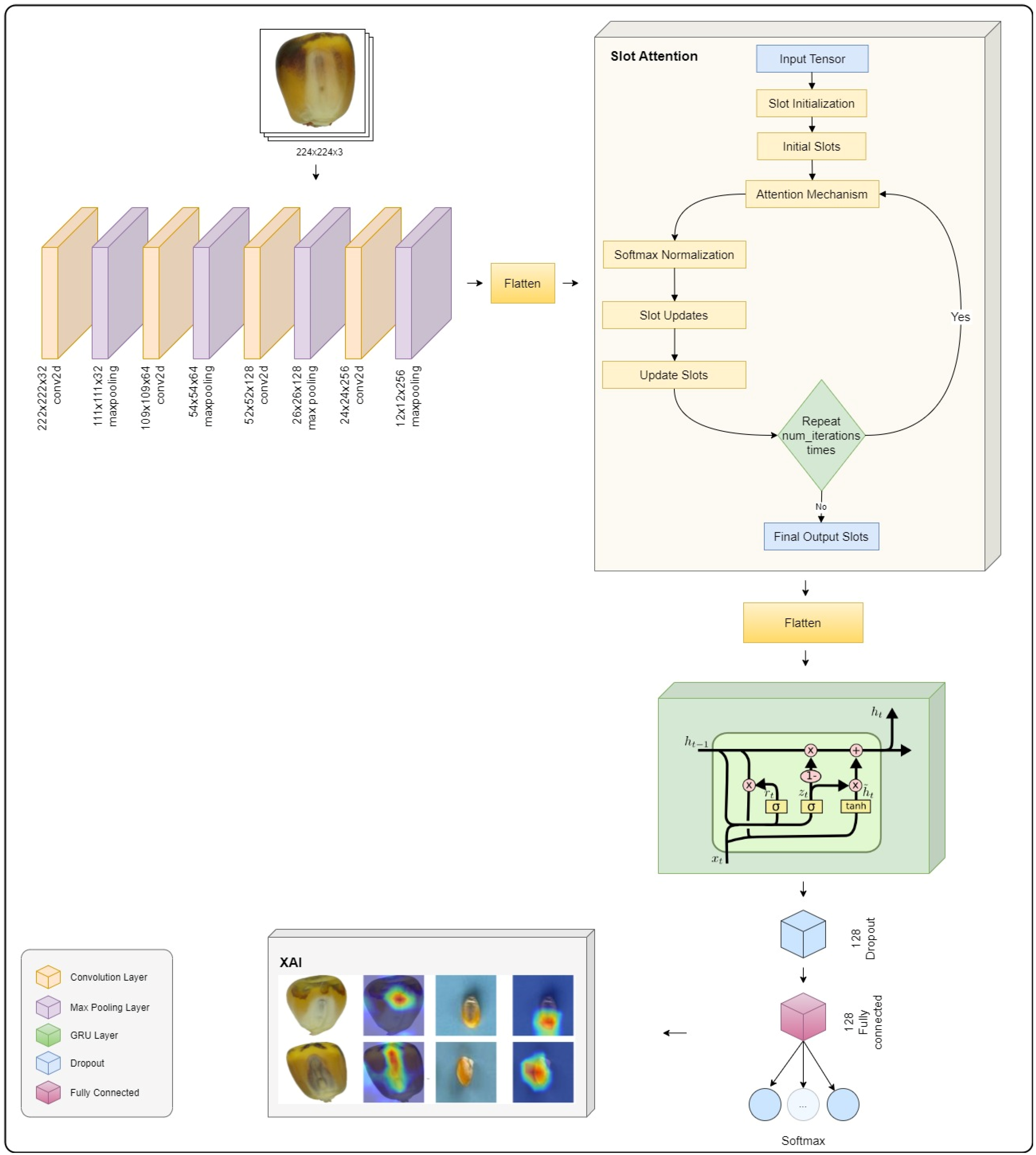

The development of an advanced deep learning model that integrates CNN, Slot Attention, Gated Recurrent Unit (GRU), and Long Short-Term Memory (LSTM) layers, specifically designed to enhance the accuracy and efficiency of maize seed classification.

- (3)

Comprehensive ablation study, systematically evaluating the contributions of each model component (Slot Attention, GRU, LSTM) to the overall performance, providing insights into the effectiveness of these layers.

- (4)

Validation on both small-scale and large-scale datasets: To evaluate its generalization capability and robustness, we tested our model not only on the Rovile dataset but also on the newly published Maize Variety Dataset (2024), which includes over 17,000 seed images from three different varieties. This dual-dataset evaluation validates the model’s scalability across simple and complex classification tasks.

- (5)

The integration of Grad-CAM as an explainable artificial intelligence (XAI) technique is imperative. This ensures transparency and interpretability in the model’s decision-making process, which is crucial for real-world agricultural applications.

2.2. Organization

The remainder of this study is structured as follows. Section 3 presents an overview of the datasets and methodologies utilized, together with a detailed account of the proposed model architecture, which incorporates CNN, Slot Attention, LSTM, and GRU layers. Section 4 details the experimental setup and discusses the findings. Section 5 provides a comprehensive analysis and evaluation of these findings. Section 6 presents the conclusions derived from these analyses.

4. Results

Four distinct models were developed through ablation experiments, progressively integrating Slot Attention, GRU, and LSTM to assess their impact on model performance. All models were trained with a batch size of 16 for up to 128 epochs. Data shuffling was enabled for training, but disabled for validation. The Adam optimizer was selected for weight updates, while categorical cross-entropy served as the loss function. Early stopping was implemented with a patience of three epochs, with the validation accuracy serving as the primary metric for monitoring. The optimal weights of the model were restored from the epoch that exhibited the minimum validation loss. The hyperparameters along with their corresponding values are shown in Table 3.
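The stopping rule described above can be sketched in plain Python. This is only an illustration of the logic as stated in the text (stop after three epochs without improvement in validation accuracy, then restore the epoch with the lowest validation loss); the function name `early_stopping` and the validation curves are hypothetical and are not taken from the study.

```python
def early_stopping(val_acc, val_loss, patience=3):
    """Return (stop_epoch, restore_epoch) as 0-based epoch indices.

    Training stops once validation accuracy has failed to improve for
    `patience` consecutive epochs. Weights are then restored from the
    epoch with the lowest validation loss observed up to that point.
    """
    best_acc = float("-inf")
    wait = 0
    stop_epoch = len(val_acc) - 1  # default: training ran to the end
    for epoch, acc in enumerate(val_acc):
        if acc > best_acc:
            best_acc, wait = acc, 0
        else:
            wait += 1
            if wait >= patience:
                stop_epoch = epoch
                break
    losses = val_loss[: stop_epoch + 1]
    restore_epoch = losses.index(min(losses))
    return stop_epoch, restore_epoch


# Hypothetical validation curves, for illustration only.
val_acc = [0.80, 0.85, 0.88, 0.87, 0.88, 0.86, 0.90]
val_loss = [0.60, 0.45, 0.40, 0.38, 0.41, 0.43, 0.30]
stop_epoch, restore_epoch = early_stopping(val_acc, val_loss)
print(stop_epoch, restore_epoch)
```

Because the monitored quantity (accuracy) and the restoration criterion (loss) differ, the restored epoch need not be the epoch with the best accuracy, which this sketch makes explicit.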

In the experimental study, the efficacy of four distinct models was assessed through the lens of an ablation study. These models were evaluated on the basis of their performance when enhanced with a series of progressively integrated components, including a baseline CNN, CNN augmented with Slot Attention, CNN coupled with Slot Attention and a GRU, and CNN integrated with Slot Attention and an LSTM unit. This progression allows for a comprehensive investigation of the impact of each additional component on model performance.

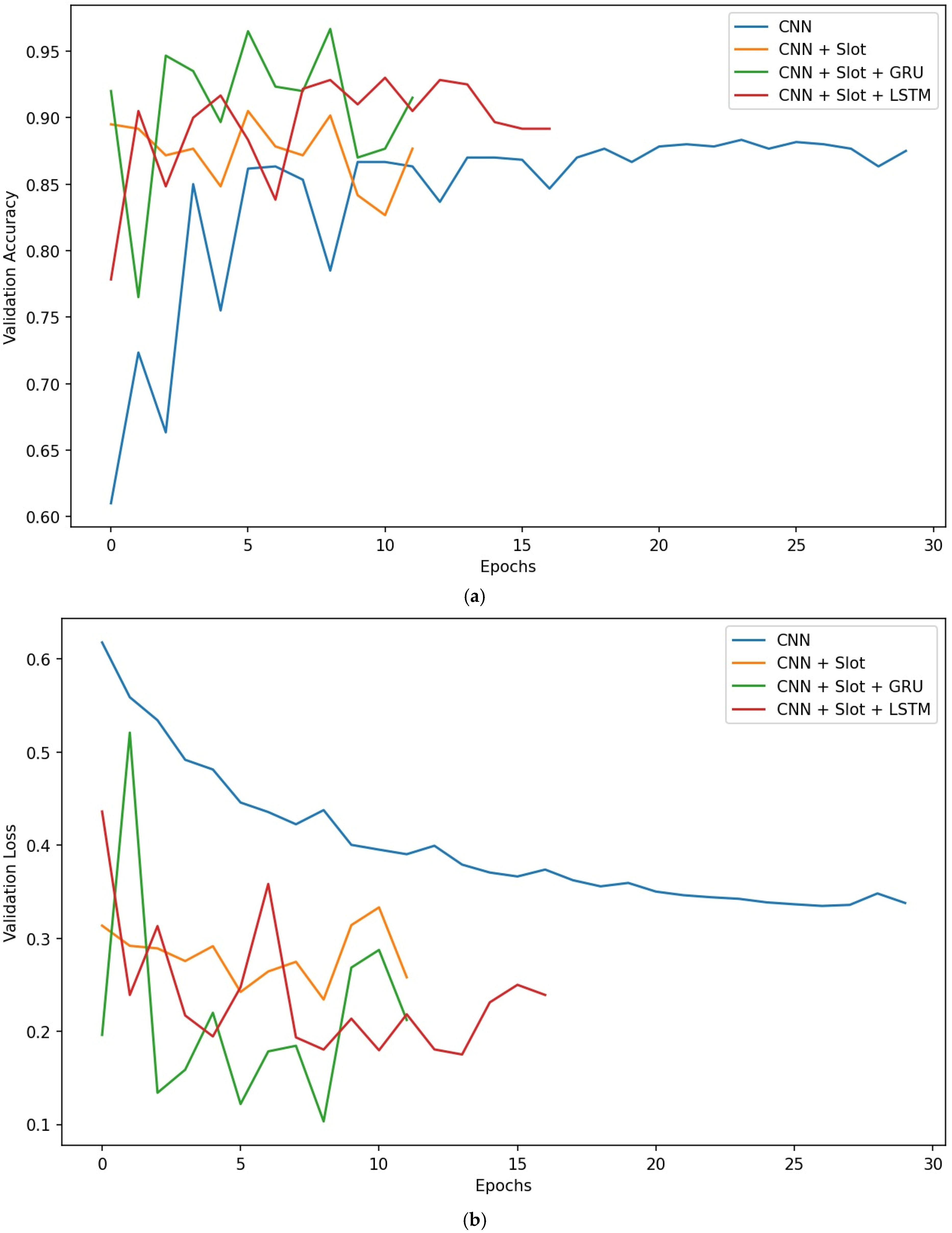

Figure 3 presents the validation accuracy and loss curves for four models. The CNN model exhibits the lowest and most fluctuating accuracy. Incorporating slot attention enhances accuracy, while GRU and LSTM further improve performance, with CNN + Slot + GRU achieving the highest and most stable accuracy. The loss curves mirror this trend, with the baseline CNN showing the highest and most erratic loss, whereas slot attention, combined with GRU and LSTM, progressively reduces loss, indicating improved learning efficiency and model robustness.

It is important to note that all models were trained under identical experimental conditions, including the same learning rate settings, batch size, optimizer (Adam), and early stopping criteria. The differences in the number of training epochs observed in Figure 3 arise solely from the early stopping mechanism, which was uniformly configured with a patience of three epochs and monitored on validation accuracy. This adaptive stopping strategy helps prevent overfitting and ensures efficient convergence. Therefore, the variations in epoch count do not reflect inconsistencies in the training protocol but instead indicate the individual convergence behaviors of each model. As such, the comparisons made between models remain methodologically fair and valid.

The confusion matrices, as shown in Figure 4, indicate progressive improvements in the classification accuracy across models. The baseline CNN shows the highest misclassification rates, particularly in distinguishing haploid seeds. Incorporating Slot Attention, GRU, and LSTM significantly reduces misclassifications, with the CNN + Slot + GRU and CNN + Slot + LSTM models achieving the most accurate and balanced classification outcomes.

Table 4 provides a summary of the performance metrics for the four models that were evaluated: CNN, CNN + Slot, CNN + Slot + GRU, and CNN + Slot + LSTM. The baseline CNN model demonstrates the lowest overall performance, with an accuracy of 0.88, sensitivity of 0.9209, specificity of 0.8211, and an F1 score of 0.9006. These results indicate a moderate ability to distinguish between classes. The incorporation of Slot Attention raises the model’s sensitivity to 0.9633 and its F1 score to 0.9204, although the specificity remains relatively unchanged. The CNN + Slot + GRU model exhibits the highest performance across all metrics, particularly in specificity (0.9434) and F1 score (0.9717), which demonstrates its superior ability to classify both positive and negative classes accurately. The CNN + Slot + LSTM model also exhibits an enhanced performance relative to the baseline, with a balanced accuracy of 0.9250, sensitivity of 0.9322, and specificity of 0.9146. These findings indicate that integrating Slot Attention with GRU yields the most substantial improvement in model performance.
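For reference, the four reported metrics follow from the counts of a binary confusion matrix, as the short sketch below shows. The counts used here are hypothetical and do not correspond to any entry of Table 4, and the text does not state which class (haploid or diploid) is treated as positive, so that choice is left open.

```python
def binary_metrics(tp, fn, fp, tn):
    """Accuracy, sensitivity, specificity, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    sensitivity = tp / (tp + fn)   # true positive rate (recall)
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }


# Hypothetical counts, for illustration only.
m = binary_metrics(tp=90, fn=10, fp=20, tn=80)
print(m)
```

Note that the F1 score ignores true negatives, which is why a model can gain in F1 while its specificity stays flat, as observed for the CNN + Slot variant.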

The Receiver Operating Characteristic (ROC) curves shown in Figure 5 demonstrate the incremental enhancement in model performance across the diverse CNN architectures. The baseline CNN model demonstrates a satisfactory, though somewhat lower, area under the curve (AUC = 0.95), while the incorporation of Slot Attention (AUC = 0.97) enhances the ability to distinguish between classes. The models enhanced with GRU and LSTM demonstrate near-perfect performance, with both achieving AUC values close to 1.0, indicating the near-flawless classification of haploid and diploid seeds. The results indicate that integrating Slot Attention with sequential layers, such as GRU and LSTM, markedly enhances the model’s capacity to differentiate between classes, thereby improving the accuracy and reliability of classification.
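The AUC can be read as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that definition; the labels and scores are hypothetical, and this pairwise form is chosen for clarity rather than being the procedure used in the study.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical labels and predicted scores, for illustration only.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
auc = roc_auc(labels, scores)
print(round(auc, 4))
```

In this toy example one positive (score 0.4) is ranked below one negative (score 0.5), so eight of the nine pairs are ordered correctly.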

To enhance the model’s robustness against variations commonly encountered in real-world agricultural imaging, additional data augmentation techniques were incorporated during training. The training set was enriched with random brightness adjustments (range: 0.9–1.1), slight zooming (±10%), and both horizontal and vertical flipping, simulating changes in lighting, scale, and orientation. The validation set remained unaugmented, apart from normalization, to ensure a fair and unbiased evaluation. A comparative assessment was performed using identical model architectures trained with and without augmentation. Notably, the CNN + Slot + GRU model achieved the highest specificity (0.9631) and an F1 Score of 0.9190 under augmentation, indicating superior generalization. While the baseline CNN model exhibited a slight drop in accuracy (from 0.8800 to 0.8700), its specificity improved (from 0.8211 to 0.8293), suggesting a reduced false-positive rate. The CNN + Slot + LSTM model also maintained strong performance, achieving 0.9200 accuracy and 0.9335 F1 Score with augmented data. However, data augmentation did not uniformly enhance all performance metrics; for example, the CNN + Slot model showed a decrease in sensitivity (from 0.9633 to 0.8955). These results suggest that while augmentation generally improves generalization and model robustness, its effectiveness is architecture-dependent. Models combining slot attention with sequential layers (GRU or LSTM) benefited the most, particularly in terms of specificity and balanced classification performance.
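A minimal, dependency-free sketch of the augmentation steps named above is given below. It operates on a grayscale image stored as nested lists with values in [0, 1]; the helper names, the nearest-neighbour zoom, and the 50% flip probability are assumptions made for illustration, since the text specifies only the brightness range, the zoom range, and the use of horizontal and vertical flips.

```python
import random


def adjust_brightness(img, factor):
    """Scale pixel intensities and clip the result to [0, 1]."""
    return [[min(1.0, max(0.0, v * factor)) for v in row] for row in img]


def flip_horizontal(img):
    return [row[::-1] for row in img]


def flip_vertical(img):
    return [row[:] for row in img[::-1]]


def zoom(img, factor):
    """Nearest-neighbour zoom about the image centre, keeping the size."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    out = []
    for y in range(h):
        sy = min(max(round(cy + (y - cy) / factor), 0), h - 1)
        row = []
        for x in range(w):
            sx = min(max(round(cx + (x - cx) / factor), 0), w - 1)
            row.append(img[sy][sx])
        out.append(row)
    return out


def augment(img, rng):
    """Apply brightness (0.9 to 1.1), zoom (0.9 to 1.1), and random flips."""
    out = adjust_brightness(img, rng.uniform(0.9, 1.1))
    out = zoom(out, rng.uniform(0.9, 1.1))
    if rng.random() < 0.5:  # assumed flip probability
        out = flip_horizontal(out)
    if rng.random() < 0.5:  # assumed flip probability
        out = flip_vertical(out)
    return out


# Hypothetical 2 x 2 image, for illustration only.
img = [[0.1, 0.2], [0.3, 0.95]]
augmented = augment(img, random.Random(0))
```

Only training images would pass through `augment`; validation images are left untouched apart from normalization, as described in the text.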

To evaluate the generalization capacity, dependability, resilience, and efficacy of the models across disparate data distributions, their performance was also examined on a larger and more comprehensive dataset. Table 5 summarizes the classification performance metrics of the CNN-based models on the Maize Variety Dataset. The CNN + Slot + GRU model outperforms the others, achieving the highest accuracy (0.9230), sensitivity (0.9220), specificity (0.9631), and F1 Score (0.9190), with an AUC of 0.99. These results align with the previous findings (Table 4), where the CNN + Slot + GRU model also showed superior performance. Notably, the addition of Slot Attention and recurrent layers enhances model robustness and reliability, particularly in handling complex data distributions, reaffirming the effectiveness of these components in improving model generalization and classification accuracy across different datasets.

A further investigation was conducted to evaluate the influence of data augmentation on model performance using the Maize Variety Dataset. Interestingly, while data augmentation improved generalization in the Rovile Maize Seed Dataset, its effects were not uniformly beneficial in the more complex and diverse Maize Variety Dataset. As shown in Table 5, the performance of the baseline CNN model declined markedly under augmentation, with accuracy dropping from 0.8205 to 0.7342 and the F1 Score decreasing from 0.8095 to 0.7078. A similar trend was observed for the CNN + Slot model, where the performance metrics remained stagnant or slightly deteriorated. This suggests that simplistic architectures may overfit to augmented features or struggle with augmented data variance in more heterogeneous datasets. On the other hand, deeper models with attention and recurrence mechanisms were less affected or even benefited. For example, the CNN + Slot + GRU model, though experiencing a decrease in specificity (from 0.9631 to 0.8902), maintained high accuracy (0.8983) and the highest F1 Score (0.9130) among all augmented models. Likewise, the CNN + Slot + LSTM model sustained a strong classification performance, achieving 0.8917 accuracy and 0.9075 F1 Score. These results indicate that while augmentation may introduce noise or complexity detrimental to simpler models, architectures incorporating both spatial (Slot Attention) and temporal (GRU/LSTM) mechanisms are better equipped to handle augmented variability, thus ensuring more reliable and balanced predictions.

Figure 6a illustrates that the CNN + Slot + GRU model attains the highest accuracy in both datasets: 0.9667 for Maize Seed and 0.9230 for Maize Variety. In comparison, the basic CNN model exhibits the lowest accuracy, particularly for the Maize Variety Dataset (0.8205), which underscores the efficacy of Slot Attention and GRU.

Figure 6b shows that the CNN + Slot + GRU model exhibits the highest sensitivity, with values of 0.9689 for Maize Seed and 0.9220 for Maize Variety. This suggests that the model is particularly capable of identifying true positives. In comparison, the basic CNN model displays the lowest sensitivity, particularly in the Maize Variety Dataset, which highlights its inherent limitations. As illustrated in Figure 6c, the CNN + Slot + GRU model exhibits remarkable specificity, with values of 0.9434 for Maize Seed and 0.9631 for Maize Variety, indicating effective identification of true negatives. In comparison, the basic CNN model demonstrates comparatively lower specificity in the Maize Seed Dataset, suggesting a propensity to generate a greater number of false positives.

Figure 6d illustrates that the CNN + Slot + GRU model exhibits superior performance, with F1 scores of 0.9717 for Maize Seed and 0.9190 for Maize Variety. This reflects a balanced performance across both datasets.

Figure 7 illustrates a comparison between the original images of maize seeds and their respective Grad-CAM heatmaps, which identify the sections of each image that exert the most influence on the model’s classification decisions. The original images are displayed on the left, with their corresponding heatmaps overlaid on the right. The Grad-CAM technique effectively visualizes the regions of interest that the model prioritizes when determining the class of each maize seed.

Notably, the highlighted regions frequently coincide with biologically meaningful morphological features. In diploid seeds, the model consistently focuses on the embryo and endosperm regions, where R1-nj pigmentation is distinctly expressed. In contrast, for haploid seeds, the attention maps emphasize the central embryonic zone, where the absence of pigmentation and a smaller embryo size are characteristic. This suggests that the model attends to phenotypically relevant traits, such as the presence or absence of anthocyanin coloration and the development of the embryo, which are known indicators of ploidy status in maize. Such biological grounding validates the interpretability of the model and affirms that its decisions are guided by agriculturally and genetically informative visual cues. This correspondence strengthens confidence in the model’s reliability for real-world maize breeding applications, especially in doubled haploid technology.
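The core Grad-CAM computation behind such heatmaps can be sketched as follows: each feature-map channel is weighted by the spatial mean of the class-score gradient with respect to that channel, the weighted maps are summed, and a ReLU keeps only positively contributing regions. The sketch takes the feature maps and gradients as given nested lists; the tiny 2 x 2 inputs are hypothetical, and the layer from which the study extracted its activations is not stated in this section.

```python
def grad_cam(feature_maps, gradients):
    """Compute a normalized Grad-CAM map.

    feature_maps, gradients: lists of K channels, each an H x W nested list.
    gradients[k][i][j] is d(class score) / d(feature_maps[k][i][j]).
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel weight = global average of the gradient over spatial positions.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for a, wk in zip(feature_maps, weights):
        for i in range(h):
            for j in range(w):
                cam[i][j] += wk * a[i][j]
    # ReLU: keep only regions that increase the class score.
    cam = [[max(0.0, v) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Hypothetical activations and gradients for two channels.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],  # channel 0 fires in the top-left corner
    [[0.0, 0.0], [0.0, 1.0]],  # channel 1 fires in the bottom-right corner
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],      # channel 0 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 opposes the class
]
cam = grad_cam(feature_maps, gradients)
print(cam)
```

In practice the resulting map is upsampled to the input resolution and overlaid on the seed image, which is how overlays such as those in Figure 7 are typically produced.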

4.1. Ablation Study of Model Components

To further validate the effectiveness of the Slot Attention mechanism used in the proposed model, we conducted comparative experiments with three alternative attention methods: Squeeze-and-Excitation (SE), CBAM, and Transformer-based attention. As summarized in Table 6, these models were evaluated on both the Maize Variety and Rovile Datasets using key performance metrics. The results clearly demonstrate that the proposed CNN + Slot + GRU model significantly outperforms the alternatives across all metrics. On the Variety Dataset, the Slot Attention-based model achieved an accuracy of 92.30%, while SE, CBAM, and Transformer-based models lagged behind with accuracies below 76%. Similarly, on the Rovile Dataset, although SE, CBAM, and Transformer variants exhibited competitive performance, none surpassed the proposed model in terms of its F1 score or general balance between sensitivity and specificity. These findings highlight the superior ability of Slot Attention to capture object-centric and spatially distinct features within seed images, an advantage that becomes especially evident when dealing with large-scale and visually heterogeneous datasets. Moreover, the integration of GRU further enhances the model’s temporal understanding and robustness. This comparative analysis not only justifies the selection of Slot Attention in this study but also emphasizes its role in achieving higher generalization and classification accuracies compared to other widely adopted attention schemes.

4.2. Benchmarking Against Modern Deep Learning Models

To position the Slot-Maize model fairly among current state-of-the-art architectures, we additionally evaluated InceptionV3, Vision Transformer, and ConvNeXt under the same training conditions. As shown in Table 7, although all three architectures demonstrated competitive performance, the proposed model consistently outperformed them across both datasets. Notably, ConvNeXt achieved strong F1 scores (0.9181 and 0.9629), yet Slot-Maize retained the highest accuracy and balanced metrics, confirming its robustness and generalizability.

The integration of additional state-of-the-art models—InceptionV3, Vision Transformer, and ConvNeXt—provided further validation of our model’s superiority. While ConvNeXt and Vision Transformer showed high sensitivity and accuracy, especially on the Rovile Dataset, the proposed Slot-Maize model outperformed all in its overall F1 Score and maintained consistent specificity. This suggests that the Slot Attention mechanism combined with recurrent layers not only preserves critical spatial features but also enhances sequential contextualization, which traditional feed-forward models may lack.

4.3. Ensemble Learning with Multiple Slot-Maize Variants

To enhance robustness under uncertain conditions, we constructed five Slot-Maize variants with distinct hyperparameter settings, as detailed in Table 8. Figure 8 compares their final epoch accuracy and loss. By integrating their predictions through ensemble methods, such as averaging or voting, we aim to reduce variance and improve the classification stability in challenging agricultural imaging scenarios.

Figure 8 illustrates significant performance differences among the Slot-Maize variants. Slot-Maize C achieved the highest accuracy and the lowest loss, indicating strong convergence and generalization. Slot-Maize E also performed well, suggesting that a lower learning rate may be advantageous. In contrast, Slot-Maize A and B exhibited moderate accuracy but relatively high loss, which suggests issues with overfitting or unstable learning. These findings emphasize the importance of diverse hyperparameters and support the ensemble approach to harness the complementary strengths of the various model variants.
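The two combination rules mentioned for the ensemble, averaging and voting, can be sketched as follows. The per-model probabilities are hypothetical stand-ins for the outputs of the five Slot-Maize variants on a single seed image, and the class order (index 0 for haploid, index 1 for diploid) is an assumption made for the example.

```python
from collections import Counter


def soft_vote(prob_lists):
    """Average class probabilities across models, then take the argmax."""
    n_models = len(prob_lists)
    n_classes = len(prob_lists[0])
    mean = [sum(p[c] for p in prob_lists) / n_models for c in range(n_classes)]
    return mean.index(max(mean)), mean


def hard_vote(prob_lists):
    """Each model casts one vote for its most probable class."""
    votes = [p.index(max(p)) for p in prob_lists]
    return Counter(votes).most_common(1)[0][0]


# Hypothetical outputs of variants A to E for one image: [P(haploid), P(diploid)].
probs = [
    [0.55, 0.45],
    [0.60, 0.40],
    [0.10, 0.90],
    [0.52, 0.48],
    [0.20, 0.80],
]
soft_label, mean_probs = soft_vote(probs)
hard_label = hard_vote(probs)
print(soft_label, hard_label)
```

The example is chosen so that the two rules disagree: three weakly confident variants win the majority vote, whereas averaging lets the two confident variants dominate. Which rule suits a given deployment depends on how well calibrated the individual variants are.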

5. Discussion

This section presents a comprehensive analysis of the factors influencing model performance, including dataset characteristics, model complexity, generalization, and robustness. Additionally, it underscores the advantages of employing innovative techniques and XAI, particularly in the context of the related studies summarized in Table 9. This analysis aims to provide deeper insights into how these elements contribute to the effectiveness of different models in maize seed classification, ultimately guiding future research and practical applications in agricultural technology.

The characteristics of the datasets used in these models have a significant impact on their performance. Spectral data, as in [14], often yield high accuracy (100%) due to the rich, detailed information captured at different wavelengths, which enhances feature differentiation. Grayscale images, as used in [22,35], generally result in lower accuracy (91.23%) because they lack color information, which limits feature extraction capabilities. Color images, as seen in most other studies, provide a balance, with models achieving high accuracy due to the additional RGB channels that increase feature richness. In addition, the number of images affects model performance: larger datasets, such as the Maize Variety Dataset, provide more training data, which improves generalization but also requires more computing power.

The trade-off between model complexity and performance is evident when comparing hybrid deep learning models to traditional machine learning techniques [22,36]. Hybrid models, such as those combining CNNs with Slot Attention and GRU/LSTM, often achieve higher accuracy due to their ability to capture complex patterns and interactions within the data. However, this increased accuracy comes at the cost of significantly higher computational requirements, making these models more resource-intensive and slower to train. In contrast, simpler methods such as GLCM with decision trees offer faster processing and lower computational requirements, but may struggle with the intricacies of complex datasets, resulting in lower accuracy. This trade-off requires careful consideration of the specific application and available resources when choosing a model.

The generalization and the robustness of the models are crucial for the evaluation of their applicability to different types of datasets. The proposed approach, which integrates CNN, slot attention, and GRU/LSTM, demonstrates strong generalization capabilities, as evidenced by its high performance on both the maize seed dataset (96.97% accuracy) and the maize variety dataset (92.30% accuracy). This consistency across datasets suggests that the model effectively captures the underlying patterns, allowing it to handle different data distributions. In contrast, models that perform well on a single dataset but poorly on others may lack robustness, limiting their broader applicability. The ability of our model to maintain high accuracy across different datasets highlights its potential for a reliable performance in real-world agricultural applications, where data variability is common.

Our evaluation of the model’s performance under occlusion and sensor noise conditions highlights its robustness. In occlusion sensitivity experiments, we systematically masked different regions of maize seed images to analyze the impact on classification accuracy. The results revealed that model predictions were most affected by occlusions in the embryo and endosperm regions, which were also identified as critical by Grad-CAM visualizations. This finding suggests that the model’s decision-making process is guided by its focus on biologically significant features, demonstrating its understanding of the agricultural context. Furthermore, we simulated sensor noise by applying Gaussian noise and motion blur to the input images. Despite a slight decline in classification performance under severe noise conditions, the CNN + Slot + GRU model maintained strong resilience, with F1 score reductions remaining below 5%. These outcomes underscore the model’s robustness and potential effectiveness in practical agricultural applications, even in imperfect imaging conditions.
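The occlusion sensitivity procedure described above can be sketched as a sliding mask: each patch of the image is blanked in turn and the resulting drop in the model's class score is recorded. The `score_fn` used here is a hypothetical stand-in for the trained classifier (it simply averages a central region), and the patch size and fill value are assumptions, since the text does not report them.

```python
def occlusion_map(img, score_fn, patch=2, fill=0.0):
    """Return a grid of score drops, one entry per occluded patch."""
    h, w = len(img), len(img[0])
    base = score_fn(img)
    drops = []
    for top in range(0, h, patch):
        row = []
        for left in range(0, w, patch):
            masked = [r[:] for r in img]  # copy so the input stays intact
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    masked[y][x] = fill
            row.append(base - score_fn(masked))
        drops.append(row)
    return drops


def center_score(img):
    """Hypothetical classifier score: mean intensity of the central 2 x 2 block."""
    return sum(img[y][x] for y in (2, 3) for x in (2, 3)) / 4


# Hypothetical 6 x 6 image with uniform intensity.
img = [[1.0] * 6 for _ in range(6)]
drops = occlusion_map(img, center_score)
print(drops)
```

With a real model, large drops concentrated over the embryo and endosperm would correspond to the behaviour reported above, and the grid can be compared directly against the Grad-CAM heatmaps.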

As an explainable AI technique, Grad-CAM provides critical insights into the decision-making process of deep learning models, a feature missing from the studies reported in Table 9. By highlighting the specific regions of maize seed images that influence classification decisions, Grad-CAM increases transparency and confidence in the model’s predictions. This is particularly beneficial in agricultural applications, where understanding why a model makes certain decisions is critical to ensuring accuracy and reliability. The use of XAI techniques such as Grad-CAM not only improves model interpretability, but also facilitates more informed decision-making in practical settings, distinguishing our study from previous work.

The proposed model introduces the innovative integration of Slot Attention and GRU, a combination not explored in previous studies, which significantly improves the classification performance. Slot Attention improves the model’s ability to focus on relevant features within the maize seed images, enabling the more accurate identification of subtle differences between haploid and diploid seeds. The GRU further refines this process by efficiently capturing dependencies in the data, especially in sequential contexts. This synergistic integration leads to superior generalization and robustness across diverse datasets, setting our model apart from conventional approaches and demonstrating its potential for more precise agricultural applications.

The proposed model, which integrates CNN, Slot Attention, GRU, and LSTM, shows superior performance compared to the other models listed in Table 9. It achieves an accuracy of 96.97% on the maize seed dataset, outperforming models such as DeepMaizeNet (94.13%) [12] and the majority voting CNN ensemble (90.96%) [13]. The improved performance can be attributed to the enhanced feature extraction capabilities of Slot Attention and the efficient handling of sequential data by GRU and LSTM, which together allow the model to capture complex patterns in the data. However, it is important to note that performance metrics alone do not provide a complete picture. Differences in dataset characteristics and validation techniques can have a significant impact on these metrics. Without a thorough evaluation of the datasets and methodologies used, comparisons based solely on performance metrics can be misleading and may overestimate the effectiveness of a model in real-world applications.

6. Conclusions

The objective of this study was to enhance the precision and efficiency of maize seed classification, particularly in distinguishing between haploid and diploid seeds, through the development of the Slot-Maize deep learning model. The proposed model demonstrated superior accuracy and robustness, significantly outperforming existing methods and showcasing strong generalization across diverse datasets. The integration of Slot Attention, GRU, and LSTM layers within the Slot-Maize model effectively captured complex patterns within the seed images, leading to more accurate classifications. The Slot-Maize model demonstrated a classification accuracy of 96.97% on the Maize Seed Dataset and 92.30% on the Maize Variety Dataset. It showed better performance than existing methods, with improved sensitivity, specificity, and F1 scores across both datasets. This highlights the efficacy of the Slot-Maize model in accurately distinguishing between haploid and diploid seeds. The results of this study indicate that Slot-Maize has the potential to markedly enhance the efficacy of maize breeding programs, facilitating accelerated genetic advancement and optimized hybrid variety development. However, the investigation also uncovered certain constraints, particularly in the model’s performance when applied to smaller, less diverse datasets, underscoring the necessity for further optimization. This study utilized Grad-CAM as an explainable AI technique, offering visual insights into the model’s decision-making process. By highlighting the key regions in maize seed images that influenced classification, Grad-CAM enhanced the transparency and interpretability of the Slot-Maize model, making its predictions more understandable and reliable for agricultural applications.

Additional research could explore the integration of more explainable artificial intelligence (XAI) techniques to improve model transparency. It could also examine the application of Slot-Maize to different crop types, thereby expanding its usefulness in agricultural technology. Overall, Slot-Maize is a significant advancement in automated seed classification, providing a reliable and interpretable tool that enhances the accuracy and efficiency of agricultural practices.