Data-Driven Predictive Modelling of Agile Projects Using Explainable Artificial Intelligence

Abstract

1. Introduction

- Establishes and verifies a full set of 22 agile project success indicators through a structured Delphi-based expert consensus process.

- Creates a highly accurate predictive model of agile project status using an artificial neural network (ANN) and optimizes it with Neural Architecture Search (NAS).

- Utilizes explainable AI (the SHAP algorithm) to clearly explain the contribution of each feature to the predicted project outcome.

- Implements Apriori pattern analysis to extract practical managerial insights from project feature combinations.

2. Literature Review

2.1. Success: From General Definitions to Agile Mindset

2.2. Agile Methodologies and Their Implications for Success

2.3. Related Works

2.4. Research Gaps

3. Methodology

3.1. Model Setup and Data Preprocessing

3.2. Feature Extraction and Justification

- Phase 1: Review of the Literature for Initial Identification

- Phase 2: Expert Consultation with the Delphi Method

- Step 1 (Evaluation of Features): experts were presented with the original 40 features and asked to rate the relevance and importance of each on a five-point Likert scale (1 = not relevant, 5 = highly relevant).

- Step 2 (Refinement of Features): features with a consistently low rating (mean score below 3) were removed, and qualitative feedback was collected from the experts on which features to retain or remove.

- Step 3 (Consensus Building): the remaining features were rated and ranked a final time, producing a streamlined, consensus-based set of agile project success features.

- Phase 3: Final Validation and Confirmation of Selected Features
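
The refinement rule in Step 2 can be sketched in a few lines. This is a minimal illustration, assuming each feature has a list of expert ratings; the feature names and scores below are hypothetical and are not the study's data.

```python
from statistics import mean

# Hypothetical Likert ratings (1 = not relevant, 5 = highly relevant).
# Feature names and scores are illustrative only.
ratings = {
    "Velocity": [5, 4, 5, 4],
    "Team Morale": [4, 4, 5, 3],
    "Office Location": [2, 1, 3, 2],
}

RELEVANCE_CUTOFF = 3.0  # features averaging below this value are removed


def refine_features(ratings, cutoff=RELEVANCE_CUTOFF):
    """Return the retained features ranked by mean rating, highest first."""
    means = {name: mean(scores) for name, scores in ratings.items()}
    kept = {name: m for name, m in means.items() if m >= cutoff}
    return sorted(kept.items(), key=lambda item: item[1], reverse=True)


ranked = refine_features(ratings)
for name, score in ranked:
    print(f"{name}: {score:.2f}")
```

In a full Delphi round the ranked list would be returned to the experts for the consensus step rather than accepted automatically.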

3.3. Neural Architecture Search (NAS) Procedure

3.4. Integration of SHAP for Explainability

- Step 1 (Computation of SHAP Values): after NAS optimization produced the best-performing ANN model, SHAP values were computed for each input feature across the entire dataset using the SHAP package in Python v3.12.5.

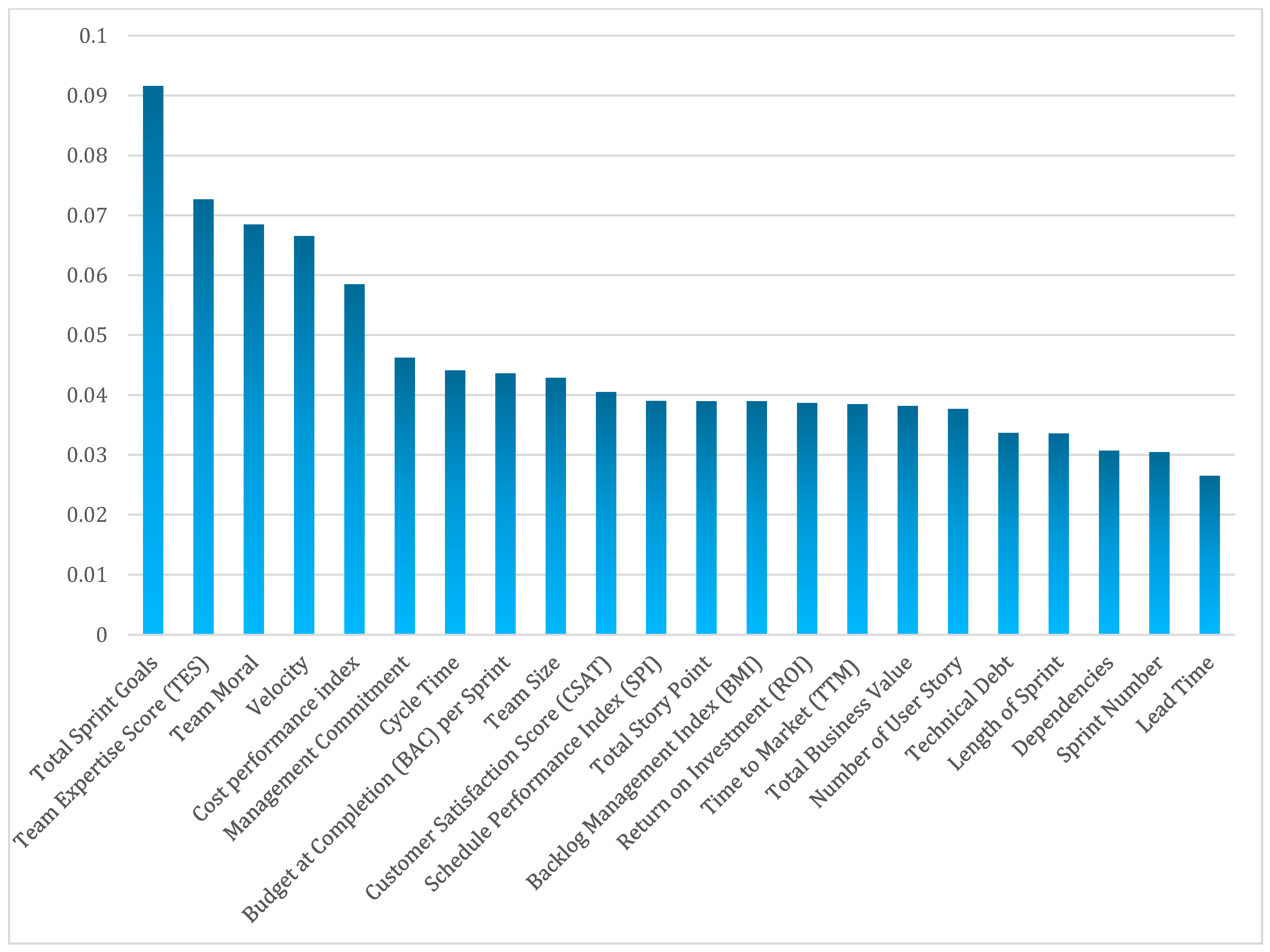

- Step 2 (Global Interpretability): the SHAP values computed for each feature were aggregated to produce global feature importance rankings. This analysis showed which agile features (e.g., sprint goals, velocity, team morale) most strongly influenced project success or failure across the dataset.

- Step 3 (Local Interpretability): the SHAP framework also provided a local explanation for each individual project prediction, allowing managers to understand the rationale behind a specific prediction and to develop targeted corrective actions.
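
To make the local and global steps concrete, the sketch below computes exact Shapley values by brute force for a tiny stand-in model. It does not use the SHAP package, which approximates these values for larger models; the `model` function, feature names, and project rows are all hypothetical.

```python
from itertools import combinations
from math import factorial

FEATURES = ["sprint_goals", "team_morale", "technical_debt"]  # illustrative names


def model(x):
    """Hypothetical stand-in for the trained ANN: returns a success score."""
    goals, morale, debt = x
    return 0.5 * goals + 0.3 * morale - 0.4 * debt


def coalition_value(x, subset, background):
    """Average model output when only the features in `subset` take x's values."""
    total = 0.0
    for ref in background:
        mixed = [x[i] if i in subset else ref[i] for i in range(len(x))]
        total += model(mixed)
    return total / len(background)


def shapley_values(x, background):
    """Exact Shapley values obtained by enumerating every feature coalition."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = coalition_value(x, set(subset) | {i}, background)
                without_i = coalition_value(x, set(subset), background)
                phi[i] += weight * (with_i - without_i)
    return phi


# Hypothetical, already-scaled project records used as data and background.
projects = [[1.0, 1.0, 0.0], [0.0, 1.0, 1.0], [1.0, 0.0, 1.0], [0.0, 0.0, 0.0]]

# Local interpretability: one vector of contributions per project.
local = [shapley_values(x, projects) for x in projects]

# Global interpretability: mean absolute contribution per feature.
global_importance = {
    name: sum(abs(row[i]) for row in local) / len(local)
    for i, name in enumerate(FEATURES)
}
print(global_importance)
```

The contributions for each project sum to the difference between that project's score and the average score, which is what allows a single prediction to be decomposed feature by feature. Enumeration grows exponentially with the number of features, so it is only practical for a handful of inputs.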

3.5. Integration of Apriori Analysis

- Step 1 (Prepare and Group Data):

- Successful project.

- Delayed project.

- Failed project.

- Step 2 (Running the Apriori Algorithm):

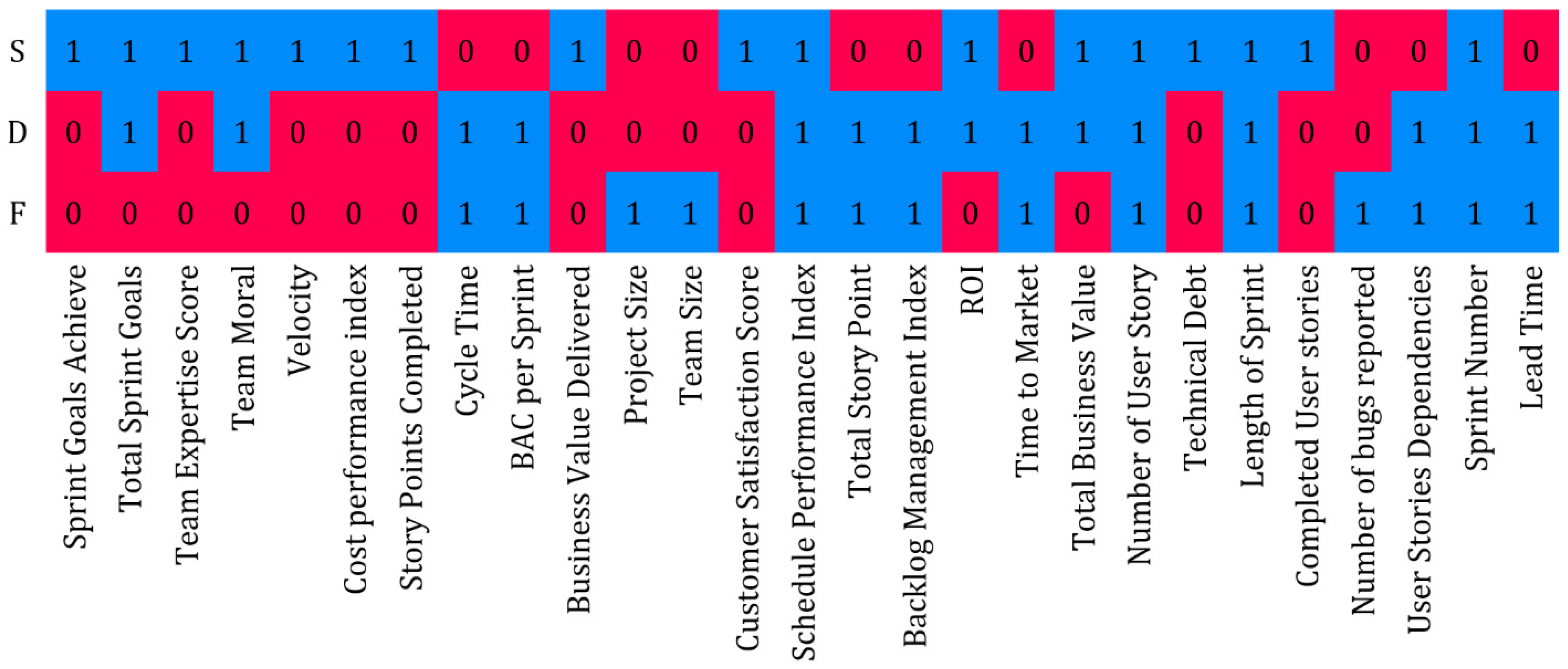

- Apriori iteratively searched for frequent feature-value sets (i.e., itemsets) across the project records within each category (successful, delayed, and failed projects).

- The resulting itemsets were then filtered using minimum thresholds for support (relevance relative to the sample size) and confidence (the significance criterion for accepting an association).

- Step 3 (Produce Association Rules):

- “If Team Morale = High AND Total Sprint Goals = Clearly Defined THEN Project Outcome = Successful”

- “If Technical Debt = High AND Number of Dependencies = High THEN Project Outcome = Delayed or Failed.”

- Step 4 (Visualizing and Interpreting Analysis):

- Visualized the sets of features that frequently co-occur

- Encoded the strength of each association rule using color or line thickness

- Step 5 (Linking Insights to Managerial Recommendations):

- Support and repeat successful feature combinations:

- Proactively monitor and mitigate identified risk factors:

- Targeted Training and Resource Provisioning:

- Ongoing Improvement Process:
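
The itemset search and rule generation in Steps 2 and 3 can be sketched as a small level-wise Apriori implementation. The records, item names, and thresholds below are hypothetical, and as a simplification the project outcome is encoded as an item in each record rather than running the search separately per category.

```python
from itertools import combinations

# Hypothetical discretized project records (feature=value items).
records = [
    {"Morale=High", "Goals=Clear", "Outcome=Successful"},
    {"Morale=High", "Goals=Clear", "Outcome=Successful"},
    {"Morale=High", "Debt=High", "Outcome=Delayed"},
    {"Debt=High", "Deps=High", "Outcome=Delayed"},
    {"Debt=High", "Deps=High", "Outcome=Failed"},
]


def support(itemset, records):
    """Fraction of records that contain every item in `itemset`."""
    return sum(itemset <= r for r in records) / len(records)


def apriori(records, min_support):
    """Level-wise search: grow frequent k-itemsets into (k+1)-candidates."""
    items = {item for r in records for item in r}
    level = {frozenset([i]) for i in items if support({i}, records) >= min_support}
    frequent = {}
    while level:
        for s in level:
            frequent[s] = support(s, records)
        # Join step: union pairs of itemsets that differ by exactly one item.
        candidates = {a | b for a in level for b in level if len(a | b) == len(a) + 1}
        # Prune step: every k-subset of a candidate must already be frequent.
        level = {
            c for c in candidates
            if all(frozenset(sub) in frequent for sub in combinations(c, len(c) - 1))
            and support(c, records) >= min_support
        }
    return frequent


def rules(frequent, min_confidence):
    """Return (antecedent, consequent, support, confidence) for outcome rules."""
    out = []
    for itemset, sup in frequent.items():
        for item in itemset:
            antecedent = itemset - {item}
            if not item.startswith("Outcome=") or not antecedent:
                continue
            confidence = sup / frequent[antecedent]
            if confidence >= min_confidence:
                out.append((antecedent, item, sup, confidence))
    return out


frequent = apriori(records, min_support=0.4)
found_rules = rules(frequent, min_confidence=0.8)
for antecedent, consequent, sup, conf in found_rules:
    lhs = " AND ".join(sorted(antecedent))
    print(f"IF {lhs} THEN {consequent} (support={sup:.2f}, confidence={conf:.2f})")
```

Raising `min_support` trades coverage for reliability: rules seen in only a few projects are dropped even when their confidence is high.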

4. Results

4.1. Data Description and Model Development Features

4.2. Analysis of Features and Their Importance

4.3. Project Status Prediction with ANN and Optimal Architecture with NAS

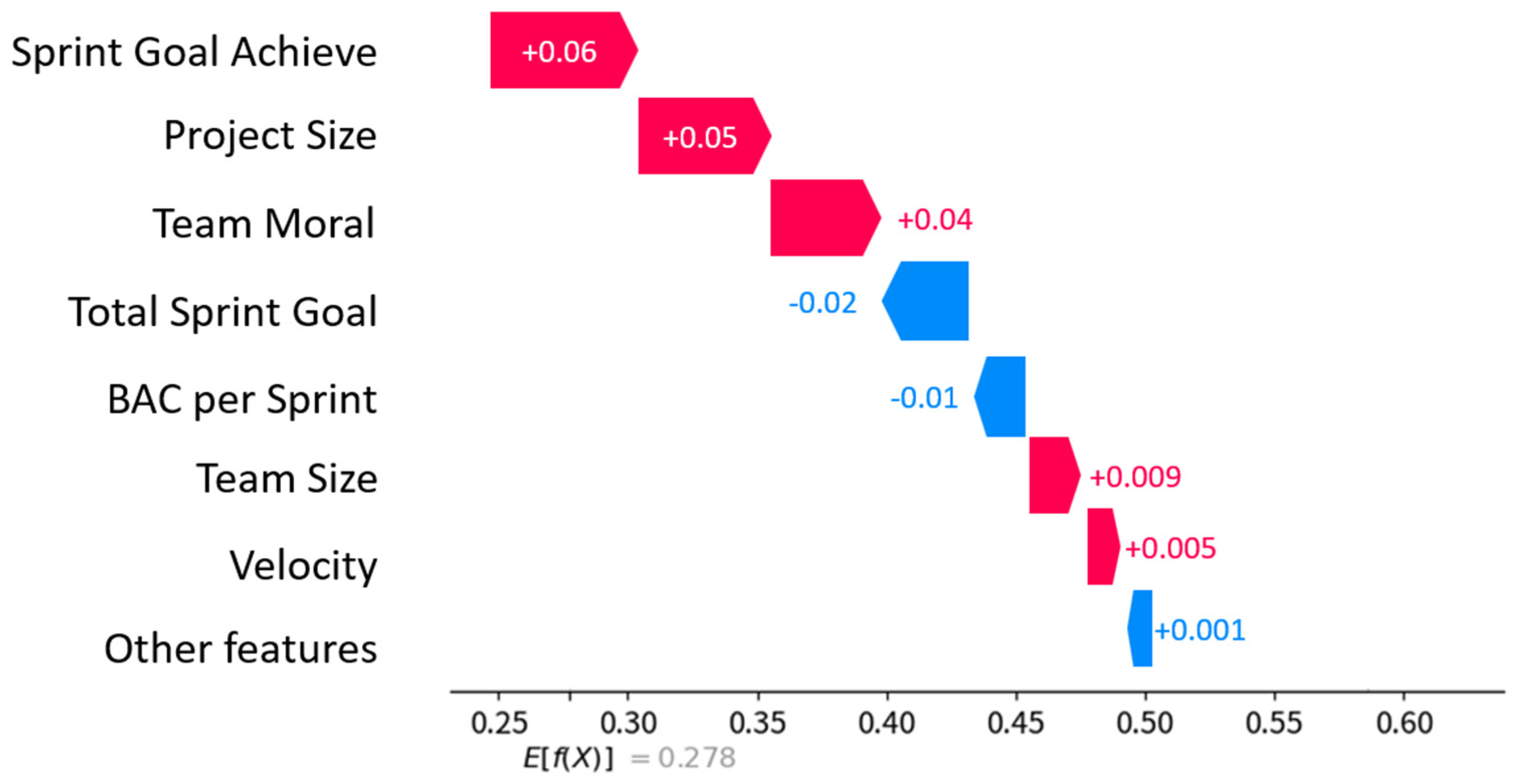

4.4. Interpretation of ANN Model with SHAP Algorithm

- Positive Influence Towards Project Success:

- (i) Total Sprint Goals: A higher total number of concisely stated sprint goals increased the probability of project success, as expected. In practice, project managers should work with the development team to set more sprint goals that are detailed, specific, and closely aligned with the business objectives.

- (ii) Team Morale: Teams with higher morale completed projects with better end outcomes. Project managers can increase morale through regular feedback, team-building activities, and recognition and by fostering an inclusive and supportive work environment.

- (iii) Velocity: Sustained or increasing velocity (measured as the number of story points delivered per sprint) is positively associated with the likelihood of project success. Although velocity is primarily an administrative monitoring metric, project managers should still discuss it with their teams, since project success depends in part on proactively addressing the factors that affect team performance and productivity.

- Negative Influence Towards Project Success (delays and failures):

- (i) Sprint Number: A higher number of sprints (indicating projects that ran longer than planned or had too many sprints in which the goals were not met) negatively influenced project success. Project managers should therefore monitor project duration closely and plan sprints effectively in order to maintain a reasonable pace.

- (ii) Technical Debt and Dependencies: High levels of technical debt and numerous outstanding task dependencies are typically associated with delayed or incomplete project outcomes. Project managers should track both aspects closely and allocate sufficient resources to reduce technical debt and resolve task dependencies.

4.5. Analysis of the Behavior of Projects of Each Category with Apriori

5. Management Insight

- Utilizing Data-Driven Models: Companies like Fluor Corporation and Shell use AI-powered predictive models to identify potential risks early, allowing project managers to intervene before delays or failures occur. Agile teams in IT and telecom should leverage neural networks and Neural Architecture Search (NAS) to enhance project monitoring and early risk detection.

- Focusing on Key Features: Organizations such as Salesforce and Microsoft have demonstrated that prioritizing sprint goals, team expertise score (TES), and customer satisfaction (CSAT) leads to better project outcomes. By emphasizing high-value deliverables, experienced teams, and continuous feedback, agile projects can optimize performance.

- Flexibility in Planning: Case studies from British Telecom (BT) and Sony show that embracing agile flexibility reduces time to market (TTM) and improves adaptability. Companies implementing iterative planning approaches can quickly adjust to changes, leading to more efficient project execution and faster product releases.

- Data-Driven Decision-Making: Leveraging explainable AI (X-AI) techniques, such as SHAP, provides transparency in machine learning-based project assessments. Companies like IBM use AI governance tools to interpret project risks and drive informed decision-making, ensuring managers understand the impact of each factor on project success.

- Investing in Team Training: Experience and expertise play a crucial role in agile project success. PayPal and Telstra have demonstrated that large-scale agile transformations require continuous training programs for team members. Organizations that invest in agile education, such as Scrum and DevOps certifications, achieve higher efficiency, better team coordination, and improved project outcomes.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Karlsson, G.; Lundén, P. Agile Education Imagined: A report from the Cybercampus workshop on Agile Education. 2023. Available online: https://www.diva-portal.org/smash/record.jsf?dswid=5339&pid=diva2%3A1728060 (accessed on 17 June 2025).

- Nejad, A.A.F.; Arabikhan, F.; Williams, N.; Gegov, A.; Sari, O.; Bader, M. Agile Project Status Prediction Using Interpretable Machine Learning. In Proceedings of the 2024 IEEE 12th International Conference on Intelligent Systems (IS), Varna, Bulgaria, 1–8 August 2024; pp. 1–8. [Google Scholar] [CrossRef]

- Meso, P.; Jain, R. Agile Software Development: Adaptive Systems Principles and Best Practices. Inf. Syst. Manag. 2006, 23, 19–30. [Google Scholar] [CrossRef]

- Kuhrmann, M.; Tell, P.; Hebig, R.; Klünder, J.; Münch, J.; Linssen, O.; Pfahl, D.; Felderer, M.; Prause, C.R.; MacDonell, S.G.; et al. What Makes Agile Software Development Agile? IEEE Trans. Softw. Eng. 2022, 48, 3523–3539. [Google Scholar] [CrossRef]

- Gemino, A.; Reich, B.H.; Serrador, P.M. Agile, traditional, and hybrid approaches to project success: Is hybrid a poor second choice? Proj. Manag. J. 2021, 52, 161–175. [Google Scholar] [CrossRef]

- Anand, A.; Kaur, J.; Singh, O.; Alhazmi, O.H. Optimal Sprint Length Determination for Agile-Based Software Development. Comput. Mater. Contin. 2021, 68, 3693–3712. [Google Scholar] [CrossRef]

- Serrador, P.; Pinto, J.K. Does Agile work?—A quantitative analysis of agile project success. Int. J. Proj. Manag. 2015, 33, 1040–1051. [Google Scholar] [CrossRef]

- Tang, M.; Cai, S.; Lau, V.K.N. Over-the-Air Aggregation With Multiple Shared Channels and Graph-Based State Estimation for Industrial IoT Systems. IEEE Internet Things J. 2021, 8, 14638–14657. [Google Scholar] [CrossRef]

- Li, H.; Yazdi, M.; Nedjati, A.; Moradi, R.; Adumene, S.; Dao, U.; Moradi, A.H.; Haghighi, A.; Obeng, F.E.; Huang, C.-G.; et al. Harnessing AI for Project Risk Management: A Paradigm Shift. Stud. Syst. Decis. Control 2024, 518, 253–272. [Google Scholar] [CrossRef]

- ForouzeshNejad, A.A.; Arabikhan, F.; Aheleroff, S. Optimizing Project Time and Cost Prediction Using a Hybrid XGBoost and Simulated Annealing Algorithm. Machines 2024, 12, 867. [Google Scholar] [CrossRef]

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115. [Google Scholar] [CrossRef]

- Chuang, S.-W.; Luor, T.; Lu, H.-P. Assessment of institutions, scholars, and contributions on agile software development (2001–2012). J. Syst. Softw. 2014, 93, 84–101. [Google Scholar] [CrossRef]

- Navada, A.; Ansari, A.N.; Patil, S.; Sonkamble, B.A. Overview of use of decision tree algorithms in machine learning. In Proceedings of the 2011 IEEE Control and System Graduate Research Colloquium, ICSGRC, Shah Alam, Malaysia, 27–28 June 2011; pp. 37–42. [Google Scholar] [CrossRef]

- Müller, R.; Turner, R. The Influence of Project Managers on Project Success Criteria and Project Success by Type of Project. Eur. Manag. J. 2007, 25, 298–309. [Google Scholar] [CrossRef]

- Tominc, P.; Oreški, D.; Rožman, M. Artificial Intelligence and Agility-Based Model for Successful Project Implementation and Company Competitiveness. Information 2023, 14, 337. [Google Scholar] [CrossRef]

- Locke, E.A. Relationship of Success and Expectation to Affect on Goal-Seeking Tasks. J. Personal. Soc. Psychol. 1967, 7, 125–134. [Google Scholar] [CrossRef]

- Pollack, J.; Helm, J.; Adler, D. What is the Iron Triangle, and how has it changed? Int. J. Manag. Proj. Bus. 2018, 11, 527–547. [Google Scholar] [CrossRef]

- Lalic, D.C.; Lalic, B.; Delić, M.; Gracanin, D.; Stefanovic, D. How project management approach impact project success? From traditional to agile. Int. J. Manag. Proj. Bus. 2022, 15, 494–521. [Google Scholar] [CrossRef]

- Van Waardenburg, G.; Van Vliet, H. When agile meets the enterprise. Inf. Softw. Technol. 2013, 55, 2154–2171. [Google Scholar] [CrossRef]

- Beck, K.; Beedle, M.; Van Bennekum, A.; Cockburn, A.; Cunningham, W.; Fowler, M.; Grenning, J.; Highsmith, J.; Hunt, A.; Jeffries, R.; et al. Manifesto for Agile Software Development. 2001. Available online: https://ai-learn.it/wp-content/uploads/2019/03/03_ManifestoofAgileSoftwareDevelopment-1.pdf (accessed on 17 June 2025).

- Dingsøyr, T.; Nerur, S.; Balijepally, V.; Moe, N.B. A decade of agile methodologies: Towards explaining agile software development. J. Syst. Softw. 2012, 85, 1213–1221. [Google Scholar] [CrossRef]

- Schwaber, K.; Sutherland, J. Der Visuelle Scrum Guide. Available online: https://scrumguides.org/docs/scrumguide/v2020/2020-Scrum-Guide-US.pdf (accessed on 17 June 2025).

- Rubin, K.S. Essential Scrum: A Practical Guide to the Most Popular Agile Process; Addison-Wesley: Upper Saddle River, NJ, USA, 2012. [Google Scholar]

- Hossain, E.; Babar, M.A.; Paik, H.Y. Using scrum in global software development: A systematic literature review. In Proceedings of the 2009 4th IEEE International Conference on Global Software Engineering, ICGSE, Limerick, Ireland, 13–16 July 2009; pp. 175–184. [Google Scholar] [CrossRef]

- Conforto, E.C.; Salum, F.; Amaral, D.C.; Da Silva, S.L.; De Almeida, L.F.M. Can Agile Project Management be Adopted by Industries Other than Software Development? Proj. Manag. J. 2014, 45, 21–34. [Google Scholar] [CrossRef]

- Ikonen, M.; Pirinen, E.; Fagerholm, F.; Kettunen, P.; Abrahamsson, P. On the impact of Kanban on software project work: An empirical case study investigation. In Proceedings of the 2011 16th IEEE International Conference on Engineering of Complex Computer Systems, ICECCS, Las Vegas, NV, USA, 27–29 April 2011; pp. 305–314. [Google Scholar] [CrossRef]

- Alqudah, M.; Razali, R. An Empirical Study of Scrumban Formation based on the Selection of Scrum and Kanban Practices. Int. J. Adv. Sci. Eng. Inf. Technol. 2018, 8, 2315–2322. [Google Scholar] [CrossRef]

- Corey, L. Scrumban and Other Essays on Kanban System for Lean Software Development; Modus Cooperandi Press: Seattle, WA, USA, 2009; Available online: https://www.researchgate.net/publication/234815689_Scrumban_-_Essays_on_Kanban_Systems_for_Lean_Software_Development (accessed on 19 April 2025).

- Scrumban: Mastering Two Agile Methodologies Atlassian. Available online: https://www.atlassian.com/agile/project-management/scrumban (accessed on 26 April 2025).

- Müller, R.; Locatelli, G.; Holzmann, V.; Nilsson, M.; Sagay, T. Artificial Intelligence and Project Management: Empirical Overview, State of the Art, and Guidelines for Future Research. Proj. Manag. J. 2024, 55, 9–15. [Google Scholar] [CrossRef]

- Shakya, S. Analysis of Artificial Intelligence based Image Classification Techniques. Artic. J. Innov. Image Process. 2020, 2, 44–54. [Google Scholar] [CrossRef]

- McLean, S.; Read, G.J.M.; Thompson, J.; Baber, C.; Stanton, N.A.; Salmon, P.M. The risks associated with Artificial General Intelligence: A systematic review. J. Exp. Theor. Artif. Intell. 2023, 35, 649–663. [Google Scholar] [CrossRef]

- Bui, D.T.; Khosravi, K.; Tiefenbacher, J.; Nguyen, H.; Kazakis, N. Improving prediction of water quality indices using novel hybrid machine-learning algorithms. Sci. Total Environ. 2020, 721, 137612. [Google Scholar] [CrossRef]

- Sheffield, J.; Lemétayer, J. Factors associated with the software development agility of successful projects. Int. J. Proj. Manag. 2013, 31, 459–472. [Google Scholar] [CrossRef]

- Shenhar, A.J.; Dvir, D.; Levy, O.; Maltz, A.C. Project Success: A Multidimensional Strategic Concept. Long Range Plann. 2001, 34, 699–725. [Google Scholar] [CrossRef]

- Albert, M.; Balve, P.; Spang, K. Evaluation of project success: A structured literature review. Int. J. Manag. Proj. Bus. 2017, 10, 796–821. [Google Scholar] [CrossRef]

- Amani, M.A.; Behdinian, A.; Sheikhalishahi, M. Evaluating factors affecting project success: An agile approach. J. Ind. Eng. Int. 2022, 18, 82. [Google Scholar]

- Binboga, B.; Gumussoy, C.A. Factors Affecting Agile Software Project Success. IEEE Access 2024, 12, 95613–95633. [Google Scholar] [CrossRef]

- Hassani-Alaoui, S.; Cameron, A.-F.; Giannelia, T. “We use scrum, but…”: Agile modifications and project success. In Proceedings of the Hawaii International Conference on System Sciences, Grand Wailea, HI, USA, 7–10 January 2020. [Google Scholar]

- Hnatchuk, Y.; Pavlova, O.; Havrylyuk, K. Method of Forecasting the Characteristics and Evaluating the Implementation Success of IT Projects Based on Requirements Analysis. In Proceedings of the IntelITSIS’2021: 2nd International Workshop on Intelligent Information Technologies and Systems of Information Security, Khmelnytskyi, Ukraine, 24–26 March 2021; pp. 248–258. [Google Scholar]

- Misra, S.C.; Kumar, V.; Kumar, U. Identifying some important success factors in adopting agile software development practices. J. Syst. Softw. 2009, 82, 1869–1890. [Google Scholar] [CrossRef]

- Ghayyur, S.A.K.; Ahmed, S.; Ali, M.; Razzaq, A.; Ahmed, N.; Naseem, A. A systematic literature review of success factors and barriers of Agile software development. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 278–291. [Google Scholar]

- Tam, C.; da Costa Moura, E.J.; Oliveira, T.; Varajão, J. The factors influencing the success of on-going agile software development projects. Int. J. Proj. Manag. 2020, 38, 165–176. [Google Scholar] [CrossRef]

- Siddique, L.; Hussein, B. A qualitative study of success criteria in Norwegian agile software projects from suppliers’ perspective. Int. J. Inf. Syst. Proj. Manag. 2016, 4, 63–79. [Google Scholar] [CrossRef]

- Petersen, K. An empirical study of lead-times in incremental and agile software development. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). In Proceedings of the International Conference on Software Process, Paderborn, Germany, 8–9 July 2010; pp. 345–356. [Google Scholar] [CrossRef]

- Aoyama, M. Agile software process and its experience. In Proceedings of the International Conference on Software Engineering, Kyoto, Japan, 19–25 April 1998. [Google Scholar] [CrossRef]

- Boschetti, M.A.; Golfarelli, M.; Rizzi, S.; Turricchia, E. A Lagrangian heuristic for sprint planning in agile software development. Comput. Oper. Res. 2014, 43, 116–128. [Google Scholar] [CrossRef]

- Vierlboeck, M.; Gövert, K.; Trauer, J.; Lindemann, U. Budgeting for Agile Product Development. Proc. Des. Soc. Int. Conf. Eng. Des. 2019, 1, 2169–2178. [Google Scholar] [CrossRef]

- Kim, B.-C. Probabilistic Evaluation of Cost Performance Stability in Earned Value Management. J. Manag. Eng. 2016, 32, 04015025. [Google Scholar] [CrossRef]

- Moreira, M.E. Working with Story Points, Velocity, and Burndowns. In Being Agile; Apress: Berkeley, CA, USA, 2013; pp. 187–194. [Google Scholar] [CrossRef]

- Bakalova, Z.; Daneva, M. A comparative case study on clients participation in a ‘traditional’ and in an agile software company. In Proceedings of the 12th International Conference on Product Focused Software Development and Process Improvement, Torre Canne Brindisi, Italy, 20–22 June 2011. [Google Scholar] [CrossRef]

- Bumbary, K.M. Using velocity, acceleration, and jerk to manage agile schedule risk. In Proceedings of the 2016 International Conference on Information Systems Engineering, ICISE, Los Angeles, CA, USA, 20–22 April 2016; pp. 73–80. [Google Scholar] [CrossRef]

- Sedano, T.; Ralph, P.; Peraire, C. The Product Backlog. In Proceedings of the International Conference on Software Engineering, Montreal, QC, Canada, 25–31 May 2019; pp. 200–211. [Google Scholar] [CrossRef]

- Kropp, M.; Anslow, C.; Meier, A.; Biddle, R. Satisfaction, practices, and influences in agile software development. In Proceedings of the 22nd International Conference on Evaluation and Assessment in Software Engineering 2018, Christchurch, New Zealand, 28–29 June 2018. [Google Scholar] [CrossRef]

- Keshta, N.; Morgan, Y. Comparison between traditional plan-based and agile software processes according to team size & project domain (A systematic literature review). In Proceedings of the 2017 8th IEEE Annual Information Technology, Electronics and Mobile Communication Conference, IEMCON, Vancouver, BC, Canada, 3–5 October 2017; pp. 567–575. [Google Scholar] [CrossRef]

- Behutiye, W.N.; Rodríguez, P.; Oivo, M.; Tosun, A. Analyzing the concept of technical debt in the context of agile software development: A systematic literature review. Inf. Softw. Technol. 2017, 82, 139–158. [Google Scholar] [CrossRef]

- Groß, S.; Mandelburger, M.M.; Mendling, J.; Mandelburger, M.; Gross, S.; Malinova, M. Dependency Management in Large-Scale Agile: A Case Study of DevOps Teams. In Proceedings of the 52nd Hawaii International Conference on System Sciences (HICSS 2019), Grand Wailea, Maui, HI, USA, 8–11 January 2019; pp. 6270–6279. Available online: https://sintef.brage.unit.no/sintef-xmlui/handle/11250/2644833 (accessed on 19 March 2024).

- Reifer, D.J. How good are agile methods? IEEE Softw. 2002, 19, 16–18. [Google Scholar] [CrossRef]

- Moyano, F.D.; Eggenberger, P.; Meynet, G.; Gehan, C.; Mosser, B.; Buldgen, G.; Salmon, S.J.A.J. Asteroseismology of evolved stars to constrain the internal transport of angular momentum-V. Efficiency of the transport on the red giant branch and in the red clump. Astron. Astrophys. 2022, 663, A180. [Google Scholar] [CrossRef]

- French, K.A.; Dumani, S.; Allen, T.D.; Shockley, K.M. A meta-analysis of work–family conflict and social support. Psychol. Bull. 2018, 144, 284–314. [Google Scholar] [CrossRef]

- Hyväri, I. Project management effectiveness in project-oriented business organizations. Int. J. Proj. Manag. 2006, 24, 216–225. [Google Scholar] [CrossRef]

- Kropp, M.; Meier, A.; Anslow, C.; Biddle, R. Satisfaction and its correlates in agile software development. J. Syst. Softw. 2020, 164, 110544. [Google Scholar] [CrossRef]

- Yacouby, R.; Axman, D. Probabilistic extension of precision, recall, and f1 score for more thorough evaluation of classification models. In Proceedings of the First Workshop on Evaluation and Comparison of NLP Systems, Online, 20 November 2020; pp. 79–91. [Google Scholar]

| Study | Aim | Prediction Aspects | Methodology | Case Study | |||

|---|---|---|---|---|---|---|---|

| Endurance | Effectiveness | Efficiency | Complexity | ||||

| [18] | Predicting project success | Used | Support Vector Machine and combination with genetic algorithm | Construction projects | |||

| [34] | Project cost performance forecasting | Used | The combined method of Support Vector Machine (SVM) and principal component analysis (PCA) | Commercial construction projects | |||

| [15] | Predicting the probability of success of projects | Used | Gaussian process regression, Bayesian inference and particle swarm optimization algorithm | Construction projects | |||

| [15] | Predicting project success | Used | Regression algorithms | Financing projects | |||

| [35] | Predicting the probability of project success | Used | Used | Bayesian networks | Research and development projects | ||

| [36] | Examining the relationship between project Complexity and project success | Used | Structural equations and questionnaires | Construction projects | |||

| [37] | Project safety performance prediction | Used | Human factors engineering and biz network | Construction projects | |||

| [38] | Predicting project success | Used | Neural network and decision tree and Random Forest | Crowdfunding projects | |||

| [38] | Predicting the success of a crowdfunding project | Used | Artificial neural network | Crowdfunding projects | |||

| [39] | Estimating the importance of the factors affecting the success of international projects | Used | Neural network | International IT projects | |||

| [40] | Predicting the characteristics and evaluating the success of project implementation | Used | Used | Neural network | Information technology projects | ||

| [41] | The effect of project Complexity, leadership integrity, performance readiness, and management stability on financial stability | Used | Structural equations and questionnaires | Indonesian Project Management Institute | |||

| [42] | Predicting the success rate of software | Used | Statistical analysis and questionnaire | Software projects | |||

| [43] | Investigating the impact of financial policies on project performance | Used | Used | Dynamic system | Construction projects | ||

| [44] | Evaluation of factors affecting the success of agile software projects | Used | Used | Smart-PLS | Software projects | ||

| This study | Predicting the success status of projects considering Complexity and agility criteria | Used | Used | Used | Used | ANN—SHAP—Apriori | Software projects |

| Aspect | Feature | Description | Quantitative Measurement Definition | Reference |
|---|---|---|---|---|
| Efficiency | Lead Time | lead time is the time from the start of a task to its completion, focusing on rapid response to change | Days | [45] |
| | Cycle Time | cycle time is the time taken from the start to the completion of a work item, focusing on streamlining processes and reducing delays | Days | [46] |
| | Length of Sprint | the length of a sprint is a fixed period of time during which a specific set of work has to be completed and made ready for review, typically ranging from one to four weeks | Weeks | [6] |
| | Sprint Number | the “sprint number” refers to the sequential identifier assigned to each sprint or iteration within a project, marking its order and often used for planning, tracking, and referencing specific periods of work | Integer number | [47] |
| | Number of User Story | a user story is a brief, simple description of a feature told from the perspective of the person who desires the new capability, usually a user or customer of the system | Count per sprint | [48] |
| | Budget at Completion (BAC) per Sprint | “Budget at Completion” refers to the total projected budget required to complete a project, emphasizing flexible and adaptive financial planning to accommodate changing requirements and priorities | Currency unit (Rial) | [48] |
| | Cost Performance Index (CPI) | the Cost Performance Index (CPI) is a measure of the financial efficiency of project execution, reflecting the ratio of work accomplished versus work cost incurred | Numeric index | [49] |
| | Schedule Performance Index (SPI) | the Schedule Performance Index (SPI) is a measure of schedule efficiency in a project. It reflects the ratio of earned value (EV) to planned value (PV), indicating how closely the project is adhering to its scheduled timeline | Numeric index | [49] |
| | Total Story Point | a Story Point is a unit of measure for expressing the overall effort required to fully implement a piece of work (such as a user story) in a way that accounts for complexity, risks, and efforts involved | Integer number | [50] |
| Effectiveness | Total Sprint Goals | objectives set for a sprint that align with the product goal, guiding the development team on what to focus on during the sprint | Integer number | [7] |
| | Total Business Value | the perceived worth or benefit that a project or feature delivers to the stakeholders, often guiding prioritization in agile projects | Numeric score | [51] |
| | Velocity | a metric that measures the amount of work a team completes during a sprint, used to forecast future sprint capacities | Integer number | [52] |
| | Backlog Management Index (BMI) | a measure or approach to evaluate the efficiency and effectiveness of managing the product backlog in agile development | Percentage | [53] |
| | Return on Investment (ROI) | an evaluation of the profitability of an investment relative to its cost in agile projects, often calculated for features or projects to determine their financial value | Percentage | [7] |
| | Management Commitment | the support and involvement of management in ensuring agile practices are implemented and sustained, crucial for the success of agile transformations | Score (1–5) | [51] |
| Endurance | Team Morale | reflects the overall attitude, satisfaction, and motivation of the development team, crucial for sustaining productivity and quality in agile projects | Score (1–5) | [52] |
| | Customer Satisfaction Score (CSAT) | a metric used to quantify the degree to which a product or service meets customer expectations, often used in agile to measure the success of iterations or releases | Percentage | [54] |
| | Team Size | number of individuals in a development team, with agile methodologies typically recommending small, cross-functional teams for optimal performance and collaboration | Integer number | [55] |
| | Team Expertise Score (TES) | while not directly identified in the literature, it generally would refer to a measure of the collective skills and competencies of the agile team members | Score (1–10) | [55] |
| Complexity | Technical Debt | the implied cost of additional rework caused by choosing an easy solution now instead of using a better approach that would take longer | Integer number | [56] |
| | Dependencies | relationships between tasks or stories where one cannot start or finish until another has been completed, which can impact sprint planning and execution | Integer number | [57] |
| | Time to Market (TTM) | the duration it takes from a product being conceived until it is available for sale, with agile aiming to minimize TTM through iterative development and frequent releases | Days | [58] |
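
The two earned-value indices in the table reduce to simple ratios. The sketch below uses the standard earned value management definitions, with CPI taken as earned value over actual cost; the sprint figures are hypothetical.

```python
def cost_performance_index(earned_value, actual_cost):
    """CPI = EV / AC; a value above 1 means work cost less than its earned value."""
    return earned_value / actual_cost


def schedule_performance_index(earned_value, planned_value):
    """SPI = EV / PV; a value above 1 means more work was earned than planned."""
    return earned_value / planned_value


# Hypothetical sprint figures, all in the same currency unit.
ev, ac, pv = 80.0, 100.0, 64.0
cpi = cost_performance_index(ev, ac)
spi = schedule_performance_index(ev, pv)
print(f"CPI = {cpi:.2f}")  # 0.80: over budget
print(f"SPI = {spi:.2f}")  # 1.25: ahead of schedule
```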

| Hyperparameter | ANN 01 | ANN 02 | ANN 03 | ANN 04 | ANN 05 | ANN 06 | ANN 07 | ANN 08 | ANN 09 |
|---|---|---|---|---|---|---|---|---|---|
| Number of hidden layers | 1 | 1 | 1 | 2 | 2 | 2 | 3 | 3 | 3 |
| Number of neurons per layer | 50 | 100 | 150 | 50 | 100 | 150 | 50 | 100 | 150 |
| Activation function | identity | Sigmoid | ReLU | Tanh | Sigmoid | Sigmoid | ReLU | Tanh | Tanh |
| Number of training epochs | 50 | 100 | 150 | 50 | 100 | 150 | 50 | 100 | 150 |
| Learning rate value | 0.001 | 0.005 | 0.01 | 0.01 | 0.001 | 0.005 | 0.01 | 0.1 | 0.1 |
| Learning rate schedule | constant | invscaling | adaptive | constant | invscaling | adaptive | constant | invscaling | adaptive |
| Optimization algorithm | adam | adam | sgd | sgd | adam | sgd | lbfgs | lbfgs | lbfgs |
| Momentum value | 0.1 | 0.2 | 0.5 | 0.9 | 0.5 | 0.2 | 0.5 | 0.9 | 0.95 |
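
One way to turn a column of this table into a trainable configuration is sketched below. It assumes scikit-learn's `MLPClassifier` as the target API, since the value names in the table (`invscaling`, `adaptive`, `lbfgs`) match its options, but the library actually used is not stated here. The helper `to_mlp_kwargs` and the short dictionary keys are hypothetical.

```python
# Column-wise transcription of three candidates from the hyperparameter table.
CANDIDATES = {
    "ANN 01": dict(layers=1, neurons=50, activation="identity", epochs=50,
                   lr=0.001, schedule="constant", optimizer="adam", momentum=0.1),
    "ANN 05": dict(layers=2, neurons=100, activation="sigmoid", epochs=100,
                   lr=0.001, schedule="invscaling", optimizer="adam", momentum=0.5),
    "ANN 09": dict(layers=3, neurons=150, activation="tanh", epochs=150,
                   lr=0.1, schedule="adaptive", optimizer="lbfgs", momentum=0.95),
}

# scikit-learn names the sigmoid activation "logistic".
ACTIVATION_ALIASES = {"sigmoid": "logistic"}


def to_mlp_kwargs(cfg):
    """Translate one table column into MLPClassifier keyword arguments."""
    return {
        "hidden_layer_sizes": (cfg["neurons"],) * cfg["layers"],
        "activation": ACTIVATION_ALIASES.get(cfg["activation"], cfg["activation"]),
        "max_iter": cfg["epochs"],
        "learning_rate_init": cfg["lr"],
        "learning_rate": cfg["schedule"],
        "solver": cfg["optimizer"],
        "momentum": cfg["momentum"],
    }


for name, cfg in CANDIDATES.items():
    print(name, to_mlp_kwargs(cfg))
```

Each dictionary could then be passed as `MLPClassifier(**kwargs)` and scored on a held-out split to pick the best candidate. Note that in scikit-learn the `learning_rate` schedule and `momentum` only take effect when `solver="sgd"`, so those rows would be inert for the adam and lbfgs candidates under this assumption.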

| ANN Architecture | ||||

|---|---|---|---|---|

| 1 | 0.89 | 0.90 | 0.91 | 0.90 |

| 2 | 0.88 | 0.89 | 0.88 | 0.87 |

| 3 | 0.87 | 0.88 | 0.88 | 0.88 |

| 4 | 0.91 | 0.90 | 0.92 | 0.91 |

| 5 | 0.93 | 0.93 | 0.94 | 0.93 |

| 6 | 0.92 | 0.92 | 0.91 | 0.90 |

| 7 | 0.90 | 0.91 | 0.90 | 0.89 |

| 8 | 0.91 | 0.91 | 0.92 | 0.92 |

| 9 | 0.89 | 0.90 | 0.89 | 0.89 |

| Algorithm | ||||

|---|---|---|---|---|

| ANN (NAS optimized) | 0.93 | 0.93 | 0.94 | 0.93 |

| XGBoost | 0.89 | 0.89 | 0.90 | 0.89 |

| Random Forest | 0.86 | 0.86 | 0.87 | 0.86 |

| SVM | 0.81 | 0.80 | 0.81 | 0.81 |

| k-NN | 0.76 | 0.77 | 0.77 | 0.76 |
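
The column headers of the two score tables above are not preserved in this extract. Assuming they report standard classification metrics such as accuracy, precision, recall, and F1 (an assumption), the sketch below shows how such scores can be computed and macro-averaged for the three project status classes. The labels and predictions are hypothetical.

```python
LABELS = ["successful", "delayed", "failed"]


def macro_scores(y_true, y_pred, labels=LABELS):
    """Return accuracy and macro-averaged precision, recall, and F1."""
    pairs = list(zip(y_true, y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(2 * precision * recall / denom if denom else 0.0)
    n = len(labels)
    return {
        "accuracy": sum(t == p for t, p in pairs) / len(pairs),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical true and predicted statuses for six projects.
y_true = ["successful", "successful", "delayed", "delayed", "failed", "failed"]
y_pred = ["successful", "successful", "delayed", "failed", "failed", "failed"]
scores = macro_scores(y_true, y_pred)
print({name: round(value, 3) for name, value in scores.items()})
```

Macro averaging weights each class equally, which matters when failed projects are much rarer than successful ones.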

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

ForouzeshNejad, A.A.; Arabikhan, F.; Gegov, A.; Jafari, R.; Ichtev, A. Data-Driven Predictive Modelling of Agile Projects Using Explainable Artificial Intelligence. Electronics 2025, 14, 2609. https://doi.org/10.3390/electronics14132609
