Evaluating HAS and Low-Latency Streaming Algorithms for Enhanced QoE

Abstract

1. Introduction

2. Related Work

2.1. Adaptive Bitrate Algorithms

2.2. QoE Factors

3. Experimental Setup

3.1. Video Data Set

3.2. Segment Length and Quality Representations

3.3. Evaluation Test Bed

3.4. ABR Algorithm Evaluation

- Throughput: This algorithm bases its decision on the measured network throughput. It takes the average throughput observed while downloading the previous video segment as its bandwidth estimate and uses that estimate to select the bitrate of the next video segment to be requested from the server [19].

- BOLA (Buffer Occupancy-based Lyapunov Algorithm): BOLA decides which bitrate to download based on the client buffer level, which is itself related to the network throughput. It selects a high bitrate when the buffer fill level is high and a low bitrate when the buffer level is low. The buffer-based algorithm is chosen by the video streaming provider. BOLA is suited to scenarios in which the bandwidth fluctuates [33].

- Dynamic: Dynamic is a hybrid algorithm that uses both throughput estimation and the buffer level. It switches smoothly between BOLA and the throughput rule in real time during streaming, which addresses the shortcomings of purely throughput-based and purely buffer-based algorithms [18].

- Learn2Adapt Low Latency (L2A-LL): L2A-LL is an adaptive bitrate (ABR) algorithm designed for low-latency streaming. It is based on the online convex optimization principle and aims to minimize the video’s latency. Compared to other ABR algorithms, L2A-LL provides a robust adaptation strategy: it does not require parameter tuning, channel model assumptions, or throughput assessments. These characteristics make L2A-LL well suited to users experiencing channel variations during streaming. Another feature of this algorithm is its modular architecture, which allows more QoE factors to be taken into account. These factors are categorized as stall, rebuffering, switching, and latency, and they cover various QoE objectives and streaming scenarios [7,8,34].

- Low on Latency (LOL+): This is a heuristic algorithm that uses learning principles to optimize its parameters for the best QoE. LOL+ makes an estimate at each segment boundary and predicts the highest achievable QoE. Its ABR module is implemented on a self-organizing map (SOM) model that takes various QoE metrics and network variations into consideration. The LOL+ playback speed control module is based on a hybrid algorithm that measures latency and the buffer level and manages the playback speed accordingly. The LOL+ QoE evaluation module is responsible for QoE computation based on metrics such as segment bitrate, switching, rebuffer events, latency, and playback speed [17,34].
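
The throughput-based, buffer-based, and hybrid decision rules described above can be illustrated with a minimal Python sketch. It is not the dash.js implementation. The safety factor, the buffer thresholds (`low_s`, `high_s`, `switch_s`), and the linear buffer-to-bitrate map are hypothetical simplifications; in particular, the linear map only stands in for BOLA's Lyapunov-based utility maximization. The ladder values are the animated-content bitrates from the representation table.

```python
from statistics import mean

# Animated-content bitrate ladder from the representation table, in kbit/s.
LADDER_KBPS = [50, 200, 600, 1200, 2500, 3000, 4000, 8000]


def throughput_rule(samples_kbps, safety=0.9):
    """Pick the highest rung that fits under a discounted throughput estimate.

    `safety` is an assumed margin, not a value taken from the paper.
    """
    estimate = safety * mean(samples_kbps)
    feasible = [i for i, rate in enumerate(LADDER_KBPS) if rate <= estimate]
    return feasible[-1] if feasible else 0


def buffer_rule(buffer_s, low_s=5.0, high_s=20.0):
    """Map the buffer level linearly onto the ladder between two thresholds.

    This is a simplified stand-in for BOLA, with assumed thresholds.
    """
    if buffer_s <= low_s:
        return 0
    if buffer_s >= high_s:
        return len(LADDER_KBPS) - 1
    fraction = (buffer_s - low_s) / (high_s - low_s)
    return int(fraction * (len(LADDER_KBPS) - 1))


def hybrid_rule(samples_kbps, buffer_s, switch_s=10.0):
    """Use the buffer rule when the buffer is comfortable, else throughput."""
    if buffer_s >= switch_s:
        return buffer_rule(buffer_s)
    return throughput_rule(samples_kbps)
```

With throughput samples of 3000 and 3400 kbit/s, `throughput_rule` selects index 4 (2.5 Mbit/s). `hybrid_rule` returns that same index while the buffer is below the assumed 10 s switch point and defers to `buffer_rule` once the buffer grows beyond it.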

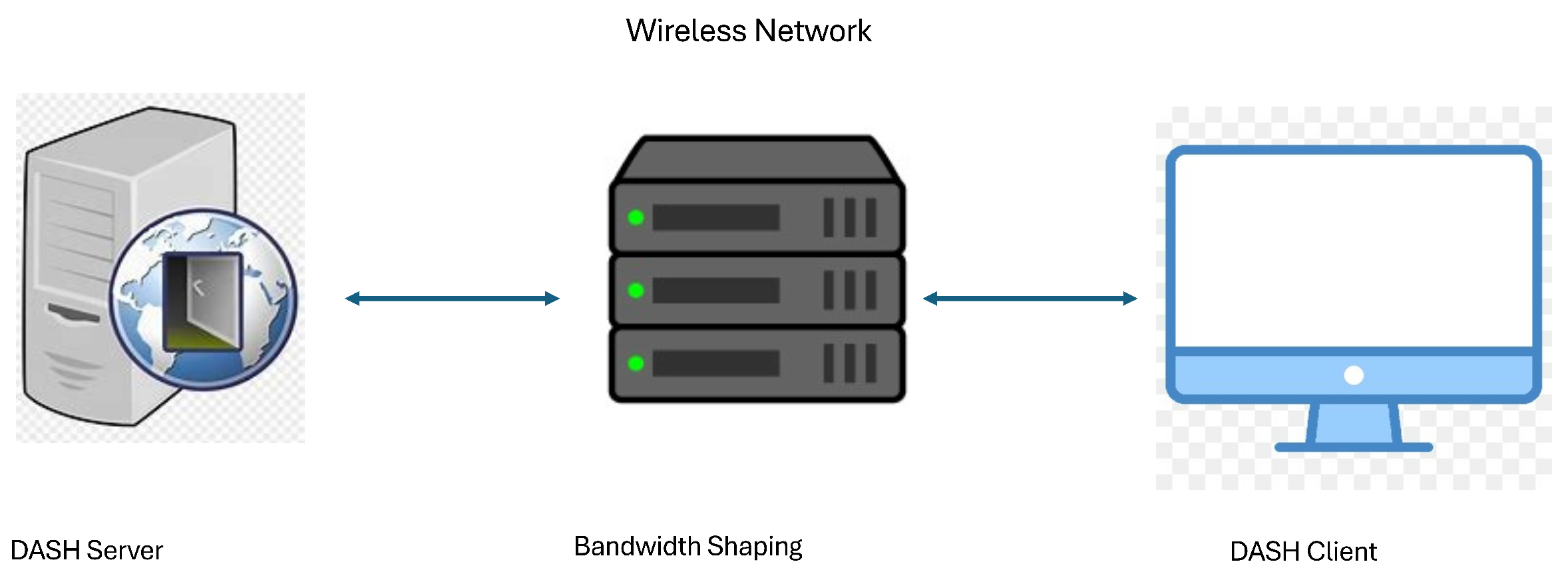

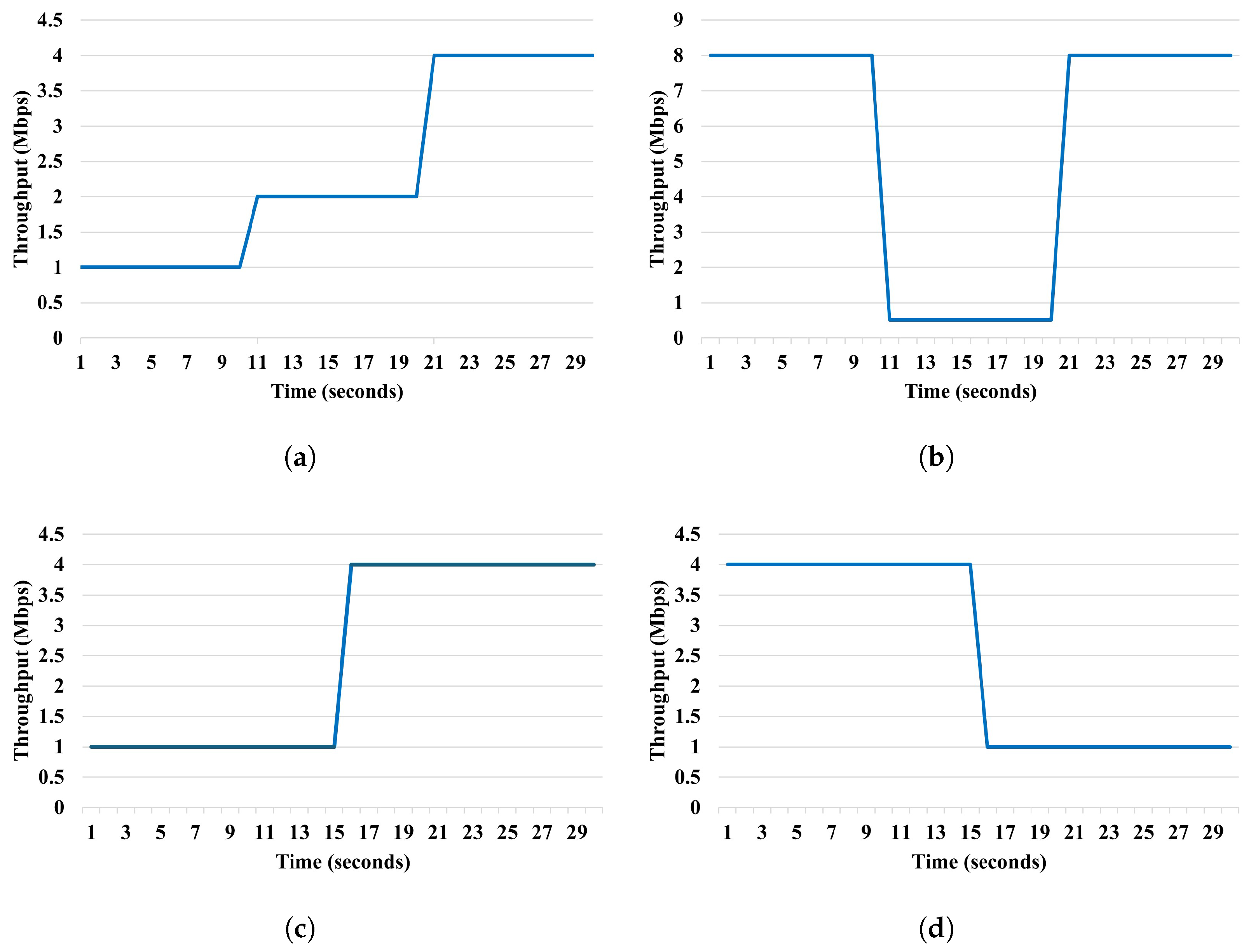

3.5. Network Simulation

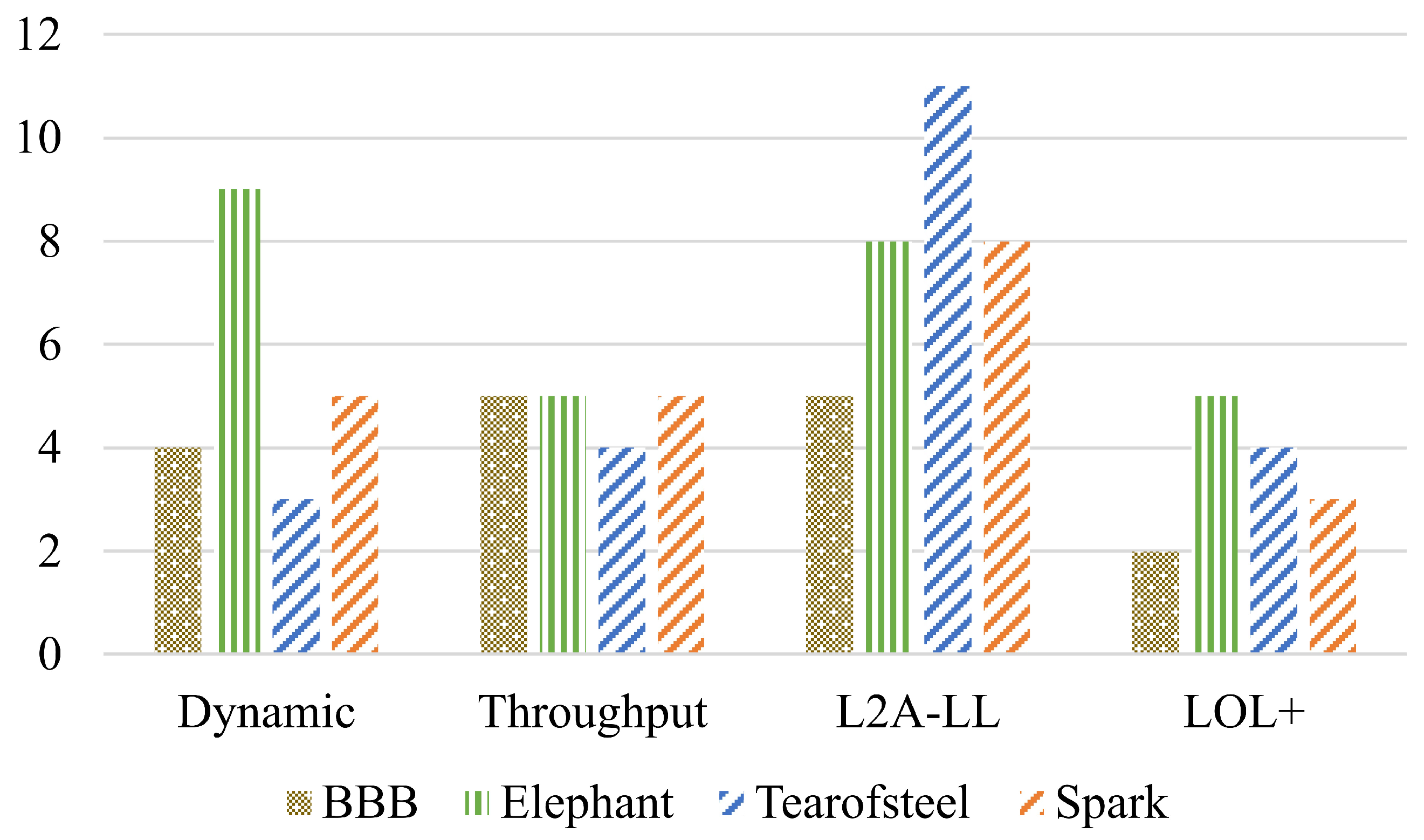

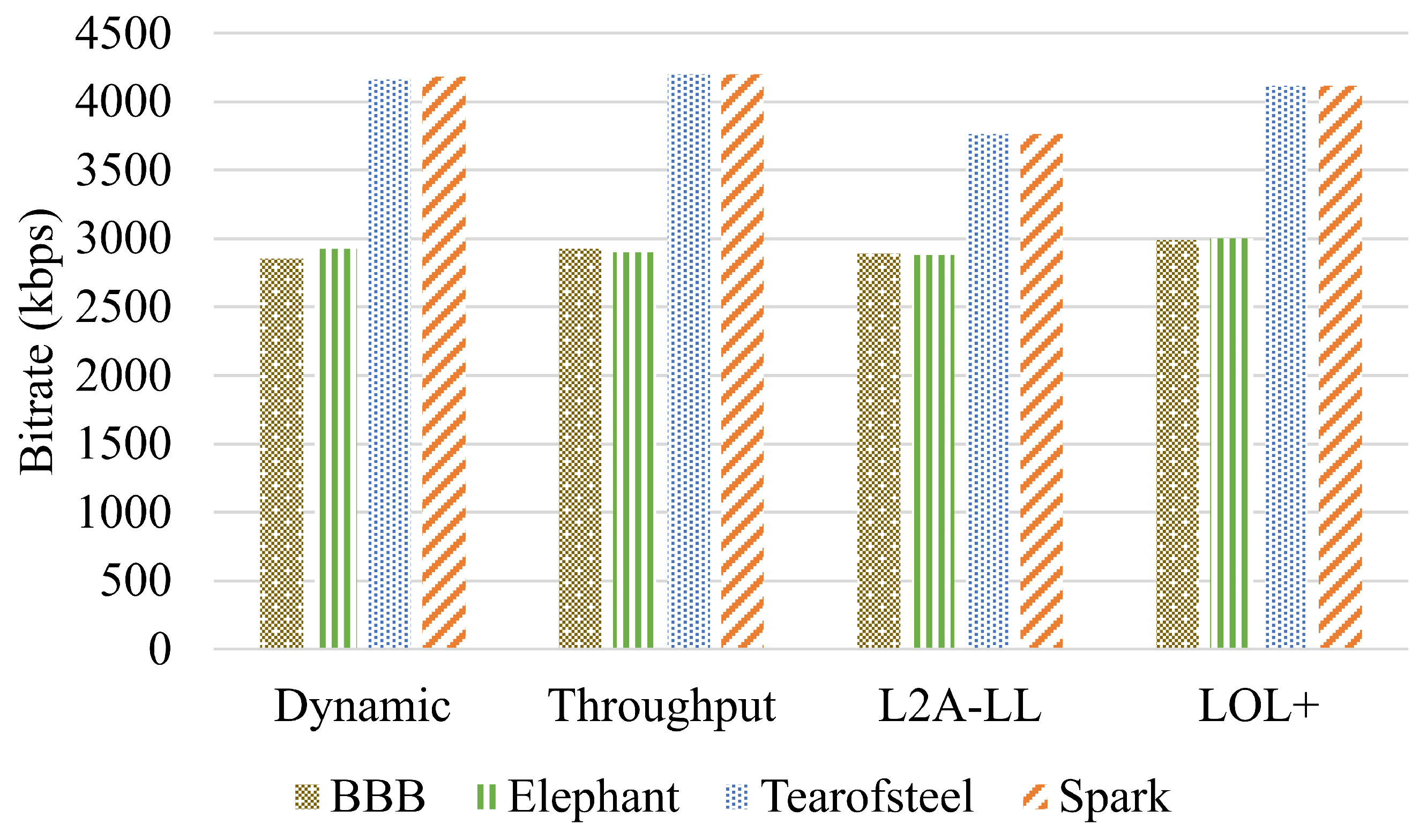

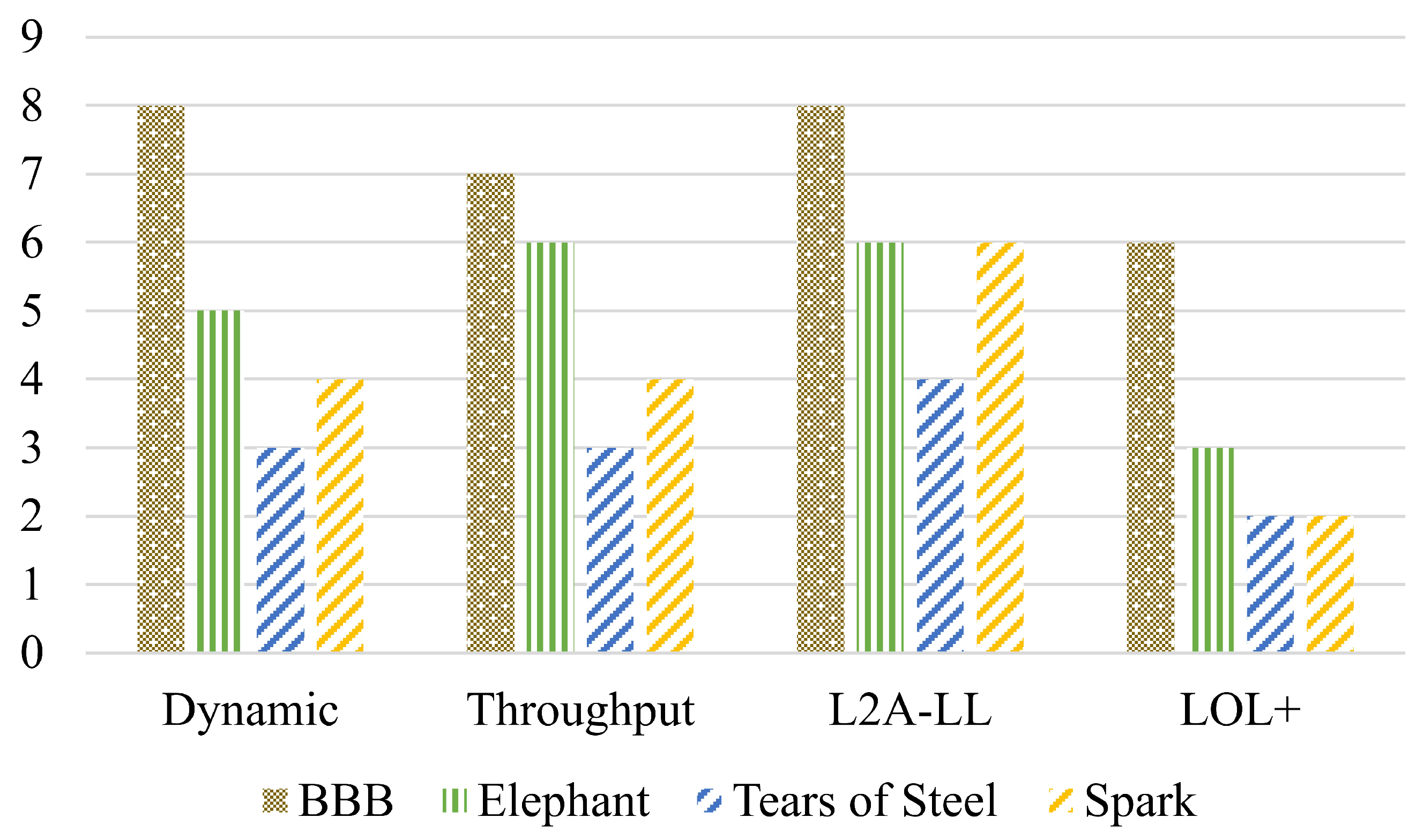

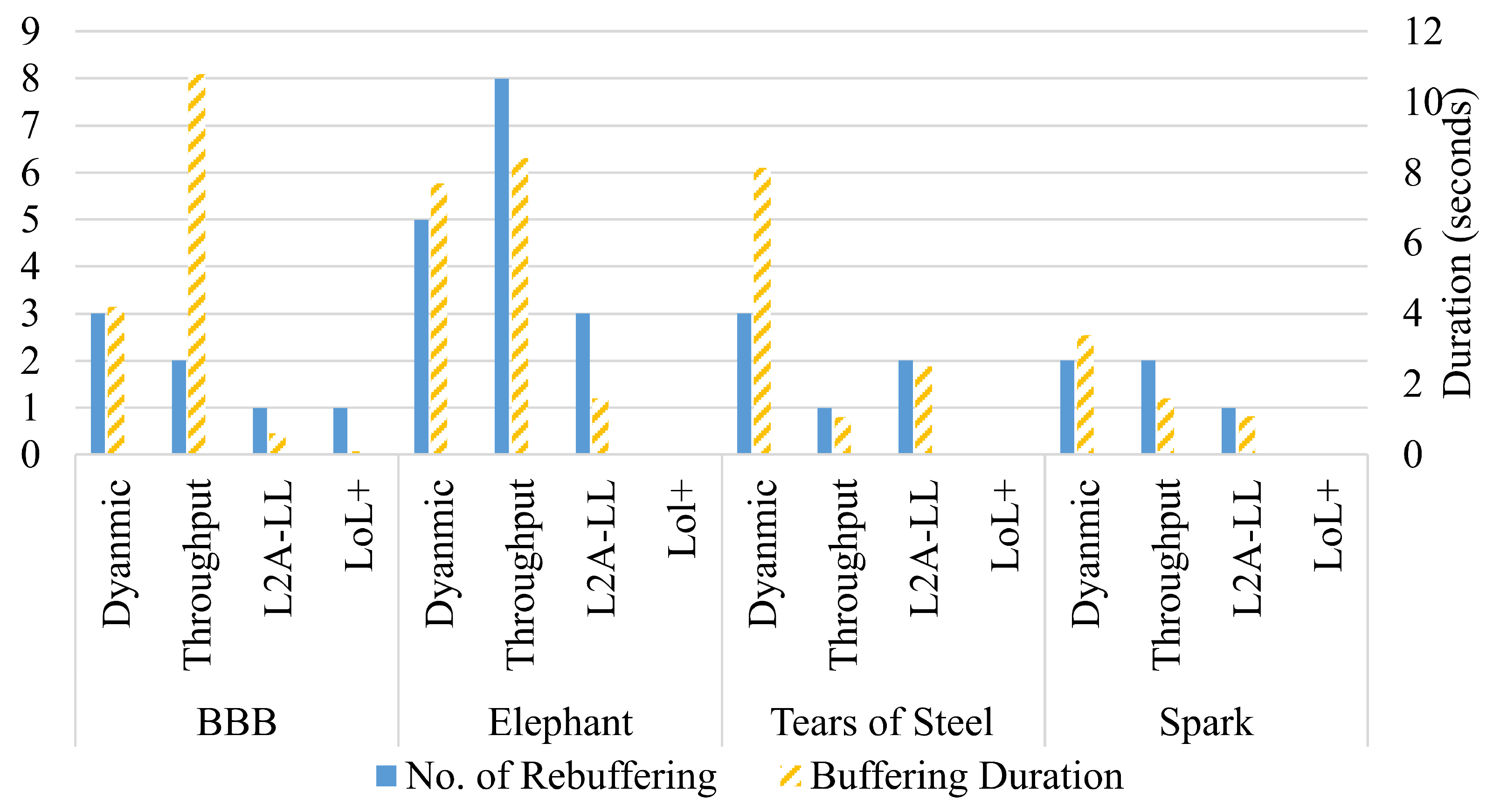

4. Results and Discussion

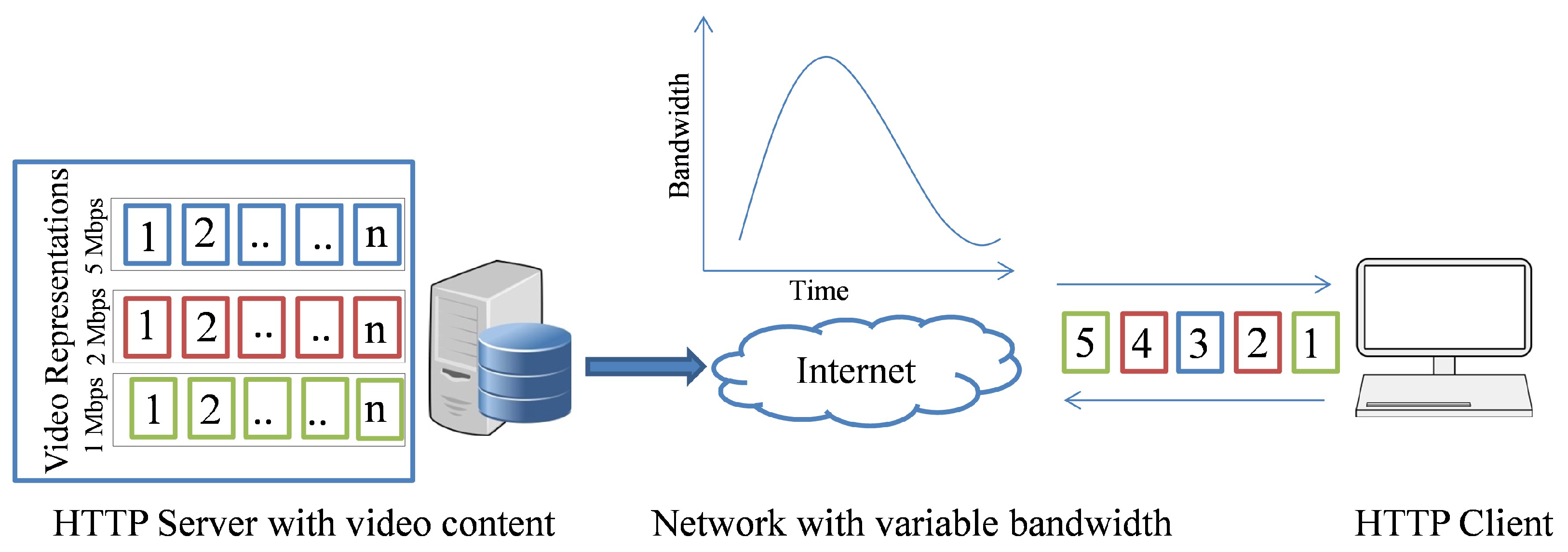

4.1. Dynamic Adaptive Streaming over HTTP

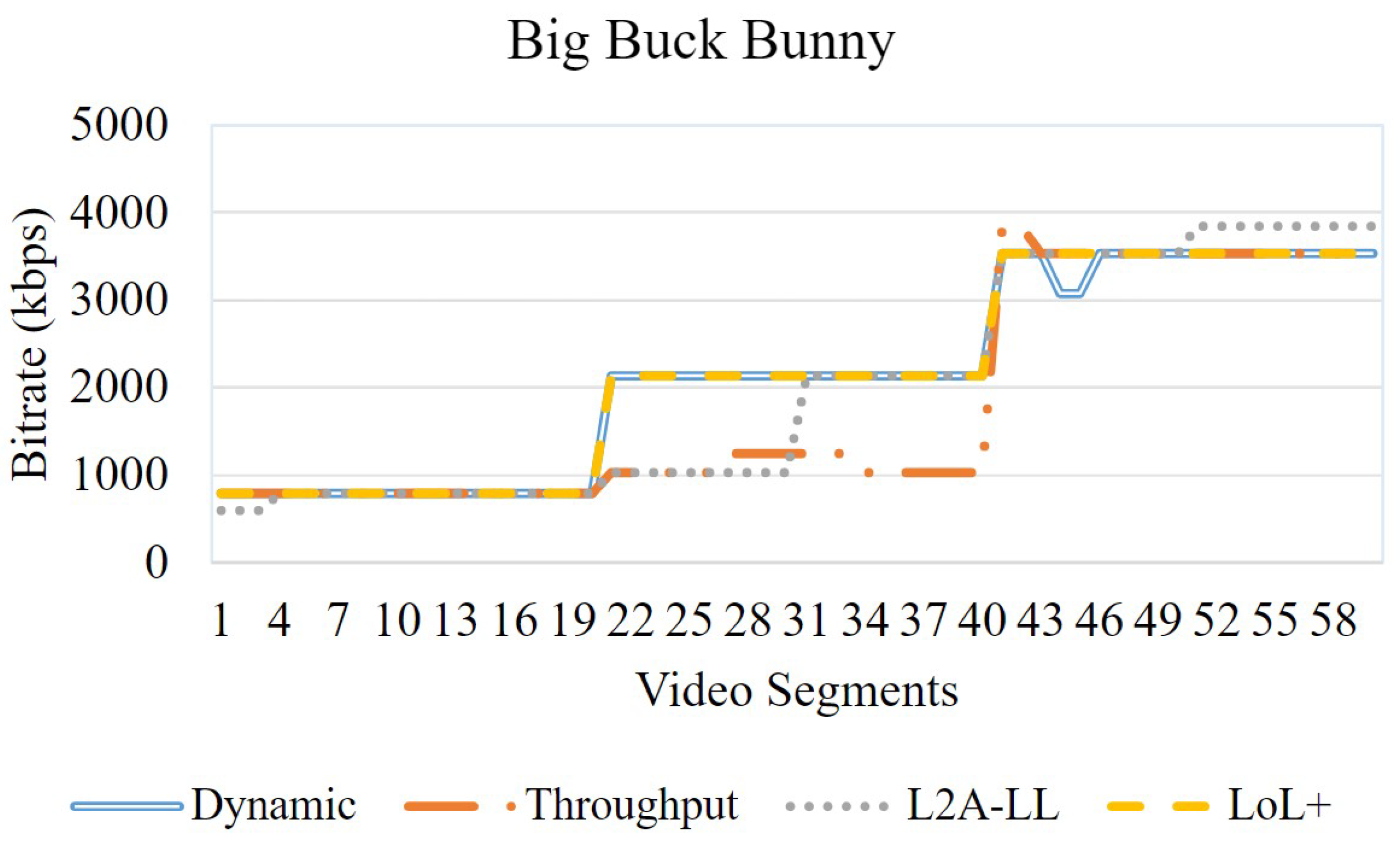

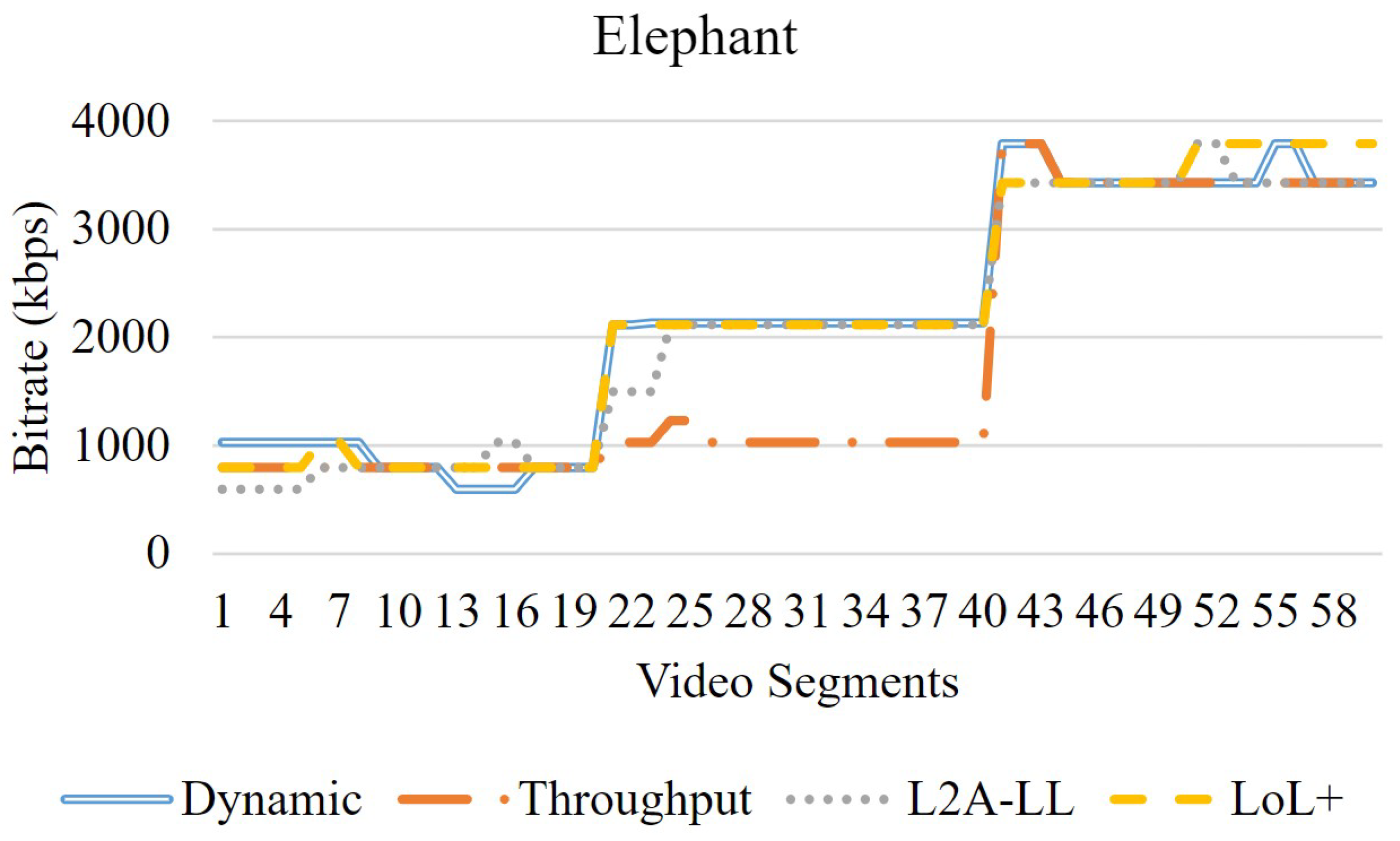

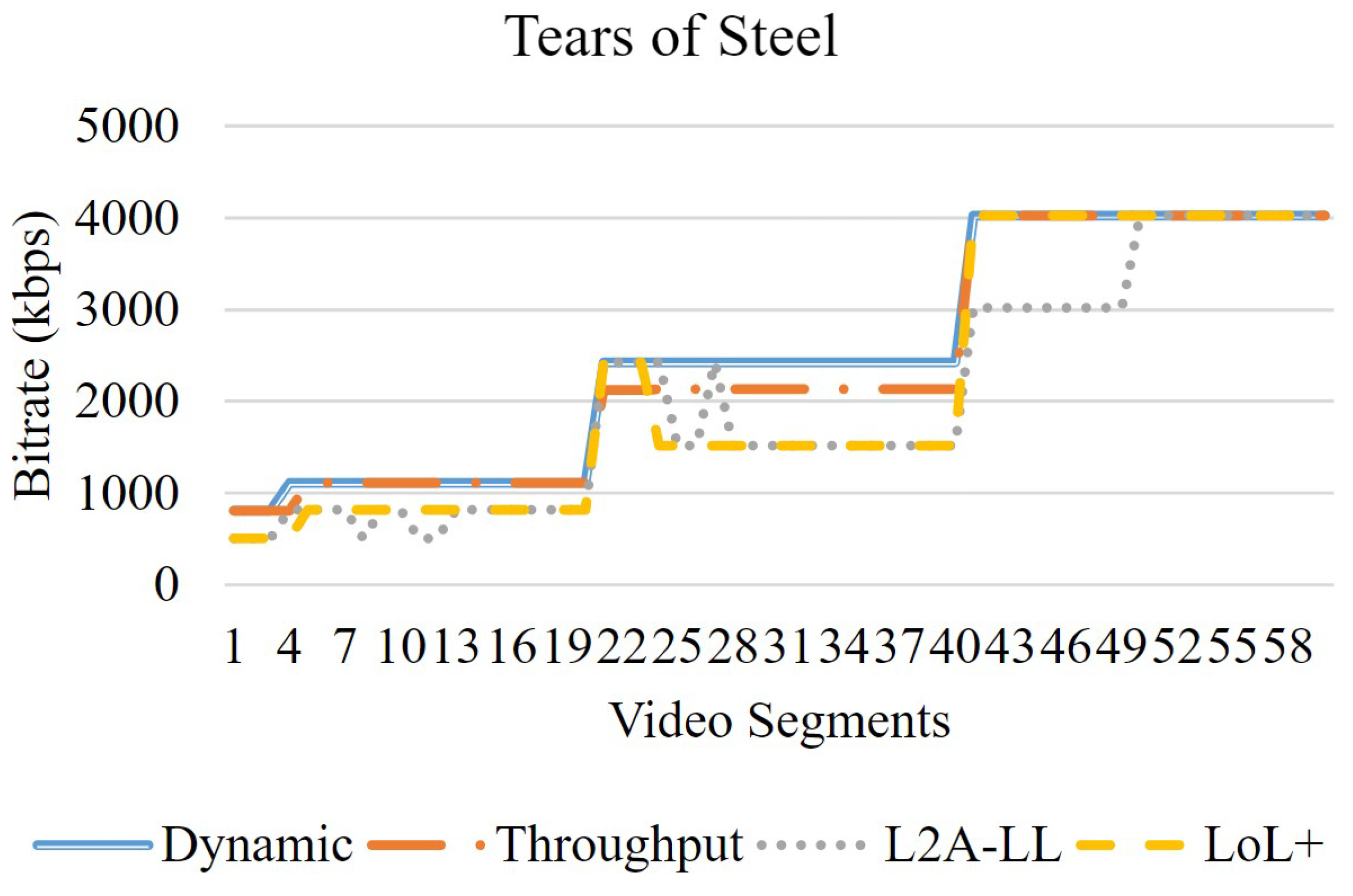

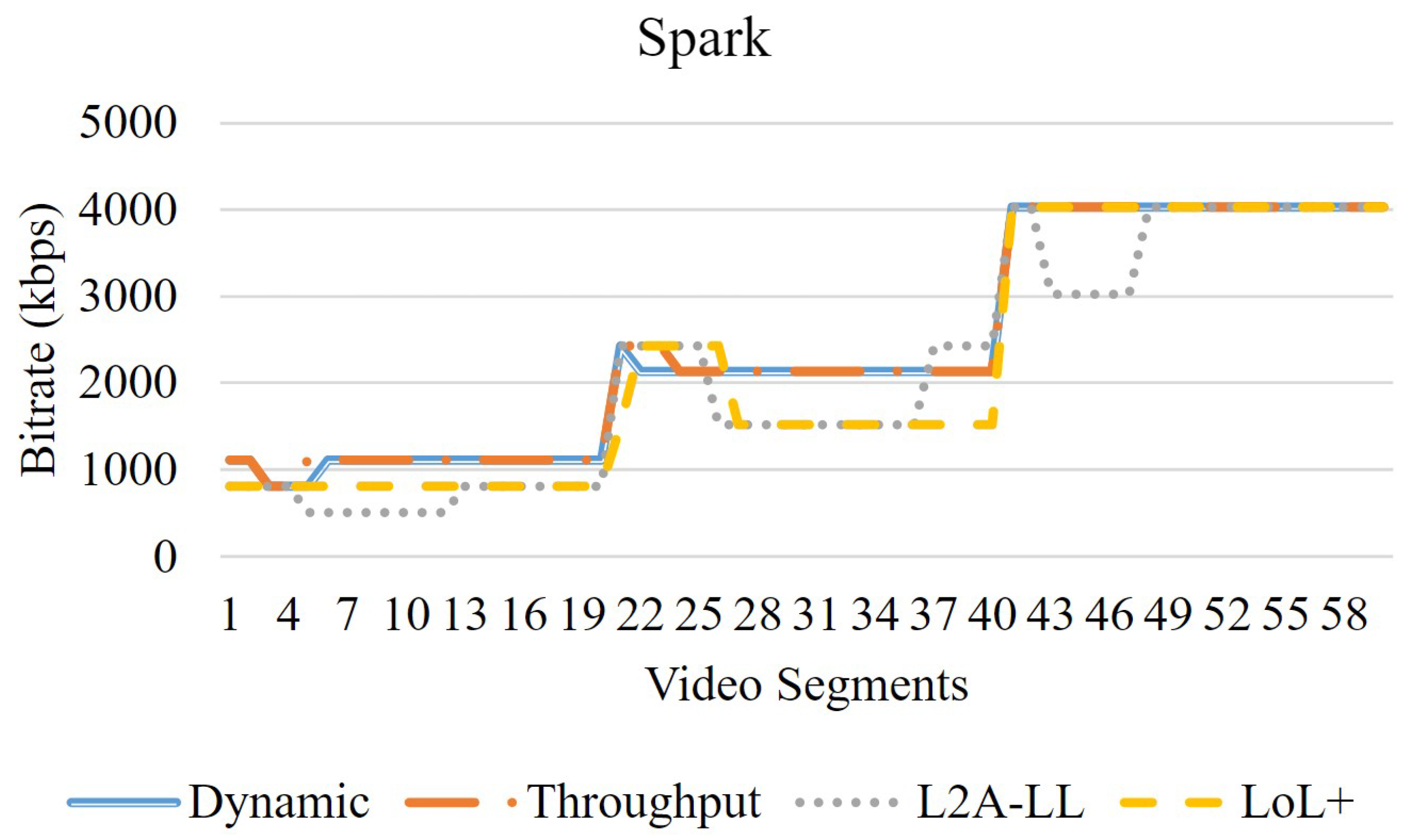

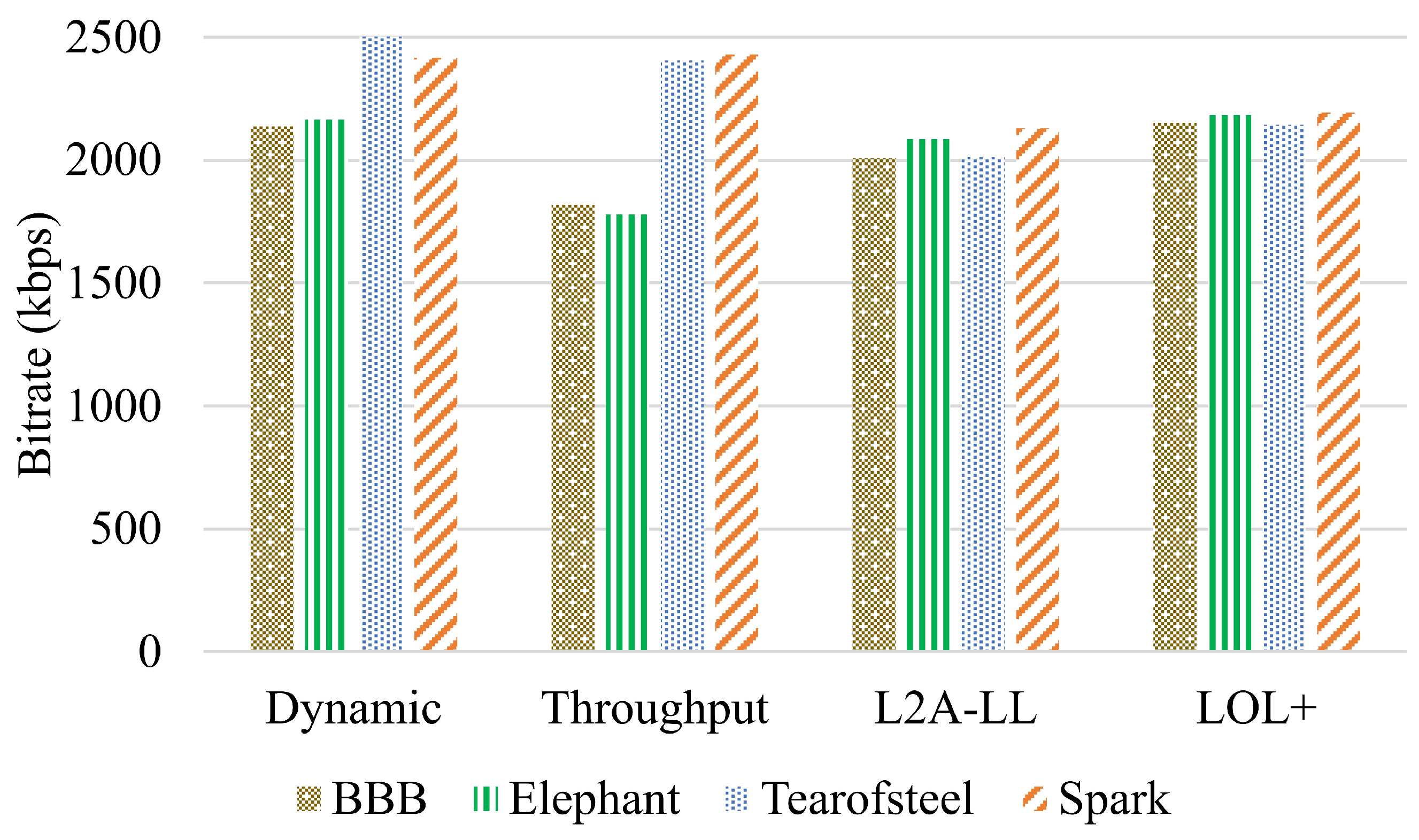

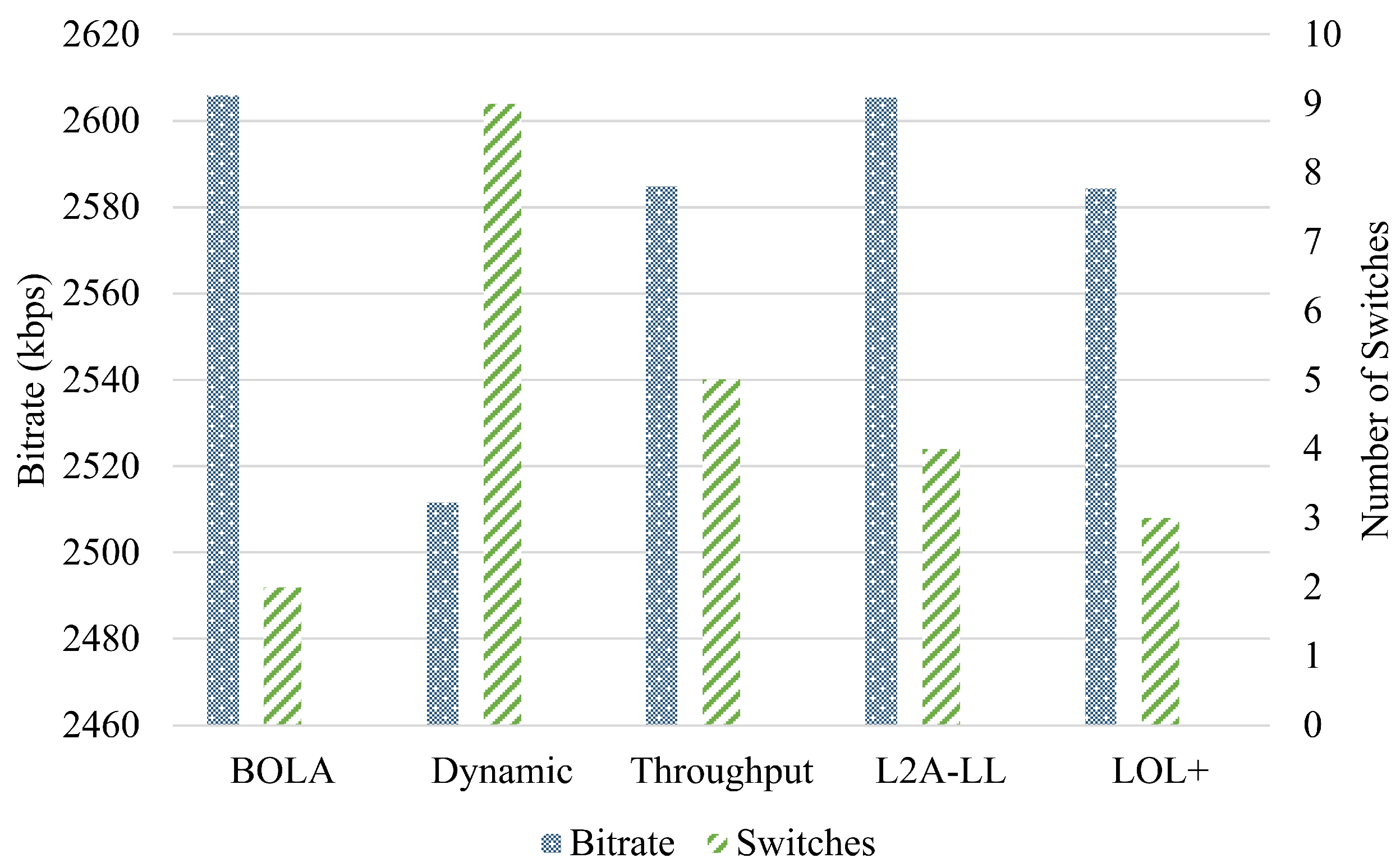

4.1.1. Analysis Under Network Profile 1

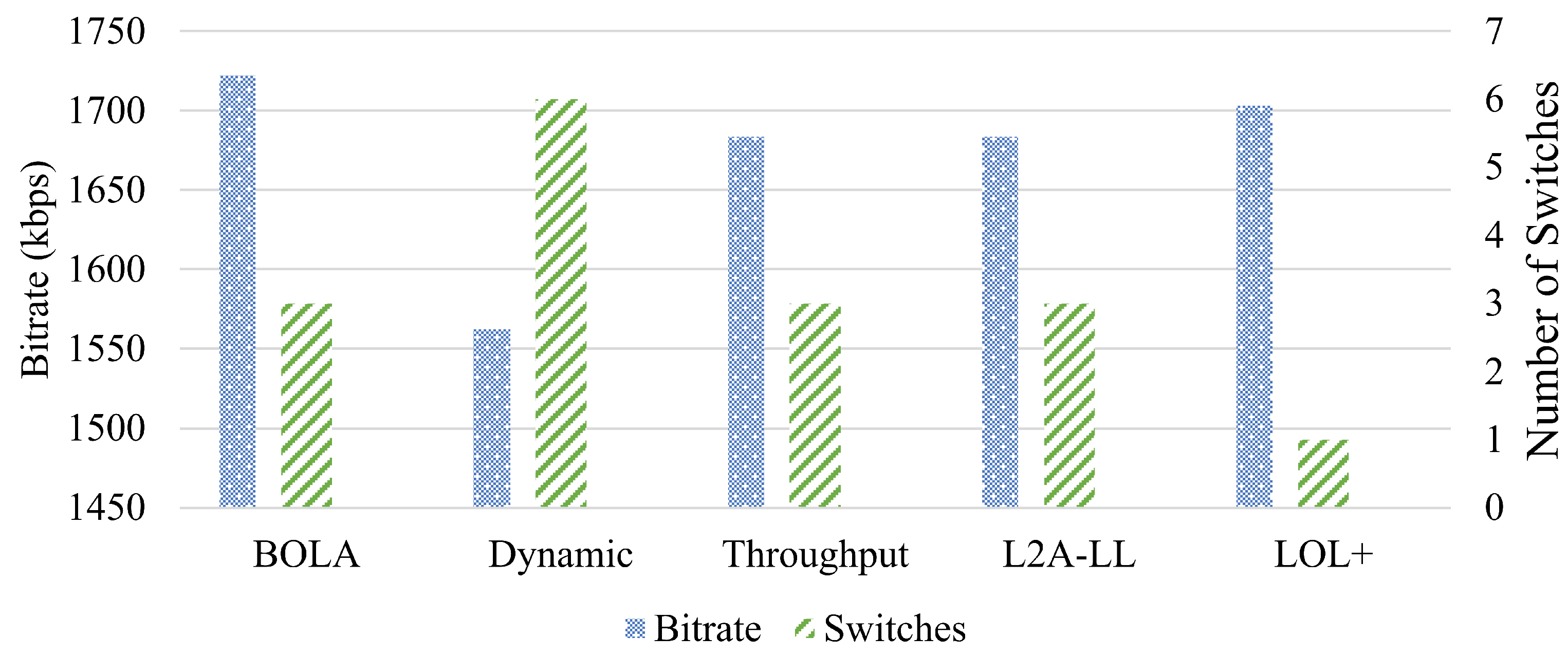

4.1.2. Analysis Under Network Profile 2

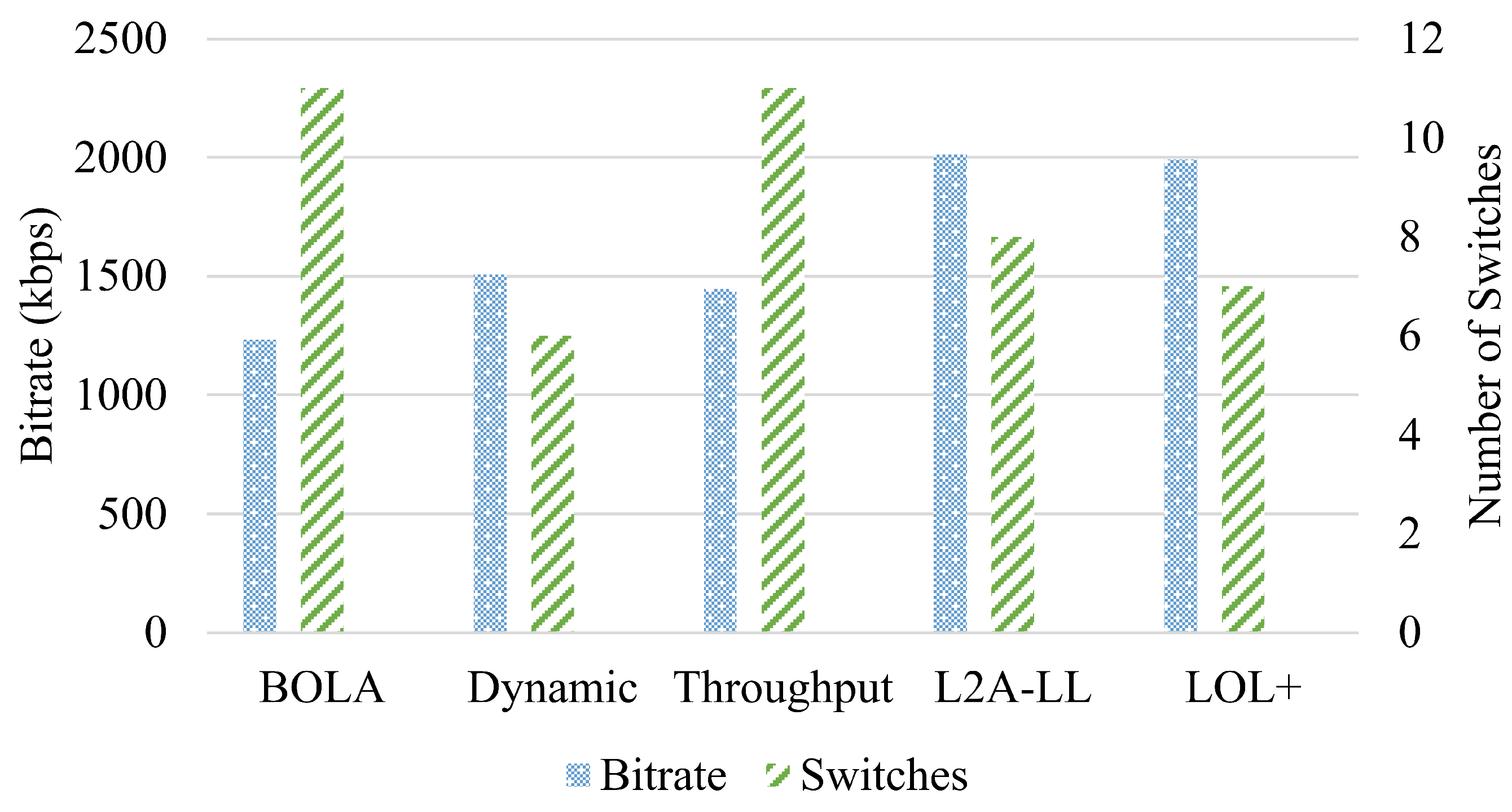

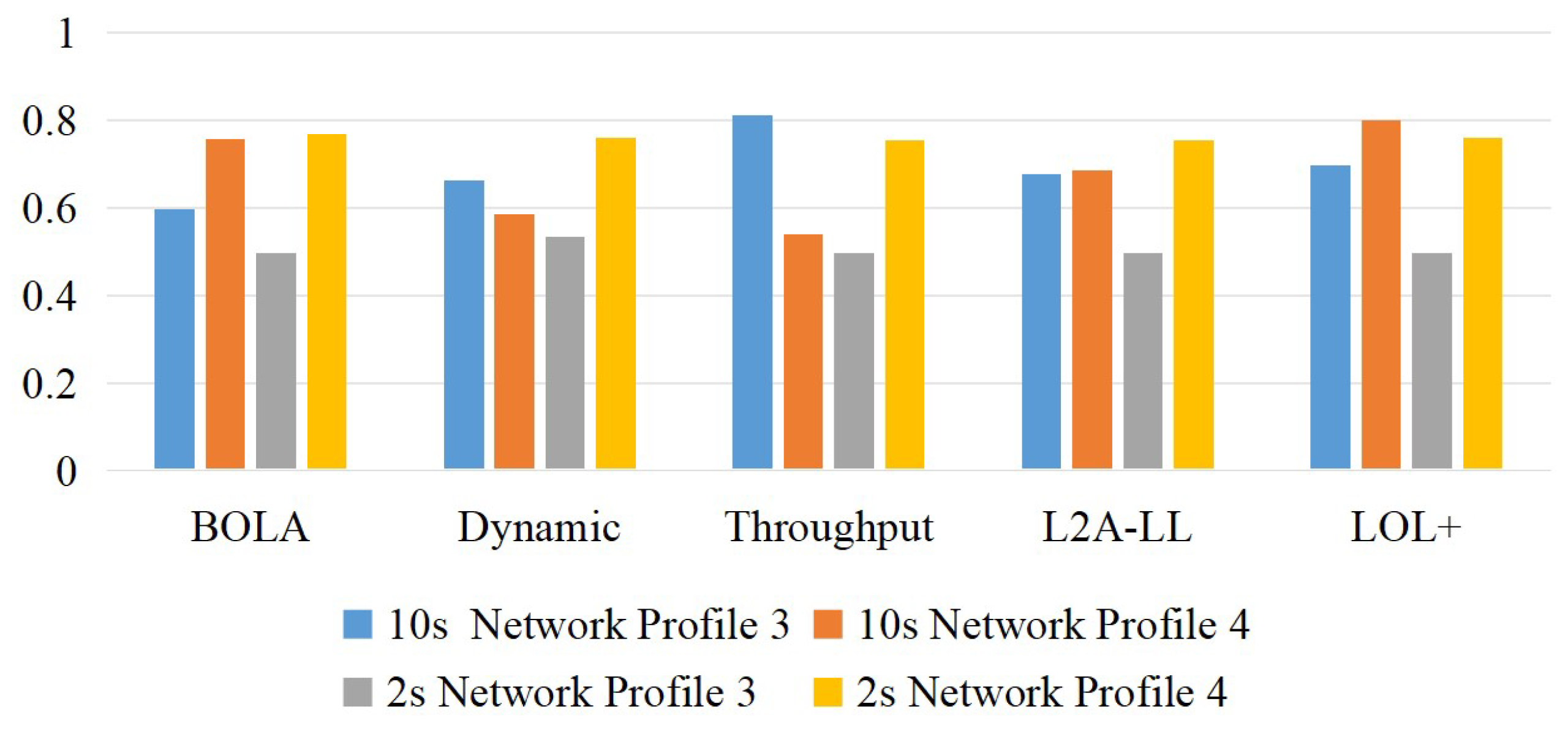

4.1.3. Impact of Segment Duration

- The 2 s Segments

- The 10 s Segments

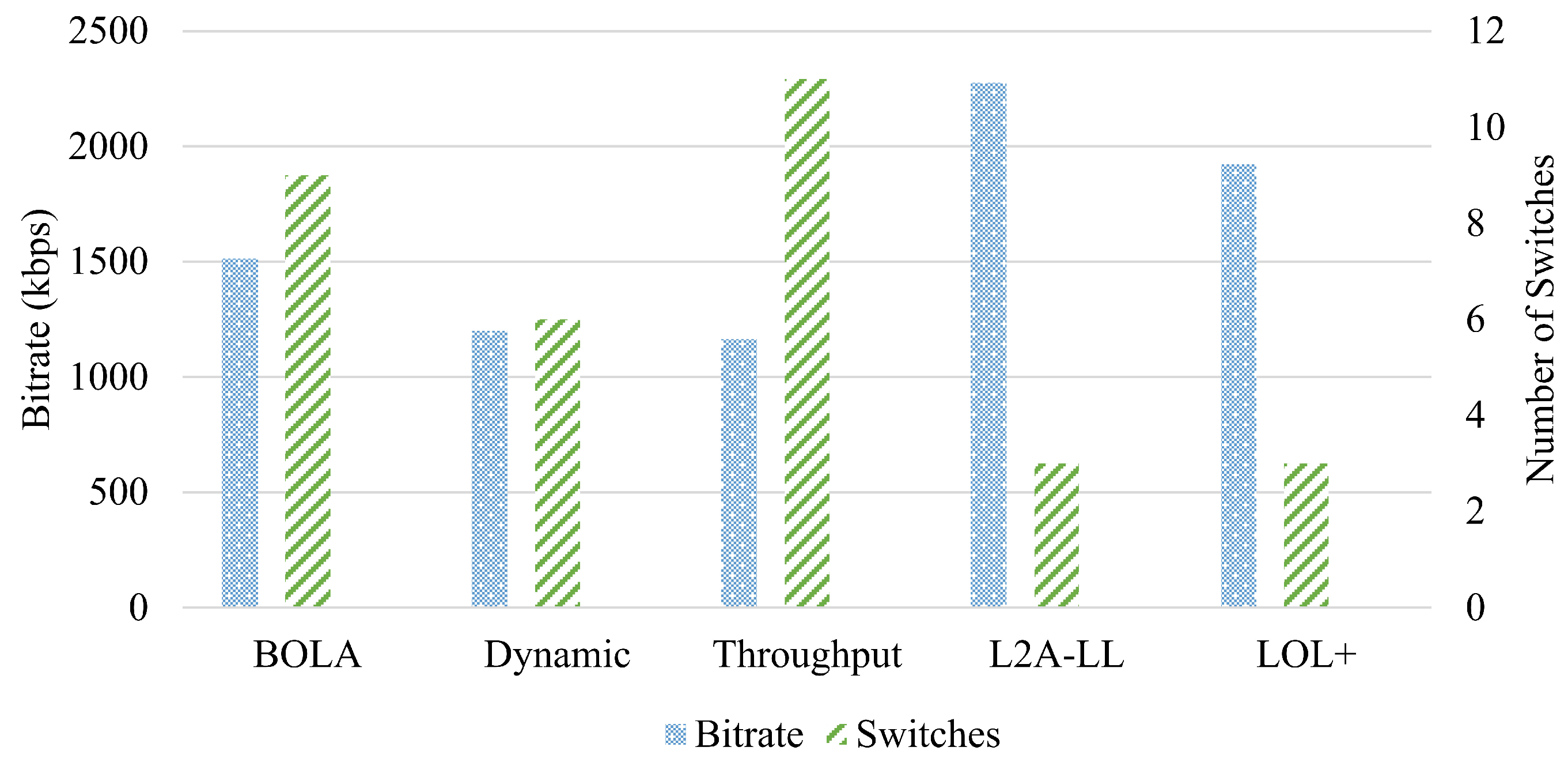

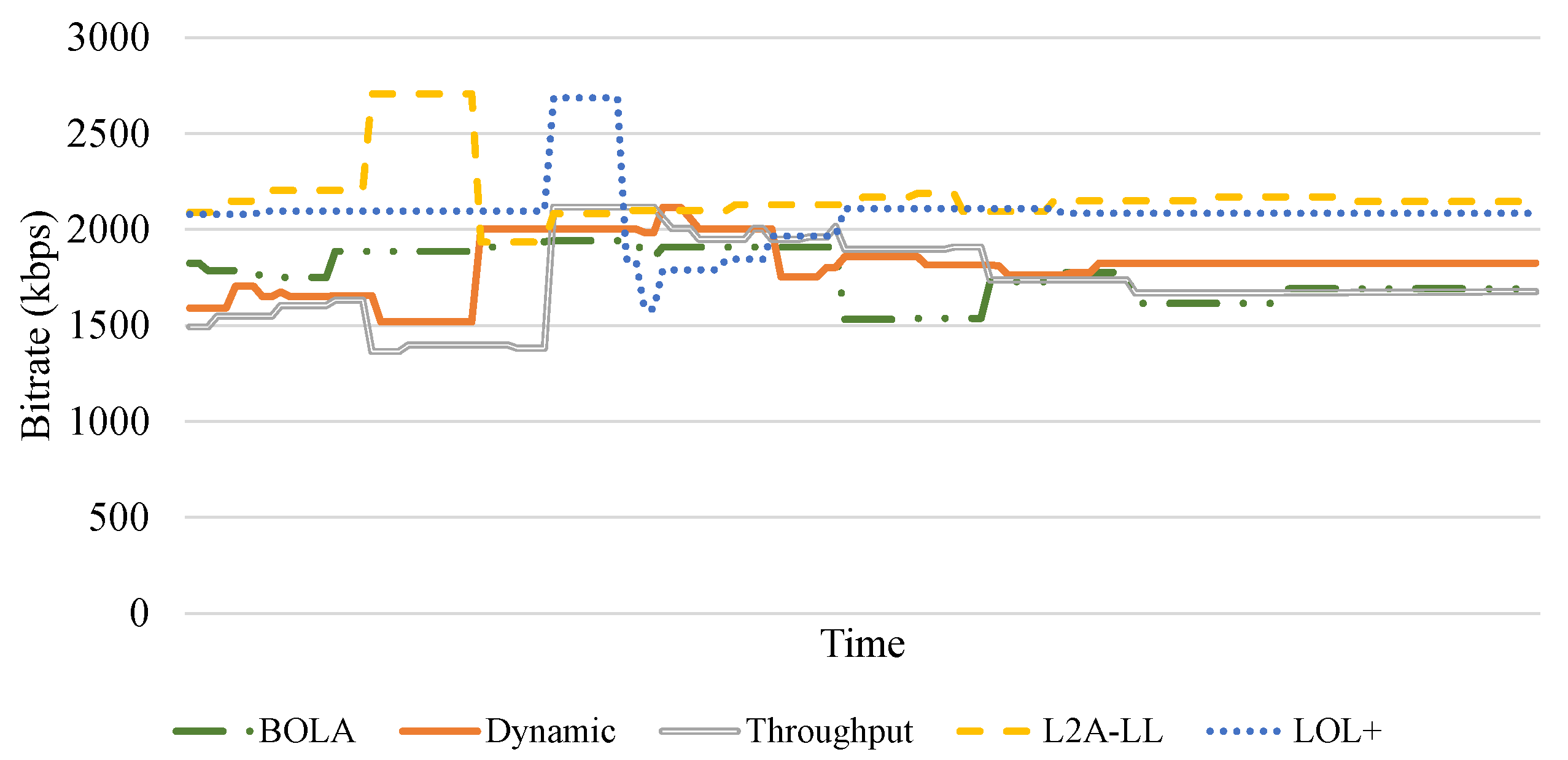

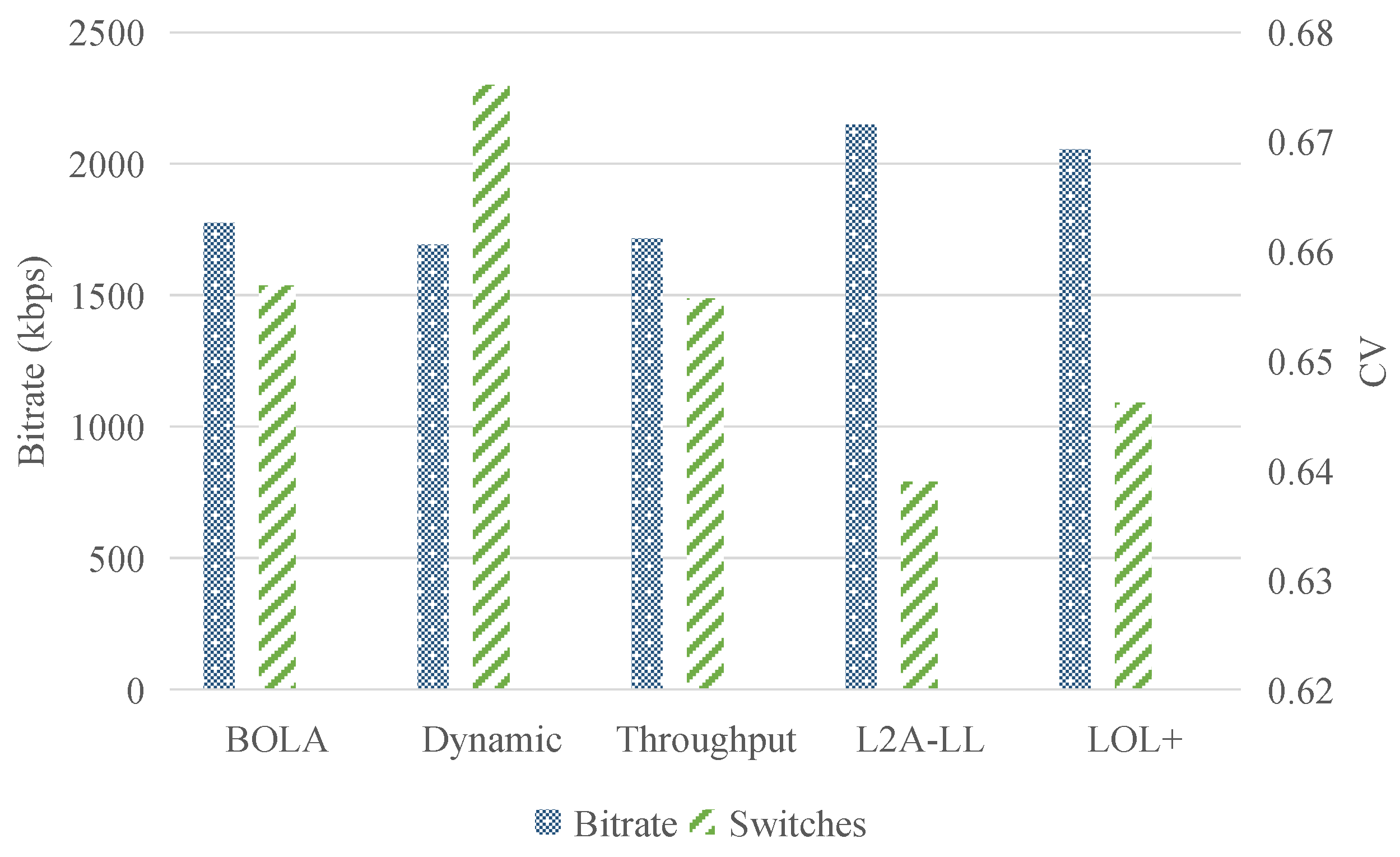

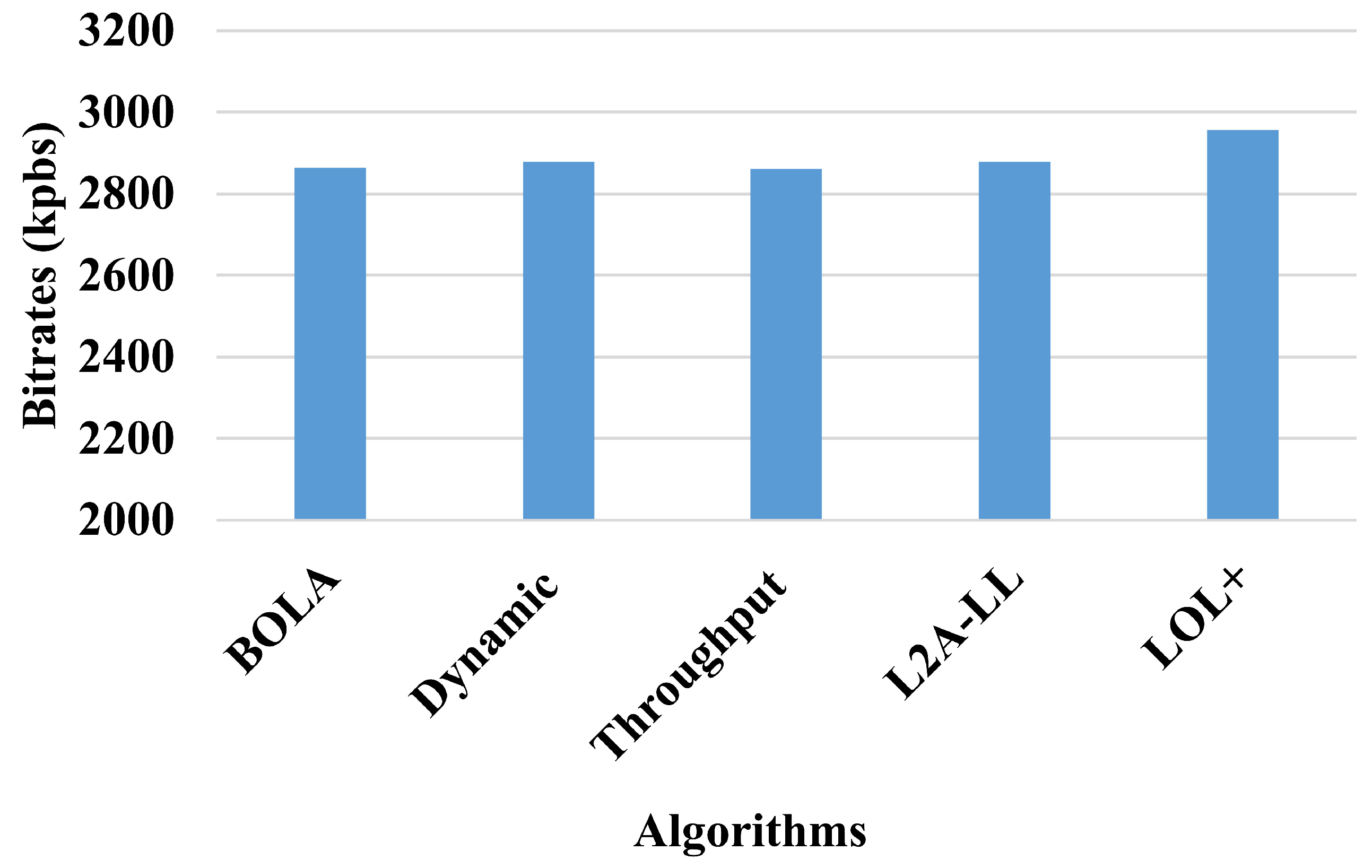

4.2. CMAF

Analysis Under Network Profile 1

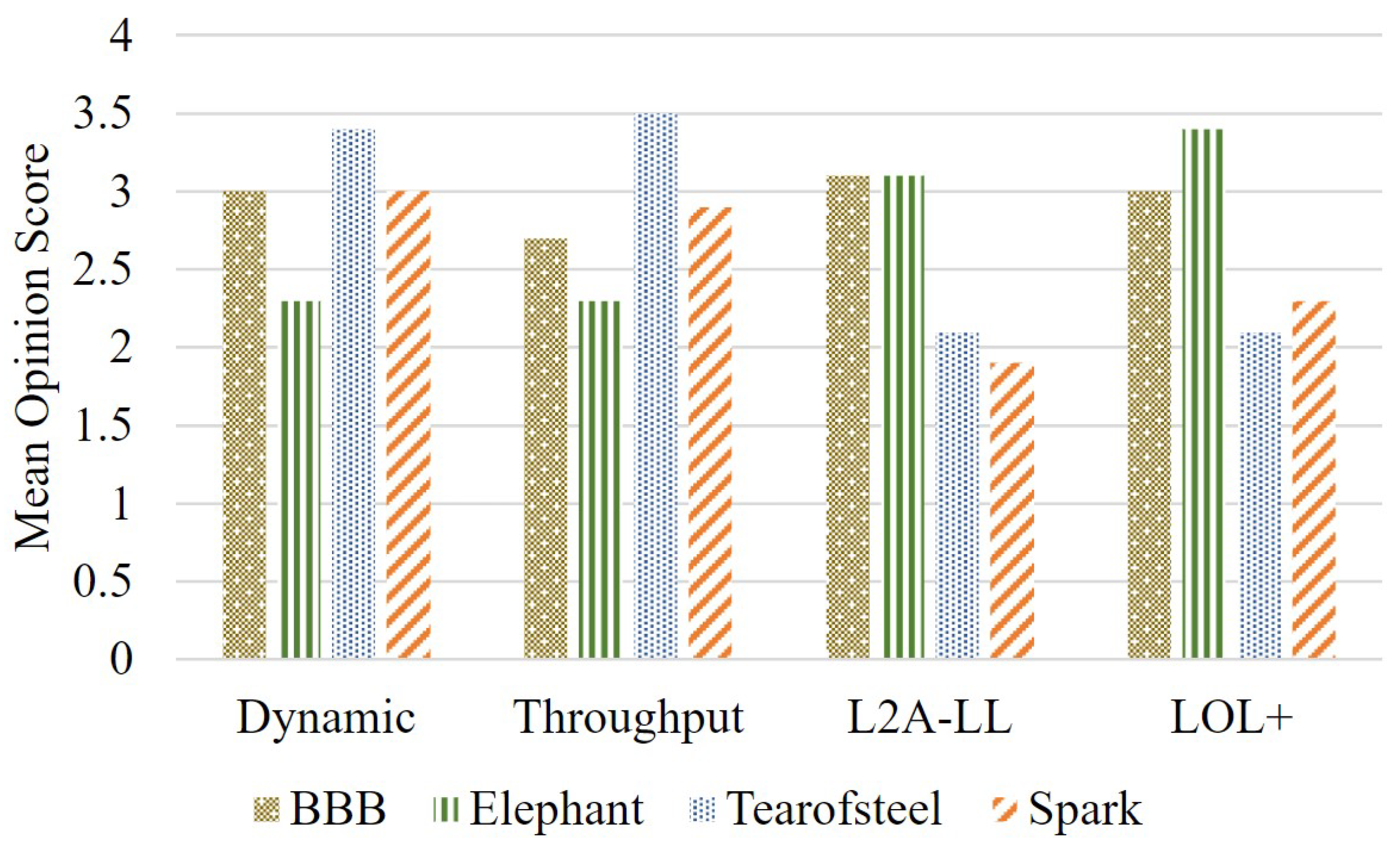

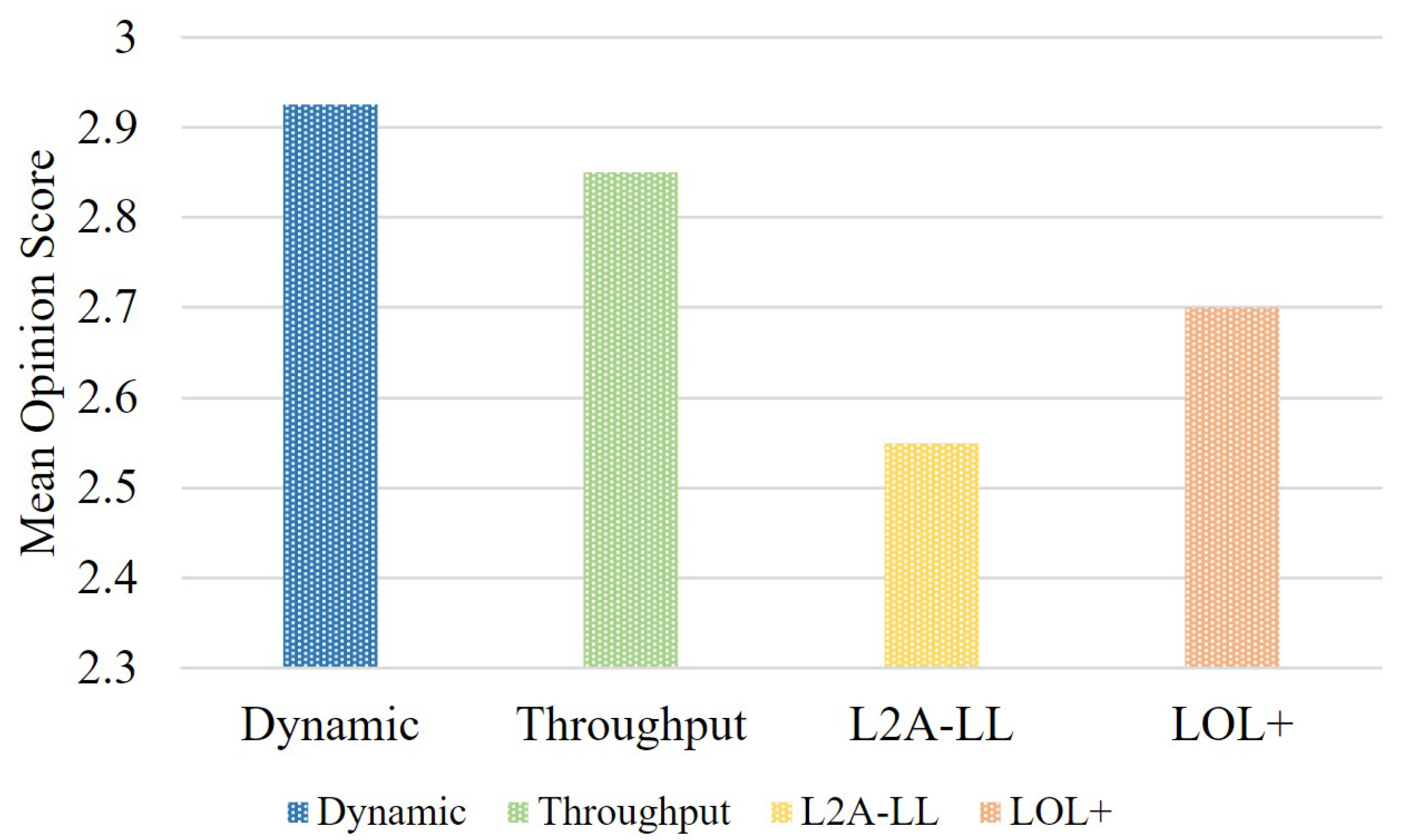

5. Qualitative Study

5.1. Subjective Evaluation Method

5.2. Subjective Evaluation Results

6. Analysis and Future Work

- Evaluation with Realistic Network Traces: We plan to use real-world cellular network throughput traces to evaluate the performance of ABR algorithms under more realistic and dynamic conditions, such as those described in [35].

- Comprehensive Subjective Analysis: While this work includes limited subjective evaluations, future efforts will involve subjective assessments of all experiments conducted, including those run on cellular network traces.

- Exploration of Latency Target Variability: In our current setup, the latency target (i.e., delay from the live edge) is fixed at 6 s. Future work will investigate the following:

  - How varying latency targets impact the user’s quality of experience (QoE);

  - The performance of different ABR algorithms in dash.js under various latency targets;

  - Strategies for dynamically selecting an optimal latency target based on client-side network conditions and device capabilities.

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kim, S.; Baek, H.; Kim, D.H. OTT and live streaming services: Past, present, and future. Telecommun. Policy 2021, 45, 102244.

2. Vlaovic, J.; Rimac-Drlje, S.; Žagar, D.; Filipović, L. Content dependent spatial resolution selection for MPEG DASH segmentation. J. Ind. Inf. Integr. 2021, 24, 100240.

3. Vlaovic, J.; Žagar, D.; Rimac-Drlje, S.; Vranjes, M. Evaluation of objective video quality assessment methods on video sequences with different spatial and temporal activity encoded at different spatial resolutions. Int. J. Electr. Comput. Eng. Syst. 2021, 12, 1–9.

4. İren, E.; Kantarci, A. Content Aware Video Streaming with MPEG DASH Technology. TEM J. 2022, 11, 611–619.

5. Alsabaan, M.; Alqhtani, W.; Taha, A. An Adaptive Quality Switch-aware Framework for Optimal Bitrate Video Streaming Delivery. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 570–579.

6. Klink, J.; Brachmański, S. An Impact of the Encoding Bitrate on the Quality of Streamed Video Presented on Screens of Different Resolutions. In Proceedings of the 2022 International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 22–24 September 2022; pp. 1–6.

7. Lyko, T.; Broadbent, M.; Race, N.; Nilsson, M.; Farrow, P.; Appleby, S. Improving quality of experience in adaptive low latency live streaming. Multimed. Tools Appl. 2024, 83, 15957–15983.

8. O’Hanlon, P.; Aslam, A. Latency Target based Analysis of the DASH.js Player. In Proceedings of the 14th ACM Multimedia Systems Conference (MMSys’23), Vancouver, BC, Canada, 7–10 June 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 153–160.

9. Erfanian, A. Optimizing QoE and Latency of Live Video Streaming Using Edge Computing and In-Network Intelligence. In Proceedings of the 12th ACM Multimedia Systems Conference (MMSys’21), Istanbul, Turkey, 28 September–1 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 373–377.

10. Xie, G.; Chen, H.; Yu, F.; Xie, L. Impact of playout buffer dynamics on the QoE of wireless adaptive HTTP progressive video. ETRI J. 2021, 43, 447–458.

11. Taraghi, B.; Hellwagner, H.; Timmerer, C. LLL-CAdViSE: Live Low-Latency Cloud-based Adaptive Video Streaming Evaluation Framework. IEEE Access 2023, 11, 25723–25734.

12. Silhavy, D.; Pham, S.; Arbanowski, S.; Steglich, S.; Harrer, B. Latest advances in the development of the open-source player dash.js. In Proceedings of the 1st Mile-High Video Conference (MHV’22), Denver, CO, USA, 1–3 March 2023; Association for Computing Machinery: New York, NY, USA, 2022; pp. 32–38.

13. Bentaleb, A.; Taani, B.; Begen, A.; Timmerer, C.; Zimmermann, R. A Survey on Bitrate Adaptation Schemes for Streaming Media over HTTP. IEEE Commun. Surv. Tutor. 2018, 21, 562–585.

14. Bentaleb, A.; Timmerer, C.; Begen, A.C.; Zimmermann, R. Bandwidth prediction in low-latency chunked streaming. In Proceedings of the 29th ACM Workshop on Network and Operating Systems Support for Digital Audio and Video (NOSSDAV’19), Amherst, MA, USA, 21 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 7–13.

15. Gutterman, C.L.; Fridman, B.; Gilliland, T.; Hu, Y.; Zussman, G. Stallion: Video adaptation algorithm for low-latency video streaming. In Proceedings of the 11th ACM Multimedia Systems Conference, Istanbul, Turkey, 8–11 June 2020.

16. Karagkioules, T.; Mekuria, R.; Griffioen, D.; Wagenaar, A. Online learning for low-latency adaptive streaming. In Proceedings of the 11th ACM Multimedia Systems Conference (MMSys’20), Istanbul, Turkey, 8–11 June 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 315–320.

17. Bentaleb, A.; Akcay, M.N.; Lim, M.; Begen, A.C.; Zimmermann, R. Catching the Moment with LoL+ in Twitch-Like Low-Latency Live Streaming Platforms. IEEE Trans. Multimed. 2022, 24, 2300–2314.

18. Spiteri, K.; Sitaraman, R.; Sparacio, D. From Theory to Practice: Improving Bitrate Adaptation in the DASH Reference Player. ACM Trans. Multimedia Comput. Commun. Appl. 2019, 15, 1–29.

19. Duanmu, Z.; Liu, W.; Li, Z.; Chen, D.; Wang, Z.; Wang, Y.; Gao, W. Assessing the Quality-of-Experience of Adaptive Bitrate Video Streaming. arXiv 2020, arXiv:2008.08804.

20. Rodrigues, R.; Počta, P.; Melvin, H.; Bernardo, M.; Pereira, M.; Pinheiro, A. Audiovisual Quality of Live Music Streaming over Mobile Networks using MPEG-DASH. Multimed. Tools Appl. 2020, 79, 24595–24619.

21. Rahman, W.u.; Huh, E.N. Content-aware QoE optimization in MEC-assisted Mobile video streaming. Multimed. Tools Appl. 2023, 82, 42053–42085.

22. Taraghi, B.; Haack, S.Z.; Timmerer, C. Towards Better Quality of Experience in HTTP Adaptive Streaming. In Proceedings of the 2022 16th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Dijon, France, 19–21 October 2022; pp. 608–615.

23. Taraghi, B.; Nguyen, M.; Amirpour, H.; Timmerer, C. Intense: In-Depth Studies on Stall Events and Quality Switches and Their Impact on the Quality of Experience in HTTP Adaptive Streaming. IEEE Access 2021, 9, 118087–118098.

24. Lebreton, P.; Yamagishi, K. Quitting Ratio-Based Bitrate Ladder Selection Mechanism for Adaptive Bitrate Video Streaming. IEEE Trans. Multimed. 2023, 25, 8418–8431.

25. Lebreton, P.; Yamagishi, K. Predicting User Quitting Ratio in Adaptive Bitrate Video Streaming. IEEE Trans. Multimed. 2020, 23, 4526–4540.

26. Nguyen, M.; Vats, S.; Van Damme, S.; Van Der Hooft, J.; Vega, M.T.; Wauters, T.; Timmerer, C.; Hellwagner, H. Impact of Quality and Distance on the Perception of Point Clouds in Mixed Reality. In Proceedings of the 2023 15th International Conference on Quality of Multimedia Experience (QoMEX), Ghent, Belgium, 20–22 June 2023; pp. 87–90.

27. Vats, S.; Nguyen, M.; Van Damme, S.; van der Hooft, J.; Vega, M.T.; Wauters, T.; Timmerer, C.; Hellwagner, H. A Platform for Subjective Quality Assessment in Mixed Reality Environments. In Proceedings of the 2023 15th International Conference on Quality of Multimedia Experience (QoMEX), Ghent, Belgium, 20–22 June 2023; pp. 131–134.

28. Vlaović, J.; Žagar, D.; Rimac-Drlje, S.; Filipović, L. Comparison of representation switching number and achieved bit-rate in DASH algorithms. In Proceedings of the 2020 International Conference on Smart Systems and Technologies (SST), Osijek, Croatia, 14–16 October 2020; pp. 17–22.

29. Allard, J.; Roskuski, A.; Claypool, M. Measuring and modeling the impact of buffering and interrupts on streaming video quality of experience. In Proceedings of the 18th International Conference on Advances in Mobile Computing & Multimedia (MoMM’20), Chiang Mai, Thailand, 30 November–2 December 2020; Association for Computing Machinery: New York, NY, USA, 2021; pp. 153–160.

30. Lederer, S.; Müller, C.; Timmerer, C. Dynamic adaptive streaming over HTTP dataset. In Proceedings of the 3rd Multimedia Systems Conference (MMSys’12), Chapel Hill, NC, USA, 22–24 February 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 89–94.

31. Sani, Y.; Mauthe, A.; Edwards, C. Adaptive Bitrate Selection: A Survey. IEEE Commun. Surv. Tutor. 2017, 19, 2985–3014.

32. Tadahal, S.S.; Gummadi, S.V.; Prajapat, K.; Meena, S.M.; Kulkarni, U.; Gurlahosur, S.V.; Vyakaranal, S. A Survey on Adaptive Bitrate Algorithms and Their Improvisations. In Proceedings of the 2021 International Conference on Intelligent Technologies (CONIT), Hubli, India, 25–27 June 2021; pp. 1–7.

33. Marx, E.; Yan, F.Y.; Winstein, K. Implementing BOLA-BASIC on Puffer: Lessons for the use of SSIM in ABR logic. arXiv 2020, arXiv:2011.09611.

34. Lim, M.; Akcay, M.N.; Bentaleb, A.; Begen, A.C.; Zimmermann, R. When they go high, we go low: Low-latency live streaming in dash.js with LoL. In Proceedings of the 11th ACM Multimedia Systems Conference (MMSys’20), Istanbul, Turkey, 8–11 June 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 321–326.

35. Müller, C.; Lederer, S.; Timmerer, C. An evaluation of dynamic adaptive streaming over HTTP in vehicular environments. In Proceedings of the 4th Workshop on Mobile Video (MoVid’12), Chapel Hill, NC, USA, 24 February 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 37–42.

| Feature | O’Hanlon et al. [8] | Rahman et al. [21] | Lyko et al. [7] | This Study |
|---|---|---|---|---|
| Low-latency algorithms | ✓ | × | ✓ | ✓ |
| DASH algorithms | × | ✓ | × | ✓ |
| Various network conditions | × | ✓ | × | ✓ |
| CMAF format | ✓ | × | ✓ | ✓ |
| Range of video content | × | × | ✓ | ✓ |
| Various segment lengths | × | ✓ | × | ✓ |
| Objective QoE analysis | ✓ | ✓ | ✓ | ✓ |
| Subjective QoE analysis | × | × | ✓ | ✓ |
| Real-World test bed | ✓ | × | × | ✓ |

| Video | Source Quality | Duration (mm:ss) | Genre |
|---|---|---|---|
| Big Buck Bunny | Full HD YUV raw | 09:46 | Animation |
| Elephants Dream | Full HD YUV raw | 10:54 | Animation |
| Tears of Steel | Full HD YUV raw | 12:15 | Movie |
| Sparks | Full HD YUV raw | 10:00 | Movie |

| Index | Animated Content | Movie Content |
|---|---|---|
| 1 | 50 kbit/s, 320 × 240 | 50 kbit/s, 320 × 240 |
| 2 | 200 kbit/s, 480 × 360 | 200 kbit/s, 480 × 360 |
| 3 | 600 kbit/s, 854 × 480 | 600 kbit/s, 854 × 480 |
| 4 | 1.2 Mbit/s, 1280 × 720 | 1.2 Mbit/s, 1280 × 720 |
| 5 | 2.5 Mbit/s, 1920 × 1080 | 2.0 Mbit/s, 1920 × 1080 |
| 6 | 3.0 Mbit/s, 1920 × 1080 | 2.5 Mbit/s, 1920 × 1080 |
| 7 | 4.0 Mbit/s, 1920 × 1080 | 3.0 Mbit/s, 1920 × 1080 |
| 8 | 8.0 Mbit/s, 1920 × 1080 | 6.0 Mbit/s, 1920 × 1080 |
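
To relate the bitrate ladder above to the 2 s and 10 s segment durations studied in this work, the following Python sketch computes the nominal payload of one segment and the time needed to fetch it. It is back-of-the-envelope arithmetic under stated assumptions: segments are treated as constant-bitrate, container and HTTP overhead are ignored, and the 4 Mbit/s link speed used in the example is hypothetical rather than one of the paper's network profiles.

```python
def segment_megabytes(bitrate_mbps, segment_s):
    """Nominal payload of one segment in megabytes (10**6 bytes)."""
    return bitrate_mbps * segment_s / 8


def download_time_s(bitrate_mbps, segment_s, throughput_mbps):
    """Seconds needed to fetch one segment over a link of the given speed."""
    return bitrate_mbps * segment_s / throughput_mbps


# Top animated-content rung (8.0 Mbit/s) on an assumed 4 Mbit/s link.
for segment_s in (2, 10):
    size_mb = segment_megabytes(8.0, segment_s)
    fetch_s = download_time_s(8.0, segment_s, 4.0)
    print(f"{segment_s} s segment: {size_mb} MB, fetched in {fetch_s} s")
```

Under these assumptions, a wrong decision on a 10 s segment commits the player to a 20 s download, whereas the same mistake on a 2 s segment costs 4 s before the next decision can be made. This is one way to read the sensitivity of buffer- and throughput-based rules to segment duration.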

| A | B | p-unc | p-corr | Hedges’ g | |
|---|---|---|---|---|---|
| BOLA | Dynamic | 0.3027 | 1 | 0.476 | 0.324 |
| BOLA | L2A-LL | 0.0862 | 0.517 | 1.036 | −0.546 |
| BOLA | LOL+ | 0.3464 | 1 | 0.443 | −0.296 |
| BOLA | Throughput | 0.2400 | 1 | 0.542 | 0.370 |
| Dynamic | L2A-LL | 0.0076 | 0.0686 | 6.122 | −0.874 |
| Dynamic | LOL+ | 0.0630 | 0.4407 | 1.284 | −0.594 |
| Dynamic | Throughput | 0.8679 | 1 | 0.312 | 0.052 |
| L2A-LL | LOL+ | 0.4742 | 1 | 0.380 | 0.224 |
| L2A-LL | Throughput | 0.0053 | 0.0529 | 8.147 | 0.917 |
| LOL+ | Throughput | 0.0480 | 0.384 | 1.553 | 0.633 |
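
The relationship between the `p-unc` and `p-corr` columns above can be checked numerically. The text does not name the multiple-comparison correction that was used; the reported values are, however, consistent to within rounding with a Holm step-down adjustment over the ten pairwise comparisons. The Python sketch below rests on that inference and should be read as a plausibility check, not as the authors' analysis script.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value is scaled by (m - k + 1), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity along the sorted order.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Uncorrected and reported corrected p-values, in table row order.
p_unc = [0.3027, 0.0862, 0.3464, 0.2400, 0.0076,
         0.0630, 0.8679, 0.4742, 0.0053, 0.0480]
p_corr_reported = [1, 0.517, 1, 1, 0.0686,
                   0.4407, 1, 1, 0.0529, 0.384]

for ours, theirs in zip(holm_adjust(p_unc), p_corr_reported):
    print(f"{ours:.4f} vs reported {theirs}")
```

The small residual differences are of the size expected from `p-unc` having been rounded to four decimals before publication.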

| Category | Observation |
|---|---|
| Content Robustness | Low-latency algorithms maintained consistently high video quality across different video types, whereas traditional DASH algorithms showed content-dependent performance variability. |
| Performance Under Unstable Conditions | In highly unstable network environments, low-latency algorithms achieved the highest QoE and effectively minimized playback interruptions, an essential feature for live streaming scenarios where buffer sizes are intentionally kept small to stay close to the live edge. |
| Segment Duration Independence | Low-latency algorithms sustained high video quality regardless of segment duration. In contrast, the performance of traditional algorithms degraded as segment duration increased. For example, BOLA achieved the highest bitrate with 2 s segments but fell to one of the lowest at 10 s (see Figure 15). |
| Stability of Playback Quality | Traditional algorithms demonstrated performance fluctuations across different network conditions. For instance, the Dynamic algorithm performed well under network profile 1 but significantly degraded under other profiles. |
| Overall Suitability for Live Streaming | Despite being optimized primarily for reducing latency, low-latency algorithms consistently delivered comparable or superior video quality across varying content types and network conditions, making them highly suitable for live video streaming applications. |
| Adaptation Speed | LOL+ and L2A-LL demonstrated faster bitrate adaptation in response to abrupt bandwidth drops, reducing rebuffer events more effectively. |
| Quality vs. Bitrate Trade-off | Traditional algorithms (e.g., Throughput) consumed more bandwidth but delivered only marginally better video quality, reducing overall efficiency. |
| Consistency Across Content | The Dynamic algorithm maintained relatively consistent performance across both animation and movie content, whereas L2A-LL was more affected by content complexity. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Uddin, S.; Grega, M.; Leszczuk, M.; Rahman, W.u. Evaluating HAS and Low-Latency Streaming Algorithms for Enhanced QoE. Electronics 2025, 14, 2587. https://doi.org/10.3390/electronics14132587
