Trends and Challenges in Real-Time Stress Detection and Modulation: The Role of the IoT and Artificial Intelligence

Abstract

1. Introduction

1.1. Stress Pervasiveness and the Need for Objective Monitoring

1.2. The Convergence of the IoT and AI in Mental Health Technology

1.3. Objectives and Structure of the Review

2. Conceptualizing Stress in the Technological Era

2.1. Foundational Stress Theories

2.2. Affective Computing, HCI, and Computational Empathy in Stress Management

3. IoT Ecosystem for Stress Data Acquisition

3.1. Wearable Devices and Sensor Technologies

- Wristbands/Smartwatches: The most common form factor, offering convenience and social acceptability. They typically incorporate PPG, EDA, temperature sensors, and accelerometers. Signal quality, particularly for PPG-derived HRV, can be susceptible to motion artifacts [13].

- Chest Straps/Patches: They often provide higher-fidelity signals, especially for ECG, due to stable placement closer to the heart [14]. They may also include respiration sensors (impedance pneumography) and accelerometers. They can be less comfortable for long-term wear compared with wristbands.

- Rings: They offer a discreet alternative, often focusing on PPG, temperature and activity tracking.

- Earbuds/Headsets: They can potentially integrate EEG sensors (ear-EEG) or PPG.

- Smart Clothing: Textiles with embedded sensors (e.g., ECG electrodes [5]) offer potential for seamless integration but face challenges in durability and washability.

3.2. Key Physiological Biomarkers and Sensors

- Electrocardiogram (ECG): Measures the heart’s electrical activity via electrodes placed on the skin. It is considered the gold standard for deriving heart rate variability (HRV), a sensitive indicator of ANS balance and stress. ECG sensors are often found in chest straps or require specific finger/hand placement on devices [14].

- Photoplethysmography (PPG): An optical technique measuring changes in blood volume in the microvascular bed of tissue, typically at the wrist or finger. It provides Blood Volume Pulse (BVP) from which HR and HRV can be estimated. While convenient for wrist-worn devices, PPG-derived HRV is generally considered less accurate than ECG-derived HRV, especially during movement [12].

- Electrodermal Activity (EDA)/Galvanic Skin Response (GSR): It measures changes in the electrical conductance of the skin driven by sweat gland activity controlled by the sympathetic nervous system (the “fight or flight” branch of the ANS). Increased EDA/GSR typically indicates heightened arousal or stress [1]. Sensors are commonly placed on the wrist or fingers/palm. EDA is sensitive to motion artifacts and environmental factors like temperature and humidity [13].

- Skin Temperature (SKT): Peripheral skin temperature can decrease during acute stress due to vasoconstriction (blood vessels narrowing) directed by the sympathetic nervous system. It can be measured by thermistors placed on the skin, often on the wrist [12]. Facial skin temperature changes have also been explored using thermal cameras [5].

- Respiration (RSP): Stress often leads to faster, shallower breathing. Respiration rate and pattern can be measured directly using chest straps (measuring chest expansion/contraction via impedance or strain gauges) or indirectly estimated from ECG or PPG signals [12].

- Electromyogram (EMG): It measures the electrical activity produced by skeletal muscles. Increased muscle tension (e.g., in the shoulders, neck, or jaw) can be a physical manifestation of stress. EMG sensors require electrode placement over the muscle of interest. It is less commonly used in general-purpose stress wearables compared with ANS indicators.

- Electroencephalogram (EEG): It records the electrical activity from the brain via electrodes placed on the scalp (or potentially in/around the ear). EEG provides direct insights into brain states, including cognitive load, relaxation (alpha waves), and affective responses, making it highly relevant for stress research [18]. However, traditional EEG requires cumbersome electrode caps, limiting its use for continuous monitoring in daily life. Wearable EEG solutions are emerging but still face usability challenges [19]. EEG is often employed in laboratory studies or specific applications like neurofeedback [20].

- Accelerometer (ACC)/Motion Data: Inertial sensors measuring acceleration (and often rotation via gyroscopes) are ubiquitous in wearables. While not direct stress measures, they are crucial to quantifying physical activity levels. This is vital because physical exertion causes changes in HR, respiration, and temperature that can mimic or mask stress responses. Motion data are, therefore, essential to contextualizing physiological signals, filtering out activity-related noise, or distinguishing mental stress from physical load [12].

| Sensor Type | Signal Measured | Common Location(s) | Key Advantages | Key Limitations/Challenges | Refs. |

|---|---|---|---|---|---|

| ECG | Electrical Activity of the Heart | Chest and Fingers/Hands | Gold standard for HRV; high accuracy | Often requires specific placement (chest strap); can be obtrusive | [14] |

| PPG | Blood Volume Pulse (BVP) | Wrists and Fingers | Convenient for wearables (wristbands and rings); estimates HR and HRV | Susceptible to motion artifacts; less accurate HRV than ECG, especially during movement | [12] |

| EDA/GSR | Skin Conductance (sweat gland activity) | Wrist, Fingers, and Palms | Reflects sympathetic nervous system arousal; widely used | Sensitive to motion, temperature, and humidity; individual variability | [1,13] |

| SKT | Skin Temperature | Wrists and Forehead (thermal cam) | Non-invasive; can indicate peripheral blood flow changes | Influenced by ambient temperature; response can be slow or variable | [12,19] |

| RSP | Respiration Rate/Pattern | Chest (strap) and derived (ECG/PPG) | Direct measure of breathing changes linked to stress | Chest straps can be uncomfortable; derived methods may be less accurate | [12] |

| EMG | Muscle Electrical Activity/Tension | Various muscle groups | Directly measures muscle tension associated with stress | Requires specific electrode placement over muscles; less common for general stress | [12] |

| EEG | Brain Electrical Activity | Scalp and Ears (ear-EEG) | Direct measure of brain states (cognitive/affective); high temporal resolution | Traditionally requires cumbersome setup; wearable options still evolving; susceptible to artifacts (muscle and eye movement) | [18,19,20,21] |

| ACC | Acceleration/Motion | Wrists, Chest, Waist, etc. | Quantifies physical activity; essential context for physiological signals | Does not directly measure stress; primarily used for context/artifact removal | [12] |

| Camera | Facial Images/Video | External | Captures facial expressions linked to emotion/stress | Privacy concerns; requires line of sight; lighting variations; computationally intensive processing | [23] |

| FSR | Force/Pressure | Chair seat and insoles | Can detect posture changes or pressure points related to discomfort/stress | Context-specific (e.g., sitting posture); indirect measure | [16] |

| Thermal Cam | Infrared Radiation (Surface Temperature) | External | Non-contact temperature measurement (e.g., facial temperature patterns) | Sensitive to environmental temperature, distance, and emissivity; can be expensive; privacy concerns | [5] |

3.3. Behavioral Indicators

- Facial Expressions: Computer vision algorithms, particularly Convolutional Neural Networks (CNNs), can analyze images or video streams to detect facial muscle movements corresponding to basic emotions (e.g., anger, fear, sadness, happiness, and surprise) or specific Action Units (AUs) linked to stress [23]. IoT-enabled cameras, potentially integrated into smart environments or personal devices, facilitate this data capture. Research in this area is rapidly advancing to address real-world challenges. For instance, the study “Facial Expression Recognition with Visual Transformers and Attentional Selective Fusion” [24] proposes a method (VTFF) that converts facial images into sequences of “visual words” and uses self-attention mechanisms to model the relationships between them, achieving high accuracy on “in-the-wild” datasets like RAF-DB and FERPlus. Such approaches are particularly promising for stress monitoring systems because they are designed to be robust to occlusions, varying head poses, and complex backgrounds, which are common outside of laboratory settings. However, continuous camera monitoring raises significant privacy implications [25].

- Posture: Changes in sitting or standing posture, such as slouching or increased rigidity, can be associated with stress, fatigue, or negative affect. Sensors like FSRs in chairs [16] or wearable inertial sensors can potentially track posture over time.

- Physical Activity Levels: As measured by accelerometers, deviations from typical activity patterns (e.g., unusual restlessness or lethargy) might correlate with stress levels. Activity data also provide crucial context for interpreting physiological signals [12].

- Voice Characteristics: Although not extensively detailed for stress in the accessible sources, affective computing research indicates that vocal parameters like pitch, intensity, speech rate, and jitter/shimmer can change with the emotional state, including stress [8]. These parameters could be captured via microphones in smartphones or other IoT devices.

- Computer Interaction Patterns: In office or work-from-home settings, changes in typing speed, error rates, mouse movement patterns, or application usage could potentially serve as subtle behavioral indicators of stress or cognitive load [8].

3.4. Standardized Datasets for Research

- WESAD (Wearable Stress and Affect Detection): A widely cited multimodal dataset collected from 15 participants during a laboratory study [26]. It includes physiological signals from both chest-worn (ECG, EDA, EMG, respiration, temperature, and three-axis acceleration at 700 Hz) and wrist-worn (BVP at 64 Hz, EDA at 4 Hz, temperature at 4 Hz, and three-axis acceleration at 32 Hz) devices. The protocol involved baseline (neutral), stress induction (using elements similar to the Trier Social Stress Test), and amusement phases. Self-report questionnaires (e.g., PANAS and STAI) provide subjective labels [26]. WESAD facilitates research comparing sensor locations and modalities for classifying baseline, stress, and amusement states [15,24].

- AMIGOS (A Dataset for Affect, Personality and Mood Research on Individuals and GroupS): This dataset focuses on affect elicited by short and long emotional videos viewed by 40 participants, both individually and in small groups [27]. It includes physiological data from wearable sensors (EEG, ECG, and GSR) and video recordings (frontal, full-body RGB, and depth). A key feature is the inclusion of personality assessments (Big Five Inventory) and mood assessments (PANAS), allowing researchers to investigate the interplay of personality, mood, social context, and affective responses [27]. Affect is annotated via self-assessment (valence, arousal, dominance, liking, familiarity, and basic emotions) and external assessment (valence and arousal) [27].

- SWEET (Stress in Work EnvironmEnT): Referenced as the data source in studies focusing on real-world stress detection, this dataset is significant because it was collected in a free-living environment over five consecutive days from 240 volunteers. Data include ECG, skin conductance (SC), skin temperature (ST), and accelerometer (ACC) signals from wearable patches and wristbands. Stress levels were self-reported periodically via questionnaires [3]. SWEET is crucial to developing and testing models intended for deployment outside controlled laboratory settings.
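Because the WESAD channels are sampled at different rates, a fixed-duration analysis window contains a different number of samples for each signal. The following minimal sketch illustrates the index arithmetic needed to cut time-aligned windows. The sampling rates are those listed above; the dictionary keys, function names, and the 60 s window length used in the usage note are illustrative assumptions, not part of the dataset specification.

```python
# Sampling rates (Hz) as listed above for the WESAD devices.
# The channel names used as keys are illustrative labels.
WRIST_RATES_HZ = {"BVP": 64, "EDA": 4, "TEMP": 4, "ACC": 32}
CHEST_RATE_HZ = 700  # all chest-worn channels


def samples_per_window(rate_hz: float, window_s: float) -> int:
    """Number of samples contained in one window of `window_s` seconds."""
    return int(round(rate_hz * window_s))


def window_bounds(rate_hz: float, start_s: float, window_s: float) -> tuple:
    """Half-open sample index range [lo, hi) covering one time window."""
    lo = int(round(start_s * rate_hz))
    return lo, lo + samples_per_window(rate_hz, window_s)


def aligned_bounds(start_s: float, window_s: float) -> dict:
    """Index ranges for every wrist channel over the same time span."""
    return {
        name: window_bounds(rate, start_s, window_s)
        for name, rate in WRIST_RATES_HZ.items()
    }
```

For example, `aligned_bounds(120.0, 60.0)` returns, for each wrist channel, the sample indices that cover the same minute of recording, so that features computed per channel describe the same time span.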

4. AI-Driven Analysis of Stress Signals

4.1. Data Preprocessing and Feature Extraction and Selection

- Noise Filtering: The application of digital filters to remove unwanted noise, such as baseline drift or high-frequency interference. Examples include median filters and Butterworth low-pass filters for ECG [28], Kalman filtering for general noise reduction [29], and Wavelet Packet Transform (WPT) for signal enhancement and noise minimization in EEG [21].

- Artifact Removal/Mitigation: The identification and correction or removal of segments of data corrupted by artifacts, particularly motion artifacts, which heavily affect signals like PPG and EDA. Techniques might involve signal quality indices, interpolation, or algorithms specifically designed to separate physiological signals from motion noise.

- Normalization/Standardization: The scaling of data to a common range (e.g., 0 to 1 or with zero mean and unit variance) to prevent features with larger values from dominating the learning process [13].

- Time-domain features: Statistical measures like mean, standard deviation (SD), variance, median, min/max, zero-crossing rate, skewness, kurtosis, line length, and nonlinear energy calculated over signal windows [13].

- Frequency-domain features: The analysis of the power distribution across different frequency bands. For HRV, this includes power in low-frequency (LF) and high-frequency (HF) bands, and the LF/HF ratio, which reflect sympathetic and parasympathetic activity [12]. Power Spectral Density (PSD) analysis is common for EEG and HRV [21]. Instantaneous wavelet mean and band frequencies are also used [21].

- Nonlinear features: Measures that capture complexity or chaotic dynamics, such as entropy (e.g., Shannon Entropy [21]), fractal dimensions, or recurrence quantification analysis.

- Domain-specific features: Metrics specific to certain signals, e.g., number and amplitude of skin conductance responses (SCRs) for EDA, or various time- and frequency-domain HRV metrics like RMSSD (Root Mean Square of Successive Differences) and SDNN (standard deviation of NN intervals) [12]. Hjorth parameters (activity, mobility, and complexity) are used for EEG [21]. Encoding techniques like Local Binary Patterns (LBPs) might be applied to time series [21].

- Filter methods: The selection of features based on statistical properties (e.g., correlation with the target variable, mutual information, Chi-square test, t-test, and Minimum Redundancy Maximum Relevance—mRMR) independent of the learning algorithm.

- Wrapper methods: The use of the performance of a specific learning algorithm to evaluate feature subsets.

- Embedded methods: Feature selection integrated within the learning algorithm (e.g., LASSO regression).

- Dimensionality reduction: The transformation of features into a lower-dimensional space while preserving variance, using methods like Principal Component Analysis (PCA) or Independent Component Analysis (ICA). Optimization algorithms like the Archimedes Optimization Algorithm (AOA) combined with the Analytical Hierarchical Process (AHP) have also been proposed for feature selection [21].

- Resampling: The under-sampling of the majority class, the over-sampling of the minority class, or the use of synthetic data generation methods like SMOTE (Synthetic Minority Over-sampling Technique) or ADASYN (Adaptive Synthetic Sampling). However, resampling can lead to information loss (under-sampling) or overfitting (over-sampling, SMOTE) [13].
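Two of the steps listed above, the HRV time-domain metrics (SDNN and RMSSD) and feature scaling, can be illustrated with a short sketch. It assumes that NN intervals have already been extracted and are given in milliseconds; the function names are illustrative, and the choice of the sample standard deviation for SDNN is an assumption, since conventions differ between tools.

```python
import math
import statistics


def sdnn(nn_ms):
    """Standard deviation of NN intervals (sample SD, in ms)."""
    return statistics.stdev(nn_ms)


def rmssd(nn_ms):
    """Root mean square of successive NN-interval differences (in ms)."""
    diffs = [b - a for a, b in zip(nn_ms, nn_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def zscore(values):
    """Standardize to zero mean and unit variance (population SD)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return [0.0 for _ in values]  # constant feature: no spread to scale
    return [(v - mean) / sd for v in values]


def min_max(values):
    """Rescale to the range 0 to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

In practice, the scaling parameters (mean and SD, or min and max) would be estimated on the training data only and then reused for test data, so that no information leaks from the evaluation set.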

| Algorithm | Category | Input Data Modality | Reported Accuracy | Context (Lab, Real-World, or Dataset) | Noted Strengths | Noted Weaknesses/Challenges | Refs. |

|---|---|---|---|---|---|---|---|

| SVM | Supervised ML | HR, PPG, Skin Response, EEG, and Physiological | 93–96% | Lab and Real-World (SWEET), Various | Often high accuracy; effective in high dimensions | Can be sensitive to parameter choice; less interpretable than trees | [1] |

| Random Forest (RF) | Ensemble ML | HRV, EDA, and Physiological | 98.3% | Lab and Real-World (SWEET and Stress-Lysis) | Robust to noise/overfitting; good performance; feature importance | Can become complex with many trees; less interpretable than single DT | [1,13] |

| k-NN | Supervised ML | Physiological | 95.7% | Lab | Simple to implement; non-parametric | Sensitive to feature scaling; computationally expensive at prediction time | [12,13] |

| XGBoost | Ensemble ML (Boosting) | Physiological | 98.98% | Real-World (SWEET) | High accuracy; regularization; handles missing data | Can be complex to tune; less interpretable | [13,14] |

| Stacked Ensemble | Ensemble ML | Temp, Humidity, and Steps | 99.5% | Lab (Stress-Lysis dataset) | Potentially the highest accuracy by combining models | Increased complexity; potential for overfitting meta-model | [30] |

| CNN | Deep Learning | HRV, ECG, EEG, and Facial Expr. | 92.8% | Lab, Various | Automatic feature learning; good for spatial patterns | Requires large amounts of data; black box; computationally intensive | [23,31] |

| LSTM/Bi-LSTM | Deep Learning (RNN) | Physiological, Time Series, and ECG/BP | 98.86% | Lab, Various | Captures temporal dependencies; good for sequences | Requires large amounts of data; can be slow to train; black box | [29] |

4.2. Machine Learning Techniques for Classification and Prediction

- Support Vector Machines (SVMs): A powerful algorithm that finds an optimal hyperplane to separate different classes. SVMs have been frequently used and often demonstrate high accuracy in stress detection tasks across various data types [1].

- Random Forest (RF): An ensemble method based on multiple decision trees. RF is robust to noise, less prone to overfitting than single decision trees, and often achieves high performance. It was found to be among the most frequently used and best-performing algorithms in several reviews and studies [1].

- K-Nearest Neighbors (k-NN): A simple, non-parametric algorithm that classifies a sample based on the majority class of its k-nearest neighbors in the feature space. Its performance can be sensitive to the choice of k and the distance metric [12].

- Decision Trees (DTs): Tree-like models where internal nodes represent feature tests and leaf nodes represent class labels. They are prone to overfitting if not pruned properly [3].

- Naive Bayes (NB): A probabilistic classifier based on Bayes’ theorem with strong (naive) independence assumptions between features. It is often simple and efficient [1].

- Logistic Regression (LR): A linear model used for binary classification tasks [1].

- Ensemble Methods: Combining predictions from multiple individual models (base learners) often leads to improved accuracy and robustness compared with single models. RF and Gradient Boosting are examples of ensemble techniques. Stacked Ensembles, where the predictions of base models (e.g., RF and GB) are used as input features for a meta-model, can further enhance performance by leveraging the diversity of the base learners. One study reported an accuracy of 99.5% using a Stacked Ensemble on a specific dataset, though such high scores often warrant scrutiny regarding the dataset characteristics, evaluation methodology, and potential for overfitting [30].

- K-Fold Cross-Validation: The dataset is divided into k subsets (folds). The model is trained on k − 1 folds and tested on the remaining fold, a process which is repeated k times. This provides a more robust estimate of performance than a single train–test split [1].

- Leave-One-Subject-Out (LOSO) Cross-Validation: Particularly relevant for personalized models or assessing inter-subject generalizability. The model is trained on data from all subjects except one, tested on the left-out subject, and repeated for each subject. Performance is typically measured using metrics like accuracy, precision, recall (sensitivity), specificity, F1-score, and Area Under the ROC Curve (AUC). Testing models on completely independent datasets (recorded under different conditions or from different populations) is considered the strongest form of validation for assessing true generalizability, but this is rarely performed in practice [13].
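The LOSO procedure and the evaluation metrics named above can be sketched as follows. This is a minimal illustration, not a reference implementation: the function names are assumptions, the metrics are shown for the binary case only, and AUC is omitted because it requires ranked scores rather than hard decisions.

```python
from collections import defaultdict


def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) per subject.

    `subject_ids[i]` is the subject that sample i belongs to.
    """
    by_subject = defaultdict(list)
    for idx, sid in enumerate(subject_ids):
        by_subject[sid].append(idx)
    for held_out, test_idx in by_subject.items():
        train_idx = [i for i, sid in enumerate(subject_ids) if sid != held_out]
        yield held_out, train_idx, test_idx


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, specificity, and F1 for one class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }
```

A typical loop would train a model on `train_indices`, predict on `test_indices`, and average the per-subject metrics. Unlike plain k-fold splitting, no windows from the held-out subject ever appear in the training set.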

4.3. Deep Learning Models in Stress Research

- Convolutional Neural Networks (CNNs): Primarily designed for grid-like data such as images, CNNs excel at extracting spatial hierarchies of features. In stress detection, they are applied as follows:

  - To spectrograms or other 2D representations of physiological time series (e.g., ECG and EEG).

  - Directly to 1D time-series data to learn local patterns and features.

  - In facial expression recognition from images or video frames [23].

- Recurrent Neural Networks (RNNs): They are designed to handle sequential data, which makes them suitable for modeling time series like physiological signals, and include the following:

  - Long Short-Term Memory (LSTM) Networks: A type of RNN specifically designed to capture long-range temporal dependencies, mitigating the vanishing gradient problem of simple RNNs. LSTM networks are frequently used for analyzing ECG, EEG, and other physiological time series.

  - Bidirectional LSTM networks (Bi-LSTM networks): They process sequences in both forward and backward directions, allowing the model to utilize past and future context within the sequence, potentially improving performance [29]. A study using a Fuzzy Inference System (FIS) combined with Bi-LSTM (FBiLSTM) reported high accuracy (98.86%) in heart disease prediction, suggesting potential for stress-related applications [29].

- Transformers: Originally developed for natural language processing, Transformer models use self-attention mechanisms to capture dependencies regardless of their distance in the sequence. Their application in physiological signal analysis, including stress detection, is an emerging area [19].

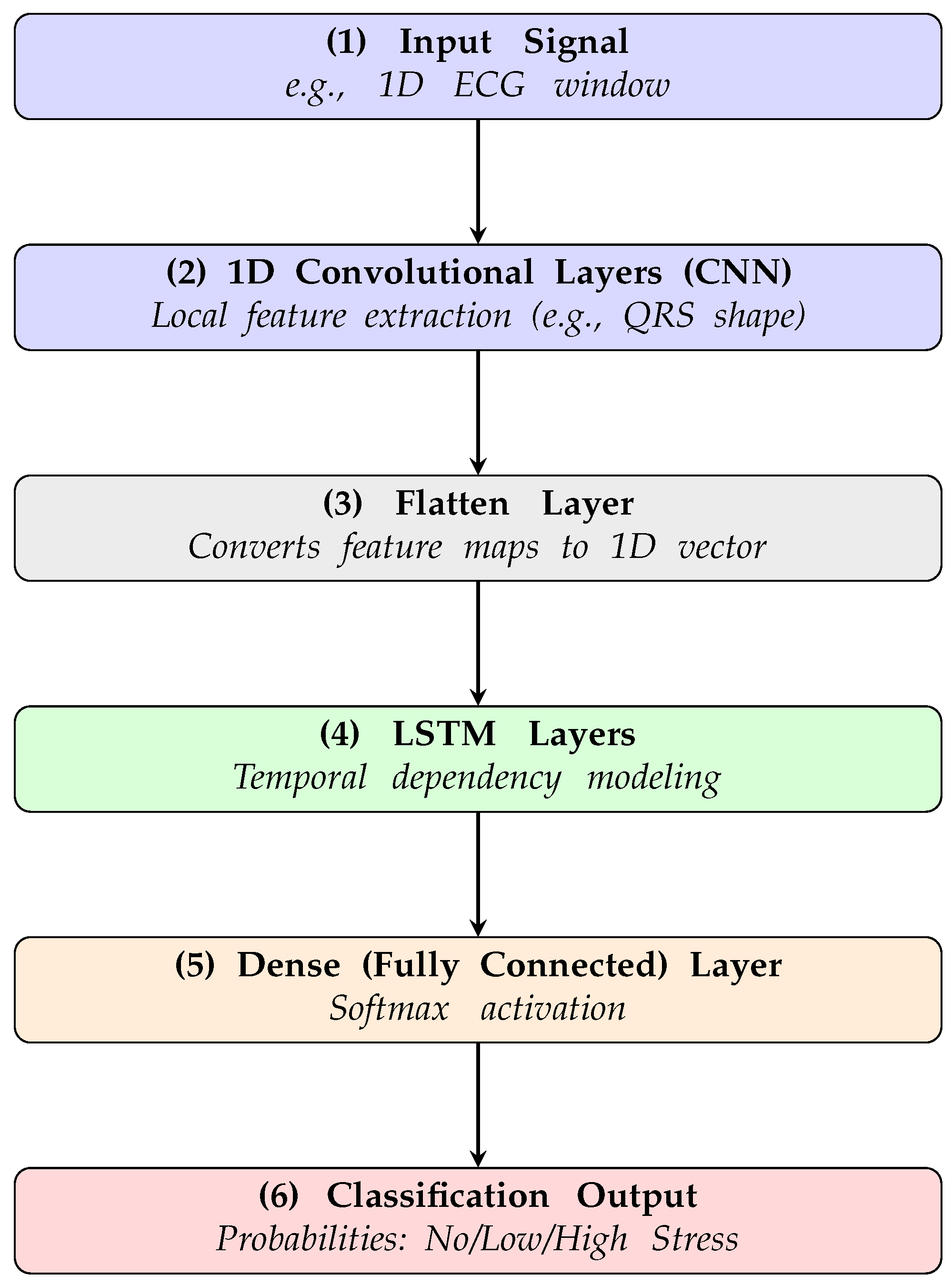

- Hybrid Models: The combination of different architectures to leverage their respective strengths. For example, CNNs can be used for initial feature extraction from segments of the signal, followed by LSTM to model the temporal relationships between these features (e.g., DCNN-LSTM [21] as depicted in Figure 2). Autoencoders, another type of neural network, can be used for unsupervised feature learning or dimensionality reduction.
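The self-attention mechanism mentioned above for Transformers can be made concrete with a deliberately simplified sketch. It computes scaled dot-product attention over a short sequence of feature vectors, using the inputs directly as queries, keys, and values. Real Transformer layers add learned projection matrices, multiple heads, and positional encodings, all of which are omitted here; the function names are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x.

    x: list of T feature vectors, each of length d.
    Returns (outputs, weights); weights[i][j] is how strongly
    time step i attends to time step j.
    """
    d = len(x[0])
    scale = math.sqrt(d)
    weights = []
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in x]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[j] for w, v in zip(row, x)) for j in range(d)]
        for row in weights
    ]
    return outputs, weights
```

Because the weights depend only on the similarity between feature vectors, two identical windows receive the same attention weight regardless of how far apart they are in the sequence. This is the property that distinguishes attention from the step-by-step state propagation of recurrent models.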

4.4. Techniques for Real-Time Analysis and Complex Event Processing (CEP)

- Sliding Window Analysis: Data are typically processed in overlapping time windows, allowing for continuous monitoring as new data arrive. The size and overlap of these windows are important parameters affecting responsiveness and computational load.

- Complex Event Processing (CEP): While not explicitly detailed in the accessible sources for stress detection (e.g., ref. [33] was inaccessible), CEP engines are designed to analyze streams of data (events) from multiple sources, identify complex patterns or sequences of events over time, and trigger actions based on these patterns. This paradigm seems highly relevant for stress monitoring, potentially enabling the detection of not just instantaneous stress levels but also patterns like “stress pileup” (multiple stressors occurring in close succession without adequate recovery) [34] or specific sequences of physiological changes leading to a stress event [3].

- Edge/Fog Computing: To address latency issues associated with sending large volumes of sensor data to the cloud for processing, edge or fog computing architectures are being explored. In this model, some data preprocessing and analysis occur closer to the data source (e.g., on the wearable device itself, a smartphone, or a local gateway) before potentially sending summarized results or critical events to the cloud. This can reduce bandwidth requirements, improve response times, and enhance privacy by keeping raw data local [4].
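Two of the techniques above lend themselves to a short sketch: overlapping sliding windows and a CEP-style rule over a stream of detected stress events. The "pileup" rule shown here (at least `min_events` events within a trailing time horizon) is a hypothetical operationalization chosen for illustration; the defaults of three events and 30 minutes are assumptions and are not taken from the cited work.

```python
from collections import deque


def sliding_windows(samples, window_size, step):
    """Yield (start_index, window) for overlapping windows.

    Consecutive windows overlap by `window_size - step` samples.
    """
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    for start in range(0, len(samples) - window_size + 1, step):
        yield start, samples[start:start + window_size]


def detect_pileup(event_times_s, min_events=3, horizon_s=1800.0):
    """Return the times at which a pileup pattern is present.

    event_times_s: ascending timestamps (seconds) of detected stress events.
    A pileup is flagged when at least `min_events` events fall within
    the trailing `horizon_s` seconds (illustrative rule, see lead-in).
    """
    recent = deque()
    alerts = []
    for t in event_times_s:
        recent.append(t)
        # Drop events that have left the trailing time horizon.
        while recent and t - recent[0] > horizon_s:
            recent.popleft()
        if len(recent) >= min_events:
            alerts.append(t)
    return alerts
```

A smaller `step` makes the system more responsive but increases the number of windows to classify, which is the trade-off between responsiveness and computational load noted above.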

4.5. Architectural Considerations: Transformers vs. Recurrent Models for Physiological Time Series

5. Advancements in Real-Time Stress Detection and Modulation

5.1. Multimodal Data Fusion Strategies

- Early Fusion (Feature Level): Features extracted from different modalities are concatenated into a single vector before being fed into a classifier.

- Intermediate Fusion: Features from different modalities are combined at intermediate layers within a model (e.g., in a deep neural network).

- Late Fusion (Decision Level): Separate classifiers are trained for each modality, and their outputs (e.g., class probabilities or decisions) are combined using methods like averaging and voting, or a meta-classifier.

- Hybrid Fusion: Combines multiple fusion strategies.
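The difference between early and late fusion can be shown in a few lines. In this minimal sketch, per-modality feature vectors and classifier outputs are assumed to be available already; the function names and the optional weighting scheme are illustrative assumptions.

```python
from collections import Counter


def early_fusion(feature_sets):
    """Feature-level fusion: concatenate per-modality feature vectors."""
    fused = []
    for features in feature_sets:
        fused.extend(features)
    return fused


def late_fusion_average(prob_sets, weights=None):
    """Decision-level fusion: weighted average of class probabilities.

    prob_sets: one probability vector per modality, same class order.
    weights: optional per-modality weights (equal weights by default).
    """
    n = len(prob_sets)
    weights = weights or [1.0 / n] * n
    total = sum(weights)
    n_classes = len(prob_sets[0])
    return [
        sum(w * p[c] for w, p in zip(weights, prob_sets)) / total
        for c in range(n_classes)
    ]


def late_fusion_vote(decisions):
    """Decision-level fusion: majority vote over per-modality labels.

    Ties are resolved in favor of the label encountered first.
    """
    return Counter(decisions).most_common(1)[0][0]
```

Early fusion lets a single classifier learn cross-modal interactions but requires all modalities to be present and aligned. Late fusion tolerates a missing modality more easily, since the corresponding classifier can simply be dropped from the average or vote.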

5.2. Personalization Approaches to Stress Modeling

- User-Specific Calibration: Collecting baseline data from an individual under non-stress conditions to establish personalized thresholds or normalize subsequent measurements.

- Person-Specific Models: Training separate models for each individual by using their own collected data. This can yield high accuracy for that individual but requires sufficient labeled data collection per person, which can be burdensome [13].

- Transfer Learning/Adaptive Algorithms: Starting with a general model trained on a larger dataset and then fine-tuning or adapting it using a smaller amount of data from the target individual.

- Clustering-Based Personalization: Grouping individuals with similar physiological response patterns (identified through unsupervised learning techniques like K-means clustering or Self-Organizing Maps—SOMs) and training group-specific models.

- Adaptive Baselines: Developing models that can dynamically adjust an individual’s baseline physiological levels over time to account for factors like circadian rhythms, acclimatization, or changes in health status. Multi-task learning has been suggested as a way to refine algorithms that adapt to individual baselines [19].
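User-specific calibration and adaptive baselines can be sketched together. The class below, whose name and parameters are hypothetical, estimates a personal baseline from resting data, scores new measurements relative to it, and lets the baseline drift slowly via an exponential moving average. The smoothing factor `alpha` is an assumed tuning parameter, and updating only the mean (not the spread) is a simplification.

```python
import statistics


class PersonalBaseline:
    """Per-user baseline for one physiological feature (e.g., heart rate)."""

    def __init__(self, calibration_values, alpha=0.05):
        # Calibration data are assumed to come from non-stress conditions.
        self.mean = statistics.fmean(calibration_values)
        # Fall back to 1.0 if the calibration data show no spread.
        self.sd = statistics.pstdev(calibration_values) or 1.0
        self.alpha = alpha

    def score(self, value):
        """Deviation from the personal baseline in SD units."""
        return (value - self.mean) / self.sd

    def update(self, value):
        """Move the baseline mean toward `value` (exponential moving average).

        Intended for samples believed to be non-stress, so that stress
        episodes do not get absorbed into the baseline.
        """
        self.mean = (1 - self.alpha) * self.mean + self.alpha * value
```

A resting heart rate of 60 bpm for one user and 75 bpm for another would both yield a score near zero, so a downstream classifier sees deviations from each individual's norm rather than raw values.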

5.3. Predictive Stress Modeling Capabilities

5.4. Real-Time Intervention Frameworks

- Just-in-Time Adaptive Interventions (JITAIs): This is a prominent framework where interventions are delivered dynamically, precisely when and where they are needed most [34]. In the context of stress, a JITAI system would use real-time data from sensors (and potentially self-reports via EMAs) to detect moments of elevated stress or risk (e.g., high reactivity, slow recovery, and stress pileup [34]). Upon detection, the system triggers the delivery of a brief, context-appropriate intervention, typically via a smartphone or wearable device. Interventions might include guided breathing exercises, mindfulness prompts, cognitive reappraisal techniques, suggestions for positive activities, or reminders of personal coping resources. The “adaptive” nature means that the type, timing, or intensity of the intervention can be tailored based on the individual’s current state, context, and past responses [34].

- Neurofeedback Systems: These systems provide users with real-time feedback about their own brain activity, typically measured via EEG, enabling them to learn volitional control over specific neural patterns associated with desired mental states [20]. For stress reduction, neurofeedback might train users to increase alpha brainwave activity (associated with relaxation) or modulate activity in specific brain regions involved in emotion regulation (e.g., prefrontal cortex) [20]. Reinforcement learning (RL) algorithms can be used to optimize the feedback strategy and personalize the training process [20]. While powerful, EEG-based neurofeedback typically requires more specialized equipment and setup than JITAIs delivered via standard mobile devices [20].

- Biofeedback Systems: Similar to neurofeedback, but using feedback from peripheral physiological signals like HRV, EDA, or respiration. For example, a system might visualize the user’s breathing pattern or HRV coherence in real time and guide them towards slower, deeper breathing to increase parasympathetic activity and promote relaxation.

- Adaptive Environmental Adjustments: Future systems might be integrated with smart environments to automatically adjust factors like lighting, ambient noise, or music based on the user’s detected stress level, creating a more calming atmosphere [8].
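The decision logic of a JITAI can be illustrated with a small rule-based sketch. Everything specific here is hypothetical: the class name, the stress-probability thresholds, the cooldown period, and the mapping from stress level to intervention type are assumptions chosen for illustration, and a deployed system would tailor them to the individual and context.

```python
from dataclasses import dataclass


@dataclass
class JitaiPolicy:
    """Rule-based trigger for brief stress interventions (illustrative)."""

    threshold: float = 0.7       # hypothetical stress-probability cutoff
    high_threshold: float = 0.9  # hypothetical cutoff for stronger prompts
    cooldown_s: float = 900.0    # hypothetical minimum gap between prompts
    last_prompt_s: float = float("-inf")

    def decide(self, now_s, stress_prob, user_available=True):
        """Return an intervention name, or None if no prompt is due."""
        if stress_prob < self.threshold or not user_available:
            return None
        if now_s - self.last_prompt_s < self.cooldown_s:
            return None  # avoid overburdening the user with prompts
        self.last_prompt_s = now_s
        if stress_prob >= self.high_threshold:
            return "guided_breathing"
        return "mindfulness_prompt"
```

The cooldown and availability checks reflect the "just-in-time" aspect: an intervention is delivered only when stress is detected and the user is likely able to engage with it. The adaptive aspect would replace the fixed thresholds with values learned from the user's past responses.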

6. Critical Assessment: Persistent Challenges and Limitations

6.1. Accuracy, Reliability, and Validity in Diverse Contexts

- Motion Artifacts: Physical movement can severely distort physiological signals, particularly PPG and EDA collected from the wrist [12].

- Confounding Factors: Physiological responses measured by sensors (e.g., increased heart rate and sweating) are not unique to psychological stress. They can also be caused by physical activity, illness, caffeine consumption, medication, or even positive excitement [12]. Disentangling stress-related changes from these confounders remains a major challenge [12].

- Sensor Placement and Contact: Variability in how users wear devices can affect signal quality [13].

6.2. Generalizability, Scalability, and Real-World Deployment Hurdles

- Generalizability: Models developed using data from one group of individuals often fail to perform well on others due to inter-individual differences in physiology and stress responses. Many studies utilize small, homogenous samples (e.g., university students), limiting the applicability of findings to broader, more diverse populations (different age, ethnicity, and health status). The lack of large-scale, diverse, publicly available datasets collected under varied real-world conditions is a significant bottleneck hindering the development of truly generalizable models [13].

- Scalability: Deploying sophisticated AI models, especially deep learning algorithms, for potentially millions of users presents significant technical and logistical challenges. These include managing the vast volume of data generated, ensuring sufficient computational resources (cloud infrastructure costs and on-device processing limitations), maintaining system performance and reliability at scale, and providing adequate user support [4].

- Deployment Hurdles: There is often a gap between research prototypes and robust, user-friendly products ready for real-world deployment. Practical issues such as the limited battery life of wearable devices, unreliable wireless connectivity, device durability, the need for frequent software updates, and seamless integration into users’ existing digital ecosystems and daily routines must be addressed for successful adoption [4].

6.3. Data Security, Privacy, and Ethical Imperatives

- Security Risks: IoT devices are notoriously vulnerable to security breaches. Potential threats include unauthorized access to sensitive health data, data theft, manipulation of sensor readings (which could lead to incorrect diagnoses or interventions), denial-of-service attacks, and device hijacking. Robust security measures are essential, including strong data encryption both at rest and in transit (e.g., using established algorithms like AES, RSA, or attribute-based encryption (ABE) [39]), secure authentication protocols for users and devices, granular access control mechanisms [40], and secure software development practices. Frameworks specifically designed for the healthcare IoT, such as security context frameworks [41] or privacy-preserving architectures like PP-NDNOT for Named Data Networking [40], aim to address these challenges.

- Privacy Concerns: Users may feel uncomfortable with the idea of the continuous, pervasive monitoring of their physiological and behavioral states. Concerns exist about how these data will be used, stored, and potentially shared, for instance, with employers or insurance companies. Transparency regarding data handling practices, clear and informed consent processes providing users with meaningful control over their data, and strict adherence to data protection regulations (e.g., GDPR in Europe and HIPAA in the US) are paramount to building user trust [4].

- Ethical Considerations: Beyond security and privacy, several ethical issues arise. Algorithmic bias, stemming from unrepresentative training data, could lead to systems performing poorly for certain demographic groups, potentially exacerbating health disparities. Questions of accountability arise if the system provides incorrect feedback or harmful interventions [32]. The potential impact on user autonomy (e.g., feeling overly reliant on the system) and the risk of inducing anxiety through constant monitoring (“quantified self” stress) must be carefully considered [8].

| Challenge Category | Specific Challenge Description | Potential Mitigation Strategies/Future Research Directions | References |
|---|---|---|---|
| Accuracy/Reliability | Lower performance in real-world vs. lab settings; motion artifacts; confounding factors (activity) | Robust artifact detection/removal algorithms; advanced signal processing; multimodal fusion incorporating context (activity and environment); more ecologically valid datasets (e.g., SWEET) | [12,13,14] |
| Accuracy/Reliability | Difficulty establishing reliable ground truth for stress | Combining objective physiological data with EMA/subjective reports; Unsupervised/semi-supervised learning to reduce reliance on labels; standardized labeling protocols | [12] |
| Generalizability/Scalability | Models fail to generalize across individuals/populations/contexts | Larger, diverse public datasets; Federated learning (train on decentralized data); transfer learning; adaptive/personalized models; standardized evaluation protocols | [13,19] |
| Generalizability/Scalability | Computational/resource constraints for large-scale deployment | Efficient algorithms (model compression and quantization); edge/fog computing architectures; optimized cloud infrastructure; scalable data management platforms | [4] |
| Privacy/Security/Ethics | Vulnerability of IoT devices and sensitive health data | Strong encryption (end-to-end); secure authentication/access control; regular security audits; privacy-preserving computation (e.g., differential privacy and homomorphic encryption) | [4,39,40,41] |
| Privacy/Security/Ethics | User privacy concerns regarding continuous monitoring and data usage | Transparent data policies; granular user controls/consent; data minimization; on-device processing where feasible; adherence to regulations (GDPR and HIPAA) | [4] |
| Privacy/Security/Ethics | Algorithmic bias; accountability; impact on autonomy; potential for induced anxiety | Bias detection/mitigation techniques; Explainable AI (XAI); clear ethical guidelines; user-centered design focusing on empowerment; studies on long-term psychological impact | [8,13,19,32] |
| Integration/Usability | Difficulty integrating diverse hardware/software components | Standardized APIs and data formats; modular system design; open-source platforms/frameworks | [4] |
| Integration/Usability | Lack of interoperability between devices/platforms; device fragmentation | Industry standards development; middleware solutions; focus on common communication protocols (e.g., Bluetooth LE) | [4] |
| Integration/Usability | Poor user experience (comfort, interface, and feedback); low adherence/adoption | User-centered design (UCD); co-design methodologies; iterative usability testing; clear, actionable feedback design; comfortable/aesthetic wearable design | [9,19] |
| Personalization | Balancing personalized accuracy with the need for generalizable models | Hybrid models (general pre-training + personal fine-tuning); adaptive algorithms; federated learning with personalization layers; multi-task learning | [13,19,31] |
| Personalization | Acquiring sufficient personalized data for robust models | Self-supervised learning (SSL) on unlabeled data; data augmentation techniques; efficient active learning strategies to request labels strategically | [19] |
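The table lists federated learning ("train on decentralized data") as a mitigation for both generalizability and privacy. The sketch below illustrates the basic federated averaging idea under simplifying assumptions: a tiny linear model, full-batch gradient descent on each client, and toy noise-free data generated from y = 2x + 1. All function names and data are hypothetical, and a real deployment would add secure aggregation, client sampling, and handling of non-identical client distributions.

```python
from typing import List, Tuple

Vector = List[float]
Dataset = List[Tuple[Vector, float]]  # (features, target) pairs held by one client


def local_update(weights: Vector, data: Dataset, lr: float = 0.5, epochs: int = 5) -> Vector:
    """Run a few epochs of full-batch gradient descent on mean squared error, on-device."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / len(data)
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def federated_average(client_weights: List[Vector], client_sizes: List[int]) -> Vector:
    """Server step: average client models, weighted by local sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


def train(clients: List[Dataset], dim: int, rounds: int = 50) -> Vector:
    """Only model weights leave each client; raw samples stay local."""
    global_w = [0.0] * dim
    for _ in range(rounds):
        updates = [local_update(global_w, data) for data in clients]
        global_w = federated_average(updates, [len(d) for d in clients])
    return global_w


# Toy data: each feature vector is [x, 1.0] so the second weight acts as a bias.
clients = [
    [([0.0, 1.0], 1.0), ([0.5, 1.0], 2.0), ([1.0, 1.0], 3.0)],
    [([0.2, 1.0], 1.4), ([0.8, 1.0], 2.6)],
]
weights = train(clients, dim=2)
print([round(v, 3) for v in weights])
```

On this toy problem the learned weights should approach the generating values (slope 2, intercept 1). The point of the sketch is the data flow: the server sees only weight vectors, never the per-user samples.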

6.3.1. Privacy-Preserving Machine Learning: Federated Learning and Differential Privacy
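As a minimal sketch of the differential privacy idea named in this subsection title, the example below releases the mean of a bounded physiological feature using the Laplace mechanism. It assumes a fixed cohort size and replace-one neighboring datasets, so that the sensitivity of a clipped mean is (upper - lower) / n. The RMSSD values, bounds, and privacy budget are hypothetical and chosen only for illustration.

```python
import random
from typing import List


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_mean(values: List[float], lower: float, upper: float,
            epsilon: float, rng: random.Random) -> float:
    """Release an epsilon-differentially private mean of values clipped to [lower, upper]."""
    if not values:
        raise ValueError("values must be non-empty")
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    clipped = [min(max(v, lower), upper) for v in values]
    true_mean = sum(clipped) / len(clipped)
    # Changing one person's value moves the clipped mean by at most this much.
    sensitivity = (upper - lower) / len(clipped)
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical per-user RMSSD values in milliseconds.
rng = random.Random(0)
rmssd_ms = [42.0, 35.5, 51.2, 28.9, 47.3]
noisy = dp_mean(rmssd_ms, lower=0.0, upper=100.0, epsilon=1.0, rng=rng)
print(f"noisy mean RMSSD: {noisy:.1f} ms")
```

With only five users the noise scale is large relative to the signal (here 20 ms), which illustrates the usual trade-off: stronger privacy or smaller cohorts mean less accurate released statistics.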

6.3.2. The Right to Be Forgotten: Machine Unlearning and Model Editing in Healthcare AI

- Machine Unlearning directly addresses the GDPR’s “right to be forgotten.” It is the process of selectively removing the influence of a specific data point or user from an already trained model, without requiring a costly and time-consuming retraining from scratch [48,49]. This is vital in healthcare, where a patient may withdraw consent for their data to be used. Research in this area is advancing rapidly, with methods like “Gradient Transformation” being proposed for efficient unlearning on dynamic graphs, a problem analogous to time-series sensor data.

- Model Editing refers to the ability to directly modify a trained model’s behavior to correct erroneous predictions, remove biases, or align the model with expert knowledge. In healthcare, this is critical. For instance, if a sepsis risk model learns a spurious correlation (e.g., that asthma reduces the risk of mortality), clinicians must be able to intervene and correct this behavior without invalidating the rest of the model’s knowledge. Interactive tools like GAM Changer allow domain experts (clinicians) to visualize and “inject” their knowledge directly into the model, enhancing safety and trust [50,51].
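The unlearning idea described above can be made concrete for a deliberately simple model. The sketch below is not the Gradient Transformation method cited in the text; it shows exact unlearning for a nearest-centroid classifier, which is possible only because that model is fully determined by additive per-class sums and counts. Class, method, and feature names are hypothetical. Deep models do not decompose this way, which is why approximate unlearning methods are an active research topic [48,49].

```python
from typing import Dict, List, Tuple

Sample = Tuple[List[float], str]  # (feature vector, label)


class CentroidModel:
    """Nearest-centroid classifier that tracks each user's additive contribution."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.sums: Dict[str, List[float]] = {}
        self.counts: Dict[str, int] = {}
        self.per_user: Dict[str, Dict[str, Tuple[List[float], int]]] = {}

    def add_user(self, user_id: str, samples: List[Sample]) -> None:
        if user_id in self.per_user:
            raise ValueError("user already present")
        contrib: Dict[str, Tuple[List[float], int]] = {}
        for x, label in samples:
            vec, n = contrib.get(label, ([0.0] * self.dim, 0))
            contrib[label] = ([a + b for a, b in zip(vec, x)], n + 1)
        for label, (vec, n) in contrib.items():
            total = self.sums.get(label, [0.0] * self.dim)
            self.sums[label] = [a + b for a, b in zip(total, vec)]
            self.counts[label] = self.counts.get(label, 0) + n
        self.per_user[user_id] = contrib

    def forget_user(self, user_id: str) -> None:
        """Subtract the user's sums and counts; no retraining pass is needed."""
        contrib = self.per_user.pop(user_id)
        for label, (vec, n) in contrib.items():
            self.counts[label] -= n
            if self.counts[label] == 0:
                del self.counts[label]
                del self.sums[label]
            else:
                self.sums[label] = [a - b for a, b in zip(self.sums[label], vec)]

    def centroids(self) -> Dict[str, List[float]]:
        return {lab: [s / self.counts[lab] for s in vec] for lab, vec in self.sums.items()}

    def predict(self, x: List[float]) -> str:
        cents = self.centroids()
        return min(cents, key=lambda lab: sum((a - b) ** 2 for a, b in zip(cents[lab], x)))


# Hypothetical features: [heart rate in bpm, EDA level].
users = {
    "u1": [([60.0, 1.0], "calm"), ([90.0, 4.0], "stress")],
    "u2": [([70.0, 2.0], "calm"), ([100.0, 6.0], "stress")],
    "u3": [([64.0, 1.5], "calm"), ([94.0, 5.0], "stress")],
}
model = CentroidModel(dim=2)
for uid, samples in users.items():
    model.add_user(uid, samples)
model.forget_user("u2")  # e.g., the user withdraws consent
print(model.centroids())
```

After `forget_user("u2")`, the centroids match those of a model built from `u1` and `u3` alone (up to floating-point rounding), which is the property unlearning methods aim to approximate for larger models.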

6.4. Technological Integration, Interoperability, and Usability

6.5. Balancing Personalization and Generalization

6.6. User Experience and Acceptance Factors

- Perceived Usefulness: Users must believe that the system provides tangible benefits, such as increased self-awareness, effective stress reduction, or improved well-being.

- Trust: Users need to trust the system’s accuracy, its reliability, and, critically, its handling of their sensitive data. Transparency about how the system works and how data are used is essential to building trust.

- Intrusiveness: Both the monitoring process (wearing sensors) and the interventions delivered must not be overly intrusive or disruptive to daily life.

- Feedback Quality: The information provided back to the user must be meaningful, actionable, and easy to understand. Simply presenting raw physiological data is unlikely to be helpful for most users.

- Aesthetics and Comfort: The physical design of wearable devices plays a significant role in acceptance.

- Resistance to Tracking: Some users may inherently resist the idea of continuous biometric monitoring due to privacy concerns or a feeling of being constantly evaluated [2].

6.7. Analysis of Failure Cases and Contradictory Findings

7. Future Research Directions

7.1. Enhancing Ecological Validity and Robustness Under Free-Living Conditions

7.2. Advancing Model Interpretability (Explainable AI—XAI)

7.3. Co-Designing Ethical, User-Centered Systems

7.4. Deepening the Integration of Causal Inference and Contextual Understanding

7.5. Exploring Novel Sensing and Algorithmic Frontiers

7.6. Need for Longitudinal Studies and Clinical Integration

7.7. Benchmarking Advanced Architectures for In-the-Wild Generalization

7.8. Establishing Robust Evaluation Protocols and Metrics for Real-World Studies

8. Conclusions

8.1. Synthesis of Findings: Progress and Pitfalls

8.2. The Transformative Potential of the IoT and AI in Stress Management

8.3. Concluding Remarks on Responsible Innovation

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ANS | Autonomic Nervous System |
| ACC | accelerometer |
| AOA | Archimedes Optimization Algorithm |
| AHP | Analytical Hierarchical Process |
| ABE | attribute-based encryption |
| AUC | Area Under the ROC Curve |
| AU | Action Unit |
| BVP | Blood Volume Pulse |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| CEP | Complex Event Processing |
| CNN | Convolutional Neural Network |
| DL | deep learning |
| DT | decision tree |
| ECG | electrocardiogram |
| EDA | electrodermal activity |
| EEG | electroencephalogram |
| EHR | electronic health record |
| EMA | ecological momentary assessment |
| EMG | electromyogram |
| FIS | Fuzzy Inference System |
| FSR | Force Sensitive Resistor |
| GBM | Gradient Boosting Machine |
| GSR | Galvanic Skin Response |
| HCI | human–computer interaction |
| HF | high frequency (HRV band) |
| HRV | heart rate variability |
| IoT | Internet of Things |
| ICA | Independent Component Analysis |
| JITAI | just-in-time adaptive intervention |
| k-NN | k-nearest neighbors |
| LBPs | Local Binary Patterns |
| LF | low-frequency (HRV band) |
| LIME | Local Interpretable Model-agnostic Explanations |
| LOSO | leave one subject out |
| LR | Logistic Regression |
| LSTM | Long Short-Term Memory |
| ML | machine learning |
| mRMR | Minimum Redundancy Maximum Relevance |
| NB | Naive Bayes |
| PANAS | Positive and Negative Affect Schedule |
| PCA | Principal Component Analysis |
| PPG | photoplethysmography |
| PSD | Power Spectral Density |
| RCT | randomized controlled trial |
| RF | Random Forest |
| RL | reinforcement learning |
| RMSSD | Root Mean Square of Successive Differences |
| RNN | Recurrent Neural Network |
| ROC | Receiver Operating Characteristic |
| RSP | respiration |
| SCR | skin conductance response |
| SD | standard deviation |
| SDNN | standard deviation of NN intervals |
| SHAP | SHapley Additive exPlanations |
| SKT | skin temperature |
| SMOTE | Synthetic Minority Over-sampling Technique |
| SOM | Self-Organizing Map |
| SSL | self-supervised learning |
| STAI | State-Trait Anxiety Inventory |
| SVM | Support Vector Machine |
| UX | user experience |
| WPT | Wavelet Packet Transform |
| XAI | Explainable Artificial Intelligence |
| XGBoost | Extreme Gradient Boosting |

References

1. Lu, P.; Zhang, W.; Ma, L.; Zhao, Q. A Framework of Real-Time Stress Monitoring and Intervention System. In Proceedings of the Cross-Cultural Design. Applications in Health, Learning, Communication, and Creativity; Rau, P.L., Ed.; Springer International Publishing: Cham, Switzerland, 2020; pp. 166–175.

2. IoT in Wearables 2025: Devices, Examples and Industry Overview. Available online: https://stormotion.io/blog/iot-in-wearables/ (accessed on 3 May 2025).

3. Abd Al-Alim, M.; Mubarak, R.; Salem, N.M.; Sadek, I. A Machine-Learning Approach for Stress Detection Using Wearable Sensors in Free-Living Environments. Comput. Biol. Med. 2024, 179, 108918.

4. Mohamad Jawad, H.; Bin Hassan, Z.; Zaidan, B.; Mohammed Jawad, F.; Mohamed Jawad, D.; Alredany, W. A Systematic Literature Review of Enabling IoT in Healthcare: Motivations, Challenges, and Recommendations. Electronics 2022, 11, 3223.

5. Mattioli, V.; Davoli, L.; Belli, L.; Gambetta, S.; Carnevali, L.; Sgoifo, A.; Raheli, R.; Ferrari, G. IoT-Based Assessment of a Driver’s Stress Level. Sensors 2024, 24, 5479.

6. Muñoz Arteaga, J.; Hernández, Y. Temas de Diseño En Interacción Humano-Computadora; Iniciativa Latinoamericana de Libros de Texto Abiertos (LATIn): São Paulo, Brazil, 2014.

7. Biggs, A.; Brough, P.; Drummond, S. Lazarus and Folkman’s Psychological Stress and Coping Theory. In The Handbook of Stress and Health; John Wiley & Sons, Ltd.: Chichester, UK, 2017; pp. 349–364.

8. Pei, G.; Li, H.; Lu, Y.; Wang, Y.; Hua, S.; Li, T. Affective Computing: Recent Advances, Challenges, and Future Trends. Intell. Comput. 2024, 3, 0076.

9. User Experience: UX in IoT: Designing for a Connected Experience. Available online: https://fastercapital.com/content/User-experience--UX---UX-in-IoT--UX-in-IoT--Designing-for-a-Connected-Experience.html (accessed on 3 May 2025).

10. Cai, Y. Empathic Computing. In Ambient Intelligence in Everyday Life: Foreword by Emile Aarts; Cai, Y., Abascal, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 67–85.

11. Roshanaei, M.; Rezapour, R.; El-Nasr, M. Talk, Listen, Connect: Navigating Empathy in Human-AI Interactions. arXiv 2024, arXiv:2409.15550.

12. Lazarou, E.; Exarchos, T. Predicting Stress Levels Using Physiological Data: Real-Time Stress Prediction Models Utilizing Wearable Devices. AIMS Neurosci. 2024, 11, 76–102.

13. Vos, G.; Trinh, K.; Sarnyai, Z.; Azghadi, M. Generalizable Machine Learning for Stress Monitoring from Wearable Devices: A Systematic Literature Review. Int. J. Med. Inform. 2023, 173, 105026.

14. Mozos, O.; Sandulescu, V.; Andrews, S.; Ellis, D.; Bellotto, N.; Dobrescu, R.; Ferrandez, J. Stress Detection Using Wearable Physiological and Sociometric Sensors. Int. J. Neural Syst. 2017, 27, 1650041.

15. Schmidt, P.; Reiss, A.; Duerichen, R.; Marberger, C.; Van Laerhoven, K. Introducing WESAD, a Multimodal Dataset for Wearable Stress and Affect Detection. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, ICMI ’18, New York, NY, USA, 16–20 October 2018; pp. 400–408.

16. Luna-Perejón, F.; Montes-Sánchez, J.; Durán-López, L.; Vazquez-Baeza, A.; Beasley-Bohórquez, I.; Sevillano-Ramos, J. IoT Device for Sitting Posture Classification Using Artificial Neural Networks. Electronics 2021, 10, 1825.

17. Androutsou, T.; Angelopoulos, S.; Hristoforou, E.; Matsopoulos, G.; Koutsouris, D. A Multisensor System Embedded in a Computer Mouse for Occupational Stress Detection. Biosensors 2023, 13, 10.

18. Talaat, F.; El-Balka, R. Stress Monitoring Using Wearable Sensors: IoT Techniques in Medical Field. Neural Comput. Appl. 2023, 35, 18571–18584.

19. Bolpagni, M.; Pardini, S.; Dianti, M.; Gabrielli, S. Personalized Stress Detection Using Biosignals from Wearables: A Scoping Review. Sensors 2024, 24, 3221.

20. Joseph, J.; Judy, M. Dynamic Emotion Regulation through Reinforcement Learning: A Neurofeedback System with EEG Data and Custom Wearable Interventions. In Proceedings of the 2024 International Conference on Brain Computer Interface & Healthcare Technologies (iCon-BCIHT), IEEE, Thiruvananthapuram, India, 19–20 December 2024; pp. 169–176.

21. Patil, S.; Paithane, A. Optimized EEG-Based Stress Detection: A Novel Approach. Biomed. Pharmacol. J. 2024, 17, 2607–2616.

22. Gkintoni, E.; Aroutzidis, A.; Antonopoulou, H.; Halkiopoulos, C. From Neural Networks to Emotional Networks: A Systematic Review of EEG-Based Emotion Recognition in Cognitive Neuroscience and Real-World Applications. Brain Sci. 2025, 15, 220.

23. Ballesteros, J.A.; Ramírez V, G.M.; Moreira, F.; Solano, A.; Pelaez, C.A. Facial Emotion Recognition through Artificial Intelligence. Front. Comput. Sci. 2024, 6, 1359471.

24. Ma, F.; Sun, B.; Li, S. Facial Expression Recognition with Visual Transformers and Attentional Selective Fusion. IEEE Trans. Affect. Comput. 2023, 14, 1236–1248.

25. Hindu, A.; Bhowmik, B. An IoT-Enabled Stress Detection Scheme Using Facial Expression. In Proceedings of the 2022 IEEE 19th India Council International Conference (INDICON), Kerala, India, 24–26 November 2022; pp. 1–6.

26. WESAD (Wearable Stress and Affect Detection) Dataset. Available online: https://www.kaggle.com/datasets/orvile/wesad-wearable-stress-affect-detection-dataset (accessed on 3 May 2025).

27. AMIGOS: A Dataset for Affect, Personality and Mood Research on Individuals and Groups. Available online: http://www.eecs.qmul.ac.uk/mmv/datasets/amigos/ (accessed on 3 May 2025).

28. Li, H.; Sun, G.; Li, Y.; Yang, R. Wearable Wireless Physiological Monitoring System Based on Multi-Sensor. Electronics 2021, 10, 986.

29. Nancy, A.; Ravindran, D.; Raj Vincent, P.; Srinivasan, K.; Gutierrez Reina, D. IoT-Cloud-Based Smart Healthcare Monitoring System for Heart Disease Prediction via Deep Learning. Electronics 2022, 11, 2292.

30. Al-Atawi, A.; Alyahyan, S.; Alatawi, M.; Sadad, T.; Manzoor, T.; Farooq-i-Azam, M.; Khan, Z. Stress Monitoring Using Machine Learning, IoT and Wearable Sensors. Sensors 2023, 23, 8875.

31. Razavi, M.; Ziyadidegan, S.; Jahromi, R.; Kazeminasab, S.; Janfaza, V.; Mahmoudzadeh, A.; Baharlouei, E.; Sasangohar, F. Machine Learning, Deep Learning and Data Preprocessing Techniques for Detection, Prediction, and Monitoring of Stress and Stress-related Mental Disorders: A Scoping Review. arXiv 2024, arXiv:2308.04616.

32. Moshawrab, M.; Adda, M.; Bouzouane, A.; Ibrahim, H.; Raad, A. Reviewing Multimodal Machine Learning and Its Use in Cardiovascular Diseases Detection. Electronics 2023, 12, 1558.

33. Marković, D.; Vujičić, D.; Stojić, D.; Jovanović, Ž.; Pešović, U.; Ranđić, S. Monitoring System Based on IoT Sensor Data with Complex Event Processing and Artificial Neural Networks for Patients Stress Detection. In Proceedings of the 2019 18th International Symposium INFOTEH-JAHORINA (INFOTEH), Jahorina, Bosnia and Herzegovina, 20–22 March 2019; pp. 1–6.

34. Johnson, J.; Zawadzki, M.; Sliwinski, M.; Almeida, D.; Buxton, O.; Conroy, D.; Marcusson-Clavertz, D.; Kim, J.; Stawski, R.; Scott, S.; et al. Adaptive Just-in-Time Intervention to Reduce Everyday Stress Responses: Protocol for a Randomized Controlled Trial. JMIR Res. Protoc. 2025, 14, e58985.

35. Liu, M.; Ren, S.; Ma, S.; Jiao, J.; Chen, Y.; Wang, Z.; Song, W. Gated transformer networks for multivariate time series classification. arXiv 2021, arXiv:2103.14438.

36. Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 11106–11115.

37. Mamieva, D.; Abdusalomov, A.; Kutlimuratov, A.; Muminov, B.; Whangbo, T. Multimodal Emotion Detection via Attention-Based Fusion of Extracted Facial and Speech Features. Sensors 2023, 23, 5475.

38. Sun, F.T.; Cynthia, K.; Cheng, H.T.; Buthpitiya, S.; Collins, P.; Griss, M. Activity-Aware Mental Stress Detection Using Physiological Sensors. In Mobile Computing, Applications, and Services – MobiCASE 2010; Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer: Berlin/Heidelberg, Germany, 2012; Volume 76.

39. Irshad, R.; Sohail, S.; Hussain, S.; Madsen, D.; Zamani, A.; Ahmed, A.; Alattab, A.; Badr, M.; Alwayle, I. Towards Enhancing Security of IoT-Enabled Healthcare System. Heliyon 2023, 9, e22336.

40. Boussada, R.; Hamdane, B.; Elhdhili, M.; Saidane, L. PP-NDNoT: On Preserving Privacy in IoT-Based E-Health Systems over NDN. In Proceedings of the 2019 IEEE Wireless Communications and Networking Conference (WCNC), Marrakesh, Morocco, 15–18 April 2019; pp. 1–6.

41. The Fusion of Internet of Things, Artificial Intelligence, and Cloud Computing in Health Care. Available online: https://www.springerprofessional.de/the-fusion-of-internet-of-things-artificial-intelligence-and-clo/19562918 (accessed on 3 May 2025).

42. Al-Garadi, M.A.; Mohamed, A.; Al-Ali, A.K.; Du, X.; Ali, I.; Guizani, M. A Survey of Machine and Deep Learning Methods for Internet of Things (IoT) Security. IEEE Commun. Surv. Tutor. 2020, 22, 1646–1685.

43. Weber, R.H. Internet of Things – New security and privacy challenges. Comput. Law Secur. Rev. 2010, 26, 23–30.

44. Mittelstadt, B. Designing the Health-Related Internet of Things: Ethical Principles and Guidelines. Information 2017, 8, 77.

45. Pati, S.; Kumar, S.; Varma, A.; Edwards, B.; Lu, C.; Qu, L.; Wang, J.J.; Lakshminarayanan, A.; Wang, S.H.; Sheller, M.J.; et al. Privacy preservation for federated learning in health care. Patterns 2024, 5, 100974.

46. Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, Methods, and Future Directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

47. Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep Learning with Differential Privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

48. Zhang, H.; Wu, B.; Yang, X.; Yuan, X.; Liu, X.; Yi, X. Dynamic Graph Unlearning: A General and Efficient Post-Processing Method via Gradient Transformation. In Proceedings of the ACM on Web Conference 2025, Sydney, NSW, Australia, 28 April–2 May 2025; pp. 931–944.

49. Kumar, V.; Roy, D. Machine Unlearning Models for Medical Care and Health Data Privacy in Healthcare 6.0. In Exploration of Transformative Technologies in Healthcare 6.0 Eds.; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 273–302.

50. Wang, Z.J.; Kale, A.; Nori, H.; Stella, P.; Nunnally, M.; Chau, D.H.; Vorvoreanu, M.; Vaughan, J.W.; Caruana, R. GAM Changer: Editing Generalized Additive Models with Interactive Visualization. arXiv 2021, arXiv:2112.03245.

51. Meng, K.; Bau, D.; Andonian, A.; Belinkov, Y. Locating and Editing Factual Associations in GPT. arXiv 2023, arXiv:2202.05262.

52. Mishra, V.; Hao, T.; Sun, S.; Walter, K.N.; Ball, M.J.; Chen, C.H.; Zhu, X. Investigating the Role of Context in Perceived Stress Detection in the Wild. In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, UbiComp ’18, Singapore, 9–11 October 2018; pp. 1708–1716.

53. Başaran, O.T.; Can, Y.S.; André, E.; Ersoy, C. Relieving the burden of intensive labeling for stress monitoring in the wild by using semi-supervised learning. Front. Psychol. 2024, 14, 1293513.

54. Aqajari, S.A.H.; Labbaf, S.; Tran, P.H.; Nguyen, B.; Mehrabadi, M.A.; Levorato, M.; Dutt, N.; Rahmani, A.M. Context-Aware Stress Monitoring using Wearable and Mobile Technologies in Everyday Settings. arXiv 2023, arXiv:2401.05367.

55. Attallah, O.; Mamdouh, M.; Al-Kabbany, A. Cross-Context Stress Detection: Evaluating Machine Learning Models on Heterogeneous Stress Scenarios Using EEG Signals. AI 2025, 6, 79.

56. Amin, O.B.; Mishra, V.; Tapera, T.M.; Volpe, R.; Sathyanarayana, A. Extending Stress Detection Reproducibility to Consumer Wearable Sensors. arXiv 2025, arXiv:2505.05694.

57. Smets, E.; Rios Velazquez, E.; Schiavone, G.; Chakroun, I.; D’Hondt, E.; De Raedt, W.; Cornelis, J.; Janssens, O.; Van Hoecke, S.; Claes, S.; et al. Large-scale wearable data reveal digital phenotypes for daily-life stress detection. NPJ Digit. Med. 2018, 1, 67.

58. Mundnich, K.; Booth, B.M.; L’Hommedieu, M.; Feng, T.; Girault, B.; L’Hommedieu, J.; Wildman, M.; Skaaden, S.; Nadarajan, A.; Villatte, J.L.; et al. TILES-2018, a longitudinal physiologic and behavioral data set of hospital workers. Sci. Data 2020, 7, 354.

59. Wang, J.C.; Chien, W.S.; Chen, H.Y.; Lee, C.C. In-The-Wild HRV-Based Stress Detection Using Individual-Aware Metric Learning. In Proceedings of the 2024 IEEE 20th International Conference on Body Sensor Networks (BSN), Chicago, IL, USA, 15–17 October 2024; pp. 1–4.

| Dataset | # Subjects | Modalities | Scenario | Key Features and Limitations |
|---|---|---|---|---|
| WESAD | 15 | Physiological: ECG, EDA, EMG, RESP, TEMP, and ACC (chest); BVP, EDA, TEMP, and ACC (wrist). Subjective: Questionnaires (PANAS and STAI). | Lab | Features: High-res multimodal data from two locations; includes three affective states (baseline, stress, and amusement). Limitations: Very small sample size; controlled lab setting limits ecological validity. |
| AMIGOS | 40 | Physiological: EEG, ECG, and GSR. Behavioral: Frontal video and full-body video (RGB and depth). Subjective/Trait: Self-assessment (valence and arousal), personality (Big Five), and mood (PANAS). | Lab | Features: Includes personality/mood data for individual differences; explores social context (solo/group viewing). Limitations: Relatively small sample size; lab setting. |
| SWEET | >1000 (overall); 240 (cited cohort) | Physiological: ECG, SC, ST, and ACC (patches and wristbands). Contextual: GPS, phone activity, and noise level. Subjective: Periodic questionnaires (EMA). | In the wild | Features: Large-scale, real-world data over 5 days; includes smartphone context; high ecological validity. Limitations: Stress labels based only on periodic self-reports, which can be less precise. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Paniagua-Gómez, M.; Fernandez-Carmona, M. Trends and Challenges in Real-Time Stress Detection and Modulation: The Role of the IoT and Artificial Intelligence. Electronics 2025, 14, 2581. https://doi.org/10.3390/electronics14132581
