Abstract

Research on Virtual Reality (VR) interfaces for distant object interaction has been carried out to improve user experience. Since hand-only interfaces and gaze-only interfaces have limitations such as physical fatigue or restricted usage, VR interaction interfaces using both gaze and hand input have been proposed. However, current gaze + hand interfaces still have restrictions, such as difficulty in translating objects along the gaze ray direction, less realistic interaction methods, or limited rotation support. This study aims to design a new distant object interaction technique that supports hand-based interaction with a high degree of freedom in immersive VR. We developed GazeHand2, a hand-based object interaction technique featuring a new depth control that enables free object manipulation in VR. Building on the strengths of the original GazeHand, GazeHand2 controls the rate of change of the gaze depth using the relative position of the hand, allowing users to translate an object to any position. To validate our design, we conducted a user study on object manipulation comparing GazeHand2 with two other gaze + hand interfaces (Gaze+Pinch and ImplicitGaze). Results showed that, compared with the other conditions, GazeHand2 reduced hand movements by 39.3% to 54.3% and head movements by 27.8% to 47.1% in the 3 m and 5 m tasks. It also significantly improved overall user experience (0.69 to 1.12 points higher than Gaze+Pinch and 1.18 to 1.62 points higher than ImplicitGaze). Furthermore, over half of the participants preferred GazeHand2 because it supports convenient and efficient object translation and realistic hand-based object manipulation. We conclude that GazeHand2 supports simple and effective distant object interaction with reduced physical fatigue and better user experience than the other interfaces in immersive VR. Finally, we suggest future designs to improve interaction accuracy and user convenience.

1. Introduction

Interacting with virtual objects is fundamental in Virtual Reality (VR) [1,2,3,4]. As a result, object selection and manipulation have long been studied to improve user experience and performance [1,2,5,6,7]. Specifically, many researchers have explored object interaction methods using natural body inputs such as hands [1,5,8] and eye gaze [9,10], or combining gaze and hand inputs [6,7,11,12], because such natural inputs are crucial for user experience in VR [1,6,13]. To understand how natural inputs have been utilized in object interaction research, we introduce previous works that involve natural inputs such as gaze and hand movements. We also review works particularly related to the manipulation of distant objects in virtual environments, because the human body has a physically limited interaction range. Finally, we identify the limitations of current work and propose our approach.

1.1. Interfaces Using Gaze or Hand

Common interfaces for object interaction include handheld devices and natural inputs. Although handheld controllers are less physically demanding than bare-hand input, they are less natural [14]. Thus, researchers have explored natural inputs such as gaze or hand inputs for virtual object interaction.

1.1.1. Hand-Only Interface

Since humans use their hands to interact with objects in real life, direct object manipulation based on hand position and gesture [1,15] has become an interesting research topic. The simple virtual hand [1] directly maps the pose of the real hands onto virtual hand meshes. Because it works in the same way as real hands, it has a limited interaction range [16] and requires extra locomotion [17] to interact with out-of-reach objects.

To solve this problem, many researchers have proposed methods of interacting with distant objects. Stoakley et al. introduced World-In-Miniature [18], which allows users to select and manipulate any object using their hands and a small copy of the virtual environment. However, indicating a position in the miniature is not direct interaction and may result in misplacement of the object because of the miniature’s small scale. Other researchers have increased the interaction range using hand amplification [19], which multiplies the user’s hand movements. For example, with an amplification ratio of 1:2, moving the real hand 10 cm forward moves the virtual hand 20 cm forward. This concept has been widely adopted. Poupyrev et al. proposed the Go-Go interaction [8], a technique that extends the reach of the virtual hand using a non-linear (quadratic, 1:N²) amplification function. Specifically, the hand movement is amplified only when the hand extends beyond two-thirds of the user’s maximum arm reach. Similarly, Bowman and Hodges presented the direct HOMER [5], which combines ray-casting [20] selection with a linearly amplified hand (1:N). However, both techniques still face a precision problem: a small hand movement can translate the virtual object too quickly. To address this issue, Wilkes and Bowman [21] improved the direct HOMER by applying a dynamic mapping based on the current hand speed. Additionally, Schjerlund et al. [22] mapped a user’s single hand to multiple virtual hands to reach distant objects easily.
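The two amplification schemes described above can be sketched as follows. This is a minimal illustration, not the original implementations: the Go-Go threshold follows the two-thirds-of-arm-reach rule stated in the text, while the quadratic coefficient `k` and the example arm length are hypothetical values chosen only for demonstration.

```python
def linear_amplify(hand_offset_m: float, ratio: float) -> float:
    """Linear (1:N) amplification: the virtual hand moves `ratio` times as far."""
    return hand_offset_m * ratio


def gogo_virtual_distance(real_dist_m: float, arm_length_m: float,
                          k: float = 1.0 / 6.0) -> float:
    """Go-Go-style non-linear mapping of the hand's distance from the body.

    Within two-thirds of the arm length the mapping is 1:1; beyond that
    threshold a quadratic term is added. `k` is a hypothetical coefficient.
    """
    threshold_m = (2.0 / 3.0) * arm_length_m
    if real_dist_m < threshold_m:
        return real_dist_m
    return real_dist_m + k * (real_dist_m - threshold_m) ** 2


# The 1:2 example from the text: 10 cm of real motion becomes 20 cm.
print(linear_amplify(0.10, 2.0))  # prints 0.2
```

The linear mapping amplifies every movement equally, which is why precision suffers at large ratios; the Go-Go mapping keeps near-body motion 1:1 and only amplifies at full extension.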

Nevertheless, hand-only object manipulation easily leads to arm fatigue [23], resulting in a lower user experience. Reducing hand movement is therefore important for improving the user experience of object manipulation.

1.1.2. Gaze-Only Interface

Gaze has been investigated as a novel input [24], especially for object selection, owing to its speed [25,26] and interaction range [27]. However, gaze selection can cause the Midas Touch Problem [28,29], a common problem in gaze-based interfaces: when gaze is used for object selection, a user may look at an object without any intention of selecting it, yet the system may unexpectedly select it. To relieve the Midas Touch Problem, several researchers have introduced a confirmation step [1,30], such as dwelling on or following static objects [10,31,32,33] or moving objects [9,34], or sometimes blinking [35,36,37,38,39]. For instance, Khamis et al. [9] selected a distant moving object by continuously looking at it in a VR environment, and Lu et al. [38] used blinking to select text buttons in a VR User Interface (UI).

Although these methods can reduce the Midas Touch Problem, most gaze-only interfaces are limited to object selection rather than manipulation because the gaze has no information about rotation or scale. Interestingly, Liu et al.’s system [10] achieved both object selection and translation on a selected plane using only dwell time interaction. However, it requires the user to dwell twice to finish translation, resulting in a longer interaction time.

1.2. Interfaces Using Both Gaze and Hand

Single-input interfaces have apparent limitations (i.e., arm fatigue for hand-only interfaces and the Midas Touch Problem for gaze-only interfaces). To overcome them, other researchers have sought better object interaction metaphors by combining multiple inputs. The MAGIC [40] pointing system proposed by Zhai et al. combined gaze and mouse input. It “translates” the computer mouse cursor using gaze, which reduces the need for mouse control and shortens interaction time. This concept has been applied in multiple follow-up systems [7,27,41,42,43,44,45,46]. In this section, we focus on multimodal interfaces that use both gaze and hand input.

1.2.1. Gaze+Screen Touch Interface

Several researchers have integrated gaze and touch input through a screen interface. For example, Stellmach and Dachselt [44] used gaze to position a distant object and touch input on a smartphone screen to manipulate the object projected onto a wall screen. Pfeuffer et al. [27] presented a similar method on a large touchscreen, using only gaze for selection. Turner et al. [45] developed a system for translating objects between different displays based on gaze positioning and touch input, and later extended it [46] with multitouch input and rotation. Additionally, Simeone et al. [47] used two fingers and gaze to scale a 3D object along three axes on a touchscreen, one of the early examples of 3D object manipulation using gaze.

However, such object manipulation interfaces using screen input are less immersive because they run on a 2D interface rather than in a full 3D virtual environment.

1.2.2. Gaze+Hand Gesture Interface for Object Selection

Researchers then began integrating hand gestures with gaze for selecting distant objects. Kytö et al. [41] suggested a target object selection method in Augmented Reality (AR), which used gaze to quickly move the cursor and an “air-tap” hand gesture (similar to the pinch gesture) to confirm the selection. Other researchers have applied both inputs to select 2D menus or text [37,48]. Reiter et al. [49] attached a menu to the user’s arm and selected its items using a combination of gaze, hand gestures, and wrist movements. Lystbæk et al. [32], Sidenmark et al. [50], and Wagner et al. [51] suggested selection methods whereby a selection is confirmed when the index finger is within the line of sight of the target indicated by the gaze pointer. Shi et al. [39] developed a 2D region selection method using gaze and hand gestures, which can select multiple items inside the region.

Nevertheless, these works mostly focused on selecting 2D UI elements, which differs from full 3D object manipulation, including translation and rotation.

1.2.3. Gaze+Hand Gesture Interface for Object Selection and Manipulation

Chatterjee et al. designed Gaze+Gesture [35], which triggers multiple functions using gaze and hand gestures. It can control window-based applications using both modalities. However, it was built for a desktop computer environment rather than an immersive virtual environment.

Recently, several studies have explored distant object selection and manipulation in 3D environments using both gaze and hand input with a Head-Mounted Display (HMD). Gaze+Pinch [6], proposed by Pfeuffer et al., uses the hand pinch gesture to select and control an object and applies a heuristic whereby the object closest to the user’s gaze ray is always indicated. It also applies hand amplification, in which the movement of a selected object is proportional to the distance between the object and the user, similar to the direct HOMER [5]. However, its rotation support is limited because rotating an object requires two hands, reducing accessibility.

Ryu et al. [12] suggested another interface, called GG (Gaze–Grasp) Interaction, for distant object interaction in an AR environment. While a user is looking at a specific area, any objects within a specific threshold of the gaze ray (10 cm in their study) are automatically grouped and become candidates for selection. The user can then select an object by adjusting their hand’s grab size, determined by the minimum distance between the thumb and the other fingers; in short, the selected object is one whose width matches this finger distance. However, this interface also has limitations: the selected object can be translated only within the hand-reachable area, and selection can be ambiguous if multiple objects have the same width.
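The candidate grouping and width matching described for GG Interaction can be sketched as below. This is our reading of the description, not Ryu et al.’s implementation: the `SceneObject` type, measuring the distance from object centers to the gaze ray, and breaking ties with `min` are assumptions; only the 10 cm threshold comes from the text.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class SceneObject:
    name: str
    center: Vec3
    width_m: float


def distance_to_ray(point: Vec3, origin: Vec3, direction: Vec3) -> float:
    """Shortest distance from `point` to the ray origin + t * direction (t >= 0)."""
    length = math.sqrt(sum(c * c for c in direction))
    unit = tuple(c / length for c in direction)
    rel = tuple(p - o for p, o in zip(point, origin))
    t = max(0.0, sum(r * u for r, u in zip(rel, unit)))  # clamp: a ray, not a line
    closest = tuple(o + t * u for o, u in zip(origin, unit))
    return math.dist(point, closest)


def gg_select(objects, gaze_origin, gaze_dir, grab_aperture_m, threshold_m=0.10):
    """Group objects near the gaze ray, then pick the one whose width best matches the grab."""
    candidates = [o for o in objects
                  if distance_to_ray(o.center, gaze_origin, gaze_dir) <= threshold_m]
    if not candidates:
        return None
    return min(candidates, key=lambda o: abs(o.width_m - grab_aperture_m))
```

The sketch also makes the ambiguity noted above concrete: two candidates with equal widths produce equal keys, and the choice between them is arbitrary.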

Yu et al.’s work [7], which introduced ImplicitGaze and 3DMagicGaze, performs object translation using gaze after gaze-based selection. Building on the concept of MAGIC [40] and Turner et al.’s work [46], they introduced a safe region in which gaze-based translation is disabled and only hand-based interaction is allowed. If the user’s gaze moves outside the region, the system switches the input to gaze and translates the object on a sphere defined by the current gaze distance. However, they did not allow the distance between the object and the user (referred to as ‘depth’ in their work) to change, which made translating the object along the gaze ray direction more difficult than translating it in other directions. They also implemented their interfaces using a handheld controller instead of hand tracking, reducing naturalness. In addition, these interfaces have a two-step interaction process, which may confuse users when continuous object interactions are needed.

Bao et al. [11] suggested Gaze Beam Guided Interaction that translates an object along the gaze beam (an adjusted gaze ray). The interface consists of the following three interaction steps: selecting an object using gaze and hand inputs, setting the direction of the gaze beam, and deciding how far the object will be translated. Both parameters are controlled using the hands. Although this work was a milestone for translating objects along the depth axis, the three-step interaction process may be complex for users. Furthermore, this work focused solely on object translation rather than rotation.

Jeong et al. introduced GazeHand [52], which supports direct hand-based selection and manipulation of distant objects. Although it builds on hand-only interfaces [1,8,22], it has a distinctive feature compared with other gaze + hand interfaces: whereas ImplicitGaze does not visualize a virtual hand, GazeHand allows direct hand manipulation using a virtual hand placed near the gaze collision point. It also supports quick object translation using gaze movement by translating the user’s “virtual hands” instead of the virtual objects, which gives users the sense of continuously using their hands to interact with objects. Nevertheless, because it requires additional objects for the gaze ray to collide with in order to create a collision point, its gaze depth control is still limited, making it less efficient for translating an object along the gaze direction.

1.3. Distance Control for Object Interaction in VR

Meanwhile, a few studies have addressed depth-axis distance control for object interaction, mostly in hand-based interactions. One example is hand amplification [19], which transforms a small real movement into a larger virtual movement. The amount of movement is calculated using mapping functions, either linear (e.g., scalar multiplication [5,6]) or non-linear (e.g., a quadratic function [8]). However, hand amplification may result in imprecise distance control [8]. Alternatively, Bowman and Hodges [5] introduced several distance control methods. One of them splits the user’s reach into three regions: if the hand is near the user, the distance decreases; if the hand is far from the user, the distance increases; otherwise (if the hand is in the middle region), the distance remains the same. In their work, the distance can be controlled using two buttons (i.e., extend and retract). This concept was later adapted in Bao et al.’s work [11], which references the distance between the user and their hand.
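The three-region scheme can be written as a per-frame update with a constant speed, which is what limits it to three discrete rates (retract, hold, extend). The zone boundaries, speed, and minimum depth below are hypothetical parameters supplied by the caller; this is a sketch of the idea, not Bowman and Hodges’ code.

```python
def three_zone_depth_step(depth_m: float, hand_dist_m: float,
                          near_m: float, far_m: float,
                          speed_m_s: float, dt_s: float,
                          min_depth_m: float = 0.0) -> float:
    """Advance the object depth by one frame using three discrete zones.

    hand_dist_m: current distance between the user's body and hand.
    near_m / far_m: hypothetical zone boundaries.
    """
    if hand_dist_m < near_m:      # hand near the body: retract
        rate = -speed_m_s
    elif hand_dist_m > far_m:     # hand far from the body: extend
        rate = speed_m_s
    else:                         # middle zone: hold the current depth
        rate = 0.0
    return max(min_depth_m, depth_m + rate * dt_s)
```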

1.4. Contribution

In summary, current gaze + hand interfaces have several restrictions: non-immersive environments [35], limited or no rotation support [6,11], difficulty in translating along the gaze ray [7,12,52], multi-step interaction processes [6,11], or the use of gaze only for object selection [6,12]. Therefore, we set a unified research question: Can we design a VR interaction method that supports hand-based free selection, rotation, and quick translation along all axes using only a single grab-and-release gesture?

Specifically, GazeHand [52] already supports hand-based selection, free rotation, and gaze-based quick translation along the horizontal (left–right) and vertical (up–down) axes in immersive VR. Adding a new method of object translation along the depth axis would therefore address the remaining limitation and give VR users a free object interaction experience.

In this paper, we propose the extension of the GazeHand interface named GazeHand2 (Figure 1) by adding continuous depth control along a gaze ray, which enables objects to be translated more freely than with the original technique. Gaze depth control is easily achieved by moving the hand forward or backward.

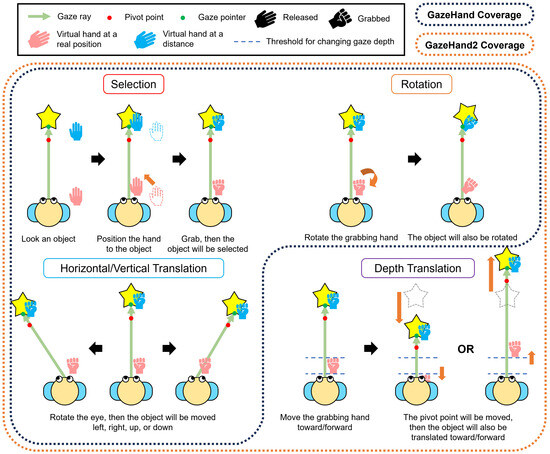

Figure 1.

A concept image of all interactions from GazeHand2, with a functional comparison with the original GazeHand.

In addition, the original GazeHand study [52] only compared it with a modified Go-Go [8] rather than with other recent gaze + hand interfaces, which weakens the comparison. Furthermore, its object manipulation studies were conducted at a single object distance, whereas comparing interfaces at various distances can reveal the effect of distance on interface performance. Therefore, we evaluated GazeHand2 against two well-known VR interaction techniques that use both gaze and hand inputs, Gaze+Pinch [6] and ImplicitGaze [7]. After the study, we collected participants’ subjective feedback and suggested design improvements based on it.

This study aims to design a new VR object interaction interface that satisfies the following two aspects: (1) maintaining hand-based natural interaction and (2) adding freedom of object selection, translation, and rotation. In summary, our work contributes in the following ways:

- We proposed the extension of the GazeHand interface, named GazeHand2, that supports hand-based free selection, rotation, and quick translation in all directions.

- We compared and evaluated the interface with two popular interaction methods that combine both gaze and hand inputs.

- We suggested implications and future directions for GazeHand2.

2. Materials and Methods

We describe the design and implementation of the GazeHand2 system. Furthermore, to validate the design, we developed an experimental system and conducted a user study comparing it with other interfaces. Our study received approval from the institutional review board of the first author’s institution.

2.1. System Design

We first explain the original GazeHand interface with its limitations and then introduce GazeHand2, which resolves the limitations.

2.1.1. GazeHand

The GazeHand interface [52] is intended for the quick selection and translation of distant objects, supporting low-effort and natural hand-based interaction. Its two main concepts are (1) using hand interaction to support natural interaction and (2) using gaze translation to enable quick object translation. To achieve this, the system combines the strengths of gaze interaction and the virtual hand by positioning the virtual hand near the gaze point. However, fixing the hand exactly to the gaze point is unnatural because it restricts the freedom of hand movement. Furthermore, when interacting with an object, there should be some space between the user and the object. Therefore, GazeHand creates a new point, called the pivot point, which lies on the gaze ray slightly closer to the user than the gaze point. The distance between the gaze point and the pivot point is set to around two-thirds of the average adult arm length (around 50 cm). Finally, the system calculates where the virtual hands should be placed by mapping the user’s position to the pivot point. This enables users to interact with distant objects as if the objects were 50 cm in front of them. It works by translating the ‘virtual hands’ rather than the virtual objects grabbed by the hands. The virtual hand movement is synchronized with the real hand movement (1:1 mapping) so that users feel they are interacting with the objects directly and naturally. The user can also freely rotate the object with their hands.

Since the pivot point determines the position of the virtual hands, an unintended change of the pivot point can negatively impact the user experience. For example, rotating the selected object can change the collision point and, consequently, the position of the virtual hands. Therefore, GazeHand does not update the pivot point while the user is holding an object with a hand grab gesture. To update the pivot point (i.e., the depth distance of the selected object), the user needs to gaze at a reference object in a nearer or farther position, which resets the gaze point and, consequently, the pivot point.
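The pivot-point placement and the freeze-while-grabbing rule can be sketched geometrically. This is a minimal sketch under stated assumptions, not the GazeHand source: we treat the “user’s position” as the eye/head position that is mapped onto the pivot, use a fixed 0.5 m back-off, and all function names are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]
PIVOT_BACKOFF_M = 0.5  # ~two-thirds of the average adult arm length, per the text


def _add(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x + y for x, y in zip(a, b))


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x - y for x, y in zip(a, b))


def _scale(a: Vec3, s: float) -> Vec3:
    return tuple(x * s for x in a)


def pivot_point(eye: Vec3, gaze_point: Vec3, backoff_m: float = PIVOT_BACKOFF_M) -> Vec3:
    """Point on the gaze ray, `backoff_m` closer to the user than the gaze point."""
    ray = _sub(gaze_point, eye)
    length = math.sqrt(sum(c * c for c in ray))
    back = min(backoff_m, length)  # never place the pivot behind the eye
    return _sub(gaze_point, _scale(ray, back / length))


def virtual_hand_position(real_hand: Vec3, user_pos: Vec3, pivot: Vec3) -> Vec3:
    """Shift the real hand by the user-to-pivot offset; hand motion stays 1:1."""
    return _add(real_hand, _sub(pivot, user_pos))


def update_pivot(current, eye: Vec3, gaze_point: Vec3, grabbing: bool):
    """Keep the pivot frozen while an object is grabbed; otherwise re-derive it."""
    if grabbing and current is not None:
        return current
    return pivot_point(eye, gaze_point)
```

Because the hand offset is only translated, never scaled, a 10 cm real hand movement still moves the virtual hand 10 cm; that is the 1:1 mapping described above.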

GazeHand initially shows a gaze pointer with a gaze ray. When the user looks at an object, a pivot point is calculated, and a virtual hand is placed around the object. The user can then select the object by grabbing it, after which the object can be translated quickly to the left, right, up, or down because it follows the gaze point. The user can also translate and rotate the object precisely using their hand. Finally, the user finishes the interaction by releasing their grip. In summary, GazeHand supports selection, rotation, and gaze-based horizontal (left–right) and vertical (up–down) translation of virtual objects. All supported operations are described in the blue dot area of Figure 1.

In GazeHand, we identified a problem: if there is no additional object in the VR scene, translating an object along the gaze can take too long because the object’s movement in depth always depends on the user’s hand [7]. Without another object, GazeHand does not create a new pivot point, so the user must perform multiple grab–release actions involving a large amount of hand movement to translate the object along their gaze. To solve this problem, we reasoned that precise hand manipulation does not require much space (e.g., rotation does not require hand translation), so reserving a small area would still allow precise hand manipulation, while the remaining area could be used to control the gaze depth without placing other objects.

2.1.2. GazeHand2

Building on all functions of GazeHand, GazeHand2 (Figure 1 and Figure 2) adds a new gaze depth control method. To change the gaze depth, we adapted the distance control concept of Bowman and Hodges [5], which splits a single space into three zones. In their work, a constant extension and retraction speed supports only three discrete rates, which may not satisfy users with various needs: users may sometimes want to translate an object quickly for faster manipulation, or slowly for precise manipulation. In our interface, the rate of gaze depth change instead varies continuously with the relative position of the hand, giving users a continuous choice of translation speed.

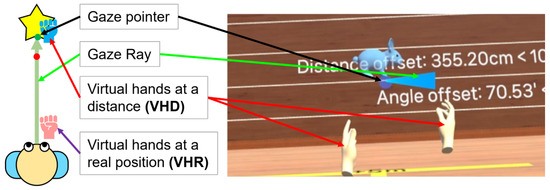

Figure 2.

The main components of the GazeHand series. The gaze pointer indicates whether the gaze collides with any object. There are two types of virtual hands. Virtual hands at a real position (VHR) appear at the user’s current hand position; they do not interact with virtual objects and only help users identify their current hand movement. The VHR is visualized in Figure 3. Virtual hands at a distance (VHD), which can interact with any virtual object, appear around the user’s gaze point to grab distant objects.

To control the speed and direction of the gaze depth change, we added a direction indicator: a small circle on a panel UI with ‘push’ and ‘pull’ labels in front of the user (see Figure 3b–d). Once the user selects a virtual object by grabbing it with their virtual hand, the UI appears with the indicator at the center of the view. To translate the selected object along their gaze (i.e., forward or backward), the user simply moves their hand in the desired direction. The object then starts to move along the gaze, and the indicator moves into either the push or the pull area (Figure 3c). The UI is invisible when no object is selected; that is, the indicator and the UI disappear when the user releases their grip.

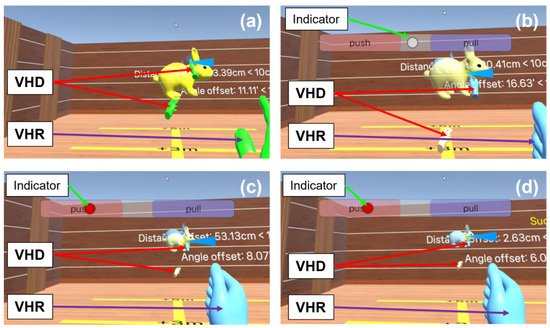

Figure 3.

Step-by-step use of GazeHand2 to complete a task in the user study. The initial state is described in Figure 2. (a) Once a user looks at an object, the virtual hands at a distance (VHD) jump to around the virtual object, and the user can easily grab the object to select it. (b) Upon grabbing it, an indicator (a small circle) with a panel UI for depth control appears. (c) If the user moves their hand forward at a desired speed and holds it there, the object moves forward along the gaze. The user can see how fast the object is moving from the indicator’s position in the push area. Note that the virtual hands at a real position (VHR) have moved further forward compared with (a,b). (d) The task is completed when the object reaches the target pose within the specified threshold.

The translation speed of the object depends on how far the current hand position is from its initial position (see the ‘Depth Translation’ part in Figure 1). If the hand is near its initial position (i.e., the indicator is in the center zone), the depth does not change. If the hand moves closer to the user, the depth decreases; similarly, if the hand moves forward beyond the threshold, the depth increases. In short, the speed depends on the hand’s relative position: the farther the hand moves, the faster the object moves. In our pilot test with eight participants (three males and five females; age M = 25.00, SD = 2.88), the average speed rate was 14.4 cm/s. Additionally, a middle zone in front of and behind the initial hand position allows users to perform precise object manipulation without changing their gaze depth, but it does not need to be large. Finally, we set the distance threshold to ±5 cm and the speed to (|distance| − 5) × 14.4 cm/s, where distance is the hand’s displacement from its initial position in centimeters. As a result, the user can control the object’s speed and does not need any additional device to control their gaze depth.
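The depth-control rule above can be sketched as a per-frame update. This is a minimal sketch, not the GazeHand2 source: we assume the hand displacement is measured in centimeters along the forward axis (positive means pushed away from the body), treat (|distance| − 5) as a dimensionless count of centimeters beyond the dead zone so the result is in cm/s, and add a lower depth clamp as a hypothetical safeguard.

```python
import math

DEAD_ZONE_CM = 5.0   # +/-5 cm middle zone from the text
GAIN_PER_S = 14.4    # 14.4 from the text, applied per cm beyond the dead zone (assumed)


def depth_rate_cm_s(hand_offset_cm: float) -> float:
    """Signed gaze-depth change rate for a hand displacement from its grab position.

    Positive offsets (hand pushed forward) increase depth; negative offsets
    (hand pulled toward the body) decrease it.
    """
    excess_cm = abs(hand_offset_cm) - DEAD_ZONE_CM
    if excess_cm <= 0.0:
        return 0.0  # inside the middle zone: depth is held for precise manipulation
    return math.copysign(excess_cm * GAIN_PER_S, hand_offset_cm)


def step_depth_cm(depth_cm: float, hand_offset_cm: float, dt_s: float,
                  min_depth_cm: float = 0.0) -> float:
    """Integrate the depth over one frame; the lower clamp is a hypothetical safeguard."""
    return max(min_depth_cm, depth_cm + depth_rate_cm_s(hand_offset_cm) * dt_s)
```

For example, holding the hand 10 cm forward gives (10 − 5) × 14.4 = 72 cm/s, so over half a second an object at 300 cm moves to about 336 cm. Unlike the three-zone scheme, the rate grows continuously with displacement.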

In addition, the colors of the hand and the gaze pointer do not change in the original GazeHand, which makes it less intuitive for users to tell whether they can interact with an object. To clarify this, GazeHand2 applies different colors to the virtual hands and the gaze pointer. The initial hand color is light yellow (not interacting, Figure 2). Once either distant virtual hand collides with an object, that hand turns green (touching, Figure 3a), which tells the user that they can select the indicated object with that hand. When the user grabs the object with the colored hand, the hand turns blue (interacting, Figure 3b). After the user releases the object and moves away from it, the hand returns to light yellow (not interacting). The gaze pointer is initially blue (see Figure 2 and Figure 3a) and turns yellow when the gaze collides with an object (see Figure 3b–d).

Note that the two virtual hands work independently: users can use each hand to select and manipulate a different object, and the color of each hand changes separately. In this way, users can select, translate, and rotate two objects simultaneously using two hands.
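The color feedback described above amounts to a small per-hand state mapping plus a gaze-pointer rule. The sketch below is illustrative only: the enum and function names are hypothetical, and we assume “grabbing” takes precedence over “touching” since a grabbed object is also being touched.

```python
from enum import Enum


class HandColor(Enum):
    """Virtual hand feedback colors described for GazeHand2."""
    NOT_INTERACTING = "light yellow"
    TOUCHING = "green"
    INTERACTING = "blue"


def hand_color(touching: bool, grabbing: bool) -> HandColor:
    """Evaluated separately for each hand, since the two hands work independently."""
    if grabbing:
        return HandColor.INTERACTING
    if touching:
        return HandColor.TOUCHING
    return HandColor.NOT_INTERACTING


def gaze_pointer_color(gaze_hits_object: bool) -> str:
    """Blue by default, yellow while the gaze collides with an object."""
    return "yellow" if gaze_hits_object else "blue"


# Left hand grabbing one object while the right hand only touches another.
left, right = hand_color(True, True), hand_color(True, False)
print(left.value, "/", right.value)  # prints blue / green
```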

2.2. Implementation

The GazeHand2 system was developed using Unity 2021.3.21f1 and Meta XR SDK Package 50.0. The system runs on a PC (Microsoft Windows 11, AMD Ryzen 7 5800X 8-Core Processor 3.80 GHz CPU, 32 GB RAM, and NVIDIA GeForce RTX 3060 graphics card) connected to a Meta Quest Pro HMD, which has embedded eye-tracking sensors and a display resolution of 1800 × 1920 pixels per eye.

2.3. User Study Design

To validate our design, we conducted a user study comparing GazeHand2 with other interfaces on an object manipulation task. The study used a 3 × 3 within-subject design with two independent variables: Interface (GazeHand2, Gaze+Pinch, and ImplicitGaze; see Section 2.6) and Distance (1 m, 3 m, and 5 m; see Figure 4e). The order of the interfaces was counterbalanced using a balanced Latin square design. Distances were presented in ascending order (1 m, 3 m, 5 m) so that participants could gradually adapt to the task difficulty.
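The text does not state how the balanced Latin square was constructed, so the sketch below shows one standard construction (the Williams/Bradley design) as an assumption. For an odd number of conditions, odd-numbered participants receive the reversed sequence; with three interfaces this yields six distinct orders. Assigning participant IDs 0 to 23 to rows in sequence is also an assumption.

```python
from collections import Counter


def balanced_latin_square(conditions, participant_id: int):
    """Return the condition order for one participant (Williams-style design).

    For an odd number of conditions, odd-numbered participants receive the
    reversed sequence so that first-order carryover effects are balanced.
    """
    n = len(conditions)
    order = []
    j = h = 0
    for i in range(n):
        if i < 2 or i % 2 != 0:
            val = j
            j += 1
        else:
            val = n - h - 1
            h += 1
        order.append(conditions[(val + participant_id) % n])
    if n % 2 != 0 and participant_id % 2 != 0:
        order.reverse()
    return order


interfaces = ["GH2", "GP", "IG"]
counts = Counter(tuple(balanced_latin_square(interfaces, p)) for p in range(24))
# With 24 participants, each of the 6 resulting orders is used 4 times.
```

With three conditions the six orders happen to be every permutation, so the design is fully counterbalanced; for larger even numbers of conditions the construction needs only n orders.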

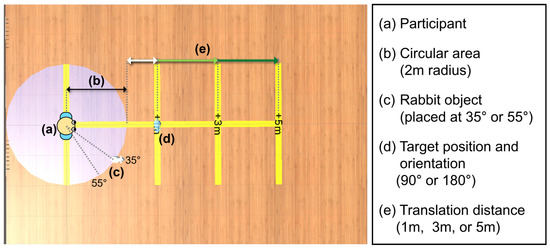

Figure 4.

A top-down illustration of the task with the virtual environment for the user study.

For each condition, each participant performed the manipulation task four times (2 × 2), covering two factors: the lateral distance of the white rabbit (35° and 55° [7]) and the target orientation (90° and 180°). Both angles were measured clockwise. The lateral distance (see Figure 4c) indicates the angular distance between the white rabbit and the target object as seen from the participant. In total, each participant completed 36 trials (4 trials × 3 distances × 3 interfaces). Additionally, for fairness, we placed the rabbit on the right side of the circle for right-handed participants (n = 22) and on the left side for left-handed participants (n = 2).
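The trial structure can be enumerated to check the count. In this sketch, nesting interfaces outside distances is an assumption for illustration (the text only fixes the ascending distance order and the 90°–35°, 90°–55°, 180°–35°, 180°–55° order within a block), and the dictionary keys and `trial_list` function are hypothetical.

```python
from itertools import product

DISTANCES_M = (1, 3, 5)          # ascending, as in the study
ORIENTATIONS_DEG = (90, 180)     # target orientation (clockwise)
LATERAL_DEG = (35, 55)           # lateral distance of the white rabbit (clockwise)


def trial_list(interface_order, handedness="right"):
    """Enumerate one participant's trials.

    Nesting interfaces outside distances is an assumption for illustration.
    The rabbit is placed on the participant's dominant-hand side.
    """
    side = "right" if handedness == "right" else "left"
    return [
        {"interface": iface, "distance_m": d, "orientation_deg": o,
         "lateral_deg": lat, "side": side}
        for iface, d, o, lat in product(interface_order, DISTANCES_M,
                                        ORIENTATIONS_DEG, LATERAL_DEG)
    ]


trials = trial_list(["GH2", "GP", "IG"])
print(len(trials))  # prints 36 (4 trials x 3 distances x 3 interfaces)
```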

2.4. Environment and Task

The task was adapted from Yu et al.’s study [7] and expanded to a larger space for distant object interaction. This variation could provide insight into the effect of the size of the virtual environment on similar interfaces. Note that Gaze+Pinch (GP) only allows rotation about a single axis (mostly the Y-axis), which should be considered for a fair comparison.

The task is conducted in a virtual room (12 m × 8 m, Figure 4). In the VR environment, there is a circular area with a 2 m radius on the floor around the participant (Figure 4a,b). A virtual white rabbit (20 cm × 25 cm × 40 cm, Figure 4c) always appears 2 m away from the participant (i.e., on the perimeter of the circular area). The goal of the task is to place the white rabbit in a specified position and orientation. The target pose is shown as a semi-transparent blue rabbit, always at the center of the environment (Figure 4d). Participants select the white rabbit and manipulate it to match the blue one. Both rabbits are 1.2 m above the floor, and the thresholds are set to a distance of 10 cm and an angle of 10°. When the object is within the thresholds (Figure 3d), the task timer stops automatically and the final data are recorded. Participants can see the current distance and angle differences as text on the front wall.
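The completion check can be sketched as below. The text gives only the 10 cm and 10° thresholds; measuring the angle as the smallest rotation between two unit quaternions and using strict “less than” comparisons are our assumptions, and the helper names are hypothetical.

```python
import math

POS_TOL_M = 0.10     # 10 cm distance threshold
ANG_TOL_DEG = 10.0   # 10 degree angle threshold


def rotation_angle_deg(q1, q2) -> float:
    """Smallest rotation angle between two unit quaternions given as (w, x, y, z)."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))  # abs: q and -q are the same rotation
    return math.degrees(2.0 * math.acos(min(1.0, dot)))


def task_complete(pos, rot, target_pos, target_rot) -> bool:
    """True when both position and orientation errors fall below the thresholds."""
    return (math.dist(pos, target_pos) < POS_TOL_M
            and rotation_angle_deg(rot, target_rot) < ANG_TOL_DEG)


def yaw_quat(deg: float):
    """Unit quaternion for a rotation of `deg` about the vertical Y-axis."""
    half = math.radians(deg) / 2.0
    return (math.cos(half), 0.0, math.sin(half), 0.0)
```

A rabbit 5 cm from the target and rotated 98° instead of 90° would pass; the same rabbit rotated 103° would not.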

2.5. Participants

We recruited 24 local undergraduate and graduate students (aged from 20 to 35, M = 22.96, SD = 3.26, 9 males and 15 females). Nine of them wore glasses, and two of them were left-handed. Fifteen people had no or limited experience in VR (never: 9; less than five times in their lifetime: 6), six people used it less than once a month, while only three people reported using VR more than once a month.

2.6. Interfaces

We compared the following three interfaces in this study: GazeHand2 (GH2), Gaze+Pinch (GP), and ImplicitGaze (IG). Since both GP and IG use eye gaze, we implemented our interface based on the Eye-GazeHand version from the original GazeHand work [52]. Because GH2 is described in Section 2.1.2, this section describes the other two interfaces. Table 1 briefly compares the three interfaces based on several criteria [51].

Table 1.

Comparison of the three interfaces in our study, based on the criteria from Wagner et al. [51]’s work.

2.6.1. Gaze+Pinch (GP)

Gaze+Pinch (abbreviated as GP) [6] uses a hand pinch gesture for selecting and manipulating objects. The gaze pointer is always shown for selection. To select an object, the user moves the gaze pointer near the target and performs the pinch gesture. The object is then selected and can be moved with the user’s VHD. If the distance between the object and the user is under 1 m, translation uses a 1:1 mapping. If the distance is greater than 1 m, translation is amplified using a linear mapping, $\Delta p_{\text{object}} = \Delta p_{\text{hand}} \times d_{\text{object-to-user}}$, where $d_{\text{object-to-user}}$ is the distance between the object and the user in meters, similar to the direct HOMER [5] or Go-Go [8] method for fast object translation.
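The GP translation gain can be sketched as a per-frame function. This is a minimal illustration of the mapping stated above, not Pfeuffer et al.’s code; the function name is hypothetical. Because the gain equals the distance beyond 1 m, it is continuous at the 1 m boundary.

```python
def gp_object_delta(hand_delta, object_to_user_m: float):
    """Per-frame object displacement under the GP-style mapping.

    Within 1 m the hand motion is applied 1:1; beyond 1 m it is scaled by the
    object-to-user distance (in meters), so the gain is continuous at 1 m.
    """
    gain = 1.0 if object_to_user_m < 1.0 else object_to_user_m
    return tuple(c * gain for c in hand_delta)


# A 10 cm hand movement moves an object 3 m away by about 30 cm.
print(gp_object_delta((0.10, 0.0, 0.0), 3.0))
```

The same gain governs depth translation in the modified IG condition, since it reuses this equation for movement along the gaze ray.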

Unlike GH2 and IG, Gaze+Pinch adopts its rotation method from Turner et al. [46], which was originally applied to a 2D display. The original work only described y-axis rotation, which is similar to rotating a flat disk with two hands. To rotate the object, the user performs a pinch gesture with each hand; while pinching, the user moves their fingers horizontally as if drawing a circle, similar to Turner et al.’s multitouch rotation [46], until the object reaches the desired orientation. The position of the object remains fixed while it is rotated.
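One plausible way to turn the horizontal motion of two pinch points into a Y-axis rotation is to track the heading of the vector between the hands, as multitouch rotation does on a screen. The sketch below is an assumption, not the GP implementation: it uses a Y-up convention where yaw 0 points along +Z and positive yaw turns +Z toward +X (Unity-style), and the function names are hypothetical. The object position is left untouched, matching the fixed-position rotation described above.

```python
import math


def hands_yaw_rad(left, right) -> float:
    """Heading of the left-to-right hand vector in the horizontal (XZ) plane."""
    return math.atan2(right[0] - left[0], right[2] - left[2])


def two_hand_yaw_delta_deg(left0, right0, left1, right1) -> float:
    """Change in yaw between two frames, wrapped to [-180, 180)."""
    delta = math.degrees(hands_yaw_rad(left1, right1) - hands_yaw_rad(left0, right0))
    return (delta + 180.0) % 360.0 - 180.0
```

The wrap step matters when the hand vector crosses the ±180° heading: a small physical turn must not register as an almost full rotation.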

2.6.2. ImplicitGaze (IG)

ImplicitGaze (abbreviated as IG) [7], similar to GH2, allows object translation using only eye gaze. However, unlike GH2 and GP, it hides the gaze pointer because the pointer can distract the user. Instead, it highlights the object that the user is looking at, and the user selects it with a trigger button on a controller. After selection, a “safe region” is created. While the gaze stays inside the region, the user can rotate and translate the object by moving their hands. When the gaze leaves the region, the object follows only the gaze pointer, and the safe region is regenerated. The radius of the safe region is initially 6° and grows at a constant rate (10°/s) until it reaches 20°. Once the manipulation is complete, the user ends the interaction with the trigger button.
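The safe-region logic above can be sketched as a small state object that decides, frame by frame, whether the hands or the gaze drive the object. This is a hypothetical sketch, not the IG implementation: it assumes gaze arrives as unit direction vectors, that the region is a cone centered on the gaze direction at the moment it is (re)created, and that the radius restarts at 6° on every regeneration.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]

INITIAL_RADIUS_DEG = 6.0   # from the text
GROWTH_DEG_PER_S = 10.0    # from the text
MAX_RADIUS_DEG = 20.0      # from the text


def angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two unit vectors (dot product clamped for safety)."""
    dot = sum(x * y for x, y in zip(a, b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


@dataclass
class SafeRegion:
    center: Vec3        # gaze direction when the region was (re)created
    age_s: float = 0.0  # seconds since (re)creation

    @property
    def radius_deg(self) -> float:
        """Radius grows linearly from 6 degrees and saturates at 20 degrees."""
        return min(INITIAL_RADIUS_DEG + GROWTH_DEG_PER_S * self.age_s, MAX_RADIUS_DEG)

    def update(self, gaze: Vec3, dt: float) -> str:
        """Advance by dt seconds and report which input drives the object this frame."""
        self.age_s += dt
        if angle_between_deg(gaze, self.center) <= self.radius_deg:
            return "hand"  # gaze inside: hand movement rotates/translates the object
        # Gaze outside: the object jumps to the gaze point and the region is rebuilt
        # around the new gaze direction (assumed to restart at the initial radius).
        self.center = gaze
        self.age_s = 0.0
        return "gaze"
```

The growing radius makes small gaze drifts harmless the longer the user fixates, which is one plausible reason the safe region stabilizes fine manipulation.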

We modified two attributes of IG for a fairer comparison. First, since IG uses a controller button as its trigger, we replaced the button with a hand pinch gesture so that participants could operate all interfaces with their hands. Second, since IG has no depth control method and the original paper named hand amplification as a future direction, we applied the hand amplification equation from GP. In other words, the speed of object translation along the gaze ray is the same as in GP.

2.7. Procedure and Data Collection

Upon arrival at the scheduled time, participants were informed of the purpose and procedure of the study. After agreeing to participate, they filled in a consent form and a demographic questionnaire covering gender, age, handedness (left or right), and previous VR experience. Participants then put on the Meta Quest Pro HMD and calibrated its eye tracking.

After calibration and measurement, each participant was instructed to stay within a designated 50 cm × 50 cm square area (see Figure 5) throughout the experiment so that they would not move around. The participant completed tutorials on all three interfaces, covering how to select, translate, and rotate objects with each one. We let participants practice until they became familiar with all of them. Once ready, they repositioned their feet inside the square and waited for the task. Each participant then repeated the task under the designated condition. That is, the participant first performed the task four times (in the order 90–35°, 90–55°, 180–35°, and 180–55°) with the first interface (in counterbalanced order) at a 1 m distance.
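The trial structure above can be made concrete with a small schedule generator. This is a hypothetical sketch: the text states only that the interface order was counterbalanced, so full counterbalancing (all 3! = 6 orders, each used by 4 of the 24 participants) is an assumption, as is presenting the distances in ascending order after the initial 1 m block. The four task labels and their fixed order come from the text.

```python
from itertools import permutations
from typing import List, Tuple

INTERFACES = ("GH2", "GP", "IG")
DISTANCES_M = (1, 3, 5)                              # assumed ascending order
TASKS = ("90-35°", "90-55°", "180-35°", "180-55°")   # fixed order from the text
ORDERS = list(permutations(INTERFACES))              # all 3! = 6 interface orders


def interface_order(participant_index: int) -> Tuple[str, ...]:
    """Cycle through the six orders so 24 participants receive each order 4 times."""
    return ORDERS[participant_index % len(ORDERS)]


def participant_schedule(participant_index: int) -> List[Tuple[str, int, str]]:
    """Every (interface, distance, task) trial for one participant, in presentation order."""
    return [
        (interface, distance, task)
        for interface in interface_order(participant_index)
        for distance in DISTANCES_M
        for task in TASKS
    ]
```

Each participant thus performs 36 trials grouped into nine Interface–Distance blocks of four tasks.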

Figure 5.

Left: The user study environment. Right: A participant conducting the study.

During the task, the system recorded the following quantitative data from participants’ movement for further analysis:

- Task Completion Time (in s) for overall task performance. For further analysis, we split the Task Completion Time into two subscales: Coarse Translation Time and Reposition Time.

- Coarse Translation Time (in s) [7] reflects how easily an object can be manipulated into an approximate pose: it was counted until the object approximately matched the semi-transparent target. The coarse threshold was set to a 20 cm distance and a 20° angle, twice the primary threshold.

- Reposition Time (in s), which indicates the ease of precise object manipulation (=Task Completion Time − Coarse Translation Time).

- Hand Movement (in m) and Head Movement (in °) represent the physical activity, related to physical fatigue, that participants needed to complete the task. Both values were accumulated over every frame of the system.

- Object Selection Count is the number of selection attempts on the rabbit object that the user needed to finish the manipulation. It reflects the efficiency of object manipulation.
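The measures listed above can be derived from a per-frame log roughly as follows. This is a hypothetical sketch, not the study's logging code: it assumes each frame records a timestamp, the hand position, the head's forward unit vector, and the object's distance and angle to the target; that the coarse criterion requires both the 20 cm and 20° conditions at once; and that the last frame marks task completion.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
COARSE_DIST_M = 0.20     # 20 cm coarse threshold from the text
COARSE_ANGLE_DEG = 20.0  # 20 degree coarse threshold from the text


@dataclass
class Frame:
    t: float                 # timestamp (s)
    hand: Vec3               # hand position (m)
    head_forward: Vec3       # unit forward vector of the head
    target_dist_m: float     # object-to-target distance
    target_angle_deg: float  # object-to-target orientation difference


def angle_deg(a: Vec3, b: Vec3) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def summarize(frames: List[Frame]) -> Dict[str, float]:
    pairs = list(zip(frames, frames[1:]))
    # Accumulate frame-to-frame hand path length and head rotation.
    hand_m = sum(math.dist(p.hand, c.hand) for p, c in pairs)
    head_deg = sum(angle_deg(p.head_forward, c.head_forward) for p, c in pairs)
    start, end = frames[0].t, frames[-1].t
    # First frame where the object is coarsely matched to the target.
    coarse_t: Optional[float] = next(
        (f.t for f in frames
         if f.target_dist_m <= COARSE_DIST_M and f.target_angle_deg <= COARSE_ANGLE_DEG),
        None,
    )
    completion = end - start
    coarse = (coarse_t - start) if coarse_t is not None else completion
    return {
        "task_completion_s": completion,
        "coarse_translation_s": coarse,
        "reposition_s": completion - coarse,  # Task Completion Time - Coarse Translation Time
        "hand_movement_m": hand_m,
        "head_movement_deg": head_deg,
    }
```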

All quantitative data are the sums over the four tasks of each Interface–Distance pair. Thus, each participant contributes nine data points (3 Interfaces × 3 Distances) for each variable.
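Summing the four task trials of each Interface–Distance pair yields nine values per participant and variable. A minimal sketch of that aggregation for one participant and one measure, assuming trial records of the hypothetical form (interface, distance, value):

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Trial = Tuple[str, int, float]  # (interface, distance in m, value for one task trial)


def sum_per_cell(trials: Iterable[Trial]) -> Dict[Tuple[str, int], float]:
    """Sum one measure over the task trials of each Interface-Distance cell."""
    totals: Dict[Tuple[str, int], float] = defaultdict(float)
    for interface, distance, value in trials:
        totals[(interface, distance)] += value
    return dict(totals)
```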

After completing the task four times, participants answered a set of subjective questionnaires: NASA-TLX [53,54] for overall task load, the System Usability Scale (SUS) [55,56] for usability, the Subjective Mental Effort Questionnaire (SMEQ) [57,58] for mental load, the Borg CR10 Scale [59] for arm fatigue, and the short version of the User Experience Questionnaire (UEQ-S) [60,61] for user experience at the pragmatic and hedonic levels. They then repeated the task at the other distances and took a three-minute break. This overall procedure was repeated three times, once per interface.
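SUS and UEQ-S have well-known scoring rules, sketched below; the study's own scoring scripts are not given, so this is an illustration rather than the authors' code. Assumptions: SUS answers are on a 1–5 scale in questionnaire order, and UEQ-S answers are on a 1–7 scale with the positive pole on the right, items 1–4 forming the pragmatic scale and items 5–8 the hedonic scale.

```python
from statistics import mean
from typing import Dict, Sequence


def sus_score(answers: Sequence[int]) -> float:
    """0-100 SUS score: odd items give (a - 1), even items give (5 - a), sum times 2.5."""
    if len(answers) != 10:
        raise ValueError("SUS has exactly 10 items")
    total = sum((a - 1) if i % 2 == 0 else (5 - a) for i, a in enumerate(answers))
    return total * 2.5


def ueq_s_scores(answers: Sequence[int]) -> Dict[str, float]:
    """Map 1-7 answers to -3..+3, then average the pragmatic, hedonic and overall scales."""
    if len(answers) != 8:
        raise ValueError("UEQ-S has exactly 8 items")
    values = [a - 4 for a in answers]
    return {
        "pragmatic": mean(values[:4]),
        "hedonic": mean(values[4:]),
        "overall": mean(values),
    }
```

A SUS score can then be compared with the commonly cited average of 68, and the UEQ-S scales range from −3 to +3, which is the unit of the point differences reported for user experience.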

At the end of the study, we asked participants to rank their preferences for the three interfaces using a ranking scale from 1st (for the best) to 3rd (for the worst). We also conducted a final interview to collect their subjective feedback for each interface. The experiment took an average of 65 min per participant. They received about USD 10 as a reward.

2.8. Data Analysis

2.8.1. Analysis on Quantitative and Subjective Data

After the study, we tested our quantitative data (Times, Movements, and Object Selection Count) for normality with the Shapiro–Wilk test and found that not all conditions were normally distributed. Furthermore, our subjective questionnaire data (NASA-TLX, SUS, SMEQ, UEQ-S, Borg CR10, and preference) were on ordinal scales. Therefore, we applied non-parametric methods for data analysis.

Our study followed a two-factor (3 × 3) within-subjects design (see Section 2.3). In HCI research, N-way repeated-measures ANOVA is commonly used to analyze within-subjects designs with N factors. For non-parametric data, researchers have pre-processed the data with the Aligned Rank Transform [62] before performing the N-way repeated-measures ANOVA (e.g., [7,51]). We followed the same approach: we first applied the Aligned Rank Transform [62] and then performed a two-way repeated-measures ANOVA (α = 0.05) with two factors (Interface and Distance). Additionally, we used the Friedman test (α = 0.05) for interface preference. When an effect was significant, we ran post hoc pairwise comparisons for each factor using the Wilcoxon signed-rank test with Bonferroni correction (α = 0.05/3 = 0.0167). These analyses have also been used in previous works (e.g., [52,63]). All tests were performed using SPSS 29.0.
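The alignment-and-ranking step of the Aligned Rank Transform can be sketched for a two-factor design as follows. This follows the general ART procedure (subtract the cell mean, add back the estimated effect of interest, then rank with averaged ties); it is an illustrative sketch rather than the SPSS workflow used in the study, and the repeated-measures ANOVA on the returned ranks (with participant as the error term) is left to a statistics package. The factor labels are hypothetical placeholders.

```python
from collections import defaultdict
from statistics import mean
from typing import List, Tuple

Obs = Tuple[str, str, float]  # (level of factor A, level of factor B, response)


def average_ranks(values: List[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def art_ranks(data: List[Obs], effect: str) -> List[float]:
    """Align responses for effect 'A', 'B' or 'AxB', then rank the aligned values."""
    grand = mean(y for _, _, y in data)
    by_a, by_b, by_ab = defaultdict(list), defaultdict(list), defaultdict(list)
    for a, b, y in data:
        by_a[a].append(y)
        by_b[b].append(y)
        by_ab[(a, b)].append(y)
    m_a = {k: mean(v) for k, v in by_a.items()}
    m_b = {k: mean(v) for k, v in by_b.items()}
    m_ab = {k: mean(v) for k, v in by_ab.items()}

    aligned = []
    for a, b, y in data:
        residual = y - m_ab[(a, b)]  # strip all fixed effects
        if effect == "A":
            est = m_a[a] - grand
        elif effect == "B":
            est = m_b[b] - grand
        elif effect == "AxB":
            est = m_ab[(a, b)] - m_a[a] - m_b[b] + grand
        else:
            raise ValueError("effect must be 'A', 'B' or 'AxB'")
        aligned.append(residual + est)
    return average_ranks(aligned)
```

A separate ANOVA is run on each set of aligned ranks, and only the effect that was aligned for is interpreted. For the post hoc tests, the Bonferroni threshold 0.05/3 ≈ 0.01667 is what the text rounds to 0.0167.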

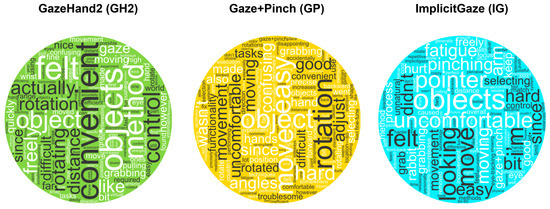

2.8.2. Analysis on Participants’ Feedback

After completing the task, we collected feedback from all 24 participants on the three interfaces through a final interview, identifying their strengths and weaknesses. Participants were also asked to provide general comments on how the design could be improved.

After collecting the feedback, we performed a widely used thematic analysis [64] to gain insight from it, also drawing on related literature [65,66]. After transcribing all 72 comments (3 interfaces × 24 participants), we first split comments containing multiple ideas into separate topics where necessary, yielding a total of 81 comments (GH2: 28; GP: 27; IG: 26). After carefully reading them, the first author conducted the thematic coding, which three co-authors verified (four authors in total). During coding, we attempted to minimize discrepancies in categorizing the feedback. For example, two comments about GP, “Compared to GH2, its rotation was not real.” and “I had to use both my hands to rotate the object. It was also confusing me because the direction of rotation depends on both my hands’ position.”, were both coded as an unnatural rotation method, even though the second comment did not explicitly use the words ‘unnatural’ or ‘not real’: the participant was unfamiliar with this type of interaction, which they rarely perform in daily life, so it was ‘unnatural’ to them. The co-authors initially agreed on most of the coding (77 of 81 comments; 95%), and the remainder was revised through further discussion among the authors. As a result, we identified primary themes, including efficiency, convenience, precision, and fatigue. We then grouped the themes into three categories (positive, neutral, and negative) and renamed each theme for clarity.

Additionally, we created a word cloud for each interface using the transcription. We first removed stop-words and other irrelevant words from the comment text and converted all words to lowercase. Then, we counted the frequency of each word and generated the word cloud based on the results using the word-cloud library [67] with the Python 3 programming language [68].
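The preprocessing described above (lowercasing, removing stop-words, counting word frequencies) can be sketched with the Python standard library. The stop-word set below is a tiny hypothetical placeholder, since the study's list of stop-words and irrelevant words is not given.

```python
import re
from collections import Counter
from typing import Iterable

# Hypothetical placeholder list; the study's actual stop-words are not specified.
STOP_WORDS = {"the", "a", "an", "it", "was", "i", "to", "and", "of", "my", "is", "because"}


def word_frequencies(comments: Iterable[str]) -> Counter:
    """Lowercase each comment, tokenize on letters/apostrophes, drop stop-words, count."""
    counts: Counter = Counter()
    for comment in comments:
        tokens = re.findall(r"[a-z']+", comment.lower())
        counts.update(t for t in tokens if t not in STOP_WORDS)
    return counts
```

If the word-cloud library [67] is the `wordcloud` Python package, the resulting mapping can be passed to its `WordCloud().generate_from_frequencies(...)` method to render the image.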

3. Results

In this section, we present the quantitative and subjective results of our user study with feedback from participants. We continue to use abbreviations introduced in Section 2.6 (i.e., GH2 = GazeHand2; GP = Gaze+Pinch; and IG = ImplicitGaze).

3.1. Quantitative Data

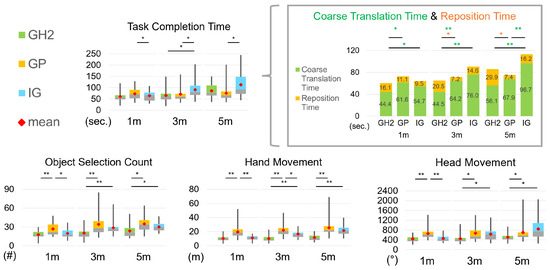

The quantitative results are shown in Figure 6 and summarized in Table 2. The core findings are as follows:

Figure 6.

Results of the objective data (* = p < 0.05 for the Friedman test and p < 0.0167 for the Wilcoxon signed-rank test with Bonferroni correction; ** = p < 0.005).

Table 2.

The summary of core results of quantitative data. Note: ↓: lower is better.

- GH2 required less Coarse Translation Time than other interfaces under 1 m and 3 m tasks.

- GH2 required fewer object selections than other interfaces under 3 m and 5 m tasks.

- GH2 produced less Hand and Head Movement than other interfaces under 3 m and 5 m tasks.

3.1.1. Task Completion Time

Statistical analysis showed significant effects of Interface (F(1.491, 34.298) = 5.955, p = 0.011, ηp² = 0.206), Distance (F(2, 46) = 26.172, p < 0.001, ηp² = 0.532), and Interface × Distance (F(4, 92) = 6.493, p < 0.001, ηp² = 0.202). Pairwise comparison showed that, under 1 m, IG completed the task faster than GP (Z = −2.486, p = 0.013), while there was no significant difference between GH2 and GP (Z = −1.714, p = 0.086) or between GH2 and IG (Z = −0.714, p = 0.475). In contrast, under 3 m, GH2 completed the task faster than IG (Z = −2.971, p = 0.003). GP also finished the task faster than IG (Z = −2.857, p = 0.004) but did not differ significantly from GH2 (Z = −1.171, p = 0.241) under 3 m. Under 5 m, there was no significant difference between GH2 and GP (Z = −1.514, p = 0.130) or between GH2 and IG (Z = −1.514, p = 0.130), but IG completed the task more slowly than GP (Z = −3.343, p = 0.001).
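The effect sizes reported with each ANOVA effect can be recomputed from the F statistic and its degrees of freedom using the partial eta squared identity ηp² = F·df_effect / (F·df_effect + df_error). The values in this section are consistent with that identity (e.g., F(1.491, 34.298) = 5.955 gives 0.206). A minimal sketch:

```python
def partial_eta_squared(f: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    num = f * df_effect
    return num / (num + df_error)
```

Fractional degrees of freedom such as 1.491 indicate a sphericity correction (e.g., Greenhouse–Geisser); the identity still applies with the corrected values.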

3.1.2. Coarse Translation Time and Reposition Time

Statistical analysis for Coarse Translation Time showed significant effects of Interface (F(2, 46) = 28.960, p < 0.001, ηp² = 0.557), Distance (F(2, 46) = 22.379, p < 0.001, ηp² = 0.493), and Interface × Distance (F(4, 92) = 6.852, p < 0.001, ηp² = 0.230). Pairwise comparison showed that, under 1 m, GH2 was faster at coarse manipulation than both GP (Z = −3.286, p = 0.001) and IG (Z = −2.429, p = 0.015), with no significant difference between GP and IG (Z = −2.286, p = 0.022). Likewise, under 3 m, GH2 showed faster coarse manipulation than both GP (Z = −3.600, p < 0.001) and IG (Z = −4.143, p < 0.001), with no significant difference between GP and IG (Z = −2.286, p = 0.022). Under 5 m, IG required more coarse manipulation time than GP (Z = −3.229, p = 0.001) and was also slower than GH2 (Z = −3.429, p = 0.001). However, there was no significant difference between GH2 and GP (Z = −1.400, p = 0.162) under 5 m.

Statistical analysis for Reposition Time showed significant effects of Interface (F(2, 46) = 15.133, p < 0.001, ηp² = 0.397), Distance (F(2, 46) = 5.074, p = 0.010, ηp² = 0.181), and Interface × Distance (F(4, 92) = 5.665, p < 0.001, ηp² = 0.198). Pairwise comparison showed no significant difference among the interfaces under 1 m (all p > 0.056). Under 3 m, GP showed a shorter reposition time than GH2 (Z = −3.057, p = 0.002), with no significant difference between GH2 and IG (Z = −1.114, p = 0.265) or between GP and IG (Z = −2.143, p = 0.032). Under 5 m, GP again repositioned the object faster than GH2 (Z = −3.429, p = 0.002), with no significant difference between GH2 and IG (Z = −2.229, p = 0.026) or between GP and IG (Z = −2.229, p = 0.026).

3.1.3. Physical Movement

Statistical analysis for Hand Movement showed significant effects of Interface (F(2, 46) = 77.257, p < 0.001, ηp² = 0.771), Distance (F(2, 46) = 40.369, p < 0.001, ηp² = 0.637), and Interface × Distance (F(2.630, 60.493) = 13.777, p < 0.001, ηp² = 0.375). Pairwise comparison showed that, under 1 m, GP required more hand movement than both GH2 (Z = −4.257, p < 0.001) and IG (Z = −4.143, p < 0.001), with no significant difference between GH2 and IG (Z = −1.143, p = 0.253). Under 3 m, GH2 required less hand movement than both GP (Z = −4.286, p < 0.001) and IG (Z = −4.286, p < 0.001), and GP required more movement than IG (Z = −3.057, p = 0.002). Under 5 m, GH2 again required less hand movement than both GP (Z = −4.286, p < 0.001) and IG (Z = −4.143, p < 0.001), but there was no significant difference between GP and IG (Z = −1.943, p = 0.052).

Statistical analysis for Head Movement showed significant effects of Interface (F(2, 46) = 22.516, p < 0.001, ηp² = 0.495), Distance (F(2, 46) = 20.367, p < 0.001, ηp² = 0.470), and Interface × Distance (F(4, 92) = 12.415, p < 0.001, ηp² = 0.351). Pairwise comparison showed that, under 1 m, GP required more head movement than both GH2 (Z = −3.771, p < 0.001) and IG (Z = −4.000, p < 0.001), with no significant difference between GH2 and IG (Z = −0.629, p = 0.530). Under 3 m, GH2 required less head movement than both GP (Z = −3.257, p = 0.001) and IG (Z = −3.257, p = 0.001), with no significant difference between GP and IG (Z = −0.543, p = 0.587). Similarly, under 5 m, GH2 required less head movement than both GP (Z = −2.829, p = 0.005) and IG (Z = −3.457, p = 0.001), with no significant difference between GP and IG (Z = −1.286, p = 0.199).

3.1.4. Object Selection Count

Statistical analysis showed significant effects of Interface (F(2, 46) = 23.764, p < 0.001, ηp² = 0.508), Distance (F(2, 46) = 47.595, p < 0.001, ηp² = 0.674), and Interface × Distance (F(4, 92) = 2.804, p = 0.030, ηp² = 0.109). Pairwise comparison showed that, under 1 m, GP required more selections than both GH2 (Z = −3.486, p < 0.001) and IG (Z = −3.332, p = 0.001), with no significant difference between GH2 and IG (Z = −1.310, p = 0.190). Under 3 m, GH2 needed fewer selections than both GP (Z = −3.637, p < 0.001) and IG (Z = −3.590, p < 0.001), with no significant difference between GP and IG (Z = −1.489, p = 0.137). Under 5 m, GH2 also needed fewer selections than both GP (Z = −3.445, p = 0.001) and IG (Z = −2.531, p = 0.011), with no significant difference between GP and IG (Z = −2.173, p = 0.030).

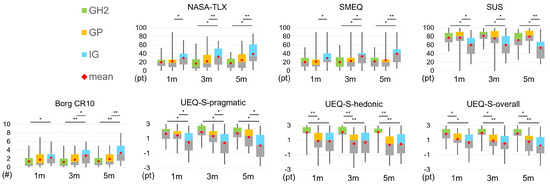

3.2. Subjective Data

Figure 7.

Results of the subjective data (* = p < 0.05 for the Friedman test and p < 0.0167 for the Wilcoxon signed-rank test with Bonferroni correction; ** = p < 0.005).

Table 3.

Summary of core results of subjective data. Note: ↑: higher is better; ↓: lower is better.

- GH2 supported higher hedonic experiences and overall experiences compared to other interfaces.

- GH2 showed a higher usability score and lower arm fatigue compared to IG.

- GH2 required lower task and mental load compared to IG under 3 m and 5 m tasks.

3.2.1. NASA-TLX (Overall Task Load)

Statistical analysis showed significant effects of Interface (F(1.381, 31.766) = 10.146, p < 0.001, ηp² = 0.306) and Interface × Distance (F(2.461, 56.604) = 3.113, p = 0.042, ηp² = 0.119), but not of Distance (F(2, 46) = 3.096, p = 0.055, ηp² = 0.119). Pairwise comparison showed that, under 1 m, IG imposed a higher task load than GP (Z = −2.647, p = 0.008), with no significant difference between GH2 and GP (Z = −0.043, p = 0.966) or between GH2 and IG (Z = −1.815, p = 0.070). In contrast, under 3 m, GH2 showed a lower task load than IG (Z = −3.164, p = 0.002), as did GP (Z = −3.581, p = 0.004), with no significant difference between GH2 and GP (Z = −0.761, p = 0.447). Under 5 m, both GH2 (Z = −3.332, p = 0.001) and GP (Z = −4.016, p < 0.001) showed a lower task load than IG, with no significant difference between GH2 and GP (Z = −0.883, p = 0.377).

3.2.2. SMEQ (Mental Load)

Statistical analysis showed significant effects of Interface (F(1.667, 38.350) = 8.551, p = 0.002, ηp² = 0.271), Distance (F(2, 46) = 7.118, p = 0.002, ηp² = 0.236), and Interface × Distance (F(4, 92) = 3.213, p = 0.016, ηp² = 0.123). Pairwise comparison showed that, under 1 m, IG imposed a higher mental load than GP (Z = −2.935, p = 0.003), with no significant difference between GH2 and GP (Z = −0.262, p = 0.793) or between GH2 and IG (Z = −1.785, p = 0.074). In contrast, under 3 m, GH2 showed a lower mental load than IG (Z = −2.780, p = 0.005), as did GP (Z = −3.223, p = 0.001), with no significant difference between GH2 and GP (Z = −0.337, p = 0.736). Under 5 m, both GH2 (Z = −3.011, p = 0.003) and GP (Z = −3.562, p < 0.001) showed a lower mental load than IG, with no significant difference between GH2 and GP (Z = −0.306, p = 0.760).

3.2.3. SUS (Usability)

Statistical analysis showed significant effects of Interface (F(2, 46) = 19.199, p < 0.001, ηp² = 0.455) and Distance (F(2, 46) = 9.603, p < 0.001, ηp² = 0.295), but not of Interface × Distance (F(1.337, 30.760) = 1.442, p = 0.247, ηp² = 0.059). Under 1 m, the mean SUS scores of GH2 (M = 76.77, SD = 15.33) and GP (M = 76.56, SD = 21.63) were above the average score of 68 [10], whereas that of IG was not (M = 59.90, SD = 22.84). Pairwise comparison showed that both GH2 (Z = −2.436, p = 0.015) and GP (Z = −3.242, p = 0.001) had higher usability than IG, with no significant difference between GH2 and GP (Z = −0.137, p = 0.891). The pattern was similar under 3 m (GH2: M = 81.25, SD = 14.74; GP: M = 75.31, SD = 22.79; IG: M = 60.00, SD = 24.58): both GH2 (Z = −3.378, p = 0.001) and GP (Z = −3.034, p = 0.002) had higher usability than IG, with no significant difference between GH2 and GP (Z = −0.800, p = 0.424). The same trend held under 5 m (GH2: M = 71.04, SD = 19.07; GP: M = 79.38, SD = 17.79; IG: M = 53.23, SD = 25.10): both GH2 (Z = −3.232, p = 0.001) and GP (Z = −3.951, p < 0.001) had higher usability than IG, with no significant difference between GH2 and GP (Z = −1.820, p = 0.069).

3.2.4. Borg CR10 (Arm Fatigue)

Statistical analysis showed significant effects of Interface (F(2, 46) = 15.589, p < 0.001, ηp² = 0.404), Distance (F(1.702, 39.154) = 6.166, p = 0.007, ηp² = 0.211), and Interface × Distance (F(2.965, 68.201) = 5.521, p = 0.002, ηp² = 0.194). Pairwise comparison showed that, under 1 m, GH2 produced less arm fatigue than IG (Z = −2.767, p = 0.006), with no significant difference between GP and GH2 (Z = −1.048, p = 0.295) or between GP and IG (Z = −1.997, p = 0.046). Under 3 m, IG produced more arm fatigue than both GH2 (Z = −3.549, p < 0.001) and GP (Z = −3.088, p = 0.002), with no significant difference between GH2 and GP (Z = −1.565, p = 0.118). Similarly, under 5 m, both GH2 (Z = −3.857, p < 0.001) and GP (Z = −3.885, p < 0.001) produced lower arm fatigue than IG, with no significant difference between GH2 and GP (Z = −1.327, p = 0.185).

3.2.5. UEQ-S (User Experience)

Statistical analysis for the pragmatic scale showed a significant effect of Interface (F(2, 46) = 11.347, p < 0.001, ηp² = 0.330) but not of Distance (F(2, 46) = 2.887, p = 0.066, ηp² = 0.112) or Interface × Distance (F(4, 92) = 2.478, p = 0.072, ηp² = 0.097). Pairwise comparison showed that, under 1 m, both GH2 (Z = −2.690, p = 0.007) and GP (Z = −2.942, p = 0.003) received higher pragmatic scores than IG, with no significant difference between GH2 and GP (Z = −0.688, p = 0.492). Under 3 m, participants rated both GH2 (Z = −3.448, p = 0.001) and GP (Z = −2.861, p = 0.004) as more pragmatic than IG, with no significant difference between GH2 and GP (Z = −1.708, p = 0.088). Under 5 m, GH2 (Z = −3.462, p = 0.001) and GP (Z = −3.247, p = 0.001) again received higher scores than IG, with no significant difference between GH2 and GP (Z = −1.481, p = 0.139).

Statistical analysis for the hedonic scale showed significant effects of Interface (F(1.365, 31.387) = 30.041, p < 0.001, ηp² = 0.566), Distance (F(1.718, 39.506) = 9.641, p = 0.001, ηp² = 0.295), and Interface × Distance (F(2.866, 65.921) = 4.196, p = 0.010, ηp² = 0.154). Pairwise comparison showed that, under 1 m, GH2 received a higher hedonic score than both GP (Z = −3.605, p < 0.001) and IG (Z = −3.468, p = 0.001), with no significant difference between IG and GP (Z = −0.225, p = 0.822). Under 3 m, participants also found GH2 more enjoyable than both GP (Z = −3.924, p < 0.001) and IG (Z = −3.721, p < 0.001), with no significant difference between GP and IG (Z = −0.919, p = 0.358). Under 5 m, GH2 again scored higher than both GP (Z = −4.203, p < 0.001) and IG (Z = −3.920, p < 0.001), with no significant difference between GP and IG (Z = −0.787, p = 0.431).

Results for the overall user experience were similar to those for the hedonic subscale. Statistical analysis for the overall user experience showed significant effects of Interface (F(2, 46) = 18.841, p < 0.001, ηp² = 0.450), Distance (F(1.661, 38.205) = 4.619, p = 0.021, ηp² = 0.167), and Interface × Distance (F(2.791, 64.198) = 3.876, p = 0.015, ηp² = 0.144). Pairwise comparison showed that, under 1 m, GH2 provided a better user experience than both GP (Z = −2.604, p = 0.009) and IG (Z = −3.446, p = 0.001), with no significant difference between IG and GP (Z = −2.243, p = 0.025). Under 3 m, GH2 again scored higher than both GP (Z = −3.474, p = 0.001) and IG (Z = −3.804, p < 0.001), with no significant difference between GP and IG (Z = −1.569, p = 0.117). Under 5 m, GH2 also scored higher than both GP (Z = −3.409, p = 0.001) and IG (Z = −3.917, p < 0.001), with no significant difference between GP and IG (Z = −1.926, p = 0.054).

3.2.6. Preferences

The interface preference results for each distance are shown in Table 4.

Table 4.

User preferences among three interfaces in the study.

The goodness-of-fit test showed that preferences among the interfaces differed at each distance (1 m: χ² = 9.750, p = 0.008; 3 m: χ² = 9.750, p = 0.008; 5 m: χ² = 10.750, p = 0.005). Pairwise comparison showed that, under 1 m, IG was less preferred than both GH2 (Z = −2.716, p = 0.007) and GP (Z = −2.434, p = 0.015), with no significant difference between GH2 and GP (Z = −1.461, p = 0.144). Under 3 m, IG was also less preferred than both GH2 (Z = −3.241, p = 0.001) and GP (Z = −3.413, p = 0.001), with no significant difference between GH2 and GP (Z = −0.287, p = 0.774). A similar pattern was found under 5 m: IG was less preferred than both GH2 (Z = −3.594, p < 0.001) and GP (Z = −3.053, p = 0.002), with no significant difference between GH2 and GP (Z = −0.860, p = 0.390).
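Section 2.8.1 names the Friedman test for the preference rankings; its statistic can be computed from the per-participant ranks as sketched below (no tie correction, since each participant ranks the interfaces strictly from 1st to 3rd). With three interfaces the chi-square reference distribution has k − 1 = 2 degrees of freedom, and for 2 degrees of freedom the upper-tail probability is exactly exp(−χ²/2), which reproduces the p values above (9.750 → 0.008; 10.750 → 0.005). The function names are hypothetical.

```python
import math
from typing import Sequence


def friedman_chi_square(rankings: Sequence[Sequence[float]]) -> float:
    """Friedman statistic: 12 * sum(R_j^2) / (n k (k+1)) - 3 n (k+1), R_j = rank sums."""
    n = len(rankings)
    k = len(rankings[0])
    rank_sums = [sum(row[j] for row in rankings) for j in range(k)]
    return 12.0 * sum(r * r for r in rank_sums) / (n * k * (k + 1)) - 3.0 * n * (k + 1)


def chi_square_p_df2(statistic: float) -> float:
    """Exact upper-tail p value of a chi-square statistic with 2 degrees of freedom."""
    return math.exp(-statistic / 2.0)
```

For example, if all 24 participants gave the identical ranking, the statistic would reach its maximum n(k − 1) = 48, whereas perfectly balanced rankings give 0.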

3.3. Observation

We observed some common behaviors when participants were using each interface. With GH2, most participants could easily translate an object by moving their eyes, without rotating their head, when the lateral distance was 35°. In the 55° condition, however, they needed to rotate their head to select the object because it was outside their initial view. After selection, participants usually rotated the object first and then translated it to the target. If they translated it first, rotating the object became harder once it was far from the user, especially at the farthest distance (5 m), because the object became too small in the view to control. Additionally, hand movement during rotation sometimes changed the depth unintentionally, so participants needed to reset it. As a result, participants finished the rotation first and then concentrated on translation.

Meanwhile, participants first translated the object and then rotated it while using GP. Once they matched the object to the correct position, they could easily finish the task by focusing solely on rotating the object, as its position was fixed.

With IG, several participants confused the order of the confirmation and release steps, especially when they tried to complete the task quickly. As a result, they confirmed the selection when reaching out with their hand and released the object when they moved their hand back toward themselves. This effect was more evident at longer distances (e.g., IG was faster than GP under 1 m but slower under the 3 m and 5 m conditions).

3.4. Participants’ Feedback

Figure 8.

Word clouds from participants’ feedback on the three interfaces.

Table 5.

Summary of the key components of the subjective feedback for each interface. Each number represents the number of participants. There may be more than 24 comments for each interface because we accepted multiple comments from each participant.

For GH2, there were favorable comments regarding its selection and manipulation. For example, participants emphasized their experience of indication and manipulation. Participant #13 (hereafter P13) said, “It was good that my hand’s color was changed into green when selecting an object”. P1 stated, “I felt that I was actually touching and controlling the object when I conducted distant object translation”. Participants also favored GH2’s translation method. P5 stated, “It was effective for object translation”. P6 said, “It was convenient because of the fast object translation speed”. P18 commented, “I felt that its depth control method was easy and gives a different experience.” Some of them were interested in GH2’s natural rotation mapping. P12 said, “It was exciting that the object rotation works freely, similar to the real-world”. P24 commented, “Free object rotation is one of its strengths”.

In contrast, some participants felt they needed to practice their depth and rotation control for fluent manipulation. P4 and P10 stated, “It was hard to rotate the object precisely”. P19 said, “My wrist sometimes rotated unconsciously, resulting in unintentional rotation”. Additionally, although GH2 supports simultaneous rotation and translation, P17 commented that its simultaneous input makes using the interface challenging. “I had to decide three things (rotation, depth control, and eye position) to finish a task. It was confusing”.

Regarding GP, some participants gave positive comments about its selection, translation, and rotation. Their thoughts focused on its ease and precision. P20 told us about its heuristic selection, “The idea that automatically selects an object close to my gaze is useful because I do not need to match my gaze onto the object precisely”. P5 commented, “It was nice because this interface allows accurate translation”. P3 also said, “I think its rotation method was easier than others”.

However, participants reported that the repetitive action for a longer distance translation was a weakness. P8 said, “Repetitive translation makes me cumbersome, especially under 5 m condition”. P11 stated, “My arm got a physical load because of its numerous hand movement actions”. P14 said, “Its translation method was time-consuming and inefficient”. Some participants also commented on its limited and unnatural rotation method. P11 stated, “GP’s rotation fitted for the task but may not be sufficient for a general task”. P12 stated, “Compared to GH2, its rotation was not real”. P17 said, “I had to use both my hands to rotate the object. It was also confusing me because the direction of rotation depends on both my hands’ position”.

For IG, participants commented on its translation and rotation. Some of them mentioned its quick eye-based translation. P1 said, “It was also good when I tried to translate an object from right to left”. P19 said, “Translation from a right side to the center was fast, thanks to the eye movement”. Others said its object manipulation was controllable. P10 commented, “I could easily control the object using it”. P13 stated, “I can set the orientation of the object precisely through my hand”.

Nevertheless, similar to GP, participants also stated that translation along the gaze ray direction was not effective and caused fatigue. P11 commented, “To translate the object, I had to move my hand forward and backward, causing physical fatigue”. P24 said, “I was bored and got arm fatigue due to its repetitive physical movement”. They also mentioned a lack of an eye pointer. P2 stated, “Removing the eye pointer is not a good choice. I cannot easily understand where I am looking”. P3 commented, “Since there was no gaze pointer, the farther the target distance was, the harder the task was”. Some of them gave unfavorable feedback on the selection and release process. P11 stated, “The process including repetitive selection and release using pinch gesture was not comfortable for me”. P16 also said, “The interaction process, pinching to start and pinching to stop moving the object, was complex for me”.

4. Discussion

4.1. Overall Performance of GH2

The results showed that GH2’s object selection count was significantly lower than that of GP at all distances and that of IG at longer distances (3 m and 5 m). While GP and IG required multiple select-and-release operations to translate an object along the gaze ray, GH2 could mostly achieve this translation with a single action [5]. Fewer selections, in turn, induce less physical movement: GH2 significantly reduced hand and head movement compared to both GP and IG, especially at longer distances (3 m and 5 m). Interestingly, this efficiency was not clearly reflected in Task Completion Time [69]. We suggest that, although GH2 showed no clear advantage in Task Completion Time, this efficiency may have shaped participants’ subjective impressions of GH2, such as overall user experience, which was also higher than for both GP and IG.

4.2. User Experience on GH2

Although GH2 received both positive and negative feedback, its user experience was rated higher than that of the other interfaces (see Section 3.2.5), and its usability was above average [70] (see Section 3.2.3). GH2 received SUS scores similar to those of GP and higher than those of IG, indicating that our interface could be used for selecting and manipulating distant objects and could serve as an alternative in current VR applications. We assume that one reason for GH2’s good SUS score was the reduced physical movement while interacting with objects (see Section 3.1.3). In most cases, GH2 reduced head and hand movements compared to both GP and IG, which also reduces the likelihood of arm fatigue. The only exception was the comparison between GH2 and IG in the 1 m condition: such a short distance can be covered with only one or two hand actions [7]. Furthermore, like GH2, IG can translate objects using only the eyes, which could reduce the need for hand and head movement [7]. Thus, the two interfaces might not differ significantly in physical movement at this distance.

Another notable finding is that GH2 received a higher UEQ-S hedonic score than the other conditions. We see two possible reasons. First, reduced physical movement could lower physical fatigue [7,11], which may lead participants to view GH2 more positively and remember it favorably. Second, GH2’s virtual hand provided a sense of direct object interaction (e.g., P12 was excited by its natural rotation). Additionally, most participants found GH2’s depth control unfamiliar but effective, which might have sparked their interest.

4.3. Comparing GH2 with GP: Direct Object Rotation with Virtual Hand

GP [6] rotates an object around a single axis using the relative position of two hands. This makes the interaction simple and may suit the task, which requires rotation around only one axis; GP’s high pragmatic scores support this (see Section 3.2.5). However, by allowing rotation only around the Y axis, it sacrifices rotational freedom, which limits its general use (P11). Furthermore, its rotation method differs from real-world experience [69], which could affect the user experience (P12 and P17). In contrast, GH2 supports free rotation based on a participant’s hand pose, resulting in “actually interacting with them” (P1) and “being excited” (P12). Although free rotation may increase complexity, its natural interaction can make the experience enjoyable, resulting in higher hedonic scores than GP.

4.4. Comparing GH2 with IG: The Effect of Safe Region on Precise Manipulation

According to the Coarse Translation Time results (see Section 3.1.2), GH2 brought an object close to the target more quickly than IG [7] at all three distances. This suggests that GH2 could be a better choice for translating a distant object into the vicinity of the target position. However, total Task Completion Time did not differ significantly between GH2 and IG (see Section 3.1.1). Although Reposition Time also did not differ significantly between them, descriptive statistics showed that the mean Reposition Times for IG (1 m: 9.48; 3 m: 14.62; 5 m: 16.23) were all shorter than those for GH2 (1 m: 16.15; 3 m: 20.54; 5 m: 29.94). Notably, the difference came closest to significance at the longest distance, 5 m (p = 0.026). Considering these results, GH2’s advantage in coarse translation may have been offset by its longer Reposition Time, which may explain the lack of a significant difference in Task Completion Time. We assume that IG’s safe region helps keep object manipulation stable [7]: as long as a participant’s gaze point stays within the safe region, the object is manipulated by hand movement only. Because hand input is generally considered more precise than eye input [71], this design relies solely on the more accurate input (i.e., the hand) during fine adjustments.

In contrast, GH2 always applies both hand and eye movement to the interaction. Because current eye tracking is imperfect [71], its noise also propagates through our interface, which could hinder precise translation to the target pose. In short, the safe region decouples hand input from eye input, supporting precise manipulation [7]. Nevertheless, the safe region can make objects appear to ‘jump’ when the gaze moves outside the region, which participants may perceive as unnatural movement [40].
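
The gating behavior of a safe region can be sketched as a per-frame update. This is a simplified illustration under our own assumptions, not IG’s implementation [7]: the safe region is modeled as a sphere of a hypothetical radius around the object, only the hand displacement is applied while the gaze point stays inside it, and the object jumps to the gaze point once the gaze leaves it.

```python
import math

# Hypothetical safe-region radius (meters); IG's actual parameters may differ.
SAFE_RADIUS = 0.3

def step_safe_region(obj_pos, gaze_point, hand_delta, radius=SAFE_RADIUS):
    """One frame of a simplified safe-region update.

    Returns (new_position, jumped). While the gaze point is within `radius`
    of the object, gaze is ignored and only the hand displacement is applied.
    Otherwise the object jumps to the gaze point before the hand displacement
    is added.
    """
    inside = math.dist(obj_pos, gaze_point) <= radius
    base = obj_pos if inside else gaze_point
    new_pos = tuple(b + d for b, d in zip(base, hand_delta))
    return new_pos, not inside
```

Under this model, small gaze jitter inside the sphere has no effect on the object, whereas a scheme that always fuses hand and eye input passes gaze noise to the object on every frame.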

Additionally, participants usually experienced a higher task load and mental load with IG than with the other interfaces (see Section 3.2.1 and Section 3.2.2). We believe this may be due to the complexity of IG’s interaction, which involves two steps (selection and release) and requires more physical activity. The only exception was the comparison with GH2 under the 1 m condition. This might be because both GH2 and IG use hand-based free rotation, which makes them more natural but also more sensitive. Although GP’s rotation is presumably easier than theirs, it was not considered as natural (see the comment from P3 compared with those from P4, P10, and P19).

Based on participants’ feedback (see comments from P2 and P3), we also suggest showing a gaze pointer for easier distant object selection, even though the pointer can be distracting [7]. This distraction could be reduced by making the pointer less noticeable (e.g., smaller and/or semi-transparent).

4.5. Comparing GH2 with the Original GazeHand

Although our study did not directly compare our interface with the original GazeHand interface [52], we expect our interface to be more effective than the original one. Even though both GP and IG applied hand amplification [19] for faster object translation, they usually required more object selection actions than GH2 (see Section 3.1.4). The original GazeHand, in contrast, used a natural 1:1 mapping rather than hand amplification. Because our task required translating an object in midair, there was no auxiliary object that the original GazeHand could use to control depth. Users would therefore have to perform even more grab–release actions than with GP and IG to translate the object along their gaze, so the original GazeHand would likely require more time and physical movement than GP or IG. Nevertheless, a direct comparison between the original GazeHand and GH2 is worth conducting in the future; the present study focused on comparing GH2 with well-known gaze + hand interfaces and on highlighting the limitations of the original GazeHand.

4.6. Design Improvement

Participants offered several suggestions for improving the design of GH2. Some stated that maintaining an object’s orientation while translating it was difficult (P4, P10, P19, and P23). Simultaneous translation and rotation is a useful feature, but it seemed challenging for some users (P17). Therefore, alternative manipulation modes that allow only translation or only rotation could be useful. Although participants were allowed to use both hands with GH2, most used only one hand. Thus, the free hand could be used to select the desired mode through different hand gestures [72] (e.g., a fist applies only rotation, pointing with the index finger applies only translation, and other gestures apply both, as in the current design).
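
The proposed mode switching could be prototyped as a lookup from the free hand’s gesture to a mode that masks the translation or rotation update. In this sketch, the gesture labels (`"fist"`, `"point"`) are hypothetical outputs of some gesture recognizer, and representing orientation as additive Euler angles is a simplification chosen for illustration.

```python
from enum import Enum

class Mode(Enum):
    ROTATE_ONLY = "rotate_only"
    TRANSLATE_ONLY = "translate_only"
    BOTH = "both"

# Hypothetical gesture labels from the non-dominant (free) hand.
GESTURE_TO_MODE = {
    "fist": Mode.ROTATE_ONLY,
    "point": Mode.TRANSLATE_ONLY,
}

def mode_for(gesture):
    """Any unrecognized or absent gesture keeps the combined (current) behavior."""
    return GESTURE_TO_MODE.get(gesture, Mode.BOTH)

def apply_manipulation(position, euler, d_position, d_euler, gesture):
    """Apply only the per-frame deltas permitted by the selected mode.

    Adding Euler angles is a simplification; a real system would compose
    rotations (e.g., with quaternions).
    """
    mode = mode_for(gesture)
    if mode is not Mode.ROTATE_ONLY:
        position = tuple(p + d for p, d in zip(position, d_position))
    if mode is not Mode.TRANSLATE_ONLY:
        euler = tuple(e + d for e, d in zip(euler, d_euler))
    return position, euler
```

Falling back to `Mode.BOTH` for any other gesture keeps the existing GH2 behavior as the default, so the extra hand is only needed when the user wants to lock one kind of manipulation.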

P20 suggested that when a virtual hand at the user’s real hand position occludes an object while making a grab gesture, the system should select that object; if two or more objects are occluded, the system would select the nearest one. This resembles the Sticky Finger technique [73], which also relies on occlusion. Such a design could reduce the need for the hand to collide with the target object.
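
P20’s idea can be prototyped with an image-plane style ray test. In this minimal sketch, which reflects our assumptions rather than the Sticky Finger implementation [73], each object is approximated by a bounding sphere, an object counts as occluded when the ray from the eye through the virtual hand intersects its sphere, and the `is_grabbing` flag stands in for a hypothetical grab-gesture detector. Among occluded objects, the one whose surface is closest to the eye is selected.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def ray_sphere_entry(origin, direction, center, radius):
    """Distance along a unit-length ray to where it enters a sphere, or None on a miss."""
    oc = _sub(center, origin)
    t_mid = _dot(oc, direction)          # distance to the closest approach
    if t_mid < 0:
        return None                      # sphere center is behind the eye
    miss_sq = _dot(oc, oc) - t_mid ** 2  # squared distance from center to ray
    if miss_sq > radius ** 2:
        return None
    return t_mid - math.sqrt(radius ** 2 - miss_sq)

def select_occluded(eye, hand, objects, is_grabbing):
    """Return the name of the nearest object occluded by the hand, or None.

    objects: iterable of (name, center, radius) bounding spheres.
    """
    if not is_grabbing:
        return None
    v = _sub(hand, eye)
    length = math.sqrt(_dot(v, v))
    direction = tuple(x / length for x in v)
    hits = []
    for name, center, radius in objects:
        t = ray_sphere_entry(eye, direction, center, radius)
        if t is not None:
            hits.append((t, name))
    return min(hits)[1] if hits else None
```

Because the test uses the hand’s real (unamplified) position, the user only needs to line the hand up with the object in view rather than reach it.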

Additionally, GH2 could offer fast and slow speed options depending on the task or user preference. Furthermore, since IG’s safe region appeared to support precise manipulation, adding a similar feature to GH2 may enhance the user experience.
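
Speed options could be exposed as presets of a gain parameter. The sketch below uses a generic rate-control depth update, in which the hand’s signed offset from a reference point sets how fast the depth changes. The dead zone, gains, depth limits, and preset names are hypothetical placeholders, and this is not GH2’s actual depth mapping.

```python
from dataclasses import dataclass

@dataclass
class DepthRateConfig:
    gain: float = 2.0        # depth speed (m/s) per meter of hand offset; hypothetical
    dead_zone: float = 0.05  # offsets within this band (m) leave depth unchanged
    min_depth: float = 0.3   # hypothetical depth limits (m)
    max_depth: float = 10.0

# Hypothetical user-selectable speed options.
SPEED_PRESETS = {
    "slow": DepthRateConfig(gain=1.0),
    "fast": DepthRateConfig(gain=4.0),
}

def update_gaze_depth(depth, hand_offset, dt, cfg):
    """Rate control: the hand's signed offset sets the depth velocity.

    hand_offset > 0 (hand pushed forward) increases depth; < 0 decreases it.
    """
    if abs(hand_offset) <= cfg.dead_zone:
        return depth
    # Subtract the dead zone so velocity ramps up from zero at its edge.
    if hand_offset > 0:
        effective = hand_offset - cfg.dead_zone
    else:
        effective = hand_offset + cfg.dead_zone
    new_depth = depth + cfg.gain * effective * dt
    return min(max(new_depth, cfg.min_depth), cfg.max_depth)
```

For example, holding the hand 0.15 m forward for one second moves the depth 0.4 m farther with the "fast" preset and 0.1 m with "slow". The dead zone keeps small hand tremor from causing depth drift.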

4.7. Findings and Limitations

In summary, this study yielded the following findings:

- Task Completion Time with IG depended on the distance.

- Both GH2 and GP achieved above-average SUS scores for distant object interaction.

- GH2 produced less physical movement than both GP and IG in most cases.

- GH2 produced a higher hedonic experience than both GP and IG.

- IG’s safe region may reduce the reposition time compared to GH2.

- Using a gaze pointer is recommended for better distant object selection.

In this study, we identified several limitations. First, the task in our study covered object translation only in the forward direction; examining translation in the backward direction would also be helpful. Second, as we focused only on depth-axis translation controlled by gaze depth, we did not include any scaling tasks, even though scaling is one of the fundamental interactions. Third, the object in our task was static (i.e., not moving). However, VR users may also need to interact with moving objects. For example, Pursuit [9,34] is an interface for interacting with moving objects. Therefore, it would be interesting to compare our interface with Pursuit using automatically moving objects. Fourth, although we recruited participants with varied VR experience to generalize the results, the participants were relatively young (aged 20 to 35), which may limit the generalizability of the results. Specifically, older users may behave differently when using our interface [74]. Fifth, the results could be affected by eye tracking quality [71], which could be improved by future devices or additional correction algorithms. Likewise, while the original GazeHand [52] ran on an HTC Vive Pro Eye HMD, our system was built on a Meta Quest Pro HMD. Although both HMDs support hand and gaze tracking, they may provide different user experiences because their rendering, hand tracking, and gaze tracking quality may differ. Lastly, for a fairer comparison, our implementation is based on the Eye-GazeHand. However, the Head-GazeHand showed better performance than the Eye-GazeHand in the previous study [52]. As head gaze is available on a wider range of devices (e.g., Meta Quest 2, 3, and 3S), it would be worthwhile to develop a head gaze version of our interface and compare it with other interfaces.

5. Conclusions

In this paper, we developed an extension of GazeHand, named GazeHand2, which applies a gaze depth control method. Unlike with the original GazeHand, users can control the gaze depth according to their relative hand position. We conducted a user study comparing our interface with well-known gaze- and hand-based interfaces that had not been compared with the original GazeHand. Results showed that, compared to the other interfaces, our interface produced less physical movement and provided more enjoyable experiences while supporting realistic object rotation. Gaze+Pinch showed high performance but was perceived as a less realistic interface. Participants found ImplicitGaze relatively complex and difficult to use for interacting with distant objects due to the absence of a gaze pointer.

In the future, we will continue to explore how our interface performs under various conditions, such as offering multiple depth control methods, resizing objects, or interacting with moving objects. We will also investigate use cases for our interface, such as multi-user VR-based remote collaboration, as well as its integration with VR locomotion techniques.

Author Contributions

Conceptualization, J.J. and S.K.; methodology, J.J. and S.K.; software, J.J.; validation, J.J., S.-H.K., H.-J.Y., G.L. and S.K.; formal analysis, J.J.; investigation, J.J.; resources, J.J.; data curation, J.J.; writing—original draft preparation, J.J.; writing—review and editing, S.-H.K., H.-J.Y., G.L. and S.K.; visualization, J.J.; supervision, S.K.; project administration, S.K. and G.L.; funding acquisition, S.-H.K., H.-J.Y. and S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP)-Innovative Human Resource Development for Local Intellectualization program grant funded by the Korea government (MSIT) (IITP-2025-RS-2022-00156287); in part, by the Institute of Information & Communications Technology Planning & Evaluation (IITP) under the Artificial Intelligence Convergence Innovation Human Resources Development (IITP-2023-RS-2023-00256629) grant funded by the Korea government (MSIT); and, in part, by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (No. RS-2024-00461327).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Chonnam National University (protocol code 1040198-221121-HR-145-01, approval date 20 December 2022).

Informed Consent Statement

Informed consent for participation was obtained from all subjects involved in the study.

Data Availability Statement

The original data presented in the study are openly available in GitHub: https://github.com/joon060707/GazeHand2-Result (accessed on 15 May 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- LaViola, J.J., Jr.; Kruijff, E.; McMahan, R.P.; Bowman, D.; Poupyrev, I.P. 3D User Interfaces: Theory and Practice; Addison-Wesley Professional: Boston, MA, USA, 2017.

- Mendes, D.; Caputo, F.M.; Giachetti, A.; Ferreira, A.; Jorge, J. A survey on 3D virtual object manipulation: From the desktop to immersive virtual environments. Comput. Graph. Forum 2019, 38, 21–45.

- Hertel, J.; Karaosmanoglu, S.; Schmidt, S.; Bräker, J.; Semmann, M.; Steinicke, F. A Taxonomy of Interaction Techniques for Immersive Augmented Reality based on an Iterative Literature Review. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Bari, Italy, 4–8 October 2021; pp. 431–440.

- Yu, D.; Dingler, T.; Velloso, E.; Goncalves, J. Object Selection and Manipulation in VR Headsets: Research Challenges, Solutions, and Success Measurements. ACM Comput. Surv. 2024, 57, 1–34.

- Bowman, D.A.; Hodges, L.F. An evaluation of techniques for grabbing and manipulating remote objects in immersive virtual environments. In Proceedings of the 1997 Symposium on Interactive 3D Graphics, Providence, RI, USA, 27–30 April 1997; I3D ’97. pp. 35–38.

- Pfeuffer, K.; Mayer, B.; Mardanbegi, D.; Gellersen, H. Gaze + pinch interaction in virtual reality. In Proceedings of the 5th Symposium on Spatial User Interaction, Brighton, UK, 16–17 October 2017; SUI ’17. pp. 99–108.

- Yu, D.; Lu, X.; Shi, R.; Liang, H.N.; Dingler, T.; Velloso, E.; Goncalves, J. Gaze-supported 3D object manipulation in virtual reality. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; CHI ’21.

- Poupyrev, I.; Billinghurst, M.; Weghorst, S.; Ichikawa, T. The go-go interaction technique: Non-linear mapping for direct manipulation in VR. In Proceedings of the 9th Annual ACM Symposium on User Interface Software and Technology, Seattle, WA, USA, 6–8 November 1996; UIST ’96. pp. 79–80.

- Khamis, M.; Oechsner, C.; Alt, F.; Bulling, A. VRpursuits: Interaction in virtual reality using smooth pursuit eye movements. In Proceedings of the 2018 International Conference on Advanced Visual Interfaces, Castiglione della Pescaia, Grosseto, Italy, 29 May–1 June 2018; AVI ’18.

- Liu, C.; Plopski, A.; Orlosky, J. OrthoGaze: Gaze-based three-dimensional object manipulation using orthogonal planes. Comput. Graph. 2020, 89, 1–10.

- Bao, Y.; Wang, J.; Wang, Z.; Lu, F. Exploring 3D interaction with gaze guidance in augmented reality. In Proceedings of the 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), Shanghai, China, 25–29 March 2023; pp. 22–32.

- Ryu, K.; Lee, J.J.; Park, J.M. GG Interaction: A gaze–grasp pose interaction for 3D virtual object selection. J. Multimodal User Interfaces 2019, 13, 383–393.

- Rothe, S.; Pothmann, P.; Drewe, H.; Hussmann, H. Interaction Techniques for Cinematic Virtual Reality. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 1733–1737.