Multi-Scale Context Enhancement Network with Local–Global Synergy Modeling Strategy for Semantic Segmentation on Remote Sensing Images

Abstract

1. Introduction

2. Methodology

2.1. Overall

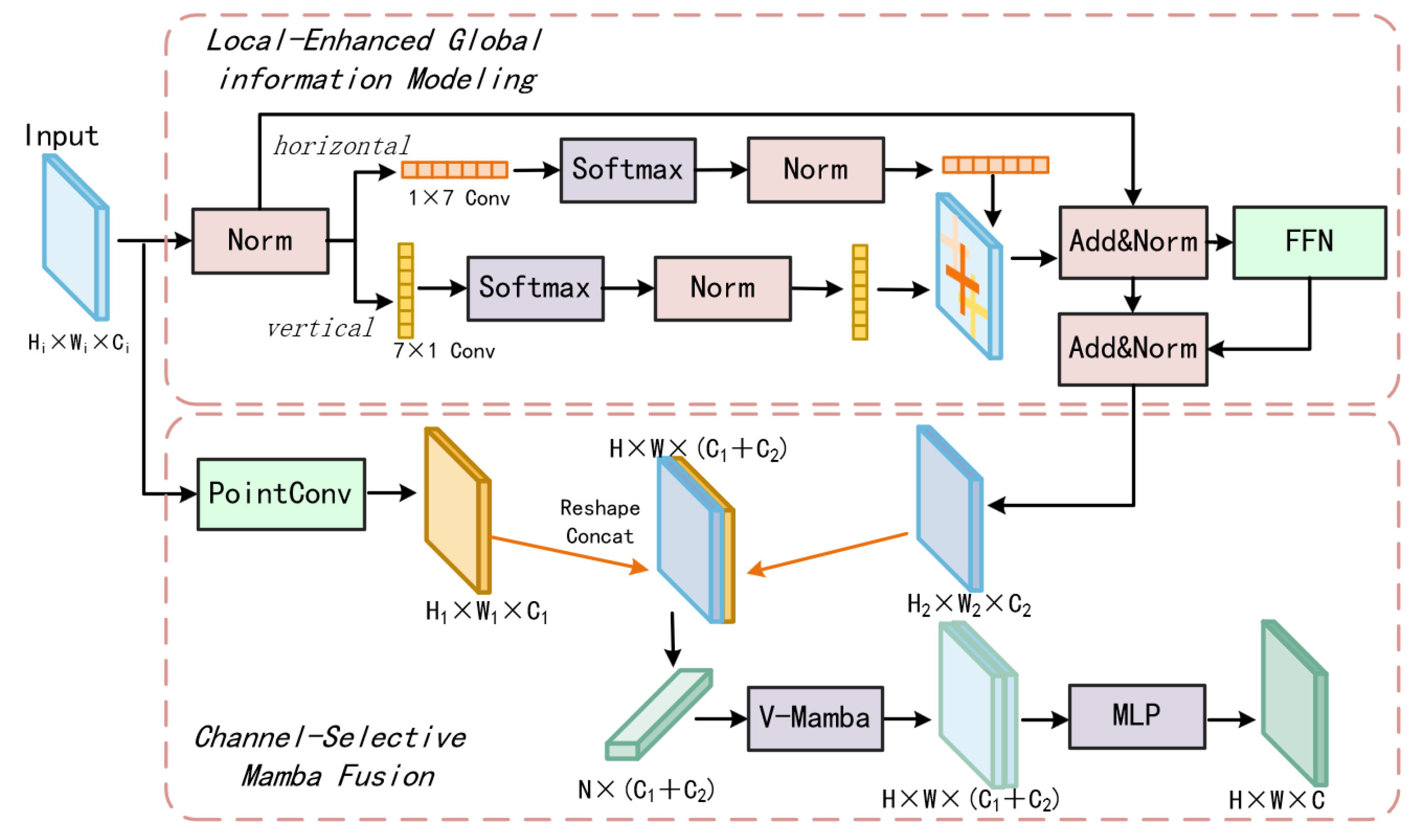

2.2. Local–Global Align Mamba Fusion

2.3. Context-Aware Cross-Attention Interaction Module

3. Experiments

3.1. Experimental Setting

3.1.1. Datasets

3.1.2. Implementation Details

3.1.3. Evaluation Metrics
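The comparison tables report mIoU and mF1. The snippet below is a minimal sketch that assumes the standard confusion-matrix definitions, IoU_c = TP_c / (TP_c + FP_c + FN_c) and F1_c = 2TP_c / (2TP_c + FP_c + FN_c), each averaged without weighting over the classes. The function name `miou_mf1` and the toy pixel counts are illustrative assumptions. Details of the authors' evaluation protocol, such as whether any class is excluded, are not stated in this section.

```python
from typing import List, Tuple


def miou_mf1(conf: List[List[int]]) -> Tuple[float, float]:
    """Return (mIoU, mF1) in percent from a square confusion matrix.

    conf[i][j] is the number of pixels whose ground-truth class is i
    and whose predicted class is j.
    """
    n = len(conf)
    ious, f1s = [], []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                       # class-c pixels predicted as another class
        fp = sum(conf[r][c] for r in range(n)) - tp  # other-class pixels predicted as class c
        iou_den = tp + fp + fn
        f1_den = 2 * tp + fp + fn
        # Assumption: a class absent from both ground truth and prediction scores 0.
        ious.append(tp / iou_den if iou_den else 0.0)
        f1s.append(2 * tp / f1_den if f1_den else 0.0)
    return 100.0 * sum(ious) / n, 100.0 * sum(f1s) / n


# Hypothetical 3-class confusion matrix (pixel counts), for illustration only.
conf = [
    [50, 5, 0],
    [10, 30, 0],
    [0, 0, 5],
]
miou, mf1 = miou_mf1(conf)
print(f"mIoU = {miou:.2f}, mF1 = {mf1:.2f}")
```

Many evaluation scripts skip classes that appear in neither the ground truth nor the prediction instead of scoring them as 0. That choice changes the class average, so it should match whatever protocol produced the reported numbers.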

3.2. Experimental Results

3.2.1. Ablation Study

3.2.2. Effectiveness Verification

| Methods | Venue | Impervious Surface | Building | Low Vegetation | Tree | Car | mIoU | mF1 |
|---|---|---|---|---|---|---|---|---|
| STransFuse [16] | JSTARS2021 | 83.93 | 90.44 | 74.72 | 75.63 | 86.61 | 82.27 | 90.48 |
| ST-UNet [17] | TGRS2022 | 79.19 | 86.63 | 67.89 | 66.37 | 79.77 | 75.94 | 86.13 |
| WSSS [53] | TGRS2023 | 85.91 | 91.57 | 75.35 | 76.82 | 88.86 | 83.70 | 90.98 |
| EMRT [19] | TGRS2023 | 85.34 | 91.11 | 74.62 | 76.38 | 86.43 | 82.78 | 90.59 |
| BIBED-Seg [18] | JSTARS2023 | 87.40 | 90.40 | 75.30 | 79.20 | 67.30 | 79.92 | - |
| HSDN [23] | TGRS2023 | 78.86 | 77.24 | 72.85 | 61.68 | 69.60 | 72.05 | 83.60 |
| Samba [43] | Heliyon2024 | 84.45 | 90.06 | 74.37 | 74.98 | 87.61 | 82.29 | 90.15 |
| LSENet [20] | GRSL2024 | 80.87 | 88.82 | 70.60 | 71.92 | 80.68 | 78.58 | 87.85 |
| KFRNet [54] | GRSL2024 | 86.09 | 91.66 | 75.50 | 77.24 | 89.30 | 83.96 | 91.15 |
| MSTNet-S [21] | TGRS2024 | 82.50 | 89.76 | 73.83 | 73.82 | 90.46 | 75.55 | - |
| TCNet [22] | TGRS2024 | 86.74 | 94.20 | 76.75 | 76.66 | 90.63 | 85.00 | 91.73 |
| Lu [57] | TGRS2024 | 82.87 | 88.23 | 71.03 | 67.49 | 83.49 | 78.62 | - |
| GAGNet-S [56] | TGRS2024 | 83.41 | 89.43 | 73.89 | 73.90 | 89.91 | 82.11 | - |
| SegFormer [41] | NIPS2021 | 86.44 | 93.05 | 76.63 | 78.55 | 90.17 | 84.96 | 91.74 |
| Mask2Former [27] | CVPR2022 | 86.55 | 92.50 | 76.41 | 79.75 | 91.68 | 85.38 | 91.98 |
| Mask2Former (-w,MLMFPN) | - | 87.78 | 93.14 | 77.05 | 79.14 | 92.29 | 85.88 | 92.27 |
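Assuming mIoU is the unweighted mean of the five per-class IoU values (the table gives no class weighting), the mIoU column can be reproduced directly from each row. The sketch below does this for the two Mask2Former rows, with the values copied from the table above. The helper name `mean_iou` is hypothetical.

```python
# Per-class IoU (%) copied from the table above, in column order:
# Impervious Surface, Building, Low Vegetation, Tree, Car; plus the reported mIoU.
rows = {
    "Mask2Former [27]": ([86.55, 92.50, 76.41, 79.75, 91.68], 85.38),
    "Mask2Former (-w,MLMFPN)": ([87.78, 93.14, 77.05, 79.14, 92.29], 85.88),
}


def mean_iou(per_class):
    """Unweighted mean over classes (assumed definition of mIoU)."""
    return sum(per_class) / len(per_class)


for name, (per_class, reported) in rows.items():
    recomputed = mean_iou(per_class)
    print(f"{name}: recomputed {recomputed:.2f}, reported {reported:.2f}")
```

Both rows reproduce the reported mIoU to two decimals. The difference between them (85.88 - 85.38 = 0.50) is the mIoU gain of the MLMFPN variant over the Mask2Former baseline in this table.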

| Methods | Venue | Impervious Surface | Building | Low Vegetation | Tree | Car | mIoU | mF1 |
|---|---|---|---|---|---|---|---|---|
| UNetFormer [15] | ISPRS2022 | 85.13 | 92.58 | 66.66 | 83.29 | 82.27 | 81.98 | 89.85 |
| ST-UNet [17] | TGRS2022 | 76.36 | 82.98 | 57.79 | 72.53 | 61.48 | 70.23 | 82.15 |
| CMTFNet [55] | TGRS2023 | 84.67 | 92.07 | 68.07 | 83.50 | 82.17 | 82.10 | 89.96 |
| HSDN [23] | TGRS2023 | 76.14 | 85.85 | 65.67 | 70.12 | 55.35 | 70.63 | 82.36 |
| BIBED-Seg [18] | JSTARS2023 | 70.60 | 76.10 | 63.00 | 67.80 | 62.30 | 67.96 | - |
| LSENet [20] | GRSL2024 | 77.74 | 84.26 | 62.83 | 75.99 | 62.11 | 72.59 | 83.82 |
| Samba [43] | Heliyon2024 | 81.67 | 87.26 | 65.82 | 77.93 | 55.10 | 73.56 | 84.23 |
| Lu [57] | TGRS2024 | 76.14 | 82.92 | 62.18 | 72.55 | 52.75 | 69.31 | - |
| GAGNet-S [56] | TGRS2024 | 85.80 | 92.04 | 68.63 | 79.69 | 78.01 | 80.84 | - |
| MSTNet-S [21] | TGRS2024 | 85.52 | 93.29 | 68.40 | 79.31 | 80.31 | 81.36 | - |
| SegFormer [41] | NIPS2021 | 84.88 | 90.57 | 70.62 | 79.49 | 70.34 | 79.18 | 88.16 |
| MaskFormer [24] | NIPS2021 | 85.78 | 90.72 | 70.78 | 80.20 | 75.28 | 80.55 | 89.06 |
| Mask2Former [27] | CVPR2022 | 85.78 | 91.59 | 72.12 | 80.70 | 73.02 | 80.64 | 89.10 |
| Mask2Former (-w,MLMFPN) | - | 86.24 | 91.88 | 72.76 | 80.14 | 73.38 | 80.88 | 89.24 |
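For a single class, IoU and F1 are computed from the same TP, FP, and FN counts. Writing S = FP + FN gives IoU = TP / (TP + S) and F1 = 2TP / (2TP + S), so F1 = 2·IoU / (1 + IoU). Assuming mF1 is the unweighted mean of the per-class F1 scores, the mF1 column can therefore be recovered approximately from the per-class IoU values. The sketch below does this for the two Mask2Former rows of the table above. The helper `f1_from_iou` is hypothetical.

```python
def f1_from_iou(iou_pct: float) -> float:
    """Per-class F1 (%) from per-class IoU (%), using F1 = 2*IoU / (1 + IoU)."""
    iou = iou_pct / 100.0
    return 100.0 * 2.0 * iou / (1.0 + iou)


# Per-class IoU (%) copied from the table above (five classes) and the reported mF1.
rows = {
    "Mask2Former [27]": ([85.78, 91.59, 72.12, 80.70, 73.02], 89.10),
    "Mask2Former (-w,MLMFPN)": ([86.24, 91.88, 72.76, 80.14, 73.38], 89.24),
}

for name, (ious, reported_mf1) in rows.items():
    mf1 = sum(f1_from_iou(v) for v in ious) / len(ious)
    # Small gaps are expected: the table's IoUs are rounded to two decimals.
    print(f"{name}: recomputed mF1 {mf1:.2f}, reported {reported_mf1:.2f}")
```

The recomputed values agree with the reported mF1 to within about 0.01. Exact agreement is not expected, because the per-class IoUs are rounded and the averaging protocol (per image or over the whole test set) is not stated.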

3.2.3. Visualization Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| ViT | Vision Transformer |
| MLMFPN | Multi-Scale Local–Global Mamba Feature Pyramid Network |
| CCIM | Context-Aware Cross-Attention Interaction Module |
| LGAMF | Local–Global Align Mamba Fusion |

References

1. Fan, Z.; Zhan, T.; Gao, Z.; Li, R.; Liu, Y.; Zhang, L.; Jin, Z.; Xu, S. Land cover classification of resources survey remote sensing images based on segmentation model. IEEE Access 2022, 10, 56267–56281.
2. Jia, P.; Chen, C.; Zhang, D.; Sang, Y.; Zhang, L. Semantic segmentation of deep learning remote sensing images based on band combination principle: Application in urban planning and land use. Comput. Commun. 2024, 217, 97–106.
3. Wang, R.; Sun, Y.; Zong, J.; Wang, Y.; Cao, X.; Wang, Y.; Cheng, X.; Zhang, W. Remote sensing application in ecological restoration monitoring: A systematic review. Remote Sens. 2024, 16, 2204.
4. Su, T.; Li, H.; Zhang, S.; Li, Y. Image segmentation using mean shift for extracting croplands from high-resolution remote sensing imagery. Remote Sens. Lett. 2015, 6, 952–961.
5. Kumari, M.; Kaul, A. Deep learning techniques for remote sensing image scene classification: A comprehensive review, current challenges, and future directions. Concurr. Comput. Pract. Exp. 2023, 35, e7733.
6. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
7. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.
8. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.
9. Li, Z.; Wang, Y.; Zhang, N.; Zhang, Y.; Zhao, Z.; Xu, D.; Ben, G.; Gao, Y. Deep learning-based object detection techniques for remote sensing images: A survey. Remote Sens. 2022, 14, 2385.
10. Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
11. Wang, C.; Cao, R.; Wang, R. Learning discriminative topological structure information representation for 2D shape and social network classification via persistent homology. Knowl.-Based Syst. 2025, 311, 113125.
12. Wang, C.; He, S.; Wu, M.; Lam, S.K.; Tiwari, P.; Gao, X. Looking Clearer with Text: A Hierarchical Context Blending Network for Occluded Person Re-Identification. IEEE Trans. Inf. Forensics Secur. 2025, 20, 4296–4307.
13. Zhao, T.; Fu, C.; Song, W.; Sham, C.W. RGGC-UNet: Accurate deep learning framework for signet ring cell semantic segmentation in pathological images. Bioengineering 2023, 11, 16.
14. Gu, Y.; Fu, C.; Song, W.; Wang, X.; Chen, J. RTLinearFormer: Semantic segmentation with lightweight linear attentions. Neurocomputing 2025, 625, 129489.
15. Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.
16. Gao, L.; Liu, H.; Yang, M.; Chen, L.; Wan, Y.; Xiao, Z.; Qian, Y. STransFuse: Fusing Swin Transformer and convolutional neural network for remote sensing image semantic segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10990–11003.
17. He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin Transformer embedding UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408715.
18. Sui, B.; Cao, Y.; Bai, X.; Zhang, S.; Wu, R. BIBED-Seg: Block-in-block edge detection network for guiding semantic segmentation task of high-resolution remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 1531–1549.
19. Xiao, T.; Liu, Y.; Huang, Y.; Li, M.; Yang, G. Enhancing multiscale representations with transformer for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5605116.
20. Ding, R.X.; Xu, Y.H.; Liu, J.; Zhou, W.; Chen, C. LSENet: Local and Spatial Enhancement to Improve the Semantic Segmentation of Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2024, 21, 7506005.
21. Zhou, W.; Li, Y.; Huang, J.; Liu, Y.; Jiang, Q. MSTNet-KD: Multilevel transfer networks using knowledge distillation for the dense prediction of remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4504612.
22. Zhang, L.; Tan, Z.; Zhang, G.; Zhang, W.; Li, Z. Learn more and learn useful: Truncation Compensation Network for Semantic Segmentation of High-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4403814.
23. Zheng, C.; Nie, J.; Wang, Z.; Song, N.; Wang, J.; Wei, Z. High-order semantic decoupling network for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5401415.
24. Cheng, B.; Schwing, A.; Kirillov, A. Per-pixel classification is not all you need for semantic segmentation. Adv. Neural Inf. Process. Syst. 2021, 34, 17864–17875.
25. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Computer Vision–ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 213–229.
26. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2020, arXiv:2010.04159.
27. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299.
28. Gomes, R.; Rozario, P.; Adhikari, N. Deep learning optimization in remote sensing image segmentation using dilated convolutions and ShuffleNet. In Proceedings of the 2021 IEEE International Conference on Electro Information Technology (EIT), Mt. Pleasant, MI, USA, 14–15 May 2021; pp. 244–249.
29. Liu, R.; Tao, F.; Liu, X.; Na, J.; Leng, H.; Wu, J.; Zhou, T. RAANet: A residual ASPP with attention framework for semantic segmentation of high-resolution remote sensing images. Remote Sens. 2022, 14, 3109.
30. Liu, G.; Liu, C.; Wu, X.; Li, Y.; Zhang, X.; Xu, J. Optimization of Remote-Sensing Image-Segmentation Decoder Based on Multi-Dilation and Large-Kernel Convolution. Remote Sens. 2024, 16, 2851.
31. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.
32. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.
33. Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified perceptual parsing for scene understanding. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 418–434.
34. Li, Z.; Li, E.; Xu, T.; Samat, A.; Liu, W. Feature alignment FPN for oriented object detection in remote sensing images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6001705.
35. Hu, M.; Li, Y.; Fang, L.; Wang, S. A2-FPN: Attention aggregation based feature pyramid network for instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15343–15352.
36. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.
37. Tian, Y.; Zhang, M.; Li, J.; Li, Y.; Yang, H.; Li, W. FPNFormer: Rethink the method of processing the rotation-invariance and rotation-equivariance on arbitrary-oriented object detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5605610.
38. Ding, Y.; Ma, Z.; Wen, S.; Xie, J.; Chang, D.; Si, Z.; Wu, M.; Ling, H. AP-CNN: Weakly supervised attention pyramid convolutional neural network for fine-grained visual classification. IEEE Trans. Image Process. 2021, 30, 2826–2836.
39. Chen, Y.; Zhu, X.; Li, Y.; Wei, Y.; Ye, L. Enhanced semantic feature pyramid network for small object detection. Signal Processing: Image Commun. 2023, 113, 116919.
40. Wang, Z.; Chen, J.; Hoi, S.C. Deep learning for image super-resolution: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3365–3387.
41. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.
42. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient visual representation learning with bidirectional state space model. In Proceedings of the 41st International Conference on Machine Learning, ICML’24, Vienna, Austria, 21–27 July 2024.
43. Zhu, Q.; Cai, Y.; Fang, Y.; Yang, Y.; Chen, C.; Fan, L.; Nguyen, A. Samba: Semantic segmentation of remotely sensed images with state space model. Heliyon 2024, 10, e38495.
44. Li, J.; Liu, Z.; Liu, S.; Wang, H. MBSSNet: A Mamba-Based Joint Semantic Segmentation Network for Optical and SAR Images. IEEE Geosci. Remote Sens. Lett. 2025, 22, 6004305.
45. Sun, H.; Liu, J.; Yang, J.; Wu, Z. HMAFNet: Hybrid Mamba-Attention Fusion Network for Remote Sensing Image Semantic Segmentation. IEEE Geosci. Remote Sens. Lett. 2025, 22, 8001405.
46. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
47. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.
48. Ding, X.; Guo, Y.; Ding, G.; Han, J. ACNet: Strengthening the kernel skeletons for powerful CNN via asymmetric convolution blocks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1911–1920.
49. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.
50. Available online: https://www.isprs.org/resources/datasets/benchmarks/UrbanSemLab/2d-sem-label-potsdam.aspx (accessed on 18 June 2025).
51. Available online: https://www.isprs.org/resources/datasets/benchmarks/UrbanSemLab/2d-sem-label-vaihingen.aspx (accessed on 18 June 2025).
52. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.
53. Li, Z.; Zhang, X.; Xiao, P. One model is enough: Toward multiclass weakly supervised remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4503513.
54. Wang, H.; Li, X.; Huo, L.Z. Key Feature Repairing based on Self-Supervised for Remote Sensing Semantic Segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 5001505.
55. Wu, H.; Huang, P.; Zhang, M.; Tang, W.; Yu, X. CMTFNet: CNN and multiscale transformer fusion network for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 2004612.
56. Zhou, W.; Fan, X.; Yan, W.; Shan, S.; Jiang, Q.; Hwang, J.N. Graph attention guidance network with knowledge distillation for semantic segmentation of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4506015.
57. Lu, X.; Jiang, Z.; Zhang, H. Weakly Supervised Remote Sensing Image Semantic Segmentation with Pseudo Label Noise Suppression. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5406912.

| Methods | CCIM | LGAMF | mIoU | mF1 |
|---|---|---|---|---|
| Mask2Former [27] |  |  | 85.38 | 91.98 |
| Mask2Former * | ✓ |  | 85.54 | 92.06 |
| Mask2Former * | ✓ | ✓ | 85.88 | 92.27 |
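The contribution of each ablation step can be read off as row-to-row differences. The sketch below computes them from the mIoU and mF1 values in the ablation table. The variable names and the `delta` helper are illustrative, and the rows are labelled by position rather than by module.

```python
# (mIoU, mF1) for each ablation row, copied from the table above.
baseline = (85.38, 91.98)    # Mask2Former [27]
one_module = (85.54, 92.06)  # first Mask2Former * row (one module added)
full = (85.88, 92.27)        # second Mask2Former * row (both modules)


def delta(before, after):
    """Per-metric improvement, rounded to the table's two-decimal precision."""
    return tuple(round(b - a, 2) for a, b in zip(before, after))


print("first step: ", delta(baseline, one_module))
print("second step:", delta(one_module, full))
print("total:      ", delta(baseline, full))
```

Relative to the baseline, the full configuration adds 0.50 mIoU and 0.29 mF1. The larger share of the mIoU gain (0.34) comes from the second step.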

| Methods | Model Size (M) | FPS | mIoU | mF1 |
|---|---|---|---|---|
| Mask2Former [27] | 421 | 34.95 | 85.38 | 91.98 |
| Mask2Former (-w,MLMFPN) | 668 | 27.42 | 85.88 | 92.27 |
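The efficiency comparison can be summarized as relative overheads. The sketch below derives the percentage change in the size column, the percentage change in throughput, and the time per frame as 1000/FPS in milliseconds, using the values from the table. The size unit is kept as reported ("M"), and the helper `pct_change` is hypothetical. Reading 1000/FPS as per-image latency further assumes FPS was measured one image at a time, which the table does not state.

```python
# Values copied from the efficiency table; "size" keeps the table's "(M)" unit as reported.
baseline = {"size": 421, "fps": 34.95, "miou": 85.38}  # Mask2Former [27]
variant = {"size": 668, "fps": 27.42, "miou": 85.88}   # Mask2Former (-w,MLMFPN)


def pct_change(old: float, new: float) -> float:
    """Signed percentage change from old to new."""
    return (new - old) / old * 100.0


size_growth = pct_change(baseline["size"], variant["size"])  # positive: model grows
fps_change = pct_change(baseline["fps"], variant["fps"])     # negative: throughput drops
ms_per_frame = {
    name: 1000.0 / m["fps"] for name, m in (("baseline", baseline), ("variant", variant))
}
miou_gain = variant["miou"] - baseline["miou"]

print(f"size: {size_growth:+.1f}%  FPS: {fps_change:+.1f}%  mIoU: {miou_gain:+.2f}")
print({name: round(ms, 1) for name, ms in ms_per_frame.items()})
```

Under these numbers, the MLMFPN variant is about 59% larger and about 22% slower (roughly 28.6 ms versus 36.5 ms per frame) in exchange for +0.50 mIoU.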

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, Q.; Liu, H.; Jin, Y.; Liu, X. Multi-Scale Context Enhancement Network with Local–Global Synergy Modeling Strategy for Semantic Segmentation on Remote Sensing Images. Electronics 2025, 14, 2526. https://doi.org/10.3390/electronics14132526


Chicago/Turabian Style: Ma, Qibing, Hongning Liu, Yifan Jin, and Xinyue Liu. 2025. "Multi-Scale Context Enhancement Network with Local–Global Synergy Modeling Strategy for Semantic Segmentation on Remote Sensing Images" Electronics 14, no. 13: 2526. https://doi.org/10.3390/electronics14132526
