Edge Intelligence: A Review of Deep Neural Network Inference in Resource-Limited Environments

Abstract

1. Introduction

- ML frameworks and compilers have been reviewed but without considering edge-specific compression and optimization [13].

- A comprehensive discussion on EI techniques, including compression, hardware accelerators, and software–hardware co-design, along with a classification of compression strategies, practical applications, benefits, and limitations;

- A detailed review of software tools and hardware platforms with a comparative analysis of available options;

- An identification of design hurdles and avenues for future investigation in efficient edge intelligence, outlining unresolved problems and potential advancements.

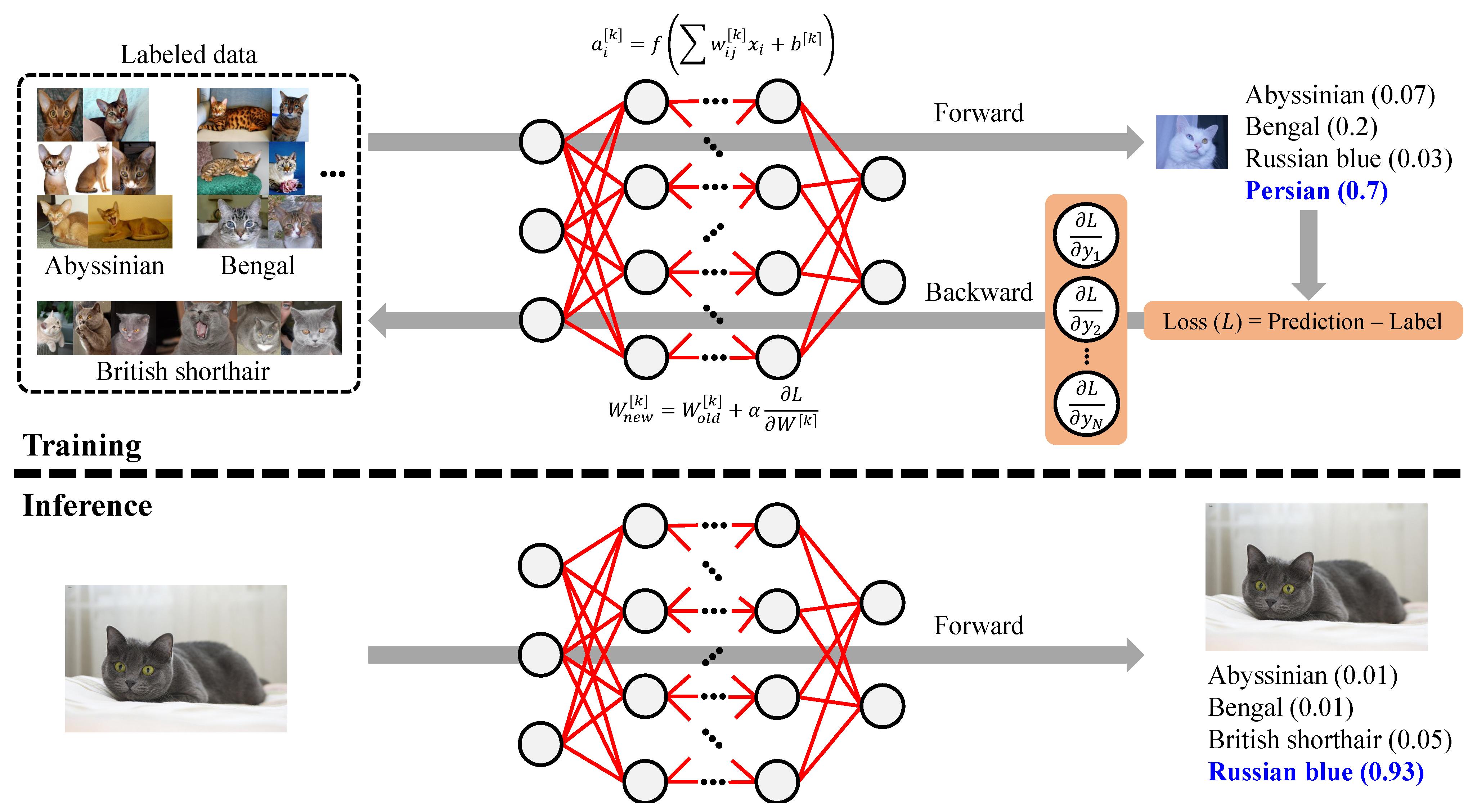

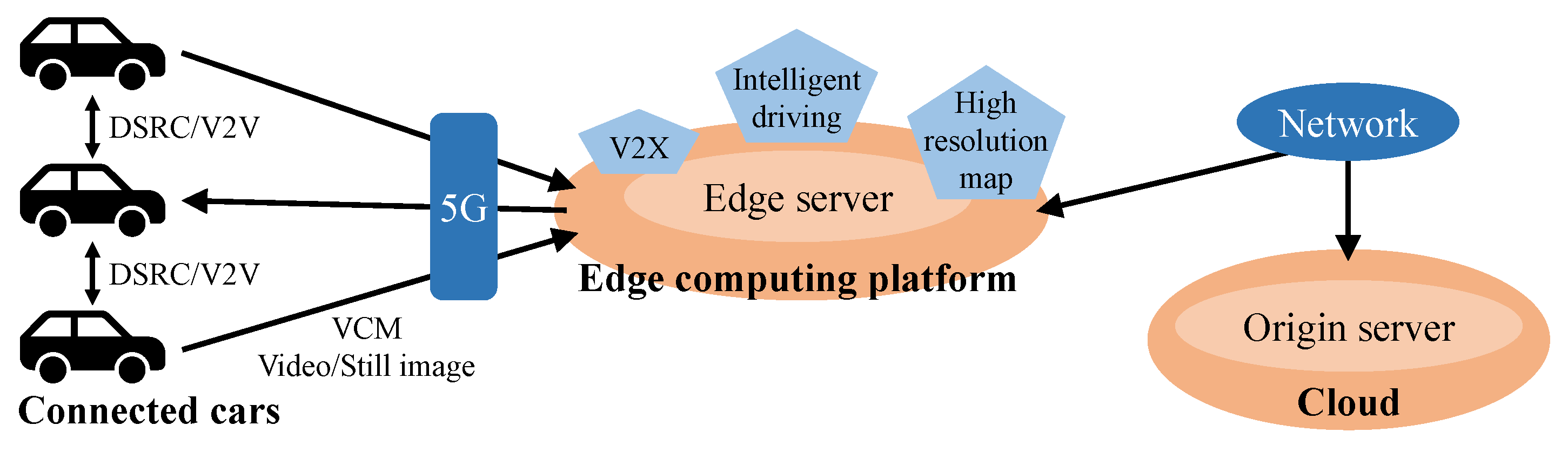

2. Edge Inference of Deep Learning Models

- Inference on edge servers involves deploying DL models on local computing nodes near data sources, such as micro data centers or 5G base stations. This approach offers higher computational power than standalone edge devices, allowing for complex model inference with lower latency compared to cloud solutions. However, it still requires network connectivity, which can introduce variable delays, and may have limited scalability compared to large cloud infrastructures.

- On-device inference on edge nodes runs DL models directly on resource-constrained hardware, such as smartphones, IoT devices, and embedded systems. This method ensures real-time responsiveness, enhances privacy by keeping data local, and reduces reliance on external networks. However, it faces challenges related to limited computational resources, power constraints, and the need for model compression and optimization techniques to fit within hardware capabilities.

- Cooperative inference splits computation between edge nodes and edge servers: lightweight processing runs on the node, while computationally intensive tasks are offloaded to the edge server. This hybrid approach balances efficiency, latency, and power consumption while leveraging the strengths of both local and remote computing. It is particularly beneficial for applications such as autonomous vehicles and smart surveillance. However, it introduces additional complexity in workload partitioning and requires reliable network communication between the edge node and the server.
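Cooperative inference hinges on choosing where to split the network. The following is a minimal sketch, assuming per-layer latency profiles for the node and the server are known in advance and that transfer time is simply payload size divided by a fixed uplink bandwidth (returning results and network jitter are ignored). The function name and all numbers are hypothetical.

```python
from typing import Sequence, Tuple


def best_split(device_ms: Sequence[float], server_ms: Sequence[float],
               tx_bytes: Sequence[float], bandwidth_bytes_per_ms: float) -> Tuple[int, float]:
    """Pick the split point k that minimizes end-to-end latency.

    Layers [0, k) run on the edge node and layers [k, n) on the edge server.
    tx_bytes[k] is the payload sent when splitting before layer k: tx_bytes[0]
    is the raw input and tx_bytes[n] is whatever must leave the node when
    everything runs locally (often 0).
    """
    n = len(device_ms)
    if len(server_ms) != n or len(tx_bytes) != n + 1:
        raise ValueError("expected n device/server timings and n + 1 payload sizes")
    best_k, best_latency = 0, float("inf")
    for k in range(n + 1):
        latency = (sum(device_ms[:k])                       # local compute
                   + tx_bytes[k] / bandwidth_bytes_per_ms   # uplink transfer
                   + sum(server_ms[k:]))                    # remote compute
        if latency < best_latency:
            best_k, best_latency = k, latency
    return best_k, best_latency


if __name__ == "__main__":
    # Hypothetical three-layer profile: the first layer shrinks the payload sharply.
    k, latency = best_split(device_ms=[5, 20, 60], server_ms=[1, 2, 3],
                            tx_bytes=[600_000, 50_000, 40_000, 0],
                            bandwidth_bytes_per_ms=1_000)
    print(f"split before layer {k}: {latency:.1f} ms")
```

With these made-up numbers the search runs only the first layer locally (60 ms in total), because that layer shrinks the payload from 600 kB to 50 kB. Re-running the search whenever the measured bandwidth changes is one simple way to adapt the split at runtime.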

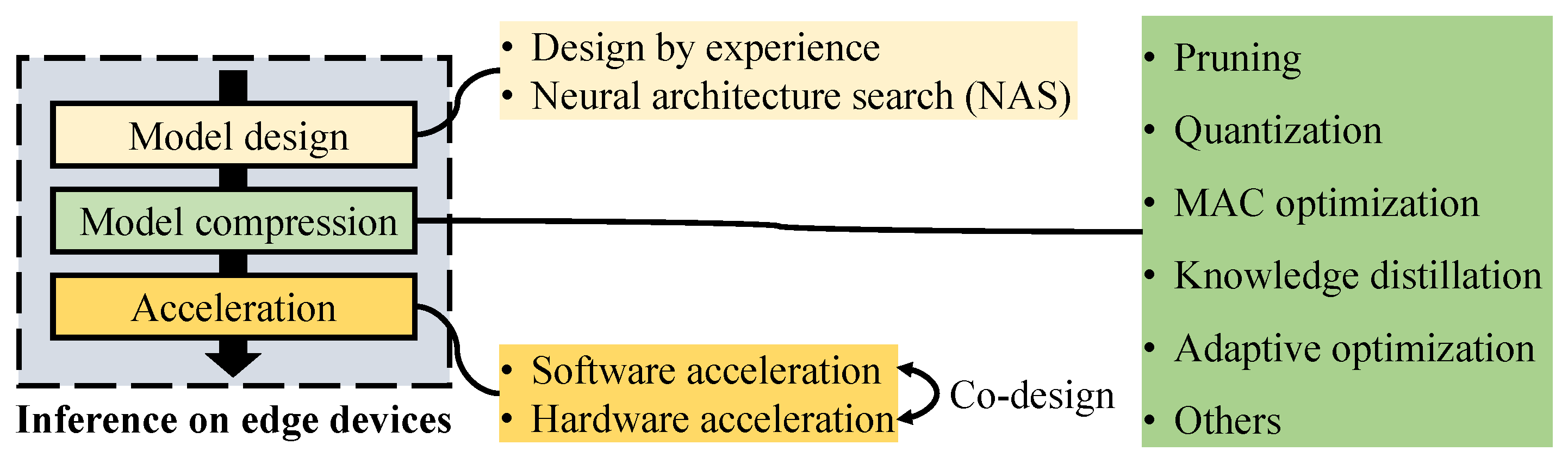

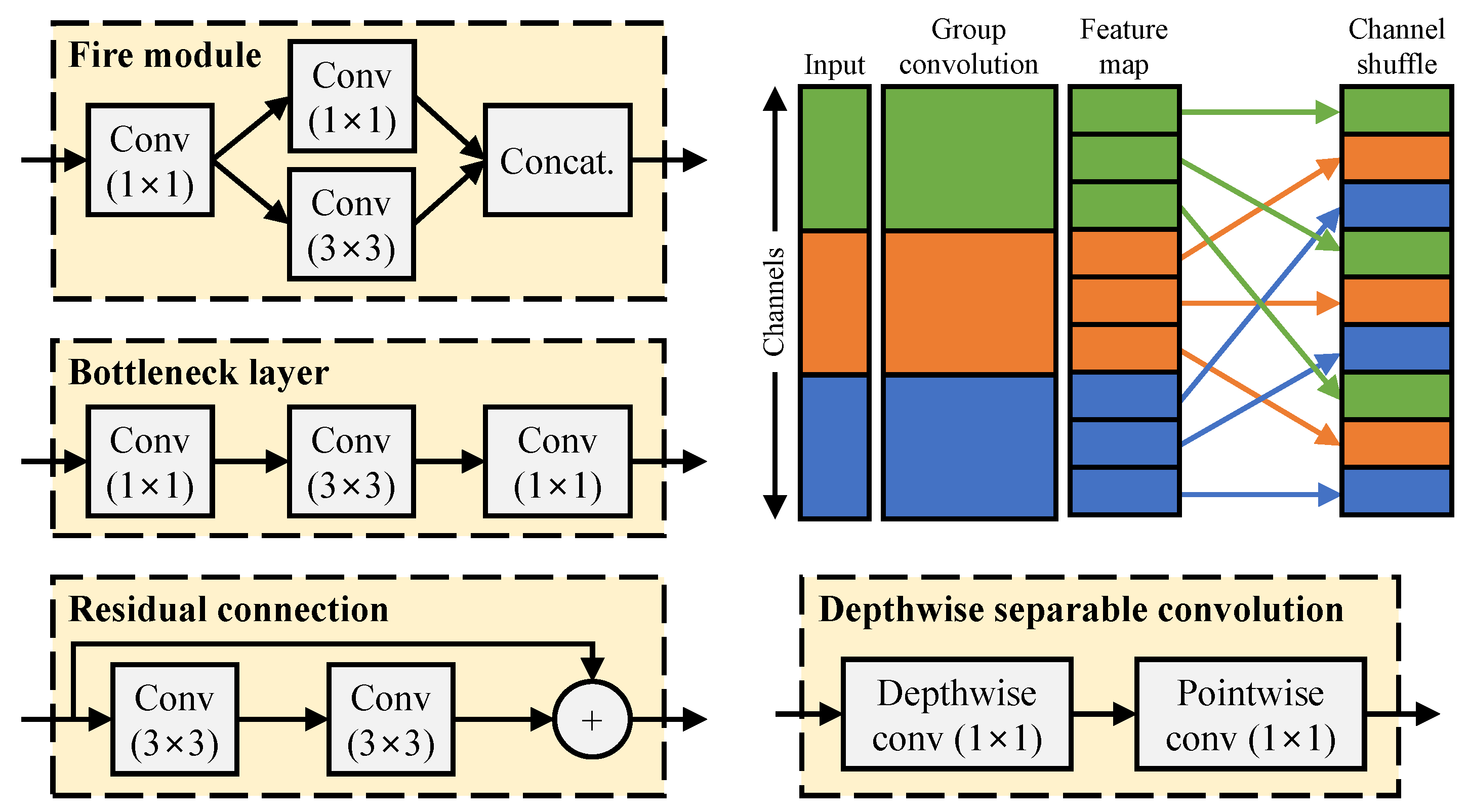

2.1. Model Design for Edge Inference

2.1.1. Design by Experience

2.1.2. Neural Architecture Search

2.2. Model Compression for Edge Inference

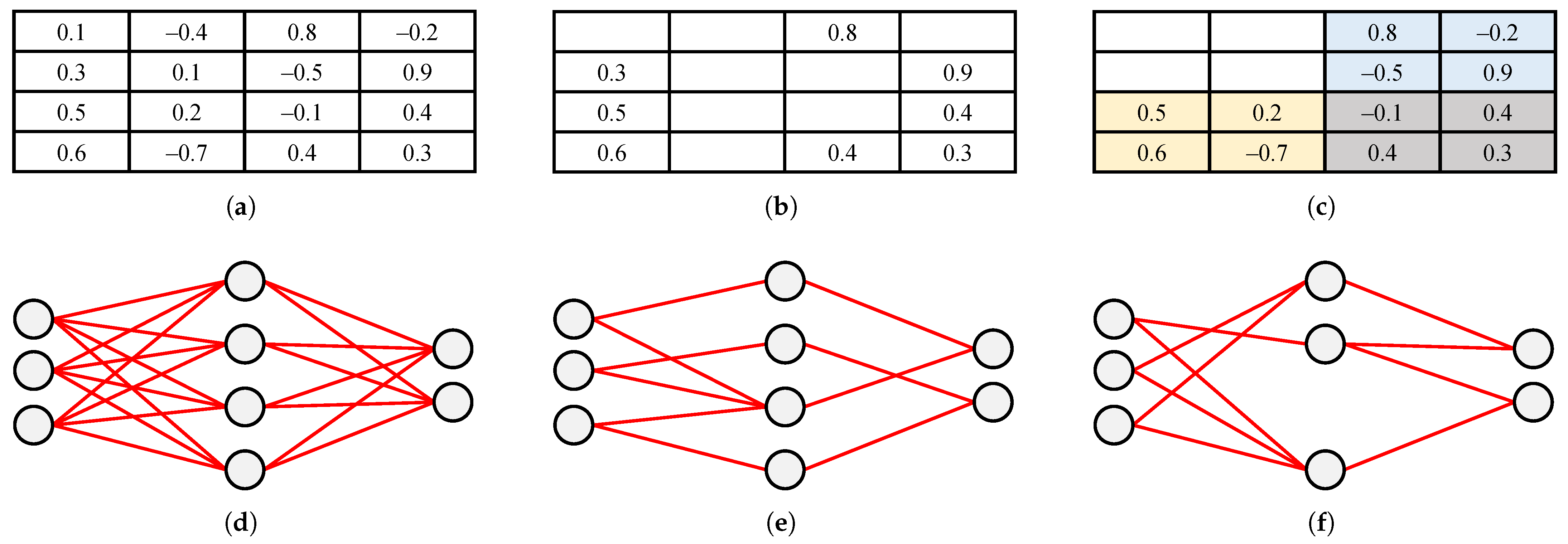

2.2.1. Pruning

2.2.2. Quantization

2.2.3. Multiply-and-Accumulate Optimization

2.2.4. Knowledge Distillation

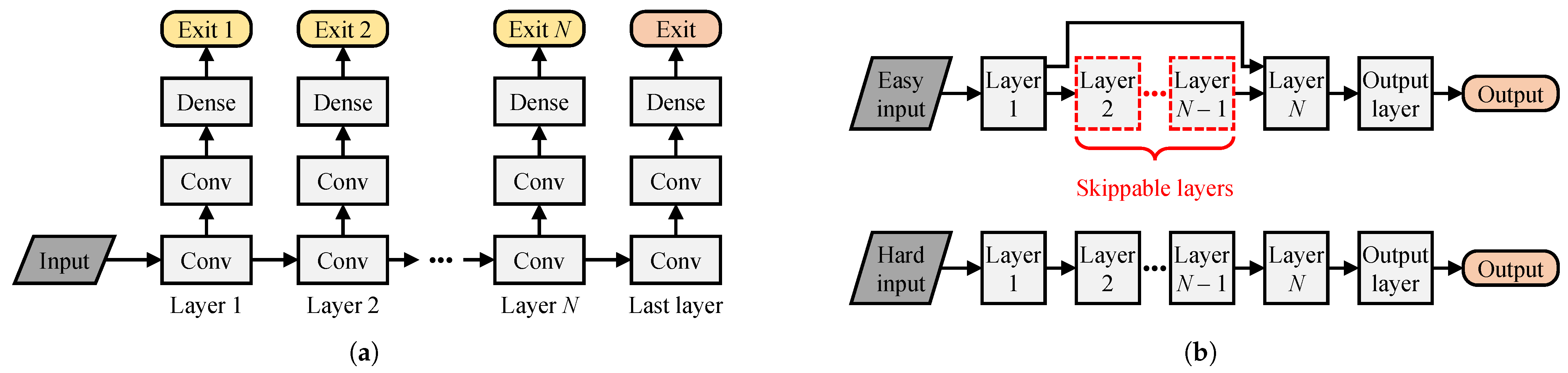

2.2.5. Adaptive Optimization

- Most suitable for dynamic environments: DDNNs [115] offer scalable, hierarchical processing ideal for industrial and IoT scenarios, albeit with increased system complexity.
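Hierarchical schemes of this kind let an input leave the network at an early tier when the local classifier is already confident. The sketch below illustrates such confidence-gated exits; the normalized-entropy criterion, the threshold value, and the function names are assumptions for illustration rather than a reproduction of the DDNN implementation [115].

```python
import math
from typing import Callable, Sequence, Tuple


def normalized_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy of a softmax output divided by log(C), so the result lies in [0, 1]."""
    c = len(probs)
    if c < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(c)


def hierarchical_predict(tiers: Sequence[Callable[[], Sequence[float]]],
                         threshold: float) -> Tuple[int, int]:
    """Run tiers (e.g., device, edge, cloud) in order and stop at the first confident exit.

    Each tier is a zero-argument callable returning class probabilities, so a
    tier is evaluated only when every earlier tier was too uncertain. The last
    tier always answers. Returns (tier_index, predicted_class).
    """
    if not tiers:
        raise ValueError("need at least one tier")
    for i, run_tier in enumerate(tiers):
        probs = run_tier()
        if i == len(tiers) - 1 or normalized_entropy(probs) <= threshold:
            return i, max(range(len(probs)), key=lambda j: probs[j])
    raise AssertionError("unreachable: the last tier always returns")
```

Lowering the threshold sends more inputs to the upper tiers (better accuracy, more communication); raising it keeps more work on the device.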

2.3. Sparsity Handling for Efficient Edge Inference

| Type | Technique | Base Model | Objective | Results | Ref. |
|---|---|---|---|---|---|
| Lossy | Binarization | AlexNet | Speedup, model size ↓ | faster, smaller | [77] |
| | Pruning | AlexNet | Energy ↓ | energy ↓ | [53] |
| | Pruning | VGG-16 | Speedup | faster | [50] |
| | Pruning | NIN | Speedup | faster | [131] |
| | Low-rank approximation | VGG-S | Energy ↓ | energy ↓ | [132] |
| | Low-rank approximation | CNN | Speedup | faster | [133] |
| | Low-rank approximation | CNN | Speedup | faster | [134] |
| | Knowledge distillation | GoogLeNet | Speedup, memory ↓ | faster, memory ↓ | [103] |
| | Memory access optimization | VGG | Power, performance trade-off | accuracy | [135] |
| Lossless | Pruning, quantization, Huffman coding | VGG-16 | Model size ↓ | smaller | [79] |
| | Pruning | VGG | Computation ↓ | computation ↓ | [136] |
| | Pruning | AlexNet | Memory ↓ | memory ↓ | [45] |
| | Compact network design | AlexNet | Model size ↓ | smaller | [17] |
| | Kernel separation, low-rank approximation | AlexNet, VGG | Speedup, memory ↓ | faster, memory ↓ | [137] |
| | Low-rank approximation | VGG-16 | Computation ↓ | computation ↓ | [138] |
| | Knowledge distillation | WideResNet | Memory ↓ | memory ↓ | [139] |
| | Adaptive optimization | MobileNet | Speedup | faster | [140] |
| Enhanced | Quantization | Faster R-CNN | Model size ↓ | smaller | [70] |
| | Pruning | ResNet | Speedup | faster | [141] |
| | Compact network design | SqueezeNet | Model size ↓ | smaller | [142] |
| | Compact network design | YOLOv2 | Speedup, model size ↓ | faster, smaller | [143] |
| | Knowledge distillation | ResNet | Accuracy ↑ | | [144] |
| | Knowledge distillation, regularization | NIN | Memory ↓ | memory ↓ | [145] |
| | Dataflow optimization | DNN | Speedup, energy ↓ | faster, energy ↓ | [146] |

| Model | Weight Count (millions) | Operation Count (billions) |
|---|---|---|
| MobileNetV3-Small [29] | 2.9 | 0.11 |
| MNASNet-Small [33] | 2.0 | 0.14 |
| CondenseNet [21] | 2.9 | 0.55 |
| MobileNetV2 [16] | 3.4 | 0.60 |
| ANTNets [22] | 3.7 | 0.64 |
| NASNet-B [147] | 5.3 | 0.98 |
| MobileNetV1 [14] | 4.2 | 1.15 |
| PNASNet [140] | 5.1 | 1.18 |
| SqueezeNext [19] | 3.2 | 1.42 |
| SqueezeNet [17] | 1.25 | 1.72 |
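The weight and operation counts in the table above can be estimated layer by layer. The rough sketch below compares a standard K × K convolution with the depthwise separable factorization used by the MobileNet family. It assumes stride 1, "same" padding, and no biases, and it counts multiply-accumulate (MAC) operations; some tables report each MAC as two operations. Function names and layer sizes are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ConvCost:
    weights: int  # learnable weights (biases ignored)
    macs: int     # multiply-accumulate operations per inference


def standard_conv(k: int, c_in: int, c_out: int, h: int, w: int) -> ConvCost:
    """K x K convolution producing an h x w x c_out output (stride 1, 'same' padding)."""
    weights = k * k * c_in * c_out
    return ConvCost(weights, weights * h * w)


def depthwise_separable_conv(k: int, c_in: int, c_out: int, h: int, w: int) -> ConvCost:
    """Depthwise K x K filter per input channel followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one K x K filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    weights = depthwise + pointwise
    return ConvCost(weights, weights * h * w)


if __name__ == "__main__":
    std = standard_conv(3, 256, 256, 14, 14)
    sep = depthwise_separable_conv(3, 256, 256, 14, 14)
    print(std)
    print(sep)
    # Analytically, the reduction is 1 / (1/c_out + 1/k^2).
    print(f"MAC reduction: {std.macs / sep.macs:.2f}x")
```

For this example layer the reduction in both weights and MACs is 1/(1/256 + 1/9), roughly 8.7×. This helps explain why depthwise separable blocks are common in compact mobile architectures.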

2.3.1. Hardware Implications: Static Versus Dynamic Sparsity

- Static sparsity: Devices such as Cambricon-X [149] identify zero weights ahead of inference (they are fixed once training and pruning are complete) and store only the nonzero weights alongside their indices, enabling the hardware to skip ineffectual computations. However, these devices often require complex memory indexing, which limits their real-time adaptability.
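The static-sparsity approach can be sketched in two steps: an offline pass that keeps only the nonzero weights and their positions, and a runtime loop in which only those weights trigger a MAC. This minimal sketch stores absolute indices for clarity. It is not Cambricon-X's actual encoding; hardware designs typically use more compact forms such as bitmasks or relative offsets. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def compress_static(weights: Sequence[float]) -> Tuple[List[float], List[int]]:
    """Offline step: keep the nonzero weights and record where they were."""
    values: List[float] = []
    indices: List[int] = []
    for i, w in enumerate(weights):
        if w != 0.0:
            values.append(w)
            indices.append(i)
    return values, indices


def sparse_dot(values: Sequence[float], indices: Sequence[int],
               activations: Sequence[float]) -> float:
    """Runtime step: one MAC per stored weight; the index selects the matching activation."""
    acc = 0.0
    for w, i in zip(values, indices):
        acc += w * activations[i]
    return acc
```

The number of MACs equals the number of stored weights, so the work scales with density rather than with the dense layer size. The price is the indexed, irregular access to `activations`.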

2.3.2. Neuron-Level Sparsity and Selective Execution

2.3.3. Sparse Weight Compression and Memory Efficiency

2.3.4. Conclusions

3. Software Tools and Hardware Platforms for Edge Inference

3.1. Software Tools

3.1.1. Compression–Compiler Co-Design

- Pruning masks or block patterns, such as 2D pruning windows;

- Retained kernel/channel indices for efficient memory access;

- Execution hints that guide instruction selection and tiling strategies.

- CoCo-Gen performs structured pruning and generates pruning-aware IR with metadata on weight masks and instruction patterns. It emits executable code tailored to the micro-architecture of the target device.

- CoCo-Tune accelerates pruning by leveraging the composable IR to identify reusable, sparsity-inducing patterns. It performs coarse-to-fine optimization using building blocks drawn from pre-trained models, significantly reducing retraining cost.
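The metadata listed above can be made concrete with a small pattern-based pruning pass. This minimal NumPy sketch emits the kind of information a pruning-aware compiler consumes: a per-kernel pattern ID, which encodes the mask, and the list of retained kernels. The four-entry pattern library, the energy threshold, and the function name are hypothetical, and the code is not CoCo-Gen's actual algorithm.

```python
from typing import List, Tuple

import numpy as np

# Hypothetical pattern library: each pattern lists the 4 (row, col) positions
# kept in a 3x3 kernel. All patterns keep the center tap.
PATTERNS = [
    ((0, 1), (1, 0), (1, 1), (1, 2)),
    ((1, 0), (1, 1), (1, 2), (2, 1)),
    ((0, 1), (1, 1), (1, 2), (2, 1)),
    ((0, 1), (1, 0), (1, 1), (2, 1)),
]


def pattern_prune(weights: np.ndarray, kernel_threshold: float
                  ) -> Tuple[np.ndarray, np.ndarray, List[Tuple[int, int]]]:
    """weights: (C_out, C_in, 3, 3). Returns pruned weights, pattern IDs, retained kernels."""
    c_out, c_in, kh, kw = weights.shape
    if (kh, kw) != (3, 3):
        raise ValueError("this sketch only handles 3x3 kernels")
    masks = np.zeros((len(PATTERNS), 3, 3), dtype=weights.dtype)
    for p, positions in enumerate(PATTERNS):
        for r, c in positions:
            masks[p, r, c] = 1
    pruned = np.zeros_like(weights)
    pattern_ids = np.full((c_out, c_in), -1, dtype=np.int64)  # -1 marks a removed kernel
    kept: List[Tuple[int, int]] = []
    for o in range(c_out):
        for i in range(c_in):
            kernel = weights[o, i]
            # Choose the pattern that preserves the most squared magnitude.
            energies = [float((np.square(kernel) * m).sum()) for m in masks]
            p = int(np.argmax(energies))
            if energies[p] < kernel_threshold:
                continue  # connectivity pruning: drop the whole kernel
            pruned[o, i] = kernel * masks[p]
            pattern_ids[o, i] = p
            kept.append((o, i))
    return pruned, pattern_ids, kept
```

Because every surviving kernel uses one of a few known shapes, a code generator can emit one specialized inner loop per pattern. It can also iterate only over `kept`, which plays the role of the retained kernel/channel indices.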

3.1.2. Algorithm–Hardware Co-Design

3.1.3. Efficient Parallelism

3.1.4. Memory Optimization

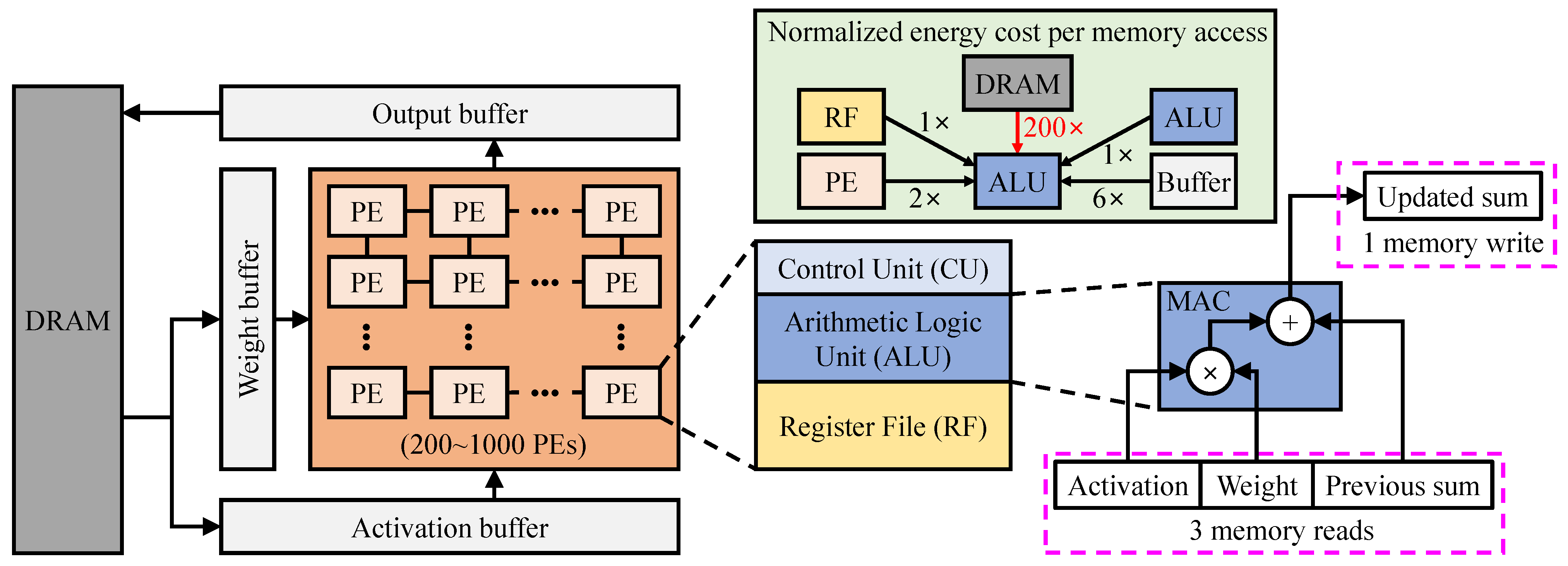

- Off-chip DRAM, which stores the bulk of model parameters and intermediate data;

- On-chip SRAM-based global buffers (GLBs), which cache activations and weights temporarily;

- Register files (RFs) embedded within each PE, providing the fastest and lowest-power memory access.
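Each level of this hierarchy has a very different cost per access, so one common way to compare candidate mappings is to weight their access counts. The minimal sketch below uses illustrative per-access energies normalized to one RF access. The ratios are assumptions chosen only to reflect that DRAM is far more expensive than the GLB, which in turn costs more than an RF; they are not measured values. The access counts are also hypothetical.

```python
from typing import Dict, Mapping

# Assumed energy per access, normalized to one register-file (RF) access.
# Illustrative only: real ratios depend on technology node and memory sizes.
RELATIVE_COST: Dict[str, float] = {"DRAM": 200.0, "GLB": 6.0, "RF": 1.0}


def data_movement_energy(accesses: Mapping[str, int],
                         cost: Mapping[str, float] = RELATIVE_COST) -> float:
    """Return the sum over memory levels of (access count x relative cost)."""
    return sum(count * cost[level] for level, count in accesses.items())


if __name__ == "__main__":
    # Two hypothetical mappings of the same layer with equal total access counts.
    reuse_heavy = {"DRAM": 1_000, "GLB": 10_000, "RF": 100_000}
    dram_heavy = {"DRAM": 5_000, "GLB": 10_000, "RF": 96_000}
    print(data_movement_energy(reuse_heavy), data_movement_energy(dram_heavy))
```

Both mappings perform the same total number of accesses, yet moving 4,000 of them from the RF to DRAM roughly triples the estimated energy. This is why the dataflows below focus on maximizing RF-level reuse.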

- Weight Stationary (WS): This strategy keeps weights fixed in RFs while streaming activations across PEs, significantly reducing weight-fetch energy. WS is well-suited for convolutional layers with high weight reuse. For example, NeuFlow [179] applies WS for efficient vision processing. However, frequent movement of activations and partial sums may still incur moderate energy costs.

- No Local Reuse (NLR): In this scheme, neither inputs nor weights are retained in local RFs; instead, all data are fetched from GLBs. While this simplifies hardware design and supports flexible scheduling, the high frequency of memory access limits its energy efficiency. DianNao [180] follows this approach but mitigates energy consumption by reusing partial sums in local registers.

- Output Stationary (OS): OS retains partial sums within PEs, allowing output activations to accumulate locally. This reduces external data movement and is particularly beneficial for CNNs. ShiDianNao [181] adopts this strategy and embeds its accelerator directly into image sensors, eliminating DRAM access and achieving energy savings over DianNao [180]. OS is especially well-suited for vision-centric edge devices requiring real-time inference.

- Row Stationary (RS): RS maximizes reuse at all levels (inputs, weights, and partial sums) within RFs. By keeping data inside the PEs for as long as possible, it substantially reduces memory traffic. MAERI [182] is a representative architecture that implements RS using flexible interconnects and tree-based data routing. Despite increased hardware complexity, RS offers excellent performance-to-energy trade-offs and is highly scalable across DL models.
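At the loop level, WS and OS are two loop orders over the same computation. The sketch below models a single PE computing a 1D convolution and counts buffer traffic. The counter names and the convention of two buffer accesses (read plus write) per partial-sum update are simplifying assumptions, and NLR and RS are not modeled.

```python
from typing import Dict, List, Sequence, Tuple

Counts = Dict[str, int]


def conv1d_weight_stationary(x: Sequence[float], w: Sequence[float]) -> Tuple[List[float], Counts]:
    """Each weight is fetched once and held in a register; partial sums go back to the buffer."""
    n_out = len(x) - len(w) + 1
    y = [0.0] * n_out
    counts = {"weight_fetch": 0, "input_fetch": 0, "psum_access": 0}
    for k, weight in enumerate(w):
        counts["weight_fetch"] += 1          # load w[k] into the PE register once
        for i in range(n_out):
            counts["input_fetch"] += 1
            counts["psum_access"] += 2       # read and write y[i] in the buffer
            y[i] += weight * x[i + k]
    return y, counts


def conv1d_output_stationary(x: Sequence[float], w: Sequence[float]) -> Tuple[List[float], Counts]:
    """Each partial sum stays in a register until its output is complete."""
    n_out = len(x) - len(w) + 1
    y: List[float] = []
    counts = {"weight_fetch": 0, "input_fetch": 0, "psum_access": 0}
    for i in range(n_out):
        acc = 0.0                            # partial sum held locally
        for k in range(len(w)):
            counts["weight_fetch"] += 1
            counts["input_fetch"] += 1
            acc += w[k] * x[i + k]
        counts["psum_access"] += 1           # single write of the finished output
        y.append(acc)
    return y, counts
```

For a 3-tap filter over 5 inputs, both functions compute the same outputs. WS fetches each weight once but touches partial sums 18 times, whereas OS fetches weights 9 times but writes each output only once, which mirrors the trade-off described above.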

- Run-Length Coding (RLC): This method compresses runs of consecutive zeros, reducing the external bandwidth required for both weights and activations [167].

- Zero-Skipping: This approach skips MAC operations involving zero values, reducing unnecessary memory accesses and saving energy [167].

- Weight Reuse Beyond Zeros: This scheme generalizes sparsity by recognizing and reusing repeated weights, not limited to zeros, further lowering memory access and computation requirements [160].
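RLC and zero-skipping work well together. Once activations are run-length encoded, the run fields tell the datapath how far to advance, so zero operands never reach the multiplier. The minimal sketch below assumes a hypothetical (zero-run, value) pair format whose run field saturates at `max_run`, set to 31 here as a 5-bit field would allow. Real accelerators differ in field widths and packing, and the function names are illustrative.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[int, int]  # (number of zeros preceding the value, value)


def rlc_encode(values: Sequence[int], max_run: int = 31) -> List[Pair]:
    """Run-length code the zeros in `values`; max_run models a saturating run field."""
    pairs: List[Pair] = []
    run = 0
    for v in values:
        if v == 0 and run < max_run:
            run += 1
            continue
        pairs.append((run, v))  # a saturated run is flushed as (max_run, 0)
        run = 0
    if run:
        pairs.append((run - 1, 0))  # trailing zeros: run-1 zeros followed by a literal zero
    return pairs


def rlc_decode(pairs: Sequence[Pair]) -> List[int]:
    """Expand (run, value) pairs back into the dense sequence."""
    out: List[int] = []
    for run, v in pairs:
        out.extend([0] * run)
        out.append(v)
    return out


def zero_skipping_dot(pairs: Sequence[Pair], weights: Sequence[int]) -> Tuple[int, int]:
    """Dot product over RLC-encoded activations; returns (result, MACs actually issued)."""
    acc, macs, pos = 0, 0, 0
    for run, v in pairs:
        pos += run              # jump over zeros without reading their weights
        if v != 0:
            acc += v * weights[pos]
            macs += 1
        pos += 1
    return acc, macs
```

For the activations `[0, 0, 5, 0, 3, 0, 0, 0]`, the encoder produces three pairs instead of eight values, and only two MACs are issued.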

- RS achieves the best overall efficiency by minimizing access at all hierarchy levels, though it introduces higher design complexity. It is preferred in scenarios where flexibility and scalability are needed.

- OS offers a more straightforward design and strong efficiency in vision-centric models, making it highly appropriate for lightweight, task-specific edge devices.

3.1.5. FPGA-Centric Deployment via Deep Learning HDL Toolbox

- AXI4 master interfaces for DDR communication;

- Dedicated convolution and fully connected (FC) processors;

- Activation normalization modules supporting ReLU, max-pooling, and normalization functions;

- Controllers and scheduling logic to coordinate dataflow and computation.

3.1.6. AMD Vitis AI

3.1.7. NVIDIA TensorRT

- Mixed-precision quantization, which transforms FP32 weights and activations to FP16 or INT8, reducing computation time and memory usage.

- Layer and tensor fusion, which merges multiple operations into a single CUDA kernel to cut memory access overhead.

- Kernel auto-tuning, which selects the best-performing kernel implementations for the target GPU, taking into account parameters such as batch size and tensor shapes.

- Dynamic memory allocation, ensuring tensors occupy memory only when needed, minimizing memory usage.

- Multi-stream execution, enabling concurrent inference across multiple data streams for improved throughput.

- Time-step fusion for RNNs, which collapses sequential operations over time into fused kernels to enhance execution parallelism.
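The INT8 path mentioned in the first bullet can be illustrated generically. The sketch below uses symmetric per-tensor scales derived from the maximum absolute value and accumulates in 32-bit integers. It is not TensorRT's implementation: TensorRT's calibrators may choose scales differently, for example through entropy-based calibration. The function names are hypothetical.

```python
import numpy as np


def int8_scale_max(x: np.ndarray) -> float:
    """Symmetric per-tensor scale from the maximum absolute value (max calibration)."""
    amax = float(np.max(np.abs(x)))
    return amax / 127.0 if amax > 0 else 1.0


def quantize_int8(x: np.ndarray, scale: float) -> np.ndarray:
    """Map real values to the symmetric INT8 range [-127, 127]."""
    return np.clip(np.round(x / scale), -127, 127).astype(np.int8)


def int8_matmul(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Approximate a @ b with INT8 operands and INT32 accumulation."""
    sa, sb = int8_scale_max(a), int8_scale_max(b)
    qa, qb = quantize_int8(a, sa), quantize_int8(b, sb)
    acc = qa.astype(np.int32) @ qb.astype(np.int32)  # wide accumulator avoids int8 overflow
    return acc.astype(np.float32) * (sa * sb)         # rescale back to real values


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.standard_normal((8, 16)).astype(np.float32)
    b = rng.standard_normal((16, 4)).astype(np.float32)
    print("max abs error:", np.max(np.abs(int8_matmul(a, b) - a @ b)))
```

Accumulating in INT32 and rescaling once per output is what makes INT8 attractive: the inner loop uses only narrow integer multiplies, and the floating-point work is limited to the final rescale.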

3.1.8. Qualcomm Neural Processing SDK

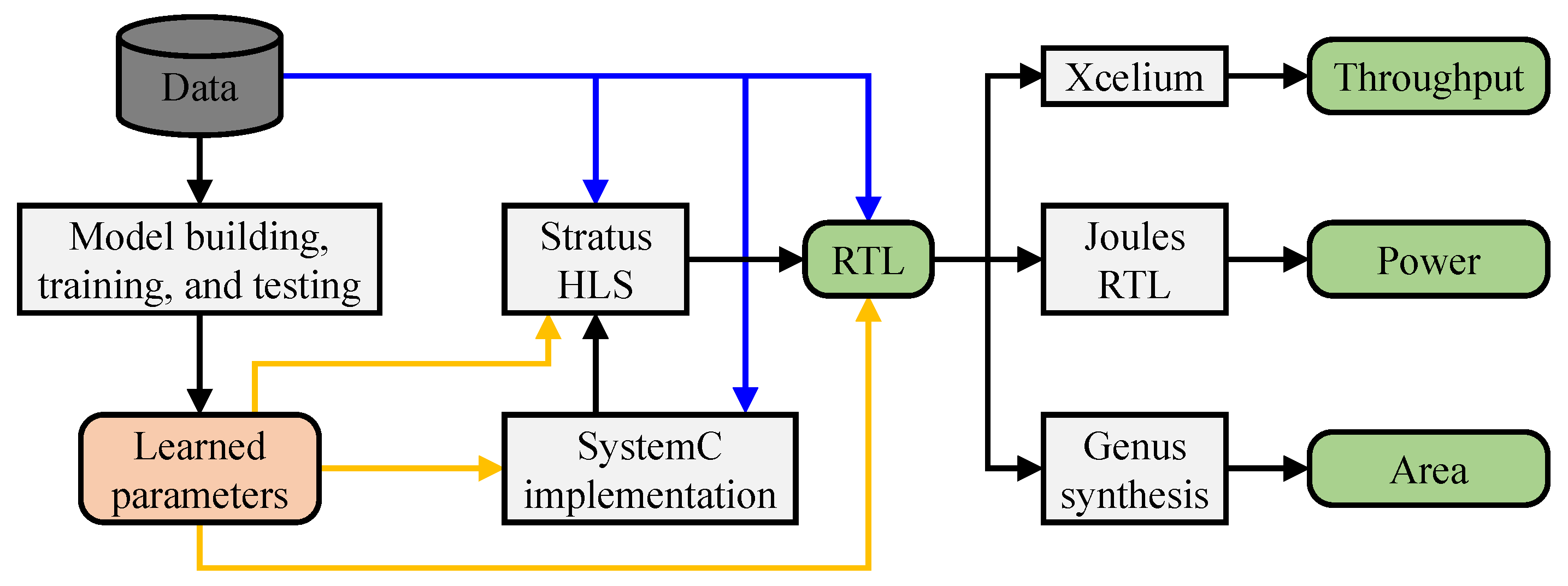

3.1.9. Cadence Stratus HLS

3.2. Hardware Platforms

3.2.1. CPU and GPU

3.2.2. SoC and ASIC

3.2.3. FPGA and Reconfigurable Architectures

3.2.4. Vision Processing Unit

3.2.5. Microcontroller Unit

4. Edge Inference Evaluation Metrics

4.1. Model Size and Memory

4.2. Accuracy

4.3. Area

4.4. Energy Efficiency

4.5. Latency and Throughput

5. Challenges and Opportunities

5.1. Automatic Hardware Mapping

5.2. Cross-Layer Mapping and Runtime Adaptation for Edge Deployment

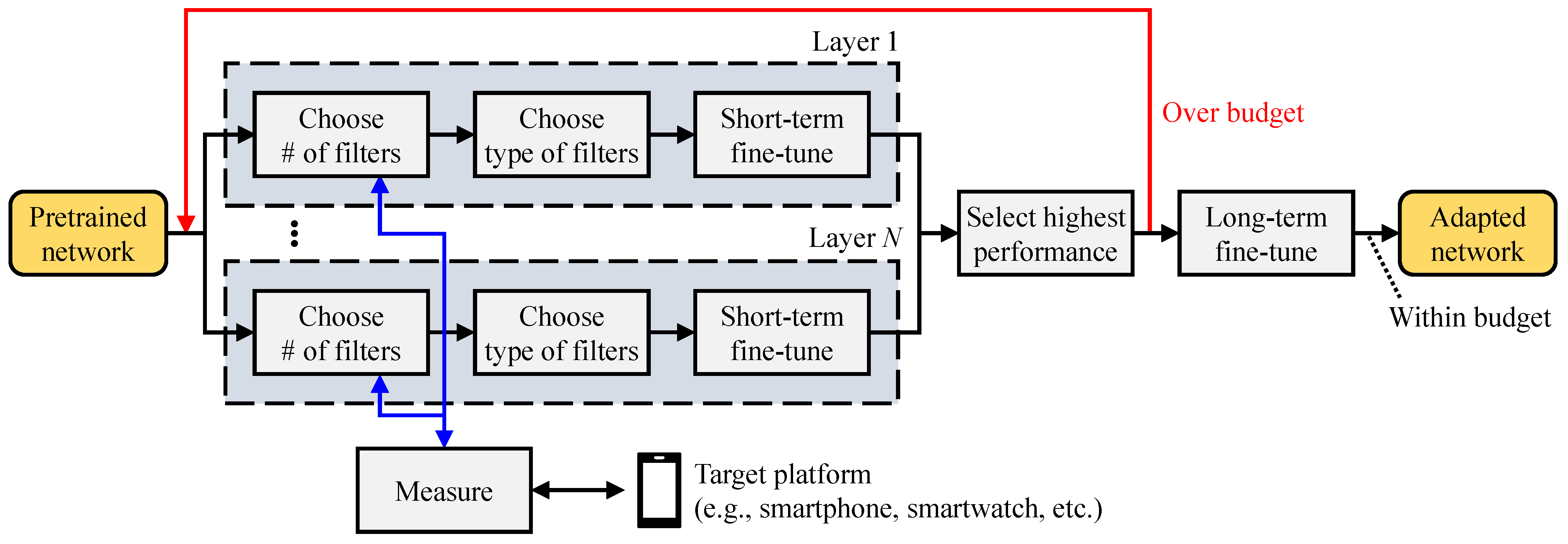

5.3. Automatic and Edge-Aware Compression

5.4. Neural Architecture Search for Edge Inference

5.5. Edge Training

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sze, V.; Chen, Y.H.; Yang, T.J.; Emer, J.S. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc. IEEE 2017, 105, 2295–2329. [Google Scholar] [CrossRef]

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar] [CrossRef]

- Bottou, L. Online Learning and Neural Networks; Cambridge University Press: Cambridge, UK, 1998; Chapter Online Algorithms and Stochastic Approximations; pp. 9–42. [Google Scholar]

- Statista. Number of Licensed Cellular Internet of Things (IoT) Connections Worldwide from 2021 to 2030. Available online: https://www.statista.com/statistics/1403316/global-licensed-cellular-iot-connections/ (accessed on 17 March 2025).

- Pouyanfar, S.; Sadiq, S.; Yan, Y.; Tian, H.; Tao, Y.; Reyes, M.P.; Shyu, M.L.; Chen, S.C.; Iyengar, S.S. A Survey on Deep Learning: Algorithms, Techniques, and Applications. ACM Comput. Surv. 2018, 51, 1–36. [Google Scholar] [CrossRef]

- Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Hasan, M.; Van Essen, B.C.; Awwal, A.A.S.; Asari, V.K. A State-of-the-Art Survey on Deep Learning Theory and Architectures. Electronics 2019, 8, 292. [Google Scholar] [CrossRef]

- Cheng, Y.; Wang, D.; Zhou, P.; Zhang, T. Model Compression and Acceleration for Deep Neural Networks: The Principles, Progress, and Challenges. IEEE Signal Process. Mag. 2018, 35, 126–136. [Google Scholar] [CrossRef]

- Wang, E.; Davis, J.J.; Zhao, R.; Ng, H.C.; Niu, X.; Luk, W.; Cheung, P.Y.K.; Constantinides, G.A. Deep Neural Network Approximation for Custom Hardware: Where We’ve Been, Where We’re Going. ACM Comput. Surv. 2019, 52, 1–39. [Google Scholar] [CrossRef]

- Capra, M.; Bussolino, B.; Marchisio, A.; Shafique, M.; Masera, G.; Martina, M. An Updated Survey of Efficient Hardware Architectures for Accelerating Deep Convolutional Neural Networks. Future Internet 2020, 12, 113. [Google Scholar] [CrossRef]

- Moolchandani, D.; Kumar, A.; Sarangi, S. Accelerating CNN Inference on ASICs: A Survey. J. Syst. Archit. 2021, 113, 101887. [Google Scholar] [CrossRef]

- Shawahna, A.; Sait, S.M.; El-Maleh, A. FPGA-Based Accelerators of Deep Learning Networks for Learning and Classification: A Review. IEEE Access 2019, 7, 7823–7859. [Google Scholar] [CrossRef]

- Mittal, S. A survey of FPGA-based accelerators for convolutional neural networks. Neural Comput. Appl. 2020, 32, 1109–1139. [Google Scholar] [CrossRef]

- Li, M.; Liu, Y.; Liu, X.; Sun, Q.; You, X.; Yang, H.; Luan, Z.; Gan, L.; Yang, G.; Qian, D. The Deep Learning Compiler: A Comprehensive Survey. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 708–727. [Google Scholar] [CrossRef]

- Howard, A.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861. [Google Scholar] [CrossRef]

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807. [Google Scholar] [CrossRef]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar] [CrossRef]

- Iandola, F.; Han, S.; Moskewicz, M.; Ashraf, K.; Dally, W.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Gholami, A.; Kwon, K.; Wu, B.; Tai, Z.; Yue, X.; Jin, P.; Zhao, S.; Keutzer, K. SqueezeNext: Hardware-Aware Neural Network Design. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1719–171909. [Google Scholar] [CrossRef]

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856. [Google Scholar] [CrossRef]

- Huang, G.; Liu, S.; Maaten, L.; Weinberger, K. CondenseNet: An Efficient DenseNet Using Learned Group Convolutions. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2752–2761. [Google Scholar] [CrossRef]

- Xiong, Y.; Kim, H.; Hedau, V. ANTNets: Mobile Convolutional Neural Networks for Resource Efficient Image Classification. arXiv 2019, arXiv:1904.03775. [Google Scholar] [CrossRef]

- Winograd, S. On multiplication of 2 × 2 matrices. Linear Algebra Its Appl. 1971, 4, 381–388. [Google Scholar] [CrossRef]

- Lavin, A.; Gray, S. Fast Algorithms for Convolutional Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4013–4021. [Google Scholar] [CrossRef]

- Meng, L.; Brothers, J. Efficient Winograd Convolution via Integer Arithmetic. arXiv 2019, arXiv:1901.01965. [Google Scholar] [CrossRef]

- Lu, L.; Liang, Y.; Xiao, Q.; Yan, S. Evaluating Fast Algorithms for Convolutional Neural Networks on FPGAs. In Proceedings of the 2017 IEEE 25th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Napa, CA, USA, 30 April–2 May 2017; pp. 101–108. [Google Scholar] [CrossRef]

- Kala, S.; Mathew, J.; Jose, B.R.; Nalesh, S. Evaluating Fast Algorithms for Convolutional Neural Networks on FPGAs. In Proceedings of the 2019 32nd International Conference on VLSI Design and 2019 18th International Conference on Embedded Systems (VLSID), Delhi, India, 5–9 January 2019; pp. 209–214. [Google Scholar] [CrossRef]

- Hardieck, M.; Kumm, M.; Moller, K.; Zipf, P. Reconfigurable Convolutional Kernels for Neural Networks on FPGAs. In Proceedings of the 2019 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Seaside, CA, USA, 24–26 February 2019; pp. 43–52. [Google Scholar] [CrossRef]

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. arXiv 2019, arXiv:1905.02244. [Google Scholar] [CrossRef]

- Yang, T.J.; Howard, A.; Chen, B.; Zhang, X.; Go, A.; Sandler, M.; Sze, V.; Adam, H. NetAdapt: Platform-Aware Neural Network Adaptation for Mobile Applications. In Proceedings of the Computer Vision–ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018; pp. 289–304. [Google Scholar] [CrossRef]

- Smithson, S.; Yang, G.; Gross, W.; Meyer, B. Neural networks designing neural networks: Multi-objective hyper-parameter optimization. In Proceedings of the 35th International Conference on Computer-Aided Design (ICCAD), Austin, TX, USA, 7–10 November 2016; pp. 1–8. [Google Scholar] [CrossRef]

- Zhang, L.; Yang, Y.; Jiang, Y.; Zhu, W.; Liu, Y. Fast Hardware-Aware Neural Architecture Search. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 2959–2967. [Google Scholar] [CrossRef]

- Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q. MnasNet: Platform-Aware Neural Architecture Search for Mobile. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2815–2823. [Google Scholar] [CrossRef]

- Wu, B.; Dai, X.; Zhang, P.; Wang, Y.; Sun, F.; Wu, Y.; Tian, Y.; Vajda, P.; Jia, Y.; Keutzer, K. FBNet: Hardware-Aware Efficient ConvNet Design via Differentiable Neural Architecture Search. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10726–10734. [Google Scholar] [CrossRef]

- Cai, H.; Zhu, L.; Han, S. ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware. In Proceedings of the 7th International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019. [Google Scholar]

- Sinha, N.; Shabayek, A.; Kacem, A.; Rostami, P.; Shneider, C.; Aouada, D. Hardware Aware Evolutionary Neural Architecture Search using Representation Similarity Metric. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; pp. 2616–2625. [Google Scholar] [CrossRef]

- Cai, H.; Gan, C.; Wang, T.; Zhang, Z.; Han, S. Once-for-All: Train One Network and Specialize it for Efficient Deployment. In Proceedings of the 8th International Conference on Learning Representations (ICLR), Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar]

- Dai, X.; Yin, H.; Jha, N. NeST: A Neural Network Synthesis Tool Based on a Grow-and-Prune Paradigm. IEEE Trans. Comput. 2019, 68, 1487–1497. [Google Scholar] [CrossRef]

- Cao, S.; Zhang, C.; Yao, Z.; Xiao, W.; Nie, L.; Zhan, D.; Liu, Y.; Wu, M.; Zhang, L. Efficient and Effective Sparse LSTM on FPGA with Bank-Balanced Sparsity. In Proceedings of the 2019 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Seaside, CA, USA, 24–26 February 2019; pp. 63–72. [Google Scholar] [CrossRef]

- Zhu, M.; Zhang, T.; Gu, Z.; Xie, Y. Sparse Tensor Core: Algorithm and Hardware Co-Design for Vector-wise Sparse Neural Networks on Modern GPUs. In Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture, Columbus, OH, USA, 12–16 October 2019; pp. 359–371. [Google Scholar] [CrossRef]

- Wen, W.; Wu, C.; Wang, Y.; Chen, Y.; Li, H. Learning structured sparsity in deep neural networks. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 2082–2090. [Google Scholar]

- Mao, H.; Han, S.; Pool, J.; Li, W.; Liu, X.; Wang, Y.; Dally, W.J. Exploring the Granularity of Sparsity in Convolutional Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1927–1934. [Google Scholar] [CrossRef]

- Huang, Q.; Zhou, K.; You, S.; Neumann, U. Learning to Prune Filters in Convolutional Neural Networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 709–718. [Google Scholar] [CrossRef]

- Yu, J.; Lukefahr, A.; Palframan, D.; Dasika, G.; Das, R.; Mahlke, S. Scalpel: Customizing DNN pruning to the underlying hardware parallelism. In Proceedings of the 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; pp. 548–560. [Google Scholar] [CrossRef]

- Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both weights and connections for efficient neural networks. In Proceedings of the 29th International Conference on Neural Information Processing Systems-Volume 1, Montreal, QC, Canada, 7–12 December 2015; pp. 1135–1143. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar] [CrossRef]

- Jelcicova, Z.; Verhelst, M. Delta Keyword Transformer: Bringing Transformers to the Edge through Dynamically Pruned Multi-Head Self-Attention. arXiv 2022, arXiv:2204.03479. [Google Scholar] [CrossRef]

- Huang, S.; Liu, N.; Liang, Y.; Peng, H.; Li, H.; Xu, D.; Xie, M.; Ding, C. An Automatic and Efficient BERT Pruning for Edge AI Systems. In Proceedings of the 23rd International Symposium on Quality Electronic Design (ISQED), Santa Clara, CA, USA, 6–7 April 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Manessi, F.; Rozza, A.; Bianco, S.; Napoletano, P.; Schettini, R. Automated Pruning for Deep Neural Network Compression. In Proceedings of the 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 657–664. [Google Scholar] [CrossRef]

- Molchanov, P.; Tyree, S.; Karras, T.; Aila, T.; Kautz, J. Pruning Convolutional Neural Networks for Resource Efficient Inference. arXiv 2017, arXiv:1611.06440. [Google Scholar] [CrossRef]

- You, Z.; Yan, K.; Ye, J.; Ma, M.; Wang, P. Gate decorator: Global filter pruning method for accelerating deep convolutional neural networks. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 2133–2144. [Google Scholar]

- Wang, H.; Zhang, Q.; Wang, Y.; Yu, L.; Hu, H. Structured Pruning for Efficient ConvNets via Incremental Regularization. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8. [Google Scholar] [CrossRef]

- Yang, T.J.; Chen, Y.H.; Sze, V. Designing Energy-Efficient Convolutional Neural Networks Using Energy-Aware Pruning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6071–6079. [Google Scholar] [CrossRef]

- Wong, T.T. Performance evaluation of classification algorithms by k-fold and leave-one-out cross validation. Pattern Recognit. 2015, 48, 2839–2846. [Google Scholar] [CrossRef]

- Struharik, R.; Vukobratovic, B.; Erdeljan, A.; Rakanovic, D. CoNNa–Hardware accelerator for compressed convolutional neural networks. Microprocess. Microsystems 2020, 73, 102991. [Google Scholar] [CrossRef]

- Nurvitadhi, E.; Venkatesh, G.; Sim, J.; Marr, D.; Huang, R.; Hock, J.; Liew, Y.; Srivatsan, K.; Moss, D.; Subhaschandra, S.; et al. Can FPGAs Beat GPUs in Accelerating Next-Generation Deep Neural Networks? In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017; pp. 5–14. [Google Scholar] [CrossRef]

- Shen, Y.; Ferdman, M.; Milder, P. Escher: A CNN Accelerator with Flexible Buffering to Minimize Off-Chip Transfer. In Proceedings of the 2017 IEEE 25th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Napa, CA, USA, 30 April–2 May 2017; pp. 93–100. [Google Scholar] [CrossRef]

- Han, S.; Liu, X.; Mao, H.; Pu, J.; Pedram, A.; Horowitz, M.A.; Dally, W.J. SCNN: An accelerator for compressed-sparse convolutional neural networks. In Proceedings of the 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; pp. 27–40. [Google Scholar] [CrossRef]

- Chen, Y.H.; Yang, T.J.; Emer, J.; Sze, V. Eyeriss v2: A Flexible Accelerator for Emerging Deep Neural Networks on Mobile Devices. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 292–308. [Google Scholar] [CrossRef]

- Zhou, X.; Du, Z.; Guo, Q.; Liu, S.; Liu, C.; Wang, C.; Zhou, X.; Li, L.; Chen, T.; Chen, Y. Cambricon-S: Addressing Irregularity in Sparse Neural Networks through A Cooperative Software/Hardware Approach. In Proceedings of the 51st Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Fukuoka, Japan, 20–24 October 2018; pp. 15–28. [Google Scholar] [CrossRef]

- NVIDIA. TensorRT Documentation. Available online: https://docs.nvidia.com/deeplearning/tensorrt/latest/index.html (accessed on 15 April 2025).

- Lai, L.; Suda, N.; Chandra, V. CMSIS-NN: Efficient Neural Network Kernels for Arm Cortex-M CPUs. arXiv 2018, arXiv:1801.06601. [Google Scholar] [CrossRef]

- NVIDIA. cuSPARSE. Available online: https://docs.nvidia.com/cuda/cusparse/ (accessed on 9 May 2025).

- Micikevicius, P.; Narang, S.; Alben, J.; Diamos, G.; Else, E.; Garcia, D.; Ginsburg, B.; Houston, M.; Kuchaiev, O.; Venkatesh, G.; et al. Mixed Precision Training. arXiv 2018, arXiv:1710.03740. [Google Scholar] [CrossRef]

- Wang, N.; Choi, J.; Brand, D.; Chen, C.Y.; Gopalakrishnan, K. Training deep neural networks with 8-bit floating point numbers. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 7686–7695. [Google Scholar]

- Armeniakos, G.; Zervakis, G.; Soudris, D.; Henkel, J. Hardware Approximate Techniques for Deep Neural Network Accelerators: A Survey. ACM Comput. Surv. 2022, 55, 1–36. [Google Scholar] [CrossRef]

- Horowitz, M. Computing’s energy problem (and what we can do about it). In Proceedings of the 2014 IEEE International Solid-State Circuits Conference Digest of Technical Papers (ISSCC), San Francisco, CA, USA, 9–13 February 2014; pp. 10–14. [Google Scholar] [CrossRef]

- Moons, B.; Verhelst, M. A 0.3–2.6 TOPS/W precision-scalable processor for real-time large-scale ConvNets. In Proceedings of the 2016 IEEE Symposium on VLSI Circuits (VLSI-Circuits), Honolulu, HI, USA, 15–17 June 2016; pp. 1–2. [Google Scholar] [CrossRef]

- Vanhoucke, V.; Senior, A.; Mao, M. Improving the speed of neural networks on CPUs. In Proceedings of the Deep Learning and Unsupervised Feature Learning NIPS Workshop, Granada, Spain, 12–17 December 2011; p. 4. [Google Scholar]

- Nasution, M.A.; Chahyati, D.; Fanany, M.I. Faster R-CNN with structured sparsity learning and Ristretto for mobile environment. In Proceedings of the 2017 International Conference on Advanced Computer Science and Information Systems (ICACSIS), Bali, Indonesia, 28–29 October 2017; pp. 309–314. [Google Scholar] [CrossRef]

- Gysel, P.; Motamedi, M.; Ghiasi, S. Hardware-oriented Approximation of Convolutional Neural Networks. arXiv 2016, arXiv:1604.03168. [Google Scholar] [CrossRef]

- Shang, Y.; Liu, G.; Kompella, R.R.; Yan, Y. Enhancing Post-training Quantization Calibration through Contrastive Learning. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 15921–15930. [Google Scholar] [CrossRef]

- Shen, H.; Mellempudi, N.; He, X.; Gao, Q.; Wang, C.; Wang, M. Efficient Post-training Quantization with FP8 Formats. In Proceedings of the 7th Machine Learning and Systems (MLSys), Santa Clara, CA, USA, 13–16 May 2024; pp. 483–498. [Google Scholar]

- Judd, P.; Albericio, J.; Moshovos, A. Stripes: Bit-Serial Deep Neural Network Computing. IEEE Comput. Archit. Lett. 2017, 16, 80–83. [Google Scholar] [CrossRef]

- Sharma, H.; Park, J.; Suda, N.; Lai, L.; Chau, B.; Kim, J.K.; Chandra, V.; Esmaeilzadeh, H. Bit Fusion: Bit-Level Dynamically Composable Architecture for Accelerating Deep Neural Network. In Proceedings of the 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 1–6 June 2018; pp. 764–775. [Google Scholar] [CrossRef]

- Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized Neural Networks: Training Deep Neural Networks with Weights and Activations Constrained to +1 or -1. arXiv 2016, arXiv:1602.02830. [Google Scholar] [CrossRef]

- Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks. In Proceedings of the Computer Vision–ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; pp. 525–542.

- Zhou, A.; Yao, A.; Guo, Y.; Xu, L.; Chen, Y. Incremental Network Quantization: Towards Lossless CNNs with Low-Precision Weights. arXiv 2017, arXiv:1702.03044.

- Han, S.; Mao, H.; Dally, W. Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. arXiv 2016, arXiv:1510.00149.

- Lee, J.; Kim, C.; Kang, S.; Shin, D.; Kim, S.; Yoo, H.J. UNPU: A 50.6TOPS/W unified deep neural network accelerator with 1b-to-16b fully-variable weight bit-precision. In Proceedings of the 2018 IEEE International Solid-State Circuits Conference-(ISSCC), San Francisco, CA, USA, 11–15 February 2018; pp. 218–220.

- Sharify, S.; Lascorz, A.D.; Siu, K.; Judd, P.; Moshovos, A. Loom: Exploiting Weight and Activation Precisions to Accelerate Convolutional Neural Networks. In Proceedings of the 2018 55th ACM/ESDA/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 24–28 June 2018; pp. 1–6.

- Ryu, S.; Kim, H.; Yi, W.; Kim, J.J. BitBlade: Area and Energy-Efficient Precision-Scalable Neural Network Accelerator with Bitwise Summation. In Proceedings of the 2019 56th ACM/IEEE Design Automation Conference (DAC), Las Vegas, NV, USA, 2–6 June 2019; pp. 1–6.

- Courbariaux, M.; Bengio, Y.; David, J.P. BinaryConnect: Training deep neural networks with binary weights during propagations. In Proceedings of the 29th International Conference on Neural Information Processing Systems-Volume 2, Montreal, QC, Canada, 7–12 December 2015; pp. 3123–3131.

- Liu, B.; Li, F.; Wang, X.; Zhang, B.; Yan, J. Ternary Weight Networks. In Proceedings of the 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Zhu, C.; Han, S.; Mao, H.; Dally, W. Trained Ternary Quantization. arXiv 2017, arXiv:1612.01064.

- Zhou, S.; Wu, Y.; Ni, Z.; Zhou, X.; Wen, H.; Zou, Y. DoReFa-Net: Training Low Bitwidth Convolutional Neural Networks with Low Bitwidth Gradients. arXiv 2018, arXiv:1606.06160.

- Hubara, I.; Courbariaux, M.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Quantized neural networks: Training neural networks with low precision weights and activations. J. Mach. Learn. Res. 2017, 18, 6869–6898.

- Cai, Z.; He, X.; Sun, J.; Vasconcelos, N. Deep Learning with Low Precision by Half-Wave Gaussian Quantization. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5406–5414.

- Xiao, G.; Lin, J.; Seznec, M.; Wu, H.; Demouth, J.; Han, S. SmoothQuant: Accurate and efficient post-training quantization for large language models. In Proceedings of the 40th International Conference on Machine Learning (ICML), Honolulu, HI, USA, 23–29 July 2023; pp. 38087–38099.

- Liu, J.; Niu, L.; Yuan, Z.; Yang, D.; Wang, X.; Liu, W. PD-Quant: Post-Training Quantization Based on Prediction Difference Metric. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–23 June 2023; pp. 24427–24437.

- Bie, A.; Venkitesh, B.; Monteiro, J.; Haidar, M.; Rezagholizadeh, M. A Simplified Fully Quantized Transformer for End-to-end Speech Recognition. arXiv 2020, arXiv:1911.03604.

- Li, Z.; Gu, Q. I-ViT: Integer-only Quantization for Efficient Vision Transformer Inference. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 17019–17029.

- Shen, L.; Edalati, A.; Meyer, B.; Gross, W.; Clark, J. Robustness to distribution shifts of compressed networks for edge devices. arXiv 2024, arXiv:2401.12014.

- Yang, Y.; Huang, Q.; Wu, B.; Zhang, T.; Ma, L.; Gambardella, G.; Blott, M.; Lavagno, L.; Vissers, K.; Wawrzynek, J.; et al. Synetgy: Algorithm-hardware Co-design for ConvNet Accelerators on Embedded FPGAs. In Proceedings of the 2019 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Seaside, CA, USA, 24–26 February 2019; pp. 23–32.

- Baluja, S.; Marwood, D.; Covell, M.; Johnston, N. No Multiplication? No Floating Point? No Problem! Training Networks for Efficient Inference. arXiv 2018, arXiv:1809.09244.

- Sarwar, S.S.; Venkataramani, S.; Raghunathan, A.; Roy, K. Multiplier-less Artificial Neurons exploiting error resiliency for energy-efficient neural computing. In Proceedings of the 2016 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 14–18 March 2016; pp. 145–150.

- Wang, L.; Yoon, K.J. Knowledge Distillation and Student-Teacher Learning for Visual Intelligence: A Review and New Outlooks. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3048–3068.

- Bucilua, C.; Caruana, R.; Niculescu-Mizil, A. Model compression. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006; pp. 535–541.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

- Heo, B.; Lee, M.; Yun, S.; Choi, J. Knowledge transfer via distillation of activation boundaries formed by hidden neurons. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 3779–3787.

- Huang, Z.; Wang, N. Like What You Like: Knowledge Distill via Neuron Selectivity Transfer. arXiv 2017, arXiv:1707.01219.

- Romero, A.; Ballas, N.; Kahou, S.; Chassang, A.; Gatta, C.; Bengio, Y. FitNets: Hints for Thin Deep Nets. arXiv 2015, arXiv:1412.6550.

- Li, D.; Wang, X.; Kong, D. DeepRebirth: Accelerating deep neural network execution on mobile devices. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 2322–2330.

- Zhou, G.; Fan, Y.; Cui, R.; Bian, W.; Zhu, X.; Gai, K. Rocket launching: A universal and efficient framework for training well-performing light net. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 4580–4587.

- Jiao, X.; Yin, Y.; Shang, L.; Jiang, X.; Chen, X.; Li, L.; Wang, F.; Liu, Q. TinyBERT: Distilling BERT for Natural Language Understanding. arXiv 2020, arXiv:1909.10351.

- Chaulwar, A.; Malik, L.; Krajewski, M.; Reichel, F.; Lundbæk, L.N.; Huth, M.; Matejczyk, B. Extreme compression of sentence-transformer ranker models: Faster inference, longer battery life, and less storage on edge devices. arXiv 2022, arXiv:2207.12852.

- Zhang, Y.; Xiang, T.; Hospedales, T.; Lu, H. Deep Mutual Learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4320–4328.

- Mirzadeh, S.I.; Farajtabar, M.; Li, A.; Levine, N.; Matsukawa, A.; Ghasemzadeh, H. Improved Knowledge Distillation via Teacher Assistant. arXiv 2019, arXiv:1902.03393.

- Yuan, L.; Tay, F.; Li, G.; Wang, T.; Feng, J. Revisiting Knowledge Distillation via Label Smoothing Regularization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3902–3910.

- Wang, T.; Zhu, J.Y.; Torralba, A.; Efros, A. Dataset Distillation. arXiv 2020, arXiv:1811.10959.

- Bohdal, O.; Yang, Y.; Hospedales, T. Flexible Dataset Distillation: Learn Labels Instead of Images. arXiv 2020, arXiv:2006.08572.

- Kang, D.; Emmons, J.; Abuzaid, F.; Bailis, P.; Zaharia, M. NoScope: Optimizing Neural Network Queries over Video at Scale. Proc. VLDB Endow. 2017, 10, 1586–1597.

- Park, E.; Kim, D.; Kim, S.; Kim, Y.D.; Kim, G.; Yoon, S.; Yoo, S. Big/little deep neural network for ultra low power inference. In Proceedings of the 10th International Conference on Hardware/Software Codesign and System Synthesis, Amsterdam, The Netherlands, 4–9 October 2015; pp. 124–132.

- Teerapittayanon, S.; McDanel, B.; Kung, H. BranchyNet: Fast inference via early exiting from deep neural networks. In Proceedings of the 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 2464–2469.

- Teerapittayanon, S.; McDanel, B.; Kung, H. Distributed Deep Neural Networks Over the Cloud, the Edge and End Devices. In Proceedings of the 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 328–339.

- Li, L.; Ota, K.; Dong, M. Deep Learning for Smart Industry: Efficient Manufacture Inspection System with Fog Computing. IEEE Trans. Ind. Inform. 2018, 14, 4665–4673.

- Wang, X.; Yu, F.; Dou, Z.Y.; Darrell, T.; Gonzalez, J. SkipNet: Learning Dynamic Routing in Convolutional Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 409–424.

- Moons, B.; Uytterhoeven, R.; Dehaene, W.; Verhelst, M. 14.5 Envision: A 0.26-to-10TOPS/W subword-parallel dynamic-voltage-accuracy-frequency-scalable Convolutional Neural Network processor in 28nm FDSOI. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 246–247.

- Parsa, M.; Panda, P.; Sen, S.; Roy, K. Staged Inference using Conditional Deep Learning for energy efficient real-time smart diagnosis. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017; pp. 78–81.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2020, arXiv:1905.11946.

- Elsen, E.; Dukhan, M.; Gale, T.; Simonyan, K. Fast Sparse ConvNets. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 14617–14626.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

- Gale, T.; Elsen, E.; Hooker, S. The State of Sparsity in Deep Neural Networks. arXiv 2019, arXiv:1902.09574.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. HuggingFace’s Transformers: State-of-the-art Natural Language Processing. arXiv 2020, arXiv:1910.03771.

- Wang, H.; Zhang, Z.; Han, S. SpAtten: Efficient Sparse Attention Architecture with Cascade Token and Head Pruning. In Proceedings of the 2021 IEEE International Symposium on High-Performance Computer Architecture (HPCA), Seoul, Republic of Korea, 27 February–3 March 2021; pp. 97–110.

- Han, S.; Kang, J.; Mao, H.; Hu, Y.; Li, X.; Li, Y.; Xie, D.; Luo, H.; Yao, S.; Wang, Y.; et al. ESE: Efficient Speech Recognition Engine with Sparse LSTM on FPGA. In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017; pp. 75–84.

- Zhu, M.; Gupta, S. To prune, or not to prune: Exploring the efficacy of pruning for model compression. arXiv 2017, arXiv:1710.01878.

- Gupta, U.; Reagen, B.; Pentecost, L.; Donato, M.; Tambe, T.; Rush, A.; Wei, G.; Brooks, D. MASR: A Modular Accelerator for Sparse RNNs. In Proceedings of the International Conference on Parallel Architectures and Compilation Techniques, Seattle, WA, USA, 23–26 September 2019; pp. 1–14.

- Yang, Q.; Mao, J.; Wang, Z.; Li, H. DASNet: Dynamic Activation Sparsity for Neural Network Efficiency Improvement. In Proceedings of the 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 4–6 November 2019; pp. 1401–1405.

- Guo, J.; Potkonjak, M. Pruning Filters and Classes: Towards On-Device Customization of Convolutional Neural Networks. In Proceedings of the 1st International Workshop on Deep Learning for Mobile Systems and Applications, Niagara Falls, NY, USA, 23 June 2017; pp. 13–17.

- Kim, Y.D.; Park, E.; Yoo, S.; Choi, T.; Yang, L.; Shin, D. Compression of Deep Convolutional Neural Networks for Fast and Low Power Mobile Applications. arXiv 2016, arXiv:1511.06530.

- Denton, E.; Zaremba, W.; Bruna, J.; LeCun, Y.; Fergus, R. Exploiting linear structure within convolutional networks for efficient evaluation. In Proceedings of the 28th International Conference on Neural Information Processing Systems-Volume 1, Montreal, QC, Canada, 8–13 December 2014; pp. 1269–1277.

- Jaderberg, M.; Vedaldi, A.; Zisserman, A. Speeding up Convolutional Neural Networks with Low Rank Expansions. arXiv 2014, arXiv:1405.3866.

- Han, S.; Shen, H.; Philipose, M.; Agarwal, S.; Wolman, A.; Krishnamurthy, A. MCDNN: An Approximation-Based Execution Framework for Deep Stream Processing Under Resource Constraints. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services, Singapore, 26–30 June 2016; pp. 123–136.

- Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning Efficient Convolutional Networks through Network Slimming. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2755–2763.

- Bhattacharya, S.; Lane, N. Sparsification and Separation of Deep Learning Layers for Constrained Resource Inference on Wearables. In Proceedings of the 14th ACM Conference on Embedded Network Sensor Systems CD-ROM, Stanford, CA, USA, 14–16 November 2016; pp. 176–189.

- Maji, P.; Bates, D.; Chadwick, A.; Mullins, R. ADaPT: Optimizing CNN inference on IoT and mobile devices using approximately separable 1-D kernels. In Proceedings of the 1st International Conference on Internet of Things and Machine Learning, Liverpool, UK, 17–18 October 2017; pp. 1–12.

- Crowley, E.; Gray, G.; Storkey, A. Moonshine: Distilling with Cheap Convolutions. In Proceedings of the Advances in Neural Information Processing Systems 31 (NeurIPS 2018), Montreal, QC, Canada, 2–8 December 2018.

- Liu, C.; Zoph, B.; Neumann, M.; Shlens, J.; Hua, W.; Li, L.J.; Li, F.F.; Yuille, A.; Huang, J.; Murphy, K. Progressive Neural Architecture Search. In Proceedings of the Computer Vision–ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018; pp. 19–35.

- Gordon, A.; Eban, E.; Nachum, O.; Chen, B.; Wu, H.; Yang, T.J.; Choi, E. MorphNet: Fast & Simple Resource-Constrained Structure Learning of Deep Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1586–1595.

- Shafiee, M.; Li, F.; Chwyl, B.; Wong, A. SquishedNets: Squishing SqueezeNet further for edge device scenarios via deep evolutionary synthesis. arXiv 2017, arXiv:1711.07459.

- Wong, A.; Famuori, M.; Shafiee, M.; Li, F.; Chwyl, B.; Chung, J. YOLO Nano: A Highly Compact You Only Look Once Convolutional Neural Network for Object Detection. In Proceedings of the 2019 Fifth Workshop on Energy Efficient Machine Learning and Cognitive Computing-NeurIPS Edition (EMC2-NIPS), Vancouver, BC, Canada, 13 December 2019; pp. 22–25.

- Zagoruyko, S.; Komodakis, N. Paying More Attention to Attention: Improving the Performance of Convolutional Neural Networks via Attention Transfer. arXiv 2017, arXiv:1612.03928.

- Sau, B.; Balasubramanian, V. Deep Model Compression: Distilling Knowledge from Noisy Teachers. arXiv 2016, arXiv:1610.09650.

- Tsung, P.K.; Tsai, S.F.; Pai, A.; Lai, S.J.; Lu, C. High performance deep neural network on low cost mobile GPU. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 7–11 January 2016; pp. 69–70.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q. Learning Transferable Architectures for Scalable Image Recognition. arXiv 2018, arXiv:1707.07012.

- Lee, J.; Lee, J.; Han, D.; Lee, J.; Park, G.; Yoo, H.J. 7.7 LNPU: A 25.3TFLOPS/W Sparse Deep-Neural-Network Learning Processor with Fine-Grained Mixed Precision of FP8-FP16. In Proceedings of the 2019 IEEE International Solid-State Circuits Conference-(ISSCC), San Francisco, CA, USA, 17–21 February 2019; pp. 142–144.

- Zhang, S.; Du, Z.; Zhang, L.; Lan, H.; Liu, S.; Li, L.; Guo, Q.; Chen, T.; Chen, Y. Cambricon-X: An accelerator for sparse neural networks. In Proceedings of the 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Taipei, Taiwan, 15–19 October 2016; pp. 1–12.

- Kim, D.; Ahn, J.; Yoo, S. ZeNA: Zero-Aware Neural Network Accelerator. IEEE Des. Test 2018, 35, 39–46.

- Kung, H.T.; McDanel, B.; Zhang, S.Q. Adaptive Tiling: Applying Fixed-size Systolic Arrays To Sparse Convolutional Neural Networks. In Proceedings of the 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 1006–1011.

- Deng, C.; Liao, S.; Xie, Y.; Parhi, K.; Qian, X.; Yuan, B. PermDNN: Efficient compressed DNN architecture with permuted diagonal matrices. In Proceedings of the 51st Annual IEEE/ACM International Symposium on Microarchitecture, Fukuoka, Japan, 20–24 October 2018; pp. 189–202.

- Kung, H.; McDanel, B.; Zhang, S. Packing Sparse Convolutional Neural Networks for Efficient Systolic Array Implementations: Column Combining Under Joint Optimization. In Proceedings of the 24th International Conference on Architectural Support for Programming Languages and Operating Systems, Providence, RI, USA, 13–17 April 2019; pp. 821–834.

- Akhlaghi, V.; Yazdanbakhsh, A.; Samadi, K.; Gupta, R.K.; Esmaeilzadeh, H. SnaPEA: Predictive Early Activation for Reducing Computation in Deep Convolutional Neural Networks. In Proceedings of the 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 1–6 June 2018; pp. 662–673.

- Song, M.; Zhao, J.; Hu, Y.; Zhang, J.; Li, T. Prediction Based Execution on Deep Neural Networks. In Proceedings of the 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 1–6 June 2018; pp. 752–763.

- Han, S.; Liu, X.; Mao, H.; Pu, J.; Pedram, A.; Horowitz, M.A.; Dally, W.J. EIE: Efficient Inference Engine on Compressed Deep Neural Network. In Proceedings of the 2016 ACM/IEEE 43rd Annual International Symposium on Computer Architecture (ISCA), Seoul, Republic of Korea, 18–22 June 2016; pp. 243–254.

- Dorrance, R.; Ren, F.; Markovic, D. A scalable sparse matrix-vector multiplication kernel for energy-efficient sparse-blas on FPGAs. In Proceedings of the 2014 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 26–28 February 2014; pp. 161–170.

- Albericio, J.; Judd, P.; Hetherington, T.; Aamodt, T.; Jerger, N.E.; Moshovos, A. Cnvlutin: Ineffectual-Neuron-Free Deep Neural Network Computing. In Proceedings of the 2016 ACM/IEEE 43rd Annual International Symposium on Computer Architecture (ISCA), Seoul, Republic of Korea, 18–22 June 2016; pp. 1–13.

- Aimar, A.; Mostafa, H.; Calabrese, E.; Rios-Navarro, A.; Tapiador-Morales, R.; Lungu, I.A.; Milde, M.B.; Corradi, F.; Linares-Barranco, A.; Liu, S.C.; et al. NullHop: A Flexible Convolutional Neural Network Accelerator Based on Sparse Representations of Feature Maps. IEEE Trans. Neural Networks Learn. Syst. 2019, 30, 644–656.

- Hegde, K.; Yu, J.; Agrawal, R.; Yan, M.; Pellauer, M.; Fletcher, C. UCNN: Exploiting computational reuse in deep neural networks via weight repetition. In Proceedings of the 45th Annual International Symposium on Computer Architecture, Los Angeles, CA, USA, 2–6 June 2018; pp. 674–687.

- Ma, X.; Guo, F.M.; Niu, W.; Lin, X.; Tang, J.; Ma, K.; Ren, B.; Wang, Y. PCONV: The Missing but Desirable Sparsity in DNN Weight Pruning for Real-Time Execution on Mobile Devices. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 5117–5124.

- Niu, W.; Ma, X.; Lin, S.; Wang, S.; Qian, X.; Lin, X.; Wang, Y.; Ren, B. PatDNN: Achieving Real-Time DNN Execution on Mobile Devices with Pattern-based Weight Pruning. In Proceedings of the 25th International Conference on Architectural Support for Programming Languages and Operating Systems, Lausanne, Switzerland, 16–20 March 2020; pp. 907–922.

- Guan, H.; Liu, S.; Ma, X.; Niu, W.; Ren, B.; Shen, X.; Wang, Y.; Zhao, P. CoCoPIE: Enabling real-time AI on off-the-shelf mobile devices via compression-compilation co-design. Commun. ACM 2021, 64, 62–68.

- Lin, J.; Chen, W.M.; Lin, Y.; Cohn, J.; Gan, C.; Han, S. MCUNet: Tiny deep learning on IoT devices. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; pp. 11711–11722.

- Chen, T.; Moreau, T.; Jiang, Z.; Zheng, L.; Yan, E.; Cowan, M.; Shen, H.; Wang, L.; Hu, Y.; Ceze, L.; et al. TVM: An Automated End-to-End Optimizing Compiler for Deep Learning. arXiv 2018, arXiv:1802.04799.

- Jiang, X.; Wang, H.; Chen, Y.; Wu, Z.; Wang, L.; Zou, B.; Yang, Y.; Cui, Z.; Cai, Y.; Yu, T.; et al. MNN: A Universal and Efficient Inference Engine. arXiv 2020, arXiv:2002.12418.

- Chen, Y.H.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks. IEEE J. Solid-State Circuits 2016, 52, 127–138.

- Alzantot, M.; Wang, Y.; Ren, Z.; Srivastava, M. RSTensorFlow: GPU Enabled TensorFlow for Deep Learning on Commodity Android Devices. In Proceedings of the 1st International Workshop on Deep Learning for Mobile Systems and Applications, Niagara Falls, NY, USA, 23 June 2017; pp. 7–12.

- Oskouei, S.; Golestani, H.; Hashemi, M.; Ghiasi, S. CNNdroid: GPU-Accelerated Execution of Trained Deep Convolutional Neural Networks on Android. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 1201–1205.

- Rizvi, S.; Cabodi, G.; Patti, D.; Francini, G. GPGPU Accelerated Deep Object Classification on a Heterogeneous Mobile Platform. Electronics 2016, 5, 88.

- Cao, Q.; Balasubramanian, N.; Balasubramanian, A. MobiRNN: Efficient Recurrent Neural Network Execution on Mobile GPU. In Proceedings of the 1st International Workshop on Deep Learning for Mobile Systems and Applications, Niagara Falls, NY, USA, 23 June 2017; pp. 1–6.

- Marchetti, A.; Marchetti, G. RenderScript: Parallel Computing on Android, the Easy Way; Alberto Marchetti: Rome, Italy, 2016; Chapter What Is RenderScript; pp. 9–13.

- Motamedi, M.; Fong, D.; Ghiasi, S. Cappuccino: Efficient CNN Inference Software Synthesis for Mobile System-on-Chips. IEEE Embed. Syst. Lett. 2019, 11, 9–12.

- Huynh, L.; Balan, R.; Lee, Y. DeepSense: A GPU-based Deep Convolutional Neural Network Framework on Commodity Mobile Devices. In Proceedings of the 2016 Workshop on Wearable Systems and Applications, Singapore, 30 June 2016; pp. 25–30.

- Rallapalli, S.; Qiu, H.; Bency, A.; Karthikeyan, S.; Govindan, R.; Manjunath, B.; Urgaonkar, R. Are Very Deep Neural Networks Feasible on Mobile Devices? University of Southern California Technical Report; University of Southern California: Los Angeles, CA, USA, 2016; pp. 916–965.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Lane, N.D.; Bhattacharya, S.; Georgiev, P.; Forlivesi, C.; Jiao, L.; Qendro, L.; Kawsar, F. DeepX: A Software Accelerator for Low-Power Deep Learning Inference on Mobile Devices. In Proceedings of the 15th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), Vienna, Austria, 11–14 April 2016; pp. 1–12.

- Lane, N.; Bhattacharya, S.; Mathur, A.; Forlivesi, C.; Kawsar, F. DXTK: Enabling Resource-efficient Deep Learning on Mobile and Embedded Devices with the DeepX Toolkit. In Proceedings of the 8th EAI International Conference on Mobile Computing, Applications and Services, Cambridge, UK, 30 November–1 December 2016; pp. 98–107.

- Farabet, C.; Martini, B.; Corda, B.; Akselrod, P.; Culurciello, E.; LeCun, Y. NeuFlow: A runtime reconfigurable dataflow processor for vision. In Proceedings of the CVPR 2011 WORKSHOPS, Colorado Springs, CO, USA, 20–25 June 2011; pp. 109–116.

- Chen, Y.; Chen, T.; Xu, Z.; Sun, N.; Temam, O. DianNao family: Energy-efficient hardware accelerators for machine learning. Commun. ACM 2016, 59, 105–112.

- Du, Z.; Fasthuber, R.; Chen, T.; Ienne, P.; Li, L.; Luo, T.; Feng, X.; Chen, Y.; Temam, O. ShiDianNao: Shifting vision processing closer to the sensor. In Proceedings of the 2015 ACM/IEEE 42nd Annual International Symposium on Computer Architecture (ISCA), Portland, OR, USA, 13–17 June 2015; pp. 92–104.

- Kwon, H.; Samajdar, A.; Krishna, T. MAERI: Enabling Flexible Dataflow Mapping over DNN Accelerators via Reconfigurable Interconnects. In Proceedings of the 23rd International Conference on Architectural Support for Programming Languages and Operating Systems, Williamsburg, VA, USA, 24–28 March 2018; pp. 461–475.

- MATLAB. Deep Learning HDL Toolbox. Available online: https://www.mathworks.com/help/deep-learning-hdl/ (accessed on 15 April 2025).

- AMD. Vitis AI. Available online: https://xilinx.github.io/Vitis-AI/3.5/html/index.html (accessed on 15 April 2025).

- Qualcomm. Developing Apps with the Qualcomm Neural Processing SDK for AI. Available online: https://docs.qualcomm.com/bundle/publicresource/topics/80-63442-4/developing-apps-qualcomm-neural-processing-sdk.html?product=1601111740010412 (accessed on 15 April 2025).

- Roane, J. Automated HW/SW Co-Design of DSP Systems Composed of Processors and Hardware Accelerators. Available online: https://www.cadence.com/en_US/home/resources/white-papers/automated-hw-sw-co-design-of-dsp-systems-composed-of-processors-and-hardware-accelerators-wp.html (accessed on 15 April 2025).

- NVIDIA. NVIDIA Jetson TX2 NX System-on-Module. Available online: https://developer.nvidia.com/downloads/jetson-tx2-nx-system-module-data-sheet (accessed on 7 May 2025).

- NVIDIA. NVIDIA Jetson Nano. Available online: https://developer.nvidia.com/embedded/jetson-nano (accessed on 7 May 2025).

- NVIDIA. JetPack SDK. Available online: https://developer.nvidia.com/embedded/jetpack (accessed on 8 May 2025).

- Cadence. Cadence Tensilica DNA 100 Processor. Available online: https://www.cadence.com/content/dam/cadence-www/global/en_US/documents/company/Events/CDNLive/Secured/Proceedings/IL/2018/ip/IP02.pdf (accessed on 1 May 2025).

- Synopsys. Synopsys EV7x Vision Processors. Available online: https://www.synopsys.com/dw/ipdir.php?ds=ev7x-vision-processors (accessed on 1 May 2025).

- Han, D.; Lee, J.; Lee, J.; Yoo, H.J. A 1.32 TOPS/W Energy Efficient Deep Neural Network Learning Processor with Direct Feedback Alignment based Heterogeneous Core Architecture. In Proceedings of the 2019 Symposium on VLSI Circuits, Kyoto, Japan, 9–14 June 2019; pp. C304–C305.

- Yuan, Z.; Yue, J.; Yang, H.; Wang, Z.; Li, J.; Yang, Y.; Guo, Q.; Li, X.; Chang, M.F.; Yang, H.; et al. Sticker: A 0.41-62.1 TOPS/W 8Bit Neural Network Processor with Multi-Sparsity Compatible Convolution Arrays and Online Tuning Acceleration for Fully Connected Layers. In Proceedings of the 2018 IEEE Symposium on VLSI Circuits, Honolulu, HI, USA, 18–22 June 2018; pp. 33–34.

- Lu, W.; Yan, G.; Li, J.; Gong, S.; Han, Y.; Li, X. FlexFlow: A Flexible Dataflow Accelerator Architecture for Convolutional Neural Networks. In Proceedings of the 2017 IEEE International Symposium on High Performance Computer Architecture (HPCA), Austin, TX, USA, 4–8 February 2017; pp. 553–564.

- Li, J.; Jiang, S.; Gong, S.; Wu, J.; Yan, J.; Yan, G.; Li, X. SqueezeFlow: A Sparse CNN Accelerator Exploiting Concise Convolution Rules. IEEE Trans. Comput. 2019, 68, 1663–1677.

- Chen, Y.; Luo, T.; Liu, S.; Zhang, S.; He, L.; Wang, J.; Li, L.; Chen, T.; Xu, Z.; Sun, N.; et al. DaDianNao: A Machine-Learning Supercomputer. In Proceedings of the 47th Annual IEEE/ACM International Symposium on Microarchitecture, Cambridge, UK, 13–17 December 2014; pp. 609–622.

- Ceva. Scalable Edge NPU IP for Generative AI. Available online: https://www.ceva-ip.com/product/ceva-neupro-m/ (accessed on 1 May 2025).

- Coral. Edge TPU Inferencing Overview. Available online: https://coral.ai/docs/edgetpu/inference/ (accessed on 1 May 2025).

- Guo, K.; Sui, L.; Qiu, J.; Yu, J.; Wang, J.; Yao, S.; Han, S.; Wang, Y.; Yang, H. Angel-Eye: A Complete Design Flow for Mapping CNN Onto Embedded FPGA. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2018, 37, 35–47.

- Venieris, S.I.; Bouganis, C.S. fpgaConvNet: Mapping Regular and Irregular Convolutional Neural Networks on FPGAs. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 326–342.

- Véstias, M.; Policarpo Duarte, R.; de Sousa, J.T.; Neto, H. Lite-CNN: A High-Performance Architecture to Execute CNNs in Low Density FPGAs. In Proceedings of the 28th International Conference on Field Programmable Logic and Applications (FPL), Dublin, Ireland, 27–31 August 2018; pp. 399–3993.

- Wang, S.; Li, Z.; Ding, C.; Yuan, B.; Qiu, Q.; Wang, Y.; Lang, Y. C-LSTM: Enabling Efficient LSTM using Structured Compression Techniques on FPGAs. In Proceedings of the 2018 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 25–27 February 2018; pp. 11–20.

- Gao, C.; Neil, D.; Ceolini, E.; Liu, S.C.; Delbruck, T. DeltaRNN: A Power-efficient Recurrent Neural Network Accelerator. In Proceedings of the 2018 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 25–27 February 2018; pp. 21–30.

- Fleischer, B.; Shukla, S.; Ziegler, M.; Silberman, J.; Oh, J.; Srinivasan, V.; Choi, J.; Mueller, S.; Agrawal, A.; Babinsky, T.; et al. A Scalable Multi-TeraOPS Deep Learning Processor Core for AI Training and Inference. In Proceedings of the 2018 IEEE Symposium on VLSI Circuits, Honolulu, HI, USA, 18–22 June 2018; pp. 35–36.

- Jouppi, N.P.; Young, C.; Patil, N.; Patterson, D.; Agrawal, G.; Bajwa, R.; Bates, S.; Bhatia, S.; Boden, N.; Borchers, A.; et al. In-Datacenter Performance Analysis of a Tensor Processing Unit. In Proceedings of the 44th Annual International Symposium on Computer Architecture, Toronto, ON, Canada, 24–28 June 2017; pp. 1–12.

- Fan, X.; Wu, D.; Cao, W.; Luk, W.; Wang, L. Stream Processing Dual-Track CGRA for Object Inference. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2018, 26, 1098–1111.

- Yin, S.; Ouyang, P.; Tang, S.; Tu, F.; Li, X.; Zheng, S.; Lu, T.; Gu, J.; Liu, L.; Wei, S. A High Energy Efficient Reconfigurable Hybrid Neural Network Processor for Deep Learning Applications. IEEE J. Solid-State Circuits 2018, 53, 968–982.

- Shin, D.; Lee, J.; Lee, J.; Lee, J.; Yoo, H.J. DNPU: An Energy-Efficient Deep-Learning Processor with Heterogeneous Multi-Core Architecture. IEEE Micro 2018, 38, 85–93.

- Fujii, T.; Toi, T.; Tanaka, T.; Togawa, K.; Kitaoka, T.; Nishino, K.; Nakamura, N.; Nakahara, H.; Motomura, M. New Generation Dynamically Reconfigurable Processor Technology for Accelerating Embedded AI Applications. In Proceedings of the 2018 IEEE Symposium on VLSI Circuits, Honolulu, HI, USA, 18–22 June 2018; pp. 41–42.

- Yin, S.; Ouyang, P.; Tang, S.; Tu, F.; Li, X.; Liu, L.; Wei, S. A 1.06-to-5.09 TOPS/W reconfigurable hybrid-neural-network processor for deep learning applications. In Proceedings of the 2017 Symposium on VLSI Circuits, Kyoto, Japan, 5–8 June 2017; pp. C26–C27.

- Pei, J.; Deng, L.; Song, S.; Zhao, M.; Zhang, Y.; Wu, S.; Wang, G.; Zou, Z.; Wu, Z.; He, W.; et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 2019, 572, 106–111.

- Zimmer, B.; Venkatesan, R.; Shao, Y.S.; Clemons, J.; Fojtik, M.; Jiang, N.; Keller, B.; Klinefelter, A.; Pinckney, N.; Raina, P.; et al. A 0.11 pJ/Op, 0.32-128 TOPS, Scalable Multi-Chip-Module-based Deep Neural Network Accelerator with Ground-Reference Signaling in 16nm. In Proceedings of the 2019 Symposium on VLSI Circuits, Kyoto, Japan, 9–14 June 2019; pp. C300–C301.

- Liang, M.; Chen, M.; Wang, Z.; Sun, J. A CGRA based Neural Network Inference Engine for Deep Reinforcement Learning. In Proceedings of the 2018 IEEE Asia Pacific Conference on Circuits and Systems (APCCAS), Chengdu, China, 26–30 October 2018; pp. 540–543.

- Fowers, J.; Ovtcharov, K.; Papamichael, M.; Massengill, T.; Liu, M.; Lo, D.; Alkalay, S.; Haselman, M.; Adams, L.; Ghandi, M.; et al. A Configurable Cloud-Scale DNN Processor for Real-Time AI. In Proceedings of the 45th Annual International Symposium on Computer Architecture (ISCA), Los Angeles, CA, USA, 1–6 June 2018; pp. 1–14.

- Intel. Intel Movidius Myriad X Vision Processing Unit 4GB. Available online: https://www.intel.com/content/www/us/en/products/sku/125926/intel-movidius-myriad-x-vision-processing-unit-4gb/specifications.html (accessed on 15 May 2025).

- Intel. Intel Distribution of OpenVINO Toolkit. Available online: https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/overview.html (accessed on 2 May 2025).

- Intel. Intel Neural Compute Stick 2. Available online: https://cdrdv2-public.intel.com/749742/neural-compute-stick2-product-brief.pdf (accessed on 15 May 2025).

- Shacham, O.; Reynders, M. Pixel Visual Core: Image Processing and Machine Learning on Pixel 2. Available online: https://blog.google/products/pixel/pixel-visual-core-image-processing-and-machine-learning-pixel-2/ (accessed on 2 May 2025).

- Mobileye. The Evolution of EyeQ. Available online: https://www.mobileye.com/technology/eyeq-chip/ (accessed on 2 May 2025).

- Rossi, D.; Loi, I.; Conti, F.; Tagliavini, G.; Pullini, A.; Marongiu, A. Energy efficient parallel computing on the PULP platform with support for OpenMP. In Proceedings of the 28th Convention of Electrical & Electronics Engineers in Israel (IEEEI), Eilat, Israel, 3–5 December 2014; pp. 1–5.

- Garofalo, A.; Tagliavini, G.; Conti, F.; Rossi, D.; Benini, L. XpulpNN: Accelerating Quantized Neural Networks on RISC-V Processors Through ISA Extensions. In Proceedings of the 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2020; pp. 186–191.

- Zhang, Y.; Suda, N.; Lai, L.; Chandra, V. Hello Edge: Keyword Spotting on Microcontrollers. arXiv 2018, arXiv:1711.07128.

- Novac, P.E.; Hacene, G.; Pegatoquet, A.; Miramond, B.; Gripon, V. Quantization and Deployment of Deep Neural Networks on Microcontrollers. Sensors 2021, 21, 2984.

- Hashemi, S.; Anthony, N.; Tann, H.; Bahar, R.; Reda, S. Understanding the impact of precision quantization on the accuracy and energy of neural networks. In Proceedings of the Conference on Design, Automation & Test in Europe (DATE), Lausanne, Switzerland, 27–31 March 2017; pp. 1478–1483.

- Lee, J.; Hwang, K. YOLO with adaptive frame control for real-time object detection applications. Multimed. Tools Appl. 2022, 81, 36375–36396.

- Cadence. Cadence InCyte Chip Estimator: Fast and Accurate Estimation of IC Size, Power, Performance, and Cost. Available online: http://pdf2.solecsy.com/567/201d55e2-371a-4d6a-b7a5-00461f050f65.pdf (accessed on 7 June 2025).

- Yang, T.J.; Chen, Y.H.; Emer, J.; Sze, V. A method to estimate the energy consumption of deep neural networks. In Proceedings of the 51st Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 29 October–1 November 2017; pp. 1916–1920.

- Zhao, W.; Wang, L.; Duan, F. ELPG: End-to-End Latency Prediction for Deep-Learning Model Inference on Edge Devices. In Proceedings of the 10th International Conference on Computer and Communications (ICCC), Chengdu, China, 13–16 December 2024; pp. 2160–2165.

- Intel. Intel oneAPI Math Kernel Library (oneMKL). Available online: https://www.intel.com/content/www/us/en/docs/oneapi/programming-guide/2024-1/intel-oneapi-math-kernel-library-onemkl.html (accessed on 9 May 2025).

- Blott, M.; Preußer, T.; Fraser, N.; Gambardella, G.; O'Brien, K.; Umuroglu, Y.; Leeser, M.; Vissers, K. FINN-R: An End-to-End Deep-Learning Framework for Fast Exploration of Quantized Neural Networks. ACM Trans. Reconfigurable Technol. Syst. (TRETS) 2018, 11, 1–23.

- Xie, Z.; Xu, X.; Walker, M.; Knebel, J.; Palaniswamy, K.; Hebert, N.; Hu, J.; Yang, H.; Chen, Y.; Das, S. APOLLO: An Automated Power Modeling Framework for Runtime Power Introspection in High-Volume Commercial Microprocessors. In Proceedings of the 54th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Virtual, 18–22 October 2021; pp. 1–14.

- Wen, H.; Li, Y.; Zhang, Z.; Jiang, S.; Ye, X.; Ouyang, Y.; Zhang, Y.; Liu, Y. AdaptiveNet: Post-deployment Neural Architecture Adaptation for Diverse Edge Environments. In Proceedings of the 29th Annual International Conference on Mobile Computing and Networking, Madrid, Spain, 2–6 October 2023; pp. 1–17.

- Venkataramani, S.; Ranjan, A.; Banerjee, S.; Das, D.; Avancha, S.; Jagannathan, A.; Durg, A.; Nagaraj, D.; Kaul, B.; Dubey, P.; et al. SCALEDEEP: A scalable compute architecture for learning and evaluating deep networks. In Proceedings of the 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; pp. 13–26.

- Song, L.; Mao, J.; Zhuo, Y.; Qian, X.; Li, H.; Chen, Y. HyPar: Towards Hybrid Parallelism for Deep Learning Accelerator Array. In Proceedings of the 2019 IEEE International Symposium on High Performance Computer Architecture (HPCA), Washington, DC, USA, 16–20 February 2019; pp. 56–68.

- Ren, P.; Xian, Y.; Chang, X.; Huang, P.Y.; Li, Z.; Gupta, B.; Chen, X.; Wang, X. A Survey of Deep Active Learning. ACM Comput. Surv. (CSUR) 2021, 54, 1–40.

- Chen, Z.; Liu, B. Lifelong Machine Learning; Springer: Cham, Switzerland, 2018; pp. 1–207.

| Technique | Effectiveness | Limitations | Trade-Offs | Edge Deployment |
|---|---|---|---|---|
| MobileNet series | Compact and efficient; accuracy improved in V2 | Limited for very-high-accuracy tasks | Model size vs. accuracy | Highly suitable; widely adopted |
| Xception | Efficient convolutions with depthwise separable layers | Complex architecture design | Efficiency vs. design complexity | Suitable for edge with careful tuning |
| SqueezeNet series | Extreme parameter reduction | Higher energy consumption | Model size vs. energy usage | Suitable with energy-aware hardware |
| ShuffleNet/CondenseNet/ANTNet | Improved accuracy and speed through group convolutions | More complex training; specialized implementation | Accuracy vs. latency | Highly suitable |
| Winograd algorithm | Significant reduction in computation (fewer multiplications) | Limited to small kernels; complex hardware logic | Hardware complexity vs. speed | Highly suitable for FPGA-/ASIC-based accelerators |
| UniWig architecture | Combines Winograd and GEMM for better utilization | Requires advanced hardware support | Hardware resource efficiency vs. complexity | Suitable for specialized FPGA/ASIC |
| Reconfigurable kernels | Efficient weight reuse; reduced LUT usage | FPGA-specific; complex implementation | Hardware flexibility vs. design complexity | Suitable for FPGA-based accelerators |
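The Winograd entry above can be made concrete with the smallest 1-D case, F(2,3), which produces two outputs of a 3-tap filter with four multiplications instead of the six needed by direct computation. The sketch below is a plain-Python illustration with hypothetical function names; it is not taken from any cited accelerator. In hardware, the filter transform would typically be computed once per filter and reused across inputs.

```python
def direct_conv_f23(d, g):
    """Reference: two outputs of a valid 3-tap correlation over four inputs (6 multiplies)."""
    return [sum(d[i + k] * g[k] for k in range(3)) for i in range(2)]


def winograd_f23(d, g):
    """Winograd minimal filtering F(2,3): the same two outputs with 4 multiplies."""
    # Filter transform: depends only on g, so it can be precomputed and reused.
    g_plus = (g[0] + g[1] + g[2]) / 2
    g_minus = (g[0] - g[1] + g[2]) / 2
    # Element-wise products in the transformed domain: the only 4 multiplications.
    m1 = (d[0] - d[2]) * g[0]
    m2 = (d[1] + d[2]) * g_plus
    m3 = (d[2] - d[1]) * g_minus
    m4 = (d[1] - d[3]) * g[2]
    # Output transform uses additions and subtractions only.
    return [m1 + m2 + m3, m2 - m3 - m4]


if __name__ == "__main__":
    d = [1.0, 2.0, 3.0, 4.0]
    g = [0.5, -1.0, 0.25]
    print(direct_conv_f23(d, g), winograd_f23(d, g))  # prints [-0.75, -1.0] [-0.75, -1.0]
```

The saving comes from trading multiplications for additions, which is why the table lists more complex hardware logic as the main cost.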

| Sparsity Type | Accelerator | Hardware Support | Latency Benefit | Energy Efficiency | Remarks |
|---|---|---|---|---|---|
| Structured | NVIDIA Jetson | TensorRT [61] with channel pruning | Moderate | Moderate | Works well with fused layers and dense GEMMs |
| | Google Edge TPU | Filter pruning support | High | High | Prefers regular conv a shapes; no dynamic sparsity |
| | ARM Ethos-U | Native pattern support | High | High | Integrates well with CMSIS-NN pruning methods [62] |
| | Xilinx FPGA | Manual scheduling | High | Very high | Custom dataflow optimizations possible |
| Unstructured | NVIDIA Jetson | Sparse GEMM via cuSPARSE [63] | Limited | Slight | Irregular compute; limited speedup without 2:4 support |
| | Google Edge TPU | No direct support | Negligible | Negligible | Requires retraining for structured sparsity |
| | ARM Ethos-U | Unsupported | NA | NA | Compilation fails without dense fallback |
| | Xilinx FPGA | Sparse matrix engines | Moderate | Moderate | Requires high memory bandwidth; controller overhead |
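To illustrate the structured/unstructured distinction in the table, the sketch below contrasts global magnitude pruning (unstructured) with the fine-grained "2:4" pattern mentioned in the Jetson remark, where every group of four consecutive weights keeps at most two non-zeros. Using weight magnitude as the pruning criterion is an assumption made for illustration, and the function names are hypothetical; vendor toolchains such as TensorRT have their own pruning workflows.

```python
def prune_unstructured(weights, sparsity):
    """Magnitude pruning: zero the globally smallest |w| until `sparsity` of entries are zero."""
    n_zero = int(round(len(weights) * sparsity))
    order = sorted(range(len(weights)), key=lambda j: abs(weights[j]))
    drop = set(order[:n_zero])
    return [0.0 if j in drop else w for j, w in enumerate(weights)]


def prune_2_4(weights):
    """Fine-grained structured pruning: keep the 2 largest |w| in every group of 4."""
    if len(weights) % 4:
        raise ValueError("length must be a multiple of 4")
    out = []
    for i in range(0, len(weights), 4):
        group = weights[i:i + 4]
        # Indices of the two largest magnitudes inside this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        out.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return out


def is_2_4(weights):
    """True if no group of 4 consecutive weights has more than 2 non-zeros."""
    return all(sum(v != 0 for v in weights[i:i + 4]) <= 2
               for i in range(0, len(weights), 4))


if __name__ == "__main__":
    w = [0.9, 0.8, 0.7, 0.1, 0.05, 0.04, 0.6, 0.03]
    u = prune_unstructured(w, 0.5)
    s = prune_2_4(w)
    print(u, is_2_4(u))  # 50% sparse, but the first group still has three non-zeros
    print(s, is_2_4(s))  # 50% sparse and hardware-friendly, at the cost of dropping 0.7
```

Both results are 50% sparse, but only the 2:4 version has the regular layout that structured-sparsity hardware can exploit; the unstructured version retains the larger weight 0.7, which is the accuracy-versus-regularity trade-off the table describes.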

| Quantization Technique | Model/Method | Weight Bit Width | Activation Bit Width | Top-1 Accuracy Drop on ImageNet a | Calibration Complexity |
|---|---|---|---|---|---|
| Fixed-point precision | Weight fine-tuning [71] | 8 | 8 | | QAT |
| | Contrastive-calibration [72] | 2, 4 | 2, 4 | | PTQ |
| Floating-point precision | FP8 [73] | 8 | 8 | | PTQ |
| Variable precision | Stripes [74] | 16 | | NA | NA |
| | UNPU [80] | | 16 | NA | NA |
| | Loom [81] | | | NA | NA |
| | Bit Fusion [75] | 1, 2, 4, 8, 16 | 1, 2, 4, 8, 16 | NA | NA |
| | BitBlade [82] | 1, 2, 4, 8, 16 | 1, 2, 4, 8, 16 | NA | NA |
| Reduced precision | BinaryConnect [83] | 1 | FP32 | NA | QAT |
| | Ternary Weight Network [84] | 2 b | FP32 | | QAT |
| | Trained Ternary Quantization [85] | 2 b | FP32 | | QAT |
| | XNOR-Net [77] | 1 b | 1 b | | QAT |
| | BinaryNet [76] | 1 | 1 | NA | QAT |
| | DoReFa-Net [86] | 1 b | 2 b | 3.3% | QAT |
| | Quantized Neural Network [87] | 1 | 2 b | NA | |
| | HWGQ-Net [88] | 1 b | 2 b | | QAT |
| | SmoothQuant [89] | 8 | 8 | NA | PTQ |
| | PD-Quant [90] | 2, 4 | 2, 4 | | PTQ |
| Nonlinear quantization | Incremental Network Quantization [78] | 5 | FP32 | | QAT |
| | Deep Compression [79] | 8 (conv c), 4 (dense d) | 16 | NA | |
| | | 4 (conv), 2 (dense) | 16 | NA | |
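The bit widths in the table can be related to a concrete mapping from real values to integer codes. The sketch below shows uniform symmetric per-tensor quantization whose scale is calibrated from the maximum absolute value of the data (a simple PTQ-style calibration), together with the binary weight approximation W ≈ α·sign(W), with α the mean absolute weight, as used for XNOR-Net-style weight binarization. Function names are hypothetical; per-channel scales, zero points, percentile calibration, and the retraining loop of QAT are deliberately omitted.

```python
def quantize_symmetric(x, bits):
    """Uniform symmetric per-tensor quantization; the scale is calibrated from max |x|."""
    qmax = 2 ** (bits - 1) - 1                  # e.g. 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(v) for v in x)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Python's round() rounds half to even; hardware may use another rounding mode.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in x]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate real values."""
    return [v * scale for v in q]


def binarize_weights(w):
    """1-bit weights: W ~ alpha * sign(W) with alpha = mean |W| (XNOR-Net-style)."""
    alpha = sum(abs(v) for v in w) / len(w)
    return [alpha if v >= 0 else -alpha for v in w], alpha


if __name__ == "__main__":
    x = [0.5, -1.0, 0.25, 0.0]
    for bits in (8, 4):
        q, s = quantize_symmetric(x, bits)
        err = max(abs(a - b) for a, b in zip(x, dequantize(q, s)))
        print(bits, q, round(err, 4))  # error is bounded by about scale / 2
    print(binarize_weights(x))
```

Lowering the bit width enlarges the step size and therefore the worst-case rounding error, which is the accuracy cost that QAT methods in the table try to recover through retraining.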

| Strategy | Memory Access Frequency | Energy Efficiency | Hardware Design Complexity | Edge Suitability |
|---|---|---|---|---|
| Weight stationary | Moderate | Good | Low | Moderate |
| No local reuse | High | Poor | Low | Limited |
| Output stationary | Low | Very good | Moderate | High |
| Row stationary | Very low | Excellent | High | Very high |
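To show why the strategies in the table differ in memory-access frequency, the sketch below runs the same 1-D convolution under three loop orders and counts global-buffer reads and writes. It is a deliberately simplified, hypothetical model (a single processing element, no input reuse, no multi-level memory hierarchy); row stationary, which depends on a 2-D PE array, is not modelled.

```python
from collections import Counter


def conv1d_with_dataflow(x, w, dataflow):
    """Valid 1-D convolution that counts global-buffer accesses under a toy single-PE model.

    Returns (outputs, access_counts). Only the register reuse implied by each
    dataflow's loop order is modelled.
    """
    n_out, k_len = len(x) - len(w) + 1, len(w)
    y = [0.0] * n_out      # outputs / partial sums held in the global buffer
    acc = Counter()        # buffer accesses per data type

    if dataflow == "weight_stationary":
        for k in range(k_len):
            w_reg = w[k]                 # weight loaded once and held in the PE
            acc["weight"] += 1
            for n in range(n_out):
                acc["input"] += 1
                acc["psum"] += 2         # read-modify-write of the partial sum
                y[n] += w_reg * x[n + k]
    elif dataflow == "output_stationary":
        for n in range(n_out):
            psum = 0.0                   # partial sum accumulates inside the PE
            for k in range(k_len):
                acc["weight"] += 1
                acc["input"] += 1
                psum += w[k] * x[n + k]
            y[n] = psum
            acc["psum"] += 1             # one write per finished output
    elif dataflow == "no_local_reuse":
        for n in range(n_out):
            for k in range(k_len):
                acc["weight"] += 1       # every operand comes from the buffer
                acc["input"] += 1
                acc["psum"] += 2
                y[n] += w[k] * x[n + k]
    else:
        raise ValueError(f"unknown dataflow: {dataflow}")
    return y, dict(acc)


if __name__ == "__main__":
    for flow in ("no_local_reuse", "weight_stationary", "output_stationary"):
        print(flow, conv1d_with_dataflow([1, 2, 3, 4, 5], [1, 0, -1], flow))
```

With a 5-element input and a 3-tap filter, all three orders produce the same outputs, but weight stationary fetches each weight only once while re-reading partial sums, and output stationary writes each output only once; which order minimizes traffic in practice depends on layer shape and on-chip storage.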

| ASIC | Process (nm) | Area (mm²) | Performance (GOPS) | Power Consumption (mW) | Normalized Power Consumption (mW) a | Energy Efficiency (GOPS/W) | Supported DL Models | Target Applications |
|---|---|---|---|---|---|---|---|---|
| DNA 100 [190] | 16 | NA | 5780 | 850 | NA | 6800 | All neural network layers | IoT, AR/VR, autonomous driving, surveillance, mobile devices |
| Stripes [74] | 65 | 122.1 | 168,768.6 b | 17,886.4 b | 3.6 | 9435.6 | CNN | Image classification |
| Eyeriss [167] | 65 | 12.3 | 126.5 | CNN | Image analysis | |||
| Bit Fusion [75] | 16 | 5.9 | 318.9 c | 895 | 1969.8 | 335.3 | CNN, RNN | Image classification, object detection, language modeling, optical character recognition |
| DesignWare [191] | 16 | NA | 512 | NA | NA | NA | CNN, R-CNN, YOLO | Object detection, image classification, segmentation |
| UNPU [80] | 65 | 16 | 84.4 | 3080 | CNN, RNN, FNN | Image classification | ||
| Envision [118] | 28 | 1.9 | | | | | CNN | Always-on vision, face recognition |
| PDFA [192] | 65 | 5.8 | 168 | CNN, FCN | Object tracking | |||
| SNAP [151] | 16 | 2.4 | 80.5 | 21,550 | CNN, FCN | Image classification | ||
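The Energy Efficiency column can be cross-checked against the Performance and Power columns. The helper below assumes Energy Efficiency = Performance / Power, with power converted from mW to W; the DNA 100 row (5780 GOPS at 850 mW, 6800 GOPS/W) is consistent with this. It also converts GOPS/W into energy per operation, the pJ/Op metric that appears in some of the cited accelerator papers. The function names are hypothetical, and published figures may be measured under different workloads, precisions, and operating points, so such a check is only indicative.

```python
def energy_efficiency_gops_per_w(perf_gops, power_mw):
    """Energy efficiency in GOPS/W from throughput (GOPS) and power (mW)."""
    return perf_gops * 1000.0 / power_mw


def energy_per_op_pj(eff_gops_per_w):
    """Energy per operation in pJ: 1 GOPS/W = 1e9 op/J, so pJ/op = 1000 / (GOPS/W)."""
    return 1000.0 / eff_gops_per_w


if __name__ == "__main__":
    eff = energy_efficiency_gops_per_w(5780, 850)  # DNA 100 row of the table
    print(eff, round(energy_per_op_pj(eff), 3))    # prints 6800.0 0.147
```

Expressing efficiency as energy per operation makes it easier to compare against a battery or thermal budget on an edge device, since the total energy of an inference is roughly the operation count multiplied by pJ/op.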