Abstract

Deploying deep neural networks (DNNs) in resource-limited environments—such as smartwatches, IoT nodes, and intelligent sensors—poses significant challenges due to constraints in memory, computing power, and energy budgets. This paper presents a comprehensive review of recent advances in accelerating DNN inference on edge platforms, with a focus on model compression, compiler optimizations, and hardware–software co-design. We analyze the trade-offs between latency, energy, and accuracy across various techniques, highlighting practical deployment strategies on real-world devices. In particular, we categorize existing frameworks based on their architectural targets and adaptation mechanisms and discuss open challenges such as runtime adaptability and hardware-aware scheduling. This review aims to guide the development of efficient and scalable edge intelligence solutions.

1. Introduction

Artificial intelligence (AI) research encompasses a broad range of techniques aimed at enabling machines to perform intelligent tasks. A key subset of AI is machine learning (ML), which focuses on developing algorithms that allow systems to learn from data, or, in other words, to automatically improve through experience. Within ML, there exists the brain-inspired approach that seeks to mimic biological processes and includes spiking neural networks (SNNs) and artificial neural networks (ANNs). A crucial subset of ANNs is deep learning (DL), which leverages multi-layered neural networks to model complex data patterns and achieve state-of-the-art performance in various AI applications [1].

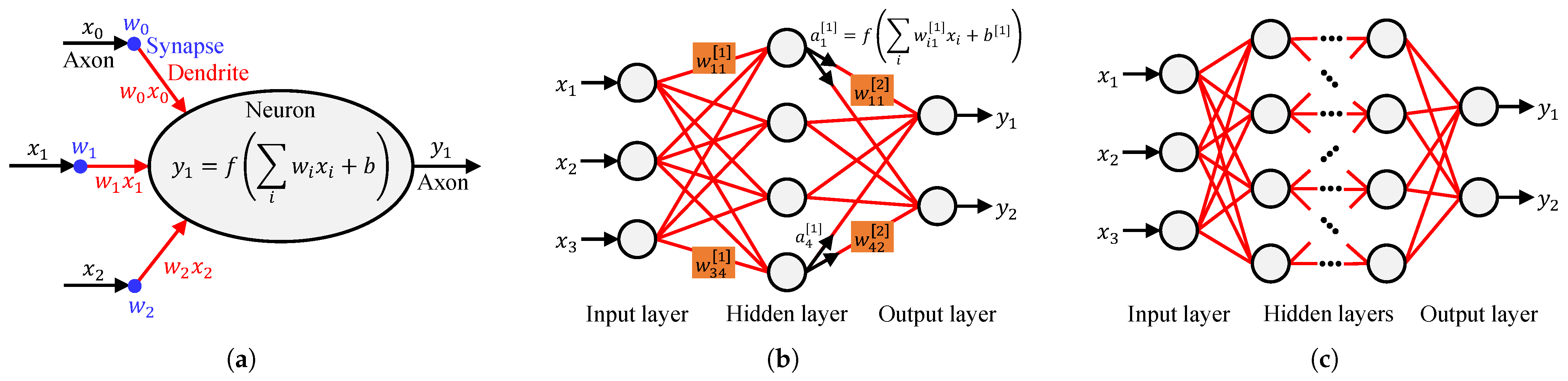

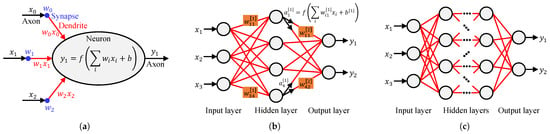

At the core of a deep neural network (DNN) is the artificial neuron (Figure 1a), which is inspired by biological neurons. In this analogy, the inputs represent signals received by the neuron’s dendrites, the weights correspond to the synaptic strength that determines the importance of each input, and the output is transmitted through the axon to other neurons. A neural network (Figure 1b) consists of multiple neurons organized into layers: the input layer, which receives raw data; one or more hidden layers, where computations and transformations occur; and the output layer, which generates predictions. A DNN (Figure 1c) extends this structure by incorporating multiple hidden layers, allowing it to learn complex hierarchical features and capture intricate patterns in data, making it particularly powerful for tasks such as image recognition, natural language processing, and autonomous systems.

Figure 1.

Deep learning fundamentals: (a) Neuron. (b) Neural network. (c) Deep neural network. Notations: $x_i$ = input data, $w_{ij}^{(k)}$ = weight associated with the synapse from neuron i to neuron j in layer k, $b^{(k)}$ = bias in layer k, $f(\cdot)$ = activation function, $a_i^{(k)}$ = activation of neuron i in layer k, and $\hat{y}$ = predicted output.
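The forward computation depicted in Figure 1 can be sketched in a few lines of NumPy. This is a minimal illustration that assumes a ReLU activation in the hidden layers and a linear output layer; the layer sizes and random weights are hypothetical. Each neuron computes a weighted sum of the previous layer's activations plus a bias and then applies the activation function.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def forward(x, weights, biases, act=relu):
    """Propagate an input vector through a fully connected network.

    weights[k][i, j] is the weight from neuron i of one layer to neuron j of
    the next; biases[k] holds one bias per neuron of the next layer.
    """
    a = x
    for k, (W, b) in enumerate(zip(weights, biases)):
        z = a @ W + b  # weighted sum of incoming activations plus bias
        # Hidden layers apply the activation function; the output layer stays linear.
        a = act(z) if k < len(weights) - 1 else z
    return a

rng = np.random.default_rng(0)
sizes = [4, 8, 8, 3]  # input, two hidden layers, output (hypothetical)
weights = [0.1 * rng.standard_normal((m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]
y_hat = forward(rng.standard_normal(sizes[0]), weights, biases)
print(y_hat.shape)  # (3,)
```

Adding more entries to `sizes` deepens the network, which is exactly the step from panel (b) to panel (c).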

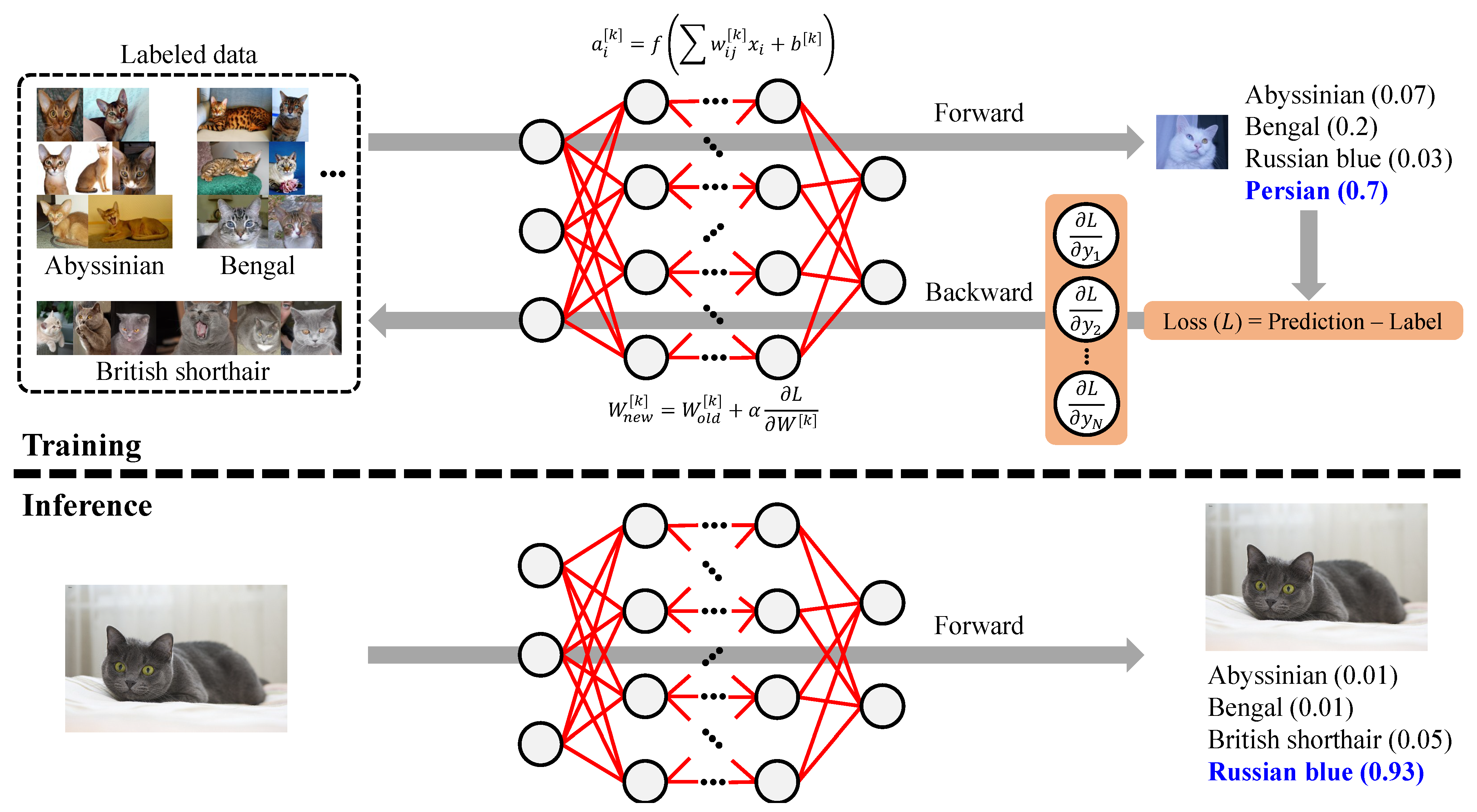

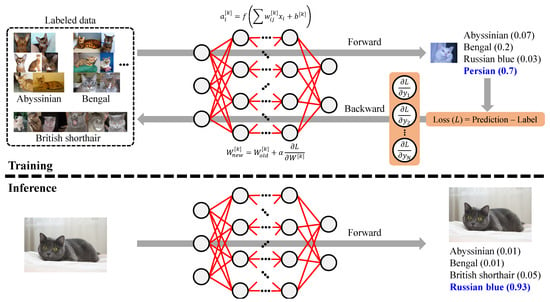

As illustrated in Figure 2, deep learning consists of two key phases: training and inference. Training is the process where a neural network learns patterns from data by adjusting its weights using optimization techniques such as Adam [2] or stochastic gradient descent (SGD) [3]. During training, large labeled datasets are used, and backpropagation is applied to minimize the error between predictions and actual labels. This phase is computationally intensive, often requiring powerful hardware like GPUs or TPUs. In contrast, inference is the deployment phase, where the trained model is used to make predictions on new, unseen data. Unlike training, inference does not involve updating weights but instead performs forward propagation to generate outputs. As inference requires significantly fewer computations, it can run on edge devices or cloud-based systems, making deep learning models practical for real-world applications.

Figure 2.

Illustration of training and inference workflow. Notations: $W^{(k)}$ = current set of weights in layer k, $W'^{(k)}$ = updated set of weights in layer k, $\nabla L$ = gradient of loss L, and $\eta$ = learning rate.
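To make the contrast between the two phases concrete, the sketch below trains a linear model with mini-batch SGD, applying the update "new weights = current weights minus learning rate times gradient of the loss" shown in Figure 2, and then runs inference as a pure forward pass. It is a minimal illustration assuming a mean-squared-error loss and hypothetical synthetic data; a DNN would obtain the gradient through backpropagation rather than the closed-form expression used here.

```python
import numpy as np

def predict(W, X):
    # Inference: forward propagation only; W is read but never modified.
    return X @ W

def sgd_step(W, X, y, lr):
    # Training: forward pass, gradient of the mean-squared-error loss,
    # then the update W_new = W - lr * dL/dW.
    err = predict(W, X) - y
    grad = 2.0 * X.T @ err / len(X)
    return W - lr * grad

rng = np.random.default_rng(1)
X = rng.standard_normal((256, 3))
true_W = np.array([1.5, -2.0, 0.5])  # hypothetical target weights
y = X @ true_W

W = np.zeros(3)
for _ in range(500):
    idx = rng.choice(len(X), size=16, replace=False)  # random mini-batch
    W = sgd_step(W, X[idx], y[idx], lr=0.1)

print(predict(W, np.array([[1.0, 0.0, 0.0]])))  # close to [1.5] after training
```

The training loop touches the data hundreds of times and writes `W` on every step, whereas inference is a single read-only matrix product, which is why inference fits on far weaker hardware.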

Edge inference (EI) occurs directly on local devices such as smartphones, IoT devices, or embedded systems, enabling real-time processing with low latency and reduced dependency on network connectivity. This approach enhances privacy and security by keeping data on the device but may be constrained by computational resources and power consumption. In contrast, cloud inference leverages powerful servers to process data remotely, allowing for more complex and larger models that require substantial computational resources. Cloud inference can handle multiple requests simultaneously and is ideal for large-scale applications, but it depends on network connectivity and may introduce latency. The focus of this paper is EI due to its numerous practical applications across various industries.

In autonomous vehicles, EI allows for rapid processing of sensor data, such as camera feeds and LiDAR scans, to detect obstacles and make driving decisions instantly. In healthcare, wearable devices use EI to monitor vital signs and detect anomalies, such as irregular heartbeats, without needing continuous internet access. Smart surveillance systems leverage edge AI to analyze video footage in real time, detecting suspicious activities while preserving privacy by keeping sensitive data on the device. In industrial automation, EI enhances predictive maintenance by analyzing sensor data to detect potential equipment failures before they occur. Additionally, in consumer electronics, voice assistants and smart home devices use EI to process commands quickly and respond without delay. These applications highlight the benefits of EI, including low latency, enhanced privacy, and reduced dependency on network infrastructure.

The evolving trend of performing inference on edge devices introduces several critical design challenges that must be addressed to support the rapid advancements in DL algorithms. One major challenge is programmability, as hardware must be adaptable to accommodate frequent updates and changes in DL models. Additionally, real-time performance is a key requirement, especially for edge applications that demand immediate processing and decision-making within strict latency constraints. Another significant concern is power consumption, as edge devices often operate with limited energy resources, making efficiency a top priority. While customized, application-specific hardware can significantly enhance energy efficiency, it often comes at the cost of reduced flexibility and programmability, limiting its ability to adapt to new algorithms. Consequently, edge device design involves a careful balancing act, optimizing trade-offs between performance, power efficiency, latency, energy consumption, cost, and form factor to achieve the best possible results for real-world applications.

The growing demand for intelligent services anywhere, anytime, has driven AI research toward edge computing. Additionally, the widespread adoption of connected gadgets, portable processing platforms, embedded sensing units, and IoT systems generates vast amounts of edge data. By 2030, an estimated billion IoT devices will be connected to cyberspace [4]. However, transferring this massive data to the cloud presents challenges in network capacity, bandwidth, and security. Edge computing addresses these issues by processing data closer to its source, enabling real-time, efficient, and secure computing while facilitating EI implementation. Several surveys explore different aspects of DL and EI:

- Studies on DL architectures, algorithms, and applications exist but lack discussion of the latest compression techniques for edge intelligence [5,6,7].

- Research on compression methods, such as low-rank approximation, pruning, and weight sharing, includes hardware acceleration but excludes software frameworks and broader hardware platforms [8,9,10].

- Reviews on convolutional neural network (CNN) hardware implementation cover ASICs and FPGAs but neglect software–hardware co-design and EI pipelines [11,12].

- ML frameworks and compilers have been reviewed but without considering edge-specific compression and optimization [13].

Despite extensive research, no single survey provides a comprehensive review of DNN inference in resource-limited edge environments that covers model development, model size reduction, integrated design, programming environments, target hardware architectures, performance benchmarking, and open challenges. This paper aims to bridge these gaps in existing surveys by focusing on lightweight models, compression techniques, and software–hardware co-design. Its major contributions include the following:

- A comprehensive discussion on EI techniques, including compression, hardware accelerators, and software–hardware co-design, along with a classification of compression strategies, practical applications, benefits, and limitations;

- A detailed review of software tools and hardware platforms with a comparative analysis of available options;

- An outline of design hurdles and proposed avenues for future investigation in efficient edge intelligence, covering unresolved problems and potential advancements.

2. Edge Inference of Deep Learning Models

Edge inference of DL models enables real-time processing by reducing latency and bandwidth usage compared to cloud-based inference. It can be categorized into three main strategies: inference on edge servers, on-device inference at edge nodes, and cooperative inference that distributes workloads between edge nodes and edge servers, each offering distinct benefits and trade-offs:

- Inference on edge servers involves deploying DL models on local computing nodes near data sources, such as micro data centers or 5G base stations. This approach offers higher computational power than standalone edge devices, allowing for complex model inference with lower latency compared to cloud solutions. However, it still requires network connectivity, which can introduce variable delays, and may have limited scalability compared to large cloud infrastructures.

- On-device inference on edge nodes runs DL models directly on resource-constrained hardware, such as smartphones, IoT devices, and embedded systems. This method ensures real-time responsiveness, enhances privacy by keeping data local, and reduces reliance on external networks. However, it faces challenges related to limited computational resources, power constraints, and the need for model compression and optimization techniques to fit within hardware capabilities.

- Cooperative inference splits computation between edge nodes and edge servers, where lightweight processing occurs on the node while computationally intensive tasks are offloaded to the edge server. This hybrid approach balances efficiency, latency, and power consumption while leveraging strengths of both local and remote computing. It is particularly beneficial for applications like autonomous vehicles and smart surveillance. However, it introduces additional complexity in workload partitioning and requires reliable network communication between edge node and server.
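The workload-partitioning problem in cooperative inference can be illustrated with a simple latency model: layers before a split point run on the edge node, the intermediate activation is transmitted, and the remaining layers run on the edge server. The sketch below exhaustively tries every split point and keeps the fastest one. All per-layer timings, activation sizes, and the uplink bandwidth are hypothetical placeholders; a real system would profile them and would also have to cope with network variability, which this model ignores.

```python
def best_split(device_ms, server_ms, out_bytes, input_bytes, bandwidth_bps):
    """Pick the split minimizing end-to-end latency (compute + transfer).

    Layers [0, split) run on the edge node and layers [split, n) on the server.
    split == 0 offloads everything (the raw input is sent); split == n keeps
    everything on-device (nothing is sent). out_bytes[i] is layer i's output size.
    """
    n = len(device_ms)
    best_split_idx, best_ms = None, float("inf")
    for split in range(n + 1):
        if split == n:
            sent = 0
        elif split == 0:
            sent = input_bytes
        else:
            sent = out_bytes[split - 1]
        tx_ms = sent * 8 * 1000 / bandwidth_bps  # bits / (bits per second), in ms
        total = sum(device_ms[:split]) + tx_ms + sum(server_ms[split:])
        if total < best_ms:
            best_split_idx, best_ms = split, total
    return best_split_idx, best_ms

# Hypothetical profile of a 5-layer model and a 20 Mbit/s uplink.
split, ms = best_split(
    device_ms=[5, 20, 40, 40, 10],
    server_ms=[0.5, 2, 4, 4, 1],
    out_bytes=[400_000, 100_000, 20_000, 5_000, 40],
    input_bytes=600_000,
    bandwidth_bps=20e6,
)
print(split, ms)  # 2 74.0
```

In this toy profile, neither full offloading (dominated by sending the raw input) nor full on-device execution (dominated by slow local compute) is best; the optimum sits where an early layer has already shrunk the activation.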

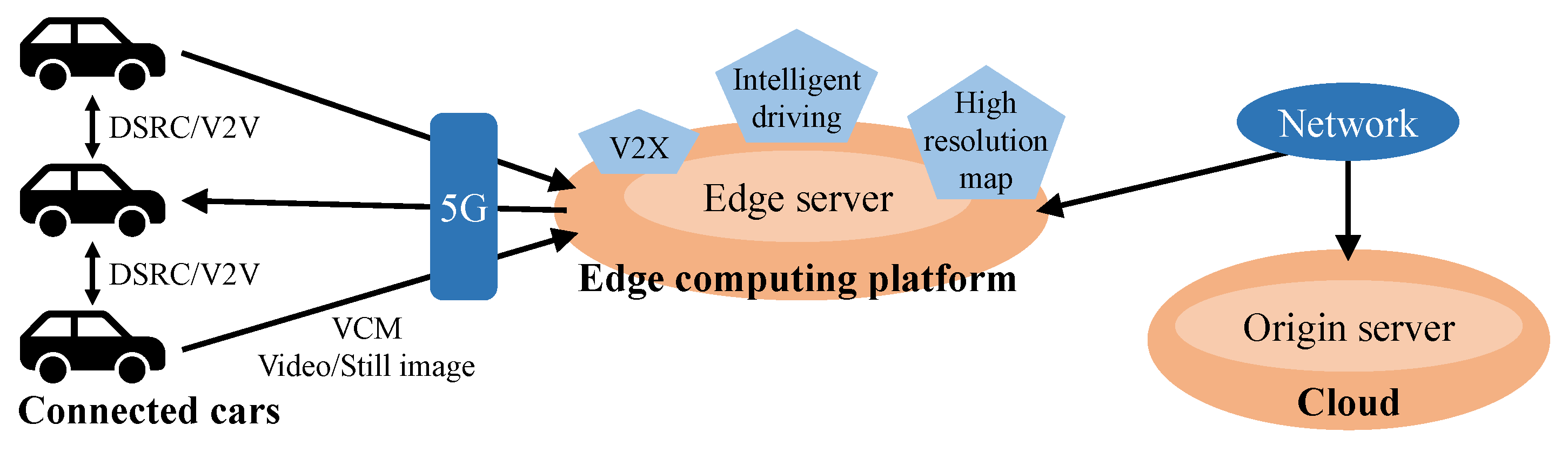

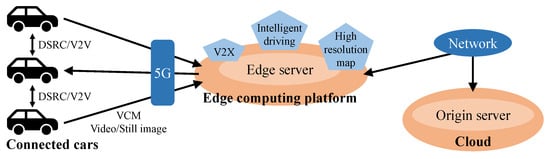

Figure 3 illustrates the EI paradigm in autonomous vehicles, providing a clearer depiction of the roles of edge devices, edge servers, and the cloud. Autonomous cars perform real-time inference on-board using optimized DL models for tasks such as object detection, lane keeping, and collision avoidance, ensuring rapid decision-making with minimal latency. However, for more computationally intensive tasks like high-resolution map updates, predictive traffic analysis, and vehicle-to-everything (V2X) communication, the vehicle offloads data to nearby edge servers. These edge servers aggregate and process data from multiple vehicles, enabling intelligent driving decisions based on shared situational awareness. The edge servers also facilitate dynamic updates to high-definition maps by integrating sensor data from multiple sources in real time. Finally, the edge servers connect to the cloud, where large-scale data analytics, deep model retraining, and long-term traffic pattern analysis are conducted. This hierarchical computing framework balances real-time performance, computational efficiency, and data bandwidth while ensuring autonomous vehicles operate safely and intelligently in diverse driving environments.

Figure 3.

Example of edge inference paradigm in autonomous vehicles. Abbreviations: DSRC = dedicated short-range communications, V2V = vehicle to vehicle, VCM = video coding for machines, and V2X = vehicle to everything.

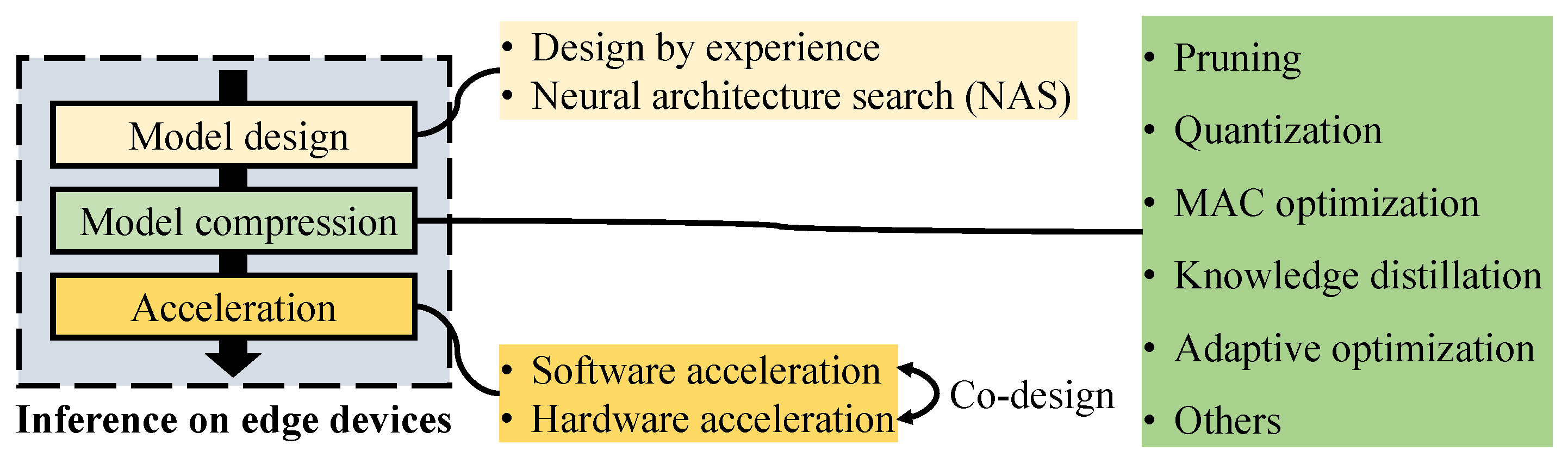

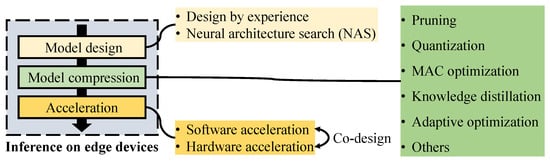

This paper focuses primarily on inference directly on edge devices. The main strategies for running DL models on resource-limited edge platforms include designing efficient models, applying compression techniques, and utilizing hardware acceleration. As DL models are typically designed for GPUs and TPUs, adapting them into smaller, lightweight architectures or compressing pre-trained networks poses significant challenges for effective edge intelligence. Model design can be performed manually (based on experience) or automatically through neural architecture search (NAS). After designing the model, compression techniques are applied to reduce its size in terms of parameters and computation while maintaining acceptable performance. Inference is then executed through a software–hardware co-design approach, where computationally intensive tasks are offloaded to hardware accelerators. This overall design process for inference on edge devices is summarized in Figure 4.

Figure 4.

The typical design process for inference on edge devices. Deep learning architectures may be developed either through expert-driven design or automated neural architecture search. These models undergo compression via standalone or combined techniques. Data processed at edge devices is handled through both software and hardware accelerations. MAC stands for multiply–accumulate operation.

2.1. Model Design for Edge Inference

2.1.1. Design by Experience

In CNNs, the majority of computational resources are consumed by convolution operations, making inference speed heavily dependent on how efficiently these convolutions are performed. Designing compact and efficient architectures with fewer parameters and operations is essential for improving performance on resource-limited edge environments.

One common strategy involves replacing large convolution filters with multiple smaller filters applied sequentially, which reduces computational complexity with little or no loss in accuracy. For example, MobileNet [14] and Xception [15] simplify convolutions by extensively using 1 × 1 pointwise convolutions. MobileNet introduces two primary optimizations: reducing input size and employing depthwise separable convolutions (a combination of depthwise and pointwise convolutions), followed by average pooling and a configurable dense layer. MobileNet’s width multiplier allows for adjusting the number of filters per layer. Depending on the variant, MobileNet configurations range from to million parameters and 41 to 559 million multiply–accumulate operations (MACs), achieving accuracy.
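The savings from depthwise separable convolutions follow from a simple count. For a $D_K \times D_K$ kernel with $M$ input channels, $N$ output channels, and a $D_F \times D_F$ output map, a standard convolution costs $D_K^2 M N D_F^2$ MACs, while the depthwise-plus-pointwise pair costs $D_K^2 M D_F^2 + M N D_F^2$, a ratio of $1/N + 1/D_K^2$. The sketch below evaluates both, assuming stride 1, "same" padding, and no bias terms; the layer dimensions are hypothetical.

```python
def standard_conv_cost(dk, m, n, df):
    params = dk * dk * m * n
    macs = params * df * df  # every weight is applied once per output position
    return params, macs

def depthwise_separable_cost(dk, m, n, df):
    dw_params = dk * dk * m  # one dk x dk spatial filter per input channel
    pw_params = m * n        # 1 x 1 pointwise convolution mixes channels
    params = dw_params + pw_params
    macs = params * df * df
    return params, macs

# Hypothetical layer: 3 x 3 kernel, 256 -> 256 channels, 14 x 14 output.
std = standard_conv_cost(3, 256, 256, 14)
sep = depthwise_separable_cost(3, 256, 256, 14)
print(std, sep, round(sep[1] / std[1], 4))
```

For this layer the separable version needs roughly 11.5% of the MACs, i.e. close to the $1/D_K^2 = 1/9$ bound, because the $1/N$ term is small when the channel count is large.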

MobileNetV2 [16] improves on this design by incorporating residual connections and intermediate feature representations via an inverted residual (bottleneck) block consisting of a 1 × 1 expansion convolution, a 3 × 3 depthwise convolution, and a 1 × 1 linear projection convolution. This approach reduces parameters by and computation by relative to MobileNet, while increasing accuracy.

Similarly, SqueezeNet [17] compresses CNN channels using 1 × 1 convolutions and introduces the “fire module”, which first squeezes inputs with 1 × 1 filters and then expands with parallel 1 × 1 and 3 × 3 convolutions. SqueezeNet achieves a reduction in parameters compared to AlexNet [18] while maintaining comparable accuracy, though it tends to consume more energy. Its successor, SqueezeNext [19], replaces fire modules with two-stage bottlenecks and separable convolutions, reaching fewer parameters than AlexNet with improved accuracy.
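Why the fire module saves parameters can be seen by counting weights. The squeeze layer shrinks the number of channels that the expensive 3 × 3 filters must see, and half of the expand filters are cheap 1 × 1 kernels. The sketch below compares one fire module against a plain 3 × 3 convolution with the same input and output widths; the channel sizes are chosen for illustration and biases are ignored.

```python
def fire_params(in_ch, s1x1, e1x1, e3x3):
    squeeze = in_ch * s1x1                    # 1x1 squeeze reduces channels
    expand = s1x1 * e1x1 + 9 * s1x1 * e3x3    # parallel 1x1 and 3x3 expand filters
    return squeeze + expand

def conv3x3_params(in_ch, out_ch):
    return 9 * in_ch * out_ch

fire = fire_params(96, 16, 64, 64)       # 96 in, 128 out after concatenation
plain = conv3x3_params(96, 64 + 64)      # same input and output widths
print(fire, plain, round(plain / fire, 1))
```

Here the fire module uses about 9 times fewer weights than the plain layer it replaces.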

Other approaches focus on channel grouping and shuffling to reduce computation. ShuffleNet [20] uses group convolutions combined with channel shuffling to improve information flow, enhancing accuracy by over MobileNet with similar complexity. Its variant ShuffleNet Sx adds stride-2 convolutions, channel concatenation, and a scaling hyperparameter for channel width. CondenseNet [21] extends this concept by learning filter groups during training and pruning redundant parameters, achieving comparable accuracy to ShuffleNet but with half the parameters. ANTNet [22] further advances group convolution models by surpassing MobileNetV2 in accuracy by while reducing parameters by , operations by , and inference latency by on mobile platforms.
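Channel shuffling itself is only a memory reordering: after a group convolution, channels are reshaped into a (groups × channels-per-group) grid, transposed, and flattened, so that the next group convolution receives channels originating from every group. A minimal NumPy sketch for a single feature map in (channels, height, width) layout:

```python
import numpy as np

def channel_shuffle(x, groups):
    """x has shape (channels, height, width); channels must be divisible by groups."""
    c, h, w = x.shape
    assert c % groups == 0
    x = x.reshape(groups, c // groups, h, w)  # split channels into groups
    x = x.transpose(1, 0, 2, 3)                # interleave channels across groups
    return x.reshape(c, h, w)

x = np.arange(6).reshape(6, 1, 1)  # channel i holds the value i
print(channel_shuffle(x, groups=3).ravel())  # [0 2 4 1 3 5]
```

Because it involves no arithmetic, the shuffle adds negligible compute, which is why it pairs well with group convolutions whose cost already scales down by the number of groups.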

From an algorithmic optimization standpoint, the Winograd algorithm [23] effectively accelerates small-kernel convolutions and mini-batch processing by minimizing floating-point and integer operations up to [24] and [25], respectively. It achieves this through arithmetic transformations that leverage addition and bit-shift operations, which are highly hardware-efficient. FPGA implementations of Winograd convolutions [26] utilize line buffer caching, pipelining, and parallel processing to further speed up computation.
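The arithmetic trick behind Winograd convolution is easiest to see in its smallest 1-D form, $F(2,3)$: two outputs of a 3-tap filter over a 4-sample tile normally need 6 multiplications, but after a fixed transform only 4 are needed. The filter-side terms are computed once per filter and reused across all tiles, and the divisions by 2 can become bit shifts in fixed-point hardware. The sketch below follows the standard $F(2,3)$ formulation and checks it against direct sliding-window computation; the sample values are arbitrary.

```python
def winograd_f23(d, g):
    """Two outputs of a 3-tap filter over a 4-sample tile using 4 multiplications."""
    d0, d1, d2, d3 = d
    g0, g1, g2 = g
    # Filter transform: depends only on g, so it is precomputed once per filter.
    u1 = (g0 + g1 + g2) / 2
    u2 = (g0 - g1 + g2) / 2
    m1 = (d0 - d2) * g0
    m2 = (d1 + d2) * u1
    m3 = (d2 - d1) * u2
    m4 = (d1 - d3) * g2
    return [m1 + m2 + m3, m2 - m3 - m4]

def direct(d, g):
    # Reference: sliding-window (correlation) form used in CNN layers, 6 multiplications.
    return [sum(d[i + k] * g[k] for k in range(3)) for i in range(2)]

print(winograd_f23([1, 2, 3, 4], [0.5, -1, 2]), direct([1, 2, 3, 4], [0.5, -1, 2]))
```

2-D variants such as $F(2 \times 2, 3 \times 3)$ nest the same transform along both spatial axes, reducing 36 multiplications per tile to 16.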

The UniWig architecture [27] integrates Winograd and GEMM-based operations within a unified processing element structure to optimize hardware resource utilization. Additionally, weight-stationary CNNs benefit from reconfigurable convolution kernels [28], which reuse weights to optimize LUT usage on FPGAs and reduce overall computational demands.

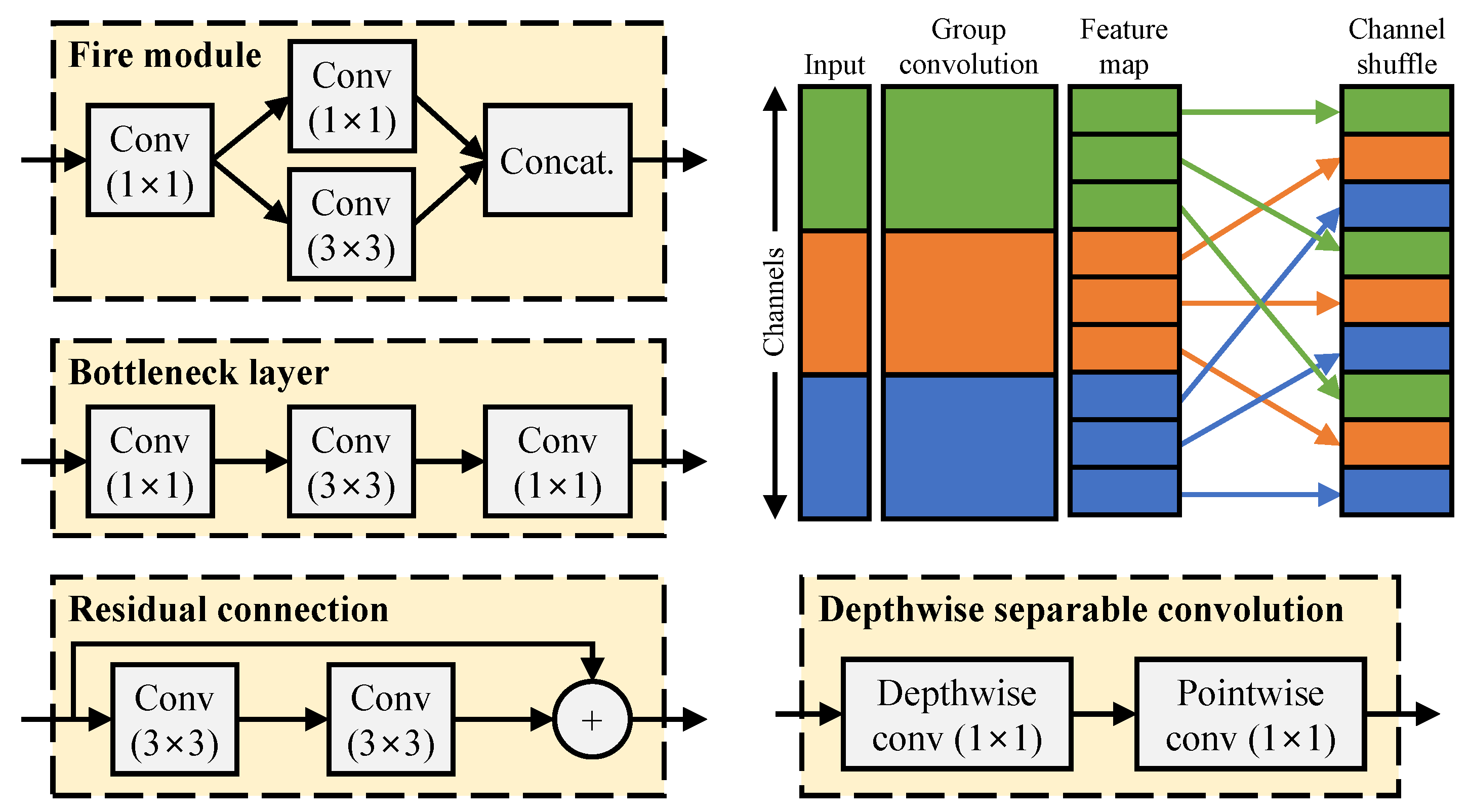

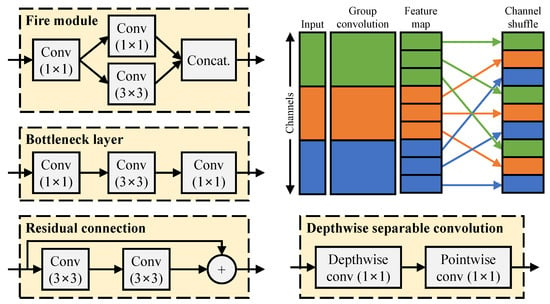

Design by experience has proven effective in developing lightweight architectures optimized for edge deployment. Although this approach often involves trade-offs among complexity, energy efficiency, and accuracy, it provides practical solutions for real-time inference on resource-limited devices. Collectively, hand-crafted designs form a solid foundation for efficient edge intelligence. Figure 5 illustrates various convolution techniques discussed above for compact CNN design, while Table 1 offers a summarized comparison for quick reference.

Figure 5.

Illustration of various convolution types, including fire modules, bottleneck layers, residual connections, channel shuffling, and depthwise separable convolutions. Abbreviations: Conv = convolution, Concat. = concatenation.

Table 1.

Summary of manually designed techniques for efficient edge deployment.

2.1.2. Neural Architecture Search

Neural architecture search (NAS) plays a crucial role in designing efficient DL models, particularly for EI, where computational resources are limited. NAS automates the process of discovering optimal neural network architectures, significantly reducing the reliance on manual design and expert knowledge. The primary components of NAS include the design domain, exploration strategy, and evaluation method. The design domain specifies the range of permissible architectural components, such as layer types, connectivity patterns, and hyperparameters. The exploration strategy determines how candidate architectures are explored within the design domain, using methods such as reinforcement learning, evolutionary algorithms, or gradient-based optimization. Finally, the evaluation method assesses the performance of candidate architectures, often using accuracy, latency, energy consumption, or a multi-objective metric. Efficient NAS techniques aim to strike a balance between accuracy and computational efficiency, making them essential for deploying DL models on resource-constrained edge devices.
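The three NAS components can be mapped onto a very small program. In the sketch below, the design domain is a dictionary of choices, the exploration strategy is plain random search (the simplest baseline, standing in for RL, evolution, or gradient methods), and the evaluation method is a user-supplied scoring function applied only to candidates that fit a latency budget. The search space values, `toy_latency`, and `toy_accuracy` are hypothetical stand-ins for a real latency model and trained-model evaluation.

```python
import random

# Design domain: permissible choices (hypothetical values).
SEARCH_SPACE = {"kernel": [3, 5, 7], "expand": [3, 4, 6], "depth": [2, 3, 4]}

def sample_architecture(rng):
    # Exploration strategy: uniform random sampling, the simplest baseline.
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}

def search(evaluate, latency_of, budget_ms, trials, seed=0):
    """Return the best-scoring sampled architecture that fits the latency budget."""
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(trials):
        arch = sample_architecture(rng)
        if latency_of(arch) > budget_ms:
            continue  # violates the hardware constraint
        score = evaluate(arch)  # evaluation method, e.g. validation accuracy
        if score > best_score:
            best, best_score = arch, score
    return best, best_score

# Toy stand-ins (hypothetical) for a latency model and a trained-model evaluator.
def toy_latency(arch):
    return 0.1 * arch["depth"] * arch["expand"] * arch["kernel"]

def toy_accuracy(arch):
    return 0.6 + 0.01 * arch["depth"] + 0.005 * arch["expand"] + 0.002 * arch["kernel"]

best, score = search(toy_accuracy, toy_latency, budget_ms=6.5, trials=200)
print(best, round(score, 3))
```

In practice the evaluation step dominates the cost, since each candidate must be trained or estimated, which is what the more sophisticated strategies discussed below try to reduce.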

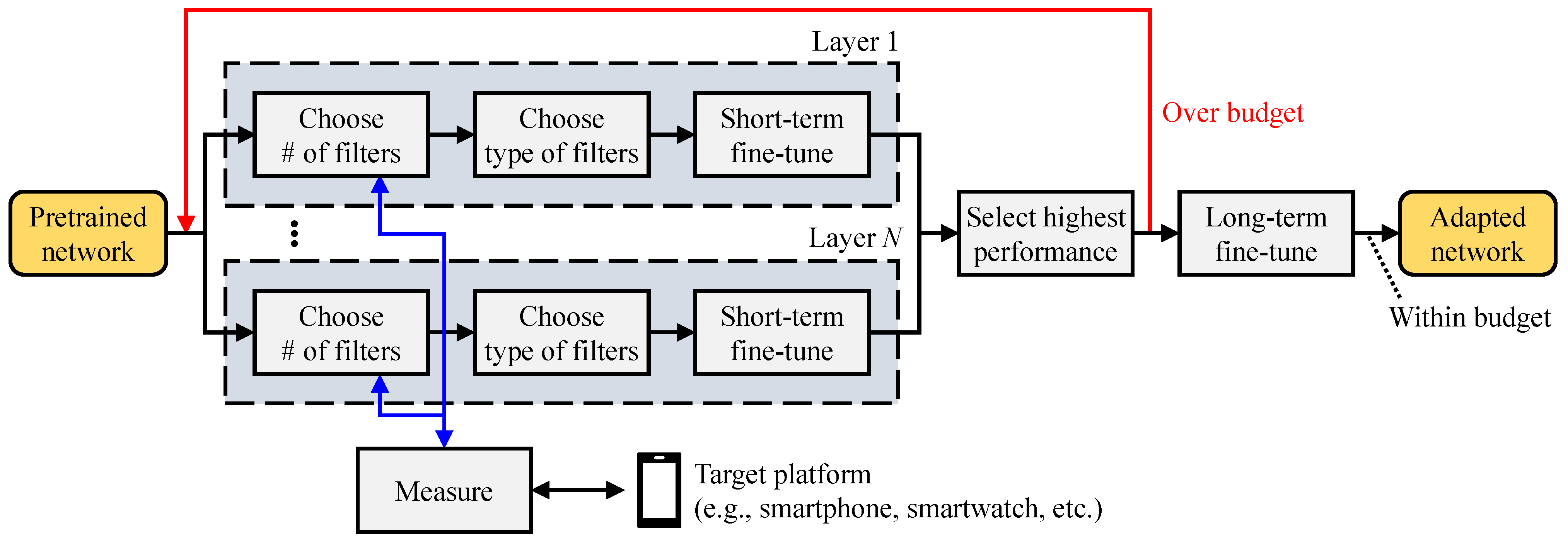

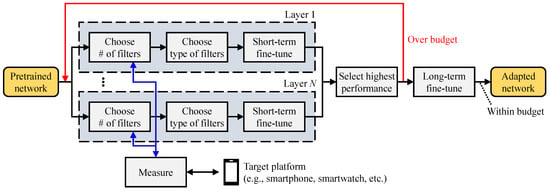

MobileNetV3 [29], available in both large and small variants, was developed using NAS and the NetAdapt [30] algorithm. As illustrated in Figure 6, NetAdapt is an adaptive method that generates multiple candidate networks based on specific quality metrics. These candidates are tested directly on the target hardware, and the one with the best accuracy is selected. Compared to its predecessor, MobileNetV2, the large version of MobileNetV3 achieves a performance boost without sacrificing accuracy, while the small version improves accuracy by with comparable model size and latency.

Figure 6.

An illustration of the NetAdapt algorithm. At each step, it reduces resource usage by pruning filters layer by layer, selects the variant with the highest accuracy, and fine-tunes the final model once the target constraint is satisfied.
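The greedy loop in Figure 6 can be sketched as follows. This is a simplified illustration, not the NetAdapt reference implementation: at each iteration it tries shrinking one layer at a time until a per-iteration resource reduction is met, keeps the proposal with the highest accuracy, and repeats until the target is reached. The linear latency model `toy_latency` and the accuracy proxy `toy_accuracy` are hypothetical; NetAdapt itself uses empirical measurements on the target device and short-term fine-tuning of each proposal.

```python
import math

def netadapt(filters, latency, accuracy, target, step):
    """Simplified NetAdapt-style loop.

    filters: filter count per layer.
    latency(filters): resource cost, measured on the device or read from a table.
    accuracy(filters): accuracy of a candidate after short-term fine-tuning.
    """
    filters = list(filters)
    while latency(filters) > target:
        goal = latency(filters) - step  # per-iteration resource reduction
        candidates = []
        for layer in range(len(filters)):
            cand = list(filters)
            # Shrink only this layer until the candidate meets the goal.
            while cand[layer] > 1 and latency(cand) > goal:
                cand[layer] -= 1
            if latency(cand) <= goal:
                candidates.append(cand)
        if not candidates:
            break  # no single-layer proposal can meet the goal
        filters = max(candidates, key=accuracy)  # keep the most accurate proposal
    return filters  # NetAdapt then fine-tunes this network for longer

# Toy stand-ins (hypothetical): linear latency model and a saturating accuracy proxy.
COST_MS = [0.05, 0.02, 0.03]

def toy_latency(f):
    return sum(c * n for c, n in zip(COST_MS, f))

def toy_accuracy(f):
    return sum(math.log(n) for n in f)

result = netadapt([32, 64, 64], toy_latency, toy_accuracy, target=3.0, step=0.5)
print(result, round(toy_latency(result), 2))
```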

In NetAdapt, hardware costs associated with target platforms are treated as “hard” constraints, meaning that the method solely maximizes model accuracy and, therefore, cannot yield Pareto-optimal solutions. To address this limitation, a hardware-aware NAS (HW-NAS) approach presented in [31] formulates a multi-objective optimization problem considering both model accuracy and computational complexity (measured in FLOPs). This optimization is solved using reinforcement learning (RL), where a neural network predicts the performance of candidate architectures. The resulting solutions—represented as two-objective vectors—are then evaluated against the true Pareto-optimal front to identify the best network.
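The notion of Pareto optimality used here can be made concrete: a candidate is on the front if no other candidate is at least as accurate and at least as cheap while being strictly better in one of the two. The sketch below extracts that front from (accuracy, FLOPs) pairs by sorting on cost and keeping only points that improve on the best accuracy seen so far. The candidate values are hypothetical.

```python
def pareto_front(points):
    """points: list of (accuracy, flops). Higher accuracy and lower FLOPs are better.
    Returns the non-dominated points, sorted by increasing FLOPs."""
    front = []
    # Cheapest first; for equal cost, the more accurate point comes first.
    for acc, flops in sorted(points, key=lambda p: (p[1], -p[0])):
        # Keep a point only if it beats every cheaper point already on the front.
        if not front or acc > front[-1][0]:
            front.append((acc, flops))
    return front

# Hypothetical candidates: (top-1 accuracy, MFLOPs).
points = [(0.70, 300), (0.72, 250), (0.75, 600), (0.74, 600), (0.60, 100), (0.73, 700)]
print(pareto_front(points))  # [(0.6, 100), (0.72, 250), (0.75, 600)]
```

A method that only maximizes accuracy under a fixed cost cap returns a single point of this set, whereas a multi-objective search can expose the whole trade-off curve.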

However, as shown in [32], FLOPs often poorly correlate with real-world latency, as models with identical FLOPs can exhibit different latencies across platforms. To overcome this limitation, MNASNet [33] incorporates measured inference latency—obtained by evaluating candidate networks on actual mobile phones—into the objective function. The reward function combines target latency, measured latency, and model accuracy using a customized weighted product method. As a result, MNASNet achieves image classification with only 78 ms latency, which is faster than MobileNetV2 and faster than NASNet, while also improving accuracy by and , respectively.
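The weighted-product reward used by MnasNet has the form $\mathrm{ACC}(m) \times [\mathrm{LAT}(m)/T]^{w}$, where $T$ is the target latency and the exponent $w$ equals $\alpha$ when the measured latency meets the target and $\beta$ otherwise. The sketch below implements that form; the default $\alpha = \beta = -0.07$ corresponds to the soft-constraint setting suggested in the MnasNet paper, while $\alpha = 0, \beta = -1$ yields a harder penalty. The accuracy and latency values are hypothetical.

```python
def mnas_reward(acc, latency_ms, target_ms, alpha=-0.07, beta=-0.07):
    """Weighted-product reward: acc * (latency / target) ** w."""
    w = alpha if latency_ms <= target_ms else beta
    return acc * (latency_ms / target_ms) ** w

# Hypothetical candidates evaluated against an 80 ms target.
for lat in (60.0, 80.0, 100.0):
    print(lat, round(mnas_reward(0.75, lat, 80.0), 4))
```

With a negative exponent, slower models are penalized smoothly rather than discarded, so the search can trade a little latency for accuracy; setting `alpha=0.0` removes any bonus for being faster than the target.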

In general, hardware constraints such as FLOPs and latency are not differentiable with respect to model parameters, hindering the application of gradient-based search methods. This constraint has led to the widespread use of RL-based searches in HW-NAS. FBNet [34] addresses this by employing the Gumbel-Softmax function, making the joint accuracy–latency objective function continuous and thus amenable to gradient-based optimization. In FBNet, the latency of each candidate architecture is computed as the sum of its component latencies, which are pre-stored in a look-up table. To reduce training overhead, candidate models are trained on proxy datasets such as MNIST and CIFAR-10.
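The relaxation that makes latency differentiable can be sketched as follows. Each layer has architecture logits $\theta$ over its candidate operations; a Gumbel-Softmax sample turns them into a soft mask, and the network latency becomes the mask-weighted sum of per-operation latencies from a look-up table. This NumPy version only computes the forward value; in an actual search it would be written in an autodiff framework so that gradients flow into $\theta$, and the temperature $\tau$ is commonly annealed toward zero. The table entries are hypothetical.

```python
import numpy as np

def gumbel_softmax(logits, tau, rng):
    """Relaxed one-hot sample per row: softmax((logits + Gumbel noise) / tau)."""
    u = rng.uniform(low=np.finfo(float).tiny, high=1.0, size=logits.shape)
    z = (logits - np.log(-np.log(u))) / tau  # -log(-log(u)) is Gumbel(0, 1) noise
    z -= z.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def expected_latency(theta, lut, tau, rng):
    """theta, lut: (layers, ops). lut[l, i] is the pre-measured latency of op i in layer l.
    The network latency is the sum over layers of the mask-weighted op latencies."""
    mask = gumbel_softmax(theta, tau, rng)
    return float((mask * lut).sum())

rng = np.random.default_rng(0)
lut = np.array([[1.2, 2.5, 4.0],   # hypothetical per-op latencies (ms), layer 0
                [0.8, 1.9, 3.1]])  # layer 1
theta = np.zeros_like(lut)  # architecture parameters, learned during the search
print(expected_latency(theta, lut, tau=5.0, rng=rng))
```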

While training on proxy datasets can reduce costs, it may not generalize well to large-scale tasks. ProxylessNAS [35] mitigates this by enabling architecture search directly on large-scale datasets and target hardware platforms. It adopts binarized paths to activate and update only a single path during training, thereby minimizing memory usage. Furthermore, by modeling inference latency as a continuous function of architectural parameters, ProxylessNAS facilitates efficient gradient-based search with rapid convergence.

Beyond gradient- and RL-based strategies, recent research [36] explores the use of genetic algorithms for NAS. In this approach, the fitness function combines performance estimation and hardware cost penalties. Performance is approximated by the similarity between hidden layer representations of a reference model and those of candidate networks, while hardware cost is represented by either FLOPs or latency. Experimental results demonstrate significant improvements in efficiency, achieving a reduction in search time and a decrease in CO2 emission compared to ProxylessNAS on the ImageNet dataset.
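The source does not specify which similarity measure between hidden representations is used, so the sketch below assumes linear centered kernel alignment (CKA), a common choice for comparing activations of different networks on the same inputs. The fitness adds a hinge penalty when the hardware cost exceeds a budget; the penalty form, the weight `penalty`, and the activation data are all hypothetical and serve only to illustrate how performance estimation and cost can be combined without training each candidate.

```python
import numpy as np

def linear_cka(X, Y):
    """Linear CKA between activation matrices with the same rows (input examples)."""
    X = X - X.mean(axis=0)
    Y = Y - Y.mean(axis=0)
    hsic = np.linalg.norm(X.T @ Y, "fro") ** 2
    return hsic / (np.linalg.norm(X.T @ X, "fro") * np.linalg.norm(Y.T @ Y, "fro"))

def fitness(ref_acts, cand_acts, cost, cost_budget, penalty=0.5):
    # Similarity to the reference model stands in for accuracy (training-free estimate).
    sim = float(np.mean([linear_cka(r, c) for r, c in zip(ref_acts, cand_acts)]))
    # Hinge penalty (assumed form): only cost above the budget (FLOPs or latency) is punished.
    return sim - penalty * max(0.0, cost / cost_budget - 1.0)

rng = np.random.default_rng(0)
ref = [rng.standard_normal((64, 16))]           # reference-model activations (hypothetical)
cand = [ref[0] @ rng.standard_normal((16, 8))]  # candidate activations on the same inputs
print(round(fitness(ref, cand, cost=320.0, cost_budget=300.0), 3))
```

A genetic algorithm would evaluate this fitness for every individual in the population and use it for selection before crossover and mutation.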

The integration of hardware constraints into NAS frameworks addresses the accuracy degradation often observed when NAS-generated models are deployed across heterogeneous edge devices. In [37], this issue is tackled by the once-for-all (OFA) network. During inference, only a selected sub-network within the OFA model is activated to process input data. The training strategy optimizes the performance of all potential sub-networks by dynamically sampling different architectural paths. A subset of these paths is then used to train both accuracy and latency predictors. Based on a given hardware target and latency constraint, an evolutionary search is applied to identify an optimal sub-network. The OFA model is structured into five sequential units with progressively decreasing feature map sizes and increasing channel widths. Each unit supports configurations of two, three, or four layers; each layer may use a width expansion ratio of three, four, or six and a kernel size of three, five, or seven. This configuration enables the generation of approximately sub-networks, all sharing the same weights (about million parameters).
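The size of this search space follows directly from the configuration described above. Assuming each layer chooses its kernel size and width setting independently (three options each), a unit of depth $d$ has $9^d$ variants, a unit therefore has $9^2 + 9^3 + 9^4$ variants, and five independent units multiply:

```python
kernel_sizes = [3, 5, 7]
width_settings = [3, 4, 6]
depths = [2, 3, 4]
units = 5

per_layer = len(kernel_sizes) * len(width_settings)  # 9 choices per layer
per_unit = sum(per_layer ** d for d in depths)       # 9^2 + 9^3 + 9^4
total = per_unit ** units
print(per_unit, f"{total:.2e}")  # 7371 2.18e+19
```

This yields roughly $2 \times 10^{19}$ sub-networks, which is why OFA relies on accuracy and latency predictors instead of evaluating candidates individually.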

In summary, the evolution of HW-NAS techniques reflects a growing emphasis on jointly optimizing model accuracy and deployment efficiency across diverse edge platforms. Early approaches such as NetAdapt and MNASNet highlight the shift from theoretical metrics such as FLOPs to real-world latency considerations. Subsequent methods—FBNet, ProxylessNAS, and OFA—further advance this trend by integrating gradient-based optimization, large-scale training, and flexible sub-network deployment. These developments demonstrate that effective HW-NAS design must account for both hardware variability and scalability, enabling practical and efficient AI deployment in resource-constrained environments.

2.2. Model Compression for Edge Inference

2.2.1. Pruning

Modern DL models are often heavily overparameterized. Pruning is an effective model compression strategy that eliminates less critical weights or filters from a pre-trained network. This process not only reduces memory requirements but also enhances inference speed.

Pruning methods are typically categorized into unstructured and structured types [38]. Unstructured pruning removes individual weights while keeping neurons active as long as they maintain at least one remaining connection. For example, a sparsification method for LSTM networks implemented on FPGAs [39] divides matrices into banks with an identical number of non-zero elements, retaining larger weights to maintain accuracy. However, unstructured pruning often leads to irregular memory access and computation patterns, which can hinder hardware parallelism [39,40].

In contrast, structured pruning [41] removes entire groups of weights, such as adjacent weights, sections of a filter, or even complete filters [42]. A structured pruning method for CNNs [43] selectively removes filters to boost network efficiency without significant accuracy degradation. This technique trains an agent to prune layer by layer, retraining after each pruning step before proceeding to the next layer. Structured pruning better supports hardware acceleration by aligning with data-parallel architectures [44], improving compression rates while reducing storage demands.

Hybrid pruning combines both structured and unstructured approaches for optimized hardware-friendly models. For example, one approach gradually removes a small portion of the least significant weights from each layer, guided by a predefined threshold, and follows up with retraining. Neurons that lose all connections are subsequently removed. Weight pruning effectively compresses shallow networks without notable accuracy loss. An iterative vectorwise sparsification strategy for CNNs and RNNs [40] shows faster responses with negligible accuracy degradation, achieving sparsity. However, filter pruning in deeper networks tends to cause more significant accuracy drops.

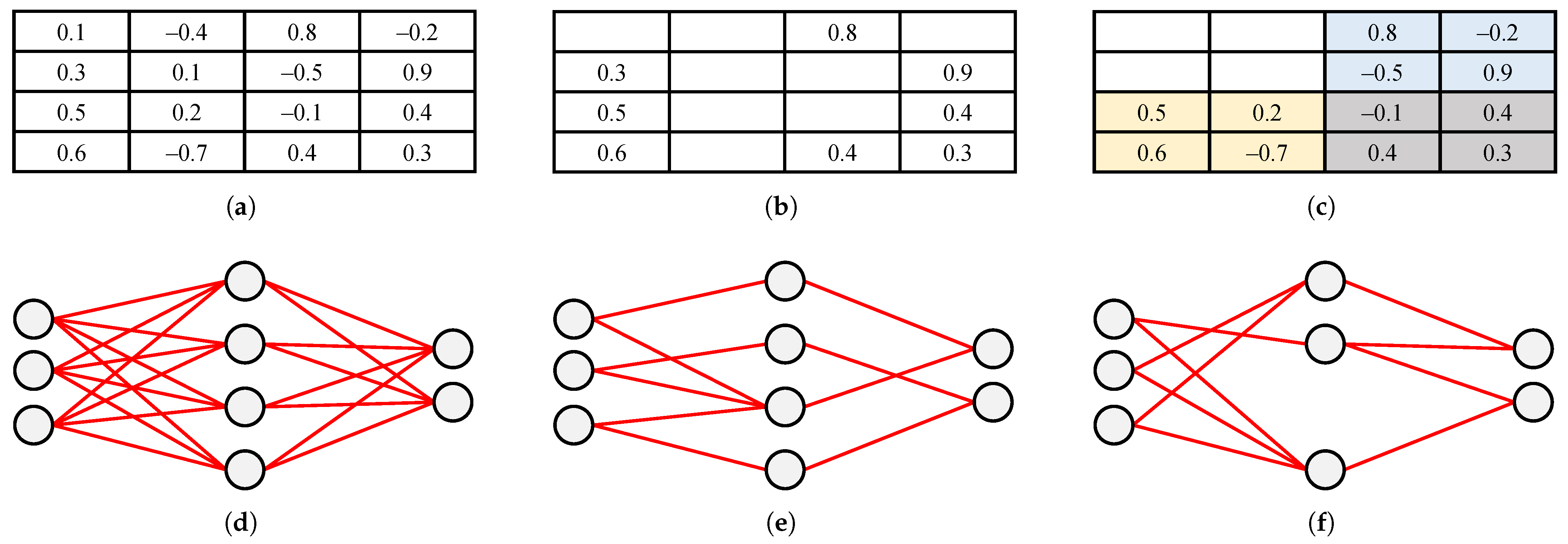

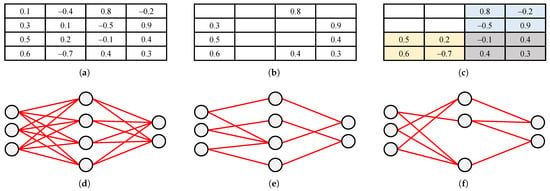

Figure 7 illustrates various pruning methods and their impact on network structures. Currently, there are three main strategies for creating sparse networks through pruning.

Figure 7.

Visualization of different pruning methods: (a) Original set of weights. (b) Unstructured pruning applied to lower half based on magnitude. (c) Structured pruning by removing 2 × 2 group with smallest average. (d) Basic neural network before pruning. (e) Unstructured pruning of (d) by removing of weights. (f) Neuron pruning applied to network in (d).
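Panels (b) and (c) of Figure 7 can be reproduced on a small matrix. The sketch below applies unstructured magnitude pruning (zeroing the half of individual weights with the smallest magnitude) and structured block pruning (zeroing the 2 × 2 tile with the smallest mean magnitude). The matrix values are hypothetical.

```python
import numpy as np

def magnitude_prune(W, fraction):
    """Unstructured: zero the given fraction of individual weights with the smallest |w|."""
    k = int(fraction * W.size)
    flat = W.ravel().copy()
    flat[np.argsort(np.abs(flat))[:k]] = 0.0
    return flat.reshape(W.shape)

def block_prune(W, block=2, n_blocks=1):
    """Structured: zero the n_blocks (block x block) tiles with the smallest mean |w|."""
    r, c = W.shape
    assert r % block == 0 and c % block == 0
    # tiles[i, j] = mean |w| of the tile at block row i, block column j.
    tiles = np.abs(W).reshape(r // block, block, c // block, block).mean(axis=(1, 3))
    out = W.copy()
    for flat_idx in np.argsort(tiles, axis=None)[:n_blocks]:
        i, j = np.unravel_index(flat_idx, tiles.shape)
        out[i * block:(i + 1) * block, j * block:(j + 1) * block] = 0.0
    return out

W = np.array([[0.9, -0.1, 0.4, 0.05],
              [0.2, 0.8, -0.03, 0.1],
              [-0.6, 0.3, 0.7, -0.5],
              [0.02, 0.4, 0.6, 0.9]])
print(magnitude_prune(W, 0.5))  # zeros scattered wherever |w| is small
print(block_prune(W))           # the top-right 2 x 2 tile is removed as a unit
```

The unstructured result keeps the most important individual weights but leaves an irregular pattern, while the structured result sacrifices some larger weights in exchange for a contiguous zero tile that hardware can skip cheaply.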

A. Magnitude-Based Approach: The contribution of each weight in a network is assumed to be proportional to its absolute value. To induce sparsity, weights below a predefined threshold are removed. Magnitude-based sparsity not only reduces model size but also accelerates convergence. In [45], a pruning strategy is applied incrementally across layers, where low-magnitude weights are pruned and the model is retrained to recover lost accuracy. Over several iterations, the remaining weights are fine-tuned. Using this approach, AlexNet achieves a reduction in weight count and a reduction in MAC operations. The pruning effect is more pronounced in fully connected layers—with reductions as high as —compared to in convolutional layers. Experimental results with AlexNet and VGGNet [46] show that about of weights can be pruned with fine-tuning and around without it.
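The iterative prune-and-retrain procedure can be sketched as a loop that gradually raises the sparsity level, ranks weights by magnitude within each layer (which amounts to a per-layer threshold), and keeps a mask so that pruned weights stay at zero during retraining. The linear sparsity schedule and the `finetune` callback are assumptions for illustration; `no_op_finetune` is a placeholder where a real pipeline would retrain the masked model.

```python
import numpy as np

def iterative_magnitude_prune(weights, final_sparsity, steps, finetune):
    """weights: dict of layer name -> array. finetune(weights, masks) -> weights."""
    masks = {n: np.ones(w.shape, dtype=bool) for n, w in weights.items()}
    for s in range(1, steps + 1):
        sparsity = final_sparsity * s / steps  # linear sparsity schedule
        pruned = {}
        for n, w in weights.items():
            k = int(sparsity * w.size)  # number of weights that must be zero in this layer
            keep = np.ones(w.size, dtype=bool)
            keep[np.argsort(np.abs(w), axis=None)[:k]] = False
            masks[n] &= keep.reshape(w.shape)  # once pruned, a weight stays pruned
            pruned[n] = w * masks[n]
        weights = finetune(pruned, masks)  # retrain to recover lost accuracy
    return weights, masks

def no_op_finetune(w, masks):
    return w  # placeholder: real retraining must keep masked weights at zero

rng = np.random.default_rng(0)
weights = {"fc1": rng.standard_normal((10, 10)), "fc2": rng.standard_normal((10, 4))}
weights, masks = iterative_magnitude_prune(weights, final_sparsity=0.8, steps=4,
                                           finetune=no_op_finetune)
print({n: float((w == 0).mean()) for n, w in weights.items()})
```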

Dynamic pruning methods have also been explored. In [47], a dynamic pruning approach reduces inference latency for transformer models on edge hardware. This method decreases both MAC operations and tensor size while preserving up to accuracy in keyword spotting tasks. When tolerating up to accuracy loss, the technique achieves up to reduction in operations and delivers up to faster multihead self-attention inference compared to a standard keyword transformer. Another example is ABERT [48], a pruning scheme tailored for BERT models, which automatically discovers optimal sub-networks under pruning ratio constraints. The deployment of the pruned network on a Xilinx Alveo U200 FPGA yields a performance gain over the baseline BERT model.

One key limitation of magnitude-based pruning lies in selecting an appropriate threshold. Using a single threshold across all layers often leads to suboptimal results, as parameter magnitudes vary between layers. While setting different thresholds per layer offers a solution, it is difficult to implement. Differentiable pruning [49] addresses this limitation by introducing a learnable pruning process. Additionally, ref. [50] introduces a Taylor series-based criterion to evaluate neuron importance in CNNs, formulating pruning as a joint optimization task over both network weights and pruning decisions. Gate Decorator [51], a global structured pruning method, scales channels in CNNs and removes filters when their scaling factors drop to zero.

B. Regularization-Based Approach: These techniques incorporate a regularization term into the loss function to enforce the desired level of sparsity [52]. As the model trains, it gradually evolves toward a sparse sub-network that meets the targeted sparsity ratio while maintaining high accuracy. A primary benefit of regularization-based approaches is that they preserve the integrity of the weights, resulting in better overall accuracy. However, a key drawback is that these methods often require a large number of iterations to fully converge.
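A compact way to see how a regularization term produces exact zeros is proximal gradient descent on a loss with an $L_1$ penalty. The sketch below uses a linear model for clarity (in a DNN the same penalty would be added to the training loss) and the soft-thresholding operator, which shrinks every weight and snaps small ones to exactly zero. The data, penalty strength `lam`, and step size are hypothetical; only two of the ten features carry signal.

```python
import numpy as np

def soft_threshold(w, t):
    # Proximal operator of t * ||w||_1: shrink weights, set small ones exactly to zero.
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)

def l1_regularized_fit(X, y, lam, lr=0.05, steps=2000):
    """Proximal gradient descent (ISTA) on mean-squared error + lam * ||w||_1."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(X)
        w = soft_threshold(w - lr * grad, lr * lam)
    return w

rng = np.random.default_rng(0)
X = rng.standard_normal((200, 10))
true_w = np.zeros(10)
true_w[[1, 4]] = [2.0, -3.0]  # only two informative features (hypothetical)
y = X @ true_w
w = l1_regularized_fit(X, y, lam=0.1)
print(np.round(w, 3))
```

The many iterations needed before the zero pattern settles illustrate the convergence cost noted above, while the surviving weights are only slightly shrunk rather than abruptly cut.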

C. Energy-Based Approach: The technique introduced in [53] prunes weights based on estimated energy consumption, factoring in the number of MAC operations, data sparsity, and memory hierarchy movement. It achieves a improvement in energy efficiency on AlexNet when compared to traditional magnitude-based pruning.

A significant challenge with unstructured pruning is that it results in sparse weight matrices, which complicates efficient hardware deployment. While this form of pruning is generally more effective in fully connected layers, it does not always translate into reduced latency. The irregular pattern of removed weights can hinder matrix multiplication performance. Other limitations include limited transferability across model architectures, inefficient on-chip memory access, a dependency on fine-tuning to recover accuracy, and imbalanced computational workloads that reduce parallelism.

Zero-skipping techniques [54] attempt to bypass unnecessary multiplications involving zeros created by pruning. However, skipping zeros in weights and activations can lower overall performance efficiency [55]. These methods also demand large on-chip memory to fully leverage hardware parallelism. To address this, weights can be stored in a dense format [56], reducing memory overhead. Map batching [57] further improves efficiency by reusing kernels in dense layers, avoiding frequent transfers to external memory.
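The basic idea of zero skipping can be sketched in a few lines of Python: a MAC is issued only when both operands are non-zero. This is a software illustration under our own assumptions, not the design of [54]; in hardware, the per-element zero test shown here is itself the control overhead that can erode the expected gains.

```python
def dense_dot(weights, activations):
    """Baseline: perform every MAC, zeros included. Returns (result, macs)."""
    acc, macs = 0.0, 0
    for w, a in zip(weights, activations):
        acc += w * a
        macs += 1
    return acc, macs


def zero_skip_dot(weights, activations):
    """Skip a MAC whenever the weight or the activation is zero."""
    acc, macs = 0.0, 0
    for w, a in zip(weights, activations):
        if w == 0 or a == 0:
            continue  # the product would be zero, so no MAC is issued
        acc += w * a
        macs += 1
    return acc, macs


weights = [0.5, 0.0, -1.0, 0.0, 2.0]      # zeros from pruning
activations = [1.0, 3.0, 0.0, 4.0, 0.5]   # zeros from ReLU
print(dense_dot(weights, activations))      # (1.5, 5)
print(zero_skip_dot(weights, activations))  # (1.5, 2)
```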

Despite its benefits, pruning entire filters or channels in CNNs often results in substantial accuracy degradation. Therefore, CNNs are commonly compressed using unstructured pruning, which creates sparsely populated weight tensors while preserving the original network architecture.

D. Hardware Support for Structured and Unstructured Pruning: While pruning effectively reduces model size and computation, its real-world impact on EI performance depends on the underlying hardware’s ability to exploit sparsity. Edge accelerators such as NPUs, FPGAs, and ASICs vary widely in their support for different sparsity patterns.

Structured pruning is typically more hardware-friendly because it preserves regularity in memory access and computation. Removing entire channels or filters allows compilers to simplify tensor shapes and generate optimized dense matrix operations without requiring major changes in the hardware pipeline. In contrast, unstructured pruning, which removes individual weights, often results in irregular memory access and control flow. Exploiting such sparsity demands dedicated support, such as sparse matrix storage, zero-skipping hardware, or specialized sparse compute units. These features are not widely available in most edge devices.
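The following Python sketch illustrates why structured pruning keeps computation dense: removing whole filters (here ranked by L1 norm, a common criterion we assume for illustration) simply shrinks the tensor, and the next layer's matching input-channel slices are dropped as well. The flat channel-major weight layout and the function names are hypothetical.

```python
def prune_filters(filters, keep):
    """Keep the `keep` output filters with the largest L1 norm.

    Each filter is a flat list of weights. Returns the kept channel indices
    and the remaining filters, which still form an ordinary dense tensor.
    """
    norms = [sum(abs(w) for w in f) for f in filters]
    ranked = sorted(range(len(filters)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original channel order
    return kept, [filters[i] for i in kept]


def shrink_next_layer(next_filters, kept, weights_per_channel):
    """Drop the input-channel slices of the next layer that read pruned channels.

    Next-layer filters are assumed to be stored channel-major, i.e.
    `weights_per_channel` consecutive weights per input channel.
    """
    k = weights_per_channel
    return [[w for c in kept for w in f[c * k:(c + 1) * k]] for f in next_filters]


filters = [[0.1, -0.1], [1.0, 0.5], [0.0, 0.05], [-0.7, 0.7]]
kept, smaller = prune_filters(filters, keep=2)
print(kept)  # [1, 3]
print(shrink_next_layer([[1, 2, 3, 4, 5, 6, 7, 8]], kept, 2))  # [[3, 4, 7, 8]]
```

No index metadata or zero tests remain after pruning; the compiler only sees smaller dense shapes.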

Table 2 summarizes how structured and unstructured sparsity are supported across representative edge accelerators. Given these trends, recent work emphasizes hardware-aware pruning, which aligns pruning granularity with accelerator architecture. Co-design efforts such as SCNN [58], EyerissV2 [59], and Cambricon-S [60] demonstrate how custom accelerators can natively support block- or vector-level sparsity, balancing flexibility and performance. However, these designs have yet to be widely adopted in commercial edge hardware.

Table 2.

Comparison of hardware support for sparsity patterns on edge accelerators. NA stands for not available.

2.2.2. Quantization

Most DL models rely on floating-point representations for weights and activations, which preserve detailed information but significantly slow down processing. However, as DNNs are designed to capture key features and are inherently robust to small variations, they can tolerate minor errors introduced by quantization. Quantization reduces the precision of model parameters and activations, minimizing the demand for high-cost floating-point operations. By decreasing the bit width, quantization enables faster and more efficient integer-based computations in hardware.

Quantization provides three main advantages: (i) it reduces memory consumption by representing data with fewer bits; (ii) it simplifies computations, leading to faster inference times; and (iii) it boosts energy efficiency. Data can be quantized either linearly or nonlinearly, and the bit width used for quantization can be fixed across the model or vary between layers, filters, weights, and activations. In fixed-bit quantization, a uniform bit width is applied across the network, whereas variable quantization allows different parts of the network to use different precisions.
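Linear (uniform) quantization can be sketched as an affine mapping between a real range and an integer grid. The minimal Python example below assumes per-tensor min/max calibration and an unsigned integer grid; production toolchains differ in details such as symmetric ranges and per-channel scales. Changing `num_bits` illustrates variable precision: fewer bits give a coarser grid and larger rounding error.

```python
def quant_params(x_min, x_max, num_bits=8):
    """Scale and zero point mapping the real range [x_min, x_max] onto 0..2**num_bits-1."""
    q_max = 2 ** num_bits - 1
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # range must contain 0
    scale = (x_max - x_min) / q_max or 1.0           # avoid a zero scale
    zero_point = round(-x_min / scale)               # integer that represents 0.0
    return scale, zero_point


def quantize(xs, scale, zero_point, num_bits=8):
    q_max = 2 ** num_bits - 1
    return [min(max(round(x / scale) + zero_point, 0), q_max) for x in xs]


def dequantize(qs, scale, zero_point):
    return [scale * (q - zero_point) for q in qs]


xs = [-1.0, 0.0, 0.5, 2.0]
scale, zp = quant_params(min(xs), max(xs))
q = quantize(xs, scale, zp)
print(q, dequantize(q, scale, zp))
# A lower bit width gives a coarser grid and larger rounding error.
scale4, zp4 = quant_params(min(xs), max(xs), num_bits=4)
print(quantize(xs, scale4, zp4, num_bits=4))
```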

Replacing 32-bit single-precision floating-point (FP32) operations with lower-precision formats—such as half-precision (FP16 [64]), reduced-precision (FP8 [65]), or fixed-point representations [66]—greatly simplifies DL computations. For example, 8-bit integer (INT8) addition and multiplication can be and more energy-efficient than 32-bit equivalents [67]. As a result, AlexNet can achieve under accuracy loss [68] when reduced to bits. Prior work has shown that using 8-bit activations and weights can deliver a memory reduction and a speedup without accuracy degradation [69,70].

Table 3 summarizes representative quantization methods, categorized by precision type—fixed-point, floating-point, variable, reduced, and nonlinear. Fixed-point methods such as weight fine-tuning [71] and contrastive-calibration [72] balance accuracy and simplicity, showing only top-1 accuracy loss. Floating-point methods (for example, FP8 [73]) outperform the traditional INT8 quantization with less than accuracy drop while supporting a wider range of quantized operations. Variable precision schemes such as Stripes [74] and Bit Fusion [75] provide bit-level flexibility but lack clear reports on accuracy metrics. Reduced precision approaches, including BinaryNet [76] and XNOR-Net [77], drastically lower bit widths to bits, gaining speedups at the cost of significant accuracy loss (up to ). Nonlinear methods such as Incremental Network Quantization (INQ) [78] and Deep Compression [79] use weight clustering and pruning strategies to achieve compression with minimal accuracy degradation.

Table 3.

A summary of representative studies on the quantization of deep learning models. FP32 denotes the 32-bit floating point representation, NA stands for not available, QAT is the abbreviation for quantization-aware training, and PTQ represents post-training quantization.

Binary networks represent an extreme form of quantization, replacing multiplications with 1-bit XNOR operations. Techniques such as BinaryConnect [83] and BinaryNet [76] compress model size up to and enable fast inference but suffer from large accuracy drops on complex datasets. To mitigate this, DoReFa-Net [86], QNN [87], and HWGQ-Net [88] use hybrid precision strategies, maintaining 1-bit weights with multi-bit activations. Ternary quantization methods such as the Ternary Weight Network [84] and Trained Ternary Quantization [85] introduce a zero weight level to reduce information loss, achieving accuracy drops ranging from to , where the negative value denotes an improvement over the full-precision baseline.
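The XNOR replacement for multiplication works because, for vectors with entries in {-1, +1}, the dot product equals twice the number of sign agreements minus the vector length. The Python sketch below packs signs into integer bits and uses XNOR plus a population count. It omits the real-valued scaling factors that methods such as XNOR-Net add, and the function names are hypothetical.

```python
def binarize(xs):
    """Sign binarization packed into an int: bit i = 1 means +1, 0 means -1."""
    bits = 0
    for i, x in enumerate(xs):
        if x >= 0:
            bits |= 1 << i
    return bits


def xnor_popcount_dot(a_bits, b_bits, n):
    """Dot product of two {-1, +1}^n vectors from their bit encodings.

    XNOR marks positions where the signs agree; each agreement contributes +1
    and each disagreement -1, so dot = 2 * matches - n.
    """
    mask = (1 << n) - 1
    matches = bin(~(a_bits ^ b_bits) & mask).count("1")
    return 2 * matches - n


a = [0.3, -1.2, 0.8, -0.1]
w = [-0.5, -0.4, 0.9, 0.2]
print(xnor_popcount_dot(binarize(a), binarize(w), len(a)))  # 0
```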

The reduced precision methods discussed above follow the quantization-aware training (QAT) strategy, which requires retraining the quantized model and is therefore time-consuming. An alternative approach is post-training quantization (PTQ), as demonstrated by SmoothQuant [89] and PD-Quant [90]. PTQ methods eliminate the need for retraining; instead, they rely on calibration using a small set of data samples. SmoothQuant enhances INT8 quantization for large language models by smoothing weight and activation distributions, achieving negligible accuracy loss without fine-tuning. PD-Quant introduces a prediction difference-based calibration approach tailored to ultra-low precision (2-bit) quantization, yielding a top-1 accuracy loss of only for extreme bit widths.
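The smoothing step behind SmoothQuant can be illustrated with a short Python sketch. Per input channel j, a factor s_j = max|X_j|^α / max|W_j|^(1−α) divides the activations and multiplies the corresponding weight row, so the product X·W is mathematically unchanged while activation outliers become easier to quantize. The toy matrices and α = 0.5 are assumptions chosen for illustration; in practice the division can be folded offline into the preceding layer's parameters.

```python
def smooth_scales(act_absmax, weight_absmax, alpha=0.5):
    """Factors s_j = max|X_j|**alpha / max|W_j|**(1 - alpha)."""
    return [a ** alpha / w ** (1 - alpha) for a, w in zip(act_absmax, weight_absmax)]


def apply_smoothing(X, W, s):
    """Divide activation column j by s_j and multiply weight row j by s_j.

    X is tokens x in_channels and W is in_channels x out_channels, so the
    product X @ W is unchanged while activation outliers are flattened.
    """
    X_s = [[x / s[j] for j, x in enumerate(row)] for row in X]
    W_s = [[w * s[j] for w in row] for j, row in enumerate(W)]
    return X_s, W_s


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


# Calibration activations with an outlier channel (column 1).
X = [[1.0, 40.0], [2.0, -60.0]]
W = [[0.5, -0.2], [0.1, 0.3]]
act_absmax = [max(abs(row[j]) for row in X) for j in range(len(W))]
weight_absmax = [max(abs(w) for w in row) for row in W]
X_s, W_s = apply_smoothing(X, W, smooth_scales(act_absmax, weight_absmax))
print(max(abs(x) for row in X_s for x in row))  # activation range shrinks from 60
```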

Hardware-friendly designs such as Stripes [74] and Loom [81] use bit-serial operations to reduce power by substituting multipliers with AND gates and adders. UNPU [80] leverages -bit weights with fixed activations, while Loom applies serial quantization to both weights and activations, trading latency for hardware efficiency. Bit Fusion [75] dynamically fuses processing elements to match operand bit widths, while BitBlade [82] removes shift–add overhead using direct bitwise summation.
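The bit-serial principle can be sketched in Python as a shift-and-add loop that consumes one bit of one operand per cycle. Here we serialize the weight and assume unsigned integers. Which operand is serialized, and how signs are handled, differs between real designs, so this is an illustration of the general idea rather than a model of any of the accelerators above.

```python
def bit_serial_mul(weight, activation, weight_bits=8):
    """Multiply an unsigned weight by an activation, one weight bit per cycle.

    Each cycle ANDs one weight bit with the activation (in hardware, one AND
    gate per activation bit), then shifts the partial product and accumulates.
    Latency grows linearly with weight_bits, so lower precision finishes sooner.
    Returns (product, cycles).
    """
    acc, cycles = 0, 0
    for b in range(weight_bits):
        bit = (weight >> b) & 1
        partial = activation if bit else 0  # AND of the bit with the activation
        acc += partial << b
        cycles += 1
    return acc, cycles


print(bit_serial_mul(13, 11, weight_bits=4))  # (143, 4)
```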

Nonlinear quantization techniques exploit weight and activation distributions to minimize quantization error, often using logarithmic spacing or lookup tables. INQ [78] applies iterative quantization with retraining to preserve performance, while Deep Compression [79] clusters and prunes weights for minimal accuracy degradation. These methods are especially effective for edge deployment due to their low calibration needs and robust accuracy.
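Logarithmic quantization can be sketched as rounding each weight to a signed power of two, so that multiplication becomes a bit shift. The Python example below is in the spirit of the power-of-two levels used by INQ, but it omits INQ's incremental partitioning and retraining; the exponent range is an assumption.

```python
import math


def log2_quantize(w, min_exp=-8, max_exp=0):
    """Round a weight to a signed power of two by rounding its log2 magnitude.

    Exponents are clamped at max_exp; weights whose rounded exponent falls
    below min_exp become zero. Multiplying by 2**e is then just a bit shift.
    """
    if w == 0:
        return 0.0
    e = round(math.log2(abs(w)))
    if e < min_exp:
        return 0.0
    e = min(e, max_exp)
    return math.copysign(2.0 ** e, w)


print([log2_quantize(w) for w in (0.3, -0.7, 1e-4, 3.0)])  # [0.25, -0.5, 0.0, 1.0]
```

Note that rounding happens in the log domain, so the chosen level is not always the nearest one in the linear domain; this matches the intent of spacing levels densely near zero, where most weights lie.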

While binarization suits small datasets or neuromorphic chips, it remains challenging for large-scale tasks due to accuracy loss. Accuracy improvements often rely on keeping the first and last layers in higher precision or combining multiple quantization strategies.

Quantization has also been extended to RNNs and Transformers. SpeechTransformer [91] and vision transformers [92] benefit from integer-only quantization, achieving reductions in parameter count and inference speedups. Despite these advantages, quantization introduces trade-offs including precision loss, architectural constraints, and gradient computation challenges. Moreover, quantized models have been shown to be more vulnerable to distribution shifts compared to their full-precision counterparts [93]. This vulnerability, however, can be mitigated through carefully designed post-training quantization strategies.

In summary, quantization remains a powerful tool for model optimization, with various techniques offering distinct trade-offs. Table 3, along with the accompanying comparison and deployment analysis, offers a roadmap for selecting suitable methods based on accuracy, hardware efficiency, and deployment constraints.

2.2.3. Multiply-and-Accumulate Optimization

Multiplication operations account for a substantial portion of the computational burden in DL algorithms, particularly during inference. Therefore, techniques that reduce or eliminate multiplications can dramatically improve efficiency—especially in resource-limited edge environments.

One effective approach is DiracDeltaNet [94], which replaces spatial convolutions with lightweight shift operations and depthwise convolutions followed by efficient 1 × 1 convolutions. This strategy reduces the parameter count by a factor of and the number of operations by for the VGG-16 model, while maintaining competitive accuracy on the ImageNet benchmark. Its structured pruning and minimal reliance on multiplications make it particularly appealing for FPGA-based deployments.

A more radical strategy is proposed in [95], where all multiplications and nonlinear functions are precomputed and stored in LUTs. During inference, the model relies solely on fixed-point additions and table indexing. Activation functions are replaced with quantized approximations. This technique eliminates floating-point operations entirely, achieving extremely low computational overhead. However, it comes at the cost of potentially large memory requirements due to LUT size, which may limit scalability for more complex models.
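The lookup-table idea can be illustrated with a small Python sketch. Every product of a quantized weight level and a quantized activation level is precomputed, and inference then uses only indexing and additions. The 4-bit signed weights and 4-bit unsigned activations are assumptions. The table size, the product of the two alphabet sizes, shows why memory grows quickly with bit width: 256 entries here, 65,536 for 8-bit operands.

```python
def build_product_lut(weight_levels, act_levels):
    """Precompute every weight x activation product for small quantized alphabets."""
    return {(w, a): w * a for w in weight_levels for a in act_levels}


def lut_dot(weights, activations, lut):
    """Dot product using only table lookups and additions (no multiplier)."""
    acc = 0
    for w, a in zip(weights, activations):
        acc += lut[(w, a)]
    return acc


lut = build_product_lut(range(-8, 8), range(0, 16))  # 4-bit signed x 4-bit unsigned
print(len(lut), lut_dot([3, -2, 7], [1, 5, 0], lut))  # 256 -7
```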

In contrast, the Alphabet Set Multiplier (ASM) introduced in [96] reduces multiplication cost by reusing partial products. It precomputes a compact set of “alphabets” (small multiplication results), which are then dynamically combined using shifters, adders, and selection units to reconstruct full multiplications. ASM is particularly effective for fixed-weight models, where computation can be shared across multiple operations. This method strikes a balance between efficiency and accuracy, making it a strong candidate for embedded systems and ASIC-based deployments.
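The partial-product reuse behind ASM can be sketched in Python. The products of an input x with a small set of "alphabets" are computed once, and each 4-bit quartet of a weight is then realized by selecting one alphabet product, shifting it, and adding. We assume the full set of odd 4-bit alphabets {1, 3, ..., 15} so that every quartet is representable; smaller alphabet sets reduce hardware further at the cost of restricting the allowed weight values. All names are hypothetical.

```python
ALPHABETS = (1, 3, 5, 7, 9, 11, 13, 15)  # assumption: every odd 4-bit value


def alphabet_bank(x):
    """Precompute alphabet * x once; all weights multiplying x can share it."""
    return {a: a * x for a in ALPHABETS}


def decompose_nibble(n):
    """Write a non-zero 4-bit value n as alphabet << shift."""
    shift = 0
    while n % 2 == 0:
        n //= 2
        shift += 1
    return n, shift


def asm_multiply(weight, bank, weight_bits=8):
    """weight * x using only select, shift, and add on the precomputed bank."""
    acc = 0
    for q in range(0, weight_bits, 4):
        nibble = (weight >> q) & 0xF
        if nibble == 0:
            continue  # nothing to add for an all-zero quartet
        alphabet, shift = decompose_nibble(nibble)
        acc += bank[alphabet] << (shift + q)
    return acc


bank = alphabet_bank(13)
print([asm_multiply(w, bank) for w in (6, 100, 255)])  # [78, 1300, 3315]
```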

For edge deployment, DiracDeltaNet [94] offers the best overall balance of performance, hardware simplicity, and model accuracy. However, for applications requiring extremely low power consumption and where memory is not a bottleneck, the LUT-based approach [95] may be preferable. ASM [96] is ideal in scenarios where custom hardware design is feasible and shared computation can be exploited.

2.2.4. Knowledge Distillation

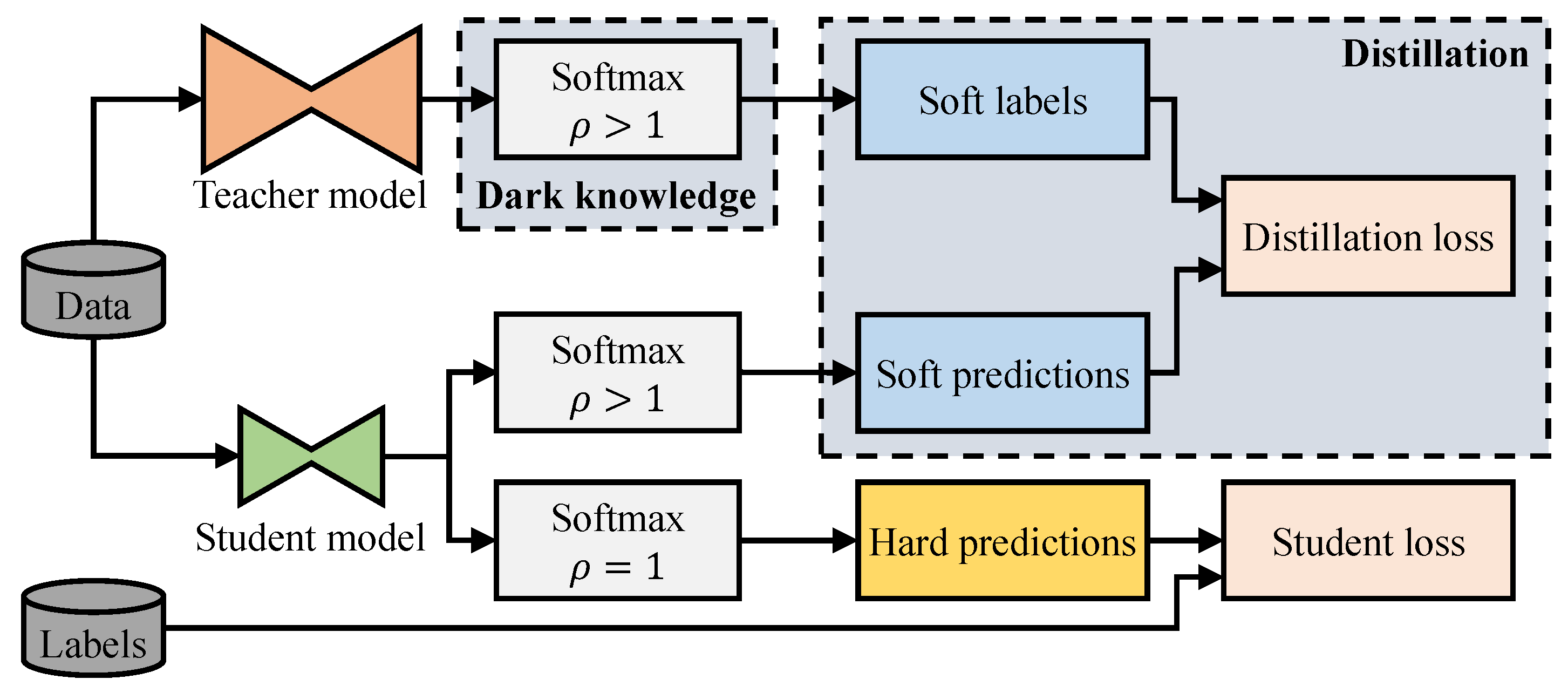

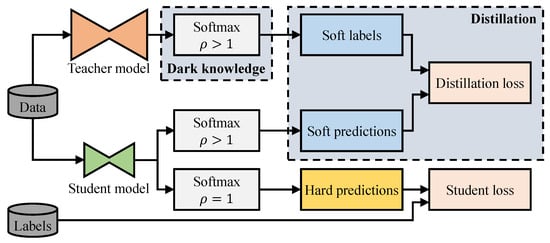

Knowledge distillation (KD) is a widely used model compression strategy that enables a lightweight DL model to emulate the behavior and performance of a larger, more accurate model [97]. The typical setup follows a teacher–student paradigm, in which a large, high-capacity teacher network guides the training of a smaller student model designed for efficient inference on constrained hardware platforms. An overview of this process is shown in Figure 8.

Figure 8.

Illustration of knowledge distillation. denotes the softmax temperature.

The essence of KD lies in transferring learned representations from teacher to student via a carefully designed loss function. Originally proposed in [98] and extended in [99], the approach often involves the student learning to match the teacher’s softmax output probabilities, which provide richer supervision than hard labels. For example, in speech recognition tasks, KD has yielded a increase in accuracy, enabling the student to approach the performance of a large ensemble of teacher models. Beyond soft labels, KD techniques have evolved to incorporate internal features—such as intermediate activations [100], neuron outputs [101], or feature maps [102]—which help the student to gain deeper insights from the teacher’s representations.
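A minimal Python sketch of the soft-label loss described above follows. It uses the widely known formulation of Hinton et al.: a KL divergence between temperature-softened teacher and student distributions, scaled by T², blended with ordinary cross-entropy on the hard label. The weighting `alpha` and temperature `T` values are illustrative assumptions, and feature-based variants are not shown.

```python
import math


def softmax(logits, T=1.0):
    """Temperature-scaled softmax; a larger T produces a softer distribution."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, label, T=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, label)."""
    p_t = softmax(teacher_logits, T)
    p_s = softmax(student_logits, T)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    hard_ce = -math.log(softmax(student_logits)[label])
    return alpha * T * T * kl + (1 - alpha) * hard_ce


teacher = [1.0, 2.0, 3.0]
print(softmax(teacher, T=1.0), softmax(teacher, T=4.0))  # the second is flatter
print(kd_loss([3.0, 1.0, 0.2], teacher, label=2))
```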

Numerous strategies have emerged to tailor student model architecture for edge deployment. For example, DeepRebirth [103] compresses networks by merging convolutional layers with adjacent non-parametric layers (such as pooling and normalization), followed by layerwise fine-tuning, resulting in a speedup and reduction in memory usage. In contrast, Rocket Launching [104] synchronously trains teacher and student networks to accelerate convergence, reducing training overhead.

In natural language processing (NLP) for edge environments, KD has proven highly effective. For example, TinyBERT [105], a distilled version of BERT, retains of the original accuracy while shrinking the model size by and speeding up inference by . Similarly, sentence-level distillation techniques [106] have enabled the creation of lightweight sentence transformers that maintain competitive performance.

Student model design can also trade off width for depth, as in FitNets [102], where a deeper, narrower student learns from intermediate hints provided by the teacher. This setup matches the teacher’s performance on CIFAR-10 while benefiting from reduced model size and increased inference speed.

Further developments include mutual learning [107] (students co-learn), teacher–assistant frameworks [108] (a multi-stage distillation process), and self-distillation [109] (models teach themselves using their own past outputs). KD has even been extended to dataset distillation, where small synthetic datasets replicate the training behavior of full datasets, significantly reducing training cost and data storage needs [110,111].

Among the KD-based methods, TinyBERT [105] stands out as the most suitable for edge deployment in NLP tasks, offering a compelling balance between size, speed, and accuracy. In vision tasks, DeepRebirth [103] and FitNets [102] provide efficient alternatives with strong inference performance and low overhead. Care must nonetheless be taken when deploying KD-trained models across edge environments, because of their sensitivity to distribution shifts and their reliance on teacher-specific behavior. Even so, KD remains a powerful and versatile compression technique, particularly when inference efficiency and model compactness are paramount.

2.2.5. Adaptive Optimization

Adaptive DL compression techniques adjust computational workloads during inference in response to the complexity of each input, enhancing efficiency while preserving performance. Unlike static compression methods such as quantization, pruning, or knowledge distillation, which apply uniform reductions regardless of input difficulty, adaptive approaches scale computation to match the demands of each input. For example, processing a simple background frame in a surveillance video requires far less computation than detecting objects in a crowded scene. Similarly, basic sentence comprehension in NLP may not require the full model depth needed for syntactically ambiguous inputs.

One such technique is frame skipping in video analysis. NoScope [112] employs a lightweight difference detector to identify significant changes between consecutive frames, allowing the system to bypass redundant computations when frames are similar. This approach has achieved up to speedup in binary classification tasks over fixed-angle webcam and surveillance video while maintaining accuracy within of state-of-the-art networks.
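The frame-skipping logic can be sketched in Python with a simple mean-squared-error difference detector. The expensive model runs only when a frame differs sufficiently from the last frame it actually processed; otherwise that frame's label is reused. The MSE metric, the comparison against the last processed frame, and the toy model are our assumptions, not NoScope's exact configuration.

```python
def mse(frame_a, frame_b):
    """Mean squared pixel difference between two equally sized flat frames."""
    return sum((a - b) ** 2 for a, b in zip(frame_a, frame_b)) / len(frame_a)


def classify_stream(frames, expensive_model, threshold):
    """Call `expensive_model` only when a frame differs enough from the last
    processed frame; otherwise reuse that frame's label. Returns (labels, calls)."""
    labels, calls = [], 0
    ref_frame, ref_label = None, None
    for frame in frames:
        if ref_frame is None or mse(frame, ref_frame) > threshold:
            ref_frame, ref_label = frame, expensive_model(frame)
            calls += 1
        labels.append(ref_label)
    return labels, calls


frames = [[0, 0, 0], [0, 0, 1], [9, 9, 9], [9, 9, 8]]
model = lambda f: "busy" if sum(f) > 10 else "empty"  # stand-in for a large CNN
print(classify_stream(frames, model, threshold=1.0))  # two model calls for four frames
```

Comparing against the last processed frame, rather than the immediately preceding one, prevents slow gradual changes from accumulating unnoticed.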

Another method involves using dual-model systems comprising a “small” and a “large” network [113]. The small model processes inputs first, and if its confidence in the prediction is high, the result is accepted. If not, the input is passed to the larger, more complex model for further processing. This strategy can lead to significant energy savings, up to in digit recognition tasks, provided the larger model is infrequently activated.
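The small/large cascade can be expressed as a short Python function. It assumes that both models return class probabilities and that "confidence" means the maximum probability compared against a fixed threshold; [113] may define confidence differently.

```python
def cascade_predict(x, small_model, large_model, confidence_threshold=0.9):
    """Big/little inference: accept the small model's answer when it is confident.

    Both models are assumed to return a list of class probabilities.
    Returns (predicted_class, used_large_model).
    """
    probs = small_model(x)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] >= confidence_threshold:
        return best, False
    probs = large_model(x)  # fall back to the expensive model
    return max(range(len(probs)), key=probs.__getitem__), True


small = lambda x: [0.95, 0.05] if x == "easy" else [0.55, 0.45]
large = lambda x: [0.1, 0.9]
print(cascade_predict("easy", small, large), cascade_predict("hard", small, large))
```

The energy savings depend on how often the second branch is taken, which is why the approach pays off only when most inputs are easy.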

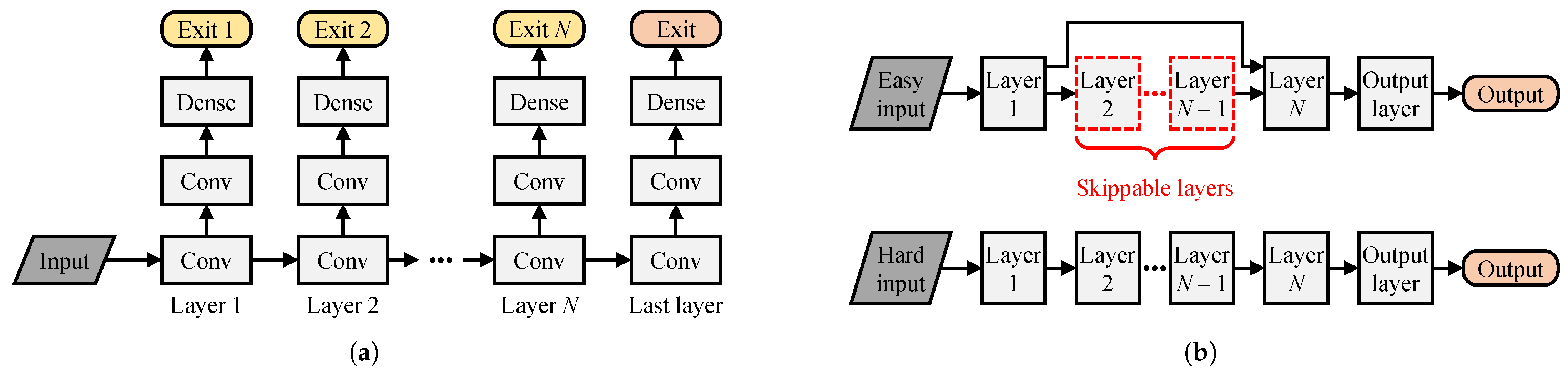

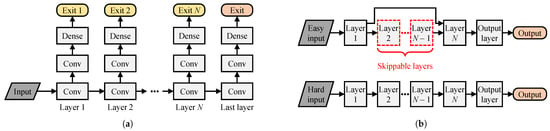

Early exiting (illustrated in Figure 9a) is a technique where intermediate classifiers are added to a DNN, allowing the model to terminate inference early if a confident prediction is made at an earlier stage. BranchyNet [114] is an implementation of this concept, adding multiple exit points to the base network and using entropy of the softmax output to decide whether to exit early or continue processing. This method reduces latency and computational load, especially when deeper layers are seldom needed.

Figure 9.

An illustration of the early exiting technique (a) and the SkipNet architecture (b).
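The entropy-based exit rule used by BranchyNet can be sketched in Python as follows. The backbone stages, exit heads, and per-exit thresholds are hypothetical callables and values; the essential logic is that inference stops at the first exit whose softmax entropy falls below its threshold, and the final exit always returns.

```python
import math


def entropy(probs):
    """Shannon entropy (nats) of a probability vector; low entropy means confident."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def early_exit_infer(x, stages, exit_heads, thresholds):
    """Run backbone stages in order and stop at the first confident exit.

    stages[i] maps features to features, exit_heads[i] maps features to class
    probabilities, and thresholds[i] is the entropy cutoff for exit i.
    Returns (predicted_class, exit_index).
    """
    h = x
    for i, (stage, head) in enumerate(zip(stages, exit_heads)):
        h = stage(h)
        probs = head(h)
        if i == len(stages) - 1 or entropy(probs) < thresholds[i]:
            return max(range(len(probs)), key=probs.__getitem__), i


stages = [lambda h: h + 1, lambda h: h * 2]
heads = [lambda h: [0.5, 0.5] if h < 5 else [0.98, 0.02], lambda h: [0.1, 0.9]]
print(early_exit_infer(10, stages, heads, [0.2, 0.0]))  # exits at the first branch
print(early_exit_infer(1, stages, heads, [0.2, 0.0]))   # runs the full network
```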

Extending early exiting to distributed systems, DDNNs [115] incorporate exit points at various levels, such as cloud, edge server, and end-device. This hierarchical approach allows for efficient processing by exiting at the most appropriate level based on the input’s complexity. Similarly, DeepIns [116] applies this concept in industrial settings, collecting data via edge sensors and determining processing at the edge or cloud level based on confidence scores.

SkipNet [117] (illustrated in Figure 9b) introduces skippable tiny NNs, or gates, within the architecture, enabling the model to bypass certain layers during inference based on input characteristics. This dynamic routing conserves computational resources with minimal impact on accuracy. In practical applications, such as smart assistants and wearable devices, hierarchical inference is employed where a lightweight model handles initial processing, and more complex analysis is offloaded to cloud-based models as needed [118,119].

Table 4 summarizes representative adaptive model compression methods, highlighting their design objectives, underlying architectures, compression techniques, performance outcomes (lossless, lossy, or enhanced), and experimental results. Table 5 lists popular lightweight DL models and compares them in terms of parameter count and computational cost, most of which stem from applying such compression methods to large-scale DL models.

Adaptive compression methods offer a nuanced and intelligent approach to optimizing inference workloads, with each method presenting a distinct balance between computational efficiency and accuracy retention:

- Most energy-efficient: Dual-model systems [113] and early exiting methods [114,115] stand out, offering substantial energy savings with little accuracy compromise.

- Best for latency-sensitive edge deployment: SkipNet [117] and BranchyNet [114] reduce unnecessary computation in real time, making them well-suited for wearable devices and real-time analytics.

- Most suitable for dynamic environments: DDNNs [115] offer scalable, hierarchical processing ideal for industrial and IoT scenarios, albeit with increased system complexity.

Overall, early exiting, as in BranchyNet [114], emerges as a balanced choice for edge deployment, combining low latency, energy savings, and minimal accuracy loss, especially when computation budgets vary with input complexity.

2.3. Sparsity Handling for Efficient Edge Inference

Pruning has been shown to eliminate over of weights in dense layers and about in convolutional layers of typical models, leading to a substantial weight sparsity [45]. Even compact models such as MobileNetV2 [16] and EfficientNet-B0 [120] benefit substantially, achieving sparsity, translating into to reductions in MACs [121]. In natural language processing, pruning large-scale models such as Transformer [122] and BERT [123] yields [124] and [125] sparsity, respectively, enabling to reductions in MACs. For example, SpAtten [126] exploits structural sparsity in attention mechanisms to reduce both computation and memory access by and , respectively.

Recurrent models such as GRU [127] and LSTM [128] also respond well to pruning, tolerating over weight sparsity with negligible accuracy loss. Techniques such as MASR [129] introduce activation sparsity of approximately by modifying normalization schemes, while DASNet [130] leverages dynamic sparsification to reduce MACs by in AlexNet and in MobileNet, incurring less than accuracy loss.

Table 4.

Summary of representative model compression techniques. ↑ and ↓ denote “increase” and “decrease”, respectively.


| Type | Technique | Base Model | Objective | Results | Ref. |
|---|---|---|---|---|---|
| Lossy | Binarization | AlexNet | Speedup, model size ↓ | faster, smaller | [77] |
| | Pruning | AlexNet | Energy ↓ | energy ↓ | [53] |
| | Pruning | VGG-16 | Speedup | faster | [50] |
| | Pruning | NIN | Speedup | faster | [131] |
| | Low-rank approximation | VGG-S | Energy ↓ | energy ↓ | [132] |
| | Low-rank approximation | CNN | Speedup | faster | [133] |
| | Low-rank approximation | CNN | Speedup | faster | [134] |
| | Knowledge distillation | GoogLeNet | Speedup, memory ↓ | faster, memory ↓ | [103] |
| | Memory access optimization | VGG | Power, performance trade-off | accuracy | [135] |
| Lossless | Pruning, quantization, Huffman coding | VGG-16 | Model size ↓ | smaller | [79] |
| | Pruning | VGG | Computation ↓ | computation ↓ | [136] |
| | Pruning | AlexNet | Memory ↓ | memory ↓ | [45] |
| | Compact network design | AlexNet | Model size ↓ | smaller | [17] |
| | Kernel separation, low-rank approximation | AlexNet, VGG | Speedup, memory ↓ | faster, memory ↓ | [137] |
| | Low-rank approximation | VGG-16 | Computation ↓ | computation ↓ | [138] |
| | Knowledge distillation | WideResNet | Memory ↓ | memory ↓ | [139] |
| | Adaptive optimization | MobileNet | Speedup | faster | [140] |
| Enhanced | Quantization | Faster R-CNN | Model size ↓ | smaller | [70] |
| | Pruning | ResNet | Speedup | faster | [141] |
| | Compact network design | SqueezeNet | Model size ↓ | smaller | [142] |
| | Compact network design | YOLOv2 | Speedup, model size ↓ | faster, smaller | [143] |
| | Knowledge distillation | ResNet | Accuracy ↑ | | [144] |
| | Knowledge distillation, regularization | NIN | Memory ↓ | memory ↓ | [145] |
| | Dataflow optimization | DNN | Speedup, energy ↓ | faster, energy ↓ | [146] |

Table 5.

Summary of representative lightweight models, sorted by number of operations.


| Model | Weight Count (millions) | Operation Count (billions) |
|---|---|---|
| MobileNetV3-Small [29] | 2.9 | 0.11 |
| MNASNet-Small [33] | 2.0 | 0.14 |
| CondenseNet [21] | 2.9 | 0.55 |
| MobileNetV2 [16] | 3.4 | 0.60 |
| ANTNets [22] | 3.7 | 0.64 |
| NASNet-B [147] | 5.3 | 0.98 |
| MobileNetV1 [14] | 4.2 | 1.15 |
| PNASNet [140] | 5.1 | 1.18 |
| SqueezeNext [19] | 3.2 | 1.42 |
| SqueezeNet [17] | 1.25 | 1.72 |

In CNNs, sparsity is also induced during both training and inference stages. For example, MobileNetV2 [16] can skip of MACs due to activation sparsity. Overall, pruning can lead to MAC reductions and significant energy savings, especially when paired with sparsity-aware hardware [148].

2.3.1. Hardware Implications: Static Versus Dynamic Sparsity

Pruning methods require hardware accelerators that can effectively exploit sparsity, which can be structured or unstructured and processed using static or dynamic techniques:

- Static sparsity: Devices such as Cambricon-X [149] pre-identify zero weights during training and store the non-zero weights together with their indices, enabling efficient skipping of useless computations. However, these devices often require complex memory indexing that limits their real-time adaptability.

- Dynamic sparsity: Accelerators such as ZENA [150], SNAP [151], and EyerissV2 [59] detect and process non-zero elements at runtime. While more flexible, they require sophisticated control logic, increasing hardware complexity and potentially raising latency if not optimized properly.

Structured sparsity, such as block sparsity [152] or vectorwise block sparsity [153], alleviates indexing challenges by grouping non-zero elements under shared indices. This improves processing element (PE) utilization and memory bandwidth, offering a better trade-off between compression and hardware simplicity.
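A minimal Python sketch of block sparsity follows: only non-zero block × block tiles are stored, each with a single pair of block indices, and the matrix-vector product processes each stored tile as a small dense operation. Matrix dimensions are assumed to be multiples of the block size, and the function names are hypothetical.

```python
def to_block_sparse(matrix, block):
    """Keep only the non-zero block x block tiles of a dense matrix.

    Returns a list of (block_row, block_col, tile); one index pair is shared by
    all block * block values in the tile. Dimensions must be multiples of block.
    """
    n_rows, n_cols = len(matrix), len(matrix[0])
    blocks = []
    for br in range(0, n_rows, block):
        for bc in range(0, n_cols, block):
            tile = [row[bc:bc + block] for row in matrix[br:br + block]]
            if any(v != 0 for r in tile for v in r):
                blocks.append((br // block, bc // block, tile))
    return blocks


def block_sparse_matvec(blocks, x, n_rows, block):
    """y = A @ x touching only the stored tiles; each tile is a small dense GEMV."""
    y = [0.0] * n_rows
    for br, bc, tile in blocks:
        for i, row in enumerate(tile):
            y[br * block + i] += sum(v * x[bc * block + j] for j, v in enumerate(row))
    return y


A = [[1, 2, 0, 0], [3, 4, 0, 0], [0, 0, 0, 0], [0, 0, 5, 0]]
blocks = to_block_sparse(A, 2)
print(len(blocks), block_sparse_matvec(blocks, [1, 1, 1, 1], 4, 2))
```

Two index pairs describe the seven stored values here, whereas per-element indexing would need one index per non-zero.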

2.3.2. Neuron-Level Sparsity and Selective Execution

Neuron-level pruning focuses on reducing unnecessary computation by skipping the evaluation of less important neurons. Techniques presented in [154,155] identify negative pre-activations (rendered null by ReLU) early in the pipeline or use predictors to compute only selected neuron outputs. Coupled with bit-serial processing, these techniques allow adaptive precision and reduce power consumption per operation.

Some systems integrate dual sparsity, leveraging both weight and neuron-level sparsity simultaneously for maximum reduction in workload, as demonstrated in [58,60,156].

2.3.3. Sparse Weight Compression and Memory Efficiency

Efficient coding of sparse parameters is critical for minimizing memory access overhead [79]:

- Compressed Sparse Row (CSR) and Compressed Sparse Column (CSC) formats are common. CSC, as used in EyerissV2 [59] and SCNN [58], offers better memory coalescing and access patterns than CSR [157].

- EIE [156] and SCNN [58] handle compressed dense and convolutional layers, respectively, using address-based MAC skipping and interconnection meshes for partial sum aggregation.

- Cnvlutin [158] and NullHop [159] focus on activation compression, using formats such as Compressed Image Size (CIS) and Run-Length Coding (RLC) to reduce both computation and data movement.
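To make the CSC format concrete, the Python sketch below encodes a sparse weight matrix as non-zero values, their row indices, and column pointers, and then computes a matrix-vector product. Because each column corresponds to one input activation, a zero activation lets the loop skip an entire stored column, so weight and activation sparsity are exploited together. This uses plain Python lists and ignores the compact index encodings that hardware implementations rely on.

```python
def to_csc(matrix):
    """Compressed Sparse Column: non-zero values, their row indices, column pointers."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    values, row_idx, col_ptr = [], [], [0]
    for c in range(n_cols):
        for r in range(n_rows):
            if matrix[r][c] != 0:
                values.append(matrix[r][c])
                row_idx.append(r)
        col_ptr.append(len(values))  # column c occupies values[col_ptr[c]:col_ptr[c+1]]
    return values, row_idx, col_ptr


def csc_matvec(values, row_idx, col_ptr, x, n_rows):
    """y = W @ x, skipping every column whose input activation x[c] is zero."""
    y = [0.0] * n_rows
    for c, xc in enumerate(x):
        if xc == 0:
            continue
        for k in range(col_ptr[c], col_ptr[c + 1]):
            y[row_idx[k]] += values[k] * xc
    return y


W = [[0, 2, 0], [1, 0, 0], [0, 3, 4]]
values, row_idx, col_ptr = to_csc(W)
print(values, row_idx, col_ptr)                        # [1, 2, 3, 4] [1, 0, 2, 2] [0, 1, 3, 4]
print(csc_matvec(values, row_idx, col_ptr, [5, 0, 1], 3))  # [0.0, 5.0, 4.0]
```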

The UCNN accelerator [160] generalizes sparsity beyond zeros by reusing repeated weights, which not only reduces memory usage but also minimizes redundant computation, an essential trait for edge-oriented accelerators.
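The arithmetic identity that weight-repetition reuse exploits can be shown in a few lines of Python: activations that share the same weight value are summed first, so each distinct non-zero weight costs a single multiplication. This is a sketch of the underlying factorization only, not UCNN's dataflow or hardware organization.

```python
from collections import defaultdict


def dot_with_weight_reuse(weights, activations):
    """Factorized dot product exploiting repeated weight values.

    Activations sharing a weight value are added together first, so each
    distinct non-zero weight needs one multiplication; zero weights are skipped.
    Returns (result, number_of_multiplications).
    """
    groups = defaultdict(float)
    for w, a in zip(weights, activations):
        if w != 0:
            groups[w] += a
    result = sum(w * s for w, s in groups.items())
    return result, len(groups)


print(dot_with_weight_reuse([2, -1, 2, 0, 2, -1], [1, 2, 3, 4, 5, 6]))  # (10.0, 2)
```

Six MACs collapse to two multiplications plus additions, which is the saving that grows with heavily quantized weights, since few distinct values remain.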

2.3.4. Conclusions

Pruning, in all its forms, offers substantial gains in computation and energy savings. However, structured pruning with hardware-aware block sparsity and sparse weight compression using a CSC format provide the best balance between efficiency and deployability on edge platforms. These methods reduce memory usage and computational cost while simplifying accelerator design, unlike unstructured pruning, which poses challenges in memory access and load balancing.

For edge deployment, structured block sparsity combined with lightweight hardware accelerators—such as EyerissV2 [59] and SCNN [58]—is the most suitable approach, delivering consistent performance gains with minimal hardware complexity and excellent energy efficiency.

3. Software Tools and Hardware Platforms for Edge Inference

3.1. Software Tools

3.1.1. Compression–Compiler Co-Design

Compression-compiler co-design has recently gained momentum as a promising approach to boost EI performance on general-purpose edge devices. This method synergistically combines DL model compression with compiler-level optimization, enabling more efficient deployment by reducing model footprint and accelerating inference. By translating high-level DL constructs into hardware-efficient instructions, compilers play a pivotal role in optimizing runtime performance.

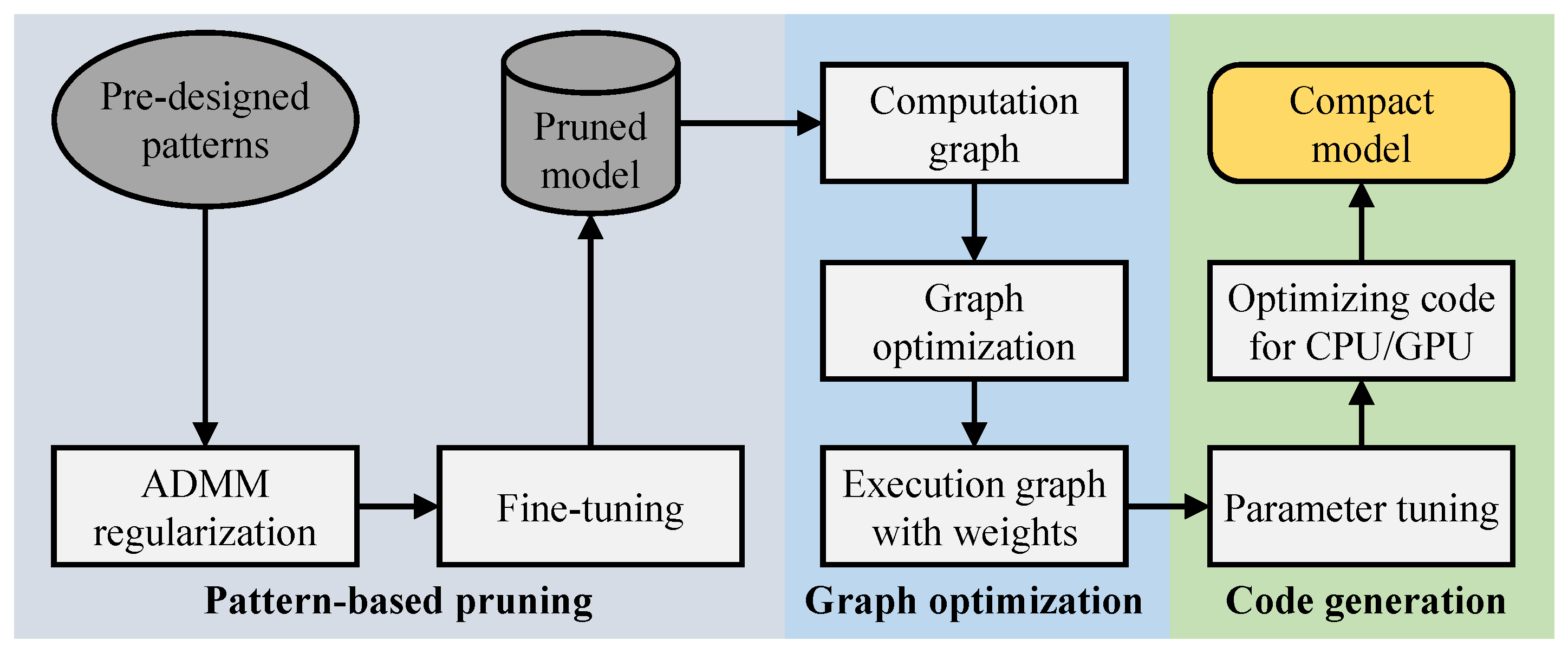

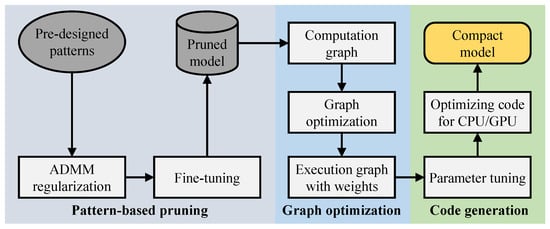

Although this co-design paradigm remained underexplored for some time, recent advancements have demonstrated its potential to significantly improve energy efficiency and reduce latency. Notable examples include PCONV [161], PatDNN [162], and CoCoPIE [163], all of which jointly explore model compression and compiler design. In the compression phase, structured pruning is used to produce hardware-friendly weight patterns. During compilation, instruction-level and thread-level parallelism are harnessed to generate optimized executable code.

MCUNet [164] exemplifies this integration by combining compact model architectures with compiler optimizations that eliminate redundant instructions and memory accesses. Similarly, CoCoPIE introduces a specialized software framework that co-optimizes pruning and quantization in tandem with compiler behavior. As shown in Figure 10, CoCoPIE introduces a custom intermediate representation (IR) that explicitly encodes compression patterns—particularly structured sparsity—during the compilation phase. This IR captures both data-level and compute-level sparsity patterns and feeds them into downstream scheduling and code generation stages.

Figure 10.

Illustration of compression–compiler co-design framework used in CoCoPIE [163].

CoCoPIE’s IR is designed to preserve and exploit structured pruning decisions made during model compression. Each convolutional or matrix multiplication layer is associated with metadata that encodes:

- Pruning masks or block patterns, such as 2D pruning windows;

- Retained kernel/channel indices for efficient memory access;

- Execution hints that guide instruction selection and tiling strategies.

During compilation, these annotations inform a custom scheduling engine that avoids loading or executing pruned weights, eliminating redundant memory fetches and arithmetic operations. The IR supports composability, allowing the compiler to treat a CNN as a sequence of reusable, pruned building blocks. This representation facilitates hierarchical compression and enables pattern-matching passes for aggressive optimization, such as fusing pruned layers or collapsing zero-weight regions during register allocation.
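The effect of pattern metadata on generated code can be illustrated with a small Python sketch. Each pruned 3 × 3 kernel keeps a fixed set of positions, and the convolution loop visits only those offsets, so pruned weights are never loaded or multiplied. The pattern table, its names, and the four-entry pattern size are hypothetical; this is not CoCoPIE's actual IR or code generator.

```python
# Hypothetical pattern metadata: each pruned 3x3 kernel keeps 4 of 9 positions.
PATTERNS = {
    "pattern_a": ((0, 1), (1, 0), (1, 1), (1, 2)),
    "pattern_b": ((0, 0), (1, 1), (2, 2), (0, 2)),
}


def pattern_conv2d(image, pattern_name, weights):
    """Valid 3x3 convolution (cross-correlation) that only visits kept positions.

    `weights` holds one value per offset in the pattern, so pruned positions
    are never loaded or multiplied; a compiler could fully unroll this loop.
    """
    offsets = PATTERNS[pattern_name]
    h, w = len(image), len(image[0])
    out = []
    for y in range(h - 2):
        row = []
        for x in range(w - 2):
            row.append(sum(wt * image[y + dy][x + dx]
                           for (dy, dx), wt in zip(offsets, weights)))
        out.append(row)
    return out


image = [[r * 4 + c for c in range(4)] for r in range(4)]
print(pattern_conv2d(image, "pattern_a", [1, 2, 3, 4]))
```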

The CoCoPIE framework consists of two key modules:

- CoCo-Gen performs structured pruning and generates pruning-aware IR with metadata on weight masks and instruction patterns. It emits executable code tailored to the micro-architecture of the target device.

- CoCo-Tune accelerates pruning by leveraging the composable IR to identify reusable, sparsity-inducing patterns. It performs coarse-to-fine optimization using building blocks drawn from pre-trained models, significantly reducing retraining cost.

Results reported for a commercial platform (Snapdragon 858) have demonstrated the superiority of CoCoPIE over baseline compilers such as TVM [165], TensorFlow-Lite, and MNN [166] across representative models, including VGG-16, ResNet-50, and MobileNetV2. Regarding execution time on a CPU, CoCoPIE exhibits speedup over TVM, over TensorFlow-Lite, and over MNN, respectively. On a GPU, CoCoPIE is , , and faster than TVM, TensorFlow-Lite, and MNN, respectively. In terms of energy efficiency, CoCoPIE is more efficient than Eyeriss [167].

In summary, compression–compiler co-design is emerging as a powerful approach to achieve energy-efficient, high-performance DL inference on everyday edge devices.

3.1.2. Algorithm–Hardware Co-Design

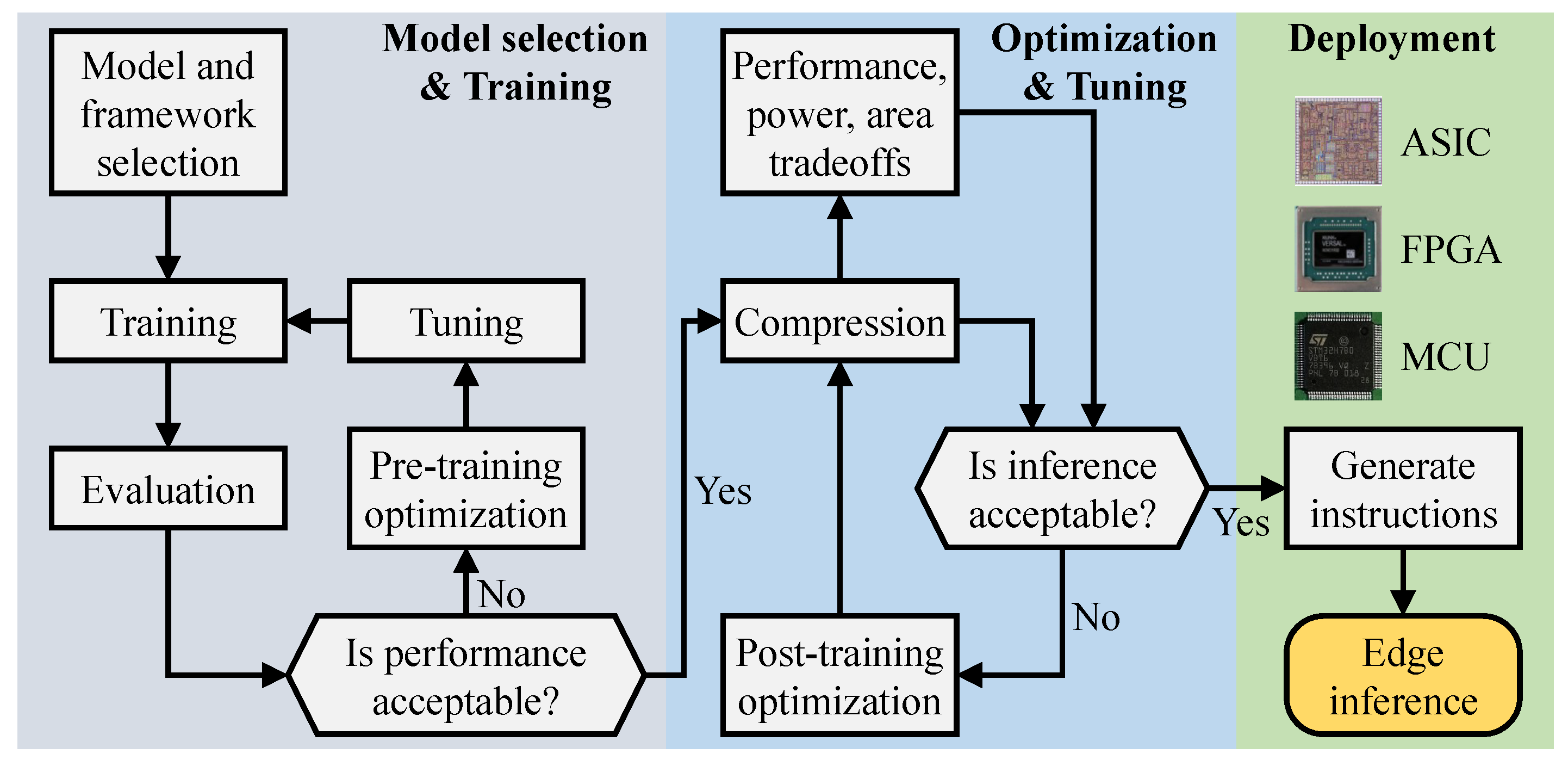

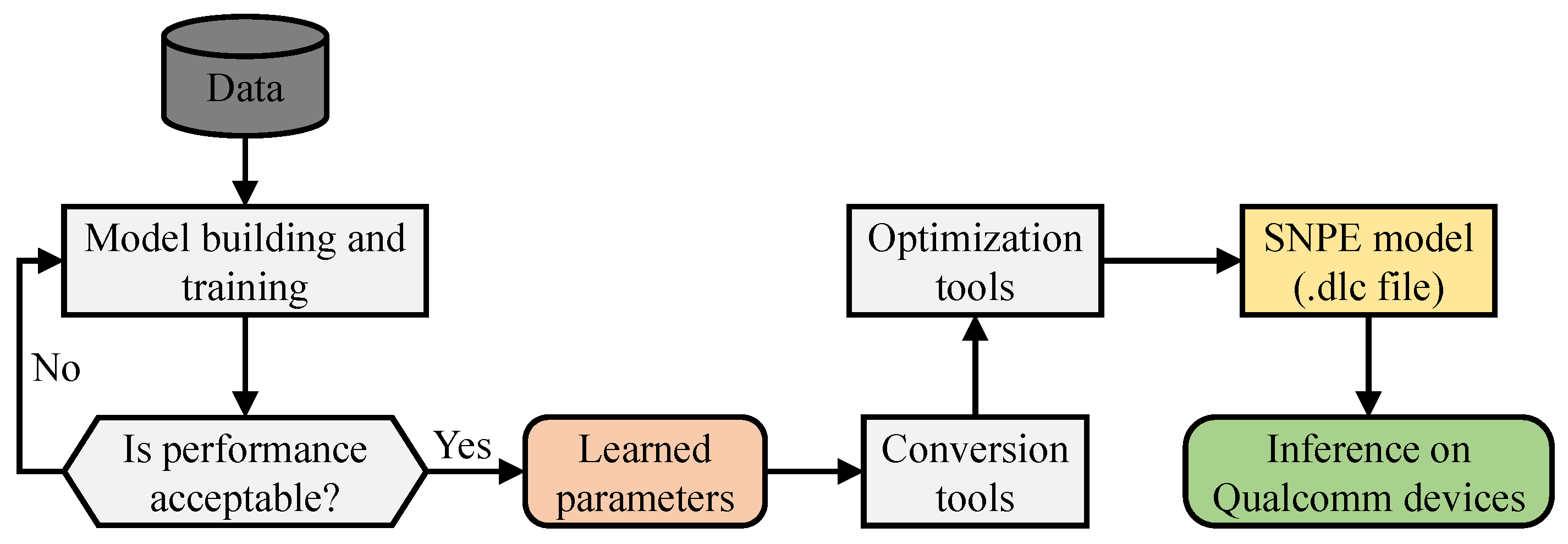

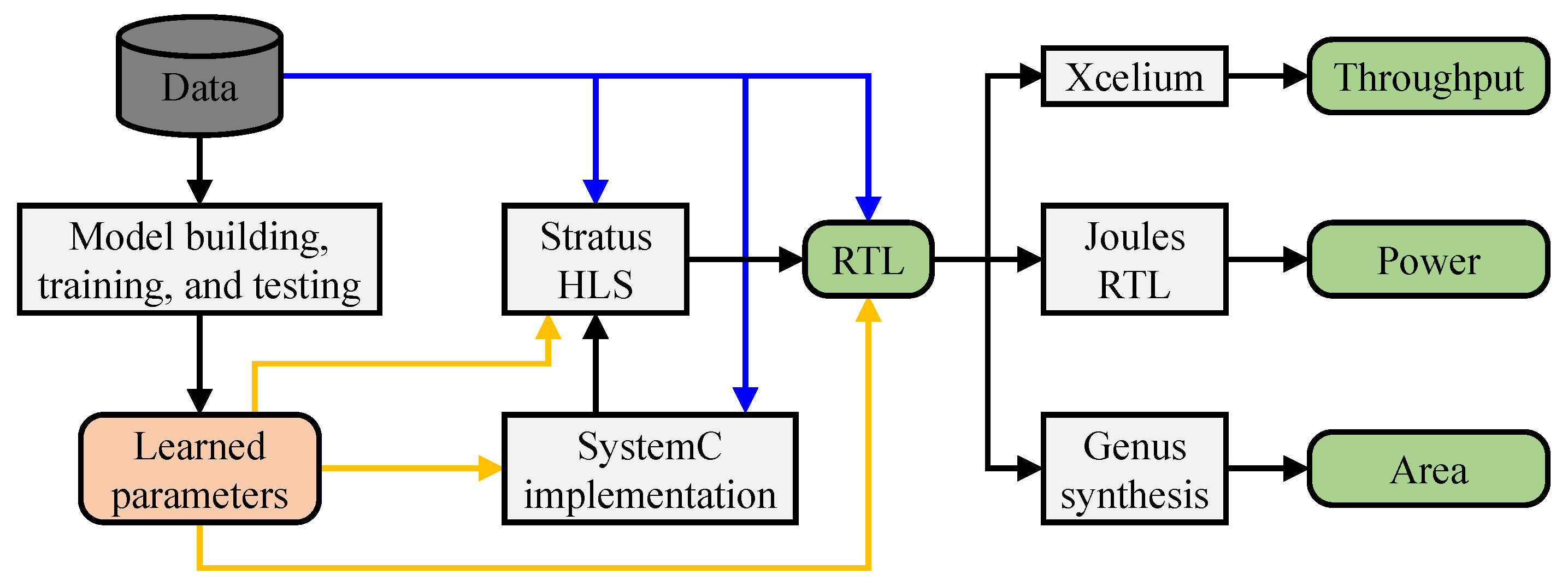

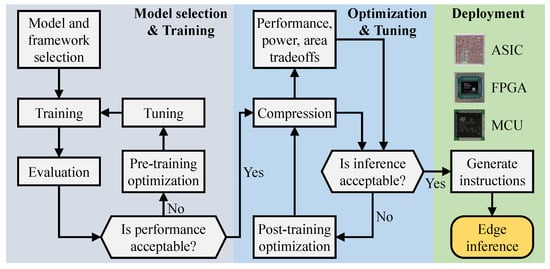

The hardware-based execution of DNN algorithms for efficient inference continues to draw significant research interest. This growing focus on customized hardware stems from two main factors: (i) the performance of conventional hardware is approaching its limits, and (ii) integrating compression techniques into hardware offers a promising path to handle the rising computational demands. At the core of this shift lies the concept of algorithm–hardware co-design. A general overview of this co-design approach is depicted in Figure 11.

Figure 11.

An overview of the algorithm–hardware co-design approach. Model selection and training are performed using any machine learning framework. The trained model is subsequently refined through an edge-optimized intermediate software layer before being deployed on the designated edge device.

The process begins with developing the algorithm within standard ML frameworks. Once optimal models are trained, an edge-specific intermediate software layer optimizes them for deployment. These optimized models are then executed on the target hardware to accelerate inference. Algorithm-level optimizations guide the hardware design, while hardware implementation provides valuable feedback to refine algorithm development further. This bidirectional interaction defines algorithm–hardware co-design as a promising direction for creating application-specific DNN accelerators tailored for edge environments.

3.1.3. Efficient Parallelism

Parallelization techniques are key to accelerating DL inference by efficiently utilizing underlying hardware resources. This strategy distributes workloads across multiple processing units, improving both latency and throughput. CNNs and RNNs, due to their inherently repetitive computations, particularly benefit from such parallel execution on platforms such as CPUs, GPUs, and DSPs [168].

For example, CNNdroid [169], a GPU-based deep CNN inference engine tailored for Android devices, delivers up to speedup and energy savings, highlighting the performance gains of mobile GPU acceleration. However, GPU-based parallelization, while effective, poses challenges in energy-limited environments and often demands complex hardware-specific tuning, especially when deploying on heterogeneous systems with tight thermal budgets.

CUDA, NVIDIA’s parallel computing platform, enables fine-grained control over GPU threads and memory hierarchy, making it ideal for performance-critical applications such as real-time object detection and robotic navigation [170]. However, applying CUDA directly to mobile GPUs often results in suboptimal efficiency due to architectural limitations and limited thermal headroom [171]. As a more portable alternative, RenderScript [172]—a high-level framework for Android—automatically maps data-parallel tasks to available GPU cores. Using this abstraction, SqueezeNet achieves over speedup on a Nexus 5 smartphone, illustrating the software simplicity and deployment ease of RenderScript at the cost of reduced hardware-level optimization flexibility compared to CUDA.

In contrast, Cappuccino [173] focuses on automated model deployment for EI. It breaks down neural operations across kernels, filters, and output channels, promoting parallel computation with minimal manual intervention. While this approach enhances developer productivity and ensures reasonable energy efficiency, it may introduce slight numerical deviations due to approximation during parallel execution.
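The output-channel decomposition that Cappuccino exploits rests on a simple property: each output channel of a convolution depends only on the input and its own filter, so channels can be computed independently. The Python sketch below is not Cappuccino's implementation, which targets Android, and its function names are hypothetical. It dispatches one output channel per task to a thread pool and checks the result against a sequential loop. NumPy releases the GIL in many array operations, but the sketch is meant to show the partitioning, not to benchmark it.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor


def conv2d_one_channel(x, w_oc):
    """Valid, stride-1 convolution (cross-correlation) for a single output channel.

    x:    input of shape (C_in, H, W)
    w_oc: one filter of shape (C_in, KH, KW)
    Returns an array of shape (H - KH + 1, W - KW + 1).
    """
    _, h, w = x.shape
    _, kh, kw = w_oc.shape
    oh, ow = h - kh + 1, w - kw + 1
    out = np.zeros((oh, ow), dtype=np.result_type(x, w_oc))
    for i in range(kh):
        for j in range(kw):
            # Add kernel tap (i, j) across all input channels at once
            out += np.tensordot(w_oc[:, i, j], x[:, i:i + oh, j:j + ow], axes=1)
    return out


def conv2d_parallel(x, weights, max_workers=4):
    """Compute each output channel as an independent task in a thread pool.

    weights: shape (C_out, C_in, KH, KW). Output channels share no partial sums,
    so the tasks need no synchronization beyond collecting their results.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        channels = list(pool.map(lambda w_oc: conv2d_one_channel(x, w_oc), weights))
    return np.stack(channels)


def conv2d_sequential(x, weights):
    return np.stack([conv2d_one_channel(x, w_oc) for w_oc in weights])


rng = np.random.default_rng(1)
x = rng.standard_normal((3, 16, 16))
weights = rng.standard_normal((8, 3, 3, 3))
y = conv2d_parallel(x, weights)
print(y.shape)  # (8, 14, 14)
print(np.allclose(y, conv2d_sequential(x, weights)))  # True
```

Cappuccino also splits work across kernels and filters and maps it onto mobile hardware. Here, Python threads only illustrate that partitioning by output channel is free of data races.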

DeepSense [174] represents another GPU-accelerated inference solution designed for mobile environments, assigning heavy computation layers to the GPU while the CPU handles lighter tasks such as padding and memory operations. However, mobile GPUs’ limited memory bandwidth and storage often restrict support for larger CNN architectures [175]. A practical workaround, demonstrated using YOLO on the Jetson TK1, involves splitting workloads between CPU and GPU. This hybrid setup can still yield up to inference acceleration, though it requires careful synchronization and resource management [176].

To further reduce computational cost, DeepX [177] applies singular value decomposition to compress weight matrices and shrink neural layers. This not only reduces model size but also facilitates parallel processing of compressed layers, improving both execution speed and energy efficiency. The DeepX Toolkit (DXTK) [178] generalizes this concept, enabling broad compatibility across model types and making it a robust solution for scalable, resource-limited edge platforms.
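The effect of an SVD-based factorization on a fully connected layer can be sketched as follows. DeepX's exact procedure, including how it selects the rank, is not reproduced here. The rank `r`, the synthetic low-rank matrix, and the helper name `svd_compress` are assumptions for illustration. A rank-r factorization replaces the m x n weights (and the m x n MACs per input vector) of one layer with r(m + n).

```python
import numpy as np


def svd_compress(W, rank):
    """Approximate W (m x n) by A @ B with A: (m x rank) and B: (rank x n)."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :rank] * s[:rank]  # fold the top singular values into the left factor
    B = Vt[:rank, :]
    return A, B


rng = np.random.default_rng(2)
m, n, r = 512, 256, 32
W = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))  # an exactly rank-32 matrix
A, B = svd_compress(W, rank=r)
x = rng.standard_normal(n)
# One m x n layer becomes two thinner layers: first B (n -> r), then A (r -> m)
print(np.allclose(W @ x, A @ (B @ x)))  # True
print(W.size, A.size + B.size)  # 131072 24576
```

Real weight matrices are rarely exactly low-rank, so the chosen rank trades accuracy against size. The factorized model is often fine-tuned afterwards.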

Among the evaluated methods, DeepX and DXTK stand out as the most edge-friendly solutions due to their balanced design: they offer significant energy savings, require minimal manual tuning, and support parallel inference across compact networks. Their compatibility with compressed models further makes them well-suited for battery-powered and thermally constrained devices. In contrast, while CUDA-based implementations offer granular performance control, their higher hardware and development complexity make them less ideal for general-purpose edge deployment without dedicated optimization efforts.

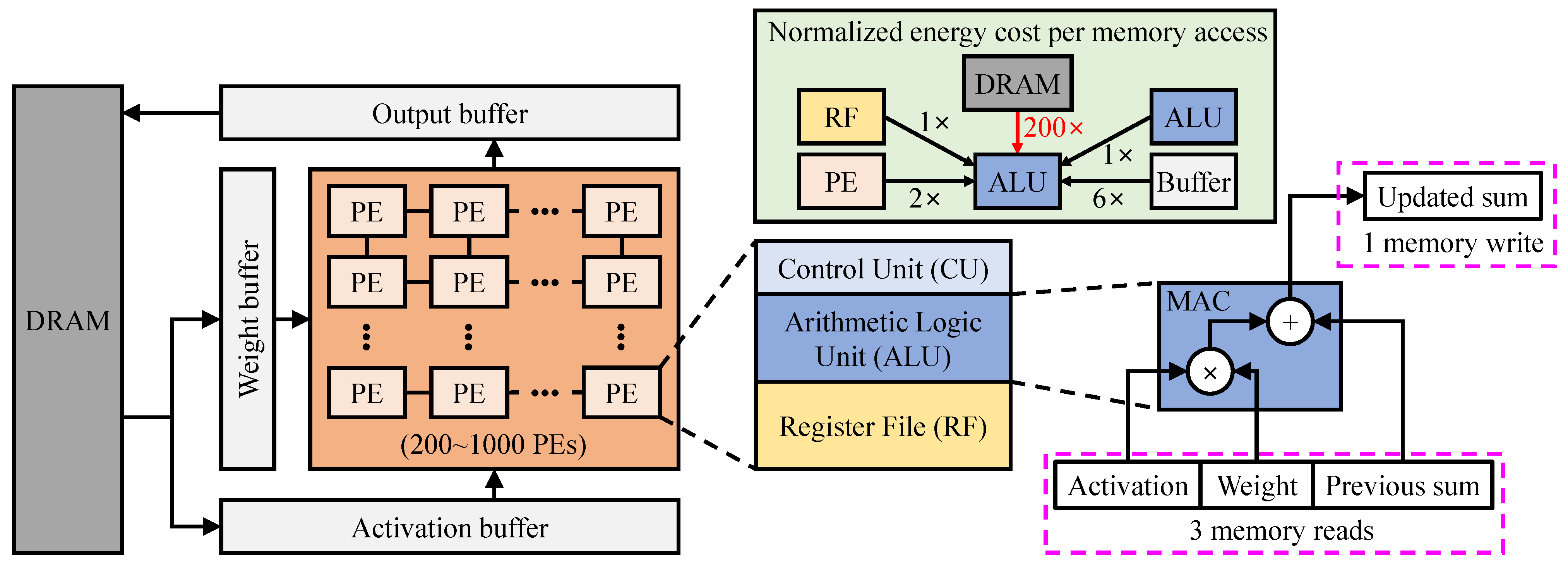

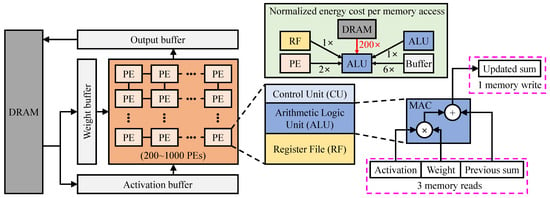

3.1.4. Memory Optimization

Deep learning inference relies heavily on computation-intensive MAC operations, each of which requires three memory reads (weight, input activation, and partial sum) and one write to store the updated partial sum. This frequent memory access becomes a critical performance bottleneck, particularly on edge devices where energy efficiency and low latency are paramount. To address this challenge, most DNN accelerators adopt a hierarchical memory architecture (illustrated in Figure 12), consisting of the following levels:

Figure 12.

An illustration of a typical DNN accelerator. At the heart of the ALU lies the MAC unit, which typically requires up to four memory accesses per operation. The normalized energy cost associated with each type of memory access is also illustrated.

- Off-chip DRAM, which stores the bulk of model parameters and intermediate data;

- On-chip SRAM-based global buffers (GLBs), which cache activations and weights temporarily;

- Register files (RFs) embedded within each PE, providing the fastest and lowest-power memory access.

While DRAM offers large capacity, it is the most energy-intensive component. In contrast, on-chip memories provide faster access at significantly lower power, but their capacity is limited. As such, optimizing memory access patterns to minimize DRAM usage and maximize on-chip reuse is essential for EI.
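The cost argument above can be made concrete with a back-of-the-envelope model. The sketch below assumes illustrative normalized access costs (RF 1, inter-PE transfer 2, GLB 6, DRAM 200). These are values commonly quoted for Eyeriss-style designs and may differ from those shown in Figure 12. It counts the four accesses per MAC described above and compares where they are served from.

```python
# Assumed normalized energy per access, relative to one register-file (RF) access.
# Illustrative values of the kind reported for Eyeriss-style accelerators,
# not measurements for any particular chip. "PE" denotes an inter-PE transfer.
ENERGY_PER_ACCESS = {"RF": 1.0, "PE": 2.0, "GLB": 6.0, "DRAM": 200.0}

# One MAC: read weight, read input activation, read partial sum, write partial sum
ACCESS_TYPES = ("weight_read", "act_read", "psum_read", "psum_write")


def memory_energy(num_macs, level_for):
    """Total memory-access energy for num_macs MACs.

    level_for maps each access type to the memory level that serves it.
    """
    per_mac = sum(ENERGY_PER_ACCESS[level_for[kind]] for kind in ACCESS_TYPES)
    return num_macs * per_mac


macs = 1_000_000
all_dram = {kind: "DRAM" for kind in ACCESS_TYPES}
all_rf = {kind: "RF" for kind in ACCESS_TYPES}
# Mixed case: weights and partial sums reused in the RF, activations from the GLB
mixed = {"weight_read": "RF", "act_read": "GLB", "psum_read": "RF", "psum_write": "RF"}

print(memory_energy(macs, all_dram))  # 800000000.0
print(memory_energy(macs, all_rf))    # 4000000.0
print(memory_energy(macs, mixed))     # 9000000.0
```

Under these assumed costs, serving every access from DRAM costs 200 times more than serving every access from the RF. This ratio is why the dataflows below focus on keeping at least some operands local.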

A. Dataflow Strategies for Memory Reuse: Efficient data reuse strategies can drastically reduce memory traffic and energy consumption. Four common dataflow schemes, as summarized in Table 6, are widely employed in DL accelerators:

Table 6.

Summary of four dataflow strategies.

- Weight Stationary (WS): This strategy keeps weights fixed in RFs while streaming activations across PEs, significantly reducing weight-fetch energy. WS is well-suited for convolutional layers with high weight reuse. For example, NeuFlow [179] applies WS for efficient vision processing. However, frequent movement of activations and partial sums may still incur moderate energy costs.

- No Local Reuse (NLR): In this scheme, neither inputs nor weights are retained in local RFs; instead, all data are fetched from GLBs. While this simplifies hardware design and supports flexible scheduling, the high frequency of memory access limits its energy efficiency. DianNao [180] follows this approach but mitigates energy consumption by reusing partial sums in local registers.

- Output Stationary (OS): OS retains partial sums within PEs, allowing output activations to accumulate locally. This reduces external data movement and is particularly beneficial for CNNs. ShiDianNao [181] adopts this strategy and embeds its accelerator directly into image sensors, eliminating DRAM access and achieving up to energy savings over DianNao [180]. OS is especially well-suited for vision-centric edge devices requiring real-time inference.

- Row Stationary (RS): RS maximizes reuse of all three data types (inputs, weights, and partial sums) within RFs. By keeping data circulating inside each PE, it substantially reduces memory traffic. MAERI [182] is a representative architecture that implements RS using flexible interconnects and tree-based data routing. Despite increased hardware complexity, RS offers excellent performance-to-energy trade-offs and is highly scalable across DL models.
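The difference between weight-stationary and output-stationary dataflows is essentially a choice of loop order. The sketch below models a single PE computing a 1D convolution in pure Python and counts buffer fetches. It is a simplification: there is no PE array and no spatial reuse, and the function names are hypothetical. Its purpose is only to show which operand each ordering keeps local.

```python
from collections import Counter


def conv1d_weight_stationary(x, w):
    """1D convolution with weights as the outer loop (weight-stationary order).

    Each weight is fetched once and held in a local register, while partial sums
    make a round trip to the buffer on every MAC.
    """
    n_out = len(x) - len(w) + 1
    y = [0] * n_out
    fetches = Counter()
    for k in range(len(w)):
        w_reg = w[k]
        fetches["weight"] += 1
        for i in range(n_out):
            a = x[i + k]
            fetches["act"] += 1
            psum = y[i]
            fetches["psum_read"] += 1
            y[i] = psum + w_reg * a
            fetches["psum_write"] += 1
    return y, fetches


def conv1d_output_stationary(x, w):
    """1D convolution with outputs as the outer loop (output-stationary order).

    Each partial sum accumulates in a local register and is written back once,
    but weights are re-fetched for every MAC.
    """
    n_out = len(x) - len(w) + 1
    y = [0] * n_out
    fetches = Counter()
    for i in range(n_out):
        acc = 0
        for k in range(len(w)):
            fetches["weight"] += 1
            fetches["act"] += 1
            acc += w[k] * x[i + k]
        y[i] = acc
        fetches["psum_write"] += 1
    return y, fetches


x = list(range(10))
w = [1, 2, 3]
y_ws, f_ws = conv1d_weight_stationary(x, w)
y_os, f_os = conv1d_output_stationary(x, w)
assert y_ws == y_os
print(f_ws["weight"], f_ws["psum_read"] + f_ws["psum_write"])  # 3 48
print(f_os["weight"], f_os["psum_read"] + f_os["psum_write"])  # 24 8
```

With three weights and eight outputs, WS fetches each weight once but moves 48 partial sums, whereas OS moves only 8 partial sums at the cost of 24 weight fetches. RS, as described above, aims to reduce both kinds of traffic at once.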

B. Reducing Memory Load Through Compression and Sparsity: Beyond dataflow optimizations, compression and sparsity techniques further alleviate memory bottlenecks:

- Run-Length Coding (RLC): This method compresses consecutive zeros, reducing external bandwidth by up to for both weights and activations [167].

- Zero-Skipping: This approach skips MAC operations involving zero values, reducing unnecessary memory access and saving up to of energy [167].

- Weight Reuse Beyond Zeros: This scheme generalizes sparsity by recognizing and reusing repeated weights, not limited to zeros, further lowering memory access and computation requirements [160].
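RLC and zero-skipping can both be illustrated in a few lines of Python. The sketch below is a simplified model with hypothetical helper names. Hardware RLC packs run lengths and values into fixed-width fields; here, a Python list of `(zero_run, value)` pairs stands in for the compressed stream, and `max_run` is an assumed limit on the run field.

```python
def rlc_encode(values, max_run=31):
    """Run-length code zeros as (zero_run, value) pairs.

    Each pair means "zero_run zeros, then value". Runs longer than max_run are split
    by emitting an explicit 0 as the value, mimicking a fixed-width run field.
    """
    pairs = []
    run = 0
    for v in values:
        if v == 0 and run < max_run:
            run += 1
        else:
            pairs.append((run, v))
            run = 0
    if run:
        pairs.append((run - 1, 0))  # flush trailing zeros: (run - 1) zeros plus an explicit 0
    return pairs


def rlc_decode(pairs):
    out = []
    for run, v in pairs:
        out.extend([0] * run)
        out.append(v)
    return out


def zero_skipping_dot(weights, acts):
    """Dot product that skips every MAC whose activation is zero.

    Returns (result, number_of_MACs_actually_performed).
    """
    total, macs = 0, 0
    for w, a in zip(weights, acts):
        if a == 0:
            continue  # skip: no weight fetch and no multiply for this position
        total += w * a
        macs += 1
    return total, macs


acts = [0, 0, 3, 0, 0, 0, 5, 0]  # e.g., post-ReLU activations
pairs = rlc_encode(acts)
print(pairs)  # [(2, 3), (3, 5), (0, 0)]
assert rlc_decode(pairs) == acts
print(zero_skipping_dot([1, 2, 3, 4, 5, 6, 7, 8], acts))  # (44, 2)
```

In this toy input, eight activations compress to three pairs, and only two of the eight MACs are executed.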

These strategies reduce both the volume and frequency of memory access, allowing edge accelerators to achieve greater energy efficiency without compromising performance.

C. Suitability for Edge Deployment: Considering both memory usage and access patterns, RS and OS strategies are the most suitable for edge deployment:

- RS achieves the best overall efficiency by minimizing access at all hierarchy levels, though it introduces higher design complexity. It is preferred in scenarios where flexibility and scalability are needed.

- OS offers a more straightforward design and strong efficiency in vision-centric models, making it highly appropriate for lightweight, task-specific edge devices.

When coupled with compression methods such as RLC and zero-skipping, these dataflows become even more advantageous, enabling ultra-low-power, high-throughput edge AI deployments.

3.1.5. FPGA-Centric Deployment via Deep Learning HDL Toolbox

The Deep Learning HDL Toolbox by MathWorks offers a comprehensive framework for deploying DL models on Xilinx and Intel FPGA/SoC platforms, making it a practical solution for EI [183]. The toolbox enables users to develop DL models natively in MATLAB (currently supported from version R2021a onward) or import pre-trained models from external sources, such as Keras and ONNX, with seamless integration into a hardware deployment workflow.

A distinguishing feature of the toolbox is its automated hardware compilation flow, where users can generate synthesizable Verilog or VHDL using HDL Coder and Simulink, targeting custom edge hardware without writing low-level HDL code manually. This high level of abstraction dramatically simplifies the hardware design process, making it well-suited for engineers with limited hardware experience. Deployment can be conducted via standard Ethernet or JTAG interfaces, allowing real-time model instruction loading and performance monitoring.

To optimize performance on resource-limited edge devices, the toolbox supports 8-bit integer quantization, significantly reducing memory footprint and improving computational throughput. Quantization reduces the demand on off-chip memory (for example, DDR), enabling better use of on-chip block RAM and lowering overall energy consumption, a key consideration in edge AI scenarios.
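The memory saving from 8-bit quantization follows directly from storage width: each FP32 value occupies 4 bytes and each INT8 value 1 byte. The NumPy sketch below uses per-tensor symmetric quantization, an assumed scheme chosen for illustration that may differ from the toolbox's own quantization workflow. The helper names are hypothetical.

```python
import numpy as np


def quantize_int8_symmetric(x):
    """Per-tensor symmetric INT8 quantization: x is approximated by scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q, scale):
    return q.astype(np.float32) * np.float32(scale)


rng = np.random.default_rng(3)
w = rng.standard_normal(10_000).astype(np.float32)
q, scale = quantize_int8_symmetric(w)
print(w.nbytes, q.nbytes)  # 40000 10000
max_err = float(np.max(np.abs(dequantize_int8(q, scale) - w)))
print(max_err <= scale / 2 + 1e-5)  # True: rounding error is at most half a step
```

The 4x size reduction is exact for the stored tensor. The accuracy impact depends on the model and on the calibration method, which this sketch does not model.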

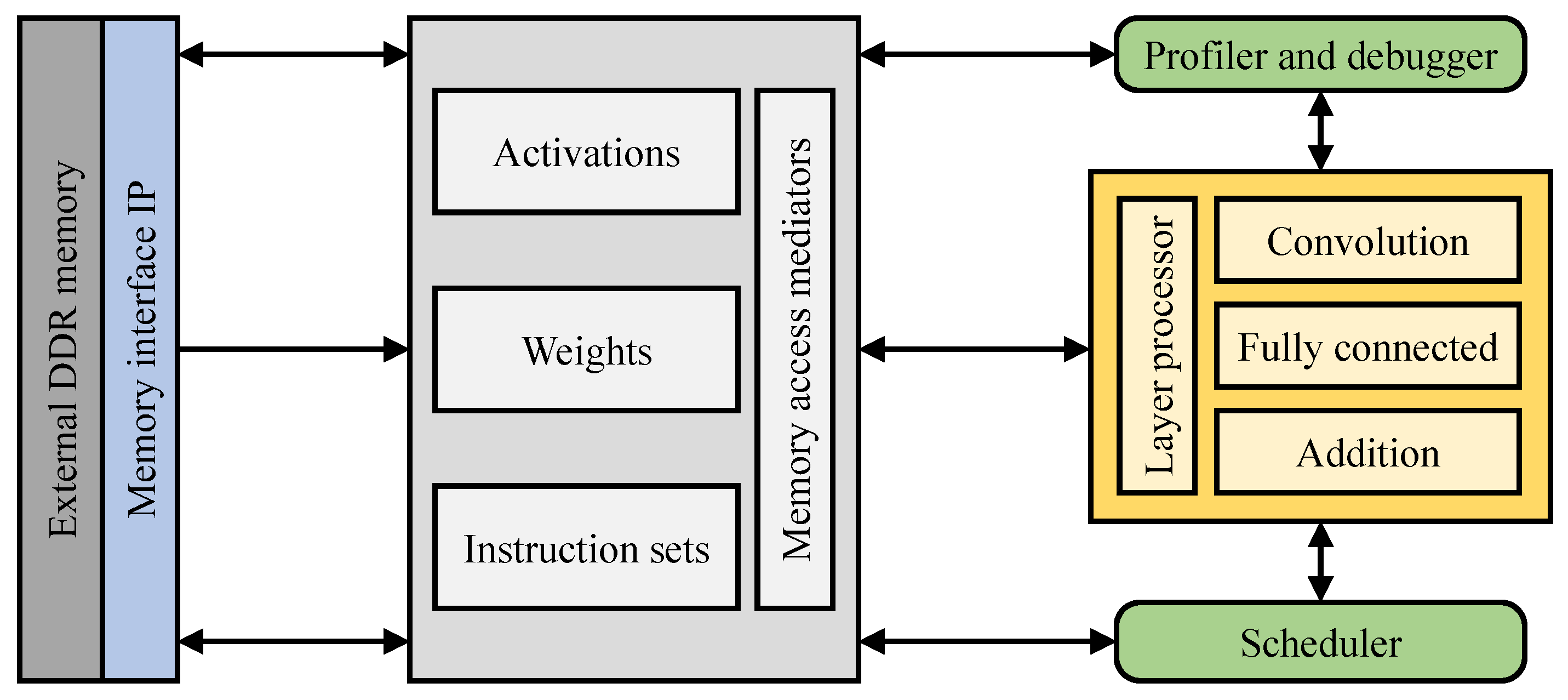

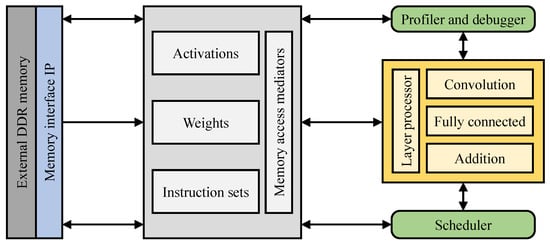

Figure 13 presents the architecture of the MATLAB-generated HDL processor IP core, which is platform-agnostic and scalable. The core integrates the following components:

Figure 13.

An overview of the MATLAB HDL DL Processor IP that features four AXI4 master interfaces for data transfer, external DDR memory, a dedicated layer processing unit, and a scheduling module.

- AXI4 master interfaces for DDR communication;

- Dedicated convolution and fully connected (FC) processors;

- Activation normalization modules supporting ReLU, max-pooling, and normalization functions;

- Controllers and scheduling logic to coordinate dataflow and computation.

Input activations and weights are transferred from DDR to block RAM via AXI4 and then processed by the convolution or FC units. Intermediate results remain on-chip, reducing redundant off-chip memory access, which is costly in terms of both latency and energy. The IP core also supports output feedback to MATLAB, facilitating layerwise latency profiling and bottleneck analysis.

Compared to conventional SoC or GPU-based solutions, the toolbox’s FPGA-based deployment achieves a more balanced trade-off between performance and energy efficiency, particularly in scenarios requiring deterministic latency and low-power operation—making it highly suitable for edge AI applications such as embedded vision, robotics, and autonomous sensing.

Moreover, the toolbox includes built-in hardware support for essential DL layers, including 2D and depthwise convolutions, FC layers, various activation functions, normalization, dropout, and pooling. It supports the deployment of popular models such as AlexNet, VGG, DarkNet, YOLOv2, MobileNetV2, and GoogLeNet to FPGA development boards such as Xilinx Zynq-7000 ZC706 FPGA, Zynq UltraScale+ MPSoC ZCU102, and Intel Arria 10 SoC. This wide compatibility makes the toolbox ideal for rapid prototyping and deployment of DL models on energy- and resource-constrained edge platforms, combining customizability, efficiency, and portability in a single development flow.

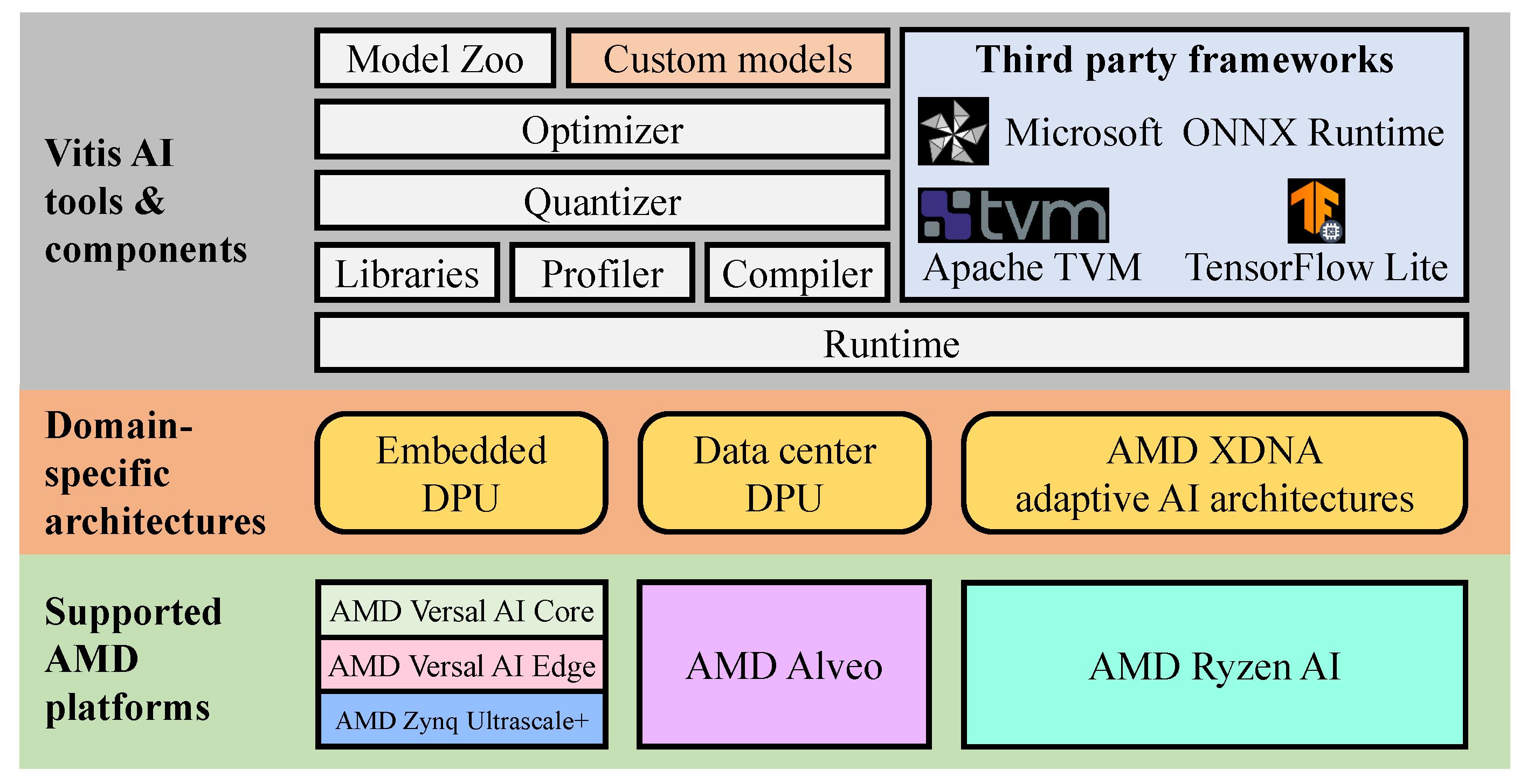

3.1.6. AMD Vitis AI

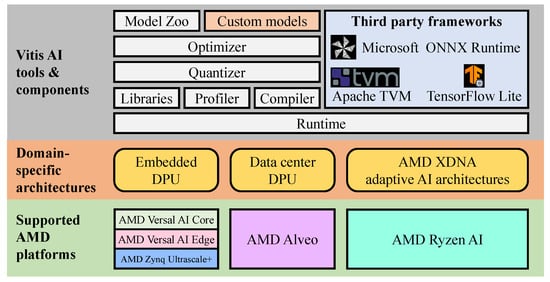

AMD Vitis AI is an integrated development framework engineered to streamline and accelerate DL inference on AMD’s adaptable computing platforms [184]. It encompasses a full toolchain—ranging from IP cores and development libraries to pre-trained models and compiler toolkits—designed to simplify and optimize AI deployment. The core inference engine in this environment is the Deep Learning Processing Unit (DPU), a reconfigurable hardware block tailored for executing popular DL models such as ResNet, YOLO, GoogLeNet, SSD, MobileNet, and VGG.

The DPU is supported across a broad spectrum of AMD hardware platforms, including Zynq UltraScale+ MPSoCs, the Kria KV260 Vision AI Starter Kit, Versal adaptive SoCs, and Alveo accelerator cards. For embedded deployments, Vitis AI enables DPU integration via both the Vitis unified software platform and the Vivado hardware design suite. Figure 14 illustrates the complete Vitis AI toolchain, highlighting the DPU, compiler, runtime, and profiling components tailored for edge deployment. Notably, developers can utilize prebuilt board support packages (BSPs) for rapid deployment without needing in-depth hardware design skills, making Vitis AI an accessible yet powerful solution for AI at the edge.

Figure 14.

Overview of AMD Vitis AI. It includes tools, libraries, models, and optimized IPs for accelerating DL inference on edge platforms.

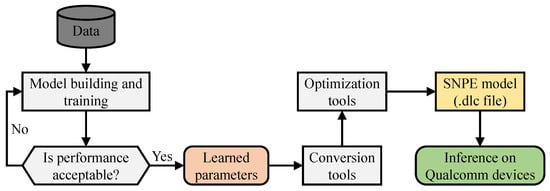

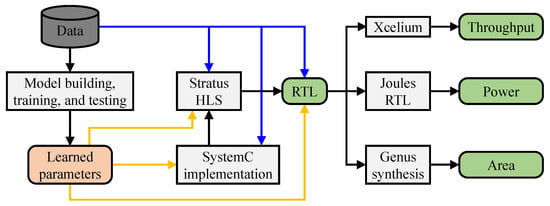

From a memory and energy perspective, Vitis AI is well optimized for low-power inference. The DPU architecture is designed to maximize data locality, significantly reducing the frequency of off-chip DRAM access, one of the primary contributors to power consumption. Quantization plays a key role in this: the Vitis AI Quantizer converts floating-point models to INT8, which not only lowers memory usage but also improves energy efficiency and inference speed with negligible accuracy loss. Compared to standard floating-point models, INT8 quantization can reduce memory footprint by over and inference latency by up to , making it especially well-suited for resource-limited edge devices.
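The following is a sketch of INT8 quantization with a power-of-two scale. It assumes, and this should be checked against the Vitis AI documentation, that DPU-targeted models use power-of-two scaling so that rescaling reduces to bit shifts. The helper names are hypothetical, and this is not the Vitis AI Quantizer's algorithm.

```python
import math


def choose_frac_bits(values, bits=8):
    """Largest fractional-bit count f such that max|v| * 2**f fits in a signed `bits` integer."""
    qmax = 2 ** (bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return bits - 1
    return math.floor(math.log2(qmax / max_abs))


def quantize_pow2(values, frac_bits, bits=8):
    """Quantize with scale 2**-frac_bits using Python's round() and saturation."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return [max(qmin, min(qmax, round(v * 2 ** frac_bits))) for v in values]


def requantize_shift(acc, acc_frac_bits, out_frac_bits):
    """Move an integer accumulator to another power-of-two scale using only shifts.

    Right shifts floor toward negative infinity (Python semantics).
    """
    shift = acc_frac_bits - out_frac_bits
    return acc >> shift if shift >= 0 else acc << -shift


weights = [0.5, -1.25, 3.0, 0.1]
f_w = choose_frac_bits(weights)
q_w = quantize_pow2(weights, f_w)
print(f_w, q_w)  # 5 [16, -40, 96, 3]

# Multiply weight 3.0 (q=96, f=5) by activation 2.0 (q=64, f=5): product has 10 fractional bits
acc = q_w[2] * 64
out = requantize_shift(acc, 10, 4)  # re-express with 4 fractional bits
print(out, out / 2 ** 4)  # 96 6.0
```

The design trade-off is that a power-of-two scale is coarser than an arbitrary floating-point scale, but it lets the hardware rescale integer accumulators with shifts instead of multipliers.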