Abstract

Ground-based optical equipment for detecting geostationary orbit space targets typically relies on long-exposure imaging and faces challenges such as small and blurred target images, complex backgrounds, and star streaks obstructing the view. To address these issues, this study proposes GSTD-DETR, a model based on the Real-Time Detection Transformer (RT-DETR) that aims to balance model efficiency and detection accuracy. First, we introduce a Dynamic Cross-Stage Partial (DynCSP) backbone network for feature extraction and fusion, which enhances the network’s representational capability by reducing convolutional parameters and improving information exchange between channels. This effectively reduces the model’s parameter count and computational complexity. Second, we propose a ResFine model with a feature pyramid designed for small target detection, enhancing the network’s ability to perceive small targets. Additionally, we improve the detection head and incorporate a Dynamic Multi-Channel Attention mechanism, which strengthens the focus on critical regions. Finally, we design an Area-Weighted NWD loss function to improve detection accuracy. Experimental results on the SpotGEO dataset show that, compared to RT-DETR-r18, the GSTD-DETR model reduces the parameter count by 29.74%, while its AP50 and AP50:95 improve by 1.3% and 4.9%, reaching 88.6% and 49.9%, respectively. The GSTD-DETR model thus demonstrates superior accuracy in detecting faint and small space targets.

1. Introduction

Geostationary orbit (GEO) hosts numerous critical space assets, such as communication and navigation satellites. Monitoring resident space objects in this orbit is crucial for achieving space situational awareness and safeguarding important space assets []. However, due to factors such as the large distance to the targets, clouds, atmospheric effects, light pollution, sensor noise, and star background interference [], the ground-based detection of geostationary orbit targets faces significant challenges.

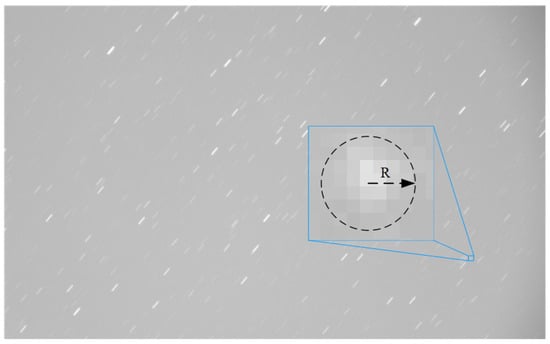

As shown in Figure 1, under the staring observation mode of ground-based telescopes, geosynchronous orbit satellites typically exhibit Gaussian-like point spread functions with radii spanning 2–5 pixels or manifest as multi-pixel clustered point sources.

Figure 1.

Morphology of space targets in images under telescope’s staring mode.

The goal of detecting geostationary orbit space targets is to accurately detect and recognize small targets amidst complex backgrounds. Traditional object detection methods are typically optimized for large targets and are constrained by receptive field limitations, which present significant challenges in detecting small targets. In recent years, deep learning methods, particularly convolutional neural networks (CNNs) and Transformer models, have led to significant progress in object detection. However, these methods often face limitations in small target detection and are frequently hindered by insufficient local feature extraction capabilities and high computational complexity [,].

To address the above issues and enhance the capability of detecting small space targets in complex environments, this paper proposes an improved space small target detection model based on the RT-DETR-r18 model. The structural improvements introduced over RT-DETR aim to optimize the model for small target detection tasks while maintaining computational efficiency. The main improvements are as follows:

- (1) We propose a DynCSP architecture as the backbone network. This backbone not only extracts high-quality feature representations but also significantly reduces the number of parameters and computational costs. By splitting the feature map and processing each part separately, the DynCSP architecture improves information exchange between channels and enhances the network’s feature extraction capability, which is particularly useful for small target detection.

- (2) We propose a ResFine model to enhance the network’s ability to perceive small targets, especially in feature extraction and information fusion. The model incorporates a feature pyramid structure, which improves the ability to capture small-scale details. Additionally, we design an SEK module for feature integration, allowing for a more efficient fusion of multi-scale features and enhancing detection accuracy.

- (3) We introduce a Dynamic Multi-Channel Attention (DMCA) mechanism, which is an improvement over the original Moment Channel Attention (MCA) mechanism. The DMCA mechanism dynamically adjusts the importance of feature channels, helping the model focus on critical regions while maintaining computational efficiency. Additionally, we modify the network’s neck by removing the P5 layer and retaining only the P3 and P4 layers, enabling the model to focus on high-resolution features that are better at preserving small target details, thus improving small target detection accuracy.

- (4) We propose an Area-Weighted NWD (AWNWD) loss function, which builds on the Normalized Wasserstein Distance (NWD) loss function. This loss function introduces small target-aware enhancements and scale difference terms, improving the model’s focus on small targets. By incorporating both the area and scale differences, this loss function improves the robustness and precision of small object detection, particularly in complex environments.

These structural improvements work together to enhance the detection of small space targets under low signal-to-noise ratios while maintaining a reasonable computational cost.

The structure of this paper is as follows: Section 2 reviews the related research in the field of space target detection, introducing both traditional methods and deep learning-based approaches. Section 3 provides a detailed description of our proposed GSTD-DETR model, focusing on its core architecture, innovative modules, and method improvements. Section 4 presents the experimental setup, dataset, and evaluation metrics, along with the comparative experimental results, analyzing the advantages of our method. Section 5 concludes this paper by summarizing the main contributions, discussing the limitations of the model, and outlining future research directions.

2. Related Works

In space object detection, traditional methods include morphological recognition, star map matching, matched filtering, background masking, multi-frame stacking, frame difference, trajectory correlation, and multi-level hypothesis testing. For instance, Gao et al. proposed a target extraction method based on Time Energy Selective Scaling (TESS) and three-dimensional Hough transform []. Kong et al. introduced multi-frame masking techniques and a morphological dilation-based weak target extraction algorithm []. Han Luyao et al. proposed a real-time detection method based on morphological processing and inter-frame matching; it is suitable for targets with known prior motion information, but its performance degrades under low signal-to-noise ratios (SNRs) []. Yang et al. proposed a hierarchical exposure strategy and a star map target sequence-based celestial object removal method, which is only applicable to natural targets []. Yao et al. improved the adaptive inter-frame difference detection algorithm by enhancing the detection effect through median and Kalman filtering, but the accuracy decreased in complex noisy environments []. Wang et al. proposed a target detection method based on both time-domain and spatial-domain correlations; however, the method suffers from low computational efficiency and heavy reliance on prior information []. Miao et al. proposed an improved multi-level hypothesis testing algorithm, although its computational load increases under low-SNR conditions []. Pan et al. proposed a small, weak target detection method based on time series images, achieving efficient detection through SNR enhancement and adaptive background noise suppression, but it performs poorly in dynamic scenarios [].

With the development of deep learning, traditional image processing methods have gradually shown their limitations in detecting targets with complex backgrounds and low contrast [,]. Consequently, deep learning methods have gradually become mainstream in the field of target detection [,]. Deep learning-based target detection methods can be classified into two main categories: two-stage algorithms (such as SPP-Net and Faster R-CNN) and one-stage algorithms (such as the You Only Look Once (YOLO) series) []. For example, Rasit Abay et al. performed high-altitude target detection with a feature fusion pyramid network, reaching an F1 score of 92% []. Meanwhile, Vittori et al. proposed a U-Net-based method for target trajectory extraction, which showed limited effectiveness in detecting small target trajectories []. Jia et al. enhanced the detection and classification capability for celestial targets by improving the Faster R-CNN algorithm []. Additionally, the method of Guo et al., which incorporates channel and spatial attention networks [], and that of Chen et al., which combines LSTM and CNNs for space target detection and tracking [], demonstrated good performance in multi-task scenarios. To address the challenges of small targets and complex backgrounds, Liu et al. proposed an improved algorithm based on attention mechanisms [], though it struggles with detecting targets with blurred edges.

In recent years, both the YOLO series and the DETR model have made significant progress in space target detection, but they still face a trade-off between computational efficiency and accuracy, particularly in noisy environments where performance remains to be improved [,]. Yuan et al. [] improved the YOLOv5 model by integrating cross-layer context, adaptive weighting, and spatial information enhancement modules, which enhanced the detection capability for small space targets, though at the cost of increased computational burden. Wang et al. [] enhanced the DETR model by introducing diverse attention mechanisms into the backbone network and encoder, significantly improving the detection of spacecraft and space debris, though this approach requires considerable computational overhead and yields suboptimal performance for tiny targets.

Despite these advances, the detection of small space targets still faces challenges such as high computational complexity and poor real-time performance. Therefore, further improvements in detection accuracy, reductions in computational costs, and enhancement in the robustness of these methods in dynamic and low-signal-to-noise-ratio environments are still necessary.

3. Methodology

3.1. GSTD-DETR Model

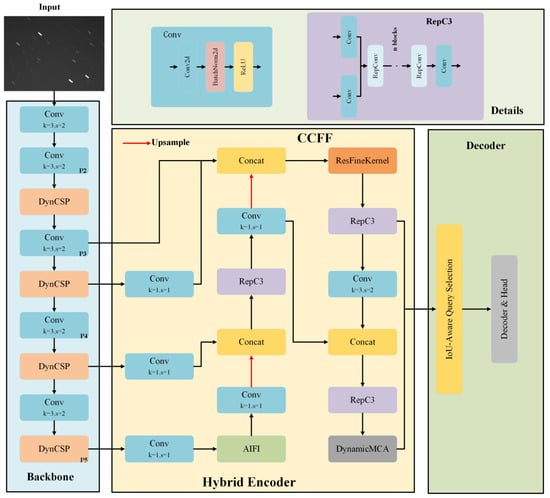

Figure 2 illustrates the GSTD-DETR framework proposed in this paper. This method is based on RT-DETR, an advanced end-to-end object detector known for balancing speed and accuracy across various tasks []. The RT-DETR model has several versions, including RT-DETR-r18, RT-DETR-r34, RT-DETR-r50, RT-DETR-r101, and RT-DETR-x []. Among these, RT-DETR-r18 achieves a good trade-off between speed and accuracy. Therefore, we chose RT-DETR-r18 as the base model for developing our network. As shown in Figure 2, the GSTD-DETR framework consists of a backbone, a hybrid encoder, a decoder, and a prediction head.

Figure 2.

GSTD-DETR framework diagram.

First, the backbone network captures key information from the input image and generates feature maps at different scales. These feature maps are then fused through an efficient hybrid encoder. Next, the IoU (Intersection over Union)-aware query selection mechanism is used to select a fixed number of image features as the initial queries for the decoder. In the decoder, with the help of auxiliary heads, the queries are gradually refined, ultimately generating bounding boxes with confidence scores.
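The query selection step described above can be pictured as a top-k selection over encoder features. The following is a minimal sketch, assuming that each encoder feature carries a scalar score and that the highest-scoring features become the initial decoder queries; the function name `select_queries` and the toy values are hypothetical and not taken from the RT-DETR code base.

```python
def select_queries(features, scores, k):
    """Return the k features with the highest scores, plus their indices.

    Assumption: one scalar score per encoder feature; the k best-scoring
    features are used as the initial decoder queries.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return [features[i] for i in order], order


# Toy example: four encoder features, keep the two best-scoring ones.
features = ["f0", "f1", "f2", "f3"]
scores = [0.7, 0.1, 0.9, 0.3]
queries, indices = select_queries(features, scores, k=2)
```

In the real detector the number of queries is fixed in advance, so the decoder always receives the same number of inputs regardless of image content.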

3.2. DynCSP Backbone Network

The RT-DETR model offers two different backbone network options: ResNet and HGNetv2 [,]. However, both networks rely heavily on full-channel standard convolutions (SCs) in deep layers, leading to significant computational burdens, large parameter counts, the loss of spatial information, and a lack of optimization for small-scale targets. Therefore, for small target detection tasks, network design should place greater emphasis on addressing these issues and adopt more lightweight and adaptable backbone networks.

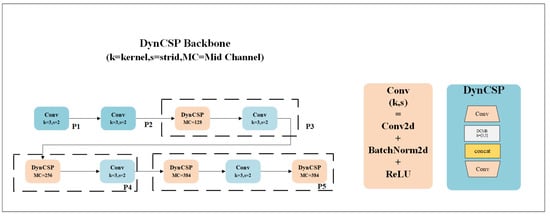

The Cross-Stage Partial network (CSPNet) is a network architecture designed to enhance the learning capability and computational efficiency of convolutional neural networks (CNNs). The core idea of CSPNet is to split the feature map into two parts: one part is processed through residual networks (ResNet-like blocks), while the other part is passed directly through, with the results of both parts being merged. This design reduces computational load, as not all features are processed through complex residual blocks, while still maintaining the model’s learning capacity []. The DynCSP block proposed in this paper is inspired by the CSP architecture. The structure of the DynCSP backbone network is shown in Figure 3.

Figure 3.

DynCSP backbone structure diagram.
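The CSP idea described above (split the channels, transform one part, pass the other part through, then merge) can be sketched in a few lines. This is only an illustration on a flat list of per-channel values; `csp_block` and `toy_transform` are hypothetical names, and the toy transform stands in for the residual or convolutional stage of a real network.

```python
def csp_block(channels, transform):
    """Split channels in two, transform one half, pass the other through, merge."""
    half = len(channels) // 2
    shortcut, active = channels[:half], channels[half:]
    # Only the "active" half pays the cost of the heavier transform.
    return shortcut + transform(active)


def toy_transform(part):
    # Stand-in for the expensive processing stage: doubles each value.
    return [2.0 * v for v in part]


features = [1.0, 2.0, 3.0, 4.0]
merged = csp_block(features, toy_transform)
```

Because only half of the channels pass through the expensive stage, the cost of that stage is roughly halved while the merged output keeps the original channel count.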

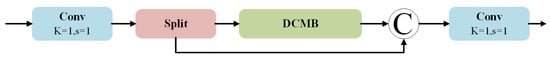

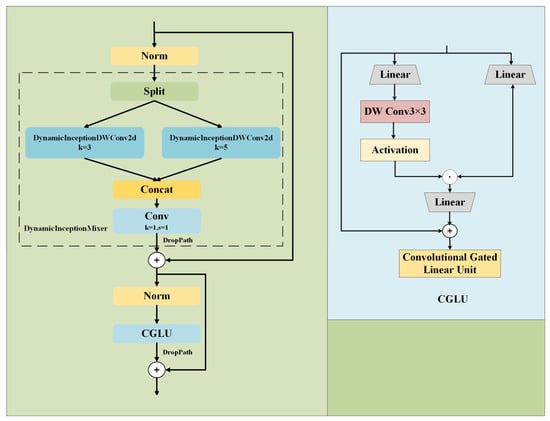

The DynCSP block splits the input feature map, performs convolution operations on each part separately, and then generates the output feature map by concatenating the channels. This structure facilitates the flow of information while also avoiding information bottlenecks, thereby enhancing the network’s feature extraction capability. It also improves gradient propagation, allowing the network to be better optimized during training, especially in very deep networks, thus avoiding issues such as vanishing or exploding gradients [,]. The structure of this module is shown in Figure 4.

Figure 4.

DynCSP block.

Considering the small scale and varying shapes of small targets in images, traditional convolutional kernels (such as 3 × 3 kernels) may struggle to capture the detailed features of these small targets. Therefore, the DCMB module introduces multiple convolution kernels of different sizes, combining them to capture more scale-specific information.

Traditional convolution operations use fixed convolution kernel weights, while dynamic convolution adjusts the weights of the kernels based on the features of the input data []. The DCMB module optimizes the use of convolution kernels by dynamically adjusting their weights, enhancing sensitivity to small targets. Through this mechanism, the network can dynamically select the most suitable convolution operation based on the features of the current input.

The DCMB module fuses the features extracted by different convolution kernels, allowing the features of each channel to incorporate information from other scales, thereby improving the model’s ability to recognize targets. To prevent the issue of gradient vanishing in deep networks while maintaining information flow, the DCMB module incorporates residual connections, enabling smooth information flow across multiple modules. These residual connections allow input information to be directly passed to the output, preventing information loss in complex network layers and enhancing the network’s training stability.

The design of the DCMB module primarily addresses the issues of multi-scale feature fusion and computational efficiency in small target detection through the combination of multi-scale convolutions, dynamic convolution kernel weight adjustment, and residual connections. This module has strong adaptability and flexibility, dynamically selecting the most suitable convolution kernel based on the input features, thus improving the detection accuracy of small targets in complex environments. The structure is shown in Figure 5.

Figure 5.

DCMB module.

The DynamicInceptionMixer part of the DCMB module optimizes feature extraction by using multiple convolution kernel sizes and dynamically adjusting channels, enabling the network to capture small target features at different scales. By introducing the Convolutional GLU (CGLU) activation function, the network’s nonlinear representation capability is enhanced, while the DropPath mechanism effectively mitigates overfitting, thus improving the network’s robustness. The formula for the CGLU activation function is as follows.

$$\mathrm{CGLU}(x) = x \odot \sigma(Wx + b)$$

Here, $\odot$ denotes element-wise multiplication, $\sigma$ represents the sigmoid activation function, $x$ is the input vector, and $W$ and $b$ are the weight matrix and bias vector of the gating layer, respectively.
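As a small numerical illustration of the gating described above, the following sketch evaluates a sigmoid gate on a short vector, assuming the gated form $x \odot \sigma(Wx + b)$. The function name `cglu_gate` and the toy weights are hypothetical; the layer in the model operates on convolutional feature maps rather than plain vectors.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def cglu_gate(x, W, b):
    """Multiply each element of x by sigmoid(W x + b), element-wise."""
    gate = [
        sigmoid(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
        for row, b_i in zip(W, b)
    ]
    return [x_i * g_i for x_i, g_i in zip(x, gate)]


# With zero weights and biases every gate value is sigmoid(0) = 0.5,
# so the output is simply half of the input.
x = [1.0, -2.0]
W = [[0.0, 0.0], [0.0, 0.0]]
b = [0.0, 0.0]
out = cglu_gate(x, W, b)
```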

This part splits the input into two sub-tensors, each containing half of the original input’s channel count. The split operation divides the input tensor along the channel dimension (dim = 1) into two parts. Each sub-tensor, containing half of the channels, is passed through a set of dynamic convolutions. The input tensor is processed using DynamicInceptionDWConv2d with two different kernel sizes. Finally, the outputs of all convolution paths are concatenated using a concat operation to form a larger feature map. A 1 × 1 convolution is then applied to the concatenated feature map to reduce its channel count and restore it to the original channel count of the input.

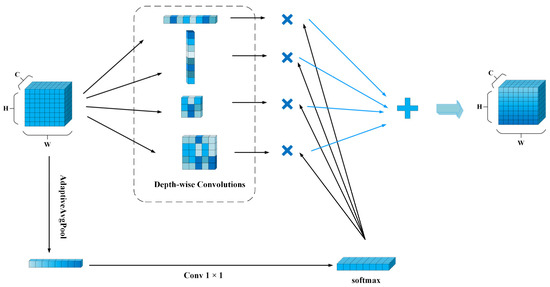

The core idea of DynamicInceptionDWConv2d is to capture multi-scale information by combining convolution kernels of different sizes while enhancing the network’s sensitivity to key features by dynamically adjusting the weights of the convolution kernels. Dynamic convolution adapts the convolution kernel weights based on the input data, making the convolution operation more flexible and targeted. Its structure is shown in Figure 6.

Figure 6.

DynamicInceptionDWConv2d.

The DynamicInceptionDWConv2d module combines depth-wise separable convolutions with dynamic convolution kernel adjustment mechanisms to reduce the number of parameters and improve computational efficiency. This module employs a multi-scale feature fusion strategy, making it particularly suitable for small target detection tasks and significantly enhancing detection accuracy. The key feature of this module is its ability to dynamically adjust the convolution strategy based on the input feature map. This is achieved through the Dynamic Kernel Weights mechanism. First, the input feature map is processed by an adaptive average pooling layer (AdaptiveAvgPool), which aggregates each channel into a single statistic. Then, a convolution layer generates the weights for four different convolution kernels. These weights are dynamically adjusted based on the scale and feature distribution of the input data, allowing the system to select the most suitable convolution operation for small target detection.

The dynamic adjustment of the convolution strategy is realized by normalizing the kernel weights using a Softmax operation, ensuring that the sum of all weights equals 1. By dynamically adjusting the convolution kernel weights, the model can flexibly adapt to different input scenarios, improving its robustness in handling diverse and varying inputs.
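The kernel-weighting mechanism described above can be sketched as follows: pool each channel to one statistic, project the statistics to one logit per convolution branch, normalize with Softmax, and mix the branch outputs. This is a simplified sketch that assumes a single scalar weight per branch; the names `kernel_weights` and `mix_branches` and the toy projection matrix are hypothetical, and the actual module may weight branches per channel.

```python
import math


def softmax(logits):
    """Numerically stable softmax; the outputs sum to 1."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def global_avg_pool(feature_map):
    """feature_map: list of channels (2D lists) -> one mean value per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def kernel_weights(feature_map, proj, bias):
    """Map pooled channel statistics to one normalized weight per branch."""
    stats = global_avg_pool(feature_map)
    logits = [sum(p * s for p, s in zip(row, stats)) + b for row, b in zip(proj, bias)]
    return softmax(logits)


def mix_branches(branch_outputs, weights):
    """Weighted sum of same-shaped (flattened) branch outputs."""
    n = len(branch_outputs[0])
    return [sum(w * out[i] for w, out in zip(weights, branch_outputs)) for i in range(n)]


fmap = [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [0.0, 0.0]]]  # 2 channels, 2x2
proj = [[0.1, 0.0], [0.2, 0.0], [0.3, 0.0], [0.4, 0.0]]      # 4 branches
bias = [0.0, 0.0, 0.0, 0.0]
w = kernel_weights(fmap, proj, bias)
mixed = mix_branches([[2.0], [2.0], [2.0], [2.0]], w)
```

Since the weights sum to 1, mixing identical branch outputs returns that output unchanged, which is a quick sanity check on the normalization.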

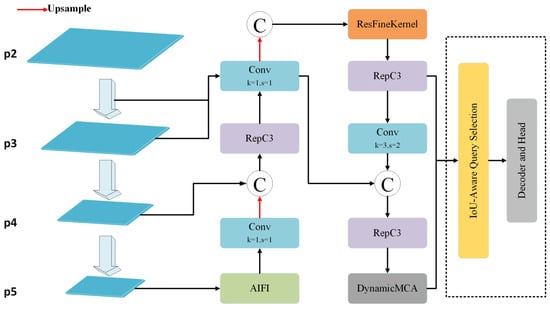

3.3. ResFine Model

Small targets often perform poorly on the conventional P3, P4, and P5 detection layers. A traditional approach to improving small target detection capability is to add a P2 detection layer, but this also introduces a range of issues, such as excessive computational load and more time-consuming post-processing [,]. To effectively fuse feature information from different channels and improve network performance and accuracy, this paper proposes a feature pyramid structure based on the DynCSP block, along with improvements to the original CCFF. As shown in Figure 7, the ResFineKernel module is introduced. First, the P3 layer features and the features processed through the DynCSP block are fused, and then the ResFineKernel module is applied to integrate the features.

Figure 7.

ResFine model structure.

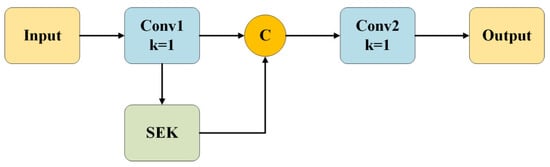

Figure 8 shows the structure of the ResFineKernel module. The core design goal of the ResFineKernel module is to enhance the model’s ability to perceive small targets, especially in feature extraction and information fusion. The module achieves its functionality through the Squeeze-and-Excitation Kernel (SEK) and residual connections. Specifically, the workflow of the ResFineKernel module consists of two main parts: feature extraction and feature fusion.

Figure 8.

ResFineKernel module.

Residual connections effectively mitigate the vanishing gradient problem, especially in small target detection tasks, where retaining high-resolution details is crucial for accurate target localization. Through this feature fusion approach, the model can more precisely extract and integrate key information.

The SEK proposed in this paper is an improved multi-scale convolution branch module. The core idea is to enhance the expression capability of local details through multi-scale feature extraction and an adaptive channel attention mechanism (Squeeze-and-Excitation, SE), thereby improving detection accuracy.

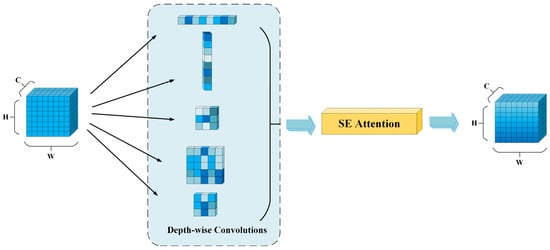

The SEK module uses multiple convolution kernels of different sizes to extract features at different scales, efficiently capturing feature representations from local to global contexts and enhancing small target detection performance through feature fusion. This method not only solves the computational overhead introduced by the P2 layer but also further improves small target detection performance through effective feature integration. The SEK module consists of multiple parallel convolution branches, with each branch using different kernel sizes and dilation settings to capture image information from multiple scales and directions. The specific design is shown in Figure 9. This design ensures that fine-grained information is preserved while balancing global context.

Figure 9.

Squeeze-and-Excitation Kernel.

The outputs from each branch are fused through pixel-wise addition, resulting in a comprehensive feature map that contains multi-scale and multi-angle information, providing rich detail expressions for subsequent detection.

After the multi-scale convolution results are fused, the SE module is introduced to perform channel-wise adaptive re-calibration on the fused features. By using adaptive average pooling, the spatial information of each channel is compressed into global statistics, thereby capturing the global feature distribution. The global description is then reduced and re-expanded through two layers of 1 × 1 convolutions (with a ReLU activation in between), and finally, the channel weights are generated using a sigmoid activation function. The channel weights obtained are multiplied by the multi-scale fused features, enabling the adaptive enhancement of key information.
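The channel re-calibration steps above (squeeze, reduce, ReLU, expand, sigmoid, rescale) can be sketched in plain Python. On a 1 × 1 pooled map, a 1 × 1 convolution reduces to a matrix-vector product, which is what the sketch uses. The function name `se_recalibrate` and the toy weights are hypothetical, and biases are omitted for brevity.

```python
import math


def se_recalibrate(fmap, w_reduce, w_expand):
    """fmap: list of C channels (2D lists). Returns (rescaled fmap, channel weights)."""
    # Squeeze: global average pooling gives one statistic per channel.
    squeezed = [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: reduce -> ReLU -> expand -> sigmoid.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce]
    weights = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_expand
    ]
    # Scale: multiply every value of a channel by that channel's weight.
    scaled = [[[v * wc for v in r] for r in ch] for ch, wc in zip(fmap, weights)]
    return scaled, weights


fmap = [[[2.0, 4.0]], [[1.0, 1.0]]]   # 2 channels, each 1x2
w_reduce = [[1.0, 1.0]]               # 2 channels -> 1 hidden unit
w_expand = [[0.0], [1.0]]             # 1 hidden unit -> 2 channels
scaled, weights = se_recalibrate(fmap, w_reduce, w_expand)
```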

3.4. Dynamic Multi-Channel Attention

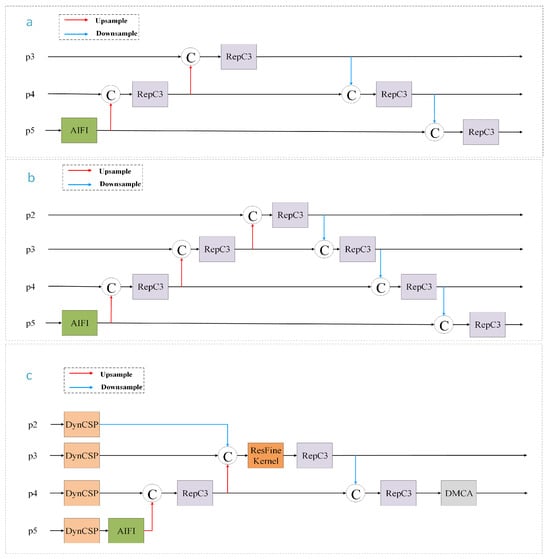

Due to the small size of the targets, lower-level features (P3 and P4) contain more spatial information and are better at preserving target details. The P5 layer, however, undergoes multiple downsampling operations, resulting in lower spatial resolution and the loss of small target details. Therefore, removing the P5 layer reduces reliance on low-resolution features and increases the utilization of high-resolution features. In this paper, the P5 layer is removed from the neck part, and only the P3 and P4 layers are retained. The DynamicMCA (DMCA) module is introduced to enhance the focus on important features, making the network more dependent on lower-level feature maps. Figure 10 illustrates three prediction head structures.

Figure 10.

Schematic diagram of prediction heads: (a) original prediction head of RT-DETR-r18; (b) prediction head of RT-DETR-r18-p2; (c) prediction head of GSTD-DETR.

Compared to the other two, the proposed improvement is effective without introducing excessive computational costs. By removing the P5 layer, the network reduces reliance on low-resolution features, allowing for the increased utilization of high-resolution features, which are better at preserving small target details. Additionally, the introduction of the DMCA module further enhances feature extraction without adding significant computational burden. The lightweight adaptive weighting mechanism of DMCA, combined with Dynamic Channel Reorganization and Spatial Attention Enhancement, ensures that the network focuses on relevant features while maintaining computational efficiency.

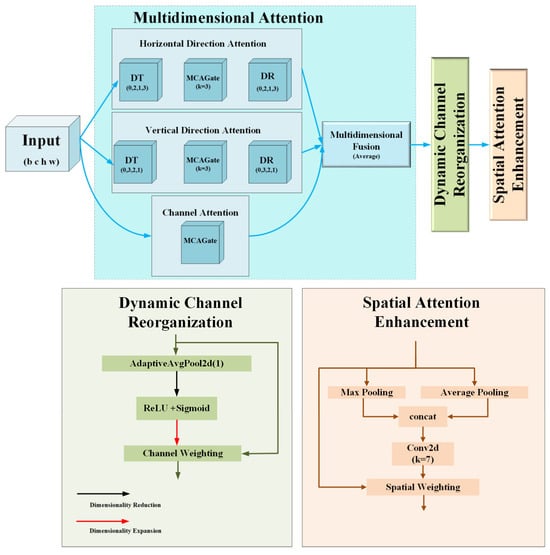

We propose DMCA as an improvement over the original Moment Channel Attention (MCA) mechanism []. DMCA inherits the channel attention mechanism of MCA and enhances it through lightweight adaptive weighting to adjust the importance of feature channels. It captures spatial dependencies by integrating both average and max pooling features. Channel and spatial attention are computed in parallel to form complementary enhancements, and feature self-calibration is achieved via sigmoid activation.

The DMCA structure consists of two main parts: Dynamic Channel Reorganization (DCR) and Spatial Attention Enhancement. The structure is shown in Figure 11.

Figure 11.

DMCA module.

The input feature map undergoes initial feature extraction and channel weighting through the basic MCA process. It is then processed through the DCR and Spatial Attention Enhancement modules.

Dynamic Channel Reorganization is a mechanism that dynamically adjusts the importance of features along the channel dimension, allowing the weight of each channel to be automatically adjusted based on the specific content of the input feature map. Unlike traditional static channel attention mechanisms, DCR uses adaptive average pooling to learn feature channel weights at each layer, enabling more refined control over the influence of each channel in the network.

The Spatial Attention Enhancement mechanism further improves the representation capability of the feature map by weighting the spatial dimensions. First, average pooling and max pooling operations are applied to the feature map of each channel, obtaining different statistical features of the spatial information. These two pooling operations capture important regions along the spatial dimension. After concatenating the results of average and max pooling, the combined feature is input into a 7 × 7 convolution layer to generate a spatial attention weight map. This weight map is normalized using a sigmoid function. Finally, the spatial attention weights are applied to the spatial dimension of the input feature map, enhancing the model’s focus on important spatial locations and reducing attention on less relevant areas.
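A minimal sketch of the spatial attention steps above follows. It assumes CBAM-style pooling, in which the average and maximum are taken across the channel axis at every spatial location; the text does not state the pooling axis explicitly, so this is an assumption. It also assumes zero padding for the 7 × 7 convolution. The name `spatial_attention` and the toy kernel are hypothetical.

```python
import math


def spatial_attention(fmap, kernel, k=7):
    """fmap: C x H x W nested lists; kernel: 2 x k x k weights (avg map, max map)."""
    C, H, W = len(fmap), len(fmap[0]), len(fmap[0][0])
    # Pool across channels at each location (assumed CBAM-style pooling).
    avg = [[sum(fmap[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(fmap[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    pooled = [avg, mx]
    pad = k // 2
    attn = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            acc = 0.0
            # k x k convolution over the two pooled maps, zero padding.
            for p in range(2):
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < H and 0 <= jj < W:
                            acc += kernel[p][di][dj] * pooled[p][ii][jj]
            attn[i][j] = 1.0 / (1.0 + math.exp(-acc))  # sigmoid normalization
    out = [[[fmap[c][i][j] * attn[i][j] for j in range(W)] for i in range(H)]
           for c in range(C)]
    return out, attn


# Two channels with a bright pixel in the centre of a 3x3 map.
fmap = [
    [[0.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 0.0]],
    [[0.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 0.0]],
]
kernel = [[[0.0] * 7 for _ in range(7)] for _ in range(2)]
kernel[1][3][3] = 1.0  # toy kernel: centre tap on the max-pooled map only
out, attn = spatial_attention(fmap, kernel)
```

With this toy kernel the attention map is highest at the bright pixel and equals 0.5 elsewhere, so background responses are attenuated relative to the target location.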

3.5. Area-Weighted NWD Loss Function

Traditional IoU-based bounding box regression methods (such as IoU, GIoU, and CIoU) primarily focus on the geometric relationship between the predicted box and the ground truth (GT) box when computing the loss, such as the overlap area and center distance, while neglecting the influence of the bounding box’s shape and scale on the regression results [,,,]. In small target detection scenarios, the sensitivity of these methods to IoU values becomes more pronounced.

The IoU is calculated using the following formula:

$$\mathrm{IoU} = \frac{|B \cap B^{gt}|}{|B \cup B^{gt}|}$$

The numerator represents the area of the intersection between the predicted bounding box $B$ and the ground truth bounding box $B^{gt}$. The denominator represents the area of the union of the predicted bounding box $B$ and the ground truth bounding box $B^{gt}$, which is the total area covered by both boxes.

Small objects occupy fewer pixels in an image, and their features are relatively less distinct, making the accurate detection of small objects more challenging. Therefore, there is a need for a method that can more precisely describe the bounding box regression loss for small objects to improve the performance of small object detection.

Through an analysis of the characteristics of bounding box regression, it has been observed that differences in the shape and scale of bounding boxes in regression samples lead to variations in IoU values under the same bias conditions []. For small-scale bounding boxes, the impact of shape and bias on the IoU value is more significant. Consequently, when designing the bounding box regression loss function for small object detection, it is essential to take both shape and scale factors into account.
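The scale sensitivity described above can be checked with a short calculation: the same 2-pixel horizontal offset is applied to a 4 × 4 box and to a 40 × 40 box, and the IoU with the unshifted box is compared. The box sizes and the helper names `iou` and `shift_x` are chosen here only for illustration.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def shift_x(box, dx):
    """Translate a box horizontally by dx pixels."""
    return (box[0] + dx, box[1], box[2] + dx, box[3])


small = (0.0, 0.0, 4.0, 4.0)
large = (0.0, 0.0, 40.0, 40.0)
iou_small = iou(small, shift_x(small, 2.0))   # 8 / 24, about 0.33
iou_large = iou(large, shift_x(large, 2.0))   # 1520 / 1680, about 0.90
```

The identical offset drops the IoU of the small box to about 0.33 while the large box stays near 0.90, which is why IoU-based losses and IoU thresholds are harsh on targets only a few pixels wide.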

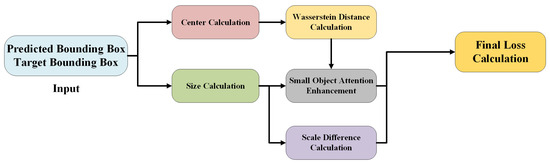

To address this issue, this paper proposes an Area-Weighted NWD (AWNWD) loss function based on the Normalized Wasserstein Distance (NWD) [] loss function. This loss function introduces small object-aware enhancement and scale difference terms. It takes into account the weighted influence of the bounding box position, size, and object area, thereby strengthening the impact of small objects in the loss function. This approach enhances the model’s attention to small objects while ensuring that the model balances the importance of targets at different scales during the loss calculation, thus improving the model’s robustness.

The traditional NWD formula is as follows:

where and represent the center coordinates of the predicted bounding box and the ground truth (GT) box, respectively; and are the width and height of the predicted bounding box, while and are the width and height of the ground truth (GT) box, respectively; and are constants related to the dataset.
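For orientation, the sketch below computes the NWD in its commonly used form, in which each box $(c_x, c_y, w, h)$ is modelled as a 2D Gaussian, the squared 2-Wasserstein distance is $(\Delta c_x)^2 + (\Delta c_y)^2 + (\Delta w / 2)^2 + (\Delta h / 2)^2$, and the similarity is $\exp(-\sqrt{W_2^2}/C)$. This form is assumed from the standard NWD definition rather than recovered from this section, and the symbol $C$ and function name `nwd` are chosen here; the value 12.8 is the default NWD constant mentioned in Section 4.1. The area-weighting and scale-difference terms of AWNWD are not reproduced.

```python
import math


def nwd(pred, gt, C=12.8):
    """Normalized Wasserstein Distance between boxes given as (cx, cy, w, h)."""
    w2_sq = (
        (pred[0] - gt[0]) ** 2
        + (pred[1] - gt[1]) ** 2
        + ((pred[2] - gt[2]) / 2.0) ** 2
        + ((pred[3] - gt[3]) / 2.0) ** 2
    )
    return math.exp(-math.sqrt(w2_sq) / C)


gt = (10.0, 10.0, 4.0, 4.0)
near = (12.0, 10.0, 4.0, 4.0)   # 2-pixel offset, boxes still overlap
far = (15.0, 10.0, 4.0, 4.0)    # 5-pixel offset, boxes no longer overlap
nwd_same = nwd(gt, gt)
nwd_near = nwd(near, gt)
nwd_far = nwd(far, gt)
```

Unlike IoU, which is exactly zero for the non-overlapping pair, the NWD still decreases smoothly with distance, so it continues to provide a useful regression signal for very small boxes.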

The basic form of AWNWD is as follows:

First, the sigmoid function is used to map the area to a range between 0 and 1, resulting in an area weight. The area weight, computed using the sigmoid function, dynamically adjusts based on the size of the object, allowing the model to place more emphasis on smaller target details. By applying this weight to the Wasserstein distance, the model’s focus on small objects is enhanced by increasing the importance of small target regions during the distance calculation. This adjustment improves the model’s ability to detect small objects by making the loss function more sensitive to small-scale variations.

Next, the logarithmic difference in the area ratio between the predicted bounding box and the ground truth bounding box is computed and normalized using the sigmoid function. Incorporating this difference term into the loss function helps the model focus on the impact of scale variations on detection performance. By combining both the area weight and the scale difference, this approach ensures that the model can better handle target scale variations and enhance detection performance, especially for small objects.

The formula for the sigmoid activation function is as follows:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

The calculation of the area weight is as follows:

The calculation of scale difference is as follows:

The calculation of the weighted Wasserstein distance is as follows:

The final loss is calculated as follows:

Here, and are hyperparameters. The computational flow of the AWNWD loss is illustrated in Figure 12.

Figure 12.

AWNWD computational flowchart.

4. Results

4.1. Dataset and Experimental Setup

The SpotGEO dataset [,], released by the European Space Agency (ESA) in June 2020, serves as the official dataset for the GEO satellite object detection competition. This dataset was acquired from multiple nighttime observations using ground-based telescopes located at various sites, ensuring the richness of the data. Each frame of the dataset had a resolution of 640 × 480 pixels.

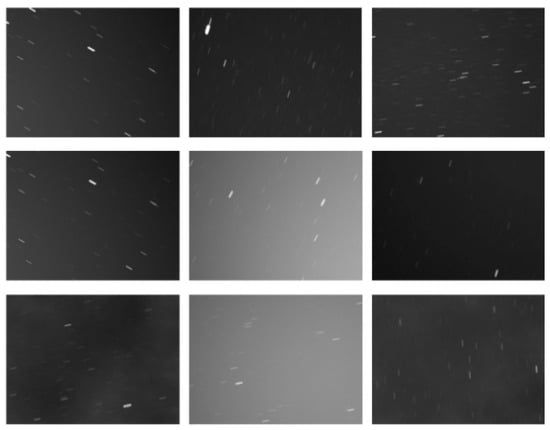

In this experiment, the dataset was divided into training, validation, and testing sets at a ratio of 7:1:2. Specifically, 4480 images were used for the training set, 640 for the validation set, and 1280 for the testing set. Figure 13 illustrates some images from the dataset.

Figure 13.

Sample images from the SpotGEO dataset.

Table 1 presents the experimental environment and parameter settings for the RT-DETR-related experiments in this paper. To ensure a fair comparison and facilitate reproducibility, both the proposed GSTD-DETR model and the baseline RT-DETR-r18 model were trained using identical hyperparameter settings, including learning rate, batch size, optimizer type, and momentum. All detection models were trained under the same hardware and software conditions, utilizing identical training, validation, and testing datasets. The initial learning rates for the comparison models were set consistently with their respective original papers, with a momentum value of 0.9. Training for all models was terminated upon the convergence of accuracy.

Table 1.

Experimental environment and parameter settings.

The Area-Weighted NWD loss function has two hyperparameters. The first is the constant inherited from NWD, which was kept at the default NWD value of 12.8. For the second, the experimental data in Table 2 show that the model achieved optimal performance at a value of 0.1.

Table 2.

Performance (AP50:95) under different hyperparameter values.

4.2. Evaluation Metrics

Because targets occupy only a few pixels and detection is sensitive to noise and image quality, strict evaluation criteria are required to assess the detection of small space targets. The AP50:95 metric is more rigorous than AP50 because it averages over multiple IoU thresholds and therefore reflects localization quality as well as detection. The recall rate directly reflects how completely small targets are detected. Therefore, this paper selected the precision, recall, AP50, and AP50:95 metrics to evaluate the detection accuracy of the model.

In this paper, average precision (AP) was used as a metric to evaluate the accuracy of the object detection model. AP represents the area under the precision–recall curve, and its calculation formula is as follows:

$$AP = \int_0^1 P(R)\,\mathrm{d}R$$

where $P$ (Precision) represents the probability that a sample predicted as positive is actually positive, and $R$ (Recall) represents the probability that a sample that is actually positive is correctly predicted as positive. The related calculation formulas are as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

In object detection tasks, true positives (TPs) are positive samples that are correctly identified, false positives (FPs) are negative samples that are erroneously classified as positive, and false negatives (FNs) are positive samples that are not detected. A prediction is judged by comparing the IoU between the predicted bounding box and the ground truth bounding box against a predetermined threshold; a higher IoU threshold imposes a stricter criterion. The AP at an IoU threshold of 0.5 is referred to as AP50, whereas the mean of the AP values over IoU thresholds from 0.5 to 0.95, with a step size of 0.05, is referred to as AP50:95. In the case of single-class detection, the mAP50:95 value is equivalent to the AP50:95 value. The calculation of AP50:95 is formally defined as follows:

$$AP_{50:95} = \frac{1}{10}\sum_{i=0}^{9} AP_{5i+50}$$

where $AP_{5i+50}$ represents the average precision when the IoU threshold is $0.05i + 0.5$.
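The metrics defined above can be sketched in plain Python as follows. This is a minimal illustration, not the evaluation code used in the experiments: the function names are hypothetical, detections are assumed to be already matched to ground truth at a given IoU threshold, and AP is integrated with all-point interpolation of the precision–recall curve (evaluation toolkits may instead sample the curve at fixed recall levels).

```python
def precision_recall(tp: int, fp: int, fn: int):
    """Precision = TP / (TP + FP), Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, n_gt: int) -> float:
    """Area under the precision-recall curve for one IoU threshold.

    scores: confidence of each detection
    is_tp:  whether each detection matched a ground truth box
    n_gt:   number of ground truth boxes (TP + FN)
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing in recall (upper envelope of the curve).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def ap_50_95(ap_per_threshold) -> float:
    """Mean AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95."""
    if len(ap_per_threshold) != 10:
        raise ValueError("expected one AP value per IoU threshold (10 values)")
    return sum(ap_per_threshold) / 10.0


# Toy example: three detections, two ground truth targets.
ap = average_precision(scores=[0.9, 0.8, 0.7], is_tp=[True, False, True], n_gt=2)
print(precision_recall(tp=8, fp=2, fn=2), ap)
```

In the toy example, the false positive ranked between the two true positives lowers the AP below 1 even though both targets are eventually found.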

4.3. Ablation Experiments

In the ablation experiments, the RT-DETR-r18 model was employed as the baseline network, and the performance of the GSTD-DETR network was evaluated on the SpotGEO dataset.

Table 3 presents the performance metrics under different model configurations. Listing each configuration's layers, parameters, and GFLOPs shows the contribution of each module. The GSTD-DETR network had 29.74% fewer parameters than the baseline RT-DETR-r18. Although the computational cost increased by 3.26%, the overall AP50:95 improved by 4.9%.

Table 3.

Ablation experiment results.

With the improved backbone network, feature extraction module, feature fusion method, prediction head, and loss function, the AP, AR, and AP50:95 metrics of GSTD-DETR improved significantly, demonstrating the effectiveness of the enhanced modules. Although the computational cost increased, this increase is deemed acceptable given the performance gains.

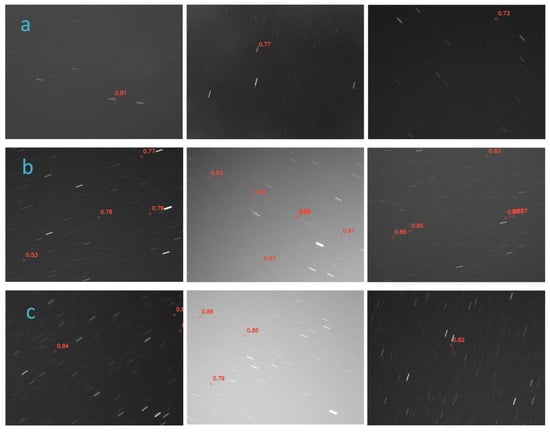

As shown in Figure 14, the partial detection results demonstrate that the model can accurately detect small space targets under various conditions, including multiple targets and complex backgrounds, exhibiting good robustness. Under star occlusion, the algorithm detects most occluded targets; however, a small number of targets were not correctly identified, suggesting that there is still room for optimization in handling occlusion by brighter stars. Overall, the small space target detection algorithm performs well in complex environments.

Figure 14.

Partial detection results: (a) stellar streak occlusion; (b) multiple targets; (c) complex background.

4.4. Comparative Experiments

This paper compared the performance of GSTD-DETR with several state-of-the-art object detection models using the same dataset. Table 4 presents the quantitative results of GSTD-DETR compared to YOLOv8m, YOLOv11m [], RT-DETR-r18, RT-DETR-r18-p2 with the P2 detection head, RT-DETR-r34, and RT-DETR-r50 []. As shown in Table 4, GSTD-DETR achieved the highest accuracy among all of the compared models, with AP50:95 improving by 1.9% over the RT-DETR-r18-p2 model. This result demonstrates the model's performance in detecting small space targets.

Table 4.

Comparison of different detection models.

GSTD-DETR performed well in small object detection. Compared to RT-DETR-r18-p2, AP increased by 1.8%; AR reached 80.5%, an improvement of 1%; AP50 reached 88.6%, an increase of 2.8%; and AP50:95 reached 49.9%, an improvement of 1.9%. These gains show that GSTD-DETR's detection capability for small objects was strengthened while maintaining a good balance between performance and efficiency.

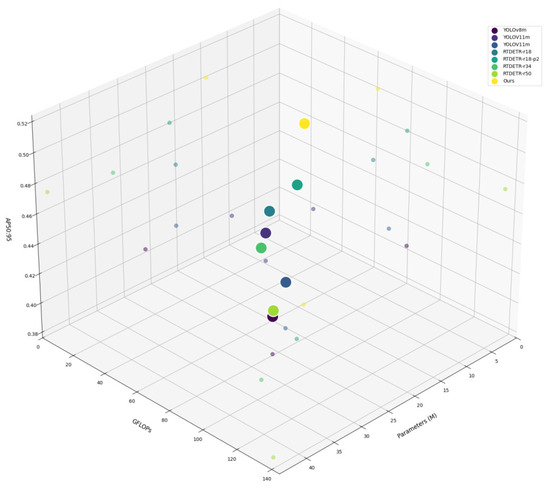

GSTD-DETR requires 60.2 GFLOPs, which is higher than that of some lightweight models but far lower than that of more computationally intensive models such as RT-DETR-r50 (134.4 GFLOPs). This indicates that GSTD-DETR incurs a moderate computational overhead while maintaining performance. The model has 14.03M parameters, and the trained weights occupy 27.2 MB, which is significantly smaller than those of RT-DETR-r18, RT-DETR-r34, and RT-DETR-r50. Compared to more computationally expensive models, the smaller parameter size of GSTD-DETR reduces storage requirements and favors inference speed, making it suitable for practical applications with limited computational resources. The three-dimensional scatter plot of model performance shown in Figure 15 demonstrates that GSTD-DETR achieves a good balance between high accuracy and an appropriate model size.

Figure 15.

Three-dimensional scatter plot of model performance across multiple evaluation metrics.
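As a quick consistency check on the figures quoted in the text, the baseline values implied by the reported relative changes can be recovered with simple arithmetic. The helper name below is hypothetical, and the resulting baseline numbers are derived here from the stated percentages rather than read from the tables, so they should be treated as approximate.

```python
def baseline_from_relative_change(new_value: float, relative_change: float) -> float:
    """Invert new = baseline * (1 + relative_change)."""
    return new_value / (1.0 + relative_change)


# 14.03M parameters after a 29.74% reduction relative to RT-DETR-r18.
implied_params_m = baseline_from_relative_change(14.03, -0.2974)

# 60.2 GFLOPs after a 3.26% increase relative to RT-DETR-r18.
implied_gflops = baseline_from_relative_change(60.2, 0.0326)

print(f"implied baseline parameters: {implied_params_m:.2f}M")
print(f"implied baseline GFLOPs: {implied_gflops:.2f}")
```

Under this reading, the RT-DETR-r18 baseline has roughly 20M parameters and about 58 GFLOPs.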

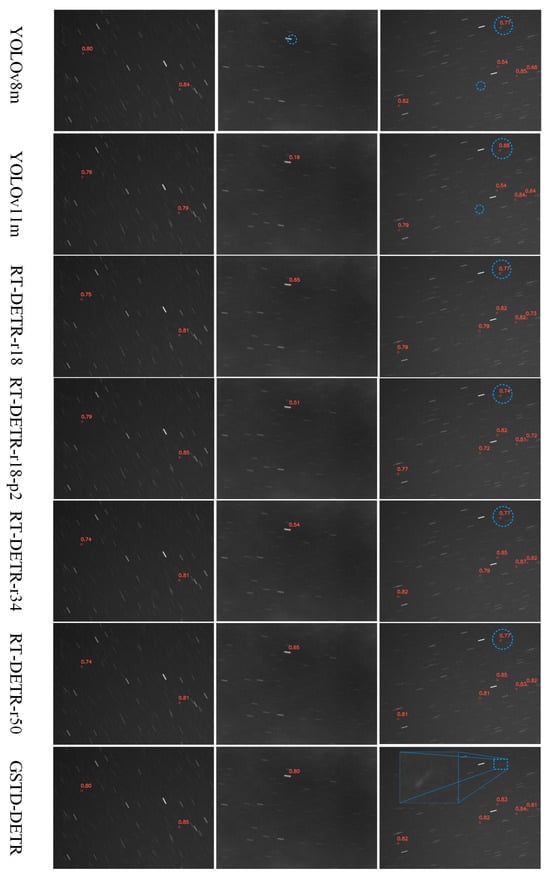

Figure 16 illustrates a comparison of the detection capabilities of the proposed GSTD-DETR method with other state-of-the-art detectors. This comparison highlights the effectiveness of GSTD-DETR in complex scenarios. The first column shows the name of each method.

Figure 16.

Detection results of different types of space targets.

Compared to other object detectors (YOLOv8m, YOLOv11m, RT-DETR-r18, RT-DETR-r18-p2, RT-DETR-r34, and RT-DETR-r50), GSTD-DETR demonstrates superior performance across all metrics.

In the first column of the resulting images, it is visually evident that all models successfully identified the target; however, GSTD-DETR demonstrated notably higher confidence scores. The second column presents the target detection performance under stellar background occlusion. Under such challenging conditions, GSTD-DETR maintained strong robustness and accuracy, whereas the confidence levels of the other models dropped significantly. The final column illustrates scenarios where the background contains blurred objects that resemble the detection targets. Our model is still capable of accurately distinguishing the true target. Enlarged view analysis confirms that the interference object was not a geostationary target; however, the other models incorrectly classified it as a target with high confidence, resulting in false positives.

In summary, GSTD-DETR demonstrates robust detection capabilities under complex backgrounds and occlusion scenarios. With its efficient architecture and reliable performance, the algorithm holds significant promise for real-time detection tasks and exhibits strong potential for deployment in practical operational environments.

5. Conclusions

This paper proposed a GSTD-DETR model based on RT-DETR-r18, aiming to achieve high detection accuracy under various conditions while keeping the model size within a reasonable range. First, a DynCSP architecture was introduced as the backbone network, which not only extracted high-quality feature representations but also significantly reduced the number of parameters and computational costs. Second, to enhance the model’s ability to perceive small targets, a ResFineKernel module was proposed, along with an SEK module for feature integration. Subsequently, an improved DMCA mechanism based on MCA was introduced, and modifications to the network’s neck were made, including the removal of the P5 layer, retaining only the P3 and P4 layers, allowing the model to more effectively focus on key feature regions. Finally, an AWNWD loss function based on the NWD loss function was proposed. This loss function increased the model’s focus on small targets.

Under complex external conditions, small object detection tasks are influenced by various factors, including but not limited to atmospheric effects, light pollution, sensor noise, and star background occlusion. To address these challenges, we evaluated the performance of the improved model across various scenarios. The results indicated that the enhanced model outperformed other detection algorithms in terms of accuracy.

However, despite its strong performance, GSTD-DETR has certain limitations. It is designed for single-frame detection, which may be beneficial for real-time detection tasks, but it does not leverage inter-frame information. Additionally, the algorithm’s effectiveness on high-resolution images still requires further validation. In the future, we plan to conduct real-time target detection experiments on hardware platforms, further fine-tuning the model to ensure its capability for high-resolution real-time target detection.

Author Contributions

All authors carried out and analyzed all experiments. Y.Z. (Yijian Zhang) wrote the manuscript, which all authors discussed. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study. Requests to access the datasets should be directed to 15611836130@163.com.

Acknowledgments

Thanks to all who contributed to this paper!

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hussain, K.F.; Safwat, N.E.-D.; Thangavel, K.; Sabatini, R. Space-based debris trajectory estimation using vision sensors and track-based data fusion techniques. Acta Astronaut. 2025, 229, 814–830.

- Koldasbayeva, D.; Tregubova, P.; Gasanov, M.; Zaytsev, A.; Petrovskaia, A.; Burnaev, E. Challenges in data-driven geospatial modeling for environmental research and practice. Nat. Commun. 2024, 15, 10700.

- Guo, Y.; Yin, X.; Xiao, Y.; Zhao, Z.; Yang, X.; Dai, C. Enhanced YOLOv8-based method for space debris detection using cross-scale feature fusion. Discov. Appl. Sci. 2025, 7, 95.

- Su, S.; Niu, W.; Li, Y.; Ren, C.; Peng, X.; Zheng, W.; Yang, Z. Dim and small space-target detection and centroid positioning based on motion feature learning. Remote Sens. 2023, 15, 2455.

- Gao, W.; Niu, W.; Lu, W.; Wang, P.; Qi, Z.; Peng, X.; Yang, Z. Dim small target detection and tracking: A novel method based on temporal energy selective scaling and trajectory association. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 17239–17262.

- Kong, S. Research on Extraction Technology of Faint Targets Against a Dense Stellar Background. Ph.D. Thesis, University of Chinese Academy of Sciences (Institute of Optics and Electronics, Chinese Academy of Sciences), Chengdu, China, 2019.

- Han, L.; Tan, C.; Liu, Y.; Song, R. Research on the on-orbit real-time space target detection algorithm II. Spacecr. Recovery Remote Sens. 2021, 42, 122–131.

- Yang, Y.; Yu, L.; Mao, X.; Yan, X.; Zheng, X. Algorithm of space target quick acquisition in the complex background of the sky. Acta Photonica Sin. 2020, 49, 62–71.

- Yao, Y.; Zhu, J.; Liu, Q.; Lu, Y.; Xu, X. An adaptive space target detection algorithm. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6517605.

- Wang, M.; Zhao, J.; Chen, T.; Cui, B. Moving point target detection from faint space based on temporal-space domain. J. Electron Inf. Technol. 2017, 39, 1578–1584.

- Miao, S.; Fan, C.; Wen, G.; Gao, J.; Zhao, G. Space target recognition method based on adaptive spatial filtering multistage hypothesis testing. Acta Photonica Sin. 2021, 50, 1110003.

- Pan, H.; Song, G.; Xie, L.; Zhao, Y. Detection method for small and dim targets from a time series of images observed by a space-based optical detection system. Opt. Rev. 2014, 21, 292–297.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Proceedings, Part I 14, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Cham, Switzerland, 2016.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Abay, R.G.K. GEO-FPN: A convolutional neural network for detecting GEO and near-GEO space objects from optical images. In Proceedings of the 8th European Conference on Space Debris, Darmstadt, Germany, 20–23 April 2021.

- De Vittori, A.; Cipollone, R.; Di Lizia, P.; Massari, M. Real-time space object tracklet extraction from telescope survey images with machine learning. Astrodynamics 2022, 6, 205–218.

- Jia, P.; Liu, Q.; Sun, Y. Detection and classification of astronomical targets with deep neural networks in wide-field small aperture telescopes. Astron. J. 2020, 159, 212.

- Guo, X.; Chen, T.; Liu, J.; Liu, Y.; An, Q. Dim space target detection via convolutional neural network in single optical image. IEEE Access 2022, 10, 52306–52318.

- Chen, S.; Wang, H.; Shen, Z.; Wang, K.; Zhang, X. Convolutional long-short term memory network for space debris detection and tracking. Knowl.-Based Syst. 2024, 304, 112535.

- Liu, S.; Guo, Y.; Wang, G. Space target detection algorithm based on attention mechanism and dynamic activation. Laser Optoelectron. Prog. 2022, 59, 236–242.

- Yuan, Y.; Bai, H.; Wu, P.; Guo, H.; Deng, T.; Qin, W. An intelligent detection method for small and weak objects in space. Remote Sens. 2023, 15, 3169.

- Wang, X.; Xi, B.; Xu, H.; Zheng, T.; Xue, C. AgeDETR: Attention-guided efficient DETR for space target detection. Remote Sens. 2024, 16, 3452.

- Carion, N.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Lv, W.; Zhao, Y.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Shafiq, M.; Gu, Z. Deep residual learning for image recognition: A survey. Appl. Sci. 2022, 12, 8972.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Chen, B.; Zou, X.; Zhang, Y.; Li, J.; Li, K.; Xing, J.; Tao, P. LEFormer: A hybrid CNN-Transformer architecture for accurate lake extraction from remote sensing imagery. In Proceedings of the ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes, Greece, 14–19 May 2023.

- Xiao, Y.; Xu, T.; Yu, X.; Fang, Y.; Li, J. A lightweight fusion strategy with enhanced inter-layer feature correlation for small object detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4708011.

- Zheng, C.; Li, Y.; Li, J.; Li, N.; Fan, P.; Sun, J.; Liu, P. Dynamic convolution neural networks with both global and local attention for image classification. Mathematics 2024, 12, 1856.

- Chen, D.; Zhang, L. SL-YOLO: A stronger and lighter drone target detection model. arXiv 2024, arXiv:2411.11477.

- Gong, C.X.Y.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards large-scale small object detection: Survey and benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

- Yu, Y.; Zhang, Y.; Cheng, Z.; Song, Z.; Tang, C. MCA: Multidimensional collaborative attention in deep convolutional neural networks for image recognition. Eng. Appl. Artif. Intell. 2023, 126 Pt C, 107079.

- Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. UnitBox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

- Zhang, H.; Zhang, S. Shape-IoU: More accurate metric considering bounding box shape and scale. arXiv 2023, arXiv:2312.17663.

- Zhang, H.; Zhang, S. Focaler-IoU: More focused intersection over union loss. arXiv 2024, arXiv:2401.10525.

- Wang, J.; Xu, C.; Yang, W.; Yu, L. A normalized Gaussian Wasserstein distance for tiny object detection. arXiv 2022, arXiv:2110.13389.

- Chen, B.; Liu, D.; Chin, T.J.; Rutten, M.; Derksen, D.; Martens, M.; Looz, M.; Lecuyer, G.; Izzo, D. Spot the GEO satellites: From dataset to Kelvins SpotGEO challenge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021.

- Kelvins Spotgeo Challenge. Available online: https://kelvins.esa.int/spot-the-geo-satellites/home/ (accessed on 25 December 2024).

- Ultralytics. Available online: https://github.com/ultralytics/ultralytics (accessed on 7 December 2024).

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).