A Systematic Review and Classification of HPC-Related Emerging Computing Technologies

Abstract

1. Introduction

- The diminishing returns of Moore’s Law;

- Rising energy consumption costs;

- Scalability constraints and memory management complexities;

- The exponential growth of data and the demand for real-time processing in applications such as the Internet of Things, precision medicine, climate modeling, and robotics [8].

- Classifying each emerging HPC-related technology by its practical software tools, such as the required frameworks and programming languages, simulators, analyzers, and solvers.

- Determining the main challenges, providers, benefits, applications, and research gaps of each emerging HPC-related technology.

- Determining the practical use cases of each emerging HPC-related technology.

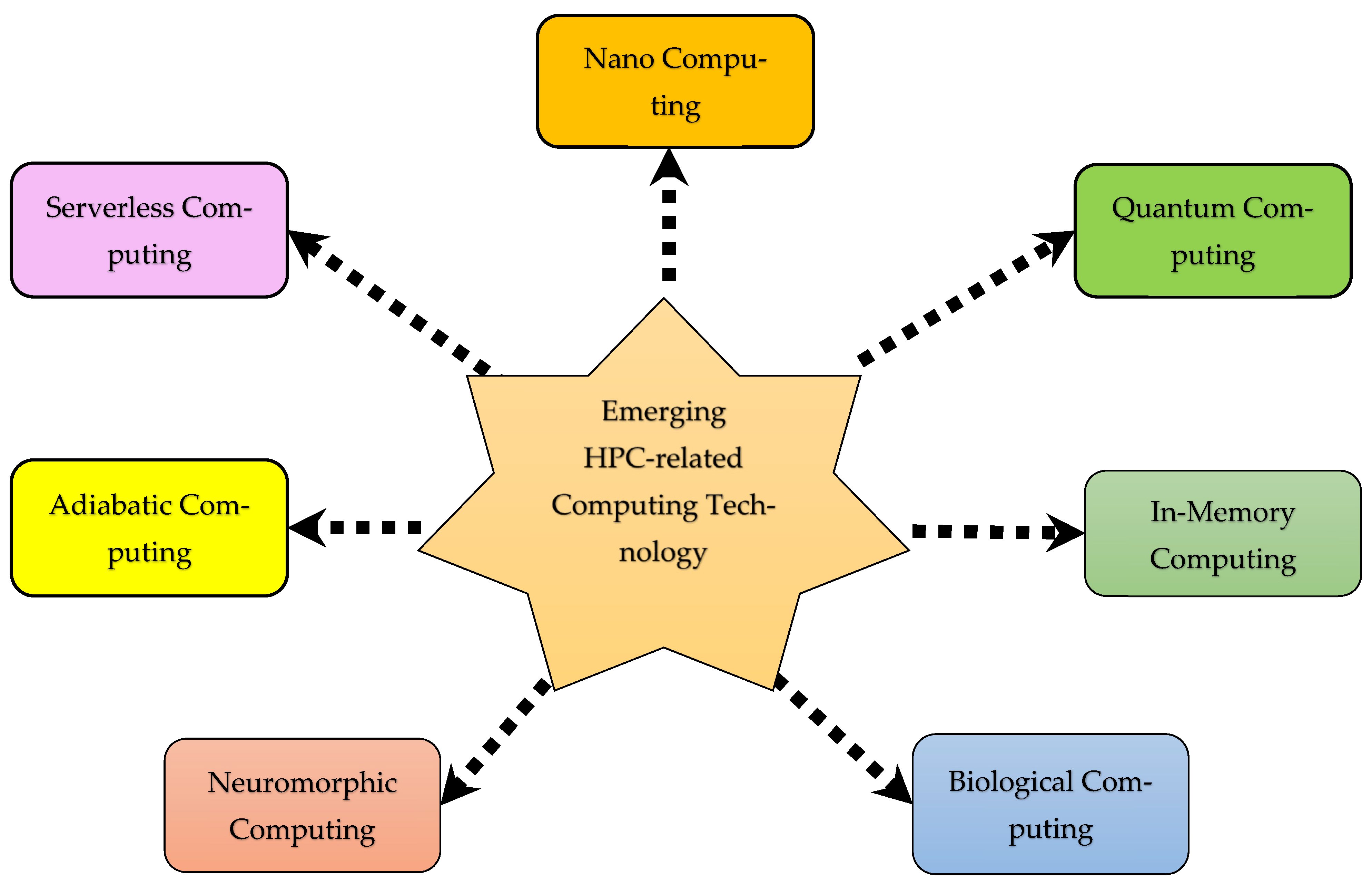

- Proposing a holistic perspective on seven emerging HPC-related computing technologies (serverless, quantum, adiabatic, nano, biological, in-memory, and neuromorphic) and introducing complementary future research directions in the field (green HPC, AI–HPC integration, GPU cloud computing, edge-based high-performance computing, exascale computing and beyond, etc.).

2. Related Work

Summary and Comparison

3. Methodology

3.1. Research Objective

3.2. Research Questions

- What are the future research directions in each emerging HPC-related technology?

- What research challenges are associated with emerging HPC-related technologies?

- What testbed experiments, frameworks, and tools are associated with each emerging HPC-related technology?

- What are the potential impact, scientific challenge level, maturity level, and interdisciplinary level of each emerging HPC-related technology?

- What are the main providers, benefits, and application areas of each emerging HPC-related technology?

3.3. General Methodology

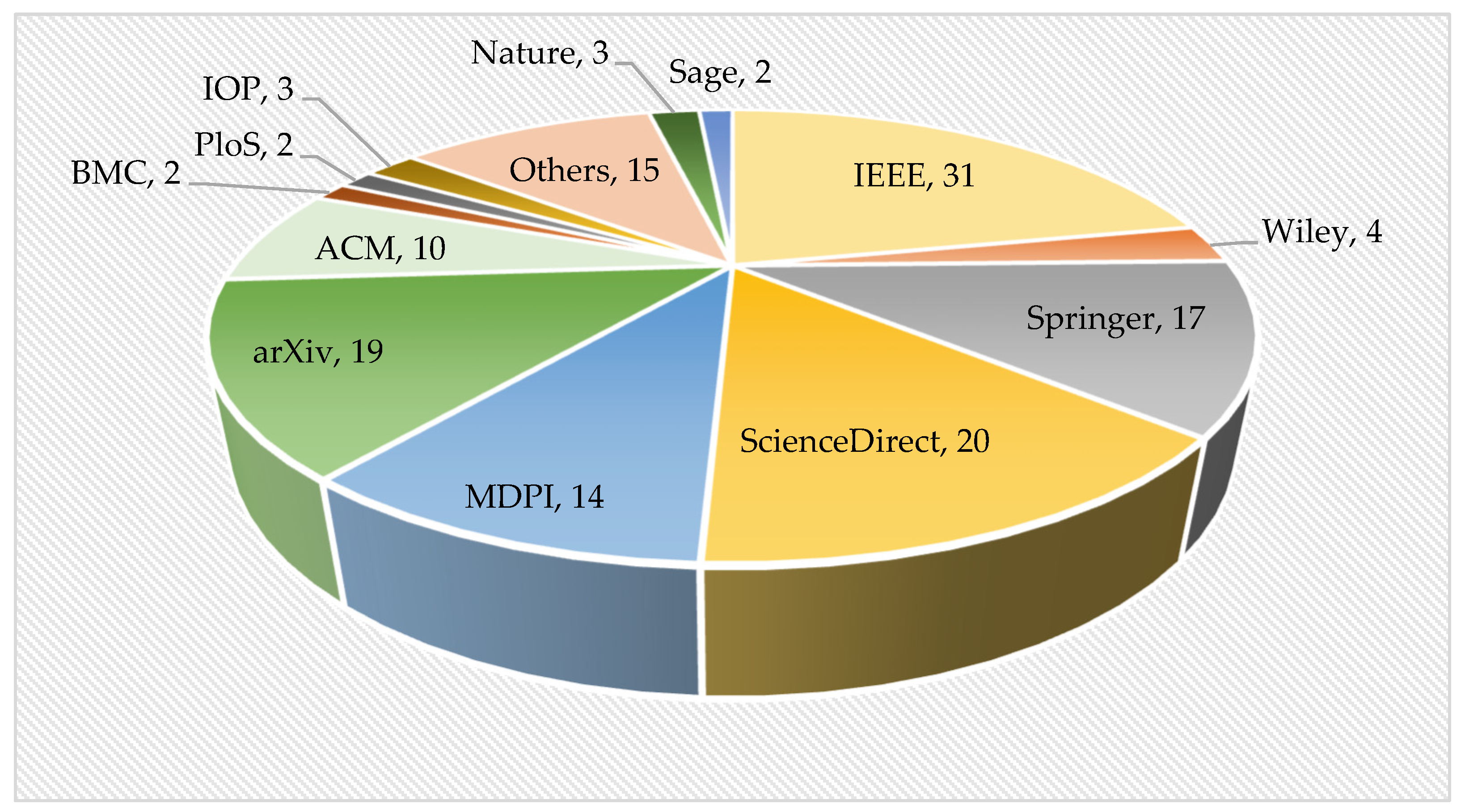

3.4. Data Collection

- Focus on emerging HPC-related computing technologies.

- Inclusion of practical and hardware-related solutions in relevant domains.

- Presence of practical and empirical analyses related to high-performance computing.

3.5. Statistical Analysis and Research Directions

3.6. Limitations

3.7. Compliance with PRISMA

3.8. Inclusion/Exclusion Criteria

4. Emerging HPC-Related Technologies

- Fundamental innovation: The technology must be disruptive and based on principles different from those of conventional technologies (e.g., quantum computing).

- Low maturity level (low TRL): The technology has not yet reached widespread application and remains primarily at the research or experimental stage.

- High impact potential: The technology has the capacity to transform processing speed, scalability, security, or efficiency.

- Interdisciplinarity: The technology has emerged from the convergence of diverse scientific fields.

- Scientific and implementation challenges: Open questions and complex technical challenges remain, which indicates the technology’s emerging status.

- High global research focus: The technology attracts the attention of research institutions and appears in current scientific publications.

4.1. Quantum Computing

4.1.1. Definition of Quantum Computing

4.1.2. Key Quantum–Mechanical Concepts in Quantum Computing

- Superposition: In classical computing, a bit is either 0 or 1. A qubit, by contrast, can exist in a superposition of the 0 and 1 states until it is measured, at which point it yields 0 or 1 with probabilities given by its amplitudes [15].

- Entanglement: Two or more qubits can become correlated so that the state of one cannot be described independently of the others, even when they are separated by large distances. This property underpins much of quantum computing’s power [27].

- Interference: Quantum algorithms use interference to amplify the probability amplitudes of correct outcomes and cancel out those of incorrect ones.
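
The three concepts above can be made concrete with a small state-vector calculation. The following is a minimal pure-Python sketch, not tied to any of the frameworks listed in Section 4.1.5: a Hadamard gate creates superposition, a CNOT gate turns it into an entangled Bell state, and applying the Hadamard twice shows interference cancelling the |1⟩ component. The basis ordering and helper names are illustrative assumptions.

```python
import math

# Two-qubit state vector as a list of amplitudes in the basis |00>, |01>, |10>, |11>
# (the left bit is qubit 0). Pure Python; no quantum SDK is assumed.

def hadamard_on_q0(state):
    """Apply a Hadamard gate to qubit 0."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    # H|0> = (|0> + |1>)/sqrt(2) and H|1> = (|0> - |1>)/sqrt(2)
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_q0_to_q1(state):
    """CNOT with qubit 0 as control and qubit 1 as target: swaps |10> and |11>."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def probabilities(state):
    """Born rule: measurement probability is the squared magnitude of each amplitude."""
    return [abs(a) ** 2 for a in state]

start = [1.0, 0.0, 0.0, 0.0]               # |00>
superposed = hadamard_on_q0(start)         # (|00> + |10>)/sqrt(2): superposition
bell = cnot_q0_to_q1(superposed)           # (|00> + |11>)/sqrt(2): entanglement
print([round(p, 3) for p in probabilities(bell)])  # [0.5, 0.0, 0.0, 0.5]

# Interference: a second Hadamard makes the |10> contributions cancel,
# returning qubit 0 to |0> with probability 1 (up to rounding).
print([round(p, 3) for p in probabilities(hadamard_on_q0(superposed))])  # [1.0, 0.0, 0.0, 0.0]
```

Measuring the Bell state gives 00 or 11 with equal probability and never 01 or 10, which is the correlation that the entanglement bullet describes.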

4.1.3. Key Differences from Classical Computing

4.1.4. Important Algorithms in Quantum Computing

- Shor’s Algorithm: Efficient integer factorization, which could be used to break RSA encryption.

- Grover’s Algorithm: Quadratically accelerated search of an unstructured database.

- Quantum Fourier Transform (QFT): The quantum analog of the discrete Fourier transform.
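
Grover’s quadratic speedup comes from repeating two reflections about (π/4)√N times: an oracle that flips the sign of the marked item’s amplitude, and a diffusion step that inverts every amplitude about the mean. The sketch below simulates these amplitude dynamics classically for a small search space. It assumes a single marked item and real amplitudes, and it illustrates the mechanism only, since a classical simulation offers no speedup.

```python
import math

def grover_probabilities(n_items, marked, iterations=None):
    """Classically simulate Grover search over n_items basis states with one marked item."""
    if iterations is None:
        # Close-to-optimal number of iterations: floor(pi/4 * sqrt(N))
        iterations = max(1, math.floor(math.pi / 4 * math.sqrt(n_items)))
    amps = [1 / math.sqrt(n_items)] * n_items     # uniform superposition
    for _ in range(iterations):
        amps[marked] = -amps[marked]              # oracle: flip the phase of the marked item
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]       # diffusion: inversion about the mean
    return [a * a for a in amps]                  # amplitudes stay real in this model

print(round(grover_probabilities(4, marked=2)[2], 3))     # 1.0 after a single iteration
print(round(grover_probabilities(64, marked=10)[10], 3))  # close to 1 after 6 iterations
```

For N = 4, one iteration moves all probability onto the marked item. For N = 64, six iterations suffice, compared with an expected 32 classical lookups.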

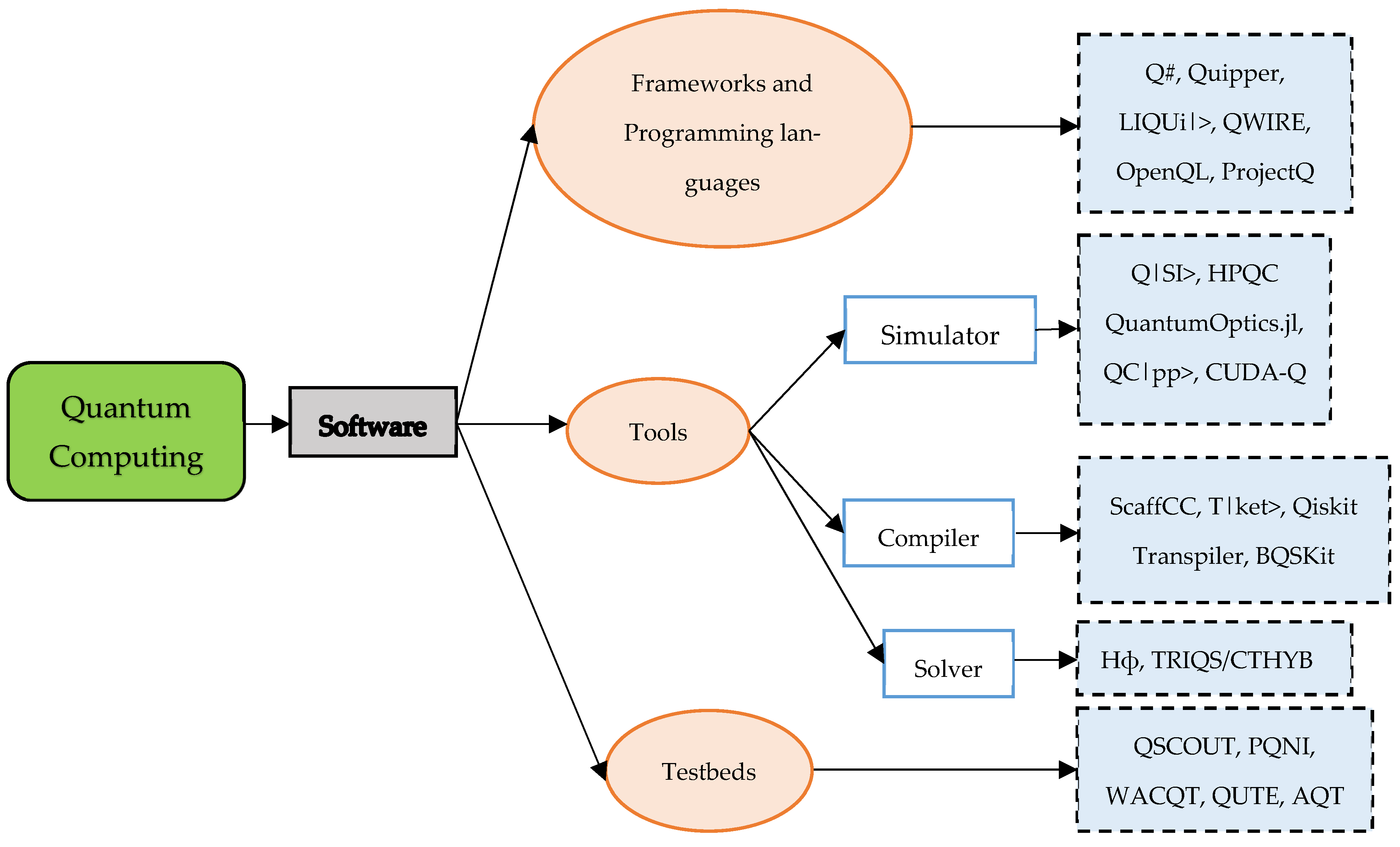

4.1.5. Frameworks and Programming Languages

- Qiskit (IBM) version 2.0: An open-source quantum software development framework providing tools to create and manipulate quantum programs and run them on physical devices and simulators [34]. Qiskit includes modules for quantum circuits, algorithms, and applications, supporting research and development across quantum computing domains.

- PennyLane (Xanadu) version 0.41.1: A Python library for differentiable quantum programming that integrates with major machine learning libraries such as TensorFlow v2.16.1 and PyTorch v2.7.0 [35]. PennyLane enables the training of purely quantum and hybrid quantum–classical models, advancing quantum machine learning.

- Cirq (Google) (https://quantumai.google/cirq/start/install, last accessed 15 June 2025): A Python library for designing, simulating, and running quantum circuits on Google’s quantum processors [36]. Cirq provides tools for developing quantum algorithms, optimizing circuits, and benchmarking quantum hardware performance, which makes it widely used by researchers and developers.

- Q#: A domain-specific programming language designed to express quantum algorithms.

- Quipper: An embedded language hosted in Haskell. It is universal and can be used to describe quantum circuits, algorithms, and circuit transformations.

- LIQUi|>: A software architecture and toolkit for quantum computing. Its suite includes programming languages, optimization and scheduling algorithms, and quantum simulators.

- QWIRE: A quantum circuit language with two roles: describing quantum circuits and manipulating them from within any chosen classical host language, which acts as an interface.

- OpenQL: A portable quantum programming framework for quantum accelerators, which work alongside classical computers to speed up specific computations.

- ProjectQ: An open-source framework that allows quantum algorithms to be tested via simulation and executed on real quantum hardware.

4.1.6. Tools

Simulators

- Q|SI>: An embedded .NET-based language that is extended into a quantum while-language.

- QuantumOptics.jl: A numerical simulator for research in quantum optics and quantum information.

- CUDA-Q: An open-source quantum development platform that orchestrates the hardware and software needed to run useful, large-scale quantum computing applications.

- QC|pp>.

Compilers

- ScaffCC: A scalable compiler for large-scale quantum programs.

- T|ket>: A retargetable compiler for Noisy Intermediate-Scale Quantum (NISQ) devices.

- Qiskit Transpiler: It is used to write new circuit transformations (known as transpiler passes) and combine them with existing passes, which can greatly reduce the depth and complexity of quantum circuits.

- BQSKit: A powerful and portable quantum compiler framework that compiles quantum programs into efficient physical circuits for any quantum processing unit (QPU).
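
Transpiler passes of this kind rewrite a circuit into an equivalent one with fewer gates. The sketch below shows one such rewrite as a hypothetical stand-alone function, not the Qiskit pass API. It removes pairs of identical self-inverse gates (for example, H followed by H is the identity) when no other gate acting on the same qubits sits between them. The tuple circuit format is an illustrative assumption.

```python
# Hypothetical toy pass (not the Qiskit API): cancel pairs of identical
# self-inverse gates when no other gate on those qubits sits between them.
SELF_INVERSE = {"h", "x", "y", "z", "cx", "swap"}

def cancel_inverse_pairs(circuit):
    """circuit: list of (gate_name, qubit_tuple) in execution order."""
    out = []
    for name, qubits in circuit:
        # Most recent kept gate that shares at least one qubit with this gate
        j = next((i for i in range(len(out) - 1, -1, -1)
                  if set(out[i][1]) & set(qubits)), None)
        if j is not None and name in SELF_INVERSE and out[j] == (name, qubits):
            del out[j]                  # G followed by G is the identity
        else:
            out.append((name, qubits))
    return out

circuit = [("h", (0,)), ("h", (0,)), ("cx", (0, 1)), ("x", (2,)),
           ("cx", (0, 1)), ("z", (1,))]
print(cancel_inverse_pairs(circuit))   # [('x', (2,)), ('z', (1,))]
```

Gates that act only on other qubits (here `x` on qubit 2) commute with the pair, so they do not block the cancellation. Because removals happen on the output list, nested pairs such as X H H X also collapse completely.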

Solvers

- Hϕ: A specialized Lanczos-type solver suitable for various quantum lattice models.

- TRIQS/CTHYB: A continuous-time quantum Monte Carlo simulation and solver tool.

Testbed Experiments

- QSCOUT: A quantum computing testbed based on trapped ions that is available to the research community as an open platform for a range of quantum computing applications.

- PQNI: A platform to accelerate the integration of quantum systems with active, real-world networks.

- WACQT: A testbed facility at Chalmers University of Technology designed to support the development and testing of quantum algorithms and hardware.

- QUTE: A general-purpose quantum computing simulator that, when deployed on the ISAAC supercomputing infrastructure, allows for the simulation of quantum circuits. It is easy to use and completes, in a few minutes, complex quantum simulations that would take hours or even days on an ordinary computer.

- AQT: A full-stack platform for collaborative research and development that explores and defines the future of superconducting quantum computers from end to end.

4.1.7. Use Cases

- Drug discovery and development;

- Financial modeling;

- Fraud detection;

- Credit scoring;

- Materials science simulation;

- Quantum key distribution;

- Logistics and supply chain management;

- Climate change modeling.

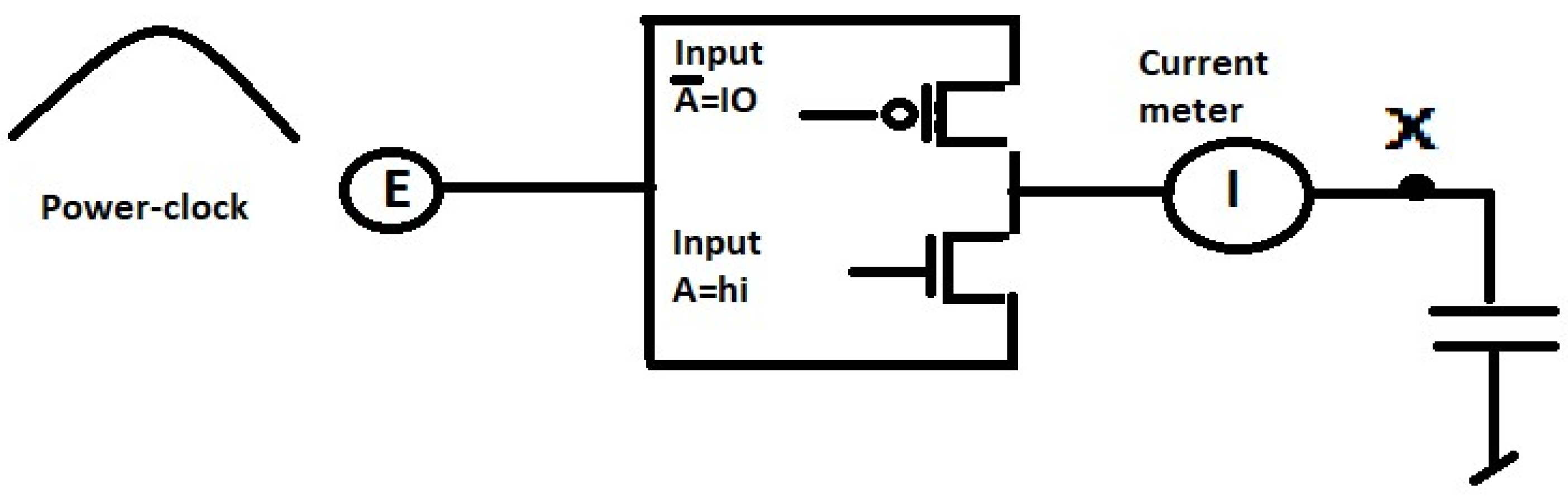

4.2. Adiabatic Computing

- Very little energy is consumed when logical states change.

- Energy that would normally be lost as heat in classical digital circuits is recovered or retained.

- Fully adiabatic circuits, in which charging is performed very slowly so that very little energy is dissipated per operation.

- Quasi-adiabatic circuits, in which charging occurs with a reduced potential drop and part of the energy is recovered.

- Non-adiabatic circuits, which make no attempt to reduce the potential drop or recover the transferred energy [50].
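
The energy argument behind adiabatic circuits can be checked with the standard RC charging model. When a load capacitance C is charged abruptly through a resistance R to a voltage V, ½CV² is dissipated regardless of R. If the supply instead ramps linearly over a time T, the dissipation is CV²(RC/T)[1 − (RC/T)(1 − e^(−T/RC))], which falls roughly as RC/T once T ≫ RC. The sketch below evaluates both expressions. The component values are illustrative assumptions, not measurements from a specific technology.

```python
import math

def step_charging_energy(c, v):
    """Energy dissipated in the resistance when C is charged abruptly to v (independent of R)."""
    return 0.5 * c * v ** 2

def ramp_charging_energy(c, v, r, t_ramp):
    """Energy dissipated when the supply ramps linearly from 0 to v over t_ramp."""
    tau = r * c
    x = tau / t_ramp
    # Includes the residual charging after the ramp ends; tends to C*V^2*tau/t_ramp for t_ramp >> tau
    return c * v ** 2 * x * (1 - x * (1 - math.exp(-t_ramp / tau)))

# Illustrative values (assumptions): 1 fF load, 1 V swing, 1 kOhm switch resistance
C, V, R = 1e-15, 1.0, 1e3
tau = R * C
for factor in (0.1, 1, 10, 100):
    ratio = ramp_charging_energy(C, V, R, factor * tau) / step_charging_energy(C, V)
    print(f"T = {factor:>5} RC -> {ratio:.3f} of the conventional loss")
```

With these values, the dissipated fraction drops from about 0.97 at T = 0.1RC to about 0.02 at T = 100RC. This is the trade-off that fully adiabatic circuits exploit: slower switching in exchange for less heat.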

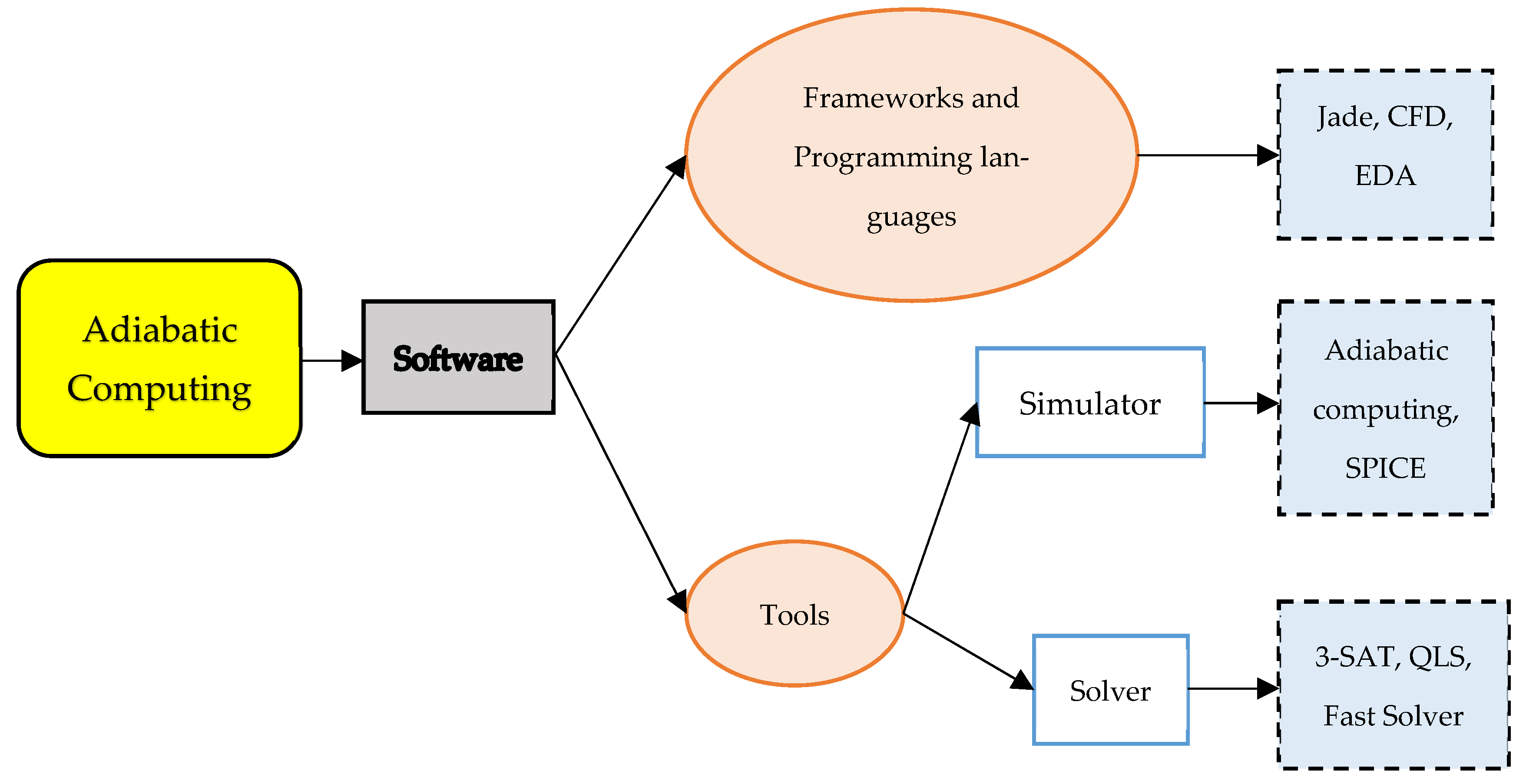

4.2.1. Frameworks and Programming Languages

- Jade: An integrated development environment for adiabatic quantum computing.

- CFD: In computational fluid dynamics (CFD) simulations, an adiabatic condition generally indicates that the modeled system or surface does not exchange heat with its environment, meaning that no heat is added or removed.

- EDA (electronic design automation): A framework tool for adiabatic computing.

4.2.2. Tools

Simulators

- Adiabatic computing;

- SPICE.

Solvers

- 3-SAT: Explores the use of quantum adiabatic algorithms to solve the 3-satisfiability (3-SAT) problem, a classic NP-complete problem in computer science.

- QLS: A solver for adiabatic quantum computing.

- Fast Solver.
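
In the adiabatic approach to 3-SAT, each candidate assignment is a basis state whose energy under the problem Hamiltonian equals the number of clauses it violates, so a satisfying assignment is a zero-energy ground state. The sketch below computes that energy classically and finds the ground states by exhaustive search. This exponential search is exactly the work that the adiabatic evolution is meant to avoid. Encoding clauses as signed integers, as in the DIMACS format, is an illustrative choice.

```python
from itertools import product

def violated_clauses(assignment, clauses):
    """Problem-Hamiltonian energy of one assignment: the number of unsatisfied clauses.
    Literals are signed integers (DIMACS style): k means x_k is true, -k means x_k is false."""
    def literal_true(lit):
        value = assignment[abs(lit) - 1]
        return value if lit > 0 else not value
    return sum(1 for clause in clauses if not any(literal_true(lit) for lit in clause))

def ground_states(n_vars, clauses):
    """Exhaustive search for the minimum-energy assignments (exponential in n_vars)."""
    energies = {bits: violated_clauses(bits, clauses)
                for bits in product((False, True), repeat=n_vars)}
    best = min(energies.values())
    return best, [bits for bits, e in energies.items() if e == best]

clauses = [(1, 2, 3), (-1, -2, 3), (1, -2, -3), (-1, 2, -3)]
energy, solutions = ground_states(3, clauses)
print(energy)          # 0, so the formula is satisfiable
print(len(solutions))  # 4 satisfying assignments
```

A ground-state energy above zero means that the formula is unsatisfiable. In the adiabatic algorithm, the evolution ends in one of the listed ground states with high probability when it runs slowly enough.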

4.2.3. Use Cases

- Drug discovery and development;

- Combinatorial optimization;

- Materials science simulation;

- Training neural networks;

- Feature selection;

- Quantum simulation;

- Portfolio optimization;

- Risk analysis;

- Satellite image analysis;

- Election forecasting.

4.3. Biological Computing

- Biological computing is a reflexive engineering paradigm that deals with programmable and non-programmable information-processing systems that evolve algorithms in response to their environmental needs [52].

- The practical use of biological components, such as proteins, enzymes, and bacteria, for computation.

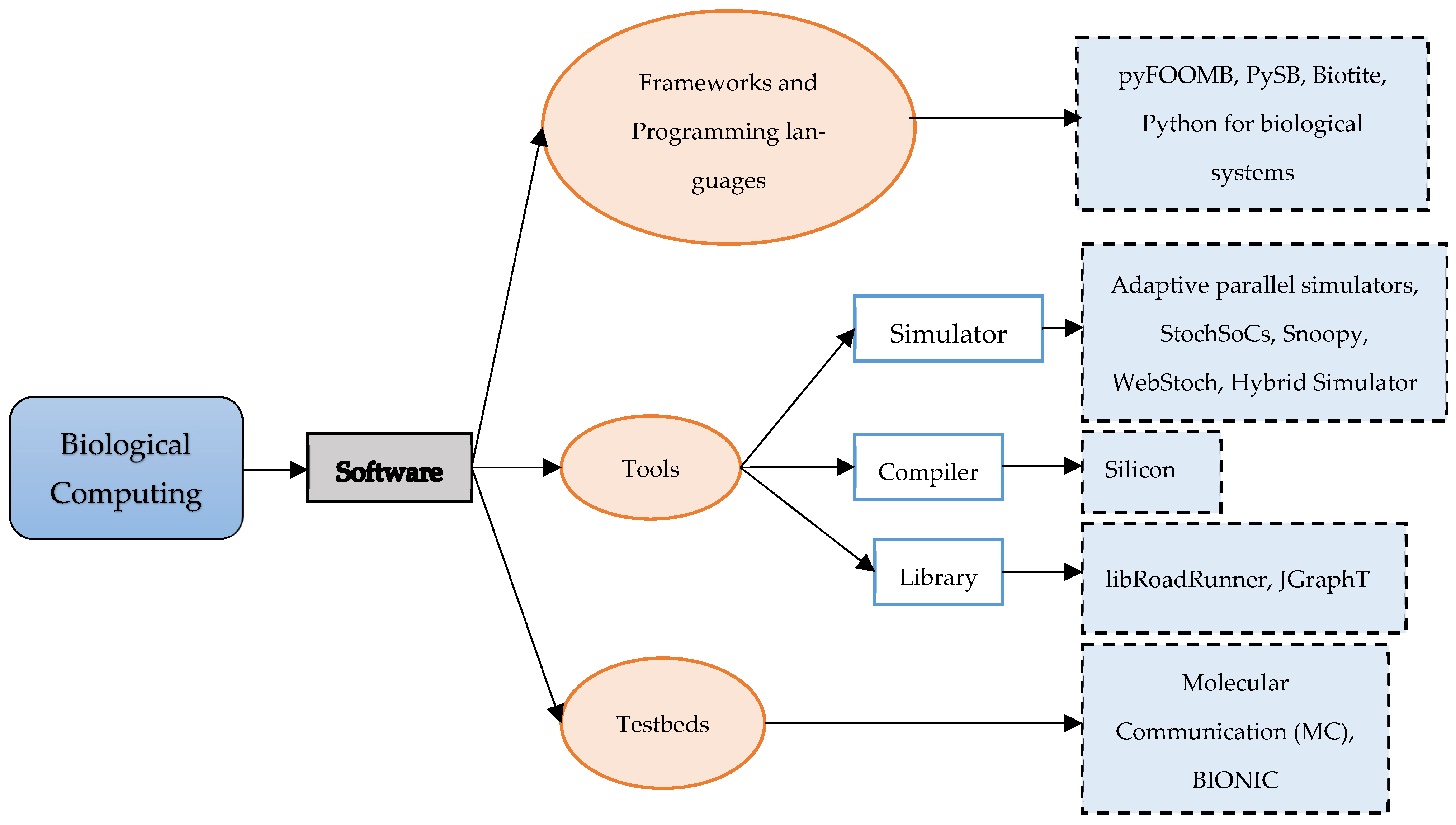

4.3.1. Frameworks and Programming Languages

- PySB (Lopez et al. [53]) is a framework for building mathematical models of biochemical systems as Python programs. It provides a library of macros encoding standard biochemical actions (binding, catalysis, and polymerization), allowing models to be constructed in a high-level, action-based vocabulary. This increases model clarity, reusability, and accuracy. Note that PySB is primarily a mathematical modeling framework for biological networks, not a hardware biocomputing platform.

- Biotite ([54]) is a Python library for sequence and structural bioinformatics that handles biological data using NumPy arrays. It provides a unified and accessible framework for analyzing, modeling, and simulating biological data, and it serves two user groups: novices, who benefit from its easy access, and experts, who leverage its high performance and extensibility.

- A new modeling–programming paradigm named Python for biological systems (Lubak et al. [55]) introduces software-engineering best practices with a focus on Python. It offers modularity, testability, and automated documentation generation.

- pyFOOMB ([55]; Python Framework for Object-Oriented Modeling of Biological Models) is an object-oriented Python package for modeling and simulating biological processes. It enables models to be implemented as ordinary differential equation (ODE) systems in a guided, flexible, and modular manner, and it supports model-based integration and data analysis, ranging from classical lab experiments to high-throughput biological screens.

- Python for biological systems (BioPython v1.85).
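
Frameworks such as PySB and pyFOOMB ultimately turn biochemical rules into ODE systems. The sketch below is a framework-independent illustration and does not use the API of either package. It writes the mass-action ODEs for a reversible binding reaction A + B ⇌ C and integrates them with a simple forward-Euler step. The rate constants, initial concentrations, and step size are arbitrary assumptions.

```python
def simulate_binding(a0, b0, c0, kf, kr, t_end, dt=1e-3):
    """Forward-Euler integration of mass-action kinetics for A + B <-> C."""
    a, b, c = a0, b0, c0
    for _ in range(int(round(t_end / dt))):
        flux = kf * a * b - kr * c     # net forward reaction rate
        a -= flux * dt                 # dA/dt = -kf*A*B + kr*C
        b -= flux * dt                 # dB/dt = -kf*A*B + kr*C
        c += flux * dt                 # dC/dt = +kf*A*B - kr*C
    return a, b, c

# Illustrative parameters (assumptions): unit concentrations, kf = 1, kr = 0.5
a, b, c = simulate_binding(1.0, 1.0, 0.0, kf=1.0, kr=0.5, t_end=20.0)
print(round(a, 4), round(b, 4), round(c, 4))   # 0.5 0.5 0.5 (equilibrium: kf*A*B = kr*C)
```

A production model would normally use an adaptive, stiff-capable integrator instead of fixed-step Euler. The point here is only how a rule ("A binds B at rate kf") maps onto coupled rate equations.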

4.3.2. Tools

Simulators

- Snoopy Hybrid Simulator ([58]): A platform-independent tool offering advanced hybrid simulation algorithms for building and simulating hybrid biological models accurately and efficiently.

- Adaptive parallel simulators;

- StochSoCs: A stochastic simulation tool for large-scale biochemical reaction networks.

- Snoopy: A software application used mainly for the modeling and simulation of biological systems, particularly those that encompass biomolecular networks. It offers a unified Petri net framework that supports several modeling paradigms, such as qualitative, stochastic, and continuous simulation.
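
Stochastic tools such as StochSoCs are built around the stochastic simulation of reaction networks, in which molecule counts change one reaction event at a time. The sketch below is a minimal, self-contained version of Gillespie’s direct method, a standard algorithm, and is not the implementation used by any of the tools listed above. The birth–death example and its rate constants are illustrative assumptions.

```python
import math
import random

def gillespie(x0, propensities, stoichiometry, t_end, seed=0):
    """Gillespie's direct method. propensities[j](x) gives the rate of reaction j,
    and stoichiometry[j] is the change it applies to the state vector x."""
    rng = random.Random(seed)
    t, x = 0.0, list(x0)
    trajectory = [(t, tuple(x))]
    while True:
        rates = [f(x) for f in propensities]
        total = sum(rates)
        if total <= 0:
            break                                    # no reaction can fire any more
        t += -math.log(1.0 - rng.random()) / total   # exponential waiting time
        if t > t_end:
            break
        r = rng.random() * total                     # pick reaction j with probability rates[j]/total
        j = 0
        while j < len(rates) - 1 and r >= rates[j]:
            r -= rates[j]
            j += 1
        x = [xi + dxi for xi, dxi in zip(x, stoichiometry[j])]
        trajectory.append((t, tuple(x)))
    return trajectory

# Birth-death example (illustrative rates): production at 10/s, degradation at 1/s per molecule
traj = gillespie([0], [lambda x: 10.0, lambda x: 1.0 * x[0]], [(1,), (-1,)], t_end=50.0)
print(traj[-1])
```

Each run yields one exact sample path of the chemical master equation. Large-scale tools gain speed by running many such paths in parallel or by approximating the event loop.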

Analyzers

- miRNA Time-Series Analyzer (Cer et al. [59]): An open-source tool written in Perl and R that runs on Linux, macOS, and Windows. It helps scientists detect differential miRNA expression and offers advantages in simplicity, reliability, performance, and broad applicability over existing time-series tools.

- Cytoscape plug-in network v2.7.x.

Compilers

- Medley et al.’s Compiler ([60]): It transforms standard representations of chemical reaction networks and circuits into hardware configurations for simulation on specialized cell-morphic hardware. It supports a wide range of models, including mass-action kinetics, classical enzyme dynamics (Michaelis–Menten, Briggs–Haldane, and Botts–Morales models), and genetic inhibitor kinetics. It has been validated on MAP kinase models, which shows that rule-based models suit this approach.

Libraries

- JGraphT ([61]): A Java library offering efficient, generic graph data structures and a rich set of advanced algorithms. Its natural modeling of nodes and edges supports transport, social, and biological networks. Benchmarks show that JGraphT competes with NetworkX and the Boost Graph Library.

- libRoadRunner v1.1.16 ([62]): An open-source, high-performance, cross-platform library for simulating and analyzing SBML (Systems Biology Markup Language) models. Focused on biochemical networks, it enables both large models and many small models to run quickly and integrates easily with existing simulation frameworks. It also provides a Python API for seamless integration.

4.3.3. Experimental Testbeds

- Molecular Communication (MC): A high-performance Systems Biology Markup Language (SBML) simulation and analysis tool.

- BIONIC: A high-performance SBML simulation and analysis tool.

4.3.4. Use Cases

- Molecular data storage;

- Biological logic circuits;

- Smart drug delivery systems;

- Biological sensors;

- Synthetic biology and genetic circuits;

- Parallel computing;

- Bio-inspired algorithms;

- Security and cryptography.

4.4. Nanocomputing

- Passive Nanostructures (2000–2005): These include dispersed structures (aerosols and colloids) and contact structures (nanocomposites and metals) [65].

- Active Nanostructures (2005–2010): Unlike passive structures, which have stable behavior, active nanostructures exhibit variable or hybrid behavior. They add bioactive features (targeted drug delivery and biosensors) and physico-chemical activity (amplifiers, actuators, and adaptive structures).

- Systems of Nanosystems (2010–2015): The integration of 3D nanosystems into larger platforms through methods such as biologically driven self-organization and robotics with emergent behavior.

4.4.1. Definition

4.4.2. Fundamental Principles

- Moore’s Law: As silicon approaches its scaling limits, the pace of Moore’s Law slows; nanocomputing is proposed as a future alternative to sustain computational progress.

- Quantum Effects: At the nanoscale, quantum phenomena such as tunneling, interference, and superposition become significant.

4.4.3. Core Technologies and Architectures

- Carbon Nanotubes (CNTs): Used as transistors, interconnects, or electron channels.

- Nanocrystals and Quantum Dots: Employed for data storage or computation.

- Nano-transistors: Transistors only a few nanometers in size, made from alternatives to silicon.

- DNA Computing: Leverages biological structures (DNA) for computational operations.

- Switching Molecules: Molecules that toggle between states and are used in computation.

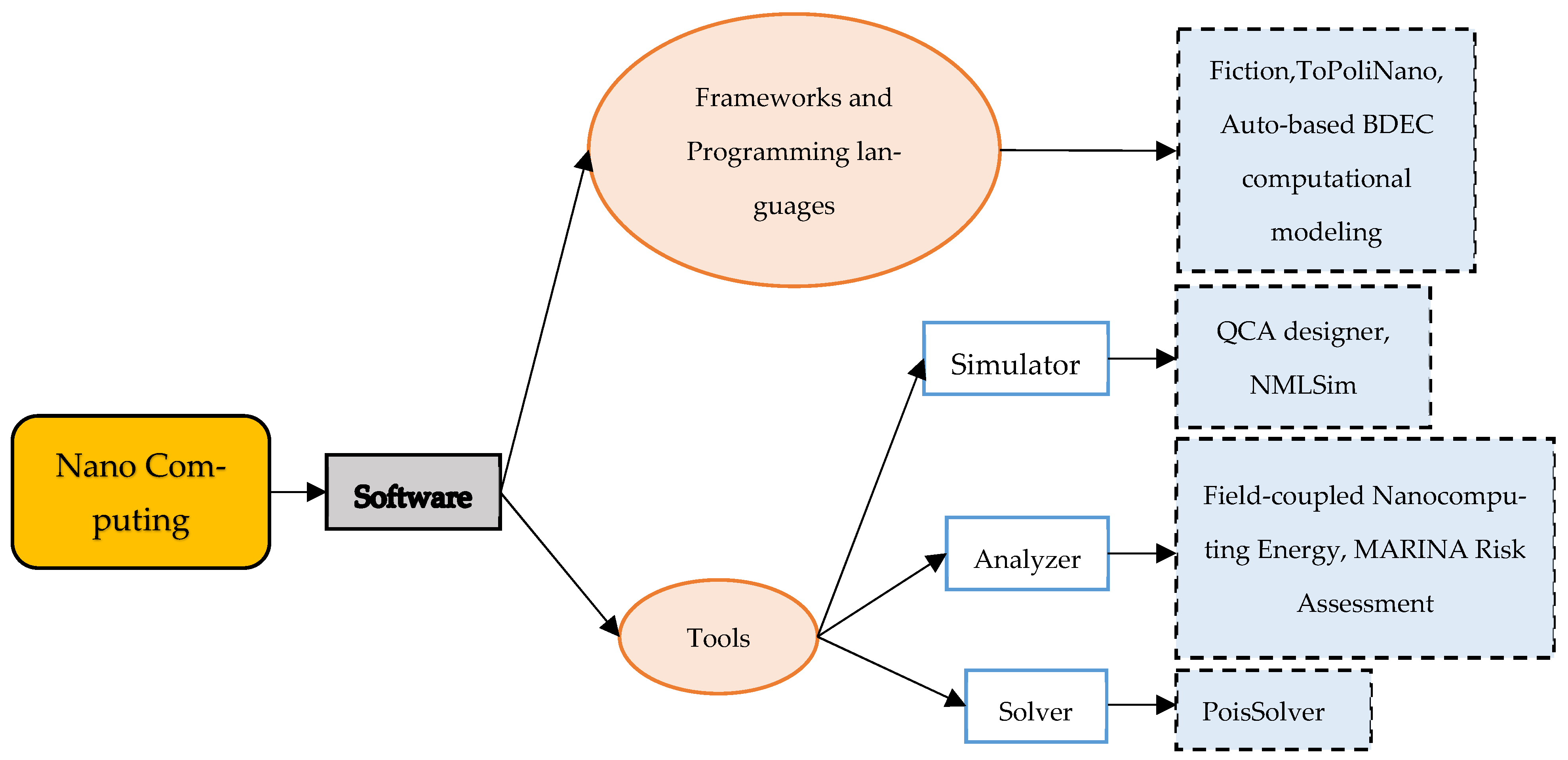

4.4.4. Frameworks and Programming Languages

- Fiction: A design tool for field-coupled nanocomputing;

- ToPoliNano: A design tool for field-coupled nanocomputing;

- Auto-based BDEC Computational Modeling.

4.4.5. Tools

Simulators

- QCADesigner: A simulation and layout design tool for quantum-dot cellular automata (QCA). QCA is a nanotechnology that offers a viable alternative to current CMOS IC technology, enabling more densely integrated circuits that consume little power while operating at high frequencies.

- NMLSim: A simulator for nanomagnetic logic (NML), a technology based on the magnetization of nanometric magnets.
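
QCA circuits, like those designed in QCADesigner, are built from a small set of primitives, chiefly the three-input majority gate and the inverter. The Boolean sketch below is a logic-level abstraction, not a cell-level simulation. It shows how fixing one majority input to 0 or 1 yields AND or OR, and how a full adder’s carry is a single majority gate. For brevity, the sum bit uses Python’s XOR; in QCA, the sum would also be built from majority gates and inverters.

```python
def majority(a, b, c):
    """Three-input majority gate: output is 1 when at least two inputs are 1."""
    return (a & b) | (b & c) | (a & c)

def qca_and(a, b):
    return majority(a, b, 0)      # fixing one input to 0 gives AND

def qca_or(a, b):
    return majority(a, b, 1)      # fixing one input to 1 gives OR

def full_adder(a, b, cin):
    """Carry-out is a single majority gate; the sum uses XOR here for brevity."""
    return a ^ b ^ cin, majority(a, b, cin)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, qca_and(a, b), qca_or(a, b))
```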

Analyzers and Solvers

- Field-coupled Nanocomputing Energy: Software that reads a field-coupled nanocomputing (FCN) layout design, recognizes its logic gates based on a standard cell library, builds a graph representing its netlist, and then calculates the energy losses using two different methods.

- MARINA Risk Assessment: A flexible strategic risk assessment analyzer for nanocomputing applications.

- PoisSolver: A tool for modeling silicon dangling bond clocking networks.

4.4.6. Use Cases

- Ultra-small, energy-efficient processors;

- Quantum computing hardware;

- Medical nanodevices and bio-nanosensors;

- Wearable and implantable devices;

- Environmental monitoring;

- Security and authentication;

- Space and military applications;

- High-density data storage.

4.5. Neuromorphic Computing

- Non-linear data processing;

- Real-time learning;

- Extremely low energy consumption.

- SpiNNaker v4.2.0.46: Developed at the University of Manchester, SpiNNaker (spiking neural network architecture) is a platform designed for the real-time, large-scale simulation of spiking neural networks [80]. Its massively parallel architecture and low power consumption make it well suited to brain-inspired applications such as robotic control, cognitive modeling, and neuroscience research.

- IBM TrueNorth: Developed by IBM Research as part of the DARPA SyNAPSE program, TrueNorth is a neuromorphic computing platform built around a non-von Neumann network of neurosynaptic cores. It enables the efficient, parallel processing of spiking neural networks. TrueNorth chips deliver high performance on tasks such as pattern recognition, sensor data processing, and cognitive computing, demonstrating the real-world potential of neuromorphic computing.

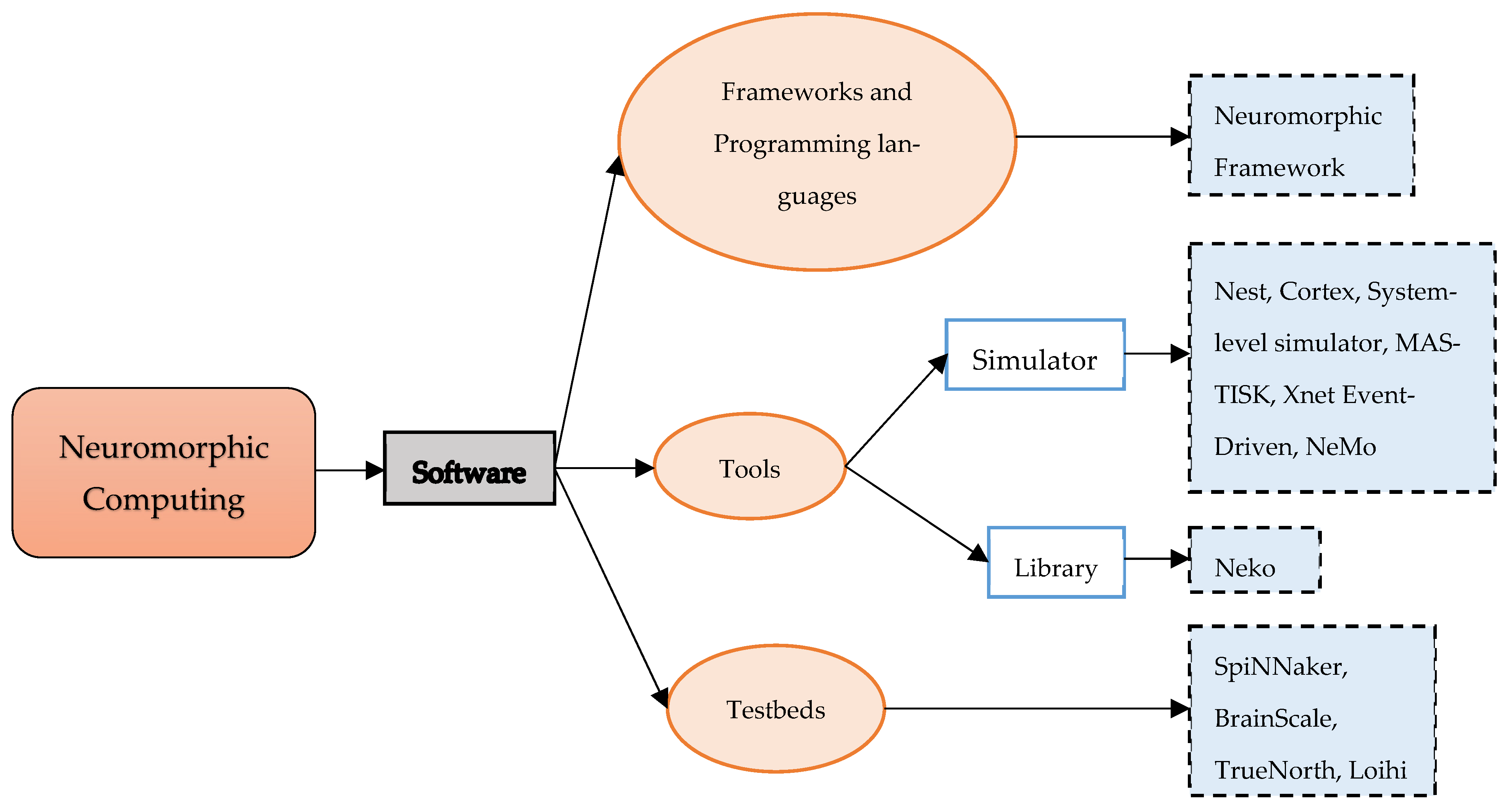

4.5.1. Frameworks and Programming Languages

- Neuromorphic framework.

4.5.2. Tools

Simulators

- NEST: A simulator for spiking neural network models that focuses on the dynamics, size, and structure of neural systems rather than on their exact morphology.

- Cortex: A specialized computing system created to replicate the architecture and operation of the brain’s cortex, especially its spiking neural networks. Such simulators play a vital role in computational neuroscience and in the advancement of sophisticated artificial intelligence.

- System-level simulator.

- MASTISK: An open-source, versatile, and flexible tool developed in MATLAB R2023b for the design exploration of dedicated neuromorphic hardware using nanodevices.

- Xnet Event-Driven: A software simulator for memristive nanodevice-based neuromorphic hardware.

- NeMo: A high-performance spiking neural network simulator that simulates networks of Izhikevich neurons on CUDA-enabled GPUs.
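
NeMo, listed above, simulates networks of Izhikevich neurons. The dynamics of a single neuron are two coupled equations, v′ = 0.04v² + 5v + 140 − u + I and u′ = a(bv − u), with a reset v ← c, u ← u + d whenever v reaches 30 mV. The sketch below integrates one neuron with forward Euler using the commonly cited regular-spiking parameters (a = 0.02, b = 0.2, c = −65, d = 8). The time step, duration, and input currents are illustrative assumptions. A GPU simulator would update many such neurons in parallel.

```python
def izhikevich_spike_count(current, t_ms=1000.0, dt=0.25, a=0.02, b=0.2, c=-65.0, d=8.0):
    """Simulate one Izhikevich neuron with forward Euler and return the number of spikes."""
    v, u = c, b * c              # membrane potential (mV) and recovery variable
    spikes = 0
    for _ in range(int(t_ms / dt)):
        v += dt * (0.04 * v * v + 5 * v + 140 - u + current)
        u += dt * a * (b * v - u)
        if v >= 30.0:            # spike: reset the membrane potential and bump the recovery variable
            v = c
            u += d
            spikes += 1
    return spikes

for current in (0.0, 10.0):
    print(current, izhikevich_spike_count(current))
```

With no input, the neuron settles at its resting potential and stays silent. With a constant input of 10, it fires tonic spikes, the regular-spiking behavior that these parameters are chosen to reproduce.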

Libraries

- Neko [86]: A modular, extensible, open-source Python library with backends for PyTorch and TensorFlow. Neko focuses on designing innovative learning algorithms in three areas: online local learning, probabilistic learning, and in-memory analog learning. Its authors report that Neko outperforms state-of-the-art algorithms in both accuracy and speed. It also provides tools for comparing gradients to facilitate the development of new algorithmic variants.

Testbed Experiments

- SpiNNaker Platform: A massively parallel neuromorphic platform, developed at the University of Manchester, for the real-time simulation of large-scale spiking neural networks.

- BrainScaleS Platform: It uses physical silicon neurons fabricated on complete 8-inch silicon wafers, interconnecting 20 of these wafers within a cabinet alongside 48 FPGA-based communication modules. It runs approximately 10,000 times faster than biological real time and supports spike-timing-dependent plastic synapses. Each wafer can accommodate around 200,000 neurons and 44 million synapses.

- IBM TrueNorth: It can host 1 million very simple neurons or be reconfigured to trade off the number of neurons against neuron model complexity.

- Intel Loihi: An advanced research neuromorphic chip widely used for neuromorphic computing experiments.
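
BrainScaleS is listed above as supporting spike-timing-dependent plasticity (STDP), in which a synapse is strengthened when the presynaptic spike precedes the postsynaptic one and weakened otherwise. The sketch below implements the common pair-based exponential form of the rule with all-to-all spike pairing and weight clipping. The amplitudes, time constants, and spike times are illustrative assumptions, and the sketch does not describe the on-chip implementation of any platform listed above.

```python
import math

def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for delta_t = t_post - t_pre (in ms)."""
    if delta_t > 0:                                   # pre fires before post: potentiation
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:                                   # post fires before pre: depression
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0

def update_weight(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """All-to-all pairing of pre/post spike times, followed by clipping to [w_min, w_max]."""
    dw = sum(stdp_dw(t_post - t_pre) for t_pre in pre_spikes for t_post in post_spikes)
    return min(w_max, max(w_min, w + dw))

# Pre spikes lead post spikes by 5 ms (illustrative timings), so the synapse is strengthened
print(update_weight(0.5, pre_spikes=[10.0, 50.0], post_spikes=[15.0, 55.0]))
```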

4.5.3. Use Cases

- Low-power edge AI;

- Real-time pattern recognition;

- Adaptive robotics;

- Brain–computer interfaces (BCIs);

- Cognitive computing systems;

- Cybersecurity and anomaly detection;

- Event-based vision (dynamic vision sensors);

- Neuroscience and brain simulation;

- Energy-efficient data centers.

4.6. In-Memory Computing

4.6.1. Definition

4.6.2. Types of In-Memory Architectures

(a) Software-level In-Memory Computing:

- Data reside in RAM and are processed directly (e.g., SAP HANA);

- In-memory data stores such as Redis, MemSQL, and Apache Ignite.

(b) Hardware-level In-Memory Computing:

- Processing-in-Memory (PIM):
    - Processing units are embedded within the memory chip.
    - Examples: UPMEM, Samsung PIM.

- Near-Memory Computing (NMC):
    - Processors are located close to, but not within, the memory.
    - Lower energy consumption than traditional architectures, but higher than PIM.

- Compute Express Link (CXL):
    - A new low-latency interface between memory and processors.
    - Well suited to hybrid memory–processor architectures.

- Hardware-Related Technologies:
    - HMC (Hybrid Memory Cube) and HBM (High Bandwidth Memory): 3D-stacked memories with high bandwidth for in-memory computing.
    - ReRAM, MRAM, and PCM: Non-volatile memories that can perform both storage and computation.
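
Non-volatile arrays such as ReRAM, PCM, and MRAM can compute in place by exploiting circuit laws. Suppose each cell’s conductance G_ij encodes a matrix entry and the row voltages V_i encode an input vector. Ohm’s law and Kirchhoff’s current law then make each column current I_j = Σ_i G_ij·V_i, so the array performs a matrix–vector product in one step. The sketch below models an ideal crossbar. It ignores wire resistance, device variability, and ADC quantization, all of which matter in real hardware, and the linear weight-to-conductance mapping is an assumption.

```python
def crossbar_mvm(conductances, voltages):
    """Ideal crossbar read-out: column current I_j = sum_i G[i][j] * V[i]."""
    n_rows, n_cols = len(conductances), len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per row is required")
    # Ohm's law gives each cell current G*V; Kirchhoff's current law sums them per column
    return [sum(conductances[i][j] * voltages[i] for i in range(n_rows)) for j in range(n_cols)]

def weights_to_conductances(weights, g_max):
    """Map non-negative weights in [0, 1] linearly onto the conductance range [0, g_max]."""
    return [[w * g_max for w in row] for row in weights]

# Illustrative values (assumptions): 2x2 weight matrix, 10 uS maximum conductance, 0.1-0.2 V reads
G = weights_to_conductances([[0.1, 0.2], [0.3, 0.4]], g_max=10e-6)
I = crossbar_mvm(G, [0.1, 0.2])
print([f"{i * 1e6:.2f} uA" for i in I])   # ['0.70 uA', '1.00 uA']
```

Because the data never leave the array, the cost of moving weights between memory and processor, which dominates in conventional architectures, is avoided. This is the motivation behind the PIM entries above.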

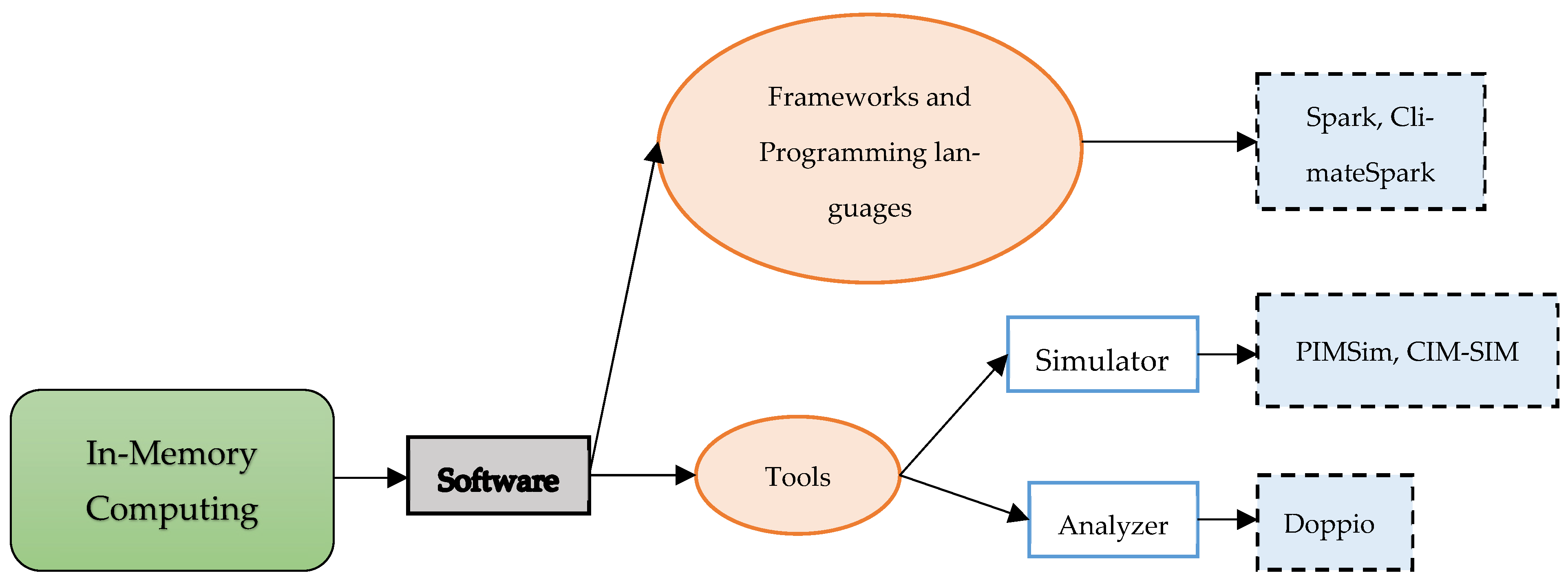

4.6.3. Frameworks and Programming Languages

- Spark;

- ClimateSpark: An in-memory distributed computing framework for big climate data analytics.

4.6.4. Tools

Simulators

- PIMSim [91]: A highly configurable platform for circuit-, architecture-, and system-level studies. It offers three implementation modes that trade speed for accuracy. PIMSim enables the detailed modeling of performance and energy for PIM instructions, compilers, in-memory processing logic, various storage devices, and memory coherence in PIM. Experimental results show acceptable accuracy compared with state-of-the-art PIM designs.

- CIMSIM [92]: An open-source SystemC simulator that allows for the functional modeling of in-memory architectures and defines a set of technology-independent nano-instructions.

Analyzers

- Doppio.

4.6.5. Use Cases

- Real-time data analytics;

- Artificial intelligence and machine learning;

- Big data processing;

- Internet of Things (IoT);

- Financial services;

- Healthcare and genomics;

- Real-time personalization;

- Supply chain optimization;

- Simulation and scientific computing;

- Gaming and AR/VR.

4.7. Serverless Computing

4.7.1. Key Concepts in Serverless Computing

- Function-as-a-Service (FaaS): Developers upload small, stateless functions that execute in response to events such as HTTP requests, file uploads, or queue messages.

- Event-Driven: The code executes only when a specific event occurs; once execution is complete, resources are freed.

- Automated Resource Management: Users do not manage the CPU, RAM, scaling, or replication; these are entirely the provider’s responsibility.

- Execution Unit (Function): Each function is lightweight, stateless, and invoked in response to individual events.
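
The FaaS model reduces an application to functions that receive an event, compute, and return, keeping no state between invocations. The sketch below is minimal and provider-neutral. The (event, context) parameter pair mirrors the handler convention of AWS Lambda’s Python runtime. The event fields ("records", "size"), the response shape, and the local dispatch loop are hypothetical stand-ins for what a real platform supplies.

```python
import json

def handler(event, context=None):
    """Stateless FaaS function: all input arrives in `event`; nothing is kept between calls."""
    records = event.get("records", [])              # hypothetical event shape
    total_bytes = sum(r.get("size", 0) for r in records)
    return {"statusCode": 200,
            "body": json.dumps({"files": len(records), "total_bytes": total_bytes})}

def local_dispatch(events, fn=handler):
    """Stand-in for the platform: each event triggers an independent invocation."""
    return [fn(event) for event in events]

responses = local_dispatch([{"records": [{"size": 10}, {"size": 5}]}, {}])
print([json.loads(r["body"]) for r in responses])
# [{'files': 2, 'total_bytes': 15}, {'files': 0, 'total_bytes': 0}]
```

Because the function holds no state, the platform is free to run each invocation on a different instance and to scale the number of instances with the event rate.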

4.7.2. Frameworks and Programming Languages

- Ripple [96], which allows single-machine applications to exploit serverless task parallelism.

- Fission [97], an open-source serverless framework for Kubernetes focused on developer productivity and performance. Its core is written in Go, but it supports runtimes for Python, Node.js, Ruby, Bash, and PHP.

- Kubeless [98], which lets developers deploy small code snippets without worrying about the underlying infrastructure.

- Luna+Serverless [99], a study integrating the Luna language with a serverless model by extending its standard library and leveraging language features to provide a serverless API.

- Kappa [100], a serverless programming framework that lets developers write standard Python code, which Kappa transforms and runs in parallel as Lambda functions on the serverless platform.

- OpenWhisk [100,101,102,103], an open-source project originally developed by IBM and later contributed to the Apache Incubator. Its programming model is built around three primitives: Action (a stateless function), Trigger (a class of events from various sources), and Rule (which links a Trigger to an Action). The OpenWhisk controller automatically scales functions in response to demand.
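
The three OpenWhisk primitives compose into an event pipeline. A Trigger fires, each Rule bound to it names an Action, and the controller runs those Actions with the event parameters. The in-process toy model below illustrates that wiring only. The `ToyWhisk` class and its methods are hypothetical and are not the OpenWhisk API or CLI. The action itself follows the convention of OpenWhisk Python actions: a `main` function that takes and returns a dictionary.

```python
from collections import defaultdict

def main(args):
    """An action in the style of an OpenWhisk Python action: dict in, dict out."""
    name = args.get("name", "stranger")
    return {"greeting": "Hello " + name}

class ToyWhisk:
    """Hypothetical in-process model of Actions, Triggers, and Rules (not the OpenWhisk API)."""
    def __init__(self):
        self.actions = {}                   # action name -> callable(dict) -> dict
        self.rules = defaultdict(list)      # trigger name -> names of linked actions

    def create_action(self, name, fn):
        self.actions[name] = fn

    def create_rule(self, trigger, action):
        self.rules[trigger].append(action)  # a rule links one trigger to one action

    def fire_trigger(self, trigger, params):
        # Every linked action runs independently on its own copy of the event parameters
        return {name: self.actions[name](dict(params)) for name in self.rules.get(trigger, [])}

whisk = ToyWhisk()
whisk.create_action("hello", main)
whisk.create_rule("file-uploaded", "hello")
print(whisk.fire_trigger("file-uploaded", {"name": "HPC"}))  # {'hello': {'greeting': 'Hello HPC'}}
```

Keeping Triggers and Actions decoupled through Rules means that the same Action can be reused for several event sources, and that new behavior can be attached to an event without modifying existing functions.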

4.7.3. Tools

Simulators

- SimFaaS: A simulation platform that assists serverless application developers in building optimized Function-as-a-Service applications.

- OpenDC Serverless: Presented by its authors as the first simulator to integrate serverless and machine learning execution, both emerging services already offered by all major cloud providers.
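
Serverless simulators typically model, among other things, how a keep-alive policy trades idle resources against cold starts. The toy sketch below counts cold starts for one function under a fixed keep-alive window. It assumes a constant execution time, ignores the latency of the cold start itself, and reuses any idle warm instance. It is not the model or API of SimFaaS or OpenDC.

```python
def count_cold_starts(arrivals, exec_time, keep_alive):
    """Count cold starts for one FaaS function under a fixed keep-alive policy."""
    free_at = []                   # per warm instance: time it finishes its current request
    cold = 0
    for t in sorted(arrivals):
        # Drop instances that have been idle longer than the keep-alive window
        free_at = [f for f in free_at if t <= f + keep_alive]
        idle = [i for i, f in enumerate(free_at) if f <= t]
        if idle:
            free_at[idle[0]] = t + exec_time   # warm start: reuse an idle instance
        else:
            cold += 1                          # cold start: provision a new instance
            free_at.append(t + exec_time)
    return cold

arrivals = [0, 1, 2, 30, 31, 100]              # illustrative request times (seconds)
for keep_alive in (0, 10, 100):
    print(keep_alive, count_cold_starts(arrivals, exec_time=0.5, keep_alive=keep_alive))
# 0 6
# 10 3
# 100 1
```

A longer keep-alive window removes cold starts but keeps instances idle for longer, which is the cost and latency trade-off that such simulators help explore.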

Analyzers

- Amazon CloudWatch: A comprehensive monitoring service that allows users to collect and track metrics, monitor logs, set alarms, and react to changes in AWS resources and applications.

- Lumigo: A microservice monitoring and troubleshooting platform for serverless computing.

- Epsagon: An open and composable observability and data visualization platform.

Testbed Experiments

- CAPTAIN: A testbed for the co-simulation of sustainable and scalable serverless computing environments for AIoT-enabled systems.

- SCOPE: A testbed for performance testing in serverless computing scenarios.

4.7.4. Use Cases

- Web and mobile backend services;

- API backend and microservices;

- Real-time file or data processing;

- Real-time stream processing;

- Chatbots and voice assistants;

- Automation and scheduled tasks;

- Continuous integration/continuous deployment (CI/CD);

- IoT backend;

- Scalable event-driven applications;

- Proof of concept (PoC)/minimum viable products (MVP) development.

5. Statistical Analysis of Emerging HPC-Related Computing Technologies

Open Research Challenges and Opportunities

6. Concluding Remarks and Future Research Areas

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AGI | Artificial General Intelligence |
| API | Application Programming Interface |
| AR/VR | Augmented Reality/Virtual Reality |
| AWS | Amazon Web Services |
| BCI | Brain–Computer Interface |
| CAD | Computer-Aided Design |
| CI/CD | Continuous Integration/Continuous Delivery |
| CNT | Carbon Nanotube |
| CPU | Central Processing Unit |
| CXL | Compute Express Link |
| DNA | Deoxyribonucleic Acid |
| FaaS | Function-as-a-Service |
| GHG | Greenhouse Gas |
| GPU | Graphics Processing Unit |
| HBM | High Bandwidth Memory |
| HDD | Hard Disk Drive |
| HMC | Hybrid Memory Cube |
| HPC | High-Performance Computing |
| HPQC | High-Performance Quantum Computing |
| IoT | Internet of Things |
| IT | Information Technology |
| ML | Machine Learning |
| MRAM | Magnetoresistive Random Access Memory |
| MVP | Minimum Viable Product |
| NISQ | Noisy Intermediate-Scale Quantum |
| NMC | Near-Memory Computing |
| ODE | Ordinary Differential Equation |
| PCM | Phase-Change Memory |
| PIM | Processing-in-Memory |
| PoC | Proof of Concept |
| QFT | Quantum Fourier Transform |
| QKD | Quantum Key Distribution |
| QPU | Quantum Processing Unit |
| ReRAM | Resistive Random Access Memory |
| RNA | Ribonucleic Acid |
| SBML | Systems Biology Markup Language |
| SDNs | Software-Defined Networks |
| SOT-MRAM | Spin–Orbit Torque MRAM |
| SSD | Solid-State Drive |
| STT-MRAM | Spin-Transfer Torque MRAM |
| TRL | Technology Readiness Level |

| TRL | Technology Readiness Level |

References

- Sterling, T.; Brodowicz, M.; Anderson, M. High Performance Computing: Modern Systems and Practices; Morgan Kaufmann: Burlington, MA, USA, 2017.

- Li, L. High Performance Computing Applied to Cloud Computing. Ph.D. Thesis, Finland, 2015. Available online: https://www.theseus.fi/handle/10024/95096 (accessed on 15 June 2025).

- Abas, M.F.b.; Singh, B.; Ahmad, K.A. High Performance Computing and Its Application in Computational Biomimetics. In High Performance Computing in Biomimetics: Modeling, Architecture and Applications; Springer: Berlin/Heidelberg, Germany, 2024; pp. 21–46.

- Yin, F.; Shi, F. A comparative survey of big data computing and HPC: From a parallel programming model to a cluster architecture. Int. J. Parallel Program. 2022, 50, 27–64.

- Raj, R.K.; Romanowski, C.J.; Impagliazzo, J.; Aly, S.G.; Becker, B.A.; Chen, J.; Ghafoor, S.; Giacaman, N.; Gordon, S.I.; Izu, C.; et al. High performance computing education: Current challenges and future directions. In Proceedings of the Working Group Reports on Innovation and Technology in Computer Science Education, Trondheim, Norway, 25 December 2020; pp. 51–74.

- Karanikolaou, E.; Bekakos, M. Action: A New Metric for Evaluating the Energy Efficiency on High Performance Computing Platforms (ranked on Green500 List). WSEAS Trans. Comput. 2022, 21, 23–30.

- Al-Hashimi, H.M. Turing, von Neumann, and the computational architecture of biological machines. Proc. Natl. Acad. Sci. USA 2023, 120, e2220022120.

- Eberbach, E.; Goldin, D.; Wegner, P. Turing’s ideas and models of computation. In Alan Turing: Life and Legacy of a Great Thinker; Springer: Berlin/Heidelberg, Germany, 2004; pp. 159–194.

- Gill, S.S.; Kumar, A.; Singh, H.; Singh, M.; Kaur, K.; Usman, M.; Buyya, R. Quantum computing: A taxonomy, systematic review and future directions. Softw. Pract. Exp. 2022, 52, 66–114.

- Perrier, E. Ethical quantum computing: A roadmap. arXiv 2021, arXiv:2102.00759.

- Gill, S.S. Quantum and blockchain based Serverless edge computing: A vision, model, new trends and future directions. Internet Technol. Lett. 2024, 7, e275.

- Aslanpour, M.S.; Toosi, A.N.; Cicconetti, C.; Javadi, B.; Sbarski, P.; Taibi, D.; Assuncao, M.; Gill, S.S.; Gaire, R.; Dustdar, S. Serverless edge computing: Vision and challenges. In Proceedings of the 2021 Australasian Computer Science Week Multiconference, Dunedin, New Zealand, 1–5 February 2021.

- Dagdia, Z.C.; Avdeyev, P.; Bayzid, M.S. Biological computation and computational biology: Survey, challenges, and discussion. Artif. Intell. Rev. 2021, 54, 4169–4235.

- Staudigl, F.; Merchant, F.; Leupers, R. A survey of neuromorphic computing-in-memory: Architectures, simulators, and security. IEEE Des. Test 2021, 39, 90–99.

- Sepúlveda, S.; Cravero, A.; Fonseca, G.; Antonelli, L. Systematic review on requirements engineering in quantum computing: Insights and future directions. Electronics 2024, 13, 2989.

- Czarnul, P.; Proficz, J.; Krzywaniak, A. Energy-aware high-performance computing: Survey of state-of-the-art tools, techniques, and environments. Sci. Program. 2019, 2019, 8348791.

- Rico-Gallego, J.A.; Díaz-Martín, J.C.; Manumachu, R.R.; Lastovetsky, A.L. A survey of communication performance models for high-performance computing. ACM Comput. Surv. (CSUR) 2019, 51, 1–36.

- Singh, G.; Chelini, L.; Corda, S.; Awan, A.J.; Stuijk, S.; Jordans, R.; Corporaal, H.; Boonstra, A.J. Near-memory computing: Past, present, and future. Microprocess. Microsyst. 2019, 71, 102868.

- Li, J.; Li, N.; Zhang, Y.; Wen, S.; Du, W.; Chen, W.; Ma, W. A survey on quantum cryptography. Chin. J. Electron. 2018, 27, 223–228.

- Li, J.; Wang, S.; Rudinac, S.; Osseyran, A. High-performance computing in healthcare: An automatic literature analysis perspective. J. Big Data 2024, 11, 61. [Google Scholar] [CrossRef]

- Garewal, I.K.; Mahamuni, C.V.; Jha, S. Emerging Applications and Challenges in Quantum Computing: A Literature Survey. In Proceedings of the 2024 International Conference on Artificial Intelligence, Big Data, Computing and Data Communication Systems (icABCD), Port Louis, Mauritius, 1–2 August 2024; pp. 1–12. [Google Scholar] [CrossRef]

- Kudithipudi, D.; Schuman, C.; Vineyard, C.M.; Pandit, T.; Merkel, C.; Kubendran, R.; Aimone, J.B.; Orchard, G.; Mayr, C.; Benosman, R.; et al. Neuromorphic computing at scale. Nature 2025, 637, 801–812. [Google Scholar] [CrossRef]

- Duarte, L.T.; Deville, Y. Quantum-Assisted Machine Learning by Means of Adiabatic Quantum Computing. In Proceedings of the 2024 IEEE Mediterranean and Middle-East Geoscience and Remote Sensing Symposium (M2GARSS), Oran, Algeria, 15–17 April 2024; pp. 371–375. [Google Scholar] [CrossRef]

- Al Abdul Wahid, S.; Asad, A.; Mohammadi, F. A Survey on Neuromorphic Architectures for Running Artificial Intelligence Algorithms. Electronics 2024, 13, 2963. [Google Scholar] [CrossRef]

- Gyongyosi, L.; Imre, S. A survey on quantum computing technology. Comput. Sci. Rev. 2019, 31, 51–71. [Google Scholar] [CrossRef]

- Combarro, E.F.; Vallecorsa, S.; Rodríguez-Muñiz, L.J.; Aguilar-González, Á.; Ranilla, J.; Di Meglio, A. A report on teaching a series of online lectures on quantum computing from CERN. J. Supercomput. 2021, 77, 14405–14435. [Google Scholar] [CrossRef]

- Outeiral, C.; Strahm, M.; Shi, J.; Morris, G.M.; Benjamin, S.C.; Deane, C.M. The prospects of quantum computing in computational molecular biology. Wiley Interdiscip. Rev. Comput. Mol. Sci. 2021, 11, e1481. [Google Scholar] [CrossRef]

- Green, A.S.; Lumsdaine, P.L.; Ross, N.J.; Selinger, P.; Valiron, B. Quipper: A scalable quantum programming language. In Proceedings of the 34th ACM SIGPLAN Conference on Programming Language Design and Implementation, Seattle, WA, USA, 16–19 June 2013; pp. 333–342. [Google Scholar]

- Svore, K.; Geller, A.; Troyer, M.; Azariah, J.; Granade, C.; Heim, B.; Kliuchnikov, V.; Mykhailova, M.; Paz, A.; Roetteler, M. Q#: Enabling scalable quantum computing and development with a high-level DSL. In Proceedings of the Real World Domain Specific Languages Workshop, Vienna, Austria, 24 February 2018; pp. 1–10. [Google Scholar]

- Wecker, D.; Svore, K.M. LIQUi|⟩: A software design architecture and domain-specific language for quantum computing. arXiv 2014, arXiv:1402.4467. [Google Scholar]

- Paykin, J.; Rand, R.; Zdancewic, S. QWIRE: A core language for quantum circuits. ACM SIGPLAN Not. 2017, 52, 846–858. [Google Scholar] [CrossRef]

- Khammassi, N.; Ashraf, I.; van Someren, J.; Nane, R.; Krol, A.; Rol, M.A.; Lao, L.; Bertels, K.; Almudever, C.G. OpenQL: A portable quantum programming framework for quantum accelerators. arXiv 2020, arXiv:2005.13283. [Google Scholar] [CrossRef]

- Steiger, D.S.; Häner, T.; Troyer, M. ProjectQ: An open source software framework for quantum computing. Quantum 2018, 2, 49. [Google Scholar] [CrossRef]

- IBM: Qiskit: An Open-Source Quantum Computing Framework. Available online: https://qiskit.org/ (accessed on 8 June 2024).

- Bergholm, V.; Izaac, J.; Schuld, M.; Gogolin, C.; Ahmed, S.; Ajith, V.; Alam, M.S.; Alonso-Linaje, G.; AkashNarayanan, B.; Asadi, A.; et al. PennyLane: Automatic differentiation of hybrid quantum-classical computations. arXiv 2018, arXiv:1811.04968. [Google Scholar]

- Google: Cirq: A Python Library for Quantum Circuits. Available online: https://github.com/quantumlib/Cirq/blob/main/cirq-google/cirq_google/api/v2/program.proto (accessed on 8 June 2024).

- Ömer, B. A Procedural Formalism for Quantum Computing; Technical University of Vienna: Vienna, Austria, 1998. [Google Scholar]

- Liu, S.; Wang, X.; Zhou, L.; Guan, J.; Li, Y.; He, Y.; Duan, R.; Ying, M. Q|si⟩: A quantum programming environment. In Symposium on Real-Time and Hybrid Systems; Springer: Berlin/Heidelberg, Germany, 2018; pp. 133–164. [Google Scholar]

- Krämer, S.; Plankensteiner, D.; Ostermann, L.; Ritsch, H. QuantumOptics.jl: A Julia framework for simulating open quantum systems. Comput. Phys. Commun. 2018, 227, 109–116. [Google Scholar]

- Bian, H.; Huang, J.; Dong, R.; Guo, Y.; Wang, X. HpQC: A new efficient quantum computing simulator. In Proceedings of the International Conference on Algorithms and Architectures for Parallel Processing, New York, NY, USA, 2–4 October 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 111–125. [Google Scholar]

- Ardelean, S.M.; Udrescu, M. Qc|pp⟩: A behavioral quantum computing simulation library. In Proceedings of the 2018 IEEE 12th International Symposium on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, 17–19 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 000437–000442. [Google Scholar]

- Gheorghiu, V. Quantum++: A modern C++ quantum computing library. PLoS ONE 2018, 13, e0208073. [Google Scholar] [CrossRef]

- Javadi-Abhari, A.; Patil, S.; Kudrow, D.; Heckey, J.; Lvov, A.; Chong, F.T.; Martonosi, M. ScaffCC: A framework for compilation and analysis of quantum computing programs. In Proceedings of the 11th ACM Conference on Computing Frontiers, Cagliari, Italy, 20–22 May 2014; pp. 1–10. [Google Scholar]

- Sivarajah, S.; Dilkes, S.; Cowtan, A.; Simmons, W.; Edgington, A.; Duncan, R. T|ket⟩: A retargetable compiler for NISQ devices. Quantum Sci. Technol. 2020, 6, 014003. [Google Scholar] [CrossRef]

- Seth, P.; Krivenko, I.; Ferrero, M.; Parcollet, O. TRIQS/CTHYB: A continuous-time quantum Monte Carlo hybridisation expansion solver for quantum impurity problems. Comput. Phys. Commun. 2016, 200, 274–284. [Google Scholar] [CrossRef]

- Kawamura, M.; Yoshimi, K.; Misawa, T.; Yamaji, Y.; Todo, S.; Kawashima, N. Quantum lattice model solver HΦ. Comput. Phys. Commun. 2017, 217, 180–192. [Google Scholar] [CrossRef]

- Ma, L.; Tang, X.; Slattery, O.; Battou, A. A testbed for quantum communication and quantum networks. In Quantum Information Science, Sensing, and Computation XI; NIST: Baltimore, MD, USA, 2019; Volume 10984, p. 1098407. [Google Scholar]

- Clark, S.M.; Lobser, D.; Revelle, M.; Yale, C.G.; Bossert, D.; Burch, A.D.; Chow, M.N.; Hogle, C.W.; Ivory, M.; Pehr, J.; et al. Engineering the quantum scientific computing open user testbed (QSCOUT): Design details and user guide. arXiv 2021, arXiv:2104.00759. [Google Scholar]

- Anuar, N.; Takahashi, Y.; Sekine, T. Adiabatic logic versus CMOS for low power applications. Proc. ITC-CSCC 2009, 2009, 302–305. [Google Scholar]

- Denker, J.S. A review of adiabatic computing. In Proceedings of the 1994 IEEE Symposium on Low Power Electronics, Cambridge, MA, USA, 10–12 October 1994; IEEE: Piscataway, NJ, USA, 1994; pp. 94–97. [Google Scholar]

- Johnson, M.W.; Amin, M.H.; Gildert, S.; Lanting, T.; Hamze, F.; Dickson, N.; Harris, R.; Berkley, A.J.; Johansson, J.; Bunyk, P.; et al. Quantum annealing with manufactured spins. Nature 2011, 473, 194–198. [Google Scholar] [CrossRef] [PubMed]

- Koruga, D.L. Biocomputing. In Proceedings of the Twenty-Fourth Annual Hawaii International Conference on System Sciences, Kauai, HI, USA, 8–11 January 1991; IEEE: Piscataway, NJ, USA, 1991; Volume 1, pp. 269–275. [Google Scholar]

- Lopez, C.F.; Muhlich, J.L.; Bachman, J.A.; Sorger, P.K. Programming biological models in Python using PySB. Mol. Syst. Biol. 2013, 9, 646. [Google Scholar] [CrossRef] [PubMed]

- Kunzmann, P.; Hamacher, K. Biotite: A unifying open-source computational biology framework in Python. BMC Bioinform. 2018, 19, 346. [Google Scholar] [CrossRef] [PubMed]

- Lubbock, A.L.; Lopez, C.F. Programmatic modeling for biological systems. Curr. Opin. Syst. Biol. 2021, 27, 100343. [Google Scholar] [CrossRef]

- Hemmerich, J.; Tenhaef, N.; Wiechert, W.; Noack, S. pyFOOMB: Python framework for object-oriented modeling of bioprocesses. Eng. Life Sci. 2021, 21, 242–257. [Google Scholar] [CrossRef]

- Manolakos, E.S.; Kouskoumvekakis, E. StochSoCs: High performance biocomputing simulations for large scale systems biology. In Proceedings of the 2017 International Conference on High Performance Computing & Simulation (HPCS), Genoa, Italy, 17–21 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 921–928. [Google Scholar]

- Herajy, M.; Liu, F.; Rohr, C.; Heiner, M. Snoopy’s hybrid simulator: A tool to construct and simulate hybrid biological models. BMC Syst. Biol. 2017, 11, 71. [Google Scholar] [CrossRef]

- Cer, R.Z.; Herrera-Galeano, J.E.; Anderson, J.J.; Bishop-Lilly, K.A.; Mokashi, V.P. miRNA temporal analyzer (mirnaTA): A bioinformatics tool for identifying differentially expressed microRNAs in temporal studies using normal quantile transformation. Gigascience 2014, 3, 2047–2217. [Google Scholar] [CrossRef]

- Medley, J.K.; Teo, J.; Woo, S.S.; Hellerstein, J.; Sarpeshkar, R.; Sauro, H.M. A compiler for biological networks on silicon chips. PLoS Comput. Biol. 2020, 16, e1008063. [Google Scholar] [CrossRef]

- Michail, D.; Kinable, J.; Naveh, B.; Sichi, J.V. JGraphT—A Java library for graph data structures and algorithms. ACM Trans. Math. Softw. (TOMS) 2020, 46, 1–29. [Google Scholar] [CrossRef]

- Somogyi, E.T.; Bouteiller, J.-M.; Glazier, J.A.; König, M.; Medley, J.K.; Swat, M.H.; Sauro, H.M. libRoadRunner: A high performance SBML simulation and analysis library. Bioinformatics 2015, 31, 3315–3321. [Google Scholar] [CrossRef] [PubMed]

- Yadav, R.; Dixit, C.; Trivedi, S.K. Nanotechnology and nano computing. Int. J. Res. Appl. Sci. Eng. Technol. 2017, 5, 531–535. [Google Scholar] [CrossRef]

- Jadhav, S.S.; Jadhav, S.V. Application of nanotechnology in modern computers. In Proceedings of the 2018 International Conference on Circuits and Systems in Digital Enterprise Technology (ICCSDET), Kottayam, India, 21–22 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6. [Google Scholar]

- Roco, M.C.; Bainbridge, W.S. Overview converging technologies for improving human performance. In Converging Technologies for Improving Human Performance; Springer: Berlin/Heidelberg, Germany, 2003; pp. 1–27. [Google Scholar]

- Schuller, I.K.; Stevens, R.; Pino, R.; Pechan, M. Neuromorphic Computing–From Materials Research to Systems Architecture Roundtable; Technical report; USDOE Office of Science (SC): Washington, DC, USA, 2015.

- Walter, M.; Wille, R.; Torres, F.S.; Große, D.; Drechsler, R. Fiction: An open-source framework for the design of field-coupled nanocomputing circuits. arXiv 2019, arXiv:1905.02477. [Google Scholar]

- Soeken, M.; Riener, H.; Haaswijk, W.; Testa, E.; Schmitt, B.; Meuli, G.; Mozafari, F.; De Micheli, G. The EPFL logic synthesis libraries. arXiv 2018, arXiv:1805.05121. [Google Scholar]

- Garlando, U.; Walter, M.; Wille, R.; Riente, F.; Torres, F.S.; Drechsler, R. ToPoliNano and fiction: Design tools for field-coupled nanocomputing. In Proceedings of the 2020 23rd Euromicro Conference on Digital System Design (DSD), Kranj, Slovenia, 26–28 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 408–415. [Google Scholar]

- Singh, N.; Ching, D. Auto-based bdec computational modeling framework for fault tolerant nano-computing. Appl. Math. Sci. 2015, 9, 761–771. [Google Scholar] [CrossRef]

- Abdullah-Al-Shafi, M.; Ziaur, R. Analysis and modeling of sequential circuits in QCA nano computing: RAM and SISO register study. Solid State Electron. Lett. 2019, 1, 73–83. [Google Scholar] [CrossRef]

- Walus, K.; Dysart, T.J.; Jullien, G.A.; Budiman, R.A. QCADesigner: A rapid design and simulation tool for quantum-dot cellular automata. IEEE Trans. Nanotechnol. 2004, 3, 26–31. [Google Scholar] [CrossRef]

- Cowburn, R.; Welland, M. Room temperature magnetic quantum cellular automata. Science 2000, 287, 1466–1468. [Google Scholar] [CrossRef]

- Niemier, M.; Bernstein, G.H.; Csaba, G.; Dingler, A.; Hu, X.; Kurtz, S.; Liu, S.; Nahas, J.; Porod, W.; Siddiq, M.; et al. Nanomagnet logic: Progress toward system-level integration. J. Phys. Condens. Matter 2011, 23, 493202. [Google Scholar] [CrossRef]

- Soares, T.R.; Rahmeier, J.G.N.; De Lima, V.C.; Lascasas, L.; Melo, L.G.C.; Neto, O.P.V. NMLSim: A nanomagnetic logic (NML) circuit designer and simulation tool. J. Comput. Electron. 2018, 17, 1370–1381. [Google Scholar] [CrossRef]

- Ribeiro, M.A.; Chaves, J.F.; Neto, O.P.V. Field-Coupled Nanocomputing Energy Analysis Tool, Sforum, Microelectronics Students Forum. 2016. Available online: https://sbmicro.org.br/sforum-eventos/sforum2016/19.pdf (accessed on 12 May 2025).

- Bos, P.M.; Gottardo, S.; Scott-Fordsmand, J.J.; Van Tongeren, M.; Semenzin, E.; Fernandes, T.F.; Hristozov, D.; Hund-Rinke, K.; Hunt, N.; Irfan, M.-A.; et al. The MARINA risk assessment strategy: A flexible strategy for efficient information collection and risk assessment of nanomaterials. Int. J. Environ. Res. Public Health 2015, 12, 15007–15021. [Google Scholar] [CrossRef] [PubMed]

- Chiu, H.N.; Ng, S.S.; Retallick, J.; Walus, K. PoisSolver: A tool for modelling silicon dangling bond clocking networks. In Proceedings of the 2020 IEEE 20th International Conference on Nanotechnology (IEEE-NANO), Montreal, QC, Canada, 29–31 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 134–139. [Google Scholar]

- Bersuker, G.; Mason, M.; Jones, K.L. Neuromorphic computing: The potential for high-performance processing in space. Game Change 2018, 1–12. Available online: https://csps.aerospace.org/sites/default/files/2021-08/Bersuker_NeuromorphicComputing_12132018.pdf (accessed on 15 June 2025).

- Furber, S.; Lester, D.R.; Plana, L.A.; Garside, J.; Painkras, E.; Temple, S.; Brown, A.; Kendall, R.; Azhar, F.; Bainbridge, J.; et al. The SpiNNaker project. Proc. IEEE 2013, 102, 652–665. [Google Scholar] [CrossRef]

- Schuman, C.D.; Plank, J.S.; Rose, G.S.; Chakma, G.; Wyer, A.; Bruer, G.; Laanait, N. A programming framework for neuromorphic systems with emerging technologies. In Proceedings of the 4th ACM International Conference on Nanoscale Computing and Communication, Washington, DC, USA, 27–29 September 2017; pp. 1–7. [Google Scholar]

- Bichler, O.; Roclin, D.; Gamrat, C.; Querlioz, D. Design exploration methodology for memristor-based spiking neuromorphic architectures with the Xnet event-driven simulator. In Proceedings of the 2013 IEEE/ACM International Symposium on Nanoscale Architectures (NANOARCH), New York, NY, USA, 15–17 July 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 7–12. [Google Scholar]

- Bhattacharya, T.; Parmar, V.; Suri, M. MASTISK: Simulation framework for design exploration of neuromorphic hardware. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–9. [Google Scholar]

- Plagge, M.; Carothers, C.D.; Gonsiorowski, E.; Mcglohon, N. NeMo: A massively parallel discrete-event simulation model for neuromorphic architectures. ACM Trans. Model. Comput. Simul. (TOMACS) 2018, 28, 1–25. [Google Scholar] [CrossRef]

- Lee, M.K.F.; Cui, Y.; Somu, T.; Luo, T.; Zhou, J.; Tang, W.T.; Wong, W.-F.; Goh, R.S.M. A system-level simulator for RRAM-based neuromorphic computing chips. ACM Trans. Archit. Code Optim. (TACO) 2019, 15, 1–24. [Google Scholar] [CrossRef]

- Zhao, Z.; Wycoff, N.; Getty, N.; Stevens, R.; Xia, F. Neko: A library for exploring neuromorphic learning rules. arXiv 2021, arXiv:2105.00324. [Google Scholar]

- Mohamed, K.S. Neuromorphic Computing and Beyond; Springer: Berlin/Heidelberg, Germany, 2020. [Google Scholar]

- Sebastian, A.; Le Gallo, M.; Khaddam-Aljameh, R.; Eleftheriou, E. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 2020, 15, 529–544. [Google Scholar] [CrossRef]

- Jayanthi, D.; Sumathi, G. Weather data analysis using Spark: An in-memory computing framework. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–5. [Google Scholar]

- Hu, F.; Yang, C.; Schnase, J.L.; Duffy, D.Q.; Xu, M.; Bowen, M.K.; Lee, T.; Song, W. ClimateSpark: An in-memory distributed computing framework for big climate data analytics. Comput. Geosci. 2018, 115, 154–166. [Google Scholar] [CrossRef]

- Xu, S.; Chen, X.; Wang, Y.; Han, Y.; Qian, X.; Li, X. PIMSim: A flexible and detailed processing-in-memory simulator. IEEE Comput. Archit. Lett. 2018, 18, 6–9. [Google Scholar] [CrossRef]

- BanaGozar, A.; Vadivel, K.; Stuijk, S.; Corporaal, H.; Wong, S.; Lebdeh, M.A.; Yu, J.; Hamdioui, S. CIM-SIM: Computation in memory simulator. In Proceedings of the 22nd International Workshop on Software and Compilers for Embedded Systems, Sankt Goar, Germany, 27–28 May 2019; pp. 1–4. [Google Scholar]

- Zhou, P.; Ruan, Z.; Fang, Z.; Shand, M.; Roazen, D.; Cong, J. Doppio: I/O-aware performance analysis, modeling and optimization for in-memory computing framework. In Proceedings of the 2018 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), Belfast, UK, 2–4 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 22–32. [Google Scholar]

- Banaei, A.; Sharifi, M. ETAS: Predictive scheduling of functions on worker nodes of Apache OpenWhisk platform. J. Supercomput. 2022, 78, 5358–5393. [Google Scholar] [CrossRef]

- Hassan, H.B.; Barakat, S.A.; Sarhan, Q.I. Survey on serverless computing. J. Cloud Comput. 2021, 10, 1–29. [Google Scholar] [CrossRef]

- Joyner, S.; MacCoss, M.; Delimitrou, C.; Weatherspoon, H. Ripple: A practical declarative programming framework for serverless compute. arXiv 2020, arXiv:2001.00222. [Google Scholar]

- Fission Open-Source Software. 2019. Available online: https://github.com/fission/fission (accessed on 17 June 2025).

- Kubeless Open-Source Software. 2018. Available online: https://github.com/vmware-archive/kubeless/ (accessed on 17 June 2025).

- Moczurad, P.; Malawski, M. Visual-textual framework for serverless computation: A Luna language approach. In Proceedings of the 2018 IEEE/ACM International Conference on Utility and Cloud Computing Companion (UCC Companion), Zurich, Switzerland, 17–20 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 169–174. [Google Scholar]

- Zhang, W.; Fang, V.; Panda, A.; Shenker, S. Kappa: A programming framework for serverless computing. In Proceedings of the 11th ACM Symposium on Cloud Computing, Virtual Event, USA, 19–20 October 2020; pp. 328–343. [Google Scholar]

- Mohanty, S.K.; Premsankar, G.; Di Francesco, M. An evaluation of open source serverless computing frameworks. In Proceedings of the IEEE International Conference on Cloud Computing Technology and Science, Naples, Italy, 4–6 December 2018; pp. 115–120. [Google Scholar]

- OpenWhisk. 2020. Available online: https://github.com/apache/incubator-openwhisk (accessed on 15 June 2025).

- Baldini, I.; Castro, P.; Cheng, P.; Fink, S.; Ishakian, V.; Mitchell, N.; Muthusamy, V.; Rabbah, R.; Suter, P. Cloud-native, event-based programming for mobile applications. In Proceedings of the International Conference on Mobile Software Engineering and Systems, Austin, TX, USA, 14–22 May 2016; pp. 287–288. [Google Scholar]

- Mahmoudi, N.; Khazaei, H. SimFaaS: A performance simulator for serverless computing platforms. arXiv 2021, arXiv:2102.08904. [Google Scholar]

- Jounaid, S. OpenDC Serverless: Design, Implementation and Evaluation of a FaaS Platform Simulator. Ph.D. Thesis, Vrije Universiteit Amsterdam, Amsterdam, The Netherlands, 2020. [Google Scholar]

- Khattar, N.; Sidhu, J.; Singh, J. Toward energy-efficient cloud computing: A survey of dynamic power management and heuristics-based optimization techniques. J. Supercomput. 2019, 75, 4750–4810. [Google Scholar] [CrossRef]

- Córcoles, A.D.; Kandala, A.; Javadi-Abhari, A.; McClure, D.T.; Cross, A.W.; Temme, K.; Nation, P.D.; Steffen, M.; Gambetta, J.M. Challenges and opportunities of near-term quantum computing systems. arXiv 2019, arXiv:1910.02894. [Google Scholar] [CrossRef]

- Ojha, A.K. Nano-electronics and nano-computing: Status, prospects, and challenges. In Region 5 Conference: Annual Technical and Leadership Workshop; IEEE: Piscataway, NJ, USA, 2004; pp. 85–91. [Google Scholar]

- Baldini, I.; Castro, P.; Chang, K.; Cheng, P.; Fink, S.; Ishakian, V.; Mitchell, N.; Muthusamy, V.; Rabbah, R.; Slominski, A.; et al. Serverless computing: Current trends and open problems. In Research Advances in Cloud Computing; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–20. [Google Scholar]

- Buyya, R.; Srirama, S.N.; Casale, G.; Calheiros, R.; Simmhan, Y.; Varghese, B.; Gelenbe, E.; Javadi, B.; Vaquero, L.M.; Netto, M.A.; et al. A manifesto for future generation cloud computing: Research directions for the next decade. ACM Comput. Surv. (CSUR) 2018, 51, 1–38. [Google Scholar] [CrossRef]

- Castro, P.; Ishakian, V.; Muthusamy, V.; Slominski, A. The server is dead, long live the server: Rise of serverless computing, overview of current state and future trends in research and industry. arXiv 2019, arXiv:1906.02888. [Google Scholar]

- Wooley, J.C.; Lin, H.S. Committee on Frontiers at the Interface of Computing and Biology; National Academies Press: Washington, DC, USA, 2005. [Google Scholar]

- Verma, N.; Jia, H.; Valavi, H.; Tang, Y.; Ozatay, M.; Chen, L.-Y.; Zhang, B.; Deaville, P. In-memory computing: Advances and prospects. IEEE Solid-State Circuits Mag. 2019, 11, 43–55. [Google Scholar] [CrossRef]

- Schuman, C.D.; Potok, T.E.; Patton, R.M.; Birdwell, J.D.; Dean, M.E.; Rose, G.S.; Plank, J.S. A survey of neuromorphic computing and neural networks in hardware. arXiv 2017, arXiv:1705.06963. [Google Scholar]

- Adhianto, L.; Anderson, J.; Mellor, J. Refining HPCToolkit for application performance analysis at exascale. Int. J. High Perform. Comput. Appl. 2024, 38, 612–632. [Google Scholar] [CrossRef]

- Ellingson, S.; Pallez, G. Result-scalability: Following the evolution of selected social impact of HPC. Int. J. High Perform. Comput. Appl. 2025, 10943420251338168. [Google Scholar] [CrossRef]

- Chou, J.; Chung, W.-C. Cloud Computing and High Performance Computing (HPC) Advances for Next Generation Internet. Future Internet 2024, 16, 465. [Google Scholar] [CrossRef]

- Yang, Y.; Zhu, X.; Ma, Z.; Hu, H.; Chen, T.; Li, W.; Xu, J.; Xu, L.; Chen, K. Artificial HfO2/TiOx Synapses with Controllable Memory Window and High Uniformity for Brain-Inspired Computing. Nanomaterials 2023, 13, 605. [Google Scholar] [CrossRef]

- Yang, J.; Abraham, A. Analyzing the Features, Usability, and Performance of Deploying a Containerized Mobile Web Application on Serverless Cloud Platforms. Future Internet 2024, 16, 475. [Google Scholar] [CrossRef]

- Matsuo, R.; Elgaradiny, A.; Corradi, F. Unsupervised Classification of Spike Patterns with the Loihi Neuromorphic Processor. Electronics 2024, 13, 3203. [Google Scholar] [CrossRef]

- Kang, P. Programming for High-Performance Computing on Edge Accelerators. Mathematics 2023, 11, 1055. [Google Scholar] [CrossRef]

- Chen, S.; Dai, Y.; Liu, L.; Yu, X. Optimizing Data Parallelism for FM-Based Short-Read Alignment on the Heterogeneous Non-Uniform Memory Access Architectures. Future Internet 2024, 16, 217. [Google Scholar] [CrossRef]

- Silva, C.A.; Vilaça, R.; Pereira, A.; Bessa, R.J. A review on the decarbonization of high-performance computing centers. Renew. Sustain. Energy Rev. 2024, 189, 114019. [Google Scholar] [CrossRef]

- Liu, H.; Zhai, J. Carbon Emission Modeling for High-Performance Computing-Based AI in New Power Systems with Large-Scale Renewable Energy Integration. Processes 2025, 13, 595. [Google Scholar] [CrossRef]

- Zhang, N.; Yan, J.; Hu, C.; Sun, Q.; Yang, L.; Gao, D.W. Price-Matching-Based Regional Energy Market With Hierarchical Reinforcement Learning Algorithm. IEEE Trans. Ind. Inform. 2024, 20, 11103–11114. [Google Scholar] [CrossRef]

- Marković, D.; Grollier, J. Quantum Neuromorphic Computing. arXiv 2020, arXiv:2006.15111. Available online: https://arxiv.org/abs/2006.15111 (accessed on 15 June 2025). [CrossRef]

- Wang, C.; Shi, G.; Qiao, F.; Lin, R.; Wu, S.; Hu, Z. Research progress in architecture and application of RRAM with computing-in-memory. Nanoscale Adv. 2023, 5, 1559–1573. [Google Scholar] [CrossRef]

- Bravo, R.A.; Patti, T.L.; Najafi, K.; Gao, X.; Yelin, S.F. Expressive quantum perceptrons for quantum neuromorphic computing. Quantum Sci. Technol. 2025, 10, 015063. [Google Scholar] [CrossRef]

- Moreno, A.; Rodríguez, J.J.; Beltrán, D.; Sikora, A.; Jorba, J.; César, E. Designing a benchmark for the performance evaluation of agent-based simulation applications on HPC. J. Supercomput. 2019, 75, 1524–1550. [Google Scholar] [CrossRef]

- Mohammed, A.; Eleliemy, A.; Ciorba, F.M.; Kasielke, F.; Banicescu, I. An approach for realistically simulating the performance of scientific applications on high performance computing systems. Future Gener. Comput. Syst. 2020, 111, 617–633. [Google Scholar] [CrossRef]

- Netti, A.; Shin, W.; Ott, M.; Wilde, T.; Bates, N. A Conceptual Framework for HPC Operational Data Analytics. In Proceedings of the IEEE International Conference on Cluster Computing (CLUSTER), Portland, OR, USA, 7–10 September 2021; pp. 596–603. [Google Scholar]

- Jonas, E.; Schleier-Smith, J.; Sreekanti, V.; Tsai, C.-C.; Khandelwal, A.; Pu, Q.; Shankar, V.; Carreira, J.; Krauth, K.; Yadwadkar, N.; et al. Cloud programming simplified: A Berkeley view on serverless computing. arXiv 2019, arXiv:1902.03383. [Google Scholar]

- Thottempudi, P.; Acharya, B.; Moreira, F. High-Performance Real-Time Human Activity Recognition Using Machine Learning. Mathematics 2024, 12, 3622. [Google Scholar] [CrossRef]

- Dakić, V.; Kovač, M.; Slovinac, J. Evolving High-Performance Computing Data Centers with Kubernetes, Performance Analysis, and Dynamic Workload Placement Based on Machine Learning Scheduling. Electronics 2024, 13, 2651. [Google Scholar] [CrossRef]

- Morgan, N.; Yenusah, C.; Diaz, A.; Dunning, D.; Moore, J.; Heilman, E.; Lieberman, E.; Walton, S.; Brown, S.; Holladay, D.; et al. Enabling Parallel Performance and Portability of Solid Mechanics Simulations Across CPU and GPU Architectures. Information 2024, 15, 716. [Google Scholar] [CrossRef]

- Sheikh, A.M.; Islam, M.R.; Habaebi, M.H.; Zabidi, S.A.; Bin Najeeb, A.R.; Kabbani, A. A Survey on Edge Computing (EC) Security Challenges: Classification, Threats, and Mitigation Strategies. Future Internet 2025, 17, 175. [Google Scholar] [CrossRef]

- Huerta, E.A.; Khan, A.; Davis, E.; Bushell, C.; Gropp, W.D.; Katz, D.S.; Kindratenko, V.; Koric, S.; Kramer, W.T.; McGinty, B.; et al. Convergence of artificial intelligence and high performance computing on NSF-supported cyberinfrastructure. J. Big Data 2020, 7, 88. [Google Scholar] [CrossRef]

- Liao, X.K.; Lu, K.; Yang, C.Q.; Li, J.W.; Yuan, Y.; Lai, M.C.; Huang, L.B.; Lu, P.J.; Fang, J.B.; Ren, J.; et al. Moving from exascale to zettascale computing: Challenges and techniques. Front. Inf. Technol. Electron. Eng. 2018, 19, 1236–1244. [Google Scholar] [CrossRef]

- Zhang, B.; Chen, S.; Gao, S.; Gao, Z.; Wang, D.; Zhang, X. Combination Balance Correction of Grinding Disk Based on Improved Quantum Genetic Algorithm. IEEE Trans. Instrum. Meas. 2022, 72, 1000112. [Google Scholar] [CrossRef]

- Deepak; Upadhyay, M.K.; Alam, M. Edge Computing: Architecture, Application, Opportunities, and Challenges. In Proceedings of the 3rd International Conference on Technological Advancements in Computational Sciences (ICTACS), Tashkent, Uzbekistan, 1–3 November 2023. [Google Scholar]

- Wu, M.; Mi, Z.; Xia, Y. A survey on serverless computing and its implications for jointcloud computing. In Proceedings of the 2020 IEEE International Conference on Joint Cloud Computing, Oxford, UK, 3–6 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 94–101. [Google Scholar]

- Shafiei, H.; Khonsari, A.; Mousavi, P. Serverless computing: A survey of opportunities, challenges and applications. arXiv 2019, arXiv:1911.01296. [Google Scholar] [CrossRef]

| Computing Technology | Future Research Directions | Mapping for Categorizing | Classification | Year | Reference Number |
|---|---|---|---|---|---|
| Quantum Computing | ✔ | ✘ | ✘ | 2021 | [10] |
| Quantum Computing, Serverless | ✔ | ✘ | ✘ | 2021 | [11] |
| Serverless Edge Computing | ✘ | ✘ | ✔ | 2021 | [12] |
| Biological Computing | ✔ | ✘ | ✘ | 2021 | [13] |
| Neuromorphic Computing | ✔ | ✘ | ✘ | 2021 | [14] |
| Quantum Computing | ✔ | ✔ | ✘ | 2024 | [15] |
| High-Performance Computing | ✔ | ✘ | ✘ | 2019 | [16,17] |
| Near-Memory Computing | ✔ | ✘ | ✘ | 2019 | [18] |
| Quantum Computing | ✘ | ✔ | ✘ | 2018 | [19] |
| High-Performance Computing | ✔ | ✘ | ✘ | 2024 | [20] |
| Quantum Computing | ✔ | ✘ | ✔ | 2024 | [21] |
| Neuromorphic Computing | ✔ | ✘ | ✘ | 2025 | [22] |
| Adiabatic Quantum Computing | ✔ | ✘ | ✘ | 2024 | [23] |
| Neuromorphic Computing | ✔ | ✘ | ✘ | 2024 | [24] |
| Current Paper | ✔ | ✔ | ✔ | - | - |

| Inclusion Criterion | Exclusion Criterion |
|---|---|
| Performs a holistic review | Does not perform a holistic review |
| HPC-related | Not HPC-related |
| Contains practical and empirical analysis | Lacks practical and empirical analysis |
| Published between 2018 and 2025 | Published before 2018 |
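
Read together, the inclusion criteria act as a conjunctive filter: a study is retained only if it satisfies every criterion, and failing any single one excludes it. The minimal Python sketch below expresses that screening step under stated assumptions; the `Record` fields and the example titles are hypothetical placeholders for whatever metadata was actually extracted during data collection, which the review does not specify.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """A candidate study. Field names are hypothetical, chosen for illustration."""
    title: str
    year: int
    holistic_review: bool
    hpc_related: bool
    empirical_analysis: bool


def passes_screening(r: Record, start: int = 2018, end: int = 2025) -> bool:
    # Each condition mirrors one inclusion criterion; failing any one excludes the study.
    return (
        r.holistic_review
        and r.hpc_related
        and r.empirical_analysis
        and start <= r.year <= end
    )


def screen(records: list[Record]) -> list[Record]:
    """Keep only the records that meet all inclusion criteria."""
    return [r for r in records if passes_screening(r)]


if __name__ == "__main__":
    candidates = [
        Record("Paper A", 2021, True, True, True),
        Record("Paper B", 2015, True, True, True),   # excluded: published before 2018
        Record("Paper C", 2023, True, False, True),  # excluded: not HPC-related
    ]
    for r in screen(candidates):
        print(r.title)
```

Keeping each criterion as a separate boolean field makes it easy to report, per excluded study, which criterion caused the exclusion.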

| Computing Technology | Global Research Focus | Scientific Challenges | Interdisciplinarity Level | Potential Impact | Maturity Level | Fundamental Innovation Level |
|---|---|---|---|---|---|---|
| Quantum Computing | Very high | Very high | Very high (physics, mathematics, and electrical engineering) | Very high | Low | Very high |
| Nanocomputing | High | High | High (nanoscience, materials, and electronics) | High | Medium | High |
| In-Memory Architectures | Growing | High | High (electrical engineering, memory technology, and computer science) | High | Low | High |
| Neuromorphic Computing | High | Very high | Very high (neuroscience, engineering, and machine learning) | High | Low | Very high |
| Serverless Computing | Very high | Medium | Medium (mainly software engineering) | High | High | Medium |
| Adiabatic Computing | Medium | Very high | Very high (physics, thermodynamics, and computing) | Very high | Very low | Very high |
| Other Bio-Inspired Solutions | Medium | High | Very high (biology, algorithms, and circuits) | High | Low | Very high |

| Feature | Security | Parallel Processing | States | Unit of Information |
|---|---|---|---|---|
| Classical Computing | Breakable | Limited | 0 or 1 | Bit |
| Quantum Computing | New, Faster, and Robust Algorithms | Extensive (based on superposition) | Superposition of 0 and 1 | Qubit |
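
The "States" and "Parallel Processing" rows can be made concrete with a small state-vector calculation. The plain-Python sketch below assumes an ideal, noise-free qubit: it represents the qubit as two complex amplitudes, applies a Hadamard gate to create an equal superposition, and computes measurement probabilities with the Born rule. It also shows that describing n qubits takes 2^n amplitudes, which is the sense in which the state space grows exponentially. A measurement still returns a single classical outcome, so any speedup depends on algorithms that exploit interference. The function names are illustrative and do not come from any quantum SDK.

```python
import math

# A single-qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# normalized so that |alpha|^2 + |beta|^2 = 1. A classical bit is exactly one of 0 or 1.


def hadamard(state):
    """Apply the Hadamard gate; it maps |0> to an equal superposition of |0> and |1>."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def measurement_probabilities(state):
    """Born rule: the probability of reading 0 or 1 is the squared magnitude of each amplitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


def amplitudes_needed(n_qubits):
    """An n-qubit register is described by 2**n complex amplitudes."""
    return 2 ** n_qubits


zero = (1 + 0j, 0 + 0j)   # the |0> basis state
plus = hadamard(zero)     # (|0> + |1>) / sqrt(2)
p0, p1 = measurement_probabilities(plus)
print(round(p0, 3), round(p1, 3))   # 0.5 0.5
print(amplitudes_needed(30))        # 1073741824
```

Applying `hadamard` twice returns the qubit to |0> with probability 1, a small instance of the interference that quantum algorithms rely on.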

| In-Memory Architecture | Traditional Architecture |
|---|---|
| Integrates the processing unit and memory | Long physical distance between the CPU and memory |
| Processes data in place | High energy cost of data transfer |
| Uses fast memories such as RAM or non-volatile memory (NVRAM) | Low I/O speed |
| Reduces data round trips between the CPU and DRAM | High data-access latency |
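
The contrast above is mainly about data movement. The toy Python model below counts how many words cross a hypothetical memory-CPU interface when an array is summed in two ways: fetching every operand to the CPU (traditional) versus reducing the array next to the memory and returning only the result (in-memory). The `Memory` class and its `transfers` counter are illustrative assumptions. The model ignores caches, bus width, and burst transfers, and it assumes the in-memory logic supports a sum reduction, which real processing-in-memory hardware may or may not provide.

```python
class Memory:
    """Hypothetical memory array that counts words moved across the memory-CPU interface."""

    def __init__(self, data):
        self._data = list(data)
        self.transfers = 0

    def __len__(self):
        return len(self._data)

    def read(self, i):
        """Traditional path: each word is shipped to the processor."""
        self.transfers += 1
        return self._data[i]

    def reduce_in_place(self):
        """In-memory path: logic beside the array sums locally; only the result crosses the bus."""
        total = sum(self._data)
        self.transfers += 1
        return total


def cpu_sum(mem):
    """Von Neumann style: fetch every operand, then add on the CPU."""
    return sum(mem.read(i) for i in range(len(mem)))


data = range(1_000_000)
traditional = Memory(data)
in_memory = Memory(data)
assert cpu_sum(traditional) == in_memory.reduce_in_place()  # same answer either way
print(traditional.transfers, in_memory.transfers)  # 1000000 1
```

Both paths compute the same sum; only the number of transfers differs, which is the "reduces data round trips" row expressed as a count.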

| Computing Technology | Materials Science | Chemistry | Physics and Astronomy | Engineering | Computer Science | Number of Books | Number of Book Chapters | Number of Conference Papers | Number of Articles |
|---|---|---|---|---|---|---|---|---|---|
| Biological Computing | ✔ | ✔ | ✔ | ✔ | ✔ | 0 | 0 | 19 | 46 |
| Quantum Computing | ✔ | ✔ | ✔ | ✔ | ✔ | 136 | 26 | 2110 | 4953 |
| Nanocomputing | ✔ | ✔ | ✔ | ✔ | ✔ | 1 | 0 | 68 | 65 |
| In-Memory Computing | ✔ | ✔ | ✔ | ✔ | ✔ | 21 | 6 | 33 | 572 |
| Neuromorphic Computing | ✔ | ✔ | ✔ | ✔ | ✔ | 33 | 2 | 896 | 1717 |
| Serverless Computing | ✔ | ✘ | ✔ | ✔ | ✔ | 9 | 0 | 409 | 119 |
| Adiabatic Computing | ✔ | ✘ | ✔ | ✔ | ✔ | 2 | 0 | 28 | 45 |
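
To compare overall research volume, the four document-type counts in the table above can be summed per technology. The Python sketch below does this with the table's own numbers, assuming the document-type categories do not overlap so that their sum is a meaningful total. It ranks the technologies and prints each one's share of all counted items.

```python
# Publication counts copied from the table:
# (books, book chapters, conference papers, articles).
counts = {
    "Biological Computing":   (0, 0, 19, 46),
    "Quantum Computing":      (136, 26, 2110, 4953),
    "Nanocomputing":          (1, 0, 68, 65),
    "In-Memory Computing":    (21, 6, 33, 572),
    "Neuromorphic Computing": (33, 2, 896, 1717),
    "Serverless Computing":   (9, 0, 409, 119),
    "Adiabatic Computing":    (2, 0, 28, 45),
}

# Total items per technology, assuming document types are disjoint.
totals = {tech: sum(row) for tech, row in counts.items()}
grand_total = sum(totals.values())

# Rank technologies by total output and report each one's share of all counted items.
for tech, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{tech:<24}{total:>6}{100 * total / grand_total:>7.1f}%")
```

Summed this way, quantum computing accounts for 7,225 of the 11,316 counted items, followed by neuromorphic computing with 2,648.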

| Computing Technology | Main Providers | Challenges | Benefits | Applications | Future Research |
|---|---|---|---|---|---|
| Quantum Computing | | | | | |
| Nanocomputing | | | | | |
| In-Memory Architecture | | | | | |
| Biologically Inspired Solutions | | | | | |
| Adiabatic Technologies | | | | | |
| Neuromorphic Systems | | | | | |
| Serverless Computing | | | | | |

| Future Research Direction | References | Technology Gap |
|---|---|---|
| Integration of emerging HPC-related technologies with machine learning and artificial intelligence | Ohja et al. [108], Baldini et al. [109], Buyya et al. [110], Castro et al. [111] | Limited maturity in the use of ML and AI in emerging HPC-related technologies |
| Scalability analysis in emerging HPC-related technologies | Wooley et al. [112], Verma et al. [113], Scuman et al. [114], Adhianto et al. [115], Ellingson et al. [116], Chou et al. [117], Yang et al. [118], Yang et al. [119], Matsuo et al. [120], Kang et al. [121], Chen et al. [122] | Scalability issues in emerging HPC-related technologies |
| Green HPC-related methods and mechanisms | Silva et al. [123], Liu et al. [124], Zhang et al. [125] | Reducing the carbon footprint of emerging HPC-related technologies |
| Development of hybrid systems that integrate multiple emerging technologies (e.g., quantum + neuromorphic or in-memory + nanoscale computing) to leverage their respective advantages simultaneously | Marković et al. [126], Wang et al. [127], Bravo et al. [128] | Design and development of hybrid HPC systems |
| Creation of standardized simulation models and performance evaluation frameworks for emerging HPC-related technologies | Moreno et al. [129], Mohammed et al. [130], Netti et al. [131] | Need to standardize emerging HPC-related technologies and to propose efficient performance evaluation frameworks |
| Exploration of complementary trends (edge computing, AI-HPC convergence, exascale-and-beyond computing, cloud + HPC hybrid models, edge-HPC integration, GPU cloud computing, etc.) | Jonas et al. [132], Thottempudi et al. [133], Dakić et al. [134], Morgan et al. [135], Chou et al. [122], Sheikh et al. [136], Huerta et al. [137], Liao et al. [138], Zhang et al. [139], Deepak et al. [140], We et al. [141], Shafiei et al. [142] | Need for more focus on complementary trends in HPC technologies |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Arianyan, E.; Gholipour, N.; Maleki, D.; Ghorbani, N.; Sepahvand, A.; Goudarzi, P. A Systematic Review and Classification of HPC-Related Emerging Computing Technologies. Electronics 2025, 14, 2476. https://doi.org/10.3390/electronics14122476
