Abstract

Glove-based human–machine interfaces (HMIs) offer a natural, intuitive way to capture finger motions for gesture recognition, virtual interaction, and robotic control. However, many existing systems suffer from complex fabrication, limited sensitivity, and reliance on external power. Here, we present a flexible, self-powered glove HMI based on a minimalist triboelectric nanogenerator (TENG) sensor composed of a conductive fabric electrode and a textured Ecoflex layer. Surface micro-structuring via 3D-printed molds enhances triboelectric performance without added complexity, achieving a peak power density of 75.02 μW/cm² and stable operation over 13,000 cycles. The glove system enables real-time LED brightness control via finger-bending kinematics and supports intelligent recognition applications. A convolutional neural network (CNN) achieves 99.2% accuracy in user identification and 97.0% in object classification. By combining energy autonomy, mechanical simplicity, and machine learning capabilities, this work advances scalable, multi-functional HMIs for applications in assistive robotics, augmented reality (AR)/virtual reality (VR) environments, and secure interactive systems.

1. Introduction

The rapid advancement of science and technology has integrated machines, such as computers and robots, into nearly every aspect of daily life, driving the evolution of human–machine interaction devices that serve as critical bridges for communication and control [1]. Alongside this progress, the explosive growth of AR and VR technologies has created an urgent demand for immersive and intuitive human–machine interaction solutions that enable seamless interaction within virtual environments [2]. Traditional tools like the mouse and keyboard, while effective for basic tasks, fall short in delivering natural, context-aware, and responsive interactions. Consequently, more intuitive and user-friendly HMI modalities are gaining momentum. Voice-based interfaces have become ubiquitous in smart home systems, enabling effortless control of household appliances through spoken commands [3]. Likewise, technologies based on facial expression recognition and bioelectrical signals, such as electrocardiogram (ECG) and electromyogram (EMG), are being adopted to interpret human intention through physiological cues [4,5,6,7]. However, among all body parts, the human hand, due to its frequent use and dexterity, has emerged as a particularly powerful medium for gesture-based HMI. In particular, glove-based systems capable of capturing nuanced finger movements have garnered significant attention [3,8,9,10]. These systems not only facilitate precise task execution but also serve as expressive tools for conveying emotion and intent, making them ideal for natural and intuitive communication.

A wide range of glove-based HMI systems have been developed using sensing mechanisms such as capacitive [11,12,13,14], resistive [15,16,17], and optical technologies [18,19]. However, these systems often suffer from drawbacks including limited stretchability, intricate fabrication processes, and reliance on external power sources [20,21]. To overcome these challenges and enable energy-autonomous operation, self-powered sensing technologies, especially TENGs, have emerged as transformative candidates for next-generation glove-based HMIs. TENGs, which convert mechanical stimuli into electrical signals through contact electrification and electrostatic induction, have advanced rapidly since their introduction by Zhonglin Wang [22,23]. Their key advantages, including their low-cost fabrication, broad material compatibility, and high sensitivity to subtle deformations, make them especially suitable for wearable applications [24,25,26,27,28,29]. Despite their promise, many existing TENG-based glove systems face practical challenges such as overly complex, multi-material constructions or low sensitivity [30,31,32]. For example, Luo et al. designed a glove using a multilayer TENG composed of silicone rubber (negative layer), polydimethylsiloxane (PDMS, positive layer), and copper mesh electrodes. While functional, this architecture is difficult to assemble and scale [2]. Similarly, Wang et al. proposed a single-sensor glove using a polyvinyl alcohol–sodium alginate–polyaniline (PSP) hydrogel electrode with Ecoflex as the triboelectric layer [1]. Although the device exhibits stretchability, the intricate fabrication process and inclusion of textile-like filters for signal enhancement increase system complexity. These limitations highlight the need for simplified, high-sensitivity TENG-based gloves that retain performance while streamlining design and fabrication.

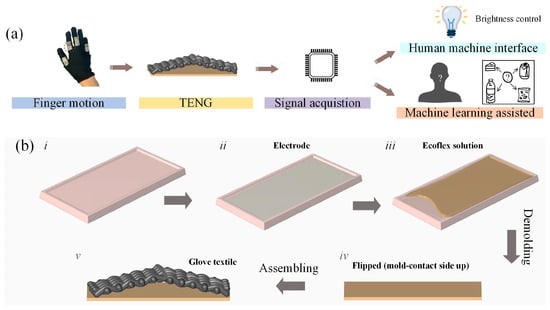

In this work, we present a flexible and scalable TENG glove sensor with a minimalist structure, consisting of only a conductive fabric electrode and a microtextured Ecoflex triboelectric layer. The device is fabricated in a single step, in which the Ecoflex surface texture is introduced directly during casting with a reusable 3D-printed mold. This streamlined approach simplifies fabrication and eliminates the need for additional materials or post-treatment. The sensor delivers strong electrical output, achieving a power density of 75.02 μW/cm² at 100 MΩ, making it suitable for both energy harvesting and self-powered sensing. Its fully encapsulated design ensures excellent durability, maintaining stable performance over 13,000 mechanical cycles. It also exhibits strong washability, with output remaining nearly unchanged after water immersion and drying. To accommodate finger motion, the sensor adopts an arc-shaped configuration with an extended textile segment, providing mechanical flexibility and wearer comfort without requiring stretchable materials. The system’s practical potential is demonstrated through two applications, as shown in Figure 1a: (1) real-time LED control via finger bending angle, and (2) high-accuracy machine learning-based recognition, where a CNN achieves 99.2% accuracy in user identification and 97.0% in object recognition. By balancing structural simplicity, washability, and energy autonomy, this work offers a compelling platform for next-generation wearable HMIs in assistive robotics, AR/VR interaction, and biometric authentication.

Figure 1.

(a) A schematic illustration of the application scenario of the self-powered glove-based HMI sensors. (b) The step-by-step fabrication process of the self-powered TENG sensor.

2. Materials and Methods

2.1. Fabrication of Ecoflex Film

The fabrication process of the single-electrode TENG sensor is illustrated in Figure 1b. A simple 3D-printed mold with dimensions of 1.5 × 3 cm² was first prepared (Figure 1b(i)). The mold was fabricated using a RAISE3D E2 printer (RAISE3D Technologies Inc., Irvine, CA, USA) with 1.75 mm PLA filament (Raise3D Technologies Inc., Irvine, CA, USA). Printing parameters included a layer height of 0.1 mm, 50% infill density, a wall line count of three, and an infill speed of 70 mm/s. This setup produced fine surface features that were successfully transferred to the Ecoflex (Smooth-On Inc., Macungie, PA, USA) during molding. The electrode of the TENG sensor was constructed by laminating two layers of single-sided conductive fabric (1.5 × 3 cm²) back-to-back, with a conductive wire sandwiched between them to ensure robust electrical connectivity. This layered configuration provided a stable adhesion interface for subsequent electrical characterization. The assembled electrode was then positioned within the mold (Figure 1b(ii)).

Ecoflex, a biocompatible and flexible silicone elastomer, was chosen for its superior softness and stretchability compared to materials like PDMS. Its low elastic modulus allows better contact with surfaces, improving triboelectric charge transfer. Ecoflex also demonstrates reliable triboelectric performance and mechanical stability, making it well-suited for durable wearable TENG devices. To prepare the Ecoflex solution, its two components (Part A and Part B) were mixed at a 1:1 weight ratio and homogenized via vortex mixing for 1 min to ensure uniformity. The blended solution was poured into the mold (Figure 1b(iii)), allowing it to permeate the conductive fabric and fully encapsulate the electrode.

Pure Ecoflex Control: For control samples, the Ecoflex was cured at room temperature overnight, after which the film was demolded (Figure 1b(iv)). Both surfaces of the pure Ecoflex film were evaluated as triboelectric interfaces: the smooth upper surface (see Figure S1a in the Supplementary Materials) and the textured underside, which retained the inverse pattern of the 3D-printed mold (Figure S1b).

Powder-Coated Ecoflex Fabrication: To enhance triboelectric performance, a modified curing process was used. The Ecoflex was first partially cured (30 min at room temperature) to achieve a semi-solid state. Triboelectrically negative polytetrafluoroethylene (PTFE) powders (particle diameter: 2–3 μm) were mechanically deposited onto the surface of the semi-cured Ecoflex (see Figure S2). These powders were selected for their strong electron affinity [33], enabling investigation into their effect on charge generation. During the final curing stage, which involved an overnight cure at room temperature, the PTFE particles became partially embedded in the Ecoflex matrix due to the tacky nature of the semi-cured surface. This physical interlocking, along with van der Waals interactions at the interface, produced a durable and adherent triboelectric coating.

2.2. Assembly of Arc-Shaped Glove-Based HMI Sensors

The cured Ecoflex film was mounted over the proximal interphalangeal (PIP) joint of each finger on a textile glove (70% cotton, 30% polyester-cotton blend, as specified by the supplier) using adhesive, with both ends secured to form an arc-shaped configuration (Figure 1b(v)). This structure allows the glove textile to bend naturally during finger movements, initiating contact–separation cycles between the textile (positive tribo-material) and the Ecoflex film (negative tribo-material). The selected glove material offers wide availability, comfort, and suitability for wearable human–machine interface (HMI) applications. Furthermore, the significant triboelectric polarity difference between the materials ensures efficient charge generation during motion. The resulting mechanical deformation produces distinct electrical signals, enabling real-time motion detection and energy harvesting.

2.3. Characterization and Measurement

To evaluate the electrical performance of the glove-based HMI sensors, cyclic mechanical stimuli were applied using a programmable linear motor with adjustable force and frequency. The triboelectric output signals were characterized under two electrical conditions. The open-circuit voltage Voc was measured using a high-impedance oscilloscope (DHO1204, RIGOL, Beijing, China) equipped with a 100 MΩ passive probe to minimize signal attenuation. The short-circuit current Isc was recorded using a precision electrometer (B2985B, Keysight Technologies, Santa Rosa, CA, USA), which provided high-resolution current measurements in the nanoampere to picoampere range.

3. Results

3.1. Characterization of TENG Sensor

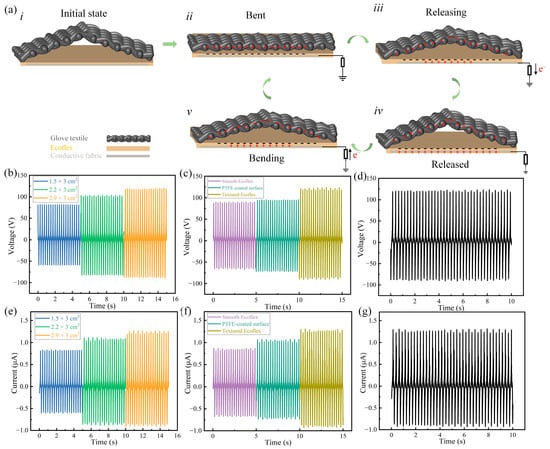

The operational principle of the arc-shaped minimalist TENG sensor is schematically illustrated in Figure 2a. For clarity, the Ecoflex film is depicted as a flat surface, while the glove textile is shown in an elongated arc to facilitate visualization. In the initial state (Figure 2a(i)), the Ecoflex film and the glove textile remain spatially separated, resulting in no physical contact between the triboelectric materials and, consequently, no charge generation. As the user bends their finger (Figure 2a(ii)), the glove textile deforms and gradually makes contact with the Ecoflex surface. Due to the Ecoflex film’s strong electron affinity (i.e., its highly negative position in the triboelectric series), electrons are transferred from the glove textile to the Ecoflex upon contact. This contact-induced charge transfer generates an electrostatic imbalance: the Ecoflex acquires a net negative charge, while the glove textile accumulates an equal and opposite positive charge. During the release phase (Figure 2a(iii)), as the finger returns to its resting position, the two triboelectric layers gradually move apart. This separation generates an electrostatic potential difference that causes electrons to flow through the external circuit, from the conductive fabric electrode to the ground, in order to re-establish electrostatic balance. The electrical output reaches a transient peak when the layers are at maximum separation (Figure 2a(iv)). With continued finger motion (Figure 2a(v)), the process reverses: as the layers come into contact again, electrons flow in the opposite direction through the circuit to balance the newly generated potential. This cyclic process of contact and separation continuously produces alternating electrical signals (voltage and current) that directly correlate with the finger’s bending kinematics. By capturing and analyzing real-time electrical signals, the TENG sensor offers a self-powered and reliable mechanism for tracking finger motion, enabling low-latency and intuitive HMI.

Figure 2.

(a) A schematic illustration of the working mechanism of the self-powered TENG sensor. (b,e) Voc and Isc outputs of TENG sensors with varying contact areas (1.5 × 3 cm² to 2.2 × 3 cm²). (c,f) Voc and Isc outputs of TENG sensors with different surface modification strategies. (d,g) Representative Voc and Isc outputs of the optimized TENG sensor (1.5 × 3 cm²) under a 24 N contact force at 4 Hz.

To evaluate its output performance, cyclic contact–separation tests were conducted using a programmable linear motor, applying a contact force of 24 N at a frequency of 4 Hz. In these tests, the textile glove served as the triboelectrically positive contact material. To investigate the influence of device geometry, TENG sensors with varying contact areas (from 1.5 × 3 cm² to 2.2 × 3 cm²) were fabricated and characterized (Figure 2b,e). As shown, both Voc and Isc increased monotonically with contact area, rising from approximately 140.2 V to 204.6 V and from ~1.43 μA to ~2.1 μA, respectively. This scaling behavior arises because a larger effective contact area leads to greater total charge transfer, thereby enhancing the overall electrical output [34]. These results underscore the importance of geometric optimization in tailoring TENG sensors for specific HMI applications, balancing enhanced performance with ergonomic integration.
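The scaling with contact area can be checked with a short calculation. The sketch below is illustrative only: it assumes that the two reported endpoint values belong to the smallest (1.5 × 3 cm²) and largest (2.2 × 3 cm²) devices, and the dictionary keys are names chosen here for readability.

```python
# Endpoint values reported for the contact-area study (24 N, 4 Hz).
# Assumption: the lower values belong to the smallest device and the
# higher values to the largest device.
small = {"area_cm2": 1.5 * 3.0, "voc_v": 140.2, "isc_ua": 1.43}
large = {"area_cm2": 2.2 * 3.0, "voc_v": 204.6, "isc_ua": 2.1}


def ratio(key):
    """Ratio of the large-device value to the small-device value."""
    return large[key] / small[key]


area_ratio = ratio("area_cm2")
voc_ratio = ratio("voc_v")
isc_ratio = ratio("isc_ua")

print(f"area x{area_ratio:.2f}, Voc x{voc_ratio:.2f}, Isc x{isc_ratio:.2f}")
# prints: area x1.47, Voc x1.46, Isc x1.47
```

Under this assumption, the voltage and current both grow by nearly the same factor as the area, which is what a roughly constant transferred charge per unit area would produce.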

To further enhance the TENG sensor’s output, multiple surface modification strategies were systematically investigated. Ecoflex films with distinct surface morphologies—smooth (flat) and rough (patterned using a 3D-printed mold)—were fabricated to evaluate the effect of surface texture on triboelectric performance. Additionally, Ecoflex films coated with PTFE powders were developed to examine the impact of surface chemistry modifications. As shown in Figure 2c,f, compared to the smooth Ecoflex surface, the PTFE-coated film exhibited moderately improved output, with a Voc of approximately 160 V and an Isc of ~1.7 μA. In stark contrast, the rough-surfaced Ecoflex fabricated using the 3D-printed mold delivered the highest output, significantly outperforming even the PTFE-coated variant. This significant enhancement is attributed to the synergistic effects of increased interfacial contact area and friction during mechanical deformation. Upon applied force, the textured surface (Figure S2b) compresses and conforms more intimately with the counterpart tribo-material, effectively increasing the real contact area and enhancing charge transfer. Due to its superior performance and ease of fabrication, the textured Ecoflex surface was selected as the standard triboelectric interface for subsequent experiments. These findings highlight the effectiveness of surface engineering, particularly mold-based roughening, as a simple yet powerful approach to optimize self-powered HMI sensors.

Considering practical application scenarios in which the device is mounted on finger joints, the TENG sensor size was optimized to 1.5 × 3 cm². Under a contact force of 24 N and an operating frequency of 4 Hz, the device exhibited a typical Voc of approximately 204 V and an Isc of ~2.1 μA, as shown in Figure 2d,g.

Furthermore, to evaluate the consistency in fabrication and signal output, we fabricated and tested 10 independently prepared TENG devices with the optimized structure under identical measurement conditions. The corresponding output voltages and statistical results are presented in Figure S3, showing small variations among different samples. These results confirm good reproducibility across multiple samples, verifying the reliability of the preparation method and the robustness of the sensor’s electrical performance. In addition, the washability of the device was evaluated to assess its long-term usability. As the conductive fabric-based electrode is fully encapsulated within Ecoflex, the TENG sensor exhibits excellent water resistance. A washability test was conducted by immersing the device in water for 20 min. The output voltage was measured before and after immersion under a fixed force amplitude and frequency. As shown in Figure S4, the output voltage remained nearly unchanged, confirming the device’s stability and durability in wet conditions. These results demonstrate that the sensor is washable and suitable for practical long-term use in wearable applications.

Moreover, potential sources of error in the sensor output were considered to reinforce the reliability of the system. These include slight inconsistencies during manual fabrication, variations in the effective contact area during repeated measurements, and environmental factors such as humidity and temperature. Limitations of the measurement system, including oscilloscope resolution and ambient electrical noise, may also introduce fluctuations. To minimize these uncertainties, consistent testing conditions, such as stable humidity and temperature, force amplitude, and frequency, were maintained within each experimental set. The low standard deviation observed in Figure S3 further highlights the high stability and repeatability of the sensor system.

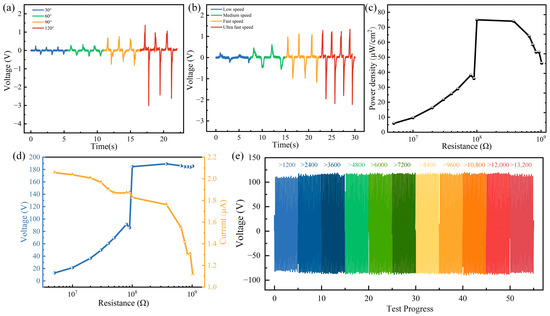

With the optimized device size and surface morphology, the TENG sensor was designed in an arc shape and mounted on the PIP joint of the finger (Figure 1a). Performance characterization was carried out with the sensor positioned on the index finger, which was actuated to bend at the PIP joint at a frequency of ~0.56 Hz. The recorded output voltages corresponding to bending angles of 30°, 60°, 90°, and 120° were approximately 0.42 V, 0.57 V, 1.16 V, and 3.83 V, respectively (Figure 3a). The output clearly increased with bending angle due to the enlarged effective contact area. These results demonstrate that precise control of finger flexion angles offers an intuitive mechanism for proportional HMI command input, such as graded control of peripheral devices.
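To illustrate how such an angle-dependent output could serve as a proportional input, the following minimal sketch inverts the four reported calibration points by piecewise-linear interpolation. The interpolation scheme and the function name `estimate_angle` are assumptions made here for illustration; the measured response is nonlinear and also depends on bending speed, so a practical system would need its own calibration.

```python
# Reported calibration points: (bending angle in degrees, output voltage in volts).
CALIBRATION = [(30.0, 0.42), (60.0, 0.57), (90.0, 1.16), (120.0, 3.83)]


def estimate_angle(voltage):
    """Estimate the bending angle from a voltage by piecewise-linear interpolation.

    Voltages outside the calibrated range are clamped to the nearest endpoint.
    """
    if voltage <= CALIBRATION[0][1]:
        return CALIBRATION[0][0]
    if voltage >= CALIBRATION[-1][1]:
        return CALIBRATION[-1][0]
    angle = CALIBRATION[-1][0]
    for (a0, v0), (a1, v1) in zip(CALIBRATION, CALIBRATION[1:]):
        if v0 <= voltage <= v1:
            # Linear interpolation inside the bracketing segment.
            angle = a0 + (a1 - a0) * (voltage - v0) / (v1 - v0)
            break
    return angle


print(estimate_angle(0.865))  # midway between the 60 and 90 degree points
```

The steep rise between 90° and 120° means that the same voltage uncertainty translates into a smaller angle uncertainty at large bends than at small ones.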

In addition to bending angle, bending speed also influenced the TENG output. Typical finger motion speeds at the PIP joint vary across real-world scenarios: slow movements (e.g., precise manipulation or typing) occur at approximately 0.2 to 0.5 Hz, moderate-to-fast motions (e.g., dynamic gestures or object grasping) range from 0.5 to 2 Hz, and ultra-fast actions (e.g., snapping) can exceed 5 Hz. To capture part of this spectrum, we tested a graded set of bending frequencies ranging from approximately 0.27 Hz (slow) to 0.53 Hz, the fastest condition in our measurements, which we label “ultra-fast” only in a relative sense. Although this highest tested frequency lies at the lower edge of the moderate-to-fast range and does not reach the ultra-fast range observed in some rapid finger actions, it represents speeds relevant for many practical HMI applications. As shown in Figure 3b, when the bending angle was fixed at 30°, the output voltage increased with higher motion speeds, demonstrating the sensor’s sensitivity to both slower and faster motions within the tested range. Moreover, motion speed can be inferred from the time interval between signal peaks, enabling both angle and speed to serve as tunable parameters for HMI control.
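The inference of motion speed from peak spacing can be sketched as follows. This is a minimal illustration and not the processing used in this work: the peak criterion (a local maximum above a fixed threshold), the function names, and the synthetic trace are all assumptions.

```python
def peak_times(samples, sample_rate_hz, threshold):
    """Return the times (in seconds) of local maxima that exceed the threshold."""
    times = []
    for i in range(1, len(samples) - 1):
        is_local_max = samples[i - 1] < samples[i] >= samples[i + 1]
        if samples[i] > threshold and is_local_max:
            times.append(i / sample_rate_hz)
    return times


def bending_frequency_hz(samples, sample_rate_hz, threshold):
    """Estimate the bending frequency from the mean interval between peaks."""
    times = peak_times(samples, sample_rate_hz, threshold)
    if len(times) < 2:
        return None  # not enough peaks to define an interval
    mean_interval = (times[-1] - times[0]) / (len(times) - 1)
    return 1.0 / mean_interval


# Synthetic trace (not measured data): one triangular pulse every 2 s at 100 Hz.
trace = [0.0] * 1000
for center in (100, 300, 500, 700):
    trace[center - 1], trace[center], trace[center + 1] = 0.5, 1.0, 0.5

print(bending_frequency_hz(trace, sample_rate_hz=100.0, threshold=0.8))  # 0.5
```

On real signals, the threshold would have to sit above the noise floor, and some smoothing before peak detection would likely be needed to avoid counting noise spikes as bends.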

Figure 3.

(a) The output voltage of the TENG sensor mounted on the index finger at the PIP joint during bending at angles of 30°, 60°, 90°, and 120° at a constant speed. (b) Output voltage response at a fixed bending angle of 30° under varying bending speeds. (c) Output power density as a function of external load resistance, showing an optimal performance at 100 MΩ. (d) Output voltage and current as functions of external load resistance, highlighting impedance-matching behavior. (e) The durability performance of the TENG sensor under continuous cyclic operation at 4 Hz for over 13,000 cycles. The test was conducted in 5 min intervals (approximately 1200 cycles per segment), and cycle counts are indicated above each segment.

The internal impedance of the TENG sensor plays a critical role in both energy harvesting efficiency and signal acquisition circuit design. By varying the external load resistance from 5.1 MΩ to 1 GΩ, the peak-to-peak voltage (Vpp) increases and gradually saturates at approximately 200 V at 1 GΩ, while the current decreases to near-zero levels (Figure 3d). The power density (P) is calculated using the equation P = UI/A = U²/(RA), where I, U, R, and A represent the output current, voltage, load resistance, and device area, respectively. Although both voltage and power increase initially with load resistance, the power eventually decreases due to the simultaneous drop in current (Figure 3c), resulting in a peak power density of 75.02 μW/cm² at the optimal load resistance of 100 MΩ. This impedance-matching analysis confirms the sensor’s dual functionality as a self-powered motion detector and a microscale energy harvester.
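As a consistency check on the reported peak power density, the sketch below takes the power density as the power dissipated in the load divided by the device area, P = U²/(RA) (equivalently UI/A with I = U/R), and inverts it for the load voltage. It assumes that the area is that of the optimized 1.5 × 3 cm² device. Whether the reported value is based on peak, peak-to-peak, or RMS quantities is not stated, so the result is only an order-of-magnitude check.

```python
import math


def power_density_uw_cm2(voltage_v, load_ohm, area_cm2):
    """Power density P = U^2 / (R * A), returned in microwatts per cm^2."""
    return voltage_v ** 2 / (load_ohm * area_cm2) * 1e6


def implied_voltage_v(density_uw_cm2, load_ohm, area_cm2):
    """Invert P = U^2 / (R * A) for the load voltage implied by a power density."""
    return math.sqrt(density_uw_cm2 * 1e-6 * load_ohm * area_cm2)


AREA_CM2 = 1.5 * 3.0  # assumption: the optimized 1.5 x 3 cm^2 device
LOAD_OHM = 100e6      # reported optimal load resistance

u = implied_voltage_v(75.02, LOAD_OHM, AREA_CM2)
i_ua = u / LOAD_OHM * 1e6  # Ohm's law, converted to microamperes
print(f"implied U = {u:.1f} V, I = {i_ua:.2f} uA")
```

Under these assumptions the implied load voltage is about 184 V with a current of about 1.8 μA, which is of the same order as the voltage and current levels reported for the device.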

The durability of the TENG device was further evaluated under cyclic contact–separation at a frequency of 4 Hz (Figure 3e). The results demonstrated excellent stability, with no significant degradation in output after 13,000 operational cycles. This long-term reliability affirms the device’s suitability for extended HMI applications that demand robust performance. With angle- and velocity-dependent outputs, optimized impedance characteristics, and strong cyclic stability, the TENG sensor establishes itself as a versatile platform for intuitive, self-powered human–machine interfaces. Its programmable response to biomechanical stimuli enables precise mapping of finger kinematics to control commands, advancing the development of energy-autonomous wearable HMI systems.

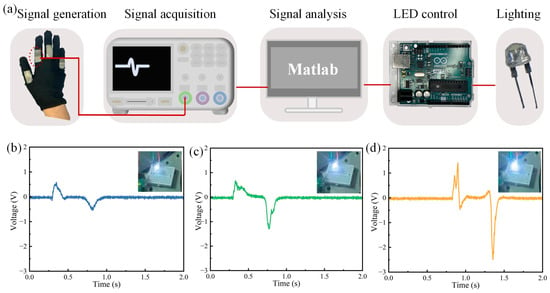

3.2. Glove-Based HMI for LED Control

As demonstrated in the TENG sensor characterization, the output voltage exhibits continuous tunability based on finger bending angle and speed, enabling proportional control of connected devices through analog electrical signals. To validate this capability, a glove-based HMI system was prototyped with the TENG sensor mounted on the PIP joint of the index finger (Figure 4a). The sensor’s output signals were acquired by an oscilloscope and transmitted via a local area network (LAN) to MATLAB R2021b for real-time processing. A Vpp extraction algorithm was implemented in MATLAB to quantify bending kinematics, and the normalized Vpp values were mapped to control the brightness of an LED via an Arduino Uno microcontroller. When the index finger was bent to small, medium, and large angles, the normalized Vpp increased proportionally, resulting in a corresponding linear increase in LED brightness (Figure 4b–d; see Video S1 in the Supplementary Materials). This direct correlation between angular displacement and luminous intensity establishes a straightforward mechanism for graded control of peripheral devices.
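The Vpp-to-brightness mapping can be sketched in a few lines. The processing in this work was implemented in MATLAB, so the Python below is only an illustrative equivalent. The calibration bounds `vpp_min` and `vpp_max` are hypothetical parameters, and the 0–255 output range assumes the 8-bit duty cycle accepted by `analogWrite()` on an Arduino Uno.

```python
def peak_to_peak(window):
    """Peak-to-peak amplitude (Vpp) of one window of voltage samples."""
    return max(window) - min(window)


def brightness_from_vpp(vpp, vpp_min, vpp_max, pwm_max=255):
    """Map Vpp linearly onto a PWM duty value in 0..pwm_max, with clamping.

    vpp_min and vpp_max are hypothetical calibration bounds, for example the
    Vpp recorded for the smallest and largest bends of a given user.
    """
    if vpp_max <= vpp_min:
        raise ValueError("vpp_max must be greater than vpp_min")
    normalized = (vpp - vpp_min) / (vpp_max - vpp_min)
    normalized = min(1.0, max(0.0, normalized))  # clamp to [0, 1]
    return round(normalized * pwm_max)


# Illustrative window of samples (not measured data).
window = [-0.4, 0.1, 0.6, 0.2, -0.1]
vpp = peak_to_peak(window)
print(brightness_from_vpp(vpp, vpp_min=0.0, vpp_max=4.0))  # 64
```

Clamping keeps the duty value valid when a bend exceeds the calibrated range, and a per-user calibration of the bounds would compensate for differences in glove fit.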

Figure 4.

(a) A schematic diagram illustrating the workflow of the glove-based HMI LED control system. Output signals from the TENG sensor when the index finger is bent at (b) small, (c) medium, and (d) large angles, with insets showing the corresponding LED brightness levels.

In addition to angular displacement, bending speed also enhances the TENG output, offering further input granularity. Since larger bending angles are typically accompanied by faster motion, the combined effect of angle and speed provides a robust mechanism for fine-tuning output levels [34]. This synergy supports intuitive control; for instance, a swift, full flexion could trigger maximum brightness, while a slow, partial bend might enable incremental adjustments. While the LED demonstration serves as a foundational example, the HMI platform’s programmability enables expansion to more complex applications, such as robotic manipulators, motorized vehicles, or virtual reality interfaces. By calibrating Vpp thresholds to specific motion profiles, users can define customized control schemes (e.g., speed-sensitive braking in robotic arms or gesture-based commands for smart home systems). Furthermore, the system’s self-powered operation eliminates the need for external power sources, making it particularly well-suited for wearable and portable applications.

3.3. Multi-Sensor Integration for Hand Motion Capture and American Sign Language (ASL) Recognition

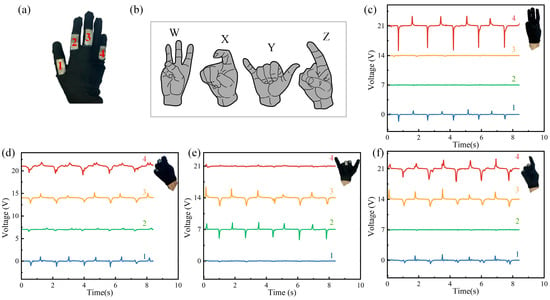

To extend the functionality of the glove-based HMI system, TENG sensors were integrated across four fingers (thumb, index, middle, and little fingers) at their PIP joints, labeled as Sensors 1–4 from left to right (Figure 5a). This multi-sensor configuration enables comprehensive hand motion capture, particularly for recognizing ASL alphabet signs. Four representative hand gestures corresponding to the ASL alphabet signs “W,” “X,” “Y,” and “Z” were selected, each involving different fingers and bending angles (Figure 5b). Real-time voltage signals acquired from the sensors (Figure 5c–f) reveal distinct patterns corresponding to specific gestures.

Figure 5.

(a) Photographs of the arc-shaped TENG sensors mounted on the thumb, index, middle, and little fingers, labeled as Sensors 1–4, corresponding to Channels 1–4. (b) Illustrations of hand gestures representing the ASL alphabet signs “W,” “X,” “Y,” and “Z.” (c–f) Real-time output signals from TENG sensors 1–4 in response to the gestures “W” to “Z,” respectively. Colored lines labeled 1, 2, 3, and 4 correspond to the output signals of Sensors 1, 2, 3, and 4.

For the “W” gesture, only the thumb and little fingers are bent, with the little finger exhibiting a slightly larger bending angle than the thumb. Consequently, Sensor 4 generates a higher output voltage than Sensor 1 (Figure 5c). The “X” gesture (Figure 5d) involves the simultaneous bending of all four fingers. Among them, the index finger, monitored by Sensor 2, exhibits only slight flexion, while the other three fingers, corresponding to Sensors 1, 3, and 4, undergo more pronounced bending and generate moderate output signals reflecting larger angular displacements. To represent the “Y” gesture (Figure 5e), the index and middle fingers bend to similar angles, leading to comparable voltage outputs from Sensors 2 and 3. Additionally, due to the mechanical coupling of the fingers, a slight fluctuation is observed in the output of Sensor 4, indicating minor movement of the little finger. For the “Z” gesture (Figure 5f), the thumb, middle, and little fingers bend simultaneously at specific angles, resulting in notable signals only from Sensors 1, 3, and 4.

In summary, by analyzing the output signals from the glove-integrated TENG sensors, real-time detection of multi-finger bending motions and corresponding gesture recognition can be successfully achieved.
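The channel patterns described above can be turned into a simple rule-based lookup, sketched below. This is a hypothetical illustration rather than the recognition method of this work: the threshold value, the function names, and the example amplitudes are assumptions, and only the sets of active sensors per gesture are taken from the description of Figure 5c–f.

```python
# Sensors that respond for each gesture, as described for Figure 5c-f.
GESTURE_SIGNATURES = {
    frozenset({1, 4}): "W",
    frozenset({1, 2, 3, 4}): "X",
    frozenset({2, 3}): "Y",
    frozenset({1, 3, 4}): "Z",
}


def active_sensors(vpp_by_sensor, threshold_v):
    """Return the set of sensors whose Vpp reaches the threshold."""
    return frozenset(s for s, vpp in vpp_by_sensor.items() if vpp >= threshold_v)


def classify_gesture(vpp_by_sensor, threshold_v):
    """Look up the gesture for the active-sensor set, or None if it is unknown."""
    return GESTURE_SIGNATURES.get(active_sensors(vpp_by_sensor, threshold_v))


# Hypothetical Vpp readings in volts (not measured data).
print(classify_gesture({1: 0.8, 2: 0.0, 3: 0.0, 4: 1.1}, threshold_v=0.2))    # W
print(classify_gesture({1: 0.0, 2: 0.9, 3: 0.9, 4: 0.05}, threshold_v=0.2))  # Y
```

A fixed threshold is fragile here. The slight index-finger flexion in “X” must exceed the threshold, otherwise the pattern collapses to that of “Z,” while the small coupled fluctuation of Sensor 4 in “Y” must stay below it. This sensitivity is one motivation for learned classifiers over hand-set rules.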

3.4. Deep Learning-Assisted Glove-Based HMI for Human and Object Recognition

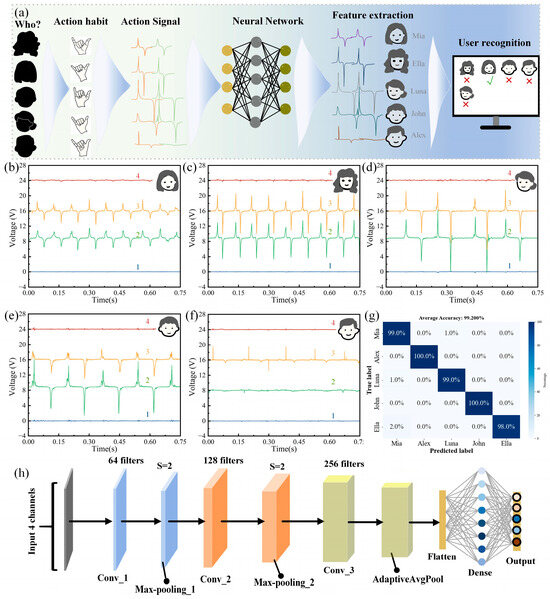

The rapid advancement of artificial intelligence (AI) has revolutionized numerous fields, with machine learning (ML) emerging as a powerful tool for extracting subtle biomechanical signatures from complex sensor signals. This capability has proven particularly transformative in glove-based HMIs, enabling precise user identification through motion pattern analysis. Leveraging this potential, we developed a customized CNN algorithm to distinguish individual users based on their unique TENG sensor signatures. The system workflow is illustrated in Figure 6a.

Figure 6.

(a) A schematic illustration of the glove-based HMI system equipped with 4 TENG sensors for user identification. (b–f) Output signal profiles from the “Y” gesture performed by five individuals: (b) Mia, (c) Ella, (d) Luna, (e) John, and (f) Alex. (g) A confusion matrix showing the classification performance of the trained CNN model. (h) The detailed architecture of the CNN used for person recognition.

Five participants (Mia, Ella, Luna, John, and Alex) wore a glove integrated with four TENG sensors (labeled 1–4) and performed the same ASL gesture “Y.” Despite the standardized gesture, distinct signal profiles were observed across users, even though only two fingers (index and middle) were utilized (Figure 6b–f). These differences are attributed to variations in hand-glove adaptability, biomechanical habits (e.g., flexion speed, acceleration), and individual movement rhythms. For participants 1 through 4, both sensors 2 and 3 captured observable signals. For Mia, both sensors generated balanced biphasic waveforms with nearly symmetrical positive and negative voltage peaks. In contrast, Ella exhibited significantly higher amplitudes, with sensor 3 displaying an asymmetric profile where the negative peak exceeded the positive by approximately 1.5 times. Luna also produced comparable positive and negative peaks across both sensors, though with larger amplitudes than Mia. John, the fourth participant, showed a lower signal magnitude on sensor 3 compared to sensor 2, with both channels exhibiting noticeable fluctuations. For the final participant, Alex, only sensor 1 produced a detectable signal, characterized by a distinct positive peak and a negligible negative component.

The architecture of the CNN model is illustrated in Figure 6h. It processes 10,000 time-step sequences from all four sensor channels and comprises three hierarchical Conv1D–MaxPool1D blocks for multiscale feature extraction, followed by an adaptive pooling layer and a fully connected classifier with dropout regularization. Raw sensor signals were preprocessed using Savitzky–Golay filtering (window size = 101, polynomial order = 3) to reduce high-frequency noise. No additional feature engineering was applied, as the 1D-CNN architecture automatically extracts discriminative spatiotemporal patterns from the filtered time-series data during training. The dataset, comprising 1500 samples (300 per participant), was randomly divided into a training set and a validation set in a 2:1 ratio. After 21 training epochs using the Adam optimizer (learning rate: 0.001), the model achieved a validation accuracy of 99.2%, demonstrating its ability to decode user-specific biomechanical signatures from kinematically similar gestures. This high recognition accuracy underscores the potential of the system for personalized HMI applications, such as user-aware virtual keyboards, adaptive robotic teleoperation, and biometric-secured AR/VR interfaces. By correlating subtle motion signatures with user identity, the platform effectively bridges biomechanical individuality with intelligent machine perception, paving the way for secure, intuitive human–machine collaboration.
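The Savitzky–Golay preprocessing step can be sketched with the standard library alone. For a symmetric window, a polynomial order of 3 gives the same smoothing weights as order 2, and these weights have a closed form. The sketch below uses that form with the stated window size of 101. Leaving the edge samples unfiltered is a simplification made here, and the function names are illustrative. In practice a library routine such as SciPy's `savgol_filter` would typically be used.

```python
def savgol_weights(window):
    """Closed-form smoothing weights of a quadratic/cubic Savitzky-Golay filter."""
    if window % 2 == 0 or window < 5:
        raise ValueError("window must be odd and at least 5")
    m = window // 2  # half-width of the window
    denom = (2 * m + 3) * (2 * m + 1) * (2 * m - 1)
    return [3 * (3 * m * m + 3 * m - 1 - 5 * i * i) / denom
            for i in range(-m, m + 1)]


def savgol_smooth(signal, window=101):
    """Smooth the interior samples; edge samples are left unchanged (simplification)."""
    weights = savgol_weights(window)
    m = window // 2
    smoothed = list(signal)
    for n in range(m, len(signal) - m):
        # Weighted sum over the window centered on sample n.
        smoothed[n] = sum(w * signal[n + k - m] for k, w in enumerate(weights))
    return smoothed


# A cubic trend passes through the filter unchanged, which is why the filter
# suppresses noise while preserving peak shapes better than a moving average.
cubic = [(n / 100) ** 3 - 2 * (n / 100) for n in range(300)]
filtered = savgol_smooth(cubic)
print(max(abs(a - b) for a, b in zip(cubic, filtered)) < 1e-9)  # True
```

With a window of 101 samples, the degree of smoothing depends on the sampling rate of the acquisition, which is not specified here.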

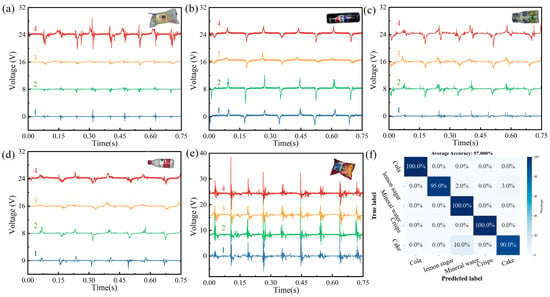

Beyond user identification, the self-powered glove-based HMI system also demonstrates strong potential for object recognition in practical applications, such as integration with robotic manipulators in automated vending machines. In this scenario, the glove can be worn by a robotic arm to classify and interact with various items autonomously. For a proof-of-concept demonstration, the glove-based system was worn by a single individual to grasp a range of common food items, including cola, lemon candy, mineral water, crisps, and cake. The corresponding triboelectric signal profiles are presented in Figure 7a–e. These distinct mechanical interactions, driven by differences in object shape, weight, and rigidity, led to clearly distinguishable signal patterns. Heavier, cylindrical objects (e.g., cola cans, water bottles) generated pronounced voltage peaks with minimal fluctuations (Figure 7b,d), indicative of greater bending angles and stronger grip forces. In contrast, lighter, flat items such as crisps and cake exhibited signals characterized by multiple transient spikes and less-defined voltage peaks, reflecting lower applied forces and intermittent contact during grasping.

Figure 7.

(a–e) Output signal profiles of the self-powered glove-based HMI system when grasping different objects: (a) cake, (b) cola, (c) lemon candy, (d) mineral water, and (e) crisps. Colored lines labeled 1, 2, 3, and 4 correspond to the output signals of Sensors 1, 2, 3, and 4. Distinct signal patterns reflect variations in object shape, weight, and rigidity. (f) Confusion matrix for object recognition, demonstrating an overall classification accuracy of 97%.

The raw sensor signals underwent the same preprocessing procedure used for user identification. A dataset comprising 1500 samples (300 per object class) was constructed and split 2:1 into training and validation sets. Using the same CNN architecture shown in Figure 6h, the model achieved a recognition accuracy of 97% after 187 training epochs (Figure 7f). This high performance underscores the system’s capability to decode object-specific tactile signatures arising from nuanced biomechanical interactions, such as uniform surface contact versus localized deformation. These results highlight the versatility of the glove-based platform as a self-powered, sensor-integrated intelligent system with potential applications in automated inventory-aware manipulation and adaptive prosthetics with context-aware force control.
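To make the shared architecture more concrete, the following sketch traces how the 10,000-step time axis shrinks through three Conv1D–MaxPool1D blocks, using the standard output-length formula for convolution and pooling layers (dilation 1). The kernel sizes, padding, and pool sizes are hypothetical values chosen for illustration; the text does not report them, and the function names are likewise assumptions.

```python
from typing import List, Tuple


def out_len(length: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a 1D convolution or pooling layer (dilation 1)."""
    return (length + 2 * padding - kernel) // stride + 1


def trace_lengths(seq_len: int, blocks: List[Tuple[int, int, int]]) -> List[int]:
    """Follow the time dimension through Conv1D -> MaxPool1D blocks.

    Each block is (conv_kernel, conv_padding, pool_kernel); the pooling
    stride is taken equal to the pool kernel size.
    """
    lengths = [seq_len]
    for conv_kernel, conv_padding, pool_kernel in blocks:
        seq_len = out_len(seq_len, conv_kernel, padding=conv_padding)
        seq_len = out_len(seq_len, pool_kernel, stride=pool_kernel)
        lengths.append(seq_len)
    return lengths


# Hypothetical hyperparameters (NOT from the paper): conv kernels 7/5/3 with
# length-preserving padding, each followed by max pooling with size 4.
BLOCKS = [(7, 3, 4), (5, 2, 4), (3, 1, 4)]

print(trace_lengths(10_000, BLOCKS))  # [10000, 2500, 625, 156]
print(trace_lengths(8_000, BLOCKS))   # [8000, 2000, 500, 125]
```

Under these assumed settings the time axis ends at 156 steps for a 10,000-step input and 125 steps for an 8000-step input. An adaptive pooling layer reduces either to the same fixed length, so the fully connected classifier sees an input of constant size regardless of recording length.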

4. Conclusions

In this work, we developed a flexible, self-powered glove-based HMI system featuring a minimalist single-electrode TENG sensor composed of a conductive fabric electrode and textured Ecoflex. Surface patterning via 3D-printed molds enhanced the triboelectric output without adding structural complexity, resulting in a peak power density of 75.02 μW/cm² and stable performance over 13,000 cycles, demonstrating long-term durability for wearable use. The arc-shaped sensor design enabled accurate, real-time tracking of finger kinematics (30–120° bending angles, variable motion speeds), as demonstrated through proportional LED brightness control.

Beyond motion sensing, the system’s multi-sensor configuration accurately captured complex gestures, including ASL alphabet letters, through voltage profiles from individual fingers. A custom CNN architecture with Conv1D layers and adaptive pooling achieved 99.2% accuracy in user identification and 97% in object recognition. While the current study focused on a proof-of-concept demonstration with a limited set of static gestures and participants, future work will broaden the dataset to include a larger variety of gestures, as well as dynamic and continuous gesture sequences. A more diverse participant pool with different motion patterns and biomechanical characteristics will also be included. These efforts will support a more comprehensive assessment of system robustness and generalizability. Further, advanced temporal modeling methods and refined performance metrics will be introduced to strengthen real-world deployment. Together, these developments will enhance the system’s ability to support continuous and intuitive human–machine interaction, particularly in applications such as sign language recognition, virtual reality, and robotics.

To place these results in context, we conducted a comparative analysis (Table S1, Supplementary Materials) against representative glove-integrated TENG systems reported in the recent literature. Our glove-based platform distinguishes itself through minimalist one-step fabrication, high power density (75.02 μW/cm²), and verified washability, a combination rarely achieved in prior works. Although the intrinsic stretchability of the material is limited, the arc-shaped sensor allows for natural finger flexion, preserving both comfort and performance. Angle-resolved sensitivity tests were affected by manual control constraints, but the strong triboelectric response suggests high mechanical sensitivity. Overall, this comparison highlights the system’s practicality and readiness for real-world use.

This work presents a scalable and energy-autonomous solution for next-generation HMI. It promotes the integration of intelligent, human-centered technologies in areas such as assistive robotics, immersive environments, and personalized interaction. The findings provide a foundation for future wearable systems that are seamless, adaptive, and user-friendly.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/electronics14122469/s1. Figure S1: Optical microscopy images of Ecoflex surfaces: (a) smooth surface and (b) rough surface patterned using a 3D-printed mold. Figure S2: Step-by-step fabrication process of the self-powered TENG sensor utilizing PTFE-coated Ecoflex as the contact surface. Figure S3: (a) Output voltages of 10 TENG sensors fabricated with the optimized structure, measured under the same force and frequency. (b) Mean output voltage with error bars representing the standard deviation. Figure S4: (a) Optical image of the self-powered TENG sensor immersed in water. (b) Voltage output comparison before immersion and after 20 min of water immersion followed by drying. Table S1: Comparison of the proposed TENG sensor and recently reported glove-based TENG HMI systems. Video S1: Demonstration of real-time TENG-based finger-bending detection and LED brightness control.

Author Contributions

Conceptualization, S.L.; methodology, S.L., X.D. and J.W.; software, S.L., X.D. and Q.T.; validation, S.L. and X.D.; investigation, S.L.; resources, S.L. and L.P.; data curation, S.L. and Q.T.; writing—original draft preparation, S.L.; writing—review and editing, S.L. and L.S.; visualization, S.L.; supervision, S.D. and L.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (No. 62301479).

Data Availability Statement

The original contributions presented in this study are included in the article and Supplementary Material. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, C.; Niu, H.; Shen, G.; Li, Y. Self-Healing Hydrogel-Based Triboelectric Nanogenerator in Smart Glove System for Integrated Drone Safety Protection and Motion Control. Adv. Funct. Mater. 2024, 35, 2419809.

- Luo, Y.; Wang, Z.; Wang, J.; Xiao, X.; Li, Q.; Ding, W.; Fu, H.Y. Triboelectric Bending Sensor Based Smart Glove towards Intuitive Multi-Dimensional Human-Machine Interfaces. Nano Energy 2021, 89, 106330.

- Zhu, M.; Sun, Z.; Zhang, Z.; Shi, Q.; He, T.; Liu, H.; Chen, T.; Lee, C. Haptic-Feedback Smart Glove as a Creative Human-Machine Interface (HMI) for Virtual/Augmented Reality Applications. Sci. Adv. 2020, 6, eaaz8693.

- Tang, C.; Xu, Z.; Occhipinti, E.; Yi, W.; Xu, M.; Kumar, S.; Virk, G.; Gao, S.; Occhipinti, L. From brain to movement: Wearables-based motion intention prediction across the human nervous system. Nano Energy 2023, 115, 108712.

- Hussein, S.E.; Granat, M.H. Intention Detection Using a Neuro-Fuzzy EMG Classifier. IEEE Eng. Med. Biol. Mag. 2002, 21, 123–129.

- Lyu, J.; Maýe, A.; Görner, M.; Ruppel, P.; Engel, A.K.; Zhang, J. Coordinating Human-Robot Collaboration by EEG-Based Human Intention Prediction and Vigilance Control. Front. Neurorobotics 2022, 16, 1068274.

- Ju, J.; Bi, L.; Feleke, A.G. Detection of Emergency Braking Intention From Soft Braking and Normal Driving Intentions Using EMG Signals. IEEE Access 2021, 9, 131637–131647.

- Zhu, M.; Sun, Z.; Lee, C. Soft Modular Glove with Multimodal Sensing and Augmented Haptic Feedback Enabled by Materials’ Multifunctionalities. ACS Nano 2022, 16, 14097–14110.

- Sun, Z.; Zhu, M.; Lee, C. Progress in the Triboelectric Human-Machine Interfaces (HMIs)-Moving from Smart Gloves to AI/Haptic Enabled HMI in the 5G/IoT Era. Nanoenergy Adv. 2021, 1, 81–120.

- Dong, W.; Yang, L.; Fortino, G. Stretchable Human Machine Interface Based on Smart Glove Embedded With PDMS-CB Strain Sensors. IEEE Sens. J. 2020, 20, 8073–8081.

- Tchantchane, R.; Zhou, H.; Zhang, S.; Dunn, A.; Sariyildiz, E.; Alici, G. Advancing Human-Machine Interface (HMI) Through Development of a Conductive-Textile Based Capacitive Sensor. Adv. Mater. Technol. 2025, 10, 2401458.

- Ozioko, O.; Dahiya, R. Smart Tactile Gloves for Haptic Interaction, Communication, and Rehabilitation. Adv. Intell. Syst. 2022, 4, 2100091.

- Glauser, O.; Wu, S.; Panozzo, D.; Hilliges, O.; Sorkine-Hornung, O. Interactive Hand Pose Estimation Using a Stretch-Sensing Soft Glove. ACM Trans. Graph. 2019, 38, 1–15.

- Liu, J.; Qiu, Z.; Kan, H.; Guan, T.; Zhou, C.; Qian, K.; Wang, C.; Li, Y. Incorporating Machine Learning Strategies to Smart Gloves Enabled by Dual-Network Hydrogels for Multitask Control and User Identification. ACS Sens. 2024, 9, 1886–1895.

- Liu, Y.; Yiu, C.; Song, Z.; Huang, Y.; Yao, K.; Wong, T.; Zhou, J.; Zhao, L.; Huang, X.; Nejad, S.K.; et al. Electronic Skin as Wireless Human-Machine Interfaces for Robotic VR. Sci. Adv. 2022, 8, eabl6700.

- Tashakori, A.; Jiang, Z.; Servati, A.; Soltanian, S.; Narayana, H.; Le, K.; Nakayama, C.; Yang, C.; Wang, Z.J.; Eng, J.J.; et al. Capturing Complex Hand Movements and Object Interactions Using Machine Learning-Powered Stretchable Smart Textile Gloves. Nat. Mach. Intell. 2024, 6, 106–118.

- Fang, P.; Zhu, Y.; Lu, W.; Wang, F.; Sun, L. Smart Glove Human-Machine Interface Based on Strain Sensors Array for Control UAV. IJEETC 2023, 216–222.

- Zhang, Z.; Zhang, L. Packaged Elastomeric Optical Fiber Sensors for Healthcare Monitoring and Human-Machine Interaction. Adv. Mater. Technol. 2024, 9, 2301415.

- Li, J.; Liu, B.; Hu, Y.; Liu, J.; He, X.-D.; Yuan, J.; Wu, Q. Plastic-Optical-Fiber-Enabled Smart Glove for Machine-Learning-Based Gesture Recognition. IEEE Trans. Ind. Electron. 2024, 71, 4252–4261.

- Yang, B.; Cheng, J.; Qu, X.; Song, Y.; Yang, L.; Shen, J.; Bai, Z.; Ji, L. Triboelectric-Inertial Sensing Glove Enhanced by Charge-Retained Strategy for Human-Machine Interaction. Adv. Sci. 2025, 12, 2408689.

- Zhang, Y.; Zheng, X.T.; Zhang, X.; Pan, J.; Thean, A.V.-Y. Hybrid Integration of Wearable Devices for Physiological Monitoring. Chem. Rev. 2024, 124, 10386–10434.

- Niu, S.; Wang, Z.L. Theoretical Systems of Triboelectric Nanogenerators. Nano Energy 2015, 14, 161–192.

- Cheng, T.; Gao, Q.; Wang, Z.L. The Current Development and Future Outlook of Triboelectric Nanogenerators: A Survey of Literature. Adv. Mater. Technol. 2019, 4, 1800588.

- Peng, X.; Dong, K.; Ye, C.; Jiang, Y.; Zhai, S.; Cheng, R.; Liu, D.; Gao, X.; Wang, J.; Wang, Z.L. A Breathable, Biodegradable, Antibacterial, and Self-Powered Electronic Skin Based on All-Nanofiber Triboelectric Nanogenerators. Sci. Adv. 2020, 6, eaba9624.

- Sun, Z.; Zhu, M.; Shan, X.; Lee, C. Augmented Tactile-Perception and Haptic-Feedback Rings as Human-Machine Interfaces Aiming for Immersive Interactions. Nat. Commun. 2022, 13, 5224.

- Tu, X.; Fang, L.; Zhang, H.; Wang, Z.; Chen, C.; Wang, L.; He, W.; Liu, H.; Wang, P. Performance-Enhanced Flexible Self-Powered Tactile Sensor Arrays Based on Lotus Root-Derived Porous Carbon for Real-Time Human–Machine Interaction of the Robotic Snake. ACS Appl. Mater. Interfaces 2024, 16, 9333–9342.

- Zhang, H.; Zhang, D.; Wang, Z.; Xi, G.; Mao, R.; Ma, Y.; Wang, D.; Tang, M.; Xu, Z.; Luan, H. Ultrastretchable, Self-Healing Conductive Hydrogel-Based Triboelectric Nanogenerators for Human–Computer Interaction. ACS Appl. Mater. Interfaces 2023, 15, 5128–5138.

- Li, Y.; Nayak, S.; Luo, Y.; Liu, Y.; Salila Vijayalal Mohan, H.K.; Pan, J.; Liu, Z.; Heng, C.H.; Thean, A.V.-Y. A Soft Polydimethylsiloxane Liquid Metal Interdigitated Capacitor Sensor and Its Integration in a Flexible Hybrid System for On-Body Respiratory Sensing. Materials 2019, 12, 1458.

- Qu, X.; Yang, Z.; Cheng, J.; Li, Z.; Ji, L. Development and Application of Nanogenerators in Humanoid Robotics. Nano Trends 2023, 3, 100013.

- Xu, G.; Wang, H.; Zhao, G.; Fu, J.; Yao, K.; Jia, S.; Shi, R.; Huang, X.; Wu, P.; Li, J.; et al. Self-Powered Electrotactile Textile Haptic Glove for Enhanced Human-Machine Interface. Sci. Adv. 2025, 11, eadt0318.

- Wen, F.; Zhang, Z.; He, T.; Lee, C. AI Enabled Sign Language Recognition and VR Space Bidirectional Communication Using Triboelectric Smart Glove. Nat. Commun. 2021, 12, 5378.

- Wen, F.; Sun, Z.; He, T.; Shi, Q.; Zhu, M.; Zhang, Z.; Li, L.; Zhang, T.; Lee, C. Machine Learning Glove Using Self-Powered Conductive Superhydrophobic Triboelectric Textile for Gesture Recognition in VR/AR Applications. Adv. Sci. 2020, 7, 2000261.

- Yang, C.; Wang, Y.; Wang, Y.; Zhao, Z.; Zhang, L.; Chen, H. Highly stretchable PTFE particle enhanced triboelectric nanogenerator for droplet energy harvestings. Nano Energy 2023, 118, 109000.

- He, T.; Sun, Z.; Shi, Q.; Zhu, M.; Anaya, D.V.; Xu, M.; Chen, T.; Yuce, M.R.; Thean, A.V.-Y.; Lee, C. Self-powered glove-based intuitive interface for diversified control applications in real/cyber space. Nano Energy 2019, 58, 641–651.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).