iRisk: Towards Responsible AI-Powered Automated Driving by Assessing Crash Risk and Prevention

Abstract

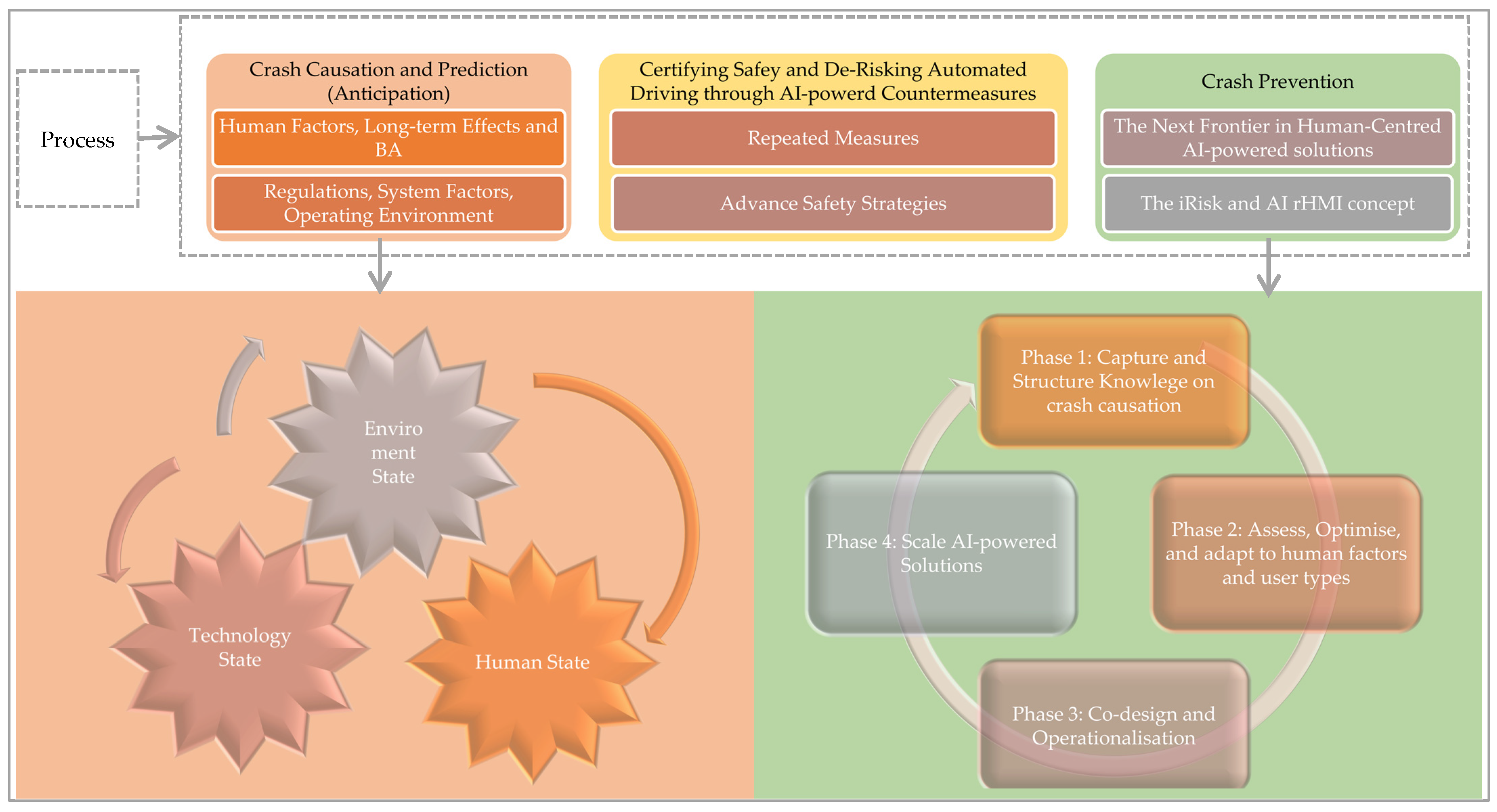

1. Introduction

Contribution

- Human Factor Requirements Engineering: De-risk crashes with AI-powered behavioural requirements analysis. Improve the efficiency of interaction between human users and the automation system/software through automated, execution-ready, and comprehensive analysis. Promote transparent processes with a human-centred knowledge tool that extracts knowledge from automated driving crash databases.

- ADS Engineering and Error Management: Enhance automation architecture decisions and accelerate design maturity with AI-driven information. Optimize efficiency with AI-augmented Design and Process Failure Mode and Effects Analysis (D-FMEA and P-FMEA) and streamlined support strategies.

- Set up new repeated-measures and long-term research directions for in-vehicle socially cognitive robots, the Internet of Vehicles (IoV), etc.

- Address specific psychological user needs and risk-based human factors.

- Adhere to the principle of form follows function and align with agile engineering.

- Iteratively refined via use in real-world settings and adaptable to evolving contexts.

- Usable, learnable, and applicable to different levels of automation.

2. Crash Risk Cause Analysis, Prediction, and Prevention: Human Factors and Long-Term Effects

- Qualitatively, risk reflects a state of uncertainty or potential threat within the traffic system, which, under specific conditions, may lead to accidents.

- Quantitatively, risk denotes the probability of danger materialising into an accident and is commonly used to assess safety conditions prior to an incident [12].

2.1. The Link to Human Factors and Behavioural Adaptations

2.1.1. Learning Experiences

2.1.2. Mental Models and Behavioural Adaptations

2.1.3. Trust, Reliance, and Acceptance

2.2. The Link to Regulations, System Factors, Operating Environment

2.3. Certifying Safety and De-Risking Automated Driving Through AI-Powered Countermeasures

“They [users] learn the speed, they learn at speed—how fast do I have to act, how often does it flash, how fast do I have to look up, and they actually get a little faster as they learn, and then that makes them feel more comfortable, because they do not want it to escalate.”

3. The Next Frontier in Human-Centred AI-Powered Solutions for Ergonomically Responsible Automated Driving: The iRisk and AI rHMI

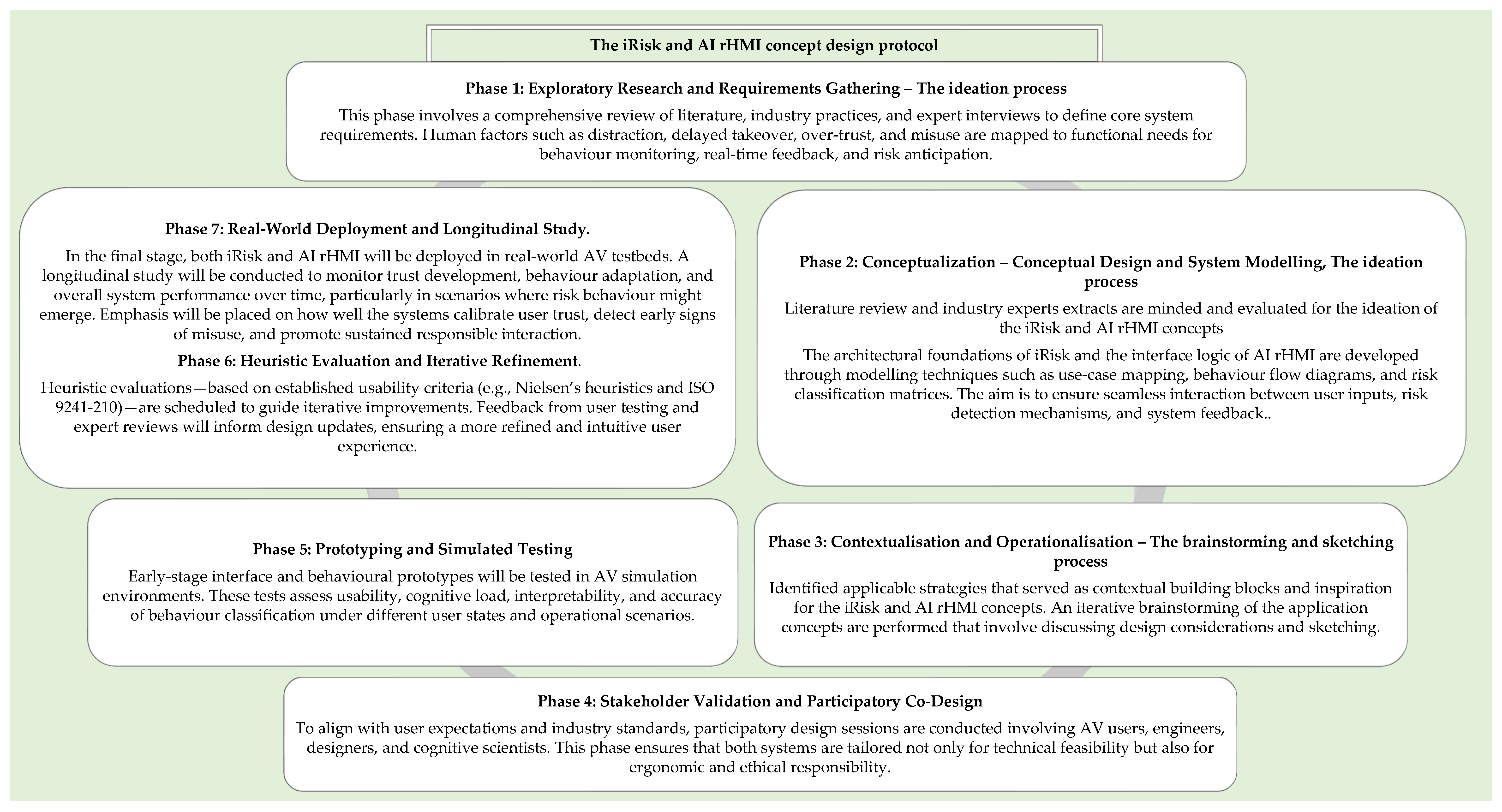

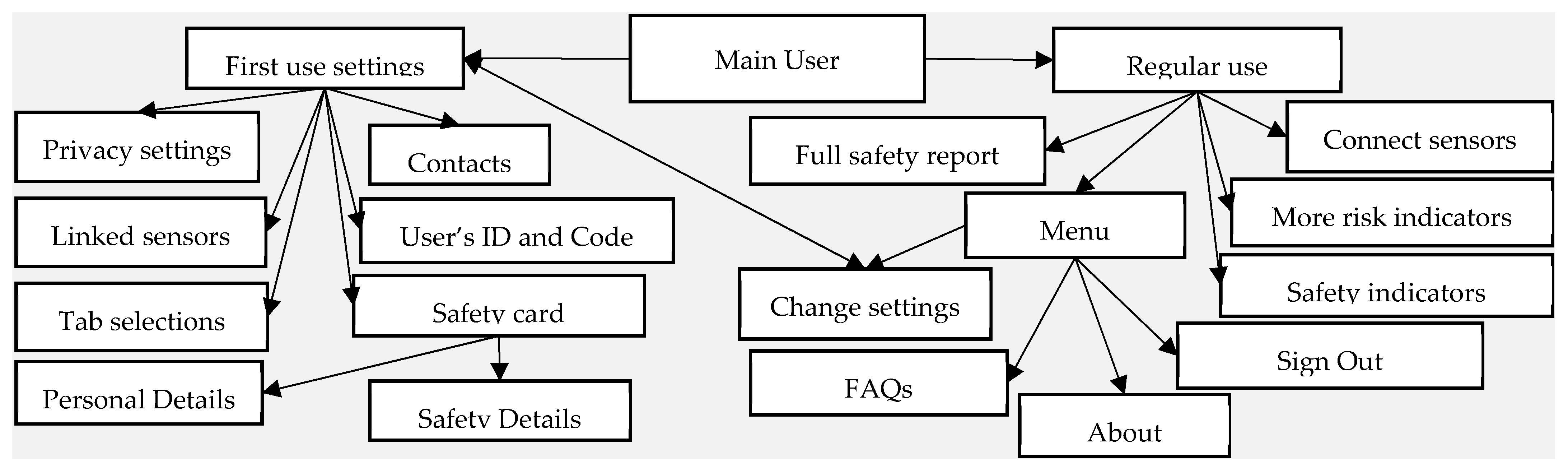

3.1. Phase 1: Exploratory Research and Requirements Gathering and Conceptualization

Case Analysis: INEX AI rHMI

3.2. Phase 2–4: Conceptual Design and System Modelling, and Contextualisation

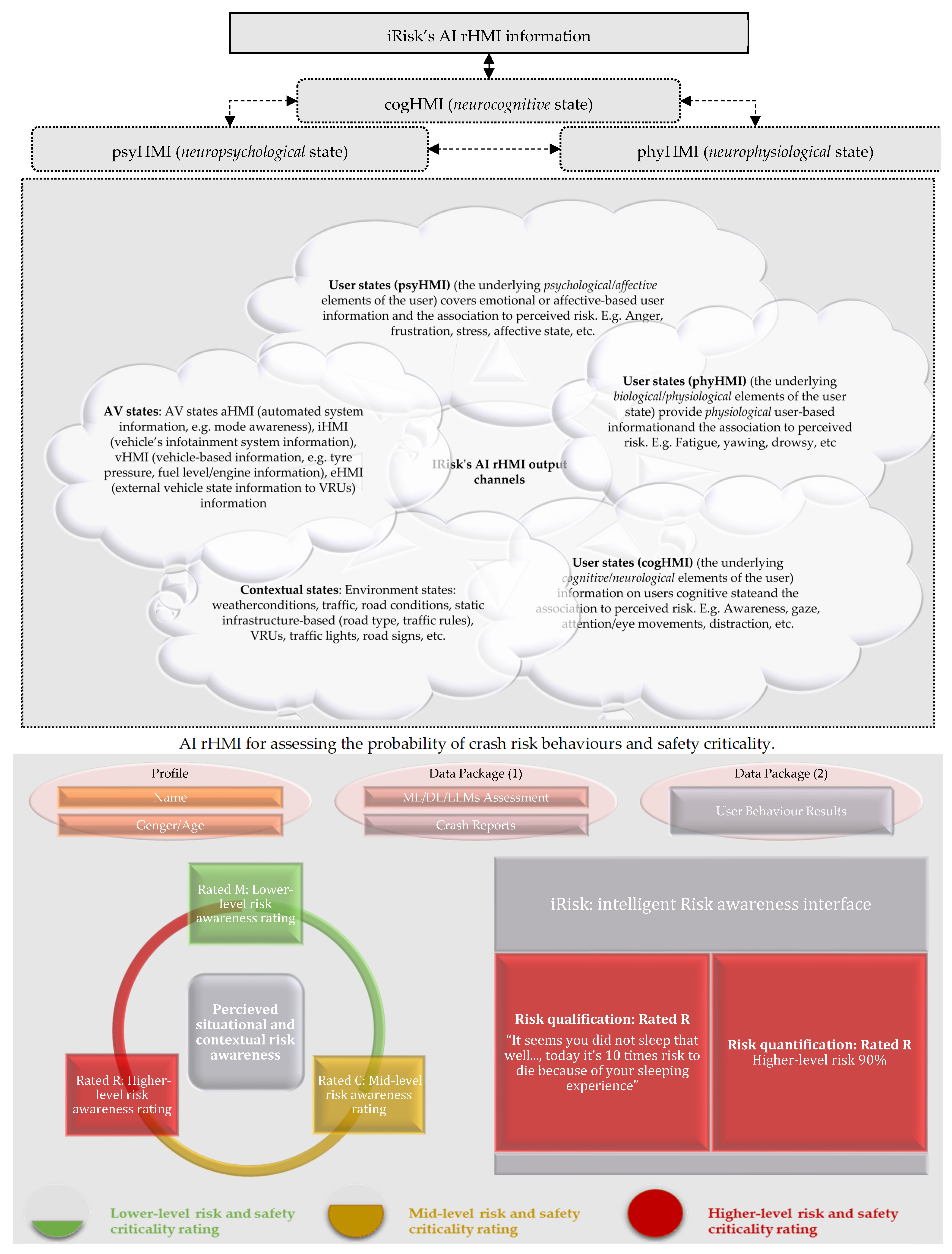

3.2.1. Conceptual Design and Sketching: The iRisk Brain Networks and AI rHMI Architecture

- DL/ML for real-time sensor data analysis, behavioural classification, and risk quantification. These modules handle behavioural data analysis, risk computation, and prediction.

- LLM for real-time HMI dialogue, persuasive feedback, user education, and interpretability. These modules handle user-facing communication.

- Multimodal integration to combine sensor insights with user-facing conversational interfaces. A central control logic orchestrates when and how each system communicates with the user, adapting to risk, behaviour, and context.

- More effective real-time user guidance.

- Stronger behavioural influence through persuasive HMI.

- Improved understanding of system outputs, making safety and performance gains more transparent and actionable for users.

- On the one hand, the value network estimates the risk value (quantified and qualified) from the user's current behavioural state during automated driving, for example the probability of crash risk given a drowsy state. Its output is the probability of encountering a crash risk during automated driving in a specific context. It focuses on the long run, analysing the whole situation to reduce possible crash risk encounters.

- On the other hand, the policy networks provide crash risk awareness guidance on the action that iRisk must choose, given the current state (for example, drowsiness) during automated driving. The output is a probability value for crash risk awareness based on the possible permissible changes in driving behaviour (the output of the network is as large as the board). It focuses on the present and decides the next steps.
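The division of labour between the two networks can be illustrated with a minimal sketch. It is not the authors' implementation: the feature vector, the weights, and the list of guidance actions are all hypothetical placeholders, and a real system would use trained deep networks rather than the single linear layers shown here. The sketch only shows the shape of the two outputs: one scalar risk probability from the value head, and one probability distribution over candidate guidance actions from the policy head.

```python
import math

# Hypothetical guidance actions; the source does not enumerate them.
ACTIONS = ["no_action", "gentle_reminder", "request_attention", "suggest_break"]


def sigmoid(x):
    """Squash a real-valued score into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(scores):
    """Turn a list of scores into a probability distribution."""
    highest = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def value_head(features, weights, bias=0.0):
    """Estimate a crash-risk probability for the current user state."""
    score = sum(f * w for f, w in zip(features, weights)) + bias
    return sigmoid(score)


def policy_head(features, weight_rows, biases):
    """Return one probability per candidate guidance action."""
    scores = [
        sum(f * w for f, w in zip(features, row)) + b
        for row, b in zip(weight_rows, biases)
    ]
    return softmax(scores)


# Made-up user-state features, e.g. drowsiness, distraction, workload.
features = [0.8, 0.3, 0.1]

# Illustrative, untrained weights (assumptions, not values from the paper).
value_weights = [2.0, 1.0, 0.5]
policy_weights = [
    [-1.0, -1.0, -1.0],  # no_action
    [0.5, 1.0, 0.2],     # gentle_reminder
    [1.0, 1.5, 0.5],     # request_attention
    [2.0, 0.2, 0.8],     # suggest_break
]

risk = value_head(features, value_weights, bias=-1.5)
action_probs = policy_head(features, policy_weights, [0.0] * len(ACTIONS))
best_action = ACTIONS[action_probs.index(max(action_probs))]
```

Here `risk` plays the role of the long-run estimate, while `action_probs` and `best_action` correspond to the present-focused choice of the next step.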

- Driver Performance: By integrating AI-based quantitative electroencephalography (qEEG) and heart rate variability (HRV) scans, we can monitor the cognitive performance and stress levels of AV users. These scans can provide real-time insights into user attention, workload, and stress levels, allowing the AV to adapt and provide personalized assistance to optimize user performance and safety.

- Health and Wellbeing Monitoring: Mild cognitive impairment (MCI) biomarker scans can be used to detect early signs of cognitive impairment, impaired executive functions (EFs), or ailments such as Alzheimer's disease, dementia, or heart attacks in users. By analysing biomarkers associated with cognitive decline, we can provide interventions, support, or alerts to ensure safety.

- Personalised Driving Experience: AI can analyse qEEG, HRV, and MCI biomarker data to create individual user profiles. These profiles can be used to tailor the UX by adjusting AV settings, such as seat positioning, temperature, lighting, and entertainment options, to optimize comfort, reduce stress, and enhance overall satisfaction.

- User Support and Safety: AI can process qEEG, HRV, and MCI biomarker data to detect fatigue, distraction, or cognitive decline that may impact safety. The AV can then provide adaptive assistance, alerts, or interventions to mitigate risks and enhance safety.

- Research and Development: AI-based analysis of qEEG, HRV, and MCI biomarker data can provide insights into user behaviour, performance, and habits. This information can inform efforts to improve AV design and to develop advanced systems and safety features.
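As a concrete illustration of one of the signals listed above, the sketch below computes RMSSD (root mean square of successive differences), a widely used time-domain HRV measure, from a series of RR intervals. The source does not state which HRV metric iRisk uses, so the choice of RMSSD and the sample values are assumptions for illustration only. How such a value would map onto fatigue or stress is not specified in the source and would need empirical calibration.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each interval and the one that follows it.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Made-up RR intervals in milliseconds (not data from the paper).
sample_rr = [800, 810, 790, 805, 795]
sample_rmssd = rmssd(sample_rr)
```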

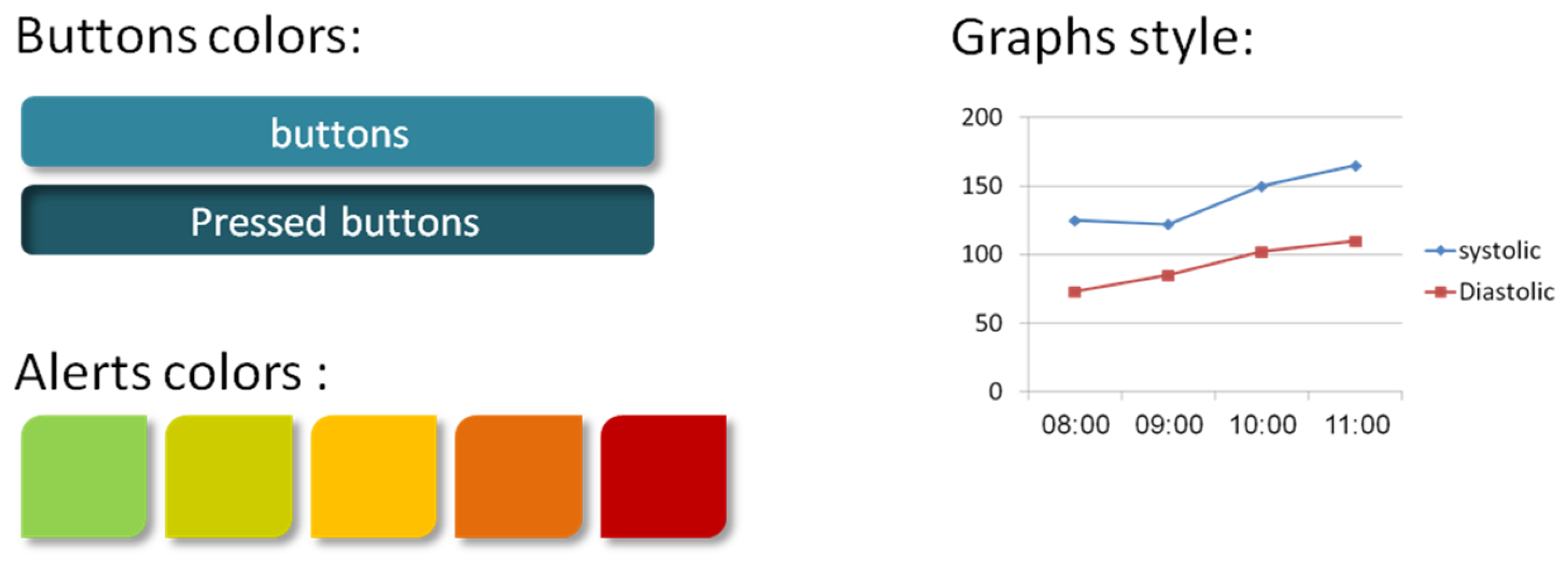

- Lower-level crash risk (Green) rating marked as Rated-M, with the capitalised M (for manageable). Perceived crash risk is manageable based on user state and behaviour during automated driving. The driving conditions are normal with low risk.

- Mid-level crash risk (Amber) rating marked as Rated-C, with the capitalised C (for caution). Perceived crash risk is controllable, but user/driver caution is advised based on user state and behaviour during automated driving. Attention: a moderate risk of collision is detected. Exercise caution and remain attentive.

- Higher-level crash risk (Red) rating marked as Rated-R, with the capitalised R (for restricted behaviour). The user/driver should restrict risk-taking behaviour, as safety is at critical risk due to user state and behaviour during automated driving. Warning: a high risk of collision is identified.
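The three-tier rating above can be sketched as a mapping from an estimated crash-risk probability to a rating. The rating names and colours come from the text; the numeric cut-offs (`CAUTION_THRESHOLD`, `RESTRICTED_THRESHOLD`) are hypothetical, since the source does not state where the boundaries between tiers lie.

```python
from enum import Enum


class Rating(Enum):
    """Crash-risk ratings as (label, colour, meaning)."""
    M = ("Rated-M", "Green", "manageable")
    C = ("Rated-C", "Amber", "caution")
    R = ("Rated-R", "Red", "restricted behaviour")


# Hypothetical cut-offs; the source gives no numeric thresholds.
CAUTION_THRESHOLD = 0.3
RESTRICTED_THRESHOLD = 0.7


def rate(risk_probability):
    """Map a crash-risk probability in [0, 1] to one of the three ratings."""
    if not 0.0 <= risk_probability <= 1.0:
        raise ValueError("risk_probability must lie in [0, 1]")
    if risk_probability >= RESTRICTED_THRESHOLD:
        return Rating.R
    if risk_probability >= CAUTION_THRESHOLD:
        return Rating.C
    return Rating.M
```

For example, `rate(0.5)` returns `Rating.C`, whose `value` tuple carries the label, colour, and meaning that a display could show to the user.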

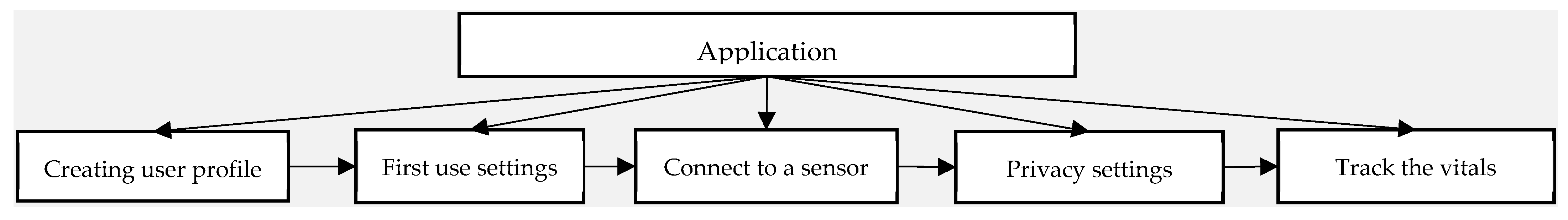

Custom Settings and Data Privacy Overview

- Manual Control

  - The user monitors the situation, generates options, makes decisions, and physically executes actions.

  - The system provides no decision-making assistance.

- Shared Control

  - Both the user and system generate potential options.

  - The user selects which option to implement, while execution is shared between the system and the user.

  - The system may suggest decision/action alternatives, executing them only with user approval.

  - This mode enables equal-autonomy collaboration.

- Automated Decision-Making

  - The system generates, selects, and executes an option, with user input influencing the alternatives.

  - In risk-sensitive situations, the system operates based on the current goal and execution context.

  - A veto window is provided, e.g., the user has 30 s to cancel or override a decision.

  - If no user response is received within the time limit, the system proceeds with full automation.
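The veto window in the automated decision-making mode can be sketched as a timed wait on a queue of user responses. The 30 s default comes from the example in the text; the function name `resolve_decision`, the response strings (`"cancel"`, `"override:<action>"`), and the use of a queue as the input channel are assumptions made for illustration.

```python
import queue

VETO_WINDOW_S = 30.0  # example value given in the text


def resolve_decision(proposed_action, user_responses, window_s=VETO_WINDOW_S):
    """Wait up to window_s seconds for a user response.

    Returns the action to execute, or None if the user cancelled.
    """
    try:
        response = user_responses.get(timeout=window_s)
    except queue.Empty:
        # No response within the window: proceed with full automation.
        return proposed_action
    if response == "cancel":
        return None
    if response.startswith("override:"):
        # The user substitutes their own action for the proposed one.
        return response.split(":", 1)[1]
    # Any other response is treated as not vetoing the proposal.
    return proposed_action
```

A short window makes the behaviour easy to try out: with an empty `queue.Queue()` and `window_s=0.05`, the call returns the proposed action after the timeout, whereas a queued `"cancel"` returns `None` immediately.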

3.3. Phase 5–7: Application—Prototyping and Simulated Testing, the Heuristic Evaluation and Implementation Protocol, Real-World Deployment and Longitudinal Study

4. Limitations

- LLMs do not natively process real-time sensor data; this remains the strength of DL.

- Their output can be non-deterministic and occasionally inaccurate if not grounded in real data.

- They require careful safety and alignment tuning to avoid misleading the user or offering inappropriate advice.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADS | Automated Driving System |
| AV | Automated Vehicle |
| INEX | INternal-EXternal |
| AI rHMI | Artificial Intelligence-powered risk-aware Human–Machine Interface |
| iRisk | intelligent Risk assessment system |
| LLM | Large Language Model |
| ML | Machine Learning |
| DL | Deep Learning |
| D-FMEA | Design Failure Mode and Effects Analysis |
| P-FMEA | Process Failure Mode and Effects Analysis |

References

1. SAE International. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles (J3016_202104); SAE International: Warrendale, PA, USA, 2021.

2. Krause, A.; Singh, A.; Guestrin, C. Near-optimal Sensor Placements in Gaussian Processes: Theory, Efficient Algorithms and Empirical Studies. J. Mach. Learn. Res. 2008, 9, 235–284.

3. Yang, Q. The Role of Design in Creating Machine-Learning-Enhanced User Experience. In Association for the Advancement of Artificial Intelligence (AAAI) Spring Symposia; Technical Report SS-17-04; 2017; Available online: https://cdn.aaai.org/ocs/15363/15363-68257-1-PB.pdf (accessed on 10 June 2025).

4. Karakaya, B.; Bengler, K. Investigation of Driver Behavior during Minimal Risk Maneuvers of Automated Vehicles. In Proceedings of the 21st Congress of the International Ergonomics Association (IEA 2021); Lecture Notes in Networks and Systems, 221, Online, 13–18 June 2021; Springer: Cham, Switzerland, 2021.

5. Li, M.; Holthausen, B.E.; Stuck, R.E.; Walker, B.N. No risk no trust: Investigating perceived risk in highly automated driving. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019; pp. 177–185.

6. Stapel, X.H.I.; Gentner, A.; Happee, R. Perceived safety and trust in SAE Level 2 partially automated cars: Results from an online questionnaire. PLoS ONE 2021, 16, e0260953.

7. Kyriakidis, M.; de Winter, J.C.F.; Stanton, N.; Bellet, T.; van Arem, B.; Brookhuis, K.; Martens, M.H.; Bengler, K.; Andersson, J.; Merat, N.; et al. A human factors perspective on automated driving. Theor. Issues Ergon. Sci. 2019, 20, 223–249.

8. Carroll, J.M.; Carrithers, C. Training wheels in a user interface. Commun. ACM 1984, 27, 800–806.

9. Roshandel, S.; Zheng, Z.; Washington, S. Impact of real-time traffic characteristics on freeway crash occurrence: Systematic review and meta-analysis. Accid. Anal. Prev. 2015, 79, 198–211.

10. Savolainen, P.T.; Mannering, F.L.; Lord, D.; Quddus, M.A. The statistical analysis of highway crash-injury severities: A review and assessment of methodological alternatives. Accid. Anal. Prev. 2011, 43, 1666–1676.

11. Mannering, F. Temporal instability and the analysis of highway accident data. Anal. Methods Accid. Res. 2018, 17, 1–13.

12. Xiao, D.; Zhang, B.; Chen, Z.; Xu, X.; Du, B. Connecting tradition with modernity: Safety literature review. Digit. Transp. Saf. 2023, 2, 1–11.

13. Mbelekani, N.Y.; Bengler, K. Risk and safety-based behavioural adaptation towards automated vehicles: Emerging advances, effects, challenges and techniques. In International Congress on Information and Communication Technology; Springer Nature: Singapore, 2024; pp. 459–482.

14. Chu, Y.; Liu, P. Human Factor Risks in Driving Automation Crashes. In HCI in Mobility, Transport, and Automotive Systems; Krömker, H., Ed.; HCII 2023. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 14048, pp. 3–12.

15. Simonet, S.; Wilde, G.J.S. Risk: Perception, acceptance and homeostasis. Appl. Psychol. Int. Rev. 1997, 46, 235–252.

16. National Transportation Safety Board. Collision Between a Car Operating with Automated Vehicle Control Systems and a Tractor-Semitrailer Truck near Williston, Florida, 7 May 2016; Highway Accident Report NTSB/HAR-17/02; NTSB: Washington, DC, USA, 2017.

17. National Transportation Safety Board. Rear-End Collision Between a Car Operating with Advanced Driver Assistance Systems and a Stationary Fire Truck, Culver City, California, 22 January 2018; Highway Accident Report NTSB/HAB-19/07; NTSB: Washington, DC, USA, 2019.

18. National Transportation Safety Board. Collision Between a Sport Utility Vehicle Operating with Partial Driving Automation and a Crash Attenuator, Mountain View, California, 23 March 2018; Highway Accident Report NTSB/HAR-20/01; NTSB: Washington, DC, USA, 2020.

19. National Transportation Safety Board. Collision Between Car Operating with Partial Driving Automation and Truck-Tractor Semitrailer Delray Beach, Florida, 1 March 2019; Highway Accident Report NTSB/HAR-20/01; NTSB: Washington, DC, USA, 2020.

20. National Transportation Safety Board. Collision Between Vehicle Controlled by Developmental Automated Driving System and Pedestrian, Tempe, Arizona, 18 March 2018; Highway Accident Report NTSB/HAR-19/03; NTSB: Washington, DC, USA, 2019.

21. National Transportation Safety Board. Low-Speed Collision Between Truck-Tractor and Autonomous Shuttle, Las Vegas, Nevada, 8 November 2017; Highway Accident Report NTSB/HAR-19/06; NTSB: Washington, DC, USA, 2019.

22. Rudin-Brown, C.M.; Parker, H.A. Behavioural adaptation to adaptive cruise control (ACC): Implications for preventive strategies. Transp. Res. Part F Traffic Psychol. Behav. 2004, 7, 59–76.

23. Metz, B.; Wörle, J.; Hanig, M.; Schmitt, M.; Lutz, A.; Neukum, A. Repeated usage of a motorway automated driving function: Automation level and behavioural adaption. Transp. Res. Part F Traffic Psychol. Behav. 2021, 81, 82–100.

24. Beggiato, M.; Krems, J.F. The evolution of mental model, trust and acceptance of adaptive cruise control in relation to initial information. Transp. Res. Part F Traffic Psychol. Behav. 2013, 18, 47–57.

25. Homans, H.; Radlmayr, J.; Bengler, K. Levels of Driving Automation from a User’s Perspective: How Are the Levels Represented in the User’s Mental Model. In International Conference on Human Interaction and Emerging Technologies; Springer: Cham, Switzerland, 2019; pp. 21–27.

26. Wozney, L.; McGrath, P.J.; Newton, A.; Huguet, A.; Franklin, M.; Perri, K.; Leuschen, K.; Toombs, E.; Lingley-Pottie, P. Usability, learnability and performance evaluation of Intelligent Research and Intervention Software: A delivery platform for eHealth interventions. Health Inform. J. 2016, 22, 730–743.

27. Ojeda, L.; Nathan, F. Studying learning phases of an ACC through verbal reports. Driv. Support Inf. Syst. Exp. Learn. Appropr. Eff. Adapt. 2006, 1, 47–73.

28. Heimgärtner, R. Human Factors of ISO 9241-110 in the Intercultural Context. Adv. Ergon. Des. Usability Spec. Popul. 2014, 3, 18.

29. Körber, M.; Baseler, E.; Bengler, K. Introduction matters: Manipulating trust in automation and reliance in automated driving. Appl. Ergon. 2018, 66, 18–31.

30. Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors J. Hum. Factors Ergon. Soc. 2004, 46, 50–80.

31. Hecht, T.; Darlagiannis, E.; Bengler, K. Non-driving related activities in automated driving–an online survey investigating user needs. In Proceedings of the Human Systems Engineering and Design II: Proceedings of the 2nd International Conference on Human Systems Engineering and Design (IHSED2019): Future Trends and Applications, Universität der Bundeswehr München, Munich, Germany, 16–18 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 182–188.

32. Karakaya, B.; Bengler, K. Minimal risk maneuvers of automated vehicles: Effects of a contact analog head-up display supporting driver decisions and actions in transition phases. Safety 2023, 9, 7.

33. He, X.; Stapel, J.; Wang, M.; Happee, R. Modelling perceived risk and trust in driving automation reacting to merging and braking vehicles. Traffic Psychol. Behav. 2022, 86, 178–195.

34. Stapel, J.; Gentner, A.; Happee, R. On-road trust and perceived risk in Level 2 automation. Transp. Res. Part F Traffic Psychol. Behav. 2022, 89, 355–370.

35. Karakaya, B.; Kalb, L.; Bengler, K. A video survey on minimal risk maneuvers and conditions. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Online, 5–9 October 2020; SAGE Publications: Los Angeles, CA, USA, 2020; Volume 64, pp. 1708–1712.

36. Nordhoff, S.; Kyriakidis, M.; van Arem, B.; Happee, R. A multi-level model on automated vehicle acceptance (MAVA): A review-based study. Theor. Issues Ergon. Sci. 2019, 20, 682–710.

37. Zhang, T.; Tao, D.; Qu, X.; Zhang, X.; Lin, R.; Zhang, W. The Roles of Initial Trust and Perceived risk in Public’s Acceptance of Automated Vehicles. Transp. Res. Part C Emerg. Technol. 2019, 98, 207–220.

38. Every, J.L.; Barickman, F.; Martin, J.; Rao, S.; Schnelle, S.; Weng, B. A novel method to evaluate the safety of highly automated vehicles. In International Technical Conference on the Enhanced Safety of Vehicles; NHTSA: Detroit, MI, USA, 2017.

39. Mbelekani, N.Y.; Bengler, K. Learning Design Strategies for Optimizing User Behaviour Towards Automation: Architecting Quality Interactions from Concept to Prototype. In HCI in Mobility, Transport, and Automotive Systems; Krömker, H., Ed.; HCII 2023. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2023; Volume 14048.

40. Mbelekani, N.Y.; Bengler, K. Learnability in Automated Driving (LiAD): Concepts for applying learnability engineering (CALE) based on long-term learning effects. Information 2023, 14, 519.

41. Mbelekani, N.Y.; Bengler, K. Interdisciplinary Industrial Design Strategies for Human-Automation Interaction: Industry Experts’ Perspectives. Interdiscip. Pract. Ind. Des. 2022, 48, 132–141.

42. Hock, P.; Kraus, J.; Walch, M.; Lang, N.; Baumann, M. Elaborating Feedback Strategies for Maintaining Automation in Highly Automated Driving. In Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’16), Ann Arbor, MI, USA, 24–26 October 2016.

43. Thomaz, A.L.; Breazeal, C. Teachable robots: Understanding human teaching behavior to build more effective robot learners. Artif. Intell. 2008, 172, 716–737.

44. Baum, E.B.; Lang, K. Query Learning can work Poorly when a Human Oracle is Used. Neural Netw. 1992, 8, 8.

45. Amershi, S.; Fogarty, J.; Kapoor, A.; Tan, D. Overview Based Example Selection in End User Interactive Concept Learning. In Proceedings of the 22nd Annual ACM Symposium on User Interface Software and Technology (UIST ’09), Bend, OR, USA, 29 October–2 November 2022; pp. 247–256.

46. Gurevich, N.; Markovitch, S.; Rivlin, E. Active Learning with Near Misses. American Association for Artificial Intelligence (AAAI) 2006, 362–367. Available online: https://cdn.aaai.org/AAAI/2006/AAAI06-058.pdf (accessed on 10 June 2025).

47. Amershi, S.; Cakmak, M.; Knox, W.B.; Kulesza, T. Power to the people: The role of humans in interactive machine learning. AI Mag. 2014, 35, 105–120.

48. Tong, S.; Chang, E. Support Vector Machine Active Learning for Image Retrieval. In Proceedings of the Ninth ACM International Conference on Multimedia (MULTIMEDIA 2001), Ottawa, ON, Canada, 30 September–5 October 2001; pp. 107–118.

49. Fails, J.A.; Olsen, D.R., Jr. Interactive Machine Learning. In Proceedings of the 8th International Conference on Intelligent User Interfaces (IUI ’03), Miami, FL, USA, 12–15 January 2003; pp. 39–45.

50. Dey, A.K.; Hamid, R.; Beckmann, C.; Li, I.; Hsu, D. A CAPpella: Programming by Demonstration of Context-Aware Applications. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’04), Vienna, Austria, 24–29 April 2004; pp. 33–40.

51. Steinder, M.; Sethi, A.S. A Survey of Fault Localization Techniques in Computer Networks. Sci. Comput. Program. 2004, 53, 165–194.

52. Fogarty, J.; Tan, D.; Kapoor, A.; Winder, S. CueFlik: Interactive Concept Learning in Image Search. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’08), Florence, Italy, 5–10 April 2008; pp. 29–38.

53. Jain, P.; Kulis, B.; Dhillon, I.S.; Grauman, K. Online Metric Learning and Fast Similarity Search. NIPS 2008, 761–768. Available online: https://proceedings.neurips.cc/paper_files/paper/2008/file/aa68c75c4a77c87f97fb686b2f068676-Paper.pdf (accessed on 10 June 2025).

54. Ritter, A.; Basu, S. Learning to Generalize for Complex Selection Tasks. In Proceedings of the 14th International Conference on Intelligent User Interfaces (IUI ’09), Sanibel Island, FL, USA, 8–11 February 2009; pp. 167–176.

55. Amershi, S.; Fogarty, J.; Kapoor, A.; Tan, D. Examining Multiple Potential Models in End-User Interactive Concept Learning. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’10), Atlanta, GA, USA, 10–15 April 2010; pp. 1357–1360.

56. Amershi, S.; Lee, B.; Kapoor, A.; Mahajan, R.; Christian, B. CueT: Human-Guided Fast and Accurate Network Alarm Triage. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’11), Vancouver, BC, Canada, 7–12 May 2011; pp. 157–166.

57. Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

58. Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of Go without human knowledge. Nature 2017, 550, 354–359.

59. Murugan, S.; Sampathkumar, A.; Raja, S.K.S.; Ramesh, S.; Manikandan, R.; Gupta, D. Autonomous Vehicle Assisted by Heads up Display (HUD) with Augmented Reality Based on Machine Learning Techniques. In Virtual and Augmented Reality for Automobile Industry: Innovation Vision and Applications; Springer: Cham, Switzerland, 2022; pp. 45–64.

60. Goldberg, K. What is automation? IEEE Trans. Autom. Sci. Eng. 2011, 9, 1–2.

61. Mbelekani, N.Y.; Bengler, K. Traffic and Transport Ergonomics on Long Term Multi-Agent Social Interactions: A Road User’s Tale; HCII 2022, LNCS 13520; Rauterberg, M., Fui-Hoon Nah, F., Siau, K., Krömker, H., Wei, J., Salvendy, G., Eds.; Springer: Cham, Switzerland, 2022; pp. 499–518.

62. Tran, T.T.M.; Parker, C.; Wang, Y.; Tomitsch, M. Designing wearable augmented reality concepts to support scalability in autonomous vehicle–pedestrian interaction. Front. Comput. Sci. 2022, 4, 39.

63. Bengler, K.; Rettenmaier, M.; Fritz, N.; Feierle, A. From HMI to HMIs: Towards an HMI Framework for Automated Driving. Information 2020, 11, 61.

64. Mirnig, A.G.; Marcano, M.; Trösterer, S.; Sarabia, J.; Diaz, S.; Dönmez Özkan, Y.; Sypniewski, J.; Madigan, R. Workshop on Exploring Interfaces for Enhanced Automation Assistance for Improving Manual Driving Abilities. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’21 Adjunct), Leeds, UK, 13–14 September 2021; pp. 178–181.

65. Haeuslschmid, R.; Buelow, M.; Pfleging, B.; Butz, A. Supporting Trust in Autonomous Driving. In IUI; ACM: Limassol, Cyprus, 2017.

66. Forster, Y.; Hergeth, S.; Naujoks, F.; Krems, J.; Keinath, A. User Education in Automated Driving: Owner’s Manual and Interactive Tutorial Support Mental Model Formation and Human-Automation Interaction. Information 2019, 10, 143.

67. Watson, D.; Clark, L.A. The PANAS-X: Manual for the Positive and Negative Affect Schedule–Expanded Form; Unpublished manuscript; University of Iowa: Iowa City, IA, USA, 1994.

68. Watson, D.; Vaidya, J. Mood measurement: Current status and future directions. Handb. Psychol. Res. Methods Psychol. 2003, 2, 351–375.

69. Balters, S.; Steinert, M. Capturing emotion reactivity through physiology measurement as a foundation for affective engineering in engineering design science and engineering practices. J. Intell. Manuf. 2017, 28, 1585–1607.

70. Watson, D.; Tellegen, A. Toward a consensual structure of mood. Psychol. Bull. 1985, 98, 219.

71. Fredrickson, B.L.; Tugade, M.M.; Waugh, C.E.; Larkin, G.R. What good are positive emotions in crisis? A prospective study of resilience and emotions following the terrorist attacks on the United States on September 11th, 2001. J. Personal. Soc. Psychol. 2003, 84, 365–376.

72. Ekman, P.; Friesen, W.V. Facial Action Coding System (FACS) [Database record]. Environmental Psychology & Nonverbal Behavior. APA PsycTests 1978.

73. Gottman, J.M.; Krokoff, L.J. Marital interaction and satisfaction: A longitudinal view. J. Consult. Clin. Psychol. 1989, 57, 47.

74. Bachorowski, J.-A.; Owren, M.J. Vocal expression of emotion: Acoustic properties of speech are associated with emotional intensity and context. Psychol. Sci. 1995, 6, 219–224.

75. Russell, J.A.; Bachorowski, J.-A.; Fernández-Dols, J.-M. Facial and vocal expressions of emotion. Annu. Rev. Psychol. 2003, 54, 329–349.

76. Coulson, M. Attributing emotion to static body postures: Recognition accuracy, confusions, and viewpoint dependence. J. Nonverbal Behav. 2004, 28, 117–139.

77. Dael, N.; Mortillaro, M.; Scherer, K.R. The body action and posture coding system (BAP): Development and reliability. J. Nonverbal Behav. 2012, 36, 97–121.

78. Levenson, R.W. The autonomic nervous system and emotion. Emot. Rev. 2014, 6, 100–112.

79. Mauss, I.B.; Robinson, M.D. Measures of emotion: A review. Cogn. Emot. 2009, 23, 209–237.

80. Stilgoe, J. Machine learning, social learning and the governance of self-driving cars. Soc. Stud. Sci. 2018, 48, 25–56.

81. Carsten, O.; Martens, M.H. How can humans understand their automated cars? HMI principles, problems and solutions. Cogn. Technol. Work. 2019, 21, 3–20.

82. Strömberg, H.; Pettersson, I.; Andersson, J.; Rydström, A.; Dey, D.; Klingegård, M.; Forlizzi, J. Designing for social experiences with and within autonomous vehicles—Exploring methodological directions. Des. Sci. 2018, 4, E13.

83. Flemisch, F.; Kelsch, J.; Löper, C.; Schieben, A.; Schindler, J. Automation spectrum, inner/outer compatibility and other potentially useful human factors concepts for assistance and automation. In Human Factors for Assistance and Automation; de Waard, D., Flemisch, F.O., Lorenz, B., Oberheid, H., Brookhuis, K.A., Eds.; Shaker Publishing: Maastricht, The Netherlands, 2008; pp. 1–16.

84. Zimmerman, J.; Forlizzi, J. Research through Design in HCI. In Ways of Knowing in HCI; Olson, J., Kellogg, W., Eds.; Springer: New York, NY, USA, 2014.

| Applications of User-Interactive ML Systems | Reference |
|---|---|
| Crayons: Supports interactive training of pixel classifiers for image segmentation in camera-based applications. | [49] |
| CAPpella: Programming by demonstrations of context aware applications. This enables end-user training of ML for context detection in sensor-equipped environments. | [50] |
| CueT: Human-guided fast and accurate network alarm triage. The system uses interactive machine learning to learn from the triaging decisions of operators. It combines novel visualisations and interactive machine learning to deal with a highly dynamic environment where the groups of interest are not known a priori and evolve constantly. | [56] |
| CueFlik: Interactive concept learning in image search. | [52] |
| AlphaGo: A Go game computer program created by Google’s DeepMind Company, which became the first game program to defeat a world champion. Using deep neural networks, the tree search in AlphaGo evaluated positions and selected moves, and these neural networks were trained using (1) ‘supervised learning from human expert moves’ and (2) ‘reinforcement learning from self-play’. | [57,58] |

| Industry | Concepts |
|---|---|
| Automated flying (Aircraft Experts) | |
| Automated driving (Car Experts) | |
| Automated trucking (Experts), automated farming (Experts) | |

| Leading Safe Automated Driving | Tracking Risk Indicators | Emergency Response |
|---|---|---|
| | | |
| Personalised Interaction Design | | |
| Establish emotional connection and iRisk | The greatest value of emotional connection is the sense of immersion it creates, which helps build user trust. Simple interaction is not enough; emotional design fosters a belief in consistent positive experiences. In the short term, it enhances user response to stimuli by amplifying comfort and reducing stress, encouraging deeper engagement with the AV’s OS iRisk, AI rHMI, or in-vehicle AI social robot. iRisk plays two roles:<br><br>Over time, this adaptive and emotionally aware system could form genuine emotional bonds with users, enriching the in-car experience and promoting safer, more personalized driving. | |
| Emotional Design in Intelligent Systems, iRisk | The iRisk project explores how to meet drivers’ emotional and psychological needs in real-world scenarios. Emotions are influenced by both immediate context and deeper personal states. For example, a system saying “Don’t worry, I will support you” may comfort one user but feel intrusive to another, depending on their emotional state. Since such reactions cannot be predicted through logic alone, iRisk uses AI and big data to improve emotional accuracy, analysing user behaviour to increase the likelihood of emotionally appropriate responses. Emotional design under iRisk focuses on building context-driven interactions that enhance positive feelings and reduce stress. This is achieved through: | |
| Designing the In-vehicle AI robot persona: Inspired by expectations, grounded in reality | User expectations for AI robots are often shaped by popular media: wise companion (Knight Rider), strong protector (I, Robot), warm caregiver (Baymax), etc. These characters represent powerful emotional and functional roles. However, in the driving context, a vehicle remains primarily a tool, so the intelligent character must be designed with practicality and emotional relevance in mind. What kind of character do we want to offer in the car? Traits for the driving context: | |
| User-centred design requirements | The system must be adaptable to different user types, with a user interface that is intuitive and accessible. Design considerations include clear visual contrast for colours to ensure easy distinction of elements, smooth and understandable screen transitions to support user orientation, and simplified workflows to reduce cognitive load and operational effort. User Analysis: The design applies to different user types, including tech-savvy, tech-literate, and tech-illiterate users. It also considers early users, mid-level users, and long-term users. Users should be able to easily check whether their vital states are within normal ranges, access detailed information on each vital, and view analysis over a selected period. The goal is to deliver a low-effort, high-clarity experience that supports both quick insights and deeper understanding. | |
| Design and experience objectives | The system experience should be friendly, polite, intuitive, and easy to use. The graphical interface must clearly convey information about the monitored situation, encouraging engagement through simplicity and usability. It should also instil a sense of security, especially in emergency situations. The broader goal is to co-design a connected car service that offers a rich range of in-vehicle content, powered by technologies such as ML/DL, LLM, Big Data and Neuro-Ergonomics, Maps, AI, and Portal Services. Additionally, the aim is to advance in-vehicle voice recognition through NLP and to develop intelligent vehicle-to-everything services, enhancing the seamless integration of mobility and lifestyle. | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mbelekani, N.Y.; Bengler, K. iRisk: Towards Responsible AI-Powered Automated Driving by Assessing Crash Risk and Prevention. Electronics 2025, 14, 2433. https://doi.org/10.3390/electronics14122433