DataMatrix Code Recognition Method Based on Coarse Positioning of Images

Abstract

1. Introduction

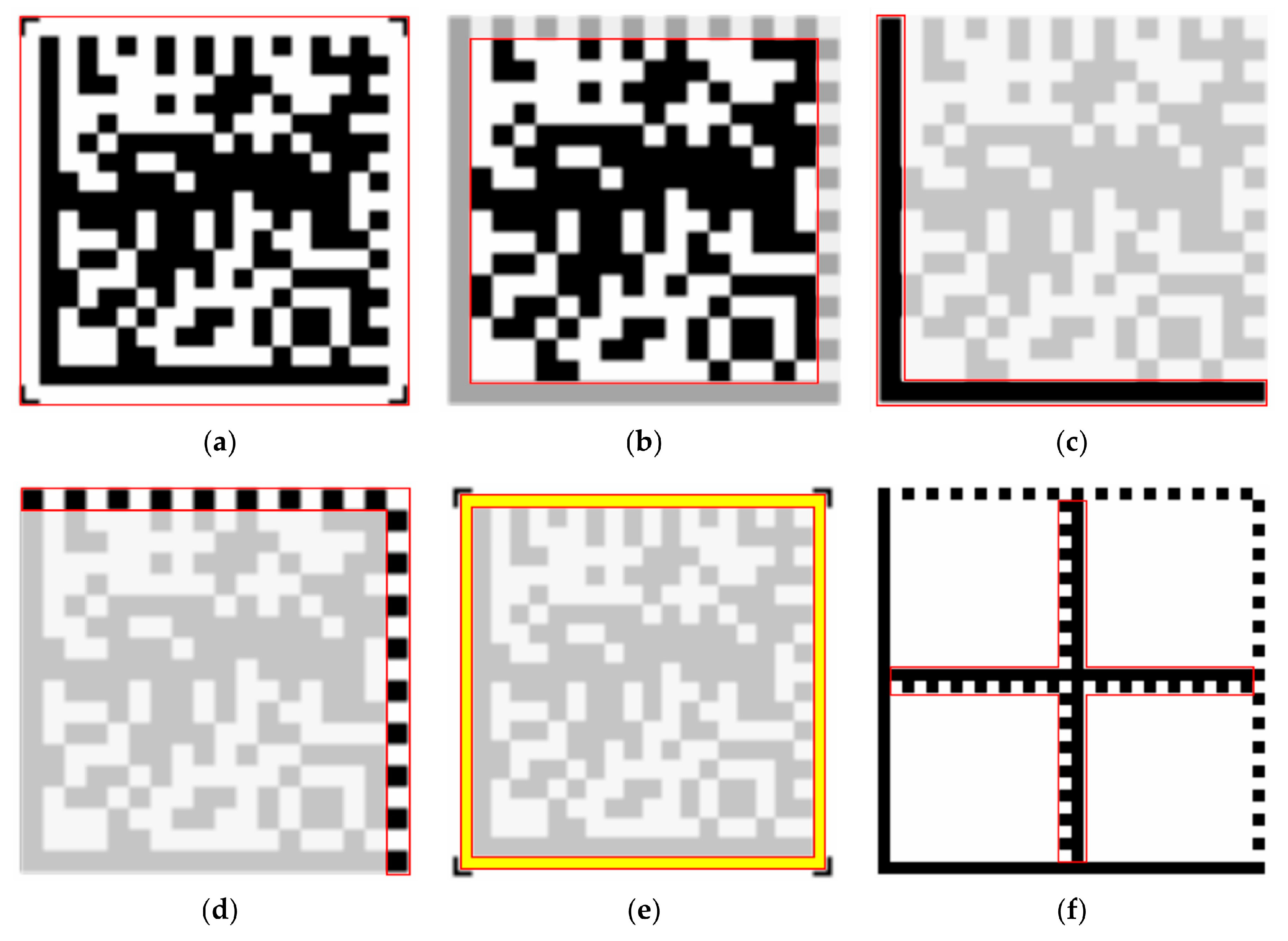

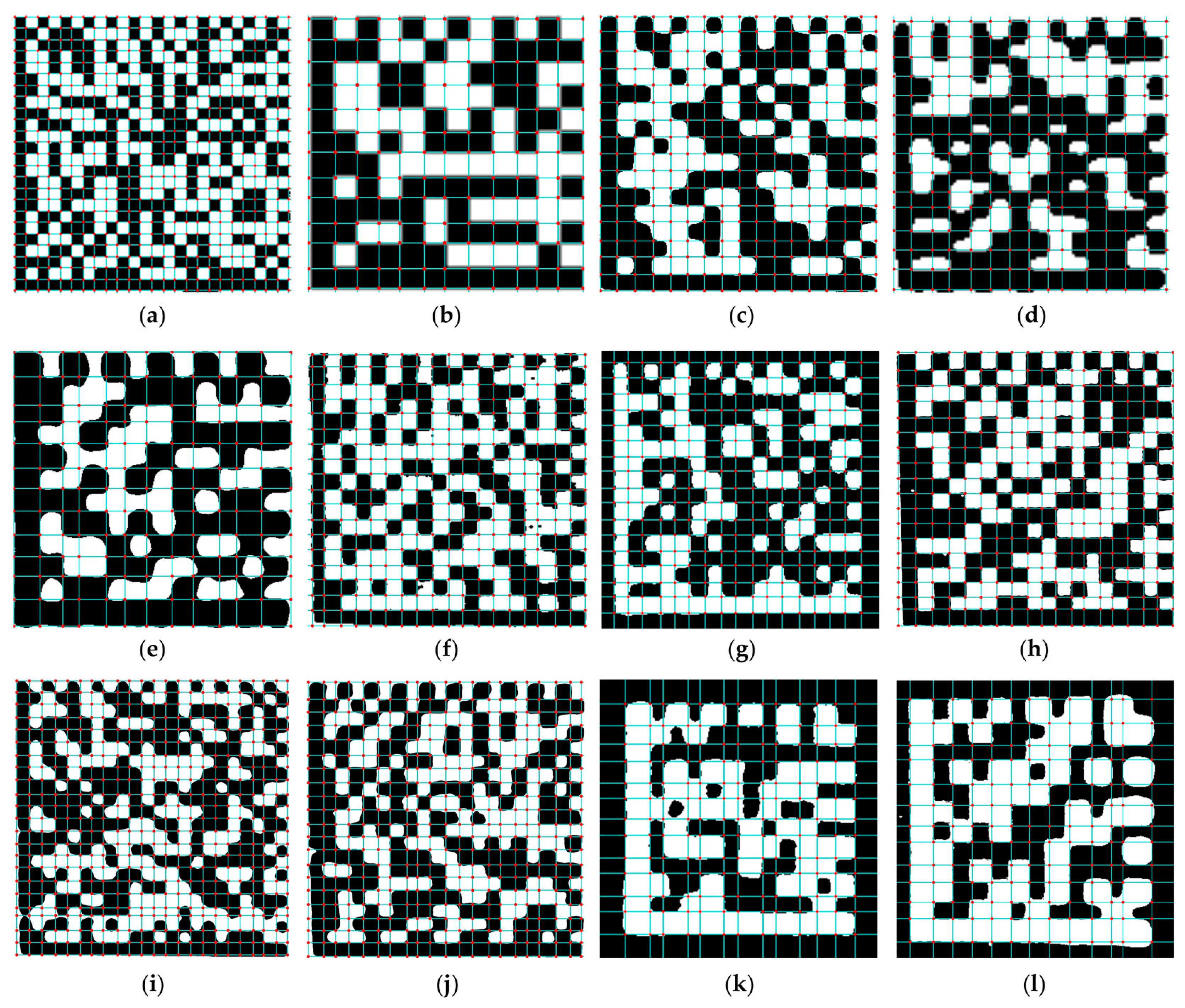

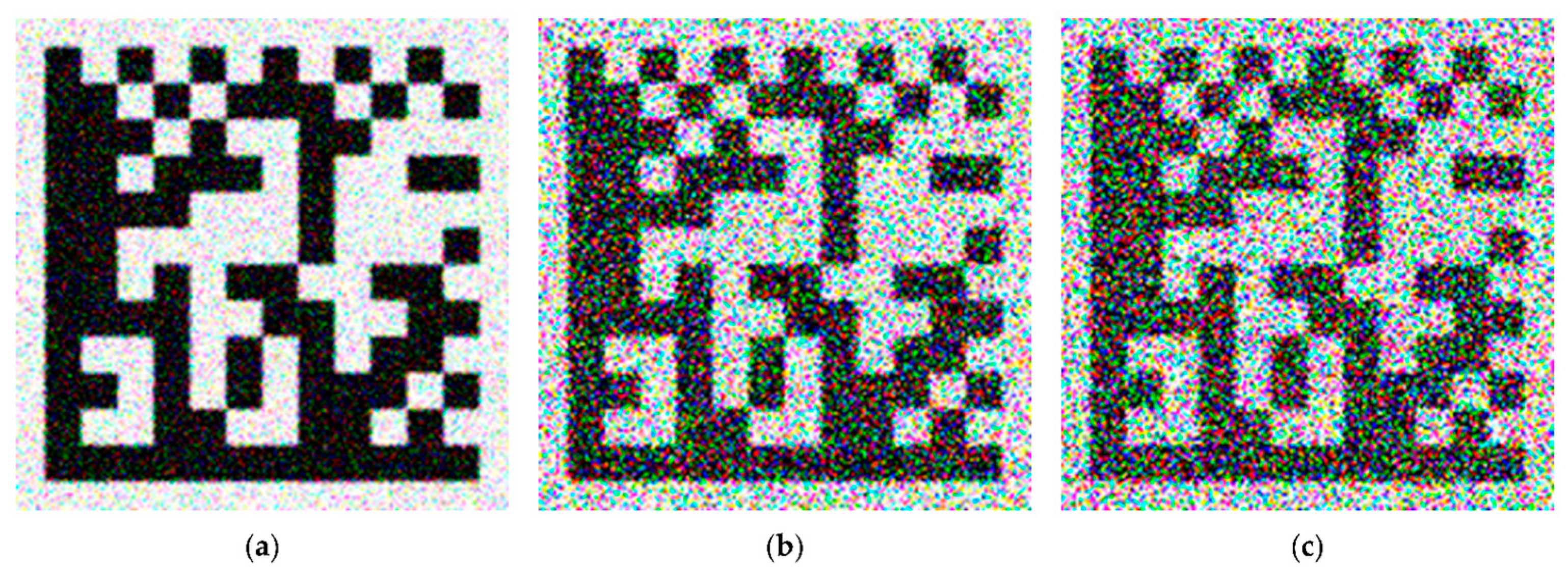

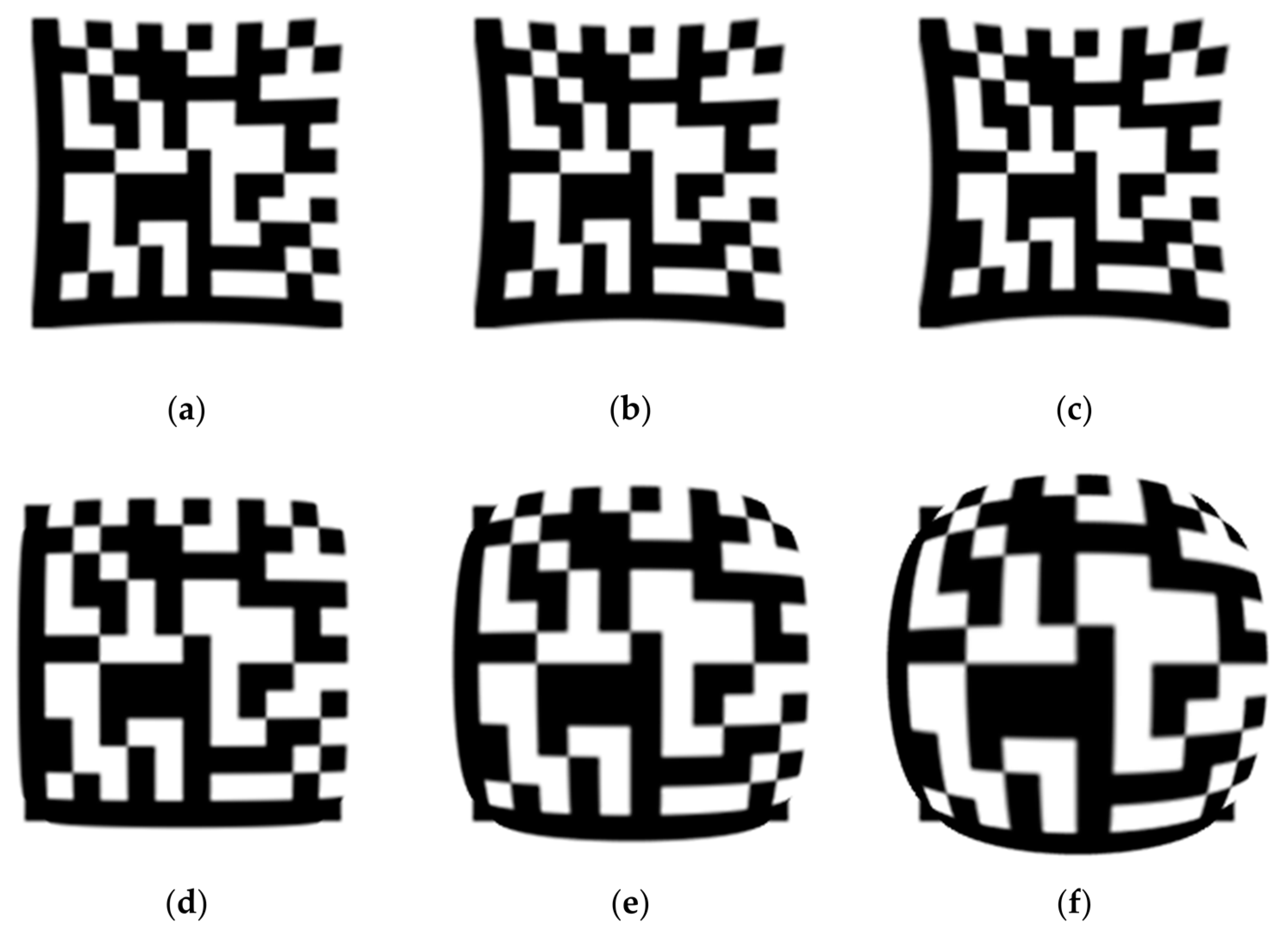

- Data region: A matrix of modules, arranged according to the placement rules of the symbology, that carries the encoded data. Dark and light modules represent binary “1” and “0”, respectively, as shown in Figure 1b.

- Finder pattern: The finder pattern surrounds the data region and is one module wide. Two adjacent sides, illustrated in Figure 1c, are solid dark lines that form the L-shaped boundary; the two opposite sides, illustrated in Figure 1d, consist of alternating dark and light modules. The finder pattern is used to locate the symbol and to define the size of its data modules, which helps determine the physical size and distortion of the barcode.

- Quiet zone: A light margin at least one module wide that surrounds the symbol and separates the two-dimensional barcode from background information, as shown in Figure 1e.

- Alignment pattern: When a symbol consists of multiple data regions, the regions are separated by alignment patterns, as depicted in Figure 1f.
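To make the finder-pattern layout concrete, the following minimal Python sketch builds only the border of an n × n symbol, with 1 for a dark module and 0 for a light one. It assumes a square symbol with an even number of modules per side (as in the square ECC 200 sizes), with the solid L along the left column and bottom row and the alternating sides along the top row and right column, starting dark at the top-left corner. The function name `finder_pattern` is hypothetical, and the interior data modules are simply left light.

```python
def finder_pattern(n: int) -> list[list[int]]:
    """Return an n x n grid (1 = dark, 0 = light) holding only the finder pattern.

    Assumes a square symbol with an even side length, so the alternating
    top row and right column meet at a light top-right corner.
    """
    if n < 2 or n % 2 != 0:
        raise ValueError("expected an even symbol size of at least 2 modules")
    grid = [[0] * n for _ in range(n)]
    for i in range(n):
        grid[i][0] = 1          # left side of the L: solid dark column
        grid[n - 1][i] = 1      # bottom side of the L: solid dark row
        grid[0][i] = 1 - i % 2  # top side: alternating, dark at the top-left corner
        grid[i][n - 1] = i % 2  # right side: alternating, light at the top-right corner
    return grid


if __name__ == "__main__":
    for row in finder_pattern(10):
        print("".join("#" if module else "." for module in row))
```

Printing `finder_pattern(10)` shows the solid L on the left and bottom edges and the alternating modules along the top and right edges; a decoder can compare an estimated border against such a template.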

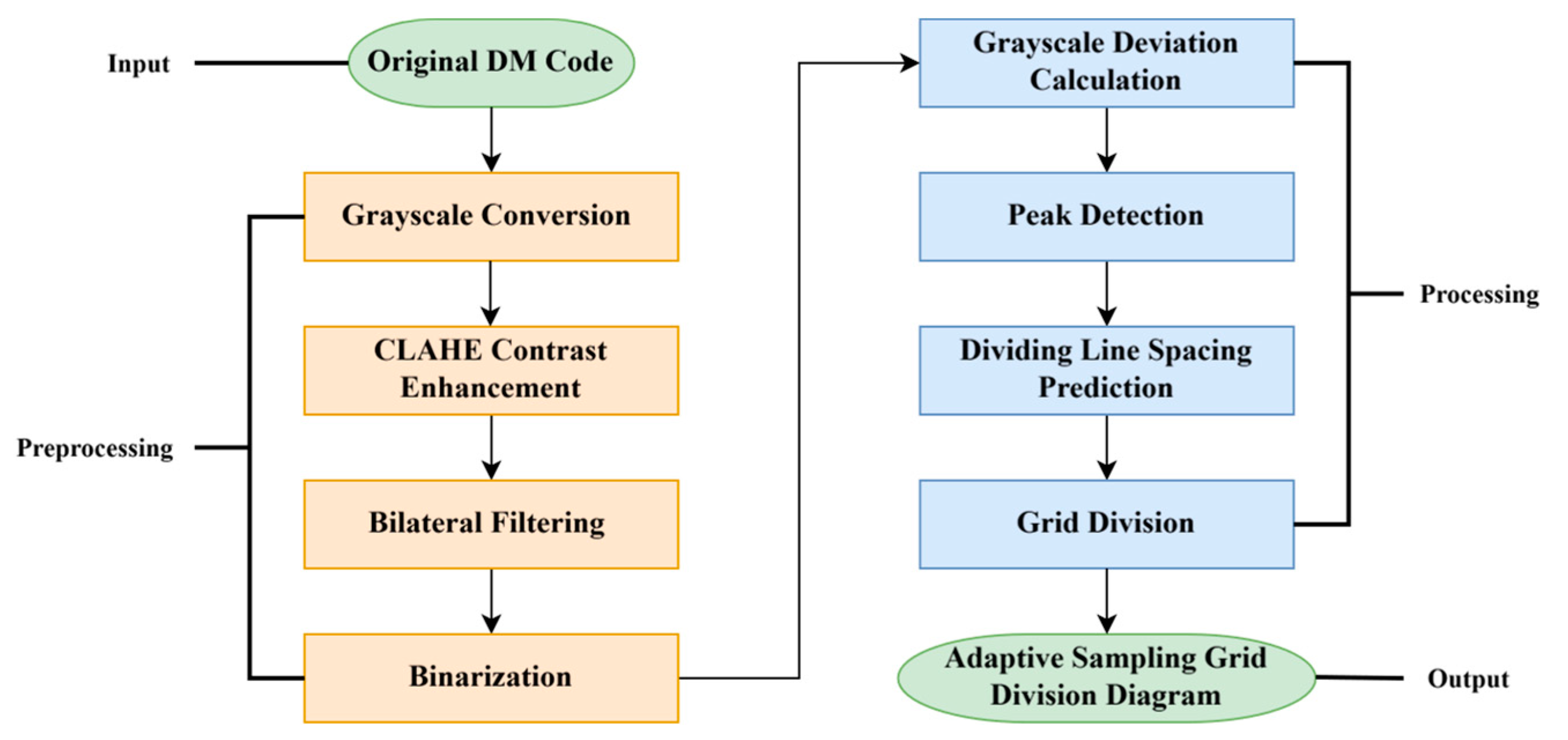

- Based on the projection algorithm and the exponentially weighted moving average (EWMA) model, an adaptive sampling grid division method is proposed to overcome the problem that the sampling grid cannot be divided when the finder pattern is contaminated or missing.

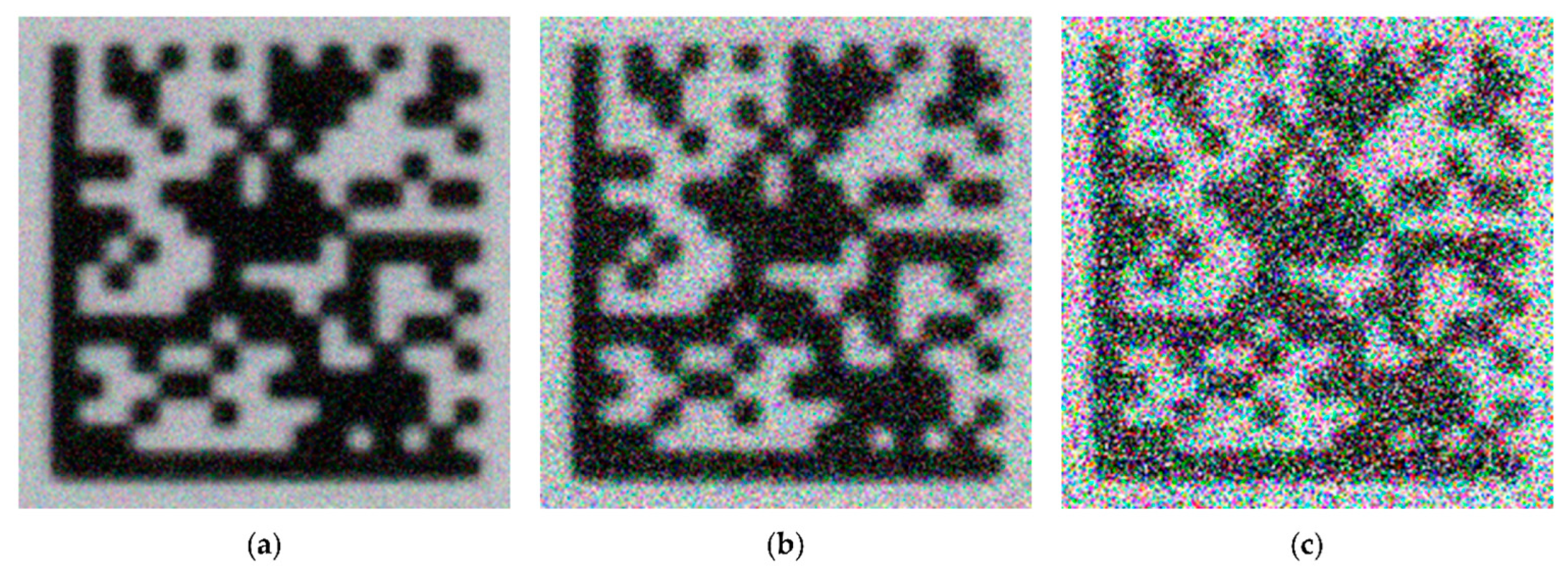

- By combining the local outlier factor (LOF) algorithm with locally weighted linear regression, a binarization method based on grid grayscale change trends is proposed. It addresses problems encountered in practical applications, such as blurred DM code images, wear, corrosion, geometric distortion, and strong background interference, thereby lowering the quality requirements for DM code images and improving decoding capability in complex environments.
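The first contribution combines a projection profile with EWMA smoothing. The Python sketch below illustrates only those two ingredients plus a naive peak picker, under stated assumptions: the image is a binarized 2D list in which 0 marks a dark pixel, the projection counts dark pixels per column, the EWMA follows the recursion `s_t = alpha * x_t + (1 - alpha) * s_(t-1)` seeded with the first sample, and a peak is any sample that rises above its left neighbor and is not below its right neighbor. The function names, the smoothing factor `alpha`, and the peak rule are hypothetical illustrations, not the paper's adaptive grid-division procedure.

```python
def column_projection(binary: list[list[int]]) -> list[int]:
    """Count the dark pixels (value 0) in each column of a binarized image."""
    width = len(binary[0])
    return [sum(1 for row in binary if row[c] == 0) for c in range(width)]


def ewma(values: list[float], alpha: float) -> list[float]:
    """Exponentially weighted moving average: s_t = alpha * x_t + (1 - alpha) * s_(t-1)."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    smoothed = []
    s = values[0]  # seed the recursion with the first sample
    for x in values:
        s = alpha * x + (1.0 - alpha) * s
        smoothed.append(s)
    return smoothed


def local_peaks(values: list[float]) -> list[int]:
    """Indices that rise above the left neighbor and are not below the right neighbor."""
    return [i for i in range(1, len(values) - 1)
            if values[i - 1] < values[i] >= values[i + 1]]


if __name__ == "__main__":
    image = [
        [0, 255, 0, 255, 0, 255],
        [0, 0, 0, 255, 0, 255],
        [0, 255, 0, 0, 0, 255],
        [0, 0, 0, 0, 0, 0],
    ]
    profile = column_projection(image)
    print(profile)
    print(local_peaks(ewma(profile, 0.6)))
```

A single-pass EWMA is causal, so it lags the raw profile: the smaller `alpha` is, the stronger the smoothing and the further peaks drift toward larger indices.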

2. Related Work

3. Method of Adaptive Sampling Grid Partitioning

3.1. DM Code Image Preprocessing

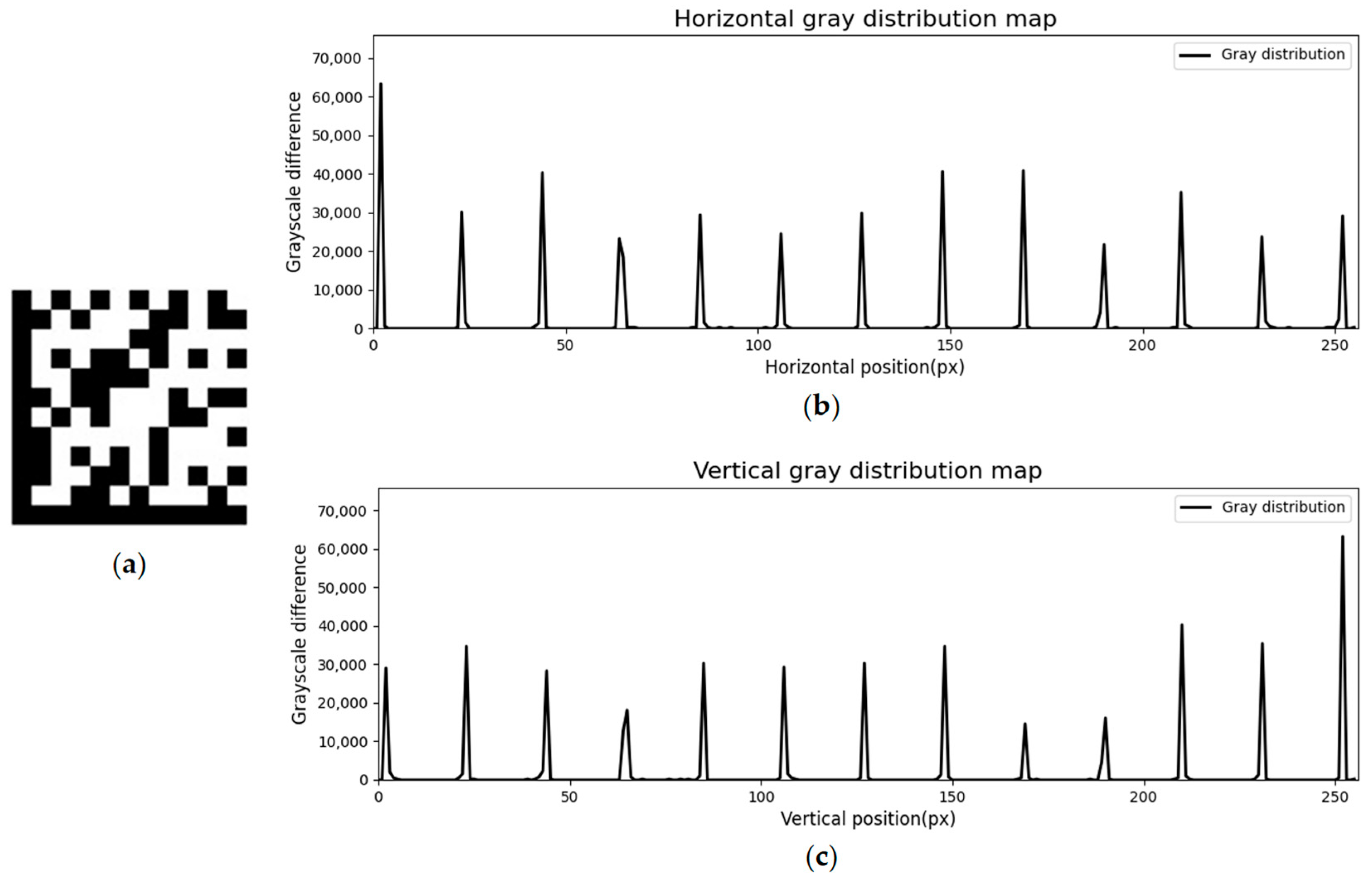

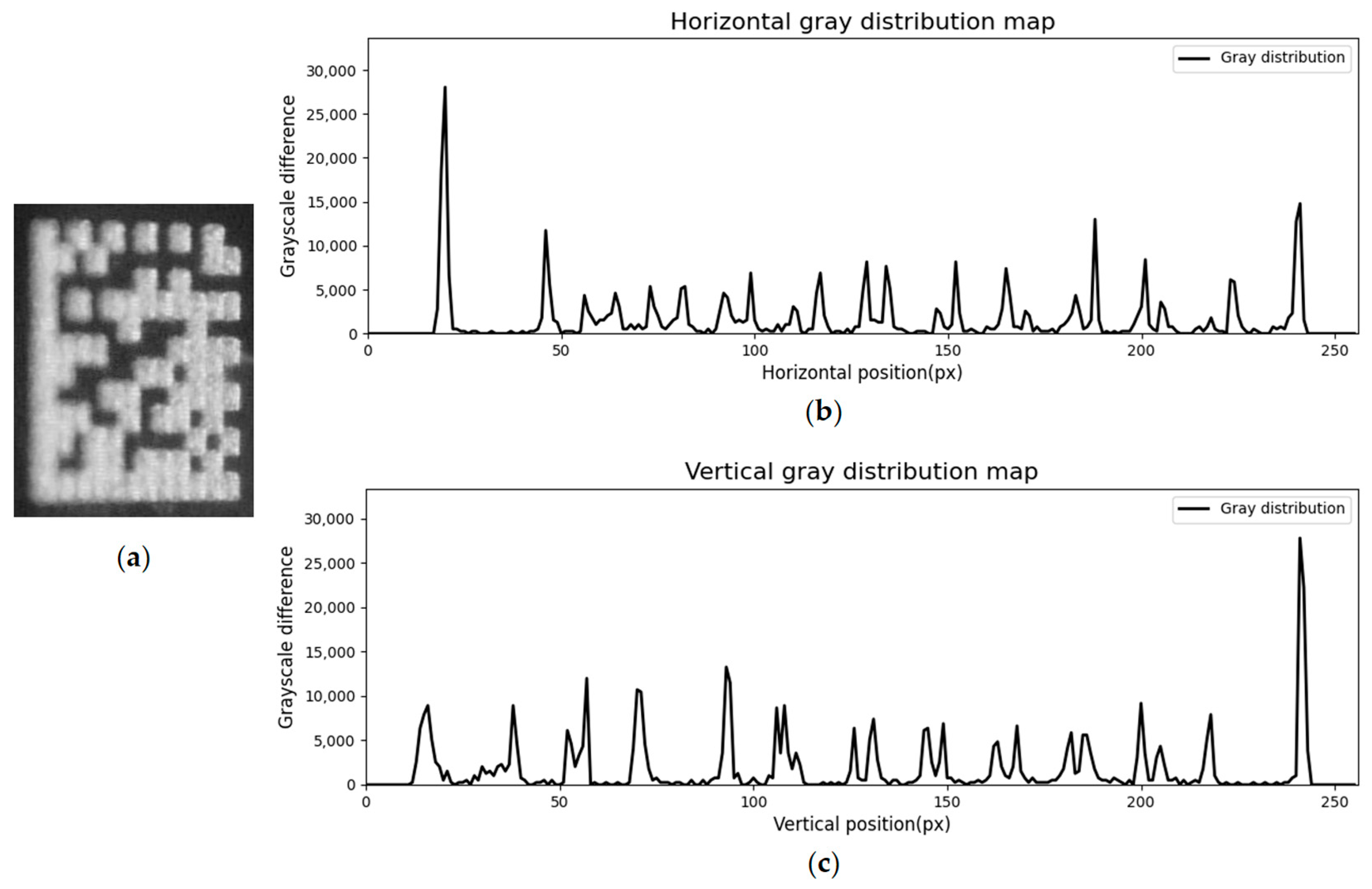

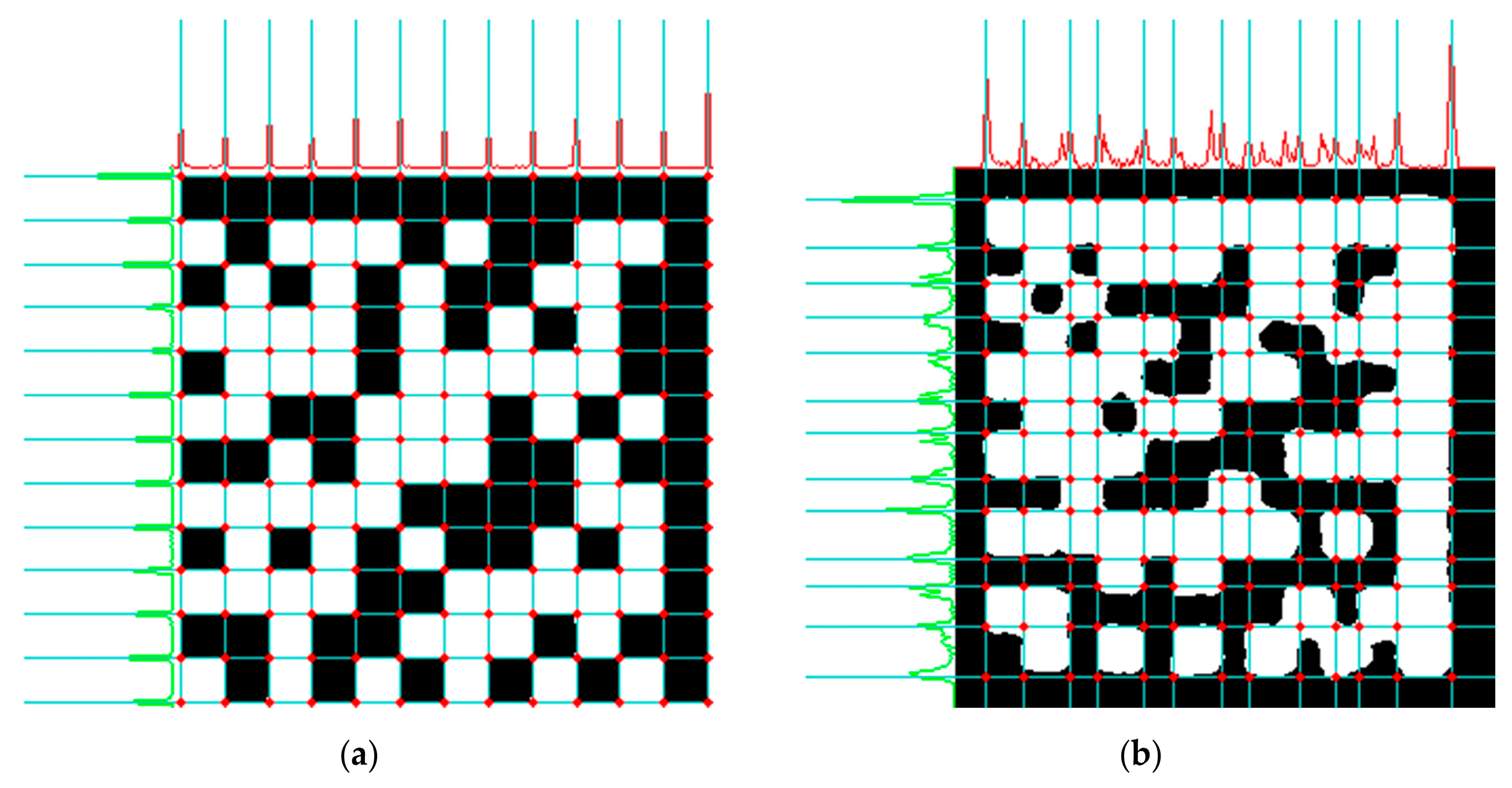

3.2. Waveform Extraction

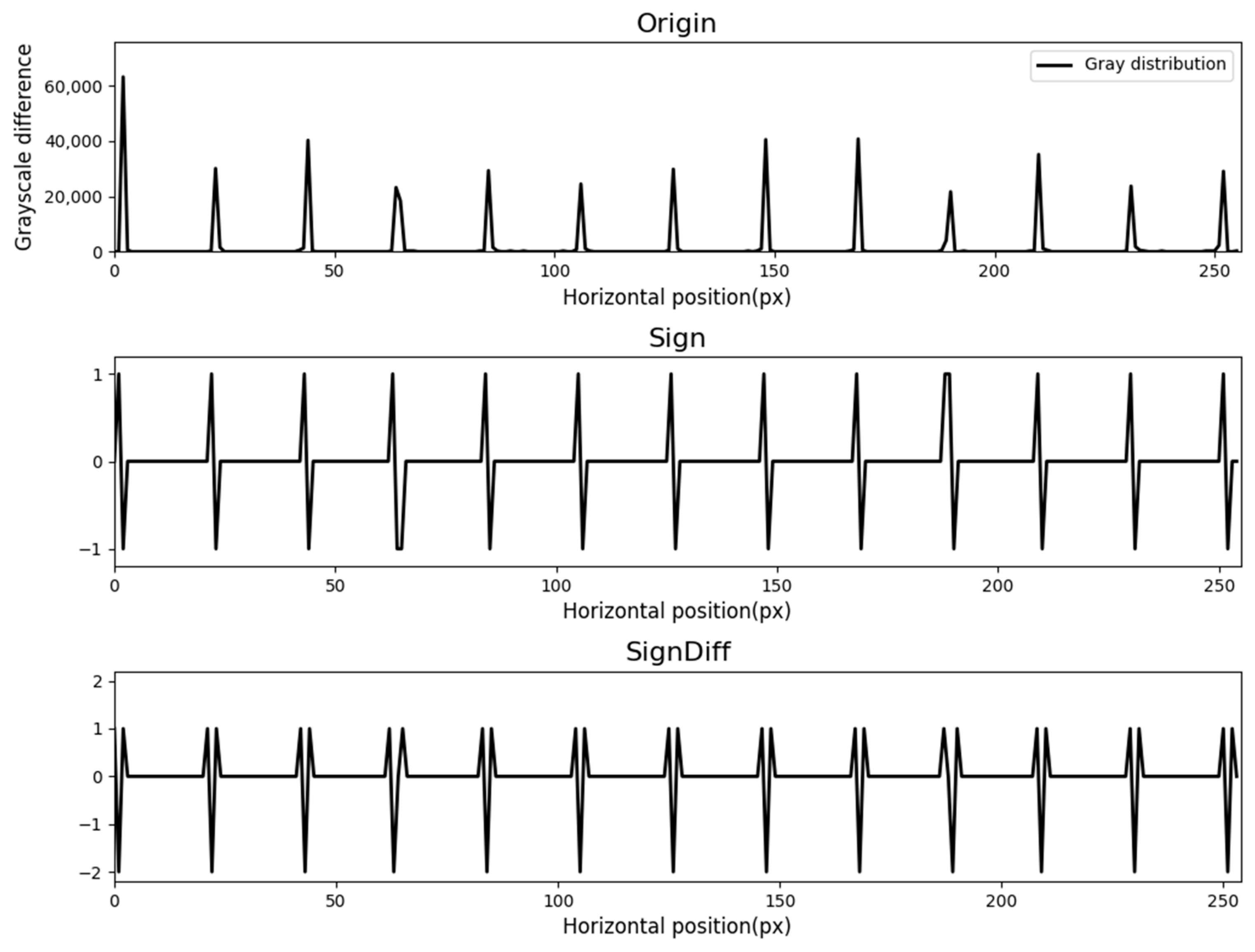

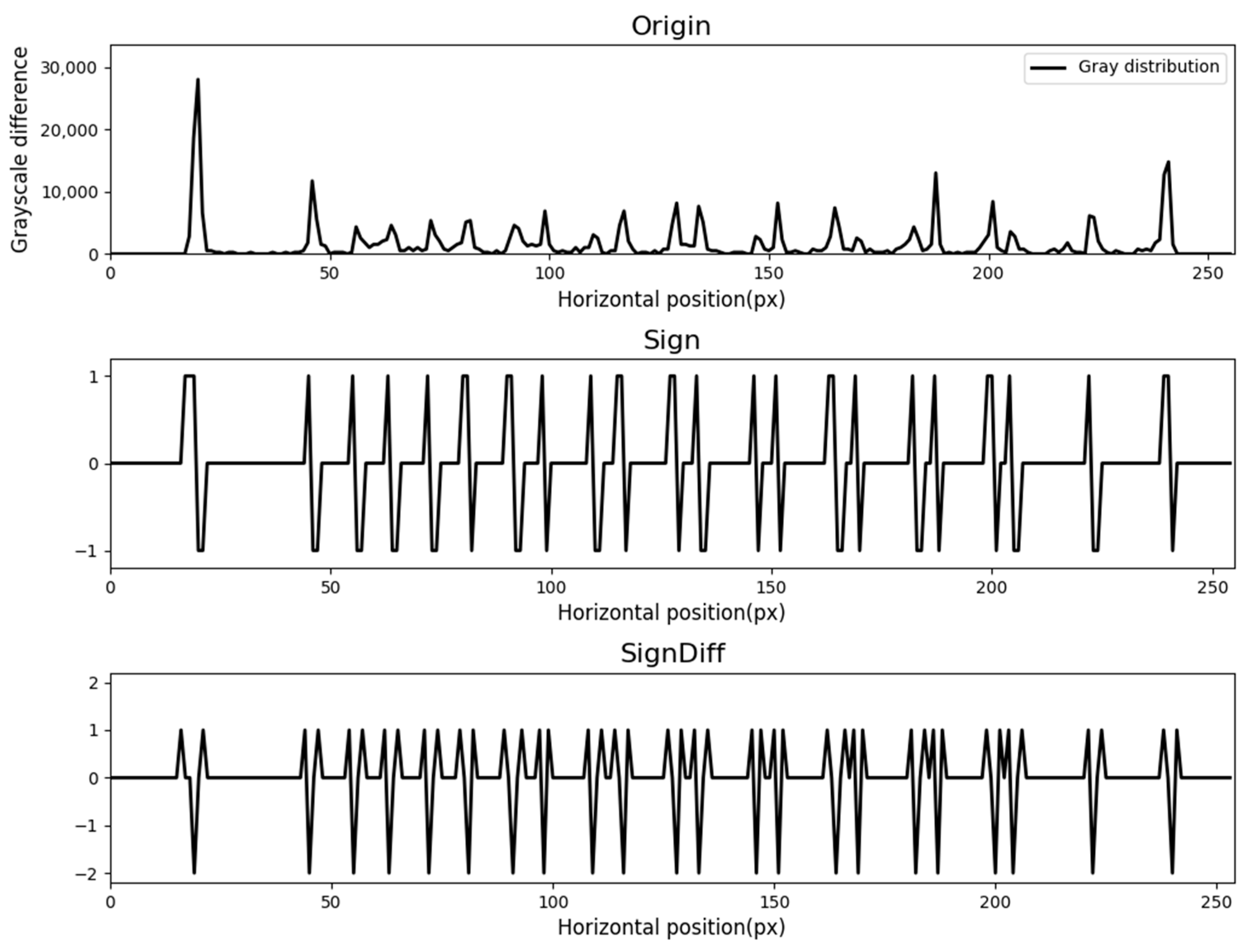

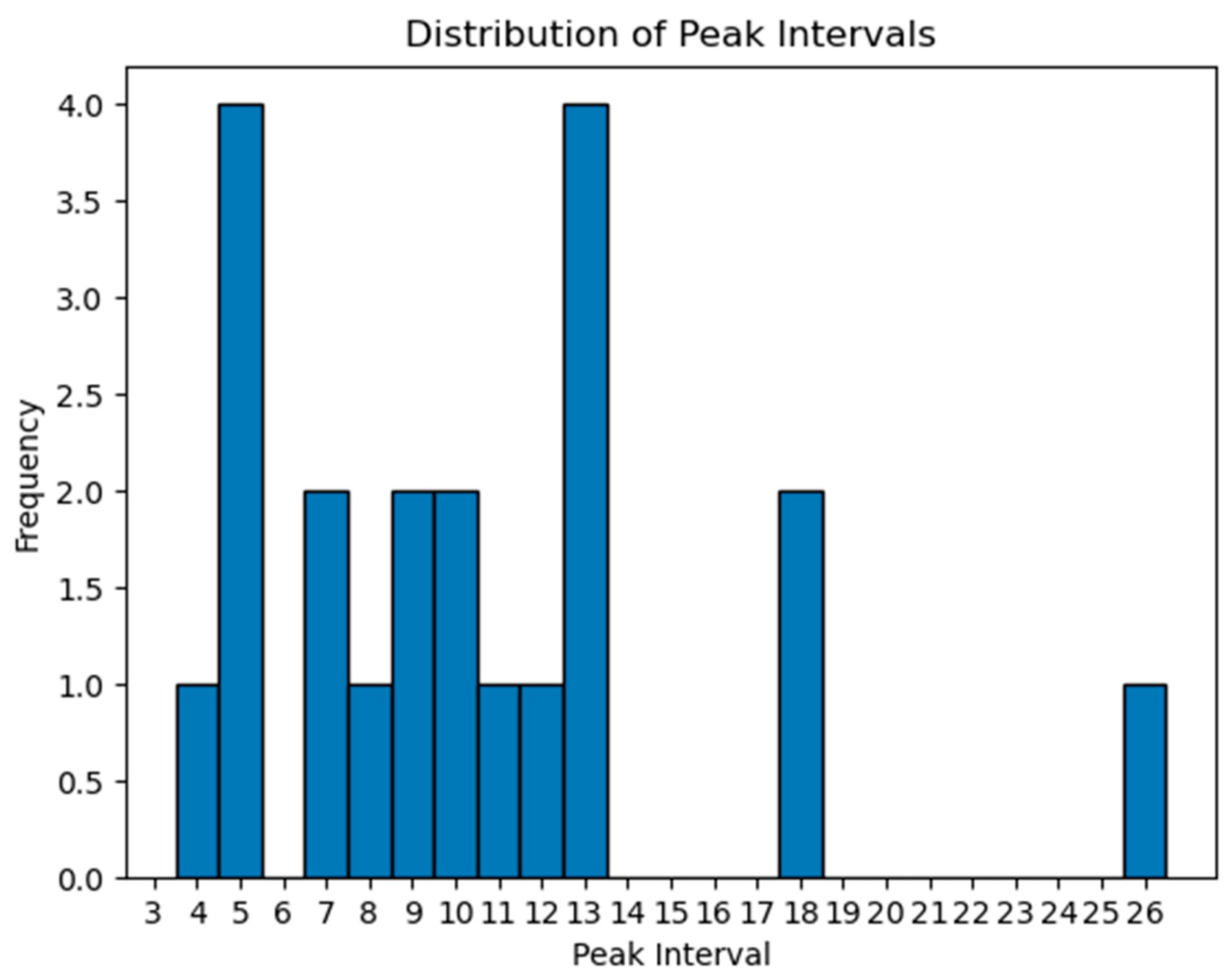

3.3. Peak Detection

3.4. Dividing Line Recognition

4. Binarization Method Based on Grayscale Change Trend

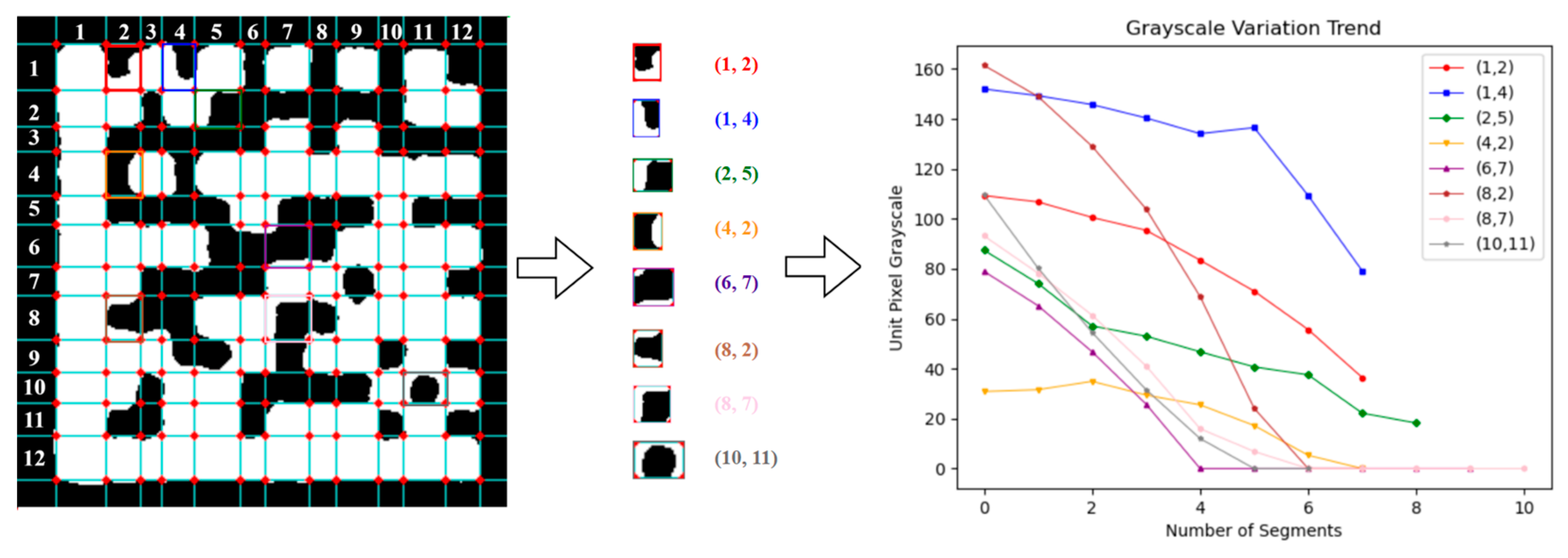

4.1. Gray Change Trend

Algorithm 1. Iterative division of the grid
Input: a list GPL of grids, each containing the four corner coordinates of a grid after partitioning; a binarized black-and-white image BI.
Output: a list GVLA holding the grayscale distribution trend of each grid in GPL.
1: Initialize GVLA as an empty list
2: For each grid_points in GPL:
3:     Initialize an empty list
4:     Get grid_length and grid_width from grid_points
5:     While grid_length > 2 and grid_width > 2:
6:         Create an empty mask of the same size as BI
7:         Fill the grid_points region in the mask with value 255
8:         Compute gray_value = mean of the pixels of BI inside the mask
9:         Append gray_value to the list
10:        If grid_length > 2 and grid_width > 2:
11:            Decrease grid_length and grid_width by 2
12:        Else If grid_length ≤ 2 < grid_width:
13:            Decrease grid_width by 2
14:        Else:
15:            Decrease grid_length by 2
16:        Update grid_points by shifting the corners inward
17:    End While
18:    Append the list to GVLA
19: End For
20: Return GVLA
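The grayscale trend computed in Algorithm 1 can be sketched in Python as follows. To stay self-contained, the sketch assumes each grid is an axis-aligned rectangle given by its top-left pixel, height and width (instead of four arbitrary corner points filled into a mask), treats the image as a 2D list of gray values, and maps grid_length to the height. It also assumes that the loop continues while either side exceeds 2 pixels, so that the one-sided shrink steps can act on thin grids. The names `gray_trend` and `mean_in_rect` are hypothetical.

```python
def mean_in_rect(image: list[list[float]], top: int, left: int, height: int, width: int) -> float:
    """Mean gray value of the axis-aligned rectangle whose top-left pixel is (top, left)."""
    total = sum(sum(image[r][left:left + width]) for r in range(top, top + height))
    return total / (height * width)


def gray_trend(image: list[list[float]], top: int, left: int, height: int, width: int) -> list[float]:
    """Mean gray value of a grid and of successively smaller rectangles nested inside it.

    Each step trims one pixel from both ends of every side that is still longer
    than 2 pixels (so that side shrinks by 2), following the shrink rules of Algorithm 1.
    """
    if height < 1 or width < 1:
        raise ValueError("grid must be at least 1 x 1 pixel")
    trend = []
    # Assumption: keep shrinking while EITHER side is longer than 2 pixels.
    while height > 2 or width > 2:
        trend.append(mean_in_rect(image, top, left, height, width))
        if height > 2 and width > 2:   # shrink both sides
            top, left = top + 1, left + 1
            height, width = height - 2, width - 2
        elif height <= 2 < width:      # only the width can still shrink
            left += 1
            width -= 2
        else:                          # width <= 2 < height: only the height can shrink
            top += 1
            height -= 2
    return trend
```

For a 7 × 7 grid the sketch records the means of the 7 × 7, 5 × 5 and 3 × 3 rectangles, so a module whose center differs from its border shows up as a change along the trend.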

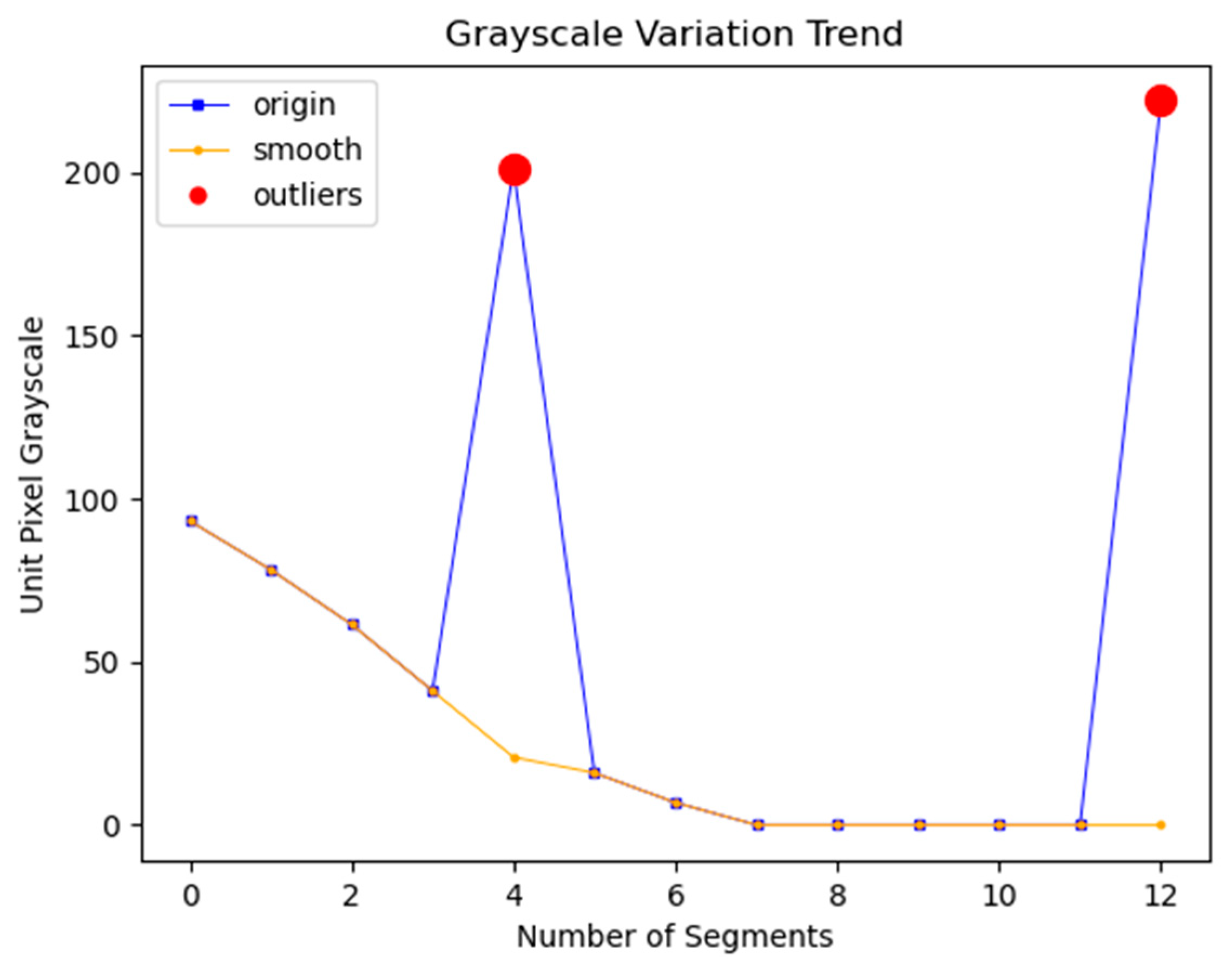

4.2. Outlier Detection and Correction

- Distance between sample points. There are many ways to measure the distance between points, and the commonly used ones can be expressed through the Minkowski distance [49]. For a dataset P, the distance between points $p$ and $o$ is denoted $d(p,o)$; for $n$-dimensional points, the Minkowski distance is given by Equation (5):
$$d(p,o)=\left(\sum_{i=1}^{n}\lvert p_i-o_i\rvert^{q}\right)^{1/q} \tag{5}$$
When $q=1$, the Minkowski distance is called the Manhattan distance, which sums the absolute coordinate differences along the axes. When $q=2$, it is called the Euclidean distance [50], which gives the straight-line distance between two points in Euclidean space. As $q\to\infty$, it becomes the Chebyshev distance, which is the maximum absolute difference between corresponding coordinates. The Chebyshev distance depends only on the largest deviation in a single dimension and is insensitive to simultaneous changes across several dimensions. The Manhattan distance accumulates horizontal and vertical deviations by summing the per-dimension differences, so it does not directly reflect the true magnitude of a diagonal change. The Euclidean distance measures the straight-line distance between two points and treats horizontal, vertical, and diagonal deviations in the same way, so it captures variations in DM codes in every direction rather than along a single axis. Therefore, this paper uses the Euclidean distance as the distance measure.

- The k-distance. For a point $p$ in dataset P, the k-distance of $p$, denoted $d_k(p)$, is the distance $d(p,o)$ to a point $o \in P$ for which (i) at least $k$ points $o' \in P\setminus\{p\}$ satisfy $d(p,o') \le d(p,o)$, and (ii) at most $k-1$ points $o' \in P\setminus\{p\}$ satisfy $d(p,o') < d(p,o)$. In other words, the k-distance of $p$ is the distance between $p$ and its k-th nearest neighbor in the dataset.

- The k-distance neighborhood. For dataset P, the k-distance neighborhood of point $p$ is denoted $N_k(p)$ and defined by Equation (6):
$$N_k(p)=\{\,o\in P\setminus\{p\} \mid d(p,o)\le d_k(p)\,\} \tag{6}$$
That is, the k-distance neighborhood of $p$ is the set of points whose distance to $p$ is less than or equal to the k-distance of $p$; when several points tie at that distance, it can contain more than $k$ points.

- Reachability distance. For dataset P, the reachability distance of point $p$ with respect to point $o$ is denoted $\text{reach-dist}_k(p,o)$ and defined by Equation (7):
$$\text{reach-dist}_k(p,o)=\max\{d_k(o),\,d(p,o)\} \tag{7}$$
The reachability distance from $p$ to any point $o$ is therefore at least the k-distance of $o$. If $p$ belongs to the k-distance neighborhood of $o$, the reachability distance equals the k-distance of $o$; otherwise, it equals the actual distance $d(p,o)$ between the two points.

- Local reachability density. For dataset P, the local reachability density of point $p$ is denoted $\text{lrd}_k(p)$ and defined by Equation (8):
$$\text{lrd}_k(p)=\left(\frac{\sum_{o\in N_k(p)}\text{reach-dist}_k(p,o)}{\lvert N_k(p)\rvert}\right)^{-1} \tag{8}$$
where $\lvert N_k(p)\rvert$ is the number of points in the k-distance neighborhood of $p$. The local reachability density of $p$ is thus the reciprocal of the average reachability distance of $p$ with respect to the points in its k-distance neighborhood. The larger the distances between $p$ and the other points in its neighborhood, the smaller $\text{lrd}_k(p)$, indicating that $p$ and its surrounding points are sparsely distributed; conversely, the larger $\text{lrd}_k(p)$, the denser the distribution around $p$. Note that the definition carries an implicit premise that the neighborhood contains no duplicate points: if $p$ has at least $k$ duplicates, every reachability distance in Equation (8) is zero and the local reachability density becomes infinite.

- Local outlier factor. For dataset P, the LOF of point $p$ is denoted $\text{LOF}_k(p)$ and defined by Equation (9):
$$\text{LOF}_k(p)=\frac{1}{\lvert N_k(p)\rvert}\sum_{o\in N_k(p)}\frac{\text{lrd}_k(o)}{\text{lrd}_k(p)} \tag{9}$$
that is, the LOF of $p$ is the average ratio of the local reachability density of its neighbors to that of $p$ itself. If the LOF is less than 1, the local reachability density of $p$ is higher than that of its neighbors, so $p$ lies in a dense region. If the LOF is approximately 1, $p$ has a density similar to that of its neighbors, so $p$ and its neighbors probably belong to the same cluster. If the LOF is greater than 1, $p$ is less dense than its neighbors, and the further the LOF exceeds 1, the more likely $p$ is an outlier. Based on the LOF algorithm, outliers in the grayscale change trend can be detected and then corrected as follows: suppose $x_i$ is an outlier in the trend, with preceding point $x_{i-1}$ and following point $x_{i+1}$; the corrected value of $x_i$ is calculated by Equation (10):

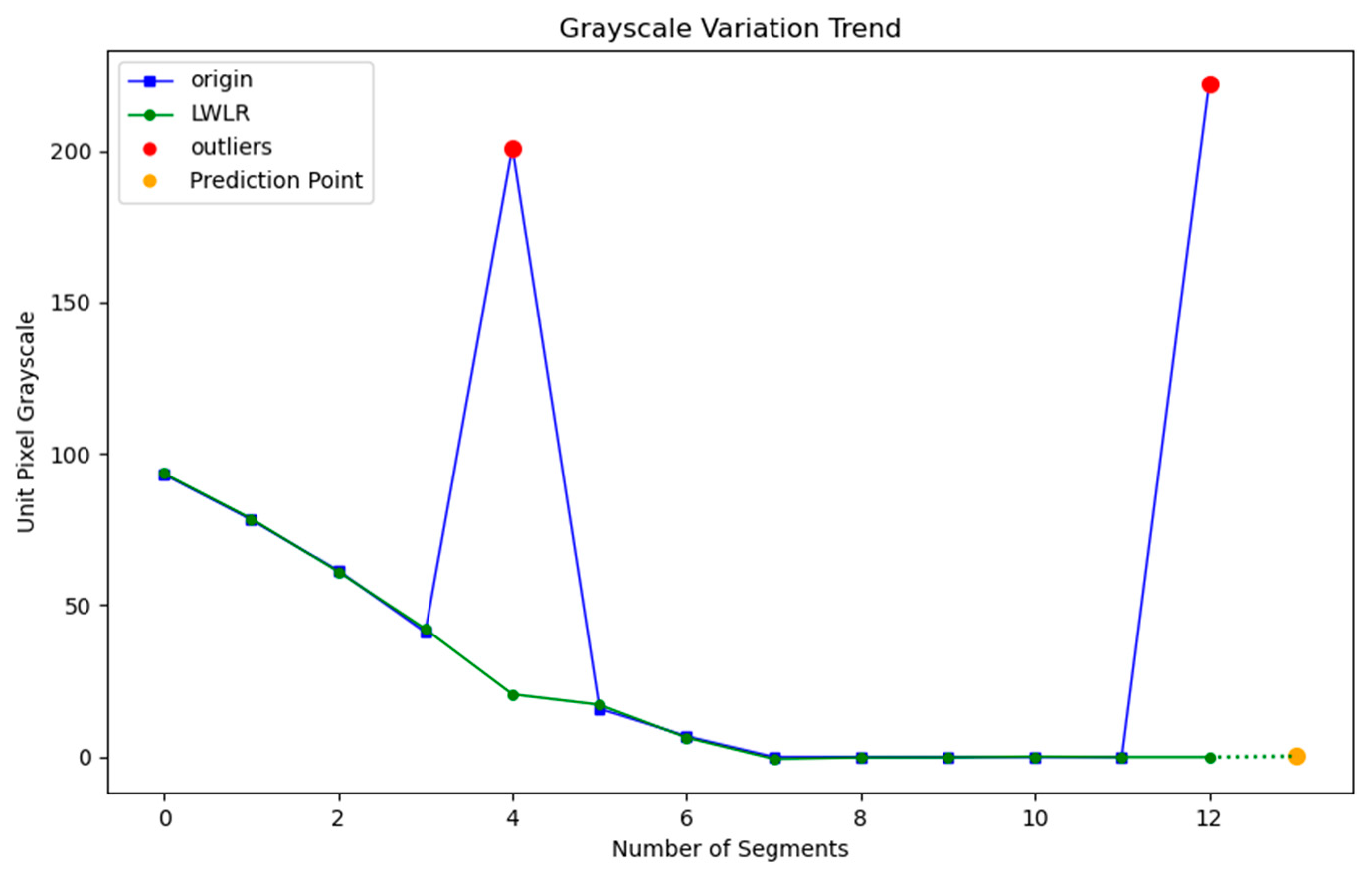
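The LOF definitions in Section 4.2 can be traced step by step in the pure-Python sketch below. It uses the Euclidean distance via `math.dist`, then computes the k-distance, the k-distance neighborhood (ties included), the reachability distances, the local reachability densities and finally the LOF scores. The function name `lof_scores` is hypothetical, and the O(n²) pairwise distance table is only meant for short grayscale trends; scalar gray values are passed as 1-tuples. For comparison, scikit-learn's `LocalOutlierFactor.fit_predict` labels outliers as −1, the convention used in Algorithm 2; this sketch returns raw scores instead, so any cut-off (for example 1.5) would be an assumption.

```python
import math


def lof_scores(points: list[tuple[float, ...]], k: int) -> list[float]:
    """Local outlier factor of every point, following the k-distance and reachability definitions."""
    n = len(points)
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < number of points")
    # Pairwise Euclidean distances d(p, o).
    dist = [[math.dist(p, o) for o in points] for p in points]

    k_dist: list[float] = []         # k-distance of each point
    neighbors: list[list[int]] = []  # k-distance neighborhood N_k(p), ties included
    for i in range(n):
        others = sorted(dist[i][j] for j in range(n) if j != i)
        kd = others[k - 1]           # distance to the k-th nearest neighbor
        k_dist.append(kd)
        neighbors.append([j for j in range(n) if j != i and dist[i][j] <= kd])

    lrd: list[float] = []            # local reachability density of each point
    for i in range(n):
        # reach-dist_k(p, o) = max(k-distance(o), d(p, o))
        total = sum(max(k_dist[j], dist[i][j]) for j in neighbors[i])
        if total == 0:
            raise ValueError("duplicate points make the local reachability density infinite")
        lrd.append(len(neighbors[i]) / total)

    # LOF_k(p): mean ratio of the neighbors' densities to the density of p.
    return [sum(lrd[j] for j in neighbors[i]) / len(neighbors[i]) / lrd[i] for i in range(n)]


if __name__ == "__main__":
    trend = [(0.0,), (1.0,), (2.0,), (3.0,), (20.0,)]
    print(lof_scores(trend, k=2))
```

With k = 2, the four evenly spaced values score exactly 1, while the isolated value 20 scores about 11.7, well above 1.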

4.3. Locally Weighted Linear Regression

Algorithm 2. Grayscale prediction and output of data matrix
Input: the list GVLA of grayscale distribution trends from Algorithm 1.
Output: the data matrix DM of binarized module values.
1: Initialize an empty list
2: For each gray_trend_list in GVLA:
3:     Create an array x of indices from 0 to len(gray_trend_list) − 1
4:     Convert gray_trend_list to a numpy array y
5:     Apply the Local Outlier Factor (LOF) to detect outliers in y
6:     Identify outlier_indices whose LOF label is −1
7:     For each outlier_idx in outlier_indices:
8:         If outlier_idx is at the start or end, apply smoothing based on its neighbors
9:     End For
10:    Fit a kernel regression model on the smoothed y with x
11:    Calculate the predicted gray value for the next point
12:    If the predicted gray value > 128:
13:        Set the predicted value to 255 (white)
14:    Else:
15:        Set the predicted value to 0 (black)
16:    Append the predicted value to the list
17: End For
# Post-processing after obtaining the predicted gray values
18: Reshape the list into a 2D array
19: Modify the first row to have alternating 0 and 255 values
20: Modify the last column to have alternating 255 and 0 values
21: Set the first column and the last row to 0
22: Return DM
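Steps 10 to 16 of Algorithm 2 (fit a local regression to a grid's grayscale trend, extrapolate it one step, and threshold the result at 128) can be sketched with locally weighted linear regression. The sketch assumes a Gaussian kernel with a hypothetical bandwidth `tau`, solves the weighted least-squares line in closed form, and uses the 8-bit convention in which 0 is black and 255 is white. The names `lwlr_predict` and `classify_module` are hypothetical, and the trend is assumed to have been outlier-corrected beforehand.

```python
import math


def lwlr_predict(y: list[float], tau: float = 1.0) -> float:
    """Predict the next value of a trend y[0..n-1] at x = n with locally weighted linear regression."""
    n = len(y)
    if n == 0:
        raise ValueError("empty trend")
    x0 = float(n)  # query point: one step past the last sample
    xs = [float(i) for i in range(n)]
    # Gaussian kernel weights: samples close to the query point count most.
    w = [math.exp(-((x - x0) ** 2) / (2.0 * tau ** 2)) for x in xs]
    sw = sum(w)
    x_mean = sum(wi * xi for wi, xi in zip(w, xs)) / sw
    y_mean = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_mean) ** 2 for wi, xi in zip(w, xs))
    if sxx == 0.0:
        return y_mean  # a single sample: fall back to a constant fit
    sxy = sum(wi * (xi - x_mean) * (yi - y_mean) for wi, xi, yi in zip(w, xs, y))
    slope = sxy / sxx
    return y_mean + slope * (x0 - x_mean)


def classify_module(y: list[float], tau: float = 1.0, threshold: float = 128.0) -> int:
    """Binarize the predicted gray value: 255 (white) above the threshold, otherwise 0 (black)."""
    return 255 if lwlr_predict(y, tau) > threshold else 0
```

Because the prediction extrapolates one step beyond the last sample, a small `tau` makes it follow the last few samples of the trend closely, while a large `tau` approaches an ordinary least-squares line through the whole trend.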

5. Experiments and Result Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Martínez-Moreno, J.; Marcén, P.G.; Torcal, R.M. Data matrix (DM) codes: A technological process for the management of the archaeological record. J. Cult. Heritage 2011, 12, 134–139.

- ISO/IEC. Information Technology—Automatic Identification and Data Capture Techniques—Data Matrix Bar Code Symbology Specification; International Organization for Standardization: Geneva, Switzerland, 2006.

- Dai, Y.; Liu, L.; Song, W.; Du, C.; Zhao, X. The realization of identification method for DataMatrix code. In Proceedings of the 2017 International Conference on Progress in Informatics and Computing (PIC), Nanjing, China, 15–17 December 2017; pp. 410–414.

- Dita, I.-C.; Otesteanu, M.; Quint, F. Scanning of industrial data matrix codes in non orthogonal view angle cases. In Proceedings of the 6th Euro American Conference on Telematics and Information Systems, Valencia, Spain, 23–25 May 2012; Association for Computing Machinery: Valencia, Spain, 2012; pp. 363–366.

- Kulshreshtha, R.; Kamboj, A.; Singh, S. Decoding robustness performance comparison for QR and data matrix code. In Proceedings of the Second International Conference on Computational Science, Engineering and Information Technology, Coimbatore, India, 26–28 October 2012; Association for Computing Machinery: Coimbatore, India, 2012; pp. 722–731.

- Moss, C.; Chakrabarti, S.; Scott, D.W. Parts quality management: Direct part marking of data matrix symbol for mission assurance. In Proceedings of the 2013 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2013; pp. 1–12.

- Karrach, L.; Pivarčiová, E. Recognition of Data Matrix codes in images and their applications in Production Processes. Manag. Syst. Prod. Eng. 2020, 28, 154–161.

- Li, J.; Su, G.; Liu, L. Research on DPM technology standardization status quo and development suggestion. Stand. Sci. 2009, 32–35.

- Czerwińska, K.; Pacana, A. Analysis of the implementation of the identification system for directly marked parts-Data Matrix code. Prod. Eng. Arch. 2019, 23, 22–26.

- Iwabuchi, S.; Kakazu, Y.; Koh, J.-Y.; Harata, N.C. Evaluation of the effectiveness of Gaussian filtering in distinguishing punctate synaptic signals from background noise during image analysis. J. Neurosci. Methods 2014, 223, 92–113.

- Green, O. Efficient Scalable Median Filtering Using Histogram-Based Operations. IEEE Trans. Image Process. 2018, 27, 2217–2228.

- Xiao, H.; Guo, B.; Zhang, H.; Li, C. A Parallel Algorithm of Image Mean Filtering Based on OpenCL. IEEE Access 2021, 9, 65001–65016.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Yang, Y.; Zhang, Z. A novel local threshold binarization method for QR image. In Proceedings of the International Conference on Automatic Control and Artificial Intelligence (ACAI 2012), Xiamen, China, 3–5 March 2012; pp. 224–227.

- Wang, Q. Segmentation of maximum entropy threshold based on gradient boundary control. J. Comput. Appl. 2011, 31, 1030–1032+1120.

- Qiang, F. Application of Bernsen method in the field of character segmentation. Heilongjiang Sci. Technol. Inf. 2014, 144–145.

- Kim, T.-S.; Park, K.-A.; Lee, M.-S.; Park, J.-J.; Hong, S.; Kim, K.-L.; Chang, E. Application of Bimodal Histogram Method to Oil Spill Detection from a Satellite Synthetic Aperture Radar Image. Korean J. Remote. Sens. 2013, 29, 645–655.

- Li, D.; Guo, H.; Tian, J.; Tian, Y. An improved method of locating L-edges in DataMatrix codes. J. Shenzhen Univ. (Sci. Eng.) 2018, 35, 151–157.

- Chen, W.; Zhang, X. Improved edge detecting algorithm of mathematical morphology. In Proceedings of the 2010 International Conference on Machine Vision and Human-Machine Interface, Kaifeng, China, 24–25 April 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 199–202.

- Lingling, L.; Yaoquan, Y.; Tao, G. The detection and realization of Data Matrix code by accurate locating. Int. J. Adv. Pervasive Ubiquitous Comput. 2014, 6, 35–42.

- Michael, D. Multithreaded two-pass connected components labelling and particle analysis in ImageJ. R. Soc. Open Sci. 2021, 8, 201784.

- Karrach, L.; Pivarčiová, E.; Nikitin, Y.R. Comparing the impact of different cameras and image resolution to recognize the data matrix codes. J. Electr. Eng. 2018, 69, 286–292.

- Chen, W.; Chen, B. Extraction technology of 2-D barcode under complicated background. J. Xi’an Univ. Posts Telecommun. 2014, 19, 48–51.

- Zhu, C.; Ge, D.-Y.; Yao, X.-F.; Xiang, W.-J.; Li, J.; Li, Y.-X. Zebra-crossing detection based on cascaded Hough transform principle and vanishing point characteristics. Open Comput. Sci. 2023, 13, 20220260.

- Wu, Q.; He, Y.; Luo, Y. Research on QR code image processing on the LED screen. In Proceedings of the International Conference on Optics and Image Processing (ICOIP 2021), Guilin, China, 4–6 June 2021; Volume 11915.

- Wang, L.; Chen, Z.; Chen, S.; Chen, X. An improved algorithm of simple polygon convex hull. Comput. Eng. 2007, 33, 200–201.

- Solomon, C.; Breckon, T. Fundamentals of Digital Image Processing: A Practical Approach with Examples in Matlab. Available online: https://onlinelibrary.wiley.com/doi/book/10.1002/9780470689776 (accessed on 21 February 2025).

- Min, Q.; Zhang, X.; Li, D.; Fan, Y. The review of algorithms to correct barrel distortion image. Adv. Mater. Res. 2012, 628, 403–409.

- Yuan, J.; Suen, C.Y. An optimal algorithm for detecting straight lines in chain codes. In Proceedings of the 11th IAPR International Conference on Pattern Recognition. Vol. III. Conference C: Image, Speech and Signal Analysis, The Hague, The Netherlands, 30 August–3 September 1992; pp. 692–695.

- Greene, N.; Heckbert, P.S. Creating Raster Omnimax Images from Multiple Perspective Views Using the Elliptical Weighted Average Filter. IEEE Comput. Graph. Appl. 1986, 6, 21–27.

- Kwon, H. AudioGuard: Speech Recognition System Robust against Optimized Audio Adversarial Examples. Multimedia Tools Appl. 2024, 83, 57943–57962.

- Rosenfeld, A.; Pfaltz, J.L. Sequential Operations in Digital Picture Processing. J. ACM 1966, 13, 471–494.

- Liao, L.; Li, J.; Lu, C. Data Extraction Method for Industrial Data Matrix Codes Based on Local Adjacent Modules Structure. Appl. Sci. 2022, 12, 2291.

- Wang, W.; He, W.; Lei, L.; Li, W.; Guo, G. Accurate location of polluted DataMatrix code from multiple views. J. Comput.-Aided Des. Comput. Graph. 2013, 25, 1345–1353.

- Liu, Z.; Zheng, H.; Cai, W. Research on Two-Dimensional Bar Code Positioning Approach Based on Convex Hull Algorithm. In Proceedings of the International Conference on Digital Image Processing, Bangkok, Thailand, 7–9 March 2009; pp. 177–180.

- Hu, D.; Tan, H.; Chen, X. The application of Radon transform in 2D barcode image recognition. Wuhan Univ. J. (Nat. Sci. Ed.) 2005, 51, 584–588.

- Lu, X.; Bao, X.; Shen, Y. Data Matrix code positioning and recognition in complex background of PCB board. J. Hubei Minzu Univ. (Nat. Sci. Ed.) 2019, 37, 296–299+303.

- Lv, H.; Shan, P.; Shi, H.; Zhao, L. An adaptive bilateral filtering method based on improved convolution kernel used for infrared image enhancement. Signal, Image Video Process. 2022, 16, 2231–2237.

- Xiang, A.; Qin, J.; Cai, H. A new method for automatic identification of peaks in noisy signals based on normalized difference. Meteorol. Hydrol. Mar. Instrum. 2016, 33, 75–78.

- Mei, K.; Liu, X.; Mu, C.; Qin, X. Fast defogging algorithm based on adaptive exponentially weighted moving average filtering. Chin. J. Lasers 2020, 47, 250–259.

- Li, L.; Yin, Y.; Wu, J.; Dong, W.; Shi, G. Mask-fused human face image quality assessment method. J. Image Graph. 2022, 27, 3476–3490.

- Hodge, V.J.; Austin, J. A survey of outlier detection methodologies. Artif. Intell. Rev. 2004, 22, 85–126.

- Sadik, S.; Gruenwald, L. Online outlier detection for data streams. In Proceedings of the 15th Symposium on International Database Engineering & Applications, Lisbon, Portugal, 21–27 September 2011; Association for Computing Machinery: Lisbon, Portugal, 2011; pp. 88–96.

- Cao, C.; Tian, Y.; Zhang, Y.; Liu, X. Application of statistical methods in outlier detection for time series data. J. Hefei Univ. Technol. (Nat. Sci.) 2018, 41, 1284–1288.

- Ramaswamy, S.; Rastogi, R.; Shim, K. Efficient algorithms for mining outliers from large data sets. ACM Sigmod Rec. 2000, 29, 427–438.

- Breunig, M.M.; Kriegel, H.-P.; Ng, R.T.; Sander, J. LOF: Identifying density-based local outliers. Sigmod Rec. 2000, 29, 93–104.

- Zhuo, L.; Zhao, H.; Zhan, S. Overview of anomaly detection methods and applications. Appl. Res. Comput. 2020, 37, 9–15.

- Zhang, L.-C.; Jiang, H.-M.; Zhang, J.-X.; Xie, K. Phase demodulation of fiber vibration sensing by modified ellipse fitting algorithm based on local outlier factor optimization. Acta Phys. Sin. 2022, 71, 194206.

- Zhi, X.; Xu, Z. Minkowski distance based soft subspace clustering with feature weight self-adjustment mechanism. Appl. Res. Comput. 2016, 33, 2688–2692.

- Ji, W.; Ni, W. A dynamic control method of population size based on euclidean distance. J. Electron. Inf. Technol. 2022, 44, 2195–2206.

- Atkeson, C.G.; Moore, A.W.; Schaal, S. Locally Weighted Learning. Artif. Intell. Rev. 1997, 11, 11–73.

- Alqasrawi, Y.; Azzeh, M.; Elsheikh, Y. Locally weighted regression with different kernel smoothers for software effort estimation. Sci. Comput. Program. 2022, 214, 102744.

- Zhang, W.; Yang, P.; Dai, D.; Nehorai, A. Reflectance estimation using local regression methods. In Proceedings of the Advances in Neural Networks–ISNN 2012, Shenyang, China, 11–14 July 2012; pp. 116–122.

- Wang, M.; van Ryzin, J. A class of smooth estimators for discrete distributions. Biometrika 1981, 68, 301–309.

- Racine, J.S. Nonparametric econometrics: A primer. Found. Trends Econom. 2008, 3, 1–88.

- Jamei, M.; Hasanipanah, M.; Karbasi, M.; Ahmadianfar, I.; Taherifar, S. Prediction of flyrock induced by mine blasting using a novel kernel-based extreme learning machine. J. Rock Mech. Geotech. Eng. 2021, 13, 1438–1451.

- Kisi, O.; Ozkan, C. A new approach for modeling sediment-discharge relationship: Local weighted linear regression. Water Resour. Manag. 2017, 31, 1–23.

- msva, 2005, libdmtx. 2022. Available online: https://github.com/dmtx/libdmtx (accessed on 21 February 2025).

- srowen, 2007, zxing. 2023. Available online: https://github.com/zxing/zxing (accessed on 21 February 2025).

| Time Complexity | Space Complexity |
|---|---|
| O(x × L × log L × p) | O(x × t + p) |

| Time Complexity | Space Complexity |
|---|---|
| O(m × (n log n + n²)) | O(m × n) |

| Dataset | Algorithm | Average Execution Time (s) | Average Memory Consumption (KB) |
|---|---|---|---|
| A | libdmtx | 0.002 | 35.600 |
| | zxing | 0.435 | 5.000 |
| | this paper | 5.133 | 244.800 |
| B | libdmtx | 0.004 | 60.364 |
| | zxing | 0.356 | 6.909 |
| | this paper | 14.971 | 329.454 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, L.; Zhong, G.; Chen, Z.; Chen, Z. DataMatrix Code Recognition Method Based on Coarse Positioning of Images. Electronics 2025, 14, 2395. https://doi.org/10.3390/electronics14122395


Chicago/Turabian Style: Hu, Lingyue, Guanbin Zhong, Zhiwei Chen, and Zhong Chen. 2025. "DataMatrix Code Recognition Method Based on Coarse Positioning of Images" Electronics 14, no. 12: 2395. https://doi.org/10.3390/electronics14122395
