Abstract

The visual nature of Sign Language datasets raises privacy concerns that hinder data sharing, which is essential for advancing deep learning (DL) models in Sign Language recognition and translation. This study evaluated two anonymization techniques, realistic avatar synthesis and face swapping (FS), designed to anonymize the identities of signers, while preserving the semantic integrity of signed content. A novel metric, Identity Anonymization with Expressivity Preservation (IAEP), is introduced to assess the balance between effective anonymization and the preservation of facial expressivity crucial for Sign Language communication. In addition, the quality evaluation included the LPIPS and FID metrics, which measure perceptual similarity and visual quality. A survey with deaf participants further complemented the analysis, providing valuable insight into the practical usability and comprehension of anonymized videos. The results show that while face swapping achieved acceptable anonymization and preserved semantic clarity, avatar-based anonymization struggled with comprehension. These findings highlight the need for further research efforts on securing privacy while preserving Sign Language understandability, both for dataset accessibility and the anonymous participation of deaf people in digital content.

1. Introduction

Sign Language is the primary means of communication for the deaf community, which comprises about 5% of the population. Being a visual language, it requires special attention to body movements and facial expressions. Providing a system that facilitates and aids communication between the deaf and hearing communities is urgent. In the last decade, with the advent of Deep Learning (DL), research efforts have increased related to translation between Sign Language and spoken languages, but much larger datasets are required for training DL models. However, there is some reluctance to contribute to data collection [1] due to privacy concerns about identity exposure in Sign Language datasets.

In this work, we analyze different techniques to address the challenge of Sign Language data anonymization, aiming to change the appearance of the subjects shown in the videos, while maintaining the original content being signed.

Anonymization of signers allows those contributing to a dataset to conceal their identity, without compromising the meaning of the information they convey. The process of visually anonymizing Sign Language datasets has resulted in a boost in openness to participate in data sharing, largely attributed to the elimination of signer-specific appearance [1]. Alternative approaches such as pixelation [2], blackening [3], and grayscale filters [1] have been tried unsuccessfully because, during sign execution, they affect the facial and body information that is strictly necessary to provide the correct meaning of the message.

The objective of this study was to evaluate the impact of anonymization techniques, specifically through the use of realistic avatars and Face Swapping (FS), on the quality and intelligibility of Sign Language content. This evaluation was carried out using quality metrics, as well as validation by a volunteer group of individuals through a survey, ensuring a comprehensive assessment of how these anonymization methods affected both the preservation of the original message and the usability of the data.

Our work makes the following contributions to the field:

- We compare two formats of video anonymization: one using realistic avatars, and another using face swapping techniques.

- We propose a new measure to evaluate both the anonymization and the facial expression preservation of the generated Sign Language videos.

- An extensive survey for a group of deaf individuals was carried out to evaluate the anonymization and comprehension of the synthetic videos.

The remainder of this paper is organized as follows: In Section 2, we provide a comprehensive review of related work. Section 3 shows the methodology used for anonymizing Sign Language videos. The experiment is explained in Section 4, with the results in Section 5. Finally, the conclusions and future work are given in Section 6.

2. Related Work

Although tasks related to Sign Language Recognition [4,5,6,7] and Sign Language Translation [8,9] have been explored for years, the scarcity of consistent and adequate datasets [1] creates a major challenge for their advancement.

Variation in appearance and signing style provides the diversity of scenarios and situations needed for Sign Language research, which makes it essential to include a large number of signers in a dataset. This raises privacy concerns due to possible misuse of data, which has a negative impact on the collection of Sign Language datasets [10]. Anonymization of such video datasets, which have significant research value, would facilitate the contribution of participating signers [1]. Furthermore, it could help increase the anonymous participation of deaf signers, who share their videos on social networks that sometimes include sensitive subjects [11,12]. To preserve the privacy of participants and ensure anonymization of Sign Language datasets, different approaches have been proposed in the state of the art [13] and have been supported by recent studies [14]. It should be noted that work has even been carried out [15] to identify an original signer from his or her gestures.

Early approaches used pixelation [2] or basic graphic filters that masked the face during the sign, but this was not optimal because it resulted in the omission of facial expression, which is essential for the understanding of the sign. Therefore, subsequent Sign Language anonymization works have followed one of two strategies:

- Avatar-based techniques

- Photorealistic retargeting

Both strategies are normally supported by tools such as OpenPose [16] or Mediapipe [17], which estimate body landmarks of the signer. Thus, in a frame-by-frame manner, movements are captured and can be used in the anonymized version. It should also be noted that these techniques have been used for Sign Language Production (SLP) [18], so the state of the art is quite advanced, given that the production of signs was an objective pursued before anonymization.

2.1. Using Avatars for Anonymization

The idea is to reproduce all of the signer’s signs using a representation of a human. These representations or avatars can be seen as cartoonized animations [19], or virtual 3D human animations [20,21]. Once the manual, corporal, and facial expressions have been collected, it is possible to re-enact signed messages. The use of realistic avatars is a time-intensive task [13], but current game engines such as Unreal (https://www.unrealengine.com/, accessed on 1 June 2025) are reducing the processing time and generating realistic avatars.

The work of Tze et al. [19] anonymized Sign Language videos by converting them, through skeleton retargeting, into animated cartoons that mimic the original signer’s gestures, while hiding their identity. This method is designed to be lightweight and capable of running efficiently on a CPU, delivering performance close to real time. For skeleton extraction, it uses MediaPipe Holistic modules. The authors pointed out that their method occasionally fails to accurately transfer facial expressions, mouths, and the spatial alignment of the hands and face.

Virtual 3D humans have been explored by Krishna et al. [22] in SignPose, comparing 3D pose estimation models, including 3D body and hand motion from monocular videos [23,24,25] and those that convert a 2D pose to 3D, using OpenPose as an intermediate model and a quaternion-based architecture to move the 2D keypoints to 3D. Results showed that this intermediate option generated better results. However, the work had a major shortcoming: it did not capture facial expressions. SGNify [26] introduced linguistic priors and constraints for 3D hand pose that help in resolving ambiguities within isolated signs. Unlike SignPose, which requires all OpenPose keypoints above the pelvis to be detected, which is impractical in noisy Sign Language videos, SGNify incorporates Sign Language knowledge in the form of linguistic constraints. Its authors evaluated SGNify through a perceptual study including 20 experts in American Sign Language, who rated their ease in recognizing the presented sign. Future work included the need to better capture facial expressions, tongue and eye movements, mouth morphemes, and eyebrows. Finally, Baltatzis et al. [27] proposed a diffusion-based 3D SLP model trained on a large-scale dataset of 4D signing avatars and corresponding text transcripts. The proposed method uses an anatomically informed graph neural network defined on the body skeleton.

2.2. Photorealistic Retargeting

To achieve an anonymized signer capable of communicating in the same manner as the original signer, various techniques have been investigated. Research in Sign Language Production (SLP) has utilized Generative Adversarial Networks (GANs) to generate photo-realistic Sign Language video sequences [28], as well as transformer-based architectures [29,30].

Focusing specifically on anonymization methods, AnonySign [31] is a notable example. This approach generates photo-realistic retargeted videos using a Variational Autoencoder (VAE) [32] that incorporates pose and style encoders to synthesize human appearance, effectively replacing the original signer’s identity with a synthetic one.

In [33,34], SignGAN was introduced to produce continuous, photo-realistic Sign Language videos directly from spoken language. The system employed a transformer architecture with a Mixture Density Network (MDN) [35] to translate spoken language into skeletal pose, followed by a pose-conditioned human synthesis model to generate the photo-realistic videos. A keypoint-based loss function was implemented to enhance the quality of hand images in the synthesized outputs.

Xia et al. [14] proposed an unsupervised image animation method featuring a high-resolution decoder and loss functions specifically designed for facial and hand regions, to transform the signer’s identity. However, the authors noted that this method struggled to adapt to videos captured in uncontrolled environments. To address these challenges, they later introduced diffusion models [36] for zero-shot text-guided Sign Language video anonymization [37,38]. These models integrate ControlNet [39] to eliminate the need for pose estimation, along with a specialized module for capturing facial expressions.

The authors claimed that their method improves upon existing approaches such as Cartoonized Anonymization [19], which relies on skeleton estimation to ensure accurate anonymization. Furthermore, they highlighted that, while photorealistic methods such as AnonySign [31], SLA [14], and Neural Sign Reenactor [40] can achieve realistic results, they require training on large-scale Sign Language datasets or precise skeleton estimation, making them less effective in real-world scenarios. Despite these advancements, their proposed method has limitations, particularly when the face is occluded by one or both hands or when rapid movements result in motion blur. Additionally, artifacts commonly associated with Stable Diffusion Models occasionally appear, impacting the overall video quality.

2.3. Conclusions

Progress has been made in anonymization from two points of view, SLP, where signs must be generated from text, and anonymization itself, replacing the original signer with another signer through cartoons, 3D avatars, or photorealistic assemblies. Progress has been very significant in recent years, but there is no established methodology to check whether anonymization has actually been achieved and, the most important question, whether the message has been retained. In this sense, this paper proposes a series of metrics to answer these questions and provides a comparison of objective quality metrics and subjective answers from deaf people watching the same set of videos.

3. Methodology

This section details the proposed methodology for the anonymization of Sign Language datasets.

3.1. Collection of Original Video

To conduct our experiments, we first had to select a series of videos in Spanish Sign Language (LSE), as the objective was to carry out a survey targeting the deaf population in our country. For this purpose, we utilized videos previously recorded by our research group under controlled laboratory conditions, as detailed in [41]. Specifically, a Nikon D3400 camera (Nikon Corporation, Tokyo, Japan) was used to capture high quality RGB video signals (resolution 1920 × 1080 @ 60 FPS). Five out of 40 signers gave explicit permission to appear in an online survey where their faces would be distorted: two men and three women (this highlights the deaf community’s privacy concerns). For each signer, 6 videos were chosen from a set of 18 different LSE phrases. This set was used for the face-swapping tests.

Additionally, one of the collaborator signers was asked to record an additional set of videos specifically for this work, where instead of signing a full phrase, he signed pairs or trios of signs that differed only in facial expressions. This second set, consisting of 16 videos (5 pairs plus 2 trios), was specifically used to evaluate the preservation of facial expressiveness after the face-swapping process.

Finally, for the generation of avatar videos, 9 LSE videos from the first set were selected, and the same collaborating signer was asked to record an additional set of 5 videos with particularly expressive signing to evaluate the transfer of expressiveness to the avatar. This resulted in a total of 14 videos, which were generated using both male and female avatars. All the videos, original and generated, can be watched at GitHub (https://github.com/Deepknowledge-US/TAL-IA/tree/main/Synthetic_video_for_Sign_Language_Anonymization, accessed on 30 September 2024). Section 4.1.2 outlines the survey design.

3.2. Video Synthesis with Avatars

To generate videos anonymizing the signers through avatars, we utilized the Dollars Mocap tool [42] (Dollars Mocap, Shanghai, China), an AI-powered motion capture solution designed to streamline the creation of realistic animations for characters in various digital applications.

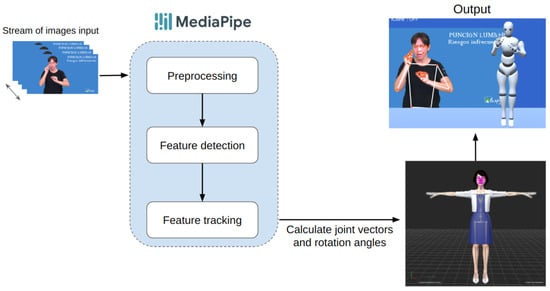

Figure 1 illustrates the Dollars tool animation workflow. The process starts with MediaPipe (Google LLC, Mountain View, CA, USA) capturing a continuous stream of image frames from the original video file and detecting body, hand, and face landmarks. The tool includes tracking algorithms to ensure smooth and consistent keypoints across frames, enabling accurate motion capture. The captured data are processed to calculate skeleton rotation angles, followed by smoothing and pose adjustments to reduce noise and enhance stability. The refined data are then exported or streamed to third-party software for avatar generation.

Figure 1.

Pipeline for creating realistic animations from input video to final avatar.
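The smoothing stage of such a pipeline can be illustrated with an exponential moving average over per-frame landmark coordinates. This is only a minimal sketch under our own assumptions: the filter actually used by the Dollars tool is not described here, and the function name `smooth_landmarks` and the parameter `alpha` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, ...]  # coordinates of one landmark, e.g. (x, y, z)


def smooth_landmarks(frames: List[List[Point]], alpha: float = 0.5) -> List[List[Point]]:
    """Exponentially smooth each landmark trajectory across frames.

    alpha close to 1 follows the raw detections closely;
    alpha close to 0 smooths more strongly (and lags more).
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[List[Point]] = []
    for frame in frames:
        if not smoothed:
            # The first frame has no history, so it is kept unchanged.
            smoothed.append(list(frame))
            continue
        previous = smoothed[-1]
        smoothed.append([
            tuple(alpha * c + (1.0 - alpha) * p for c, p in zip(cur, old))
            for cur, old in zip(frame, previous)
        ])
    return smoothed


# Hypothetical data: a single landmark whose x coordinate jitters around 0.5.
raw = [[(0.50, 0.2, 0.0)], [(0.60, 0.2, 0.0)], [(0.40, 0.2, 0.0)]]
out = smooth_landmarks(raw, alpha=0.5)
```

A lower `alpha` suppresses more jitter but also delays fast hand movements, which is a relevant trade-off for signing.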

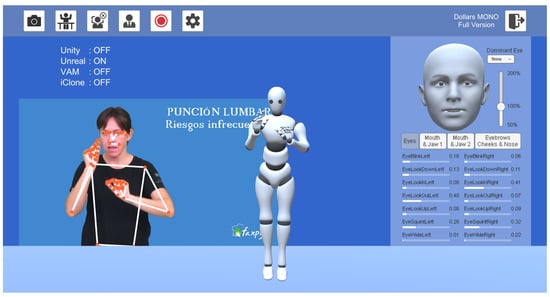

Figure 2 shows an example of the Dollars tool interface. As can be seen, the tool uses keypoints extracted with MediaPipe for the subsequent generation of the avatar.

Figure 2.

Example of the use of Dollars MONO on an image.

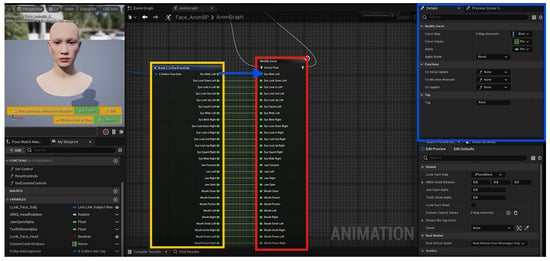

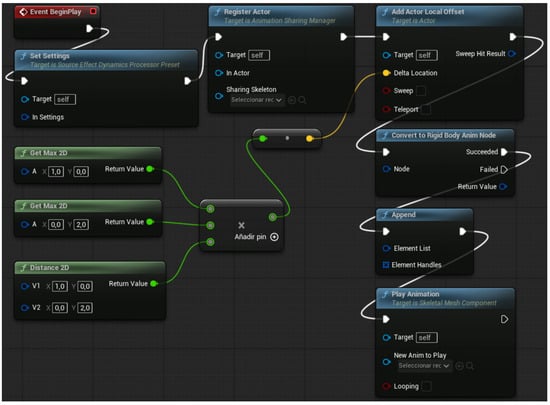

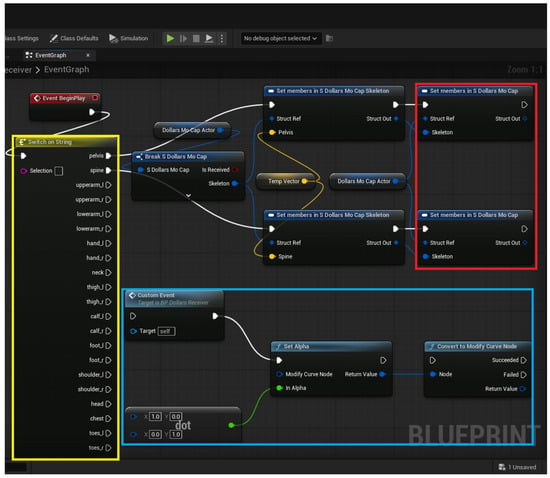

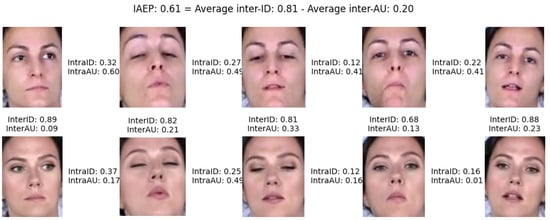

The final avatar in the video was created using Unreal Engine’s (Epic Games, Cary, NC, USA) online Metahuman 5.0 application (https://metahuman.unrealengine.com/, accessed on 3 June 2025), which utilized BVH-exported coordinates from EGAO software. These coordinates were integrated with the Metahuman skeleton, allowing the animation data to be linked and further refined. Figure 3 shows the animation workflow, where, through UE5 blueprints, the animation data (represented by the yellow box) are linked to the Metahuman skeleton (represented by the red box). At this stage, adjustments can be made to the animation through the blueprints, such as the one in Figure 4, allowing modification of aspects such as the movement range.

Figure 3.

UE5 animation editing environment. The animation data are shown in the yellow box, the Metahuman skeleton in the red box, and the detail configuration in the blue box.

Figure 4.

Blueprint that refines the coordinates of the animations of a hand to avoid unrealistic movements.

Additionally, if the inferred points deviate significantly from the correct positions, the animation can be corrected manually. However, this process is time-intensive and may introduce distortions that affect the entire animation, so it is reserved for critical issues only, such as self-intersections, as can be seen in Figure 5.

Figure 5.

Interface for the manual correction of critical animation errors. Colors indicate the side of the body: blue corresponds to the left side, and red to the right side.

3.3. Video Synthesis with Face Swapping

In this section, the process developed to anonymize the identity of the signers in the dataset through face swapping techniques is explained.

Face swapping [43] is a technique that replaces one person’s face in an image or video with another person’s face, using image processing and computer vision tools. This typically involves detecting and mapping facial landmarks in both images, aligning the facial features, and applying blending techniques to ensure seamless integration, while preserving consistency in lighting, textures, and facial expressions.

A major challenge in face swapping is achieving results that are both visually convincing and scalable. To address this, we opted for a near real-time solution using the DeepFaceLive tool [44], built on the DeepFaceLab framework [45]. This tool enables face swapping from a webcam feed or a video using pre-trained facial models from famous actors, and it also allows the training of custom models, which we utilized to incorporate our own faces into the synthesis of new videos. To achieve this swapping, this tool uses a state-of-the-art face detector, then it aligns the face using 2DFAN [46] or PRNet [47] and calculates a similarity transformation matrix through a facial landmark template. Finally, the new face can be swapped using face segmentation [48].
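The alignment step can be illustrated by fitting a 2D similarity transformation (rotation, uniform scale, and translation) between detected landmarks and a landmark template. The sketch below is not DeepFaceLive's code; it is a standard least-squares fit written with complex numbers, and the names `fit_similarity` and `to_matrix` as well as the landmark coordinates are hypothetical.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]


def fit_similarity(src: List[Point2D], dst: List[Point2D]) -> Tuple[complex, complex]:
    """Least-squares fit of dst ~= a * src + b with complex a and b.

    Treating (x, y) as x + iy, multiplication by a = s * exp(i * theta)
    scales by s and rotates by theta, while b is the translation.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two corresponding landmark pairs")
    z = [complex(x, y) for x, y in src]
    w = [complex(x, y) for x, y in dst]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    denominator = sum(abs(zi - z_mean) ** 2 for zi in z)
    if denominator == 0:
        raise ValueError("source landmarks must not all coincide")
    numerator = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    a = numerator / denominator
    b = w_mean - a * z_mean
    return a, b


def to_matrix(a: complex, b: complex) -> List[List[float]]:
    """Return the equivalent 2x3 affine matrix [[sR | t]]."""
    return [[a.real, -a.imag, b.real],
            [a.imag, a.real, b.imag]]


# Hypothetical landmarks: the template is the detected face scaled by 2,
# rotated by 90 degrees, and shifted by (10, 5).
detected = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
template = [(10.0, 5.0), (10.0, 7.0), (8.0, 5.0)]
a, b = fit_similarity(detected, template)
matrix = to_matrix(a, b)
```

Restricting the fit to a similarity transform, rather than a full affine one, keeps the face undistorted because it cannot shear or stretch the image non-uniformly.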

DeepFaceLive provides user-friendly customization options, enabling us to fine-tune the face-swapping process to meet the specific requirements of Sign Language video synthesis. This ensured that the generated results were both accurate and ethically sound.

We performed face swapping using DeepFaceLive with one of the official pretrained models from DeepFaceLab. These models rely on a U-Net–based segmentation network known as TernausNet [48], which uses a VGG11 encoder pretrained on ImageNet for accurate facial region extraction [45]. The pretrained models were originally trained on tens of thousands of images from the FFHQ dataset, which includes a wide variety of facial poses, lighting conditions, and expressions. No additional training or fine-tuning was applied in our case, and we used the models as provided (https://www.deepfakevfx.com/guides/deepfacelab-2-0-guide/, accessed on 3 June 2025).

Given the real-time constraints of our application, we employed the Quick96 model, which offers a favorable balance between performance and computational efficiency. This model is commonly used in DeepFaceLive, due to its low-resolution architecture that is optimized for live inference, making it suitable for generating anonymized face-swapped videos without sacrificing speed.

4. Experiments on Video Synthesis

4.1. Evaluation Metrics

In this section, we detail all metrics that were used to evaluate the anonymization precision of the dataset. The anonymization quality was tested with both objective quality metrics and subjective human evaluations.

4.1.1. Quality Metrics

We next describe the computer-vision-based metrics used to evaluate the quality of anonymization achieved through Face Swapping. The “Learned Perceptual Image Patch Similarity” (LPIPS) [49] and “Frechet Inception Distance” (FID) [50] metrics, widely utilized in the literature for avatar generation [51] and dataset anonymization [14,31,52], were applied. Additionally, we introduced a novel quality metric specifically designed to evaluate the effectiveness of anonymization in Sign Language videos.

The LPIPS metric evaluates the perceptual similarity between images by leveraging features extracted from a pre-trained deep neural network, such as VGG or AlexNet. These networks capture high-level representations that align more closely with human perception of similarities and differences, surpassing simple pixel-to-pixel comparisons. Higher LPIPS values indicate greater perceptual differences between images.

FID is a widely adopted metric for assessing the quality of images generated by models like Generative Adversarial Networks (GANs), making it particularly suitable for evaluating face swapping. FID compares the feature distributions of generated and real images, utilizing features extracted by a pre-trained network, typically Inception v3. This metric calculates the Frechet distance (Wasserstein-2 distance) between two multivariate Gaussian distributions. Lower FID values indicate greater consistency between the generated content and the original videos.
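For intuition, the squared Frechet distance between two Gaussians has the closed form $\|\mu_r - \mu_g\|^2 + \mathrm{Tr}\big(\Sigma_r + \Sigma_g - 2(\Sigma_r \Sigma_g)^{1/2}\big)$, which for one-dimensional features reduces to $(\mu_r - \mu_g)^2 + (\sigma_r - \sigma_g)^2$. The following toy sketch evaluates only this one-dimensional case on made-up feature values; an actual FID computation uses high-dimensional Inception v3 features and full covariance matrices, and the function name `frechet_distance_1d` is hypothetical.

```python
from statistics import fmean, stdev
from typing import Sequence


def frechet_distance_1d(real: Sequence[float], generated: Sequence[float]) -> float:
    """Squared Frechet distance between two 1-D Gaussians fitted to the samples.

    Each sample set is summarized by its mean and (sample) standard deviation.
    """
    mu_r, mu_g = fmean(real), fmean(generated)
    sd_r, sd_g = stdev(real), stdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


# Made-up scalar "features": same spread, mean shifted by 1.
real_features = [0.0, 2.0, 4.0]
generated_features = [1.0, 3.0, 5.0]
shifted = frechet_distance_1d(real_features, generated_features)
identical = frechet_distance_1d(real_features, real_features)
```

The distance is zero only when both the mean and the spread of the two feature sets agree, which is why lower values indicate generated content that is statistically closer to the original.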

LPIPS and FID primarily evaluate the overall visual quality and similarity between the original and generated videos, but they do not measure whether the identity of the original individual has been effectively obscured or guarantee proper anonymization in synthetic videos. To address this gap, we propose a new metric, IAEP (Identity Anonymization with Expressivity Preservation), designed to evaluate changes in apparent identity while preserving facial expressivity. IAEP combines measurements from two embedding spaces: one optimized for face verification, and another for encoding facial expressions.

Face verification models, which have surpassed human performance [53], can provide reliable metrics for measuring anonymization success. However, encoding facial expressions presents a greater challenge. Most off-the-shelf models have been trained to classify basic emotional expressions (e.g., neutral, angry, surprise, fear, happiness, disgust, sadness) [54], whereas facial expressivity in Sign Language conveys subtle grammatical information through movements of the eyebrows, mouth, and head [55]. For such nuanced expressivity, the Facial Action Coding System (FACS) [56] offers a more appropriate framework, as it describes changes in facial muscles through Action Units (AUs).

In this context, AU intensity estimation quantifies the strength of specific facial muscle movements. To implement IAEP, we propose a method that integrates AU-based expressivity measurements with identity verification to assess anonymization success, while ensuring the preservation of crucial facial expressions in Sign Language communication. The following implementation details this approach:

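$$
\mathrm{IAEP} = \frac{1}{|F_o|} \sum_{f \in F_o} \Big[ d\big(FV(f_o), FV(f_s)\big) - d\big(AU(f_o), AU(f_s)\big) \Big]
$$

where

- $F_o$: the set of frames selected in the original video (the swapped frames in $F_s$ are the same),

- $d(FV(f_o), FV(f_s))$: a distance function between the embedding vectors coming out from the Face Verification model ($FV$) corresponding to aligned frames $f_o$ and $f_s$, and

- $d(AU(f_o), AU(f_s))$: the same for the AU intensity embedding vectors.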

Using a normalized metric $d$, such as the Cosine distance from the scipy.spatial.distance module (range [0, 2]), IAEP values fall within the range [−2, 2]. However, the specific range depends on the choice of $d$ and embedding spaces, and, in practice, values typically lie within [0, 1], as demonstrated in the examples below. This range is more interpretable compared to FID and LPIPS. Higher values indicate better anonymization and preservation of facial expressivity, whereas lower values reflect poor anonymization and/or ineffective transfer of expressions to the swapped face. Unlike IAEP, LPIPS and FID primarily measure visual similarity. While small FID and LPIPS values suggest a high visual consistency between original and swapped faces, they do not provide specific insights into changes in identity or expressivity.
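As an illustration, the sketch below computes an IAEP-style score under the assumption that the metric is the mean, over the selected frame pairs, of the identity distance minus the AU distance, which is consistent with the [−2, 2] range stated above. The embeddings are toy two-dimensional vectors rather than real FaceNet or AU outputs, and the cosine distance is written in pure Python (it matches the definition used by `scipy.spatial.distance.cosine`); the function names are hypothetical.

```python
import math
from typing import Sequence


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine distance 1 - cos(u, v), which lies in [0, 2]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_u * norm_v)


def iaep(id_orig, id_swap, au_orig, au_swap) -> float:
    """Mean over frame pairs of (identity distance - AU distance).

    Assumed form: high identity distance (good anonymization) and low
    AU distance (expression preserved) both push the score up.
    """
    n = len(id_orig)
    if n == 0 or not (n == len(id_swap) == len(au_orig) == len(au_swap)):
        raise ValueError("all inputs must contain the same, non-zero number of frames")
    total = 0.0
    for k in range(n):
        total += cosine_distance(id_orig[k], id_swap[k])
        total -= cosine_distance(au_orig[k], au_swap[k])
    return total / n


# Toy embeddings for two aligned frame pairs.
id_orig = [[1.0, 0.0], [1.0, 0.0]]
id_swap = [[0.0, 1.0], [0.0, 1.0]]  # orthogonal identities: distance 1
au_orig = [[0.2, 0.8], [0.5, 0.5]]
au_swap = [[0.2, 0.8], [0.5, 0.5]]  # identical expressions: distance 0
score = iaep(id_orig, id_swap, au_orig, au_swap)
worst = iaep(id_orig, id_orig, au_orig, [[0.8, -0.2], [0.5, -0.5]])
```

In this toy case `score` is close to 1 (identity changed, expression kept), whereas `worst` is close to −1 (identity unchanged, expression lost).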

The Face Verification model used to analyze the original–swapped video pairs was FaceNet [57], which generates a 512-dimensional embedding vector, as implemented in the Deepface Python library [58]. For Action Unit (AU) intensity estimation, we employed the LibreFace Python library [59], which was trained to estimate the intensity of 12 key AUs (1, 2, 4, 5, 6, 9, 12, 15, 17, 20, 25, 26). These AUs represent most of the eyebrow and mouth movements critical for conveying the grammatical nuances essential in Sign Language communication (Visit https://imotions.com/blog/learning/research-fundamentals/facial-action-coding-system/ (accessed on 1 May 2025) for a video description of each AU).

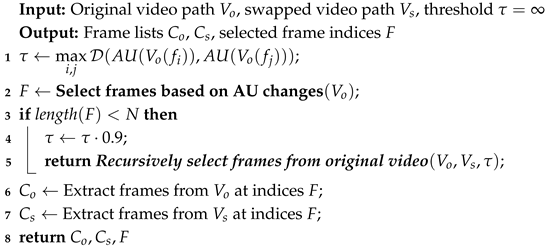

The set of frames selected for calculating IAEP, $F_o$, should capture the variability in expressiveness within the video, as the primary goal is to evaluate both anonymization effectiveness and the preservation of expressive moments. Using all frames for comparison risks averaging out critical changes in expression that occur over a few frames. To address this, we implemented a selection process based on intra-video AU changes, i.e., the distance $d$ between the AU embeddings of frames of the same video, starting with a high threshold and iteratively reducing it until at least N frames are selected for inter-video comparison. This was implemented with a recursive algorithm explained in the following pseudocode (Algorithms 1 and 2):

Algorithm 1: Recursively select frames from original video

Algorithm 2: Select frames based on Action Unit (AU) changes
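A minimal sketch of the selection procedure described above is given next. It is written under our own assumptions and should not be read as the exact pseudocode of Algorithms 1 and 2: a frame is kept when its AU vector differs from the last kept frame by more than the threshold, the Euclidean distance is used so that all-zero (neutral) AU vectors are handled, and the parameters `threshold`, `decay`, and `min_threshold` are hypothetical.

```python
import math
from typing import List, Sequence


def select_by_au_change(au: Sequence[Sequence[float]], threshold: float) -> List[int]:
    """Keep frame 0, then every frame whose AU vector differs from the
    last kept frame by more than `threshold`."""
    if not au:
        return []
    selected = [0]
    for idx in range(1, len(au)):
        if math.dist(au[idx], au[selected[-1]]) > threshold:
            selected.append(idx)
    return selected


def select_frames(au: Sequence[Sequence[float]], n: int, threshold: float = 1.0,
                  decay: float = 0.8, min_threshold: float = 1e-3) -> List[int]:
    """Recursively lower the threshold until at least `n` frames are selected.

    The recursion also stops once the threshold reaches `min_threshold`,
    so videos with fewer than `n` distinct expressions still terminate.
    """
    selected = select_by_au_change(au, threshold)
    if len(selected) >= n or threshold <= min_threshold:
        return selected
    return select_frames(au, n, threshold * decay, decay, min_threshold)


# Hypothetical one-dimensional AU intensities for six frames.
au_track = [[0.0], [0.1], [0.9], [1.0], [0.2], [0.25]]
frames = select_frames(au_track, n=3)  # -> [0, 2, 4]
```

Because the threshold is only lowered as far as needed, the selection favors the frames with the largest expression changes, and more than N frames can be returned when several changes exceed the current threshold.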

We conducted experiments by varying N from 5 to 20 and observed no significant changes within this range. When N was too small, the results tended to be noisy. As N increased beyond 20 and approached the total number of frames in the video, the metric became increasingly dominated by the Face Verification component. This was because more frames with similar and neutral Action Units (AUs) were averaged, which reduced the influence of the Expressivity Preservation term—especially in cases where the swapped face failed to replicate extreme facial expressions. In the experiments presented in Section 5, N was fixed at 5. Note that more frames may be selected if their AU differences exceed the current threshold.

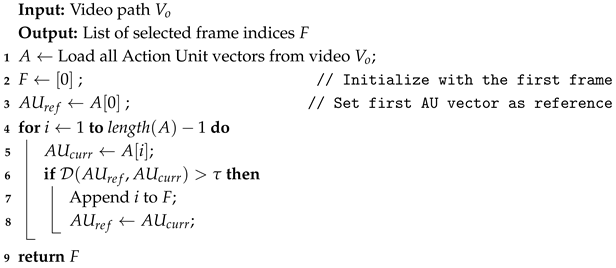

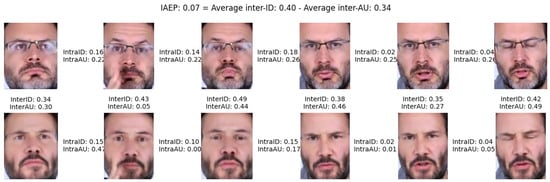

Figure 6 provides a graphical example of IAEP calculation, comparing an original video (top) with its swapped counterpart (bottom). The figure includes intra-ID and intra-AU scores as references for the distances between embeddings from the same video, as well as inter-ID and inter-AU scores for the corresponding selected frames, $F_o$, across the two videos.

Figure 6.

Example of IAEP calculation from an original–swapped video pair.

A detailed analysis of these metrics highlighted several key properties of the IAEP measurement:

- Robustness of ID-Embedding: The largest intra-ID scores provide insights into the robustness of the ID-embedding against changes in expression and pose. Smaller intra-ID values indicate a more robust ID model.

- Anonymization Quality: For a robust ID-embedding, the difference between inter-ID and intra-ID scores quantifies the separability of identities, offering an objective assessment of anonymization effectiveness.

- Robustness of AU-Embedding: inter-AU and intra-AU scores provide insights into the robustness of the AU-embedding to variations in identity and pose. In general, inter-AU scores are expected to be smaller than intra-AU scores.

- Expressivity Transfer: For a robust AU-embedding and effective transfer of expressivity, the distribution of intra-AU scores in the original video should closely match that of the swapped video, and the inter-AU scores should remain minimal.

These properties collectively ensure that IAEP provides a comprehensive evaluation of both identity anonymization and the preservation of expressive nuances.
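The intra- and inter-video scores discussed above can be sketched as follows. We assume here that intra scores are distances between consecutive selected frames of the same video and that inter scores are distances between corresponding frames of the original and swapped videos; the `identity_margin` helper is a hypothetical summary of the separability property, not a quantity defined in this work, and the embeddings are toy vectors.

```python
import math
from typing import List, Sequence


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine distance 1 - cos(u, v), which lies in [0, 2]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_u * norm_v)


def intra_scores(emb: Sequence[Sequence[float]]) -> List[float]:
    """Distances between consecutive selected frames of one video."""
    return [cosine_distance(emb[k], emb[k + 1]) for k in range(len(emb) - 1)]


def inter_scores(orig: Sequence[Sequence[float]], swap: Sequence[Sequence[float]]) -> List[float]:
    """Distances between corresponding frames of the original and swapped videos."""
    return [cosine_distance(o, s) for o, s in zip(orig, swap)]


def identity_margin(orig_id: Sequence[Sequence[float]], swap_id: Sequence[Sequence[float]]) -> float:
    """Smallest inter-ID score minus largest intra-ID score of the original.

    A positive margin means every swapped frame is farther from its original
    than the original's own frames are from each other.
    """
    return min(inter_scores(orig_id, swap_id)) - max(intra_scores(orig_id))


# Toy identity embeddings for two selected frames.
orig_id = [[1.0, 0.0], [0.8, 0.6]]
swap_id = [[0.0, 1.0], [-0.6, 0.8]]
margin = identity_margin(orig_id, swap_id)
```

With these toy values the intra-ID score is about 0.2 and both inter-ID scores are about 1, so the margin is clearly positive.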

The example in Figure 6 illustrates these properties effectively. The ID verification model reveals significant differences between inter-ID and intra-ID scores. While this reflects good separability between identities, a greater invariance to pose and expression changes would be ideal. For instance, values of 0.32 and 0.37 between the first and second selected frames are relatively high for a state-of-the-art verifier like FaceNet-512, which typically uses an ID verification threshold around 0.3.

Similarly, the AU intensity estimation model demonstrated that intra-AU values in the original video are generally higher than in the swapped video. These differences become more pronounced when expressive features, such as raised eyebrows or mouth deformations, are not accurately transferred. As with the ID verification model, greater invariance in AU intensity estimation to pose changes would be beneficial. For example, the low values observed between the 1st and 2nd frames and the 4th and 5th frames in the swapped video are inconsistent with the rest of the values, highlighting areas for improvement in the robustness of AU estimation.

4.1.2. Subjective Evaluation

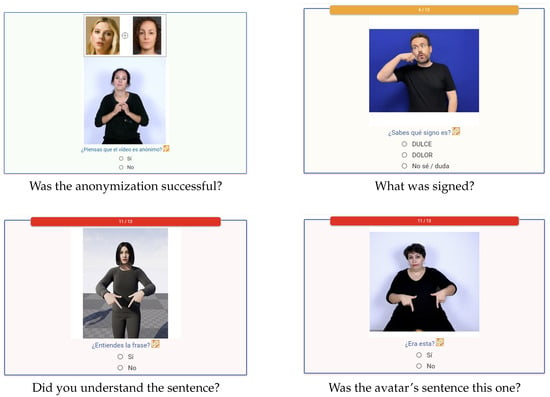

In addition to the quality metrics, we emphasized the importance of incorporating subjective evaluation. To achieve this, we designed a survey aimed at a group of deaf participants, to address three specific scientific questions. The survey was divided into three sections, with participants watching videos of deaf individuals signing particular phrases or words for evaluation.

- Part 1: Anonymization through Face Swapping: The first part aimed to assess whether a face swapping technique successfully anonymized the identity of the signer. Familiarity of the participant with the signing person might bias their response, so we designed two questions. The participants are first asked if they recognize the signer, which helps gauge how familiar they are with the person signing. The second question directly asks whether they believe the face swapping has successfully anonymized the person’s identity.

- Part 2: Preservation of Meaning with Facial Expression: The second part focuses on whether face swapping has preserved the meaning of signs where facial expressions are crucial. Participants watch videos of words that vary in meaning depending on facial expression and are then asked a single question: they must choose the correct meaning from several options.

- Part 3: Comprehensibility of Avatars: In the third part, avatars are used for anonymization instead of face swapping. Here, the goal is to assess whether the avatar can generate an understandable signed sentence. Participants were asked three questions. First, they were asked whether they understood the phrase signed by the avatar. Second, after watching the original video with the phrase, they were asked whether the phrase they had recognized matched the original one. Finally, they were asked what they believed could be improved, specifically whether the manual components of the sign or the facial expressiveness could be enhanced for better clarity.

To design the survey questions, we sought help from our contacts in the deaf community and from interpreters who collaborate with us. We needed to select sentences with sufficient manual and facial expressiveness so that the survey results would be generalizable. For Part 1, we also needed to find famous individuals with enough videos available online to feed the face swapping algorithm and generate videos of volunteer signers for the survey design. In the end, we had five signers—three women and two men—each of whom recorded several different sentences for Part 1. Each of these sentences underwent a face swapping process, generating 30 sentences to present randomly to the respondents. It is important to highlight that the face swapping was carried out while preserving the gender of the signer, to avoid undesirable effects of generating faces with gender ambiguity.

For Part 2, we needed to find signs that had different meanings depending on the facial expression. We recorded seven groups of videos for this section, each containing two or three challenging signs that could only be distinguished by facial expression. All these videos were recorded by the same highly expressive deaf signer, to avoid the bias that would arise if respondents watched signers with different levels of expressiveness. Each of these videos then underwent a face-swapping process, resulting in a final total of 16 videos to be shown randomly.

Finally, for Part 3, we generated 14 sentences with two avatars (one male and one female). All the created videos are available at the previous GitHub repository. The survey is accessible (in Spanish and LSE) at https://signamed.web.app/survey, accessed on 1 May 2025. Each time a respondent entered the survey, a random subset of videos was shown in each part, to prevent fatigue and encourage them to return and complete the survey at another time.
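The per-visit randomization described above can be illustrated with a minimal sketch. The video identifiers and the number of videos shown per part (`VIDEOS_PER_VISIT`) are hypothetical, since only the pool sizes (30, 16, and 14 videos) are reported here; the survey platform's actual implementation may differ.

```python
import random

# Video pools per survey part. Pool sizes follow the counts given in the text;
# the ID strings are hypothetical placeholders.
VIDEO_POOLS = {
    "part1": [f"p1_{i:02d}" for i in range(30)],
    "part2": [f"p2_{i:02d}" for i in range(16)],
    "part3": [f"p3_{i:02d}" for i in range(14)],
}

# Hypothetical number of videos shown per part on each visit.
VIDEOS_PER_VISIT = {"part1": 5, "part2": 4, "part3": 3}


def draw_session(rng):
    """Draw, without replacement, the videos shown in one survey visit."""
    return {
        part: rng.sample(pool, VIDEOS_PER_VISIT[part])
        for part, pool in VIDEO_POOLS.items()
    }


# A seeded generator makes the draw reproducible for this illustration.
session = draw_session(random.Random(2024))
```

Sampling without replacement keeps a respondent from seeing the same video twice within a visit, while a returning respondent is likely to see a different subset.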

Figure 7 displays snapshots of the main survey questions, which are based on video viewing. Please note that the questions are presented in Spanish (text) and also available in Sign Language by clicking on the orange icon.

Figure 7.

Main questions of the Survey.

5. Results

5.1. Quality Metrics Results

All experimentation was carried out on a computer with a 12 GB Nvidia 3080 GPU (Nvidia, Santa Clara, California, USA). As explained in Section 4.1, LPIPS and FID metrics were calculated for all Part 1 (P1) videos anonymized with face swapping.

Studies such as [14,31,52] also employed FID and LPIPS metrics to evaluate anonymized videos. However, they did not provide direct comparisons with other works in the field, instead focusing on demonstrating improvements over their previous methods. Low LPIPS and FID values indicated that the generated images were visually similar to the real ones, suggesting that the generated videos maintained a high degree of visual realism and strong correlation with the original content.

We cropped the facial region in both the original and generated videos, rather than measuring the entire frame. This approach allowed for a more precise comparison, as the face is the only area modified during the face swapping process.
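To make the idea behind FID concrete, the following minimal sketch computes a Fréchet distance between two sets of feature vectors, such as features extracted from the cropped faces of the original and generated frames. It is a simplification and not the computation used in this work: the standard FID uses Inception features and full covariance matrices, whereas this sketch assumes diagonal covariances (and population variances) so that it runs with the standard library only. The function name and the toy feature values are hypothetical.

```python
import math
from statistics import fmean, pvariance


def frechet_distance_diag(real_feats, gen_feats):
    """Frechet distance between two feature sets, each modelled as a
    Gaussian with diagonal covariance (simplifying assumption).

    Per dimension, the distance reduces to
    (mean_r - mean_g)^2 + (std_r - std_g)^2.
    """
    dims = len(real_feats[0])
    total = 0.0
    for d in range(dims):
        real_d = [f[d] for f in real_feats]
        gen_d = [f[d] for f in gen_feats]
        mean_gap = fmean(real_d) - fmean(gen_d)
        std_gap = math.sqrt(pvariance(real_d)) - math.sqrt(pvariance(gen_d))
        total += mean_gap ** 2 + std_gap ** 2
    return total


# Toy 2-D "features" for two frames per video (placeholder values).
original = [[0.0, 1.0], [2.0, 3.0]]
generated = [[3.0, 1.0], [5.0, 3.0]]
distance = frechet_distance_diag(original, generated)
```

Identical feature sets give a distance of zero, and the value grows as the means or spreads of the two sets diverge, which is why lower values are read as closer visual statistics.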

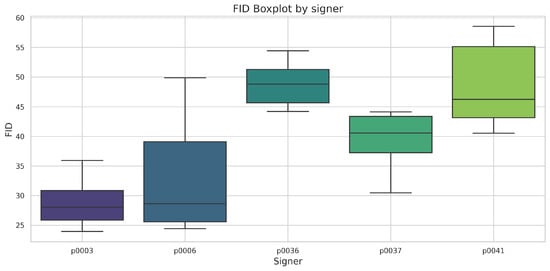

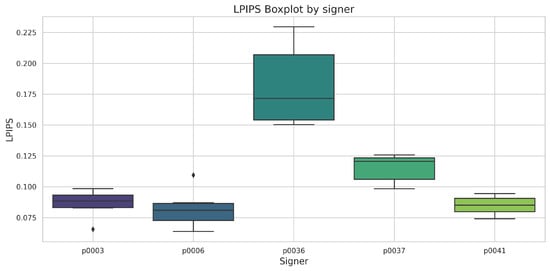

Figure 8 and Figure 9 show the distributions of FID and LPIPS metrics, respectively, grouped by signer. Although both metrics operate in different ranges of values, the distributions obtained showed a similar trend, at least for four out of five signers.

Figure 8.

Distribution of the FID metric per signer.

Figure 9.

Distribution of the LPIPS metric per signer.
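Per-signer distributions such as those in Figures 8 and 9 can be summarized by grouping the per-video metric values by signer. The sketch below is illustrative only: the signer IDs are taken from the text, but the numeric values are placeholders and not results from this study, and the function name is hypothetical.

```python
from collections import defaultdict
from statistics import median, quantiles

# (signer_id, metric value) pairs, one per video.
# The numbers are placeholders, not measured FID or LPIPS values.
records = [
    ("p0003", 21.0), ("p0003", 25.0), ("p0003", 23.0),
    ("p0036", 60.0), ("p0036", 72.0), ("p0036", 66.0),
]


def summarize_by_signer(records):
    """Group metric values by signer and report median and interquartile range."""
    groups = defaultdict(list)
    for signer, value in records:
        groups[signer].append(value)
    summary = {}
    for signer, values in groups.items():
        q1, _, q3 = quantiles(values, n=4)  # quartile cut points
        summary[signer] = {
            "n": len(values),
            "median": median(values),
            "iqr": q3 - q1,
        }
    return summary


summary = summarize_by_signer(records)
```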

Figure 10 shows a sequence of frames from the original video and the synthesized video of signer p0036 with large FID and LPIPS values, while Figure 11 shows a sequence of signer p0006 with lower FID and LPIPS values.

Figure 10.

Visual comparison between sequences of frames from original and generated videos using face swapping for a video with high LPIPS and FID results.

Figure 11.

Visual comparison between sequences of frames from original and generated videos using face swapping for a video with low LPIPS and FID results.

As mentioned before, these two metrics inform us about perceptual similarities. Looking at the example in Figure 11, it seems clear that there are significant image similarities, so we can claim that the face swapping process preserved the general structure and texture of the original images (low FID/LPIPS values). Can we also claim that the identity is obscured?

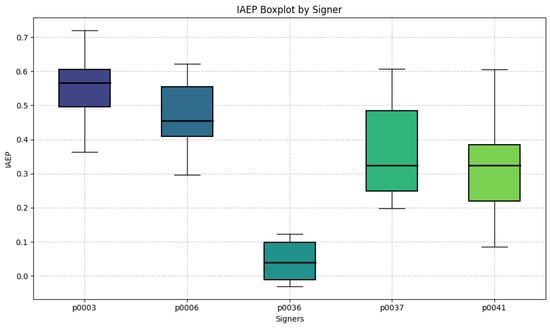

As we presented above, the five signers underwent face swapping with same-gender celebrities. Figure 12 shows the distribution of the IAEP metric for all the videos of each signer. These are exactly the same videos as used for the computation of LPIPS and FID, but using the strategy of frame selection justified in the IAEP definition.

Figure 12.

Distribution of the IAEP metrics per signer.

The distributions per signer are notably inversely related to FID and LPIPS metrics, where high visual consistency (low FID/LPIPS values) corresponds to high IAEPD values, and vice versa. This seemingly contradictory behavior arises from the way these metrics operate. FID and LPIPS rely on generic visual features learned by deep learning models trained on broad datasets, while IAEP utilizes two specialized models: one trained for face verification to be invariant to changes unrelated to identity attributes, and another optimized for Action Unit (AU) intensity estimation to focus on facial muscle changes.

For instance, the video pair of signer p0036, previously shown in Figure 10, exhibited high FID/LPIPS values but low IAEPD values (see Figure 13). These low IAEPD values stemmed from the face verification model, which assigned scores close to the decision threshold of FaceNet-512 for inter-ID pairs. Although the videos appear visually distinct due to features like glasses, reflections, and gray/black beard (high FID/LPIPS values), these attributes are not identity-related, as FaceNet is trained to recognize identities invariant to such attribute differences. However, the expressivity transfer was not fully accurate, as indicated by the inter-AU values being, on average, larger than the intra-AU values.

Figure 13.

Video pair with low IAEP metric, corresponding to a high FID metric.

Conversely, the video pair of signer p0006, shown in Figure 11, displayed low FID/LPIPS values but mid-range IAEPD values (see Figure 14). Although these two videos are visually similar, differences in eye color and the shapes of the eyebrows, nose, and mouth resulted in a relatively high inter-ID score of 0.71. This example highlights the strengths of IAEP in measuring both anonymization and expressivity preservation.

Figure 14.

Video pair with mid IAEP metric corresponding to a low FID metric.
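The identity side of the discussion above rests on comparing face embeddings of the original and swapped faces against a verification threshold. The following sketch illustrates only that comparison and does not reproduce the IAEP definition given earlier in the paper. The use of cosine distance, the function names, the toy 3-D embeddings (FaceNet-512 embeddings would have 512 dimensions), and the threshold value are all assumptions made for illustration.

```python
import math


def cosine_distance(a, b):
    """Cosine distance between two embedding vectors (0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def is_same_identity(emb_original, emb_swapped, threshold):
    """A verifier accepts the pair as the same person when the distance
    does not exceed the decision threshold."""
    return cosine_distance(emb_original, emb_swapped) <= threshold


# Toy embeddings and a hypothetical threshold, for illustration only.
emb_original = [0.9, 0.1, 0.2]
emb_swapped = [0.1, 0.8, 0.5]
anonymized = not is_same_identity(emb_original, emb_swapped, threshold=0.3)
```

Under this reading, a pair whose distance lies well above the threshold is confidently treated as two different people, while a pair close to the threshold is a borderline case, regardless of how different the frames look in terms of glasses, reflections, or beard color.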

5.2. Survey Results

The survey was conducted online using a platform that allowed the participants to watch videos and answer related questions. The survey is still accessible to anyone at the following address: https://signamed.web.app/survey, accessed on 1 May 2025. This platform is already well known within the deaf community, as it has been used for research on Sign Language Recognition technologies and has an associated Instagram channel. To recruit volunteers, a campaign was launched through Instagram, and the survey remained open for responses from 30 July to 13 September 2024. Since participants could leave the survey at any time, it was closed once 100 complete responses, including all three parts, had been collected. Demographic diversity could not be assessed, as anonymous access was granted, and no personal data were collected. Sign language proficiency was specified in the introductory LSE video. There might have been cultural and age-related bias in the participants, as the Instagram channel (with 888 followers) used to promote the campaign is mainly used to inform about the progress of the project.

Table 1 shows the collected interactions during the campaign.

Table 1.

Survey Data Summary.

It is important to note that each time a user starts the survey, the videos they see are randomly selected from a pre-recorded set, so it is normal for users with more interest in the topic to respond to the survey more than once. It was also observed that the first and second parts, related to face swapping, were completed more often than the third part, which was related to avatars. In the following paragraphs, we provide an analysis of the responses to each part and a final discussion.

In the first part of the survey, participants were asked to evaluate the effectiveness of face-swapping technology in anonymizing a person who signs in a video. Question “p01a” assessed whether respondents recognized the signer based on their picture (a known person in the community), while question “p01b” involved the respondents watching a video where the signer’s face was swapped with that of a well-known celebrity. The video showed a blended appearance of the original signer and the celebrity, alongside photos of both individuals, and participants were asked if the identity anonymization was successful.

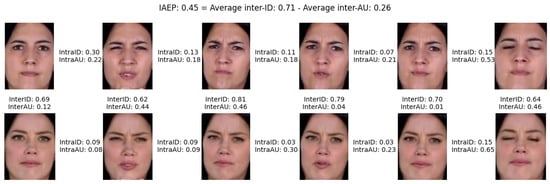

The analysis revealed that 58.1% of the respondents answered “TRUE” (i.e., believed the anonymization was successful) when exposed to the face-swapped video, indicating that the face swap was often effective. However, the results also showed a relationship between the answers to “p01a” and “p01b”: when respondents initially did not recognize the face of the original signer, their perception of successful anonymization was 56.5%. Conversely, if they recognized the signer, they were slightly more likely to perceive the anonymization as successful, with 59.8% responding “TRUE”. This result is summarized in Figure 15.

Figure 15.

Proportions of successful/unsuccessful anonymizations conditioned on the knowledge of the signer.

A statistical significance test was performed to evaluate the potential dependency between the respondents’ recognition of the original signer (“p01a”) and their assessment of successful anonymization (“p01b”). The chi-square test returned a p-value of 0.467, indicating no statistically significant relationship between the two variables at conventional significance levels. This result suggests that respondents’ familiarity with the signer did not significantly influence their perception of the success of the anonymization, implying that the effectiveness of the face-swapping process was independent of prior familiarity with the signer.

However, this finding should be interpreted with caution, as the survey only addressed familiarity with the signer and not the celebrity involved in the face swap. It is possible that familiarity with either of the subjects—signer, celebrity, or both—could have differently influenced responses regarding anonymization success. Further investigation is needed to account for these potential confounding factors.
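For readers who wish to repeat this kind of independence test, a minimal sketch of a Pearson chi-square test on a 2x2 contingency table follows. It uses no continuity correction, so it may differ slightly from library routines that apply one by default. The counts in `example` are hypothetical placeholders and not the survey data, so the resulting p-value is not expected to match the one reported above.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table of counts
    (no continuity correction). Returns (statistic, p_value)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (table[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-square survival function
    # reduces to erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Rows: signer not recognized / recognized (question "p01a").
# Columns: anonymization judged FALSE / TRUE (question "p01b").
# Hypothetical counts, for illustration only.
example = [[87, 113], [80, 120]]
stat, p_value = chi_square_2x2(example)
```

A p-value above the chosen significance level means that the null hypothesis of independence between the two answers cannot be rejected.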

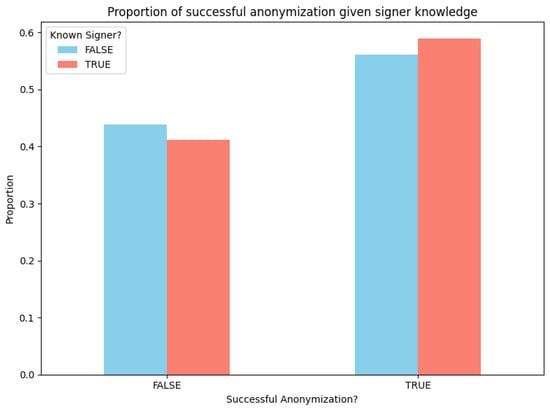

Given the difference in the signer’s FID/LPIPS/IAEP measurements in Figure 8, Figure 9 and Figure 12, we wanted to verify whether this difference was also reflected in the subjective evaluation. Figure 16 shows a pair of stacked bars for each signer, indicating the number of responses with negative/positive anonymization (FALSE/TRUE), conditioned on whether the signer was known or unknown to the participants (red/blue).

Figure 16.

Counts of successful/unsuccessful anonymizations per signer.

Upon closer examination, we observed that participants more frequently perceived signers p0003 and p0006 as correctly anonymized compared to signers p0036, p0037, and p0041. Reviewing the corresponding videos, we found that the pictures used to match the first two signers with their celebrity counterparts depicted them with long hair, whereas in most of the videos, their hair was tied back. This perceptual discrepancy may have influenced the participants’ decisions, leading to a higher correct anonymization rate.

Analyzing the IAEP and FID metrics, these two signers exhibited the largest IAEPD and smallest FID values, while the other three showed the smallest IAEPD and largest FID. This observation might suggest an interesting correlation between IAEP/FID metrics and deaf individuals’ perception of anonymity; however, considering p0036, we remain cautious. The videos of signer p0036 were perceived as correctly anonymized a similar number of times as p0037 and p0041, with all three only slightly better than random choice. FID seemed to somewhat reflect the opinions of deaf individuals, while IAEPD identified more metric differences between signer p0036 and the rest (similarly to LPIPS). In any case, it appeared that additional factors influenced the deaf individuals’ decisions that were not captured by the three tested metrics.

In the second part of the study, participants were tasked with identifying the signed gesture by choosing from three options (question p02): the correct sign, a similar sign differing only in facial expression, or the “I don’t know” option. The analysis indicated that 399 participants (78.4%) selected the correct sign, 86 participants (16.9%) chose the similar sign with a different facial expression, and 18 participants (3.5%) opted for “I don’t know”. These findings suggest that the majority of participants accurately identified the correct sign, even with the option to express uncertainty, implying that the face-swapping process effectively preserved the original signer’s essential facial expressions.

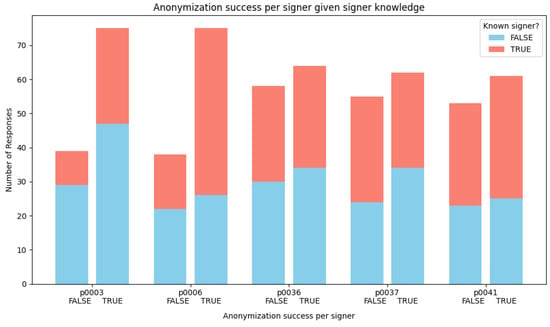

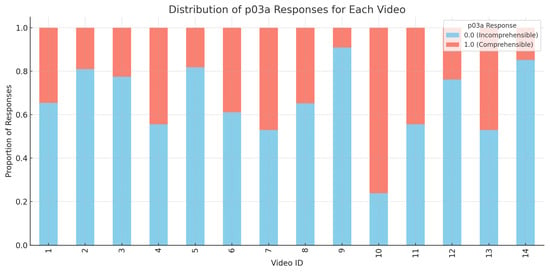

In the third part, participants were asked whether the sentence signed by an avatar was comprehensible (question p03a). The results showed that 66.7% of their responses indicated incomprehension, while 33.3% selected the option labeled “YES” (indicating comprehension). In the next question of this part (p03b), the participants were shown the same sentence signed by a real person and were asked if what they understood from the avatar matched the sentence signed by the deaf contributor. Among the responses of positive comprehension, 80.2% also answered “YES”. This suggests that those who initially comprehended the avatar were also likely to perceive consistency between the avatar’s message and the deaf person’s message. However, was this comprehension ratio evenly distributed for all synthesized videos? Figure 17 displays the ratio of comprehension of the avatar message for each one of the 14 synthesized sentences. Most of the videos (11) ranged between 20 and 50% comprehension, two videos were lower than 10%, and only one had a comprehension of 76.2%. This variation suggests that the comprehensibility of the avatar’s signing was quite low in general and also influenced by factors specific to each video, such as the clarity of the original signing, the quality of the synthesizing process, and the complexity of the sentence being signed.

Figure 17.

Comprehension ratio for each synthesized message (blue: incomprehensible, red: comprehensible).
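The per-video comprehension ratio plotted in Figure 17 is the share of "YES" answers to question p03a among all responses for a given video. A minimal sketch of that tally is shown below; the video identifiers and responses are hypothetical placeholders, not survey data.

```python
from collections import Counter


def comprehension_ratios(responses):
    """responses: iterable of (video_id, understood) pairs, where
    `understood` is True for a "YES" answer to question p03a."""
    shown = Counter()
    understood = Counter()
    for video_id, ok in responses:
        shown[video_id] += 1
        understood[video_id] += int(ok)
    return {vid: understood[vid] / shown[vid] for vid in shown}


# Placeholder responses for two hypothetical avatar videos.
responses = [
    ("s01", True), ("s01", False), ("s01", False),
    ("s02", True), ("s02", True), ("s02", False), ("s02", True),
]
ratios = comprehension_ratios(responses)
```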

As a final question (p03c), we wanted to know the participants’ opinions about which components of Sign Language require more precision for the avatar’s message to be comprehensible. The two answer options for this question were “Hands” and “Facial Expression”. Participants could select either or both options only if they did not understand the avatar message previously. Most of the responses (55%) indicated that both components (hands and facial expressions) needed more precision, followed by hands only (36.5%) and facial expressions only (8.5%).

These poor results in avatar comprehensibility may also explain the higher number of abandoned interactions during the third part of the survey, compared to the first and second parts (300 versus 523). Deaf individuals are generally frustrated by the low quality of avatars for continuous Sign Language, and, as these results reflect, participants struggled to understand the avatar in about two-thirds of the cases, largely due to issues with the fidelity of hand movements.

One of the main causes of the low comprehension rate for avatar-based videos (33.3%, with 66.7% of the responses indicating incomprehension) lies in the technical limitations of the animation process. As explained in the methodology, we used MediaPipe to extract body, face, and hand keypoints, and then generated animations using Unreal Engine and its Metahuman system. However, the mapping between the extracted keypoints and the Metahuman skeleton is not perfect, which results in inaccurate motion transfer for critical components such as eyebrows, eyes, mouth, and head. While manual corrections are technically possible through Unreal’s blueprint system, they are extremely time-consuming, especially when working with continuous videos that involve a high level of facial expressiveness. Therefore, we only applied manual corrections in critical cases, such as evident self-intersections. This structural limitation of the current pipeline largely explains the observed lack of expressive fidelity and, consequently, the low comprehension rate reported by participants.

5.3. Discussion

The results in this section highlight several takeaways that are pivotal for advancing the field:

- Effectiveness of Face Swapping: Face swapping proved to be a viable anonymization technique, achieving decent rates of perceived anonymization among participants and high rates of measured face discriminability. However, certain discrepancies, such as variations in hairstyle, subtle visual inconsistencies, or even questionable understanding of the query about signer–celebrity anonymization, impacted participants’ perceptions, underscoring the need for a greater understanding of anonymity perception.

- Challenges with Avatar-Based Anonymization: The results demonstrate that avatar-based anonymization struggles with comprehensibility, particularly for complex sentences. The survey responses indicate that both hand movements and facial expressions need refinement to meet the high expectations of Sign Language users. The low comprehension rates observed highlight the need for further improvements in avatar synthesis technologies.

- Dataset Scale and Diversity: The current work highlights the need for larger and more diverse datasets for Sign Language anonymization research. In future work, we aim to expand our dataset by defining clear collection targets, e.g., several hundred signers across different age groups, signing styles, and regions. This will require building long-term collaborations with deaf communities and institutions, possibly through partnerships with national federations or Sign Language interpretation networks. Such collaborations would not only increase the scale and demographic variety, but also promote inclusiveness and ethical engagement.

- Metrics for Evaluation: The introduction of the IAEP metric provided an innovative way to assess the balance between anonymization and expressivity preservation. While the metric showed promise, its performance in certain scenarios suggested that more robust or complementary subjective metrics may be needed to capture all relevant aspects of anonymization quality, particularly when the attributes altered by the swap vary across signers.

- User Perception and Practical Usability: This small-scale survey provided valuable insights into how deaf participants perceived the anonymization techniques. The alignment of their perceptions with FID and IAEP metrics for some signers indicates the potential of these tools to reflect user experience. However, variations in the comprehension and anonymization success across different signers suggest that additional contextual or subjective factors influence user perception.

6. Conclusions

This study explored two anonymization approaches for Sign Language videos: realistic avatar synthesis and face swapping, with a focus on balancing privacy concerns and the preservation of communicative content. The proposed IAEP metric offers a novel evaluation framework, addressing the limitations of existing visual similarity metrics like FID and LPIPS by incorporating both identity anonymization and expressivity preservation.

Our results show that face swapping achieved a reasonable level of anonymization while maintaining semantic clarity, as reflected by both the objective quality metrics and subjective evaluations. However, the avatar-based approach struggled with comprehension, particularly in conveying complex expressions critical for Sign Language communication. The survey results from deaf participants provided valuable insights into user perceptions, highlighting the strengths and limitations of each method.

Future work should prioritize refining metrics such as IAEP to enhance their robustness against subtle variations in expression and identity shifts, as well as comparing them with other related metrics in the literature. Additionally, improving avatar synthesis technologies to achieve higher fidelity in hand movements and facial expressions is critical to making this approach more comprehensible. Expanding datasets with diverse signer profiles and a greater number of source faces for swapping is also essential for ensuring the scalability and generalizability of these anonymization techniques. By addressing these challenges, the field can move closer to enabling privacy-preserving Sign Language datasets that maintain their linguistic and communicative integrity.

Author Contributions

Conceptualization, J.L.A.-C., M.V.-E., M.P.-T. and J.A.Á.-G.; methodology, J.L.A.-C. and J.A.Á.-G.; software, M.V.-E., M.P.-T. and J.C.B.-B.; validation, J.L.A.-C., M.V.-E. and M.P.-T.; formal analysis, M.V.-E., J.L.A.-C., M.P.-T. and J.A.Á.-G.; investigation, M.V.-E., J.L.A.-C., M.P.-T., J.C.B.-B. and J.A.Á.-G.; resources, J.L.A.-C. and J.A.Á.-G.; writing—original draft preparation, M.P.-T., J.L.A.-C., M.V.-E. and J.A.Á.-G.; writing—review and editing, M.P.-T., J.L.A.-C., M.V.-E. and J.A.Á.-G.; supervision, J.L.A.-C. and J.A.Á.-G.; project administration, J.L.A.-C. and J.A.Á.-G.; funding acquisition, J.L.A.-C. and J.A.Á.-G. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been partially funded by MCIN/AEI/10.13039/501100011033 through the projects PID2021-123988OB-C32 and PID2021-126359OB-I00, by the “European Union NextGeneration EU/PRTR”, ECSEL framework program (Grant agreement ID: 101007254) through Project ID2PPAC, and by the Xunta de Galicia and ERDF through the Consolidated Strategic Group AtlanTTic (2019–2022). Manuel Vázquez Enríquez was funded by the Spanish Ministry of Science and Innovation through the predoc grant PRE2019-088146.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All the data and code needed to reproduce the experiments in this work can be obtained through the GitHub repository (https://github.com/Deepknowledge-US/TAL-IA/tree/main/Synthetic_video_for_Sign_Language_Anonymization accessed on 20 December 2024).

Acknowledgments

We would like to acknowledge the contributions of the deaf individuals and interpreters who helped to build the dataset, as well as those that answered the online survey. We wish to highlight the contributions of Ania Pérez-Pérez to the design of said survey.

Conflicts of Interest

The authors declare no conflicts of interest and no financial interest in this work.

References

- Bragg, D.; Koller, O.; Caselli, N.; Thies, W. Exploring collection of sign language datasets: Privacy, participation, and model performance. In Proceedings of the 22nd International ACM SIGACCESS Conference on Computers and Accessibility, Virtual, 26–28 October 2020; pp. 1–14. [Google Scholar]

- Rudge, L.A. Analysing British Sign Language Through the Lens of Systemic Functional Linguistics. Ph.D. Thesis, University of the West of England, Bristol, UK, 2018. [Google Scholar]

- Bleicken, J.; Hanke, T.; Salden, U.; Wagner, S. Using a language technology infrastructure for German in order to anonymize German Sign Language corpus data. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), Portorož, Slovenia, 23–28 May 2016; pp. 3303–3306. [Google Scholar]

- Das, S.; Biswas, S.K.; Purkayastha, B. Occlusion robust sign language recognition system for indian sign language using CNN and pose features. Multimed. Tools Appl. 2024, 83, 84141–84160. [Google Scholar] [CrossRef]

- Najib, F.M. A multi-lingual sign language recognition system using machine learning. Multimed. Tools Appl. 2024. [Google Scholar] [CrossRef]

- Liu, Y.; Lu, F.; Cheng, X.; Yuan, Y. Asymmetric multi-branch GCN for skeleton-based sign language recognition. Multimed. Tools Appl. 2024, 83, 75293–75319. [Google Scholar] [CrossRef]

- Perea-Trigo, M.; López-Ortiz, E.J.; Soria-Morillo, L.M.; Álvarez-García, J.A.; Vegas-Olmos, J. Impact of Face Swapping and Data Augmentation on Sign Language Recognition; Springer Nature: Berlin, Germany, 2024. [Google Scholar] [CrossRef]

- Dhanjal, A.S.; Singh, W. An optimized machine translation technique for multi-lingual speech to sign language notation. Multimed. Tools Appl. 2022, 81, 24099–24117. [Google Scholar] [CrossRef]

- Xu, W.; Ying, J.; Yang, H.; Liu, J.; Hu, X. Residual spatial graph convolution and temporal sequence attention network for sign language translation. Multimed. Tools Appl. 2023, 82, 23483–23507. [Google Scholar] [CrossRef]

- Lee, S.; Glasser, A.; Dingman, B.; Xia, Z.; Metaxas, D.; Neidle, C.; Huenerfauth, M. American sign language video anonymization to support online participation of deaf and hard of hearing users. In Proceedings of the 23rd International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’21), Association for Computing Machinery, New York, NY, USA, 22–25 October 2023; pp. 1–13. [Google Scholar]

- Mack, K.; Bragg, D.; Morris, M.R.; Bos, M.W.; Albi, I.; Monroy-Hernández, A. Social App Accessibility for Deaf Signers. Proc. ACM Hum.-Comput. Interact. 2020, 4, 125. [Google Scholar] [CrossRef]

- Yeratziotis, A.; Achilleos, A.; Koumou, S.; Zampas, G.; Thibodeau, R.A.; Geratziotis, G.; Papadopoulos, G.A.; Kronis, C. Making social media applications inclusive for deaf end-users with access to sign language. Multimed. Tools Appl. 2023, 82, 46185–46215. [Google Scholar] [CrossRef]

- Isard, A. Approaches to the anonymisation of sign language corpora. In Proceedings of the LREC2020 9th Workshop on the Representation and Processing of Sign Languages: Sign Language Resources in the Service of the Language Community, Technological Challenges And Application Perspectives; European Language Resources Association (ELRA): Marseille, France, 2020; pp. 95–100. [Google Scholar]

- Xia, Z.; Chen, Y.; Zhangli, Q.; Huenerfauth, M.; Neidle, C.; Metaxas, D. Sign language video anonymization. In Proceedings of the LREC2022 10th Workshop on the Representation and Processing of Sign Languages: Multilingual Sign Language Resources, Marseille, France, 25 June 2022; pp. 202–211. [Google Scholar]

- Battisti, A.; van den Bold, E.; Göhring, A.; Holzknecht, F.; Ebling, S. Person Identification from Pose Estimates in Sign Language. In Proceedings of the LREC-COLING 2024 11th Workshop on the Representation and Processing of Sign Languages: Evaluation of Sign Language Resources, Torino, Italy, 25 May 2024; pp. 13–25. [Google Scholar]

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186. [Google Scholar] [CrossRef]

- Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. Mediapipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172. [Google Scholar]

- Rastgoo, R.; Kiani, K.; Escalera, S.; Sabokrou, M. Sign Language Production: A Review. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; pp. 3446–3456. [Google Scholar]

- Tze, C.O.; Filntisis, P.P.; Roussos, A.; Maragos, P. Cartoonized Anonymization of Sign Language Videos. In Proceedings of the 2022 IEEE 14th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP), Nafplio, Greece, 26–29 June 2022; pp. 1–5. [Google Scholar]

- Heloir, A.; Nunnari, F. Toward an intuitive sign language animation authoring system for the deaf. Univers. Access Inf. Soc. 2016, 15, 513–523. [Google Scholar] [CrossRef]

- McDonald, J. Considerations on generating facial nonmanual signals on signing avatars. Univers. Access Inf. Soc. 2024, 24, 19–36. [Google Scholar] [CrossRef]

- Krishna, S.; P, V.V.; J, D.B. SignPose: Sign language animation through 3D pose lifting. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2640–2649. [Google Scholar]

- Pavlakos, G.; Choutas, V.; Ghorbani, N.; Bolkart, T.; Osman, A.A.; Tzionas, D.; Black, M.J. Expressive body capture: 3d hands, face, and body from a single image. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10967–10977. [Google Scholar]

- Rong, Y.; Shiratori, T.; Joo, H. Frankmocap: Fast monocular 3d hand and body motion capture by regression and integration. arXiv 2020, arXiv:2008.08324. [Google Scholar]

- Choutas, V.; Pavlakos, G.; Bolkart, T.; Tzionas, D.; Black, M.J. Monocular expressive body regression through body-driven attention. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part X 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 20–40. [Google Scholar]

- Forte, M.P.; Kulits, P.; Huang, C.H.P.; Choutas, V.; Tzionas, D.; Kuchenbecker, K.J.; Black, M.J. Reconstructing Signing Avatars from Video Using Linguistic Priors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 12791–12801. [Google Scholar]

- Baltatzis, V.; Potamias, R.A.; Ververas, E.; Sun, G.; Deng, J.; Zafeiriou, S. Neural Sign Actors: A diffusion model for 3D sign language production from text. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 1985–1995. [Google Scholar]

- Stoll, S.; Camgoz, N.C.; Hadfield, S.; Bowden, R. Text2Sign: Towards sign language production using neural machine translation and generative adversarial networks. Int. J. Comput. Vis. 2020, 128, 891–908. [Google Scholar] [CrossRef]

- Saunders, B.; Camgoz, N.C.; Bowden, R. Progressive transformers for end-to-end sign language production. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 687–705. [Google Scholar]

- Zelinka, J.; Kanis, J. Neural sign language synthesis: Words are our glosses. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 3384–3392. [Google Scholar]

- Saunders, B.; Camgoz, N.C.; Bowden, R. Anonysign: Novel human appearance synthesis for sign language video anonymisation. In Proceedings of the 2021 16th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2021), Jodhpur, India, 15–18 December 2021; pp. 1–8. [Google Scholar]

- Kingma, D.P. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114. [Google Scholar]

- Saunders, B. Photo-Realistic Sign Language Production. Ph.D. Thesis, University of Surrey, Guildford, UK, 2024. [Google Scholar] [CrossRef]

- Saunders, B.; Camgoz, N.C.; Bowden, R. Everybody sign now: Translating spoken language to photo realistic sign language video. arXiv 2020, arXiv:2011.09846. [Google Scholar]

- Bishop, C.M. Mixture Density Networks; Aston University: Birmingham, UK, 1994. [Google Scholar]

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 10674–10685. [Google Scholar]

- Xia, Z.; Neidle, C.; Metaxas, D.N. DiffSLVA: Harnessing Diffusion Models for Sign Language Video Anonymization. arXiv 2023, arXiv:2311.16060. [Google Scholar]

- Xia, Z.; Zhou, Y.; Han, L.; Neidle, C.; Metaxas, D.N. Diffusion models for Sign Language video anonymization. In Proceedings of the LREC-COLING 2024 11th Workshop on the Representation and Processing of Sign Languages: Evaluation of Sign Language Resources, Torino, Italia, 25 May 2024; pp. 395–407. [Google Scholar]

- Zhang, L.; Rao, A.; Agrawala, M. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 3813–3824.

- Tze, C.O.; Filntisis, P.P.; Dimou, A.L.; Roussos, A.; Maragos, P. Neural sign reenactor: Deep photorealistic sign language retargeting. arXiv 2022, arXiv:2209.01470.

- Docío-Fernández, L.; Alba-Castro, J.L.; Torres-Guijarro, S.; Rodríguez-Banga, E.; Rey-Area, M.; Pérez-Pérez, A.; Rico-Alonso, S.; García-Mateo, C. LSE_UVIGO: A Multi-source Database for Spanish Sign Language Recognition. In Proceedings of the LREC2020 9th Workshop on the Representation and Processing of Sign Languages: Sign Language Resources in the Service of the Language Community, Technological Challenges and Application Perspectives; European Language Resources Association (ELRA): Marseille, France, 2020; pp. 45–52.

- DollarsMocap. 2024. Available online: https://www.dollarsmocap.com/ (accessed on 20 May 2024).

- Bitouk, D.; Kumar, N.; Dhillon, S.; Belhumeur, P.; Nayar, S.K. Face swapping: Automatically replacing faces in photographs. ACM Trans. Graph. 2008, 27, 1–8.

- DeepFaceLive. 2023. Available online: https://github.com/iperov/DeepFaceLive (accessed on 15 May 2024).

- Perov, I.; Gao, D.; Chervoniy, N.; Liu, K.; Marangonda, S.; Umé, C.; Dpfks, M.; Facenheim, C.S.; RP, L.; Jiang, J.; et al. DeepFaceLab: Integrated, flexible and extensible face-swapping framework. arXiv 2020, arXiv:2005.05535.

- Bulat, A.; Tzimiropoulos, G. How far are we from solving the 2d & 3d face alignment problem? (And a dataset of 230,000 3d facial landmarks). In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1021–1030.

- Feng, Y.; Wu, F.; Shao, X.; Wang, Y.; Zhou, X. Joint 3d face reconstruction and dense alignment with position map regression network. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8–14 September 2018; Proceedings, Part XIV. Springer: Berlin/Heidelberg, Germany, 2018; pp. 557–574.

- Iglovikov, V.; Shvets, A. Ternausnet: U-net with vgg11 encoder pre-trained on imagenet for image segmentation. arXiv 2018, arXiv:1801.05746.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

- Nunn, E.J.; Khadivi, P.; Samavi, S. Compound frechet inception distance for quality assessment of gan created images. arXiv 2021, arXiv:2106.08575.

- Dong, L.; Chaudhary, L.; Xu, F.; Wang, X.; Lary, M.; Nwogu, I. SignAvatar: Sign Language 3D Motion Reconstruction and Generation. arXiv 2024, arXiv:2405.07974.

- Lakhal, M.I.; Bowden, R. Diversity-Aware Sign Language Production through a Pose Encoding Variational Autoencoder. arXiv 2024, arXiv:2405.10423.

- Taigman, Y.; Yang, M.; Ranzato, M.; Wolf, L. DeepFace: Closing the Gap to Human-Level Performance in Face Verification. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708.

- Li, S.; Deng, W. Deep Facial Expression Recognition: A Survey. IEEE Trans. Affect. Comput. 2022, 13, 1195–1215.

- Porta-Lorenzo, M.; Vázquez-Enríquez, M.; Pérez-Pérez, A.; Alba-Castro, J.L.; Docío-Fernández, L. Facial Motion Analysis beyond Emotional Expressions. Sensors 2022, 22, 3839.

- Ekman, P.; Friesen, W. Manual for the Facial Action Code; Consulting Psychologist Press: Palo Alto, CA, USA, 1978.

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Serengil, S.; Ozpinar, A. A Benchmark of Facial Recognition Pipelines and Co-Usability Performances of Modules. J. Inf. Technol. 2024, 17, 95–107.

- Chang, D.; Yin, Y.; Li, Z.; Tran, M.; Soleymani, M. LibreFace: An Open-Source Toolkit for Deep Facial Expression Analysis. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 1–6 January 2024; pp. 8190–8200.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).