An Empirical Study on Pointing Gestures Used in Communication in Household Settings

Abstract

1. Introduction

2. A Review of Gesture Research

- Iconic gestures: depict concrete objects or actions (e.g., shaping a “tree” with the hands).

- Metaphoric gestures: represent abstract ideas (e.g., an upward motion for “progress”).

- Deictic gestures: point to objects, people, or locations.

- Beat gestures: rhythmically accompany speech but carry no specific meaning.

- Ubiquitous in daily interaction: people point into empty space to indicate referents.

- Uniquely human: like language, pointing is exclusive to our species.

- Primordial in ontogeny: one of children’s first communicative acts.

- Iconic: it can depict movement trajectories, not just direction.

3. Study Design

3.1. Participants

3.2. Procedure

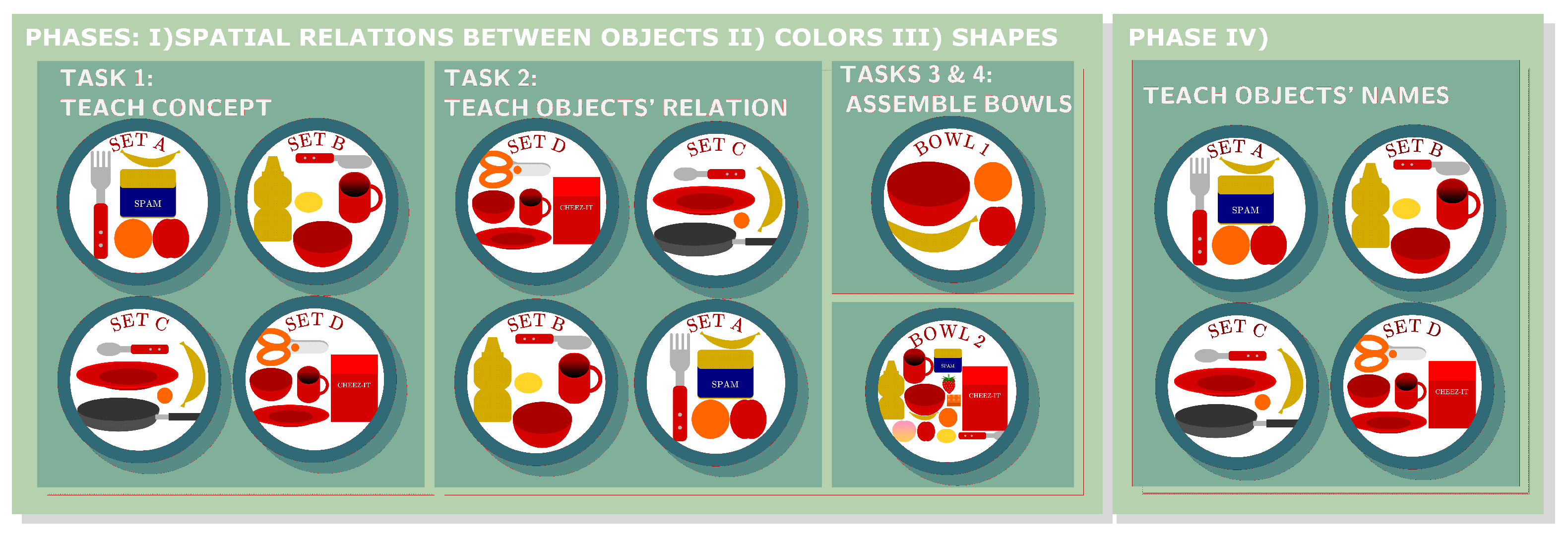

3.3. Tasks

- Tasks 1 and 2: Communicating Features and Relations: these two tasks were repeated over four sets, with different objects used each time. There were always five objects on the table. In the first task of each set, the participants communicated the specific characteristics of the objects (colors, sizes, shapes, names) to the assistant. In the second task, they taught the relationships between these features (the same color, the same size, the same shape).

- Tasks 3 and 4: Goal-Oriented Instructions: the participants guided the assistant in preparing a bowl of fruit using the concepts previously taught. They combined verbal instructions with gestures to identify each fruit and have it placed in the bowl. The participants were expected to point first at the fruit and then at the bowl while guiding the assistant verbally by saying, for example, “Put this round object in this concave object”. Tasks 3 and 4 differed only in the number of objects on the table. The third task included seven objects with minimal ambiguities. The fourth task was more complex: the table held thirteen objects, comprising both fruits and other objects.

3.4. Data Collection

4. Analysis and Results

4.1. Computational Analysis

4.1.1. Data Processing

- Forward-filling missing values: any missing data points were filled by copying the last observed non-missing value forward.

- Filtering: a low-pass Chebyshev Type I filter was applied with:

  - Cutoff frequency: 4 Hz

  - Sampling rate: 30 Hz

  - Order: 4

- Gesture extraction: individual gestures were extracted by segmenting the dataset. The criterion was a significant increase in the wrist-to-hip distance, with the threshold defined as the 55th percentile of the distance distribution in a given segment. Gestures shorter than the minimum length of seven frames were discarded.
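The forward-filling and gesture-extraction steps listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`forward_fill`, `percentile`, `extract_gestures`) are hypothetical, the percentile uses linear interpolation between ranks, and a gesture is taken to be a run of consecutive frames whose wrist-to-hip distance exceeds the 55th-percentile threshold. The low-pass filtering step is left out because the passband ripple of the Chebyshev Type I filter is not stated in the text.

```python
import math
from typing import List, Optional, Tuple


def forward_fill(values: List[Optional[float]]) -> List[Optional[float]]:
    """Replace each missing value (None) with the last observed value."""
    filled: List[Optional[float]] = []
    last: Optional[float] = None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)  # leading gaps stay None: nothing to copy yet
    return filled


def percentile(values: List[float], q: float) -> float:
    """q-th percentile (0-100), linear interpolation between ranks (assumption)."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def extract_gestures(
    distance: List[float], q: float = 55.0, min_frames: int = 7
) -> List[Tuple[int, int]]:
    """Return (start, end) frame indices (end exclusive) of runs above threshold.

    Assumption: a gesture is a run of consecutive frames whose wrist-to-hip
    distance exceeds the q-th percentile; shorter runs are discarded.
    """
    threshold = percentile(distance, q)
    gestures: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, d in enumerate(distance):
        if d > threshold and start is None:
            start = i  # run begins
        elif d <= threshold and start is not None:
            if i - start >= min_frames:
                gestures.append((start, i))
            start = None  # run ends
    if start is not None and len(distance) - start >= min_frames:
        gestures.append((start, len(distance)))  # run reaches the last frame
    return gestures


if __name__ == "__main__":
    # Synthetic distances: one 10-frame raise and one 3-frame raise.
    demo = [0.1] * 20 + [0.6] * 10 + [0.1] * 5 + [0.6] * 3 + [0.1] * 2
    print(extract_gestures(demo))  # [(20, 30)]
```

In the synthetic example, the three-frame raise is dropped by the seven-frame minimum, which is the role that parameter plays in suppressing brief, noisy excursions.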

4.1.2. Results

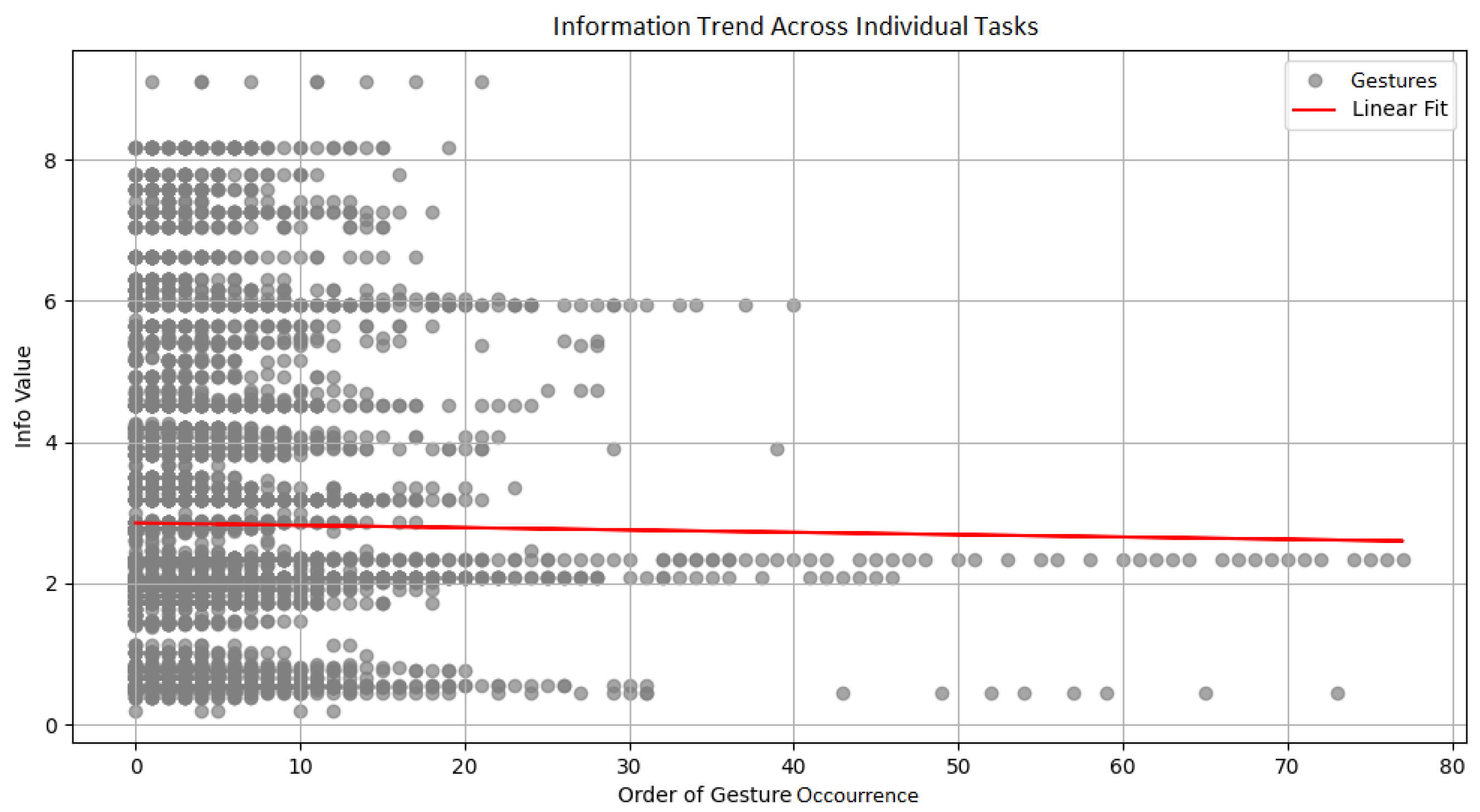

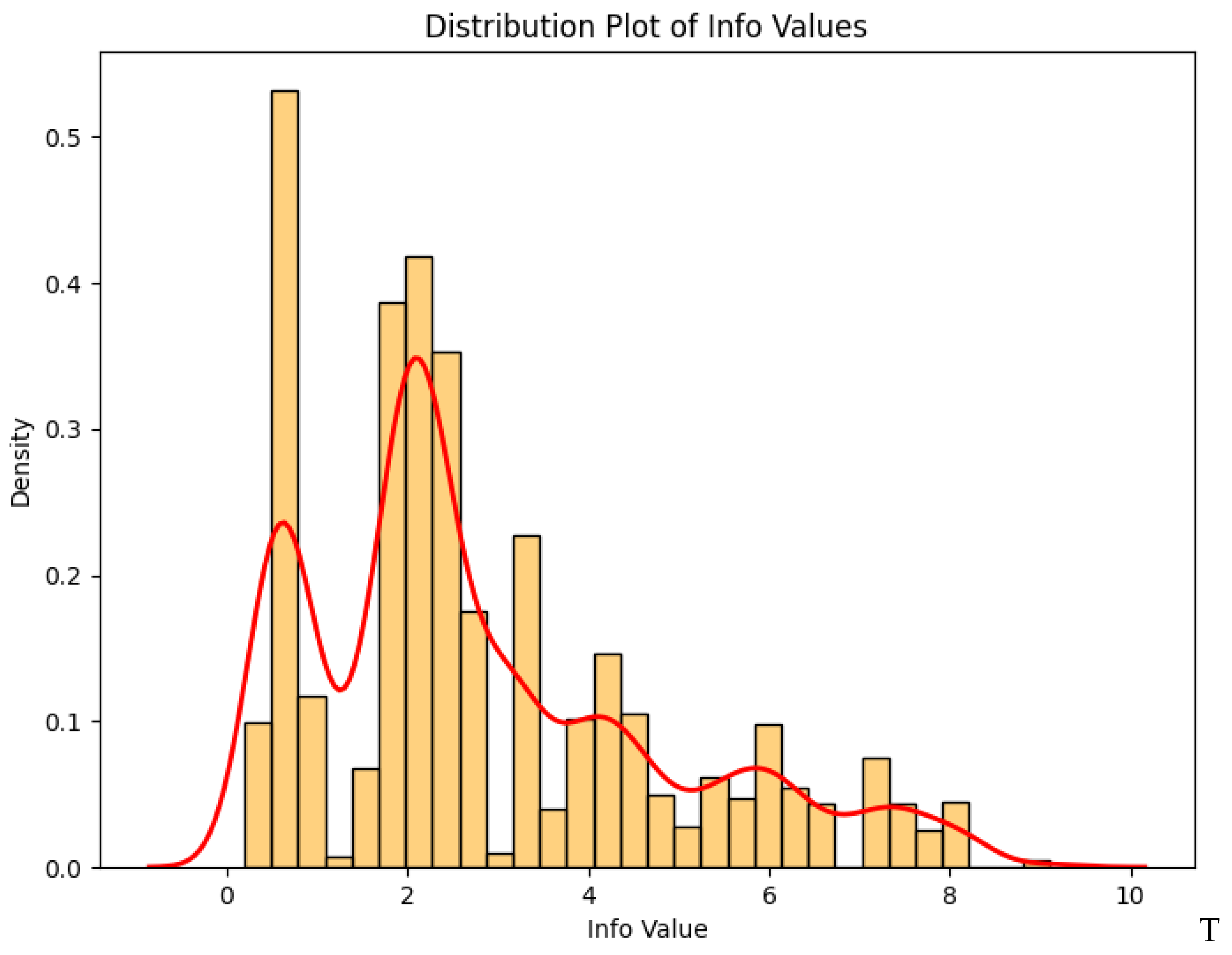

Distributional Information

Observations Based on the Analysis

- Spearman correlation: 0.006 (p = 0.6)

- Summary: no other relevant metrics reached statistical significance. Taken individually, 14 out of 26 subjects showed a negative Spearman correlation.

- Spearman correlation: 0.039 (p = 0.003)

- Summary: no statistical significance was reached in other relevant metrics. Taken individually, 13 out of 26 subjects showed a negative Spearman correlation.
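For readers who want to reproduce this kind of statistic, the sketch below computes a Spearman rank correlation in plain Python and counts how many subjects have a negative coefficient. It is illustrative only: the function names and the per-subject data layout are assumptions, tied values receive the mean of their ranks, and no p-value is computed.

```python
from typing import Dict, List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the block of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / (vx * vy) ** 0.5


def count_negative(per_subject: Dict[str, Tuple[Sequence[float], Sequence[float]]]) -> int:
    """Number of subjects whose own Spearman coefficient is below zero."""
    return sum(1 for x, y in per_subject.values() if spearman(x, y) < 0)
```

Computing the coefficient per subject as well as on the pooled data, as in the summaries above, helps show whether a weak pooled correlation reflects a consistent trend or a mix of opposing individual tendencies.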

4.1.3. Discussion

4.2. Manual Annotation Using MAXQDA Software

4.2.1. Coding Procedure

- Layered coding: videos were annotated in layers, starting with general body language and progressing to specific gestures.

- Gesture boundaries: gestures were marked from the onset of movement to completion.

- Comprehensive annotation: all involved body parts were annotated (e.g., fingers, gaze, head).

- Temporal coding: the full gesture duration was marked, including overlapping gestures.

- Repetition or pausing: these were annotated with reference to the specific part or full gesture.

- Hand switching: coders noted when participants switched hands during a gesture.

4.2.2. MAXQDA Analysis Results and Discussion

Frequency of Code Occurrence

Co-Occurrence of Codes

1. Co-occurrence of eyes/gaze gesture in all codes (intersection of codes in a segment)

2. Co-occurrence of pointing gestures at the same time

3. Co-occurrence of body language within a 1-second window

4. Co-occurrence of beats and iconic/metaphoric gestures (excluding pointing) within a 1-second window
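The two notions of co-occurrence used in this list (codes that intersect in time, and codes that fall within a 1-second window of each other) can be illustrated with a short sketch. It is not MAXQDA's algorithm: the `Segment` type, the function names, and the rule that only pairs of different codes are counted are assumptions made for the example.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, NamedTuple, Optional


class Segment(NamedTuple):
    code: str     # annotation code, e.g. "gaze"
    start: float  # seconds
    end: float    # seconds


def separation(a: Segment, b: Segment) -> float:
    """Gap between two segments in seconds; negative when they overlap."""
    return max(a.start, b.start) - min(a.end, b.end)


def co_occurrences(segments: Iterable[Segment], window: Optional[float] = None) -> Counter:
    """Count pairs of different codes that co-occur.

    window=None counts only segments that intersect in time;
    window=w also counts segments separated by at most w seconds.
    """
    counts: Counter = Counter()
    for a, b in combinations(list(segments), 2):
        if a.code == b.code:
            continue  # assumption: only pairs of different codes are counted
        gap = separation(a, b)
        if gap < 0 or (window is not None and gap <= window):
            counts[tuple(sorted((a.code, b.code)))] += 1
    return counts


if __name__ == "__main__":
    annotated = [
        Segment("gaze", 0.0, 5.0),
        Segment("index_finger", 1.0, 2.0),
        Segment("beat", 2.5, 3.0),
        Segment("nodding", 3.8, 4.2),
    ]
    print(co_occurrences(annotated))              # intersections only
    print(co_occurrences(annotated, window=1.0))  # adds near-in-time pairs
```

With the sample annotations, the gaze segment intersects every other code, while the beat is paired with the index-finger point and the nod only when the 1-second window is applied.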

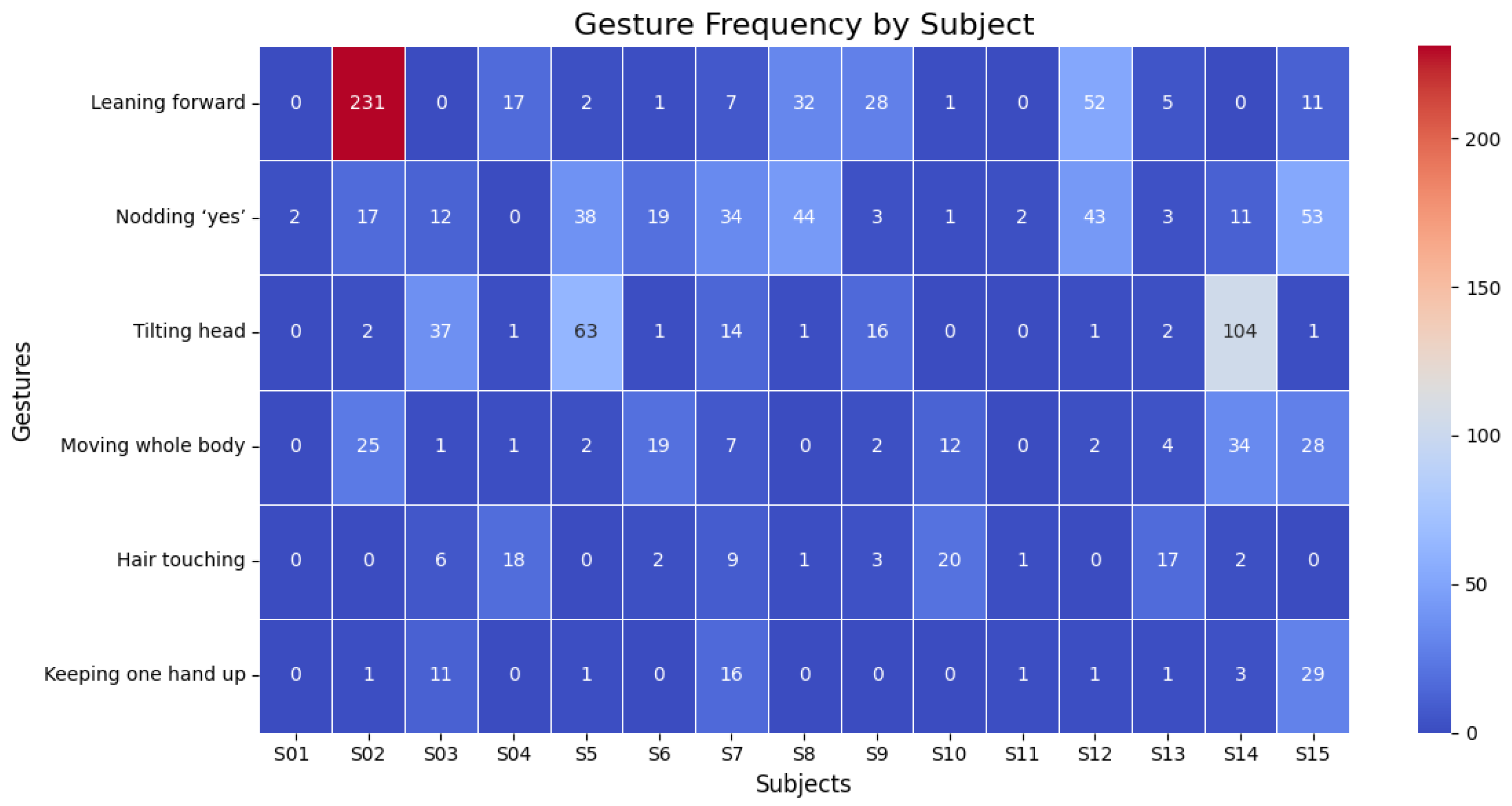

Individual Differences in Gesture Use

Total Number of Pointing Gestures

Gestures Pointing at One vs. Multiple Objects

Hand Preference in Pointing

Method of Pointing

Gesture Pauses and Repetitions

Most Common Additional Gestures

4.2.3. Discussion

5. Conclusions

- Generalized backbone: captures the bulk of user commands with minimal calibration.

- Adaptive personalization module: actively learns each user’s unique gesture “signature” during early interactions and adjusts recognition thresholds (e.g., minimum motion magnitude, finger-extension angles) to preserve accuracy over time.
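One way to picture the two components is a shared default threshold that is gradually replaced by a per-user value. The sketch below is purely hypothetical: the class name, the default values, and the exponential-moving-average update rule are assumptions chosen for illustration, and only a single threshold (minimum motion magnitude) is adapted.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class AdaptiveThreshold:
    """Per-user minimum motion magnitude, starting from a shared default."""

    default: float = 0.30  # hypothetical population-level threshold (backbone)
    rate: float = 0.2      # hypothetical learning rate of the moving average
    margin: float = 0.8    # accept motions down to 80% of the user's typical size
    per_user: Dict[str, float] = field(default_factory=dict)

    def threshold(self, user: str) -> float:
        """Personal threshold if one has been learned, else the default."""
        return self.per_user.get(user, self.default)

    def is_gesture(self, user: str, magnitude: float) -> bool:
        """Accept a motion when it reaches the user's current threshold."""
        return magnitude >= self.threshold(user)

    def update(self, user: str, confirmed_magnitude: float) -> None:
        """Move the user's threshold toward a fraction of a confirmed gesture."""
        target = self.margin * confirmed_magnitude
        current = self.threshold(user)
        self.per_user[user] = current + self.rate * (target - current)


if __name__ == "__main__":
    model = AdaptiveThreshold()
    print(model.is_gesture("S01", 0.29))  # below the shared default
    model.update("S01", 0.25)             # user confirms a small gesture
    print(model.is_gesture("S01", 0.29))  # personal threshold has dropped
```

A slow learning rate keeps one unusual gesture from shifting the threshold abruptly, while users who have not yet interacted with the system continue to be served by the shared default.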

6. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Set | A | B | C | D | Bowl 1 | Bowl 2 |
|---|---|---|---|---|---|---|
| Objects | Fork, SPAM can, small banana, orange, apple | Mustard bottle, knife, lemon, mug, bowl | Spoon, plate, small orange, banana, frying pan | Scissors, bowl, mug, plate, Cheez-it box | Bowl, orange, apple, banana | Bowl, mug, mustard bottle, peach, apple, small banana, orange, lemon, block piece, strawberry, SPAM can, Cheez-it box and spoon |

| Task | Trials | Communication Type |
|---|---|---|
| Feature recognition | 4 | Declarative |
| Feature relations | 4 | Declarative |
| Performing a simple task | 1 | Imperative |
| Performing a complex task | 1 | Imperative |

| Metric | Mean | Median | Range | Variance | SD | IQR |
|---|---|---|---|---|---|---|
| Information value | 2.8369 | 2.3293 | 8.9146 | 3.8911 | 1.9726 | 2.2073 |

| Metric | Total | Avg/Subject | Min | Max |
|---|---|---|---|---|
| Gesture count | 5699 | 219 | 38 | 552 |

| Predictor | Correlation | p-Value |
|---|---|---|
| Gender (1 = female) | 0.3974 | |
| Education (1 = higher) | −0.3717 | |
| Social Skills Inventory | 0.1222 | |
| Age | −0.5723 | |

| Category | Dimension/Subcategory | Codes |
|---|---|---|
| Pointing | Number of objects | One object; two objects; more than two objects |
| Pointing | Sequence of actions | Simultaneous at many objects; sequential; single |
| Pointing | Number of hands used | One hand; both hands |
| Pointing | Part of the body | Index finger; whole hand; other finger; head; eyes/gaze |
| Pointing | Other | Repetitive pointing; pausing/freezing |
| Beats | – | One-hand beat; two-hands beat; head beat movements |
| Iconic/metaphoric | – | One hand; two hands |
| Body language | – | Tilting head; two hands up; playing with hands; one hand up/on chest; walking; leaning forward; moving whole body; nose rubbing; finger to lip; forehead rubbing; hand to cheek; temple touching; chin rubbing; adjusting glasses; crossing arms; hair touching; clothes touching; ear rubbing |
| Approval/disagreement | – | Nodding ‘yes’; Nodding ‘no’; showing ‘yes’ with hand(s); showing ‘no’ with hand(s); thumb ‘OK’ |

| Code | Frequency | Percentage |
|---|---|---|
| Eyes/gaze | 467 | 98.11% |
| One hand | 448 | 94.12% |
| One object | 298 | 62.61% |
| Point at one object | 294 | 61.76% |
| Index finger | 294 | 61.76% |
| One after the other | 272 | 57.14% |
| Whole hand | 232 | 48.74% |
| Point at two objects | 227 | 47.69% |
| Nodding “yes” | 181 | 38.03% |
| Pausing/freezing the gesture | 158 | 33.09% |
| Two hands iconic/metaphoric | 13 | 2.73% |
| Finger to lip | 9 | 1.89% |
| One hand iconic/metaphoric | 8 | 1.68% |
| Showing “yes” with hands | 5 | 1.05% |
| Hand on cheek | 4 | 0.84% |
| Clothes touching | 3 | 0.63% |
| Showing “no” with hands | 3 | 0.63% |
| Ear rubbing | 2 | 0.42% |
| Forehead rubbing | 1 | 0.21% |

| Metrics | S01 | S02 | S03 | S04 | S05 | S06 | S07 | S08 | S09 | S10 | S11 | S12 | S13 | S14 | S15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Pointing gestures in total | 111 | 312 | 153 | 171 | 127 | 144 | 142 | 138 | 63 | 277 | 40 | 140 | 152 | 157 | 221 |
| Pointed at one object (%) | 64 | 61 | 66 | 50 | 59 | 58 | 63 | 46 | 3 | 43 | 58 | 56 | 59 | 54 | 62 |
| Pointed at two objects (%) | 34 | 26 | 27 | 45 | 37 | 35 | 24 | 42 | 44 | 50 | 35 | 41 | 39 | 44 | 23 |
| Pointed at more objects (%) | 2 | 13 | 7 | 5 | 4 | 7 | 13 | 12 | 53 | 7 | 7 | 3 | 2 | 2 | 15 |
| Hands used to point | 100% one hand | 86% both hands | 100% one hand | 100% one hand | 100% one hand | 97% one hand | 83% one hand | 99% one hand | 94% one hand | 95% one hand | 100% one hand | 100% one hand | 97% one hand | 99% one hand | 92% one hand |
| Pointed with index finger (%) | 0 | 0.3 | 99 | 100 | 0 | 84 | 69 | 99.3 | 97 | 95 | 15 | 1 | 69 | 3 | 81 |
| Pointed with whole hand (%) | 100 | 99.7 | 1 | 0 | 100 | 16 | 21 | 0.7 | 3 | 0.3 | 85 | 99 | 31 | 97 | 17 |
| Pointed with other finger (%) | 0 | 0 | 0 | 0 | 0 | 0 | 8 | 0 | 0 | 4.7 | 0 | 0 | 0 | 0 | 0 |
| Pointed with head (%) | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 |
| Pointed with gaze (%) | 100 | 99.7 | 99.3 | 100 | 100 | 96.5 | 99.3 | 100 | 100 | 100 | 97.5 | 100 | 100 | 100 | 81.4 |
| Pausing gesture (count) | 0 | 78 | 29 | 20 | 20 | 6 | 25 | 6 | 68 | 20 | 12 | 17 | 4 | 58 | 6 |
| Repeating gesture (count) | 1 | 4 | 11 | 43 | 7 | 4 | 20 | 30 | 10 | 65 | 1 | 35 | 6 | 5 | 36 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kukier, T.; Wróbel, A.; Sienkiewicz, B.; Klimecka, J.; Gonzalez, A.G.C.; Gajewski, P.; Indurkhya, B. An Empirical Study on Pointing Gestures Used in Communication in Household Settings. Electronics 2025, 14, 2346. https://doi.org/10.3390/electronics14122346
