ESCI: An End-to-End Spatiotemporal Correlation Integration Framework for Low-Observable Extended UAV Tracking with Cascade MIMO Radar Subject to Mixed Interferences

Abstract

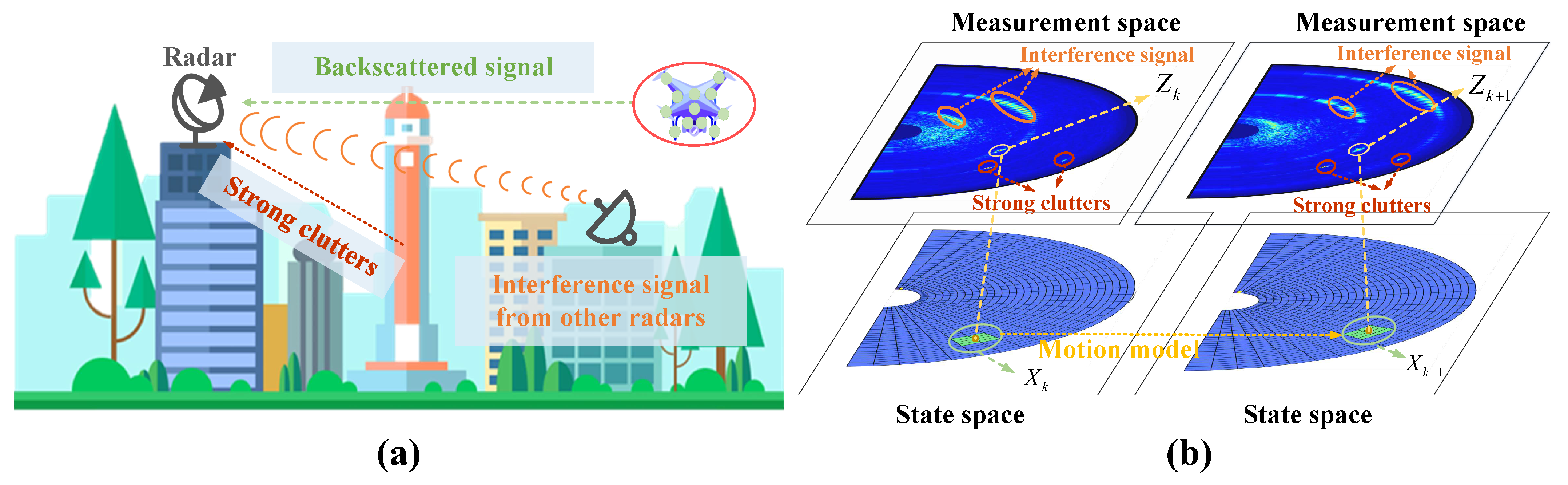

1. Introduction

2. System Design

2.1. Cascade FMCW-MIMO Radar Signal Processing for Intra-Channel and Inter-Channel Coherent Integration

2.1.1. Signal Model of Cascade FMCW-MIMO Radar for Extended UAV

2.1.2. Intra-Channel and Inter-Channel Coherent Integration in the Presence of Interferences

2.1.3. Simulation Results

2.2. End-to-End Spatiotemporal Correlation Integration-Based Tracking Framework with Recursive Bayesian Estimation

2.2.1. State and Existence Indicator Transition Models

2.2.2. Measurement Model for Cascade MIMO Radar

2.2.3. End-to-End Spatiotemporal Correlation Integration-Based State Estimation with Recursive Bayesian Estimation

2.2.4. Simulation Results

3. Evaluation Metrics and Experimental Results

3.1. Evaluation Metrics

3.2. Comparisons

3.2.1. Error CDF of Tracked Trajectory

3.2.2. Intersection over Union

3.3. Experimental Results

3.3.1. Mutual Interference Scenario

3.3.2. Strong Clutter Scenario

3.3.3. Mixed Interference Scenario

3.3.4. Rainy Scenario

4. Performance Analysis and Discussion

4.1. Impact of Occlusion

4.2. Impact of SINR

4.3. Computational Complexity Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameters | Victim Radar | Interfering Radar |
|---|---|---|
| Carrier frequency | 77 GHz | 77 GHz |
| Bandwidth | 2.4 GHz | 0.87 GHz |
| Pulse duration | 50 µs | 18.98 µs |
| No. of transmitters | 12 | 2 |
| No. of receivers | 16 | 4 |
| Sweep slope | 85.021 MHz/µs | 85.021 MHz/µs |
| Sample rate | 9000 kHz | 6250 kHz |
| Range resolution | 0.062 m | 0.17 m |
| Azimuth resolution | 1.4062° | 14.3° |
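Several entries in the parameter table are linked by standard FMCW relations. The range resolution is ΔR = c/(2B), and the time needed to sweep the bandwidth is T = B/S for slope S. The sketch below checks those relations against the listed values.

It rests on assumptions that the table does not state:

- "Bandwidth" is taken to be the swept bandwidth B.
- The slope is taken to be in MHz/µs.
- For the interferer only, its 2 TX × 4 RX channels are assumed to form an 8-element, half-wavelength-spaced uniform virtual array, which gives a broadside resolution of roughly 2/N rad.

The victim's cascade virtual-array geometry is not given here, so its azimuth figure is not checked.

```python
"""Back-of-the-envelope checks on the FMCW parameters in the radar table.

Assumptions (not stated in the table): 'Bandwidth' is the swept bandwidth B,
the sweep slope is in MHz/us, and the interferer's 2 TX x 4 RX channels form an
8-element uniform virtual array with half-wavelength spacing.
"""
import math

C = 299_792_458.0  # speed of light, m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Classic FMCW range resolution: delta_R = c / (2 B)."""
    return C / (2.0 * bandwidth_hz)


def ramp_time_us(bandwidth_hz: float, slope_hz_per_s: float) -> float:
    """Time needed to sweep B at slope S: T = B / S, returned in microseconds."""
    return bandwidth_hz / slope_hz_per_s * 1e6


def ula_azimuth_resolution_deg(n_virtual: int) -> float:
    """Approximate broadside resolution 2 / N rad for an N-element lambda/2 ULA."""
    return math.degrees(2.0 / n_virtual)


SLOPE = 85.021e6 / 1e-6  # 85.021 MHz/us expressed in Hz/s (shared by both radars)

radars = {
    # name: (swept bandwidth in Hz, listed pulse duration in us)
    "victim": (2.4e9, 50.0),
    "interferer": (0.87e9, 18.98),
}

for name, (bw, pulse_us) in radars.items():
    dr = range_resolution_m(bw)
    t_ramp = ramp_time_us(bw, SLOPE)
    print(f"{name}: dR = {dr:.3f} m, ramp = {t_ramp:.2f} us (pulse {pulse_us} us)")

# Interferer only: hypothetical 8-element virtual ULA (2 TX x 4 RX).
print(f"interferer azimuth ~ {ula_azimuth_resolution_deg(2 * 4):.1f} deg")
```

Under these assumptions, the computed values agree with the table:

- Range resolution is about 0.062 m for the victim radar and about 0.17 m for the interferer.
- The sweep times (about 28.2 µs and 10.2 µs) fit within the listed pulse durations.
- The interferer's approximate azimuth resolution is about 14.3°.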

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, G.; Fang, X.; Huang, D.; Zhang, Z. ESCI: An End-to-End Spatiotemporal Correlation Integration Framework for Low-Observable Extended UAV Tracking with Cascade MIMO Radar Subject to Mixed Interferences. Electronics 2025, 14, 2181. https://doi.org/10.3390/electronics14112181
