Sequential Recommendation System Based on Deep Learning: A Survey

Abstract

1. Introduction

2. SRSs Based on DL

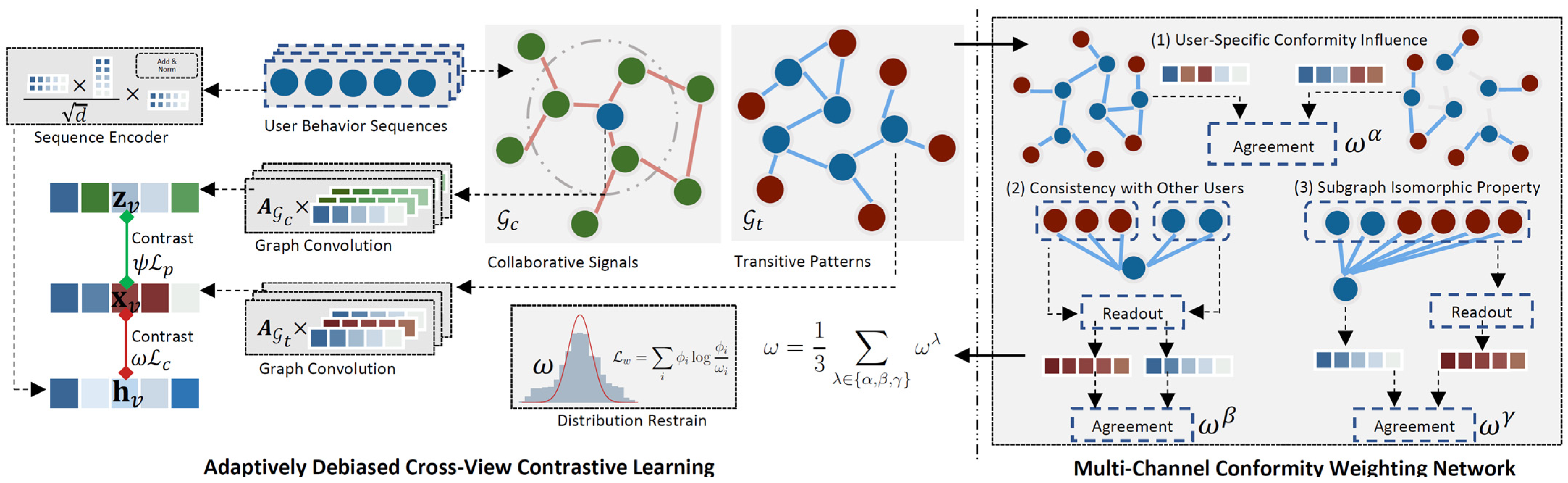

2.1. Sequence Recommendation Based on CL

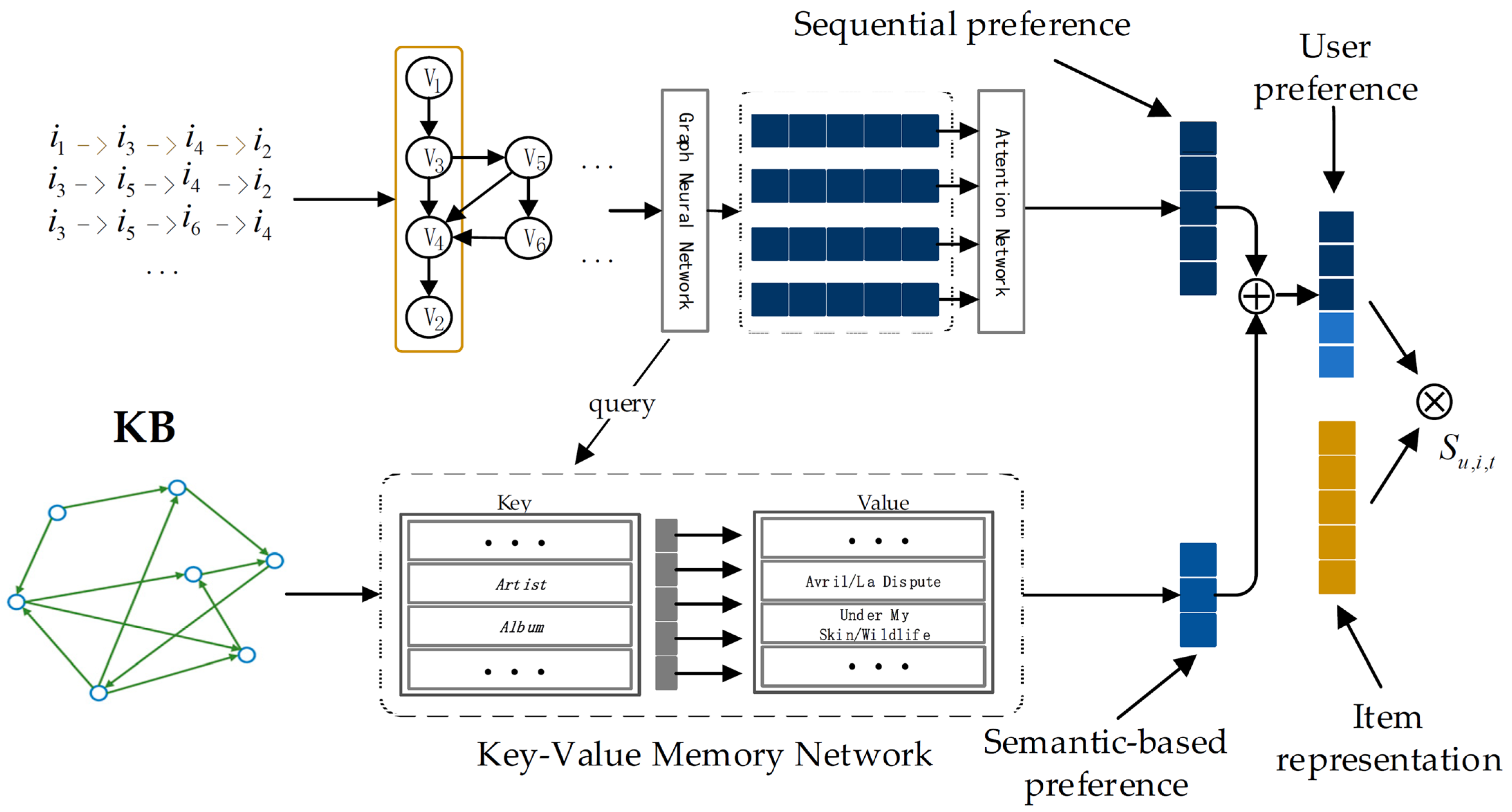

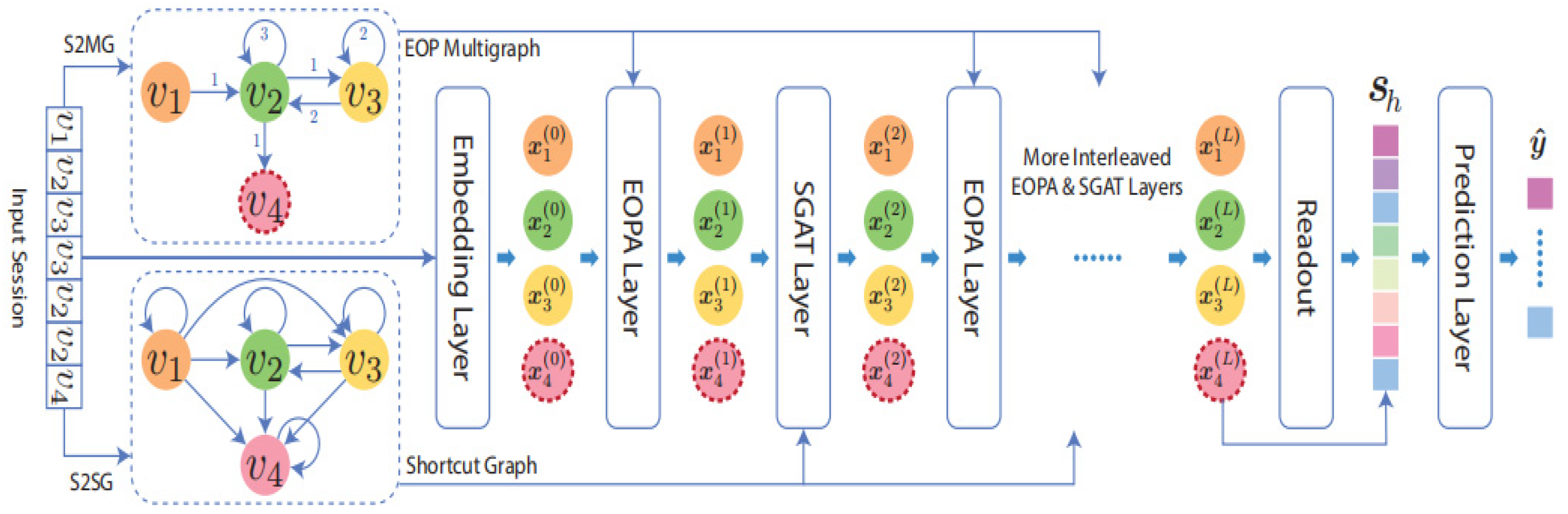

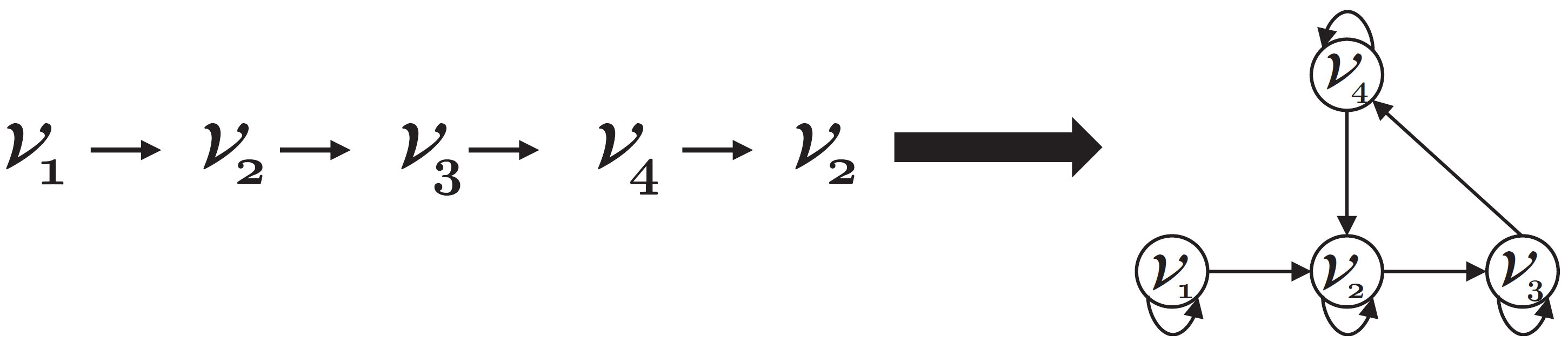

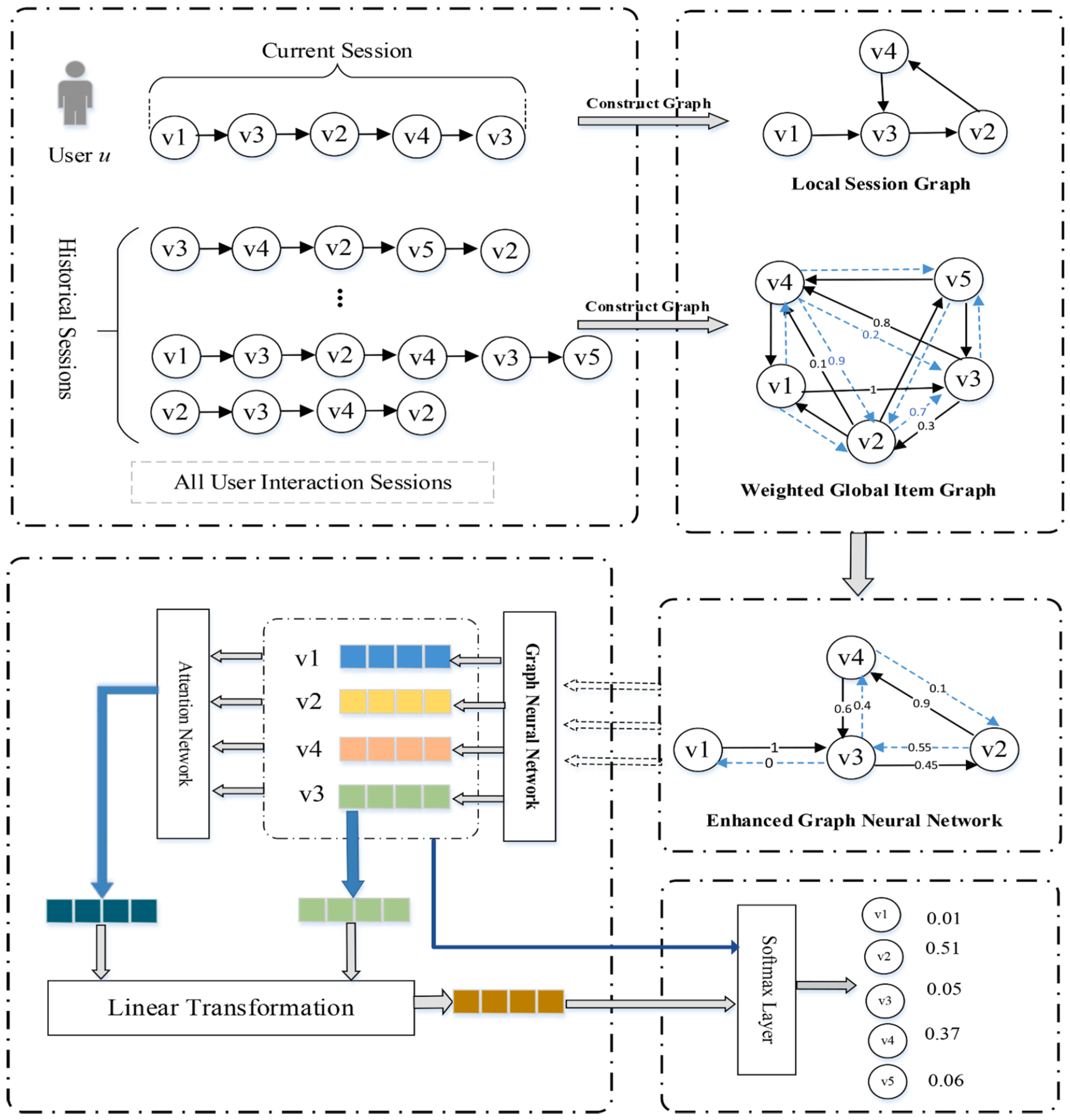

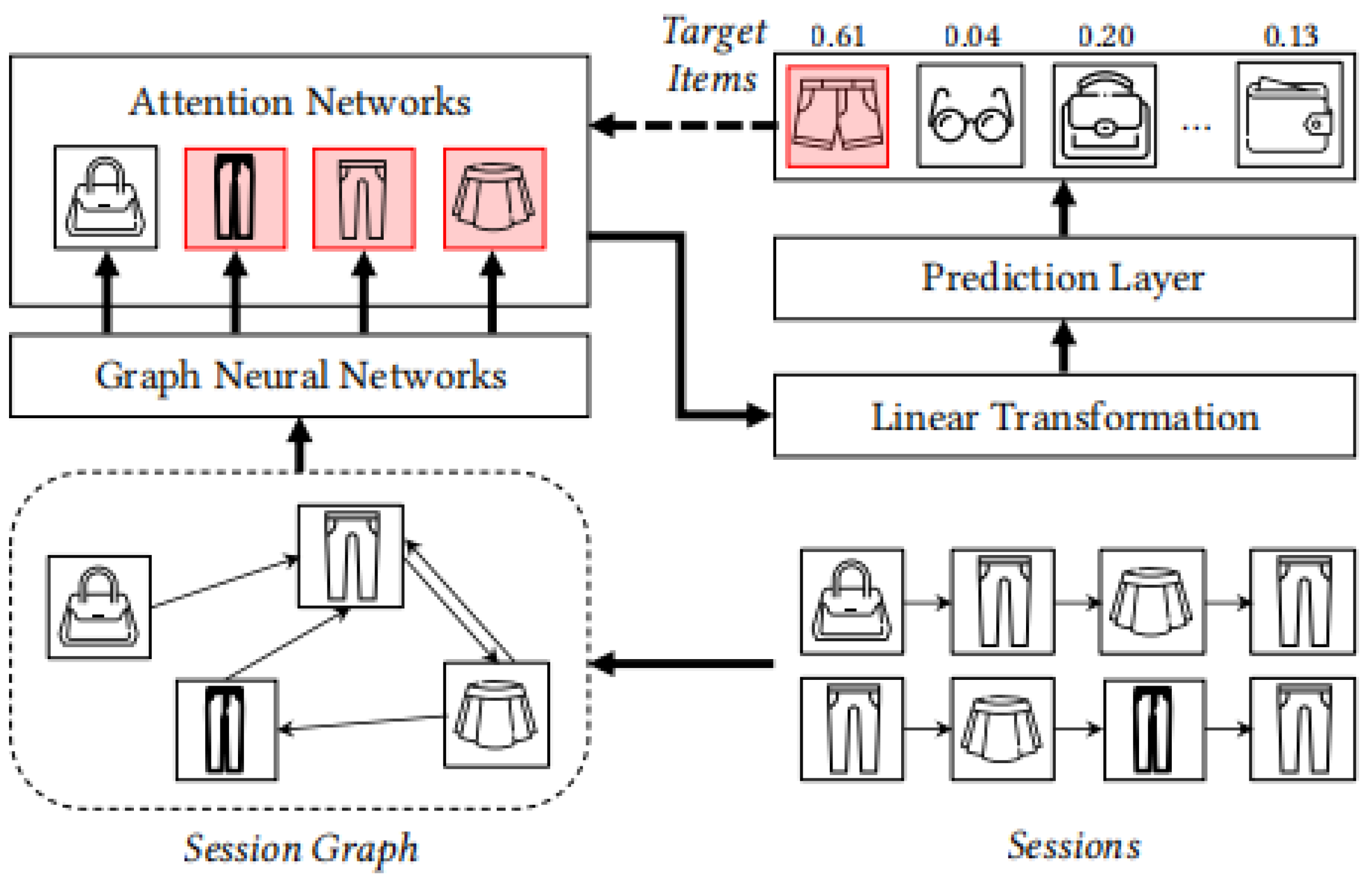

2.2. Sequence Recommendation Based on GNNs

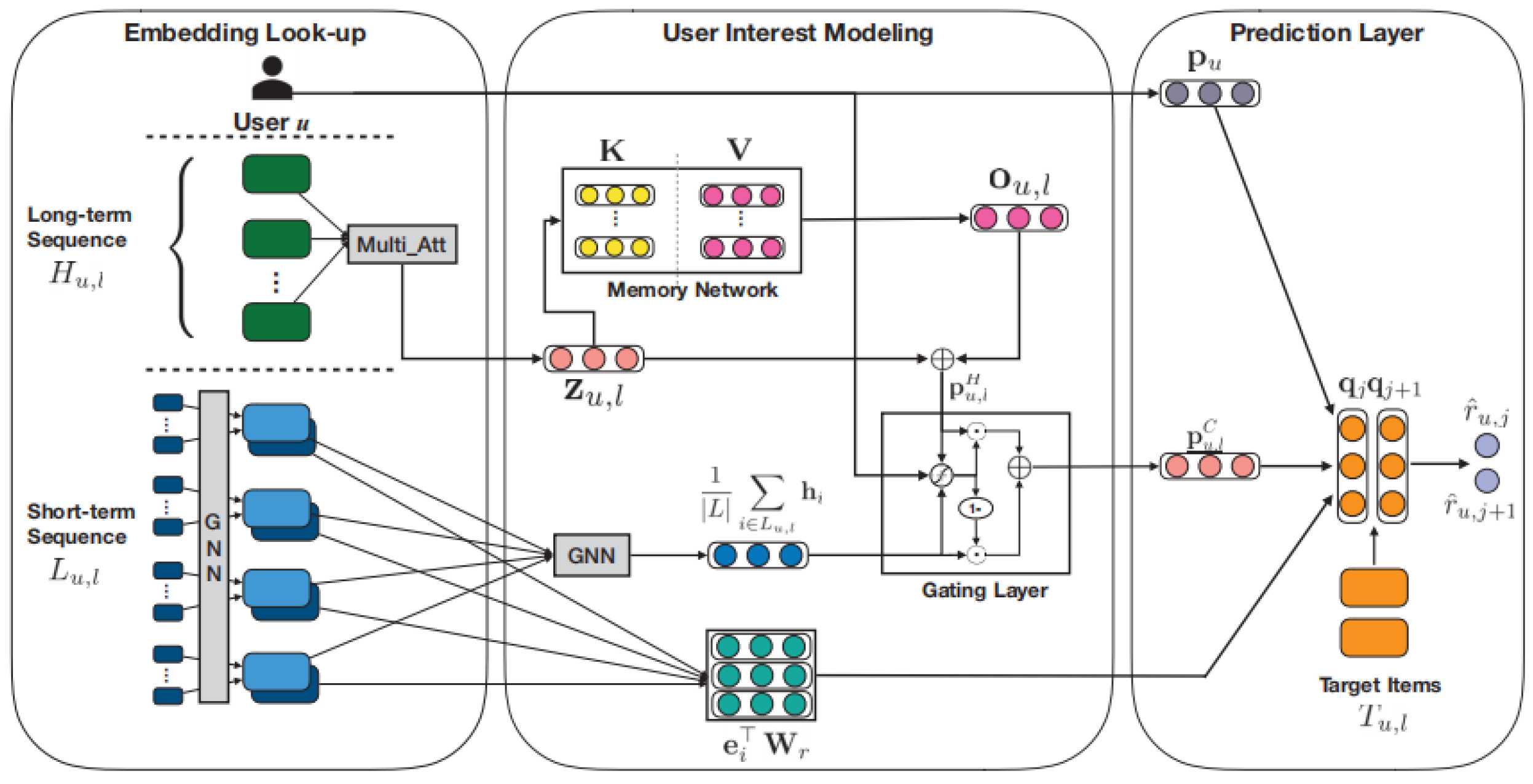

2.3. Application of Attention Mechanisms in SRSs

3. Metrics, Datasets, and Application Scenarios

3.1. Metrics

3.2. Datasets

3.3. The Latest Application Scenarios of SRSs

4. Future Development Trends

4.1. Explainability

4.2. Fairness

4.3. Diversity

4.4. Cross-Domain SR

4.5. The Dynamics in SRS

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| RS | Recommendation Systems |
| CF | Collaborative Filtering |
| CBF | Content-Based Filtering |
| KBF | Knowledge-Based Filtering |
| SRS | Sequential Recommendation System |
| ARIMA | Auto-Regressive Integrated Moving Average |
| SVM | Support Vector Machine |
| GBRT | Gradient Boosting Regression Tree |
| HMM | Hidden Markov Model |
| DL | Deep Learning |
| CL | Contrastive Learning |
| GNN | Graph Neural Network |
| DCRec | De-biased Contrastive learning paradigm for Recommendation system |
| MCLSR | Multi-level CL framework for Sequence Recommendation |
| KV-MN | Key-Value Memory Network |
| KB | Knowledge Base |
| GGNN | Gated Graph Neural Network |
| HG-GNN | Heterogeneous Global Graph Neural Network |
| GCE-GNN | Global Context-Enhanced Graph Neural Network |
| SGNN-HN | Star GNN with Highway Networks |
| TE-GNN | Time-Enhanced Graph Neural Network |
| RN-GNN | Recurrent Neural Graph Neural Network |
| LESSR | Lossless Edge-order preserving aggregation and Shortcut graph attention for Session-based Recommendation |
| EOPA | Edge-Order Preserving Aggregation |
| SGAT | Shortcut Graph Attention |
| FGNN | Fully connected Graph Neural Network |
| WGAT | Weighted Graph Attention |
| HGNN | Hybrid sequential gated GNN |
| SR-GNN | Session-based Recommendation with Graph Neural Networks |
| DGS-MGNN | Dynamic Global Structure-enhanced Multi-channel Graph Neural Network |
| DAT-MDI | Dual Attention Transfer based on Multi-Dimensional Integration |
| GCN | Graph Convolutional Networks |
| GRU | Gated Recurrent Units |
| ReGNN | GNNs with a Repetition exploration mechanism |
| ZSL | Zero-Shot Learning |
| MA-GNN | Memory Graph Neural Network |
| TASRec | Time-Augmented GNN for Session-based Recommendations |
| TSG | Time span-aware Sequential Graph |
| DGSR | Dynamic Graph neural network for SR |
| E-GNN | Enhanced Graph Neural Network |
| WGIG | Weighted Global Item Graph |
| LSG | Local Session Graph |
| Int-GNN | Intention-aware graph neural network |
| ID-GNN | Intention-aware Denoising Graph Neural Network |
| TAGNN | Target-Attention Graph Neural Network |
| CDR | Cross-Domain Recommendation |
| CD-ASR | Cross-Domain Attentive SR |
| AHRNN | Attentive Hybrid Recurrent Neural Network |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| Disen-GNN | Disentangled Graph Neural Network |
| TIAN | Temporal Interest Attention Network |
| HA-GNN | Higher-order Attention Graph Neural Network |

Appendix A. The Descriptions of Metrics and Datasets

| Reference | Baseline | Metric | Dataset |
| --- | --- | --- | --- |
| [15] | Pop, BPR-MF, NCF, GRU4Rec+, SASRec, GC-SAN, S3-RecMIP | HR, NDCG | Beauty, Sports, Yelp, ML-1M |
| [52] | BPR-MF, GRU4Rec, Caser, SASRec, FDSA, S3Rec, CL4SRec, ICLRec | HR, NDCG | Beauty, Sports, ML-1M |
| [53] | BPR, FPMC, GRU4Rec, Time-LSTM, Caser, TiSASRec, CL4SRec, FMLPRec, DuoRec | HR, NDCG | MovieLens, Beauty, Video Games, CDs&Vinyl, Movies&TV |
| [65] | BPR, NCF, GC-MC, LightGCN, SGL, CKE, RippleNet, KGCN, KGAT, KGIN, CKAN, MVIN | Recall, NDCG | Yelp2018, Amazon-book, MIND |
| [8] | BPR-MF, Caser, GRU4Rec, SASRec, BERT4Rec | HR, NDCG | Beauty, Sports, Toys, Yelp |
| [9] | PopRec, GRU4Rec, Caser, BERT4Rec, SASRec, DSSRec, S3-RecMIP, SP, CL4SRec, CoSeRec | HR, NDCG | Sports, Beauty, Yelp, Toys |
| [12] | PopRec, BPR-MF, GRU4Rec, SASRec, BERT4Rec, S3-Rec, CL4SRec, CoSeRec | HR, NDCG | Beauty, Sports, Yelp, Toys, VideoGames, Health, Apps, Tmall |
| [78] | PopRec, FPMC, GRU4Rec, Caser, SR-GNN, SASRec, BERT4Rec, SSE-PT, DGCF, PTGCN | Recall, NDCG | MovieLens, CDs, Beauty |
| [116] | NCF, DIN, LightGCN, Caser, GRU4Rec, DIEN, CLSR | AUC, GAUC, MRR, NDCG | Taobao, Amazon, Yelp |
| [10] | DIN, Caser, GRU4REC, DIEN, SASRec, SLi-Rec | AUC, MRR, NDCG, WAUC | Taobao, Amazon Toys |
| [13] | GRU4Rec, GC-SAN, SASRec, S3Rec(MLP), CL4Rec, DuoRec, GEC4SRec | HR, NDCG | Beauty, Sports, ML-1M |
| [83] | BPR-MF, GRU4Rec, Caser, SASRec, BERT4Rec, S3Rec(MLP), CL4SRec, DuoRec | HR, NDCG | Beauty, Clothing, Sports, ML-1M |
| [14] | Caser, GRU4Rec, SASRec, BERT4Rec, SR-GNN, GCSAN, SURGE, S3-Rec, CL4SRec, DuoRec, ICLRec | HR, NDCG | Reddit, Beauty, Sports, MovieLens-20M |
| [84] | Mult-VAE, DNN+SSL, BUIR, MixGCL | Recall, NDCG | Douban-Book, Yelp2018, Amazon-Book |
| [37] | POP, GRU4REC, NARM, RNN-KNN, STAN, CSRM, SR-GNN, NISER+, GCE-GNN | Recall, MRR | Diginetica, Nowplaying, Yoochoose |
| [26] | POPRec, GRU4Rec, SASRec, ComiRec-SA, GCSAN, S3-RecMIP, CL4SRec, DuoRec, MCLSR | Recall, NDCG, Hit | Amazon, Gowalla |
| [85] | FPMC, GRU4REC, NARM, STAMP, SASRec, BERT4Rec, SR-GNN, CSRM, FGNN, GC-SAN, GCE-GNN, TASRec, S2-DHCN | HR, MRR | Tmall, Diginetica, Gowalla, RetailRocket, Nowplaying, LastFM |
| [86] | GRU4Rec, Caser, SASRec, S3RecMIP, CL4SRec, DuoRec, CFIT4SRec | HR, NDCG | Beauty, Clothing, Sports, ML-1M |
| [87] | GRU4Rec, Caser, NItNet, SASRec, GRU-SQN, Caser-SQN, NItNet-SQN, SASRec-SQN, CP4Rec, CP4Rec-SQN, ICM, GIRIL, EMI, DAM | HR, NDCG | RC15, RetailRocket |
| [11] | NCF, DIN, LightGCN, Caser, GRU4REC, DIEN, SASRec, SURGE, SLi-Rec | AUC, GAUC, MRR, NDCG | Taobao, Kuaishou |
| [29] | BPR-MF, FPMC, GRU4REC, GRU4REC+, NARM, STAMP, SR-GNN, KSR | Recall, NDCG | ML-20M, ML-1M, Book |
| [27] | POP, S-POP, Item-KNN, BPR-MF, FPMC, GRU4REC, NARM, STAMP, SR-GNN | Precision, MRR | Diginetica, Yoochoose |
| [88] | FPMC, GRU4REC+BPR, GRU4REC+CE, NARM, STAMP, SR-GNN, RIB, KM-SR, M(GRU)-SR, M(GGNN)-SR, M(GGNNx2)-SR, M-SR | Hit, MRR | KKBOX, JDATA |
| [34] | ItemKNN, GRU4Rec, NARM, SR-GNN, LESSR, GCE-GNN, H-RNN, A-PGNN | HR, MRR | LastFM, Xing, Reddit |
| [33] | S-POP, FPMC, GRU4REC, NARM, CSRM, STAMP, SR-IEM, SR-GNN, NISER+ | Precision, MRR | Yoochoose, Diginetica |
| [35] | POP, ItemKNN, FPMC, GRU4REC, NARM, STAMP, SR-GNN, DGTN, LESSR, TAGNN | MRR, Precision | Diginetica, Yoochoose |
| [89] | POP, Item-KNN, FPMC, GRU4REC, NARM, STAMP, CSRM, SR-IEM, SR-GNN, TAGNN, GCE-GNN | Precision, MRR | Diginetica, Tmall, Nowplaying, Retailrocket |
| [37] | POP, Item-KNN, GRU4REC, NARM, RNN-KNN, STAN, SR-GNN, NISER+, GCE-GNN | Recall, MRR | Diginetica, Nowplaying, Yoochoose |
| [40] | Item-KNN, FPMC, NextItNet, NARM, FGNN, SR-GNN, GC-SAN, LESSR | HR, MRR | Diginetica, Gowalla, LastFM |
| [91] | POP, S-POP, Item-KNN, BPR-MF, FPMC, GRU4REC, NARM, STAMP, SR-GNN, FGNN-SG-Gated, FGNN-SG-ATT, FGNN-SG, FGNN-BCS-0, FGNN-BCS-1, FGNN-BCS-2, FGNN-BCS-3 | Recall, MRR | Yoochoose, Diginetica |
| [92] | ItemKNN, ItemKNN(geo), FPMC, NextItNet, NARM, STAMP, SR-GNN, SSRM, SNextItNet, SNARM, SSTAMP, SSR-GNN, SSSRM | HR, MRR | Gowalla, Delicious, Foursquare |
| [93] | Item-KNN, FPMC, PRME, GRU4REC, NextItNet, NARM, STAMP, SR-GNN, GC-SAN, FGNN, SR-HGNN | Precision, MRR | Yoochoose, Diginetica |
| [42] | POP, S-POP, Item-KNN, BPR-MF, FPMC, GRU4REC, NARM, STAMP, SR-GNN | Precision, MRR | Yoochoose, Diginetica |
| [94] | POP, S-POP, Item-KNN, FPMC, GRU4Rec, SKNN, NARM, STAMP, SRGNN, TAGNN | Recall, MRR | Retailrocket, Yoochoose, Diginetica, Xing, Reddit |
| [95] | POP, Item-KNN, FPMC, GRU4REC, NARM, STAMP, CSRM, DSAN, SR-GNN, TAGNN, COTREC, GCE-GNN | Precision, MRR | Diginetica, Yoochoose, Retailrocket |
| [36] | POP, BPRMF, FPMC, GRU4REC, SASRec, TiSASRec, SR-GNN, CatGCN, CTGNN | NDCG, Recall | Taobao, Diginetica, Amazon |
| [96] | POP, Item-KNN, FPMC, GRU4Rec, NARM, STAMP, SR-GNN, FGNN, GC-SAN, GCE-GNN | Precision, MRR | Diginetica, Yoochoose, Gowalla, LastFM |
| [98] | MP, BPR, Mult-DAE, LightGCN, FPMC, TransRec, GRU4Rec, NARM, Caser, SASRec, MCF, CKE, LightGCN+, MoHR | HR, NDCG, MRR | Amazon, Books, Yelp, Google Local |
| [99] | BPR-MF, FPMC, GRU4Rec, AttRec, Caser, HGN | Recall, NDCG | ML100k, Luxury, Digital, Software |
| [100] | POP, FPMC, Item-KNN, GRU4REC, NARM, STAMP, SR-GNN, DHCN, GCE-GNN | Precision, MRR | Diginetica, Tmall, Yoochoose |
| [94] | POP, S-POP, ItemKNN, FPMC, GRU4Rec, SKNN, NARM, STAMP, SRGNN, TAGNN | Recall, MRR | Retailrocket, Yoochoose, Diginetica, Xing, Reddit |
| [101] | POP, S-POP, Item-KNN, BPR-MF, FPMC, GRU4REC, NARM, STAMP, RepeatNet, SR-GNN | Precision, MRR | Yoochoose, Diginetica |
| [102] | SR-GNN, SR-GNN-ATT, GC-SAN, GCE-GNN, COTREC | Precision, MRR | AmazonG&GF, Yelpsmall |
| [106] | BPRMF, GRU4Rec, GRU4Rec+, GC-SAN, Caser, SASRec, MARank | Recall, NDCG | CDs, Books, Children, Comics, ML20M |
| [107] | POP, S-POP, BPR-MF, GRU4REC, NARM, FGNN, SSRM | Recall, MRR | LastFM, Gowalla |
| [108] | GRU4REC, SR-GNN, CSRM, LESSR, TASRec | Recall, NDCG | Aotm, Diginetica, Retailrocket |
| [109] | FPMC, GRU4REC+, SASRec, SR-GNN, GC-SAN, FGNN, RetaGNN, HGNN-GAT1, HGNN-GAT2, HGNN-T, HGNN-En | Hit, RR | Steam, MovieLens |
| [110] | BPR-MF, FPMC, GRU4Rec+, Caser, SASRec, SR-GNN, HGN, TiSASRec, GCE-GNN, SERec, HyperRec | NDCG, Hit | Ablation, Beauty, Games, CDs, ML-1M |
| [111] | Item-POP, S-POP, Item-KNN, BPR-MF, FPMC, GRU4REC, NARM, STAMP, SR-GNN | Recall, MRR | Yoochoose, Diginetica |
| [112] | POP, GRU4Rec, NARM, STAMP, SR-GNN, NISER, LESSR, GCE-GNN, DSAN, DHCN, COTREC | Precision, MRR | Diginetica, Tmall, RetailRocket |
| [113] | FPMC, STAMP, GC-SAN, GCE-GNN, DHCN, ID-GNN | HR, MRR, NDCG | Tmall, Yoochoose |
| [27] | POP, S-POP, Item-KNN, BPR-MF, FPMC, GRU4REC, NARM, STAMP, SR-GNN | Precision, MRR | Diginetica, Yoochoose |
| [114] | HGN, SASRec, HAM, MA-GNN, HGAN, GCMC, IGMC | NDCG, Recall, Precision | Instagram, MovieLens, Book-Crossing |
| [100] | POP, FPMC, Item-KNN, GRU4REC, NARM, STAMP, SR-GNN, DHCN, GCE-GNN | Precision, MRR | Diginetica, Tmall, Yoochoose |
| [11] | NCF, DIN, LightGCN, Caser, GRU4REC, DIEN, SASRec, SURGE | AUC, GAUC, MRR, NDCG | Taobao, Kuaishou |
| [116] | NCF, DIN, LightGCN, Caser, GRU4REC, DIEN, CLSR | AUC, GAUC, MRR, NDCG | Taobao, Amazon-Movie and TV, Yelp |
| [117] | Pop, BPR-MF, FPMC, SASRec, FISSA-lg, CoNet, CD-SASRec, CD-ASR | HR, NDCG | Books, Movies, Music |
| [147] | Item-KNN, BPR-MF, NCF, LightGCN, VUI-KNN, NCF-MLP++, CoNet, GRU4REC, HGRU4REC, NAIS, Time-LSTM, TGSRec, π-Net, PSJNet, DA-GCN | MRR, Recall | HAMAZON, HVIDEO |
| [36] | POP, BPRMF, FPMC, GRU4REC, SASRec, TiSASRec, SR-GNN, CatGCN, CTGNN | NDCG, Recall | Taobao, Diginetica, Amazon |
| [120] | POP, ItemKNN, BPRMF, GRU4Rec, AFM, RBM, Caser, TransRec, SASRec | Recall | MovieLens1M, Tmall |
| [121] | POP, Item-KNN, FPMC, GRU4REC, NARM, STAMP, SR-GNN, TAGNN | Precision, MRR | Diginetica, Yoochoose 1, Nowplaying |
| [102] | SR-GNN, SR-GNN-ATT, GC-SAN, GCE-GNN, COTREC | Precision, MRR | AmazonG&GF, Yelpsmall |
| [95] | POP, Item-KNN, FPMC, GRU4REC, NARM, STAMP, CSRM, DSAN, SR-GNN, TAGNN, COTREC, GCE-GNN | Precision, MRR | Diginetica, Yoochoose, Retailrocket |
| [89] | POP, Item-KNN, FPMC, GRU4REC, NARM, STAMP, CSRM, SR-IEM, SR-GNN, TAGNN, GCE-GNN | Precision, MRR | Diginetica, Tmall, Nowplaying, Retailrocket |
| [38] | POP, Item-KNN, FPMC, GRU4Rec, NARM, STAMP, CSRM, SR-GNN, FGNN, FGNN | Precision, MRR | Diginetica, Tmall, Nowplaying |
| [101] | POP, S-POP, Item-KNN, BPR-MF, FPMC, GRU4REC, NARM, STAMP, RepeatNet, SR-GNN | Precision, MRR | Yoochoose, Diginetica |
| [122] | BPR, FPMC, GRU4Rec+, Caser, SASRec, BERT4Rec, DHCN, TiSASRec, DGCF | Recall, NDCG | MovieLens, Amazon CDs_and_Vinyl, Amazon Movies_and_TV |
| [96] | POP, Item-KNN, FPMC, GRU4Rec, NARM, STAMP, SR-GNN, FGNN, GC-SAN, GCE-GNN | Precision, MRR | Diginetica, Yoochoose, Gowalla, LastFM |
| [52] | BPR-MF, GRU4Rec, Caser, SASRec, FDSA, S3Rec, CL4SRec, ICLRec | HR, NDCG | Beauty, Sports, ML-1M |
| [93] | Item-KNN, FPMC, PRME, GRU4REC, NextItNet, NARM, STAMP, SR-GNN, GC-SAN, FGNN, SR-HGNN | Precision, MRR | Yoochoose, Diginetica |
| [123] | FPMC, FOSSIL, GRU4Rec, NARM, HGN, SASRec, LightSANs, HME, SRGNN, GCSAN, LESSR | HR, NDCG, MAP | Beauty, Pet, TH, MYbank |
| [124] | POP, FPMC, Item-KNN, GRU4Rec, NARM, STAMP, SR-GNN, TAGNN, ICM-SR | MRR, Precision | Yoochoose, Diginetica |
| [125] | POP, BPRFMC, FPMC, Fossil, GRU4Rec, Caser | Prec, Recall, MAP | MovieLens, Gowalla |

References

- Sarwar, B.; Karypis, G.; Konstan, J.; Riedl, J. Item-based collaborative filtering recommendation algorithms. In Proceedings of the 10th International Conference on World Wide Web, Hong Kong, China, 1–5 May 2001; pp. 285–295.

- Pazzani, M.J.; Billsus, D. Content-based recommendation systems. In The Adaptive Web: Methods and Strategies of Web Personalization; Springer: Berlin/Heidelberg, Germany, 2007; pp. 325–341.

- Wang, S.; Hu, L.; Wang, Y.; Cao, L.; Sheng, Q.; Orgun, M. Sequential recommender systems: Challenges, progress and prospects. arXiv 2019, arXiv:2001.04830.

- Wang, S.; Cao, L.; Wang, Y.; Sheng, Q.; Orgun, M.; Lian, D. A survey on session-based recommender systems. ACM Comput. Surv. 2021, 54, 1–38.

- Burke, R. Hybrid recommender systems: Survey and experiments. User Model. User-Adapt. Interact. 2002, 12, 331–370.

- Adomavicius, G.; Tuzhilin, A. Toward the next generation of recommender systems: A survey of the state-of-the-art and possible extensions. IEEE Trans. Knowl. Data Eng. 2005, 17, 734–749.

- Bachman, P.; Hjelm, R.D.; Buchwalter, W. Learning representations by maximizing mutual information across views. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; p. 32.

- Chen, Y.; Liu, Z.; Li, J.; McAuley, J.; Xiong, C. Intent contrastive learning for sequential recommendation. In Proceedings of the ACM Web Conference, Lyon, France, 25–29 April 2022; pp. 2172–2182.

- Li, X.; Sun, A.; Zhao, M.; Yu, J.; Zhu, K.; Jin, D.; Yu, M.; Yu, R. Multi-intention oriented contrastive learning for sequential recommendation. In Proceedings of the 16th ACM International Conference on Web Search and Data Mining, Singapore, 27 February–3 March 2023; pp. 411–419.

- Lin, G.; Gao, C.; Li, Y.; Zheng, Y.; Li, Z.; Jin, D.; Li, Y. Dual contrastive network for sequential recommendation. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 2686–2691.

- Zheng, Y.; Gao, C.; Chang, J.; Niu, Y.; Song, Y.; Jin, D.; Li, Y. Disentangling long and short-term interests for recommendation. In Proceedings of the ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 2256–2267.

- Wei, Z.; Wu, N.; Li, F.; Wang, K.; Zhang, W. MoCo4SRec: A momentum contrastive learning framework for sequential recommendation. Expert Syst. Appl. 2023, 223, 119911.

- Yang, X.Y.; Xu, F.; Yu, J.; Li, Z.; Wang, D. Graph neural network-guided contrastive learning for sequential recommendation. Sensors 2023, 23, 5572.

- Yang, Y.; Huang, C.; Xia, L.; Huang, C.; Luo, D.; Lin, K. Debiased contrastive learning for sequential recommendation. In Proceedings of the ACM Web Conference 2023, Austin, TX, USA, 30 April–4 May 2023; pp. 1063–1073.

- Xie, X.; Sun, F.; Liu, Z.; Wu, S.; Gao, J.; Ding, B.; Cui, B. Contrastive learning for sequential recommendation. In Proceedings of the 2022 IEEE 38th International Conference on Data Engineering (ICDE), Kuala Lumpur, Malaysia, 9–12 May 2022; pp. 1259–1273.

- Zuva, T.; Ojo, S.O.; Ngwira, S.; Zuva, K. A survey of recommender systems techniques, challenges and evaluation metrics. Int. J. Emerg. Technol. Adv. Eng. 2012, 2, 382–386.

- Hu, Y.; Koren, Y.; Volinsky, C. Collaborative filtering for implicit feedback datasets. In Proceedings of the 2008 8th IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 263–272.

- Koren, Y.; Rendle, S.; Bell, R. Advances in collaborative filtering. In Recommender Systems Handbook; Springer: Berlin/Heidelberg, Germany, 2021; pp. 91–142.

- Salakhutdinov, R.; Mnih, A. Bayesian probabilistic matrix factorization using Markov chain Monte Carlo. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 880–887.

- Ling, G.; Lyu, M.R.; King, I. Ratings meet reviews, a combined approach to recommend. In Proceedings of the 8th ACM Conference on Recommender Systems, Silicon Valley, CA, USA, 6–10 October 2014; pp. 105–112.

- McAuley, J.; Leskovec, J. Hidden factors and hidden topics: Understanding rating dimensions with review text. In Proceedings of the 7th ACM Conference on Recommender Systems, Hong Kong, China, 12–16 October 2013; pp. 165–172.

- Ricci, F. Mobile recommender systems. Inf. Technol. Tour. 2010, 12, 205–231.

- Gemmis, M.D.; Iaquinta, L.; Lops, P.; Musto, C.; Narducci, F.; Semeraro, G. Preference learning in recommender systems. Prefer. Learn. 2009, 41, 41–55.

- Ghazanfar, M.; Prugel-Bennett, A. An improved switching hybrid recommender system using naive Bayes classifier and collaborative filtering. In Proceedings of the 2010 IAENG International Conference on Data Mining and Applications, Hong Kong, China, 17–19 March 2010.

- Melville, P.; Sindhwani, V. Recommender systems. Encycl. Mach. Learn. 2010, 1, 829–838.

- Wang, Z.; Liu, H.; Wei, W.; Hu, Y.; Mao, X.; He, S.; Fang, R.; Chen, D. Multi-level contrastive learning framework for sequential recommendation. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 2098–2107.

- Yu, F.; Zhu, Y.; Liu, Q.; Wu, S.; Wang, L.; Tan, T. TAGNN: Target attentive graph neural networks for session-based recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 25–30 July 2020; pp. 1921–1924.

- Li, Y.; Tarlow, D.; Brockschmidt, M.; Zemel, R. Gated graph sequence neural networks. arXiv 2015, arXiv:1511.05493.

- Wang, B.; Cai, W. Knowledge-enhanced graph neural networks for sequential recommendation. Information 2020, 11, 388.

- Ying, H.; Zhuang, F.; Zhang, F.; Liu, Y.; Xu, G.; Xie, X.; Xiong, H.; Wu, J. Sequential recommender system based on hierarchical attention network. In Proceedings of the IJCAI International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 3926–3932.

- Hidasi, B.; Karatzoglou, A.; Baltrunas, L.; Tikk, D. Session-based recommendations with recurrent neural networks. arXiv 2015, arXiv:1511.06939.

- Sun, F.; Liu, J.; Wu, J.; Pei, C.; Lin, X.; Ou, W.; Jiang, P. BERT4Rec: Sequential recommendation with bidirectional encoder representations from transformer. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 1441–1450.

- Pan, Z.; Cai, F.; Chen, W.; Chen, H.; Rijke, M. Star graph neural networks for session-based recommendation. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual Event, 19–23 October 2020; pp. 1195–1204.

- Pang, Y.; Wu, L.; Shen, Q.; Zhang, Y.; Wei, Z.; Xu, F.; Chang, E.; Long, B.; Pei, J. Heterogeneous global graph neural networks for personalized session-based recommendation. In Proceedings of the 15th ACM International Conference on Web Search and Data Mining, Virtual Event, 21–25 February 2022; pp. 775–783.

- Feng, L.; Cai, Y.; Wei, E.; Li, J. Graph neural networks with global noise filtering for session-based recommendation. Neurocomputing 2022, 472, 113–123.

- Hao, Y.; Ma, J.; Zhao, P.; Liu, G.; Xian, X.; Zhao, L.; Sheng, V. Multi-dimensional Graph Neural Network for Sequential Recommendation. Pattern Recognit. 2023, 139, 109504.

- Wang, J.; Xie, H.; Wang, F.L.; Lee, L.; Wei, M. Jointly modeling intra-and inter-session dependencies with graph neural networks for session-based recommendations. Inf. Process. Manag. 2023, 60, 103209.

- Wang, Z.; Wei, W.; Cong, G.; Li, X.; Mao, X.; Qiu, M. Global context enhanced graph neural networks for session-based recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 25–30 July 2020; pp. 169–178.

- Qiu, R.; Li, J.; Huang, Z.; Yin, H. Rethinking the item order in session-based recommendation with graph neural networks. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 579–588.

- Chen, T.; Wong, R.C.W. Handling information loss of graph neural networks for session-based recommendation. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual Event, 6–10 July 2020; pp. 1172–1180.

- Li, Q.; Han, Z.; Wu, X.M. Deeper insights into graph convolutional networks for semi-supervised learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Wu, S.; Tang, Y.; Zhu, Y.; Wang, L.; Xie, X.; Tan, T. Session-based recommendation with graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 346–353.

- Zhang, G.P. Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 2001, 50, 159–175.

- Noble, W. What is a support vector machine? Nat. Biotechnol. 2006, 24, 1565–1567.

- Wang, L.; Zhang, Y.; Yao, Y.; Xiao, Z.; Shang, K.; Guo, X.; Yang, J.; Hue, S.; Wang, J. GBRT-Based Estimation of Terrestrial Latent Heat Flux in the Haihe River Basin from Satellite and Reanalysis Datasets. Remote Sens. 2021, 13, 1054.

- Rendle, S.; Freudenthaler, C.; Schmidt-Thieme, L. Factorizing personalized markov chains for next-basket recommendation. In Proceedings of the 19th International Conference on World Wide Web, Raleigh, NC, USA, 26–30 April 2010; pp. 811–820.

- He, R.; Kang, W.C.; McAuley, J. Translation-based recommendation. In Proceedings of the 11th ACM Conference on Recommender Systems, Como, Italy, 27–31 August 2017; pp. 161–169.

- He, R.; Fang, C.; Wang, Z.; McAuley, J. Vista: A visually, socially, and temporally-aware model for artistic recommendation. In Proceedings of the 10th ACM Conference on Recommender Systems, Boston, MA, USA, 15–19 September 2016; pp. 309–316.

- Dash, A.; Chakraborty, A.; Ghosh, S.; Mukherjee, A.; Gummadi, K. FaiRIR: Mitigating Exposure Bias From Related Item Recommendations in Two-Sided Platforms. IEEE Trans. Comput. Soc. Syst. 2023, 10, 1301–1313.

- Li, Q.; Peng, H.; Li, J.; Xia, C.; Yang, R.; Sun, L.; Yu, P.S.; He, L. A survey on text classification: From traditional to deep learning. ACM Trans. Intell. Syst. Technol. 2022, 13, 1–41.

- Covington, P.; Adams, J.; Sargin, E. Deep neural networks for youtube recommendations. In Proceedings of the 10th ACM Conference on Recommender Systems, Boston, MA, USA, 15–19 September 2016; pp. 191–198.

- Yan, B.; Wang, H.; Ouyang, Z.; Chen, C.; Xia, A. Item attribute-aware contrastive learning for sequential recommendation. IEEE Access 2023, 11, 70795–70804.

- Wang, J.; Shi, Y.; Yu, H.; Zhang, K.; Wang, X.; Yan, Z.; Li, H. Temporal density-aware sequential recommendation networks with contrastive learning. Expert Syst. Appl. 2023, 211, 118563.

- Chen, J.; Wu, W.; Shi, L.; Ji, Y.; Hu, W.; Chen, X.; Zheng, W.; He, L. DACSR: Decoupled-aggregated end-to-end calibrated sequential recommendation. Appl. Sci. 2022, 12, 11765.

- Li, C.T.; Hsu, C.; Zhang, Y. Fairsr: Fairness-aware sequential recommendation through multi-task learning with preference graph embeddings. ACM Trans. Intell. Syst. Technol. 2022, 13, 1–21.

- Abdollahpouri, H.; Mansoury, M.; Burke, R.; Mobasher, B. The impact of popularity bias on fairness and calibration in recommendation. arXiv 2019, arXiv:1910.05755.

- Rastegarpanah, B.; Gummadi, K.P.; Crovella, M. Fighting fire with fire: Using antidote data to improve polarization and fairness of recommender systems. In Proceedings of the 12th ACM International Conference on Web Search and Data Mining, Melbourne, Australia, 11–15 February 2019; pp. 231–239.

- Jiang, Y.; Yang, Y.; Xia, L.; Huang, C. DiffKG: Knowledge Graph Diffusion Model for Recommendation. In Proceedings of the 17th ACM International Conference on Web Search and Data Mining (WSDM ’24). Association for Computing Machinery, New York, NY, USA, 4–8 March 2024; pp. 313–321.

- Zhu, Z.; Hu, X.; Caverlee, J. Fairness-aware tensor-based recommendation. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 1153–1162.

- Zhang, M.; Hurley, N. Avoiding monotony: Improving the diversity of recommendation lists. In Proceedings of the 2008 ACM Conference on Recommender Systems, Lausanne, Switzerland, 23–25 October 2008; pp. 123–130.

- Wu, Q.; Liu, Y.; Miao, C.; Zhao, Y.; Guan, L.; Tang, H. Recent advances in diversified recommendation. arXiv 2019, arXiv:1905.06589.

- Chen, W.; Ren, P.; Cai, F.; Sun, F.; De Rijke, M. Multi-interest diversification for end-to-end sequential recommendation. ACM Trans. Inf. Syst. 2021, 40, 1–30.

- Chen, W.; Ren, P.; Cai, F.; De Rijke, M. Improving end-to-end sequential recommendations with intent-aware diversification. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual Event, 19–23 October 2020; pp. 175–184.

- Deng, Y.; Hou, X.; Li, B.; Wang, J.; Zhang, Y. A novel method for improving optical component smoothing quality in robotic smoothing systems by compensating path errors. Opt. Express 2023, 31, 30359–30378.

- Yang, Y.; Huang, C.; Xia, L.; Li, C. Knowledge graph contrastive learning for recommendation. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 1434–1443.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Monti, F.; Bronstein, M.; Bresson, X. Geometric matrix completion with recurrent multi-graph neural networks. In Proceedings of the Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Li, Z.; Li, S.; Francis, A.; Luo, X. A novel calibration system for robot arm via an open dataset and a learning perspective. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 5169–5173.

- Wu, Y.; DuBois, C.; Zheng, A.X.; Ester, M. Collaborative denoising auto-encoders for top-n recommender systems. In Proceedings of the 9th ACM International Conference on Web Search and Data Mining, San Francisco, CA, USA, 22–25 February 2016; pp. 153–162.

- Hinton, G.E. A practical guide to training restricted Boltzmann machines. In Neural Networks: Tricks of the Trade, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 599–619.

- Donkers, T.; Loepp, B.; Ziegler, J. Sequential user-based recurrent neural network recommendations. In Proceedings of the 11th ACM Conference on Recommender Systems, Como, Italy, 27–31 August 2017; pp. 152–160.

- Li, Z.; Li, S.; Bamasag, O.O.; Alhothali, A.; Luo, X. Diversified regularization enhanced training for effective manipulator calibration. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 8778–8790.

- Zhu, F.; Wang, Y.; Chen, C.; Zhou, J.; Li, L.; Liu, G. Cross-domain recommendation: Challenges, progress, and prospects. arXiv 2021, arXiv:2103.01696.

- Wang, X.; He, X.; Wang, M.; Feng, F.; Chua, T. Neural graph collaborative filtering. In Proceedings of the 42th International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 165–174. [Google Scholar]

- He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; Chua, T. Neural collaborative filtering. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 173–182. [Google Scholar]

- Man, T.; Shen, H.; Jin, X.; Cheng, X. Cross-domain recommendation: An embedding and mapping approach. In Proceedings of the 26th International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; Volume 17, pp. 2464–2470. [Google Scholar]

- Sahu, A.K.; Dwivedi, P. User profile as a bridge in cross-domain recommender systems for sparsity reduction. Appl. Intell. 2019, 49, 2461–2481. [Google Scholar] [CrossRef]

- Duan, H.; Zhu, Y.; Liang, X.; Zhu, Z.; Liu, P. Multi-feature fused collaborative attention network for sequential recommendation with semantic-enriched contrastive learning. Inf. Process. Manag. 2023, 60, 103416. [Google Scholar] [CrossRef]

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Volume 27. [Google Scholar]

- Zhang, Y.; Bai, Y.; Chang, J.; Zhang, X.; Lu, S.; Lu, J.; Feng, F.; Niu, Y.; Song, Y. Leveraging watch-time feedback for short-video recommendations: A causal labeling framework. In Proceedings of the 32th ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; pp. 4952–4959. [Google Scholar]

- Deng, Y.; Hou, X.; Li, B.; Wang, J.; Zhang, Y. A highly powerful calibration method for robotic smoothing system calibration via using adaptive residual extended Kalman filter. Robot. Comput.-Integr. Manuf. 2024, 86, 102660. [Google Scholar] [CrossRef]

- Wu, Y.; Xie, R.; Zhu, Y.; Zhuang, F.; Zhang, X.; Lin, L. Personalized prompt for sequential recommendation. IEEE Trans. Knowl. Data Eng. 2024, 36, 3376–3389. [Google Scholar] [CrossRef]

- Wang, L.; Lim, E.P.; Liu, Z.; Zhao, T. Explanation guided contrastive learning for sequential recommendation. In Proceedings of the 31th ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 2017–2027. [Google Scholar]

- Yu, J.; Yin, H.; Xia, X.; Chen, T.; Cui, L.; Nguyen, Q. Are graph augmentations necessary? Simple graph contrastive learning for recommendation. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022; pp. 1294–1303. [Google Scholar]

- Wan, Z.; Liu, X.; Wang, B.; Qiu, J.; Li, B.; Guo, T.; Chen, G.; Wang, Y. Spatio-temporal contrastive learning enhanced GNNs for session-based recommendation. ACM Trans. Inf. Syst. 2023, 42, 1–26.

- Zhang, Y.; Yin, G.; Dong, Y. Contrastive learning with frequency domain for sequential recommendation. Appl. Soft Comput. 2023, 144, 110481.

- Liu, Z.; Ma, Y.; Hildebrandt, M.; Ouyang, Y.; Xiong, Z. CDARL: A contrastive discriminator-augmented reinforcement learning framework for sequential recommendations. Knowl. Inf. Syst. 2022, 64, 2239–2265.

- Meng, W.; Yang, D.; Xiao, Y. Incorporating user micro-behaviors and item knowledge into multi-task learning for session-based recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 25–30 July 2020; pp. 1091–1100.

- Tang, G.; Zhu, X.; Guo, J.; Dietze, S. Time enhanced graph neural networks for session-based recommendation. Knowl.-Based Syst. 2022, 251, 109204.

- Yuan, F.; Karatzoglou, A.; Arapakis, I.; Jose, J.; He, X. A simple convolutional generative network for next item recommendation. In Proceedings of the 12th ACM International Conference on Web Search and Data Mining, Melbourne, Australia, 11–15 February 2019; pp. 582–590.

- Qiu, R.; Huang, Z.; Li, J.; Yin, H. Exploiting cross-session information for session-based recommendation with graph neural networks. ACM Trans. Inf. Syst. 2020, 38, 1–23.

- Chen, T.; Wong, R.C.W. An efficient and effective framework for session-based social recommendation. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, Virtual Event, 8–12 March 2021; pp. 400–408.

- Chen, Y.H.; Huang, L.; Wang, C.D.; Lai, J. Hybrid-order gated graph neural network for session-based recommendation. IEEE Trans. Ind. Inform. 2021, 18, 1458–1467.

- Zhang, C.; Zheng, W.; Liu, Q.; Nie, J.; Zhang, H. SEDGN: Sequence enhanced denoising graph neural network for session-based recommendation. Expert Syst. Appl. 2022, 203, 117391.

- Zhu, X.; Tang, G.; Wang, P.; Li, C.; Guo, J.; Dietze, S. Dynamic global structure enhanced multi-channel graph neural network for session-based recommendation. Inf. Sci. 2023, 624, 324–343.

- Chen, C.; Guo, J.; Song, B. Dual attention transfer in session-based recommendation with multi-dimensional integration. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 11–15 July 2021; pp. 869–878.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Zhu, T.; Sun, L.; Chen, G. Graph-based embedding smoothing for sequential recommendation. IEEE Trans. Knowl. Data Eng. 2023, 35, 496–508.

- Tao, Y.; Wang, C.; Yao, L.; Li, W.; Yu, Y. Item trend learning for sequential recommendation system using gated graph neural network. Neural Comput. Appl. 2023, 35, 13077–13092.

- Sang, S.; Yuan, W.; Li, W.; Yang, Z.; Zhang, Z. Position-aware graph neural network for session-based recommendation. Knowl.-Based Syst. 2023, 262, 110201.

- Xian, X.; Fang, L.; Sun, S. ReGNN: A repeat aware graph neural network for session-based recommendations. IEEE Access 2020, 8, 98518–98525.

- Jin, D.; Wang, L.; Zheng, Y.; Song, G.; Jiang, F.; Li, X.; Lin, W.; Pan, S. Dual intent enhanced graph neural network for session-based new item recommendation. In Proceedings of the ACM Web Conference 2023, Austin, TX, USA, 30 April–4 May 2023; pp. 684–693.

- Yu, L.; Zhang, C.; Liang, S.; Zhang, X. Multi-order attentive ranking model for sequential recommendation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 5709–5716.

- Tang, J.; Wang, K. Personalized top-n sequential recommendation via convolutional sequence embedding. In Proceedings of the 11th ACM International Conference on Web Search and Data Mining, Marina Del Rey, CA, USA, 5–9 February 2018; pp. 565–573.

- Belletti, F.; Chen, M.; Chi, E.H. Quantifying long range dependence in language and user behavior to improve RNNs. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1317–1327.

- Ma, C.; Ma, L.; Zhang, Y.; Sun, J.; Liu, X.; Coates, M. Memory augmented graph neural networks for sequential recommendation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 5045–5052.

- Qiu, R.; Yin, H.; Huang, Z.; Chen, T. GAG: Global attributed graph neural network for streaming session-based recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 25–30 July 2020; pp. 669–678.

- Zhou, H.; Tan, Q.; Huang, X.; Zhou, K.; Wang, X. Temporal augmented graph neural networks for session-based recommendations. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 11–15 July 2021; pp. 1798–1802.

- Xue, L.; Yang, D.; Xiao, Y. Factorial user modeling with hierarchical graph neural network for enhanced sequential recommendation. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo, Taipei, Taiwan, 18–22 July 2022; pp. 1–6.

- Zhang, M.; Wu, S.; Yu, X.; Liu, Q.; Wang, L. Dynamic graph neural networks for sequential recommendation. IEEE Trans. Knowl. Data Eng. 2023, 35, 4741–4753.

- Sheng, Z.; Zhang, T.; Zhang, Y.; Gao, S. Enhanced graph neural network for session-based recommendation. Expert Syst. Appl. 2023, 213, 118887.

- Xu, G.; Yang, J.; Guo, J.; Huang, Z.; Zhang, B. Int-GNN: A user intention aware graph neural network for session-based recommendation. In Proceedings of the ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Hua, S.; Gan, M. Intention-aware denoising graph neural network for session-based recommendation. Appl. Intell. 2023, 53, 23097–23112.

- Hsu, C.; Li, C.T. RetaGNN: Relational temporal attentive graph neural networks for holistic sequential recommendation. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021; pp. 2968–2979.

- Ricci, F.; Rokach, L.; Shapira, B. Recommender systems: Introduction and challenges. In Recommender Systems Handbook; Springer: Berlin/Heidelberg, Germany, 2015; pp. 1–34.

- Li, Y.; Yang, C.; Ni, T.; Zhang, Y.; Liu, A. Long and short-term interest contrastive learning under filter-enhanced sequential recommendation. IEEE Access 2023, 11, 95928–95938.

- Alharbi, N.; Caragea, D. Cross-domain attentive sequential recommendations based on general and current user preferences (CD-ASR). In Proceedings of the IEEE/WIC/ACM International Conference on Web Intelligence and Intelligent Agent Technology, Melbourne, Australia, 14–17 December 2021; pp. 48–55.

- Lian, J.; Zhang, F.; Xie, X.; Sun, G. CCCFNet: A content-boosted collaborative filtering neural network for cross domain recommender systems. In Proceedings of the 26th International Conference on World Wide Web Companion, Perth, Australia, 3–7 April 2017; pp. 817–818.

- Zhong, S.T.; Huang, L.; Wang, C.D.; Lai, J.; Yu, P. An autoencoder framework with attention mechanism for cross-domain recommendation. IEEE Trans. Cybern. 2022, 52, 5229–5241.

- Zhang, L.; Wang, P.; Li, J.; Xiao, Z.; Shi, H. Attentive hybrid recurrent neural networks for sequential recommendation. Neural Comput. Appl. 2021, 33, 11091–11105.

- Li, A.; Cheng, Z.; Liu, F.; Gao, Z.; Guan, W.; Peng, Y. Disentangled graph neural networks for session-based recommendation. IEEE Trans. Knowl. Data Eng. 2023, 35, 7870–7882.

- Huang, L.; Ma, Y.; Liu, Y.; Du, B.; Wang, S.; Li, D. Position-enhanced and time-aware graph convolutional network for sequential recommendations. ACM Trans. Inf. Syst. 2023, 41, 1–32.

- Guo, N.; Liu, X.; Li, S.; Ma, Q.; Gao, K.; Han, B.; Zheng, L.; Guo, S.; Guo, X. Poincaré Heterogeneous Graph Neural Networks for Sequential Recommendation. ACM Trans. Inf. Syst. 2023, 41, 1–26.

- Sang, S.; Liu, N.; Li, W.; Zhang, Z.; Qin, Q.; Yuan, W. High-order attentive graph neural network for session-based recommendation. Appl. Intell. 2022, 52, 16975–16989.

- Hao, J.; Dun, Y.; Zhao, G.; Wu, Y.; Qian, X. Annular-graph attention model for personalized sequential recommendation. IEEE Trans. Multimed. 2021, 24, 3381–3391.

- Wu, B.; He, X.; Wu, L.; Zhang, X.; Ye, Y. Graph-augmented co-attention model for socio-sequential recommendation. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 4039–4051.

- Vargas, S.; Castells, P. Rank and relevance in novelty and diversity metrics for recommender systems. In Proceedings of the 5th ACM Conference on Recommender Systems, Chicago, IL, USA, 23–27 October 2011; pp. 109–116.

- Shani, G.; Gunawardana, A. Evaluating recommendation systems. In Recommender Systems Handbook; Springer: Berlin/Heidelberg, Germany, 2010; pp. 257–297.

- Avazpour, I.; Pitakrat, T.; Grunske, L.; Grundy, J. Dimensions and metrics for evaluating recommendation systems. In Recommendation Systems in Software Engineering; Springer: Berlin/Heidelberg, Germany, 2013; pp. 245–273.

- Boka, F.T.; Niu, Z.; Neupane, R.B. A survey of sequential recommendation systems: Techniques, evaluation, and future directions. Inf. Syst. 2024, 125, 102427.

- Nasir, M.; Ezeife, C.I. A survey and taxonomy of sequential recommender systems for e-commerce product recommendation. SN Comput. Sci. 2023, 4, 708.

- He, R.; McAuley, J. Ups and downs: Modeling the visual evolution of fashion trends with one-class collaborative filtering. In Proceedings of the 25th International Conference on World Wide Web, Montréal, QC, Canada, 11–15 April 2016; pp. 507–517.

- McAuley, J.; Targett, C.; Shi, Q.; Hengel, A. Image-based recommendations on styles and substitutes. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, Santiago, Chile, 9–13 August 2015; pp. 43–52.

- Zhu, H.; Chang, D.; Xu, Z.; Zhang, P.; Li, X.; He, J.; Li, H.; Xu, J.; Gai, K. Joint optimization of tree-based index and deep model for recommender systems. In Proceedings of the 33rd Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 32, pp. 1–10.

- Zangerle, E.; Pichl, M.; Gassler, W.; Specht, G. #Nowplaying music dataset: Extracting listening behavior from Twitter. In Proceedings of the 1st International Workshop on Internet-Scale Multimedia Management, Orlando, FL, USA, 7 November 2014; pp. 21–26.

- Harper, F.M.; Konstan, J.A. The MovieLens datasets: History and context. ACM Trans. Interact. Intell. Syst. 2015, 5, 1–19.

- McFee, B.; Lanckriet, G.R.G. Hypergraph models of playlist dialects. In Proceedings of the International Society for Music Information Retrieval, Porto, Portugal, 8–12 October 2012; Volume 12, pp. 343–348.

- Zhang, Y.; Humbert, M.; Rahman, T.; Li, C.; Pang, J.; Backes, M. Tagvisor: A privacy advisor for sharing hashtags. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 287–296.

- Jannach, D.; Zanker, M.; Felfernig, A.; Friedrich, G. Recommender Systems: An Introduction; Cambridge University Press: Cambridge, UK, 2010.

- Wang, S.; Hu, L.; Wang, Y.; He, X.; Sheng, Q.; Orgun, M.; Cao, L.; Wang, N.; Ricci, F.; Yu, P. Graph learning approaches to recommender systems: A review. arXiv 2020, arXiv:2004.11718.

- Berg, R.; Kipf, T.N.; Welling, M. Graph convolutional matrix completion. arXiv 2017, arXiv:1706.02263.

- Ying, R.; He, R.; Chen, K.; Eksombatchai, P.; Hamilton, W.; Leskovec, J. Graph convolutional neural networks for web-scale recommender systems. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 974–983.

- Yin, Z.; Han, K.; Wang, P.; Hu, H. Multi global information assisted streaming session-based recommendation system. IEEE Trans. Knowl. Data Eng. 2023, 35, 8245–8256.

- Yuan, H.; Yu, H.; Gui, S.; Ji, S. Explainability in graph neural networks: A taxonomic survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 5782–5799.

- Yang, Z.; Dong, S.; Hu, J. GFE: General knowledge enhanced framework for explainable sequential recommendation. Knowl.-Based Syst. 2021, 230, 107375.

- Zhang, Y.; Chen, X. Explainable Recommendation: A Survey and New Perspectives. Foundations and Trends® in Information Retrieval; Now Publishers Inc.: Hanover, MA, USA, 2020; Volume 14, pp. 1–101.

- Guo, L.; Zhang, J.; Tang, L.; Chen, T.; Zhu, L.; Yin, H. Time interval-enhanced graph neural network for shared-account cross-domain sequential recommendation. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 4002–4016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, P.; Shu, H.; Gan, J.; Deng, X.; Liu, Y.; Sun, W.; Chen, T.; Hu, C.; Hu, Z.; Deng, Y.; et al. Sequential Recommendation System Based on Deep Learning: A Survey. Electronics 2025, 14, 2134. https://doi.org/10.3390/electronics14112134