Abstract

Tracking the precise movement of surgical tools is essential for enabling automated analysis, providing feedback, and enhancing safety in robotic-assisted surgery. Accurate 3D tracking of surgical tooltips is challenging with monocular video because depth information is difficult to extract from a single view. We propose a pipeline that combines two state-of-the-art foundation models, Florence-2 and Segment Anything 2 (SAM 2), for zero-shot 2D localization of tooltip coordinates from monocular video input. Localization predictions are refined through supervised training of the YOLOv11 segmentation model to enable real-time applications. The depth estimation model Metric3D computes the relative depth and provides tooltip camera coordinates, which are subsequently transformed into world coordinates via a linear model estimating rotation and translation parameters. An experimental evaluation on the JIGSAWS Suturing Kinematic dataset achieves a 3D Average Jaccard score on tooltip tracking of 84.5 and 91.2 for the zero-shot and supervised approaches, respectively. The results validate the effectiveness of our approach and its potential to enhance real-time guidance and assessment in robotic-assisted surgical procedures.

1. Introduction

1.1. Robotic Surgery

The popularity of robot-assisted surgery has surged due to 3D visualization and wristed instruments enabling enhanced dexterity and precision in minimally invasive procedures. This is reflected in the 8.4-fold increase in the robotic procedure volume, rising from 1.8% in 2012 to 15.1% in 2018 [1,2]. However, the effective operation of surgical robots requires extensive training, as these systems demand exceptional hand–eye coordination and bimanual dexterity [3,4]. Consequently, the development of techniques to objectively and automatically evaluate the proficiency of surgical trainees holds considerable value, enabling standardized assessment and improved training outcomes.

1.2. Tool Tracking and Skill Assessment

The analysis of surgical tool motions has become an effective way to objectively assess the skill level of a trainee. Multiple studies show that quantitative tool motion metrics (e.g., path length, smoothness, economy of motion) discriminate expertise levels and correlate with operative efficiency and complication rates [5,6,7]. One possible approach involves capturing the kinematic data that are generated during robotic-assisted procedures, which provide detailed information about the spatial and temporal characteristics of tool movement [8]. These data can then be analyzed using advanced machine learning (ML) algorithms to extract meaningful metrics that correlate with surgical expertise. However, the reliance on kinematic data is constrained by the proprietary nature of robotic systems and the need for specialized hardware, limiting its accessibility and scalability. Tools for kinematic data analysis are currently not readily available for traditional laparoscopic procedures, which account for the majority of minimally invasive surgeries (MISs) worldwide, with approximately 3 million being performed annually in the US alone [9]. Expanding kinematic performance analysis to traditional laparoscopy would therefore offer substantial benefits for training and benchmarking purposes.

As a result, there is growing interest in alternative modalities to the use of raw kinematic data, such as monocular video, to achieve comparable insights. Monocular video enables non-invasive, scalable, and cost-effective tracking of tool motion, providing a viable solution for skill assessment in diverse surgical settings, including traditional laparoscopy, without the need for specialized hardware. Furthermore, the use of real-time video analysis can provide immediate kinematic feedback, enhancing the adaptability and responsiveness of training programs by allowing trainees to adjust their techniques as they perform. A system that operates on monocular video can be passively applied to large datasets of pre-recorded surgeries, facilitating retrospective studies and leveraging the ever-growing repositories of surgical video data. This capability not only enhances the accessibility of skill assessment but also promotes data-driven approaches to improving surgical training and evaluation in centers that cannot afford experienced trainers or additional robotic consoles. Whereas depth estimation using stereoscopic cameras is robust, its requirement for specialized dual-camera hardware limits its applicability in standard laparoscopy and prevents retrospective analysis of vast existing monocular video archives. Monocular depth estimation and 3D spatial understanding for the purposes of surgical tool tracking remain open areas of research. Our study is motivated by the need to develop an effective method focusing on accessibility and broad applicability to track 3D tooltip positions across a video, acquired via a monocular camera, without the need for additional data.

1.3. Prior Approaches

Computer vision (CV) has been applied to surgical video analysis across various tasks, including phase recognition, instrument detection, and skill assessment [10,11,12]. Tool tracking using machine learning (ML) techniques has traditionally been achieved by training specialized models that are capable of identifying the position of surgical instruments within a video frame [13,14,15,16]. These methods have demonstrated effectiveness in determining tool positions with respect to the 2D image plane. Popular methods for 3D instrument tracking include setting up an interface to directly record kinematic data that are generated as the robotic arms are manipulated by the surgeon [17], employing a stereo (dual-camera) setup to compute depth information and accurately localize the tools in 3D space [18,19], or even utilizing neural fields to perform a slow reconstruction of the scene in 3D [20]. The release of the JIGSAWS dataset [21], which contains videos of surgical training exercises along with corresponding 3D kinematic annotations, enables the benchmarking of new techniques by offering synchronized video and kinematic data, allowing researchers to explore innovative approaches, including those that operate solely on monocular video inputs.

1.4. Zero-Shot Tool Tracking

Recent advancements in CV have led to the emergence of foundation models that are trained on massive and diverse datasets, often incorporating multiple input modalities [22]. In particular, the power of deep learning (DL) in bridging vision and text has been exemplified by models such as CLIP [23], LLaVA [24], and Segment Anything (SAM) [25]. These models leverage paired image and text data to develop a rich semantic understanding of visual features, enabling them to operate effectively in zero-shot settings. In such scenarios, the model can interpret and respond to inputs that were not explicitly encountered during training by applying knowledge transfer and reasoning capabilities. This ability to generalize to unseen data makes them particularly valuable in complex, dynamic applications like surgical instrument tracking, where diverse scenarios and unique tool configurations are common. Furthermore, these models can serve as a powerful tool for generating initial annotations, which can subsequently be refined and validated by domain experts.

2. Materials and Methods

2.1. JIGSAWS Suturing Dataset

The JHU-ISI Gesture and Skill Assessment Working Set (JIGSAWS) is a benchmark dataset designed to evaluate surgical skill and robotic surgical tool manipulation. The dataset includes three surgical tasks (knot tying, needle passing, and suturing), performed by eight participants with varying skill levels (novice, intermediate, and expert). Our study focuses on the suturing task, which includes 78 video recordings of the eight participants performing a suturing exercise using the da Vinci Surgical System (dVSS; developed by Intuitive Surgical, CA, USA). The suturing task involves the subject grasping a surgical needle and aligning it with a vertical incision on a bench-top model, passing it through the simulated tissue from the designated entry dot on one side to the corresponding exit dot on the opposite side (Figure 1). Upon each exit, the needle is withdrawn and handed to the right hand, and the process is repeated three additional times for a total of four passes. The subjects were not allowed to move the camera or apply the clutch while performing the task.

Figure 1. Example frames captured from the suturing exercise in the JIGSAWS dataset.

Each video captures the task being performed, with a frame resolution varying between 640 × 480 pixels and 320 × 240 pixels at a framerate of 30 frames per second (fps). This dataset is widely used for skill assessment, gesture recognition, and tool tracking in robotic surgery research [26,27,28].

The dataset includes synchronized motion data that are stored as kinematic variables for every video frame. A total of 19 kinematic variables are used to describe the motion of each manipulator, covering both the master tool manipulators (MTMs) and the patient-side manipulators (PSMs). For our study, the annotations for the left and right PSMs are used as the ground truth 3D coordinate locations of the tooltips. The dataset does not include calibration parameters for the cameras that were used to record the tasks.

Although the JIGSAWS Suturing dataset provides well-annotated kinematic and stereo video streams, its dry-lab bench-top setup lacks realistic tissue deformation, bleeding, and lighting variability and includes only a small, standardized cohort, limiting its reflection of true surgical complexity. Nonetheless, it remains an invaluable benchmark for initial algorithm development and reproducible comparative evaluation in surgical skill assessment.

2.2. Computer Vision Pipeline

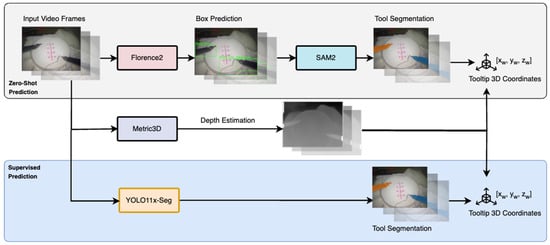

This study aims to develop a system that utilizes computer vision (CV) and deep learning (DL) models to estimate the 3D positions of surgical tooltips from monocular video. The proposed system employs a multi-stage approach as demonstrated below (Figure 2).

Figure 2. Overview of the multi-stage pipeline for 3D tooltip prediction from monocular video.

- Zero-Shot Tool Localization: A foundation model, Florence-2 [29], is employed to perform zero-shot localization of surgical tools. This model uses a text prompt to identify the position of the tools within each video frame and generate bounding box annotations, allowing it to operate in the absence of task-specific training data.

- Zero-Shot Tool Segmentation: Another foundation model, Segment Anything 2 (SAM 2) [30], is applied to further refine the localization. SAM 2 takes the detected bounding boxes as input and outputs precise segmentation masks for the tools that are present in the scene. The tooltip coordinates are extracted based on the mask geometry: for the right tool, the top-left pixel is selected, while for the left tool, the bottom-right pixel is chosen. This ensures accurate localization of tooltip positions in the 2D image plane.

- Depth Estimation: To extend the 2D tooltip coordinates into 3D space, we employ a monocular depth estimation model, Metric3D [31]. This model computes a relative depth value for each pixel in the range of [0.0, 1.0]. Using this depth information, the 2D tooltip coordinates (xc, yc) are combined with the estimated depth (zc) to produce the 3D tooltip positions in the camera coordinate frame as (xc, yc, zc).

- Transformation to World Coordinates: To reconstruct the 3D trajectories of the tooltips in the world coordinate frame, we apply a linear transformation. This involves estimating the rotation matrix (R) and translation vector (T), which represent the camera extrinsic parameters relative to the surgical robot. By applying this transformation, the 3D camera coordinates (xc, yc, zc) are mapped to world coordinates (xw, yw, zw), enabling trajectory reconstruction. The reconstructed trajectories are then compared against the ground truth kinematic annotations of the JIGSAWS dataset to evaluate the approach.

- Comparison with a Supervised Model: A subset of the dataset is annotated with precise tool masks by healthcare professionals. These annotations are used to train an independent, task-specific tool segmentation model (YOLOv11 [32]). The supervised model demonstrates improved tool segmentation accuracy and reduced inference time compared to the foundation models, making it better suited for real-time surgical applications. The trained model, combined with the existing depth estimation process, is then applied to the remaining dataset for 3D tooltip tracking. The results are compared with the zero-shot approach to evaluate the benefits of domain-specific supervised training, highlighting the trade-offs between general-purpose foundation models and specialized supervised models for robotic-assisted surgery tasks.
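The segmentation and depth steps in the list above can be illustrated with a minimal sketch. It assumes that "top-left" means the mask pixel with the smallest x + y and "bottom-right" the one with the largest x + y; this reading is an assumption, as the exact selection rule is not specified here. The function names `tooltip_from_mask` and `camera_point` are hypothetical, and the mask and depth map are taken to be NumPy arrays of the same height and width.

```python
import numpy as np


def tooltip_from_mask(mask: np.ndarray, side: str) -> tuple[int, int]:
    """Return the (x, y) tooltip pixel for a binary tool mask.

    Assumption: the top-left pixel minimises x + y and the bottom-right
    pixel maximises x + y. The right tool uses top-left, the left tool
    uses bottom-right, as described in the text.
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    ys, xs = np.nonzero(mask)  # row (y) and column (x) indices of mask pixels
    if xs.size == 0:
        raise ValueError("empty mask: no tool pixels found")
    score = xs + ys
    idx = np.argmin(score) if side == "right" else np.argmax(score)
    return int(xs[idx]), int(ys[idx])


def camera_point(mask: np.ndarray, depth: np.ndarray, side: str) -> np.ndarray:
    """Concatenate the tooltip pixel with the depth at that pixel: (xc, yc, zc)."""
    x, y = tooltip_from_mask(mask, side)
    return np.array([x, y, depth[y, x]], dtype=float)
```

A frame with no detected tool raises an error in this sketch; a full pipeline would more likely skip or interpolate such frames.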

This multi-stage pipeline combines state-of-the-art vision models with depth estimation and transformation techniques, providing a straightforward and effective solution for 3D tracking of surgical tooltips from monocular video. Notably, employing separate steps for bounding box proposal and tool segmentation improves the precision of tooltip identification, as the two models exhibit complementary strengths. The zero-shot stage remains advantageous for two key reasons. First, the SAM 2 model can generalize to previously unseen instruments with only minor prompt adjustments [33]. Second, the zero-shot masks supply large-scale, low-cost pseudo-labels that reduce the manual annotation time by an order of magnitude and boost performance when a small, expert-verified subset is used for fine-tuning, as demonstrated in our experiments.

A further description of each DL model is provided below.

Florence-2: Florence-2 is a state-of-the-art vision-language foundation model that excels in multi-task learning across diverse computer vision tasks. It uses a unified architecture to handle over 10 vision-related tasks, such as image classification, object detection, segmentation, and visual question answering, without needing separate task-specific models. Its core is a transformer-based multi-modal encoder–decoder, which processes both image and text embeddings for seamless integration of visual and textual information. A standout feature is its ability to handle region-specific tasks, like object detection, through the use of specialized location tokens for bounding boxes or polygons. We apply Florence-2 to each frame of the video to extract bounding box proposals for the tools that are present in the frame. Extra boxes are eliminated so that only a single tightest-fit box remains for each of the left and right tools.
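One way the box elimination step could be implemented is sketched below. The rule used is an assumption rather than the method reported here: each box is assigned to the left or right tool by comparing its center with the image midline, and "tightest fit" is interpreted as smallest area. The function name `select_tool_boxes` is hypothetical.

```python
def select_tool_boxes(boxes, image_width):
    """Keep one box per tool from a list of (x1, y1, x2, y2) proposals.

    Assumptions (not taken from the paper): a box belongs to the left tool
    if its center lies left of the image midline, and the tightest-fit box
    is the one with the smallest area.
    """
    best = {"left": None, "right": None}
    best_area = {"left": float("inf"), "right": float("inf")}
    for x1, y1, x2, y2 in boxes:
        side = "left" if (x1 + x2) / 2 < image_width / 2 else "right"
        area = (x2 - x1) * (y2 - y1)
        if area < best_area[side]:
            best[side] = (x1, y1, x2, y2)
            best_area[side] = area
    return best
```

A side with no proposal is returned as `None`, which lets the caller decide whether to re-run detection or reuse the previous frame's box.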

Segment Anything 2 (SAM 2): Segment Anything Model 2 (SAM 2) is an advanced computer vision model, designed for segmenting objects in both images and videos. Building on its predecessor, SAM, this updated version introduces several significant improvements that enhance its real-world usability. SAM 2 uses a memory-based architecture designed to process video frames sequentially, ensuring temporal continuity and robust segmentation across dynamic scenes. It incorporates components like a ViT (Vision Transformer) [34] backbone for feature extraction and a novel “occlusion head” for handling occluded objects. Bounding box predictions extracted from Florence-2 are used as prompts to segment the tool and obtain the tooltip pixel coordinates (xc, yc). This approach ensures that even if segmentation predictions for certain frames are lost, the availability of bounding boxes allows for re-inferencing, enhancing robustness across video sequences.

Metric3D: Metric3D is a monocular depth estimation framework that is designed to predict metric depths from a single image, tackling the challenges of real-world depth prediction. Its core innovation is the Canonical Space Transformation Module (CSTM), which maps images to a standardized “canonical space,” normalizing camera intrinsics to mitigate metric ambiguity. After the depth estimation in this space, the results are transformed back to the original view for real-world metric depth predictions. Architecturally, Metric3D employs a ConvNeXt [35] or ViT backbone for feature extraction, leveraging large-scale multi-dataset training to ensure robustness. The model is trained with data from diverse sources, including real-world and synthetic datasets, integrating depth and surface normal predictions to refine its 3D reconstruction capabilities.

The depth estimation model is run independently on the videos in the dataset to obtain per-frame depth map predictions, which contain per-pixel relative depth outputs (zc). Upon tooltip detection, we directly concatenate the tooltip pixel coordinates with the depth prediction at the same location to obtain the 3D coordinates with respect to the camera frame (xc, yc, zc). We estimate a simple linear transformation for each tool, which rotates and translates the tooltip point and is defined as follows:

Xc = [xc, yc, zc]

Xw = [xw, yw, zw] = Xc · R + t

where K = [R|t] represents the estimated camera extrinsic matrix, which has a 3 × 3 rotation component (R) and a 3 × 1 translation vector (t). This is achieved by fitting a simple linear regression model between the predicted camera coordinates and a small subset of the ground truth kinematic data, which serve as the world coordinates. We select 1000 kinematic points (i.e., 1000 frames) from the dataset for this purpose and apply the learnt R and t parameters across the remainder of the dataset. This transformation step is crucial for aligning the camera frame coordinates with the global world frame, enabling accurate trajectory reconstruction. Finally, the computed world coordinates are compared against the ground truth data for evaluation.
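The fit of R and t in Xw = Xc · R + t can be sketched as an ordinary least-squares problem. This is a minimal illustration under stated assumptions, not the exact implementation: the function names are hypothetical, and a plain linear regression of this kind does not constrain R to be an orthonormal rotation, so the fitted R may also absorb scale and shear.

```python
import numpy as np


def fit_linear_transform(cam_pts, world_pts):
    """Least-squares estimate of R (3x3) and t (3,) such that Xw ~ Xc @ R + t.

    cam_pts and world_pts are (N, 3) arrays of matched points, e.g. the
    1000 frames used for fitting.
    """
    cam_pts = np.asarray(cam_pts, dtype=float)
    world_pts = np.asarray(world_pts, dtype=float)
    # Append a column of ones so the translation is fitted as a bias term.
    design = np.hstack([cam_pts, np.ones((len(cam_pts), 1))])  # (N, 4)
    params, *_ = np.linalg.lstsq(design, world_pts, rcond=None)  # (4, 3)
    return params[:3], params[3]


def to_world(cam_pts, R, t):
    """Apply the fitted transform to camera-frame points."""
    return np.asarray(cam_pts, dtype=float) @ R + t
```

Because the text fits one transformation per tool, `fit_linear_transform` would be called once for the left tooltip and once for the right.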

YOLOv11: YOLOv11 extends the You-Only-Look-Once (YOLO) object detection framework, implementing a single-stage detection architecture [32,36,37]. The architecture combines a CNN backbone with three key modifications: the Cross-Stage Partial block with a kernel size of 2 (C3k2), Spatial Pyramid Pooling—Fast (SPPF), and Convolutional block with Parallel Spatial Attention (C2PSA), optimizing both the computational efficiency and feature extraction capabilities. The experimental results demonstrate competitive performance metrics in object detection, tracking, and segmentation tasks. The architecture maintains the characteristic computational efficiency of the YOLO series, with inference latency and parameter count metrics that compare favorably to larger foundation models. We selected YOLOv11-seg for the supervised segmentation stage because its design meets three constraints of tool tracking in robotic surgery: real-time speed, a small memory footprint, and data-efficient fine-tuning.

The supervision stage comprises the annotation of 15 videos, selected through stratified sampling from the dataset and encompassing cases that exhibited varying detection performances in zero-shot evaluation. Surgical residents perform manual tool segmentation mask annotation on this subset to establish a supervised training dataset. The YOLOv11 segmentation variant (YOLO11x-seg) is trained on these annotations, implementing tool detection and segmentation in a unified framework. Using the trained YOLO11x-seg model, tooltip coordinates are extracted and paired with the existing depth estimation and linear transformation procedures to produce 3D predictions.

2.3. Data Preprocessing

All videos from the JIGSAWS Suturing dataset were resized to a fixed resolution of 640 × 480 pixels and down-sampled by half (15 fps). The synchronized kinematic annotations were processed to generate per-frame 3D coordinate labels for the tooltips considering the patient-side manipulator (PSM) positions as the ground truth labels. In the supervision stage, the 15 annotated videos were split into training and validation sets of 10 and 5 videos, respectively, in order to develop the supervised segmentation model, after which the evaluation was carried out on the remainder of the dataset.
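The temporal down-sampling and label pairing can be sketched as follows. The sketch assumes one kinematic row per original 30 fps frame, as the dataset description suggests, and takes the tooltip position columns as a caller-supplied argument, since the column layout should be checked against the dataset documentation. The function name `downsample_with_labels` and its parameters are hypothetical; frame resizing is omitted.

```python
import numpy as np


def downsample_with_labels(n_frames, kinematics, tip_columns, step=2):
    """Keep every `step`-th frame and the kinematic rows at the same indices.

    n_frames: number of frames in the original video.
    kinematics: (M, D) array with one row per original frame (assumption).
    tip_columns: indices of the x, y, z tooltip position for one PSM;
        these depend on the dataset layout and are supplied by the caller.
    Returns the kept frame indices and the matching (K, 3) label array.
    """
    kinematics = np.asarray(kinematics, dtype=float)
    n = min(n_frames, len(kinematics))  # guard against a length mismatch
    keep = np.arange(0, n, step)
    labels = kinematics[keep][:, list(tip_columns)]
    return keep, labels
```

With `step=2`, a 30 fps recording yields labels at 15 fps, matching the frame rate used here.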

3. Results

3.1. Evaluation Metrics

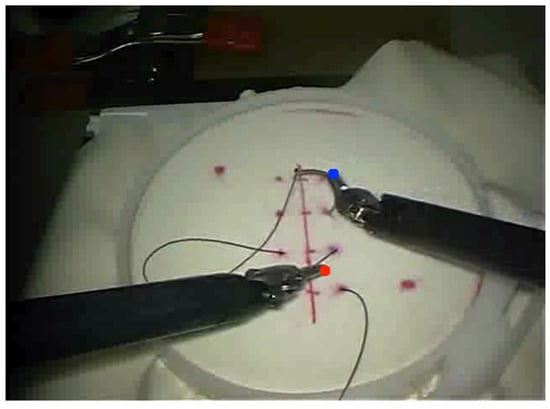

Figure 3. Visualization of tooltip tracking output in 2D (top) and 3D (bottom) for a video frame.

From the JIGSAWS Suturing dataset, we use all 78 videos to evaluate the multi-stage pipeline for 3D tool tracking. Figure 3 provides a visualization of the tooltip tracking in 3D. We calculate regression-based metrics, namely the mean absolute error (MAE) and root mean squared error (RMSE), jointly across the x-, y-, and z-axes. The displacement error is computed as the Euclidean distance between the predicted trajectories and ground truth kinematics, providing a measure of the spatial error. Additionally, we calculate two point-tracking metrics jointly across both tools, inspired by the TAPVid-3D Benchmark [38]:

- 3D-APD: The 3D-APD metric measures the percentage of predicted points that are within a threshold distance from the corresponding ground truth 3D points. The threshold is dynamically adapted based on the depth of each point, assigning higher importance to points that are closer to the camera (less depth). The metric uses the Euclidean norm and an indicator function to assess the tracking accuracy and is evaluated over the frames in which the ground truth points are visible.

- 3D-Average Jaccard (AJ): The 3D-AJ metric is calculated as the ratio of true positives (points that are correctly predicted to be visible within a distance threshold) to the sum of true positives, false positives (incorrectly predicted as visible), and false negatives (points that are predicted as occluded or out of range). The metric emphasizes both tracking accuracy and depth correctness, and in cases of perfect depth estimation, it simplifies to a 2D metric. It is particularly useful for evaluating 3D trajectory predictions across video frames, with a focus on correct tracking and depth estimation.
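The regression metrics and a simplified Jaccard score can be sketched as below. This is an illustration under assumptions: the Jaccard function uses a single fixed distance threshold, whereas the benchmark-style metric described above adapts the threshold to depth; the true/false positive bookkeeping follows the description in the list above. All function names are hypothetical.

```python
import numpy as np


def regression_metrics(pred, gt):
    """MAE and RMSE over all axes jointly, plus mean Euclidean displacement."""
    diff = np.asarray(pred, dtype=float) - np.asarray(gt, dtype=float)
    mae = np.mean(np.abs(diff))
    rmse = np.sqrt(np.mean(diff ** 2))
    displacement = np.mean(np.linalg.norm(diff, axis=1))
    return mae, rmse, displacement


def jaccard_at_threshold(pred, gt, pred_visible, gt_visible, threshold):
    """TP / (TP + FP + FN) at one fixed threshold (simplifying assumption).

    TP: visible in both and within the threshold.
    FP: predicted visible, but ground truth occluded or too far away.
    FN: ground truth visible, but predicted occluded or too far away.
    """
    diff = np.asarray(pred, dtype=float) - np.asarray(gt, dtype=float)
    within = np.linalg.norm(diff, axis=1) < threshold
    pred_visible = np.asarray(pred_visible, dtype=bool)
    gt_visible = np.asarray(gt_visible, dtype=bool)
    tp = np.sum(gt_visible & pred_visible & within)
    fp = np.sum(pred_visible & ~(gt_visible & within))
    fn = np.sum(gt_visible & ~(pred_visible & within))
    denom = tp + fp + fn
    return float(tp / denom) if denom else 0.0
```

Note that a point visible in both prediction and ground truth but outside the threshold counts as both a false positive and a false negative in this formulation.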

3.2. Performance Comparison

The performance comparison (Table 1) between the zero-shot and supervised (YOLO11x-seg) methods for tooltip tracking in robotic surgical videos highlights the strong baseline performance of the zero-shot approach while emphasizing the improvements that are made possible by the supervised model. Although the zero-shot approach achieves commendable results, with displacement errors within 0.02 m (2 cm), its predictions are often noisy, leading to inconsistencies in tracking. The performance of the zero-shot approach can also be partially attributed to the limited set of surgical tools that are used in the JIGSAWS dataset. The YOLO11x-seg model demonstrates a clear advantage in tracking accuracy, with an APD of 93.4, compared to 88.2 for the zero-shot baseline. The displacement error for both the left and right tooltips shows a notable reduction of 10–15%, with the 3D-AJ increasing from 84.5 to 91.2. These enhancements translate to smoother and more reliable tracking trajectories, which are essential for maintaining the integrity of robotic surgical tasks.

Table 1. Three-dimensional tooltip tracking performance based on the JIGSAWS Suturing dataset. We compare zero-shot tooltip detection with supervised tooltip detection using a trained YOLOv11 model (post-refinement). AJ/APD metrics are reported for all objects (tools) present in the scene.

4. Discussion

4.1. Performance Insights

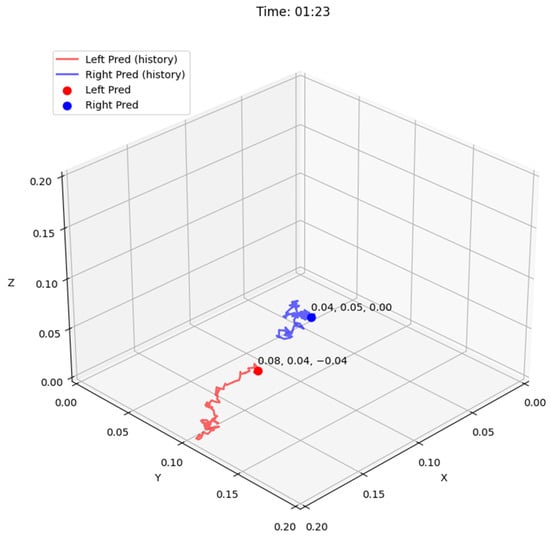

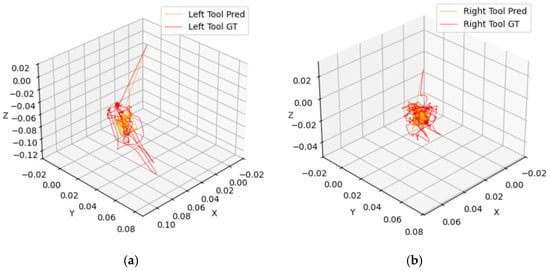

The results reveal a consistent discrepancy between the tracking accuracy of the left and right tooltips, with the left tool exhibiting higher displacement errors and lower 3D Average Jaccard (3D-AJ) scores. A visualization of the predicted and ground-truth tooltip tracks is provided in Figure 4. This difference indicates inherent challenges that are specific to the left tool. A possible explanation lies in the operational dynamics of robotic-assisted surgery. The right tool is frequently used as the primary manipulator for precision tasks, resulting in smoother and more predictable trajectories. In contrast, the left tool often performs stabilization or support roles, involving abrupt, reactive movements that are more challenging for the model to track accurately. This difficulty is compounded in the zero-shot model, which sometimes incorrectly incorporates the needle, or portions of it, as part of the tooltip. Furthermore, image quality issues (low resolution, suboptimal lighting) exacerbate tracking difficulties, particularly for the left tool. These issues manifest as the tool blurring during rapid movements or becoming indistinguishable from the background near the edges of the frame. These findings underscore the importance of considering both tool-specific dynamics and image quality limitations when evaluating tracking systems.

Figure 4. Visualization of predicted vs. ground truth tracks in 3D across a full video for (a) left and (b) right tooltips for the supervised model.

4.2. Considerations and Future Work

The proposed method achieves an average displacement error within 2 cm, which is adequate for many analytic, training, and high-level monitoring purposes. The skill analysis and safety monitoring remain robust when spatial noise is below about a trocar diameter (1–2 cm), as is accepted in JIGSAWS-based benchmarking studies [39]. On the other hand, fine-grained surgical interventions and augmented visualization demand more precise tracking. If a critical structure (e.g., a blood vessel or nerve) lies only a few millimeters from the operative field, a 2 cm (20 mm) localization error could lead to unintended injury or an incomplete resection. For instance, laparoscopic tumor resections aim for only ~5 mm margins of healthy tissue [40].

This study demonstrates the feasibility and effectiveness of combining zero-shot and supervised approaches for 3D tooltip tracking in robotic surgical videos, paving the way for several directions in future research. One avenue is optimizing the integration of depth estimation and segmentation to further enhance tracking accuracy, especially in challenging scenarios involving complex tool trajectories or occlusions. Moreover, the development of such a tool tracking system could serve as the foundation for solving occlusion challenges, thereby enhancing the performance of subsequent computer vision and augmented reality models and advancing various surgical applications.

Improvements in tooltip localization logic, such as dynamic selection strategies for coordinate extraction, could also address minor observed inconsistencies in specific tool configurations. An example of this is to train lightweight keypoint detectors to propose candidate tip locations or compute multiple candidates from mask centroids, depth peaks, and edge gradients and select the tooltip as the best candidate. Additionally, extending the current approach to other surgical tasks and tool types would validate its generalizability and applicability in broader surgical contexts. Incorporating additional kinematic data, such as real-time force and torque measurements, could complement the visual tracking pipeline, enabling a more holistic analysis of tool motion. From the algorithm perspective, different prompting mechanisms could be explored to improve the zero-shot performance of foundation models.

Furthermore, this pipeline’s compatibility with real-time systems is a crucial consideration for practical implementation, where the speed of kinematic feedback is often the determining factor. As shown in Table 2, the pipeline achieves approximately 11.5 fps in the zero-shot setting and 23.6 fps with the supervised model; both rates may be limiting for real-time applications requiring higher frame rates. Many real-time segmentation and tracking pipelines target ~30 fps to balance temporal coherence against computational cost, as humans perceive motion optimally between 30 and 60 fps. While higher rates (e.g., ≥60 fps) can further improve stability for rapid instrument motions or augmented reality overlays, they demand substantially more processing power [41]. Achieving consistent >30 fps performance therefore necessitates further optimization efforts. Leveraging advancements in hardware acceleration and efficient model architectures can facilitate deployment in live settings requiring these higher frame rates. On the other hand, while the processing time is less critical for training and improvement purposes, the hardware cost is paramount for broader accessibility and implementation.
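The frame rates quoted here translate into per-frame time budgets through a simple reciprocal, which makes the gap to a 30 fps target easier to see. The short sketch below only restates that arithmetic; the helper name `frame_time_ms` is hypothetical, and the values are derived from the quoted frame rates rather than taken from Table 2.

```python
def frame_time_ms(fps: float) -> float:
    """Per-frame processing time in milliseconds for a given frame rate."""
    return 1000.0 / fps


zero_shot_ms = frame_time_ms(11.5)   # about 87 ms per frame
supervised_ms = frame_time_ms(23.6)  # about 42 ms per frame
target_ms = frame_time_ms(30.0)      # about 33 ms per frame

# Extra time the supervised pipeline needs per frame relative to a 30 fps budget.
shortfall_ms = supervised_ms - target_ms  # about 9 ms
```

Under these figures, the supervised pipeline would need to save roughly 9 ms per frame to reach 30 fps.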

Table 2. Processing times for different components of the pipeline, with the average inference time being measured per frame on an Nvidia A100 GPU. As expected, substituting foundation models with a supervised model substantially decreases the per-frame processing time, nearly reducing it by half.

Another significant practical limitation of this study is its reliance on data that were generated in a laboratory environment using simulated settings, such as a suture pad, which significantly differs from actual surgical conditions, limiting the translatability of these findings to real-world settings. Therefore, future research should aim to validate kinematic data derived from tool tracking approaches in authentic surgical environments to assess their robustness and clinical applicability. This necessitates the development of kinematic datasets derived from real surgeries to accurately evaluate tool tracking under realistic clinical conditions.

By addressing these considerations, future work can advance automated surgical assessment, safety monitoring, and personalized feedback systems, ultimately contributing to improved patient outcomes in robotic-assisted surgery and beyond.

5. Conclusions

This study introduces a novel pipeline for 3D tracking of robotic surgical tooltips using monocular video, an advancement with significant implications for robotic-assisted surgery. The results, based on the JIGSAWS Suturing dataset, validate the accuracy and reliability of the proposed method, with displacement errors consistently within 2 cm and a 3D Average Jaccard of 91.2—highlighting the system’s precision and robustness. This pipeline represents a step forward in integrating computer vision techniques into surgical applications, providing a framework that bridges the gap between advanced vision models and real-world clinical needs. Beyond its immediate applications in robotic-assisted surgery, the methodology has the potential to be generalized to other domains requiring precise tool tracking, such as industrial robotics and augmented reality. The work underscores the growing importance of computer vision and AI in transforming surgical workflows, paving the way for enhanced safety, performance monitoring, and skill development in next-generation robotic systems.

Author Contributions

Conceptualization, S.N., F.F. and Z.K.; methodology, S.N., M.K.T., M.B. and S.C.; software, S.N. and M.K.T.; validation, S.N., M.K.T. and M.B.; formal analysis, S.N.; investigation, S.N. and M.K.T.; resources, Z.K.; data curation, M.B. and S.C.; writing—original draft preparation, S.N. and M.B.; writing—review and editing, Z.K., F.F. and M.K.T.; visualization, S.N.; supervision, F.F. and Z.K.; project administration, F.F. and Z.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

JIGSAWS is a public dataset, available at https://cirl.lcsr.jhu.edu/research/hmm/datasets/jigsaws_release/ (accessed on 10 September 2024). Additional annotations and models were generated at the Intraoperative Performance Analytics Laboratory (IPAL), Lenox Hill Hospital, and are available from the corresponding author(s) upon reasonable request.

Acknowledgments

Jeffrey Nussbaum (jnussbaum2@northwell.edu), Alexander Farrell (afarrell8@northwell.edu), and Brianna King (bking7@northwell.edu) assisted with annotations.

Conflicts of Interest

Filippo Filicori is a consultant for Boston Scientific and provides research support to Intuitive Surgical.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).