The Role of Facial Action Units in Investigating Facial Movements During Speech

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

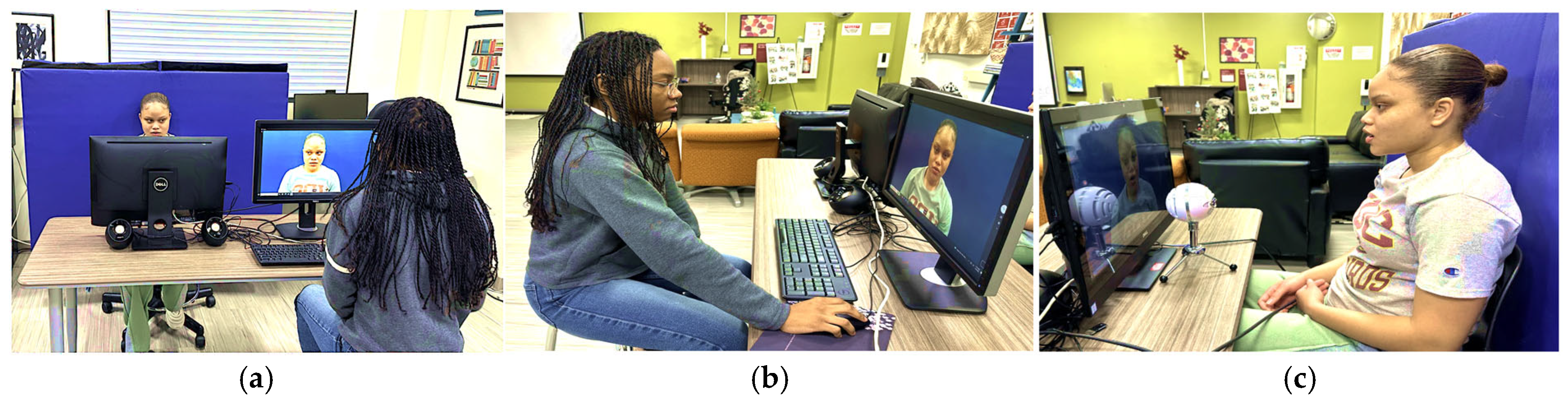

2.2. Data Collection

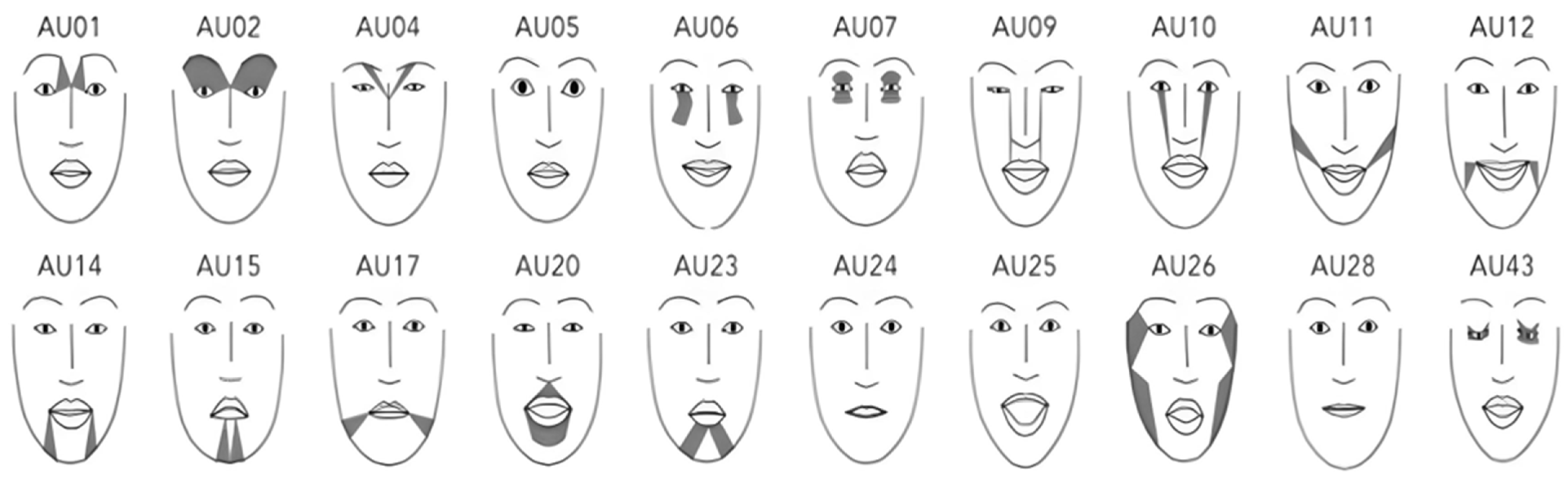

2.3. Data Processing

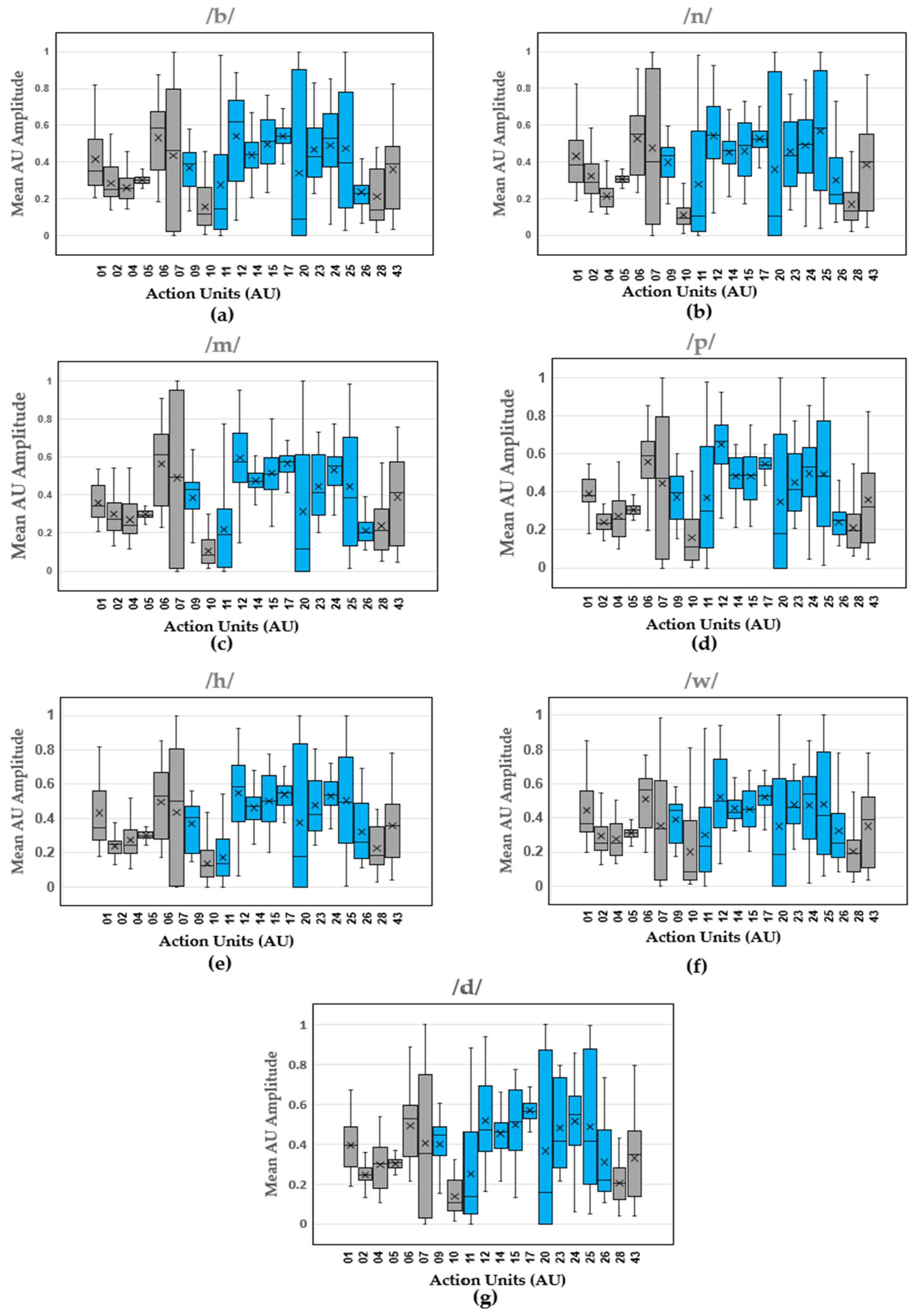

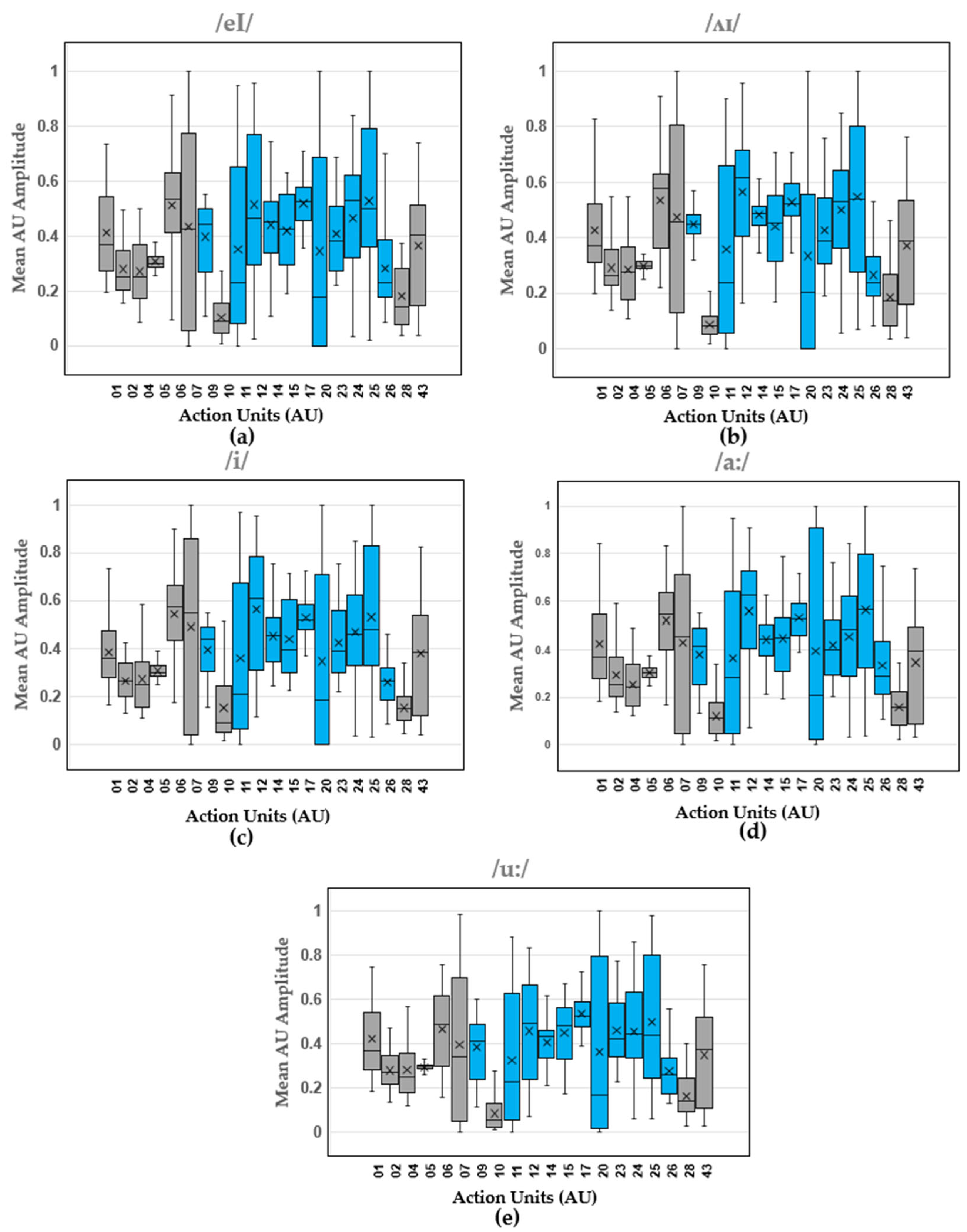

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Menon, E.B.; Ravichandran, S.; Tan, E.S. Speech disorders in closed head injury patients. Singap. Med. J. 1993, 34, 45–48.

- A Tool for Differential Diagnosis of Childhood Apraxia of Speech and Dysarthria in Children: A Tutorial. Available online: https://pubs.asha.org/doi/epdf/10.1044/2022_LSHSS-21-00164 (accessed on 24 March 2025).

- Speech Disorders: Types, Symptoms, Causes, and Treatment. Available online: https://www.medicalnewstoday.com/articles/324764 (accessed on 20 March 2025).

- What Is Apraxia of Speech?|NIDCD. Available online: https://www.nidcd.nih.gov/health/apraxia-speech (accessed on 20 March 2025).

- Björelius, H.; Tükel, Ş. Comorbidity of Motor and Sensory Functions in Childhood Motor Speech Disorders. In Advances in Speech-Language Pathology; IntechOpen: London, UK, 2017.

- Steingass, K.J.; Chicoine, B.; McGuire, D.; Roizen, N.J. Developmental Disabilities Grown Up: Down Syndrome. J. Dev. Behav. Pediatr. 2011, 32, 548–558.

- American Speech-Language-Hearing Association. Speech Sound Disorders: Articulation and Phonology. Available online: https://www.asha.org/practice-portal/clinical-topics/articulation-and-phonology/ (accessed on 12 March 2025).

- Berisha, V.; Utianski, R.; Liss, J. Towards a Clinical Tool for Automatic Intelligibility Assessment. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 2825–2828.

- van Doornik, A.; Welbie, M.; McLeod, S.; Gerrits, E.; Terband, H. Speech and language therapists’ insights into severity of speech sound disorders in children for developing the speech sound disorder severity construct. Int. J. Lang. Commun. Disord. 2025, 60, e70022.

- Tyler, A.A.; Tolbert, L.C. Speech-Language Assessment in the Clinical Setting. Am. J. Speech-Lang. Pathol. 2002, 11, 215–220.

- Rvachew, S. Stimulability and Treatment Success. Top. Lang. Disord. 2005, 25, 207–219.

- Usha, G.P.; Alex, J.S.R. Speech assessment tool methods for speech impaired children: A systematic literature review on the state-of-the-art in Speech impairment analysis. Multimed. Tools Appl. 2023, 82, 35021–35058.

- Selin, C.M.; Rice, M.L.; Girolamo, T.; Wang, C.J. Speech-Language Pathologists’ Clinical Decision Making for Children with Specific Language Impairment. Lang. Speech Hear. Serv. Sch. 2019, 50, 283–307.

- Speech and Language Assessment—Pediatric (Standardized Tests). Available online: https://www.voxlingue.com/post/speech-and-language-assessment-pediatric-standardized-tests (accessed on 24 March 2025).

- Kannass, K.N.; Colombo, J.; Wyss, N. Now, Pay Attention! The Effects of Instruction on Children’s Attention. J. Cogn. Dev. 2010, 11, 509–532.

- Draheim, C.; Pak, R.; Draheim, A.A.; Engle, R.W. The role of attention control in complex real-world tasks. Psychon. Bull. Rev. 2022, 29, 1143–1197.

- Hamm, J.; Kohler, C.G.; Gur, R.C.; Verma, R. Automated Facial Action Coding System for Dynamic Analysis of Facial Expressions in Neuropsychiatric Disorders. J. Neurosci. Methods 2011, 200, 237–256.

- Iwano, K.; Yoshinaga, T.; Tamura, S.; Furui, S. Audio-Visual Speech Recognition Using Lip Information Extracted from Side-Face Images. EURASIP J. Audio Speech Music Process. 2007, 2007, 64506.

- Lee, C.M.; Yildirim, S.; Bulut, M.; Kazemzadeh, A.; Busso, C.; Deng, Z.; Lee, S.; Narayanan, S.S. Emotion recognition based on phoneme classes. In Proceedings of the Interspeech 2004, ISCA, Jeju, Republic of Korea, 4–8 October 2004; pp. 889–892.

- Bates, R.A.; Ostendorf, M.; Wright, R.A. Symbolic phonetic features for modeling of pronunciation variation. Speech Commun. 2007, 49, 83–97.

- Qu, L.; Zou, X.; Li, X.; Wen, Y.; Singh, R.; Raj, B. The Hidden Dance of Phonemes and Visage: Unveiling the Enigmatic Link between Phonemes and Facial Features. arXiv 2023, arXiv:2307.13953.

- Yau, W.C.; Kumar, D.K.; Arjunan, S.P. Visual recognition of speech consonants using facial movement features. Integr. Comput. Eng. 2007, 14, 49–61.

- Cappelletta, L.; Harte, N. Phoneme-To-Viseme Mapping for Visual Speech Recognition. In Proceedings of the International Conference on Pattern Recognition Applications and Methods, SCITEPRESS, Vilamoura, Portugal, 6–8 February 2012; pp. 322–329.

- Pickett, K.L. The Effectiveness of Using Electropalatography to Remediate a Developmental Speech Sound Disorder in a School-Aged Child with Hearing Impairment; Brigham Young University: Provo, UT, USA, 2013.

- McAuliffe, M.J.; Ward, E.C. The use of electropalatography in the assessment and treatment of acquired motor speech disorders in adults: Current knowledge and future directions. NeuroRehabilitation 2006, 21, 189–203.

- Matsumoto, D.; Willingham, B. Spontaneous Facial Expressions of Emotion of Congenitally and Noncongenitally Blind Individuals. J. Personal. Soc. Psychol. 2009, 96, 1.

- Valente, D.; Theurel, A.; Gentaz, E. The role of visual experience in the production of emotional facial expressions by blind people: A review. Psychon. Bull. Rev. 2018, 25, 483–497.

- Yu, Y.; Lado, A.; Zhang, Y.; Magnotti, J.F.; Beauchamp, M.S. The Effect on Speech-in-Noise Perception of Real Faces and Synthetic Faces Generated with either Deep Neural Networks or the Facial Action Coding System. bioRxiv 2024.

- Garg, S.; Hamarneh, G.; Jongman, A.; Sereno, J.A.; Wang, Y. Computer-vision analysis reveals facial movements made during Mandarin tone production align with pitch trajectories. Speech Commun. 2019, 113, 47–62.

- Garg, S.; Hamarneh, G.; Jongman, A.; Sereno, J.A.; Wang, Y. ADFAC: Automatic detection of facial articulatory features. MethodsX 2020, 7, 101006.

- Ma, J.; Cole, R. Animating visible speech and facial expressions. Vis. Comput. 2004, 20, 86–105.

- Fasel, B.; Luettin, J. Automatic facial expression analysis: A survey. Pattern Recognit. 2003, 36, 259–275.

- Esfandbod, A.; Rokhi, Z.; Meghdari, A.F.; Taheri, A.; Alemi, M.; Karimi, M. Utilizing an Emotional Robot Capable of Lip-Syncing in Robot-Assisted Speech Therapy Sessions for Children with Language Disorders. Int. J. Soc. Robot. 2023, 15, 165–183.

- Schipor, O.-A.; Pentiuc, S.-G.; Schipor, M.-D. Towards a multimodal emotion recognition framework to be integrated in a Computer Based Speech Therapy System. In Proceedings of the 2011 6th Conference on Speech Technology and Human-Computer Dialogue (SpeD), Brasov, Romania, 18–21 May 2011; pp. 1–6.

- Clark, E.A.; Kessinger, J.; Duncan, S.E.; Bell, M.A.; Lahne, J.; Gallagher, D.L.; O’Keefe, S.F. The Facial Action Coding System for Characterization of Human Affective Response to Consumer Product-Based Stimuli: A Systematic Review. Front. Psychol. 2020, 11, 920.

- Cheong, J.H.; Jolly, E.; Xie, T.; Byrne, S.; Kenney, M.; Chang, L.J. Py-Feat: Python Facial Expression Analysis Toolbox. Affect. Sci. 2023, 4, 781–796.

- Parent, R.; King, S.; Fujimura, O. Issues with lip sync animation: Can you read my lips? In Proceedings of the Computer Animation 2002 (CA 2002), Geneva, Switzerland, 21 June 2002; pp. 3–10.

- Wynn, A.T.; Wang, J.; Umezawa, K.; Cristea, A.I. An AI-Based Feedback Visualisation System for Speech Training. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners’ and Doctoral Consortium; Rodrigo, M.M., Matsuda, N., Cristea, A.I., Dimitrova, V., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 510–514.

- Das, A.; Mock, J.; Chacon, H.; Irani, F.; Golob, E.; Najafirad, P. Stuttering Speech Disfluency Prediction using Explainable Attribution Vectors of Facial Muscle Movements. arXiv 2020, arXiv:2010.01231.

| Participant | Gender | Age (Years) | Ethnicity | Traumatic Brain/Stroke Injury | Cognitive Impairments | Developmental Delays | Learning Disability and/or Speech Impairment |
|---|---|---|---|---|---|---|---|
| S1 | F | 26 | Black/African American | No | No | No | No |
| S2 | F | 46 | Black/African American | No | No | No | No |
| S3 | F | 38 | Black/African American | No | No | No | No |
| S4 | M | 26 | Asian/Pacific Islander | No | No | No | No |
| S5 | M | 21 | Black/African American | No | No | No | No |
| S6 | F | 41 | Asian/Pacific Islander | No | No | No | No |
| S7 | M | 25 | Black/African American | No | No | No | No |
| S8 | F | 29 | Black/African American | No | No | No | No |
| S9 | M | 25 | Black/African American | No | No | No | ADHD, Graphomotor Disorder |
| S10 | M | 23 | Black/African American | No | No | No | No |
| S11 | F | 26 | Black/African American | No | No | No | No |
| S12 | M | 28 | Hispanic/Latino | No | No | No | No |
| S13 | F | 43 | Black/African American | No | No | No | No |
| S14 | F | 24 | Black/African American | No | No | No | No |

| Action Unit | Muscle Group(s) | Description of Muscle Group Function |
|---|---|---|
| AU09 | Levator Labii Superioris Alaeque Nasi | Nose wrinkler |
| AU11 | Zygomaticus Minor | Nasolabial deepener |
| AU12 | Zygomaticus Major | Lip corner puller |
| AU14 | Buccinator | Dimpler |
| AU15 | Depressor Anguli Oris | Lip corner depressor |
| AU17 | Mentalis | Chin raiser |
| AU20 | Risorius, Platysma | Lip stretcher |
| AU23 | Orbicularis Oris | Lip tightener |
| AU24 | Orbicularis Oris | Lip pressor |
| AU25 | Depressor Labii Inferioris | Lips part |
| AU26 | Masseter, Temporalis, Medial Pterygoid | Jaw drop [36,37] |

Phoneme column groups: Vowels, Consonants, Diphthongs

| Range of AUs | /æ/ | /ε/ | /ɪ/ | /ɒ/ | /ʊ/ | /b/ | /n/ | /m/ | /p/ | /h/ | /w/ | /d/ | /eI/ | /ʌɪ/ | /i/ | /a:/ | /u:/ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AU01 | 0.375 | 0.371 | 0.368 | 0.35 | 0.35 | 0.35 | 0.38 | 0.35 | 0.39 | 0.34 | 0.37 | 0.42 | 0.37 | 0.37 | 0.37 | 0.37 | 0.37 |
| AU02 | 0.250 | 0.239 | 0.25 | 0.26 | 0.25 | 0.25 | 0.29 | 0.27 | 0.26 | 0.26 | 0.25 | 0.26 | 0.25 | 0.26 | 0.27 | 0.25 | 0.27 |
| AU04 | 0.24 | 0.264 | 0.27 | 0.25 | 0.23 | 0.26 | 0.24 | 0.24 | 0.25 | 0.25 | 0.25 | 0.3 | 0.25 | 0.28 | 0.25 | 0.24 | 0.25 |
| AU05 | 0.3 | 0.297 | 0.63 | 0.30 | 0.30 | 0.30 | 0.31 | 0.31 | 0.3 | 0.30 | 0.31 | 0.31 | 0.30 | 0.30 | 0.30 | 0.31 | 0.30 |
| AU06 | 0.59 | 0.592 | 0.63 | 0.54 | 0.6 | 0.59 | 0.55 | 0.61 | 0.59 | 0.53 | 0.56 | 0.53 | 0.53 | 0.58 | 0.57 | 0.55 | 0.49 |
| AU07 | 0.45 | 0.52 | 0.42 | 0.28 | 0.38 | 0.46 | 0.4 | 0.5 | 0.47 | 0.5 | 0.34 | 0.35 | 0.43 | 0.45 | 0.55 | 0.45 | 0.34 |
| AU09 | 0.425 | 0.425 | 0.12 | 0.40 | 0.4 | 0.39 | 0.43 | 0.43 | 0.4 | 0.4 | 0.44 | 0.45 | 0.44 | 0.43 | 0.44 | 0.41 | 0.41 |
| AU10 | 0.175 | 0.13 | 0.66 | 0.14 | 0.09 | 0.12 | 0.11 | 0.11 | 0.13 | 0.13 | 0.08 | 0.13 | 0.1 | 0.11 | 0.1 | 0.13 | 0.1 |
| AU11 | 0.075 | 0.165 | 0.5 | 0.125 | 0.21 | 0.15 | 0.1 | 0.21 | 0.3 | 0.21 | 0.24 | 0.13 | 0.23 | 0.24 | 0.21 | 0.28 | 0.23 |
| AU12 | 0.695 | 0.691 | 0.47 | 0.52 | 0.61 | 0.62 | 0.54 | 0.58 | 0.66 | 0.58 | 0.5 | 0.47 | 0.46 | 0.62 | 0.61 | 0.63 | 0.5 |
| AU14 | 0.475 | 0.477 | 0.54 | 0.43 | 0.46 | 0.44 | 0.46 | 0.48 | 0.46 | 0.47 | 0.43 | 0.46 | 0.45 | 0.48 | 0.46 | 0.44 | 0.43 |
| AU15 | 0.45 | 0.45 | 0.18 | 0.45 | 0.45 | 0.51 | 0.49 | 0.51 | 0.46 | 0.5 | 0.45 | 0.51 | 0.42 | 0.45 | 0.39 | 0.45 | 0.48 |
| AU17 | 0.55 | 0.53 | 0.66 | 0.54 | 0.54 | 0.54 | 0.52 | 0.58 | 0.54 | 0.55 | 0.53 | 0.55 | 0.53 | 0.52 | 0.52 | 0.53 | 0.53 |
| AU20 | 0.21 | 0.25 | 0.49 | 0.22 | 0.17 | 0.09 | 0.11 | 0.12 | 0.18 | 0.18 | 0.18 | 0.16 | 0.18 | 0.2 | 0.19 | 0.21 | 0.17 |
| AU23 | 0.39 | 0.38 | 0.46 | 0.41 | 0.39 | 0.43 | 0.43 | 0.41 | 0.41 | 0.42 | 0.46 | 0.42 | 0.38 | 0.39 | 0.39 | 0.4 | 0.42 |
| AU24 | 0.5 | 0.49 | 0.53 | 0.51 | 0.49 | 0.53 | 0.49 | 0.56 | 0.53 | 0.53 | 0.54 | 0.55 | 0.53 | 0.53 | 0.46 | 0.48 | 0.44 |
| AU25 | 0.475 | 0.48 | 0.51 | 0.45 | 0.37 | 0.4 | 0.58 | 0.39 | 0.48 | 0.5 | 0.42 | 0.41 | 0.5 | 0.54 | 0.48 | 0.56 | 0.44 |
| AU26 | 0.275 | 0.243 | 0.27 | 0.25 | 0.25 | 0.25 | 0.22 | 0.23 | 0.27 | 0.26 | 0.25 | 0.22 | 0.26 | 0.24 | 0.29 | 0.29 | 0.27 |
| AU28 | 0.19 | 0.24 | 0.19 | 0.18 | 0.16 | 0.14 | 0.13 | 0.21 | 0.2 | 0.19 | 0.2 | 0.2 | 0.14 | 0.17 | 0.16 | 0.13 | 0.14 |
| AU43 | 0.18 | 0.417 | 0.39 | 0.38 | 0.38 | 0.39 | 0.4 | 0.41 | 0.32 | 0.36 | 0.39 | 0.35 | 0.4 | 0.39 | 0.39 | 0.4 | 0.38 |

Legend for Median Ranges: 0–0.2 | 0.21–0.39 | 0.4–0.59 | 0.6–0.79 | 0.8–1.0
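The legend groups each median AU value into one of five ranges. A minimal Python sketch of that lookup is given here for readers who want to reproduce the banding from the tabulated medians. The function and constant names are hypothetical, and one assumption goes beyond the source: the printed ranges leave small gaps (for example, 0.395 lies between 0.39 and 0.4), so the sketch assigns any value in a gap to the lower range.

```python
from bisect import bisect_right

# Legend ranges exactly as printed in the table legend.
LEGEND_LABELS = ["0–0.2", "0.21–0.39", "0.4–0.59", "0.6–0.79", "0.8–1.0"]

# Lower edge of every range except the first.
# Assumption (not from the source): values that fall in the gap between two
# printed ranges, such as 0.395, are assigned to the lower range.
LOWER_EDGES = [0.21, 0.4, 0.6, 0.8]


def legend_bin(median: float) -> str:
    """Return the legend range label for a median AU value in [0, 1]."""
    if not 0.0 <= median <= 1.0:
        raise ValueError(f"median must lie in [0, 1], got {median}")
    # bisect_right counts how many lower edges are <= median,
    # which is the index of the matching legend label.
    return LEGEND_LABELS[bisect_right(LOWER_EDGES, median)]


# A few medians copied from the AU12 row of the table above.
au12_medians = {"/æ/": 0.695, "/ɪ/": 0.47, "/ʊ/": 0.61, "/u:/": 0.5}

for phoneme, value in au12_medians.items():
    print(f"AU12 {phoneme}: {value} falls in {legend_bin(value)}")
```

Using the lower edges as thresholds keeps every value in [0, 1] mapped to exactly one range; a different gap convention (for example, rounding to two decimals first) would only change the result for values that sit between two printed ranges.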

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Newby, A.A.; Bhatta, A.; Kirkland, C., III; Arnold, N.; Thompson, L.A. The Role of Facial Action Units in Investigating Facial Movements During Speech. Electronics 2025, 14, 2066. https://doi.org/10.3390/electronics14102066
