Vision-Based Structural Adhesive Detection for Electronic Components on PCBs

Abstract

1. Introduction

2. Related Works

3. Methods

3.1. Detection Problem and Solutions

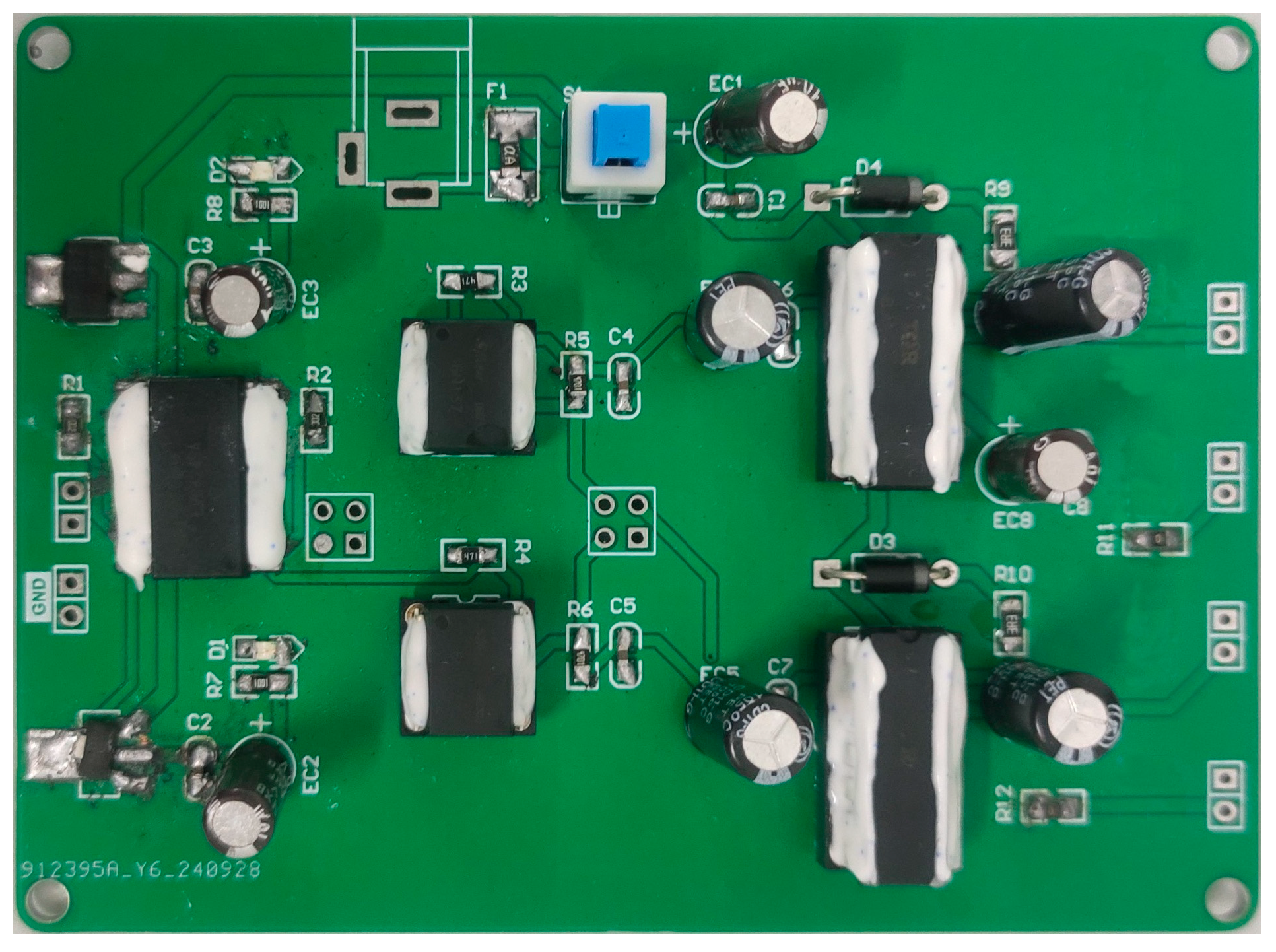

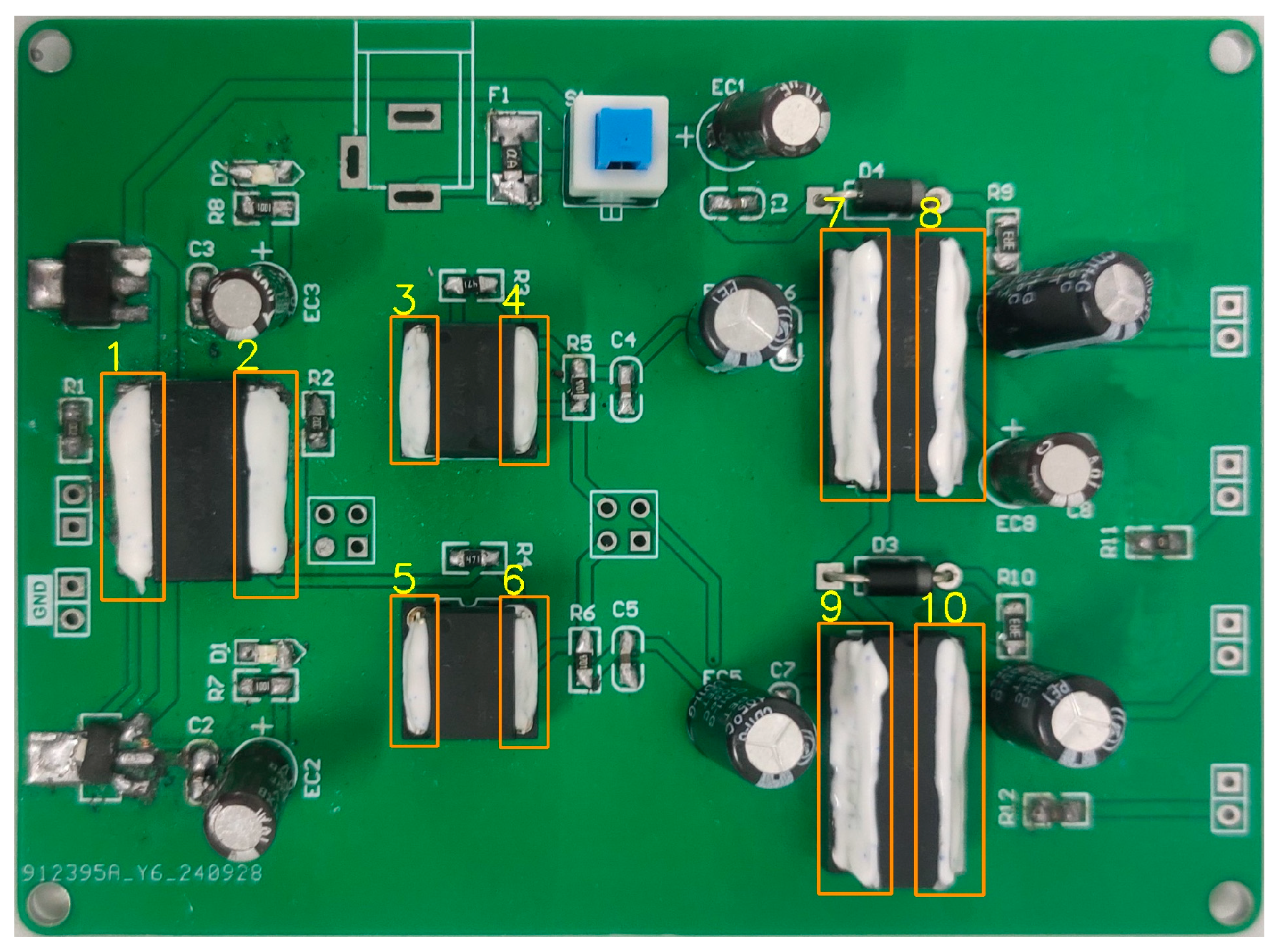

3.2. Region of Interest Extraction

3.3. Standard Template Establishment

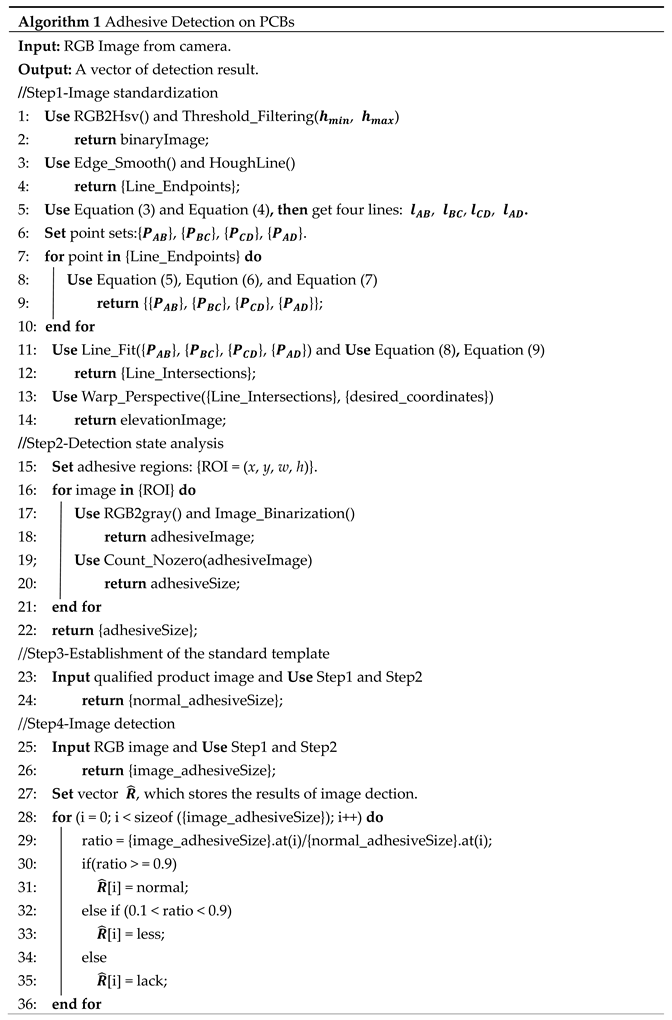

3.4. Detection Algorithm

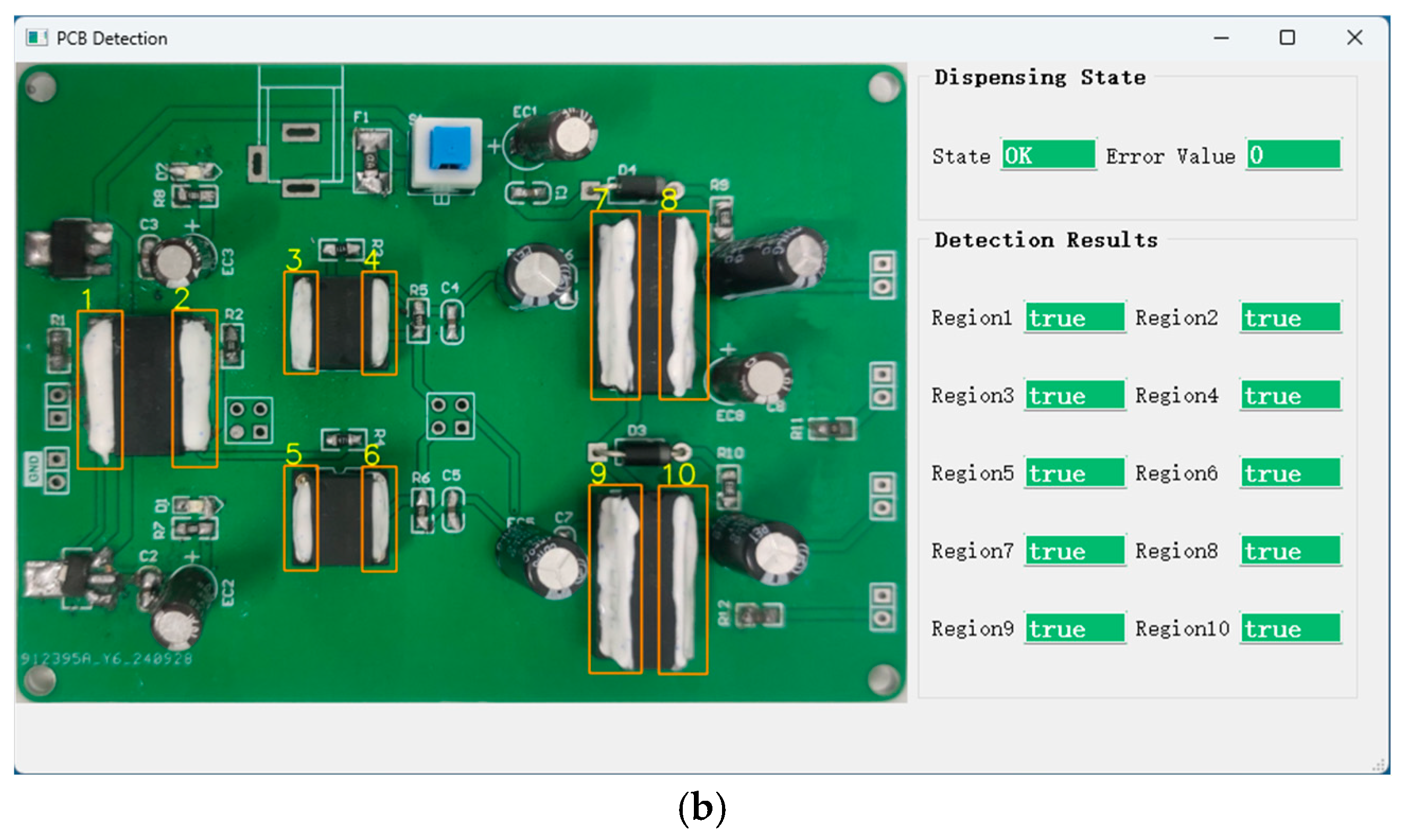

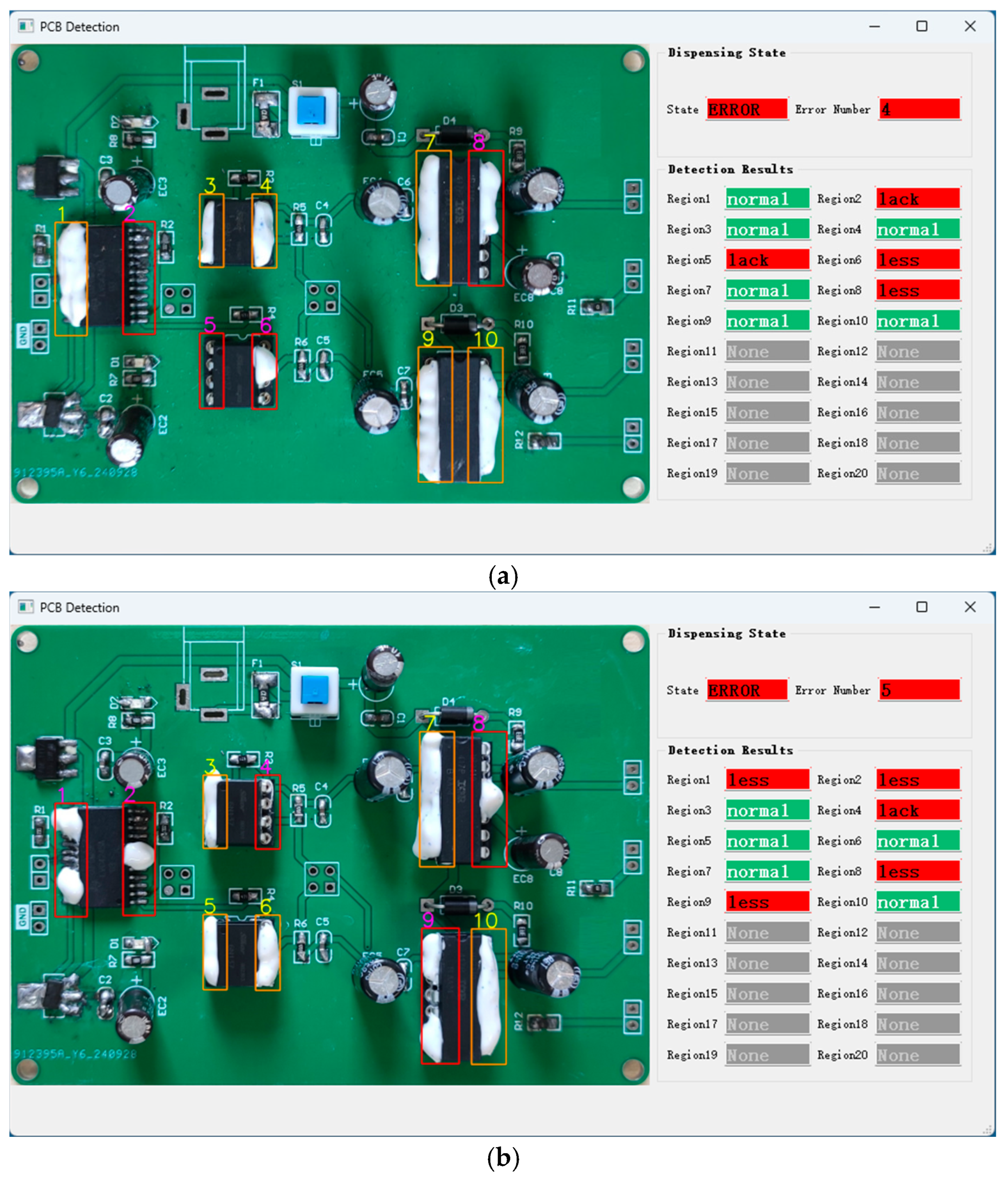

3.5. Detection Software

4. Experiment

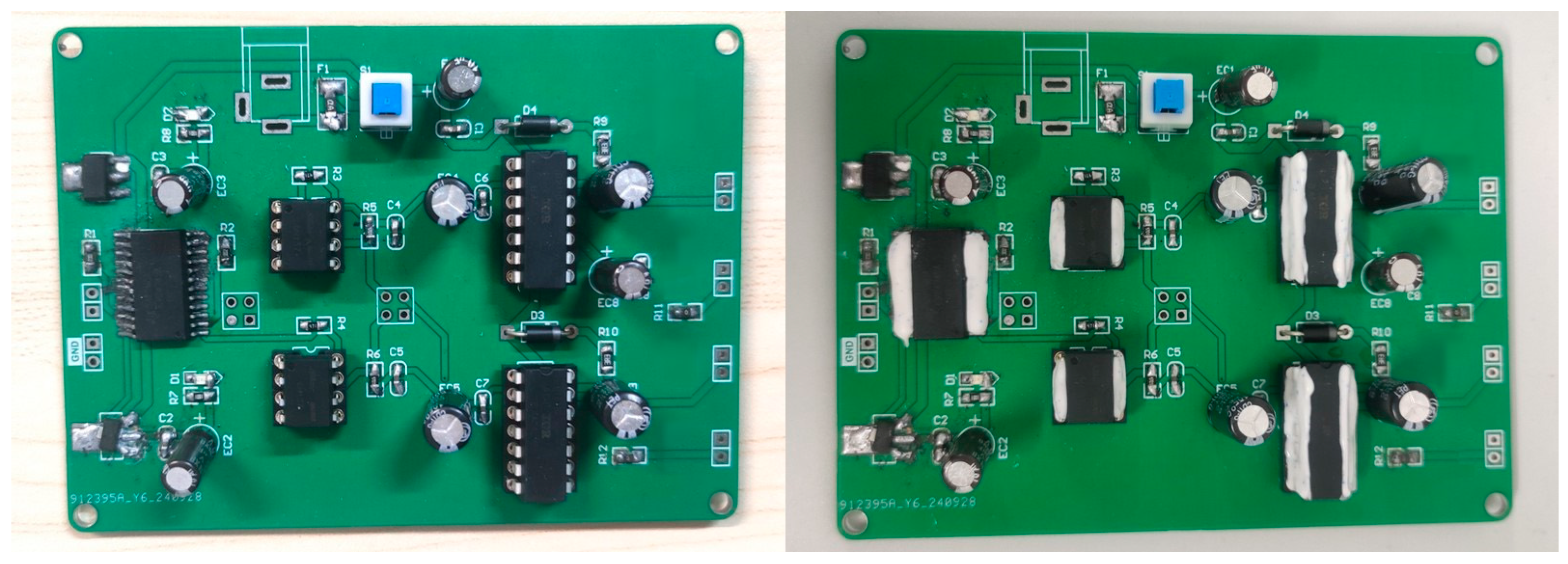

4.1. Experimental Condition and Process

4.2. Detection of Absent and Insufficient Adhesives

- Detection of Absent Adhesives

4.3. Detecting Adhesive on Different PCBs

4.4. Experimental Summary

5. Conclusions

- Poor generalization capability.

- Lacking sufficient automation and intelligence in the detection process.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Item | Type | Parameter |
|---|---|---|
| Processing system | Mini Intel NUC | Intel Core i7-13700H, 16 GB RAM, 512 GB SSD |
| Camera | RGB industrial camera | Effective pixels: 2592 (H) × 1944 (V); pixel size: 1.4 µm × 1.4 µm; frame rate ≥ 30 FPS |

| No. | PCB Type | Size (mm) | Solder Mask Color | Adhesive Regions | Main IC Package |
|---|---|---|---|---|---|
| 1 |  | 95 (L) × 70 (W) | Green | 10 | DIP |
| 2 |  | 85 (L) × 55 (W) | Black | 15 | QFP |

| PCB Type | Adhesive State | Total Number | Detection Results: Normal | Detection Results: Missed | Detection Results: Over | Accuracy (%) | Speed (F/min) |
|---|---|---|---|---|---|---|---|
| Qualified PCB | Qualified | 40 | 38 | 0 | 2 | 95 | 60 |
| Unqualified PCB | Absent | 20 | 20 | 0 | 0 | 100 | 60 |
| Unqualified PCB | Insufficient | 20 | 18 | 1 | 1 | 90 | 60 |

| PCB Type | Adhesive State | Total Number | Detection Results: Normal | Detection Results: Missed | Detection Results: Over | Accuracy (%) | Speed (F/min) |
|---|---|---|---|---|---|---|---|
| Qualified PCB | Qualified | 30 | 30 | 0 | 0 | 100 | 60 |
| Unqualified PCB | Absent | 15 | 15 | 0 | 0 | 100 | 60 |
| Unqualified PCB | Insufficient | 15 | 14 | 0 | 1 | 93 | 60 |
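
The accuracy figures in the two result tables appear consistent with dividing the "Normal" count by the total number of samples and rounding to a whole percent. The sketch below checks that reading against the tabulated values. The formula, the function name `accuracy_percent`, and the labels "table A" and "table B" are assumptions made for illustration; the extracted text does not state how accuracy was computed or name the tables.

```python
# Rows copied from the two result tables:
# (label, adhesive_state, total, normal, missed, over, reported_accuracy_percent)
# "table A" / "table B" are hypothetical labels for the first and second result tables.
ROWS = [
    ("table A", "Qualified", 40, 38, 0, 2, 95),
    ("table A", "Absent", 20, 20, 0, 0, 100),
    ("table A", "Insufficient", 20, 18, 1, 1, 90),
    ("table B", "Qualified", 30, 30, 0, 0, 100),
    ("table B", "Absent", 15, 15, 0, 0, 100),
    ("table B", "Insufficient", 15, 14, 0, 1, 93),
]


def accuracy_percent(normal: int, total: int) -> float:
    """Assumed definition: share of samples with a 'Normal' detection result."""
    return 100.0 * normal / total


for label, state, total, normal, missed, over, reported in ROWS:
    # The three detection-result counts should add up to the sample total.
    assert normal + missed + over == total
    computed = accuracy_percent(normal, total)
    print(f"{label:8s} {state:12s} computed={computed:6.2f}%  reported={reported}%")
```

Under this assumption every row reproduces the reported value after rounding; for instance, 14 of 15 gives 93.33%, which the table lists as 93.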

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, R.; Yan, T.; Zhang, J. Vision-Based Structural Adhesive Detection for Electronic Components on PCBs. Electronics 2025, 14, 2045. https://doi.org/10.3390/electronics14102045
