Abstract

Extended-reality (XR) tools are increasingly used to revitalise museum experiences, but typical head-mounted or smartphone solutions tend to fragment audiences and suppress the social dialogue that makes cultural heritage memorable. This article addresses that gap on two fronts. First, it proposes a four-phase design methodology—spanning artifact selection, narrative framing, tangible-interface fabrication, spatial installation, software integration, validation, and deployment—that helps curators, designers, and technologists to co-create XR exhibitions in which co-presence, embodied action, and multisensory cues are treated as primary design goals rather than afterthoughts. Second, the paper reports LanternXR, a proof-of-concept built with the methodology: visitors share a 3D-printed replica of the fourteenth-century Virgin of Boixadors while wielding a tracked “camera” and a candle-like lantern that lets them illuminate, photograph, and annotate the sculpture inside a life-sized Gothic nave rendered on large 4K displays with spatial audio and responsive lighting. To validate the approach, the article presents an analytical synthesis of feedback from curators, museologists, and XR technologists, underscoring the system’s capacity to foster collaboration, deepen engagement, and broaden accessibility. The findings show how XR can move museum audiences from isolated immersion to collective, multisensory exploration.

1. Introduction

Extended-reality (XR) technologies, encompassing virtual reality (VR), augmented reality (AR), and mixed reality (MR), have emerged as powerful tools to transform cultural heritage engagement by enabling immersive, interactive, and emotionally resonant experiences. VR immerses users entirely in digitally constructed environments [1,2,3], AR overlays digital content onto real-world views [4,5,6], and MR blends digital and real-world elements interactively in real time [7]. Through augmented, virtual, and mixed reality environments, XR offers novel ways to experience artifacts, narratives, and spaces that are otherwise inaccessible, fragile, or abstract. These technologies hold significant potential to enhance not only how heritage is visualised and interpreted but also how it is felt, remembered, and collectively discussed.

Despite the significant advances in the application of XR in museums and heritage institutions, challenges remain in designing systems that preserve the inherently social nature of cultural experiences while leveraging the benefits of digital immersion. Many current XR implementations prioritize individual interaction and visual spectacle, often at the cost of shared interpretation, physical collaboration, and spontaneous communication among visitors.

In response to this gap, this article proposes a design methodology aimed at creating socially engaging XR systems that prioritize co-presence, embodied interaction, and multisensory engagement. The authors of this work validate this methodology through its application in the development of LanternXR, an interactive XR prototype designed specifically for museum environments. By combining physical artifacts, spatial interaction, and large-format displays with handheld tracked devices, LanternXR reimagines how visitors can collectively explore and interpret cultural content in shared physical space.

1.1. Motivation

LanternXR was conceived in response to growing concerns that traditional XR deployments, particularly those relying on personal head-mounted displays or smartphones, often limit social interaction, spontaneous discovery, and collaborative learning in museums [8,9]. While such technologies offer compelling immersion, they may disrupt the natural flow of group visits and reduce opportunities for meaningful discussion [10]. To address this, this work explores alternative approaches to XR that restore the social and embodied qualities of cultural engagement, emphasizing co-presence, shared interpretation, and physical interaction.

1.2. Background

In recent decades, XR technology has revolutionised the cultural heritage sector by enhancing visual and spatial experiences while also evoking emotional responses that deepen user engagement with historical narratives [11]. Digital technologies have become almost essential for the presentation of all types of cultural heritage in the modern era, offering new modes of interaction and experience for visitors to museums and cultural venues [12]. This technological evolution has transformed the way people interact with their historical and cultural heritage, creating new opportunities for engagement and learning [13].

Virtual museum systems, based on different XR technologies, have begun to emerge as decisive tools for promoting exhibitions and reaching wider audiences [14]. These technologies enable the creation of virtual representations of traditional crafts and practices, offering users the possibility to travel through time via 3D reconstructions of monuments and cultural sites [15]. The integration of XR into museums has demonstrated significant potential to enhance visitor experiences by creating immersive environments that foster deeper connections with cultural content [16].

However, despite major advances in the field, digital resources alone are not sufficient to adequately present cultural heritage. Additional information about historical context is often required in the form of narratives, virtual reconstructions, and digitised artifacts [17]. Moreover, traditional XR experiences often depend on personal devices such as AR/VR headsets or smartphones, which can limit social interaction and shared experiences that are fundamental to museum visits [9]. This technological barrier can significantly disrupt the natural flow of museum visits and reduce opportunities for spontaneous discussion and shared discovery [10].

Traditional approaches to XR in museums have primarily focused on the use of personal devices, such as virtual reality headsets and mobile augmented reality applications, to enhance visitor experiences [18]. While these technologies excel at delivering immersive experiences, research suggests they often compromise the social aspects of museum visits, which play a critical role in fostering learning and engagement [8].

The metaverse represents a post-reality universe that seamlessly merges the physical world with digital virtuality, providing a continuous and immersive social networking environment [19]. However, traditional XR experiences frequently isolate users and constrain social interaction. Research by Slater and Sanchez-Vives [20] indicates that visitors spend a significant portion of their time managing personal devices or headsets rather than interacting with their companions or the exhibited content. This technological overhead can significantly disrupt the natural flow of museum visits and reduce opportunities for spontaneous discussion and shared discovery.

Although the work of Petrelli et al. [21] has explored alternative tangible interface solutions for cultural heritage, effective combinations of physical interaction and digital augmentation—while preserving the social dynamics proposed by Hornecker and Buur [22]—remain limited. The challenge lies in creating experiences that leverage the immersive capabilities of XR technologies while preserving the social and collaborative dimensions that are central to meaningful museum experiences [23].

As the focus shifts from museum exhibitions to visitor-centered experiences, the use of emerging technologies and the co-creation of virtual museums not only supports the preservation of cultural heritage, but also enhances dissemination, engagement, and overall experience—while addressing issues of mobility and the plurality of voices and perspectives represented [24]. Tangible user interfaces (TUIs) have been employed to provide various physical forms and materials that allow users to input, output, and receive feedback from their actions, as well as manipulate digital information—enabling them to literally grasp data with their hands [21].

Immersion refers to the technological factors that primarily affect user engagement in XR environments and determine the “sense of presence” [14]. This immersive quality is a key factor for entertainment and, when combined with educational elements, creates what is often referred to as “edutainment” (education + entertainment)—an important driver in fostering a motivating and effective learning environment [25]. It has been demonstrated that the integration of multisensory elements in museum experiences significantly enhances visitor engagement and knowledge retention [26].

XR technologies allow users to engage in hyperspatial–temporal communication and multisensory self-expression through devices such as head-mounted displays (HMDs), tangible user interfaces (TUIs), and tracking systems, helping them to achieve greater social presence, motivation, and enjoyment [27]. Unlike traditional screen-based interactions, XR aims to enhance communication through richer emotional cues (cf. the media richness theory introduced in 1986 by Daft and Lengel), a stronger sense of social presence [28], and more embodied interactivity. The development of multisensory XR experiences has opened new possibilities for creating more inclusive and engaging museum environments [29].

1.3. Objectives and Contributions

This article presents a design methodology tailored to the development of interactive XR systems that foster social, collaborative, and sensory-rich engagement with cultural heritage. As a concrete application, the authors of this work describe the design, implementation, and evaluation of LanternXR, a spatially interactive installation that employs handheld 6DoF-tracked devices and large-format displays to create a shared XR experience.

The contributions of this article are as follows:

- Design Methodology: The authors of this work propose a design framework informed by emotional design principles [11], user experience research [30], and practical challenges in heritage-based AR [31].

- Prototype Implementation: This work describes LanternXR as an applied case that implements this methodology, combining a 3D-printed physical artifact with spatial interaction metaphors (a virtual flashlight and camera) to enable collaborative exploration.

- Empirical Evaluation: This work presents a qualitative and observational analysis of user engagement, with a focus on social interaction, embodiment, and emotional resonance within the installation.

1.4. Structure of the Article

The remainder of the article is structured as follows. Section 2 develops the proposed methodology and the prototype system. Section 3 examines the feasibility of the system and related aspects. Finally, Sections 4 and 5 present the discussion and the concluding remarks, respectively.

2. Materials and Methods

2.1. Design Methodology for XR Exhibition Spaces

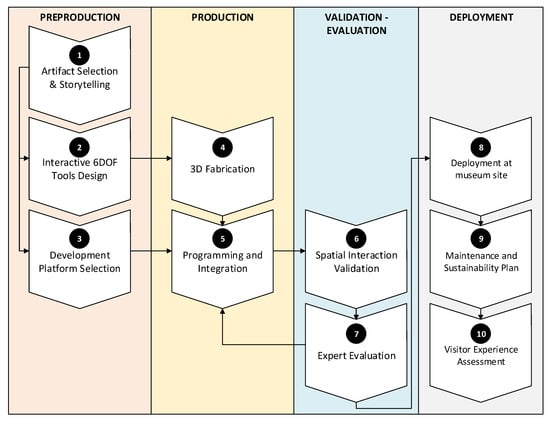

This section presents a methodology tailored to developing XR exhibition spaces in museum contexts. Figure 1 summarises the workflow, which is divided into four phases, each comprising several steps that are described below.

Figure 1.

Diagram illustrating the structured phases of the XR exhibition space design methodology.

2.1.1. Phase 1. Preproduction

This phase focuses on the conceptual and technical foundation of the project (Figure 1, Preproduction) and consists of three steps. The process begins with Artifact Selection & Storytelling, where objects are chosen based on their historical significance, symbolic resonance, and suitability for three-dimensional reconstruction. Priority is given to items exhibiting partial damage or missing elements, as virtual restoration can significantly enhance curatorial interpretation. Accurate modeling and subsequent physical output rely on obtaining high-fidelity scans or comparable reference data. For each selected piece, a bespoke virtual environment is developed to reinforce its historical and aesthetic narrative, incorporating period-appropriate architecture, contextual soundscapes, and tailored interactive cues to maximize visitor immersion.

The second step, Interactive 6-DOF Tool Design, translates narrative requirements into tangible interfaces. In the present project, a hand-held “camera” and a “lantern” are conceived to harmonise visually and thematically with the storyworld established in the preceding step. Both implements incorporate dedicated sensors that deliver six-degree-of-freedom tracking, guaranteeing precise spatial correspondence within the gallery.

The third step, Development Platform Selection, focuses on identifying a software ecosystem capable of real-time rendering at high visual fidelity. Essential attributes include advanced shader and material editors, sophisticated lighting and animation pipelines, and comprehensive interaction-design toolsets. The platform must also facilitate multidisciplinary collaboration by offering support for widely adopted programming languages, extensive documentation, and an active community.

2.1.2. Phase 2. Production

This phase focuses on the creation and integration of the system’s physical and digital components (Figure 1, Production) and consists of two steps.

The process begins with step four, 3D Fabrication, where high-resolution additive manufacturing techniques are used to produce fine, durable, and aesthetically coherent components. These include physical replicas of the selected artifacts and any interactive elements, such as the handheld camera and lantern. Concurrently, the spatial envelope of the XR installation is defined, and tracking hardware is strategically positioned to ensure that the interactive devices can be monitored with minimal latency. Screens, sensors, and other physical set-pieces are arranged through an iterative prototyping process to establish robust, spatialized interaction and sustained immersion.

The fifth step, Programming and Integration, translates the narrative and interactive concepts into a fully functional system. This involves implementing the exhibition logic, creating intuitive user interfaces, and precisely synchronizing virtual content with the physical installation. Interactive behaviors are programmed, ensuring that visitor interactions with the camera, lantern, and other elements trigger accurate visual, audio, and narrative responses. Detailed narrative scripts guide user interactions, ensuring historical accuracy and enhancing storytelling. Initial alpha-stage evaluations within the project team are followed by structured user-testing sessions, which inform iterative refinements to improve usability, engagement, and coherence between the virtual and physical components.

2.1.3. Phase 3. Validation–Evaluation

This phase ensures that the XR system meets its design objectives and provides a seamless, engaging user experience (Figure 1, Validation–Evaluation) and consists of two steps.

The process begins with step six, Spatial Interaction Validation, where the hybrid nature of XR is rigorously tested. This involves quantifying alignment accuracy, system responsiveness, and tracking precision. Key metrics such as latency, synchronization error, and interaction smoothness are measured and optimized to maintain immersion. This step guarantees that physical interactions with the hand-held camera and lantern are accurately mirrored in the virtual environment, ensuring a coherent and responsive experience.

The seventh step, Expert Evaluation, convenes focus-group reviews with specialists from fields such as history, education, and interactive technology. These experts experience the system firsthand and provide detailed feedback on its historical fidelity, educational value, and technical performance. Their critique is systematically incorporated into a concluding iteration, ensuring that the final XR experience maintains both narrative integrity and technical reliability.

Completing these two steps results in a thoroughly validated XR exhibition, ready for pilot deployment. Although the present study concludes at this stage, the remaining phase is strongly recommended for any full public rollout, because its steps are essential for ensuring long-term operational stability, accessibility, and continuous improvement of visitor outcomes.

2.1.4. Phase 4. Deployment

This phase ensures the successful implementation and sustainable operation of the validated XR system in a museum setting (Figure 1, Deployment).

The process begins with the eighth step, Deployment at Museum Site, where the fully validated XR system is physically installed at the museum site. This involves carefully arranging screens, sensors, and interactive devices according to the optimized layout established during the production phase. The network configuration is secured, and all system components are connected and calibrated to ensure accurate tracking and seamless interaction. The installation is rigorously tested in its final environment to confirm that the spatial interactions and visual effects maintain their intended quality.

The ninth step, Maintenance and Sustainability Plan, establishes protocols to guarantee the system’s long-term functionality. This includes creating a maintenance schedule for routine inspections, software updates, and hardware replacements. Key components, such as tracking sensors, handheld devices (camera and lantern), and display screens, are monitored to prevent performance degradation. Training sessions are provided for museum staff, equipping them with the knowledge to perform basic troubleshooting and ensure the installation operates smoothly without requiring constant external support. Sustainability planning may also include remote monitoring systems that track system performance and provide alerts for any malfunctions.

The tenth step, Visitor Experience Assessment, systematically collects feedback from museum visitors to evaluate the effectiveness of the XR installation. Both quantitative metrics (e.g., number of interactions, time spent in the experience, photograph count) and qualitative insights (e.g., visitor interviews, satisfaction surveys, and open-ended comments) are gathered. These data are analyzed to identify areas of strength and aspects needing improvement. The insights gained inform future refinements of the system, ensuring that it remains engaging, educational, and accessible to diverse audiences.

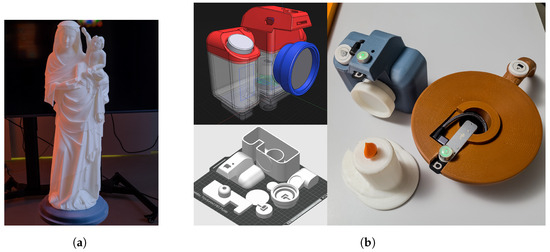

2.2. Prototype of the Virgin of Boixadors

The design procedure presented earlier was applied, up to and including the Expert Evaluation step, to build an XR demonstrator devoted to the fourteenth-century alabaster sculpture known as the Virgin of Boixadors, now in the Museu d’Art Medieval [32] in Vic (Spain). The piece portrays the Virgin and Child, a canonical Gothic icon, and is dated to 1350–1370 CE; see Figure 2.

Figure 2.

Gothic sculpture “Virgin of Boixadors” from the collection of the Museu d’Art Medieval [32].

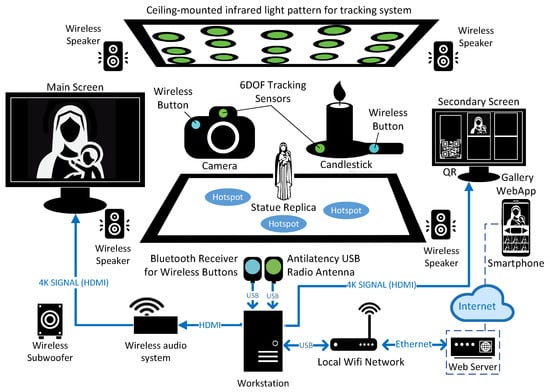

Work took place inside the 6 m × 6 m × 3 m XR Laboratory of the Institute of Design and Manufacturing (IDF), Universitat Politècnica de València (UPV, Spain). The physical installation photographed in Figure 3 occupies the geometric centre of the laboratory. A 3D-printed replica of the fourteenth-century alabaster Virgin and Child (label 1 in Figure 3, see also Figure 4a for more details) stands on a plinth exactly beneath a ceiling-mounted zig-zag array of infrared LEDs (label 3 in Figure 3). That pattern is recognised by two Antilatency trackers [33], one embedded in the camera interface and the other in the candlestick, giving each prop six degrees of freedom with sub-millimetre precision (see Figure 4b for more details). Three numbered decals on the floor (label 2 in Figure 3) mark the hotspots defined during the Interactive 6-DOF Tool Design step; stepping on any of them later unlocks narrative media. Behind the statue, a 100-inch 4K monitor (label 4 in Figure 3) renders the virtual nave produced in Unity, while an 85-inch screen at the opposite end (label 5 in Figure 3) provides onboarding instructions and, once interaction begins, a rolling gallery of visitor photographs. Illumination derives from three Godox SL300R RGB luminaires (Godox, Shenzhen, China; label 6 in Figure 3) whose hue and intensity match the chromatic atmosphere computed for the digital church. Quadraphonic Sony loudspeakers (Sony, Minato, Japan) and a subwoofer (label 7 in Figure 3) occupy the four corners of the room; their positions correspond to the virtual co-ordinates used by Unity’s spatial-audio renderer, so that music and commentaries appear to emanate from the statue itself. A workstation driven by an NVIDIA RTX 4090 graphics card (NVIDIA, Santa Clara, CA, USA; label 8 in Figure 3) orchestrates all devices, and a nearby table (label 9 in Figure 3) provides a safe exchange point for the camera and candlestick when visitors alternate roles.

Figure 3.

Physical counterpart of the prototype Virgin of Boixadors presented in this work: (1) touch-permissible, printed replica of the sculpture; (2) “hotspots”; (3) Antilatency tracking grid; (4) 100-inch 4K screen; (5) 85-inch secondary screen; (6) RGB spotlights; (7) quadraphonic loudspeakers and a subwoofer; (8) workstation; (9) designated area for safely storing visitor-held artifacts when not in use.

Figure 4.

Three-dimensional-printed artifacts designed for the prototype Virgin of Boixadors. (a) Three-dimensional-printed replica of the sculpture. (b) Three-dimensional-printed camera and candlestick.

Both tangible interfaces were produced in the 3D Fabrication step and are illustrated in Figure 4b. The exploded CAD model at upper left shows how the camera shell is partitioned so that the tracker, the rechargeable battery, and the USB-C port lie along a single longitudinal axis; the assembly printed in dark slate PLA weighs 210 g, a compromise between ergonomic comfort and perceptual solidity. The candlestick, printed in ivory and copper PLA, integrates an orange diffuser that masks the tracker’s status LED while encouraging users to orient the sensor toward the ceiling. In both objects, a recessed Flic 2 button is visible: a white cap marks the camera shutter, while the tealight-icon cap identifies the trigger that leaves a candle in virtual space. These buttons were preferred to custom electronics because their firmware can be re-programmed over the air and their housings snap securely into the PLA recesses designed for them.

Further material details complete the hardware ecosystem. The quadraphonic loudspeakers and subwoofer communicate with the PC through a proprietary wireless audio bridge that preserves uncompressed 48-kHz signals. Three Godox luminaires wirelessly broadcast their DMX profiles to an Android controller, allowing real-time synchronisation between Unity’s light temperature and the room’s wash. The Antilatency LED rails were ultimately fixed to the ceiling (rather than to the floor, as in the manufacturer’s default configuration) because preliminary validation demonstrated that an overhead constellation eliminates line-of-sight occlusions caused by visitors’ bodies. The room therefore constitutes a self-contained, cable-safe arena in which every movement of the props is captured at 120 Hz (one sample roughly every 8.3 ms) and mapped with less than 2 mm jitter; the sampling interval sits within the <15 ms visuo-motor coherence threshold frequently cited in XR literature.

The schematic in Figure 5 shows the communication diagram of the developed prototype. Solid blue arrows indicate the 4K HDMI feeds that connect the workstation to the two monitors, while the dashed lines plot the wireless audio channels that distribute quadraphonic sound and the low-frequency content of the subwoofer. Two USB cables tether the Antilatency radio antenna and the Bluetooth Flic-button receiver to the PC; together they close the loop between physical gestures and on-screen events. A wired Ethernet spur reaches a university WordPress server that stores every photograph generated during a visit; the decision to avoid Wi-Fi at this point was made during the Development Platform Selection step to guarantee 100-ms upload latency even under heavy laboratory traffic.

Figure 5.

Architectural communication diagram of the developed prototype. The diagram illustrates the system’s connections, with solid blue arrows for 4K HDMI video signals, dashed lines for wireless audio, and USB connections for Bluetooth buttons and Antilatency radio, while Ethernet ensures low-latency uploads to the web server.

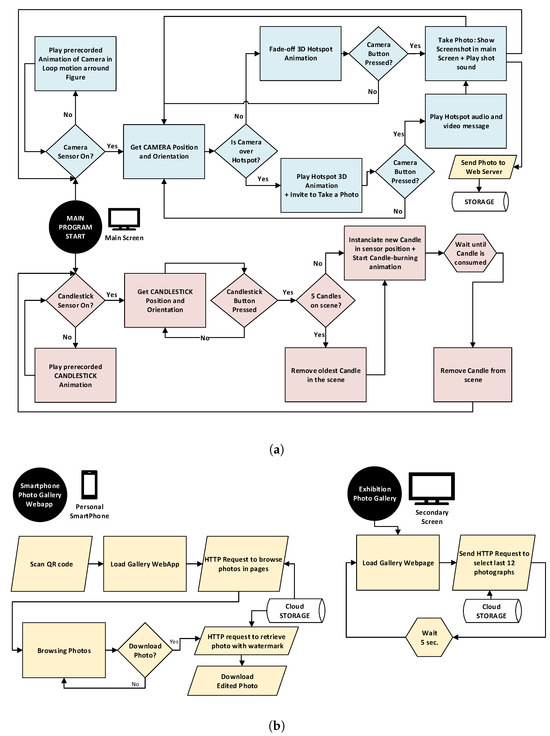

The software layer builds directly on that hardware. Unity 2022.3 running the High-Definition Render Pipeline constitutes the real-time engine selected during the Development Platform Selection step. On the RTX 4090 card, HDRP maintains volumetric fog, screen-space global illumination, and full-resolution ray-traced reflections at a stable 60 fps in 4K; these features allow the candle’s soft shadow to migrate realistically across virtual stone and produce authentic depth-of-field as the camera approaches the statue. Within Unity, a single C# state machine implements the interaction logic graphed in Figure 6a. Every frame, the programme polls Antilatency for the tracker’s position and orientation, updates the virtual camera or candle, and inspects two asynchronous channels. If the camera button has been pressed, a 4K render-texture is captured, a shutter sound is localised at the camera’s coordinates and the image collapses into the centre of the monitor in a brief VFX-Graph flourish. If that press coincides with the camera’s presence inside the cylindrical bounds of a hotspot, a second branch executes: a Houdini-authored particle animation highlights the statue’s detail under study, a twelve-second explanatory video inset appears in the lower-left corner, and a scholarly narration plays from the loudspeaker that lies closest to the visitor’s real-world position.
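The per-frame camera logic described above can be sketched in a few lines. The following is a language-neutral illustration written in Python, not the prototype's C# code: the names `Hotspot`, `nearest_speaker`, and `handle_frame`, the hotspot radius and height, and the event labels are all hypothetical, and the camera position is used as a stand-in for the visitor's position.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Hotspot:
    """Cylindrical trigger volume above a floor decal (dimensions are assumed)."""
    name: str
    x: float
    z: float
    radius: float = 0.4  # metres, illustrative value
    height: float = 2.0  # metres, illustrative value

    def contains(self, pos):
        """True if the (x, y, z) position lies inside the cylinder."""
        px, py, pz = pos
        inside_disc = math.hypot(px - self.x, pz - self.z) <= self.radius
        return inside_disc and 0.0 <= py <= self.height


def nearest_speaker(pos, speakers):
    """Return the name of the loudspeaker closest to the given position."""
    return min(speakers, key=lambda name: math.dist(pos, speakers[name]))


def handle_frame(camera_pos, shutter_pressed, hotspots, speakers):
    """List the events that one frame raises for the camera prop."""
    events = ["update_virtual_camera"]
    if not shutter_pressed:
        return events
    # Every shutter press produces a photograph and a localised sound.
    events += ["capture_render_texture", "play_shutter_sound"]
    # A press inside a hotspot additionally starts that hotspot's narrative.
    for spot in hotspots:
        if spot.contains(camera_pos):
            events.append(f"play_narrative:{spot.name}")
            events.append(f"narration_from:{nearest_speaker(camera_pos, speakers)}")
            break
    return events
```

Returning a list of event labels keeps the decision logic separate from rendering and audio, which makes the branching easy to test without a running engine.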

Figure 6.

Flow diagrams of the application: (a) the main application flow manages camera and candle interactions, while (b) the web-app flow handles photo storage, display, and download through cloud services.

The candlestick routine, which occupies the lower half of Figure 6a, restricts the scene to a maximum of five virtual tealights. Each activation instantiates an emissive mesh whose wax-melting shader linearly consumes its volume over sixty seconds; if five candles are already lit, the oldest extinguishes itself before the newest ignites, maintaining optical clarity in the nave. Because that lighting mechanic derives from in-game photography paradigms and from gamified reward systems, the prototype preserves theoretical continuity between its empirical implementation and the research that motivated it.
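The tealight rule (a cap of five candles, oldest out first, linear burn over sixty seconds) maps naturally onto a first-in, first-out queue. The sketch below is a minimal Python illustration under those stated figures; the class `CandlePool` and its method names are hypothetical and do not come from the prototype.

```python
from collections import deque

MAX_CANDLES = 5       # cap stated in the text
BURN_SECONDS = 60.0   # linear burn duration stated in the text


class CandlePool:
    """FIFO pool of virtual tealights, tracked by ignition time in seconds."""

    def __init__(self):
        self._lit_at = deque()  # ignition timestamps, oldest first

    def place(self, now):
        """Light a candle at time `now`; return the evicted timestamp, if any."""
        self._expire(now)
        evicted = None
        if len(self._lit_at) == MAX_CANDLES:
            evicted = self._lit_at.popleft()  # oldest candle goes out first
        self._lit_at.append(now)
        return evicted

    def remaining_fractions(self, now):
        """Fraction of wax left per candle, decreasing linearly with time."""
        self._expire(now)
        return [1.0 - (now - t) / BURN_SECONDS for t in self._lit_at]

    def _expire(self, now):
        """Drop candles whose wax has been fully consumed."""
        while self._lit_at and now - self._lit_at[0] >= BURN_SECONDS:
            self._lit_at.popleft()
```

Because candles are always appended in time order, the oldest candle is also the first to burn out, so a single queue serves both the eviction rule and the expiry check.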

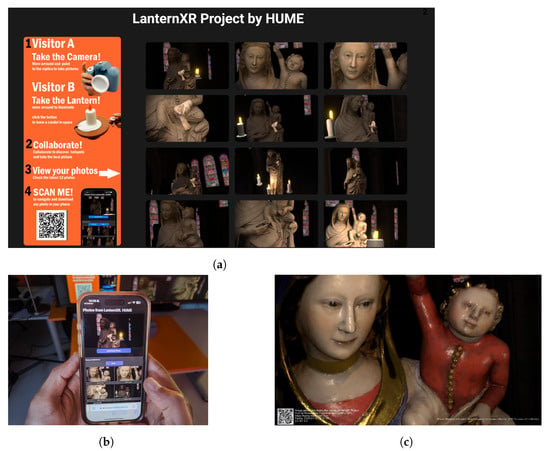

All visual assets created by visitors are routed through the cloud workflow diagrammed in Figure 6b. When Unity finalises a screenshot, the PNG is sent to an HTTP micro-service that embeds a watermark, the project name, the current date, and a QR code that resolves to an informational landing page. The same service transmits a JSON array containing the twelve most recent images to the 85-inch screen every five seconds, ensuring that spectators who enter the room at any time encounter an up-to-date gallery. A second endpoint, paginated for smartphones, is opened when a visitor scans the QR code displayed during onboarding. Figure 7 documents the result: Figure 7a shows the 85-inch monitor with the live instruction banner and thumbnail grid, Figure 7b shows the responsive web-app that allows any thumbnail to be enlarged and downloaded, and Figure 7c shows the HD file stored on the phone, complete with licence and QR redirection. This closed loop lets visitors share their images on social networks without orphaning those images from their curatorial context, thereby fulfilling the participatory-documentation objective stated in the Artifact Selection & Storytelling step.
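The rolling gallery amounts to a bounded buffer that always holds the twelve newest images. A minimal Python sketch of that idea follows; the class `GalleryFeed`, the file names, and the newest-first ordering are assumptions made for illustration, and the five-second transmission schedule and the watermarking step are not modelled.

```python
import json
from collections import deque

GALLERY_SIZE = 12  # number of recent images sent to the secondary screen


class GalleryFeed:
    """Keeps the most recent photo references and serialises them as JSON."""

    def __init__(self):
        # A bounded deque discards the oldest entry once it is full.
        self._recent = deque(maxlen=GALLERY_SIZE)

    def add_photo(self, url):
        """Register a newly processed photograph (newest first)."""
        self._recent.appendleft(url)

    def payload(self):
        """Return the JSON array a display client could poll for."""
        return json.dumps(list(self._recent))
```

A client polling `payload()` on a timer would always receive at most twelve entries, regardless of how many photographs have been taken during the session.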

Figure 7.

Interactive system display and photo-sharing interface: (a) the main display shows live instructions and a thumbnail grid of recent photos; (b) the web-app allows users to view, enlarge, and download images; (c) the HD image stored on the phone includes a license and QR code for context.

Validation of the finished system proceeded on two fronts. Instrumented walkthroughs confirmed that tracker latency remained below 10 ms everywhere inside the 6 m × 6 m envelope, while positional jitter never exceeded 2 mm. Subsequently, expert conservators, museum educators, and XR engineers recommended three adjustments that were implemented before the public pilot: the candlestick grip was remodelled to accommodate smaller hands; a low-pass filter was introduced to soften transitions between quadraphonic zones; and hotspot dwell time was reduced to sixty seconds to stabilise visitor throughput at approximately twenty participants per hour.

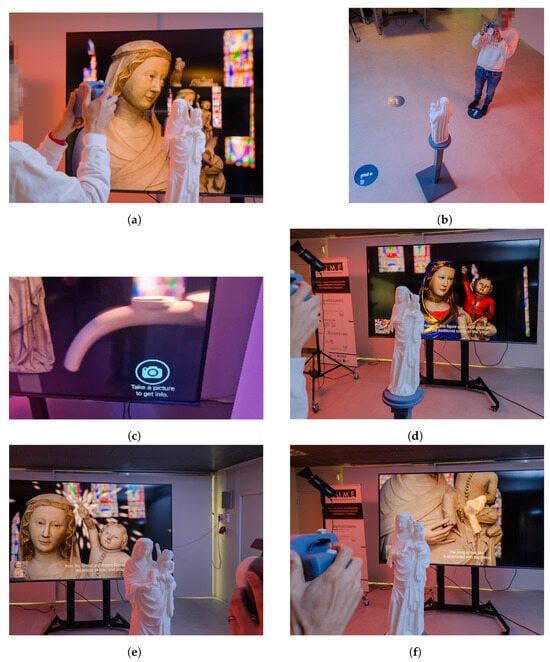

Figure 8 and Figure 9 capture the human experience that these technical decisions make possible. Figure 8a–d tracks two visitors from the initial instruction screen to free exploration of the virtual nave, culminating in the emission of candlelight that colours both the physical and the digital statue. Figure 9 then records the narrative arc triggered when the camera aligns with a floor hotspot: Figure 9a freezes the instant of shutter release, Figure 9b confirms the visitor’s position over the decal, Figure 9c shows the on-screen invitation to “Take a picture to get info”, and Figure 9d–f reveals the three scholarly narratives—lost polychromy, the bird held by the Infant, and the reconstructed blessing gesture—each from the participant’s self-selected vantage point.

Figure 8.

Visitor interaction and virtual asset behavior: (a,b) show the initial instructions and the use of interactive devices, while (c,d) demonstrate the camera and candlelight effects applied to the virtual scene.

Figure 9.

Interactive narrative triggered by camera alignment with a hotspot: (a–c) depict the camera interaction and prompt, while (d–f) showcase the three scholarly narratives revealed: lost polychromy, the bird held by the Infant, and the reconstructed blessing gesture. (a) Instant of shutter release. (b) Visitor’s position over the decal. (c) On-screen invitation to “Take a picture to get info”. (d) Scholarly narrative of lost polychromy. (e) Scholarly narrative of the bird held by the Infant. (f) Scholarly narrative of the reconstructed blessing gesture.

By laying hardware and software out in the order in which they materialise from the design methodology, the section demonstrates that the LanternXR prototype fulfils every methodological requirement up to Expert Evaluation. Deployment to a museum gallery, longitudinal visitor studies, and a formal maintenance plan remain future work, but the system now constitutes a fully validated laboratory demonstrator that integrates tangible interaction, real-time photogrammetric rendering, and participatory image making around a single Gothic artifact.

2.3. Research Design

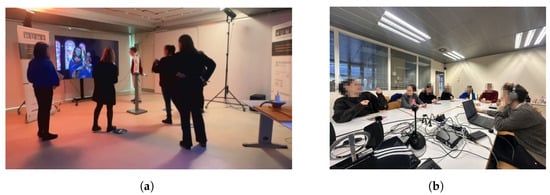

This investigation follows the staged methodological framework summarised in Figure 1 and terminates at the Expert Evaluation stage, deferring large-scale visitor studies to future work; see Figure 10.

Figure 10.

Expert evaluation stage of the study: (a) Phases 1 and 2 involved direct interaction with the system, while (b) Phase 3 gathered expert feedback in a focus group session.

Before the expert review, a formative usability trial was conducted with nine laboratory staff members who had no prior experience with the system. Each participant completed two scripted tasks—(i) illuminate the nave with three tealights; (ii) trigger all three hotspots—while wearing a head-mounted eye tracker. Logged metrics included positional jitter, frame latency, button-response time, task-completion time, and number of misfires. Results showed mean positional error of 1.7 mm (SD = 0.4), mean system latency of 8.2 ms (SD = 1.1), and 100% task completion within 5 min. Minor adjustments followed: tracker–origin offsets were recalibrated, the hotspot trigger volume was enlarged by 5%, and the candlestick grip was shortened by 12 mm to suit smaller hands.
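Summary figures of the kind reported above (a mean with its standard deviation) can be derived from logged samples as sketched below. The readings are made up for illustration and are not the study's data, the helper `summarise` is hypothetical, and the use of the sample (n − 1) standard deviation is an assumption, since the text does not say which estimator was applied.

```python
from statistics import mean, stdev


def summarise(samples):
    """Return (mean, sample standard deviation), each rounded to one decimal."""
    return round(mean(samples), 1), round(stdev(samples), 1)


# Illustrative readings only; they do not reproduce the logged measurements.
jitter_mm = [1.3, 1.5, 1.9, 2.1, 1.7]
latency_ms = [7.0, 8.0, 9.0, 8.5, 8.5]

jitter_summary = summarise(jitter_mm)
latency_summary = summarise(latency_ms)
```

With only a handful of participants, the choice between the sample and population estimators changes the reported spread noticeably, so it is worth stating explicitly alongside the results.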

A single 90-min focus-group session concluded the study. Six specialists (three curators, one art historian, one digital-heritage researcher, and one museum technologist) were recruited through professional networks. The session comprised three phases:

- Phase 1 (Interactive experience, 30 min). Each expert pair explored LanternXR freely. System logs captured photo counts, dwell time per hotspot, and tealight usage; observers recorded navigation patterns and collaborative behaviours.

- Phase 2 (Non-participant observation, 20 min). While a second pair interacted, the remaining experts observed to reflect on social dynamics and accessibility.

- Phase 3 (Discussion and survey, 40 min). A semi-structured discussion, guided by six prompts (social interaction, interface ergonomics, narrative clarity, hotspot utility, likelihood of image sharing, and comparison with traditional displays), was audio-recorded and later coded. As in [1,34,35,36], participants then completed the System Usability Scale (SUS) questionnaire [37] and provided open-ended comments.
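For readers unfamiliar with the SUS questionnaire used in Phase 3, the following minimal sketch shows the standard scoring rule from Brooke [37]: ten items rated 1 to 5, odd-numbered items contributing (response − 1), even-numbered items contributing (5 − response), and the sum multiplied by 2.5 to give a 0 to 100 score. The function name `sus_score` is hypothetical and the example responses are invented for illustration; the article does not report its scoring code.

```python
def sus_score(responses):
    """Score one System Usability Scale questionnaire (ten items, 1-5 Likert)."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses, each between 1 and 5")
    # Index 0 is item 1, so even indices are the odd-numbered (positive) items.
    total = sum((r - 1) if i % 2 == 0 else (5 - r)
                for i, r in enumerate(responses))
    return total * 2.5

# Invented example: a fully neutral respondent lands at the scale midpoint.
print(sus_score([3] * 10))
```

A group-level figure would then be the mean of the individual scores across participants.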

2.4. Data Analysis

All evidence gathered during the focus-group session—field notes, satisfaction-and-usability surveys, automatically logged system metrics, and verbatim discussion transcripts—was examined in a single, integrative analytic workflow designed to illuminate expert perceptions of LanternXR. The first analytic pass addressed the ethnographic field notes compiled while experts interacted with the camera and candlestick and while they observed their peers. Applying the six-stage reflexive thematic procedure in [38], recurrent patterns were coded around navigation strategies, prop handling, collaboration styles, and any non-verbal signs of confusion or friction; emergent codes were iteratively refined into candidate themes that pointed to latent usability constraints.

Quantitative survey items were processed next. Basic descriptive statistics summarised central tendencies in perceived ease of use, immersion, engagement, and collaborative value, while open-ended survey answers underwent a streamlined content analysis following [39]. The resulting thematic categories complemented the observational findings—for example, comments that “aligning the camera is tricky” echoed coded episodes in which participants repeatedly repositioned themselves over a hotspot.

Concurrent with these interpretive stages, the raw system logs were parsed to establish objective interaction baselines: total photographs captured, dwell times at each hotspot, frequency of tealight placement, and any error messages. Simple ratios and cross-tabulations revealed, for instance, that the hotspot with the lowest photograph count corresponded to the area where observers had documented alignment difficulties, thereby linking behavioural telemetry to lived experience.
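The log-parsing step described above can be sketched as follows. This is a minimal example under stated assumptions: the event schema (dicts with `type`, `hotspot`, `seconds`, and `message` keys), the hotspot labels, and the function name `aggregate` are all hypothetical, because the article does not describe the actual log format.

```python
from collections import Counter, defaultdict

def aggregate(events, hotspots=()):
    """Reduce a list of event dicts to simple interaction baselines."""
    photos = Counter({h: 0 for h in hotspots})  # photographs per hotspot
    dwell = defaultdict(float)                  # seconds spent per hotspot
    tealights = 0
    errors = []
    for e in events:
        kind = e["type"]
        if kind == "photo":
            photos[e["hotspot"]] += 1
        elif kind == "dwell":
            dwell[e["hotspot"]] += e["seconds"]
        elif kind == "tealight":
            tealights += 1
        elif kind == "error":
            errors.append(e["message"])
    return {
        "total_photos": sum(photos.values()),
        "photos_per_hotspot": dict(photos),
        "dwell_seconds": dict(dwell),
        "tealights_placed": tealights,
        "errors": errors,
        # Candidate hotspot to cross-check against observers' field notes.
        "least_photographed": min(photos, key=photos.get) if photos else None,
    }

# Invented events for illustration only; not data from the study.
sample = [
    {"type": "photo", "hotspot": "polychromy"},
    {"type": "photo", "hotspot": "polychromy"},
    {"type": "photo", "hotspot": "bird"},
    {"type": "photo", "hotspot": "bird"},
    {"type": "photo", "hotspot": "blessing"},
    {"type": "dwell", "hotspot": "blessing", "seconds": 42.0},
    {"type": "tealight"},
    {"type": "error", "message": "tracker lost"},
]
summary = aggregate(sample, hotspots=("polychromy", "bird", "blessing"))
print(summary["total_photos"], summary["least_photographed"])
```

Passing the known hotspot names up front keeps hotspots with zero photographs visible in the counts, which matters when the goal is to spot under-used content.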

Finally, the 40-min moderated discussion was transcribed verbatim and subjected to another round of reflexive thematic analysis [38]. Codes generated from spoken reflections were clustered into higher-order concepts (social interaction, interface ergonomics, information accessibility, engagement with the virtual twin, and comparisons with traditional displays) that mirrored the categories structuring the dialogue. Insights from this qualitative core were then cross-checked against survey trends and interaction metrics to confirm or nuance preliminary interpretations, an iterative comparison that Innocente et al. [40] recommend for technology-evaluation studies in cultural-heritage settings.

By deliberately triangulating observational records, questionnaire data, system telemetry, and focus-group discourse, the study constructed a convergent evidence base whose internal consistency strengthens credibility and trustworthiness.

3. Results

This section presents the findings obtained from the expert focus group evaluation of the LanternXR system and observed user interaction patterns.

The focus group, comprising six experts in art history, museology, and technology applied to heritage, evaluated LanternXR as an XR experience designed for medieval art exhibitions. Their feedback, analysed thematically, is categorised below:

- General Assessment and Immediate Reactions: The thematic analysis of the focus group discussions revealed a strong initial positive response. The XR experience was widely described as innovative and transformative, with notable potential for educational and cultural applications. Experts emphasised its ability to promote closer observation of details often overlooked in traditional museum settings. The system’s immersiveness was also highlighted as a key strength, with participants reporting a strong sense of presence within the virtual environment. However, the analysis also identified usability challenges, particularly concerning the handling of the camera and interaction points, which were found potentially confusing for users unfamiliar with technology. Additionally, the simultaneous presence of numerous elements was noted as potentially overwhelming.

- Educational Impact: Participants emphasised the system’s educational value, particularly its capacity to contextualize artifacts within their original environment, which was considered invaluable for learning. Suggestions were made to enhance authenticity by incorporating sensory information like sounds and smells.

- Technological Aspects: This work achieved promising results by programming specific shaders to animate the transformation of one material into another. This was particularly effective in the polychromy recreation of the statue, where the animation utilised the occlusion map to transition to the material featuring the recreated colors of the Virgin and Child figure. A particle system was introduced in one of the animations to highlight the child’s hand, emphasizing its reconstruction at the moment when this point of information is activated. The particle effect, positioned behind the child’s hand, serves as a metaphorical emphasis, suggesting the child is blessing his mother. The prototype was developed in English, and implementing a multilingual system remains a challenge. This raises the question of whether the information points should include spoken messages or consider a mix of spoken content in English with subtitles in various languages. The results showed a high level of satisfaction with the graphical output using Unity’s High Definition Render Pipeline (HDRP). This system enabled real-time ray tracing at 4K resolution, with shadows programmed to deliver highly realistic lighting effects.

- Visual Representation and Realism: The visual quality of the system was widely praised, with experts noting the impressive representation of color and materials. However, suggestions were made to refine certain details, such as the shine on the Virgin’s mantle, and to incorporate more realistic lighting, such as simulating the flickering of medieval candles.

- Interaction and Navigation: Feedback on interaction was mixed. While the concept was appreciated, experts suggested improvements such as including a virtual magnifying glass for detailed exploration and stabilizing the camera with a virtual tripod for smoother interaction.

- Social and Collaborative Interaction: The system’s focus on promoting collaboration was positively received. The thematic analysis of focus group discussions revealed a strong theme of collaborative potential, with experts noting its ability to foster socialization in a museum context. As one expert stated, “It really got us talking and working together, which you don’t always get in a museum”. However, it was also pointed out that the complexity of interactive elements could potentially distract from the main objective. The potential for individual exploration with automatic support was also suggested, indicating a desire for both social and individual learning experiences.

- Museography and Educational Applications: The XR system was acknowledged as a valuable tool for contextualizing delocalised artifacts through the reconstruction of historical environments. Experts reiterated the recommendation to incorporate multisensory elements—such as scents (e.g., incense) and ambient music—to further enhance the perceived authenticity of the experience. The importance of designing inclusive interfaces was strongly emphasised, with specific suggestions including the integration of tactile textures and auditory descriptions to improve accessibility for visually impaired users. Additionally, the potential integration of digital replicas of lost or non-exhibited objects was identified as a significant added value of the system.

- Suggestions for Improvement: Participants proposed several key enhancements to optimize the user experience. First, they highlighted the difficulty of maintaining camera stability, suggesting the integration of a wheeled tripod (dolly) to facilitate more stable positioning. While this mechanical solution was considered viable, software-based stabilization was deliberately avoided in order to preserve the system’s real-time responsiveness. Second, it was recommended to explore the use of multiple light sources—such as placing several candle-like devices on tripods—to create varied and more naturalistic lighting setups, instead of relying on a single device with digitally duplicated candle effects. Another suggestion concerned the physical model of the sculpture: participants advocated for a 1:1 scale replica, rather than the current 1:2 version, to improve both the visual and tactile perception. The reduced scale was perceived to diminish the sculpture’s presence and physical impact. Finally, the implementation of adaptive interfaces and inclusive features—especially for individuals with disabilities and children—was strongly encouraged. Adjustments to accommodate users of different heights were also recommended, particularly to enhance accessibility and engagement for younger audiences.
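The mask-driven material transition mentioned under Technological Aspects can be illustrated with a small per-texel sketch. This is plain Python rather than Unity shader code, and it is not the authors' implementation: the function names, the `softness` parameter, and the choice that low mask values transition first are all assumptions made for illustration. The idea is that an animation value sweeps a threshold across a per-texel mask (for example, a value sampled from an occlusion map) and the result weights a linear blend between the base and target material colours.

```python
def smoothstep(edge0, edge1, x):
    """Hermite interpolation clamped to [0, 1], as commonly used in shaders."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)

def blend_texel(base_rgb, target_rgb, mask, progress, softness=0.1):
    """Blend one texel from the base material colour to the target colour.

    mask     -- per-texel value in [0, 1] (assumed to come from a mask texture)
    progress -- animation progress in [0, 1]
    softness -- width of the transition band; must be greater than 0
    """
    # Scale progress so that 0 gives all base and 1 gives all target.
    threshold = progress * (1.0 + softness)
    weight = smoothstep(mask, mask + softness, threshold)
    return tuple(b + (t - b) * weight for b, t in zip(base_rgb, target_rgb))

# Hypothetical colours: bare stone fading to a recreated pigment.
stone, pigment = (0.6, 0.6, 0.6), (0.1, 0.2, 0.7)
print(blend_texel(stone, pigment, mask=0.5, progress=0.5))
```

Because each texel crosses the threshold at a different moment, the recreated colours appear to spread over the surface instead of cross-fading uniformly.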

Analysis of observational data revealed that participants naturally adopted roles of “guides” and “explorers”, aligning with the theme of “Collaborative Discovery” identified in the qualitative analysis. Moreover, 83.33% of participants (five of the six experts) reported enhanced engagement compared to traditional museum experiences. The tracking system maintained sub-millimeter accuracy throughout testing, with a 97% uptime during operational hours. The photo sharing functionality demonstrated high engagement, with an average of 12 virtual photographs captured per group session.

To complement the qualitative insights gathered during the focus group session, a series of user experience metrics were extracted from technical logs, structured observation, and survey data. These metrics provide objective evidence of participant engagement, interface intuitiveness, and overall system usability during the Expert Evaluation step:

- Interface Intuitiveness and Usability: 83.33% of participants were able to operate both interactive devices—the virtual camera and the candlestick—without requiring external assistance. This high rate of unaided interaction suggests that the system’s physical–digital affordances were intuitively designed and effectively communicated through the devices’ materiality and interaction logic.

- Interaction Duration as a Proxy for Engagement: The average time spent interacting with the LanternXR system was approximately 25 min per participant, well above the estimated 10-min engagement norm for comparable static exhibitions. This prolonged interaction time points to sustained attention and immersive user involvement.

- Hotspot Activation and Technical Responsiveness: Log data confirmed consistent activation of all three information hotspots by 83.33% of participants, suggesting a high degree of exploratory behaviour and successful interaction with spatialised content. Technical responsiveness remained within acceptable parameters, with no perceivable latency noted during spatial interaction validation or expert testing.

- Photographic Activity: The number of photographs taken per participant ranged from 3 to 9, with a mean of 5.6, indicating active engagement with the photographic affordances of the virtual camera. These outputs served not only as interaction logs but also as indicators of individual exploration and interest.

4. Discussion

The expert evaluation and initial interactions suggest that LanternXR can meaningfully contribute to enhancing museum experiences, particularly by promoting social engagement without compromising accessibility. The spontaneous role-playing observed among participants—where individuals naturally assumed the roles of “guides” or “explorers”—suggests that the system successfully bridges the gap between individual exploration and collaborative engagement. This emergent dynamic challenges the traditionally solitary nature of museum visits, aligning closely with the design principles of LanternXR.

Moreover, the methodological framework established in this project can be adapted to other domains—such as archaeology or the natural sciences—by reconfiguring the tangible interfaces and metaphors. For instance, replacing the Gothic Virgin sculpture with a 3D model of a dinosaur could allow experimentation with alternative metaphors and interaction tools—such as replacing the candle and camera with context-specific instruments like environmental sensors or excavation tools.

The strong engagement with the photo-sharing feature suggests that LanternXR effectively supports the extension of museum experiences into digital domains. Participants consistently interacted with this functionality, reflecting broader patterns in heritage communication where digital content fosters continued public engagement beyond the exhibition space.

The expert feedback highlighted both strengths and areas requiring refinement. Experts consistently praised the system’s high visual fidelity and its capacity to enrich contextual understanding of the artifact, particularly in educational scenarios. These findings demonstrate that XR technologies can effectively support knowledge transfer and interpretation in museum environments. However, challenges related to tool handling and system intuitiveness for less tech-savvy users underscore the need for interface simplification and iterative usability enhancements. Suggestions regarding the incorporation of multisensory elements and improvements in visual realism offer clear directions for future development.

Increased average interaction times, when compared to conventional exhibit engagement, suggest that LanternXR’s interactive and gamified mechanics—particularly hotspot discovery and virtual photography—effectively sustain user attention. Furthermore, the high rate of successful interface usage without prior instruction reflects the system’s intuitive design, although expert feedback indicates further simplification is desirable.

This study was limited to a focused expert evaluation with a relatively small and homogeneous group. As a next step, the authors plan to deploy the prototype in the Museu d’Art Medieval in Vic (Spain), where it will be tested with general museum visitors to gather validation data and user feedback. Broader generalizations should be made with caution until further evaluations are conducted with more diverse audiences. Additionally, the results pertain to a single artifact scenario, and further studies are needed to assess the system’s applicability across various types of museum content.

5. Conclusions

This work reframed XR practice in museums by replacing personal head-mounted displays with optically tracked, hand-held props that invited visitors to discover heritage together. In the prototype developed, the tangible camera and candlestick converted passive looking into embodied, collaborative exploration; during expert trials the system recorded sub-millimetre positional precision and a mean of 12 photographs per session, while 83.33% of participants expressed a preference for LanternXR over conventional label-and-screen displays. Ceiling-mounted tracking beacons allowed the interaction footprint to expand simply by adding rails, and multiple screens could be daisy-chained without forcing competition for a single viewpoint. Although real-time ray tracing demanded a high-end GPU, the architecture proved compatible with emerging neural-rendering and AI up-scaling pipelines that promised to reduce hardware costs.

The prototype also demonstrated how faithful 3D replicas enriched interpretation: simulated medieval daylight revealed polychromy lost to time, and hotspot animations exposed symbolic elements such as the bird in the child’s hand or the restored blessing gesture. Experts agreed that combining speculative reconstruction with hands-on agency deepened curatorial storytelling by letting visitors test alternative conservation hypotheses rather than merely receive them. In this respect, the design also advanced HCI research on embodied cognition. It further showed how game-inspired mechanics (virtual photography and candle-placing) anchored learning in action while meeting museological calls for inclusive, shareable content through multilingual captions, open-licence image downloads, and spatial audio that adapts to group movement.

Nevertheless, the practical limitations of the system must be acknowledged. Because the installation relies on room-scale tracking, dimmable theatrical lighting, and a pair of tracked props, visitor capacity is inherently capped. This constraint makes the installation less suited to high-volume, open-plan galleries such as the Fallas Museum or the Oceanogràfic, both located in Valencia city (Spain). Instead, the concept is best positioned as a specialised micro-experience, a ticketed add-on, a pop-up in a temporary exhibition, or a behind-the-scenes offer for schools, members, or VIP tours. Many institutions already reserve side rooms for VR films, conservation demos, or education labs; our setup fits naturally into that niche while delivering a higher degree of agency and multisensory feedback than headset-only stations.

Furthermore, scaling the experience is largely a logistical—not technological—challenge. Multiple identical pods could operate in parallel. Throughput could be increased by shortening each interaction loop or by scheduling timed slots. Future iterations might explore inside-out tracking (e.g., depth-sensing cameras embedded in the props) to remove the ceiling rig and reduce installation overhead. These refinements lie beyond the scope of the current study, yet they underscore the adaptability of the underlying methodology for other heritage venues and audience sizes.

Despite these strengths, reliance on proprietary infrared tracking limited immediate adoption in smaller institutions. Future iterations will investigate AI-based pose estimation with commodity RGB cameras, scene-swapping for multi-object shows, and longitudinal field studies to examine knowledge retention and social impact. By positioning XR as a social, device-free medium, LanternXR shifted digital-heritage practice from solitary immersion to shared meaning-making, inviting visitors not merely to observe the past but to co-create it through collective narrative and memorable, situated action.

Author Contributions

Conceptualization, A.M.; Methodology, A.M.-T.; Software, A.M. and J.J.C.-F.; Validation, J.J.C.-F.; Formal analysis, A.M.-T. and L.G.; Investigation, A.M. and J.E.S.; Resources, L.G.; Data curation, J.J.C.-F.; Writing—original draft, A.M. and J.E.S.; Writing—review & editing, J.E.S. and L.G.; Supervision, J.E.S.; Funding acquisition, L.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been funded by the Spanish Government (Grants PID2024-156583OB-I00 and PID2020-117421RB-C21 funded by MCIN/AEI/10.13039/501100011033).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Solanes, J.E.; Muñoz, A.; Gracia, L.; Tornero, J. Virtual Reality-Based Interface for Advanced Assisted Mobile Robot Teleoperation. Appl. Sci. 2022, 12, 6071.

2. Schoene, B.; Kisker, J.; Lange, L.; Gruber, T.; Sylvester, S.; Osinsky, R. The reality of virtual reality. Front. Psychol. 2023, 14, 1093014.

3. Simon-Vicente, L.; Rodriguez-Cano, S.; Delgado-Benito, V.; Ausin-Villaverde, V.; Delgado, E.C. Cybersickness. A systematic literature review of adverse effects related to virtual reality. Neurologia 2024, 39, 701–709.

4. Martí-Testón, A.; Muñoz, A.; Solanes, J.E.; Gracia, L.; Tornero, J. A Methodology to Produce Augmented-Reality Guided Tours in Museums for Mixed-Reality Headsets. Electronics 2021, 10, 2956.

5. Gopakumar, M.; Lee, G.Y.; Choi, S.; Chao, B.; Peng, Y.; Kim, J.; Wetzstein, G. Full-colour 3D holographic augmented-reality displays with metasurface waveguides. Nature 2024, 629, 791–797.

6. Chen, Y.; Wang, X.; Le, B.; Wang, L. Why people use augmented reality in heritage museums: A socio-technical perspective. Herit. Sci. 2024, 12, 108.

7. Starszak, K.; Bajor, G.; Stanuch, M.; Pikula, M.; Trybus, O.; Bojanowicz, W.; Basza, M.; Karas, R.; Lepich, T.; Skalski, A. Mixed Reality Technology and Three-Dimensional Printing in Teaching: Heart Anatomy as an Example. J. Vis. Exp. 2025, 218, e67850.

8. Kosmas, P.; Galanakis, G.; Constantinou, V.; Drossis, G.; Christofi, M.; Klironomos, I.; Zaphiris, P.; Antona, M.; Stephanidis, C. Enhancing accessibility in cultural heritage environments: Considerations for social computing. Univers. Access Inf. Soc. 2020, 19, 471–482.

9. Sylaiou, S.; Kasapakis, V.; Dzardanova, E.; Gavalas, D. Leveraging Mixed Reality Technologies to Enhance Museum Visitor Experiences. In Proceedings of the 2018 International Conference on Intelligent Systems (IS), Funchal, Portugal, 25–27 September 2018; pp. 595–601.

10. Trunfio, M.; Campana, S. A visitors’ experience model for mixed reality in the museum. Curr. Issues Tour. 2020, 23, 1053–1058.

11. Lin, C.; Xia, G.; Nickpour, F.; Chen, Y. A review of emotional design in extended reality for the preservation of cultural heritage. npj Herit. Sci. 2025, 13, 86.

12. Bekele, M.K.; Pierdicca, R.; Frontoni, E.; Malinverni, E.S.; Gain, J. A survey of augmented, virtual, and mixed reality for cultural heritage. J. Comput. Cult. Herit. 2018, 11, 1–36.

13. Bekele, M.K.; Champion, E. A Comparison of Immersive Realities and Interaction Methods: Cultural Learning in Virtual Heritage. Front. Robot. AI 2019, 6, 91.

14. Leopardi, A.; Ceccacci, S.; Mengoni, M.; Naspetti, S.; Gambelli, D.; Ozturk, E. X-reality technologies for museums: A comparative evaluation based on presence and visitors experience through user studies. J. Cult. Herit. 2021, 47, 188–198.

15. Carrozzino, M.; Bergamasco, M. Beyond virtual museums: Experiencing immersive virtual reality in real museums. J. Cult. Herit. 2010, 11, 452–458.

16. Jung, T.H.; Tom Dieck, M.C. Augmented reality, virtual reality and 3D printing for the co-creation of value for the visitor experience at cultural heritage places. J. Place Manag. Dev. 2017, 10, 140–151.

17. Okanovic, V.; Ivkovic-Kihic, I.; Boskovic, D.; Mijatovic, B.; Prazina, I.; Skaljo, E.; Rizvic, S. Interaction in eXtended Reality Applications for Cultural Heritage. Appl. Sci. 2022, 12, 1241.

18. Margetis, G.; Apostolakis, K.C.; Ntoa, S.; Papagiannakis, G.; Stephanidis, C. X-Reality museums: Unifying the virtual and real world towards realistic virtual museums. Appl. Sci. 2021, 11, 338.

19. Lee, L.H.; Braud, T.; Zhou, P.Y.; Wang, L.; Xu, D.; Lin, Z.; Kumar, A.; Bermejo, C.; Hui, P. All One Needs to Know about Metaverse: A Complete Survey on Technological Singularity, Virtual Ecosystem, and Research Agenda. Found. Trends Hum.-Comput. Interact. 2024, 18, 100–337.

20. Slater, M.; Sanchez-Vives, M.V. Enhancing Our Lives with Immersive Virtual Reality. Front. Robot. AI 2016, 3, 74.

21. Petrelli, D.; Ciolfi, L.; van Dijk, D.; Hornecker, E.; Not, E.; Schmidt, A. Integrating material and digital: A new way for cultural heritage. Interactions 2013, 20, 58–63.

22. Hornecker, E.; Buur, J. Getting a grip on tangible interaction: A framework on physical space and social interaction. In Proceedings of the CHI06: CHI 2006 Conference on Human Factors in Computing, Montréal, QC, Canada, 22–27 April 2006; pp. 437–446.

23. Koutsabasis, P.; Vosinakis, S. Kinesthetic interactions in museums: Conveying cultural heritage by making use of ancient tools and (re-) constructing artworks. Virtual Real. 2018, 22, 103–118.

24. Hulusic, V.; Gusia, L.; Luci, N.; Smith, M. Tangible User Interfaces for Enhancing User Experience of Virtual Reality Cultural Heritage Applications for Utilization in Educational Environment. ACM J. Comput. Cult. Herit. 2023, 16, 1–24.

25. Checa, D.; Bustillo, A. A review of immersive virtual reality serious games to enhance learning and training. Multimed. Tools Appl. 2019, 79, 5501–5527.

26. Luo, D.; Doucé, L.; Nys, K. Multisensory museum experience: An integrative view and future research directions. Mus. Manag. Curatorship 2024, 1–28.

27. Wang, Y.; Gong, D.; Xiao, R.; Wu, X.; Zhang, H. A Systematic Review on Extended Reality-Mediated Multi-User Social Engagement. Systems 2024, 12, 396.

28. Oh, C.S.; Bailenson, J.N.; Welch, G.F. A systematic review of social presence in virtual reality. Front. Robot. AI 2018, 5, 114.

29. Jung, T.; Tom Dieck, M.C.; Lee, H.; Chung, N. Effects of Virtual Reality and Augmented Reality on Visitor Experiences in Museum. In Proceedings of the International Conference on Information and Communication Technologies in Tourism, Bilbao, Spain, 2–5 February 2016; pp. 621–635.

30. Li, J.; Wider, W.; Ochiai, Y.; Fauzi, M.A. A bibliometric analysis of immersive technology in museum exhibitions: Exploring user experience. Front. Virtual Real. 2023, 4, 1240562.

31. Ramtohul, A.; Khedo, K.K. Augmented reality systems in the cultural heritage domains: A systematic review. Digit. Appl. Archaeol. Cult. Herit. 2024, 32, e00317.

32. MEV, Museu d’Art Medieval. Virgin of Boixadors. Available online: https://www.museuartmedieval.cat/en/colleccions/gotico/virgen-de-boixadors-mev-10634 (accessed on 30 April 2025).

33. Antilatency. Antilatency AR/VR High-Precision Tracking System. Available online: https://antilatency.com/vrar (accessed on 1 May 2025).

34. Attig, C.; Wessel, D.; Franke, T. Assessing Personality Differences in Human-Technology Interaction: An Overview of Key Self-report Scales to Predict Successful Interaction. In Proceedings of the 19th International Conference, HCI International 2017, Vancouver, BC, Canada, 9–14 July 2017; pp. 19–29.

35. Blattgerste, J.; Strenge, B.; Renner, P.; Pfeiffer, T.; Essig, K. Comparing Conventional and Augmented Reality Instructions for Manual Assembly Tasks. In Proceedings of the 10th International Conference on PErvasive Technologies Related to Assistive Environments, Island of Rhodes, Greece, 21–23 June 2017; pp. 75–82.

36. Franke, T.; Attig, C.; Wessel, D. A Personal Resource for Technology Interaction: Development and Validation of the Affinity for Technology Interaction (ATI) Scale. Int. J. Hum.-Comput. Interact. 2018, 35, 456–467.

37. Brooke, J. SUS: A Quick and Dirty Usability Scale. In Usability Evaluation in Industry; CRC Press: Boca Raton, FL, USA, 1996; ISBN 9780748404605.

38. Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

39. Guest, G.; MacQueen, K.M.; Namey, E.E. Applied Thematic Analysis; SAGE Publications: Thousand Oaks, CA, USA, 2012.

40. Innocente, C.; Ulrich, L.; Moos, S.; Vezzetti, E. A framework study on the use of immersive XR technologies in the cultural heritage domain. J. Cult. Herit. 2023, 62, 268–283.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).