1. Introduction

Mobility is defined as the ability to move or travel. Existing systems such as vehicles have been dedicated solely to moving from one place to another, and this operation has been regarded as a function rather than a service. However, recent technical developments allow mobility to be delivered as a service. Since the emergence of mobility as a service (MaaS), mobility services have aimed not only to offer transport but also to provide convenience and safety while driving.

In mobility services, identifying the condition and status of users, including drivers and passengers, is necessary to prevent abrupt disturbances and instability that threaten safety. For instance, if the driver is under pressure and stress due to a long drive and heavy traffic congestion, the driver’s concentration deteriorates, which may cause a traffic accident. In this context, emotion recognition is an effective way to understand user conditions, so that a meditation approach or related services can be provided to drivers once the vehicle detects their condition.

Facial emotion recognition schemes commonly use a camera to capture and analyze facial information and identify the emotional state. In particular, they use images or video of the human face from a depth camera or webcam, usually extracting features such as landmarks [1,2]. However, this approach is susceptible to the camera’s bandwidth and is limited by the environmental circumstances of mobility. In addition, the camera itself can be costly and is not applicable to small-scale mobility devices such as scooters or electric bicycles. With these matters in mind, speech emotion recognition can be an affordable solution in mobility service environments, as it needs only one microphone as an input for emotion detection, as shown in Figure 1.

Current speech emotion recognition models classify or predict a single emotional state, not multiple states. In reality, humans can experience multiple emotions simultaneously, and classifying only one emotional state may cause a misinterpretation of the user condition, resulting in ill-suited mobility services for users. We also have to consider that mobility services are offered under driving conditions, which typically involve high-level noise that can interfere with speech, as in Figure 1. Thus, we need to conduct noise analysis and testing for speech emotion recognition and provide a new multiple emotion detection scheme with substantial performance improvement.

In this paper, we propose multi-detection-based speech emotion recognition using an autoencoder structure in mobility environments. Instead of simple classification, we use a single autoencoder scheme for multiple emotion detection. This autoencoder can detect the emotional state and suppress the noise to improve signal-to-noise ratios (SNRs). We also compare our scheme with other speech emotion recognition schemes and confirm that it outperforms them.

This paper is organized as follows: Section 2 describes the system model and details speech emotion recognition. In Section 3, we propose our multi-detection-based speech emotion recognition algorithm. Section 4 presents our experimental setup and results. In Section 5, we provide consideration and discussion of our proposed model, and we conclude this paper in Section 6.

2. System Model of Speech Emotion Recognition

Speech emotion recognition has recently been studied using various methods and models. Features such as the mel-scaled spectrogram, chromagram, and spectral contrast are possible options for moving the recognition and classification of emotion into the spectral domain [3]. For the recognition model, convolutional neural networks and transformer models have been applied [2,4]. Given our mobility service condition, we must limit our hardware requirements while achieving high efficiency and performance. Thus, we do not consider a multiple-feature scheme.

Concerning the feature selection, other prosodic-related features such as wavelet, pitch, spectral contract, and Short-Time Fourier Transform (STFT) represent the time domain. This characteristic can often result in a variation of features due to speaker differences, noise, or recording conditions. On the other hand, MFCCs (Mel-Frequency Cepstral Coefficients) consist of temporal blocks with cepstral coefficients that target the characteristics of human voices and seismic audio echoes compared to other features [

5]. Since we want human voice-oriented representations of the emotion features, we select MFCC as the feature for our work with the proven performance in the sound field [

6]. The MFCC is the output of the inverse Discrete-Cosine Transform (DCT) after the Discrete-Fourier Transform (DFT) with triangular mel weighting filters [

7]. Including the MFCC case, we derive our system model of speech emotion recognition, as shown in

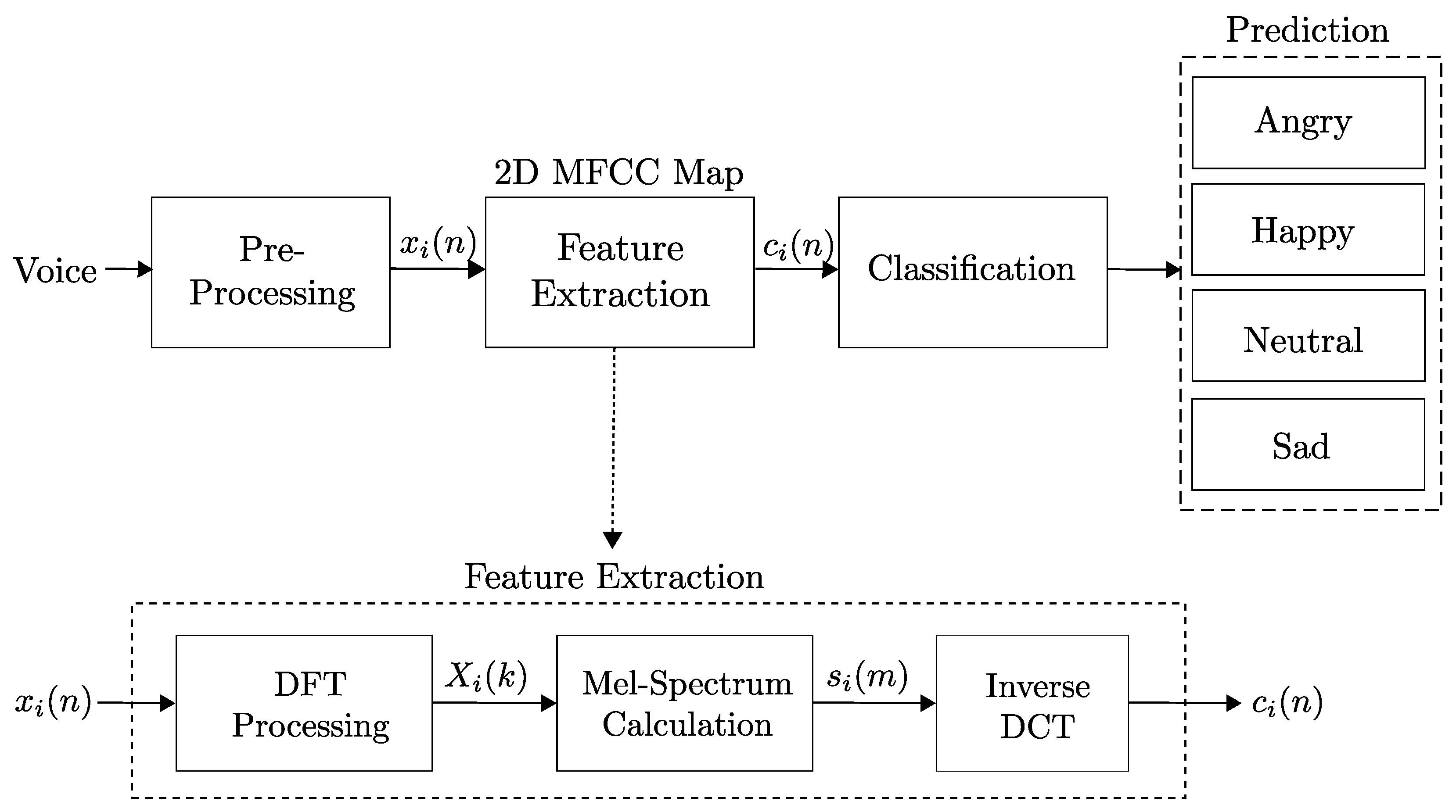

Figure 2.

Each process in Figure 2 is as follows: First, the system microphone records human voice signals as input data for the models. Then, we extract the features, such as the MFCC. After training on the features, the models predict the probability of each emotion class. In our scheme, the list of emotion classes includes angry, happy, neutral, and sad. Finally, the emotion with the highest probability is taken as the predicted emotion. In this paper, we extract the 2D-MFCC, that is, the MFCC over the time domain, as feature maps from speech data to fit convolutional networks.

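For the details of the MFCC procedure corresponding to Figure 2 [7,8], when we define the voice sample as $x_i(n)$ with temporal block index $i$, the DFT $X_i(k)$ is

$$X_i(k) = \sum_{n=0}^{N-1} x_i(n)\, e^{-j 2\pi n k / N}, \quad 0 \le k \le N-1, \tag{1}$$

where $N$ is the DFT size. From Equation (1), the mel-spectrum of the magnitude spectrum $|X_i(k)|$ needs to be computed with $H_m(k)$ as follows:

$$s_i(m) = \sum_{k=0}^{N-1} |X_i(k)|^2\, H_m(k), \quad 1 \le m \le M, \tag{2}$$

where $M$ is the number of triangular mel weighting filters and $H_m(k)$ is the weight at the $k$th energy frequency spectrum bin to the $m$th output band as

$$H_m(k) = \begin{cases} 0, & k < f(m-1), \\ \dfrac{k - f(m-1)}{f(m) - f(m-1)}, & f(m-1) \le k \le f(m), \\ \dfrac{f(m+1) - k}{f(m+1) - f(m)}, & f(m) < k \le f(m+1), \\ 0, & k > f(m+1), \end{cases} \tag{3}$$

where $f(m)$ is

$$f(m) = \frac{N}{F_s}\, \mathrm{mel}^{-1}\!\left( \mathrm{mel}(f_l) + m\, \frac{\mathrm{mel}(f_h) - \mathrm{mel}(f_l)}{M+1} \right), \quad 0 \le m \le M+1, \tag{4}$$

where $F_s$ is the sampling frequency, and $f_l$ and $f_h$ are the low and high boundary frequencies for the full filter bank. In addition, $\mathrm{mel}(f)$ can be defined as

$$\mathrm{mel}(f) = 2595 \log_{10}\!\left(1 + \frac{f}{700}\right). \tag{5}$$

With the inverse DCT calculation, the formula of MFCC is as below. Note that in this formula, $c_i(n)$ is the cepstral coefficients and $C$ is the size of MFCCs.

$$c_i(n) = \sum_{m=1}^{M} \log_{10}\!\big(s_i(m)\big) \cos\!\left( \frac{\pi n \left(m - \tfrac{1}{2}\right)}{M} \right), \quad 0 \le n \le C-1. \tag{6}$$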

Over the temporal block index i and cepstral coefficient index n, the two-dimensional MFCC map can be produced and used for emotion classification. Note that the classification algorithm in Figure 2 outputs the indices mapping to each emotion. We consider the classification of four emotions because these are common emotion categories across datasets and because we must account for the complexity of the multi-emotion cases in this paper.
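The MFCC steps described in this section (DFT, triangular mel weighting, logarithm, and DCT) can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the frame length, hop size, Hamming window, number of filters, and number of coefficients are assumptions chosen for the example, and the function names are hypothetical.

```python
import numpy as np

def hz_to_mel(f):
    """Mel mapping, mel(f) = 2595 * log10(1 + f / 700)."""
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    """Inverse of the mel mapping."""
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate, f_low=0.0, f_high=None):
    """Triangular mel weighting filters, one row per filter."""
    f_high = sample_rate / 2.0 if f_high is None else f_high
    # M + 2 boundary points, equally spaced on the mel scale, mapped to DFT bins.
    mel_points = np.linspace(hz_to_mel(f_low), hz_to_mel(f_high), n_filters + 2)
    bins = np.floor((n_fft / sample_rate) * mel_to_hz(mel_points)).astype(int)
    weights = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):          # rising edge of the triangle
            weights[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):         # falling edge of the triangle
            weights[m - 1, k] = (right - k) / max(right - center, 1)
    return weights

def mfcc_2d(signal, sample_rate=16000, frame_len=400, hop=160,
            n_fft=512, n_filters=26, n_coeffs=13):
    """Return a (temporal block, cepstral coefficient) MFCC map."""
    signal = np.asarray(signal, dtype=float)
    n_frames = 1 + (len(signal) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)   # assumed window choice
    # Power spectrum per block; the half spectrum suffices for real input.
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2
    mel_energy = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    log_mel = np.log10(mel_energy + 1e-10)         # small offset avoids log(0)
    # DCT basis; with zero-based m, (m + 0.5) equals (m - 0.5) for one-based m.
    n = np.arange(n_coeffs)[:, None]
    m = np.arange(n_filters)[None, :]
    dct_basis = np.cos(np.pi * n * (m + 0.5) / n_filters)
    return log_mel @ dct_basis.T
```

Calling `mfcc_2d` on a one-second signal sampled at 16,000 Hz yields one row per temporal block and one column per cepstral coefficient, which is the kind of two-dimensional map a convolutional network can consume.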

3. Multi-Detection Based Speech Emotion Recognition Scheme

Since existing models focus on classifying one emotion, we first investigate each model and compare its performance with that of our proposed model in a later section. The existing models include the Dual-Sequence Long Short-Term Memory (LSTM) Architecture [9], the Local Feature Learning Block Network [10], and Deep-Net [11]. In addition, we propose our autoencoder-based multi-emotion detection scheme. The models introduced in this section are equivalent to the classification module in Figure 2.

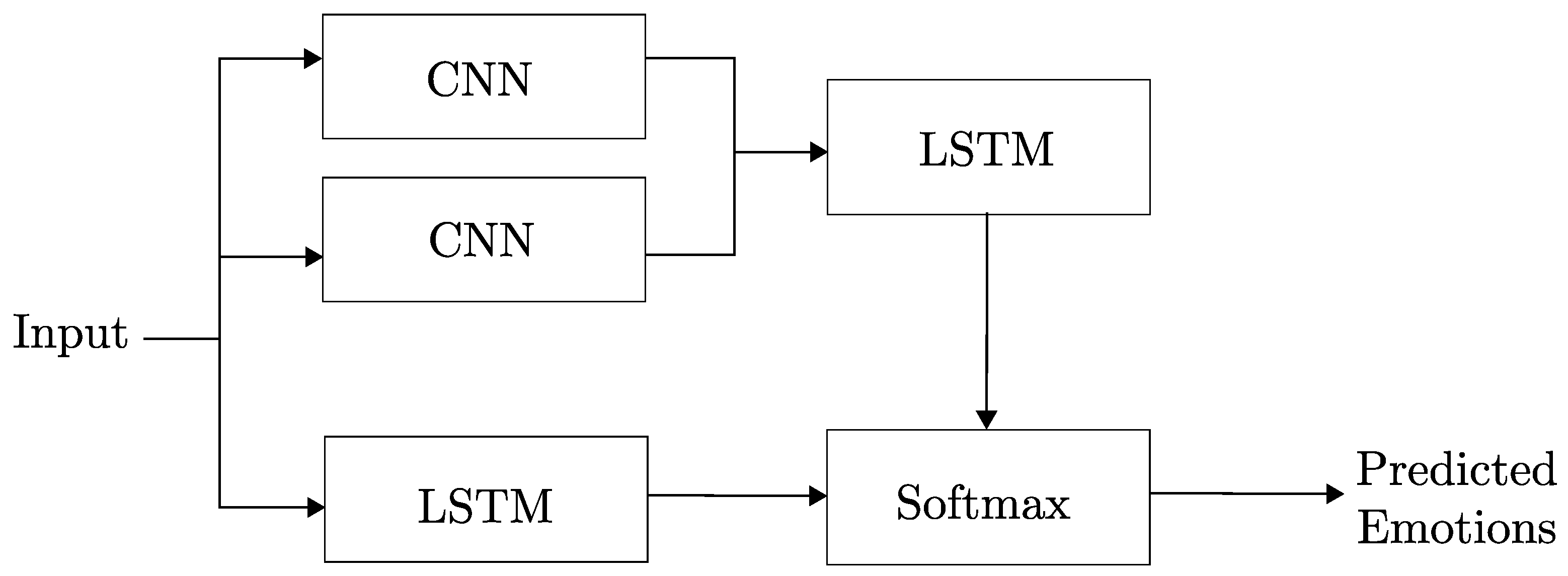

3.1. Dual-Sequence LSTM Architecture

The Dual-Sequence LSTM Architecture features two LSTM paths [9]. We input two different mel-spectrogram features into CNN architectures, and the outputs of the two convolution networks are aligned and passed to an LSTM layer. The other LSTM path receives MFCC input data directly. Each LSTM has a classification layer with a softmax activation function, and the average of their outputs becomes the final prediction. Figure 3 illustrates the architecture of Dual-Sequence LSTM.

3.2. Local Feature Learning Block Network

The deep networks, as shown in Figure 4, have local feature learning blocks (LFLBs) consisting of 1D convolution, Batch Normalization (BN), Exponential Linear Unit (ELU), and max-pooling layers [10]. Four local feature learning blocks are connected in series, and the input data, consisting of audio and its Log-Mel Spectrogram (LMS), pass through the four LFLBs, the LSTM, and fully connected layers before softmax. Finally, the softmax activation function calculates the probabilities and predicts an emotion.

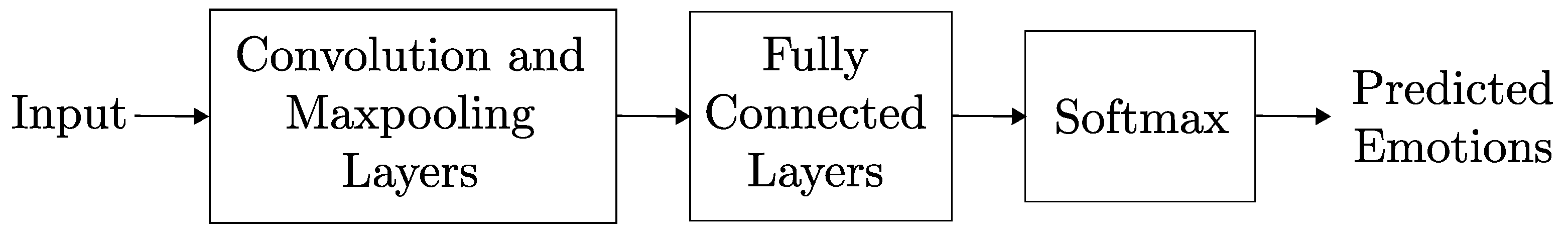

3.3. Deep-Net

Deep-Net mainly consists of combinations of convolutional and max-pooling layers [11]. In Figure 5, data pass through these layers and then through fully connected layers, and the softmax activation function calculates the probabilities and predicts an emotion.

3.4. Multi-Task Learning Scheme

Since transformer models popularized large language models that can handle sequential data of different types, there is promise that they can be extended to emotion recognition. Multi-task learning is one example, in which a main model achieves multiple objectives with different tasks [12]. According to Figure 6, this particular multi-task learning model processes voice and text data for high accuracy. Using its pretrained wav2vec-2.0 module, this model produces the features and calculates the loss values for the text and voice data via a fully connected layer. In this paper, this model uses voice data with pre-scripted text data from the dataset for training and testing. Note that this model uses twelve transformer blocks with seven convolutional blocks [12].

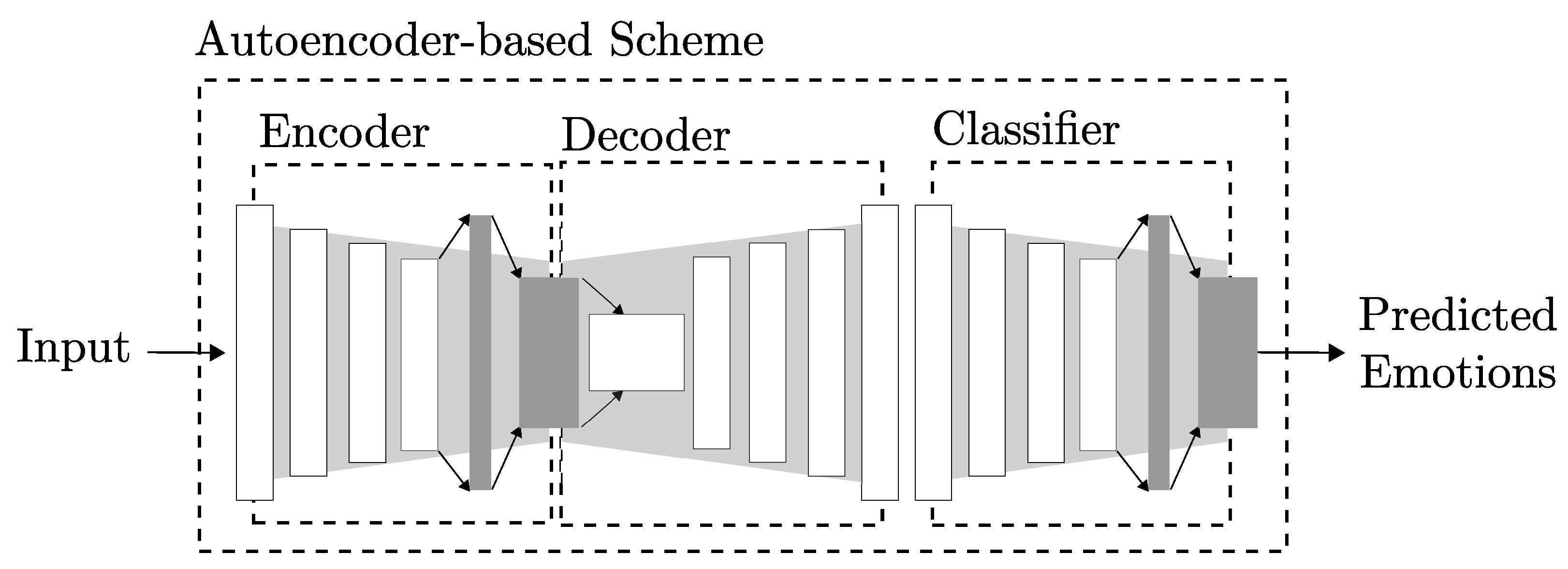

3.5. Proposed Architecture: Autoencoder-Based Multi-Emotion Detection System

Existing speech emotion recognition models predict emotions using only encoded speech feature images. However, this can cause problems when the features are extracted in mobility services, where mobility noise might exist. In this context, a model with denoising is essential. Models with autoencoder structures are well known for reducing image noise [13]. Since we use MFCC images as feature maps, an autoencoder model can be a method for speech emotion recognition in mobility services.

Figure 7 illustrates the multi-emotion detection autoencoder-based structure we propose. To minimize the impact of mobility noise, we propose an autoencoder structure that can detect multiple emotions. There are three parts in our model: the encoder, the decoder, and the classifier. The encoder reduces the data scale while preserving the essential features of the original data. In addition, we add a dropout layer to avoid overfitting and improve the system’s robustness against noisy data. The decoder expands the encoded data to approximately its original size. As a result, data that pass through both the encoder and the decoder are reconstructed to a size close to that of the original data. Note that the output size of our decoder does not precisely match the input data dimension. Since the final objective of our scheme is emotion detection rather than merely eliminating noise, no reconstruction error is used in this scheme.

The classification module consists of convolution and max-pooling layers, similar to the encoder. After the fully connected part, which consists of one flatten layer and one dense layer, the sigmoid activation function calculates the probability of each emotion independently. Therefore, the score of each emotion ranges from zero to one, and multiple emotions can be selected via specific thresholds. On the other hand, the softmax function normalizes the probabilities over all classes so that they sum to one. In other words, the softmax function is only suitable for selecting a single emotion.
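The difference between the two activation functions can be illustrated with a small numerical sketch. The logit values below are hypothetical and chosen only to show the behavior: softmax forces the four outputs to compete for a total of one, whereas sigmoid scores each emotion on its own.

```python
import numpy as np

EMOTIONS = ("angry", "happy", "neutral", "sad")

def softmax(z):
    """Normalize scores so that they sum to one across all classes."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def sigmoid(z):
    """Score each class independently in the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-np.asarray(z, dtype=float)))

# Hypothetical classifier outputs for a sample containing two emotions.
logits = np.array([2.0, 1.5, -3.0, -2.0])

p_softmax = softmax(logits)   # probabilities compete; they sum to one
p_sigmoid = sigmoid(logits)   # each emotion is judged on its own

n_over_half_softmax = int((p_softmax > 0.5).sum())
n_over_half_sigmoid = int((p_sigmoid > 0.5).sum())
```

With these values, only one softmax output can exceed 0.5, while two sigmoid outputs do, which is why a thresholded sigmoid output is suited to selecting more than one emotion.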

The specific layers are organized into three main parts: encoder, decoder, and classifier. In the encoder part, the first layer is the dropout layer with a fixed rate. After the dropout, the second and fourth layers are convolution layers with 256 filters of size (13,13) and 128 filters of size (7,7), respectively. The third and fifth layers are max-pooling layers with pool sizes (3,1) and (2,1). The data then pass through a batch normalization layer and a flatten layer, illustrated in Figure 8 as one flattened layer. The final layer of the encoder part is a dense layer with 1287 units.

In the decoder part, the fully connected data with 1287 units are reshaped into (33,39,1). Then, the data undergo the reversed encoder part, which consists of a batch normalization layer and two sequences of upsampling and convolution layers. The upsampling layers are equivalent to the reverse of the max-pooling layers. In the classifier part, the first and third layers are convolution layers with 128 filters of size (13,13) and 64 filters of size (7,7). Finally, a dense layer with the sigmoid activation function predicts the probability of each emotion. Note that we use the sigmoid function because we want to detect each emotion independently, unlike the softmax function, which distributes the probability across all emotions. In our work, to select multiple emotions, we first pick the emotion with the maximum value, and any other emotion whose value exceeds 0.5 is also selected. Note that 0.5 is the unbiased threshold between zero and one. Except for the final dense layer of the classifier, all convolution and dense layers in our model use the Rectified Linear Unit (ReLU) as the activation function.
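The selection rule stated above (always keep the emotion with the maximum value, then add any other emotion above 0.5) can be sketched as follows. The function name and the example probabilities are hypothetical; only the rule itself comes from the text.

```python
import numpy as np

EMOTIONS = ("angry", "happy", "neutral", "sad")

def select_emotions(probs, threshold=0.5):
    """Pick the top emotion, plus every other emotion above the threshold."""
    probs = np.asarray(probs, dtype=float)
    top = int(np.argmax(probs))
    chosen = {top}
    for i, p in enumerate(probs):
        if i != top and p > threshold:
            chosen.add(i)
    return [EMOTIONS[i] for i in sorted(chosen)]

# Hypothetical sigmoid outputs.
two_emotions = select_emotions([0.9, 0.1, 0.7, 0.2])
weak_single = select_emotions([0.3, 0.1, 0.2, 0.05])
```

Because the maximum is always kept, the rule returns at least one emotion even when no output exceeds the threshold, as in the second call.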

4. Experimental Setup and Results

4.1. Environment Setup

We used the Toronto Emotional Speech Set (TESS) [14] and the Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS) [15] as datasets since both use North American English speech, with the details in Table 1. The TESS dataset has 200 samples per emotion for each of its two speakers: one older talker and one younger talker. The RAVDESS dataset has 192 samples for each of the Angry, Happy, and Sad emotions and 96 samples for Neutral. We have a total of 2272 samples, and we add mobility noises to the original data at different SNRs from −20 dB to +10 dB. We test all models using noise-added data and use the single emotion data of each SNR to compare all models.

Regarding mobility noises, we initially consider the condition in which a small device within the vehicle records voices in mobility conditions. Existing literature discusses the array design, direction, and number of microphones in the vehicle [16,17,18]. However, we consider a realistic case in which a user only brings their own small device with a built-in microphone, such as a smartphone, into the vehicle. Given that assumption, we set up the noise recording as shown in Figure 9 by operating a 2022 Genesis G80 made by Hyundai-Kia Motor Group, and we utilize two different devices, Galaxy S9 and S20, at different recording locations. During the recording, we extract the noise samples that contain only driving noises, not other noises such as honking sounds or other persons’ voices.

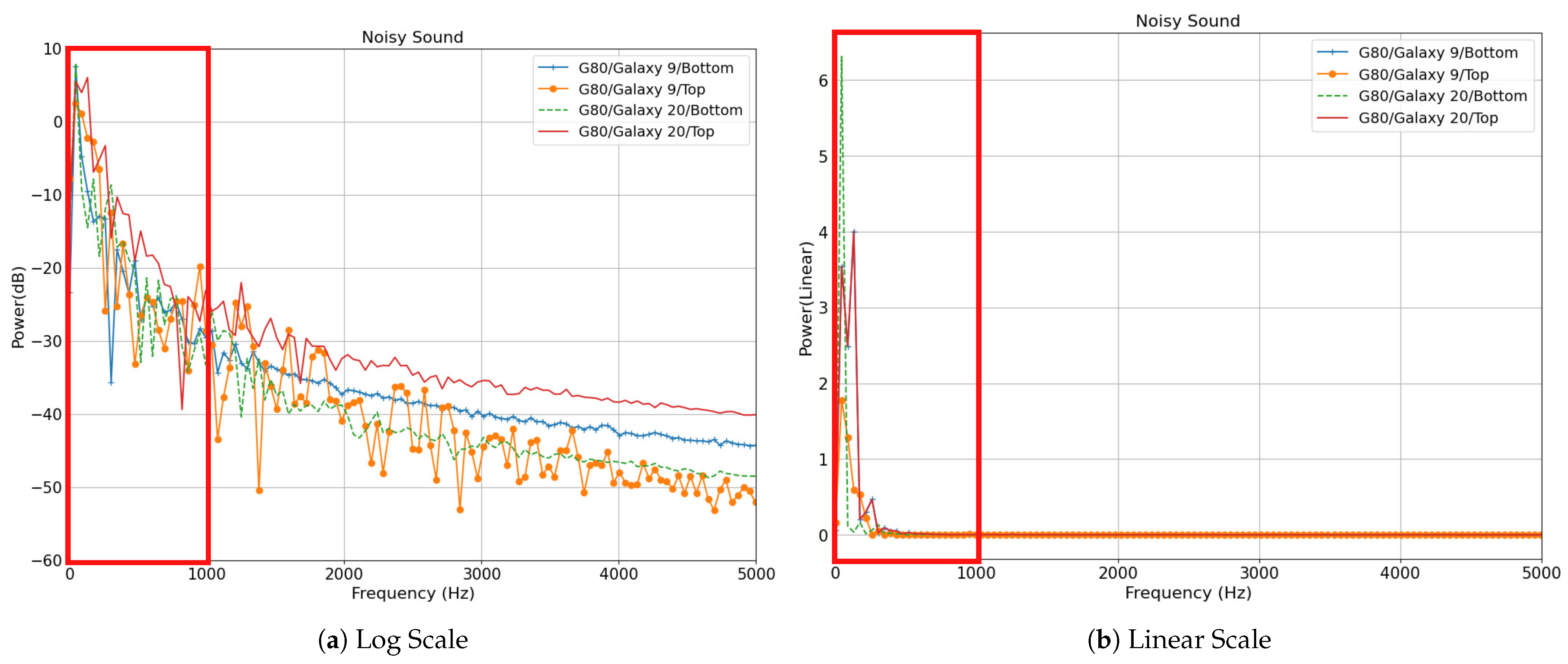

When we analyze these extracted noises, we confirm that all noises show relatively similar patterns on the linear scale over the whole frequency spectrum, according to Figure 10. At the same time, we observe certain noises in the low-frequency band and a slight difference in noise power over the frequency band depending on the device and its location within the vehicle cabin, because of the driving conditions during the recording and the sensitivity of the device microphone. While the noises show a similar pattern, we consider the worst case and select the noise recorded in the Genesis G80 with the Galaxy S20 on top of the center fascia for emotion recognition. Also, note that the noise range considered in the acoustic field is from −10 dB to 10 or 20 dB [19,20,21]. Based on the existing literature, we set the SNR range from −20 dB to 10 dB to observe the impact of noise on performance, and we include the range between −20 dB and −10 dB in particular to explore the impact of high noise levels.
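Adding a recorded noise to a clean utterance at a chosen SNR can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' procedure: the function name is hypothetical, the noise is tiled or cropped to the speech length, and the SNR is defined from the mean power of the whole utterance.

```python
import numpy as np

# SNR levels (in dB) used for the noisy datasets in this paper.
SNR_LEVELS_DB = (-20, -15, -10, -5, 0, 5, 10)

def mix_at_snr(speech, noise, snr_db):
    """Scale the noise so that the mixture has the requested SNR in dB."""
    speech = np.asarray(speech, dtype=float)
    noise = np.asarray(noise, dtype=float)
    # Repeat or crop the noise recording to match the speech length.
    reps = int(np.ceil(len(speech) / len(noise)))
    noise = np.tile(noise, reps)[: len(speech)]
    p_speech = np.mean(speech ** 2)
    p_noise = np.mean(noise ** 2)
    # SNR_dB = 10 * log10(p_speech / (scale^2 * p_noise)), solved for scale.
    scale = np.sqrt(p_speech / (p_noise * 10.0 ** (snr_db / 10.0)))
    return speech + scale * noise

def measured_snr_db(clean, mixed):
    """Recover the SNR of a mixture when the clean signal is known."""
    residual = np.asarray(mixed, dtype=float) - np.asarray(clean, dtype=float)
    return 10.0 * np.log10(np.mean(np.square(clean)) / np.mean(residual ** 2))
```

At −20 dB the scaled noise carries 100 times the power of the speech, which gives a sense of how severe the lowest SNR condition in the experiments is.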

To obtain multi-emotion labeled sample data for our work, we extensively investigated speech emotion recognition datasets. However, these datasets generally have single emotion labels [14,15,22,23]. One of the datasets, the Korean speech emotional database, contains emotion decisions made by five experts, and an existing study uses the five expert judgments to formulate multi-emotion classes [24]. While this existing case shows significant potential for generating multi-emotion labels, its method, a threshold-based approach that assigns an emotion when two out of five judges choose it, does not firmly establish that the sample contains only the chosen emotions. In addition, the method can discard the minority judgments. For example, one of the samples was classified as anger, while the other judgments included neutral, sadness, and disgust. In other words, the number of judgments is not sufficient to firmly determine the exact multi-emotion class. Therefore, instead of finding a new dataset with multiple emotion labels, we generate multi-emotion classes by concatenating two different samples so that the result contains exactly two emotions, rather than by sound mixing or using judgment data.

To assign the two emotions fairly and without bias, we construct multi-emotion data by randomly choosing and connecting two samples with the same SNR, so that one combined sample has two emotions. Note that we generate two-emotion samples to show the potential of multi-emotion classification, not because we cannot generate three-emotion classes. After reading two WAV files, we concatenate them in series in random order using NumPy and write the multi-emotion data with a 16,000 Hz sampling rate. With our experiment data specification shown in Table 2, we conduct single and multi-emotion recognition experiments in the following ways:

Single Emotion Experiment: For the single emotion case, Angry, Happy, Neutral, and Sad in English are used, and details of the data are shown in Table 2 and Table 3. In this experiment, we train on the clean dataset only because we need to show the noise robustness of our proposed autoencoder model. We also compare four different models. In addition, we vary the dropout rate between 0.1 and 0.2 in our proposed model.

Multi-Emotion Experiment: Samples that combine two different emotions are used in this experiment. We use 9000 samples: 1500 samples for each of the six combination labels, namely Angry/Happy, Angry/Neutral, Angry/Sad, Happy/Neutral, Happy/Sad, and Neutral/Sad. In this experiment, we train and test two different cases: (1) the clean dataset only (no noise) and (2) the clean and noisy datasets, in which noise is added to our samples. For the second case, we add the noise at SNR = −20, −15, −10, −5, 0, 5, and 10 dB. Thus, the total number of clean and noisy samples in the second case is 72,000.

Single/Multi-Emotion Experiment: This case considers that the driver may have one or two emotions. We use 10 labels, covering the four single emotions and the six two-emotion combinations, with 11,272 samples. Note that the total number of samples comes from the sum of Table 3. In addition, we train and test this case in the same manner as the multi-emotion experiment. Accordingly, the total number of clean and noisy samples is 90,176.
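The sample counts quoted in this subsection follow from simple arithmetic, which the short sketch below reproduces. All numbers are taken from the text; only the variable names are introduced here for illustration.

```python
from itertools import combinations

EMOTIONS = ("Angry", "Happy", "Neutral", "Sad")
SNR_LEVELS_DB = (-20, -15, -10, -5, 0, 5, 10)

# Single emotion samples: TESS has 2 speakers x 200 samples x 4 emotions,
# RAVDESS has 192 samples for three emotions and 96 for Neutral.
tess_total = 2 * 200 * len(EMOTIONS)
ravdess_total = 192 * 3 + 96
single_total = tess_total + ravdess_total

# Two-emotion samples: 1500 per unordered pair of distinct emotions.
pairs = list(combinations(EMOTIONS, 2))
multi_total = 1500 * len(pairs)

# One clean condition plus one noisy copy per SNR level.
n_conditions = 1 + len(SNR_LEVELS_DB)
multi_with_noise = multi_total * n_conditions

# Single and two-emotion labels combined.
n_labels = len(EMOTIONS) + len(pairs)
combined_total = single_total + multi_total
combined_with_noise = combined_total * n_conditions
```

The eight conditions (clean plus seven SNR levels) account for the factor between 9000 and 72,000 samples, and between 11,272 and 90,176 samples.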

We train all models using single emotion samples without noise for the model comparison experiment. After completing training, we test the models on single emotion samples with added noise from −20 dB to +10 dB and analyze the results. Since all models except ours are intended to classify only a single emotion, we conduct the multi-emotion cases with our proposed model only. For the multi-emotion and single/multi-emotion experiments, we initially train our proposed model using single/multi-emotion samples without noise; however, training on the clean dataset alone does not improve the performance of our model. Thus, we train with the clean dataset and the noise-added datasets from −20 dB to 10 dB. For the confusion matrices, we generate the results from the clean test samples. For this experiment, we follow the environment setup shown in Table 3.
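The construction of a two-emotion sample by concatenation, as described in this subsection, can be sketched as follows. The function name and the multi-hot label layout are assumptions made for the example, and file reading and writing as well as any control of the final sample length are omitted.

```python
import numpy as np

EMOTIONS = ("angry", "happy", "neutral", "sad")

def make_two_emotion_sample(wav_a, label_a, wav_b, label_b, rng):
    """Concatenate two utterances in random order and build a multi-hot label."""
    if label_a == label_b:
        raise ValueError("the two samples must carry different emotions")
    parts = [(np.asarray(wav_a), label_a), (np.asarray(wav_b), label_b)]
    if rng.random() < 0.5:       # random order avoids a positional bias
        parts.reverse()
    audio = np.concatenate([parts[0][0], parts[1][0]])
    target = np.zeros(len(EMOTIONS), dtype=np.float32)
    for _, label in parts:
        target[EMOTIONS.index(label)] = 1.0
    return audio, target

# Placeholder waveforms standing in for two utterances at the same SNR.
rng = np.random.default_rng(0)
audio, target = make_two_emotion_sample(
    np.ones(100), "angry", np.zeros(50), "sad", rng
)
```

A multi-hot target of this form pairs naturally with a sigmoid output layer, since each position can be trained and thresholded independently.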

4.2. Experiment Results

In our experiment, we initially conducted single emotion detection using existing speech emotion recognition models and our proposed model with details of Table 3.

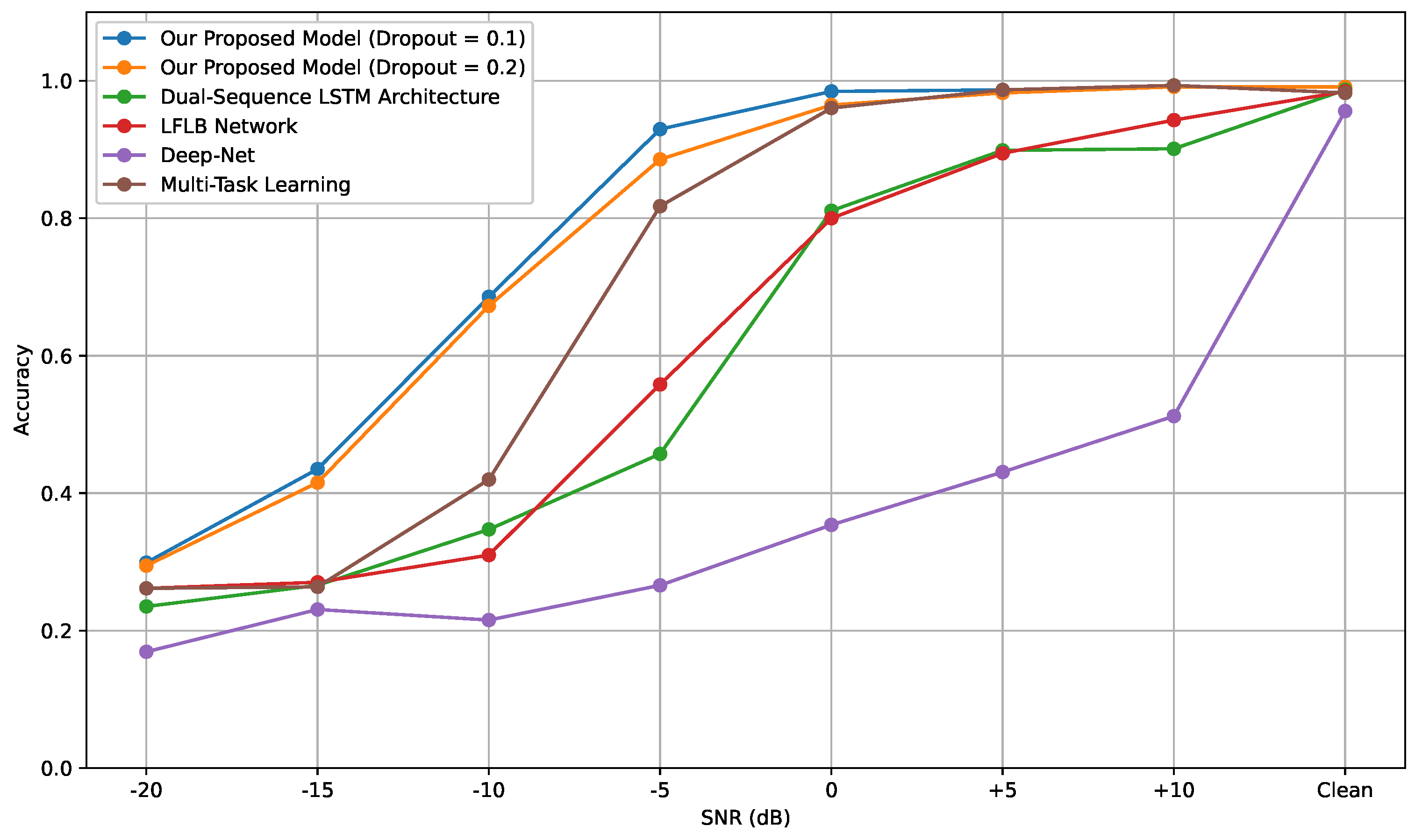

As in

Figure 11 and

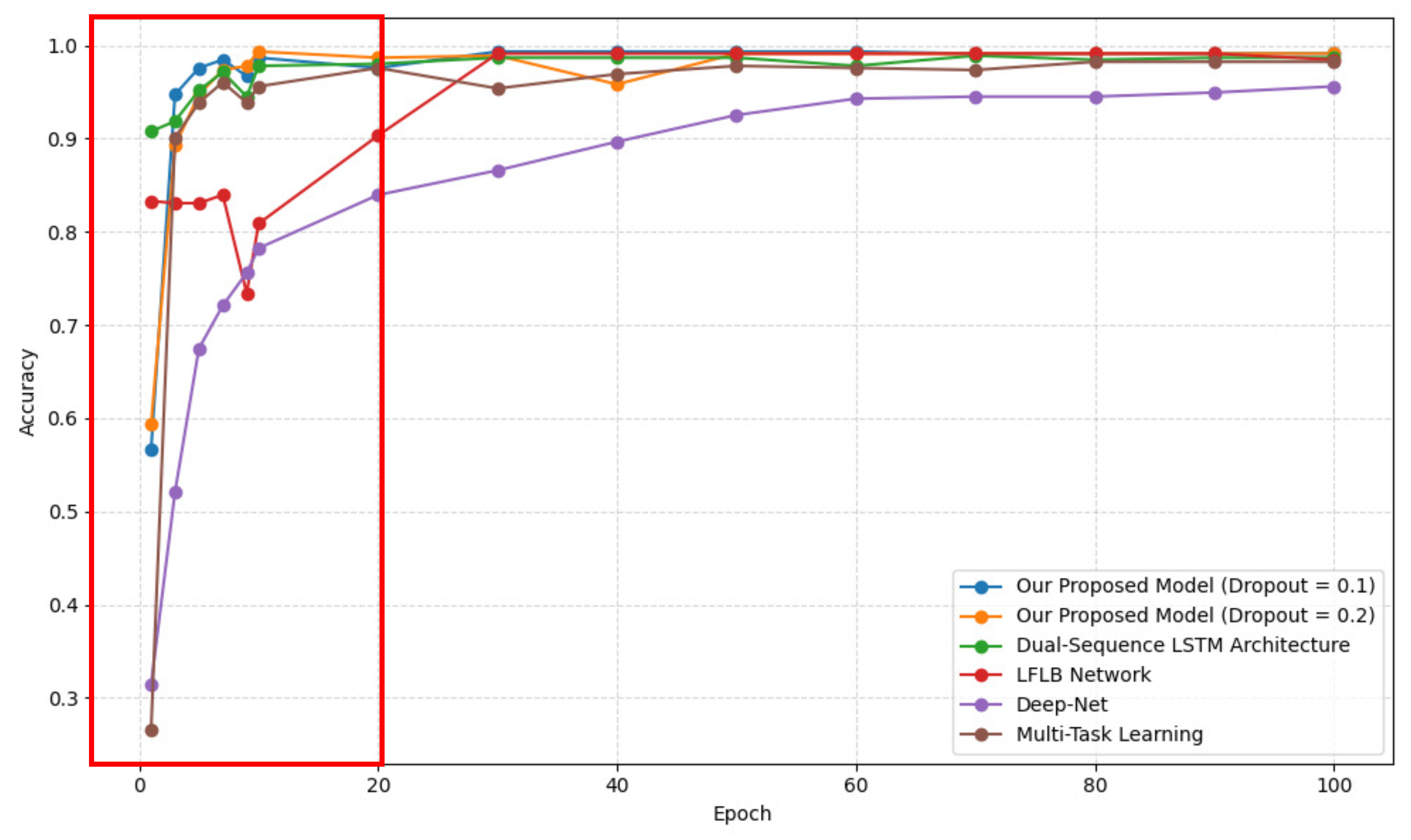

Figure 12, the performance results of accuracy and F1 score showed that our proposed model substantially outperformed the other three models. Our model accuracy showed significantly above consistently over different SNRs since our autoencoder suppressed the noise. At the SNR = −10 dB, our proposed models with dropouts 0.1 and 0.02 are above 0.65 accuracy while other models are below 0.42. Even the F1-score showed that our proposed model scores above 0.64, whereas other models are below 0.38. In comparison, the performances of other models deteriorated with lower SNRs, especially the Deep-Net case. We also confirmed that our models can detect a single emotion in the clase case with 96% accuracy and F1-score at SNR = 0 dB, the highest performance among the models. These results prove that our models can operate against noises well compared to other models. For the performance convergence over epoch,

Figure 13 shows that the converange of the model performance was already reached before epoch 20 due to the data size and number of emotion types.

For the comparison among models over various factors, including the noise level (SNR), we list the ablation study in Table 4. Note that the accuracy and F1-scores depend on the objective and the dataset used, and the performance of each model increases with the SNR. Also, we note that the autoencoder trained for multi-emotion detection with the clean dataset only showed poor accuracy and F1-score, with accuracy below 0.22. Thus, we decided to train on both the clean and noisy datasets in the multi-emotion cases (Table 5).

We also conducted experiments for double emotion detection using our proposed model. Figure 14 shows the performance of the multi-emotion detection case over SNRs. In Figure 14, we observed that our model achieved a substantial accuracy between 79% and 87%. This result indicated that, unlike other models, our autoencoder model could detect double emotions. In particular, the F1-score of our model reached a value over 0.93. Since the F1-score weighs false positives and false negatives equally and is crucial under imbalanced distributions, we consider the high F1-score as evidence of the strong performance of our proposed model.
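How accuracy and F1-score can differ for multi-emotion predictions is illustrated by the sketch below. The text does not state which averaging the reported scores use, so the exact-match accuracy and micro-averaged F1 shown here are assumptions, and the labels are hypothetical.

```python
import numpy as np

def multilabel_scores(y_true, y_pred):
    """Return (exact-match accuracy, micro-averaged F1) for multi-hot labels."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    # A sample counts as correct only if every emotion flag matches.
    exact = float(np.mean(np.all(y_true == y_pred, axis=1)))
    tp = int(np.sum(y_true & y_pred))     # emotions correctly detected
    fp = int(np.sum(~y_true & y_pred))    # emotions wrongly detected
    fn = int(np.sum(y_true & ~y_pred))    # emotions missed
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return exact, f1

# Columns: angry, happy, neutral, sad (hypothetical labels).
y_true = [[1, 1, 0, 0], [0, 0, 1, 1]]
y_pred = [[1, 1, 0, 0], [0, 1, 1, 0]]
exact_acc, micro_f1 = multilabel_scores(y_true, y_pred)
```

In this example, the second sample has one emotion right and one wrong, so it counts as an error for exact-match accuracy but still contributes a true positive to F1. Under these assumed definitions, an F1-score higher than the accuracy is therefore expected.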

Regarding the single/multi-emotion detection case, which classifies both single and double emotions, as shown in Figure 15, our model showed consistent accuracy from 75% to 82.7% over SNRs. As expected, its accuracy is lower than in the double-emotion-only detection case because the model must also attend to single emotion detection. In Figure 15, we confirm that the model performance increases with the SNR.

To identify the double emotion detection errors, our confusion matrix in Figure 16 shows that all combination classes achieved more than 77%, and the combination of Neutral and Sad achieved up to 87.25%. Regarding false classification, the Angry/Happy and Angry/Neutral combinations were significantly confused with each other, at rates of up to 14.04% and 9.45%. Also, the combination of Neutral/Sad was falsely classified as Happy/Sad in 9.96% of cases.
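Percentages of this kind are typically read from a row-normalized confusion matrix, where each row is a true class and each entry is the share of that class assigned to a predicted class. The sketch below shows one way to compute it; the function name and the toy class indices are hypothetical, and whether the figures use exactly this normalization is an assumption.

```python
import numpy as np

def confusion_percent(true_idx, pred_idx, n_classes):
    """Row-normalized confusion matrix in percent (rows are true classes)."""
    counts = np.zeros((n_classes, n_classes), dtype=float)
    # Accumulate one count per (true class, predicted class) pair.
    np.add.at(counts, (np.asarray(true_idx), np.asarray(pred_idx)), 1.0)
    row_sums = counts.sum(axis=1, keepdims=True)
    # Guard against empty rows to avoid division by zero.
    return 100.0 * counts / np.where(row_sums == 0, 1.0, row_sums)

# Toy example with two classes.
cm = confusion_percent([0, 0, 0, 0, 1, 1], [0, 0, 0, 1, 1, 0], n_classes=2)
```

The diagonal of `cm` gives the per-class detection rate, and each off-diagonal entry gives the rate at which one class is mistaken for another.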

The single/double emotion detection case is more complex and dynamic. As presented in Figure 17, all classes performed above 73% except the Angry/Happy case with 64.32%, and the Happy and Sad cases recorded up to 93.73% and 95.45%, respectively. The results also tend to show higher accuracy for single emotion classes than for double emotion classes. Regarding false classification, the model also tends to falsely classify a double emotion as a single emotion, as seen in the false classification of Happy/Neutral as Happy in up to 10.89% of cases.

To analyze the incorrectly estimated samples, we conducted an MFCC analysis, which shows that several aspects can cause samples to be wrongly detected. First, as shown in Figure 18, we observed a similar wave pattern at the edge of the image. This wave pattern of the MFCC images may cause the samples to be incorrectly detected. Another factor is that the white spaces in the middle of the images are almost identical. That similarity can result in false emotion detection. These issues are only a few of the various examples that lead the system to inaccurate detection, and other issues need to be investigated in later work.

5. Discussion and Consideration

In this paper, we proposed an autoencoder-based model for multi-emotion detection and were able to classify single and multiple emotions. In addition, our results showed that our proposed model substantially outperformed other models in single-emotion detection and could also detect multiple emotions with consistent accuracy. This result shows the promise of our model for actual mobility applications.

While our model outperformed the others, it is necessary to consider certain challenges and conditions of our work from five perspectives: Detection Objectives, Data Processing, Data Augmentation, Model Design and Implementation, and Noise Impact.

Detection Objectives: For further research, we need to specify the objectives of the applications using emotion detection. Depending on the case, multi-emotion detection is essential, and three or four additional emotion detections are often needed to serve their purposes. In addition to the number of emotions, we must explore the adequate frequency of the updating emotion condition. Since the emotion status is continuous, the frequency of the emotion update must be set to ensure the accurate status of the driver’s emotion. Especially in slow traffic conditions like traffic jams or under construction, driving stress may heavily impact emotional condition and can fluctuate during driving. Therefore, the application needs to have an explicit objective of emotion detection, and these above matters need to be investigated in later work.

Data Processing: The segmentation scheme and the size of the voice sample are another matter: if the voice sample is excessively long, processing delay can occur and real-time recognition in the application becomes impossible. Also, unlike normal conditions, mobility environments are complex and often unexpected, and human response delay can reach up to two seconds [25]. Thus, even short samples matter in the mobility situation.

Data Augmentation: Generating emotion samples for multi-emotion is another factor that needs to be considered in our work. For double emotion samples, we concatenated two pieces of voice data with size control such that the concatenated data had the same length of voice time as the original single emotion case. However, a simple crossover that we just performed is one of the various available options to augment the data. We must consider adding other augmented options for the multi-emotion contained samples to resolve the augmented data bias issues.

Model Design and Implementation: We designed our autoencoder-based model on the assumption that we train it with general computing resources instead of particular hardware. However, for embedded hardware and single-board computers, we may need to optimize our model so that small hardware can run it. In other words, structural modification is essential for adoption on low-performance hardware. Regarding the model design, reducing the complexity of our model is an additional consideration for faster operation and improved efficiency. Regarding hardware implementation, the possibility of operating on small-scale devices such as the NVIDIA Jetson Nano or Raspberry Pi 5 needs to be considered in future work.

Noise Impact: As in Figure 11, our proposed model shows the highest performance against the effect of mobility noises. In addition, our model can detect multiple emotions with high performance, as illustrated in Figure 14. Although our proposed autoencoder model shows high performance, we can observe that mobility noises still affect speech emotion recognition performance. Research on an enhanced model that can denoise more effectively is necessary in future work.

6. Conclusions

In this paper, we proposed and validated an autoencoder-based scheme for speech emotion recognition in mobility services. We tested our model and existing models using data with recorded mobility service noises at various signal-to-noise ratios. To compare performances, we conducted experiments in which all models detected a single emotion for each sample, and our model showed outstanding performance in this comparison. Our model also detected multiple emotions in double-emotion data with remarkable performance since it used a sigmoid activation function in the classifier.

For further research, we need to improve our model to mitigate the false classification of multi-emotions, especially in single/double emotion cases. To improve the model, restructuring it or adding additional models are potential candidates for further research. We also need to develop an accurate method to obtain multi-emotion voice samples and recordings because speech emotion datasets are usually labeled with single emotions, whereas a mixture of multiple emotions usually occurs in the real world. For this particular subject, we may extend this future research to the recording and speech method from an ecological perspective. In addition, since we target our model at the mobility service case, we need to implement our model with an actual microphone and conduct an in-the-wild test to validate the emotion detection service in the mobility device.

Author Contributions

Conceptualization, J.M.O. and J.Y.K.; methodology, J.M.O., J.K.K. and J.Y.K.; software, J.M.O.; validation, J.M.O., J.K.K. and J.Y.K.; formal analysis, J.K.K. and J.Y.K.; investigation, J.M.O.; resources, J.Y.K.; data curation, J.M.O.; writing, J.M.O., J.K.K. and J.Y.K.; visualization, J.Y.K.; supervision, J.Y.K.; project administration, J.Y.K.; funding acquisition, J.Y.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Sungshin Women’s University Research Grant of 2023.

Data Availability Statement

Data are available from the corresponding author upon reasonable request.

Conflicts of Interest

Author Jin Kwan Kim was employed by the company iT-Telecom. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| MFCC | Mel-Frequency Cepstral Coefficient |
| DCT | Discrete Cosine Transform |
| DFT | Discrete Fourier Transform |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| SNR | Signal-to-Noise Ratio |
| ReLU | Rectified Linear Unit |
| TESS | Toronto Emotional Speech Set |
| RAVDESS | Ryerson Audio-Visual Database of Emotional Speech and Song |
| dB | Decibel |
| WAV | Waveform Audio File Format |
| OS | Operating System |
| CPU | Central Processing Unit |
| GPU | Graphics Processing Unit |
| LFLB | Local Feature Learning Block |
| BN | Batch Normalization |
| ELU | Exponential Linear Unit |
| LMS | Log-Mel Spectrogram |

References

1. Medioni, G.; Choi, J.; Labeau, M.; Leksut, J.T.; Meng, L. 3D facial landmark tracking and facial expression recognition. J. Inf. Commun. Converg. Eng. 2013, 11, 207–215.

2. Revina, I.M.; Emmanuel, W.S. A survey on human face expression recognition techniques. J. King Saud Univ. Comput. Inf. Sci. 2021, 33, 619–628.

3. Issa, D.; Demirci, M.F.; Yazici, A. Speech emotion recognition with deep convolutional neural networks. Biomed. Signal Process. Control 2020, 59, 101894.

4. Wagner, J.; Triantafyllopoulos, A.; Wierstorf, H.; Schmitt, M.; Burkhardt, F.; Eyben, F.; Schuller, B.W. Dawn of the transformer era in speech emotion recognition: Closing the valence gap. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10745–10759.

5. Dwivedi, D.; Ganguly, A.; Haragopal, V.V. Contrast between simple and complex classification algorithms. In Statistical Modeling in Machine Learning; Academic Press: Cambridge, MA, USA, 2023; pp. 93–110.

6. Bui, K.-H.N.; Oh, H.; Yi, H. Traffic density classification using sound datasets: An empirical study on traffic flow at asymmetric roads. IEEE Access 2020, 8, 125671–125679.

7. Sreenivasa, R.K.; Shashidhar, G. Robust Emotion Recognition Using Spectral and Prosodic Features; Springer Briefs in Speech Technology; Springer: New York, NY, USA, 2013.

8. Ganchev, T.; Fakotakis, N.; Kokkinakis, G. Comparative evaluation of various MFCC implementations on the speaker verification task. In Proceedings of the SPECOM, Patras, Greece, 17–19 October 2005; Volume 1, pp. 191–194.

9. Wang, J.; Xue, M.; Culhane, R.; Diao, E.; Ding, J.; Tarokh, V. Speech emotion recognition with dual-sequence LSTM architecture. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6474–6478.

10. Zhao, J.; Mao, X.; Chen, L. Speech emotion recognition using deep 1D and 2D CNN LSTM networks. Biomed. Signal Process. Control 2019, 47, 312–323.

11. Anvarjon, T.; Mustaqeem; Kwon, S. Deep-net: A lightweight CNN-based speech emotion recognition system using deep frequency features. Sensors 2020, 20, 5212.

12. Cai, X.; Yuan, J.; Zheng, R.; Huang, L.; Church, K. Speech emotion recognition with multi-task learning. In Proceedings of the Interspeech, Brno, Czech Republic, 30 August–3 September 2021; Volume 2021, pp. 4508–4512.

13. Gondara, L. Medical image denoising using convolutional denoising autoencoders. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW), Barcelona, Spain, 12–15 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 241–246.

14. Pichora-Fuller, M.K.; Dupuis, K. Toronto Emotional Speech Set (TESS). Borealis, V1. 2020. Available online: https://borealisdata.ca/dataset.xhtml?persistentId=doi%3A10.5683%2FSP2%2FE8H2MF (accessed on 6 May 2025).

15. Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A dynamic, multimodal set of facial and vocal expressions in North American English. PLoS ONE 2018, 13, e0196391.

16. Freudenberger, J.; Stenzel, S.; Venditti, B. Microphone diversity combining for in-car applications. EURASIP J. Adv. Signal Process. 2010, 2010, 509541.

17. Alkaher, Y.; Cohen, I. Dual-microphone speech reinforcement system with howling-control for in-car speech communication. Front. Signal Process. 2022, 2, 819113.

18. Wechsler, J.; Chetupalli, S.R.; Mack, W.; Habets, E.A.P. Multi-microphone speaker separation by spatial regions. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

19. Tchorz, J.; Kollmeier, B. SNR estimation based on amplitude modulation analysis with applications to noise suppression. IEEE Trans. Speech Audio Process. 2003, 11, 184–192.

20. McLoughlin, I.V. Super-audible voice activity detection. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1424–1433.

21. Pittman, A.L.; Wiley, T.L. Recognition of speech produced in noise. J. Speech Lang. Hear. Res. 2001, 44, 487–496.

22. Burkhardt, F.; Paeschke, A.; Rolfes, M.; Sendlmeier, W.F.; Weiss, B. A database of German emotional speech. In Proceedings of the Interspeech, Lisbon, Portugal, 4–8 September 2005; Volume 5, pp. 1517–1520.

23. Busso, C.; Bulut, M.; Lee, C.-C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359.

24. Park, S.; Jeon, B.; Lee, S.; Yoon, J. Multi-Label Emotion Recognition of Korean Speech Data Using Deep Fusion Models. Appl. Sci. 2024, 14, 7604.

25. Kim, J.Y.; Lee, J.W.; Kim, J.; Lee, Y. Driver HMI Service Scenario and Blueprint Development for C-ITS. J. Korea Inst. Inf. Commun. Eng. 2023, 27, 782–795.

Figure 1. Mobility scenarios in speech emotion recognition.

Figure 2. System model of speech emotion recognition.

Figure 3. The architecture of Dual-Sequence LSTM.

Figure 4. The architecture of the LFLB network.

Figure 5. The brief architecture of Deep-Net.

Figure 6. The basic architecture of speech emotion recognition with multi-task learning [12].

Figure 7. The basic architecture of our proposed autoencoder model for multi-emotion detection.

Figure 8. The detailed layer structure of our proposed model.

Figure 9. Experiment setup of mobility noise recording and location.

Figure 10. Experimental results of our noise recording in linear and dB scales. Note that most of the noise lies below 1000 Hz and the noise in the rest of the frequency band is negligible.

Figure 11. Performance comparison of accuracy among models for single emotion detection at epoch = 100.

Figure 12. Performance comparison of F1-score among models for single emotion detection at epoch = 100.

Figure 13. Convergence of accuracy among models over epochs with the clean dataset. Note that all models converge rapidly, as shown in the red box, owing to the size of the dataset.

Figure 14. Performance of double emotion detection model.

Figure 15. Performance of multi-emotion detection model.

Figure 16. Confusion matrix (colormap) for multi-emotion detection.

Figure 17. Confusion matrix (colormap) for single/multi-emotion detection.

Figure 18. MFCC comparison between a Happy/Neutral sample (incorrectly predicted as Happy) and a Happy sample (correctly predicted).

Table 1. Speech Dataset Characteristics.

| Dataset | Metric | Angry | Happy | Neutral | Sad |
|---|---|---|---|---|---|
| TESS (Older Talker) | Count | 200 | 200 | 200 | 200 |
| TESS (Older Talker) | Avg. Length (s) | 1.5695 | 2.0036 | 2.020 | 2.5358 |
| TESS (Younger Talker) | Count | 200 | 200 | 200 | 200 |
| TESS (Younger Talker) | Avg. Length (s) | 2.1131 | 1.9423 | 2.0892 | 2.2684 |
| RAVDESS | Count | 192 | 192 | 96 | 192 |
| RAVDESS | Avg. Length (s) | 3.8714 | 3.6382 | 3.5032 | 3.6945 |

Table 2. Emotion Data Sample Count for Training and Testing.

| Single Emotion Type | Sample # | Multi-Emotion Type | Sample # |
|---|---|---|---|
| | | Angry and Happy | 1500 |
| Angry | 592 | Angry and Neutral | 1500 |
| Happy | 592 | Angry and Sad | 1500 |
| Neutral | 496 | Happy and Neutral | 1500 |
| Sad | 592 | Happy and Sad | 1500 |
| | | Neutral and Sad | 1500 |
| Total # | 2272 | Total # | 9000 |
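
The counts in Table 2 follow from simple combinatorics: four emotion classes give six unordered pairs, and 1500 samples per pair give 9000 multi-emotion samples. The sketch below reproduces that arithmetic and shows one plausible way to encode a pair as a multi-hot target vector. The `multi_hot` helper and the label ordering are illustrative assumptions, not the authors' implementation.

```python
from itertools import combinations

EMOTIONS = ["Angry", "Happy", "Neutral", "Sad"]  # the four classes listed in Table 2
SAMPLES_PER_PAIR = 1500                            # per-pair count reported in Table 2
SINGLE_COUNTS = {"Angry": 592, "Happy": 592, "Neutral": 496, "Sad": 592}


def multi_hot(labels, classes=EMOTIONS):
    """Encode a set of emotion names as a 0/1 vector over `classes` (hypothetical helper)."""
    return [1 if c in labels else 0 for c in classes]


# All unordered pairs of distinct emotions, in the same order as Table 2.
pairs = list(combinations(EMOTIONS, 2))
total_multi = len(pairs) * SAMPLES_PER_PAIR
total_single = sum(SINGLE_COUNTS.values())

for pair in pairs:
    print(" and ".join(pair), multi_hot(pair))
print("single-emotion total:", total_single)
print("pairs:", len(pairs), "multi-emotion total:", total_multi)
```

Under this encoding a single-emotion sample has exactly one active label and a double-emotion sample has two, so one output layer with four independent units could serve both cases.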

Table 3. Experiment properties and settings for Speech Emotion Recognition Experiments.

| Parameters | Environment 1 | Environment 2 |
|---|---|---|
| OS | Ubuntu 22.04 | Ubuntu 22.04 |
| Types | Cloud | Local Server |
| Python | 3.10 | 3.9 |
| TensorFlow (incl. Keras) | 2.15 | 2.19 |
| CUDA | 12.8 | 12.2 |
| CPU | 24 cores, 2.1 GHz | 16 cores, 2.1 GHz |
| GPU | NVIDIA H100 | NVIDIA RTX 3080 |
| Batch size | 16 (Single Emotion), 32 (Multi-Emotion) | 16 (Single Emotion), 32 (Multi-Emotion) |
| SNR (dB) | −20, −15, −10, −5, 0, +5, +10 | −20, −15, −10, −5, 0, +5, +10 |
| Epoch | 1–100 | 1–100 |
| Types of Emotions | Angry, Happy, Sad, Neutral | Angry, Happy, Sad, Neutral |
| Sample Noise Record | Genesis G80/Galaxy S20 at the top of the center fascia | Genesis G80/Galaxy S20 at the top of the center fascia |
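
The SNR grid in Table 3 implies that recorded noise is scaled relative to each speech clip before the two are mixed. A minimal sketch of that scaling follows, assuming the usual definition SNR = 10·log10(P_speech / P_noise) with power taken as mean squared amplitude. The function names and the synthetic sine/Gaussian signals are stand-ins for illustration only; the authors' actual mixing procedure is not specified in this section.

```python
import math
import random


def power(x):
    """Mean squared amplitude of a sequence of samples."""
    return sum(v * v for v in x) / len(x)


def mix_at_snr(speech, noise, target_db):
    """Scale `noise` so the speech-to-noise power ratio equals `target_db`, then add it."""
    gain = math.sqrt(power(speech) / (power(noise) * 10 ** (target_db / 10)))
    return [s + gain * n for s, n in zip(speech, noise)], gain


def measured_snr_db(speech, scaled_noise):
    """SNR in dB between a clean signal and the noise actually added to it."""
    return 10 * math.log10(power(speech) / power(scaled_noise))


random.seed(0)
# Synthetic stand-ins: a 220 Hz tone for speech, white Gaussian noise for cabin noise.
speech = [math.sin(2 * math.pi * 220 * t / 16000) for t in range(16000)]
noise = [random.gauss(0.0, 1.0) for _ in range(16000)]

for target in (-20, -15, -10, -5, 0, 5, 10):  # SNR grid from Table 3
    mixed, gain = mix_at_snr(speech, noise, target)
    achieved = measured_snr_db(speech, [gain * n for n in noise])
    print(f"target {target:+d} dB -> achieved {achieved:+.2f} dB (noise gain {gain:.4f})")
```

At −20 dB the scaled noise carries 100 times the power of the speech, which gives a sense of how demanding the lowest settings in the grid are.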

Table 4. Ablation Study for Models, Noise Level, and Dataset Combination at Epoch = 100.

| Model | SNR | Emotion Types | Dataset | Accuracy | F1-Score |
|---|---|---|---|---|---|
| Dual-LSTM | Clean | Single | Clean Only | 0.9868 | 0.9867 |
| LFLB | Clean | Single | Clean Only | 0.9846 | 0.9846 |
| Deep-Net | Clean | Single | Clean Only | 0.9560 | 0.9575 |
| Multi-Task Learning | Clean | Single | Clean Only | 0.9824 | 0.9824 |
| AE (Dropout: 0.1) | Clean | Single | Clean Only | 0.9912 | 0.9911 |
| AE (Dropout: 0.2) | Clean | Single | Clean Only | 0.9912 | 0.9911 |
| Dual-LSTM | −10 dB | Single | Clean Only | 0.3472 | 0.2609 |
| LFLB | −10 dB | Single | Clean Only | 0.3098 | 0.1979 |
| Deep-Net | −10 dB | Single | Clean Only | 0.2153 | 0.1576 |
| Multi-Task Learning | −10 dB | Single | Clean Only | 0.4197 | 0.3752 |
| AE (Dropout: 0.1) | −10 dB | Single | Clean Only | 0.6857 | 0.6894 |
| AE (Dropout: 0.2) | −10 dB | Single | Clean Only | 0.6725 | 0.6484 |
| AE (Dropout: 0.1) | −10 dB | Multi | Clean Only | 0.2133 | 0.4873 |
| AE (Dropout: 0.2) | −10 dB | Multi | Clean Only | 0.1811 | 0.4233 |
| AE (Dropout: 0.1) | −10 dB | Multi | Clean/Noisy | 0.7516 | 0.8802 |
| AE (Dropout: 0.2) | −10 dB | Multi | Clean/Noisy | 0.7622 | 0.8823 |
| AE (Dropout: 0.1) | Clean | Single/Multi | Clean/Noisy | 0.8317 | 0.9242 |
| AE (Dropout: 0.2) | Clean | Single/Multi | Clean/Noisy | 0.8190 | 0.9163 |
| AE (Dropout: 0.1) | −10 dB | Single/Multi | Clean/Noisy | 0.7713 | 0.8945 |
| AE (Dropout: 0.2) | −10 dB | Single/Multi | Clean/Noisy | 0.7779 | 0.8945 |

Table 5. Performance Results of Multi-Emotion and Single/Multi-Emotion Detection over SNR with Dropout rate 0.1.

| SNR | Multi-Emotion Accuracy | Multi-Emotion F1-Score | Single/Multi-Emotion Accuracy | Single/Multi-Emotion F1-Score |
|---|---|---|---|---|
| −20 dB | 0.6766 | 0.8413 | 0.6984 | 0.8508 |
| −15 dB | 0.7383 | 0.8719 | 0.7436 | 0.8749 |
| −10 dB | 0.7516 | 0.8802 | 0.7713 | 0.8945 |
| −5 dB | 0.7705 | 0.8886 | 0.7893 | 0.9026 |
| 0 dB | 0.7905 | 0.8982 | 0.8052 | 0.9126 |
| 5 dB | 0.7838 | 0.8959 | 0.8121 | 0.9167 |
| 10 dB | 0.7944 | 0.9001 | 0.8121 | 0.9173 |
| Clean | 0.8134 | 0.9128 | 0.8317 | 0.9242 |
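
In Table 5 the F1-score is consistently higher than the accuracy. One plausible reading, assuming accuracy is an exact-match (subset) score over the full label vector and F1 is micro-averaged over individual labels, is that a sample with one of its two emotions predicted correctly counts as a full miss for accuracy but still contributes a true positive to F1. The metric definitions and the toy labels below are assumptions for illustration and are not taken from the paper.

```python
def subset_accuracy(y_true, y_pred):
    """Fraction of samples whose full label vector is predicted exactly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def micro_f1(y_true, y_pred):
    """F1 from label-level true/false positives pooled over all samples."""
    tp = fp = fn = 0
    for t_vec, p_vec in zip(y_true, y_pred):
        for t, p in zip(t_vec, p_vec):
            tp += int(t == 1 and p == 1)
            fp += int(t == 0 and p == 1)
            fn += int(t == 1 and p == 0)
    return 2 * tp / (2 * tp + fp + fn)


# Label order: [Angry, Happy, Neutral, Sad]. Toy data only.
y_true = [[0, 1, 1, 0], [1, 0, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1]]
# The first sample mirrors the Figure 18 case: Happy/Neutral predicted as Happy.
y_pred = [[0, 1, 0, 0], [1, 0, 0, 1], [1, 1, 0, 0], [0, 0, 1, 1]]

print("subset accuracy:", subset_accuracy(y_true, y_pred))
print("micro F1:", round(micro_f1(y_true, y_pred), 4))
```

With these toy labels the subset accuracy is 0.75 while the micro F1 is about 0.9333, the same direction of gap seen in the table.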

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).