A Systematic Analysis of Security Metrics for Industrial Cyber–Physical Systems

Abstract

1. Introduction

2. State of the Art

- Automatic generation: Used by Ani et al. [14], who propose a framework that generates specific security metrics after a preliminary study of the context and the security objectives of the target field.

- Multivocal literature review (MLR): Used by Fernandez et al. [15], it consists of exploring both academic and gray literature through a snowballing process and filtering the results with a multi-step approach.

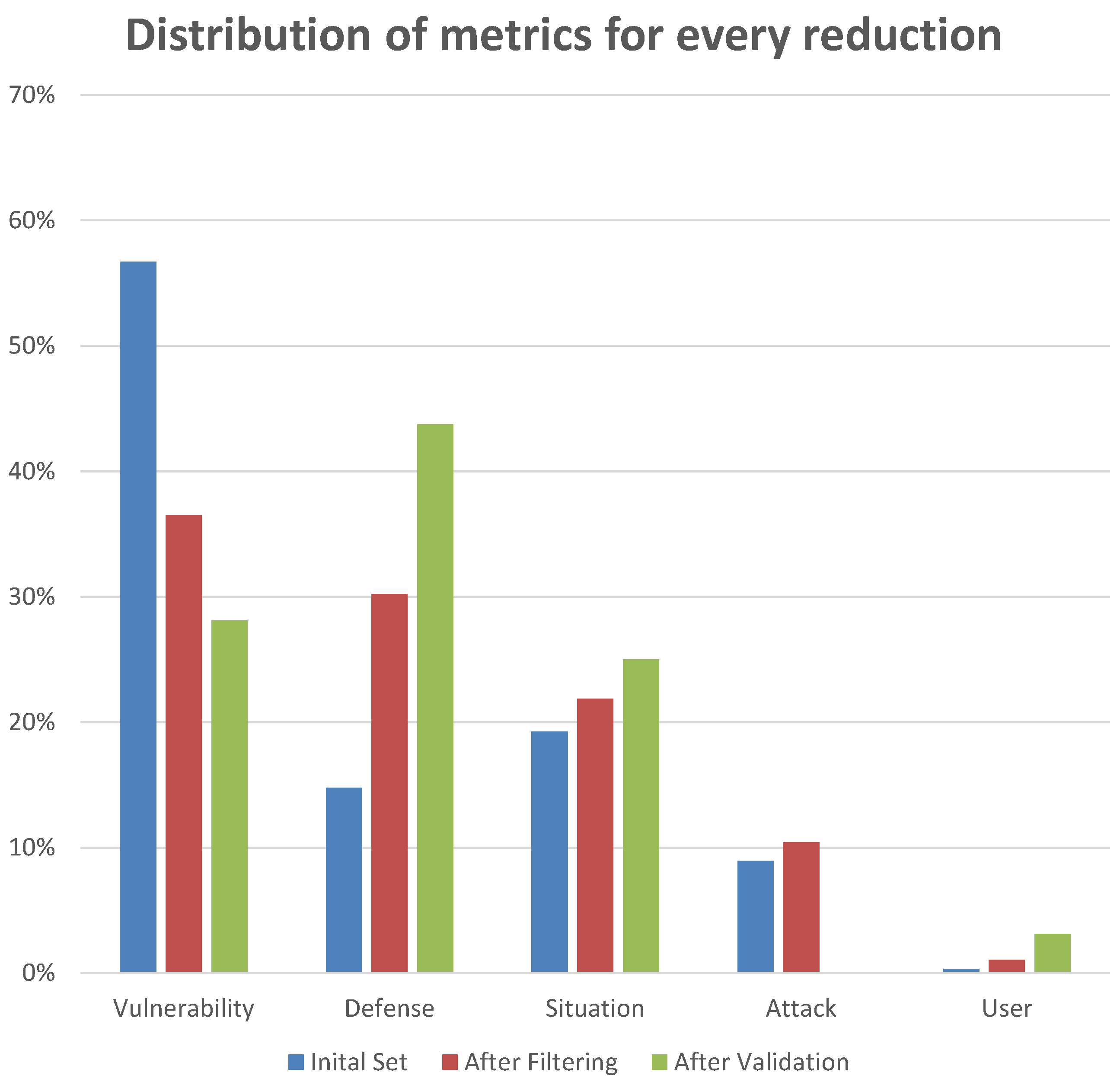

- Defense metrics: These metrics assess the strength and effort required to implement defense mechanisms within a system. They encompass the evaluation of preventive, reactive, and proactive defenses, as explored in [17].

- Vulnerability metrics: These metrics gauge system vulnerabilities, encompassing user vulnerabilities, interface-induced vulnerabilities, and software vulnerabilities. Examples include password vulnerabilities, attack surface [18], and software vulnerabilities, as documented by the Common Vulnerability Scoring System (CVSS) (https://nvd.nist.gov/vuln-metrics/cvss, accessed on 19 March 2024).

- Attack metrics: These metrics quantify the strength of performed cyberattacks. Unlike the previous categories that assess the security level via configuration and device analysis, attack metrics concentrate on measuring and analyzing cyberattacks and threats. They are crucial for risk assessment, evaluating the success of security measures, and guiding resource allocation. Examples include network bandwidth used by a botnet for launching denial-of-service attacks, the occurrence of obfuscation in malware samples, or the runtime complexity of packers measured in the number of layers or granularity [19].

- Situation metrics: Focusing on the security state of a system, situation metrics are time-dependent and dynamically evolve based on attack–defense interactions. Examples include metrics based on the frequency of security incidents or those related to investment in security improvement [20]. They are further categorized into data-driven metrics, such as the network maliciousness metric [21], and model-driven metrics, such as the fraction of compromised computers.
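As an illustration of a data-driven situation metric, the network maliciousness metric [21] is described later in this article as estimating the fraction of blacklisted IP addresses in a network. The following is a minimal sketch under stated assumptions: the function name `network_maliciousness`, the choice of a CIDR prefix as the unit of "network", and the sample addresses are all hypothetical and are not taken from [21].

```python
import ipaddress
from typing import Iterable


def network_maliciousness(network_cidr: str, blacklisted: Iterable[str]) -> float:
    """Fraction of the addresses in `network_cidr` that appear on a blacklist.

    Assumption: the denominator is the full size of the prefix.
    """
    network = ipaddress.ip_network(network_cidr)
    # Deduplicate so that an address listed twice is counted only once.
    unique = {ipaddress.ip_address(addr) for addr in blacklisted}
    listed_in_network = sum(1 for addr in unique if addr in network)
    return listed_in_network / network.num_addresses


# Hypothetical data using documentation prefixes, not real measurements.
blacklist = ["192.0.2.10", "192.0.2.20", "198.51.100.7", "192.0.2.10"]
score = network_maliciousness("192.0.2.0/24", blacklist)
print(f"{score:.4f}")  # 2 listed addresses out of 256 -> 0.0078
```

Because the value depends on the current blacklist contents, it changes over time, which is what makes it a situation metric rather than a static property of the configuration.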

- The measurability of the properties involved, which should be consistently accessible.

- The feasibility and potential for automated data collection, taking into account associated costs.

- The methodology for quantifying the metric, such as using cardinal numbers or percentages.

- The establishment of units for measurement.

- Well-defined, i.e., it measures components of the security level and is meaningful, objective, unbiased, and complete, so that it misses no aspect of the definition needed to be effective, while not being too expensive to evaluate;

- Progressive, i.e., the metric expresses a value or set of values that coherently evolve together with the actual level of security so that progress towards an acceptable value of the metric is an indicator of improved security level;

- Strongly or weakly reproducible, i.e., it can be used in different environments and still produce comparable results.

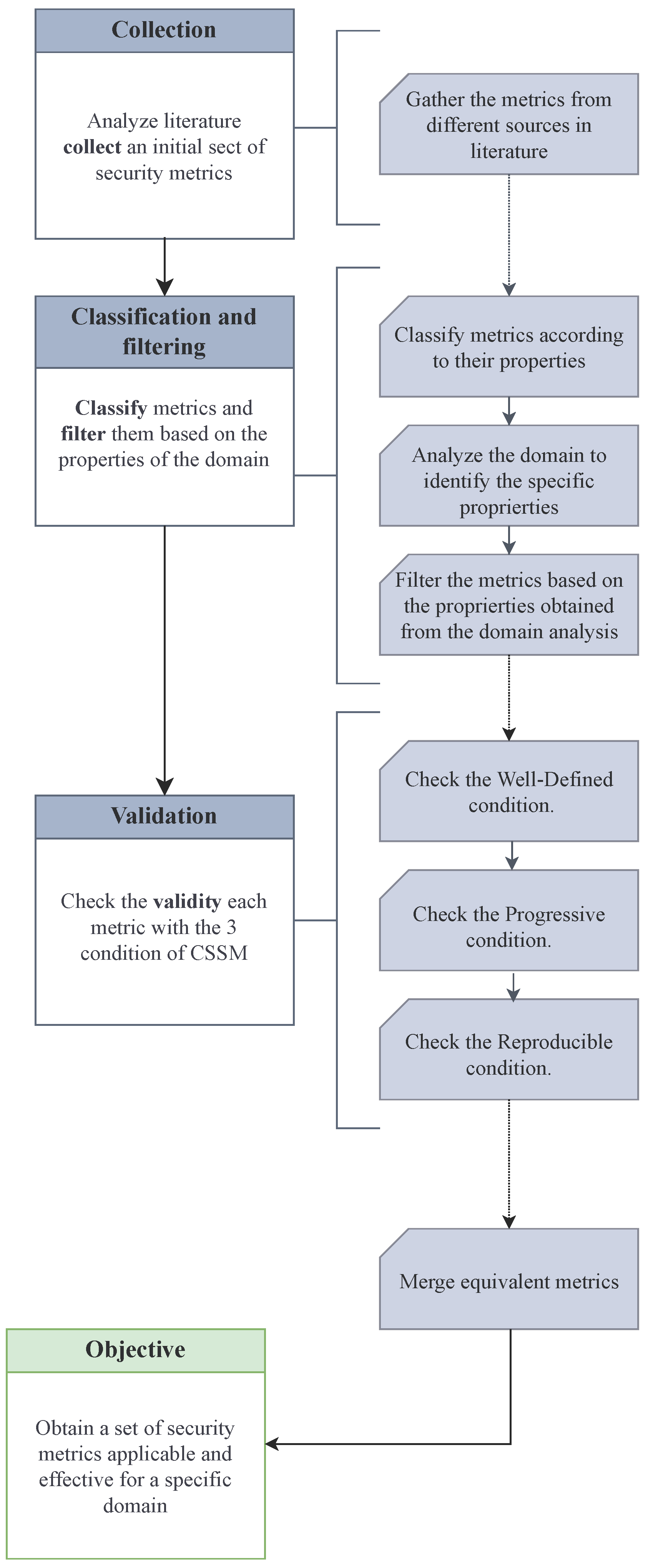

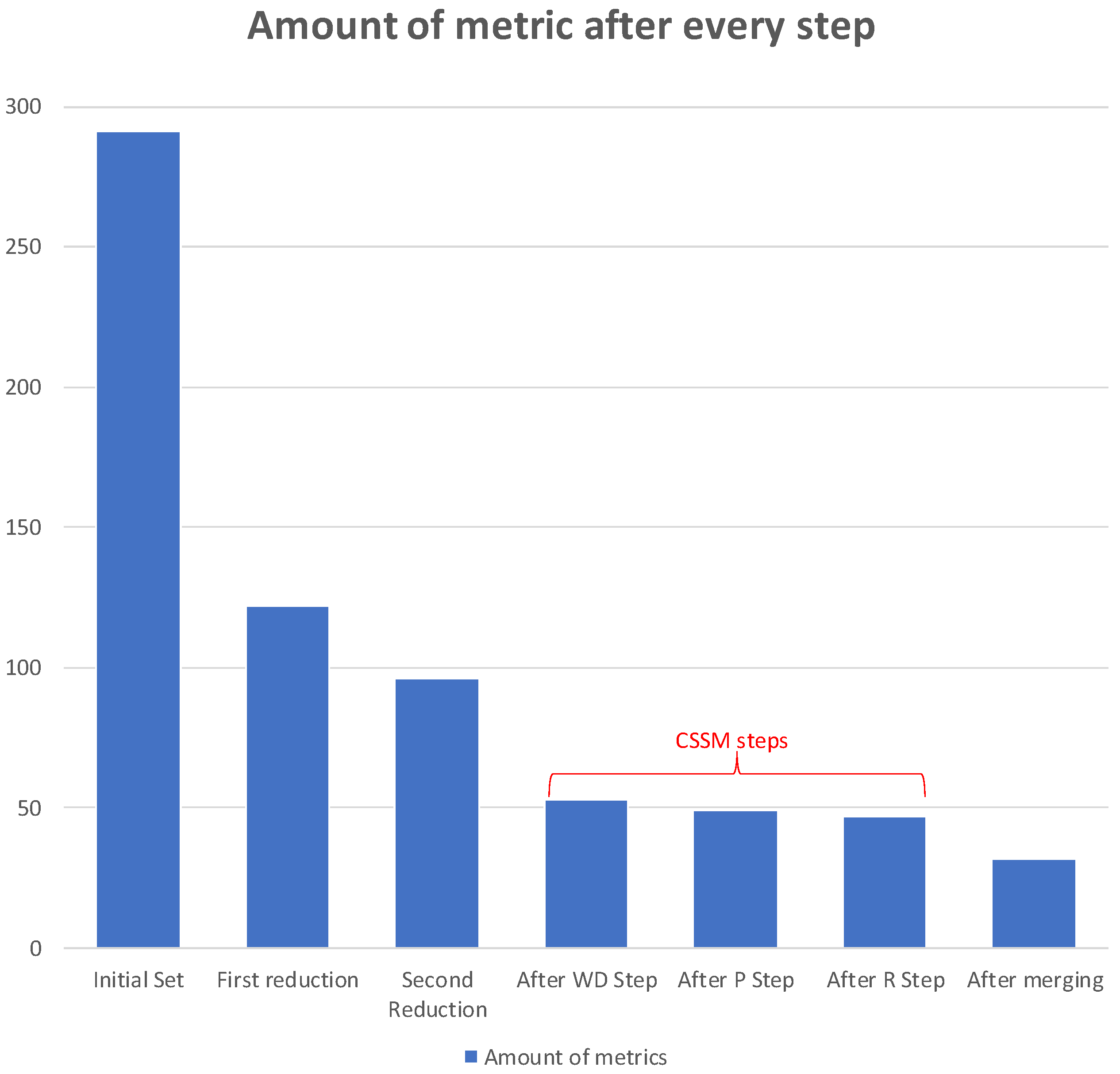

3. Selection Methodology

| Algorithm 1 An algorithmic view on the procedure to obtain the filtered and validated set of security metrics. |
|---|
| procedure Obtaining-Metrics |
| ▹ collection |
| ▹ classification (one while loop) |
| ▹ filtering (one while loop with a conditional step) |
| ▹ validation (one while loop with a conditional step) |
| ▹ merge (two nested while loops with two nested conditional steps) |
| end procedure |
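The five phases named in Algorithm 1 (collection, classification, filtering, validation, merge) can be illustrated with a short pipeline. This is a sketch under stated assumptions, not a reconstruction of Algorithm 1: the function `obtain_metrics`, the dictionary-based metric records, the callbacks, and in particular the merge rule (treating metrics with the same case-insensitive name as duplicates) are hypothetical choices made for illustration.

```python
from typing import Callable, Dict, Iterable, List

Metric = Dict[str, str]  # hypothetical record: attribute name -> value


def obtain_metrics(
    sources: Iterable[Iterable[Metric]],
    classify: Callable[[Metric], Metric],
    passes_filter: Callable[[Metric], bool],
    passes_validation: Callable[[Metric], bool],
) -> List[Metric]:
    # Collection: gather every metric from every source.
    collected = [metric for source in sources for metric in source]
    # Classification: label each metric with its attributes.
    classified = [classify(metric) for metric in collected]
    # Filtering: keep the metrics that match the domain criteria.
    filtered = [m for m in classified if passes_filter(m)]
    # Validation: keep the metrics that pass the soundness checks.
    validated = [m for m in filtered if passes_validation(m)]
    # Merge (assumed rule): same case-insensitive name = same metric.
    merged: Dict[str, Metric] = {}
    for metric in validated:
        merged.setdefault(metric["name"].strip().lower(), metric)
    return list(merged.values())


# Hypothetical inputs; none of these names come from the surveyed papers.
scopes = {"metric-a": "network", "metric-b": "network", "metric-c": "organization"}
sources = [
    [{"name": "metric-a"}, {"name": "metric-b"}],
    [{"name": "Metric-A"}, {"name": "metric-c"}],
]
result = obtain_metrics(
    sources,
    classify=lambda m: {**m, "scope": scopes[m["name"].lower()]},
    passes_filter=lambda m: m["scope"] in {"network", "device", "system", "user"},
    passes_validation=lambda m: m["name"].lower() == "metric-a",
)
print([m["name"] for m in result])  # ['metric-a']
```

Passing the classification, filtering, and validation steps as callbacks keeps the pipeline independent of any one domain, so the same skeleton could be reused with criteria other than those chosen for ICPSs.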

- A name, which conveys the meaning of the metric in a few words.

- A definition, which describes the metric and what it measures.

- A meaning, which summarizes what the metric objectively measures and why it is useful.

- A weakness, which lists requirements, possible problems, or critical issues related to the metric, e.g., the need for external support to calculate it.

- A scope, which is the field on which the metric focuses, e.g., network, device, user, organization, or system.

- A result type, which is quantitative if the metric yields a numerical result or qualitative if the result is descriptive and discrete (e.g., bad, normal, good).

- An automation field, which divides metrics into automatic, where the computation can be performed without human intervention, and manual, where humans are required.

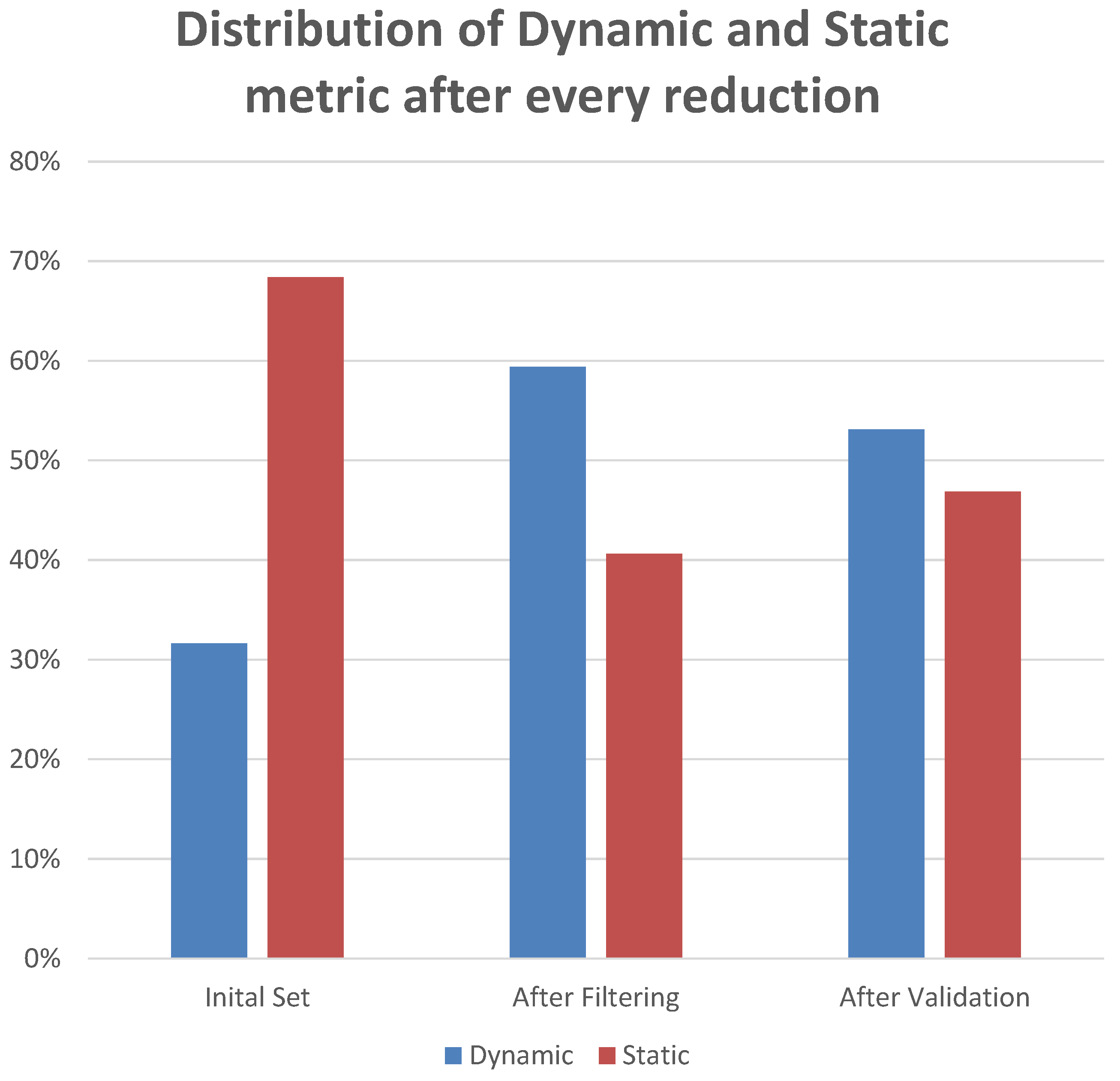

- A measurement field, which is dynamic if the metric changes at runtime or static if it changes only with a new configuration.

- A construction, which is modeled if the metric needs a model to be computed, e.g., an attack graph [29], or measured if it is a simple calculation that can be executed directly without models.
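The nine attributes listed above map naturally onto a typed record. The following is a minimal sketch: the class and field names are hypothetical, and the enumeration values reuse the labels that appear in the results table of this article (`auto`/`manual`, `static`/`dynamic`, `model`/`measure`). The categorical values of the example are copied from the "Attack surface" row of that table; the three free-text fields are placeholders, not the authors' wording.

```python
from dataclasses import dataclass
from enum import Enum


class ResultType(Enum):
    QUANTITATIVE = "quantitative"
    QUALITATIVE = "qualitative"


class Automation(Enum):
    AUTOMATIC = "auto"
    MANUAL = "manual"


class Measurement(Enum):
    DYNAMIC = "dynamic"
    STATIC = "static"


class Construction(Enum):
    MODELED = "model"
    MEASURED = "measure"


@dataclass(frozen=True)
class SecurityMetric:
    name: str
    definition: str
    meaning: str
    weakness: str
    scope: str
    result_type: ResultType
    automation: Automation
    measurement: Measurement
    construction: Construction


attack_surface = SecurityMetric(
    name="Attack surface",
    definition="<placeholder: free-text definition>",
    meaning="<placeholder: free-text meaning>",
    weakness="<placeholder: free-text weakness>",
    scope="network",
    result_type=ResultType("quantitative"),
    automation=Automation("auto"),
    measurement=Measurement("static"),
    construction=Construction("model"),
)
print(attack_surface.construction.name)  # MODELED
```

Using enumerations for the four categorical attributes means that a misspelled label such as `"automatic"` raises a `ValueError` at load time instead of silently creating a new category.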

4. Application: Metrics Selection for ICPSs

4.1. Metrics Classification

- Initially, they chose the data sources for the metrics, namely conference proceedings and academic journals from IEEE Xplore, Elsevier, ACM Digital Library, Springer, and the Google Scholar search engine.

- Then, they gathered the metrics and labeled them with definition, scale, scope, automation, and measurement attributes.

- Finally, they filtered the metrics.

4.2. Domain Analysis

4.3. Metrics Filtering

- The definition of the metric must be applicable to IT or OT networks, components, protocols, and devices.

- The meaning attribute of the metric must explicitly declare an objective measurement related to at least one of the security properties of confidentiality, integrity, or availability.

- The weakness attribute of the metric must be related to problems, issues, or requirements that can be resolved inside the ICPS domain.

- The scope attribute must be of the type “network”, “device”, “system”, or “user”.
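The four inclusion criteria above can be expressed as a single predicate. This is a sketch under stated assumptions: only the scope and the security-property criteria are mechanically checkable, so the other two are modeled as boolean flags recording a reviewer's judgment. The class `Candidate`, its field names, and both example records are hypothetical.

```python
from dataclasses import dataclass

ALLOWED_SCOPES = frozenset({"network", "device", "system", "user"})
CIA_PROPERTIES = frozenset({"confidentiality", "integrity", "availability"})


@dataclass(frozen=True)
class Candidate:
    name: str
    scope: str
    security_properties: frozenset     # properties named by the meaning attribute
    applies_to_it_or_ot: bool          # reviewer judgment on the definition
    weakness_resolvable_in_icps: bool  # reviewer judgment on the weakness


def matches_inclusion_criteria(metric: Candidate) -> bool:
    """Return True only if all four criteria hold."""
    return (
        metric.applies_to_it_or_ot
        and bool(metric.security_properties & CIA_PROPERTIES)
        and metric.weakness_resolvable_in_icps
        and metric.scope in ALLOWED_SCOPES
    )


# Hypothetical candidates, not taken from the surveyed literature.
in_scope = Candidate("example-a", "network", frozenset({"availability"}), True, True)
out_of_scope = Candidate("example-b", "organization", frozenset({"integrity"}), True, True)

print(matches_inclusion_criteria(in_scope))      # True
print(matches_inclusion_criteria(out_of_scope))  # False
```

Keeping the two judgment-based criteria as explicit fields makes each reviewer decision visible in the record, so a rejected metric can be traced back to the criterion it failed.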

4.4. Results

5. Discussion and Limitations

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| CIA | Confidentiality, integrity, availability |

| CPS | Cyber–physical system |

| CSSM | Conditions for Sound Security Metrics |

| CVSS | Common Vulnerability Scoring System |

| DoS | Denial of service |

| HMI | Human–machine interface |

| IC | Inclusion criteria |

| ICPS | Industrial cyber–physical system |

| IT | Information technology |

| MLR | Multivocal literature review |

| OT | Operational technology |

| P | Progressive |

| PLC | Programmable logic controller |

| R | Reproducible |

| SCADA | Supervisory Control And Data Acquisition |

| WD | Well-defined |

References

1. Azuwa, M.; Ahmad, R.; Sahib, S.; Shamsuddin, S. Technical security metrics model in compliance with ISO/IEC 27001 standard. Int. J. Cyber-Secur. Digit. Forensics 2012, 1, 280–288.

2. Chew, E.; Swanson, M.; Stine, K.M.; Bartol, N.; Brown, A.; Robinson, W. SP 800-55 Rev. 1. Performance Measurement Guide for Information Security; NIST: Gaithersburg, MD, USA, 2008.

3. Tran, J.L. Navigating the Cybersecurity Act of 2015. Chap. L. Rev. 2016, 19, 483.

4. Philippou, E.; Frey, S.; Rashid, A. Contextualising and aligning security metrics and business objectives: A GQM-based methodology. Comput. Secur. 2020, 88, 101634.

5. Wang, L.; Jajodia, S.; Singhal, A. Network Security Metrics; Springer: Berlin/Heidelberg, Germany, 2017.

6. Longueira-Romero, Á.; Iglesias, R.; Gonzalez, D.; Garitano, I. How to quantify the security level of embedded systems? A taxonomy of security metrics. In Proceedings of the 2020 IEEE 18th International Conference on Industrial Informatics (INDIN), Warwick, UK, 20–23 July 2020; IEEE: New York, NY, USA, 2020; Volume 1, pp. 153–158.

7. Doran, G.T. There’s a SMART way to write management’s goals and objectives. Manag. Rev. 1981, 70, 35–36.

8. Brotby, W.K.; Hinson, G. Pragmatic Security Metrics: Applying Metametrics to Information Security; CRC Press: Boca Raton, FL, USA, 2013.

9. Savola, R.M. Quality of security metrics and measurements. Comput. Secur. 2013, 37, 78–90.

10. Pendleton, M.; Garcia-Lebron, R.; Cho, J.H.; Xu, S. A survey on systems security metrics. ACM Comput. Surv. (CSUR) 2016, 49, 1–35.

11. Hauet, J.P. ISA99/IEC 62443: A solution to cyber-security issues? In Proceedings of the ISA Automation Conference, Jeju Island, Republic of Korea, 17–21 October 2012.

12. Sultan, K.; En-Nouaary, A.; Hamou-Lhadj, A. Catalog of metrics for assessing security risks of software throughout the software development life cycle. In Proceedings of the 2008 International Conference on Information Security and Assurance (ISA 2008), Busan, Republic of Korea, 24–26 April 2008; IEEE: New York, NY, USA, 2008; pp. 461–465.

13. Morrison, P.; Moye, D.; Pandita, R.; Williams, L. Mapping the field of software life cycle security metrics. Inf. Softw. Technol. 2018, 102, 146–159.

14. Ani, U.P.D.; He, H.; Tiwari, A. A framework for Operational Security Metrics Development for industrial control environment. J. Cyber Secur. Technol. 2018, 2, 201–237.

15. Fernández-Alemán, J.L.; Señor, I.C.; Lozoya, P.Á.O.; Toval, A. Security and privacy in electronic health records: A systematic literature review. J. Biomed. Inform. 2013, 46, 541–562.

16. Sundararajan, A.; Sarwat, A.I.; Pons, A. A survey on modality characteristics, performance evaluation metrics, and security for traditional and wearable biometric systems. ACM Comput. Surv. (CSUR) 2019, 52, 39.

17. Hong, J.B.; Enoch, S.Y.; Kim, D.S.; Nhlabatsi, A.; Fetais, N.; Khan, K.M. Dynamic security metrics for measuring the effectiveness of moving target defense techniques. Comput. Secur. 2018, 79, 33–52.

18. Manadhata, P.K.; Wing, J.M. An attack surface metric. IEEE Trans. Softw. Eng. 2010, 37, 371–386.

19. Roundy, K.A.; Miller, B.P. Binary-code obfuscations in prevalent packer tools. ACM Comput. Surv. (CSUR) 2013, 46, 1–32.

20. Zhan, Z.; Xu, M.; Xu, S. A characterization of cybersecurity posture from network telescope data. In Proceedings of the Trusted Systems: 6th International Conference, INTRUST 2014, Beijing, China, 16–17 December 2014; Revised Selected Papers 6. Springer: Berlin/Heidelberg, Germany, 2015; pp. 105–126.

21. Zhang, J.; Durumeric, Z.; Bailey, M.; Liu, M.; Karir, M. On the Mismanagement and Maliciousness of Networks. In Proceedings of the NDSS, San Diego, CA, USA, 23–26 February 2014; Volume 14, pp. 23–26.

22. Ahmed, R.K.A. Security metrics and the risks: An overview. Int. J. Comput. Trends Technol. 2016, 41, 106–112.

23. Yee, G.O. Improving the Derivation of Sound Security Metrics. In Proceedings of the 2022 IEEE 46th Annual Computers, Software, and Applications Conference (COMPSAC), Los Alamitos, CA, USA, 27 June–1 July 2022; IEEE: New York, NY, USA, 2022; pp. 1804–1809.

24. Shostack, A. Threat Modeling: Designing for Security; John Wiley & Sons: Hoboken, NJ, USA, 2014.

25. Aigner, A.; Khelil, A. A Benchmark of Security Metrics in Cyber-Physical Systems. In Proceedings of the 2020 IEEE International Conference on Sensing, Communication and Networking (SECON Workshops), Como, Italy, 22–26 June 2020; IEEE: New York, NY, USA, 2020; pp. 1–6.

26. Yee, G.O. Designing sound security metrics. Int. J. Syst. Softw. Secur. Prot. 2019, 10, 1–21.

27. Villarrubia, C.; Fernández-Medina, E.; Piattini, M. Towards a Classification of Security Metrics. In Proceedings of the WOSIS, Porto, Portugal, 1 April 2004; pp. 342–350.

28. Savola, R. Towards a security metrics taxonomy for the information and communication technology industry. In Proceedings of the International Conference on Software Engineering Advances (ICSEA 2007), Cap Esterel, France, 25–31 August 2007; IEEE: New York, NY, USA, 2007; p. 60.

29. Ou, X.; Boyer, W.F.; McQueen, M.A. A scalable approach to attack graph generation. In Proceedings of the 13th ACM Conference on Computer and Communications Security, Alexandria, VA, USA, 30 October–3 November 2006; pp. 336–345.

30. Ramos, A.; Lazar, M.; Holanda Filho, R.; Rodrigues, J.J. Model-based quantitative network security metrics: A survey. IEEE Commun. Surv. Tutor. 2017, 19, 2704–2734.

31. Enoch, S.Y.; Hong, J.B.; Ge, M.; Kim, D.S. Composite metrics for network security analysis. arXiv 2020, arXiv:2007.03486.

32. Morrison, P.; Moye, D.; Williams, L.A. Mapping the Field of Software Security Metrics; Technical Report; Department of Computer Science, North Carolina State University: Raleigh, NC, USA, 2014.

33. Boyer, W.; McQueen, M. Ideal based cyber security technical metrics for control systems. In Proceedings of the Critical Information Infrastructures Security: Second International Workshop, CRITIS 2007, Málaga, Spain, 3–5 October 2007; Revised Papers 2. Springer: Berlin/Heidelberg, Germany, 2008; pp. 246–260.

34. Bhol, S.G.; Mohanty, J.; Pattnaik, P.K. Taxonomy of cyber security metrics to measure strength of cyber security. Mater. Today Proc. 2023, 80, 2274–2279.

35. Conti, M.; Donadel, D.; Turrin, F. A survey on industrial control system testbeds and datasets for security research. IEEE Commun. Surv. Tutor. 2021, 23, 2248–2294.

36. Williams, T.J. The Purdue enterprise reference architecture. Comput. Ind. 1994, 24, 141–158.

37. ISA95, Enterprise-Control System Integration. Available online: https://www.isa.org/standards-and-publications/isa-standards/isa-standards-committees/isa95 (accessed on 19 March 2024).

38. Zhang, K.; Shi, Y.; Karnouskos, S.; Sauter, T.; Fang, H.; Colombo, A.W. Advancements in industrial cyber-physical systems: An overview and perspectives. IEEE Trans. Ind. Inform. 2022, 19, 716–729.

39. Chen, Z.; Ji, C. Measuring network-aware worm spreading ability. In Proceedings of the IEEE INFOCOM 2007—26th IEEE International Conference on Computer Communications, Barcelona, Spain, 6–12 May 2007; IEEE: New York, NY, USA, 2007; pp. 116–124.

40. Goebel, J.; Holz, T.; Willems, C. Measurement and analysis of autonomous spreading malware in a university environment. In Proceedings of the Detection of Intrusions and Malware, and Vulnerability Assessment: 4th International Conference, DIMVA 2007, Lucerne, Switzerland, 12–13 July 2007; Proceedings 4. Springer: Berlin/Heidelberg, Germany, 2007; pp. 109–128.

41. Johnson, B.; Chuang, J.; Grossklags, J.; Christin, N. Metrics for Measuring ISP Badness: The Case of Spam: (Short Paper). In Proceedings of the Financial Cryptography and Data Security: 16th International Conference, FC 2012, Kralendijk, Bonaire, 27 February–2 March 2012; Revised Selected Papers 16. Springer: Berlin/Heidelberg, Germany, 2012; pp. 89–97.

42. Meneely, A.; Srinivasan, H.; Musa, A.; Tejeda, A.R.; Mokary, M.; Spates, B. When a patch goes bad: Exploring the properties of vulnerability-contributing commits. In Proceedings of the 2013 ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, Baltimore, MD, USA, 10–11 October 2013; IEEE: New York, NY, USA, 2013; pp. 65–74.

43. Alexopoulos, N. New Approaches to Software Security Metrics and Measurements. Ph.D. Thesis, Technische Universität Darmstadt, Darmstadt, Germany, 2022.

44. Tupper, M.; Zincir-Heywood, A.N. VEA-bility security metric: A network security analysis tool. In Proceedings of the 2008 Third International Conference on Availability, Reliability and Security, Barcelona, Spain, 4–7 March 2008; IEEE: New York, NY, USA, 2008; pp. 950–957.

45. Lanotte, R.; Merro, M.; Munteanu, A.; Tini, S. Formal impact metrics for cyber-physical attacks. In Proceedings of the 2021 IEEE 34th Computer Security Foundations Symposium (CSF), Dubrovnik, Croatia, 21–25 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–16.

46. Zhang, M.; Wang, L.; Jajodia, S.; Singhal, A.; Albanese, M. Network diversity: A security metric for evaluating the resilience of networks against zero-day attacks. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1071–1086.

47. Gu, G.; Fogla, P.; Dagon, D.; Lee, W.; Skorić, B. Measuring intrusion detection capability: An information-theoretic approach. In Proceedings of the 2006 ACM Symposium on Information, Computer and Communications Security, Taipei, Taiwan, 21–24 March 2006; pp. 90–101.

48. Wang, L.; Jajodia, S.; Singhal, A.; Noel, S. k-zero day safety: Measuring the security risk of networks against unknown attacks. In Proceedings of the Computer Security—ESORICS 2010: 15th European Symposium on Research in Computer Security, Athens, Greece, 20–22 September 2010; Proceedings 15. Springer: Berlin/Heidelberg, Germany, 2010; pp. 573–587.

49. Sharma, D.P.; Enoch, S.Y.; Cho, J.H.; Moore, T.J.; Nelson, F.F.; Lim, H.; Kim, D.S. Dynamic security metrics for software-defined network-based moving target defense. J. Netw. Comput. Appl. 2020, 170, 102805.

50. Demme, J.; Martin, R.; Waksman, A.; Sethumadhavan, S. Side-channel vulnerability factor: A metric for measuring information leakage. In Proceedings of the 2012 39th Annual International Symposium on Computer Architecture (ISCA), Portland, OR, USA, 9–13 June 2012; Volume 40, pp. 106–117.

51. Jalkanen, J. Is Human the Weakest Link in Information Security?: Systematic Literature Review. Master’s Thesis, University of Jyväskylä, Jyväskylä, Finland, 2019.

| Metric | Definition | Ref. | Match IC |
|---|---|---|---|
| Infection Rate | Average number of computers that can be infected by a compromised computer (per time unit) at the early stage of spreading | [39] | Yes |
| ISP badness metric | Quantifies the effect of spam from one ISP or autonomous system on the rest of the Internet, comparing the “spamcount” with its “disconnectability” | [41] | No |

| Metric | Description | Ref. | WD | P | R |
|---|---|---|---|---|---|
| Vulnerability lifetime | Measures how long it takes to patch a vulnerability since its disclosure | [10] | X | | |
| Network maliciousness metric | Estimates the fraction of blacklisted IP addresses in a network | [21] | V | X | |
| Worst-case loss | Maximum dollar value of the damage/loss that could be inflicted by malicious personnel via a compromised control system | [33] | V | V | X |
| VEA-bility | Aggregating scores from CVSS for the overall system, identifying all the (well-known) vulnerabilities on hosts | [44] | V | V | V |
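The WD, P, and R columns of the table above can be read programmatically. The sketch below assumes that `V` marks a satisfied property, `X` a failed one, and an empty cell a property that was not evaluated because an earlier one had already failed; this reading is inferred from the pattern of the rows rather than stated in the table. The helper names `parse_row`, `first_failure`, and `is_validated` are hypothetical.

```python
from typing import Dict, Optional, Tuple

PROPERTIES = ("WD", "P", "R")  # well-defined, progressive, reproducible
CELL_MEANING = {"V": True, "X": False, "": None}  # assumed legend


def parse_row(cells: Tuple[str, str, str]) -> Dict[str, Optional[bool]]:
    """Map the three table cells onto the three property names."""
    return {prop: CELL_MEANING[cell] for prop, cell in zip(PROPERTIES, cells)}


def first_failure(verdicts: Dict[str, Optional[bool]]) -> Optional[str]:
    """Return the first property marked as failed, or None if none failed."""
    for prop in PROPERTIES:
        if verdicts[prop] is False:
            return prop
    return None


def is_validated(verdicts: Dict[str, Optional[bool]]) -> bool:
    """A metric is validated only if all three properties are satisfied."""
    return all(verdicts[prop] is True for prop in PROPERTIES)


# Rows copied from the table above.
rows = {
    "Vulnerability lifetime": ("X", "", ""),
    "Network maliciousness metric": ("V", "X", ""),
    "Worst-case loss": ("V", "V", "X"),
    "VEA-bility": ("V", "V", "V"),
}
validated = [name for name, cells in rows.items() if is_validated(parse_row(cells))]
print(validated)  # ['VEA-bility']
print(first_failure(parse_row(rows["Worst-case loss"])))  # R
```

Representing a skipped check as `None` rather than `False` keeps "not evaluated" distinct from "failed", which matters if the checks are later re-run in a different order.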

| Name | Scope | Result | Auto | Measure | Construction | Type | Ref. | C | I | A |
|---|---|---|---|---|---|---|---|---|---|---|
| Attack impact | network | qualitative | manual | static | model | vulnerability | [45] | X | X | X |
| Attack surface | network | quantitative | auto | static | model | vulnerability | [18] | X | X | X |
| Component test count | device | quantitative | auto | dynamic | measure | situation | [33] | X | X | X |
| Cost metric | network | quantitative | auto | dynamic | measure | defense | [10] | X | X | X |
| d1-Diversity | network | quantitative | auto | static | model | defense | [46] | X | X | X |
| Data transmission exposure | network | quantitative | auto | dynamic | measure | situation | [33] | X | | |
| Defense depth | network | quantitative | auto | static | model | vulnerability | [33] | X | X | X |
| Detection mechanism deficiency count | system | quantitative | auto | static | measure | defense | [33] | X | X | X |
| Historically exploited vulns metric | device | quantitative | auto | static | measure | vulnerability | [10] | X | X | X |
| Incident rate | network | quantitative | auto | static | measure | situation | [10] | X | X | X |
| Intrusion detection capability metric | network | quantitative | auto | dynamic | measure | defense | [47] | X | X | X |
| k-zero-day-safety metric | system | quantitative | auto | static | model | vulnerability | [48] | X | X | X |
| Mean of attack path lengths | network | quantitative | auto | static | model | defense | [30] | X | X | X |
| Mean effort-to-failure (METF) | device | quantitative | manual | dynamic | model | situation | [30] | X | X | X |
| Mean time-to-compromise (MTTC) | network | quantitative | manual | dynamic | model | vulnerability | [10] | X | X | X |
| Median of path lengths | network | quantitative | auto | static | model | defense | [30] | X | X | X |
| Minimum password strength | user | quantitative | auto | dynamic | measure | user | [33] | X | | |
| Moving target defense evaluation | network | qualitative | manual | dynamic | model | defense | [49] | X | X | X |
| Network compromise percentage | network | quantitative | auto | dynamic | model | vulnerability | [30] | X | X | X |
| Number of attack paths | network | quantitative | auto | static | model | defense | [30] | X | X | X |
| Penetration resistance | system | qualitative | manual | dynamic | model | defense | [10] | X | X | X |
| Reachability count | network | quantitative | auto | static | model | situation | [33] | X | X | X |
| Reaction time metric | network | quantitative | auto | dynamic | measure | defense | [10] | X | X | X |
| Relative effectiveness | network | qualitative | manual | dynamic | model | defense | [30] | X | X | X |
| Restoration time | system | quantitative | manual | static | model | defense | [33] | X | | |
| Return on investment | system | quantitative | manual | static | model | situation | [10] | X | X | X |
| Rogue change days | system | quantitative | auto | dynamic | measure | situation | [33] | X | X | X |
| Root privilege count | user | quantitative | auto | dynamic | measure | situation | [33] | X | X | X |
| SDPL and MoPL | network | quantitative | auto | static | model | defense | [30] | X | X | X |
| Side-channel vuln factor | device | quantitative | manual | dynamic | model | vulnerability | [50] | X | | |
| VEA-bility | network | quantitative | auto | dynamic | model | defense | [44] | X | X | X |
| Vulnerable host percentage | network | quantitative | manual | dynamic | model | vulnerability | [33] | X | X | X |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gori, G.; Rinieri, L.; Melis, A.; Al Sadi, A.; Callegati, F.; Prandini, M. A Systematic Analysis of Security Metrics for Industrial Cyber–Physical Systems. Electronics 2024, 13, 1208. https://doi.org/10.3390/electronics13071208
