Abstract

As subterranean scenes are increasingly explored, ensuring the safety of pedestrians in these scenes has become an active research topic. Because subterranean scenes suffer from poor illumination and a lack of annotated data, LiDAR-based domain adaptive detectors are needed to localize pedestrians in 3D space, thus providing guidance for evacuation and rescue. In this paper, a novel domain adaptive subterranean 3D pedestrian detection method is proposed to adapt pre-trained detectors from annotated road scenes to unannotated subterranean scenes. Specifically, an instance transfer-based scene updating strategy is designed to update the subterranean scenes by transferring instances from the road scenes, aiming to create sufficient high-quality pseudo labels for fine-tuning the pre-trained detector. In addition, a pseudo label confidence-guided learning mechanism is constructed to fully utilize pseudo labels of different qualities under the guidance of confidence scores. Extensive experiments validate the effectiveness of the proposed method: with the SECOND-IoU detector, it improves over the Source Only baseline by 7.46% and 8.03% on the two AP metrics at an IoU threshold of 0.50.

1. Introduction

Recently, with the steady development of resource exploitation technology, subterranean scenes, such as mines and caves, have been widely explored [1]. In subterranean scenes, there are risks of rockfalls and cave-ins, which seriously threaten the safety of subterranean workers. As an essential computer vision technique, 3D pedestrian detection is able to locate the spatial position of pedestrians in the scenes [2,3]. Introducing 3D pedestrian detection for subterranean scenes is capable of acquiring the locations of pedestrians in an emergency situation, thus providing instruction for evacuation and rescue. Compared with images captured by the RGB camera, the LiDAR point cloud has the ability to assist in locating the 3D spatial position of pedestrians and is hardly affected by the poor illumination in the subterranean scenes [4]. However, existing LiDAR-based 3D pedestrian detectors rely on large-scale annotated point cloud datasets for supervised training. For complex subterranean scenes, obtaining pedestrian annotations of point cloud data is time-consuming and labor-intensive, which makes it challenging to train the detectors for subterranean 3D pedestrian detection in a supervised way. In comparison to the subterranean scenes, abundant annotated point cloud data exist in the road scenes. It is observed that domain adaptation has the ability to transfer knowledge from the annotated scenes to the unannotated scenes [5,6]. Therefore, exploring an effective domain adaptive subterranean 3D pedestrian detection method for accurate 3D pedestrian detection in the target domain subterranean scenes requires further research.

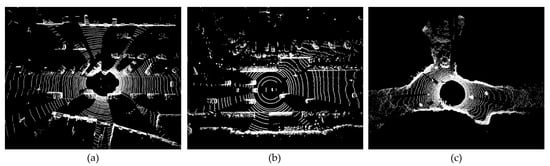

Over the last few years, LiDAR-based domain adaptive 3D detection has been proposed in road scenes with great performance. To transfer the detection knowledge between different road scenes, some works have adopted statistical normalization or domain alignment approaches to reduce the domain gaps [7,8,9]. However, these methods inevitably require target domain statistical information or complex detection network structures, which are difficult to achieve in practical applications. To address the above problems, self-training-based domain adaptive 3D detection methods [10,11,12] have been proposed. These methods generally employ detectors pre-trained in source scenes to generate pseudo labels in target scenes, and then use the pseudo labels to iteratively fine-tune the pre-trained detector. For example, Yang et al. [10] constructed a pseudo label memory bank for self-training to improve the adaptability of pre-trained detectors. However, subterranean scenes have the characteristics of narrow spaces and complex terrain. As shown in Figure 1, the domain gaps between the subterranean scenes (i.e., Edgar [13] dataset) and road scenes (i.e., KITTI [14] and ONCE [15] datasets) are larger than the gaps between different road scenes. This causes the 3D pedestrian detector pre-trained in road scenes to generate many low-quality pseudo labels in the subterranean scenes, which further misleads the network optimization of self-training. Therefore, determining how to create sufficient high-quality pseudo labels for effective self-training in subterranean scenes requires further exploration. In addition, most of the existing methods treat pseudo labels identically when the pseudo labels are taken out from the memory bank for the self-training stage [11,12]. However, pseudo labels generated in the subterranean scenes usually have different qualities, and treating them identically limits the different contributions of pseudo labels. 
This situation affects the further performance improvement of domain adaptive subterranean 3D pedestrian detection. Hence, determining how to fully utilize pseudo labels with different qualities for improving detection performance needs further exploration.

Figure 1. Illustrations of different point cloud scenes. (a) A road scene from the KITTI dataset. (b) A road scene from the ONCE dataset. (c) A subterranean scene from the Edgar dataset.

To overcome the above challenges, a novel domain adaptive subterranean 3D pedestrian detection method is proposed to transfer pre-trained 3D pedestrian detectors from the road scenes to the subterranean scenes. On the one hand, since the instances in the source domain are manually annotated, the annotated 3D bounding boxes are fitted accurately to the pedestrian instances. Therefore, an intuitive idea is to transfer the pedestrian instances and their annotations from the road scenes to the subterranean scenes. In this way, the transferred instance annotations can be regarded as high-quality pseudo labels in the subterranean scenes. At the same time, there are also some low-quality pseudo labels in the subterranean scenes, which could mislead the network optimization of self-training. Transferring annotated pedestrian instances to replace the 3D bounding boxes of the low-quality pseudo labels and their enclosing point clouds can both reduce the number of low-quality pseudo labels and make the distribution of the updated scenes similar to the original scenes. On the other hand, it is observed that the confidence scores of pseudo labels reflect the qualities to a certain extent. Therefore, high-confidence pseudo labels should play a greater role in the self-training stage for network optimization, while the effect of low-confidence pseudo labels should be weakened. Guiding network optimization in the self-training stage by the confidence scores of the pseudo labels contributes to the full exploitation of pseudo labels, which in turn improves the performance of domain adaptive subterranean 3D pedestrian detection. Based on the above considerations, this paper designs an instance transfer-based scene updating strategy to obtain sufficient high-quality pseudo labels and constructs a pseudo label confidence-guided learning mechanism to fully utilize pseudo labels of different qualities for fine-tuning the pre-trained detector. 
By jointly utilizing these two components, the proposed method is capable of achieving effective domain adaptive subterranean 3D pedestrian detection. The contributions of this paper are summarized as follows:

- (1) A novel domain adaptive subterranean 3D pedestrian detection method is proposed in this paper to mitigate the impact of large domain gaps between the road scenes and the subterranean scenes, thus achieving accurate subterranean 3D pedestrian detection.

- (2) By transferring the annotated instances from the road scenes to the subterranean scenes, an instance transfer-based scene updating strategy is proposed to achieve scene updating of the target domain, in turn obtaining sufficient high-quality pseudo labels.

- (3) To further optimize the network learning, a pseudo label confidence-guided learning mechanism is introduced to fully utilize pseudo labels of different qualities in the self-training stage.

- (4) Extensive experiments demonstrate that the proposed method obtains great performance in domain adaptive subterranean 3D pedestrian detection.

The rest of this paper is organized as follows. Section 2 reviews related works, including LiDAR-based 3D detection, domain adaptive object detection, and visual tasks in subterranean scenes. Section 3 describes the technical details of the proposed domain adaptive subterranean 3D pedestrian detection method. Section 4 demonstrates the extensive experimental results. In Section 5, the conclusions are summarized.

2. Related Works

2.1. LiDAR-Based 3D Detection

The LiDAR point cloud has the ability to describe the 3D structure of scenes [16,17]. By utilizing properties of the point cloud, LiDAR-based 3D detection is designed to locate and classify 3D objects from point cloud scenes and has widespread applications in areas including autonomous driving and intelligent robotics. Existing methods can be categorized into three groups, consisting of point-based [18,19,20], voxel-based [21,22,23,24], and point-voxel-based [25,26,27] methods. In point-based methods, Shi et al. [18] segmented the point cloud in the foreground and then generated 3D proposals through a bottom-up approach. Yang et al. [20] designed a fusion sampling strategy to achieve efficient detection. In voxel-based methods, the point cloud is represented as regular voxels of fixed size for subsequent feature extraction using 2D or 3D CNNs. Zhou et al. [21] achieved object detection based on 3D voxels by introducing a voxel feature encoding module to convert points inside voxels into a uniform representation. To improve the efficiency of voxel-based 3D detection, Yan et al. [22] proposed a sparse voxel convolution-based detection method, which uses sparse 3D convolution to improve the learning of voxel features. Lang et al. [23] introduced a novel method that predicts the 3D bounding boxes by learning the features of the point cloud on the pillar voxels. Yu et al. [24] achieved accurate detection by exploring the perspective information of the point cloud and establishing correlations between objects in the scenes. In point-voxel-based methods, Chen et al. [26] proposed a two-stage approach using 3D convolution and 2D convolution together to extract discriminative features for 3D detection. Shi et al. [27] proposed a hybrid framework to take advantage of the fine-grained 3D information of points and the high computational efficiency of voxels for effective 3D detection. 
Despite the above methods achieving excellent 3D detection performance, they require adequate annotated data for supervised training, which is not applicable in subterranean scenes with a lack of annotations.

2.2. Domain Adaptive Object Detection

Domain adaptive object detection seeks to adapt detectors trained with supervision in the source domain to the unannotated target domain. Recently, numerous works have investigated image-based domain adaptive 2D object detection [28,29,30]. These methods adopt either feature alignment or data alignment approaches to mitigate the impact of domain gaps. With the widespread use of point clouds, some works have explored LiDAR-based domain adaptive 3D detection in road scenes. Wang et al. [7] introduced a statistical normalization approach to reduce the domain gaps. Luo et al. [9] proposed a multilevel teacher-student model that adaptively produces robust pseudo labels by constraining consistency at different levels. Yang et al. [10] explored a novel self-training paradigm built on a random object scaling strategy and an iterative pseudo label updating mechanism. Furthermore, Yang et al. [11] introduced a source domain data-assisted training strategy to correct the gradient direction and devised a domain-specific normalization approach to take full advantage of the source domain data. Considering the different densities of point clouds collected by various LiDARs, Hu et al. [12] proposed a random beam resampling strategy and an object graph alignment unit to enhance the consistency between objects with different densities. Chen et al. [31] designed a cross-domain examination strategy to generate reliable pseudo labels for guiding the network fine-tuning. Although the above methods achieve strong performance in domain adaptive 3D object detection in road scenes, they do not address subterranean scenes. Unlike previous methods, the proposed method is designed to transfer detectors from road scenes to subterranean scenes, enabling domain adaptive subterranean 3D pedestrian detection under wider domain gaps.

2.3. Visual Tasks in Subterranean Scenes

Recently, deep neural networks have been widely used in various fields, such as 3D shape clustering [32], quality assessment [33], and video enhancement [34]. Taking advantage of deep neural networks, researchers have conducted preliminary explorations of different visual tasks in subterranean scenes, which mainly contain the two categories of simultaneous localization and mapping (SLAM) [35,36,37] and object detection [38,39,40]. For subterranean SLAM, the success of Subterranean Challenge (SubT) [41] has contributed to the maturation of numerous multirobot- and multisensor-based methods. For instance, Tranzatto et al. [35] proposed a multimodal complementary SLAM method with the support of CompSLAM [42]. Agha et al. [36] focused on autonomy in challenging environments, and designed closely associated perception and decision-making units. On the basis of maps obtained by the above methods, deep reinforcement learning-based approaches [43,44,45] are able to accomplish path planning in interaction with the environment to achieve autonomous movement of the robot in an unknown environment. In addition, for object detection in subterranean scenes, it is necessary to overcome the challenge of poor illumination. Wei et al. [38] proposed a parallel feature transfer network that balances precision and speed in subterranean pedestrian detection. Patel et al. [39] searched for the approximate location of objects in subterranean scenes and then completed accurate 2D object detection based on images. Wang et al. [40] accomplished object detection under poor illumination conditions in subterranean mines by enhancing low-light images and designing a decoupled head for classification and regression. Although some of these methods have focused on pedestrian detection in subterranean scenes, they still rely on image data captured by RGB cameras to achieve 2D detection, which fails to completely eliminate the effects of poor illumination. 
In this paper, the illumination-independent LiDAR point cloud is utilized to achieve reliable domain adaptive subterranean 3D pedestrian detection.

3. Method

3.1. Problem Statement and Overview

The proposed method aims to efficiently transfer the 3D pedestrian detection knowledge learned from the road scenes to the subterranean scenes through domain adaptation. In detail, the 3D pedestrian detector is first pre-trained with the source domain point clouds and annotations $\{(P_i^s, L_i^s)\}_{i=1}^{N_s}$, and then fine-tuned with the unannotated target domain point clouds $\{P_i^t\}_{i=1}^{N_t}$, where $P_i^s$ and $P_i^t$ denote the i-th point cloud data in the source and target domains, and $N_s$ and $N_t$ represent the number of point cloud data in these two domains, respectively. $L_i^s$ is a set of annotated pedestrian 3D bounding boxes for the i-th point cloud data in the source domain. In addition, Table 1 summarizes the symbols used in this paper.

Table 1. Glossary of symbols.

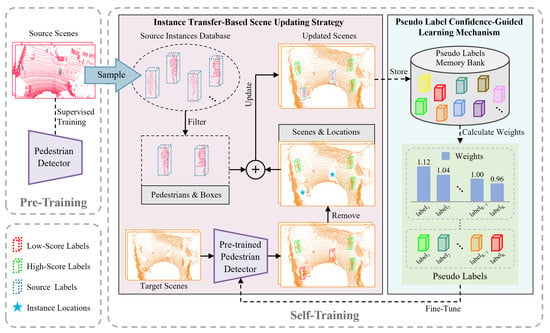

Figure 2 illustrates the architecture of our proposed method. As shown in the figure, to adapt the 3D pedestrian detector trained in the road scenes to the subterranean scenes, the proposed method employs a two-stage training paradigm, denoted as the pre-training stage and the self-training stage, respectively. During the pre-training stage, the annotated data from the source domain road scenes are used to train the 3D pedestrian detector with supervision. Then, in the self-training stage, an instance transfer-based scene updating strategy is designed to mitigate the impact of domain gaps. In the designed strategy, a pre-trained detector is deployed to generate initial prediction results, and then the instances are transferred from the source domain road scenes to update the target domain subterranean scenes, so as to obtain sufficient high-quality pseudo labels. After that, a pseudo label confidence-guided learning mechanism is constructed to fully utilize pseudo labels of different qualities for guiding network optimization. The specific technical details of the proposed method are introduced in the following.

Figure 2. Architecture of the proposed domain adaptive subterranean 3D pedestrian detection method.

3.2. Instance Transfer-Based Scene Updating Strategy

Considering that the pedestrian instances in the source domain contain manual annotations, transferring the annotated source domain instances to the target domain for replacing the 3D bounding boxes of low-quality pseudo labels and their enclosing points contributes to improving the quality of the pseudo labels in the target domain. Therefore, an instance transfer-based scene updating strategy is designed to produce sufficient high-quality pseudo labels for fine-tuning the pre-trained pedestrian detector.

As shown in Figure 2, the designed instance transfer-based scene updating strategy first generates prediction results using a pre-trained detector and then divides them according to their confidence scores. Specifically, to obtain the pseudo labels, the point clouds of subterranean scenes are sent to the pre-trained detector to generate the initial prediction results of pedestrians in the subterranean scenes, $\{(b_j, s_j)\}_{j=1}^{m}$, where $b_j$ and $s_j$ are the 3D bounding boxes and the confidence scores of the prediction results, respectively, and $m$ is the number of prediction results. Since the confidence scores represent the qualities of pseudo labels to a certain extent, each prediction result $(b_j, s_j)$ is divided according to its confidence score $s_j$. The process is represented as follows:

$$
(b_j, s_j) \in
\begin{cases}
L_h, & s_j \ge T_h, \\
L_l, & T_l \le s_j < T_h, \\
L_d, & s_j < T_l,
\end{cases}
\qquad j = 1, \dots, m,
\tag{1}
$$

where $T_h$ and $T_l$ are confidence thresholds, and $T_h > T_l$. $L_h$, $L_l$, and $L_d$ are the prediction results in different confidence intervals. After dividing the prediction results, $L_h$ and $L_l$ are treated as high-quality pseudo labels and low-quality pseudo labels according to their confidence scores. Due to their relatively low confidence scores, the predictions in $L_d$ contain many false detections and are thus discarded. In addition, since the high-quality pseudo labels $L_h$ have high confidence scores, they are directly stored in the memory bank for the subsequent self-training stage. Meanwhile, for the low-quality pseudo labels $L_l$, their 3D bounding boxes and the enclosing points are removed, retaining only the bottom center coordinates $c_l$ and the confidence scores $s_l$.
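The confidence-based division described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names, the 7-value box layout, and the choice of inclusive lower bounds are assumptions, while the default thresholds 0.4 and 0.2 are the values reported in Section 3.4.

```python
from typing import Dict, List, Tuple

# Hypothetical box layout: center x, y, z, length, width, height, yaw.
Box = Tuple[float, float, float, float, float, float, float]
Prediction = Tuple[Box, float]  # (3D bounding box, confidence score)


def split_by_confidence(
    predictions: List[Prediction],
    t_high: float = 0.4,
    t_low: float = 0.2,
) -> Dict[str, List[Prediction]]:
    """Partition predictions into high-quality, low-quality, and discarded sets."""
    if not t_high > t_low:
        raise ValueError("t_high must be greater than t_low")
    groups: Dict[str, List[Prediction]] = {"high": [], "low": [], "discard": []}
    for box, score in predictions:
        if score >= t_high:
            groups["high"].append((box, score))      # stored in the memory bank
        elif score >= t_low:
            groups["low"].append((box, score))       # later replaced by transferred instances
        else:
            groups["discard"].append((box, score))   # treated as likely false detections
    return groups


def bottom_center(box: Box) -> Tuple[float, float, float]:
    """Bottom-face center, assuming (x, y, z) is the geometric center of the box."""
    x, y, z, _, _, h, _ = box
    return (x, y, z - h / 2.0)
```

For each low-quality prediction, only `bottom_center(box)` and the score would be kept, matching the description that the box and its enclosed points are removed.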

Thereafter, to update the target domain scenes, instances with annotations are sampled from the road scenes and transferred to the subterranean scenes to obtain sufficient pseudo labels. Specifically, a series of annotated instances $I^s = \{(b_n^s, p_n^s)\}$ are sampled from the source domain, where $b_n^s$ are the 3D bounding boxes of the instances, and $p_n^s$ are the points enclosed by the 3D bounding boxes. To make the transferred instances fit the distribution of the subterranean scenes, instance filtering is applied after sampling to obtain instances in which the number of points is higher than $N_{min}$. The filtering process is represented as follows:

$$
I^f = \{ (b_n^s, p_n^s) \in I^s \;\big|\; |p_n^s| > N_{min} \},
\tag{2}
$$

where $|p_n^s|$ denotes the number of points in $p_n^s$.

After filtering, the instances $I^f$ are transferred to the target domain subterranean scenes. In detail, to ensure that the transferred instances are placed at the locations of the removed low-quality pseudo labels, the 3D bounding boxes of transferred instances are aligned with the retained bottom center coordinates of those pseudo labels. Then, the residual points inside the boxes are removed, and the transferred instances are placed at the locations of the corresponding boxes, so as to guarantee that the transferred instances do not conflict with the original residual points of the target domain. Finally, the pseudo labels of transferred instances are also stored in the memory bank, alongside the high-quality pseudo labels, to obtain the whole target domain pseudo labels. Each transferred pseudo label consists of the annotated 3D bounding box of the transferred instance and the confidence score of the low-quality pseudo label at the corresponding position.

In this way, the target domain subterranean scenes are updated by transferring instances from the source domain, so as to obtain sufficient high-quality pseudo labels for fine-tuning the pre-trained detector.
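A minimal sketch of the filtering and placement steps is given below, under stated assumptions: points are plain `(x, y, z)` tuples, boxes use a hypothetical `(cx, cy, cz, l, w, h, yaw)` layout with a geometric center, and the helper names are illustrative. The removal of the original low-quality box and its enclosed points is assumed to have happened already, so only the residual-point cleanup and bottom-center alignment are shown.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]
Box = Tuple[float, float, float, float, float, float, float]  # cx, cy, cz, l, w, h, yaw
Instance = Tuple[Box, List[Point]]


def in_box(p: Point, box: Box) -> bool:
    """True if point p lies inside the yaw-rotated 3D box."""
    cx, cy, cz, l, w, h, yaw = box
    dx, dy, dz = p[0] - cx, p[1] - cy, p[2] - cz
    # Rotate the offset by -yaw to express it in the box frame.
    c, s = math.cos(-yaw), math.sin(-yaw)
    lx, ly = dx * c - dy * s, dx * s + dy * c
    return abs(lx) <= l / 2 and abs(ly) <= w / 2 and abs(dz) <= h / 2


def filter_instances(instances: List[Instance], min_points: int = 100) -> List[Instance]:
    """Keep source instances whose point count is strictly higher than min_points."""
    return [(box, pts) for box, pts in instances if len(pts) > min_points]


def transfer_instance(
    scene: List[Point], instance: Instance, target_bottom_center: Point
) -> Tuple[List[Point], Box]:
    """Place a source instance so that its bottom center sits at target_bottom_center."""
    (cx, cy, cz, l, w, h, yaw), pts = instance
    tx, ty, tz = target_bottom_center
    # Translation that maps the source bottom center onto the target one.
    shift = (tx - cx, ty - cy, tz - (cz - h / 2))
    new_box = (cx + shift[0], cy + shift[1], cz + shift[2], l, w, h, yaw)
    moved = [(x + shift[0], y + shift[1], z + shift[2]) for x, y, z in pts]
    # Drop residual target points that would collide with the transferred instance.
    kept = [p for p in scene if not in_box(p, new_box)]
    return kept + moved, new_box
```

The returned `new_box`, paired with the confidence score retained from the replaced low-quality pseudo label, would then be the entry stored in the memory bank.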

3.3. Pseudo Label Confidence-Guided Learning Mechanism

Since the qualities of pseudo labels are observed to be reflected by their confidence scores, a pseudo label confidence-guided learning mechanism is constructed to fully utilize pseudo labels of different qualities for guiding network optimization.

In detail, the constructed pseudo label confidence-guided learning mechanism uses the confidence scores of pseudo labels to calculate confidence weights, which are used to guide network optimization in the self-training stage, as depicted in Figure 2. Specifically, for pseudo labels $\{b, s\}$, the confidence weights $w$ are calculated batch by batch from their confidence scores $s$, where $b$ are the 3D bounding boxes of pseudo labels. Taking the k-th pseudo label in the current batch as an example, the process of calculation is as follows:

In this calculation, a hyperparameter adjusts the dynamic range of the weights, $K$ is the number of pseudo labels in the current batch, and $s_k$ is the k-th confidence score in the current batch. Note that, since the pseudo labels of the transferred instances are manually annotated in the source domain, they should share a consistent quality. Therefore, the weights of these pseudo labels are set to a uniform fixed value of 1.0.
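To make the batch-wise weighting concrete, the sketch below uses one hypothetical weight function that matches the description: weights grow with the confidence score, a hyperparameter controls their dynamic range, and transferred instances receive a fixed weight of 1.0. The specific formula, $w_k = 1 + \alpha\,(s_k / \bar{s} - 1)$ with $\bar{s}$ the batch mean score, is an assumption made for illustration and is not taken from the paper's Equation (3). The names `confidence_weights`, `alpha`, and `is_transferred` are likewise illustrative.

```python
from typing import List, Sequence


def confidence_weights(
    scores: Sequence[float],
    is_transferred: Sequence[bool],
    alpha: float = 0.1,
    fixed_weight: float = 1.0,
) -> List[float]:
    """Per-label weights for one batch (assumed form, not the paper's equation).

    Detector-generated pseudo labels get 1 + alpha * (s_k / mean(s) - 1), so a
    label with above-average confidence is weighted above 1 and vice versa.
    Transferred (manually annotated) instances get a fixed weight.
    """
    if len(scores) != len(is_transferred):
        raise ValueError("scores and is_transferred must have the same length")
    k = len(scores)
    if k == 0:
        return []
    mean_score = sum(scores) / k
    weights: List[float] = []
    for s, transferred in zip(scores, is_transferred):
        if transferred or mean_score == 0.0:
            weights.append(fixed_weight)
        else:
            weights.append(1.0 + alpha * (s / mean_score - 1.0))
    return weights
```

With `alpha = 0.1`, the weights stay close to 1.0, which keeps them on the same scale as the fixed weight used for transferred instances; a larger `alpha` would widen the gap between high- and low-confidence pseudo labels.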

After obtaining the confidence weights of pseudo labels, the weights are used to balance the loss function of the self-training stage, and the loss of the self-training stage in the target domain is calculated as follows:

Here, the loss consists of the classification loss and the regression loss in the target domain, similar to [10].
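As a rough illustration of how confidence weights can balance the self-training loss, the sketch below averages the weighted sum of per-label classification and regression losses over the batch. The exact combination used in Equation (4) is not recoverable from the text, so this form and the function name are assumptions.

```python
from typing import Sequence


def weighted_self_training_loss(
    cls_losses: Sequence[float],
    reg_losses: Sequence[float],
    weights: Sequence[float],
) -> float:
    """Batch-averaged loss where each pseudo label's terms are scaled by its weight.

    Assumed form: (1 / K) * sum_k w_k * (cls_k + reg_k).
    """
    if not (len(cls_losses) == len(reg_losses) == len(weights)):
        raise ValueError("all inputs must have the same length")
    k = len(weights)
    if k == 0:
        return 0.0
    total = sum(w * (c + r) for w, c, r in zip(weights, cls_losses, reg_losses))
    return total / k
```

For example, two pseudo labels with losses `(1.0 + 0.5)` and `(2.0 + 0.5)` and weights `1.0` and `0.5` give `(1.5 + 1.25) / 2 = 1.375`, so the lower-confidence label contributes less than it would under uniform weighting.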

Through the construction of the pseudo label confidence-guided learning mechanism, the confidence scores of pseudo labels are used to guide the network optimization of the self-training stage, which in turn improves the performance of domain adaptive subterranean 3D pedestrian detection.

3.4. Implementation Details

In the proposed method, the training process consists of two stages: the pre-training stage and the self-training stage. During the pre-training stage, the source domain point clouds are input to the detector after various data augmentations such as GT sampling, random flipping, random rotation, and random scaling. Then, the source domain annotations are used to constrain the outputs of the detector. The loss function of the pre-training stage is as follows:

Here, the loss consists of the classification loss and the regression loss in the source domain, similar to [10].

In the self-training stage, the target domain point clouds are augmented with random flipping, random rotation, and random scaling before being fed to the pre-trained detector. The outputs of the detector in the self-training stage are constrained by the pseudo labels. In addition, similar to ST3D++ [11], a portion of the annotated source domain data is introduced in the self-training stage to correct the gradient direction in network optimization, which contributes a corresponding source domain loss term. Therefore, the loss function in the self-training stage is as follows:

Here, the target domain term is the loss of the self-training stage given in Equation (4). The training details of the proposed method are summarized in Algorithm 1.
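The augmentations named above (random flipping, random rotation, and random scaling) can be sketched as a single global transform applied jointly to the points and the pseudo label boxes. The flip axis, the rotation range, and the scaling range below are assumed values chosen for illustration; the paper does not state them, and the function name is hypothetical.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float, float]
Box = Tuple[float, float, float, float, float, float, float]  # cx, cy, cz, l, w, h, yaw


def augment_scene(
    points: List[Point],
    boxes: List[Box],
    rng: random.Random,
    max_angle: float = math.pi / 4,                   # assumed rotation range
    scale_range: Tuple[float, float] = (0.95, 1.05),  # assumed scaling range
) -> Tuple[List[Point], List[Box]]:
    """Apply one random flip, rotation about z, and global scaling to a scene."""
    flip = rng.random() < 0.5  # flip across the x-axis (y -> -y)
    angle = rng.uniform(-max_angle, max_angle)
    scale = rng.uniform(scale_range[0], scale_range[1])
    cos_a, sin_a = math.cos(angle), math.sin(angle)

    def transform(x: float, y: float, z: float) -> Point:
        if flip:
            y = -y
        xr = x * cos_a - y * sin_a
        yr = x * sin_a + y * cos_a
        return (xr * scale, yr * scale, z * scale)

    new_points = [transform(*p) for p in points]
    new_boxes: List[Box] = []
    for cx, cy, cz, l, w, h, yaw in boxes:
        nx, ny, nz = transform(cx, cy, cz)
        # Flipping mirrors the heading; rotation then adds the sampled angle.
        new_yaw = (-yaw if flip else yaw) + angle
        new_boxes.append((nx, ny, nz, l * scale, w * scale, h * scale, new_yaw))
    return new_points, new_boxes
```

Applying the same transform to boxes and points keeps each pseudo label aligned with the points it encloses, which is the property the self-training stage relies on.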

Algorithm 1. The training procedure of the proposed method.
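Since the two-stage procedure is described in prose above, a skeletal outline may help fix the order of operations. The sketch below is an assumption-laden paraphrase of the text, not a reproduction of Algorithm 1: the four callables are hypothetical placeholders for detector-specific code, and pseudo labels are assumed to be generated once before self-training, whereas the actual method may refresh them at other intervals.

```python
from typing import Any, Callable, List


def run_training(
    pretrain_epoch: Callable[[], None],
    generate_predictions: Callable[[], List[Any]],
    update_scenes: Callable[[List[Any]], List[Any]],
    self_train_epoch: Callable[[List[Any]], None],
    pretrain_epochs: int = 80,
    self_train_epochs: int = 30,
) -> List[Any]:
    """Two-stage training outline: supervised pre-training, then self-training."""
    # Stage 1: supervised pre-training on annotated source domain road scenes.
    for _ in range(pretrain_epochs):
        pretrain_epoch()

    # Stage 2a: predict on target scenes, then split by confidence and
    # transfer source instances to build the pseudo label memory bank.
    memory_bank = update_scenes(generate_predictions())

    # Stage 2b: fine-tune with confidence-weighted pseudo labels
    # (plus a portion of source data, handled inside self_train_epoch).
    for _ in range(self_train_epochs):
        self_train_epoch(memory_bank)
    return memory_bank
```

The default epoch counts follow the values reported in the implementation details (80 for pre-training and 30 for self-training).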

The proposed method is implemented using SECOND-IoU [10] and PointPillars [23] as 3D pedestrian detectors. All implementations are based on the OpenPCDet [46] codebase and are performed on a GeForce RTX 3090 GPU and two Xeon Silver 4310 CPUs at 2.10 GHz. The Adam [47] optimizer with a One Cycle scheduler is adopted, with 80 epochs of pre-training and 30 epochs of self-training. When SECOND-IoU [10] and PointPillars [23] are used as detectors, the batch sizes are set to 32 and 4, respectively. The point cloud detection ranges for both the source and target domains are set to [0 m, 35.2 m], [−20.0 m, 20.0 m], and [−3.0 m, 1.0 m] for the x-, y-, and z-axis, respectively. The high and low confidence thresholds for dividing prediction results are set to 0.4 and 0.2, respectively. When filtering source domain instances, the minimum number of points per instance is set to 100. The hyperparameter that adjusts the dynamic range of the confidence weights is set to 0.1. The memory used for inference depends on the 3D detector: 2272 MB with SECOND-IoU [10] and 3042 MB with PointPillars [23].
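For reference, the settings listed above can be collected in one place together with a simple range crop. The values are quoted from the text; the dictionary keys, the function name, and the inclusive handling of range boundaries are assumptions made for this sketch and do not correspond to OpenPCDet configuration fields.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Range3D = Tuple[Tuple[float, float], Tuple[float, float], Tuple[float, float]]

# Values from the implementation details; key names are hypothetical.
CONFIG: Dict[str, object] = {
    "pretrain_epochs": 80,
    "self_train_epochs": 30,
    "batch_size": {"SECOND-IoU": 32, "PointPillars": 4},
    "point_cloud_range": ((0.0, 35.2), (-20.0, 20.0), (-3.0, 1.0)),  # x, y, z in meters
    "conf_threshold_high": 0.4,
    "conf_threshold_low": 0.2,
    "min_points_per_instance": 100,
    "weight_dynamic_range": 0.1,
}


def crop_to_range(points: List[Point], pc_range: Range3D) -> List[Point]:
    """Keep only the points inside the detection range (boundaries inclusive)."""
    (x0, x1), (y0, y1), (z0, z1) = pc_range
    return [
        p for p in points
        if x0 <= p[0] <= x1 and y0 <= p[1] <= y1 and z0 <= p[2] <= z1
    ]
```

Because the x-range starts at 0 m, points behind the sensor are dropped in both domains, so the detector sees the same forward-facing region in road and subterranean scenes.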

4. Experiments

4.1. Experimental Settings

The proposed method adapts the 3D pedestrian detector trained in the road scenes to the subterranean scenes. To validate its performance, the KITTI [14] dataset is used as the source domain road scenes, and the Edgar [13] dataset is used as the target domain subterranean scenes. Both datasets contain point cloud data collected by 64-beam LiDARs. KITTI [14] is a commonly used dataset of road scenes with 3D bounding box annotations. It contains 3712 training samples, 3769 validation samples, and 7518 testing samples, and the training samples are used to pre-train the 3D pedestrian detector in the proposed method. Edgar [13] is a point cloud dataset captured with an unmanned robot in subterranean mine scenes. Following [13], 700 training samples from this dataset are selected for the self-training stage in the target domain, and another 268 testing samples, which do not overlap with the training samples, are selected for performance validation.

The performance of domain adaptive subterranean 3D pedestrian detection is evaluated using metrics consistent with [13]. Specifically, the average precision (AP) of the 3D bounding box and bird’s eye view (BEV) over 40 recall positions, denoted as $AP_{3D}$ and $AP_{BEV}$, respectively, are reported, and the IoU thresholds are set to 0.50 and 0.25 when calculating the average precision.
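The 40-recall-position average precision can be illustrated with a short sketch. It assumes that each detection has already been labeled as a true or false positive by matching it to ground truth at the chosen IoU threshold (0.50 or 0.25), and it uses the common interpolated definition: the mean, over recall levels 1/40, 2/40, ..., 1, of the best precision achieved at or beyond that recall. The function name is illustrative, and official evaluation code may differ in details.

```python
from typing import List, Sequence


def average_precision_r40(
    scores: Sequence[float],
    is_true_positive: Sequence[bool],
    num_gt: int,
) -> float:
    """Interpolated AP over 40 recall positions for a single class."""
    if num_gt <= 0:
        return 0.0
    # Rank detections by descending confidence.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    for rank, i in enumerate(order, start=1):
        if is_true_positive[i]:
            tp += 1
        precisions.append(tp / rank)
        recalls.append(tp / num_gt)
    ap = 0.0
    for n in range(1, 41):
        r = n / 40.0
        # Best precision among operating points that reach recall >= r.
        reachable = [p for p, rec in zip(precisions, recalls) if rec >= r]
        ap += max(reachable) if reachable else 0.0
    return ap / 40.0
```

For instance, with two ground-truth pedestrians and two detections of which only the higher-scored one is correct, recall never exceeds 0.5, so half of the 40 recall positions contribute precision 1.0 and the rest contribute 0, giving an AP of 0.5.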

4.2. Comparison Results

To demonstrate the performance of the proposed method for 3D pedestrian detection in subterranean scenes, several existing domain adaptive 3D object detection methods are used for comparison, including SN [7], ST3D [10], ST3D++ [11], and ReDB [31]. In addition, comparisons are made with Source Only, which means that the pre-trained detectors are evaluated directly in the target domain. Note that the compared methods were designed for adaptation between road scenes, so they are reproduced here using road scenes as the source domain and subterranean scenes as the target domain. For both the compared methods and the proposed method, experiments are conducted using SECOND-IoU [10] and PointPillars [23] as detectors, so as to verify the effectiveness of the proposed method.

Table 2 and Table 3 show the results of the quantitative comparison of domain adaptive subterranean 3D pedestrian detection using SECOND-IoU [10] and PointPillars [23] as detectors, respectively. For the SECOND-IoU detector, compared to Source Only, the proposed method improves the two AP metrics by 7.46% and 8.03% with an IoU threshold of 0.50. This means that the proposed method mitigates the impact of domain gaps and adapts the pre-trained detector effectively to subterranean scenes. In addition, compared to the domain adaptive 3D object detection methods SN, ST3D, ST3D++, and ReDB, the proposed method obtains AP gains of 6.71%, 4.73%, 3.87%, and 6.79% with an IoU threshold of 0.50, respectively. This is because the proposed method updates the target domain scenes through instance transfer and uses the confidence scores of pseudo labels to guide the network optimization, thus improving the domain adaptive detection performance. Similar results are observed for the PointPillars detector. Compared to Source Only, the proposed method improves the two AP metrics by 7.60% and 12.32% with an IoU threshold of 0.50. In addition, compared to SN, ST3D, ST3D++, and ReDB, the proposed method obtains AP improvements of 8.44%, 3.35%, 2.62%, and 4.65% with an IoU threshold of 0.50, respectively. Furthermore, the proposed method shows a substantial improvement in all the metrics with an IoU threshold of 0.25 for both detectors, which is due to the fact that the proposed method creates sufficient high-quality pseudo labels and fully utilizes pseudo labels of different qualities for self-training. To sum up, the results of quantitative experiments demonstrate the superiority of the proposed method.

Table 2. Comparative results of domain adaptive subterranean 3D pedestrian detection performance when using SECOND-IoU as the detector.

Table 3. Comparative results of domain adaptive subterranean 3D pedestrian detection performance when using PointPillars as the detector.

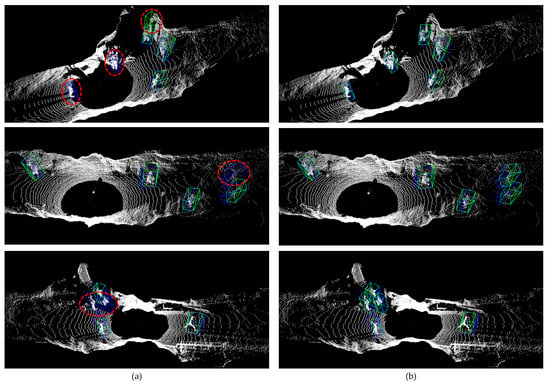

To further verify the effectiveness of the proposed method, the results of domain adaptive subterranean pedestrian detection when using SECOND-IoU as the detector are visualized in Figure 3. As illustrated in Figure 3a, there are several missed and false detections in the prediction results of the compared method ST3D++, as circled in red. In contrast, as shown in Figure 3b, the proposed method accurately predicts the locations of pedestrians in subterranean scenes. This is because the proposed method is able to generate sufficient high-quality pseudo labels and use the pseudo label confidence scores to guide network optimization. The visualization results further validate the effectiveness of the proposed method.

Figure 3. Visualization results of domain adaptive subterranean 3D pedestrian detection when using SECOND-IoU as the detector. The prediction results of pedestrians are represented in green and the annotated 3D bounding boxes are in blue, and the missed and false detections are circled in red. (a) The prediction results of the comparison method ST3D++. (b) The prediction results of the proposed method.

4.3. Ablation Studies

In the proposed method, an instance transfer-based scene updating strategy (ITSUS) and a pseudo label confidence-guided learning mechanism (PLCLM) are designed to mitigate the impact of domain gaps for effective domain adaptive subterranean 3D pedestrian detection. To validate the effectiveness of these two components, ablation experiments are performed for domain adaptive subterranean 3D pedestrian detection utilizing SECOND-IoU as the detector. The results of the ablation experiments are shown in Table 4. In this table, “w/o ITSUS” indicates that the proposed method removes the instance transfer-based scene updating strategy and uses the pseudo labels generated by the pre-trained detector for the self-training stage. Similarly, “w/o PLCLM” indicates that the proposed method removes the pseudo label confidence-guided learning mechanism and utilizes the pseudo labels identically for network optimization. Both the “w/o ITSUS” and “w/o PLCLM” variants perform worse than the full method. Specifically, compared to the proposed method, the “w/o ITSUS” variant shows a performance reduction of 2.11% in AP with an IoU threshold of 0.50. This is because many low-quality pseudo labels are involved in self-training after the removal of the instance transfer-based scene updating strategy, thus misleading the network optimization. Meanwhile, “w/o PLCLM” also exhibits a performance degradation compared to the proposed method. This phenomenon is mainly attributed to the fact that all pseudo labels of different qualities are utilized equally in the self-training stage. In contrast, the best performance is achieved by jointly utilizing both components in the proposed method, which validates the effectiveness of both components.

Table 4.

Results of ablation experiments utilizing SECOND-IoU as the detector.

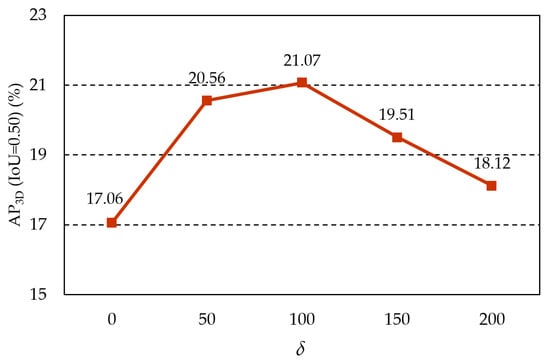
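To make the difference between the full method and the “w/o PLCLM” variant concrete, the following Python sketch contrasts averaging per-pseudo-label losses uniformly with averaging them under confidence weights. It is only an illustration: the weighting rule (using the confidence score directly as the weight), the function names, and the example numbers are assumptions, not the formulation or data used in this paper.

```python
from typing import Sequence


def uniform_loss(losses: Sequence[float]) -> float:
    """Average per-pseudo-label losses with equal weight (the "w/o PLCLM" idea)."""
    if not losses:
        return 0.0
    return sum(losses) / len(losses)


def confidence_weighted_loss(losses: Sequence[float], scores: Sequence[float]) -> float:
    """Average per-pseudo-label losses weighted by their confidence scores.

    Assumption: each weight is the raw confidence score in [0, 1]; the
    weighting function actually used by the method may differ.
    """
    if len(losses) != len(scores):
        raise ValueError("losses and scores must have the same length")
    total_weight = sum(scores)
    if total_weight == 0:
        return 0.0
    return sum(l * s for l, s in zip(losses, scores)) / total_weight


# Hypothetical per-box losses and detector confidence scores.
example_losses = [0.2, 0.4, 1.5]
example_scores = [0.9, 0.8, 0.1]

print(uniform_loss(example_losses))
print(confidence_weighted_loss(example_losses, example_scores))
```

In this toy example the third pseudo label has a large loss but a low confidence score, so it dominates the uniform average while contributing little to the confidence-weighted one.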

4.4. Hyperparameter Selection Experiments

In the designed instance transfer-based scene updating strategy, a hyperparameter is introduced in Equation (2) for filtering the sampled instances to match the distribution of the subterranean scenes. To verify the impact of this hyperparameter on the proposed method, hyperparameter selection experiments are performed by adjusting its value. Figure 4 illustrates how the evaluation metric (IoU = 0.50) varies with different settings of the hyperparameter when SECOND-IoU is used as the detector. As shown in the figure, the best performance of domain adaptive subterranean 3D pedestrian detection is achieved when the hyperparameter is set to 100, attaining 21.07% at an IoU threshold of 0.50, and either increasing or decreasing the value degrades performance. For example, when the hyperparameter is set to 50 or 150, the performance decreases by 0.51% and 1.56%, respectively, at an IoU threshold of 0.50. Therefore, the hyperparameter is set to 100 in the proposed method, and this value is used as the default setting in the experiments.

Figure 4.

Evaluation of hyperparameters when using SECOND-IoU as the detector.
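The figures quoted in the hyperparameter discussion can be checked with a few lines of arithmetic. The Python sketch below recovers the implied scores at the settings 50 and 150 from the reported best score and the reported decreases, assuming those decreases are absolute percentage points; the variable names are illustrative only.

```python
# Values reported in the text (SECOND-IoU detector, IoU threshold 0.50).
best_setting = 100
best_score = 21.07  # percent, at the default hyperparameter setting

# Reported decreases relative to the best setting. Assumption: these are
# absolute percentage points rather than relative percentages.
reported_drops = {50: 0.51, 150: 1.56}

# Reconstruct the implied score for each tested setting.
scores = {best_setting: best_score}
for setting, drop in reported_drops.items():
    scores[setting] = round(best_score - drop, 2)

for setting in sorted(scores):
    print(f"setting={setting}: {scores[setting]:.2f}%")

# The selected default is the setting with the highest score.
selected = max(scores, key=scores.get)
print(f"selected setting: {selected}")
```

Under that assumption the implied scores are 20.56% at 50 and 19.51% at 150, so the drop is steeper when the value is increased than when it is decreased.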

5. Conclusions

This paper introduces a domain adaptive subterranean 3D pedestrian detection method that effectively adapts a 3D pedestrian detector pre-trained on road scenes to unannotated subterranean scenes. In the proposed method, an instance transfer-based scene updating strategy is designed to transfer annotated pedestrian instances from the road scenes into the subterranean scenes, so as to create sufficient high-quality pseudo labels for fine-tuning the pre-trained detector in the self-training stage. Then, a pseudo label confidence-guided learning mechanism is designed to guide the network optimization through the confidence scores of pseudo labels, thus making full use of pseudo labels of different qualities. By jointly utilizing these two components, the proposed method obtains superior performance with both the SECOND-IoU and PointPillars detectors. In this way, the proposed method is able to localize pedestrians in subterranean scenes, which can provide instruction for evacuation and rescue in an emergency. However, although the proposed method improves the quality of the pseudo labels used for self-training to some extent, the pseudo labels still do not exactly match real annotations, which limits further performance improvement. In future work, it is worth investigating how to further improve the accuracy of pseudo labels, and thereby the performance of domain adaptive subterranean 3D pedestrian detection.

Author Contributions

Conceptualization, Z.L. and Z.Z.; methodology, Z.L. and T.Q.; software, Z.L. and Z.Z.; investigation, L.X. and X.Z.; data curation, L.X.; writing—original draft preparation, Z.L.; writing—review and editing, Z.L., T.Q. and L.X.; supervision, Z.Z., T.Q. and X.Z.; project administration, T.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Science and Technology Major Project of Tibetan Autonomous Region of China under Grant XZ202201ZD0006G04.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Li, H.; Savkin, A.V.; Vucetic, B. Autonomous area exploration and mapping in underground mine environments by unmanned aerial vehicles. Robotica 2020, 38, 442–456.

2. Yu, C.; Lei, J.; Peng, B.; Shen, H.; Huang, Q. SIEV-Net: A structure-information enhanced voxel network for 3D object detection from LiDAR point clouds. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5703711.

3. Lima, J.P.; Roberto, R.; Figueiredo, L.; Simoes, F.; Teichrieb, V. Generalizable multi-camera 3D pedestrian detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021.

4. Sierra-García, J.E.; Fernández-Rodríguez, V.; Santos, M.; Quevedo, E. Development and experimental validation of control algorithm for person-following autonomous robots. Electronics 2023, 12, 2077.

5. Zhang, Z.; Gao, Z.; Li, X.; Lee, C.; Lin, W. Information separation network for domain adaptation learning. Electronics 2022, 11, 1254.

6. Lei, J.; Qin, T.; Peng, B.; Li, W.; Pan, Z.; Shen, H.; Kwong, S. Reducing background induced domain shift for adaptive person re-identification. IEEE Trans. Ind. Inform. 2023, 19, 7377–7388.

7. Wang, Y.; Chen, X.; You, Y.; Li, L.E.; Hariharan, B.; Campbell, M.; Weinberger, K.Q.; Chao, W.L. Train in Germany, Test in the USA: Making 3D object detectors generalize. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020.

8. Zhang, W.; Li, W.; Xu, D. SRDAN: Scale-aware and range-aware domain adaptation network for cross-dataset 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021.

9. Luo, Z.; Cai, Z.; Zhou, C.; Zhang, G.; Zhao, H.; Yi, S.; Lu, S.; Li, H.; Zhang, S.; Liu, Z. Unsupervised domain adaptive 3D detection with multi-level consistency. In Proceedings of the IEEE International Conference on Computer Vision, Virtual, 11–17 October 2021.

10. Yang, J.; Shi, S.; Wang, Z.; Li, H.; Qi, X. ST3D: Self-training for unsupervised domain adaptation on 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021.

11. Yang, J.; Shi, S.; Wang, Z.; Li, H.; Qi, X. ST3D++: Denoised self-training for unsupervised domain adaptation on 3D object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 6354–6371.

12. Hu, Q.; Liu, D.; Hu, W. Density-insensitive unsupervised domain adaption on 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023.

13. Yuwono, Y.D. Comparison of 3D Object Detection Methods for People Detection in Underground Mine. Master’s Thesis, Colorado School of Mines, Golden, CO, USA, 2022.

14. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012.

15. Mao, J.; Niu, M.; Jiang, C.; Liang, H.; Chen, J.; Liang, X.; Li, Y.; Ye, C.; Zhang, W.; Li, Z.; et al. One million scenes for autonomous driving: ONCE dataset. arXiv 2021, arXiv:2106.11037.

16. Lei, J.; Song, J.; Peng, B.; Li, W.; Pan, Z.; Huang, Q. C2FNet: A coarse-to-fine network for multi-view 3D point cloud generation. IEEE Trans. Image Process. 2022, 31, 6707–6718.

17. Peng, B.; Chen, L.; Song, J.; Shen, H.; Huang, Q.; Lei, J. ZS-SBPRnet: A zero-shot sketch-based point cloud retrieval network based on feature projection and cross-reconstruction. IEEE Trans. Ind. Inform. 2023, 19, 9194–9203.

18. Shi, S.; Wang, X.; Li, H. PointRCNN: 3D object proposal generation and detection from point cloud. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

19. Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. STD: Sparse-to-dense 3D object detector for point cloud. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–3 November 2019.

20. Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3DSSD: Point-based 3D single stage object detector. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020.

21. Zhou, Y.; Tuzel, O. VoxelNet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

22. Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

23. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

24. Yu, C.; Peng, B.; Huang, Q.; Lei, J. PIPC-3Ddet: Harnessing perspective information and proposal correlation for 3D point cloud object detection. IEEE Trans. Circuits Syst. Video Technol. 2023, accepted.

25. Liu, Z.; Tang, H.; Lin, Y.; Han, S. Point-voxel CNN for efficient 3D deep learning. In Proceedings of the International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

26. Chen, Y.; Liu, S.; Shen, X.; Jia, J. Fast point R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–3 November 2019.

27. Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-voxel feature set abstraction for 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020.

28. Chen, Y.; Li, W.; Sakaridis, C.; Dai, D.; Van Gool, L. Domain adaptive Faster R-CNN for object detection in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

29. Saito, K.; Ushiku, Y.; Harada, T.; Saenko, K. Strong-weak distribution alignment for adaptive object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

30. Huang, S.W.; Lin, C.T.; Chen, S.P.; Wu, Y.Y.; Hsu, P.H.; Lai, S.H. AugGAN: Cross domain adaptation with GAN-based data augmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

31. Chen, Z.; Luo, Y.; Wang, Z.; Baktashmotlagh, M.; Huang, Z. Revisiting domain-adaptive 3D object detection by reliable, diverse and class-balanced pseudo-labeling. In Proceedings of the IEEE International Conference on Computer Vision, Paris, France, 2–6 October 2023.

32. Peng, B.; Lin, G.; Lei, J.; Qin, T.; Cao, X.; Ling, N. Contrastive multi-view learning for 3D shape clustering. IEEE Trans. Multimed. 2024, accepted.

33. Yue, G.; Cheng, D.; Li, L.; Zhou, T.; Liu, H.; Wang, T. Semi-supervised authentically distorted image quality assessment with consistency-preserving dual-branch convolutional neural network. IEEE Trans. Multimed. 2022, 25, 6499–6511.

34. Peng, B.; Zhang, X.; Lei, J.; Zhang, Z.; Ling, N.; Huang, Q. LVE-S2D: Low-light video enhancement from static to dynamic. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 8342–8352.

35. Tranzatto, M.; Miki, T.; Dharmadhikari, M.; Bernreiter, L.; Kulkarni, M.; Mascarich, F.; Andersson, O.; Khattak, S.; Hutter, M.; Siegwart, R.; et al. CERBERUS in the DARPA Subterranean Challenge. Sci. Robot. 2022, 7, eabp9742.

36. Agha, A.; Otsu, K.; Morrell, B.; Fan, D.D.; Thakker, R.; Santamaria-Navarro, A.; Kim, S.K.; Bouman, A.; Lei, X.; Edlund, J.; et al. NeBula: Quest for robotic autonomy in challenging environments; Team CoSTAR at the DARPA Subterranean Challenge. arXiv 2021, arXiv:2103.11470.

37. Buratowski, T.; Garus, J.; Giergiel, M.; Kudriashov, A. Real-time 3D mapping in isolated industrial terrain with use of mobile robotic vehicle. Electronics 2022, 11, 2086.

38. Wei, X.; Zhang, H.; Liu, S.; Lu, Y. Pedestrian detection in underground mines via parallel feature transfer network. Pattern Recognit. 2020, 103, 107195.

39. Patel, M.; Waibel, G.; Khattak, S.; Hutter, M. LiDAR-guided object search and detection in subterranean environments. In Proceedings of the IEEE International Symposium on Safety, Security, and Rescue Robotics, Seville, Spain, 8–10 November 2022.

40. Wang, J.; Yang, P.; Liu, Y.; Shang, D.; Hui, X.; Song, J.; Chen, X. Research on improved YOLOv5 for low-light environment object detection. Electronics 2023, 12, 3089.

41. DARPA Subterranean (SubT) Challenge. Available online: https://www.darpa.mil/program/darpa-subterranean-challenge (accessed on 15 January 2024).

42. Khattak, S.; Nguyen, H.; Mascarich, F.; Dang, T.; Alexis, K. Complementary multi-modal sensor fusion for resilient robot pose estimation in subterranean environments. In Proceedings of the International Conference on Unmanned Aircraft Systems, Athens, Greece, 1–4 September 2020.

43. Tai, L.; Paolo, G.; Liu, M. Virtual-to-real deep reinforcement learning: Continuous control of mobile robots for mapless navigation. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2017.

44. Wang, B.; Liu, Z.; Li, Q.; Prorok, A. Mobile robot path planning in dynamic environments through globally guided reinforcement learning. IEEE Robot. Autom. Lett. 2020, 5, 6932–6939.

45. Zhao, K.; Song, J.; Luo, Y.; Liu, Y. Research on game-playing agents based on deep reinforcement learning. Robotics 2022, 11, 35.

46. OpenPCDet: An Open-Source Toolbox for 3D Object Detection from Point Clouds. Available online: https://github.com/open-mmlab/OpenPCDet (accessed on 15 January 2024).

47. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2017, arXiv:1412.6980.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).