Automation of System Security Vulnerabilities Detection Using Open-Source Software

Abstract

1. Introduction

2. Related Work

- Some solutions are too narrow in scope: rather than being general-purpose, they focus exclusively on specific operating systems or on specific services;

- Most solutions need network access to the target hosts when run from outside the hosts' network; the exceptions are those that allow local agents to be installed. Some also require the credentials of the target hosts to be known;

- Most solutions do not automatically determine the infrastructure information. This means that the information about the target hosts must be obtained from the system administrators and configured manually in the tool before the scans are executed;

- Some of these scanners require a running daemon. This can cause problems when a fault occurs (e.g., a full hard disk or exhausted memory), so a watchdog is needed to restart the service when necessary;

- The plug-and-play (PnP) philosophy is not adopted: no tool or framework was found that is ready to use immediately, as all of them require configuration before execution.

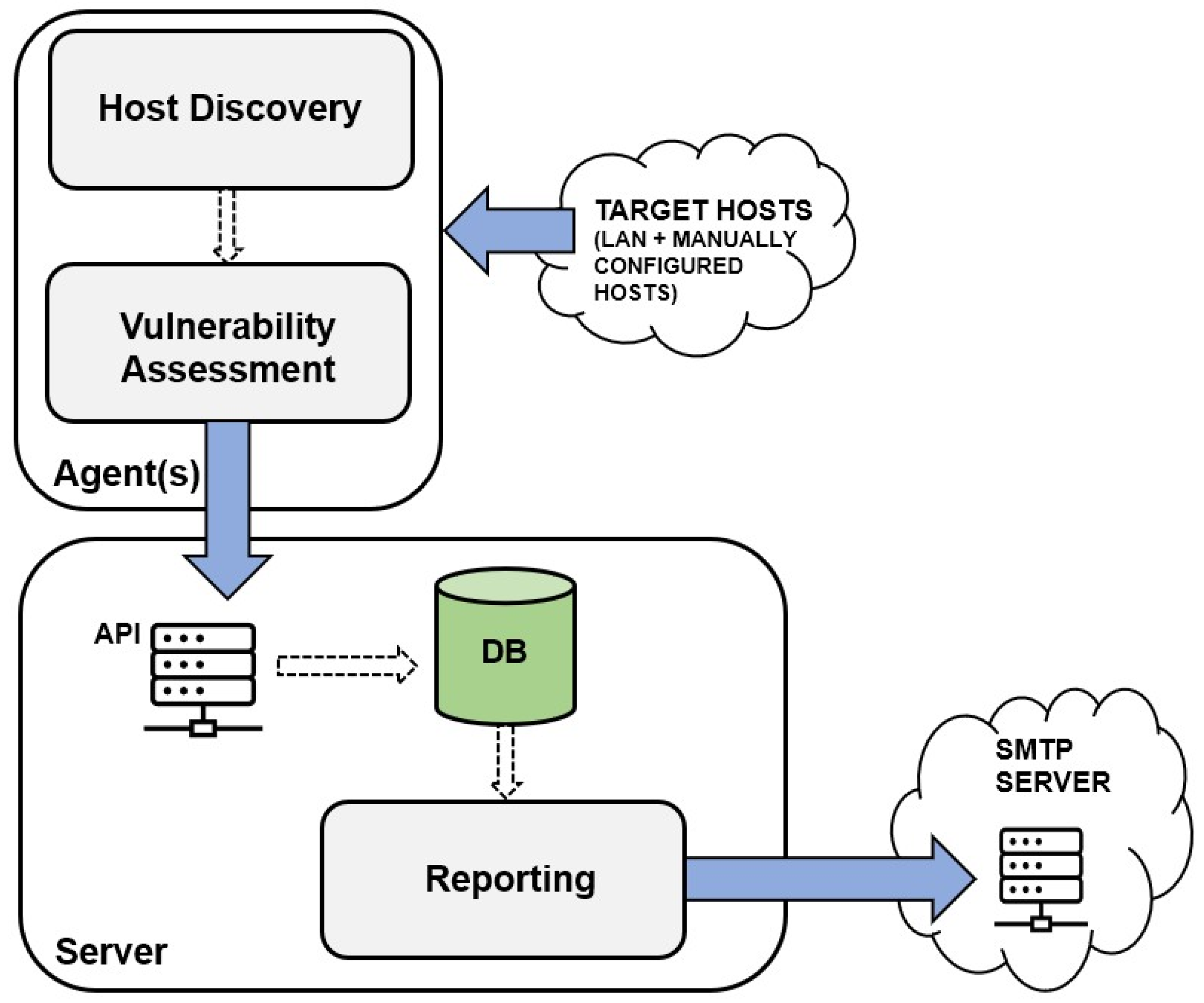

3. System Design and Implementation

3.1. Design Choices

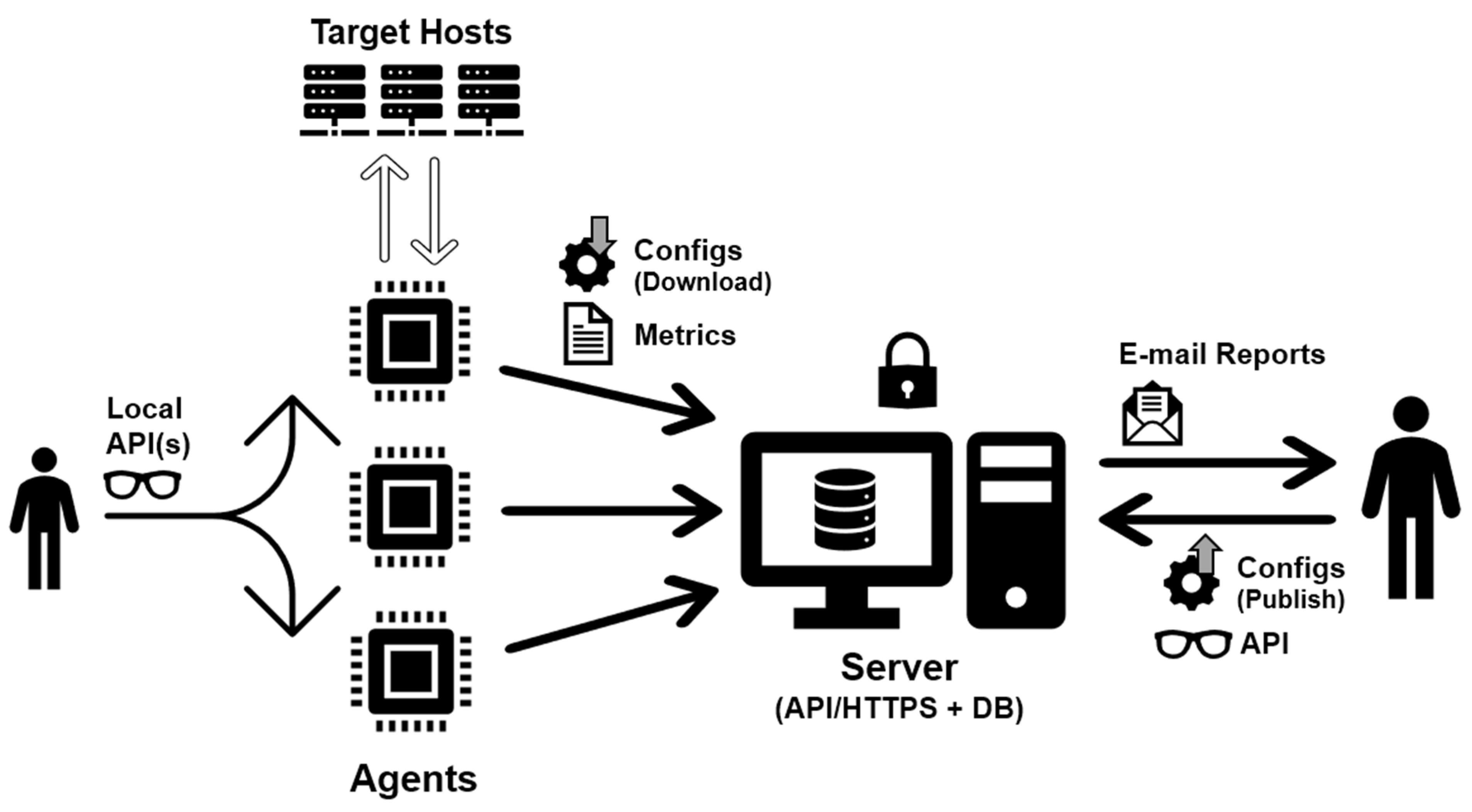

- Agent-server architecture—This choice makes the solution easy to scale (by adding or removing agents) and allows agents to be deployed directly within LANs. Placed behind firewalls and proxies, agents can target host systems directly, while the operator accesses the metrics from all agents through a single server. Agent metrics can also be accessed without a server, which gives the operator a portable vulnerability scanner. These aspects are detailed later;

- Low-cost hardware—The agent primarily targets the Raspberry Pi board, which uses the ARM architecture. However, the solution should also be able to run on a conventional computer/server with an x86 architecture;

- Free software—The implementation must rely solely on free and open-source software (FOSS) and tools, with a preference for those that are portable and have minimal resource requirements, to accommodate the hardware’s limitations. The selected options are Linux as the operating system, Python as the programming language, Nmap as the port scanning tool, and MongoDB as the database;

- Scalability—Adding processing power to the solution, or removing it, should be straightforward. The proposed multi-agent approach allows agents to be added to or removed from the environment seamlessly;

- Modularity—The flexibility of implementation enables users to enhance and personalize their experience as much as possible. By adopting an API-centric approach, users have the freedom to directly engage with the system through the standard CLI or create their own clients, web frontends, mobile applications, or AI/ML systems for data manipulation and the generation of remediation proposals. Moreover, the agents can function independently from the backend server, allowing for standalone usage, if necessary;

- Plug and play (PnP)—After installation, the system should be operational and ready to use, while still allowing the operator to customize it, e.g., by defining which scans to execute and which hosts to target. In the absence of operator intervention, the application should automatically identify and scan the hosts in its vicinity. Being “plug and play” also implies that agent–server communication should use a minimal number of ports, specifically only HTTP/HTTPS, and that it should be outbound only, with the agent initiating contact with the server. This design choice lets agents operate in environments with firewalls and restricted network access, which usually allow outbound connections to well-known ports by default. Furthermore, vulnerability discovery and scanning should run continuously and iteratively in the background, without human intervention, so that the system stays up to date in identifying potential security risks;

- E-mail reporting—Configured recipients should automatically receive e-mails containing security vulnerability reports. The level of detail in these reports should be adjustable, so that a report can be filtered down to the vulnerabilities that are exploitable [22]. This feature is crucial because it helps identify and prioritize the vulnerabilities that require immediate attention and resolution;

- Security—Communication between the various components of the solution and the end users must be authenticated and conducted securely over HTTPS, which uses SSL/TLS encryption;

- Future-proofing—The solution should take the evolution of the technological landscape into account, specifically in terms of IPv6 capability.
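As a minimal sketch of the plug-and-play fallback described in the list above (scan the agent's vicinity when the operator has configured no targets), the following Python snippet derives a default target from the agent's own interface address. The function name `default_scan_targets`, its parameters, and the example addresses are hypothetical and are not taken from the implementation; the sketch only relies on Python's standard `ipaddress` module, which handles IPv4 and IPv6 alike.

```python
import ipaddress


def default_scan_targets(interface_address, configured_targets=None):
    """Return operator-configured targets, or the agent's own subnet as a fallback.

    interface_address: the agent's address with prefix length, e.g. "192.168.1.37/24"
                       (hypothetical input; how the agent obtains it is not shown here).
    configured_targets: optional list of targets set by the operator.
    """
    if configured_targets:
        # Operator intervention takes precedence over automatic discovery.
        return list(configured_targets)
    # No configuration: fall back to the network the agent is attached to,
    # expressed in CIDR notation.
    network = ipaddress.ip_interface(interface_address).network
    return [str(network)]


print(default_scan_targets("192.168.1.37/24"))                # ['192.168.1.0/24']
print(default_scan_targets("192.168.1.37/24", ["10.0.0.5"]))  # ['10.0.0.5']
print(default_scan_targets("2001:db8::10/64"))                # ['2001:db8::/64']
```

In this sketch the operator's configuration always wins, and the subnet is only a default, which mirrors the requirement that the system works out of the box yet remains customizable.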

3.2. Implementation

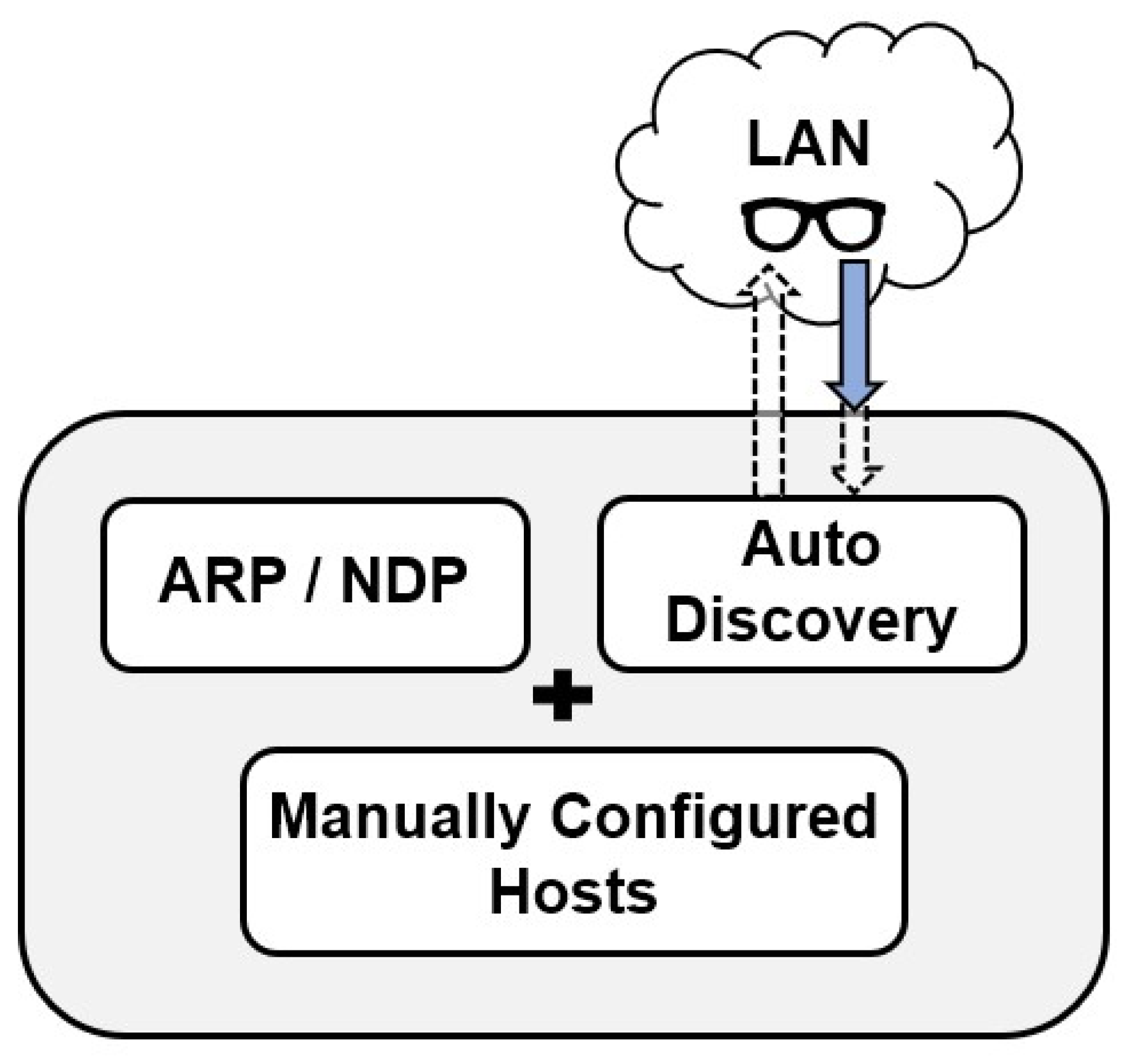

3.2.1. Network Discovery Module

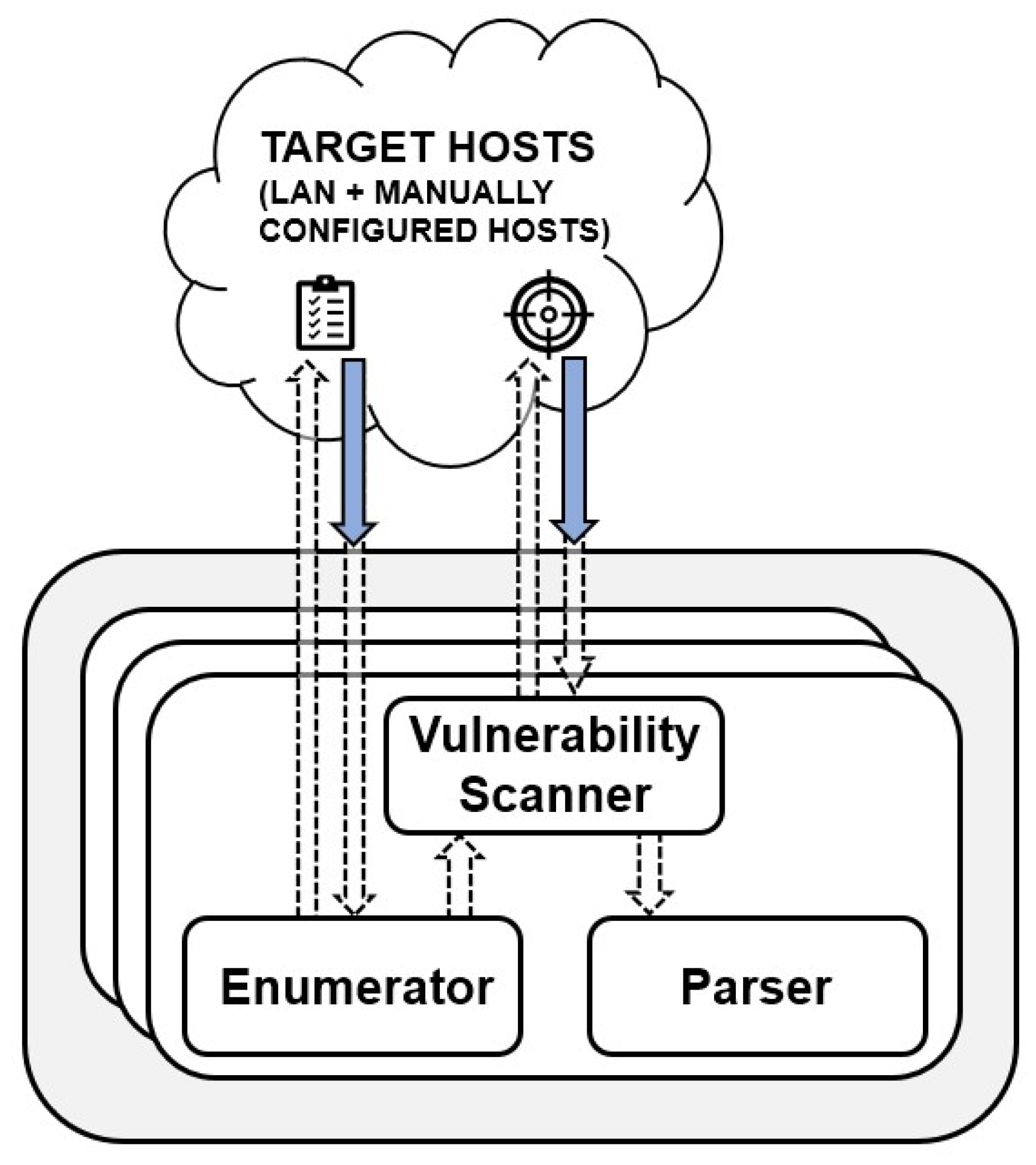

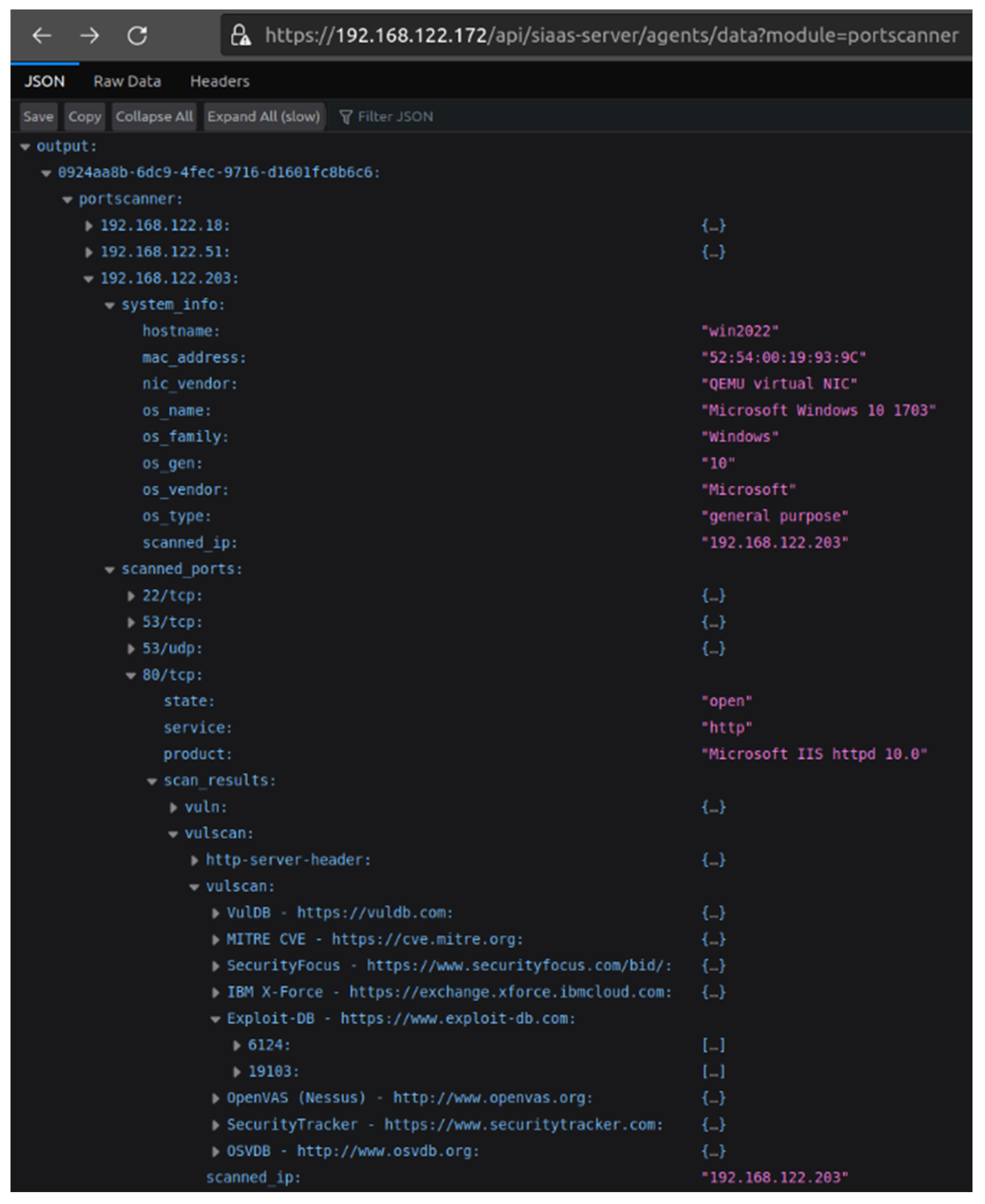

3.2.2. Vulnerability Assessment Module

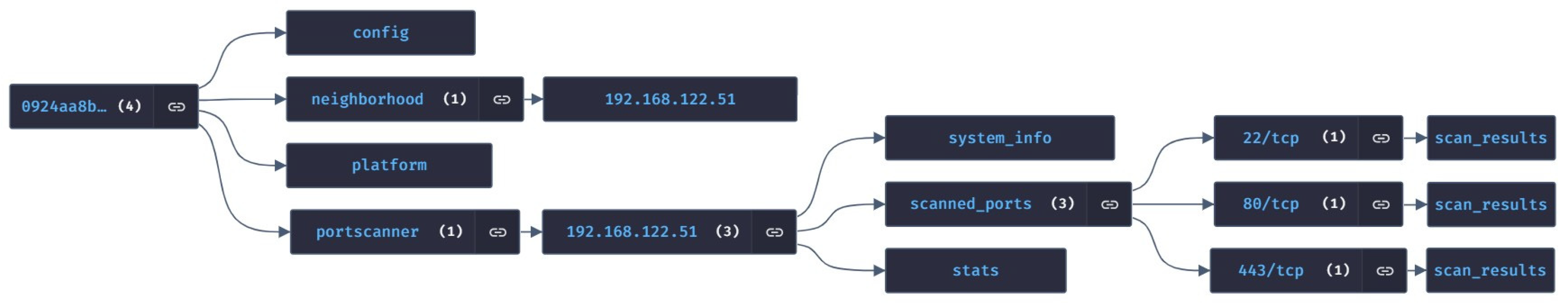

3.2.3. Agent–Server (and Client) Communications

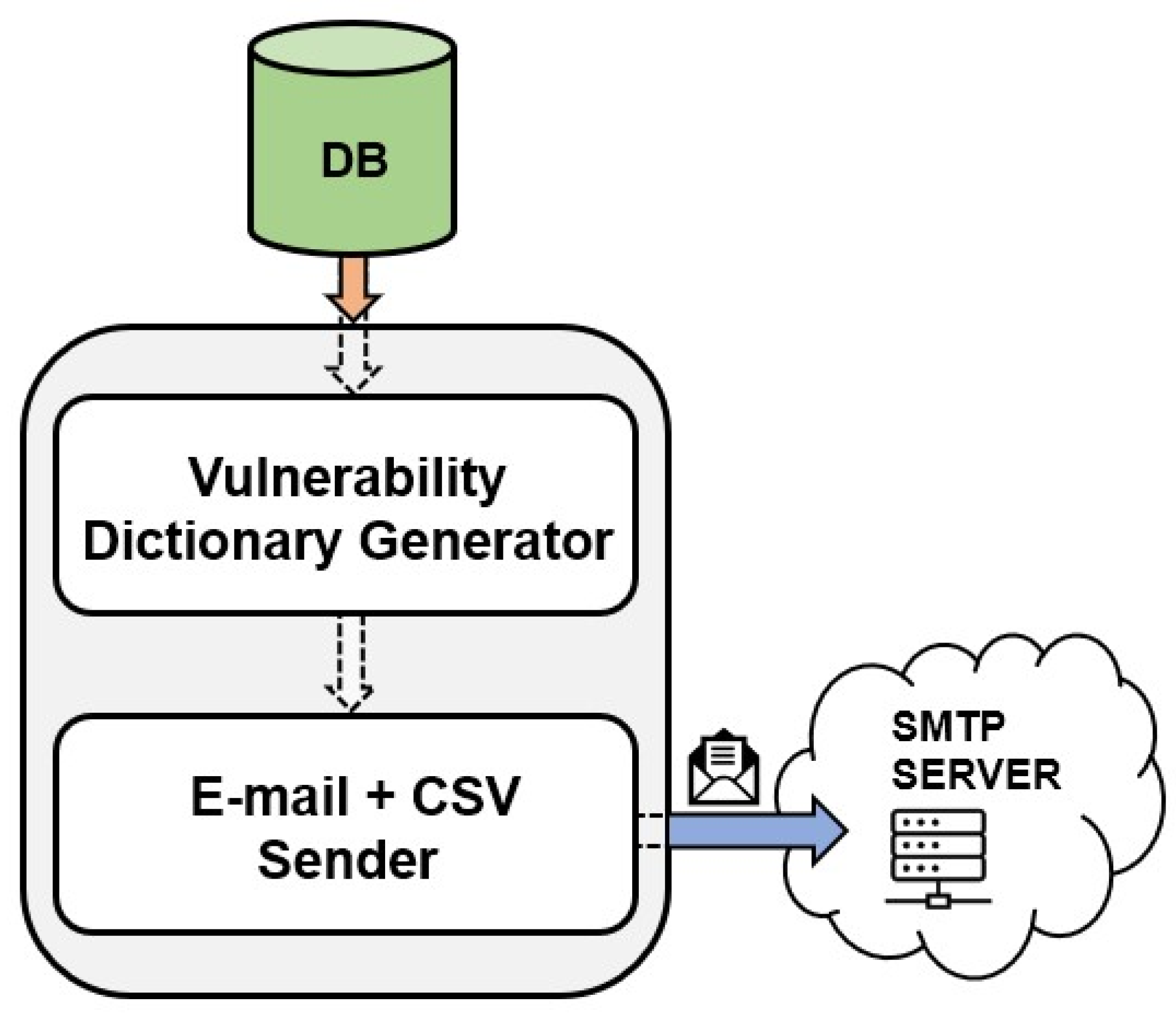

3.2.4. Reporting Module

3.2.5. Other Considerations

4. Validation

4.1. Local Tests

- JP-OLD (server and agent, for testing/staging)—1.75 GHz dual-core processor, 8 GB RAM, connected via Wi-Fi;

- RPI4 (agent for testing/staging)—1.5 GHz quad-core processor, 2 GB RAM, connected via Wi-Fi;

- RPI1 (agent for testing/staging)—700 MHz single-core processor, 256 MB RAM, connected via Ethernet;

- AIO (VM running in an external hypervisor; mostly for deployment)—1.8 GHz quad-core processor, 8 GB RAM, connected via Ethernet and Wi-Fi.

4.1.1. Reliability Tests

4.1.2. Accuracy Tests

4.1.3. Security Tests

- Request against the server’s API IP address (instead of the hostname), using the correct username/password, but without the endpoint’s certificate to validate against (expected result—rejection);

- Repeat, but explicitly using the client’s flag to ignore certificate validation (expected result—acceptance);

- Request with proper username/password and CA bundle to validate against, but using IP address instead of hostname (expected result—rejection);

- Repeat, but with correct hostname, and wrong username/password (expected result—rejection);

- Repeat, but with correct username/password (expected result—acceptance);

- Repeat, but using HTTP in the URL instead of HTTPS (expected result—client redirected from HTTP to HTTPS, and then accepted).

4.2. User Tests

5. Conclusions

Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Check Point Blog. Check Point Research: Third Quarter of 2022 Reveals Increase in Cyberattacks and Unexpected Developments in Global Trends. checkpoint.com. Available online: https://blog.checkpoint.com/2022/10/26/third-quarter-of-2022-reveals-increase-in-cyberattacks/ (accessed on 2 February 2024).

- IBM. Cost of a Data Breach Report; IBM: Armonk, NY, USA, 2023.

- Morgan, S. Cybercrime To Cost The World $10.5 Trillion Annually By 2025. cybersecurityventures.com. Available online: https://cybersecurityventures.com/hackerpocalypse-cybercrime-report-2016/ (accessed on 2 February 2024).

- Furnell, S.; Fischer, P.; Finch, A. Can’t get the staff? The growing need for cyber-security skills. Comput. Fraud. Secur. 2017, 2017, 5–10.

- Furnell, S. The cybersecurity workforce and skills. Comput. Secur. 2021, 100, 102080.

- Russu, C. The Impact of Low Cyber Security on the Development of Poor Nations. developmentaid.org. Available online: https://www.developmentaid.org/news-stream/post/149553/low-cyber-security-and-development-of-poor-nations (accessed on 2 February 2024).

- Smith, G. The intelligent solution: Automation, the skills shortage and cyber-security. Comput. Fraud. Secur. 2018, 2018, 6–9.

- Ko, R.K.L. Cyber Autonomy: Automating the Hacker—Self-healing, self-adaptive, automatic cyber defense systems and their impact to the industry, society and national security. arXiv 2020.

- Deascona. How ChatGPT Will Revolutionize the Cyber Security Industry. uxdesign.cc. Available online: https://bootcamp.uxdesign.cc/how-chat-gpt-will-revolutionize-the-cyber-security-industry-7847cc7fc24e (accessed on 2 February 2024).

- Ponemon Institute. The State of Vulnerability Management in DevSecOps; Ponemon Institute: Traverse City, MI, USA, 2022.

- Anderson, J. Updates to ISO 27001/27002 Raise the Bar on Application Security and Vulnerability Scanning. invicti.com. Available online: https://www.invicti.com/blog/web-security/iso-27001-27002-changes-in-2022-application-security-vulnerability-scanning/ (accessed on 2 February 2024).

- Shea, S. SOAR (Security Orchestration, Automation and Response). techtarget.com. Available online: https://www.techtarget.com/searchsecurity/definition/SOAR (accessed on 2 February 2024).

- Liu, W. Design and Implement of Common Network Security Scanning System. In Proceedings of the 2009 International Symposium on Intelligent Ubiquitous Computing and Education, Chengdu, China, 15–16 May 2009; pp. 148–151.

- Shah, S.; Mehtre, B.M. An automated approach to Vulnerability Assessment and Penetration Testing using Net-Nirikshak 1.0. In Proceedings of the 2014 IEEE International Conference on Advanced Communications, Control and Computing Technologies, Ramanathapuram, India, 8–10 May 2014; pp. 707–712.

- Wang, Y.; Bai, Y.; Li, L.; Chen, X.; Chen, A. Design of Network Vulnerability Scanning System Based on NVTs. In Proceedings of the 2020 IEEE 5th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 12–14 June 2020; pp. 1774–1777.

- Chen, H.; Chen, J.; Chen, J.; Yin, S.; Wu, Y.; Xu, J. An Automatic Vulnerability Scanner for Web Applications. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Guangzhou, China, 29 December 2020–1 January 2021; pp. 1519–1524.

- Zhang, X.; Zhao, J.; Yang, F.; Zhang, Q.; Li, Z.; Gong, B.; Zhi, Y.; Zhang, X. An Automated Composite Scanning Tool with Multiple Vulnerabilities. In Proceedings of the 2019 IEEE 3rd Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 11–13 October 2019; pp. 1060–1064.

- Wang, C.; Liu, X.; Zhou, X.; Zhou, R.; Lv, D.; Lv, Q.; Wang, M.; Zhou, Q. FalconEye: A High-Performance Distributed Security Scanning System. In Proceedings of the 2019 IEEE International Conference on Dependable, Autonomic and Secure Computing, International Conference on Pervasive Intelligence and Computing, International Conference on Cloud and Big Data Computing, International Conference on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), Fukuoka, Japan, 5–8 August 2019; pp. 282–288.

- Davies, P.; Tryfonas, T. A lightweight web-based vulnerability scanner for small-scale computer network security assessment. J. Netw. Comput. Appl. 2009, 32, 78–95.

- Kals, S.; Kirda, E.; Kruegel, C.; Jovanovic, N. SecuBat: A web vulnerability scanner. In Proceedings of the 15th International Conference on World Wide Web (WWW ’06), Edinburgh, Scotland, 23–26 May 2006; Association for Computing Machinery: New York, NY, USA, 2006; pp. 247–256.

- Noman, M.; Iqbal, M.; Rasheed, K.; Muneeb Abid, M. Web Vulnerability Finder (WVF): Automated Black-Box Web Vulnerability Scanner. Int. J. Inf. Technol. Comput. Sci. 2020, 12, 38–46.

- Haydock, W. But Is It Exploitable? deploy-securely.com. Available online: https://www.blog.deploy-securely.com/p/but-is-it-exploitable (accessed on 2 February 2024).

- Lyon, G.F. Nmap Network Scanning; The Official Nmap Project Guide to Network Discovery and Security Scanning; Insecure Press: Sunnyvale, CA, USA, 2008; ISBN 978-0-9799587-1-7. Available online: https://nmap.org/book/toc.html (accessed on 2 February 2024).

- Chalvatzis, I.; Karras, D.A.; Papademetriou, R.C. Evaluation of Security Vulnerability Scanners for Small and Medium Enterprises Business Networks Resilience towards Risk Assessment. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 29–31 March 2019; pp. 52–58.

- Wang, Y.; Yang, J. Ethical Hacking and Network Defense: Choose Your Best Network Vulnerability Scanning Tool. In Proceedings of the 2017 31st International Conference on Advanced Information Networking and Applications Workshops (WAINA), Taipei, Taiwan, 27–29 March 2017; pp. 110–113.

- Zulkarneev, I.; Kozlov, A. New Approaches of Multi-agent Vulnerability Scanning Process. In Proceedings of the 2021 Ural Symposium on Biomedical Engineering, Radioelectronics and Information Technology (USBEREIT), Yekaterinburg, Russia, 13–14 May 2021; pp. 488–490.

- Rockikz, A. How to Get Hardware and System Information in Python. thepythoncode.com. Available online: https://www.thepythoncode.com/article/get-hardware-system-information-python (accessed on 2 February 2024).

- Waldvogel, B. Layer 2 Network Neighbourhood Discovery Tool. github.com. Available online: https://github.com/bwaldvogel/neighbourhood (accessed on 2 February 2024).

- Elmrabit, N.; Zhou, F.; Li, F.; Zhou, H. Evaluation of Machine Learning Algorithms for Anomaly Detection. In Proceedings of the 2020 International Conference on Cyber Security and Protection of Digital Services (Cyber Security), Dublin, Ireland, 15–19 June 2020; pp. 1–8.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Seara, J.P.; Serrão, C. Automation of System Security Vulnerabilities Detection Using Open-Source Software. Electronics 2024, 13, 873. https://doi.org/10.3390/electronics13050873
