Abstract

Siamese network object tracking is widely used because of its simplicity and effectiveness. It first uses a two-stream network to extract template and search-region features independently, and then combines these features through feature association to obtain object information within the visual scene. However, the conventional approach uses the template features as a convolution kernel that is convolved with the search-image features. This linear matching restricts the ability to capture complex, nonlinear feature transformations of objects and therefore limits discriminative capability. To overcome this challenge, we propose replacing traditional convolutional correlation with Slot Attention for feature association. This approach enables the effective extraction of nonlinear features within the scene while augmenting discriminative capacity. Furthermore, to increase inference efficiency and reduce the parameter count, we deploy a single Slot Attention module for multiple associations. Our tracking algorithm, SiamSlot, was evaluated on diverse benchmarks, including VOT2019, GOT-10k, UAV123, and Nfs. The experiments show a notable improvement in performance over previous methods of the same network size.

1. Introduction

In recent years, deep learning has become the dominant approach in visual object tracking, outperforming traditional methods based on hand-crafted features. Unlike hand-crafted features, deep features are learned from large training datasets, which gives deep learning methods a significant advantage in most cases.

The Siamese network is a widely adopted tracking strategy that was originally designed for signature verification, where it measures the similarity between two inputs. In tracking, the same property is used to measure the similarity between the target template and the search region, which determines whether the object is present and where it is located. The architecture is straightforward and transparent, consisting of three primary parts:

- Backbone network: a feature extractor that processes the input images to generate image features.
- Feature association network: compares the search region with the object template using a convolution operation, enabling the identification of matching patterns.
- Head network: based on the associated features, determines the object’s location within the visual scene.

Overall, the Siamese network’s simplicity makes it an attractive choice for many applications in computer vision.

Since the authors of [1] proposed the SiamFC algorithm, Siamese tracking has gained prominence among tracking algorithms due to its accuracy and speed. Nevertheless, the original SiamFC design has several shortcomings, and researchers have since improved specific components of the algorithm. For instance, ref. [2] observed that SiamFC handles object variations by searching over a set of fixed scales, an approach that lacks robustness. To address this limitation, they introduced SiamRPN, which replaced the SiamFC head network with a Region Proposal Network (RPN) [3]; this modification significantly improves the accuracy of bounding-box estimation. Similarly, the authors of [4,5] replaced the shallow AlexNet backbone with deeper, more powerful models, increasing feature extraction capability and overall performance.

Despite these advancements, Siamese networks continue to rely on the convolution operation for feature association. Although this approach is straightforward and easy to apply, its local, linear matching makes it prone to losing nonlinear semantic information. Additionally, the matching is one-directional: the template information cannot be adapted to the content of the search region. Although increasing the network size might improve performance, this feature association bottleneck will continue to limit further gains.
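To make the "local, linear matching" point concrete, here is a minimal single-channel sketch of the cross-correlation used by SiamFC-style trackers, written in plain Python (real trackers correlate batched, multi-channel tensors). It checks that correlating a weighted sum of two search maps gives the same weighted sum of the individual responses, i.e. for a fixed template the response is linear in the search features. All map values are arbitrary toy numbers.

```python
def xcorr2d(template, search):
    """Slide `template` over `search` without padding and return the response map."""
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    out = []
    for i in range(sh - th + 1):
        row = []
        for j in range(sw - tw + 1):
            # Dot product between the template and the search window at (i, j)
            row.append(sum(template[a][b] * search[i + a][j + b]
                           for a in range(th) for b in range(tw)))
        out.append(row)
    return out

def combine(x1, x2, alpha, beta):
    """Element-wise alpha * x1 + beta * x2 for two equally sized maps."""
    return [[alpha * p + beta * q for p, q in zip(r1, r2)] for r1, r2 in zip(x1, x2)]

z = [[1, 0], [0, -1]]                      # toy template features
x1 = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]     # toy search features
x2 = [[0, 1, 0], [1, 0, 1], [0, 1, 2]]

lhs = xcorr2d(z, combine(x1, x2, 2, -3))
rhs = combine(xcorr2d(z, x1), xcorr2d(z, x2), 2, -3)
print(lhs == rhs)  # True: the response is a linear function of the search map
```

Because every output is a fixed weighted sum of search values, any nonlinear interaction between template and search content has to come from elsewhere in the network, which is the gap the attention-based association is meant to fill.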

Beyond this, current learning-based approaches often rely on unstructured features, which limits their interpretability and generalization capacity. Tasks such as visual reasoning [6] and structured environment modeling [7] generally benefit from object-centric feature representations, which they leverage to perform downstream tasks. A typical example is Slot Attention [8]. The Slot Attention module produces a set of slots, each representing an entity in the scene, and has achieved strong results in unsupervised object discovery (scene reconstruction) and set prediction.

We therefore replace the original convolutional association layer with the Slot Attention module to address these shortcomings. This turns linear matching into a nonlinear matching process and enhances feature representation capability. Slot Attention thus provides better feature association while enriching the semantic information and interpretability of the features. Moreover, Slot Attention establishes a communication channel between the template and search images, which yields structured features that carry more information about the object and mitigates the loss of crucial information.

To minimize the number of parameters in a Transformer-based network, we use a single Slot Attention module for multiple feature associations instead of stacking multiple attention modules. This strategy significantly reduces the number of parameters and the memory footprint during training and inference.

The primary contributions of this work are as follows:

- We build a tracking network incorporating the Slot Attention module, which enhances the extraction of nonlinear feature information and improves performance when objects undergo sudden appearance changes or motion blur.

- We train the network to reuse a single attention module for multiple associations, which considerably reduces the parameter count and memory consumption.

- Experiments on various datasets demonstrate that SiamSlot outperforms other state-of-the-art tracking networks of the same network size.

2. Related Work

2.1. Improvement in the Siamese Network Backbone Network

As the pioneering Siamese tracker, SiamFC [1] uses AlexNet as its backbone for feature extraction, and subsequent works such as SiamRPN [2] and DaSiamRPN [9] also adopted AlexNet. However, because AlexNet is shallow and has few convolutional layers, its feature extraction capability is severely limited. To overcome this constraint, SiamDW studied a range of deep convolutional networks as feature extractors and formulated a set of guidelines for backbone design, leading to a new backbone network. This backbone was incorporated into SiamFC and SiamRPN and evaluated on VOT2016 and OTB2015, where both trackers improved by over 8% on EAO metrics.

Inspired by SiamDW, SiamRPN++ recognized that ResNet lacks strict translation invariance, which prevents deeper networks from fully exploiting their potential in Siamese trackers. To address this limitation, SiamRPN++ modifies ResNet by setting the padding of its convolutional layers to 0, producing a customized ResNet with translation invariance. This enables the use of the larger ResNet50 as the backbone network and leads to notable performance improvements.

Siamese trackers traditionally use CNNs to extract features. The introduction of the Transformer in 2017 opened a further route to enhancing feature extraction. TrDiMP [10] is a pioneering effort in this direction that integrates Transformer mechanisms for feature association. It first extracts deep features with a CNN backbone. Next, it uses a self-attention mechanism to enhance the search-region features while simultaneously augmenting the template features with the search-region features via cross-attention. Finally, the template and search features are correlated through a convolutional layer. Through this cross-attention interaction between template and search features, TrDiMP markedly improves the recognition of objects with drastic appearance changes, achieving an AUC score of 71.1% on the OTB-2015 dataset and an accuracy of 60% on the VOT2018 dataset. Compared with the fully convolutional DiMP [11], this is an improvement of 4.5% and 0.3%, respectively, on these benchmarks.

Similar to TrDiMP, TransT [12] leverages a CNN-Transformer structure but differs in its approach to feature enhancement. In particular, TransT adds two modules to its framework: the ego-context augment (ECA) module, which refines the extracted features with self-attention, and the cross-feature augment (CFA) module, which uses cross-attention to enable multiple interactions between template and search features and improve feature representation. Because TransT relies exclusively on its own Transformer design for feature refinement, it outperforms purely CNN-based trackers, achieving a score of 72.3% on the GOT-10k [13] test set.

TrDiMP and TransT achieve substantial improvements by incorporating Transformers, but they still rely on CNNs for feature extraction, so acquiring robust global feature representations remains a challenge. To address this limitation, OSTrack [14] adopts a full Transformer architecture for tracking. By feeding the template and search images into the Transformer simultaneously as image patches, OSTrack extracts the template and search features jointly. As a result, OSTrack achieved an AO score of 73.7% on the GOT-10k test set, outperforming other state-of-the-art trackers.

The CNN-Transformer hybrid structure and the full Transformer structure each have advantages and disadvantages. The full Transformer has a concise and efficient network structure. However, because it lacks prior knowledge about images [15], it requires more training data and epochs. Furthermore, Transformers use their parameters less efficiently than CNNs, which increases memory usage during operation. Our tracker builds on the CNN-Transformer hybrid structure and optimizes the association network with Slot Attention to overcome these limitations.

2.2. Improvement in the Siamese Network Feature Association

Both SiamFC and SiamRPN employ convolutional operations for object association. However, this approach has two inherent problems. First, because AlexNet is shallow, features extracted at different depths differ only slightly, whereas in a deep network such as ResNet50, which has many layers, the features produced by different blocks become markedly distinct. Shallower layers primarily capture information related to colors and edges, while deeper layers capture semantically meaningful features of objects. Performing feature association only once on the backbone output therefore forgoes the benefits of using features from all levels. To address this shortcoming, SiamRPN++ proposes a multi-level feature association scheme for ResNet50 that integrates information from all of these levels, which proves highly effective for object tracking.
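One simple way to combine association results from several backbone levels is a weighted sum of their response maps, which is the idea behind the multi-level scheme described above. The sketch below is illustrative only: the per-level weights are hypothetical learnable scalars, normalizing them with a softmax is our assumption, and the response maps are toy values rather than real RPN outputs.

```python
import math

def softmax(ws):
    """Numerically stable softmax over a list of scalars."""
    m = max(ws)
    exps = [math.exp(w - m) for w in ws]
    s = sum(exps)
    return [e / s for e in exps]

def aggregate_responses(responses, raw_weights):
    """Fuse equally sized 2D response maps (one per backbone level) by a weighted sum.

    raw_weights: one hypothetical learnable scalar per level; softmax normalization
    (so the weights sum to 1) is an assumption of this sketch.
    """
    w = softmax(raw_weights)
    h, wd = len(responses[0]), len(responses[0][0])
    return [[sum(w[k] * responses[k][i][j] for k in range(len(responses)))
             for j in range(wd)] for i in range(h)]

conv3 = [[0.1, 0.2], [0.3, 0.4]]   # toy response from a shallow level
conv4 = [[0.5, 0.1], [0.2, 0.9]]   # toy response from a middle level
conv5 = [[0.0, 0.3], [0.6, 0.8]]   # toy response from a deep level
fused = aggregate_responses([conv3, conv4, conv5], [0.0, 0.0, 0.0])
print(fused)  # equal raw weights give the element-wise mean of the three maps
```

Training the raw weights lets the tracker decide how much to trust edge-like shallow responses versus semantic deep ones.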

Although performing feature associations at multiple levels effectively improves tracker performance, SiamRPN++ still uses convolutional association. Convolutional association has limited ability to extract nonlinear information about the target and the search region, which constrains overall tracker performance. To address this challenge, we introduce Slot Attention as a new association method that improves the nonlinear feature extraction capability of the tracker.

2.3. Improvement in the Siamese Network Head Network

The head network has a strong influence on tracking accuracy. In SiamFC, the head network produces a similarity map to locate the object and then applies a fixed multi-scale search: it produces several candidate boxes at predefined scales and selects the one with the highest score as the tracking result for the current frame. This strategy only chooses among predetermined box sizes rather than actually detecting the target's box, so tracking accuracy decreases when the object deforms significantly. To address this issue, SiamRPN incorporates the RPN from object detection: the feature maps from the Siamese network are passed to a classification branch and a regression branch, which compute the object's position and shape relative to predefined anchors. Because the RPN requires few computational resources, it improves tracking accuracy while maintaining real-time performance.
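The fixed multi-scale search described above can be sketched as follows: the response is computed at a few candidate scales, scale changes are slightly penalized, and the scale with the highest peak wins. The scale factors and the penalty value are hypothetical placeholders, and SiamFC's response upsampling and scale damping are omitted.

```python
def argmax2d(resp):
    """Return (value, row, col) of the largest entry in a 2D map."""
    return max((v, i, j) for i, row in enumerate(resp) for j, v in enumerate(row))

def select_scale(responses, scales, penalty=0.97):
    """Pick the scale whose (penalized) response peak is highest.

    responses: one 2D response map per candidate scale
    scales: the scale factor each map was computed at (1.0 = unchanged size)
    penalty: hypothetical factor that discourages changing the box size
    Returns (best_scale, (row, col) of the peak in that scale's map).
    """
    best = None
    for resp, s in zip(responses, scales):
        peak, i, j = argmax2d(resp)
        if s != 1.0:
            peak *= penalty
        if best is None or peak > best[0]:
            best = (peak, s, (i, j))
    return best[1], best[2]

scales = [1 / 1.04, 1.0, 1.04]        # hypothetical scale pyramid
responses = [
    [[0.1, 0.2], [0.3, 0.50]],        # shrink
    [[0.2, 0.9], [0.1, 0.40]],        # keep size
    [[0.0, 0.1], [0.92, 0.3]],        # grow: higher raw peak, but penalized
]
print(select_scale(responses, scales))  # (1.0, (0, 1))
```

The box can only take one of the predefined sizes, which is why this strategy struggles with strong deformation.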

Despite these improvements, SiamRPN relies on fixed anchor boxes, which makes it difficult to detect objects of varying shapes accurately. In particular, the fixed scales and aspect ratios of the anchors limit the tracker's ability to handle rapidly changing objects. To overcome this limitation, SiamCAR [16] abandons anchors and adopts per-pixel prediction: for each pixel, it predicts the distances from that pixel to the four sides of the bounding box. This removes the need for preset anchors and improves detection of objects whose shape changes rapidly.
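The per-pixel regression targets described above are just four distances from a pixel to the box sides, which can be encoded and decoded as in this minimal sketch. Boxes are (x1, y1, x2, y2) in pixels; mapping feature-map locations back to image coordinates through the backbone stride is omitted.

```python
def encode_ltrb(x, y, box):
    """Regression targets (left, top, right, bottom) for a pixel (x, y) inside `box`."""
    x1, y1, x2, y2 = box
    return (x - x1, y - y1, x2 - x, y2 - y)

def decode_ltrb(x, y, l, t, r, b):
    """Turn predicted distances to the four box sides back into (x1, y1, x2, y2)."""
    return (x - l, y - t, x + r, y + b)

gt = (40, 30, 100, 90)                # hypothetical ground-truth box
targets = encode_ltrb(60, 50, gt)     # (20, 20, 40, 40)
print(decode_ltrb(60, 50, *targets))  # (40, 30, 100, 90)
```

Because each pixel regresses its own box, no anchor shape has to be chosen in advance.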

However, both anchor-based SiamRPN and anchor-free SiamCAR generate a large number of candidate boxes, which must then be refined by a series of post-processing steps, making inference tedious and time-consuming. In contrast, STARK [17] models bounding-box regression as a direct prediction problem. By predicting heat maps for the top-left and bottom-right corners, STARK obtains a single bounding box per frame without complex, hyperparameter-sensitive post-processing, which makes the entire tracking procedure more efficient.
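A corner heat map can be turned into a coordinate without any post-processing by normalizing it into a probability distribution and taking the expected location (a soft-argmax), which is the idea behind the corner-based head described above. The sketch works in heat-map cell units; scaling to image coordinates is omitted, and the toy heat maps are hypothetical.

```python
import math

def soft_argmax(heatmap):
    """Expected (x, y) location under a softmax over all heat-map entries."""
    m = max(v for row in heatmap for v in row)
    probs = [[math.exp(v - m) for v in row] for row in heatmap]  # unnormalized
    total = sum(sum(row) for row in probs)
    ex = sum(x * p for row in probs for x, p in enumerate(row)) / total
    ey = sum(y * sum(row) for y, row in enumerate(probs)) / total
    return ex, ey

def box_from_corners(tl_map, br_map):
    """Combine top-left and bottom-right corner estimates into (x1, y1, x2, y2)."""
    x1, y1 = soft_argmax(tl_map)
    x2, y2 = soft_argmax(br_map)
    return (x1, y1, x2, y2)

def peaked(h, w, px, py, sharp=50.0):
    """Toy heat map with one sharp peak at column px, row py."""
    return [[sharp if (x, y) == (px, py) else -sharp for x in range(w)] for y in range(h)]

box = box_from_corners(peaked(8, 8, 1, 1), peaked(8, 8, 6, 5))
print([round(c, 3) for c in box])  # [1.0, 1.0, 6.0, 5.0]
```

Since the expectation is differentiable, the whole head can be trained end to end with a box loss.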

3. Algorithm

In this section, we examine in detail the Siamese network augmented with Slot Attention, explaining its architectural components and operating principles from two perspectives. First, we explain the architecture of the Slot Attention module and its underlying mechanisms, and then describe how we refine the original Slot Attention to meet the demands of object tracking. In Section 3.2, we present the complete network framework, showing how the backbone network, feature fusion network, and prediction head network remain integral parts of the Siamese structure. The focus there is on how Slot Attention is integrated into the tracking network, including how the module's inputs and outputs are handled and how the feature association network is composed from the module.

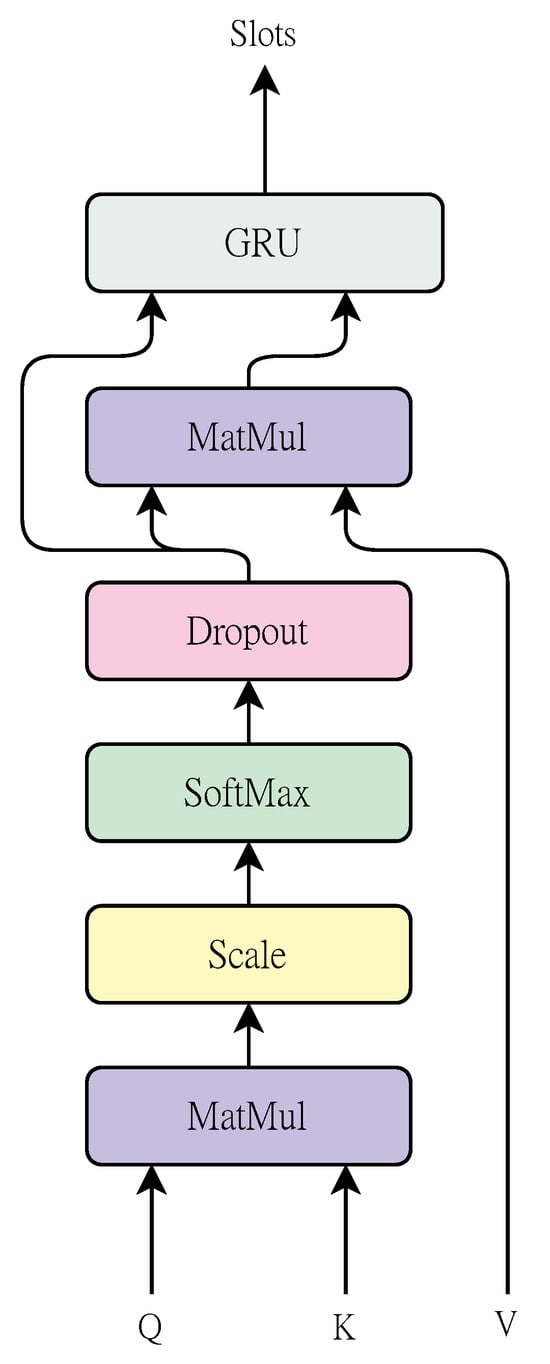

3.1. Slot Attention

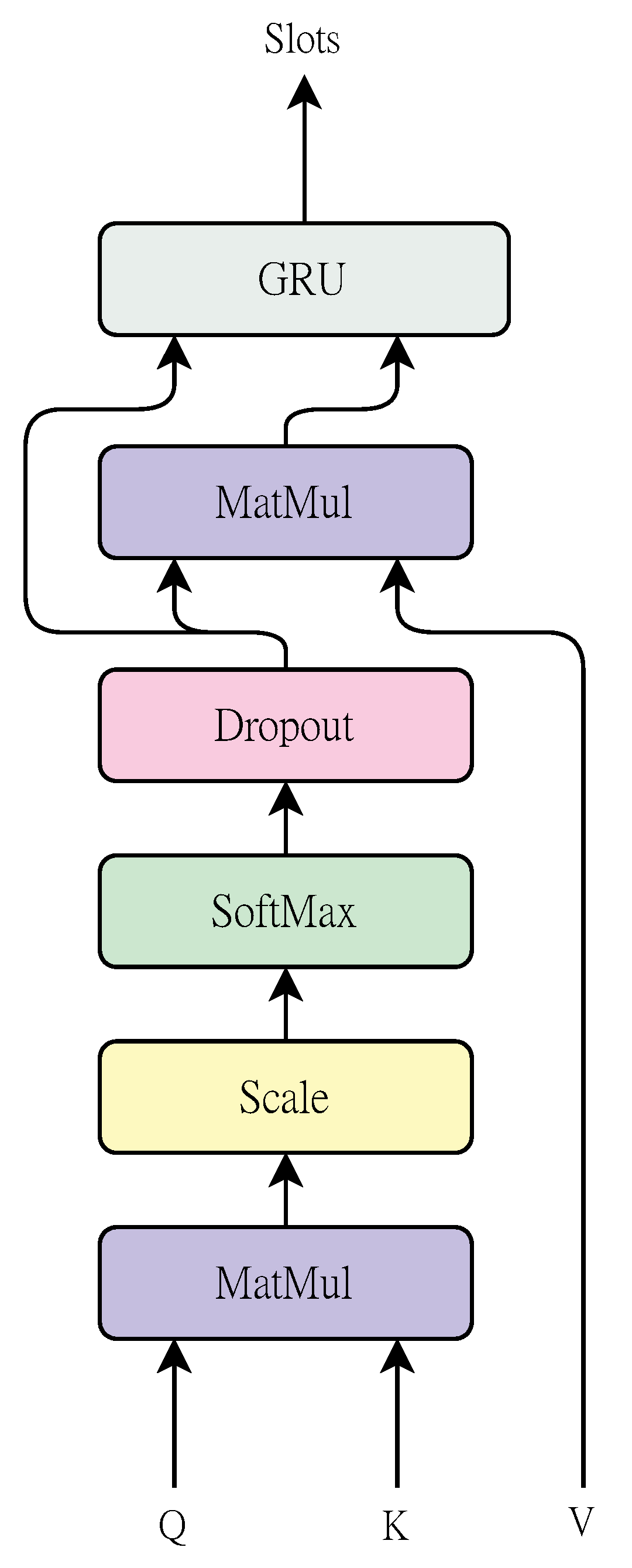

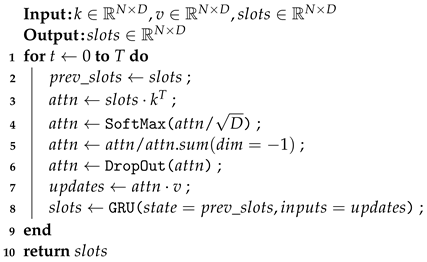

As an algorithm for structured feature representation, the Slot Attention module takes a set of input vectors and produces a set of output vectors, known as slots. Each slot can represent an entity in the input, and all slots together provide a complete scene representation. To meet the needs of object tracking, we introduced several improvements to the original Slot Attention algorithm. The algorithm is given as pseudo-code in Algorithm 1, and the module structure is shown in Figure 1.

Figure 1.

Slot Attention structure diagram.

The original Slot Attention [8] samples the initial slots from a Gaussian distribution and uses them as the queries of the attention module. Over repeated attention iterations, each slot becomes increasingly associated with a particular object, yielding a well-organized representation of object features. For object tracking, we instead initialize the slots with the template image features extracted by the CNN backbone and use the search image features as both the keys and the values of the attention module. After T attention iterations, the search image features have been successively decoded into the desired object information.

We now describe one iteration of Slot Attention in detail; all notation is defined in Algorithm 1. Unlike standard dot-product attention, Slot Attention applies the SoftMax over the slots, so for every pixel (input feature) the slots compete with one another to explain it. In standard dot-product attention, by contrast, the SoftMax is taken over the inputs (image patches), so for each query the inputs compete with one another, and different queries are free to attend to the same region. As a result, the slots in Slot Attention tend to be mutually exclusive, each forming a compact representation of the features tied to a specific region of the input image.
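The difference between the two normalizations comes down to which axis the SoftMax runs over. In this small sketch, `logits[i][j]` is a hypothetical similarity between input feature i (a pixel) and slot or query j; normalizing each row gives Slot Attention's slot competition, while normalizing each column gives standard dot-product attention.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scalars."""
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]

# logits[i][j]: similarity between input feature i and slot/query j (toy values)
logits = [
    [2.0, 0.0],   # pixel 0
    [1.5, 0.5],   # pixel 1
    [0.0, 3.0],   # pixel 2
]

# Slot Attention: SoftMax over slots for each pixel, so every row sums to 1
# and the slots compete to explain each pixel.
slot_attn = [softmax(row) for row in logits]

# Dot-product attention: SoftMax over inputs for each query, so every column
# sums to 1 and the pixels compete for each query.
cols = [softmax([row[j] for row in logits]) for j in range(2)]
dot_attn = [[cols[j][i] for j in range(2)] for i in range(3)]

print(slot_attn)
print(dot_attn)
```

With the row-wise normalization, a pixel that is fully claimed by one slot cannot also be claimed by another, which is what pushes slots toward distinct regions.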

To ensure the stability of the attention mechanism, we use two strategies concurrently. First, following the Transformer convention, we apply the SoftMax with a temperature set to the constant √D, where D denotes the dimensionality of the slot vectors; that is, the attention logits are divided by √D. In addition, to keep the SoftMax numerically stable, we add a small value of 1 × 10⁻⁶ to all SoftMax outputs to mitigate potential underflow. Second, we compute the slot updates as a weighted average: the attention coefficients are renormalized over the inputs so that the attention weights of each slot sum to 1. Together, the temperature-scaled SoftMax and the weighted average smooth the attention weights and reduce the discrepancy between slots, which prevents the attention mechanism from ignoring certain input elements.
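For reference, one iteration as described in this section can be written in the notation of the original Slot Attention paper [8]. The symbols are our own labels: x_i (i = 1..N) are the search-image features, s_j (j = 1..K) are the slots initialized from template features, and k, q, v are the D-dimensional key, query, and value projections. The Dropout applied to the attention matrix during training is omitted.

```latex
\begin{aligned}
A_{i,j} &= \frac{\exp\!\big(k(x_i)^{\top} q(s_j) / \sqrt{D}\big)}
               {\sum_{l=1}^{K} \exp\!\big(k(x_i)^{\top} q(s_l) / \sqrt{D}\big)} + \epsilon,
  \qquad \epsilon = 10^{-6} \\
W_{i,j} &= \frac{A_{i,j}}{\sum_{n=1}^{N} A_{n,j}} \\
u_j &= \sum_{i=1}^{N} W_{i,j}\, v(x_i) \\
s_j &\leftarrow \mathrm{GRU}\big(\text{state} = s_j,\ \text{input} = u_j\big)
\end{aligned}
```

The first line normalizes over slots (index l), the second renormalizes over inputs (index n), so each update u_j is a weighted mean of the values.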

After computing the attention matrix, we incorporate an additional strategy inspired by the Transformer. Specifically, we apply a Dropout layer [18] to the attention matrix before it is multiplied by the value vectors. This simple yet effective technique regularizes training and helps prevent overfitting.

At the end of the Slot Attention module, we refine the integrated information with a Gated Recurrent Unit (GRU) [19]. The GRU combines the slots from the previous iteration (its hidden state) with the attention update (its input), so that each slot retains useful information from earlier iterations while incorporating new evidence from the search image. This step enhances our ability to capture complex contextual relationships and make more informed predictions.
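The gated update described above can be sketched as a single GRU step in plain Python. We follow the gate layout documented for PyTorch's `torch.nn.GRUCell` (reset gate applied to the hidden-side term, h' = (1 - z) * n + z * h), drop the hidden-side biases for brevity, and use hypothetical placeholder weights rather than trained ones.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(m, v):
    """Matrix (list of rows) times vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def gru_cell(x, h, p):
    """One GRU step: x is the attention update, h the previous slot (both size D).

    p holds D x D matrices W_* (applied to x) and U_* (applied to h) and
    input-side bias vectors b_*; hidden-side biases are omitted.
    """
    z = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["W_z"], x), matvec(p["U_z"], h), p["b_z"])]
    r = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["W_r"], x), matvec(p["U_r"], h), p["b_r"])]
    uh = matvec(p["U_n"], h)
    # Candidate slot: the reset gate r controls how much of the old slot enters it
    n = [math.tanh(a + ri * b + c) for a, ri, b, c in zip(matvec(p["W_n"], x), r, uh, p["b_n"])]
    # Update gate z interpolates between keeping the old slot and writing the candidate
    return [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]

zero = {k: [[0.0, 0.0], [0.0, 0.0]] for k in ("W_z", "U_z", "W_r", "U_r", "W_n", "U_n")}
zero.update({k: [0.0, 0.0] for k in ("b_z", "b_r", "b_n")})
print(gru_cell([1.0, 2.0], [4.0, -2.0], zero))  # [2.0, -1.0]: z = 0.5, candidate = 0
```

With all-zero weights both gates sit at 0.5, so the output is simply half of the previous slot; trained weights let each slot decide how much of the new attention update to absorb.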

Algorithm 1: Pipeline of Slot Attention. The inputs correspond to the key, query, and value vectors of the Transformer, each a set of D-dimensional vectors. The key and value vectors are initialized with the search image features, and the slots (queries) are initialized with the template image features. The number of iterations is set to T.

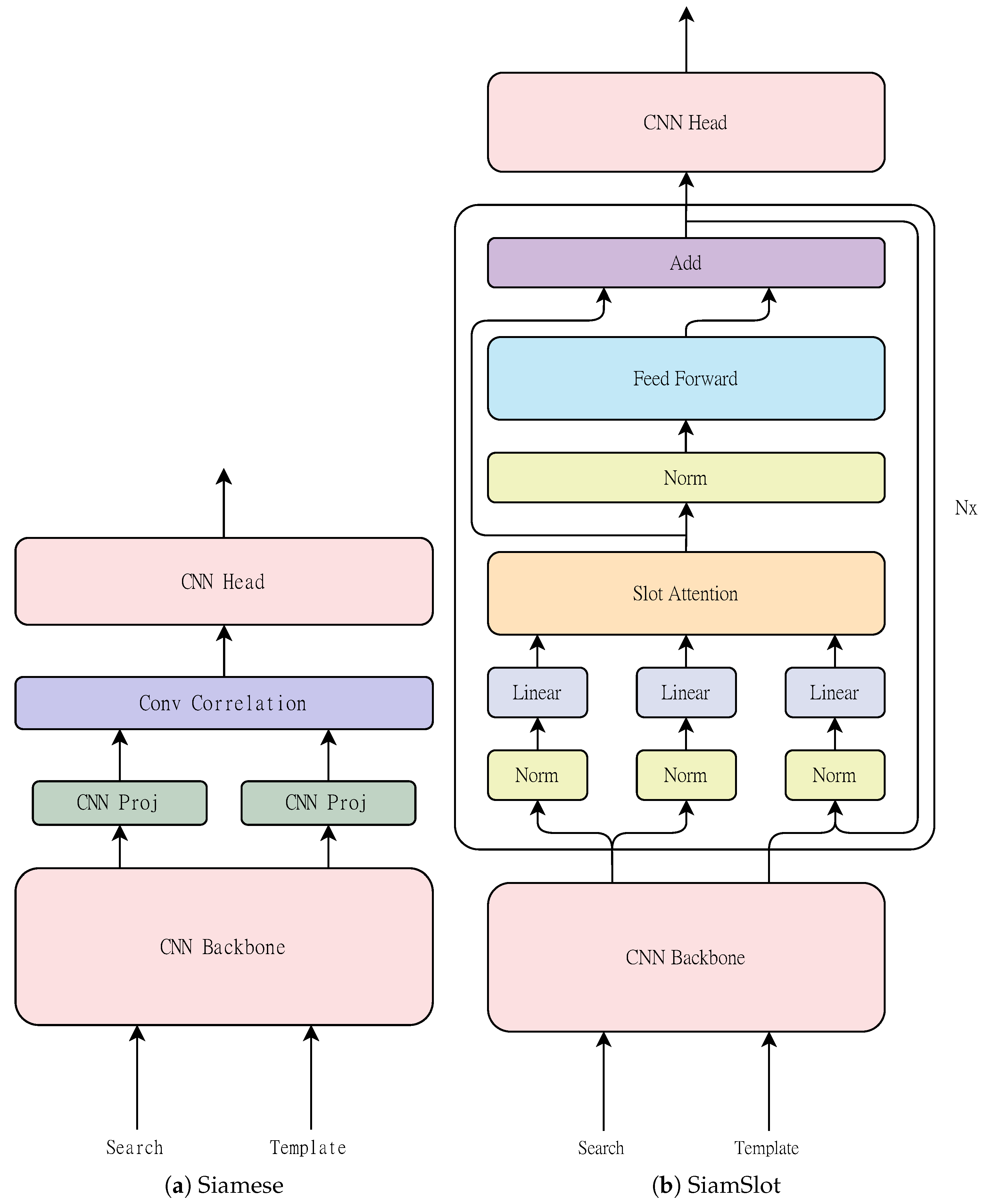

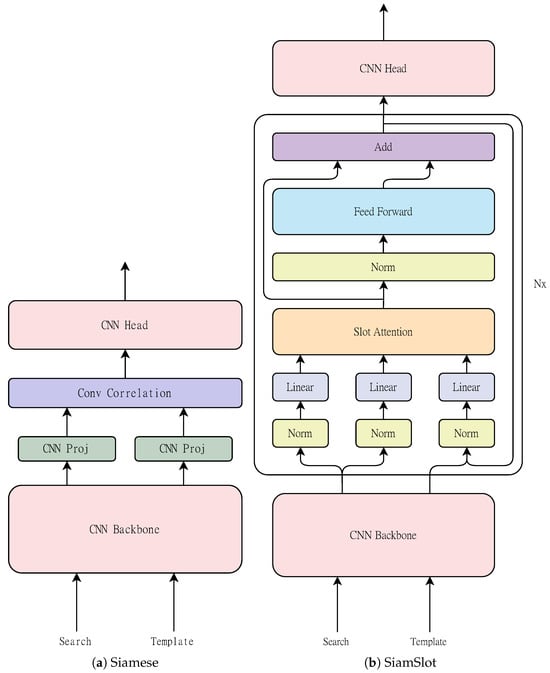
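A minimal, dependency-free sketch of the iteration summarized in Algorithm 1 follows: slots initialized from template features, keys and values from search features, a SoftMax over slots with temperature √D, ε = 10⁻⁶, and a weighted mean over inputs. To stay short, it assumes the inputs are already projected, uses the slots directly as queries, omits Dropout, and replaces the GRU/MLP update with a plain assignment. It illustrates the mechanism and is not the SiamSlot implementation.

```python
import math

EPS = 1e-6

def slot_attention(slots, keys, values, iters=3):
    """Simplified Slot Attention (no learned projections, no GRU/MLP, no Dropout).

    slots:  K initial slot vectors of size D (template-derived)
    keys:   N key vectors of size D (search-derived)
    values: N value vectors of size D (search-derived)
    Returns the refined slots and the attention matrix (N x K) of the last iteration.
    """
    d = len(slots[0])
    scale = 1.0 / math.sqrt(d)  # temperature sqrt(D)
    for _ in range(iters):
        # logits[i][j] = <k_i, s_j> / sqrt(D)
        logits = [[scale * sum(a * b for a, b in zip(k, s)) for s in slots] for k in keys]
        # SoftMax over slots for every input, plus epsilon for numerical stability
        attn = []
        for row in logits:
            m = max(row)
            e = [math.exp(x - m) for x in row]
            tot = sum(e)
            attn.append([v / tot + EPS for v in e])
        # Weighted mean: renormalize each slot's weights over the inputs
        col_sums = [sum(attn[i][j] for i in range(len(keys))) for j in range(len(slots))]
        # Each slot becomes the weighted mean of the values it attends to
        slots = [[sum(attn[i][j] / col_sums[j] * values[i][c] for i in range(len(keys)))
                  for c in range(d)]
                 for j in range(len(slots))]
    return slots, attn

template_slots = [[1.0, 0.0], [0.0, 1.0]]                          # hypothetical slots
search_feats = [[5.0, 0.0], [4.0, 0.0], [0.0, 5.0], [0.0, 4.0]]   # hypothetical features
slots, attn = slot_attention(template_slots, search_feats, search_feats, iters=3)
print(slots)  # slot 0 gathers the first two features, slot 1 the last two
```

In the full module the assignment `slots = ...` would instead feed the weighted mean into the GRU as its input, with the previous slots as its state.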

3.2. Siamese Trackers

Figure 2b depicts the tracking process of our improved Siamese tracking algorithm, which builds on the standard framework shown in Figure 2a. We replace the conventional convolutional association layer of that framework with Slot Attention to better capture the nonlinear changes of the object during tracking.

Figure 2.

(a) Standard Siamese tracker architecture. (b) Our SiamSlot architecture.

Backbone. We employed AlexNet [20] as the backbone network, which has shown a good balance of accuracy and efficiency in previous works such as [1,2,9]. To further reduce computational requirements, we adopted a weight-sharing strategy in which the same backbone network extracts features from both the template image and the search region image.

Processing before feature association. As in existing Transformer-based models, we used linear mapping layers to map the input feature vectors to query, key, and value vectors for the attention computation. Each linear mapping layer consists of LayerNorm [21] followed by a Linear layer. The search image features were split into two streams, each processed by a separate linear mapping layer, and the two results were fed into the Slot Attention module as the key and value vectors. The template image features were transformed by another linear mapping layer and served as the initial slots.
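The linear mapping layer described above (LayerNorm followed by a Linear layer) and its three uses can be sketched as follows. The 3-dimensional features and all weight matrices are hypothetical toy values, and the LayerNorm here has no learnable gain or bias, unlike the trained layers.

```python
import math

def layer_norm(x, eps=1e-5):
    """LayerNorm without learnable gain/bias: zero mean, unit variance per vector."""
    mu = sum(x) / len(x)
    var = sum((v - mu) ** 2 for v in x) / len(x)
    return [(v - mu) / math.sqrt(var + eps) for v in x]

def linear(x, weight, bias):
    """y = W x + b, with `weight` given as a list of output rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]

def linear_mapping(x, weight, bias):
    """LayerNorm followed by a Linear layer."""
    return linear(layer_norm(x), weight, bias)

search_feat = [1.0, 2.0, 3.0]       # hypothetical search feature vector
template_feat = [0.5, -0.5, 1.0]    # hypothetical template feature vector
W_k, b_k = [[1, 0, 0], [0, 1, 0]], [0, 0]
W_v, b_v = [[0, 0, 1], [1, 1, 1]], [0, 0]
W_s, b_s = [[1, 1, 0], [0, 0, 1]], [0, 0]

key = linear_mapping(search_feat, W_k, b_k)      # search stream 1 -> key
value = linear_mapping(search_feat, W_v, b_v)    # search stream 2 -> value
slot = linear_mapping(template_feat, W_s, b_s)   # template -> initial slot
print(key, value, slot)
```

Keeping separate mappings for keys, values, and slots lets the module learn different views of the same search features.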

Feature association. When multiple Slot Attention modules are stacked, the number of network parameters grows with the number of modules. To address this issue, we use a single attention module for feature association: the output slots of the module are fed back into the same module repeatedly, performing repeated feature association on the extracted features. This strategy allowed us to improve performance with fewer parameters. Because the number of reuses has a significant impact on performance, we also investigated it experimentally and report the results in Section 4.3.
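The parameter saving from reuse is simple arithmetic: stacking T modules costs T times the parameters of one module, whereas reusing one module costs the same at any T. The sketch below counts parameters for a hypothetical module whose composition (q/k/v Linear layers, a GRU cell laid out like `torch.nn.GRUCell`, and a two-layer MLP) and sizes (width 256, hidden 512) are our assumptions, not SiamSlot's actual configuration.

```python
def slot_attention_params(d, hidden):
    """Rough parameter count of ONE hypothetical Slot Attention module of width d.

    Assumed layers: q/k/v Linear layers (d*d weights + d biases each), a GRU cell
    with 3 gates and input- and hidden-side weights and biases, and a 2-layer MLP
    d -> hidden -> d. LayerNorm parameters are ignored.
    """
    qkv = 3 * (d * d + d)
    gru = 2 * 3 * (d * d + d)
    mlp = (d * hidden + hidden) + (hidden * d + d)
    return qkv + gru + mlp

def association_params(d, hidden, associations, shared):
    """Parameters spent on association: one reused module vs. separate stacked modules."""
    per_module = slot_attention_params(d, hidden)
    return per_module if shared else per_module * associations

def associate(module, slots, feats, times):
    """Reuse one module: each call's output slots become the next call's input slots."""
    for _ in range(times):
        slots = module(slots, feats)
    return slots

for t in range(1, 6):
    stacked = association_params(256, 512, t, shared=False)
    reused = association_params(256, 512, t, shared=True)
    print(f"{t} associations: stacked={stacked:,} reused={reused:,}")
```

Reuse keeps the parameter count constant, but compute still grows linearly with the number of associations, since the module runs once per association.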

After feature association. We applied LayerNorm followed by a Multi-Layer Perceptron (MLP) with a residual connection to transform the slots, which improves training stability.
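The post-association step can be sketched as a pre-norm residual block, slot + MLP(LayerNorm(slot)). The ReLU activation, the layer sizes, and all weights are assumptions for illustration; the text only specifies LayerNorm, an MLP, and a residual connection.

```python
import math

def layer_norm(x, eps=1e-5):
    """LayerNorm without learnable gain/bias."""
    mu = sum(x) / len(x)
    var = sum((v - mu) ** 2 for v in x) / len(x)
    return [(v - mu) / math.sqrt(var + eps) for v in x]

def linear(x, weight, bias):
    """y = W x + b, with `weight` given as a list of output rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def post_association(slot, w1, b1, w2, b2):
    """slot + MLP(LayerNorm(slot)); the MLP is Linear -> ReLU (assumed) -> Linear."""
    hidden = relu(linear(layer_norm(slot), w1, b1))
    return [s + m for s, m in zip(slot, linear(hidden, w2, b2))]

slot = [1.0, -2.0, 0.5]                                  # hypothetical slot
w1, b1 = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]], [0.0] * 4
w2, b2 = [[0.0] * 4 for _ in range(3)], [0.0] * 3        # zero output layer
print(post_association(slot, w1, b1, w2, b2))            # equals `slot`
```

Because the MLP output is added to the input, a block whose output layer is near zero leaves the slots unchanged, which is one reason residual blocks train stably.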

Head network. To simplify the construction of the head network, we adopted the head network of [17], which has a straightforward architecture built only from convolutional layers. Unlike the SiamRPN head, it does not rely on predefined anchors and is easier to adjust.

In summary, our improved Siamese network tracking algorithm leverages Slot Attention to better capture nonlinear changes during the tracking process, simplifies the construction of the head network, and reduces the computational complexity by employing a weight-sharing strategy. The experimental results demonstrate the effectiveness of our approach in terms of both the performance and efficiency.

4. Experiment

4.1. Implementation Details

- Experimental setting. We implemented the tracking network using Python 3.8 and PyTorch 1.12.1 and conducted experiments on a single Nvidia GeForce RTX 3090 GPU.

- Dataset setting. Our training data consisted of LaSOT [22], GOT-10k [13], ILSVRC VID [23], and COCO [24]. For the video datasets, we sampled image pairs from two random frames of the same video sequence, at most 80 frames apart. To increase the diversity of the training samples, we also used the COCO object detection dataset, deriving one image pair from each annotated object. Moreover, we applied data augmentation, including scaling, luminance variations, and vertical and horizontal flips, to expand the training set.

- Training setting. We used an AlexNet model pre-trained in PyTorch as the backbone network and Adam [25] as the optimizer. The network was trained for 4000 epochs in total: the Slot Attention module and the prediction network were trained for all 4000 epochs, while the backbone was frozen for the first 200 epochs and trained thereafter. Each epoch sampled 30,000 image pairs. Both the template and search images were resized to 255 pixels before being fed into the network. We applied a weight decay of 5 × 10⁻⁴ and a momentum of 0.9, and used a cosine decay schedule to adjust the learning rate during training. The initial learning rate of the backbone was set to 1 × 10⁻⁵, while the learning rate of the Slot Attention module depended on its number of stacked modules, as shown in Table 1.
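The cosine decay schedule and the initial backbone freeze described above can be sketched as follows. The minimum learning rate of 0, and the assumption that the backbone simply follows the global cosine curve once it is unfrozen (rather than restarting the schedule), are ours; the per-module Slot Attention learning rates of Table 1 are not reproduced here.

```python
import math

def cosine_lr(epoch, total_epochs, base_lr, min_lr=0.0):
    """Cosine decay from base_lr at epoch 0 to min_lr at total_epochs."""
    progress = min(epoch, total_epochs) / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))

def backbone_lr(epoch, total_epochs=4000, frozen_epochs=200, base_lr=1e-5):
    """0 while the backbone is frozen, then the (assumed) global cosine curve."""
    if epoch < frozen_epochs:
        return 0.0
    return cosine_lr(epoch, total_epochs, base_lr)

for e in (0, 199, 200, 2000, 4000):
    print(e, backbone_lr(e))
```

PyTorch users would typically get the same curve from `torch.optim.lr_scheduler.CosineAnnealingLR` rather than computing it by hand.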

Table 1. Relationship between attention module number and learning rate.


4.2. Comparison on Multiple Benchmarks

VOT2019 Dataset. We tested SiamSlot on the VOT2019 dataset [26] with 1 to 5 associations and compared it with other state-of-the-art tracking algorithms. VOT uses a reset-based protocol: a tracker is re-initialized whenever its predicted bounding box no longer overlaps the ground truth. VOT assesses tracking performance with Expected Average Overlap (EAO), Accuracy, and Robustness. Accuracy is the average overlap between the predicted and ground-truth bounding boxes during successful tracking and indicates how accurately the tracker estimates the target's boundaries; a higher accuracy is better. Robustness is the number of failures averaged across video sequences, so a lower value indicates a tracker that is more resistant to unexpected events. EAO combines accuracy and robustness into a single metric. Table 2 presents the results, where SiamSlot_x denotes our tracker with x feature associations performed by the Slot Attention mechanism. Notably, even SiamSlot_1, which performs only a single association, achieves a higher EAO than many trackers, and increasing the number of associations yields further substantial gains. SiamSlot_3 performs best, improving accuracy by 2% and EAO by 4.1% compared with MDNet. In terms of Robustness, it surpasses all other approaches except MDNet.
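The Accuracy and Robustness bookkeeping described above can be sketched per sequence as follows: a frame with zero overlap counts as a failure, Accuracy averages the IoU over successfully tracked frames, and Robustness averages failure counts over sequences. This is a simplification; the official VOT toolkit also handles re-initialization delays and burn-in frames after a reset, and EAO is not computed here.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def accuracy_and_failures(pred_boxes, gt_boxes):
    """Mean IoU over successfully tracked frames, and the number of failures."""
    overlaps, failures = [], 0
    for p, g in zip(pred_boxes, gt_boxes):
        o = iou(p, g)
        if o == 0.0:
            failures += 1          # no overlap: the tracker would be reset here
        else:
            overlaps.append(o)
    acc = sum(overlaps) / len(overlaps) if overlaps else 0.0
    return acc, failures

def robustness(failures_per_sequence):
    """Average number of failures across sequences (lower is better)."""
    return sum(failures_per_sequence) / len(failures_per_sequence)

gt = [(0, 0, 10, 10)] * 3
pred = [(0, 0, 10, 10), (5, 0, 15, 10), (20, 20, 30, 30)]
print(accuracy_and_failures(pred, gt))  # accuracy (1 + 1/3) / 2, one failure
```
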

Table 2.

The evaluation of VOT2019. The top two results are highlighted in red and blue, respectively.

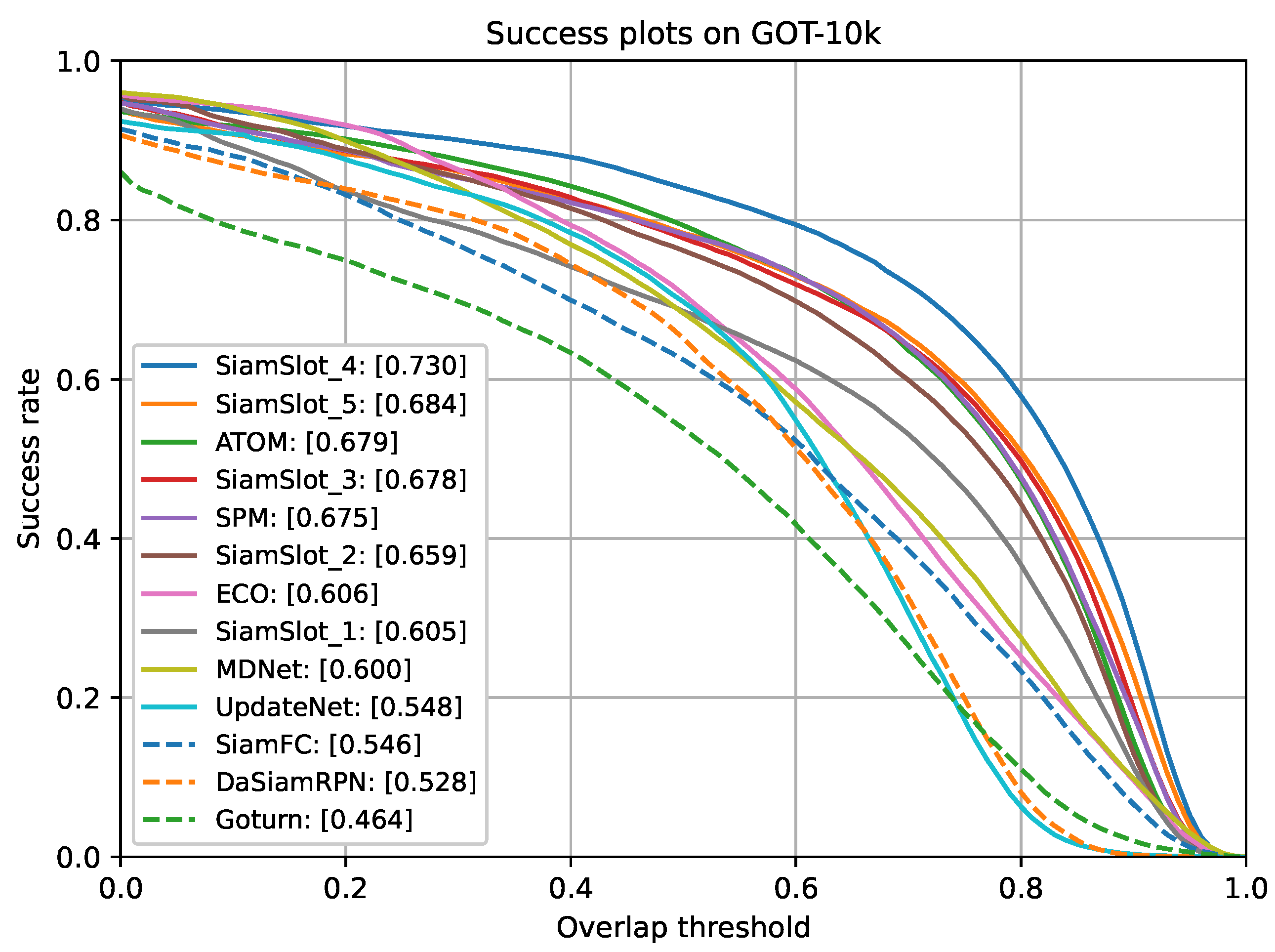

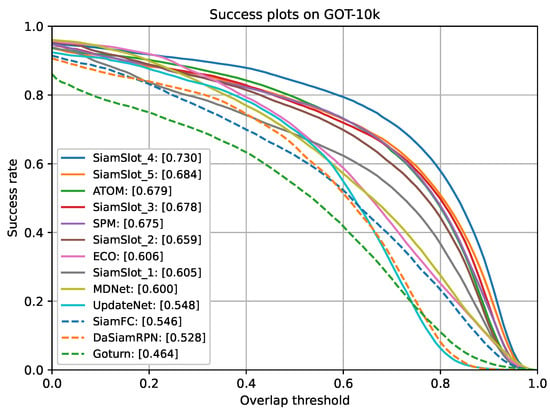

GOT-10k Dataset. The GOT-10k test set [13] consists of 180 video sequences covering 84 object categories, and its object classes do not overlap with those of the training set, which makes it a strong test of tracker generalization. For fairness, we trained our model only on the GOT-10k training set. Figure 3 compares the trackers in terms of Average Overlap (AO), including ATOM [33], SPM [34], ECO [35], MDNet, UpdateNet, SiamFC, DaSiamRPN, GOTURN, and our trackers. Our best variant, SiamSlot_4, outperformed the other baselines, for example exceeding ATOM by 5.1%, which we attribute to the better feature matching capability of Slot Attention. This result supports the ability of our tracker to generalize to diverse object instances.
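AO and success plots are both computed from the per-frame IoU between predicted and ground-truth boxes. The sketch below assumes those IoUs are already available and samples 21 evenly spaced overlap thresholds in [0, 1], a common convention; official toolkits differ in details such as how results are aggregated across sequences. As the thresholds become finer, the area under the success curve approaches the mean IoU, which is why AO and success-plot AUC are closely related.

```python
def success_curve(ious, thresholds):
    """Fraction of frames whose IoU exceeds each overlap threshold."""
    n = len(ious)
    return [sum(1 for o in ious if o > t) / n for t in thresholds]

def auc(ious, steps=21):
    """Area under the success curve, sampled at `steps` evenly spaced thresholds in [0, 1]."""
    thresholds = [i / (steps - 1) for i in range(steps)]
    curve = success_curve(ious, thresholds)
    return sum(curve) / len(curve)

def average_overlap(ious):
    """AO: mean IoU over frames (a single sequence in this sketch)."""
    return sum(ious) / len(ious)

ious = [0.0, 0.5, 1.0]   # hypothetical per-frame IoUs
print(success_curve(ious, [0.5]), auc(ious), average_overlap(ious))
```

A success rate at a single threshold, such as 0.5, is simply one point of the success curve.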

Figure 3.

The evaluation with state-of-the-art trackers on GOT-10k in terms of success plots.

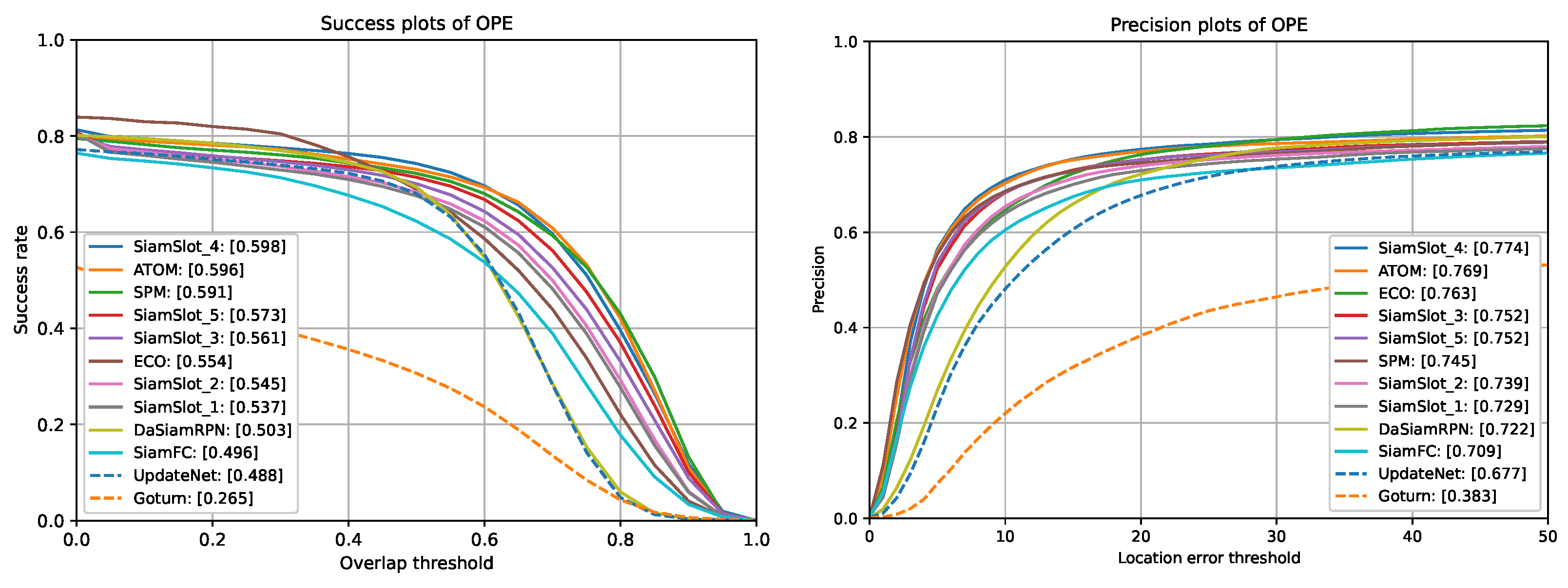

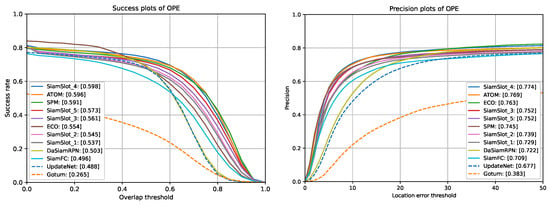

UAV123 Dataset. The UAV123 dataset [36] is challenging for trackers because of its diverse scenes and actions. For example, as the UAV moves, the size and aspect ratio of the object change substantially relative to the initial frame, and some videos were recorded with unstable cameras, resulting in low resolution and noise. Testing on UAV123 therefore provides a demanding assessment of a tracker's capacity to handle rapidly changing objects. As shown in Figure 4, SiamSlot_4 achieved the highest tracking success rate, 0.2% and 0.7% higher than ATOM and SPM, respectively. These results indicate that our trackers handle scale variation and noise interference well, consistent with the sensitivity of Slot Attention to appearance changes and its adaptability to nonlinear feature changes.
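The precision plots in Figure 4 are based on center location error rather than overlap. This sketch computes the Euclidean distance between predicted and ground-truth box centers and the fraction of frames within a pixel threshold; the 20-pixel default follows a common convention for reporting a single precision value and is an assumption here, as are the toy boxes.

```python
import math

def center(box):
    """Center (cx, cy) of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)

def center_errors(pred_boxes, gt_boxes):
    """Euclidean distance in pixels between predicted and ground-truth centers."""
    errs = []
    for p, g in zip(pred_boxes, gt_boxes):
        (px, py), (gx, gy) = center(p), center(g)
        errs.append(math.hypot(px - gx, py - gy))
    return errs

def precision_at(errors, threshold=20.0):
    """Fraction of frames whose center error is within `threshold` pixels."""
    return sum(1 for e in errors if e <= threshold) / len(errors)

errs = center_errors([(3, 4, 13, 14), (30, 0, 40, 10)], [(0, 0, 10, 10), (0, 0, 10, 10)])
print(errs, precision_at(errs))  # [5.0, 30.0] 0.5
```

Sweeping the threshold from 0 to about 50 pixels and plotting `precision_at` gives the full precision curve.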

Figure 4.

The comparison with state-of-the-art trackers on UAV123 in terms of success and precision plots.

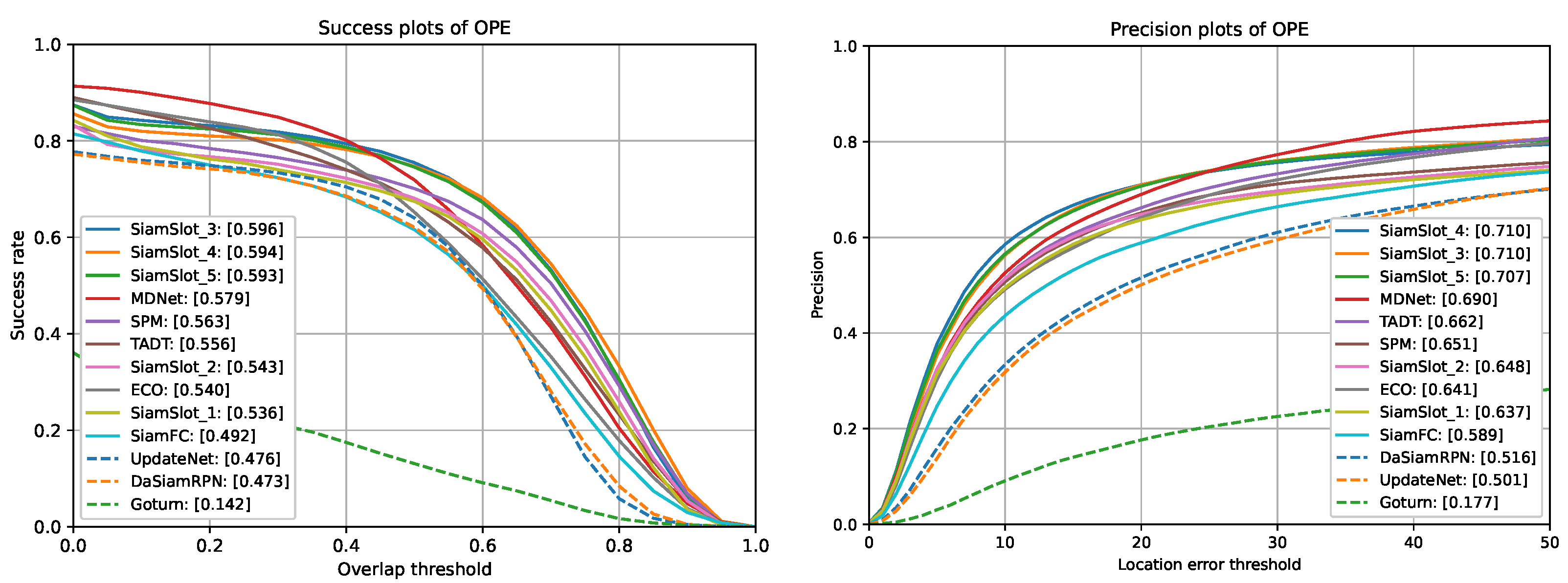

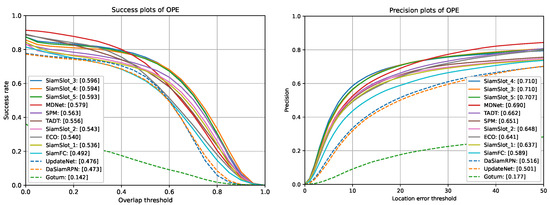

Nfs Dataset. The Nfs dataset [37] uses a high-frame-rate camera to capture its video sequences, which largely removes the blur caused by fast object motion. Other challenges remain, however, including background interference, object deformation, and low resolution. The evaluation results are shown in Figure 5, where SiamSlot_3, SiamSlot_4, and SiamSlot_5 are the top performers; SiamSlot_3 achieves a tracking success rate 1.7% higher than MDNet. Although the Nfs dataset is relatively less challenging, this result indicates that Slot Attention copes well with background interference and object deformation.

Figure 5.

The comparison with state-of-the-art trackers on Nfs in terms of success and precision plots.

4.3. Ablation Experiments

The feature association method significantly influences tracking performance, because the model must extract the relevant object features from the search scene through feature association; the choice of method therefore strongly affects feature quality. To verify the impact of Slot Attention on performance, we designed and implemented two tracking networks, one using Slot Attention and the other using a convolutional association method. The two networks share the same architecture and differ only in their association strategy.

We denote the Siamese tracker with convolutional association as SiamConv, and name the Slot Attention trackers following the convention introduced in Section 4.2. We evaluated both networks on the VOT2019 dataset; the results are shown in Table 3. Even a single feature association via Slot Attention improves tracking accuracy and robustness by 0.7% and 1.261, respectively, which indicates that Slot Attention has a stronger feature extraction capability than the convolution technique. The gain in accuracy is modest, but the gain in robustness is larger, suggesting that Slot Attention better captures target features in extreme situations and thereby keeps tracking uninterrupted. Moreover, as the number of associations increases, tracking accuracy, robustness, and EAO improve considerably. Since Slot Attention takes the slots as its final output, we infer that more associations lead to more interaction between the slots and the image features, allowing the slots to refine themselves continually according to the changing search scene. This adaptation enables the slots to better accommodate the shifting characteristics of the search environment, thereby improving model performance.

Table 3.

Comparison between convolutional association and Slot Attention association.

However, beyond three associations, robustness and EAO decline to varying degrees; for example, SiamSlot_4 and SiamSlot_5 perform worse than SiamSlot_3. We therefore conclude that increasing the number of associations performed by the Slot Attention module progressively improves model performance only up to a point: once the number of associations exceeds 3 or 4, further reuse of the single attention module yields negligible gains.

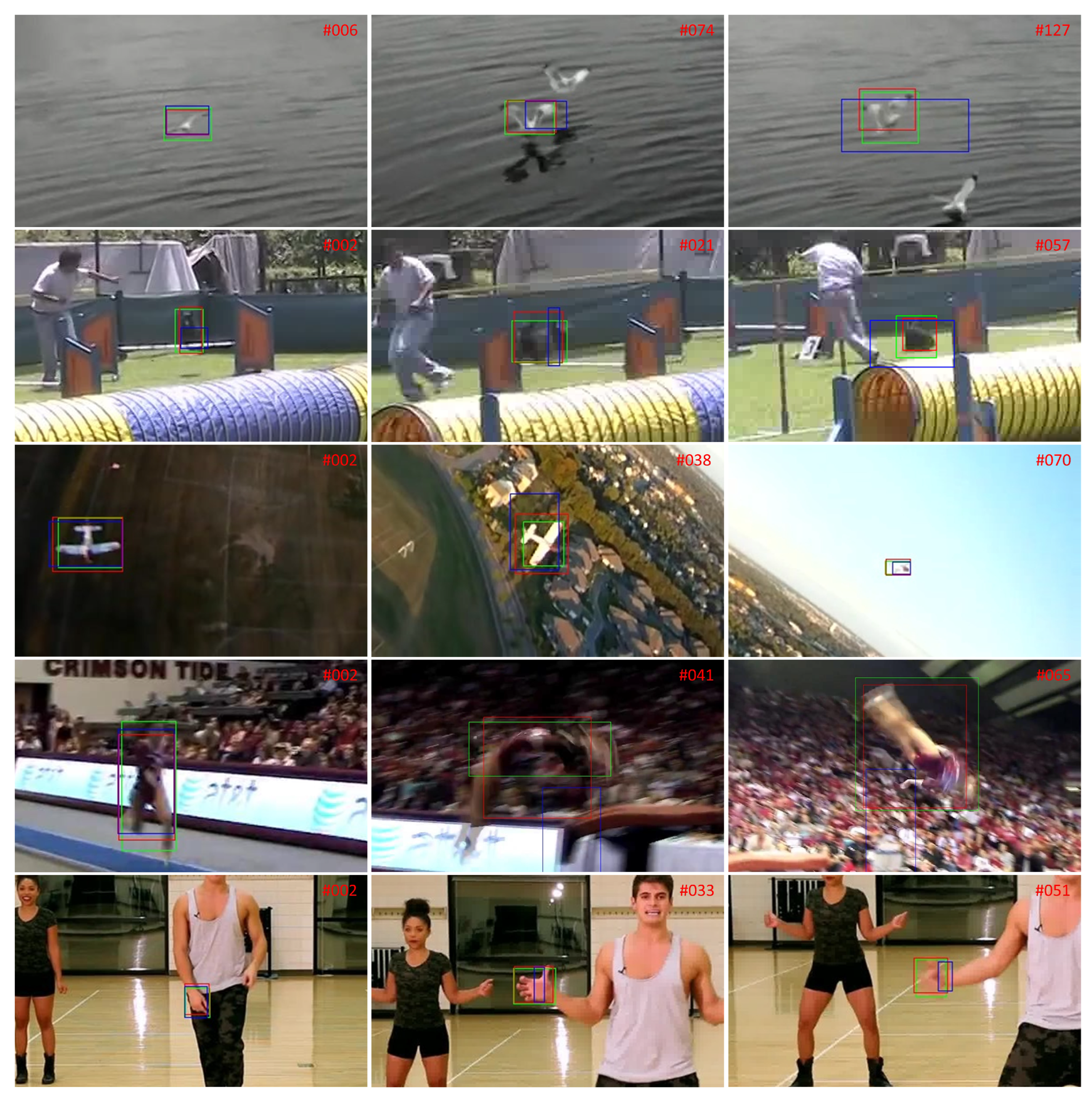

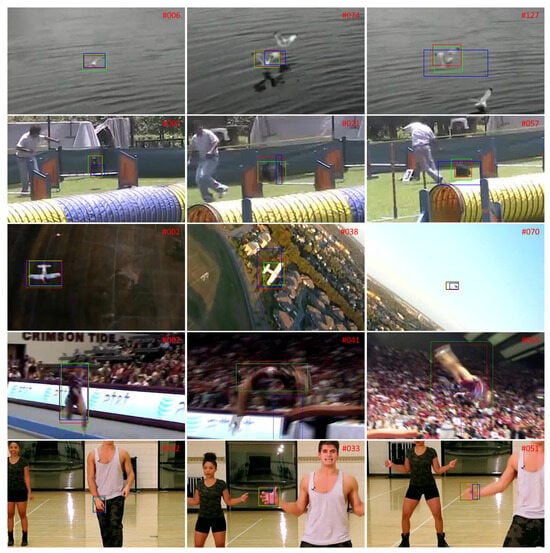

To assess our approach more comprehensively, we did not rely solely on the quantitative metrics of the test datasets; we also evaluated the trackers qualitatively in real-world scenarios involving object deformation and motion blur. Figure 6 visualizes these tracking scenarios: green denotes the ground truth, blue denotes the results obtained with convolutional association, and red denotes the results obtained with Slot Attention. The first and third rows of Figure 6 show cases of severe object deformation, while the second, fourth, and fifth rows show significant deformation combined with intense motion blur. With Slot Attention integrated, the tracker performs noticeably better under these challenging conditions.

Figure 6.

Comparison of tracking results obtained with convolutional and Slot Attention feature association under motion blur and drastic object deformation. Green is the ground truth, red is the bounding box predicted by SiamSlot, and blue is the bounding box predicted by SiamConv.
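The qualitative comparison in Figure 6 can also be quantified frame by frame from the same boxes. The sketch below computes the intersection over union (IoU) between a predicted box and the ground truth, then summarizes a sequence by the mean IoU over frames in which the tracker still overlaps the target and by the number of frames with zero overlap. These are only rough proxies for VOT accuracy and robustness: the official VOT protocol re-initializes the tracker after a failure, skips frames after re-initialization, and uses rotated ground-truth boxes, none of which is modeled here. The function names `iou` and `overlap_summary` and the axis-aligned (x, y, w, h) box format are assumptions for illustration.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # axis-aligned (x, y, w, h)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def overlap_summary(pred: Sequence[Box], gt: Sequence[Box]) -> Tuple[float, int]:
    """Return (mean IoU over overlapping frames, number of zero-overlap frames).

    A crude stand-in for VOT accuracy/robustness: no re-initialisation,
    burn-in frames, or rotated boxes are handled.
    """
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    hits = [o for o in overlaps if o > 0.0]
    accuracy = sum(hits) / len(hits) if hits else 0.0
    return accuracy, len(overlaps) - len(hits)


gt = [(0, 0, 10, 10)] * 3
pred = [(0, 0, 10, 10), (5, 0, 10, 10), (20, 20, 5, 5)]
print(overlap_summary(pred, gt))
```

For this toy sequence, the two overlapping frames have IoU 1 and 1/3, giving a mean of 2/3, and the third frame counts as one zero-overlap frame.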

5. Conclusions

In this paper, we studied how the feature association method affects tracker performance. Specifically, we compared convolutional association with Slot Attention association, and we examined how the number of Slot Attention associations affects the tracker. For these comparisons, we tested the tracker on several benchmark datasets, including VOT2019, GOT-10k, UAV123, and Nfs. The quantitative and qualitative analyses of the experimental results confirm that Slot Attention improves the accuracy and robustness of the tracker and that it can, to some extent, handle scenarios characterized by target blurring and extreme deformation. In addition, we found that incrementally increasing the number of Slot Attention associations, up to three or four, notably improves tracker performance. Because a single module is reused for every association, this does not impose an excessive memory burden and requires only minor additional computation.
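The memory and computation claim can be made concrete with a rough count. In our own notation (not taken from the experiments), assume one Slot Attention module has P_SA parameters, that K slots of dimension D attend to N search-region feature locations, and that keys and values are projected once while queries, attention, and the slot update are recomputed on every association, as in the original Slot Attention formulation. For n associations, a shared module compared with a hypothetical stack of n separate modules gives approximately:

```latex
\begin{aligned}
P_{\text{shared}}(n) &= P_{\mathrm{SA}}, \qquad P_{\text{stacked}}(n) = n\,P_{\mathrm{SA}},\\
C(n) &\approx \underbrace{O(N D^{2})}_{\text{key/value projection, once}}
      + n \cdot \underbrace{O(N K D + K D^{2})}_{\text{attention and slot update, per association}}
\end{aligned}
```

Reusing one module keeps the parameter count constant in n, while computation grows linearly in n with a per-association term that stays small when K is much smaller than N.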

Although Slot Attention has achieved commendable results, it still needs further optimization. A Transformer can generate an arbitrary number of output tokens autoregressively, whereas our experiments revealed a limitation of Slot Attention: the number of associations is effectively tied to the value used during training. For example, if the number of associations at inference differs from that used in training, the model output may degrade, which weakens the generalization ability of the model. In some cases, more associations are needed to establish stronger correlations between the template image and the search image. Developing strategies that allow Slot Attention to adapt flexibly to the number of feature associations required in different situations is therefore an important direction for future work.

Author Contributions

Conceptualization, J.W. and J.G.; software, J.W.; validation, J.G., X.Y. and D.W.; investigation, X.Y.; resources, X.T. and Z.L.; data curation, J.W. and D.W.; writing—original draft preparation, J.W.; writing—review and editing, J.W. and Z.L.; visualization, J.G.; supervision, X.T. and Z.L.; project administration, X.T. and Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).