Abstract

Traditional text representations for software effort estimation, such as term frequency–inverse document frequency (TF-IDF), are widely used due to their simplicity and interpretability. However, they struggle with limited datasets, fail to capture intricate semantics, and suffer from high dimensionality, sparsity, and computational inefficiency. This study used pre-trained word embeddings, including FastText and GPT-2, to improve estimation accuracy in such cases. Seven pre-trained models were evaluated for their ability to represent textual data, addressing the fundamental limitations of TF-IDF through contextualized embeddings. The results show that combining FastText embeddings with support vector machines (SVMs) consistently outperforms traditional approaches, reducing the mean absolute error (MAE) by 5–18% while achieving accuracy comparable to deep learning models like GPT-2. This approach demonstrated the adaptability of pre-trained embeddings for small datasets, balancing semantic richness with computational efficiency. The proposed method supports project planning and resource allocation through accurate story point prediction while safeguarding privacy and security through data anonymization. Future research will explore task-specific embeddings tailored to software engineering domains and investigate how dataset characteristics, such as cultural variations, influence model performance, ensuring the development of adaptable, robust, and secure machine learning models for diverse contexts.

1. Introduction

Software effort estimation is a cornerstone in the project management and software development lifecycle that allows for improved resource allocation, budgeting, and planning [1,2,3,4]. Historically, computed effort estimation methods were rooted in statistical and traditional machine learning paradigms [5,6]. However, effort estimations based on textual use cases and user stories are challenging due to their intrinsic informality and domain-specific information. Therefore, representing domain-specific terms and addressing the scarcity of domain-specific software engineering data could address limited datasets and domain gaps [7].

Advanced embedding representations have proved effective in most required engineering tasks [8]. Pre-trained models are applied in many areas, such as code review [9], cross-language code representation [10], requirement entity extraction [11], user feedback analysis [12], and quality of machine translation without a ground truth [13]. Furthermore, pre-trained models have sophisticated pre-training objectives and huge model parameters that can effectively capture knowledge from massive labeled and unlabeled datasets [14]. As a result, pre-trained embeddings capture rich semantic information, enabling a more realistic understanding of user stories. Given the plethora of available embeddings, such as global vectors for word representation (GloVe) [15], document to vector (Doc2Vec) [16], FastText [17], word to vector (Word2Vec) [18], and more recent models like bidirectional encoder representations from transformers (BERT) [19], Sentence-BERT (SBERT) [20], universal sentence encoder (USE) [21], and generative pre-trained transformer 2 (GPT-2) [22], the need for a comprehensive evaluation is imperative.

1.1. Problem

The TF-IDF approach, while simple and interpretable, fails to capture deep semantic relationships, particularly in small datasets. Its reliance on discrete representations leads to high-dimensional and sparse-feature spaces, resulting in increased computational costs and scalability issues [6]. Furthermore, TF-IDF struggles to distinguish between synonyms and antonyms, which limits its ability to represent textual data accurately [23].
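The synonym limitation can be illustrated with a minimal sketch. The two user stories below are hypothetical, and the smoothed IDF formula is only one common variant, not necessarily the one used in this study. Because the stories share no tokens, their TF-IDF vectors are orthogonal even though they describe nearly the same task.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return (vocabulary, dense TF-IDF vectors) for a list of tokenized docs."""
    vocab = sorted({tok for doc in docs for tok in doc})
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(tok for doc in docs for tok in set(doc))
    # Smoothed IDF (an assumed, commonly used variant).
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1 for t in vocab}
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append([tf[t] / len(doc) * idf[t] for t in vocab])
    return vocab, vectors

def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

# Hypothetical stories with near-identical meaning but disjoint vocabulary.
stories = [
    "fix login bug".split(),
    "repair authentication defect".split(),
]
vocab, vecs = tfidf_vectors(stories)
similarity = cosine(vecs[0], vecs[1])
# Fraction of zero entries across both vectors.
sparsity = sum(x == 0 for v in vecs for x in v) / (len(vecs) * len(vocab))
```

Each vector has one dimension per vocabulary term, so the feature space grows with the corpus while most entries remain zero.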

Pre-trained word embeddings, such as FastText and GPT-2, address these shortcomings by providing contextualized representations that capture semantic nuances. However, increasing the sample size to enhance accuracy and stability, a standard solution for such challenges, is often impractical for software engineering datasets, which are typically limited in size [24]. Additionally, the absence or inaccessibility of historical project estimation data hinders informed decision making in this domain [25]. Another critical issue is overinterpretation, i.e., models make confident predictions despite lacking salient features [26]. While pre-trained models provide better semantic representation, they may lack the domain specificity inherently available in TF-IDF, potentially affecting performance in specialized tasks.

Given these challenges, traditional machine learning models, such as support vector machines (SVMs) and random forests, are better suited for small datasets due to their computational efficiency, interpretability, and ability to perform well with contextualized embeddings. These models effectively leverage embeddings while avoiding the high computational costs and overfitting risks associated with deep learning. Well-specified machine learning models that effectively use embeddings may outperform deep learning approaches when working with limited data [27,28,29,30].

1.2. Prior Research

While active learning can potentially enhance effort estimation, it is rather time-consuming and depends on the selection of humans and datasets [31]. The Fine-SE software effort estimation model, which integrates 13 expert features with semantic features, showed superior performance [3]. However, the model depended on handcrafted features that may vary across projects and over time [3]. Contextualized pre-trained models like BERT achieved promising results, with a mean absolute error (MAE) of 4.25 [32]. Similarly, Molla et al. [4] demonstrated that a domain-specific retrained BERT improved predictions by 1.40% to 4.80% relative to the average pre-trained model. Our study extends the existing literature by combining several word-embedding methods with conventional machine learning methods to capture genuine efforts with less training within and across software projects. Our results exhibit generalizability and speed, which are valuable for effort estimation.

1.3. Approach

Pre-trained language models are trending in various domains and are considered similar to software reuse [7]. In software engineering, 35% of related studies employed text embedding to solve specific software engineering problems [7]. Consequently, contextualized pre-trained models are expected to present a transfer learning solution, enabling training on generic software effort estimation datasets. Therefore, we employed lightweight traditional regression models with features extracted from pre-trained large language models.

1.4. Objectives and Research Questions

Our main objective was to explore pre-trained embedding models applied to effort estimation on textual features. We aimed to address the following research questions.

- Which pre-trained embedding model performs best on average when evaluated across diverse software repositories and traditional machine learning methods, particularly for small datasets?

- Which pre-trained embedding model provides the best trade-off between computational efficiency and semantic richness for effort estimation tasks in small datasets?

- How do pre-trained embedding models compare to TF-IDF in individual and cross-project scenarios for software effort estimation, especially in data-constrained environments?

- How do the performance and efficiency of traditional machine learning methods with pre-trained embeddings compare to deep learning models and those without embeddings, particularly for small datasets?

This study is structured as follows. Section 2 provides the background on pre-trained models and discusses related work in story point prediction. Section 3 illustrates the methodology. Section 4 and Section 5 present and discuss the results, respectively. Section 6 addresses challenges and limitations. Finally, Section 7 concludes this study and outlines potential future work.

2. Background and Related Work

This section presents an overview of some pre-trained language models and related work in software effort estimation.

2.1. Background of Pre-Trained Models

The literature reports several embedding methods, e.g., GloVe [15], Doc2Vec [16], FastText [17], and Word2Vec [18], and more recent models like BERT [19], Sentence-BERT (SBERT) [20], universal sentence encoder [21], and GPT-2 [22]. GloVe embeddings capture word co-occurrence statistics based on global corpus statistics. In contrast, Doc2Vec distributes representations of sentences and documents embedded in longer text segments. FastText extends word embeddings by incorporating subword information and mapping words into continuous vector spaces to handle out-of-vocabulary words. BERT and SBERT focus on embedding entire text snippets to generate fixed-size vectors for varying-length text inputs, similar to the USE approach. GPT-2 is a state-of-the-art language model based on contextual generative text information.
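The subword mechanism behind FastText can be sketched as follows. The n-gram range of 3 to 6 characters and the `<`/`>` boundary markers follow the defaults described for FastText; the example words and the Jaccard overlap measure are illustrative assumptions only. An unseen word still shares most of its character n-grams with a related known word, which is what allows a vector to be composed for it.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Return the set of character n-grams of a word wrapped in boundary markers."""
    wrapped = f"<{word}>"
    grams = set()
    for n in range(n_min, n_max + 1):
        for i in range(len(wrapped) - n + 1):
            grams.add(wrapped[i:i + n])
    return grams

def jaccard(a, b):
    """Share of n-grams common to both sets."""
    return len(a & b) / len(a | b)

seen = char_ngrams("authentication")
unseen = char_ngrams("reauthentication")  # hypothetical out-of-vocabulary word
unrelated = char_ngrams("database")

overlap_related = jaccard(seen, unseen)
overlap_unrelated = jaccard(seen, unrelated)
```

In an actual FastText model, each n-gram has its own learned vector and a word vector is built from the vectors of its n-grams; the sketch only shows why related surface forms end up close together.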

Table 1 presents an analysis of embedding methods that can potentially be applied for software effort estimation. For instance, TF-IDF provides a fixed representation for each word or sentence. In contrast, Doc2Vec creates fixed-length vectors for documents, while GloVe captures the global context. FastText captures subword information for improved understanding, while USE generates contextualized representations for entire sentences. Furthermore, SBERT and GPT-2 provide context-aware embeddings for better semantic representation. Therefore, these methods may improve software effort estimation with varying lengths and training data.

Table 1.

Word-embedding methods.

2.2. Related Work

The literature shows that agile story point estimation with graph neural networks (GNNs) [33] attains 80% accuracy in classifying story points when using GNNs with GloVe embeddings compared to TF-IDF methods. Other studies tried to enhance long short-term memory (LSTM) predictions using optimization [34] or transfer learning techniques [35]. Deep-SE [36] is a model for agile project story point estimation based on LSTM and recurrent highway network architectures on a dataset of 23,313 issues. Tawosi et al. [37] analyzed a pre-trained model for its software effort estimation efficacy based on Deep-SE. Contrary to expectations, pre-training did not consistently enhance Deep-SE’s story point estimation accuracy. However, the Wilcoxon test revealed statistically significant differences in favor of pre-training for two projects. Their study concluded that pre-training the lower Deep-SE layer with user stories from other projects had a negligible effect on improving its story point estimation accuracy or convergence speed. However, the pre-training was in the lower LSTM embedding layers without relying on labeled data.

Favero et al. [32] utilized the contextualized pre-trained embedding model BERT to enhance the precision of effort estimates derived solely from textual requirements and achieved promising results, with a mean absolute error (MAE) of 4.25%. They showed that pre-trained word-embedding models provide more generalizability, facilitating estimate generation between diverse projects and companies. Similarly, another study showed that a retrained BERT could improve predictions by 1.40% to 4.80% relative to the average pre-trained model [4].

One study compared the reliability of effort estimation using random forest and BERT feedforward linear neural networks [38]. The results showed that BERT had slightly better performance, with no significant difference between the methods. Utilizing six domain-specific models and two baselines, further research examined the effectiveness of additional pre-training on BERT models for story point estimation [39]. They reported that pre-training with domain- and repository-specific datasets shows limited effectiveness compared to off-the-shelf models and Deep-SE. The generative story point prediction model (GPT2SP) demonstrates substantial improvements over baseline approaches and notably enhances Deep-SE performance by 6–47%, where the GPT-2 and one fully connected layer used an off-the-shelf model [40].

Some research suggests that models trained on unlabeled data can outperform pre-trained embeddings in specific scenarios. For instance, a study focused on Agile Scrum projects achieved a significant 1.48% accuracy boost in effort estimation using pre-trained FastText embeddings compared to traditional methods [41]. However, optimal configurations likely depend on factors like model architecture and specific datasets. This underscores the need for further exploration to unlock the potential of pre-trained embeddings in this domain.

Recent advancements in software effort estimation show the effectiveness of integrating machine learning techniques to enhance predictive accuracy and address the limitations of traditional models. According to Mustafa and Osman [42], a random forest model with oversampling and feature selection provided accurate estimates superior to random guessing, particularly relevant for the SEERA dataset (software engineering in Sudan). Iordan [34] demonstrated that an optimized LSTM neural network enhanced with particle swarm optimization outperformed traditional machine learning methods such as k-nearest neighbors, decision trees, and random forests in predicting software development efforts across diverse datasets and achieved superior accuracy based on the mean absolute error (MAE) and root mean square error (RMSE). Sánchez-García et al. [43] emphasized the application of statistical models and machine learning techniques for software effort estimation at the design stage, effectively addressing challenges typically encountered in late-stage estimation. Their findings showed that while regression models achieved comparable accuracy for effort estimation, random forest models produced the most statistically accurate results for predicting development time, validated through leave-one-out cross-validation across 37 software projects.

Consequently, the identified research gaps underscore the need for further studies to develop effort estimation models that are optimized for small datasets and leverage conventional machine learning techniques. In addition, studies using pre-trained models must provide enough details about these models and their intended use [27,28,29]. Well-specified traditional machine learning models could outperform deep learning models [30].

3. Methodology

Our research aimed to build an accurate and efficient story point prediction system using pre-trained word embeddings and machine learning models. Figure 1 depicts the role of vectorization methods in converting textual data into feature-rich embeddings, which serve as inputs for regression models. The vectorization step involved loading text vectorization models into memory for quick access, leveraging pre-trained embedding models such as FastText and GPT-2. The embeddings were averaged per story and padded to desired lengths, balancing semantic richness with computational efficiency.

Figure 1.

Proposed methodology.

Multiple embedding lengths (e.g., 50, 100, and 300 dimensions) were tested to evaluate the trade-off between computational cost and model performance. The embeddings and the regression models were optimized using two parameter selection methods, grid search and random search, each with 10-fold cross-validation. Grid search was employed for algorithms with fewer than 100 parameter combinations, while random search was utilized for larger configurations, balancing computation time against thorough parameter exploration.

These embeddings were then used as inputs for various regression models, including lasso regression (LR), support vector machine (SVM), and random forest (RF), as shown in Table 3. The methodological steps are outlined in detail below.

Table 2.

Distribution of the dataset introduced in [36] and the processed dataset. C1 represents the count of instances in the original dataset, and C2 represents the count of the instances in the processed dataset.

3.1. Step 1: Data Preprocessing

Our initial step involved dataset collection for software effort estimation. We adopted the Deep-SE [36] dataset, derived from software issue reports, which includes the titles and descriptions of issues and their story points. We combined the issue title and description into a single text document for each entry, with the description following the title. The dataset details are shown in Table 2. This dataset underwent a data preprocessing phase to ensure its quality, consistency, and readiness for subsequent analysis. Through preprocessing, we removed instances with empty or short descriptions (<30 characters), which did not provide meaningful information, and eliminated non-informative URLs and HTML content. Preprocessing ensured the dataset was well structured and free of irrelevant noise, enabling the models to focus on meaningful features for prediction. The cleaned textual stories were then subject to further processing.
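The preprocessing rules described above can be sketched as follows. This is an illustrative reading, not the study's actual script: the regular expressions, the dictionary field names, and the choice to apply the 30-character threshold after cleaning are all assumptions.

```python
import re

TAG_RE = re.compile(r"<[^>]+>")        # assumed pattern for HTML tags
URL_RE = re.compile(r"https?://\S+")   # assumed pattern for URLs
MIN_DESCRIPTION_CHARS = 30             # threshold stated in the text

def clean_text(text):
    """Strip HTML tags and URLs, then collapse runs of whitespace."""
    text = TAG_RE.sub(" ", text)
    text = URL_RE.sub(" ", text)
    return re.sub(r"\s+", " ", text).strip()

def preprocess(issues):
    """Return (text, story_points) pairs for issues with usable descriptions."""
    kept = []
    for issue in issues:
        description = clean_text(issue.get("description") or "")
        if len(description) < MIN_DESCRIPTION_CHARS:
            continue  # empty or too short to be informative
        title = clean_text(issue.get("title") or "")
        # The description follows the title in the combined document.
        kept.append((f"{title} {description}".strip(), issue["story_points"]))
    return kept

# Hypothetical issues for illustration only.
issues = [
    {"title": "Login fails",
     "description": "<p>Users cannot log in after the upgrade. "
                    "See https://example.org/bug/1 for details.</p>",
     "story_points": 3},
    {"title": "Typo", "description": "Fix typo", "story_points": 1},
    {"title": "Crash", "description": None, "story_points": 5},
]
cleaned = preprocess(issues)
```

Only the first hypothetical issue survives: the second description is shorter than 30 characters and the third is empty.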

3.2. Step 2: Text Vectorization

Text vectorization transforms textual data into numerical representations using diverse word-embedding models. TF-IDF is considered a shallow model, and word-embedding models are more robust (see Table 1). We opted to load the text vectorization models into memory; their local availability allowed quick calls and avoided reloading. The default implementations of these text vectorization models were used, and further processing included averaging the embeddings per story and padding the sequences to the desired length.

These embeddings vary in length, with shorter vectors providing computational efficiency and longer vectors capturing more semantic richness. We experimented with multiple embedding lengths, ranging from 50 to 700 dimensions (e.g., 50, 100, and 300), to evaluate the trade-off between computational cost and model performance. The embeddings encoded textual features such as word context, subword semantics, and syntactic structures, enhancing the representation of issue descriptions for improved story point prediction. Reporting the performance of pre-trained models at varying vector lengths is also essential for assessing computational efficiency and model simplicity. However, overly aggressive truncation must be avoided to prevent the loss of crucial information from longer sequences. The proposed approach is particularly applicable to small datasets, where traditional methods often fail to generalize effectively, offering improved accuracy without deep learning models. Consequently, Algorithms 1 and 2 control the dimensionality of the vector representation by manipulating the sequence length, i.e., by either truncating or padding.
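The averaging and length-control steps can be illustrated with a minimal sketch. The function names are hypothetical, and zero padding on the right is an assumption, since the padding value and side are not stated in the text.

```python
def average_embedding(token_vectors, dim):
    """Mean of the token vectors of one story; zeros if the story has no tokens."""
    if not token_vectors:
        return [0.0] * dim
    return [sum(vec[i] for vec in token_vectors) / len(token_vectors)
            for i in range(dim)]

def fit_length(vector, target_len, pad_value=0.0):
    """Truncate or right-pad a vector so that it has exactly target_len entries."""
    if len(vector) >= target_len:
        return vector[:target_len]
    return vector + [pad_value] * (target_len - len(vector))

# Two hypothetical 4-dimensional token vectors belonging to one story.
token_vectors = [[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0]]
story_vector = average_embedding(token_vectors, dim=4)  # [2.0, 3.0, 4.0, 5.0]
truncated = fit_length(story_vector, 2)                 # [2.0, 3.0]
padded = fit_length(story_vector, 6)                    # [2.0, 3.0, 4.0, 5.0, 0.0, 0.0]
```

Truncation discards the trailing dimensions, which is why very short target lengths risk losing information, whereas padding adds no information and only aligns the input size across models.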

Algorithm 1: Preprocessing and Vectorization

Algorithm 2: Training and testing pre-trained models (Step 3: Model training)

3.3. Step 3: Model Training

We used six machine learning models for story point prediction: lasso regression, support vector regression (SVR), random forest regression, gradient boosting regression, k-nearest neighbor (KNN), and XGBoost. We applied grid search for models with fewer than 100 parameter combinations (e.g., lasso, SVR, random forest, and KNN) for hyperparameter tuning to ensure exhaustive parameter space exploration. We employed a randomized search for models with larger parameter spaces, such as gradient boosting and XGBoost, which allow for efficient optimization while maintaining high-quality results. The machine learning methods are detailed in Table 3.
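The rule for choosing between grid and randomized search can be sketched as below. The threshold of 100 combinations comes from the text; the parameter grids, the number of random draws, and the function names are hypothetical and do not reproduce the study's actual settings.

```python
import itertools
import random

GRID_LIMIT = 100  # threshold stated in the text

def count_combinations(param_grid):
    """Number of distinct parameter settings in a grid."""
    total = 1
    for values in param_grid.values():
        total *= len(values)
    return total

def candidate_settings(param_grid, n_random=20, seed=0):
    """Return (strategy, list of parameter dicts to evaluate)."""
    names = list(param_grid)
    if count_combinations(param_grid) < GRID_LIMIT:
        # Small space: enumerate every combination exhaustively.
        combos = itertools.product(*(param_grid[n] for n in names))
        return "grid", [dict(zip(names, combo)) for combo in combos]
    # Large space: draw a fixed number of settings (duplicates are possible).
    rng = random.Random(seed)
    sampled = [{n: rng.choice(param_grid[n]) for n in names}
               for _ in range(n_random)]
    return "random", sampled

# Hypothetical grids for illustration only.
svr_grid = {"C": [0.1, 1, 10], "epsilon": [0.01, 0.1], "kernel": ["rbf", "linear"]}
xgb_grid = {"n_estimators": [100, 200, 300, 500], "max_depth": [3, 5, 7, 9],
            "learning_rate": [0.01, 0.05, 0.1], "subsample": [0.6, 0.8, 1.0]}

svr_strategy, svr_candidates = candidate_settings(svr_grid)
xgb_strategy, xgb_candidates = candidate_settings(xgb_grid)
```

With these hypothetical grids, the SVR space has 12 combinations and is searched exhaustively, while the XGBoost space has 144 and is sampled. Each candidate setting would then be scored with cross-validation.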

Table 3.

Machine learning models used in effort estimation.

3.4. Step 4: Performance Metrics Evaluation

The final step involved a comprehensive model performance evaluation of several methods in Table 3. We employed an array of performance metrics, as detailed in Table 4. These metrics provide a holistic view of the models’ effectiveness in predicting story points and are commonly used in story point prediction models.

Table 4.

Performance metrics.
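For reference, the four metrics reported in the results can be computed as in the sketch below. It uses the definitions that are standard in the effort estimation literature and assumes strictly positive true story points; the study's exact formulas are those given in Table 4.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error; squaring weights large errors more heavily."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mmre(y_true, y_pred):
    """Mean magnitude of relative error (assumes every true value is > 0)."""
    return sum(abs(t - p) / t for t, p in zip(y_true, y_pred)) / len(y_true)

def pred(y_true, y_pred, level=25):
    """Percentage of predictions whose relative error is at most level percent."""
    hits = sum(abs(t - p) / t <= level / 100 for t, p in zip(y_true, y_pred))
    return 100.0 * hits / len(y_true)

# Hypothetical story points and predictions.
y_true = [1, 2, 4, 8]
y_pred = [1, 3, 4, 6]

scores = {
    "MAE": mae(y_true, y_pred),         # 0.75
    "RMSE": rmse(y_true, y_pred),       # about 1.118
    "MMRE": mmre(y_true, y_pred),       # 0.1875
    "Pred(25)": pred(y_true, y_pred),   # 75.0
}
```

Lower values are better for MAE, RMSE, and MMRE, whereas higher values are better for Pred (25).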

4. Results

Following the proposed approach in the previous section, the results were analyzed.

4.1. Step 1: Data Preprocessing

We selected the Deep-SE dataset [36]. This is one of the most cited datasets, with 23,313 user stories of effort estimation, and was collected from 16 open-source projects for agile project effort estimation. After applying our preprocessing steps, 21,070 instances were subjected to subsequent steps, as shown in Table 2.

4.2. Step 2: Text Vectorization

Text vectorization was configured using the scikit-learn library. The choice of models reflected a diversity of approaches to text representation, balancing simplicity, semantic richness, and computational efficiency. Our TF-IDF approach controlled vector space dimensionality through parameters such as “max_features”, “ngram_range”, “stop_words”, and “strip_accents”. For Doc2Vec, the TaggedDocument class from Gensim was used to generate vector representations with parameters such as “vector_size”, “window”, and “min_count”. GloVe vectors (300 dimensions, downloaded from the GloVe website) were loaded as “torch” vectors through the GloVe library from torch. FastText used the initial model “cc.en.300.bin”, extended with Hugging Face’s implementation. USE relied on Encoder v4 (512 dimensions) from TensorFlow Hub to generate sentence embeddings. For SBERT, we utilized the paraphrase-MiniLM-L6-v2a model (“word_embedding_dimension”: 384) through SentenceTransformer, with a transformer model (BertModel) and a pooling layer. The GPT-2 model used GPT2Model and GPT2Tokenizer from transformers (768 dimensions). Together, these embedding techniques provided diverse inputs for our machine learning models.

4.3. Step 3: Model Training

The regression models used in Algorithms 1 and 2 are presented in Table 3, and their parameters are described in Table 5. We employed 10-fold cross-validation with a fixed random seed for reproducibility.
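The role of the fixed seed can be illustrated with a minimal sketch of shuffled k-fold splitting. The seed value, the sample count, and the function name are hypothetical; a library implementation would normally be used in practice.

```python
import random

def k_fold_indices(n_samples, k=10, seed=42):
    """Yield (train_idx, test_idx) pairs for shuffled k-fold cross-validation."""
    indices = list(range(n_samples))
    # A fixed seed makes the shuffle, and hence every fold, reproducible.
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size

folds = list(k_fold_indices(n_samples=25, k=10, seed=42))
repeat = list(k_fold_indices(n_samples=25, k=10, seed=42))
```

Every sample appears in exactly one test fold, and rerunning with the same seed reproduces the same partition, so differences between embeddings cannot be attributed to different data splits.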

Table 5.

Key parameters used for machine learning models used in effort estimation.

4.4. Step 4: Performance Metric Evaluation

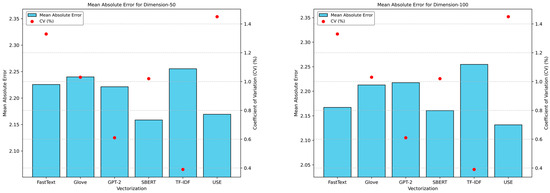

Figure 2 presents the average MAE for each pre-trained model across dimensionality lengths ranging from 50 to 700. The average MAE generally decreased or remained stable across most pre-trained models. On average, the TF-IDF and Doc2Vec models performed the worst, with MAEs of 2.26% and 2.20%, respectively. In contrast, models such as USE and SBERT exhibited slight variations in the average MAE, with scores of 2.14%. We examined the MAE’s standard deviation and Coefficient of Variation (CV) to assess model stability and reliability comprehensively. The Doc2Vec and TF-IDF models exhibited negligible standard deviations (0.000 and 0.009, respectively), indicating minimal variability and consistent performance, with CV values of 0.00% and 0.39%, respectively. Conversely, the USE had a higher standard deviation of 0.031, suggesting more significant variability in MAE values and a CV of 1.45%. This variability indicates that the USE’s performance may be sensitive to changes in vector length, necessitating precise vector length selection for optimal performance. GPT-2 had a lower standard deviation of 0.014 and a CV of 0.61%. On the other hand, FastText and SBERT exhibited relatively similar standard deviations (0.029 and 0.022, respectively), with CVs of 1.3% and 1.02%, implying potentially similar behavior across vector lengths. Additionally, we examined the standard deviation within the same vector length across pre-trained models (i.e., all models). For instance, at vector length 50, the average standard deviation was relatively low at 0.036 (CV = 1.62%). In contrast, the average standard deviation increased to 0.043 (CV = 1.89%) and 0.053 (CV = 2.44%) for vector lengths of 100 and 700, respectively. Therefore, vector length optimization for each word-embedding technique ensures stable and reliable model performance across different natural language processing tasks. 
Consequently, the general trend is that the MAE values tend to decrease or remain relatively stable as the vector length increases for most word-embedding methods. However, beyond a vector length of 256, improvements were marginal or negligible, and the methods exhibited relatively consistent performance. It is essential to note that this observation might be specific to the dataset and task.
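The stability measure used above is the Coefficient of Variation, i.e., the standard deviation expressed as a percentage of the mean. The sketch below assumes the population standard deviation, since the text does not state whether the population or sample form was used. The check reproduces the CV reported for USE from its reported standard deviation (0.031) and average MAE (2.14).

```python
import statistics

def coefficient_of_variation(values):
    """CV in percent: population standard deviation divided by the mean."""
    return 100.0 * statistics.pstdev(values) / statistics.mean(values)

def cv_from_summary(std, mean):
    """CV in percent from already-computed summary statistics."""
    return 100.0 * std / mean

# Reported summary values for USE: standard deviation 0.031, average MAE 2.14.
use_cv = cv_from_summary(0.031, 2.14)

# A metric that is identical at every vector length has zero variability.
constant_cv = coefficient_of_variation([2.2, 2.2, 2.2, 2.2])
```

Because the CV is normalized by the mean, it allows variability to be compared across metrics with different scales, such as MAE and Pred (25).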

Figure 2.

Average MAE for pre-trained models across vector lengths (50–700). Lower values indicate better model performance, with FastText and SBERT achieving the lowest MAE.

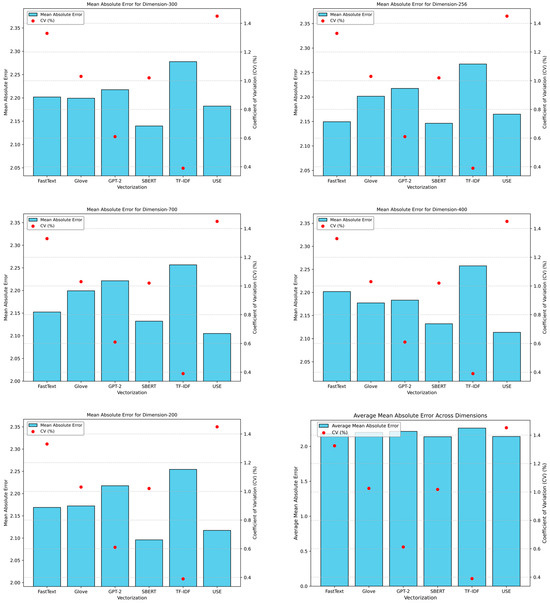

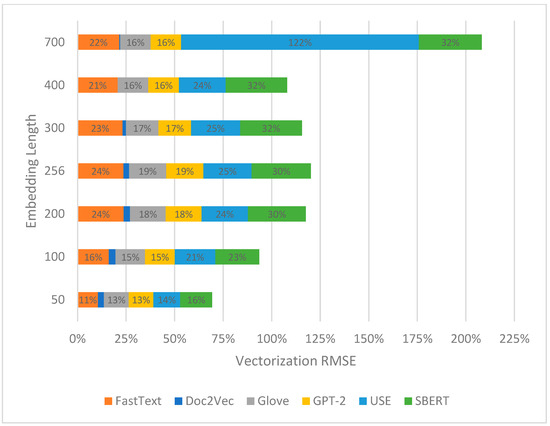

Figure 3 shows the average RMSE for each pre-trained model across vector lengths. For instance, Doc2Vec consistently maintained an RMSE mean of 3.00 (CV = 0.0%) across all vector lengths, indicating stable performance regardless of vectorization size. In contrast, models like FastText and GloVe exhibited slight variations in RMSE across vector lengths, with averages of 2.918 (CV = 0.50%) and 2.927 (CV = 0.60%), respectively. SBERT demonstrated a relatively high standard deviation of 0.020 (CV = 0.69%), suggesting greater variability in RMSE values across vector lengths. Conversely, GPT-2 displayed a lower standard deviation of 0.004 (CV = 0.1%), indicating more consistent RMSE values. Across models, the CVs ranged from 1.14% at a length of 50 to 1.98% at a length of 700, indicating greater discrepancy at longer vectors, as the RMSE penalizes large errors more heavily.

Figure 3.

Average RMSE for pre-trained models across vector lengths. Lower values indicate better model performance, with FastText and SBERT achieving the lowest RMSE.

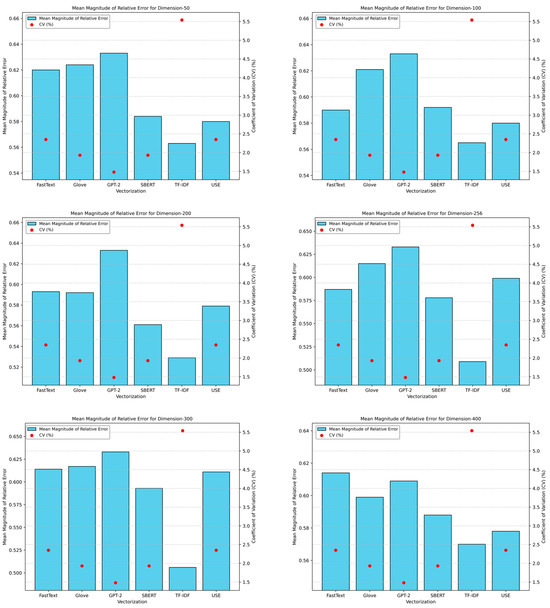

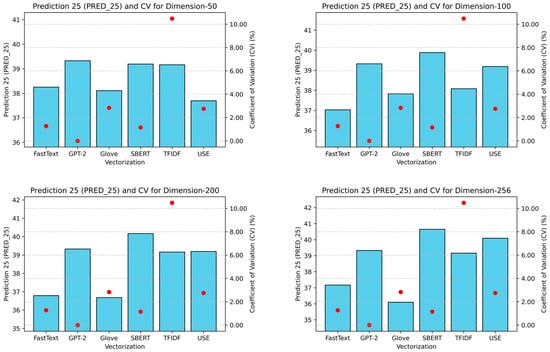

Figure 4 shows the average MMRE for each pre-trained model across vector lengths. For instance, Doc2Vec maintained a consistent MMRE of 0.55 (CV = 0.00%) across all vector lengths, suggesting stable performance regardless of vectorization size. Conversely, TF-IDF showed fluctuations in MMRE across vector lengths, with an average of 0.55 (CV = 5.5%). Moreover, SBERT exhibited a relatively low standard deviation of 0.011 (CV = 1.9%), indicating consistent MMRE values across vector lengths. In contrast, USE displayed a higher standard deviation of 0.014 (CV = 2.3%), implying greater variability in MMRE values than SBERT.

Figure 4.

Average MMRE for pre-trained models across vector lengths (50–700). Lower values indicate better model performance, with FastText and SBERT achieving the lowest MMRE.

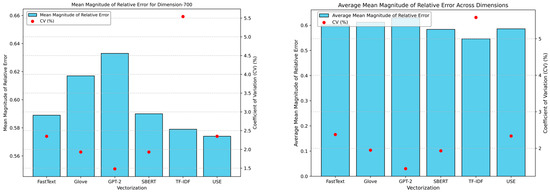

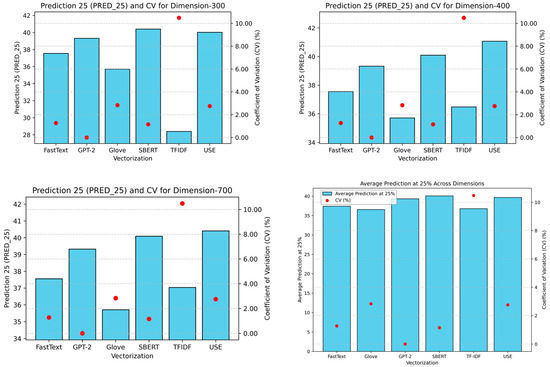

The average Pred (25) for all the analyzed machine learning models is shown in Figure 5. Pred (25) measures the percentage of predictions that fall within 25% of the true values. Doc2Vec consistently achieved a Pred (25) value of 39.16 (CV = 0.00%) across all vector lengths, indicating stable performance in predicting values within the specified threshold. Conversely, models like FastText and GloVe demonstrated variations in Pred (25) values across vector lengths, with average values of 37.42 (CV = 1.27%) and 36.55 (CV = 2.8%), respectively. TF-IDF exhibited a relatively high standard deviation of 3.854 (CV = 10.5%), suggesting substantial variability in Pred (25) values across vector lengths. In contrast, GPT-2 displayed a standard deviation of 0.000 (CV = 0.00%), indicating more consistent Pred (25) values. For Pred (25), the CVs were 1.7% at a length of 50 and 4.5% at a length of 700.

Figure 5.

Average PRED (25) scores for pre-trained models. SBERT and USE achieved the highest percentage of predictions.

This study set the TF-IDF vector model as the baseline for comparison with the other models due to its simplicity and domain specificity. Comparing TF-IDF with FastText revealed notable performance differences. One reason is that FastText handles out-of-vocabulary words and captures intricate linguistic patterns through subword information. In contrast, models like GPT-2 and SBERT might excel in specific scenarios but struggle with domain-specific or limited context datasets. Overall, the results indicated that FastText exhibits superior performance, with an average MAE of 2.18, RMSE of 2.92, MMRE of 0.60, and Pred (25) score of 37.42. Moreover, MAE standard deviations were low (per model: 0.01 over 300 dimensions). Therefore, the analysis indicated that FastText could be used in real-world applications as a reliable tool for software effort estimation.

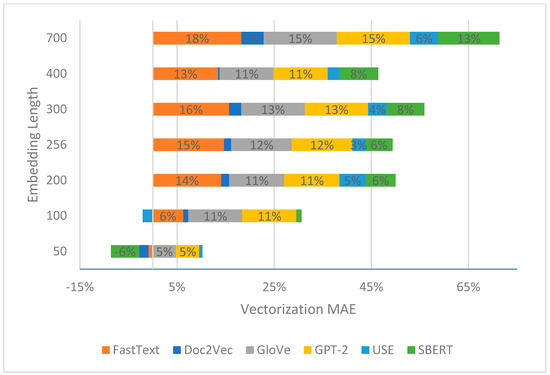

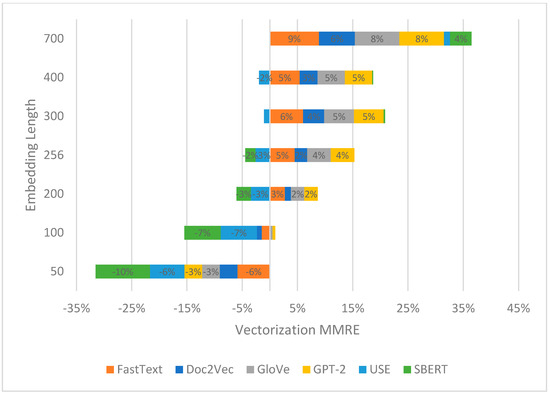

The performance of the machine learning algorithms (Boost, KNN, lasso, RF, SVM, and XGBoost) trained using pre-trained embeddings of different lengths was evaluated as the percentage difference between the error obtained with TF-IDF and that obtained with each other vectorization model. Figure 6 illustrates the MAEs of pre-trained models compared to TF-IDF. Notably, when the length of FastText embeddings was set to 700, performance was enhanced by 18%, the highest among all lengths for FastText. In contrast, GloVe and GPT-2 exhibited improvements between 11% and 15%, and minimal improvement was observed for Doc2Vec. Additionally, a 13% enhancement was observed for SBERT at a length of 700, while a 6–8% enhancement was observed for lengths ranging from 200 to 400. Similarly, Figure 7 presents the MMRE of pre-trained models compared to TF-IDF. FastText exhibited the largest improvement at a length of 700, with an enhancement of 9%. GloVe and GPT-2 showed improvements ranging from 5% to 8%, while SBERT demonstrated a maximum 4% increase at a length of 700. Figure 8 displays the RMSEs of pre-trained models compared to TF-IDF. Once again, FastText demonstrated an enhancement of 22% at a length of 700, followed by improvements ranging from 16% to 19% for GloVe and GPT-2. SBERT exhibited an enhancement of 32% at a length of 700 and an improvement of 0% to 32% for lengths ranging from 50 to 400. Multiple evaluation metrics must be considered for a comprehensive understanding. We noted that the improvement for the USE RMSE was above 100%. A likely contributing factor is that the RMSE squares each error before averaging and therefore penalizes large errors more heavily, whereas the MAE averages the absolute errors and weights them equally. This distinction becomes evident when comparing models exhibiting trade-offs between accuracy and precision. Therefore, the RMSE and MAE should be carefully evaluated to inform model selection and optimization decisions.
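One plausible way to compute such a percentage improvement is the relative error reduction with respect to the TF-IDF baseline, sketched below. The exact formula used in this study is not stated, so this definition and the numbers in the example are assumptions for illustration.

```python
def improvement_over_baseline(baseline_error, model_error):
    """Relative error reduction in percent; positive means the model is better."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return 100.0 * (baseline_error - model_error) / baseline_error

# Hypothetical errors: a baseline of 2.0 reduced to 1.5 by another model.
gain = improvement_over_baseline(2.0, 1.5)   # 25.0
loss = improvement_over_baseline(2.0, 2.5)   # -25.0
```

Under this particular definition, the result cannot exceed 100% for non-negative errors; other normalizations (for example, dividing by the model's error instead of the baseline's) behave differently, so the definition should be stated alongside any reported improvement.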

Figure 6.

Percentage improvement in MAE for pre-trained models compared to TF-IDF. FastText showed the highest improvement, particularly at a vector length of 700.

Figure 7.

Percentage improvement in MMRE for pre-trained models compared to TF-IDF. FastText consistently outperformed TF-IDF, demonstrating significant improvements.

Figure 8.

Percentage of improvement in RMSE for pre-trained models compared to TF-IDF. FastText demonstrated the highest improvement, underscoring its stability and reliability.

The results revealed that while FastText frequently demonstrated superior performance across metrics such as MAE, RMSE, and MMRE, the choice of regression method also influenced its effectiveness. Across all six machine learning models (Boost, KNN, lasso, RF, SVM, and XGBoost), FastText consistently exhibited low variability and robust results. Its coefficient of variation (CV) for MAE (1.3%) and RMSE (0.50%) highlighted its stability, particularly at higher vector lengths, where it often outperformed TF-IDF and Doc2Vec. However, alternative embeddings such as SBERT and GPT-2 were competitive with specific regression methods, such as XGBoost, with similarly low CVs in RMSE (0.69%) and consistent MMRE values.
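As a minimal sketch of how such a coefficient of variation can be computed, the snippet below divides the standard deviation of an embedding's MAE values across regressors by their mean. The use of the sample standard deviation is an assumption, and the MAE values are hypothetical, not results from this study.

```python
import statistics

def coefficient_of_variation(values):
    """CV in percent: sample standard deviation divided by the mean."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)

# Hypothetical MAE of one embedding under each of the six regressors.
mae_by_regressor = {
    "Boost": 2.0,
    "KNN": 2.1,
    "lasso": 1.9,
    "RF": 2.0,
    "SVM": 2.05,
    "XGBoost": 1.95,
}

cv = coefficient_of_variation(list(mae_by_regressor.values()))
print(f"CV of MAE across regressors: {cv:.2f}%")
```

A lower CV means that the embedding's error changes little when the regressor is swapped, which is the sense of stability used in the paragraph above.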

The interaction between embedding models and regression algorithms is critical in determining overall performance. FastText’s superior handling of out-of-vocabulary words and subword information aligns well with models like Random Forest and Gradient Boosting, which effectively capture complex relationships within the data. However, the variability in embeddings like USE and SBERT suggests that task-specific considerations, including vector length optimization and regression algorithm choice, are crucial for achieving the best results.

Regarding practical application, the results validated FastText as a reliable embedding for software effort estimation when paired with robust regression models. Even with small datasets, machine learning models such as SVM and Random Forest provided good results with FastText embeddings, demonstrating the adaptability and effectiveness of this combination. FastText’s minimal variability across metrics and machine learning methods makes it a strong general-purpose candidate. However, the observed CV differences among embeddings and regression methods underscore the importance of aligning model and algorithm choices with the task and dataset characteristics. Consequently, these findings highlight the need for comprehensive evaluations across metrics and regression models to guide optimal selection for specific applications.

FastText consistently outperformed TF-IDF and other embeddings across all metrics, demonstrating superior stability and reliability. Our results align with recent literature reporting FastText as a good choice [44]. It achieved the lowest MAE and RMSE values with minimal variability, highlighting its precision across vector lengths. FastText also exhibited robust predictive accuracy, achieving higher PRED(25) scores than TF-IDF while maintaining consistent performance in MMRE. These improvements, particularly at a vector length of 700, indicate that FastText is an efficient and reliable tool for estimating software effort.
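For readers unfamiliar with PRED(25), the following minimal sketch computes it under the usual definition: the percentage of predictions whose relative error is at most 25% of the actual value. The story points and predictions are hypothetical.

```python
def pred(y_true, y_pred, level=25):
    """Percentage of predictions with relative error within `level` percent."""
    hits = sum(
        1 for a, p in zip(y_true, y_pred)
        if abs(a - p) / a <= level / 100.0
    )
    return 100.0 * hits / len(y_true)

# Hypothetical story points and predictions (not data from this study).
actual = [1, 2, 3, 5, 8]
predicted = [1, 3, 3, 6, 5]

print(f"PRED(25) = {pred(actual, predicted):.1f}%")
```

Unlike MAE and RMSE, a higher PRED(25) is better, so it complements the error-based metrics rather than duplicating them.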

5. Discussion

The existing literature has notable gaps regarding effort estimation using pre-trained models. This study compared the performance of various pre-trained embedding models, such as FastText, GPT-2, and SBERT, combined with regression techniques such as Support Vector Machine (SVM) and Random Forest (RF), against external benchmarks. The analysis evaluated their effectiveness in effort estimation across diverse datasets and features. We explored the dynamics between static and contextualized embeddings, weighing trade-offs between dimensionality, computational costs, and performance improvements.

FastText emerged as a consistently strong performer, with exceptional reliability and stability across various metrics. Its average MAE of 2.18% and RMSE of 2.92% highlighted its accuracy. The relatively low standard deviations of its MAE (0.01 per model, 0.03 across models over 300 dimensions) indicated stable performance across different vector lengths, which is crucial for reliable software effort estimation. Compared with TF-IDF, the pre-trained FastText models improved performance by up to 18%, the highest value among all FastText lengths. FastText’s proficiency in handling out-of-vocabulary words and capturing complex linguistic patterns through subword information enhanced its utility. Moreover, its lightweight architecture allows for efficient training; therefore, it is considered a dependable tool for software effort estimation, especially when the dataset is small and pre-trained models can help contextualize the effort descriptions.
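The subword mechanism behind FastText’s out-of-vocabulary handling can be sketched as follows. FastText wraps each word in boundary markers and extracts character n-grams (3 to 6 characters by default); a vector for an unseen word is then composed from the vectors of its n-grams. The helper names and the tiny n-gram table below are hypothetical, and the real library additionally hashes n-grams into buckets, which this sketch omits.

```python
def char_ngrams(word, min_n=3, max_n=6):
    """Character n-grams of a word wrapped in '<' and '>' boundary markers."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(min_n, max_n + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    return grams

def oov_vector(word, ngram_vectors, dim):
    """Average the vectors of the word's n-grams found in the table.

    `ngram_vectors` is a hypothetical dict from n-gram to vector; an
    all-zero vector is returned when no n-gram is known.
    """
    known = [ngram_vectors[g] for g in char_ngrams(word) if g in ngram_vectors]
    if not known:
        return [0.0] * dim
    return [sum(v[k] for v in known) / len(known) for k in range(dim)]

# Toy 2-dimensional n-gram table (illustrative values only).
toy_table = {"<lo": [1.0, 0.0], "gin": [0.0, 1.0]}

print(char_ngrams("where", 3, 3))
print(oov_vector("login", toy_table, dim=2))
```

Because domain terms in user stories often share fragments with known words, this composition gives them a usable representation even when the full token never appeared in the pre-training corpus.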

We assessed the influence of vector size on model performance, considering semantic information capture and computational efficiency. Our experiments with optimized 10-fold cross-validation using the six machine learning models and varying vector lengths indicated that FastText was the best-performing vector model when the length was 300. The baseline method, TF-IDF, performed well in a few datasets (projects) such as “duracloud” and “usergrid”; however, more complex projects, such as “Moodle”, highlighted TF-IDF’s limitations with a higher MAE (12.46%). By comparison, FastText remained competitive with TF-IDF, with MAE values ranging from 0.88% to 11.94%.
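The 10-fold cross-validation procedure can be sketched in a few lines. This is a simplified illustration rather than the pipeline used in this study: the regressor is a placeholder that always predicts the training mean (standing in for SVM or any of the other models), the helper names are hypothetical, and the features and targets are made-up values.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle indices once and split them into k nearly equal folds."""
    if n_samples < k:
        raise ValueError("need at least k samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def cross_val_mae(features, targets, fit, predict, k=10):
    """Average held-out MAE over k folds for any fit/predict pair."""
    fold_maes = []
    for held_out in k_fold_indices(len(targets), k):
        held = set(held_out)
        train = [i for i in range(len(targets)) if i not in held]
        model = fit([features[i] for i in train], [targets[i] for i in train])
        errors = [abs(targets[i] - predict(model, features[i])) for i in held_out]
        fold_maes.append(sum(errors) / len(errors))
    return sum(fold_maes) / len(fold_maes)

# Placeholder regressor: ignores the features and predicts the training mean.
def fit_mean(X, y):
    return sum(y) / len(y)

def predict_mean(model, x):
    return model

# Hypothetical document vectors and story points (illustrative only).
X = [[float(i)] for i in range(20)]
y = [1, 2, 3, 5, 8, 1, 2, 3, 5, 8, 1, 2, 3, 5, 8, 1, 2, 3, 5, 8]
print(f"10-fold MAE of the mean baseline: {cross_val_mae(X, y, fit_mean, predict_mean):.3f}")
```

Every issue is held out exactly once, so the averaged MAE reflects performance on unseen data; swapping in a real regressor only requires supplying different `fit` and `predict` functions.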

Table 6 compares story point prediction methods with our best model, SVM with FastText at a vector length of 300. The SVM model maintained a competitive edge, often outperforming several state-of-the-art models. For instance, our SVM model outperformed the Graph Neural Network (GNN) model referenced in [33] across six projects, with an average error of 3.15 compared to 4.01. Additionally, the SVM demonstrated lower mean and median error values, indicating a tendency for more accurate predictions. Its absolute differences from Deep-SE and GPT2SP were 1.05 and 1.71, respectively. This trend indicates that our SVM (with FastText) is reliable for estimating software effort. Table 7 substantiates our findings by comparing SVM with different pre-trained models. The average and median values in the table reveal that pre-trained models generally enhance prediction accuracy. Although the SVM model performed commendably, specific pre-trained models excelled in some scenarios. For instance, the SVM and SBERT combination with a vector size of 200 exhibited superior performance in Project 1 (“appceleratorstudio”), while GBOOST with FastText at a vector size of 200 excelled in Project 2 (“aptanastudio”). Therefore, the appropriate model configuration depends on project-specific requirements. Overall, the results show the practical benefit of integrating pre-trained models into software effort estimation, with SVM and FastText embeddings delivering robust performance across diverse projects.

Table 6.

Comparison with state-of-the-art models using MAE (HAN: hierarchical attention networks; GNN: graph neural networks; HeteroSP: heterogeneous graph neural networks for story point estimation; Deep-SE: deep learning model for estimating story points; GPT2SP: GPT-2-based transformer approach to agile story point estimation).

Table 7.

FastText comparison with datasets (projects).

The implications drawn from our study hold significant relevance for both practitioners and researchers in software engineering and natural language processing. Adopting pre-trained FastText with a vector size of 300 enhanced the accuracy of software effort estimation tasks, especially in cases where TF-IDF performs poorly because the dataset is small. Better estimation empowers project managers and development teams to make informed decisions regarding resource allocation, budgeting, and project planning. Moreover, integrating pre-trained embedding models into software effort estimation workflows can significantly streamline project management processes. However, future research should prioritize the development of task-specific embeddings tailored to the intricacies of the software engineering domain and to specific project types.

While using cross-validation and randomized optimization mitigates a few challenges to the proposed model, other factors demand attention to enrich the depth of our analysis and enhance the reliability of our conclusions. While FastText demonstrates commendable performance across datasets, balancing model complexity and performance is essential. Exploring the generalizability of FastText across diverse software domains is imperative. Our analysis primarily focuses on open-source software datasets; therefore, extending the assessment to different domains, such as enterprise software or specialized applications, could shed light on the models’ adaptability and effectiveness across varied contexts. Moreover, ensemble methods, which combine predictions from multiple models, could enhance predictive accuracy and robustness in software effort estimation tasks. Benchmarking our model’s performance against traditional software effort estimation methods, such as function point analysis or COCOMO, could further highlight its automation, accuracy, and adaptability. In addition, dataset imbalance or poor data quality could impede model performance. Model interpretability and explainability are also essential for practitioners. Therefore, feature importance analysis, attention mechanisms, or model-agnostic interpretability methods can explain the relationship between input features and output predictions, improving the trustworthiness and usability of the models.

6. Limitations and Threats to Validity

A few limitations that could affect the generalizability and interpretation of our findings should be acknowledged. Studies have revealed that relevant features depend primarily on the characteristics of the available datasets and underlying projects [47]. We utilized open-source datasets, which may inherently limit the generalizability of our results to proprietary or domain-specific projects. Further research should evaluate the model’s performance across diverse datasets to ensure broader applicability. Even with small datasets, machine learning models such as SVM combined with FastText embeddings demonstrated their practicality for effort estimation tasks in data-constrained scenarios. In addition, external factors, such as cultural differences, could be reflected in the datasets. Therefore, the model’s performance may be influenced by shifts in language use or user story expressions that may not align with the pre-trained models. Thus, longitudinal studies and continuous model retraining are recommended.

We acknowledge a few factors that pose challenges to validity, which were addressed using different strategies. Dataset sizes and project characteristics could threaten sample representativeness, thus influencing the external validity of our findings. However, sampling bias was mitigated by preprocessing and by averaging the results over several metrics across machine learning and word-embedding models. Furthermore, careful consideration should be given to hyperparameter sensitivity. We addressed this problem with an in-depth analysis in which many parameters were systematically tuned by grid search with 10-fold cross-validation. However, conducting sensitivity analyses to explore the effects of alternative hyperparameter configurations would provide a more comprehensive understanding of our model’s behavior. While we utilized widely accepted regression metrics, it is essential to recognize that they might not capture other aspects of model behavior. Therefore, integrating additional metrics such as mean squared logarithmic error (MSLE) or quantile loss and conducting residual analysis could unveil further findings.
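The two additional metrics mentioned above can be sketched as follows, using their standard definitions: MSLE compares log(1 + value) on both sides, and the quantile (pinball) loss penalizes under- and over-prediction asymmetrically. These metrics were not computed in this study, and the values below are hypothetical.

```python
import math

def msle(y_true, y_pred):
    """Mean squared logarithmic error, using log(1 + x) on both sides."""
    return sum(
        (math.log1p(a) - math.log1p(p)) ** 2 for a, p in zip(y_true, y_pred)
    ) / len(y_true)

def quantile_loss(y_true, y_pred, q=0.5):
    """Pinball loss: under-predictions cost q, over-predictions cost (1 - q)."""
    total = 0.0
    for a, p in zip(y_true, y_pred):
        diff = a - p
        total += q * diff if diff >= 0 else (q - 1) * diff
    return total / len(y_true)

# Hypothetical story points and predictions (not data from this study).
actual = [1, 2, 3, 5, 8]
predicted = [1, 3, 3, 6, 5]

print(f"MSLE = {msle(actual, predicted):.4f}")
print(f"Quantile loss (q=0.5) = {quantile_loss(actual, predicted, 0.5):.2f}")
print(f"Quantile loss (q=0.9) = {quantile_loss(actual, predicted, 0.9):.2f}")
```

At q = 0.5 the quantile loss equals half the MAE, while a higher q such as 0.9 penalizes underestimated effort more strongly, which may matter when underestimation is the costlier planning error.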

7. Conclusions and Future Direction

This study determined the effectiveness of embedding models across diverse datasets and machine learning algorithms. We examined TF-IDF, GloVe, Doc2Vec, FastText, Universal Sentence Encoder (USE), SBERT, and GPT-2 embeddings, and conducted thorough experiments across 16 datasets with varying embedding vector lengths. The results showed that pre-trained embedding models, particularly FastText and GPT-2, predict story points better than TF-IDF vectors. FastText consistently outperformed TF-IDF across multiple regression algorithms, reducing the Mean Absolute Error (MAE) by an average of 5% to 18%. Notably, the FastText model, with a vector length of 300 and paired with SVM, demonstrated performance comparable to state-of-the-art deep learning methods like GPT2SP and Deep-SE while maintaining computational efficiency. The SVM benefits from FastText features such as out-of-vocabulary handling and subword information, which help capture the semantics of story descriptions. Thus, pre-trained models are adaptable across diverse datasets and machine learning algorithms and balance semantic richness with computational efficiency. Our findings showed that pre-trained embedding models, particularly FastText with SVM, are well suited for small datasets, offering improved prediction accuracy and computational efficiency without relying on deep learning.

Further research could investigate the development of task-specific embeddings tailored to software engineering domains. Customizing embedding models to the unique characteristics of software projects may lead to more reliable predictions. Furthermore, improving the interpretability of pre-trained models could enhance trust in model predictions and support better decision making. Research could also explore ensemble learning techniques to enhance the robustness and resilience of software effort estimation models. Longitudinal studies could assess model performance over time using ensemble models and implement continuous retraining strategies. In addition, researchers might explore transfer learning and domain adaptation techniques to tailor pre-trained models to specific software engineering domains and project types.

Author Contributions

Conceptualization, I.A.; methodology, I.A.; software, I.A. and A.A.O.; validation, I.A. and A.A.O.; formal analysis, I.A. and A.A.O.; investigation, I.A. and A.A.O.; resources, I.A. and A.A.O.; data curation, I.A. and A.A.O.; writing (original draft preparation), I.A. and A.A.O.; writing (review and editing), I.A. and A.A.O.; visualization, I.A. and A.A.O.; supervision, I.A. and A.A.O.; project administration, I.A. and A.A.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets are available at https://github.com/jai2shukla/JIRA-Estimation-Prediction/tree/master/storypoint/IEEE%20TSE2018/dataset (accessed on 2 December 2024).

Acknowledgments

This research was partially supported by Philadelphia University, Jordan.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jadhav, A.; Shandilya, S.K.; Izonin, I.; Gregus, M. Effective Software Effort Estimation Leveraging Machine Learning for Digital Transformation. IEEE Access 2023, 11, 83523–83536.

- Ritu; Bhambri, P. Software Effort Estimation with Machine Learning—A Systematic Literature Review. In Agile Software Development; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2023; pp. 291–308. ISBN 9781119896838.

- Li, Y.; Ren, Z.; Wang, Z.; Yang, L.; Dong, L.; Zhong, C.; Zhang, H. Fine-SE: Integrating Semantic Features and Expert Features for Software Effort Estimation. In Proceedings of the 2024 IEEE/ACM 46th International Conference on Software Engineering (ICSE), Lisbon, Portugal, 14–20 April 2024; IEEE Computer Society: Los Alamitos, CA, USA, 2024; pp. 292–303.

- Molla, Y.S.; Yimer, S.T.; Alemneh, E. COSMIC-Functional Size Classification of Agile Software Development: Deep Learning Approach. In Proceedings of the 2023 International Conference on Information and Communication Technology for Development for Africa (ICT4DA), Bahir Dar, Ethiopia, 26–28 October 2023; pp. 155–159.

- Swandari, Y.; Ferdiana, R.; Permanasari, A.E. Research Trends in Software Development Effort Estimation. In Proceedings of the 2023 10th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Palembang, Indonesia, 20–21 September 2023; pp. 625–630.

- Rashid, C.H.; Shafi, I.; Ahmad, J.; Thompson, E.B.; Vergara, M.M.; De La Torre Diez, I.; Ashraf, I. Software Cost and Effort Estimation: Current Approaches and Future Trends. IEEE Access 2023, 11, 99268–99288.

- Gong, L.; Zhang, J.; Wei, M.; Zhang, H.; Huang, Z. What Is the Intended Usage Context of This Model? An Exploratory Study of Pre-Trained Models on Various Model Repositories. ACM Trans. Softw. Eng. Methodol. 2023, 32, 1–57.

- Sonbol, R.; Rebdawi, G.; Ghneim, N. The Use of NLP-Based Text Representation Techniques to Support Requirement Engineering Tasks: A Systematic Mapping Review. IEEE Access 2022, 10, 62811–62830.

- Li, Z.; Lu, S.; Guo, D.; Duan, N.; Jannu, S.; Jenks, G.; Majumder, D.; Green, J.; Svyatkovskiy, A.; Fu, S.; et al. Automating Code Review Activities by Large-Scale Pre-Training. In Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Singapore, 14–18 November 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1035–1047.

- Lin, Z.; Li, G.; Zhang, J.; Deng, Y.; Zeng, X.; Zhang, Y.; Wan, Y. XCode: Towards Cross-Language Code Representation with Large-Scale Pre-Training. ACM Trans. Softw. Eng. Methodol. 2022, 31, 1–44.

- Li, M.; Yang, Y.; Shi, L.; Wang, Q.; Hu, J.; Peng, X.; Liao, W.; Pi, G. Automated Extraction of Requirement Entities by Leveraging LSTM-CRF and Transfer Learning. In Proceedings of the 2020 IEEE International Conference on Software Maintenance and Evolution (ICSME), Adelaide, Australia, 28 September–2 October 2020; pp. 208–219.

- Hadi, M.A.; Fard, F.H. Evaluating pre-trained models for user feedback analysis in software engineering: A study on classification of app-reviews. Empir. Softw. Eng. 2023, 28, 88.

- Chen, Y.; Su, C.; Zhang, Y.; Wang, Y.; Geng, X.; Yang, H.; Tao, S.; Jiaxin, G.; Minghan, W.; Zhang, M.; et al. HW-TSC’s participation at WMT 2021 quality estimation shared task. In Proceedings of the Sixth Conference on Machine Translation, Online, 10–11 November 2021; Barrault, L., Bojar, O., Bougares, F., Chatterjee, R., Costa-jussa, M.R., Federmann, C., Fishel, M., Fraser, A., Freitag, M., Graham, Y., et al., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 890–896.

- Han, X.; Zhang, Z.; Ding, N.; Gu, Y.; Liu, X.; Huo, Y.; Qiu, J.; Yao, Y.; Zhang, A.; Zhang, L.; et al. Pre-trained models: Past, present and future. AI Open 2021, 2, 225–250.

- Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Le, Q.V.; Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the 31st International Conference on Machine Learning (ICML-14), Beijing, China, 21–26 June 2014; pp. 1188–1196.

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. In Proceedings of the 1st Workshop on Subword and Character Level Models in NLP, Copenhagen, Denmark, 7 September 2017; pp. 56–65.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed Representations of Words and Phrases and their Compositionality. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–8 December 2013; pp. 3111–3119.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 3982–3992.

- Cer, D.; Yang, Y.; Kong, S.; Hua, N.; Limtiaco, N.; John, R.S.; Constant, N.; Guajardo-Cespedes, M.; Yuan, S.; Tar, C.; et al. Universal Sentence Encoder. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Brussels, Belgium, 31 October–4 November 2018.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9.

- Patil, R.; Boit, S.; Gudivada, V.; Nandigam, J. A Survey of Text Representation and Embedding Techniques in NLP. IEEE Access 2023, 11, 36120–36146.

- Cui, Z.; Gong, G. The effect of machine learning regression algorithms and sample size on individualized behavioral prediction with functional connectivity features. Neuroimage 2018, 178, 622–637.

- Duszkiewicz, A.G.; Sørensen, J.G.; Johansen, N.; Edison, H.; Rocha Silva, T. Leveraging Historical Data to Support User Story Estimation. In Proceedings of the Product-Focused Software Process Improvement; Kadgien, R., Jedlitschka, A., Janes, A., Lenarduzzi, V., Li, X., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 284–300.

- Li, Y.; Zhang, T.; Luo, X.; Cai, H.; Fang, S.; Yuan, D. Do Pretrained Language Models Indeed Understand Software Engineering Tasks? IEEE Trans. Softw. Eng. 2023, 49, 4639–4655.

- Mitchell, M.; Wu, S.; Zaldivar, A.; Barnes, P.; Vasserman, L.; Hutchinson, B.; Spitzer, E.; Raji, I.D.; Gebru, T. Model Cards for Model Reporting. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 220–229.

- Arnold, M.; Bellamy, R.K.E.; Hind, M.; Houde, S.; Mehta, S.; Mojsilović, A.; Nair, R.; Ramamurthy, K.N.; Olteanu, A.; Piorkowski, D.; et al. FactSheets: Increasing trust in AI services through supplier’s declarations of conformity. IBM J. Res. Dev. 2019, 63, 6:1–6:13.

- Zhang, Z.; Li, Y.; Wang, J.; Liu, B.; Li, D.; Guo, Y.; Chen, X.; Liu, Y. Remos: Reducing defect inheritance in transfer learning via relevant model slicing. In Proceedings of the 44th International Conference on Software Engineering, Pittsburgh, PA, USA, 22–27 May 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1856–1868.

- Bailly, A.; Blanc, C.; Francis, É.; Guillotin, T.; Jamal, F.; Wakim, B.; Roy, P. Effects of dataset size and interactions on the prediction performance of logistic regression and deep learning models. Comput. Methods Programs Biomed. 2022, 213, 106504.

- Guo, Y.; Hu, Q.; Cordy, M.; Papadakis, M.; Le Traon, Y. DRE: Density-based data selection with entropy for adversarial-robust deep learning models. Neural Comput. Appl. 2023, 35, 4009–4026.

- De Bortoli Fávero, E.M.; Casanova, D.; Pimentel, A.R. SE3M: A model for software effort estimation using pre-trained embedding models. Inf. Softw. Technol. 2022, 147, 106886.

- Phan, H.; Jannesari, A. Story Point Level Classification by Text Level Graph Neural Network. In Proceedings of the 1st International Workshop on Natural Language-based Software Engineering, Pittsburgh, PA, USA, 8 May 2022; pp. 75–78.

- Iordan, A.-E. An Optimized LSTM Neural Network for Accurate Estimation of Software Development Effort. Mathematics 2024, 12, 200.

- Hoc, H.T.; Silhavy, R.; Prokopova, Z.; Silhavy, P. Comparing Stacking Ensemble and Deep Learning for Software Project Effort Estimation. IEEE Access 2023, 11, 60590–60604.

- Choetkiertikul, M.; Dam, H.K.; Tran, T.; Pham, T.T.M.; Ghose, A.; Menzies, T. A Deep Learning Model for Estimating Story Points. IEEE Trans. Softw. Eng. 2019, 45, 637–656.

- Tawosi, V.; Moussa, R.; Sarro, F. Agile Effort Estimation: Have We Solved the Problem Yet? Insights From a Replication Study. IEEE Trans. Softw. Eng. 2023, 49, 2677–2697.

- Alhamed, M.; Storer, T. Evaluation of Context-Aware Language Models and Experts for Effort Estimation of Software Maintenance Issues. In Proceedings of the 2022 IEEE International Conference on Software Maintenance and Evolution (ICSME), Limassol, Cyprus, 2–7 October 2022; pp. 129–138.

- Amasaki, S. On Effectiveness of Further Pre-Training on BERT Models for Story Point Estimation. In Proceedings of the 19th International Conference on Predictive Models and Data Analytics in Software Engineering, San Francisco, CA, USA, 8 December 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 49–53.

- Fu, M.; Tantithamthavorn, C. GPT2SP: A Transformer-Based Agile Story Point Estimation Approach. IEEE Trans. Softw. Eng. 2023, 49, 611–625.

- Porru, S.; Murgia, A.; Demeyer, S.; Marchesi, M.; Tonelli, R. Estimating story points from issue reports. In Proceedings of the ACM International Conference Proceeding Series; Association for Computing Machinery: New York, NY, USA, 2016.

- Mustafa, E.I.; Osman, R. A random forest model for early-stage software effort estimation for the SEERA dataset. Inf. Softw. Technol. 2024, 169, 107413.

- Sánchez-García, Á.J.; González-Hernández, M.S.; Cortés-Verdín, K.; Pérez-Arriaga, J.C. Software Estimation in the Design Stage with Statistical Models and Machine Learning: An Empirical Study. Mathematics 2024, 12, 1058.

- Raza, A.; Espinosa-Leal, L. Predicting the Duration of User Stories in Agile Project Management. In Proceedings of the Smart Technologies for a Sustainable Future; Auer, M.E., Langmann, R., May, D., Roos, K., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 316–328.

- Kassem, H.; Mahar, K.; Saad, A.A. Story Point Estimation Using Issue Reports With Deep Attention Neural Network. E-Inform. Softw. Eng. J. 2023, 17, 1–15.

- Phan, H.; Jannesari, A. Heterogeneous Graph Neural Networks for Software Effort Estimation. Int. Symp. Empir. Softw. Eng. Meas. 2022, 103–113.

- Sousa, A.O.; Veloso, D.T.; Goncalves, H.M.; Faria, J.P.; Mendes-Moreira, J.; Graca, R.; Gomes, D.; Castro, R.N.; Henriques, P.C. Applying Machine Learning to Estimate the Effort and Duration of Individual Tasks in Software Projects. IEEE Access 2023, 11, 89933–89946.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).