MNCATM: A Multi-Layer Non-Uniform Coding-Based Adaptive Transmission Method for 360° Video

Abstract

1. Introduction

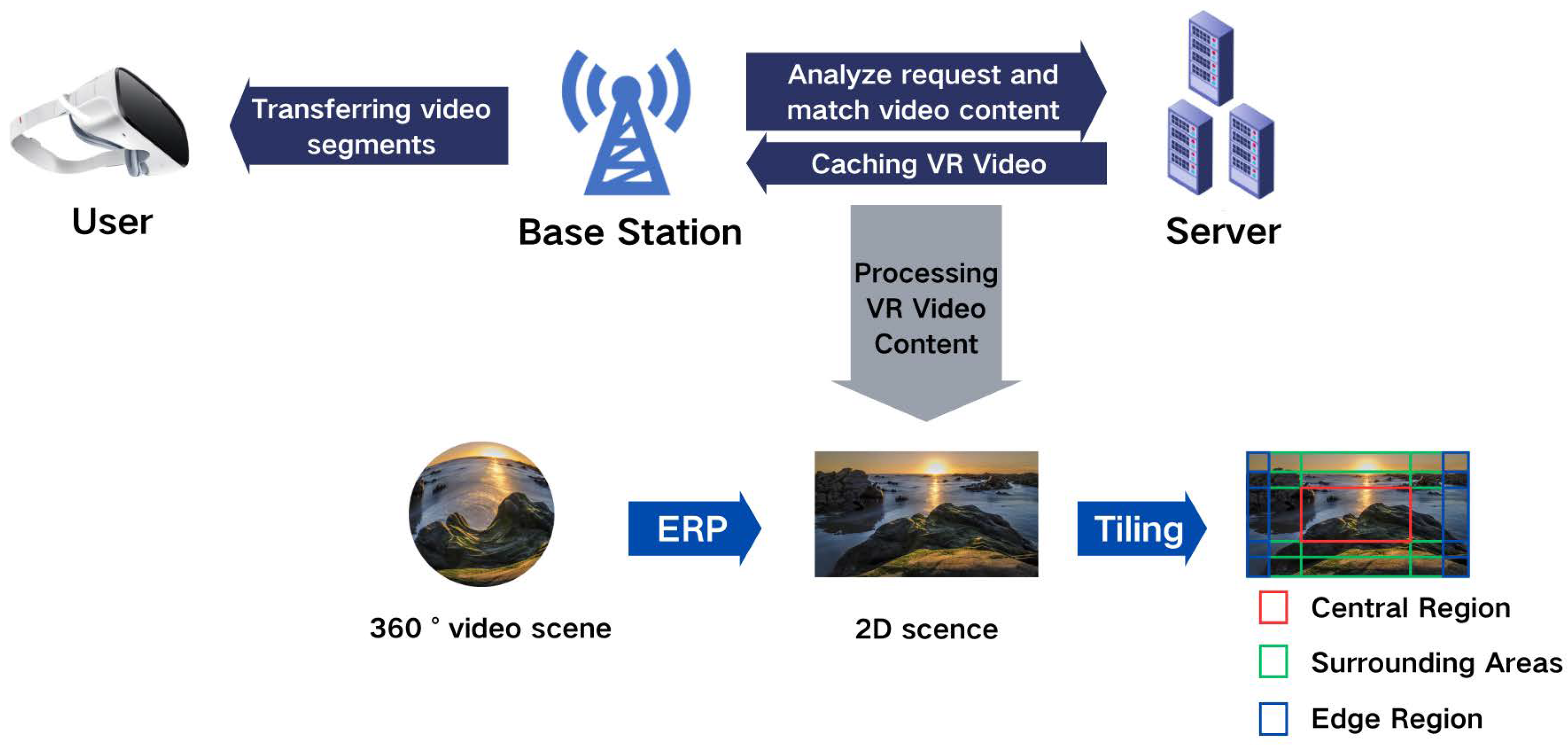

- This paper proposes a non-uniform 360° video encoding scheme in which the viewing screen is divided into regions of different sizes according to the distribution of user attention.

- This paper introduces an adaptive transmission architecture for 360° video based on non-uniform multi-layer encoding, mathematically models the adaptive transmission process, and formulates the optimization problem that needs to be addressed. The MNCATM method is then proposed to solve this optimization problem; it fully utilizes the available network bandwidth, avoiding wasted resources while keeping the user's QoE as high as possible.

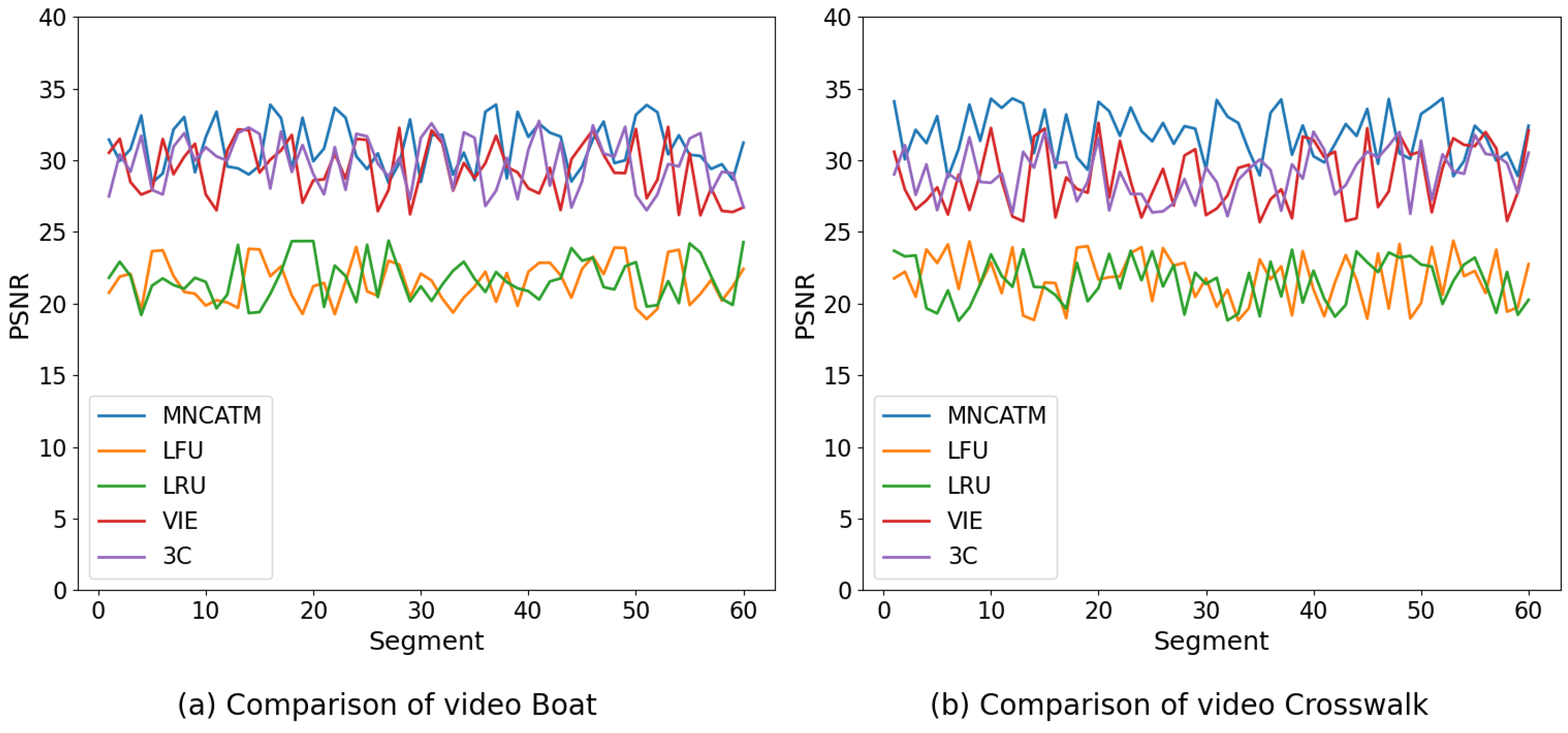

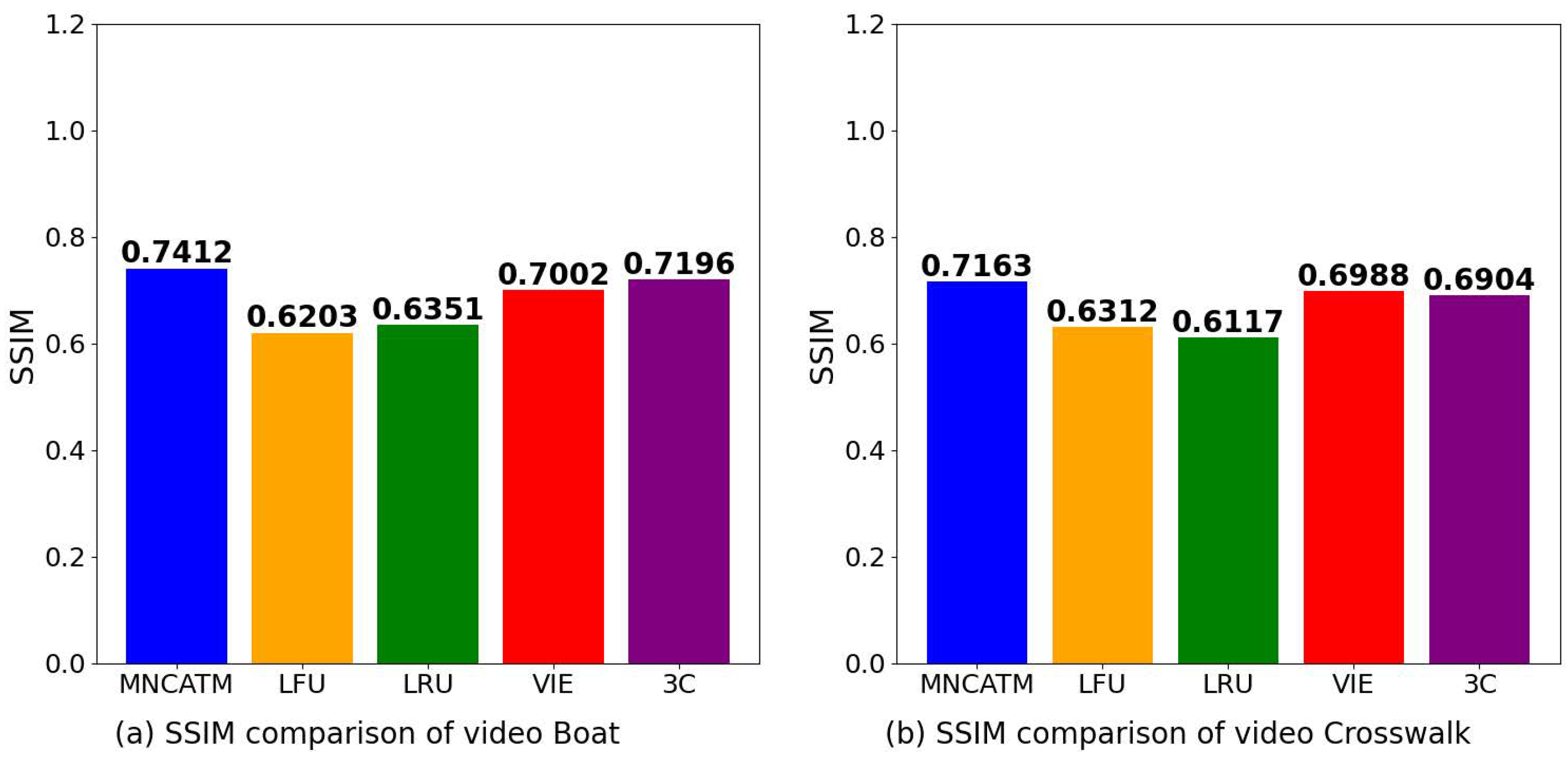

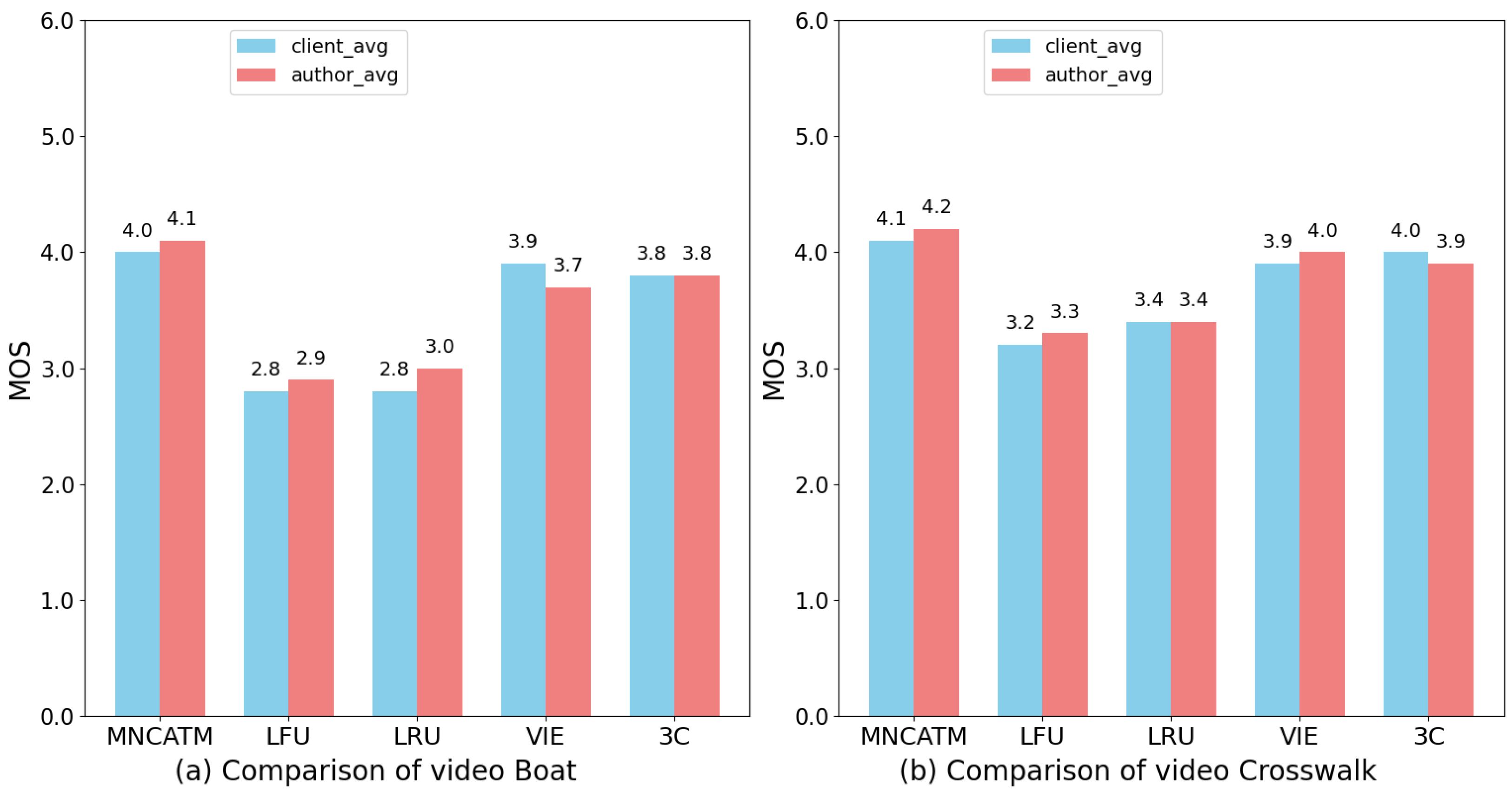

- In the simulation experiments, the proposed scheme is compared against four other transmission schemes, and the impact of key performance indicators is analyzed. The results show that MNCATM outperforms the compared schemes in bandwidth utilization and user QoE.
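The contributions above combine attention-weighted regions with bandwidth-aware bitrate selection. The following is a minimal sketch of that general idea, not the MNCATM algorithm itself. The region names, weights, tile counts, bitrate ladder, and the greedy upgrade rule are all illustrative assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """One screen region (hypothetical structure, not taken from the paper)."""
    name: str
    weight: float   # assumed attention weight of the region
    tiles: int      # assumed number of tiles in the region
    level: int = 0  # index into the bitrate ladder, 0 = lowest quality


def allocate(regions, ladder, bandwidth):
    """Greedily raise region quality while the total bitrate fits the bandwidth.

    Every region starts at the lowest ladder level. At each step the region
    with the largest weight per extra unit of bitrate is upgraded by one
    level, provided the upgrade still fits. Returns the total bitrate used.
    """
    used = sum(r.tiles * ladder[r.level] for r in regions)
    if used > bandwidth:
        raise ValueError("bandwidth cannot carry even the lowest quality level")
    while True:
        best, best_score, best_cost = None, 0.0, 0.0
        for r in regions:
            if r.level + 1 >= len(ladder):
                continue  # already at the highest level
            cost = r.tiles * (ladder[r.level + 1] - ladder[r.level])
            if used + cost > bandwidth:
                continue  # upgrade would exceed the budget
            score = r.weight / cost
            if score > best_score:
                best, best_score, best_cost = r, score, cost
        if best is None:
            return used
        best.level += 1
        used += best_cost


# Illustrative values only: three regions, per-tile bitrates in Mbps.
regions = [
    Region("center", 0.6, 4),
    Region("middle", 0.3, 8),
    Region("edge", 0.1, 12),
]
used = allocate(regions, ladder=[1.0, 2.0, 4.0], bandwidth=50.0)
print(used, [(r.name, r.level) for r in regions])
```

In this sketch the high-attention region is upgraded first, and leftover bandwidth flows to the outer regions only when an upgrade still fits, which mirrors the stated goal of using the available bandwidth without exceeding it.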

2. Related Work

2.1. Optimizations of Caching Techniques

2.1.1. Edge Caching

2.1.2. DRL-Based Caching

2.1.3. Collaborative Caching

2.1.4. Proactive Caching and Viewport Prediction

2.2. Optimization of Multicast Delivery Technology

2.2.1. Tile-Based Multicasting

2.2.2. Proactive Multicasting and Viewport Prediction

2.2.3. Multicasting in Innovative 5G Networks

3. System Model

3.1. Network Model

3.2. Content Model

3.3. Caching Model

4. Algorithm

Algorithm 1. MNCATM algorithm.

Require: The bandwidth: B, the bitrate: b.

5. Computational Complexity Analysis

5.1. Algorithmic Complexity

5.2. Real-Time Feasibility at the Edge

6. Experimental Results

6.1. Experiment Settings

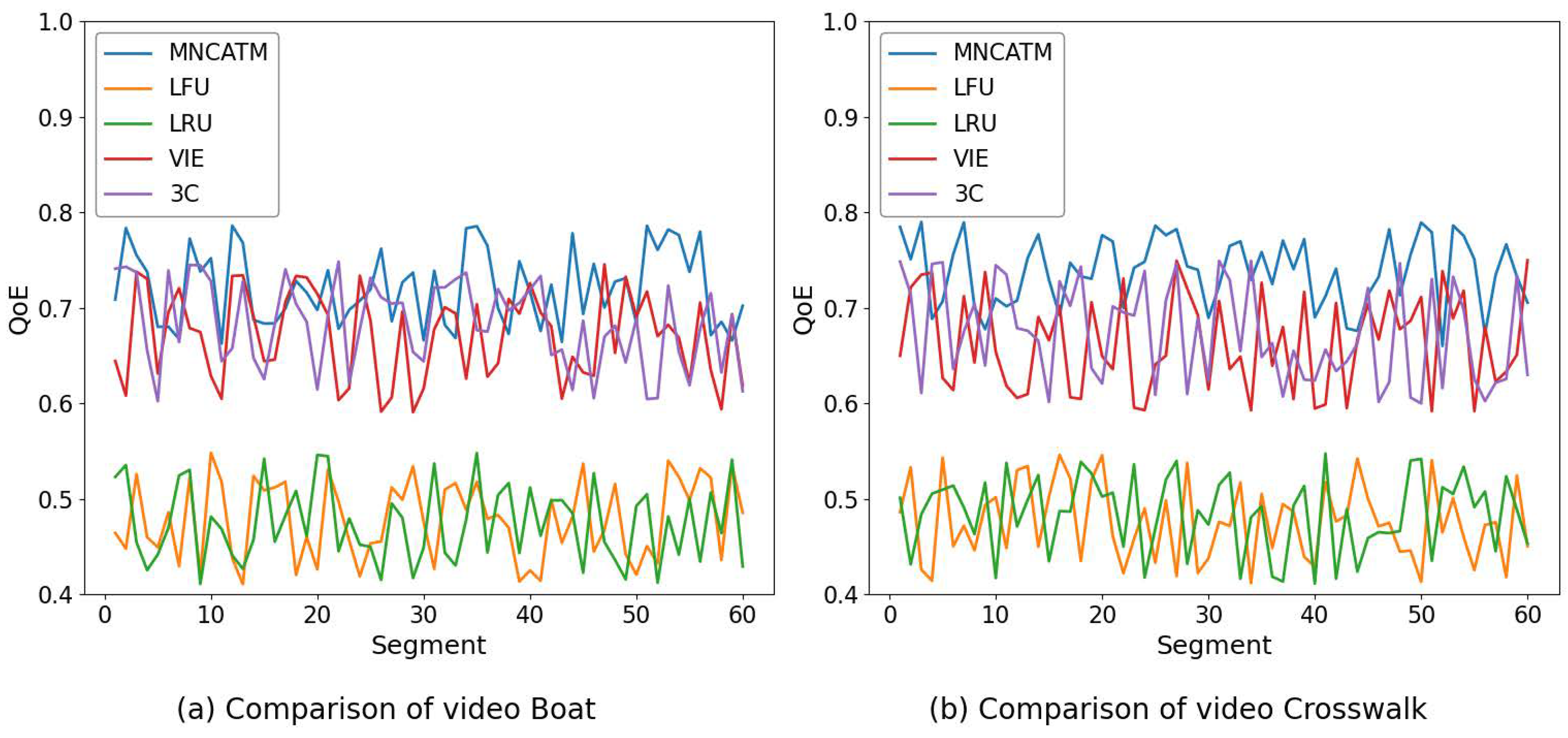

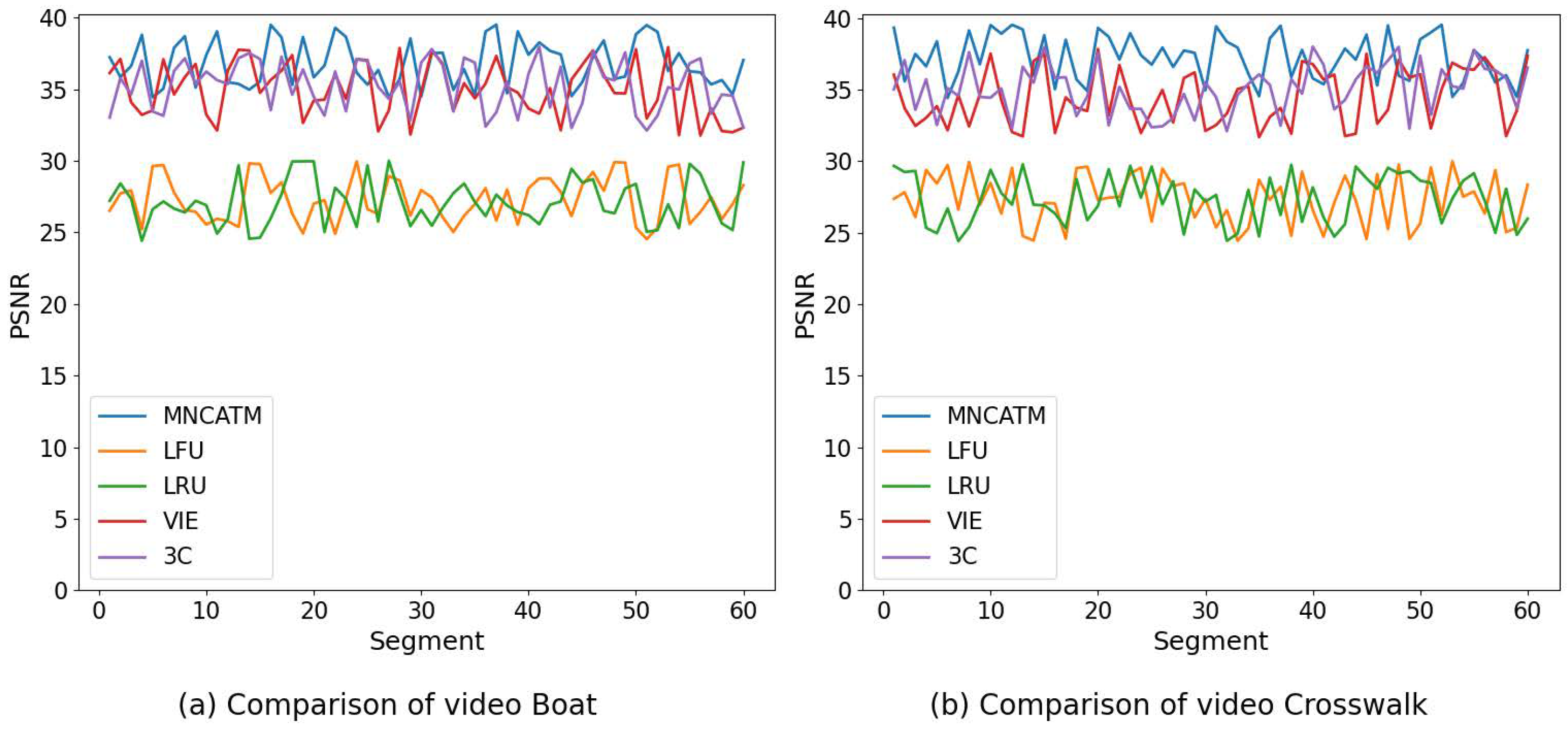

- Least frequently used (LFU) [4]: The number of times each tile has been requested is recorded, and newly arrived tiles are cached by evicting the least frequently used tiles.

- Least recently used (LRU) [4]: The last request time of each tile is recorded, and newly arrived tiles are cached by evicting the least recently used tiles.

- The recent victor download and recent failure deletion (VIE) [22]: Users' viewing behavior is predicted so that popular video tiles are cached, and infrequently used tiles are dynamically removed from the cache based on their popularity.

- A joint communication–computation–cache (3C) optimization algorithm [23]: The overall optimization problem is decomposed into three sub-problems: a joint caching and computing problem, a bandwidth allocation problem, and a request probability solution problem.
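The two classical baselines in the list above, LRU and LFU, can be sketched as small tile caches. This is an illustrative sketch only: the class names, the `request` method, and the tie-breaking rule in LFU are assumptions made here, not details from the compared implementations.

```python
from collections import Counter, OrderedDict


class LRUTileCache:
    """Evicts the tile whose last request is the oldest."""

    def __init__(self, capacity):
        self.capacity = capacity      # assumed to be at least 1
        self.tiles = OrderedDict()    # tile_id -> payload, oldest first

    def request(self, tile_id, payload=None):
        """Return True on a cache hit; on a miss, cache the tile."""
        if tile_id in self.tiles:
            self.tiles.move_to_end(tile_id)  # mark as most recently used
            return True
        if len(self.tiles) >= self.capacity:
            self.tiles.popitem(last=False)   # drop the least recently used
        self.tiles[tile_id] = payload
        return False


class LFUTileCache:
    """Evicts the cached tile with the fewest recorded requests."""

    def __init__(self, capacity):
        self.capacity = capacity      # assumed to be at least 1
        self.tiles = {}               # tile_id -> payload
        self.counts = Counter()       # tile_id -> number of requests seen

    def request(self, tile_id, payload=None):
        """Return True on a cache hit; on a miss, cache the tile."""
        self.counts[tile_id] += 1
        if tile_id in self.tiles:
            return True
        if len(self.tiles) >= self.capacity:
            # Ties go to the tile that was inserted earliest (an assumption).
            victim = min(self.tiles, key=lambda t: self.counts[t])
            del self.tiles[victim]
        self.tiles[tile_id] = payload
        return False


lru = LRUTileCache(capacity=2)
for tile in ["a", "b", "a", "c"]:
    lru.request(tile)
print(list(lru.tiles))  # "b" was the least recently used when "c" arrived
```

Neither policy looks at the viewport or at tile popularity forecasts, which is the gap that prediction-based schemes such as VIE aim to close.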

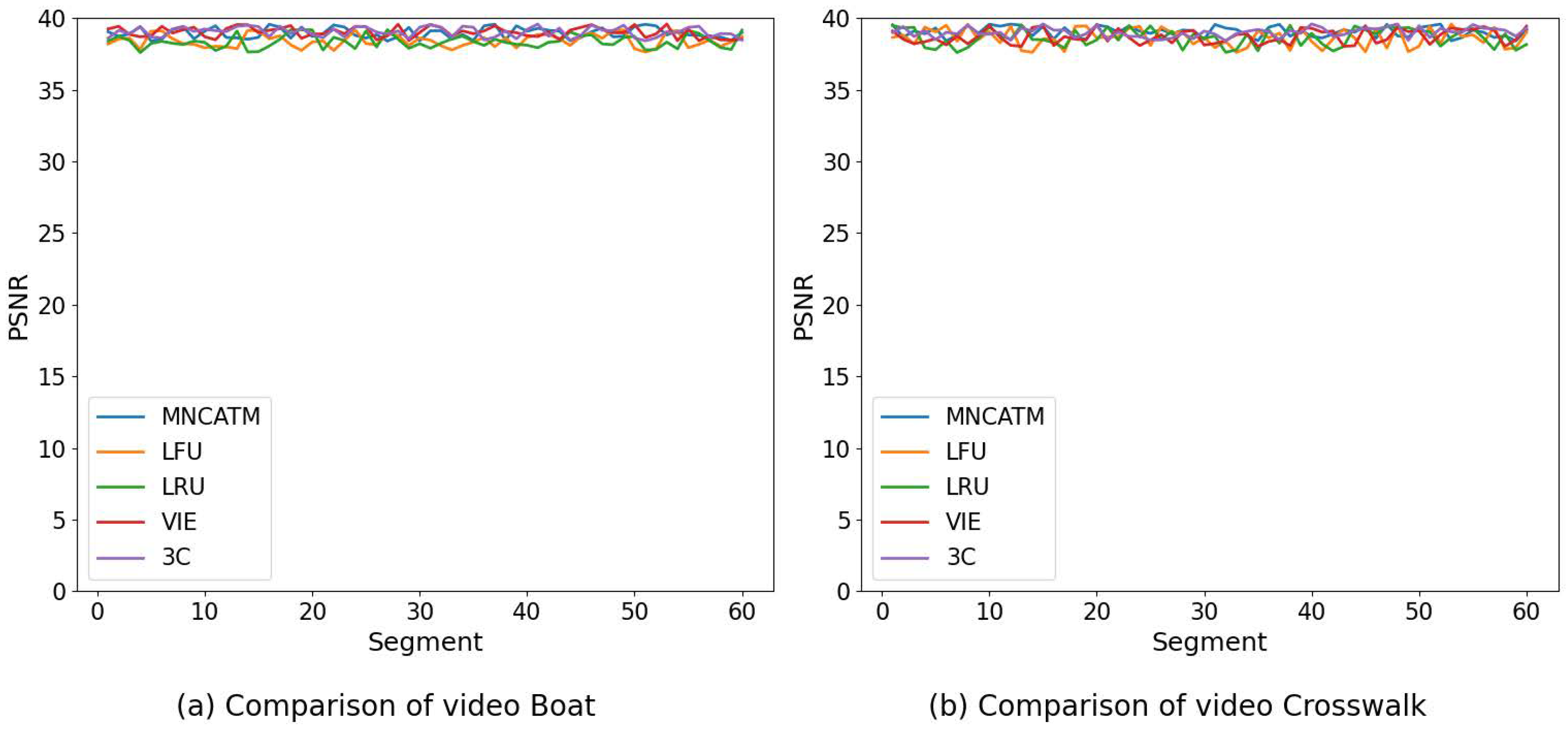

6.2. Evaluation Results

6.3. Statistical Analysis and Results

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. AR/VR Headset Market Forecast to Decline 8.3% in 2023 But Remains on Track to Rebound in 2024, According to IDC. Available online: https://www.idc.com/getdoc.jsp?containerId=prUS51574023 (accessed on 5 March 2024).

2. Yang, T.; Tan, Z.; Xu, Y.; Cai, S. Collaborative edge caching and transcoding for 360° video streaming based on deep reinforcement learning. IEEE Internet Things J. 2022, 9, 25551–25564.

3. Mahmoud, M.; Rizou, S.; Panayides, A.S.; Kantartzis, N.V.; Karagiannidis, G.K.; Lazaridis, P.I.; Zaharis, Z.D. A survey on optimizing mobile delivery of 360° videos: Edge caching and multicasting. IEEE Access 2023, 11, 68925–68942.

4. Maniotis, P.; Thomos, N. Viewport-Aware Deep Reinforcement Learning Approach for 360° Video Caching. IEEE Trans. Multimed. 2021, 24, 386–399.

5. Zheng, C.; Liu, S.; Huang, Y.; Yang, L. Hybrid policy learning for energy-latency tradeoff in MEC-assisted VR video service. IEEE Trans. Veh. Technol. 2021, 70, 9006–9021.

6. Han, S.; Su, H.; Yang, C.; Molisch, A.F. Proactive edge caching for video on demand with quality adaptation. IEEE Trans. Wirel. Commun. 2019, 19, 218–234.

7. Guo, Y.; Yu, F.R.; An, J.; Yang, K.; Yu, C.; Leung, V.C.M. Adaptive bitrate streaming in wireless networks with transcoding at network edge using deep reinforcement learning. IEEE Trans. Veh. Technol. 2020, 69, 3879–3892.

8. Sun, L.; Duanmu, F.; Liu, Y.; Wang, Y.; Ye, Y.; Shi, H. A two-tier system for on-demand streaming of 360 degree video over dynamic networks. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 43–57.

9. Hu, M.; Chen, J.; Wu, D.; Zhou, Y.; Wang, Y.; Dai, H. TVG-streaming: Learning user behaviors for QoE-optimized 360-degree video streaming. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 4107–4120.

10. Ozcinar, C.; Abreu, A.D.; Smolic, A. Viewport-aware adaptive 360 video streaming using tiles for virtual reality. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017.

11. Son, J.; Ryu, E.-S. Tile-based 360-degree video streaming for mobile virtual reality in cyber physical system. Comput. Electr. Eng. 2018, 72, 361–368.

12. Nguyen, D.V.; Tran, H.T.T.; Pham, A.T.; Thang, T.C. An optimal tile-based approach for viewport-adaptive 360-degree video streaming. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 29–42.

13. Jeppsson, M. Efficient live and on-demand tiled HEVC 360 VR video streaming. Int. J. Semant. Comput. 2019, 13, 367–391.

14. Carreira, J.; de Faria, S.M.M.; Tavora, L.M.N.; Navarro, A.; Assuncao, P.A. 360° video coding using adaptive tile partitioning. In Proceedings of the 2021 Telecoms Conference (ConfTELE), Leiria, Portugal, 11–12 February 2021.

15. Yaqoob, A.; Muntean, C.; Muntean, G.-M. Flexible Tiles in Adaptive Viewing Window: Enabling Bandwidth-Efficient and Quality-Oriented 360° VR Video Streaming. In Proceedings of the IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Bilbao, Spain, 15–17 June 2022.

16. Nguyen, D.V.; Tran, H.T.T.; Thang, T.C. An evaluation of tile selection methods for viewport-adaptive streaming of 360-degree video. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2020, 16, 1–24.

17. Ye, Z.; Li, Q.; Ma, X.; Zhao, D.; Jiang, Y.; Ma, L. VRCT: A viewport reconstruction-based 360 video caching solution for tile-adaptive streaming. IEEE Trans. Broadcast. 2023, 69, 691–703.

18. EE and BT Unveil New 5G-Enabled XR Sports Experiences. Available online: https://www.broadcastnow.co.uk/production/ee-and-bt-unveil-new-5g-enabled-xr-sports-experiences/5168508.article (accessed on 15 March 2024).

19. EE and BT Unveil New Sports and Performing Arts Experiences Based on 5G and Extended Reality. Available online: https://newsroom.bt.com/ee-and-bt-unveil-new-sports-and-performing-arts-experiences-based-on-5g-and-extended-reality/ (accessed on 15 March 2024).

20. Shafi, R.; Shuai, W.; Younus, M.U. 360-degree video streaming: A survey of the state of the art. Symmetry 2020, 12, 1491.

21. Zhang, X.; Qi, Z.; Min, G.; Miao, W.; Fan, Q.; Ma, Z. Cooperative edge caching based on temporal convolutional networks. IEEE Trans. Parallel Distrib. Syst. 2021, 33, 2093–2105.

22. Liu, Q.; Chen, H.; Li, Z.; Bai, Y.; Wu, D.; Zhou, Y. Online Caching Algorithm for VR Video Streaming in Mobile Edge Caching System. Mob. Netw. Appl. 2024, 1–13.

23. Fu, B.; Tang, T.; Wu, D.; Wang, R. Interest-Aware Joint Caching, Computing, and Communication Optimization for Mobile VR Delivery in MEC Networks. arXiv 2024, arXiv:2403.05851.

24. Xia, J.; Chen, L.; Tang, Y.; Wang, W. Multi-MEC cooperation based VR video transmission and cache using K-shortest paths optimization. In Proceedings of the International Conference on Mobile and Ubiquitous Systems: Computing, Networking, and Services, Pittsburgh, PA, USA, 14–17 November 2022; Springer Nature: Cham, Switzerland, 2022; pp. 334–355.

25. Shafi, R.; Shuai, W.; Younus, M.U. MTC360: A multi-tiles configuration for viewport-dependent 360-degree video streaming. In Proceedings of the 2020 IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020.

26. Chen, H.Y.; Lin, C.S. Tiled streaming for layered 3D virtual reality videos with viewport prediction. Multimed. Tools Appl. 2022, 81, 13867–13888.

27. Cheng, Q.; Shan, H.; Zhuang, W.; Yu, L.; Zhang, Z.; Quek, T.Q.S. Design and Analysis of MEC-and Proactive Caching-Based 360° Mobile VR Video Streaming. IEEE Trans. Multimed. 2021, 24, 1529–1544.

28. Yang, J.; Guo, Z.; Luo, J.; Shen, Y.; Yu, K. Cloud-edge-end collaborative caching based on graph learning for cyber-physical virtual reality. IEEE Syst. J. 2023, 17, 5097–5108.

29. Long, K.; Cui, Y.; Ye, C.; Liu, Z. Optimal wireless streaming of multi-quality 360 VR video by exploiting natural, relative smoothness-enabled, and transcoding-enabled multicast opportunities. IEEE Trans. Multimed. 2020, 23, 3670–3683.

30. Okamoto, T.; Ishioka, T.; Shiina, R.; Fukui, T.; Ono, H.; Fujiwara, T. Edge-assisted multi-user 360-degree video delivery. In Proceedings of the 2023 IEEE 20th Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 8–11 January 2023; pp. 194–199.

31. Zhang, G.; Wu, C.; Gao, Q. Exploiting layer and spatial correlations to enhance SVC and tile based 360-degree video streaming. Comput. Netw. 2021, 191, 107985.

32. Nasrabadi, A.T.; Mahzari, A.; Beshay, J.D.; Prakash, R. Adaptive 360-degree video streaming using layered video coding. In Proceedings of the 2017 IEEE Virtual Reality (VR), Los Angeles, CA, USA, 18–22 March 2017; pp. 347–348.

33. Zhang, X.; Hu, X.; Zhong, L.; Shirmohammadi, S.; Zhang, L. Cooperative tile-based 360 panoramic streaming in heterogeneous networks using scalable video coding. IEEE Trans. Circuits Syst. Video Technol. 2018, 30, 217–231.

34. Nguyen, D.; Hung, N.V.; Phong, N.T.; Huong, T.T.; Thang, T.C. Scalable multicast for live 360-degree video streaming over mobile networks. IEEE Access 2022, 10, 38802–38812.

35. Zhong, L.; Chen, X.; Xu, C.; Ma, Y.; Wang, M.; Zhao, Y. A multi-user cost-efficient crowd-assisted VR content delivery solution in 5G-and-beyond heterogeneous networks. IEEE Trans. Mob. Comput. 2022, 22, 4405–4421.

36. Chakareski, J.; Khan, M. Live 360° Video Streaming to Heterogeneous Clients in 5G Networks. IEEE Trans. Multimed. 2024, 26, 1–14.

37. Chiariotti, F. A survey on 360-degree video: Coding, quality of experience and streaming. Comput. Commun. 2021, 177, 133–155.

38. Mean Opinion Score (MOS) Terminology in the ITU-T P.800 Standard Released by ITU in 1996. Available online: https://www.itu.int/rec/T-REC-P.800.1-201607-I/en (accessed on 22 March 2024).

| Symbol | Description |
|---|---|
| | The subjective video quality perceived by the user |
| k | The instability index of video playback |
| I | Video library |
| | The i-th segment of the 360° video |
| V | A 360° video |
| | The 3 areas divided in the screen |
| | The m-th tile |
| | The weights for each region |
| | The bit rate of the tiles transferred |
| | Network bandwidth at time t |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, X.; Nie, J.; Zhang, X.; Li, C.; Zhu, Y.; Liu, Y.; Tian, K.; Guo, J. MNCATM: A Multi-Layer Non-Uniform Coding-Based Adaptive Transmission Method for 360° Video. Electronics 2024, 13, 4200. https://doi.org/10.3390/electronics13214200
