Abstract

Visual SLAM systems in indoor dynamic environments suffer from low positioning accuracy and poor mapping, caused by low-quality dynamic object masks. To address this, we propose YOD-SLAM, an indoor dynamic VSLAM algorithm built on ORB-SLAM3 that uses the YOLOv8 model and depth information. Firstly, the YOLOv8 model obtains the original masks of a priori dynamic objects, and depth information is used to correct these masks. Secondly, the average depth and center point of each mask are used to determine whether an a priori dynamic object has been missed by detection and whether its mask needs to be redrawn. Then, the mask edge distance and depth information are used to judge the motion state of non-prior dynamic objects. Finally, all dynamic object information is removed, and the remaining static objects are used for pose estimation and dense point cloud mapping. Camera positioning accuracy and dense point cloud map quality are verified on the TUM RGB-D dataset and on real-environment data. The results show that, in dynamic scenes, YOD-SLAM achieves higher positioning accuracy and better dense point cloud maps than other advanced SLAM systems such as DS-SLAM and DynaSLAM.

1. Introduction

For mobile robots to perform well in unfamiliar environments, they must be able to localize themselves accurately and perceive their surroundings. Simultaneous Localization And Mapping (SLAM) is one of the critical technologies for this [1]. Mainstream open-source visual SLAM (VSLAM) systems such as SVO [2], PTAM [3], DTAM [4], and ORB-SLAM3 [5] are mostly suited to static environments or environments with little dynamic interference; they do not work properly in indoor environments with substantial dynamic interference. Thanks to the development of deep learning, detection and segmentation networks such as SegNet [6], PSPNet [7], YOLO [8], U-Net [9], and BlitzNet [10] have made significant progress in identifying a priori objects. Driven by this work, a series of open-source visual SLAM systems suitable for indoor dynamic environments have emerged, such as CNN-SLAM [11], NICE-SLAM [12], DS-SLAM [13], and DynaSLAM [14].

However, existing semantic visual SLAM systems still suffer from three problems: masks do not accurately cover a priori dynamic objects, segmentation models easily miss a priori dynamic objects that occupy small pixel areas, and non-prior dynamic objects are difficult to distinguish. These problems cause errors in visual odometry, contaminate the map with dynamic object information, and make loop closure detection fail.

This paper addresses these problems and proposes YOD-SLAM, a new semantic SLAM system for indoor dynamic environments. YOD-SLAM is developed on ORB-SLAM3, which by itself is suited only to static environments. In the tracking thread of YOD-SLAM, YOLOv8s [15] obtains semantic and mask information about objects in the environment. The original masks of a priori dynamic objects are corrected using the depth information from the RGB-D camera. Based on a constant velocity motion model, the predicted average depth and center point of the mask are used to determine whether an a priori dynamic object has been missed by detection. If so, they are used to redraw the missing mask. Non-prior dynamic objects are identified from the edge distance and edge depth between masks. Finally, the information on all dynamic objects is eliminated so that subsequent operations obtain reliable pose estimates and clear dense point cloud maps.

The main contributions of this paper are as follows:

- To improve the mask's coverage of a priori dynamic objects, a method of optimizing their original masks using a depth filtering mechanism is proposed. The method first limits the effective depth range of the camera, then uses the average depth of the mask to filter out mask pixels that do not belong to the a priori dynamic object, and finally dilates the mask.

- To better cover a priori dynamic objects with small pixel areas, a method is proposed that uses the average depth and center point of the mask to detect missed a priori dynamic objects and redraw their masks. This method first uses a constant velocity motion model to predict the average depth and center point of the a priori dynamic object mask in the current frame. It then determines whether a detection has been missed based on these two quantities. Finally, if a detection was missed, it searches for pixels near the predicted center point that match the expected depth range and redraws the mask.

- To avoid the adverse effects of non-prior dynamic objects on the system, a method is proposed to identify non-prior dynamic objects using mask edge and depth information. The method uses the distance and depth of the mask edges between dynamic objects and a priori static objects to determine whether a dynamic object has interacted with an a priori static object. If an interaction occurs, the a priori static object is treated as a non-prior dynamic object and is removed in subsequent operations.

- We compared our system with ORB-SLAM3, DS-SLAM, and DynaSLAM on the widely used Technical University of Munich (TUM) RGB-D dataset [16] and evaluated it in real indoor environments. The experimental results show that our system has the best camera positioning accuracy of the four systems on three high-dynamic sequences: fr3/w/half, fr3/w/rpy, and fr3/w/xyz. On low-dynamic and static sequences, it either trails the other SLAM systems by a small margin or offers a modest improvement. In mask processing experiments, YOD-SLAM effectively covers all dynamic objects in the image. In both the dense point cloud experiments and the real-world experiments, it builds clear and accurate dense point cloud maps. We conclude that YOD-SLAM can perform visual SLAM tasks in indoor dynamic environments.

2. Related Works

Ul et al. [17] used dense optical tracking to establish the initial pose of each frame and K-means spatial scene clustering to improve dynamic target recognition. Their experiments show that this optimizes the multi-view geometry and improves efficiency and real-time processing in dynamic scenes. Cheng et al. [18] proposed a sparse motion removal (SMR) model based on a Bayesian framework, which detects the dynamic and static areas of the input frame; the frame, with its dynamic areas removed, is then passed to the SLAM pipeline. Jeon et al. [19] made SLAM in dynamic environments more robust by integrating scene flow analysis and a filtering-based Conditional Random Field optimization strategy, and by using static feature points on dynamic objects. Zhong et al. [20] used a sliding window mechanism and a mask-matching algorithm to remove dynamic feature points from the object detection results. They also built an online object database and imposed semantic constraints to optimize the camera pose and map, which effectively handles interference in dynamic environments. Yang et al. [21] introduced scene-semantics-based adaptive dynamic object segmentation and feature updating, together with a novel AD-keyframe selection mechanism. This improves the system's positioning and mapping accuracy and processing speed under complex dynamic conditions while maintaining its robustness. Wang et al. [22] used super point segmentation to generate probability grids that distinguish dynamic and static features, and then used only the feature points in low-probability grids for camera motion estimation, improving the stability and accuracy of SLAM in dynamic scenes. Wei et al. [23] optimized the filtering strategy for dynamic feature points by assigning different motion levels to different types of dynamic targets, reducing the impact of dynamic targets on the positioning accuracy of the VSLAM system. Zhang et al. [24] used optical flow and depth data to build a Gaussian model after instance segmentation and used the nonparametric Kolmogorov–Smirnov test to distinguish dynamic feature points. They also designed a Bayesian data association algorithm that fuses feature point descriptors with their spatial information to reduce matching errors.

Yang et al. [25] used two deep learning models to obtain depth images and semantic features, respectively, and combined semantic features, local depth comparison, and multi-view projection constraints to filter dynamic feature points. Gou et al. [26] designed a lightweight semantic segmentation network for workshop scenarios. They then computed the dynamic probability of feature points using a module that integrates an edge constraint, an epipolar constraint, and a position constraint, and removed dynamic key points to reduce their impact on visual odometry. Cai et al. [27] used BRISK descriptors for feature points, incorporated affine transformation into ORB feature extraction, normalized samples, and performed image restoration. This addressed the problems of too few extracted feature points and frequent keyframe loss. Li, A. et al. [28] iteratively updated the motion probability of dynamic key points using Bayes' theorem, combining the results of a geometric model and Mask R-CNN semantic segmentation, and eliminated dynamic feature points. This improved the stability and accuracy of visual odometry in dynamic environments. Ai et al. [29] proposed a dynamic object probability (DOP) model to calculate the probability that feature points belong to moving objects, thus improving the efficiency of dynamic target detection. Ran et al. [30] proposed a context mask correction method based on Bayesian updating to improve the accuracy of the segmentation mask. It treats the previous frame's segmentation as the prior and the current frame's segmentation as the likelihood, and then updates the mask. Li, X. et al. [31] proposed dividing the current frame into grid regions. A region containing dynamic key points is called a dynamic region, and the remaining regions are static. If a static region is surrounded by more than five dynamic regions, it is also regarded as dynamic, which removes dynamic points more accurately and reduces the number of static points removed by mistake. Qian et al. [32] proposed an optimal grid map background inpainting algorithm based on a grid flow model. It uses the static information of adjacent frames to repair the current frame's RGB and depth images and remove dynamic information, providing more high-quality key point matches for pose estimation. Li et al. [33] proposed a VSLAM system that uses a segmentation model to remove most of the prior dynamic object information and then uses LK optical flow to remove dynamic key points. However, the system did not fully utilize the depth information provided by RGB-D cameras. The YDD-SLAM proposed by Cong et al. [34] uses YOLOv5 to subdivide nonrigid objects (people) into three subcategories: “person”, “heads”, and “hands”. By calculating the depth ranges of these three types of objects and combining them with the depth range of the human body, the dynamic feature points of the human body can be determined and eliminated. The SEG-SLAM system proposed by Cong et al. [35] handles clearly dynamic targets by using mask information to eliminate interior points and depth information to evaluate boundary points. For potentially dynamic objects, epipolar geometry is used to evaluate the dynamic properties of feature points, further improving the robustness of the system.

Despite numerous efforts by previous researchers to improve the robustness of visual SLAM systems in indoor dynamic environments, some issues remain unresolved. Firstly, the lightweight segmentation models adopted for real-time performance cannot precisely cover a priori dynamic objects. For example, the mask often misses the edges of the human body. Secondly, lightweight segmentation models struggle to segment prior dynamic objects with small pixel areas, such as people far from the camera. Thirdly, non-prior dynamic objects are poorly discriminated. These issues reduce pose estimation accuracy and contaminate maps with dynamic object information.

3. System Description

3.1. Overview of YOD-SLAM

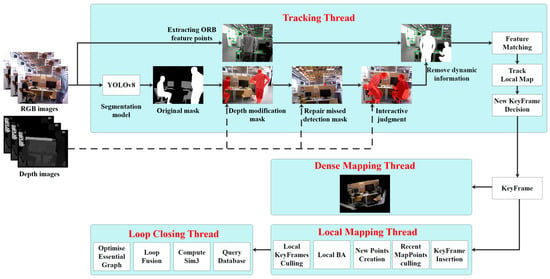

Figure 1 shows the structure of the proposed YOD-SLAM, which is built on the ORB-SLAM3 system. The system uses depth information both to modify prior dynamic object masks and to identify non-prior dynamic objects, with the emphasis on mask processing. This improves pose estimation accuracy and reduces ghosting of dynamic objects in dense point cloud maps.

Figure 1.

Overview of YOD-SLAM.

In the YOD-SLAM system, YOLOv8s first obtains semantic information and the original masks. These original masks sometimes fail to cover dynamic objects properly. Secondly, an a priori dynamic object usually differs noticeably in depth from the a priori static objects in the background, so depth information is used to modify the original mask for more accurate coverage. Thirdly, YOLOv8s is weak at segmenting objects with small pixel areas, so small prior dynamic objects are often missed, which leaves ghosting in the dense point cloud maps. If objects move with nearly constant velocity between adjacent frames, the mask's average depth and center point change regularly. These two quantities can therefore be used to determine whether an a priori dynamic object has been missed in the current frame and, if so, to complete its mask. Fourthly, humans, the most common indoor dynamic objects, often interact with a priori static objects, which then become dynamic; we call these non-prior dynamic objects. A non-prior dynamic object identification step based on mask edge and depth information is therefore added. Finally, the RGB and depth images, with all dynamic objects removed, are passed to the ORB-SLAM3 system for key point extraction and matching, pose estimation, dense point cloud mapping, and other processes.

3.2. Get Semantic Information from YOLOv8

The YOLOv8s model is used for segmentation in the YOD-SLAM system. YOLOv8 is a one-stage detection and segmentation network that performs object detection and segmentation in a single forward pass, so it has good real-time performance. The YOLOv8s model trained on the COCO dataset can recognize 80 common object categories (person, chair, couch, potted plant, bed, dining table, etc.) and simultaneously obtain the masks of these prior objects.

In this paper, masks are divided into prior dynamic objects and prior static objects according to object category. Prior dynamic objects are objects with a high probability of being dynamic, so their poses are often unreliable. Humans, cats, and dogs are common prior dynamic objects in indoor environments. Prior static objects are all other objects, such as chairs, cups, books, and keyboards in indoor scenes.

In this paper, we refer to prior static objects that are in motion as non-prior dynamic objects. They also adversely affect pose estimation and mapping.

3.3. Using a Depth Filtering Mechanism to Modify the Mask

The masks that YOLOv8s obtains from RGB images often do not cover prior dynamic objects well. Both over-coverage and under-coverage degrade positioning and mapping accuracy. Fortunately, the RGB-D camera provides depth images in addition to RGB images. An a priori dynamic object usually differs noticeably in depth from the a priori static objects in the background, so this depth difference can be used to modify the original mask.

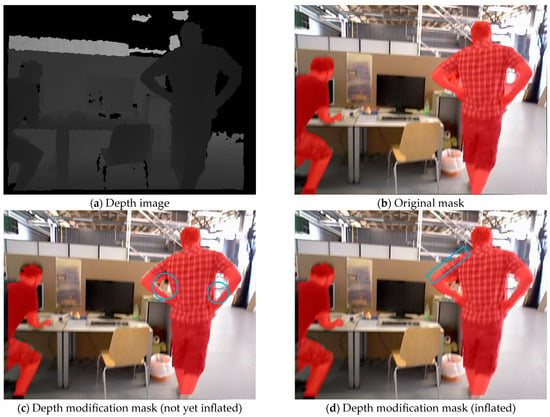

Figure 2 shows the processing of a specific frame: (a) is the depth image passed into this stage, (b) is the original mask obtained by YOLOv8, (c) is the depth-modified mask, and (d) is the modified mask after dilation.

Figure 2.

The process of modifying prior dynamic object masks using depth information. (a) The depth image corresponding to the current frame. The proposed algorithm removes in (c) the background area that is excessively covered in (b). After dilation, the edges of the human body are better covered in (d).

The accuracy of an RGB-D camera's depth image decreases as the distance between the object and the camera increases. In addition, the depth of objects that are too close cannot be measured correctly. Therefore, the depth image is trimmed using the following formula:

$$
D(u,v) \leftarrow \begin{cases} D(u,v), & D_{\min} \le D(u,v) \le D_{\max} \\ 0, & \text{otherwise} \end{cases}
$$

where $D(u,v)$ is the depth value at coordinate $(u,v)$ in the depth image, and $D_{\min}$ and $D_{\max}$ are the minimum and maximum thresholds, whose values depend on the accuracy of the RGB-D camera. Generally speaking, the more accurate the RGB-D camera, the wider its threshold range. Pixels outside the threshold range are assigned a depth value of 0 and do not participate in pose estimation and mapping.
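The sketch below shows one way to implement this trimming step. It assumes the depth image is stored as a list of rows of depths in metres. The function name `trim_depth` and the threshold values in the example are illustrative assumptions, because the paper does not report the thresholds it uses.

```python
def trim_depth(depth, d_min, d_max):
    """Return a copy of `depth` (rows of metres) with out-of-range values set to 0.

    Pixels whose depth lies outside [d_min, d_max] are treated as unreliable:
    they get depth 0 and are ignored by later pose estimation and mapping.
    """
    return [[d if d_min <= d <= d_max else 0.0 for d in row] for row in depth]


if __name__ == "__main__":
    # Hypothetical thresholds; real values depend on the RGB-D sensor.
    D_MIN, D_MAX = 0.3, 4.0
    frame = [[0.1, 1.2, 5.0],
             [2.5, 0.0, 3.9]]
    print(trim_depth(frame, D_MIN, D_MAX))  # [[0.0, 1.2, 0.0], [2.5, 0.0, 3.9]]
```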

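Let $\mathcal{M} = \{(u_i, v_i)\}_{i=1}^{N}$ denote the set of pixel coordinates in a prior object mask, where $N$ is the total number of pixels in the mask. The geometric center $(u_c, v_c)$ and the average depth $\bar{d}$ of the mask are calculated as follows:

$$
u_c = \frac{1}{N}\sum_{i=1}^{N} u_i, \qquad v_c = \frac{1}{N}\sum_{i=1}^{N} v_i, \qquad \bar{d} = \frac{1}{N}\sum_{i=1}^{N} D(u_i, v_i)
$$

where $D(u_i, v_i)$ is the depth value at point $(u_i, v_i)$.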
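The center and average depth are plain averages over the mask pixels. The sketch below assumes, hypothetically, that a mask is a collection of `(u, v)` pixel coordinates (u = column, v = row) and that the depth image is indexed as `depth[v][u]`.

```python
def mask_center_and_depth(mask, depth):
    """Geometric centre (u_c, v_c) and average depth of a mask.

    mask  : iterable of (u, v) pixel coordinates (u = column, v = row)
    depth : depth image indexed as depth[v][u], in metres
    """
    pixels = list(mask)
    n = len(pixels)
    if n == 0:
        raise ValueError("empty mask")
    u_c = sum(u for u, _ in pixels) / n
    v_c = sum(v for _, v in pixels) / n
    # Every mask pixel counts, including any that depth trimming set to 0.
    d_avg = sum(depth[v][u] for u, v in pixels) / n
    return (u_c, v_c), d_avg
```

In this form, pixels that trimming set to 0 still count toward the average depth. Skipping them would be a reasonable variant, but the text does not say which approach is used.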

The pixels in the prior dynamic object mask $\mathcal{M}$ are traversed, and pixels that do not belong to the object are removed using the following rule, where $\bar{d}$ is the average depth of the mask:

$$
\mathcal{M}' = \left\{ (u,v) \in \mathcal{M} \;:\; \left| D(u,v) - \bar{d} \right| \le T_D \right\}
$$

$T_D$ is the depth-difference threshold, which prevents pixels that belong to the object from being wrongly excluded. $T_D$ is an empirical value, set to 40 cm in this article.
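The depth filtering step can be sketched as follows under an assumed data layout: the mask is a set of `(u, v)` pairs and the depth image is indexed as `depth[v][u]` in metres. The default threshold of 0.40 m mirrors the 40 cm stated above. The function name is hypothetical.

```python
def depth_filter_mask(mask, depth, t_d=0.40):
    """Drop mask pixels whose depth differs from the mask's mean depth by more than t_d.

    mask  : iterable of (u, v) pixel coordinates
    depth : depth image indexed as depth[v][u], in metres
    t_d   : depth-difference threshold in metres (40 cm in the text)
    """
    pixels = list(mask)
    if not pixels:
        return set()
    d_avg = sum(depth[v][u] for u, v in pixels) / len(pixels)
    return {(u, v) for u, v in pixels if abs(depth[v][u] - d_avg) <= t_d}
```

The mean is taken over the whole original mask, so background pixels pull it toward the background. In the test scene, five person pixels at 2.0 m outweigh one background pixel at 3.5 m (mean 2.25 m), so only the background pixel is removed.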

To ensure that the mask adequately covers the prior dynamic objects and to avoid extracting ORB key points around these objects, the mask must be dilated twice using a dilation kernel, resulting in a total dilation of 4 pixels.
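The text does not give the kernel size. Assuming a square 5 × 5 kernel, each pass grows the mask by 2 pixels, which matches the stated total of 4 pixels after two passes. The pure-Python sketch below follows that reading. With OpenCV, the equivalent would typically be `cv2.dilate(mask, np.ones((5, 5), np.uint8), iterations=2)`.

```python
def dilate(mask, width, height, radius=2, iterations=2):
    """Binary dilation of a pixel set with a (2*radius+1) x (2*radius+1) square kernel.

    Each pass grows the mask by `radius` pixels in every direction, so the
    default (assumed) settings grow it by 4 pixels in total. Results are
    clipped to an image of size width x height.
    """
    current = set(mask)
    for _ in range(iterations):
        grown = set()
        for u, v in current:
            for du in range(-radius, radius + 1):
                for dv in range(-radius, radius + 1):
                    nu, nv = u + du, v + dv
                    if 0 <= nu < width and 0 <= nv < height:
                        grown.add((nu, nv))
        current = grown
    return current
```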

Prior dynamic objects typically differ significantly in depth from the static environment. Static objects such as books, by contrast, often differ only slightly in depth from their surroundings, so depth filtering would be ineffective for them. Therefore, the depth filtering mechanism modifies only prior dynamic object masks.

3.4. Missing Detection Judgment and Redrawing Using Depth Information

In indoor dynamic environments, people often move far from the camera. Partly because the segmentation model is lightweight, it performs poorly on prior dynamic objects with small pixel areas.

Based on the characteristics of indoor dynamic scenes, we adopt a constant velocity motion model, which assumes that the velocity of objects in the camera's field of view changes very little between adjacent frames. Past frames can therefore be used to predict the center point and average depth of the mask in the current frame.

The predicted center point and average depth are then used to determine whether a prior dynamic object has been missed. When a detection is missed, the mask is redrawn around the predicted center point using the predicted average depth.

Figure 3 takes the processing of a particular frame as an example to illustrate the process of using depth information to redraw the previously missed dynamic object mask. In this figure, we present the depth image corresponding to this frame (a), the original mask with missed detections obtained by the YOLOv8 segmentation model (b), and the mask image processed by our method (c).

Figure 3.

The process of redrawing the mask of a missed prior dynamic object. (a) The depth image corresponding to the current frame. In (b), the original mask does not cover the person in the distance, i.e., a missed detection. The mask in (c) is obtained by filling in that location using the corresponding depth information in (a).

We assume that, for a prior dynamic object, the acceleration of the mask center point and of the mask's average depth is negligible over adjacent frames; in other words, the average accelerations satisfy:

$$
\bar{\mathbf{a}}_c \approx \mathbf{0}, \qquad \bar{a}_d \approx 0
$$

The average velocity of the object over the past adjacent frames can be used to predict where the object should be. Let $\mathbf{c}_i = (u_c, v_c)_i$ be the mask center point of a prior dynamic object in frame $i$. Its average velocity is calculated as:

$$
\bar{\mathbf{v}}_c = \frac{\mathbf{c}_{k-1} - \mathbf{c}_{k-n}}{(n-1)\,\Delta t}
$$

where $n$ is the total number of frames used to calculate $\bar{\mathbf{v}}_c$, i.e., how many frames before the missed detection are used; $k$ denotes the current frame; and $\Delta t$ is the reciprocal of the RGB camera frame rate. The expected center point in the current frame is then:

$$
\hat{\mathbf{c}}_k = \mathbf{c}_{k-1} + \bar{\mathbf{v}}_c\,\Delta t
$$

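Similarly, with $\bar{d}_i$ the average mask depth in frame $i$, the expected average depth of the mask in the current frame is:

$$
\bar{v}_d = \frac{\bar{d}_{k-1} - \bar{d}_{k-n}}{(n-1)\,\Delta t}, \qquad \hat{d}_k = \bar{d}_{k-1} + \bar{v}_d\,\Delta t
$$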
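Under the constant velocity model described above, the prediction is a linear extrapolation from the last frames in which the object was detected. The sketch below illustrates this and is not the authors' code. It assumes the history holds the last n observations, oldest first, and averages the velocity over the n − 1 intervals they span. The frame interval cancels out of the final prediction, but it is kept to mirror the velocity-based description. The function name is hypothetical.

```python
def predict_next(history, dt):
    """Constant-velocity prediction of the next observation from past frames.

    history : the last n observations (oldest first), each a tuple such as
              (u_c, v_c) for a mask centre or (d_avg,) for an average depth
    dt      : frame interval in seconds (1 / frame rate)
    """
    n = len(history)
    if n < 2:
        raise ValueError("need at least two past frames")
    first, last = history[0], history[-1]
    # Average velocity over the n - 1 intervals spanned by the history.
    velocity = tuple((b - a) / ((n - 1) * dt) for a, b in zip(first, last))
    # Extrapolate one frame ahead from the most recent observation.
    return tuple(x + vel * dt for x, vel in zip(last, velocity))
```

The same function serves both the 2-D center and the scalar depth. For example, `predict_next([(2.0,), (1.9,), (1.8,)], 1 / 30)` extrapolates the depth to roughly 1.7 m.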

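Using the Euclidean distance, we find the prior dynamic object mask center point $\mathbf{c}_k^{j}$ detected in the current frame $k$ that is nearest to the expected center point $\hat{\mathbf{c}}_k$, and obtain the minimum distance $\delta_c$:

$$
\delta_c = \min_{j} \left\lVert \mathbf{c}_k^{j} - \hat{\mathbf{c}}_k \right\rVert_2
$$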

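The difference between the average depth of the pixels in a neighborhood $\mathcal{N}(\hat{\mathbf{c}}_k)$ of the expected center point and the expected average depth $\hat{d}_k$ is:

$$
\delta_d = \left| \frac{1}{|\mathcal{N}(\hat{\mathbf{c}}_k)|} \sum_{(u,v) \in \mathcal{N}(\hat{\mathbf{c}}_k)} D(u,v) - \hat{d}_k \right|
$$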

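A prior dynamic object is judged to have been missed in the current frame when no detected mask lies near the expected center point but the depth at that location matches the expected depth:

$$
\text{missed} = \left(\delta_c > \tau_c\right) \wedge \left(\delta_d < \tau_d\right)
$$

Here, $\tau_c$ and $\tau_d$ are the thresholds for $\delta_c$ and $\delta_d$, respectively.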
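The missed-detection test combines two comparisons. The sketch below assumes one plausible reading of their directions: a detection counts as missed when no detected mask center lies within a distance threshold of the predicted center, yet the mean depth near the predicted center is within a depth threshold of the predicted depth (i.e., something is there that the segmenter did not label). The window size, the thresholds, and the choice to ignore zero-depth pixels are all hypothetical.

```python
import math


def is_missed_detection(pred_center, pred_depth, detected_centers, depth,
                        dist_thresh, depth_thresh, half_window=5):
    """Hypothetical missed-detection test for one tracked prior dynamic object.

    pred_center      : predicted mask centre (u, v) in the current frame
    pred_depth       : predicted average mask depth (metres)
    detected_centers : centres (u, v) of prior dynamic masks found by the segmenter
    depth            : trimmed depth image indexed as depth[v][u]; 0 = invalid
    """
    # Distance from the predicted centre to the nearest detected mask centre.
    if detected_centers:
        delta_c = min(math.dist(pred_center, c) for c in detected_centers)
    else:
        delta_c = math.inf

    # Mean valid depth in a square window around the predicted centre.
    height, width = len(depth), len(depth[0])
    uc, vc = round(pred_center[0]), round(pred_center[1])
    values = [
        depth[v][u]
        for v in range(max(0, vc - half_window), min(height, vc + half_window + 1))
        for u in range(max(0, uc - half_window), min(width, uc + half_window + 1))
        if depth[v][u] > 0
    ]
    if not values:
        return False  # no measurable depth there, so nothing to redraw
    delta_d = abs(sum(values) / len(values) - pred_depth)

    # Missed: no detection nearby, yet something sits at the expected depth.
    return delta_c > dist_thresh and delta_d < depth_thresh
```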

When a detection is missed, it is only necessary to search the area centered on the expected center point $\hat{\mathbf{c}}_k$ for pixels whose depth lies within the expected depth range around $\hat{d}_k$. These pixels are added to the prior dynamic object mask sequence to form the tracking mask.
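The redraw step can be sketched as follows, assuming a square search window around the predicted center and a symmetric tolerance around the predicted depth. The window half-width and tolerance are hypothetical, because the text does not give them.

```python
def redraw_mask(pred_center, pred_depth, depth, half_window=20, depth_tol=0.3):
    """Hypothetical redraw of a missed mask from the predicted centre and depth.

    Collects pixels inside a square search window centred on the predicted
    centre whose depth lies within +/- depth_tol metres of the predicted depth.
    Zero-depth pixels (invalid or trimmed) are never added.
    """
    height, width = len(depth), len(depth[0])
    uc, vc = round(pred_center[0]), round(pred_center[1])
    tracked = set()
    for v in range(max(0, vc - half_window), min(height, vc + half_window + 1)):
        for u in range(max(0, uc - half_window), min(width, uc + half_window + 1)):
            d = depth[v][u]
            if d > 0 and abs(d - pred_depth) <= depth_tol:
                tracked.add((u, v))
    return tracked
```

A plain window can also capture unrelated surfaces that happen to lie at the same depth. Growing a connected region from the predicted center would be a stricter alternative, though the text does not say which approach is used.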

Note also that pixels too far from the camera have already been excluded from SLAM by depth trimming. Sometimes the redrawn tracking mask does not fully cover a prior dynamic object in the RGB image. The uncovered pixels in such cases lie beyond the depth limit and do not affect the system. For example, in Figure 3c, the person's hair is not entirely covered by the tracking mask, but this does not affect the system.

3.5. Using Mask Edge and Depth Information to Determine Non-Prior Dynamic Objects

A force is needed to change an object's state of motion, so an a priori static object in an indoor scene rarely moves on its own. Generally, its motion state changes only when a dynamic object interacts with it. We call such an object a non-prior dynamic object; it affects positioning and mapping. To address this, the edge distance and depth relationship between the dynamic object and the prior static object are used to judge the motion state of the prior static object.

Figure 4 shows the process of identifying non-prior dynamic objects, where (a) and (b) are the depth map and the original mask, respectively. In (c), the chair is identified as a non-prior dynamic object after the person interacts with it.

Figure 4.

The process of excluding prior static objects in motion.

Non-prior dynamic objects are identified as follows. First, find pairs of pixels whose edge distance is less than 6 pixels, each pair consisting of a pixel on the edge of a dynamic object mask and a pixel on the edge of a prior static object mask. When the depth difference within these pixel pairs is less than the depth threshold, an interaction is considered to have occurred between the dynamic object and the prior static object. The prior static object is then regarded as a non-prior dynamic object, and its information is deemed unreliable.
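The interaction test can be sketched as follows, assuming masks are sets of `(u, v)` pixels and an edge pixel is one with at least one 4-neighbor outside its mask. The 6-pixel edge distance follows the text, while the 0.1 m depth threshold is a hypothetical value. This sketch reports an interaction as soon as any close edge pair has similar depth. Requiring every close pair to agree would be a stricter reading.

```python
import math


def edge_pixels(mask):
    """Pixels of `mask` (a set of (u, v)) with at least one 4-neighbour outside it."""
    return {
        (u, v) for u, v in mask
        if any((u + du, v + dv) not in mask
               for du, dv in ((1, 0), (-1, 0), (0, 1), (0, -1)))
    }


def interacts(dynamic_mask, static_mask, depth, max_edge_dist=6.0, depth_thresh=0.1):
    """True if some close pair of edge pixels (dynamic, static) also has similar depth.

    depth is indexed as depth[v][u]; pixels with depth 0 are ignored.
    """
    static_edge = [(q, depth[q[1]][q[0]]) for q in edge_pixels(static_mask)]
    for p in edge_pixels(dynamic_mask):
        dp = depth[p[1]][p[0]]
        if dp <= 0:
            continue  # no valid depth at this dynamic edge pixel
        for q, dq in static_edge:
            if dq > 0 and math.dist(p, q) < max_edge_dist and abs(dp - dq) < depth_thresh:
                return True
    return False
```

If the test returns `True`, the prior static object's mask would be added to the dynamic masks and excluded from tracking and mapping.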

Note that the red mask in Figure 4c has already undergone depth modification and dilation. Missing values in the depth image (a) leave areas such as the right chair and the top of the person's head incompletely covered in (c). This does not affect the pose estimation or dense point cloud mapping performed by the SLAM system.

4. Experimental Results

In this section, we compare the performance of YOD-SLAM with ORB-SLAM3, DS-SLAM, and DynaSLAM on the TUM RGB-D dataset [16]. DynaSLAM and DS-SLAM are well-established open-source visual SLAM systems whose dynamic-object handling makes them capable of visual SLAM tasks in indoor dynamic environments.

The TUM RGB-D dataset provides a variety of dynamic scenes in which the camera moves in predefined patterns, together with the camera's ground truth trajectory for quantitative analysis. In the sitting and desk sequences, zero to two people chat, gesture, or move objects at a table. In the walking sequences, two people chat, walk, gesture, or move objects at the table. The sitting and desk sequences represent low-dynamic environments, while the walking sequences represent high-dynamic environments. The camera has four motion modes: xyz, in which the camera moves slowly along the x, y, and z axes; rpy, in which the camera rotates slowly about its principal axes (roll, pitch, and yaw); halfsphere, in which the camera moves along a hemispherical track; and static, in which the camera hardly moves.

We compared the four visual SLAM systems on four high-dynamic sequences (fr3/w), three low-dynamic sequences (fr3/s and fr2/desk/p), and one static sequence (fr2/rpy, a desk with sundries). The dynamic sequences test whether the improvements effectively handle dynamic interference. The purely static sequence confirms whether they harm SLAM in static environments.

The computer configuration was as follows: Intel Core i7-11800H CPU (Santa Clara, CA, USA), 16 GB RAM, NVIDIA RTX 3050 GPU, and the Ubuntu 18.04 operating system.

4.1. Positioning Accuracy Experiments

In this section, we evaluate camera positioning accuracy using the absolute trajectory error (ATE) and the relative pose error (RPE) [16]. ATE measures the difference between corresponding points of the ground truth and estimated trajectories. It is a crucial indicator of the global consistency and accuracy of a SLAM system. RPE measures the local error over a given time interval and can be used to evaluate the drift of visual odometry; it is divided into translational RPE and rotational RPE. This section uses the root mean square error (RMSE) and standard deviation (S.D.) to characterize ATE and RPE. RMSE reflects the accuracy of the metric, while S.D. reflects its stability.
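The sketch below computes these ATE and RPE statistics in pure Python and rests on several assumptions. The estimated and ground-truth trajectories must already be associated by timestamp and aligned (the TUM benchmark tools, e.g., `evaluate_ate.py` and `evaluate_rpe.py`, handle that step). Poses are `(R, t)` pairs with a 3 × 3 rotation matrix. S.D. is taken as the population standard deviation. For each frame pair, the RPE error composes the inverse of the ground-truth relative motion with the estimated relative motion.

```python
import math


def rmse_and_sd(errors):
    """Root mean square error and (population) standard deviation of errors."""
    n = len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mean = sum(errors) / n
    sd = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    return rmse, sd


def ate_errors(gt_positions, est_positions):
    """Per-pose translational ATE; inputs must already be associated and aligned."""
    return [math.dist(p, q) for p, q in zip(gt_positions, est_positions)]


def _transpose(R):
    return [list(col) for col in zip(*R)]


def _matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def _matvec(A, x):
    return [sum(A[i][k] * x[k] for k in range(3)) for i in range(3)]


def _relative(pa, pb):
    """Relative motion pa^-1 * pb for poses given as (R, t)."""
    (Ra, ta), (Rb, tb) = pa, pb
    RaT = _transpose(Ra)
    return _matmul(RaT, Rb), _matvec(RaT, [b - a for a, b in zip(ta, tb)])


def rpe_errors(gt_poses, est_poses, delta=1):
    """Translational (units of t) and rotational (degrees) RPE for each frame pair."""
    trans, rot = [], []
    for i in range(len(gt_poses) - delta):
        Rg, tg = _relative(gt_poses[i], gt_poses[i + delta])
        Re, te = _relative(est_poses[i], est_poses[i + delta])
        # Error transform: (ground-truth motion)^-1 * (estimated motion).
        RgT = _transpose(Rg)
        R_err = _matmul(RgT, Re)
        t_err = _matvec(RgT, [e - g for e, g in zip(te, tg)])
        trans.append(math.sqrt(sum(x * x for x in t_err)))
        cos_angle = (R_err[0][0] + R_err[1][1] + R_err[2][2] - 1.0) / 2.0
        rot.append(math.degrees(math.acos(max(-1.0, min(1.0, cos_angle)))))
    return trans, rot
```

Passing the per-frame lists from `ate_errors` or `rpe_errors` to `rmse_and_sd` gives the RMSE and S.D. reported for each sequence.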

Table 1, Table 2, and Table 3 compare the ATE, translational RPE, and rotational RPE, respectively, of YOD-SLAM with those of the other three visual SLAM systems on multiple TUM RGB-D sequences. The best RMSE and S.D. for each sequence are shown in bold. The “Improvements” column gives the performance improvement of YOD-SLAM over ORB-SLAM3, calculated as:

$$
\eta = \frac{o - y}{o} \times 100\%
$$

where $\eta$ is the improvement value, $o$ is the value obtained by ORB-SLAM3, and $y$ is the value obtained by YOD-SLAM. When $\eta > 0$, YOD-SLAM outperforms ORB-SLAM3, and vice versa.
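The usual relative-improvement definition, improvement = (ORB-SLAM3 value − YOD-SLAM value) / ORB-SLAM3 value × 100%, reduces to a one-line computation, sketched below. The values in the tests are made up for illustration and are not taken from the tables.

```python
def improvement(orb_value, yod_value):
    """Relative improvement (%) of YOD-SLAM over ORB-SLAM3 for an error metric.

    Positive values mean YOD-SLAM has the smaller error; negative values mean
    ORB-SLAM3 is better on that metric.
    """
    if orb_value == 0:
        raise ValueError("ORB-SLAM3 value must be non-zero")
    return (orb_value - yod_value) / orb_value * 100.0
```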

Table 1.

Comparison of ATE results.

Table 2.

Comparison of translational RPE results.

Table 3.

Comparison of rotational RPE results.

Thanks to our processing of dynamic object masks, Table 1, Table 2, and Table 3 show that YOD-SLAM significantly improves accuracy in high-dynamic scenes. Specifically, YOD-SLAM performs best of the four systems on the high-dynamic walking sequences “fr3/w/half”, “fr3/w/rpy”, and “fr3/w/xyz”, with ATE improvements of 93.56%, 95.16%, and 97.61%, respectively. The corresponding translational RPE improvements are 88.33%, 87.14%, and 93.00%, and the rotational RPE improvements are 82.27%, 83.01%, and 87.68%. On the “fr3/w/static” sequence, YOD-SLAM is second only to DS-SLAM, yet it still improves ATE and RPE by about 90%. Overall, on the high-dynamic walking sequences, YOD-SLAM achieves average RMSE improvements of 93.50%, 89.49%, and 84.43% in ATE, translational RPE, and rotational RPE, respectively, and average S.D. improvements of 94.05%, 93.42%, and 89.40%. We conclude that our mask improvements are effective and perform well in high-dynamic scenes.

In low-dynamic and static scenes, the average RMSE improvements of YOD-SLAM in ATE, translational RPE, and rotational RPE are 11.83%, 6.34%, and −2.24%, respectively, and the average S.D. improvements are 11.58%, 11.58%, and −5.04%. The average RMSE gaps between YOD-SLAM and the best-performing system in ATE, translational RPE, and rotational RPE are 0.001198 m, 0.001811 m, and 0.025216 deg, and the average S.D. gaps are 0.001028 m, 0.000921 m, and 0.015431 deg. These differences are small, on the order of a millimeter or about 0.02 degrees. In summary, our improvements have no significant negative impact on SLAM in static scenes, where YOD-SLAM performs at the same level as ORB-SLAM3. In low-dynamic scenes, they have a positive effect, as expected from improving the dynamic object masks.

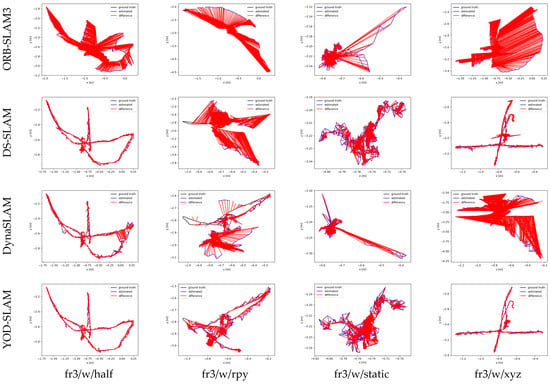

Figure 5 compares the estimated trajectories of the four systems on the high-dynamic sequences with the ground truth. The first to fourth rows show the results of ORB-SLAM3, DS-SLAM, DynaSLAM, and YOD-SLAM, respectively. The first to fourth columns correspond to the four high-dynamic sequences fr3/w/half, fr3/w/rpy, fr3/w/static, and fr3/w/xyz. In each plot, the black line is the ground truth provided by the TUM RGB-D dataset, the blue line is the trajectory estimated by the SLAM system, and the red lines connect corresponding points on the two trajectories. Shorter red lines indicate smaller trajectory estimation errors. ORB-SLAM3 clearly handles high-dynamic scenes poorly, while the three dynamic SLAM systems perform better, and YOD-SLAM performs best among them.

Figure 5.

Comparison of estimated trajectories and real trajectories of four systems.

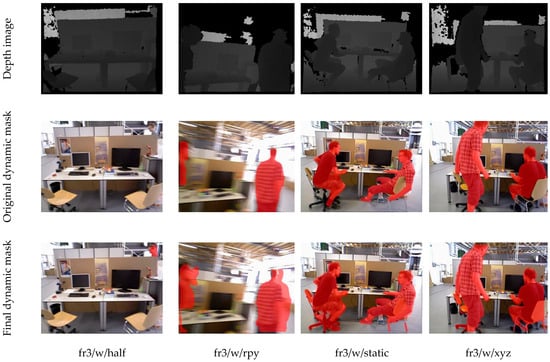

4.2. Mask Processing Experiments

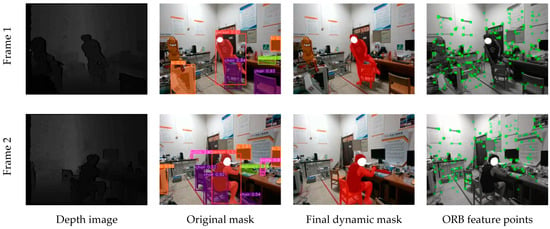

Figure 6 illustrates our mask modification using four sets of images from the four high-dynamic sequences. The three images in each column are taken at the same timestamp of the respective sequence. The first row shows the depth image of the current frame. The second row shows the original mask from the YOLOv8s segmentation model, showing only the a priori dynamic object (the human). The third row shows all final dynamic object masks produced by YOD-SLAM, including prior dynamic objects and prior static objects in motion. Each column highlights a different processing step.

Figure 6.

The results of mask modification on dynamic objects. The three images in each column are taken at the same timestamp of the respective sequence. The first row is the depth image of the current frame, the second is the original mask obtained by YOLOv8, and the third is the final mask after our modification.

In Figure 6, in the fr3/w/half image in the first column, YOLOv8 failed to detect the head in the upper-right corner. YOD-SLAM nevertheless recognized this missed detection of an a priori dynamic object from the mask's average depth and center point and completed the mask at that location.
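The exact missed-detection rule is not spelled out in this section, so the following is only a hypothetical sketch of how a mask's center point and average depth could be used. It assumes the reference is the previous frame's mask, depths are in metres, and the window size `half_win` and tolerance `depth_tol` are illustrative values of our own choosing. If the detector reports nothing near the old center, but the depth there still matches the old average depth, the object is assumed to be missed and a mask is redrawn from depth.

```python
import numpy as np


def mask_center_and_depth(mask, depth):
    """Center (row, col) of a boolean mask and mean of its valid (>0) depths."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    z = depth[rows, cols]
    z = z[z > 0]
    if z.size == 0:
        return None
    center = (int(round(float(rows.mean()))), int(round(float(cols.mean()))))
    return center, float(z.mean())


def redraw_if_missed(prev_mask, prev_depth, cur_mask, cur_depth,
                     half_win=20, depth_tol=0.3):
    """Hypothetical missed-detection check and mask redraw (depths in metres)."""
    stats = mask_center_and_depth(prev_mask, prev_depth)
    if stats is None:
        return cur_mask
    (r, c), z_prev = stats
    h, w = cur_depth.shape
    r0, r1 = max(r - half_win, 0), min(r + half_win + 1, h)
    c0, c1 = max(c - half_win, 0), min(c + half_win + 1, w)
    if cur_mask[r0:r1, c0:c1].any():
        return cur_mask  # the detector still sees something here
    z_c = cur_depth[r, c]
    if z_c <= 0 or abs(z_c - z_prev) > depth_tol:
        return cur_mask  # depth changed: the object has probably left
    # Redraw: window pixels whose valid depth matches the old mean depth.
    window = cur_depth[r0:r1, c0:c1]
    redrawn = cur_mask.copy()
    redrawn[r0:r1, c0:c1] |= (window > 0) & (np.abs(window - z_prev) <= depth_tol)
    return redrawn
```

A fixed square window keeps the sketch short. A region-growing step seeded at the center would follow irregular object outlines more closely.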

In the fr3/w/rpy image in the second column, the YOLOv8 model correctly segmented the person in the RGB image. However, because of the time offset between RGB and depth acquisition, depth sensor errors, and rapid viewpoint changes, object boundaries in the RGB image do not coincide exactly with those in the corresponding depth map. YOD-SLAM uses depth information to reduce this misalignment, which improves positioning accuracy and mapping quality.
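One way to correct such RGB/depth misalignment with depth can be sketched as follows. This is a hypothetical illustration, not necessarily the paper's filtering rule. It assumes the object depth is the median valid depth inside the detector mask. The mask is grown by a few pixels to reach object pixels it missed, then cut back to pixels whose depth is close to that object depth. The `radius` and `depth_tol` values are illustrative assumptions. Pixels without valid depth are dropped, which mirrors the missing mask regions discussed for the third and fourth columns.

```python
import numpy as np


def dilate(mask: np.ndarray, radius: int) -> np.ndarray:
    """Binary dilation with a (2*radius+1)^2 square, using only array shifts."""
    h, w = mask.shape
    out = mask.copy()
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            if abs(dr) >= h or abs(dc) >= w:
                continue  # shift moves everything out of the image
            shifted = np.zeros_like(mask)
            dst = (slice(max(dr, 0), h + min(dr, 0)), slice(max(dc, 0), w + min(dc, 0)))
            src = (slice(max(-dr, 0), h - max(dr, 0)), slice(max(-dc, 0), w - max(dc, 0)))
            shifted[dst] = mask[src]
            out |= shifted
    return out


def refine_mask(mask, depth, radius=3, depth_tol=0.4):
    """Hypothetical depth-based refinement of a detector mask (depths in metres).

    1. Take the median valid depth inside the original mask as the object depth.
    2. Grow the mask by `radius` pixels to absorb RGB/depth misalignment.
    3. Keep only grown pixels with valid depth close to the object depth.
    """
    z = depth[mask & (depth > 0)]
    if z.size == 0:
        return np.zeros_like(mask)
    z_obj = float(np.median(z))
    grown = dilate(mask, radius)
    return grown & (depth > 0) & (np.abs(depth - z_obj) <= depth_tol)
```

The median is used rather than the mean so that background pixels wrongly included by the detector do not pull the object depth away from the person.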

In the fr3/w/static and fr3/w/xyz images in the third and fourth columns, YOD-SLAM uses the mask edge distance and edge depth to identify dynamic a priori static objects, such as a chair that a person is sitting on, and excludes them. In some of the final dynamic masks, part of the mask is missing because depth is unavailable in that area or because the depth exceeds the threshold and the pixels are filtered out. Pixels at these locations do not participate in pose estimation or mapping, so their effect on the system is negligible.
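The edge-distance and edge-depth test can be illustrated with a hypothetical sketch. The exact criterion and thresholds are not given in this section, so the rule below is an assumption: find the closest pair of boundary pixels between a person mask and an a priori static object mask (e.g., a chair). The object is treated as dynamic if that pair is close in the image (`dist_px`) and at similar depth (`depth_tol`, metres), i.e., the person appears to be touching it.

```python
import numpy as np


def boundary(mask: np.ndarray) -> np.ndarray:
    """Mask pixels with at least one 4-neighbour outside the mask."""
    p = np.pad(mask, 1, constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return mask & ~interior


def is_non_prior_dynamic(obj_mask, person_mask, depth, dist_px=5.0, depth_tol=0.3):
    """Hypothetical contact test between an a priori static object and a person."""
    a = np.argwhere(boundary(obj_mask))     # (n, 2) row/col of object edge
    b = np.argwhere(boundary(person_mask))  # (m, 2) row/col of person edge
    if len(a) == 0 or len(b) == 0:
        return False
    # Pairwise edge distances; O(n*m) memory, fine for a sketch.
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    i, j = np.unravel_index(np.argmin(d), d.shape)
    if d[i, j] > dist_px:
        return False  # edges too far apart in the image
    za, zb = depth[tuple(a[i])], depth[tuple(b[j])]
    if za <= 0 or zb <= 0:
        return False  # no valid depth at the closest edge pair
    return bool(abs(za - zb) <= depth_tol)
```

The depth check is what separates real contact from mere overlap in the image: a chair far behind a walking person can be adjacent in 2-D yet several metres away in depth. For large masks, a distance transform would replace the pairwise distance matrix.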

In summary, this experiment demonstrates that, by refining the original masks, YOD-SLAM provides more accurate dynamic object masks to the tracking, mapping, and loop-closing threads.

4.3. Evaluation of Dense Point Cloud Maps

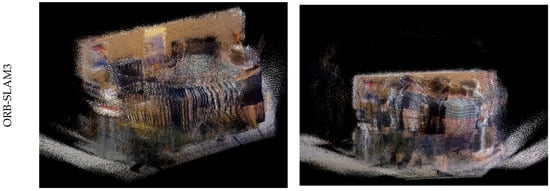

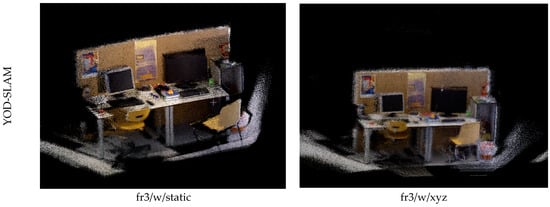

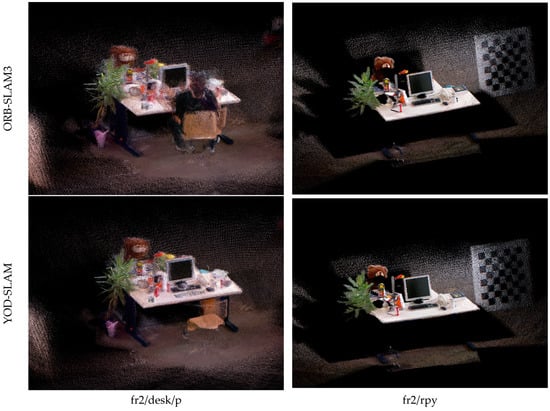

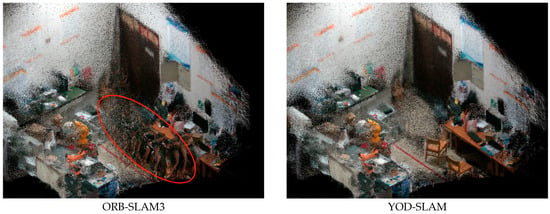

Figures 7 and 8 show the point cloud maps built by ORB-SLAM3 and YOD-SLAM on two highly dynamic sequences, one low-dynamic sequence, and one static sequence, viewed from approximately the same fixed perspective. Each column corresponds to a different sequence; the first row shows the ORB-SLAM3 result and the second row the YOD-SLAM result.

Figure 7.

Comparison of point cloud maps between ORB-SLAM3 and YOD-SLAM on two highly dynamic sequences.

Figure 8.

Comparison of point cloud maps between ORB-SLAM3 and YOD-SLAM on a low-dynamic and a static sequence: fr2/desk/p is a low-dynamic scene, and fr2/rpy is a static scene.

Figure 7 shows that ORB-SLAM3 cannot cope with dynamic objects. Because dynamic objects corrupt its pose estimation, the edges of the background board diverge severely. Because the pixels of dynamic objects are not removed, many residual human artifacts remain in the map, which may seriously impair path planning and navigation for autonomous equipment. In contrast, YOD-SLAM performs very well: it removes most dynamic object information and most residual human artifacts, and the edge contours of static objects remain sharp. Notably, in the fr3/w/xyz sequence, undetected human body parts often leave residue above and to the left of the background board, but this rarely occurs in our system. We therefore conclude that YOD-SLAM significantly improves mapping in high-dynamic scenes.
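Residual human artifacts appear when depth pixels belonging to people are back-projected into the map. The sketch below shows how a dense RGB-D map can exclude them: standard pinhole back-projection applied only to pixels with valid depth that lie outside the dynamic mask. It is an illustration, not the paper's mapping code. The intrinsics are passed in as parameters. `depth_scale=5000` matches the TUM RGB-D depth PNG convention, and `T_wc` is an assumed 4x4 camera-to-world pose supplied by tracking.

```python
import numpy as np


def backproject_static(depth_raw, dyn_mask, fx, fy, cx, cy,
                       depth_scale=5000.0, T_wc=None):
    """Back-project valid, non-dynamic depth pixels to 3-D points.

    depth_raw: (H, W) raw depth image; divided by depth_scale to get metres.
    dyn_mask:  (H, W) bool array, True where a dynamic object was found.
    T_wc:      optional 4x4 camera-to-world pose; if None, points stay in
               the camera frame.
    """
    z = depth_raw.astype(np.float64) / depth_scale
    keep = (z > 0) & ~dyn_mask       # valid depth and not dynamic
    v, u = np.nonzero(keep)          # pixel rows (v) and columns (u)
    z = z[v, u]
    x = (u - cx) * z / fx            # pinhole camera model
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=1)
    if T_wc is not None:
        pts = pts @ T_wc[:3, :3].T + T_wc[:3, 3]
    return pts
```

Because masked pixels never enter the map, any dynamic-mask pixel that the segmentation misses shows up directly as a residual artifact, which is why mask quality drives the map quality seen in Figures 7 and 8.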

Figure 8 shows that YOD-SLAM removes the person from the low-dynamic fr2/desk/p scene and reduces the interference the person causes to a priori static objects, improving mapping in low-dynamic scenes to some extent. In the purely static fr2/rpy scene, YOD-SLAM and ORB-SLAM3 achieve the same mapping performance, and no negative impact on mapping static scenes is observed. This is consistent with our conclusion from the positioning accuracy experiment.

4.4. Evaluation in the Real-World Environment

To test YOD-SLAM in real indoor dynamic scenes, we conducted indoor experiments with the Intel RealSense Depth Camera D455 (Intel, Santa Clara, CA, USA) shown in Figure 9. Compared with earlier models in the same series, the D455 offers a longer sensing range and higher accuracy [36]; its specific parameters are listed in Table 4.

Figure 9.

Intel RealSense Depth Camera D455.

Table 4.

Parameters of the RealSense D455 camera.

Figure 10 shows mask processing and ORB feature point extraction in the laboratory environment. Each row shows the processing of one frame. The first column is the depth map captured by the D455. The second column is the original YOLOv8s output, including the mask, bounding box, class label, and confidence score. The third column shows all dynamic object masks after YOD-SLAM processing. The fourth column shows the extracted ORB feature points.

Figure 10.

Mask processing and ORB feature point extraction in a real laboratory environment. Several non-English exhibition boards lean against the wall to simulate a typical indoor environment. The faces of the people have been obscured for privacy.

In both frames of Figure 10, YOD-SLAM uses the mask edge distance and edge depth to identify non-prior dynamic objects. The chairs in Frame 1 and the chairs and keyboards in Frame 2 were classified as non-prior dynamic objects, so no ORB feature points were extracted in those areas.
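Keeping ORB features out of dynamic regions can be done either by passing a region-of-interest mask to the detector (OpenCV's `Feature2D.detectAndCompute(image, mask)` accepts one, with nonzero mask pixels marking where detection is allowed) or by discarding keypoints after detection. ORB-SLAM3 ships its own ORB extractor, so the sketch below assumes the second approach. The helper `filter_static_keypoints` is hypothetical and only illustrates the idea.

```python
import numpy as np


def filter_static_keypoints(keypoints, dyn_mask):
    """Drop keypoints that fall on (or outside) the dynamic-object mask.

    keypoints: iterable of (x, y) pixel coordinates, i.e. column then row,
               the same order as OpenCV's KeyPoint.pt.
    dyn_mask:  (H, W) bool array, True where a dynamic object was found.
    """
    dyn_mask = np.asarray(dyn_mask, dtype=bool)
    h, w = dyn_mask.shape
    kept = []
    for x, y in keypoints:
        col, row = int(round(x)), int(round(y))
        if 0 <= row < h and 0 <= col < w and not dyn_mask[row, col]:
            kept.append((x, y))
    return kept
```

Since an ORB descriptor is computed from a patch around the keypoint, a keypoint just outside the mask can still sample dynamic pixels. Dilating the mask by roughly the patch radius before filtering is a simple, if conservative, remedy.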

Figure 11 compares the dense point cloud maps built by ORB-SLAM3 and YOD-SLAM in an indoor dynamic environment, viewed from approximately the same fixed angle. Whereas the ORB-SLAM3 map contains many residual human artifacts, the map built by YOD-SLAM is cleaner, and static objects in the environment are better preserved. The interference from prior dynamic objects is almost entirely eliminated.

Figure 11.

Comparison of dense point cloud mapping between ORB-SLAM3 and YOD-SLAM in real laboratory environments. We marked the map areas affected by dynamic objects with red circles.

5. Conclusions and Future Work

The experimental results show that the proposed indoor dynamic VSLAM algorithm, YOD-SLAM, effectively uses mask and depth information to accurately remove prior and non-prior dynamic objects and to detect and redraw masks that the segmentation model missed. As a result, the system achieves high positioning accuracy and good mapping quality in highly dynamic scenes, which can support the positioning, navigation, and path planning of future indoor automation equipment. The specific conclusions are as follows:

- This paper presents YOD-SLAM, a system based on ORB-SLAM3. Unlike previous studies, the main idea of YOD-SLAM is to incorporate depth information into refining a priori dynamic object masks and identifying non-prior dynamic objects, especially during mask processing, in order to improve pose estimation accuracy and reduce artifacts in dense point cloud maps. In YOD-SLAM, the YOLOv8s model is first used to obtain the original mask. Second, a depth filtering mechanism modifies the prior dynamic object mask so that it covers the prior dynamic objects as fully as possible while covering as little of the static environment as possible. Third, the mask's average depth and center point are used to determine whether a prior dynamic object was missed by the detector; if so, the mask is redrawn using both, which reduces the impact of small prior dynamic objects that the segmentation model fails to segment. Fourth, mask edge and depth information are used to identify and exclude a priori static objects that are in motion, i.e., non-prior dynamic objects. Finally, ORB feature points are extracted only from the static region, yielding higher positioning accuracy and a more accurate dense point cloud map.

- To evaluate YOD-SLAM, we compared it with ORB-SLAM3, DS-SLAM, and DynaSLAM on the TUM RGB-D dataset and conducted experiments in a real indoor environment. In the positioning accuracy experiment, YOD-SLAM performed best of the four systems in high-dynamic scenes, with ATE and RPE improved by about 90%. Our improvements also benefit SLAM in low-dynamic scenes, while in static scenes they have no negative impact and perform on par with ORB-SLAM3. In the mask processing experiment, YOD-SLAM produced more accurate dynamic object masks. In the dense point cloud map experiment, YOD-SLAM produced more precise dense point cloud maps, significantly reducing residual human artifacts, including small distant residual artifacts. YOD-SLAM performed well not only on the TUM RGB-D dataset but also in a real indoor laboratory environment. In summary, YOD-SLAM is well suited to SLAM in indoor dynamic environments, providing accurate pose estimation and precise dense point cloud maps.
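Percentage improvements such as the roughly 90% above are commonly computed in dynamic SLAM papers as the relative reduction of an error metric with respect to a baseline. We assume that definition here; the baseline (e.g., ORB-SLAM3) and the metric (ATE or RPE RMSE) are as chosen in the positioning accuracy experiment:

$$
\eta = \frac{\alpha - \beta}{\alpha} \times 100\%
$$

where $\alpha$ is the baseline's error and $\beta$ is YOD-SLAM's error on the same sequence. For hypothetical values $\alpha = 0.50\ \text{m}$ and $\beta = 0.05\ \text{m}$, $\eta = (0.50 - 0.05)/0.50 \times 100\% = 90\%$.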

In future work, we will integrate IMU data into YOD-SLAM, as additional sensor input may improve the system's robustness. Second, semantic maps are a key cornerstone for the autonomous indoor movement of unmanned devices, and we will continue working toward richer maps of the environment. Finally, YOD-SLAM currently targets indoor dynamic environments; we will continue to improve it so that it adapts to outdoor dynamic scenes and even low-texture scenes, with the goal of a general-purpose SLAM system for all scenes.

Author Contributions

All authors contributed substantially to the manuscript. Y.L. made important contributions to the study design, algorithm implementation, data management, chart production, and manuscript writing. Y.W. provided project support for the study and made important contributions to the idea of the study, as well as the writing and review of the manuscript. L.L. and Q.A. made important contributions to the literature search, data acquisition, and data analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Open Project of the Key Laboratory of Modern Measurement and Control Technology of the Ministry of Education, grant No. KF20221123205; Young Backbone Teachers Support Plan of Beijing Information Science & Technology University, grant No. YBT 202405; Industry-University Collaborative Education Program of Ministry of Education: 220606429061954.

Institutional Review Board Statement

All subjects gave their informed consent for inclusion before they participated in the study. The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of Beijing Information Science & Technology University (protocol code 2024073001R; date of approval 30 July 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The TUM RGB-D datasets that are presented in this study can be obtained from https://cvg.cit.tum.de/data/datasets/rgbd-dataset/download (accessed on 29 July 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Theodorou, C.; Velisavljevic, V.; Dyo, V.; Nonyelu, F. Visual SLAM Algorithms and Their Application for AR, Mapping, Localization and Wayfinding. Array 2022, 15, 100222.

2. Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast Semi-Direct Monocular Visual Odometry. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; IEEE: Piscataway, NJ, USA, 2014.

3. Klein, G.; Murray, D. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; IEEE: Piscataway, NJ, USA, 2007.

4. Newcombe, R.A.; Lovegrove, S.J.; Davison, A.J. DTAM: Dense Tracking and Mapping in Real-Time. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011.

5. Campos, C.; Elvira, R.; Rodriguez, J.J.G.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

6. Badrinarayanan, V.; Handa, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Robust Semantic Pixel-Wise Labelling. arXiv 2015, arXiv:1505.07293.

7. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

8. Terven, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

9. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer International Publishing: New York, NY, USA, 2015.

10. Dvornik, N.; Shmelkov, K.; Mairal, J.; Schmid, C. BlitzNet: A Real-Time Deep Network for Scene Understanding. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

11. Tateno, K.; Tombari, F.; Laina, I.; Navab, N. CNN-SLAM: Real-Time Dense Monocular SLAM with Learned Depth Prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017.

12. Zhu, Z.; Peng, S.; Larsson, V.; Xu, W.; Bao, H.; Cui, Z.; Oswald, M.R.; Pollefeys, M. NICE-SLAM: Neural Implicit Scalable Encoding for SLAM. arXiv 2021, arXiv:2112.12130.

13. Yu, C.; Liu, Z.; Liu, X.; Xie, F.; Yang, Y.; Wei, Q.; Qiao, F. DS-SLAM: A Semantic Visual SLAM Towards Dynamic Environments. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1168–1174.

14. Bescós, B.; Fácil, J.M.; Civera, J.; Neira, J. DynaSLAM: Tracking, Mapping, and Inpainting in Dynamic Scenes. IEEE Robot. Autom. Lett. 2018, 3, 4076–4083.

15. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO. Available online: https://github.com/ultralytics/ultralytics (accessed on 30 July 2024).

16. Sturm, J.; Engelhard, N.; Endres, F.; Burgard, W.; Cremers, D. A Benchmark for the Evaluation of RGB-D SLAM Systems. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; IEEE: Piscataway, NJ, USA, 2012.

17. Islam, Q.U.; Ibrahim, H.; Chin, P.K.; Lim, K.; Abdullah, M.Z.; Khozaei, F. ARD-SLAM: Accurate and Robust Dynamic SLAM Using Dynamic Object Identification and Improved Multi-View Geometrical Approaches. Displays 2024, 82, 102654.

18. Cheng, J.; Wang, C.; Meng, M.Q.H. Robust Visual Localization in Dynamic Environments Based on Sparse Motion Removal. IEEE Trans. Autom. Sci. Eng. 2020, 17, 658–669.

19. Jeon, H.; Han, C.; You, D.; Oh, J. RGB-D Visual SLAM Algorithm Using Scene Flow and Conditional Random Field in Dynamic Environments. In Proceedings of the 22nd International Conference on Control, Automation and Systems (ICCAS), Busan, Republic of Korea, 27 November–1 December 2022; IEEE: Piscataway, NJ, USA, 2022.

20. Zhong, M.; Hong, C.; Jia, Z.; Wang, C.; Wang, Z. DynaTM-SLAM: Fast Filtering of Dynamic Feature Points and Object-Based Localization in Dynamic Indoor Environments. Robot. Auton. Syst. 2024, 174, 104634.

21. Yang, L.; Cai, H. Enhanced Visual SLAM for Construction Robots by Efficient Integration of Dynamic Object Segmentation and Scene Semantics. Adv. Eng. Inform. 2024, 59, 102313.

22. Wang, C.; Zhang, Y.; Li, X. PMDS-SLAM: Probability Mesh Enhanced Semantic SLAM in Dynamic Environments. In Proceedings of the 5th International Conference on Control, Robotics and Cybernetics (CRC), Wuhan, China, 16–18 October 2020; IEEE: Piscataway, NJ, USA, 2020.

23. Wei, B.; Zhao, L.; Li, L.; Li, X. Research on RGB-D Visual SLAM Algorithm Based on Adaptive Target Detection. In Proceedings of the IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), Zhengzhou, China, 14–17 November 2023; IEEE: Piscataway, NJ, USA, 2023.

24. Zhang, J.; Yuan, L.; Ran, T.; Peng, S.; Tao, Q.; Xiao, W.; Cui, J. A Dynamic Detection and Data Association Method Based on Probabilistic Models for Visual SLAM. Displays 2024, 82, 102663.

25. Yang, L.; Wang, L. A Semantic SLAM-Based Dense Mapping Approach for Large-Scale Dynamic Outdoor Environment. Measurement 2022, 204, 112001.

26. Gou, R.; Chen, G.; Yan, C.; Pu, X.; Wu, Y.; Tang, Y. Three-Dimensional Dynamic Uncertainty Semantic SLAM Method for a Production Workshop. Eng. Appl. Artif. Intell. 2022, 116, 105325.

27. Cai, L.; Ye, Y.; Gao, X.; Li, Z.; Zhang, C. An Improved Visual SLAM Based on Affine Transformation for ORB Feature Extraction. Optik 2021, 227, 165421.

28. Li, A.; Wang, J.; Xu, M.; Chen, Z. DP-SLAM: A Visual SLAM with Moving Probability Towards Dynamic Environments. Inf. Sci. 2021, 556, 128–142.

29. Ai, Y.; Rui, T.; Yang, X.-Q.; He, J.-L.; Fu, L.; Li, J.-B.; Lu, M. Visual SLAM in Dynamic Environments Based on Object Detection. Def. Technol. 2021, 17, 1712–1721.

30. Ran, T.; Yuan, L.; Zhang, J.; Tang, D.; He, L. RS-SLAM: A Robust Semantic SLAM in Dynamic Environments Based on RGB-D Sensor. IEEE Sens. J. 2021, 21, 20657–20664.

31. Li, X.; Guan, S. SIG-SLAM: Semantic Information-Guided Real-Time SLAM for Dynamic Scenes. In Proceedings of the 35th Chinese Control and Decision Conference (CCDC), Yichang, China, 20–22 May 2023; IEEE: Piscataway, NJ, USA, 2023.

32. Qian, R.; Guo, H.; Chen, M.; Gong, G.; Cheng, H. A Visual SLAM Algorithm Based on Instance Segmentation and Background Inpainting in Dynamic Scenes. In Proceedings of the 38th Youth Academic Annual Conference of Chinese Association of Automation (YAC), Hefei, China, 27–29 August 2023; IEEE: Piscataway, NJ, USA, 2023.

33. Li, Y.; Wang, Y.; Lu, L.; Guo, Y.; An, Q. Semantic Visual SLAM Algorithm Based on Improved DeepLabv3+ Model and LK Optical Flow. Appl. Sci. 2024, 14, 5792.

34. Cong, P.; Liu, J.; Li, J.; Xiao, Y.; Chen, X.; Feng, X.; Zhang, X. YDD-SLAM: Indoor Dynamic Visual SLAM Fusing YOLOv5 with Depth Information. Sensors 2023, 23, 9592.

35. Cong, P.; Li, J.; Liu, J.; Xiao, Y.; Zhang, X. SEG-SLAM: Dynamic Indoor RGB-D Visual SLAM Integrating Geometric and YOLOv5-Based Semantic Information. Sensors 2024, 24, 2102.

36. Intel RealSense. Intel RealSense Depth Camera D455. Available online: https://store.intelrealsense.com/buy-intel-realsense-depth-camera-d455.html (accessed on 30 July 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).