In order to ensure the validity and trustworthiness of our model, we analyze some important properties. CPNs can be used to verify information-theory-related properties, such as data consistency and reliability, through state space analyses or model checking techniques. The formal analysis of MSCFS-RP includes two parts. The first part is about state space statistics that are automatically generated by CPN Tools. The second part involves the verification of properties.

6.2. Property Specifications

In this section, several critical properties are verified for our model using CPN Tools. These properties are abstracted from functional requirements, and many of them follow [7]. The nine properties are listed below. We first discuss properties P1–P7 (Table 5) and then analyze properties P8–P9, since their configurations are different. Note that these property specifications are written in ASK-CTL and ML within CPN Tools, combining temporal logic with a functional language. Further details regarding their syntax and semantics can be found in [33,34].

- P1 Deadlock freedom: no infinite occurrence sequences in the state space;

- P2 Writing correctness: a file to write (if it is the first writing to this file) is finally written to the cluster;

- P3 Reading correctness: a file to read (if the file exists in the cluster) is finally received by the client;

- P4 Mutual exclusion: a file is being written by at most one writer at any time;

- P5 Writing once: a file can be written only once;

- P6 Minimal distance: a file is read from the closest storage node for a request;

- P7 Replication consistency: the replications should be the same after being confirmed by the meta server;

- P8 Writing robustness: a file cannot be written to a storage node that breaks down;

- P9 Reading robustness: a file can be read successfully when some of its storage nodes break down.

Here, deadlock freedom indicates that there is no infinite loop in the state space of a CPN model. In the state space report automatically generated by CPN Tools, this property is reported under the Fairness Properties item: if “No infinite occurrence sequences” appears there, we say that deadlock freedom holds.

Writing correctness means that every first-time writing request has a corresponding storage result in the cluster. The main auxiliary functions are Function 1 and Function 2 (see more auxiliary functions in Appendix A). For each writing request q in the initial state, the function IsOneWritten(n, q) should be satisfied. Given an initial marking, if for all the state space nodes of an MSCFS-RP model it is possible to reach a state where this holds, we say the model’s writing is correct. For a writing request (tid, typ, fid), we check whether this file has already been written. If so, the corresponding tokens should be in the places WriteMoreThanOnce and FileLocation, respectively. Otherwise, it should hold that (1) the place FileLocation has a token (fid, locs) for the corresponding file, and (2) for the first location (nid, bid), namely the location returned to the client, the data of the block bid in the node nid are the same as the data to write in the client.

Function 1 IsAllWritten

```sml
(* Holds at node n if every write query of the initial state has been served. *)
fun IsAllWritten init n =
  if List.null (GetWQueries init)
  then true
  else List.all (IsOneWritten n) (GetWQueries init)
```

The description of Function 1 using mathematical formalism is as follows. Let init be the initial state and n be a node identifier. The function IsAllWritten(init, n) is defined in terms of GetWQueries(init), which retrieves the list of write queries from the initial state. If this list is empty, the function returns true. Otherwise, it checks whether the function IsOneWritten(n, q) returns true for all q in the list of write queries.
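Read off from the ML source of Function 1, this definition can be sketched as the following case expression. The quantifier notation and the empty-list symbol are assumptions made here for illustration and are not taken from the text.

```latex
\[
\mathit{IsAllWritten}(\mathit{init}, n) =
\begin{cases}
\mathit{true}, & \text{if } \mathit{GetWQueries}(\mathit{init}) = [\,] \\
\forall q \in \mathit{GetWQueries}(\mathit{init}) :\ \mathit{IsOneWritten}(n, q), & \text{otherwise}
\end{cases}
\]
```

Because a universal quantifier over an empty list is vacuously true, the first case is logically redundant; it mirrors the explicit List.null test in the code.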

Function 2 IsOneWritten

```sml
fun IsOneWritten n q =
  let val (tid, WRITE, fid) = q in
    (* Case 1: a repeated write, recorded in WriteMoreThanOnce, for a file that already has a location. *)
    if List.exists (fn x => x = q) (Mark.Prepare'WriteMore_ThanOnce 1 n) andalso
       List.exists (fn x => (#1 x) = fid) (Mark.MetaServer'File_Location 1 n)
    then true
    else
      (* Case 2: a first write; the block at the first location must equal the client's data. *)
      let val floc = GetFileLocation n fid in
        if isSome(floc)
        then
          let val (_, loc::_) = valOf(floc);
              val bdata = GetNodeBlock n loc;
              val wdata = GetWrittenData n tid in
            if isSome(bdata) andalso isSome(wdata)
            then #2 (valOf(bdata)) = #2 (valOf(wdata))
            else false
          end
        else false
      end
  end
```

The description of Function 2 using mathematical formalism is as follows. Let n be a node identifier and q = (tid, WRITE, fid) be a query tuple, where tid is a task ID, WRITE is the operation type, and fid is a file ID. The function IsOneWritten(n, q) returns true if q exists in the marking Prepare'WriteMore_ThanOnce(1, n) and the file ID fid exists in the marking MetaServer'File_Location(1, n). If not, the function checks whether the file location floc is retrievable using GetFileLocation(n, fid). If floc is found, the function further checks whether both the block data bdata from GetNodeBlock(n, loc) and the written data wdata from GetWrittenData(n, tid) are retrievable. If both are present, the function compares the second elements of bdata and wdata. If they match, the function returns true; otherwise, and whenever floc, bdata, or wdata cannot be retrieved, it returns false.

Similar to writing correctness, reading correctness means that for every reading request, the file to read (if it exists in the cluster) can be returned. There are also two main auxiliary functions for reading correctness, shown in Function 3 and Function 4. The only differences between reading correctness and writing correctness are that (1) reading requests instead of writing requests in a scenario are considered, (2) a request to read a file that does not exist is recorded in the place ReadNotExist, and (3) the data of the corresponding location are checked against the result received by the client in the place Result rather than against the data in the place WritingData.

Function 3 IsAllRead

```sml
(* Holds at node n if every read query of the initial state has been served. *)
fun IsAllRead init n =
  if List.null (GetRQueries init)
  then true
  else List.all (IsOneRead n) (GetRQueries init)
```

Function 4 IsOneRead

```sml
fun IsOneRead n q =
  let val (tid, READ, fid) = q in
    (* A request for a file that does not exist is recorded in ReadNotExist. *)
    if List.exists (fn x => x = q) (Mark.Prepare'Read_NotExist 1 n)
    then true
    else
      let val floc = GetFileLocation n fid in
        if isSome(floc)
        then
          let val (_, loc::_) = valOf(floc);
              val bdata = GetNodeBlock n loc;
              val rdata = GetResult n tid in
            (* The stored block must equal the result received by the client. *)
            if isSome(bdata) andalso isSome(rdata)
            then #2 (valOf(bdata)) = #2 (valOf(rdata))
            else false
          end
        else false
      end
  end
```

The description of Function 3 using mathematical formalism is as follows. Let init be the initial state and n be a node identifier. The function IsAllRead(init, n) is defined in terms of GetRQueries(init), which retrieves the list of read queries from the initial state init. If this list is empty, the function returns true. Otherwise, it checks whether the function IsOneRead(n, q) returns true for all q in the list of read queries.
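As a case expression derived from the ML source of Function 3, the definition can be sketched as follows; the notation is an illustrative assumption, not a formula taken from the text.

```latex
\[
\mathit{IsAllRead}(\mathit{init}, n) =
\begin{cases}
\mathit{true}, & \text{if } \mathit{GetRQueries}(\mathit{init}) = [\,] \\
\forall q \in \mathit{GetRQueries}(\mathit{init}) :\ \mathit{IsOneRead}(n, q), & \text{otherwise}
\end{cases}
\]
```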

The description of Function 4 using mathematical formalism is illustrated as follows. Let n be a node identifier and q = (tid, READ, fid) be a query tuple, where tid is a task ID, READ is the operation type, and fid is a file ID. The function IsOneRead(n, q) returns true if q exists in the marking Prepare’Read_NotExist(1, n). If not, the function checks if the file location floc is retrievable using GetFileLocation(n, fid). If floc is found, the function further checks if both block data bdata from GetNodeBlock(n, loc) and result data rdata from GetResult(n, tid) are retrievable. If both data sets are present, the function compares the second elements of bdata and rdata. If they match, the function returns true; otherwise, it returns false.

As shown in Function 5, mutual exclusion emphasizes that a file cannot be written by more than one writer at the same time. We use a place called WritingFile to record the identifier of the file that is being written. This place is also used to control write–write and write–read conflicts: while a writer is writing a file, any other writer or reader of the same file has to wait until the writing is finished. As the following function shows, to check mutual exclusion, we only need to determine whether there are two tokens with the same file identifier in the place WritingFile.

Function 5 IsMutualExclusion

```sml
(* No file identifier may appear twice in the place WritingFile. *)
fun IsMutualExclusion n =
  not (duplicated (List.concat (Mark.Entry'Writing_File 1 n)))
```

The description of Function 5 using mathematical formalism is as follows. Let n be a node identifier. The function IsMutualExclusion(n) returns true if there are no duplicate tokens in the concatenated list of markings from Mark.Entry'Writing_File(1, n). This is formally expressed below, where duplicated(x) is a function that checks whether there are any duplicate elements in the list x:

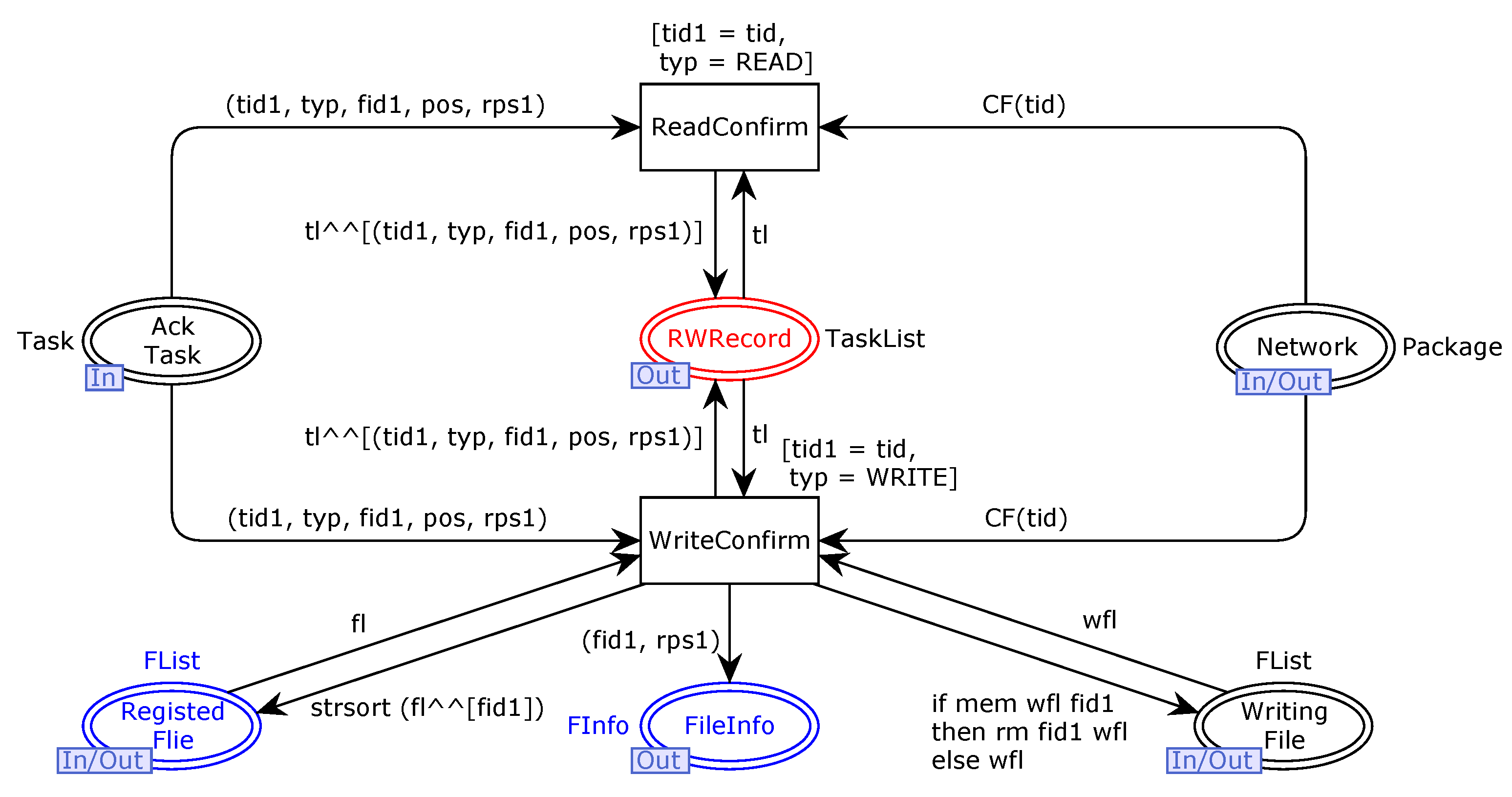
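The helper duplicated is not listed in this section. A minimal sketch of how such a check could be written in Standard ML is given below; the definition, the two sample markings, and the assumption that each token of WritingFile is a list of file identifiers are all hypothetical and serve only to illustrate the test.

```sml
(* Hypothetical helper: true iff some element occurs more than once in the list.
   It compares every element with the elements after it, so it is quadratic,
   which is acceptable for the small markings of a single state. *)
fun duplicated [] = false
  | duplicated (x :: xs) =
      List.exists (fn y => y = x) xs orelse duplicated xs

(* Made-up stand-ins for the marking of WritingFile in one state. *)
val okMarking  = [["File1"], ["File2"]]
val badMarking = [["File1"], ["File1"]]

(* Same shape as the body of IsMutualExclusion, applied to the stand-ins. *)
val ok  = not (duplicated (List.concat okMarking))
val bad = not (duplicated (List.concat badMarking))
```

Here ok evaluates to true and bad to false, since "File1" appears twice in the second marking.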

In Function 6, “writing once” indicates that each file cannot be written more than once. As stated in [6], the write-once–read-many access model for files simplifies data consistency issues and enables high-throughput data access. This property can be checked simply by determining whether there are two writing records of the same file in the place RWRecord.

Function 6 IsWriteOnce

```sml
(* The file IDs of all WRITE records in RWRecord must be pairwise distinct. *)
fun IsWriteOnce n =
  not (duplicated (List.map (fn x => #3 x)
    (List.filter (fn x => #2 x = WRITE)
      (List.concat (Mark.Confirm'RWRecord 1 n)))))
```

The description of Function 6 using mathematical formalism is as follows. Let n be a node identifier. The function IsWriteOnce(n) checks for duplicates in the list of file IDs (denoted as #3 x) that are associated with the WRITE operation (denoted as #2 x). Specifically, it first concatenates the marking Confirm'RWRecord(1, n), filters this list to retain only those entries whose second element is equal to WRITE, maps the filtered list to extract the third element (the file ID), and then checks for duplicates in the resulting list. The function returns true if there are no duplicates.
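The concatenate, filter, map, and check pipeline of Function 6 can be sketched as a single list comprehension. In the formula below, the comprehension notation and the shorthand M_RWRecord(n) for the marking Confirm'RWRecord(1, n) are assumptions introduced for illustration.

```latex
\[
\mathit{IsWriteOnce}(n) = \neg\, \mathit{duplicated}\bigl(
  [\, \#3(x) \mid x \in \mathit{concat}(M_{\mathit{RWRecord}}(n)),\ \#2(x) = \mathit{WRITE} \,]
\bigr)
\]
```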

As shown in Function 7 and Function 8, minimal distance means that a file is read from the closest replication node. We use the function mindist(pos, rnl) to compute which replication node has the minimal distance. If the node that served the read is the one with the minimal distance, the function IsOneMinimal(n, r) returns true; otherwise, it returns false. The minimal distance property should hold for all the reading records in RWRecord.

Function 7 IsAllMinimal

```sml
(* Holds at node n if every read record was served by the closest replica. *)
fun IsAllMinimal n =
  if List.null (getRRecord n)
  then true
  else List.all (IsOneMinimal n) (getRRecord n)
```

The description of Function 7 using mathematical formalism is as follows. Let n be a node identifier. The function IsAllMinimal(n) is defined in terms of getRRecord(n), which retrieves the list of read records for node n. If this list is empty, the function returns true. Otherwise, it checks whether the function IsOneMinimal(n, r) returns true for all r in the list of read records.
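Following the ML source of Function 7, the definition can be sketched as the case expression below, where r ranges over the read records; the notation is an illustrative assumption.

```latex
\[
\mathit{IsAllMinimal}(n) =
\begin{cases}
\mathit{true}, & \text{if } \mathit{getRRecord}(n) = [\,] \\
\forall r \in \mathit{getRRecord}(n) :\ \mathit{IsOneMinimal}(n, r), & \text{otherwise}
\end{cases}
\]
```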

Function 8 IsOneMinimal

```sml
fun IsOneMinimal n r =
  let val (_, _, fid, pos, [(nid, _)]) = r;
      val nl = List.concat (Mark.MetaServer'NodeInfo 1 n);
      val floc = List.find (fn x => (#1 x) = fid)
                   (Mark.MetaServer'File_Location 1 n) in
    if isSome(floc)
    then
      (* Restrict the known nodes to those holding a replica of fid. *)
      let val nids = List.map (fn (nid, _) => nid) (#2 (valOf(floc)));
          val rnl = List.filter (fn (nid, _, _, _) =>
                      (List.exists (fn x => x = nid) nids)) nl;
          val ni = mindist pos rnl in
        (* The node that served the read must be the closest replica. *)
        nid = #1 ni
      end
    else false
  end
```

The description of Function 8 using mathematical formalism is as follows. Let n be a node identifier and r = (_, _, fid, pos, [(nid, _)]) be a tuple representing a read record, where fid denotes the file ID, pos denotes the position of the request, and nid denotes the node that served the read. The function IsOneMinimal(n, r) first builds a list nl by concatenating all node information from the marking Mark.MetaServer'NodeInfo(1, n). It then searches the marking Mark.MetaServer'File_Location(1, n) for a file location floc whose first element matches fid. If floc exists, the function extracts the list nids of all node identifiers associated with floc and filters nl to obtain rnl, which contains the entries whose node identifier occurs in nids. The function then computes ni = mindist(pos, rnl), the node in rnl closest to pos. If the first element of ni matches nid, the function returns true; otherwise, including when floc does not exist, it returns false.
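The function mindist is not listed in this section. The following is a minimal sketch of one way such a function could look, not the definition used in the model. It assumes that a position is a pair of integers, that a node information tuple has the shape (nid, x, y, capacity), and that distance is measured with the Manhattan metric; all three are hypothetical, as are the sample nodes and coordinates.

```sml
(* Hypothetical shapes: pos = (x, y); node info = (nid, x, y, capacity). *)
type pos = int * int
type nodeinfo = string * int * int * int

(* Assumed metric: Manhattan distance between the request and a node. *)
fun dist ((px, py) : pos) ((_, x, y, _) : nodeinfo) =
  abs (px - x) + abs (py - y)

(* Return the node of the list closest to p; on a tie the earliest node wins.
   Raises Empty on an empty list, so callers must pass at least one replica. *)
fun mindist (p : pos) ([] : nodeinfo list) : nodeinfo = raise Empty
  | mindist p (first :: rest) =
      List.foldl (fn (cand, best) =>
                    if dist p cand < dist p best then cand else best)
                 first rest

(* Made-up replicas and request position. *)
val replicas = [("A", 2, 10, 5), ("B", 1, 20, 5), ("C", 4, 1, 5)]
val nearest = #1 (mindist (1, 26) replicas)
```

With these sample values the distances are 17, 6, and 28, so nearest is "B". Under this sketch, IsOneMinimal would return true only for a read record served by "B".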

In Function 9 and Function 10, replication consistency means that all the replications of a file should be the same after its writing request is confirmed. First, as shown in the function IsOneConsistent(n, ar), for one writing request, all the data in the locations of a writing record should be the same. Then, replication consistency should hold for each writing request in the place RWRecord. Note that replication consistency might not be satisfied during writing, but it holds after the meta server confirms the writing, at which point a new token is generated in RWRecord to record it.

Function 9 IsAllConsistent

```sml
(* Holds at node n if every confirmed write record has identical replicas. *)
fun IsAllConsistent n =
  if List.null (getWRecord n)
  then true
  else List.all (IsOneConsistent n) (getWRecord n)
```

The description of Function 9 using mathematical formalism is as follows. Let n denote a node identifier. The function IsAllConsistent(n) is defined in terms of getWRecord(n), which retrieves the list of write records associated with node n. If getWRecord(n) is empty, indicating that no write records exist, the function returns true. Otherwise, it checks whether the function IsOneConsistent(n, ar) returns true for all ar in the list getWRecord(n).
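In the same style as the ML source of Function 9, the definition can be sketched as the case expression below, where ar ranges over the write records; the notation is an illustrative assumption.

```latex
\[
\mathit{IsAllConsistent}(n) =
\begin{cases}
\mathit{true}, & \text{if } \mathit{getWRecord}(n) = [\,] \\
\forall \mathit{ar} \in \mathit{getWRecord}(n) :\ \mathit{IsOneConsistent}(n, \mathit{ar}), & \text{otherwise}
\end{cases}
\]
```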

Function 10 IsOneConsistent

```sml
fun IsOneConsistent n ar =
  let val (_, _, _, loc::rps) = ar;
      val b = GetNodeBlock n loc;
      val bs = GetNodeBlockList n rps in
    (* The first block and every replica must exist and carry the same data. *)
    (isSome b) andalso List.all (fn b' => (isSome b') andalso
      (#2 (valOf(b)) = #2 (valOf(b')))) bs
  end
```

The description of Function 10 using mathematical formalism is as follows. Let n denote a node identifier and let ar = (_, _, _, loc::rps) be a record tuple, where loc denotes a location and rps denotes the replication pipelining sequence. The function IsOneConsistent(n, ar) first retrieves the block b from GetNodeBlock(n, loc) and the list bs of blocks from GetNodeBlockList(n, rps). It returns true if and only if all of the following conditions hold; otherwise, it returns false:

- b is retrievable (i.e., isSome(b) is true);

- all blocks in bs are retrievable (i.e., isSome(b') for all b' ∈ bs);

- for all b' ∈ bs, the second elements of b and b' are equal (#2(valOf(b)) = #2(valOf(b'))).
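The three conditions can be exercised outside CPN Tools on plain option values. The sketch below repeats the boolean core of Function 10 under the assumption that a block is a pair (blockId, data) and that NONE models a block that cannot be retrieved; the type block, the function name consistent, and the sample values are hypothetical.

```sml
(* Hypothetical block shape: (blockId, data). NONE models an unretrievable block. *)
type block = int * string

(* Same boolean structure as the body of IsOneConsistent. *)
fun consistent (b : block option) (bs : block option list) : bool =
  isSome b andalso
  List.all (fn b' => isSome b' andalso #2 (valOf b) = #2 (valOf b')) bs

val allSame    = consistent (SOME (1, "abc")) [SOME (7, "abc"), SOME (9, "abc")]
val oneDiffers = consistent (SOME (1, "abc")) [SOME (7, "abd")]
val oneMissing = consistent (SOME (1, "abc")) [SOME (7, "abc"), NONE]
val noReplica  = consistent (SOME (1, "abc")) []
```

Here allSame and noReplica evaluate to true, while oneDiffers and oneMissing evaluate to false. The noReplica case shows that a record with a single location is trivially consistent, because List.all holds on an empty list.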

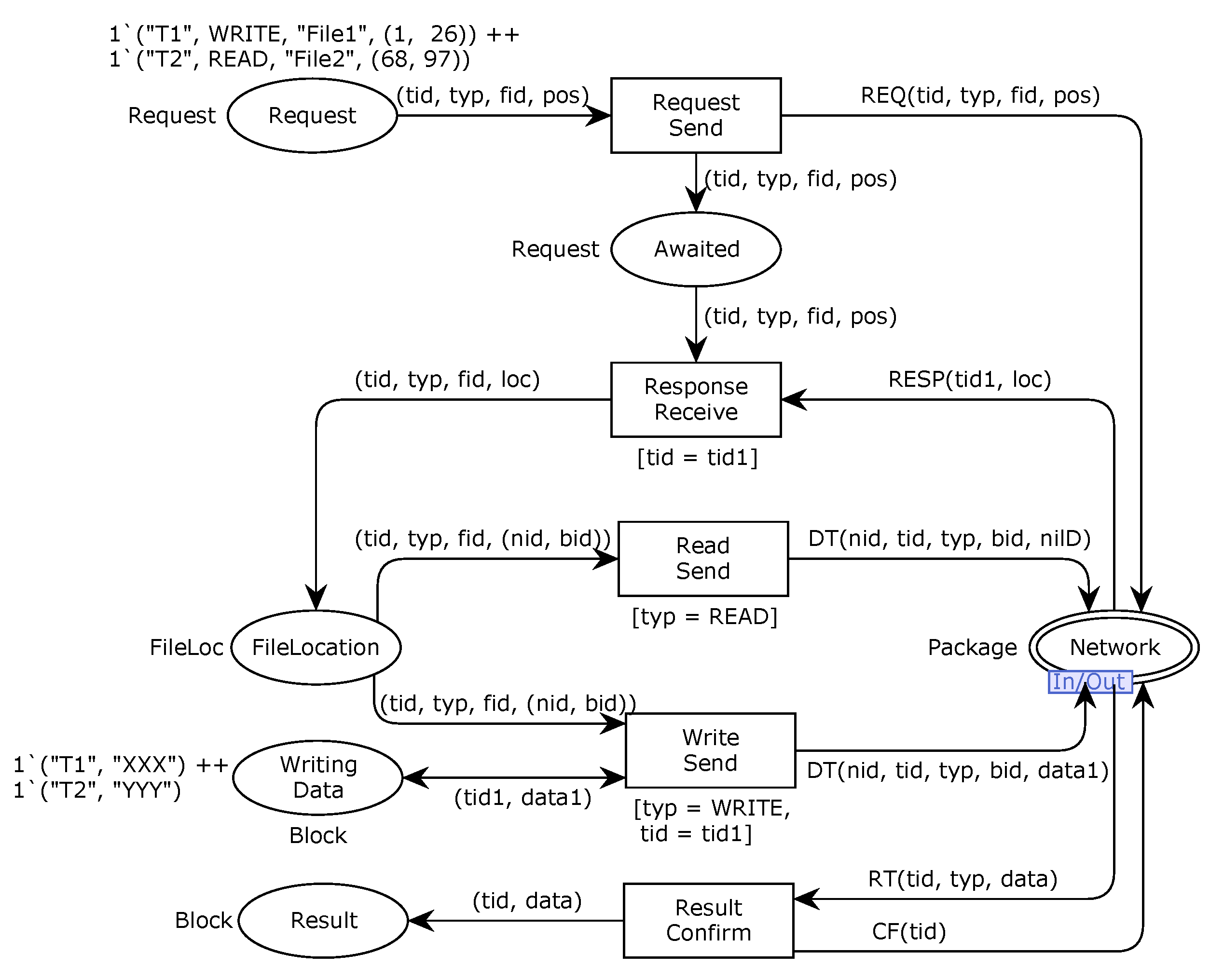

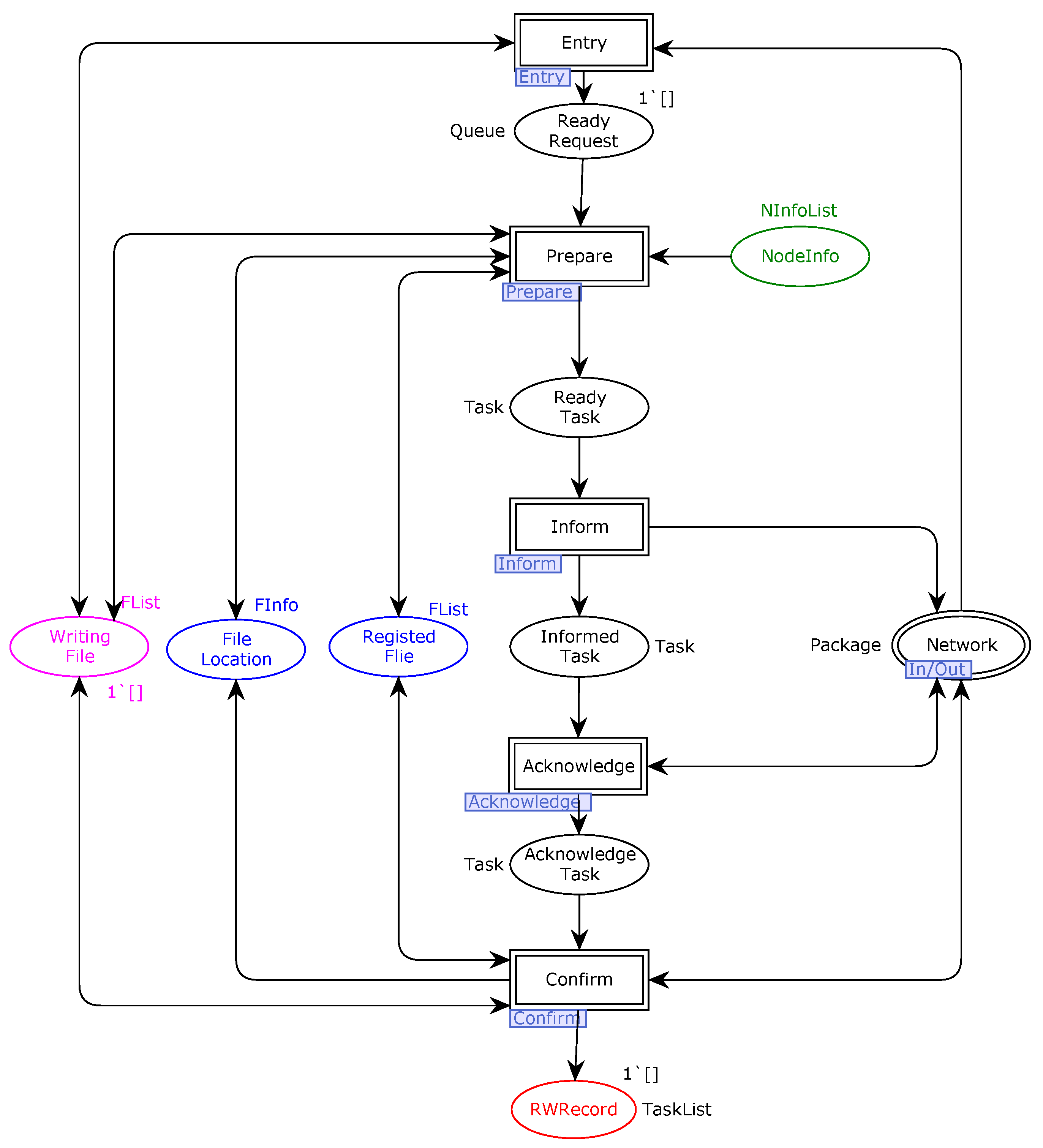

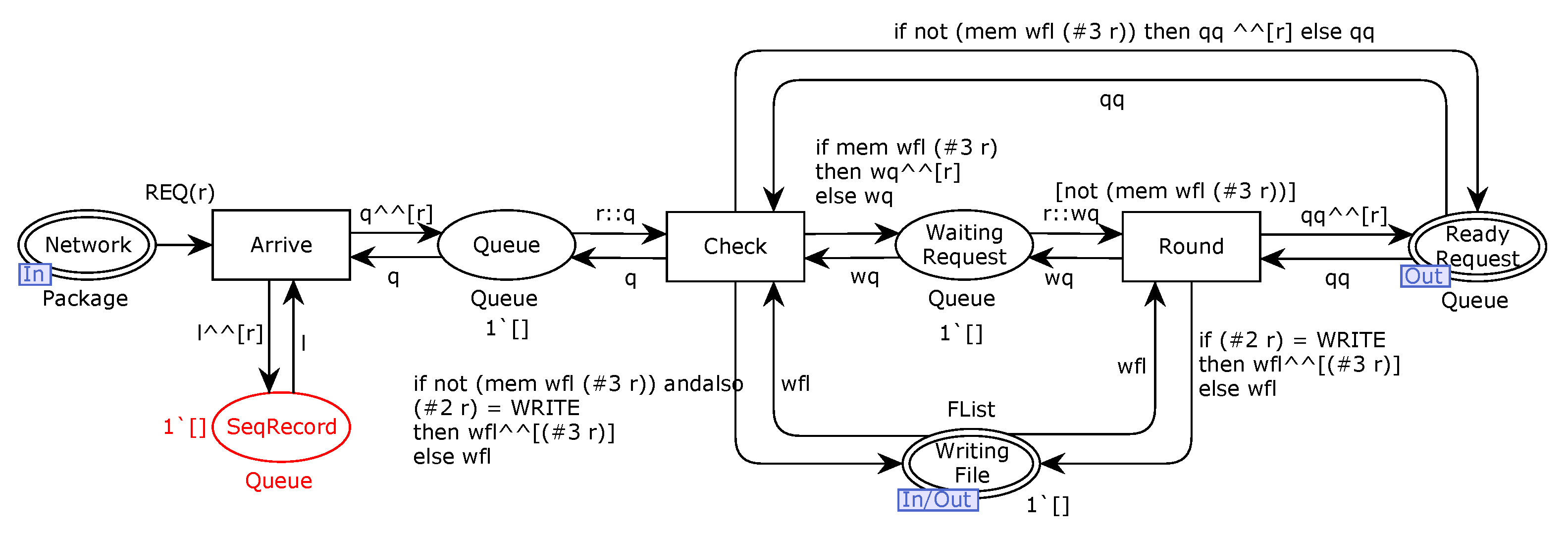

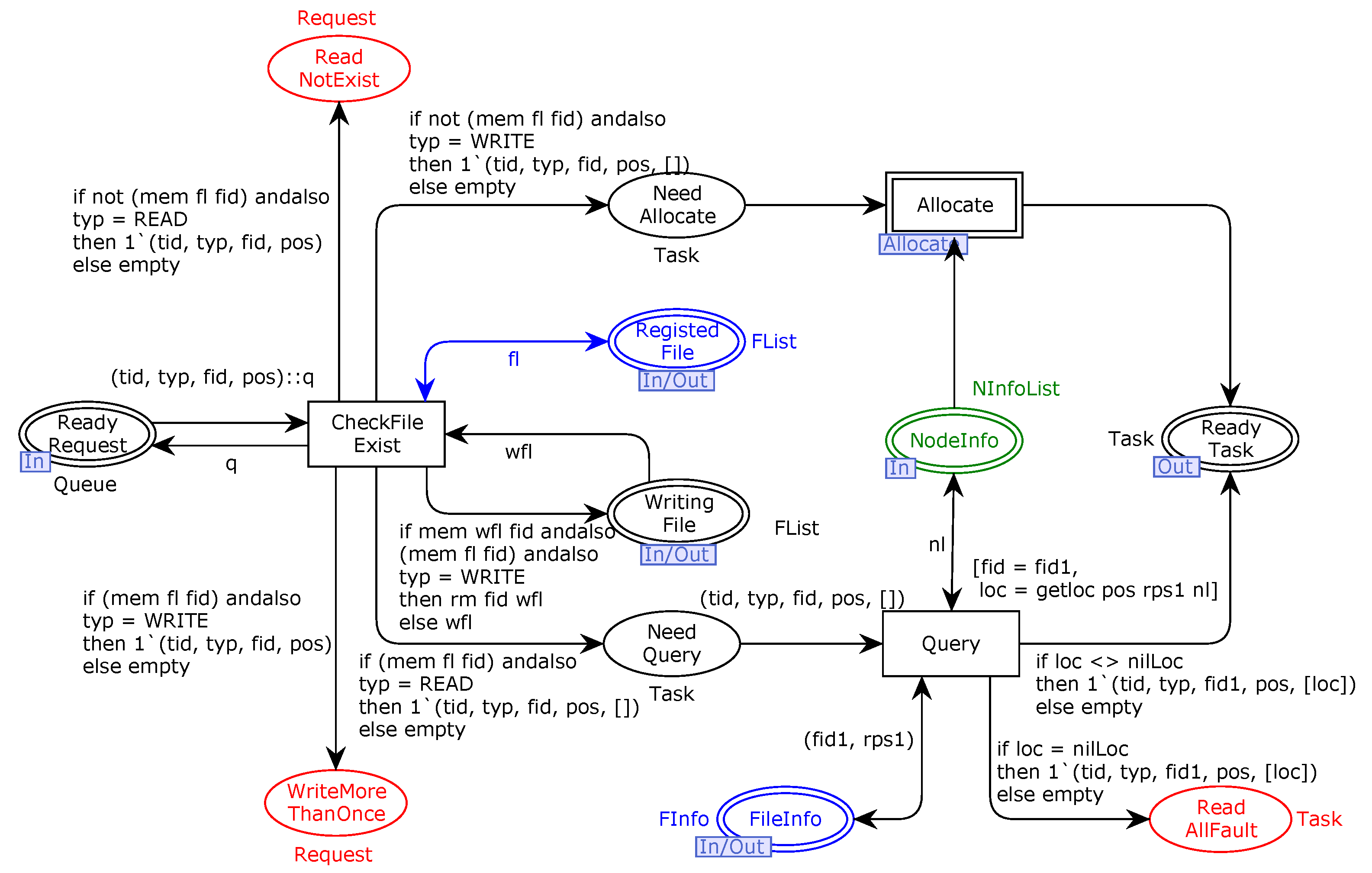

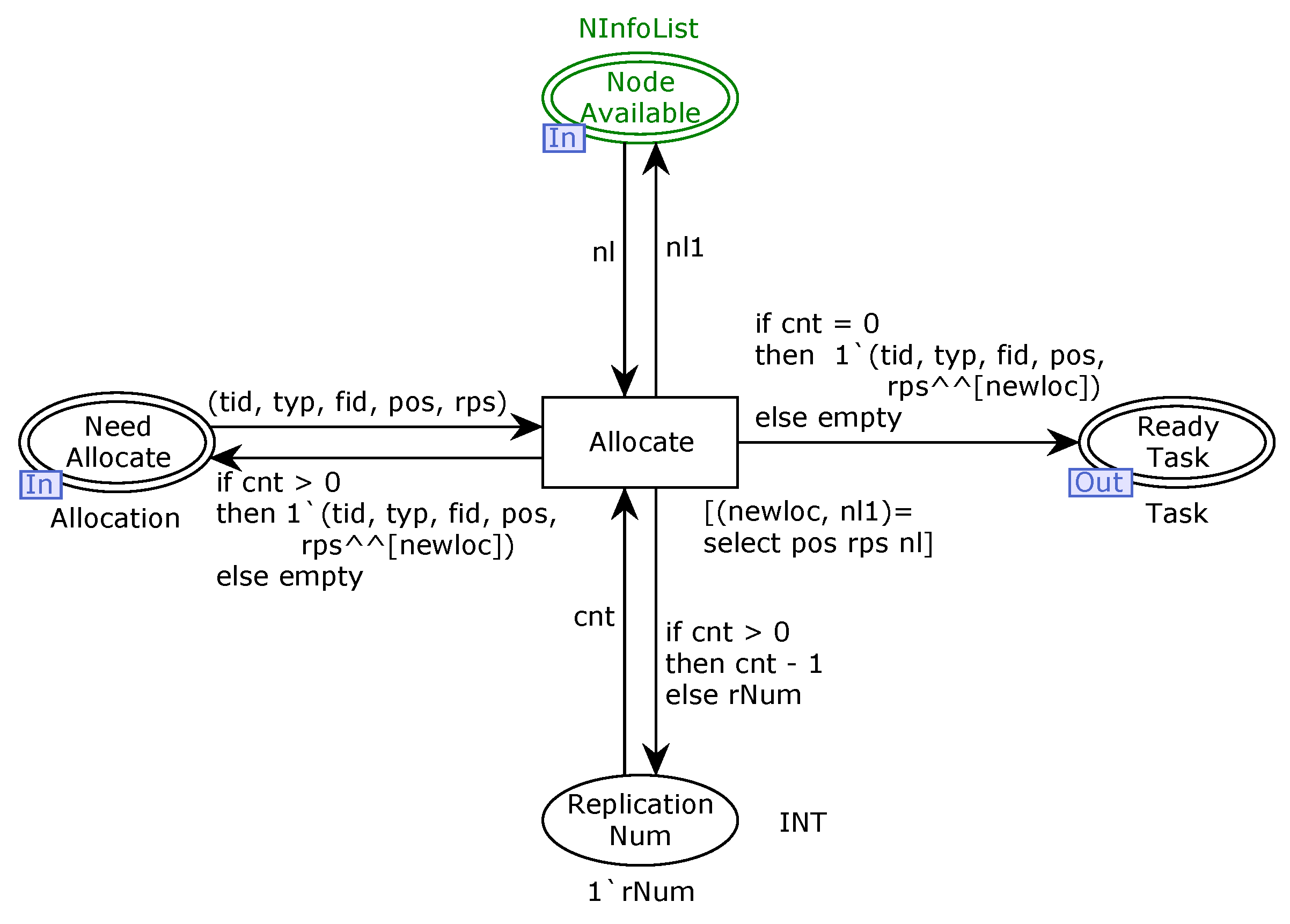

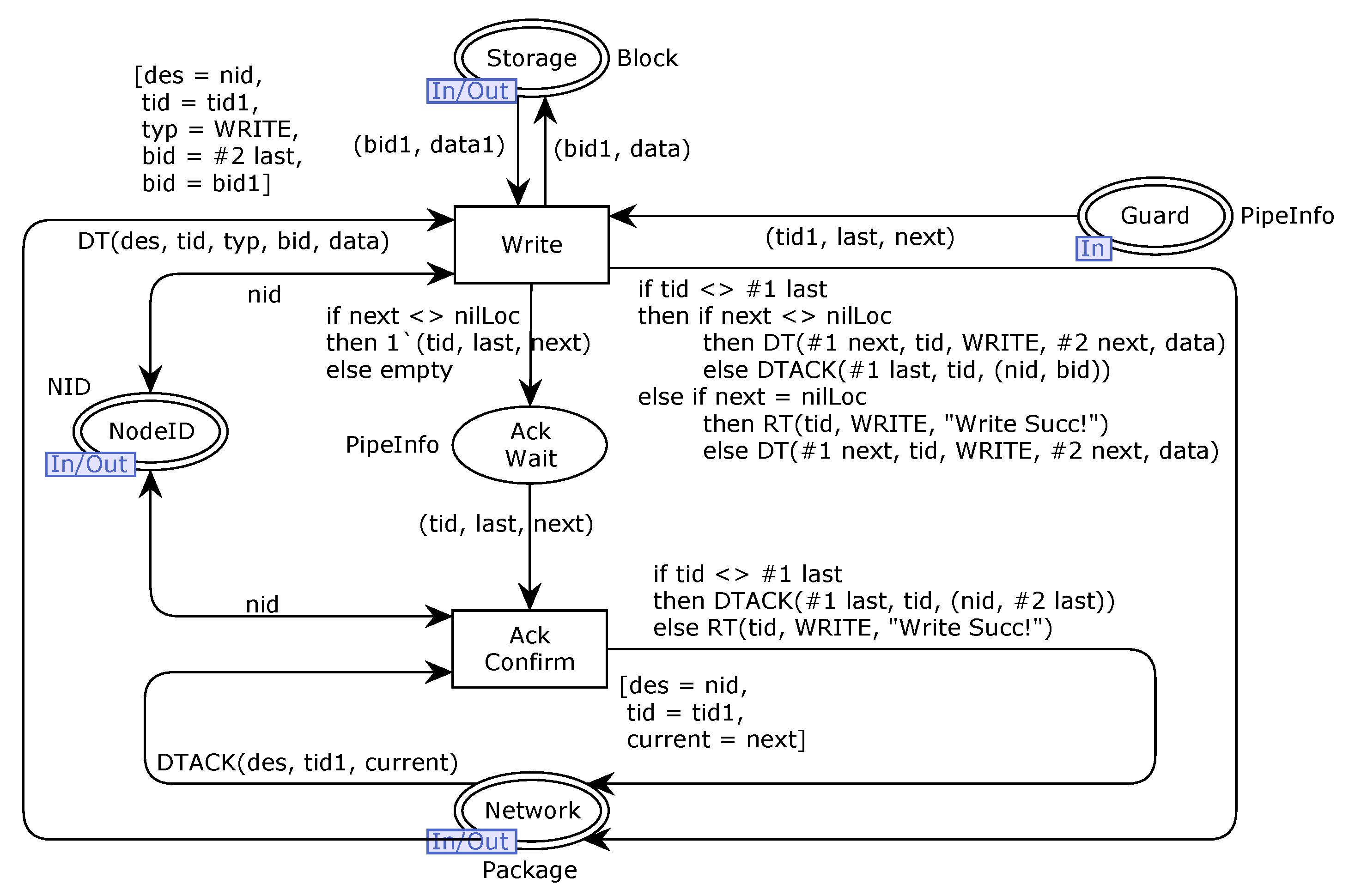

6.3. Verification Process

In this section, we use CPN Tools to verify the properties specified above, in order to establish the correctness and reliability of our MSCFS-RP model. CPN Tools also supports this process with simulation, which allows us to observe the behavior of the model across diverse operational scenarios. The simulation results of our MSCFS-RP model provide a better understanding of the data flow and the interactions between clients, meta servers, and clusters. A simulation scenario in CPN Tools is shown in Figure 20.

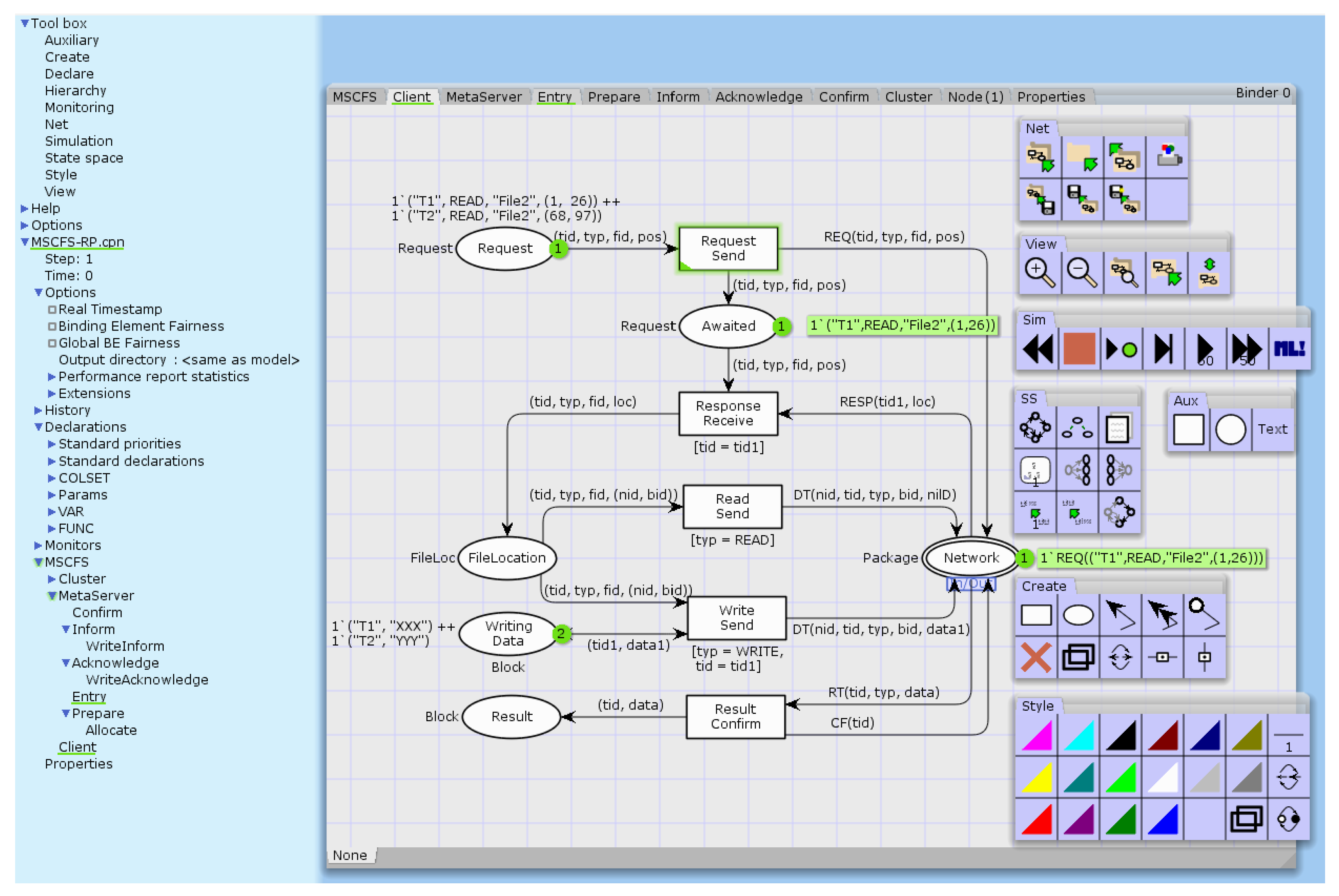

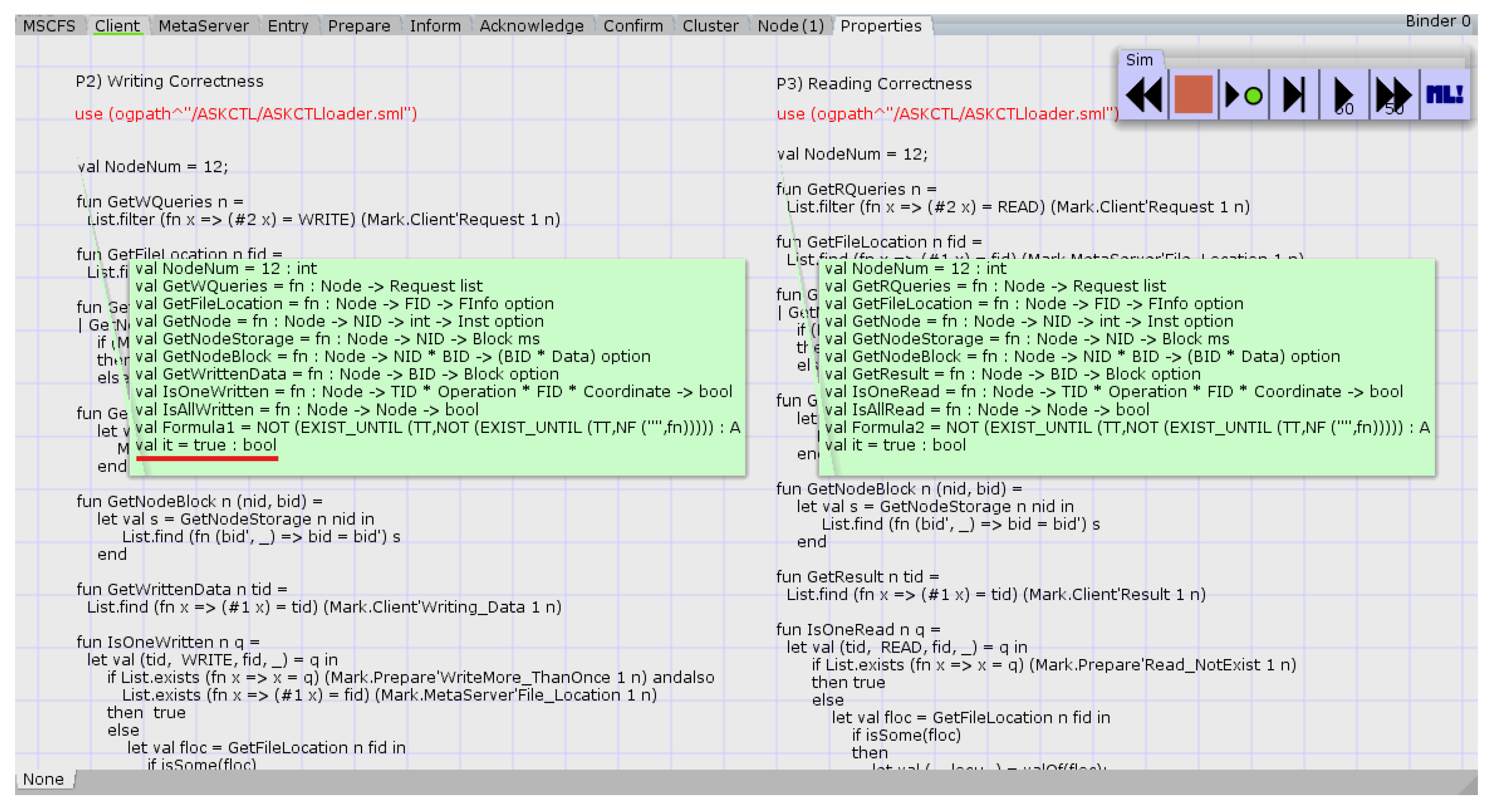

In Section 6.2, nine critical properties are specified using ASK-CTL and auxiliary ML functions. The ASK-CTL logic and model checker are implemented in ML [33]. Each property has a corresponding ML function and formula. These functions analyze the nodes or arcs of the state space to check whether a property holds. In CPN Tools, the verification steps are performed as follows [35]: (1) select the State Space Tool; (2) calculate the state space (including the SCC graph); (3) evaluate the ML code use (ogpath^"/ASKCTL/ASKCTLloader.sml"); and (4) evaluate the ML functions (i.e., ASK-CTL formulas) of the property specifications.
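Steps (3) and (4) can be sketched as the following ML fragment, to be evaluated inside CPN Tools after the state space has been calculated. It uses the ASK-CTL constructors NF, INV, and POS and the evaluator eval_node together with the predefined InitNode. Which temporal operator is actually attached to each property is given in Table 5 and is not reproduced here, so the choice of INV for mutual exclusion and POS for writing correctness, as well as the names mutexFormula and writeFormula, are assumptions.

```sml
(* Step 3: load the ASK-CTL library. *)
use (ogpath ^ "/ASKCTL/ASKCTLloader.sml");

(* Step 4, assumed invariant form: the predicate of Function 5
   holds in every state reachable from the initial state. *)
val mutexFormula = INV (NF ("mutual exclusion", IsMutualExclusion));
eval_node mutexFormula InitNode;

(* Step 4, assumed reachability form: some reachable state satisfies
   the predicate of Function 1 for the queries of the initial state. *)
val writeFormula = POS (NF ("all written", fn n => IsAllWritten InitNode n));
eval_node writeFormula InitNode;
```

Each call to eval_node returns a Boolean, which CPN Tools reports as the value of it.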

For example, “Writing correctness” is verified by checking whether all writing requests result in the file being stored in the cluster. As shown in Figure 21, each auxiliary function is correctly defined and the result “it” is true. The verification process is repeated for each scenario. More details about how to use CPN Tools for verification can be found in [36]. We verified properties P1–P7 in the eight scenarios shown in Table 6. The results show that all of these properties are satisfied in the related scenarios.

Property P8 is verified under scenario S1, as shown in Table 7. According to our previous simulations without node failure, the request (“T1”, WRITE, “File1”, (1, 26)) should result in “File1” being stored in “Node22”, “Node11”, and “Node31”. We assume that some of these three nodes fail and check whether the writing for this request is still successful. As the results show, property P8 holds in all cases, because our model chooses other nodes if the most suitable ones are faulty.

Property P9 is verified under scenario S2, as shown in Table 8. In this scenario, a reader reads “File2”, which has three replications located in “Node21”, “Node12”, and “Node41”, respectively. We assume that any of these nodes can break down. The results show that if one or two replication nodes fail, property P9 is still satisfied, i.e., the file can be read correctly, which means that our model can tolerate node failure to some extent. When all the replication nodes fail, the file is lost. The results in the other reading scenarios are similar.

The verification of the nine properties above supports the reliability and trustworthiness of the MSCFS-RP model. By rigorously verifying these critical properties, we gain confidence that the model operates as intended, without inherent design flaws, in the scenarios considered. Formal analysis also allows MSCFS-RP to be evaluated against explicitly stated properties, which facilitates comparison with similar systems.

Our method has some limitations. First, as a common problem mentioned in [20], the analysis of state spaces is always relative to a specific configuration (initial marking) of the system parameters. Second, as the parameter values increase, the method encounters the state space explosion problem, like many model checking methods. A possible solution to the first problem is to select representative parameters or configurations and to combine the analysis with effective testing methods. A possible solution to the second problem is to abstract and optimize models according to the “separation of concerns” principle.