A Robust AR-DSNet Tracking Registration Method in Complex Scenarios

Abstract

1. Introduction

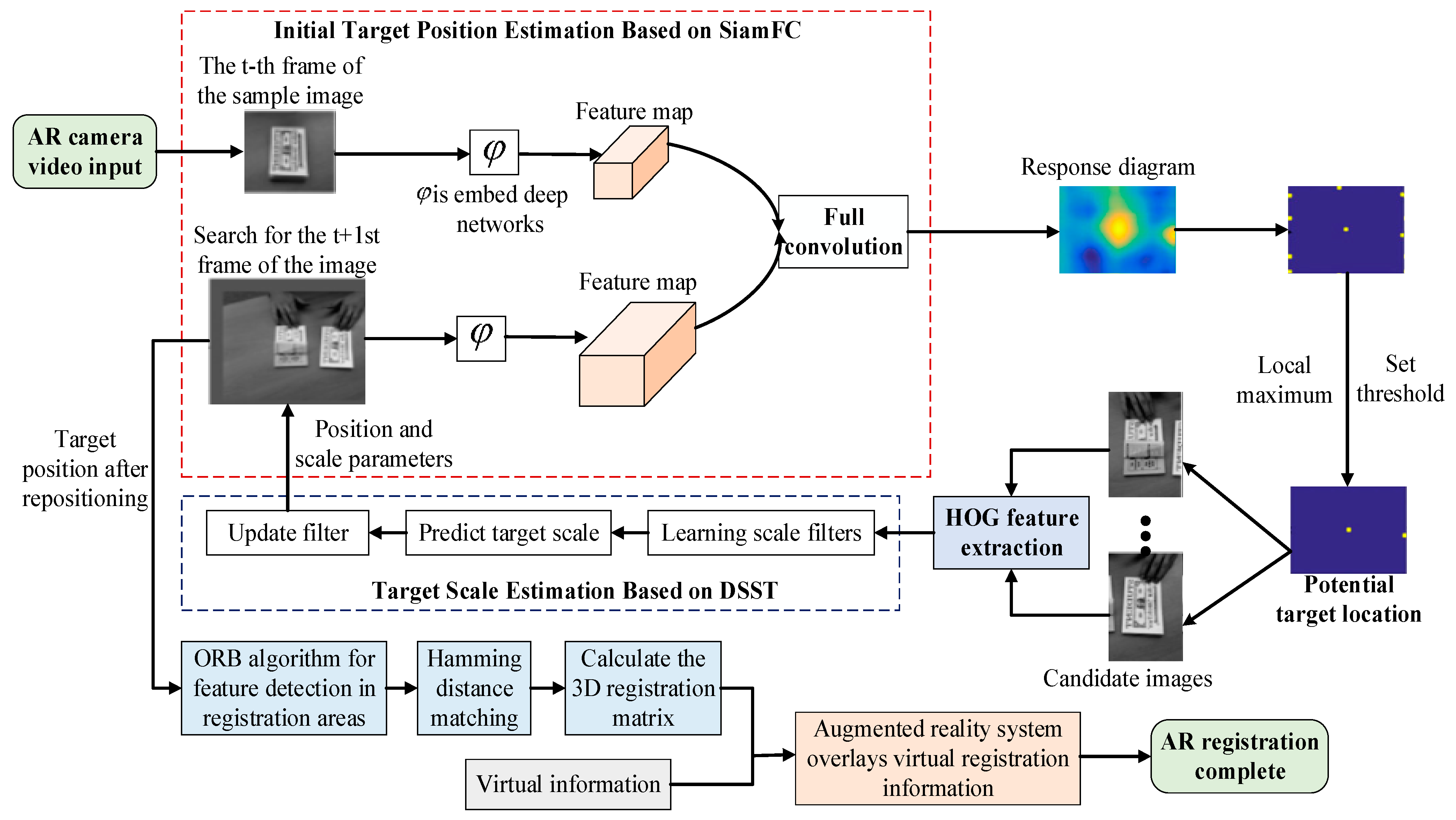

- Based on the distribution of local maxima in the SiamFC tracking response map, a threshold is set to filter candidate positions of potential targets in advance, so that a better initial target position is passed to the DSST filter. This helps reduce the boundary effects caused by similar targets.

- In the scale-prediction stage for the AR target to be registered, multi-scale images are sampled at the target location to form independent samples for training the scale filter. The target's scale is then inferred from the scale filter's response to these samples, so that the tracker adapts to scale changes of the target to be registered.

- The correlation filter coefficients are updated through linear interpolation, and the target is relocalized to obtain an accurate position. Once the target is tracked accurately, the ORB (Oriented FAST and Rotated BRIEF) algorithm is used for feature detection and matching to obtain the registration matrix, and virtual information is overlaid on the real scene to augment it.
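The contributions listed above rest on a few generic building blocks: selecting candidate peaks from a response map with a relative threshold, choosing the best entry of a geometric scale pyramid from scale-filter responses, and updating a filter model by linear interpolation. The pure-Python sketch below illustrates each of them in isolation. It assumes a non-negative response map stored as nested lists; the function names, the 0.7 peak ratio, and the DSST-style defaults (33 scales, scale step 1.02, learning rate 0.025) are illustrative assumptions rather than the settings used in AR-DSNet, and ORB matching is not covered.

```python
from typing import List, Sequence, Tuple

# Hypothetical helpers for illustration only; not the AR-DSNet implementation.
Peak = Tuple[int, int, float]  # (row, col, response value)


def local_maxima(response: List[List[float]]) -> List[Peak]:
    """Return cells strictly greater than all of their 8-connected neighbours."""
    rows, cols = len(response), len(response[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = response[r][c]
            neighbours = [
                response[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, rows))
                for cc in range(max(c - 1, 0), min(c + 2, cols))
                if (rr, cc) != (r, c)
            ]
            if all(v > n for n in neighbours):
                peaks.append((r, c, v))
    return peaks


def candidate_peaks(response: List[List[float]], ratio: float = 0.7) -> List[Peak]:
    """Keep local maxima whose value is at least `ratio` times the highest peak.

    Assumes a non-negative response map; the ratio rule is an assumed threshold.
    """
    peaks = local_maxima(response)
    if not peaks:
        return []
    top = max(v for _, _, v in peaks)
    kept = [p for p in peaks if p[2] >= ratio * top]
    return sorted(kept, key=lambda p: p[2], reverse=True)


def scale_factors(num_scales: int = 33, step: float = 1.02) -> List[float]:
    """Geometric scale pyramid step**n, centred on a factor of 1.0 (DSST-style)."""
    half = (num_scales - 1) / 2
    return [step ** (n - half) for n in range(num_scales)]


def best_scale(responses: Sequence[float], factors: Sequence[float]) -> float:
    """Return the scale factor whose scale-filter response is highest."""
    i = max(range(len(responses)), key=lambda k: responses[k])
    return factors[i]


def lerp_update(old: Sequence[float], new: Sequence[float],
                eta: float = 0.025) -> List[float]:
    """Linear-interpolation model update: (1 - eta) * old + eta * new."""
    return [(1 - eta) * o + eta * n for o, n in zip(old, new)]


resp = [[0, 0, 0, 0, 0],
        [0, 5, 0, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 0, 0, 4, 0],
        [0, 0, 0, 0, 0]]
print(candidate_peaks(resp))  # [(1, 1, 5), (3, 3, 4)]
```

Raising `ratio` toward 1.0 keeps only the dominant peak, while lowering it passes more distractor candidates on for later checks; where the actual threshold sits is a design choice that this sketch does not take from the paper.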

2. Literature Review

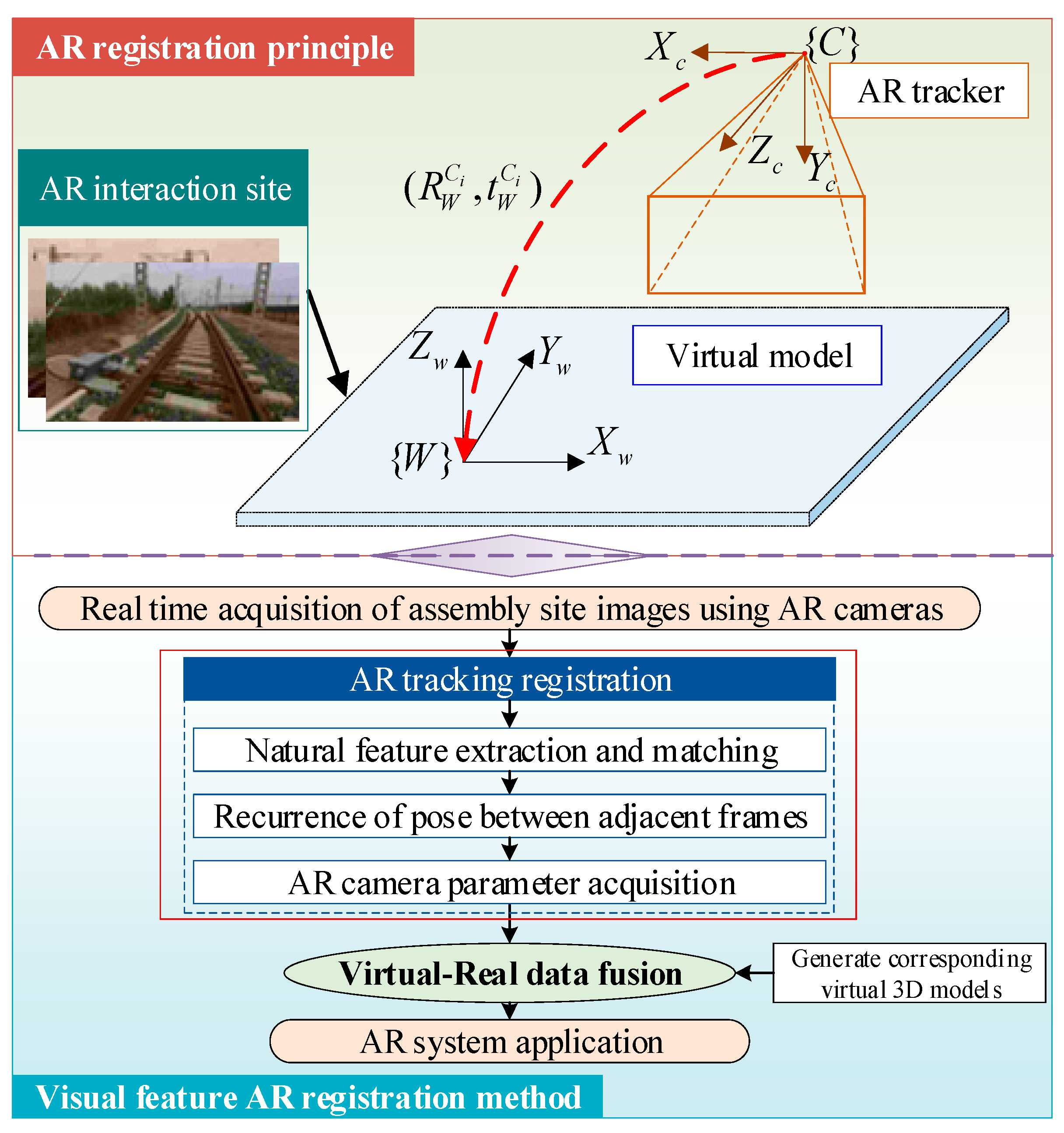

3. Methodology

3.1. AR-DSNet Initial Target Position Inference Based on SiamFC

3.1.1. SiamFC Network Tracking

3.1.2. Filtering Potential Target Locations

3.2. AR-DSNet Target Scale Inference Based on DSST

3.3. AR-DSNet Target Relocalization

3.4. AR-DSNet Feature Matching and Registration

4. Experimental Results and Analysis

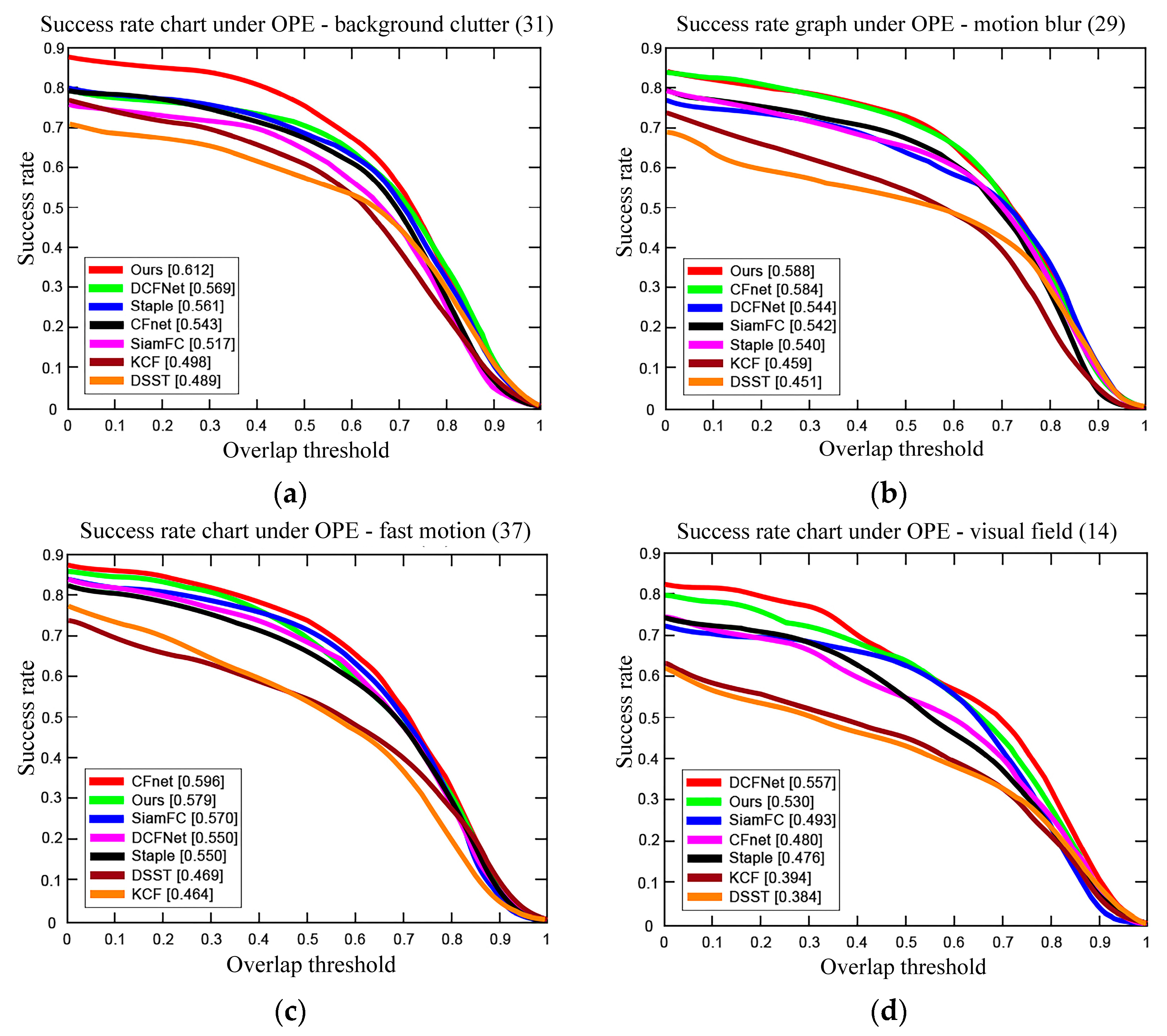

4.1. Results and Analysis of Moving Target Tracking

4.2. Tracking Results and Analysis of Classic Algorithms

4.3. Siamese Network Tracking Results and Analysis

4.4. Analysis of Registration Results for Moving Targets

5. Discussion

- (1) Improving the performance of AR registration networks. As researchers continue to explore variations of AR tracking registration networks and optimize their architectures, performance improvements on higher-dimensional tasks can be expected. This may involve adjusting how the AR tracking and registration networks share information during higher-dimensional inference, or fusing the outputs of two or more networks in more sophisticated ways.

- (2) Improving model generalization. At present, the tracking networks that AR registration methods use for feature extraction are generally deep and must be pre-trained on the ImageNet dataset, so the training cycle is relatively long. In the future, unsupervised training or few-shot augmented training could improve the generalization of AR tracking registration methods and support their application in other fields, as in the AR CenterNet network [4], which trains models for AR-assisted assembly in industry.

- (3) Integration with other network architectures. AR tracking registration networks can be combined with other neural network architectures or attention mechanisms to build more capable models. For example, the CoS-PVNet network [2] uses a global attention mechanism to handle feature extraction in complex scenes, including scenes with few or no features, which can improve performance on certain tasks.

- (4) Optimizing the AR registration backbone network. The AR tracking registration model can be made lightweight, for example by using pruning, quantization, and similar techniques to remove redundant network computation, thereby improving the real-time performance of tracking registration. In addition, neural architecture search can be used to automatically find a backbone network specialized for target tracking in AR registration, based on the characteristics of the task.
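Item (4) mentions pruning and quantization. As a rough illustration of what these two compression steps do to a weight tensor, the sketch below applies unstructured magnitude pruning and symmetric per-tensor 8-bit quantization to a flat list of weights. It is a generic pure-Python sketch; the function names and the symmetric [-127, 127] scheme are assumptions chosen for illustration, not the compression pipeline of AR-DSNet.

```python
from typing import List, Sequence, Tuple

# Generic illustration of two compression steps; not AR-DSNet's actual pipeline.


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integer codes in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float weights."""
    return [v * scale for v in q]


w = [0.5, -0.1, 0.02, -0.8]
print(magnitude_prune(w, 0.5))  # [0.5, 0.0, 0.0, -0.8]
q, s = quantize_int8(w)
print(q)  # [79, -16, 3, -127]
```

Each dequantized weight differs from the original by at most half a quantization step (`s / 2`), which is the accuracy cost traded for storing 8-bit codes instead of floats.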

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Baroroh, D.K.; Chu, C.H.; Wang, L. Systematic literature review on augmented reality in smart manufacturing: Collaboration between human and computational intelligence. J. Manuf. Syst. 2021, 61, 696–711.

- [2] Yong, J.; Lei, X.; Dang, J.; Wang, Y. A Robust CoS-PVNet Pose Estimation Network in Complex Scenarios. Electronics 2024, 13, 2089.

- [3] Egger, J.; Masood, T. Augmented reality in support of intelligent manufacturing: A systematic literature review. Comput. Ind. Eng. 2020, 140, 106195.

- [4] Li, W.; Wang, J.; Liu, M.; Zhao, S.; Ding, X. Integrated registration and occlusion handling based on deep learning for augmented reality assisted assembly instruction. IEEE Trans. Ind. Inform. 2022, 19, 6825–6835.

- [5] Danielsson, O.; Holm, M.; Syberfeldt, A. Augmented reality smart glasses in industrial assembly: Current status and future challenges. J. Ind. Inf. Integr. 2020, 20, 100175.

- [6] Sizintsev, M.; Mithun, N.C.; Chiu, H.-P.; Samarasekera, S.; Kumar, R. Long-Range Augmented Reality with Dynamic Occlusion Rendering. IEEE Trans. Vis. Comput. Graph. 2021, 27, 4236–4244.

- [7] Wang, L.; Wu, X.; Zhang, Y.; Zhang, X.; Xu, L.; Wu, Z.; Fei, A. DeepAdaIn-Net: Deep Adaptive Device-Edge Collaborative Inference for Augmented Reality. IEEE J. Sel. Top. Signal Process. 2023, 17, 1052–1063.

- [8] Thiel, K.K.; Naumann, F.; Jundt, E.; Guennemann, S.; Klinker, G.C. DOT-convolutional deep object tracker for augmented reality based purely on synthetic data. IEEE Trans. Vis. Comput. Graph. 2021, 28, 4434–4451.

- [9] Wei, H.; Liu, Y.; Xing, G.; Zhang, Y.; Huang, W. Simulating shadow interactions for outdoor augmented reality with RGB data. IEEE Access 2019, 7, 75292–75304.

- [10] Li, J.; Laganiere, R.; Roth, G. Online estimation of trifocal tensors for augmenting live video. In Proceedings of the Third IEEE and ACM International Symposium on Mixed and Augmented Reality, Arlington, VA, USA, 5 November 2004; pp. 182–190.

- [11] Yuan, M.L.; Ong, S.K.; Nee, A.Y. Registration using natural features for augmented reality systems. IEEE Trans. Vis. Comput. Graph. 2006, 12, 569–580.

- [12] Bang, J.; Lee, D.; Kim, Y.; Lee, H. Camera pose estimation using optical flow and ORB descriptor in SLAM-based mobile AR game. In Proceedings of the 2017 International Conference on Platform Technology and Service (PlatCon), Busan, Republic of Korea, 13–15 February 2017; pp. 1–4.

- [13] Jiang, J.; He, Z.; Zhao, X.; Zhang, S.; Wu, C.; Wang, Y. REG-Net: Improving 6DoF object pose estimation with 2D keypoint long-short-range-aware registration. IEEE Trans. Ind. Inform. 2023, 19, 328–338.

- [14] Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- [15] Huang, D.; Luo, L.; Chen, Z.Y. Applying detection proposals to visual tracking for scale and aspect ratio adaptability. Int. J. Comput. Vis. 2017, 122, 524–541.

- [16] Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H.S. End-to-end representation learning for Correlation Filter based tracking. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5000–5008.

- [17] Wang, Q.; Gao, J.; Xing, J.; Zhang, M.; Hu, W. DCFNet: Discriminant Correlation Filters Network for Visual Tracking. arXiv 2017, arXiv:1704.04057.

- [18] Yang, T.; Jia, S.; Yang, B.; Kan, C. Research on tracking and registration algorithm based on natural feature point. Intell. Autom. Soft Comput. 2021, 28, 683–692.

- [19] Kuai, Y.L.; Wen, G.J.; Li, D.D. When correlation filters meet fully-convolutional Siamese networks for distractor-aware tracking. Signal Process. Image Commun. 2018, 64, 107–117.

- [20] Wang, Y.; Zhang, S.; Yang, S.; He, W.; Bai, X. Mechanical assembly assistance using marker-less augmented reality system. Assem. Autom. 2018, 38, 77–87.

- [21] Xiao, R.; Schwarz, J.; Throm, N.; Wilson, A.D.; Benko, H. MRTouch: Adding touch input to head-mounted mixed reality. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1653–1660.

- [22] Fotouhi, J.; Mehrfard, A.; Song, T.; Johnson, A.; Osgood, G.; Unberath, M.; Armand, M.; Navab, N. Development and Pre-Clinical Analysis of Spatiotemporal-Aware Augmented Reality in Orthopedic Interventions. IEEE Trans. Med. Imaging 2021, 40, 765–778.

- [23] Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 116–124.

- [24] Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese visual tracking with very deep networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291.

- [25] Pu, L.; Feng, X.; Hou, Z.; Yu, W.; Zha, Y. SiamDA: Dual attention Siamese network for real-time visual tracking. Signal Process. Image Commun. 2021, 95, 116293.

- [26] Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware Siamese networks for visual object tracking. In Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 103–109.

- [27] Li, P.; Chen, B.; Ouyang, W.; Wang, D.; Yang, X.; Lu, H. GradNet: Gradient-guided network for visual object tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6162–6171.

- [28] Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4293–4302.

- [29] Danelljan, M.; Van Gool, L.; Timofte, R. Probabilistic regression for visual tracking. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7183–7192.

- [30] Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate Tracking by Overlap Maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4655–4664.

- [31] Li, X.; Ma, C.; Wu, B.; He, Z.; Yang, M.-H. Target-Aware Deep Tracking. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1369–1378.

| Algorithms | CFNet | DCFNet | SiamFC | Staple | DSST | KCF | Ours |
|---|---|---|---|---|---|---|---|
| DP (%) | 77.6 | 75.3 | 75.9 | 78.5 | 66.6 | 69.6 | 79.8 |
| OS (%) | 73.9 | 71.0 | 70.9 | 70.2 | 59.2 | 55.6 | 72.3 |
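The table reports DP and OS without defining them in this section. In the visual tracking literature these abbreviations usually denote distance precision (the share of frames whose predicted target centre lies within a pixel threshold of the ground truth) and overlap success (the share of frames whose intersection over union with the ground-truth box exceeds a threshold). The sketch below computes both, assuming the common OTB-style thresholds of 20 pixels and 0.5 IoU and boxes given as `(x, y, width, height)`; the thresholds and evaluation protocol used for this table may differ, and the function names are illustrative.

```python
import math
from typing import Sequence, Tuple

# Illustrative metric sketch; thresholds are assumed OTB-style defaults.
Box = Tuple[float, float, float, float]  # (x, y, width, height), top-left origin


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance between box centres, in pixels."""
    ax, ay = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx, by = b[0] + b[2] / 2, b[1] + b[3] / 2
    return math.hypot(ax - bx, ay - by)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2 = min(a[0] + a[2], b[0] + b[2])
    y2 = min(a[1] + a[3], b[1] + b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def dp_os(pred: Sequence[Box], gt: Sequence[Box],
          dist_thr: float = 20.0, iou_thr: float = 0.5) -> Tuple[float, float]:
    """Return (DP, OS) in percent over all frames."""
    n = len(gt)
    dp = sum(center_error(p, g) <= dist_thr for p, g in zip(pred, gt)) / n * 100
    os_rate = sum(iou(p, g) > iou_thr for p, g in zip(pred, gt)) / n * 100
    return dp, os_rate


gt = [(0, 0, 10, 10)] * 4
pred = [(0, 0, 10, 10), (5, 0, 10, 10), (30, 0, 10, 10), (2, 2, 10, 10)]
print(dp_os(pred, gt))  # (75.0, 25.0)
```

In the usage example three of four predicted centres fall within 20 pixels, but only the first box overlaps the ground truth by more than 0.5 IoU, which shows why a tracker can score noticeably higher on DP than on OS, as most methods in the table do.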

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lei, X.; Lu, W.; Yong, J.; Wei, J. A Robust AR-DSNet Tracking Registration Method in Complex Scenarios. Electronics 2024, 13, 2807. https://doi.org/10.3390/electronics13142807

Lei X, Lu W, Yong J, Wei J. A Robust AR-DSNet Tracking Registration Method in Complex Scenarios. Electronics. 2024; 13(14):2807. https://doi.org/10.3390/electronics13142807

Chicago/Turabian Style: Lei, Xiaomei, Wenhuan Lu, Jiu Yong, and Jianguo Wei. 2024. "A Robust AR-DSNet Tracking Registration Method in Complex Scenarios" Electronics 13, no. 14: 2807. https://doi.org/10.3390/electronics13142807

APA Style: Lei, X., Lu, W., Yong, J., & Wei, J. (2024). A Robust AR-DSNet Tracking Registration Method in Complex Scenarios. Electronics, 13(14), 2807. https://doi.org/10.3390/electronics13142807