Virtual Reality for Career Development and Exploration: The CareProfSys Profiler System Case

Abstract

1. Introduction

2. Related Work

2.1. AR/VR Systems for Career Development in the Architecture, Engineering, and Construction (AEC) Industry

2.2. AR/VR Systems for Career Development in Medicine

2.3. AR/VR Systems for Career Development in Science

2.4. VR in Career Development in Arts, Humanities, and Other Domains

3. CareProfSys Web Virtual Reality Scenarios for Career Development and Exploration

3.1. Functionalities of Web VR Scenarios

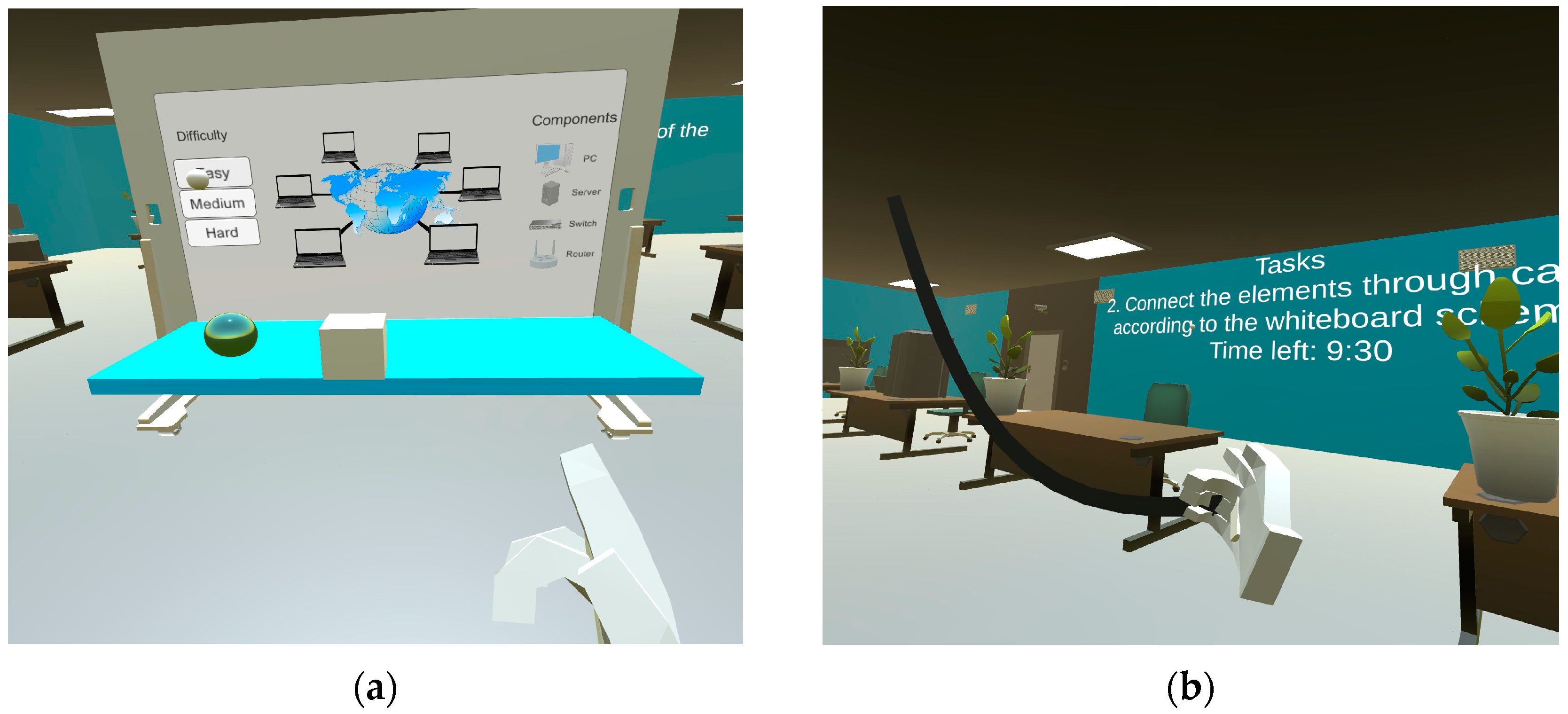

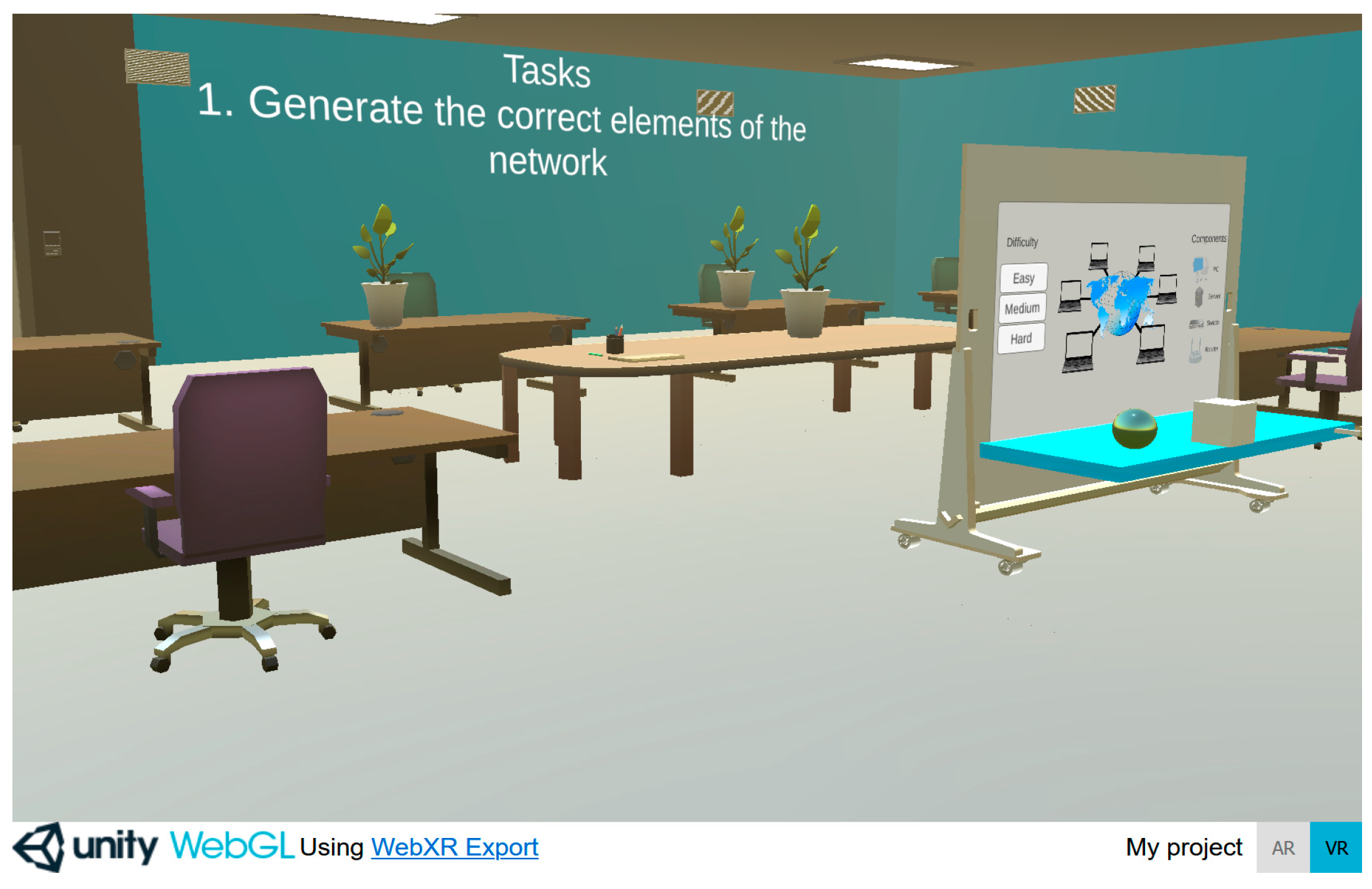

3.1.1. Computer Network Specialists

- Select easy difficulty from the board;

- Generate a PC, a server, and a switch;

- Connect the elements with cables according to the layout on the board;

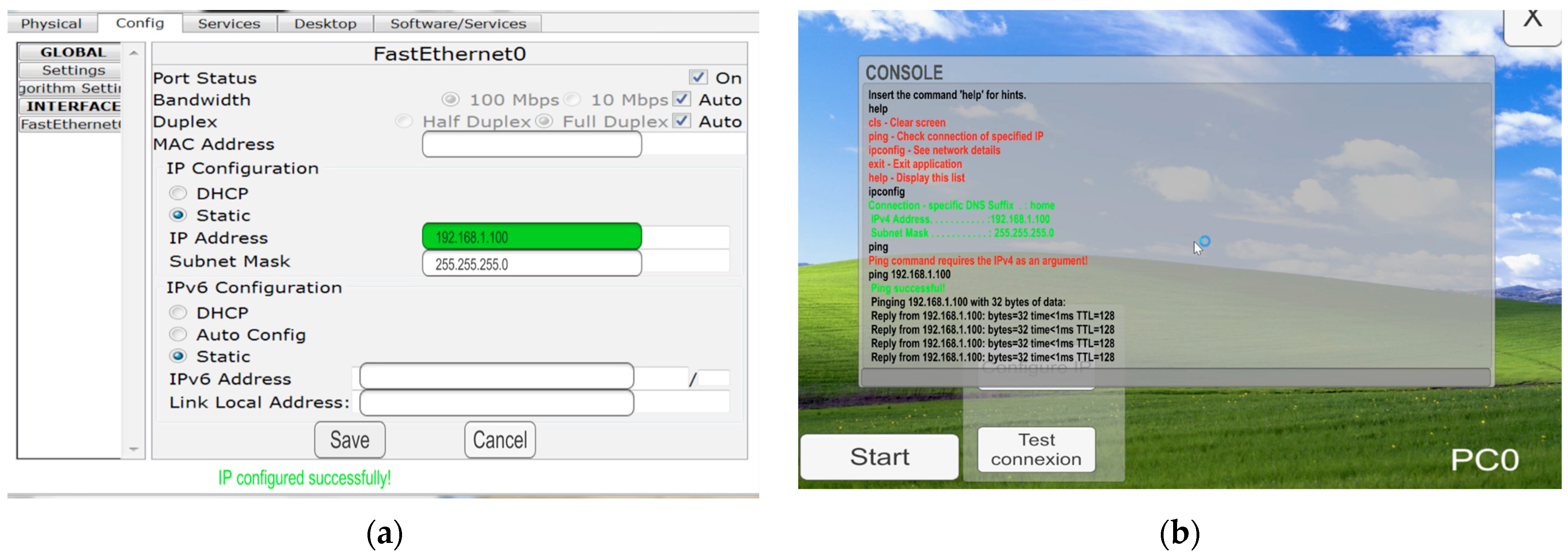

- Click the start button on a computer; the screen turns on, and the IP address must be configured from the settings (the address to use is displayed on the board); the IPv4 field is highlighted as a clue, so the user does not fill in the other fields (MAC address, network mask, local address); the program also allows configuration of the equivalent IPv6 address;

- The same IP configuration process is repeated for the server;

- Both connections must be checked on the corresponding device’s console, using the “ping” command followed by the previously configured correct IP address (see Figure 3).

- Select medium difficulty from the board;

- Generate four PCs, two switches, and one router;

- Connect the elements with cables according to the given layout;

- Click the start button on all computers; the screens light up, and the IP addresses must be configured; the IPv4 field is no longer highlighted as a clue; the program also allows configuration of the equivalent IPv6 address;

- All connections must be checked on each device’s console, using the “ping” command followed by the previously configured correct IP address;

- Configure the router connecting two distinct local networks; the IP addresses of its two interfaces (eth0 and eth1) must be correctly configured according to the layout and then activated.

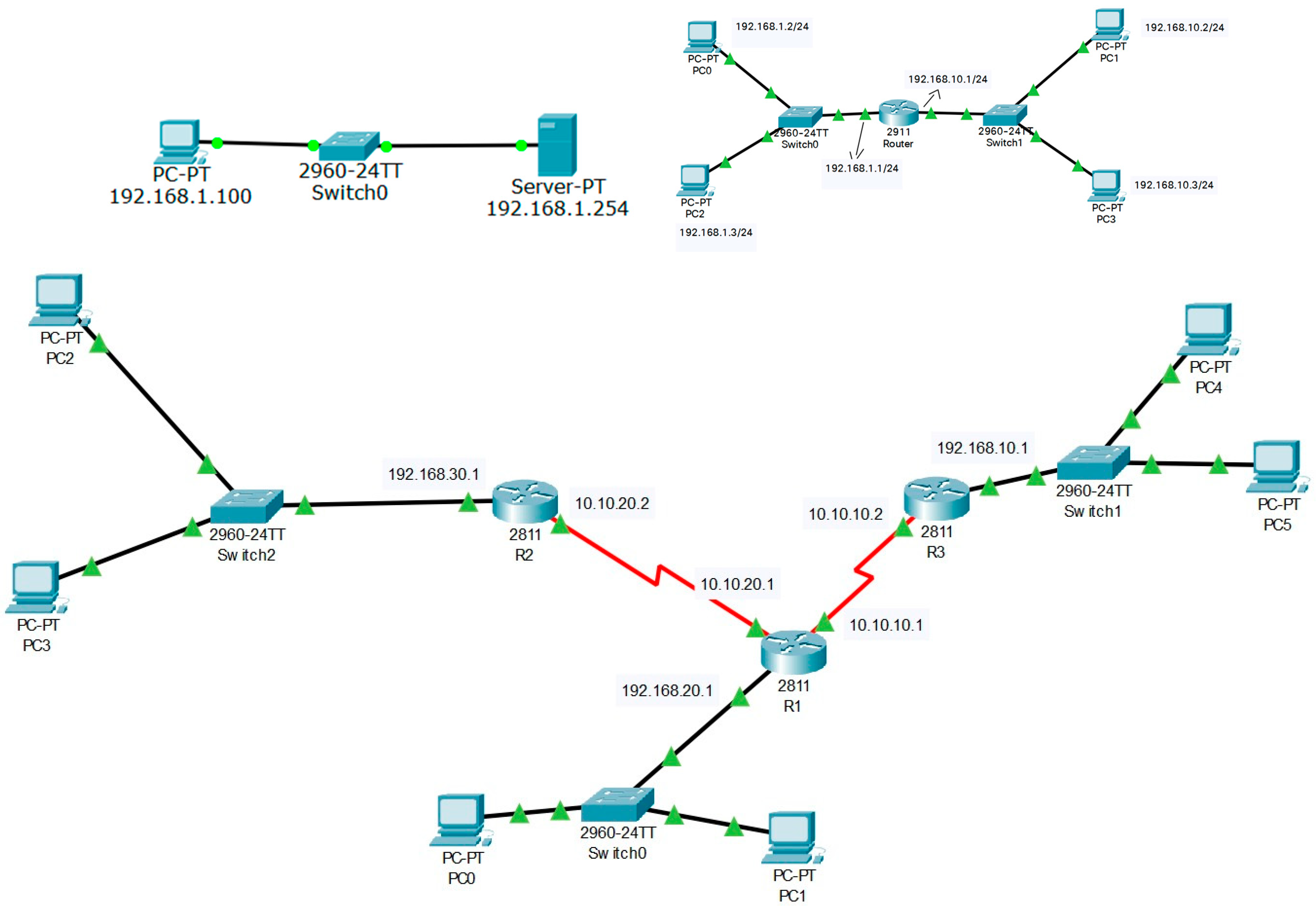

- Select hard difficulty from the board;

- Generate six PCs, three switches, and three routers;

- Connect the elements with cables according to the given layout;

- Click the start button on all computers; the screens light up, and the IP addresses must be configured; there are no hints at all, and the IP addresses are no longer shown on the board, so the user must choose valid IP addresses based on the network addresses given;

- All connections must be checked on each device’s console, using the “ping” command followed by the previously configured correct IP address;

- Configure the three routers connecting the distinct local networks; this includes a more complex configuration of the middle router, which is connected to the other two routers. Each router must have its Routing Information Protocol (RIP) IP list configured: all known IP addresses of the other two routers are entered in the RIP settings tab, and this is repeated for every router. RIP allows routers to choose the best network route when forwarding packets. Before the network is functional, users must also configure the gateway address for each computer: the IP address of the router’s Gigabit Ethernet interface on the same LAN as the computer.
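The addressing rules used in the medium and hard levels (hosts in one LAN share a network address, and traffic to another LAN is sent to the gateway) can be sketched with Python’s standard `ipaddress` module. This is a minimal illustration under stated assumptions, not the CareProfSys implementation: the function names, the addresses, and the /24 prefix are hypothetical, and the host-validity check assumes ordinary subnets (not /31 or /32).

```python
import ipaddress
from typing import Optional


def same_subnet(ip_a: str, ip_b: str, prefix: int) -> bool:
    """Return True if both IPv4 addresses share a network address under /prefix."""
    net_a = ipaddress.ip_interface(f"{ip_a}/{prefix}").network
    net_b = ipaddress.ip_interface(f"{ip_b}/{prefix}").network
    return net_a == net_b


def is_valid_host(ip: str, network: str) -> bool:
    """Return True if ip is a usable host address inside network.

    The network address itself and the broadcast address are excluded.
    """
    net = ipaddress.ip_network(network)
    addr = ipaddress.ip_address(ip)
    return addr in net and addr not in (net.network_address, net.broadcast_address)


def next_hop(src: str, dst: str, prefix: int, gateway: Optional[str]) -> Optional[str]:
    """Decide where a host sends a packet addressed to dst.

    On the same LAN the packet goes straight to dst (through the switch);
    otherwise it goes to the configured gateway, which must be the router
    interface on the host's own LAN. None means dst is unreachable.
    """
    if same_subnet(src, dst, prefix):
        return dst
    if gateway is not None and not same_subnet(src, gateway, prefix):
        raise ValueError("gateway must be on the same LAN as the host")
    return gateway


# Hypothetical layout: two LANs (192.168.1.0/24 and 192.168.2.0/24) joined by a router
print(is_valid_host("192.168.1.10", "192.168.1.0/24"))   # True
print(is_valid_host("192.168.1.255", "192.168.1.0/24"))  # False (broadcast address)
print(next_hop("192.168.1.10", "192.168.1.20", 24, "192.168.1.1"))  # 192.168.1.20
print(next_hop("192.168.1.10", "192.168.2.20", 24, "192.168.1.1"))  # 192.168.1.1
```

Without a gateway, a ping from one LAN to the other has nowhere to go, which is why the hard level requires each computer’s gateway to be set before the network works.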

3.1.2. Civil Engineers

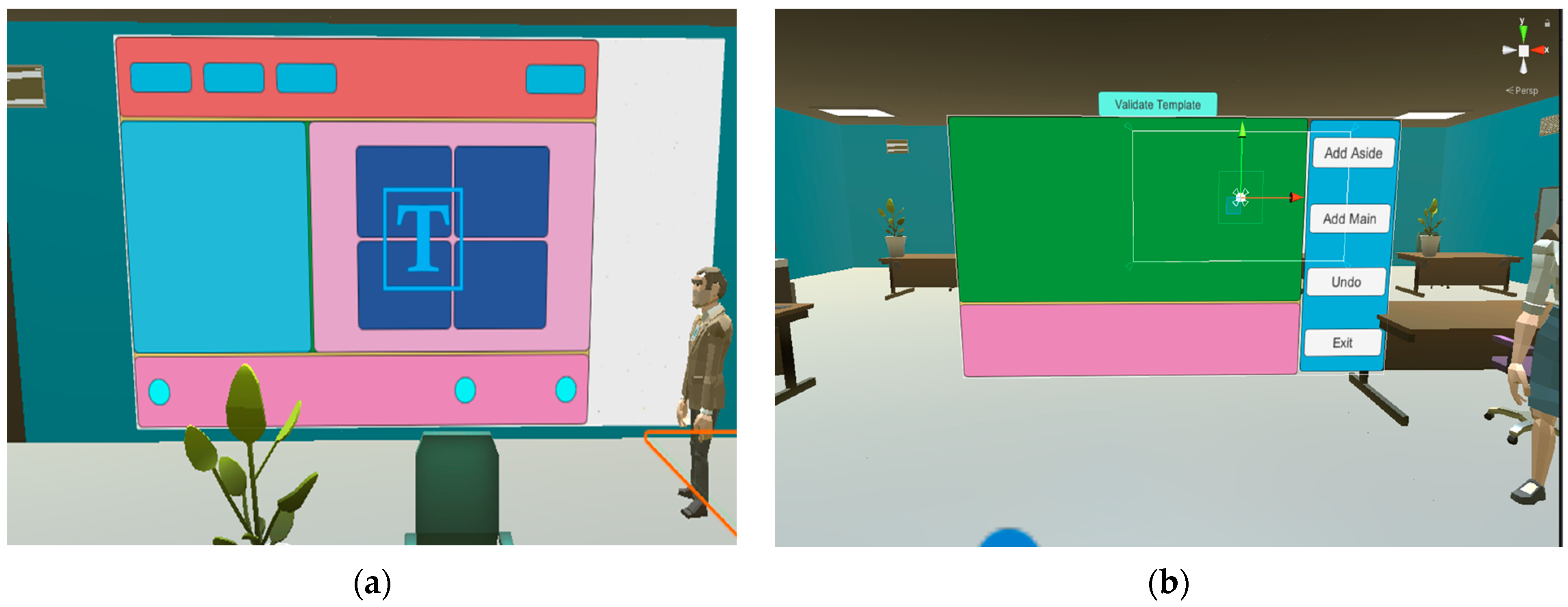

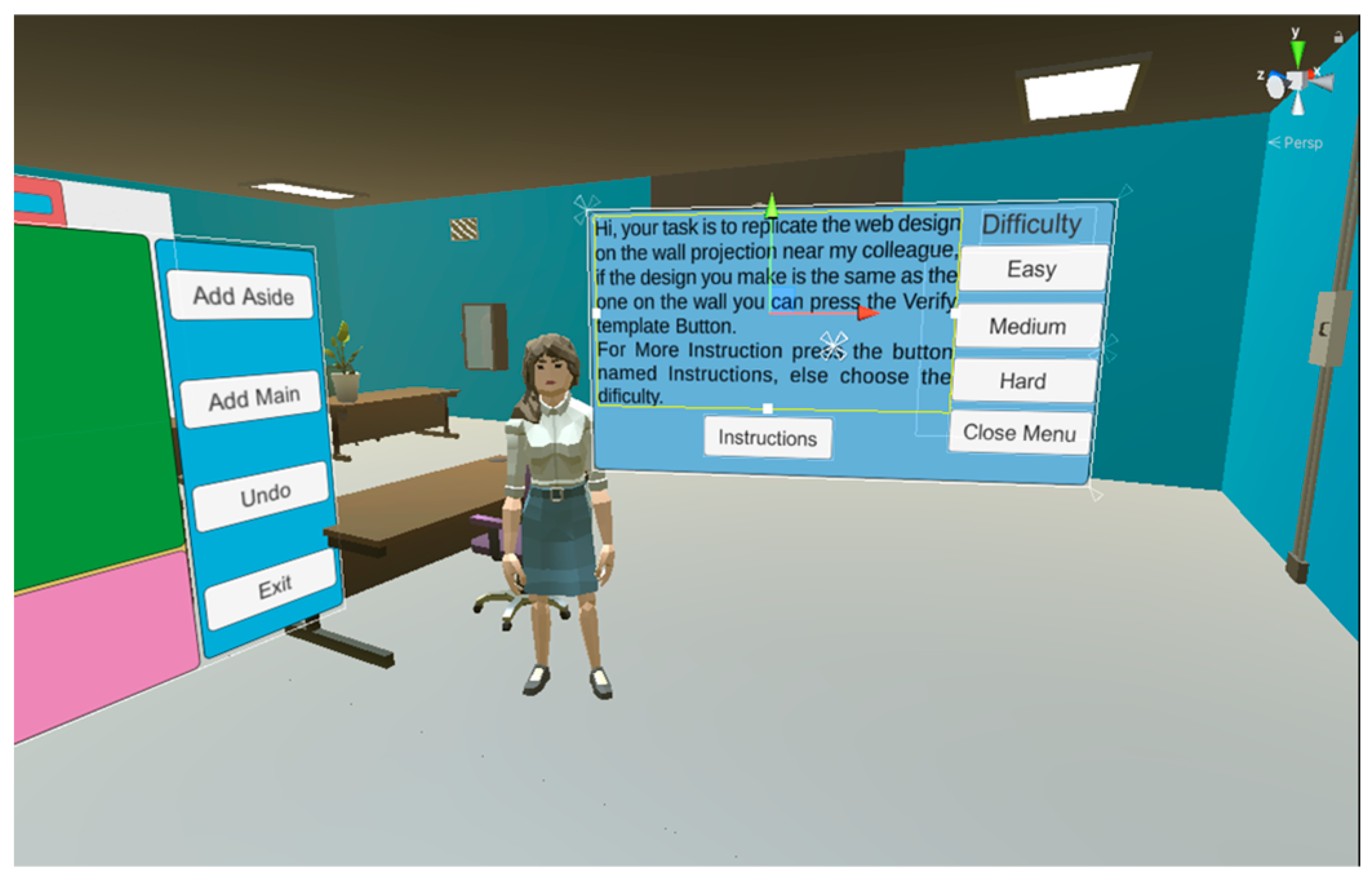

3.1.3. Web and Multimedia Developers

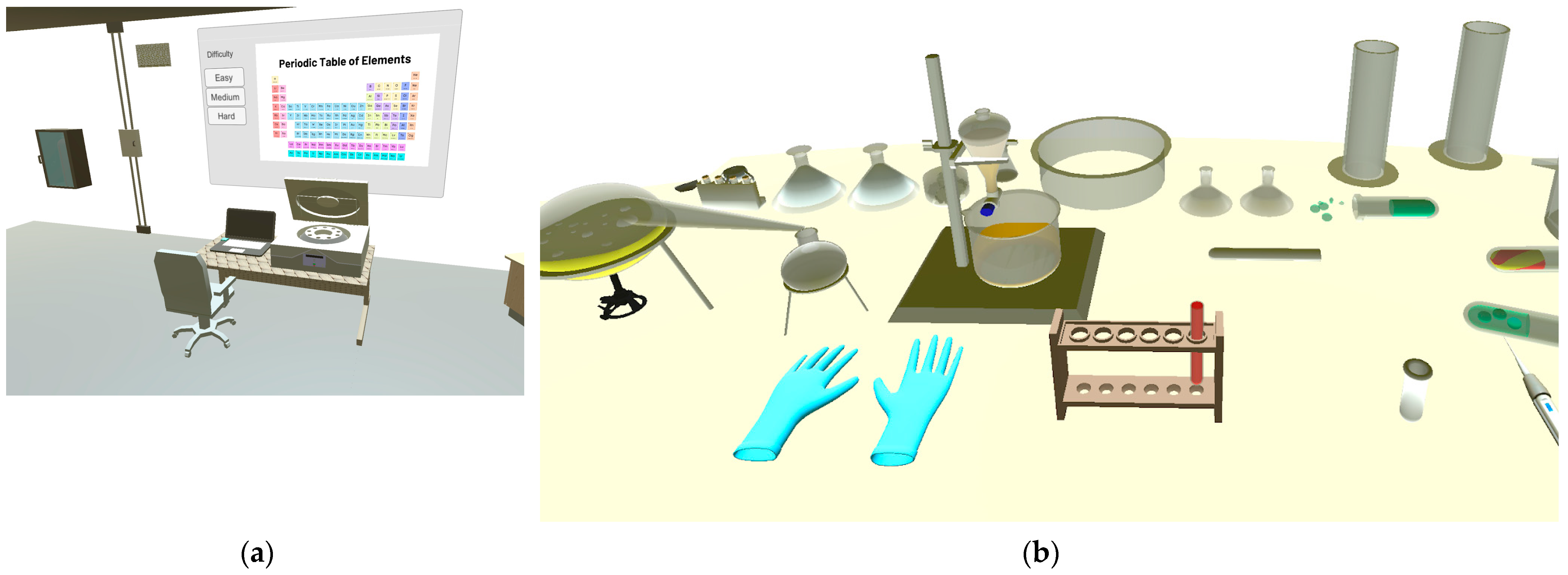

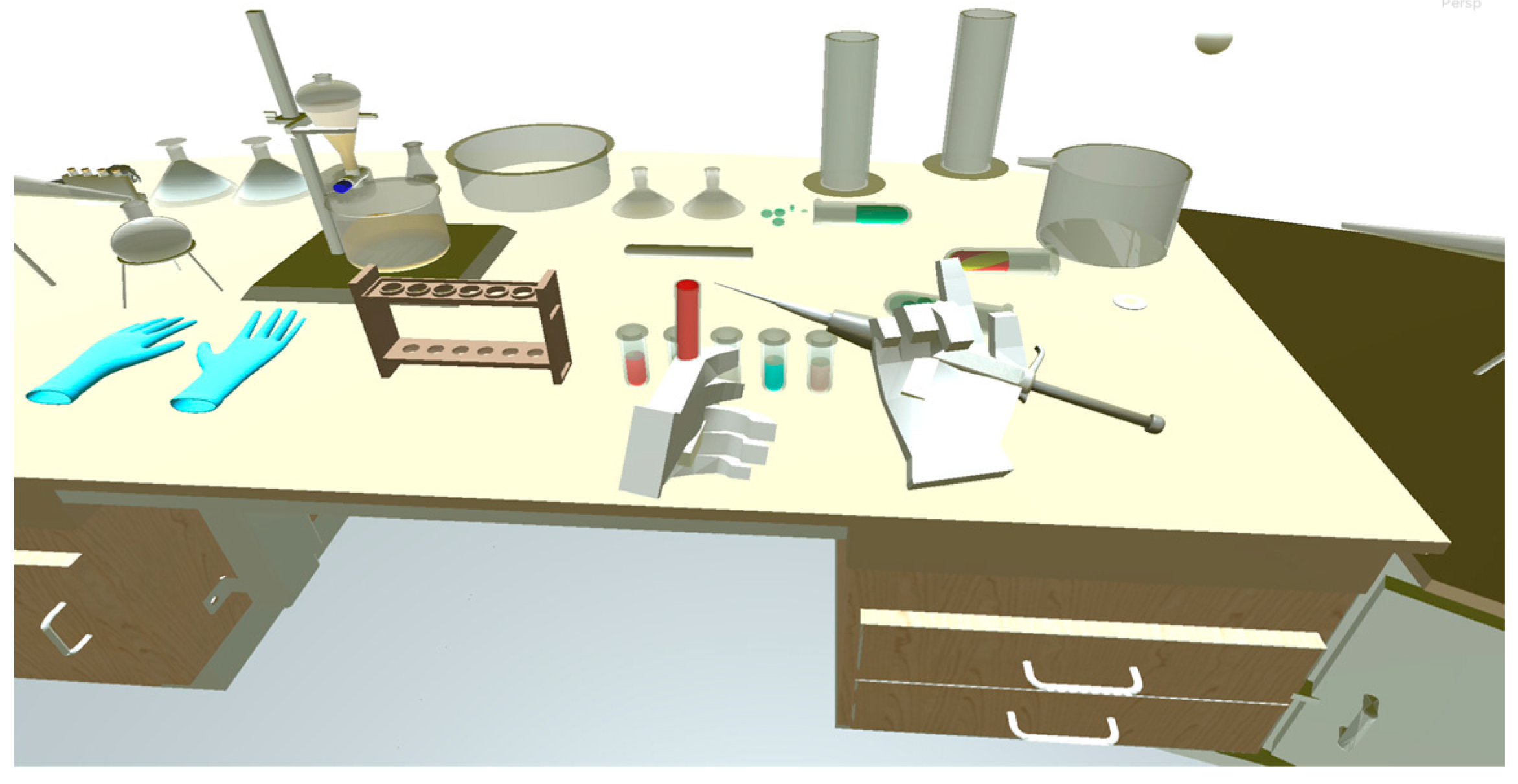

3.1.4. Chemical Engineers

- Select the easy difficulty level on the panel;

- The user must wash their hands for 5 s;

- The user must put on gloves;

- The user takes the blood test tube from the rack;

- The user uses a pipette to take a drop of blood and places it into a second test tube containing a reagent (the tube is opaque, so the color of the reagent cannot be seen);

- The user inserts the resulting test tube into the analyzer;

- The analyzer screen displays the result “Analysis successfully completed”.

- Select the medium difficulty level on the panel;

- The user must wash their hands for 5 s;

- The user must put on gloves;

- The user takes the blood test tube from the rack;

- The user uses a pipette to place a drop of blood in each reagent test tube;

- The user inserts each resulting test tube into the analyzer in turn and reads on the laptop screen whether the analysis was completed successfully or an error occurred (only one test tube contains the correct reagent);

- The user selects the correct reagent color by pressing the corresponding button on the screen.

- Select the hard difficulty level on the panel;

- The user must wash their hands for 5 s;

- The user must put on gloves;

- The user uses a pipette to place a drop of blood from each blood test tube to be analyzed into the corresponding correct reagent test tube;

- The five resulting test tubes are inserted into the analyzer and the result is read on the laptop screen; if there is at least one incorrect pairing, the process must be repeated;

- If the analysis is completed successfully, the glucose result for each test tube is displayed: low (less than 65 mg/dL), normal (65–110 mg/dL), or high (more than 110 mg/dL);

- The user must label the test tubes according to their values: blue for low, green for normal, and red for high.
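The classification and labeling rule of the hard level can be sketched as a small lookup. This is an illustrative sketch, not the scenario’s code: the thresholds and colors come from the steps above, while the function names and the five readings are hypothetical.

```python
# Thresholds in mg/dL, as listed for the hard level
LOW_LIMIT = 65
HIGH_LIMIT = 110

# Label color for each category (blue = low, green = normal, red = high)
LABEL_COLORS = {"low": "blue", "normal": "green", "high": "red"}


def classify_glucose(value_mg_dl: float) -> str:
    """Map a glucose reading to low / normal / high (65-110 mg/dL inclusive is normal)."""
    if value_mg_dl < LOW_LIMIT:
        return "low"
    if value_mg_dl > HIGH_LIMIT:
        return "high"
    return "normal"


def label_tubes(readings: dict[str, float]) -> dict[str, str]:
    """Return the label color the user should apply to each test tube."""
    return {tube: LABEL_COLORS[classify_glucose(v)] for tube, v in readings.items()}


# Hypothetical readings for the five test tubes of the hard level
readings = {"T1": 58, "T2": 65, "T3": 92, "T4": 110, "T5": 134}
print(label_tubes(readings))
# {'T1': 'blue', 'T2': 'green', 'T3': 'green', 'T4': 'green', 'T5': 'red'}
```

The boundary values 65 and 110 mg/dL both fall in the normal range, matching the “65–110 mg/dL” interval.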

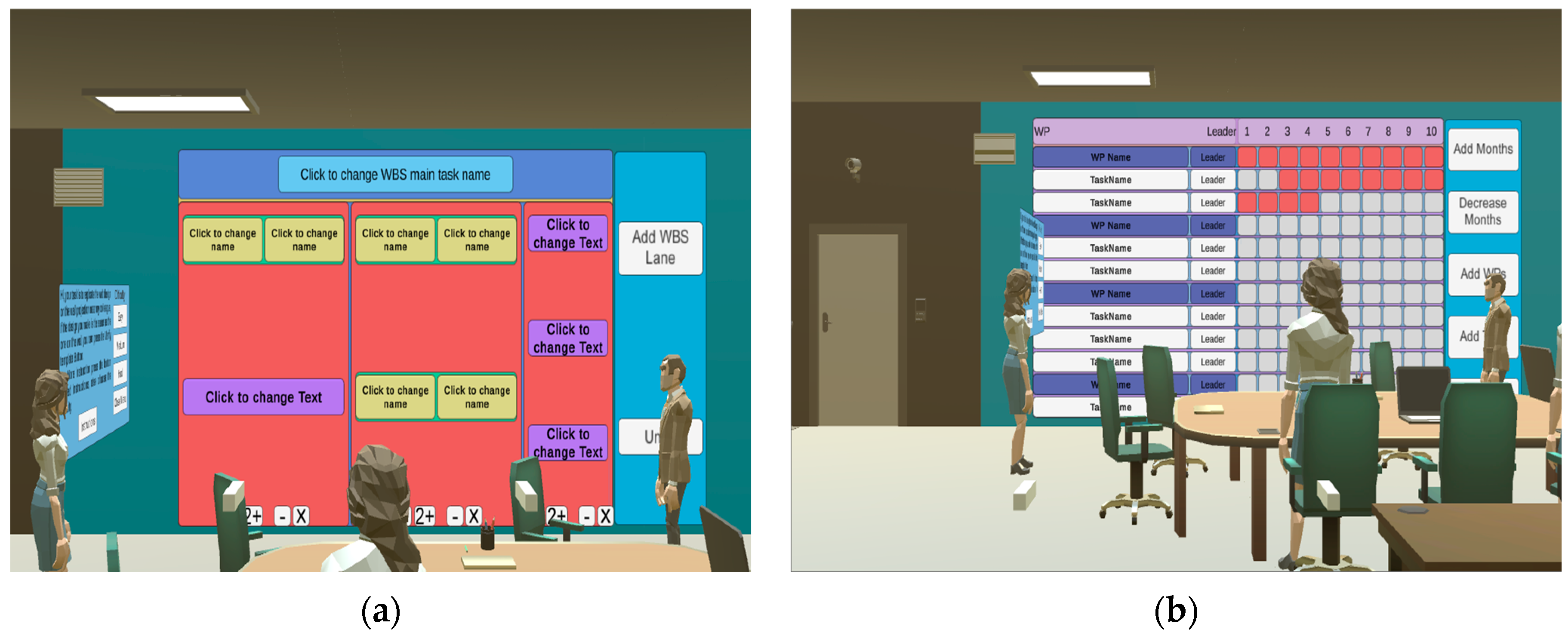

3.1.5. Project Managers

- Select the easy difficulty level from the panel;

- The user must read the instructions;

- Select the WBS diagram;

- The user creates multiple columns to allocate tasks;

- The user then creates tasks for each column based on requirements;

- The user must name each task;

- The user confirms the end of the activity.

- Select the medium level of difficulty;

- The user must select the Gantt chart;

- The user must create the necessary number of WPs (work packages);

- The user must create the necessary number of activities for each WP;

- The user must add the number of months needed to complete the project;

- The user must select the boxes corresponding to the months in which the respective activity must be carried out.

- Select the hard level of difficulty;

- The user must read the instructions;

- Select the WBS diagram;

- The user creates multiple columns where tasks are allocated;

- The user then creates tasks for each column based on requirements;

- The user must name each task;

- The user confirms the end of the WBS activity;

- The user returns to the selection menu;

- The user must select the Gantt chart;

- The user must create the necessary number of WPs;

- The user must create the necessary number of activities for each WP;

- The user must add the number of months needed to complete the project;

- The user must select the boxes corresponding to the months in which the respective activity must be carried out.
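The two project-management artifacts in this scenario can be modeled with simple data structures: a WBS as columns of named tasks, and a Gantt chart as work packages whose activities are marked in selected months. The sketch below is hypothetical (the names, the completeness rule, and the text rendering are assumptions, not the CareProfSys data model).

```python
from dataclasses import dataclass, field


def wbs_is_complete(wbs: dict[str, list[str]]) -> bool:
    """Every WBS column holds at least one task, and every task is named."""
    return bool(wbs) and all(
        len(tasks) > 0 and all(name.strip() for name in tasks) for tasks in wbs.values()
    )


@dataclass
class Activity:
    name: str
    months: set[int]  # 1-based months in which the activity is carried out


@dataclass
class WorkPackage:
    name: str
    activities: list[Activity] = field(default_factory=list)


def render_gantt(wps: list[WorkPackage], duration_months: int) -> str:
    """One row per activity, one column per month ('#' marks a selected month)."""
    months = range(1, duration_months + 1)
    lines = ["Activity".ljust(12) + "".join(f"{m:>3}" for m in months)]
    for wp in wps:
        lines.append(wp.name)
        for act in wp.activities:
            # Each activity needs at least one month, all within the project duration
            if not act.months or not act.months <= set(months):
                raise ValueError(f"{act.name}: months must fall within 1..{duration_months}")
            cells = "".join("  #" if m in act.months else "  ." for m in months)
            lines.append("  " + act.name.ljust(10) + cells)
    return "\n".join(lines)


# Hypothetical WBS and plan
wbs = {"Analysis": ["Gather requirements"], "Development": ["Build prototype", "Test prototype"]}
print(wbs_is_complete(wbs))  # True

plan = [
    WorkPackage("WP1", [Activity("A1.1", {1, 2}), Activity("A1.2", {2, 3})]),
    WorkPackage("WP2", [Activity("A2.1", {3, 4})]),
]
print(render_gantt(plan, 4))
```

In the hard level, both structures are built in sequence, so a session would pass only if the WBS check succeeds and the Gantt plan renders without errors.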

3.1.6. University Professors

- Select the easy level on the panel;

- The teacher goes to the lectern and turns on the computer;

- A PowerPoint presentation about pointers appears on the projector and computer screen, which the teacher must navigate using the mouse;

- When reaching the last slide, the teacher must draw a diagram explaining double pointers on the board;

- There are inattentive students in the room (those who are talking or on the phone); the teacher must identify them and regain their attention; when five students (or all the inattentive ones, if fewer than five) have been identified, the level is completed.

- Select the medium level on the panel;

- The teacher goes to the lectern and turns on the computer;

- A PowerPoint presentation with the theoretical elements of the C language appears on the projector and computer screen, which the teacher must navigate using the mouse;

- The last slide includes a coding exercise that students must solve; the teacher must walk between desks and classify the responses as correct/incorrect for five students;

- The teacher returns to the lectern and shows a correct solution to the exercise.

- Select the hard level on the panel;

- The teacher goes to the lectern and turns on the computer;

- A PowerPoint presentation with the theoretical elements of the C language appears on the projector and computer screen, which the teacher must navigate using the mouse;

- The last slide includes a coding exercise that students must solve; the teacher must walk between desks and classify the responses as correct/incorrect for five students;

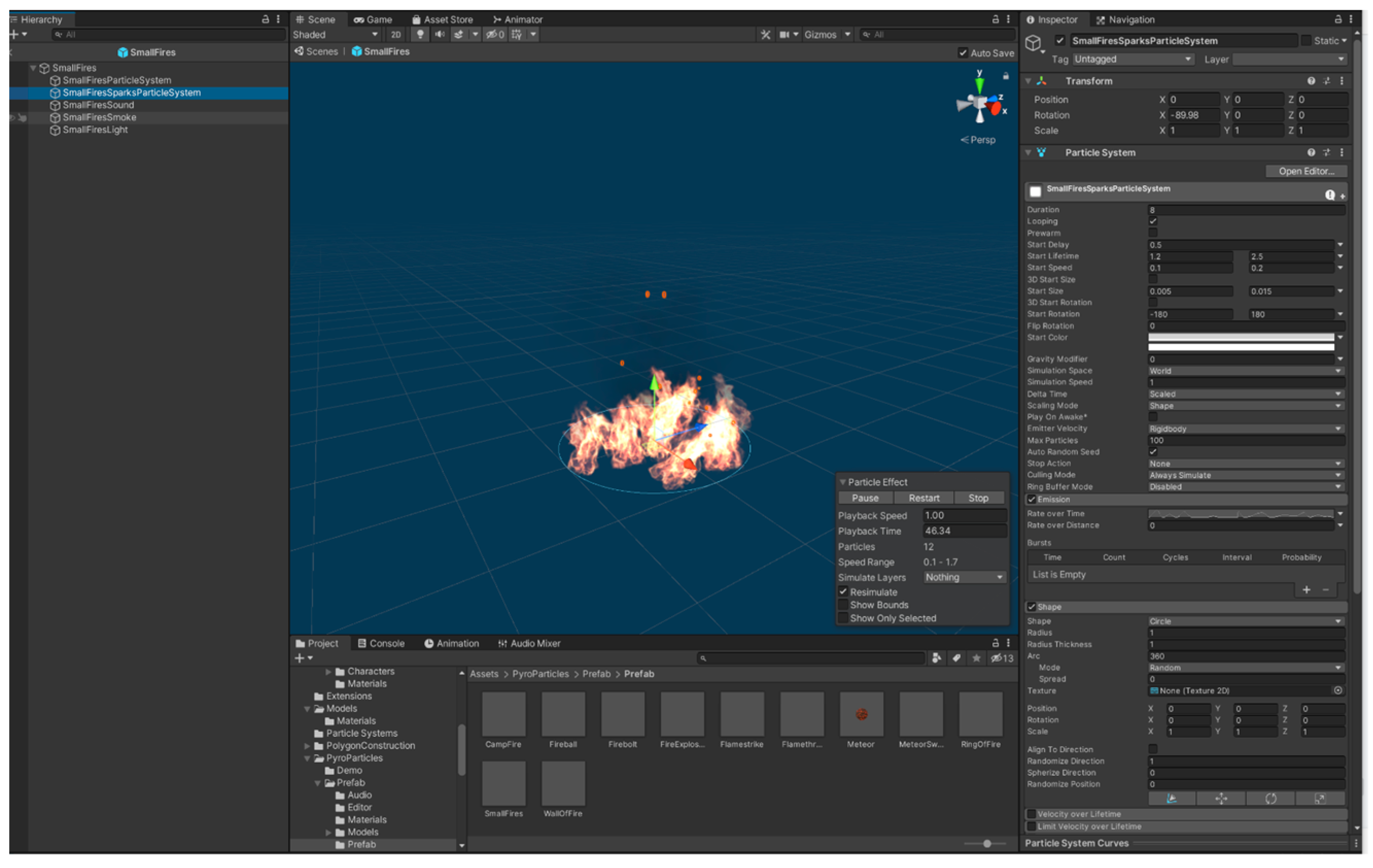

- Suddenly, a fire breaks out due to a short circuit; the teacher must go to each student’s desk and ensure they head towards the exit;

- After the students are successfully evacuated, the teacher takes the fire extinguisher and extinguishes the fire.

3.2. Technical Details of Web VR Scenarios

3.2.1. Architecture of the CareProfSys WebVR Module

3.2.2. Technologies

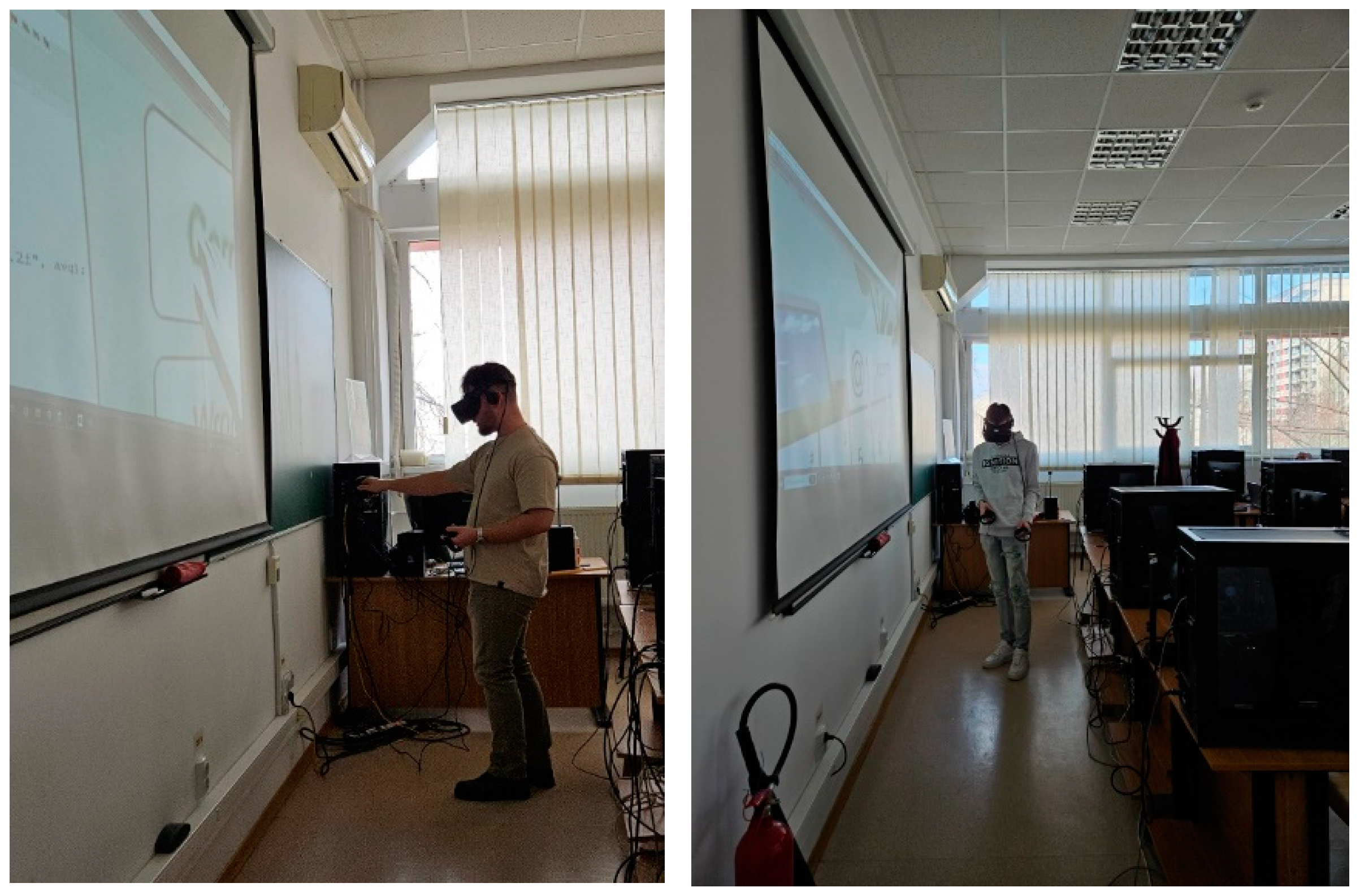

3.2.3. VR Equipment

3.2.4. Teleportation and Movement in VR

3.2.5. User Interface (UI)

3.2.6. Physics

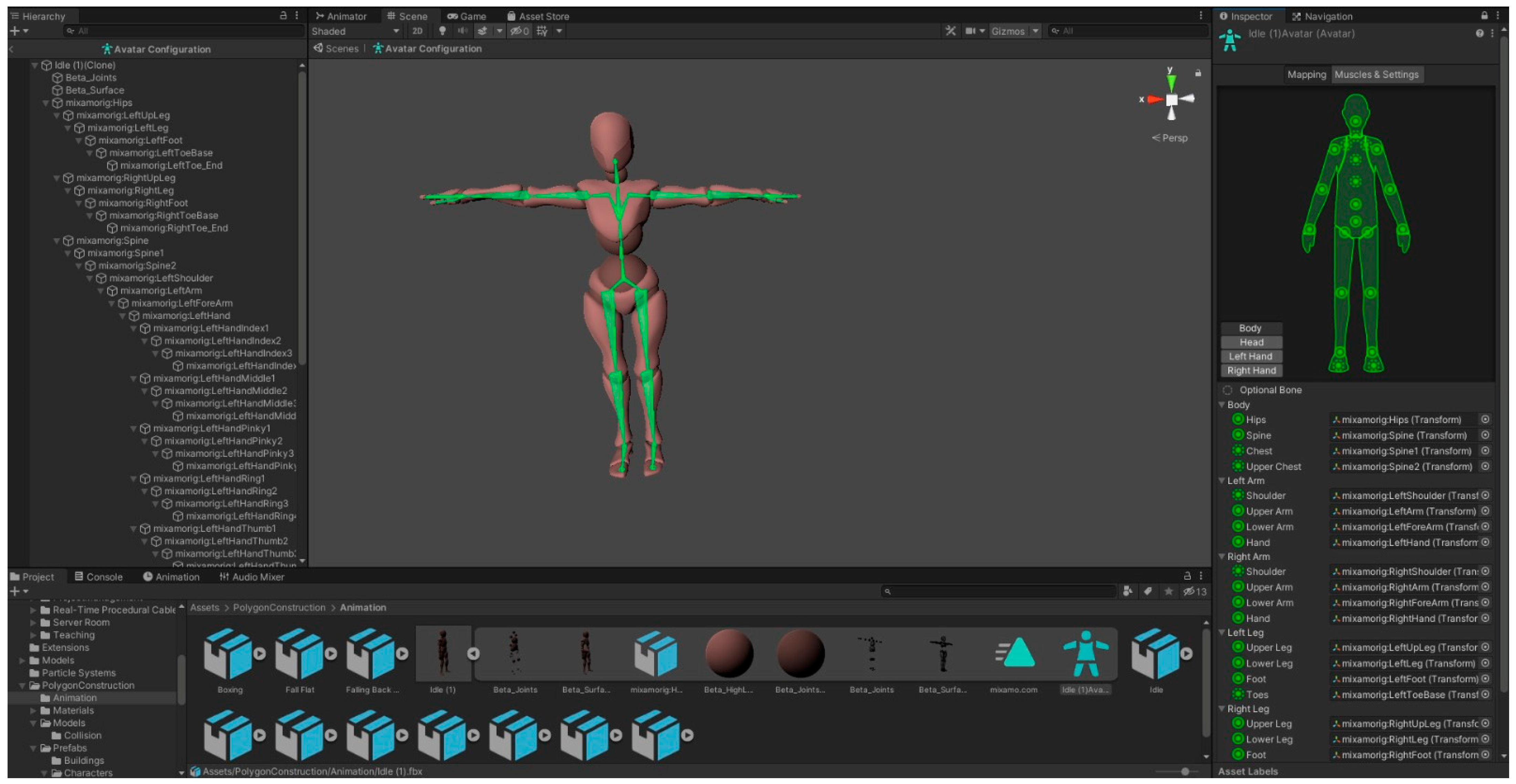

3.2.7. Character Animations

3.2.8. Specific Technical Details for the Networking Scenario

3.2.9. Specific Technical Details for the Civil Engineering Scenario

3.2.10. Specific Technical Details for the Web and Multimedia Developer Scenario

3.2.11. Specific Technical Details for the Chemical Engineer Scenario

3.2.12. Specific Technical Details for the Project Manager Scenario

3.2.13. Specific Technical Details for the University Professor Scenario

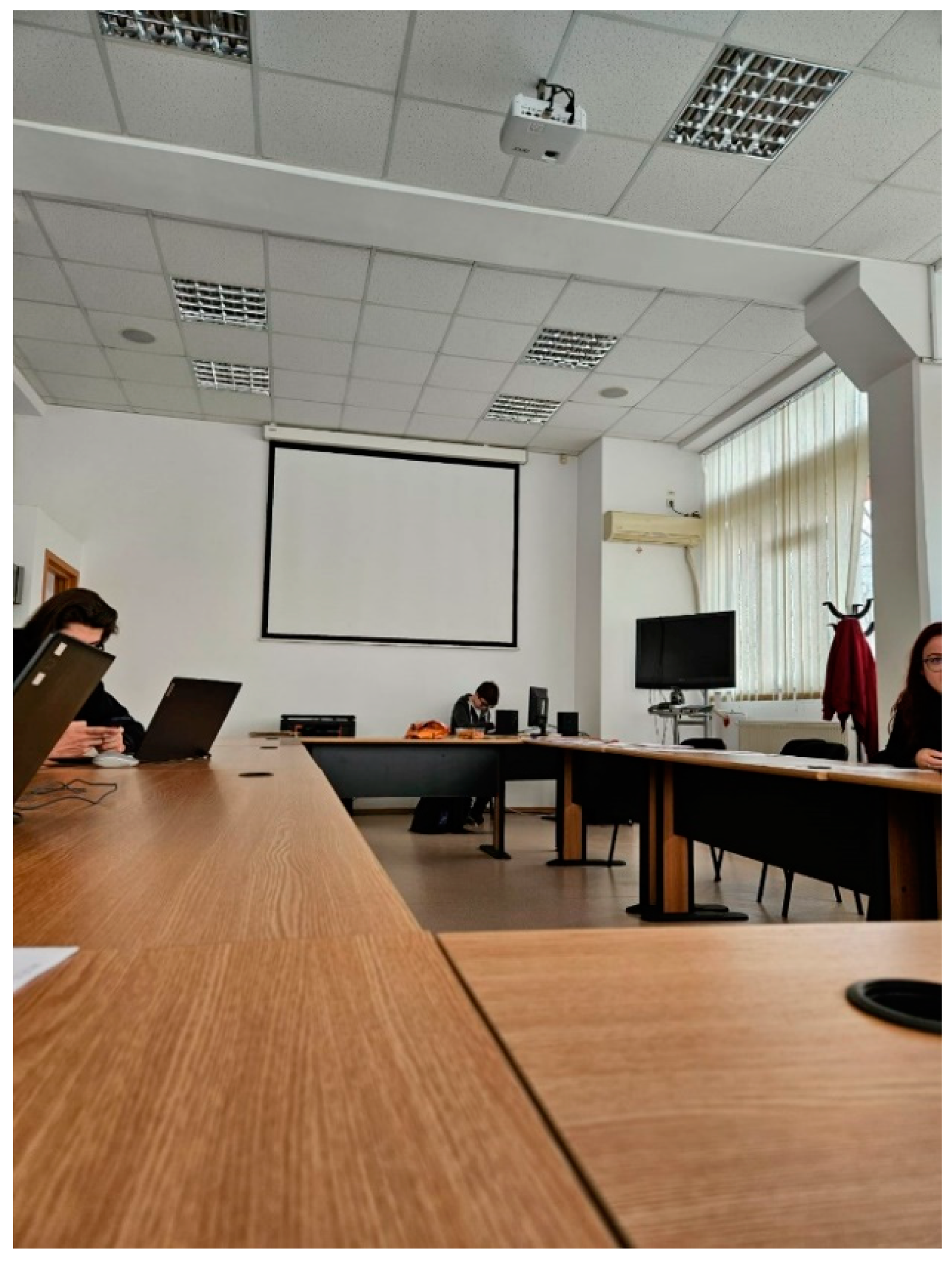

4. Materials and Methods Used in CareProfSys Experiments

- Scheduling for the experiment;

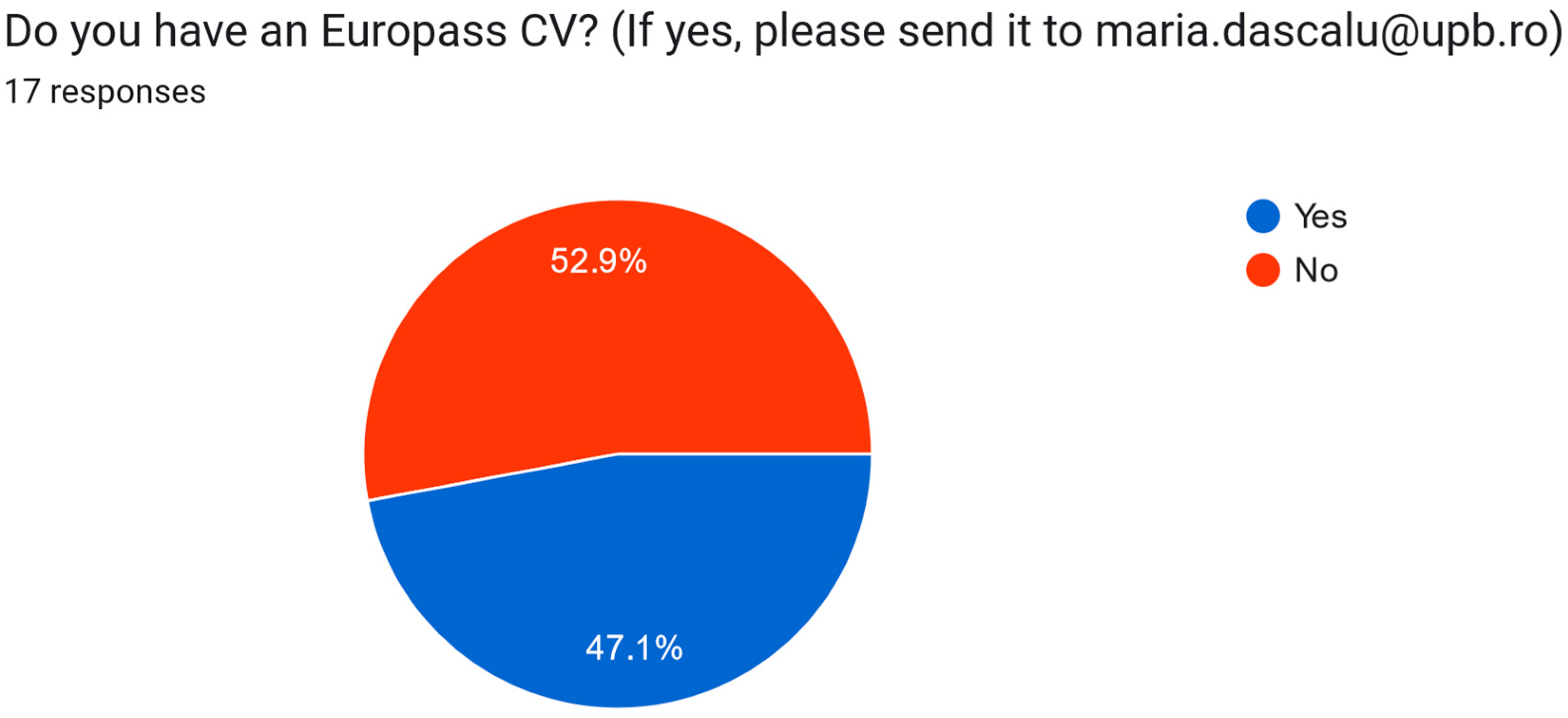

- (Optional) filling in a CV in Europass format, in order to upload it to the CareProfSys platform;

- An account on social networks, so that the CareProfSys system can access the information available there;

- (Optional) basic knowledge of the occupations for which VR scenarios were built.

5. Results

- The introductory self-assessment questionnaire, shared with participants via a Google form;

- The final feedback questionnaire, distributed to participants through a Google form;

- The VR simulation performance recording module, which exports the following information to text files for each user simulation session: start time of the training session; difficulty level achieved; last task completed; total number of system errors; score; completion time of the training session;

- Interviews with users after they completed the final feedback questionnaire.
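A per-session export of the fields listed above could look like the following sketch. It is an assumption-laden illustration: the field names, the “field: value” text format, treating the completion time as a timestamp, and the sample values are all hypothetical, not taken from the CareProfSys module or from experimental data.

```python
from dataclasses import asdict, dataclass
from datetime import datetime


@dataclass
class SessionRecord:
    """One VR training session; field names are illustrative, not the module's own."""
    start_time: datetime
    difficulty_level: str   # e.g. "easy", "medium", "hard"
    last_task: str          # last task the user completed
    error_count: int        # total number of errors recorded by the system
    score: int
    completion_time: datetime


def duration_seconds(record: SessionRecord) -> float:
    """Time spent in the session, derived from the start and completion timestamps."""
    return (record.completion_time - record.start_time).total_seconds()


def to_text(record: SessionRecord) -> str:
    """Serialize a record as 'field: value' lines (an assumed format)."""
    lines = []
    for key, value in asdict(record).items():
        if isinstance(value, datetime):
            value = value.isoformat(timespec="seconds")
        lines.append(f"{key}: {value}")
    return "\n".join(lines) + "\n"


def export(record: SessionRecord, path: str) -> None:
    """Write one session to its own text file."""
    with open(path, "w", encoding="utf-8") as fh:
        fh.write(to_text(record))


# Invented sample session, for illustration only
record = SessionRecord(
    start_time=datetime(2024, 3, 1, 10, 0, 0),
    difficulty_level="medium",
    last_task="Configure router",
    error_count=2,
    score=80,
    completion_time=datetime(2024, 3, 1, 10, 12, 30),
)
print(to_text(record), end="")
print(duration_seconds(record))  # 750.0
```

Keeping one plain-text file per session makes the records easy to aggregate later, for example to compare scores and error counts across difficulty levels.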

5.1. First Round of Experiments

5.1.1. Results of the Introductory Self-Assessment Questionnaire

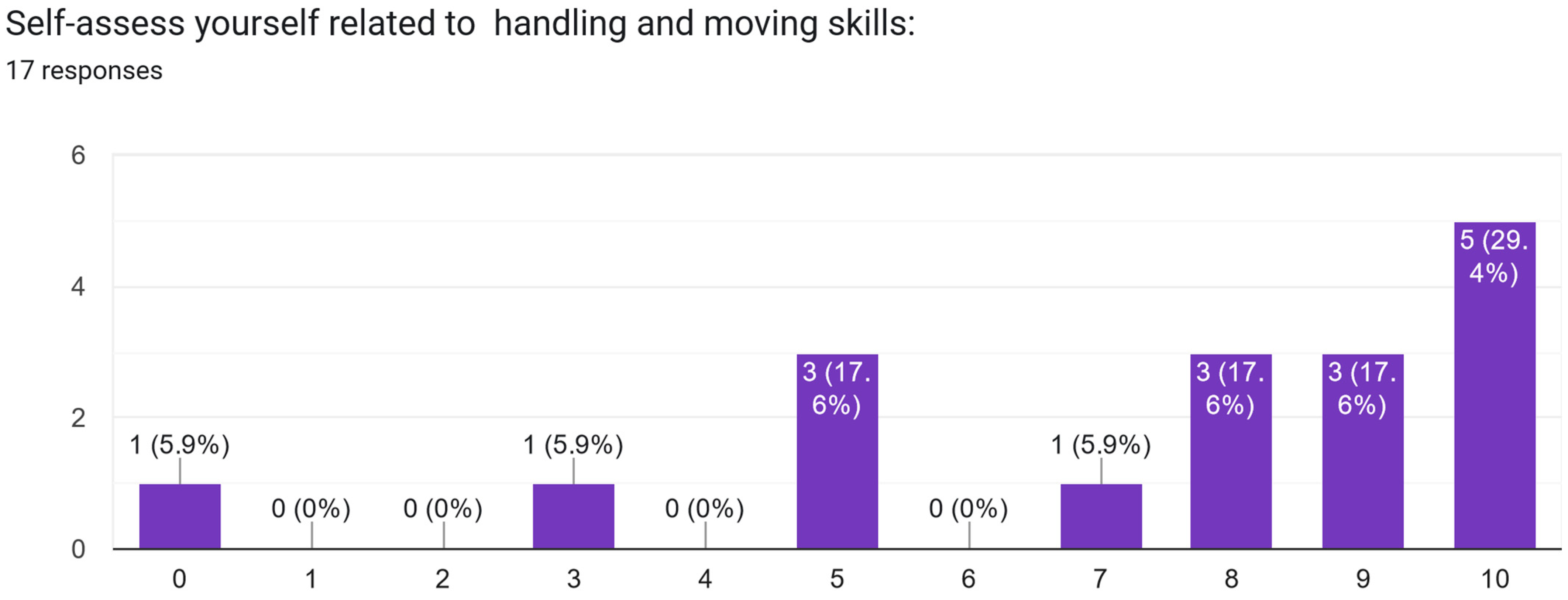

- Skill and movement: 30% of respondents rate their skill level as high.

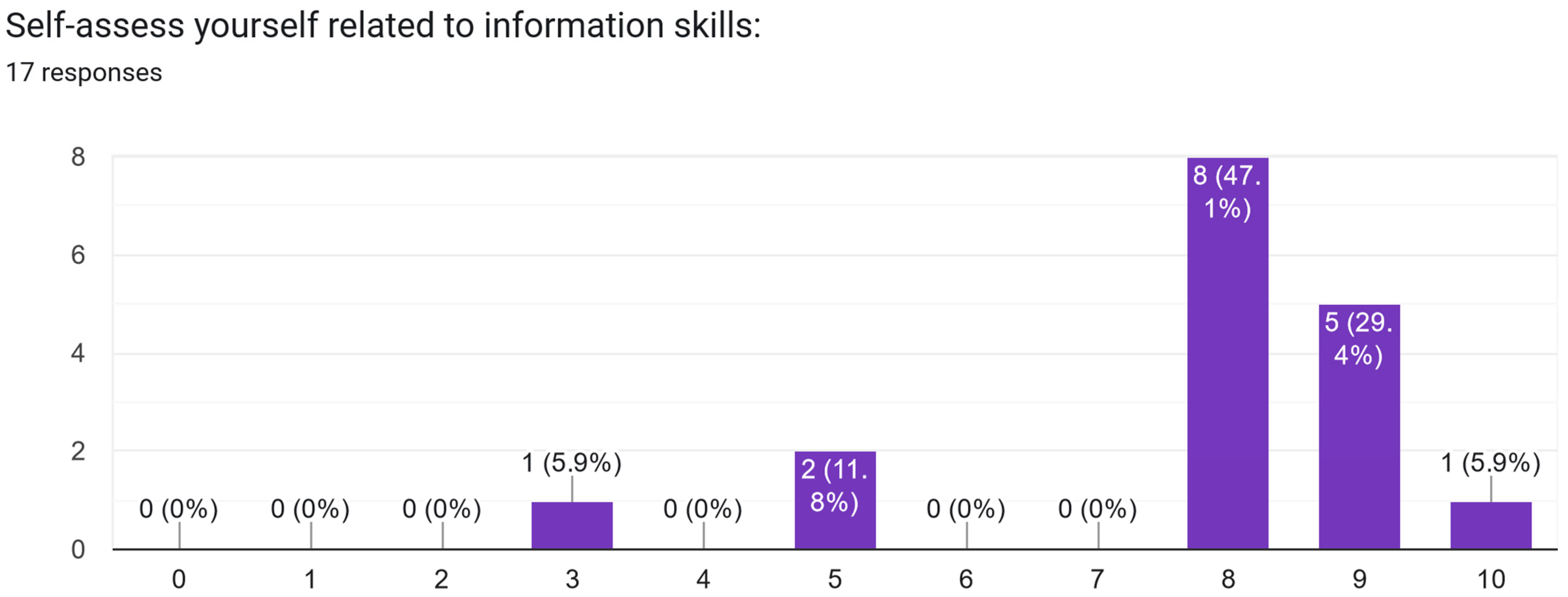

- Information and information search: 50% of students believe they know how to search for and find information.

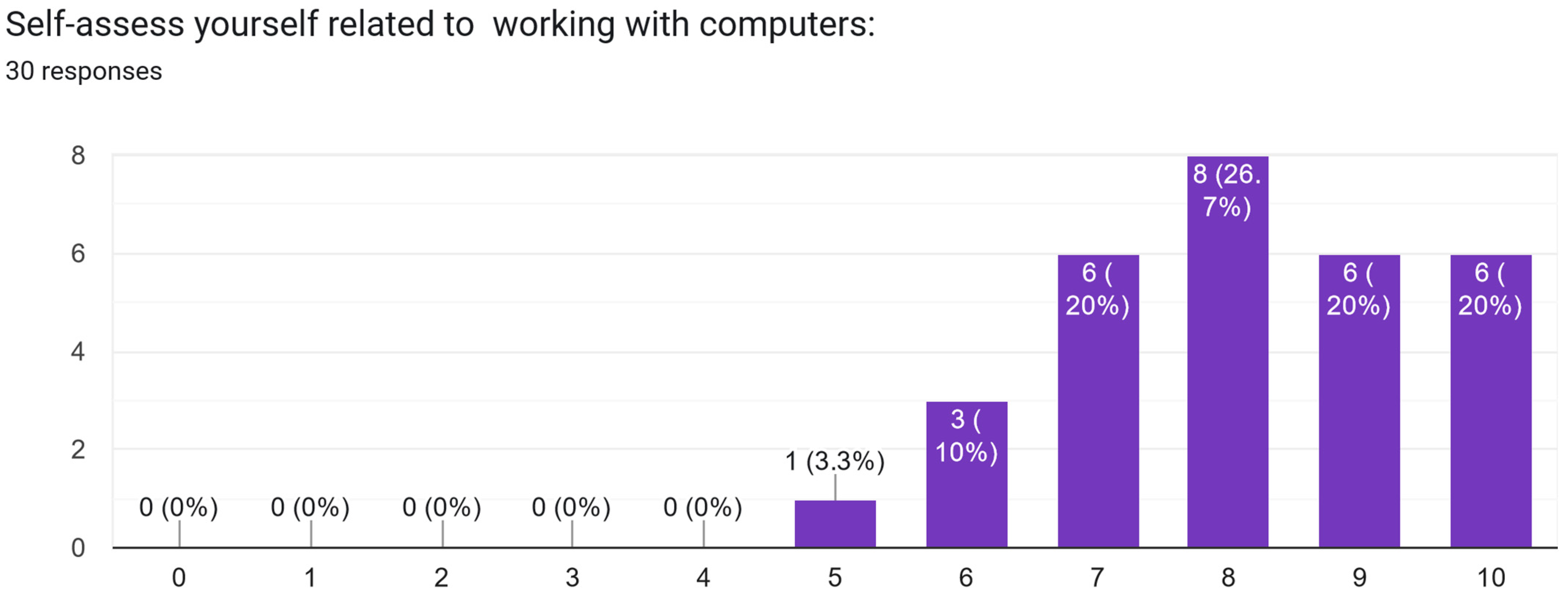

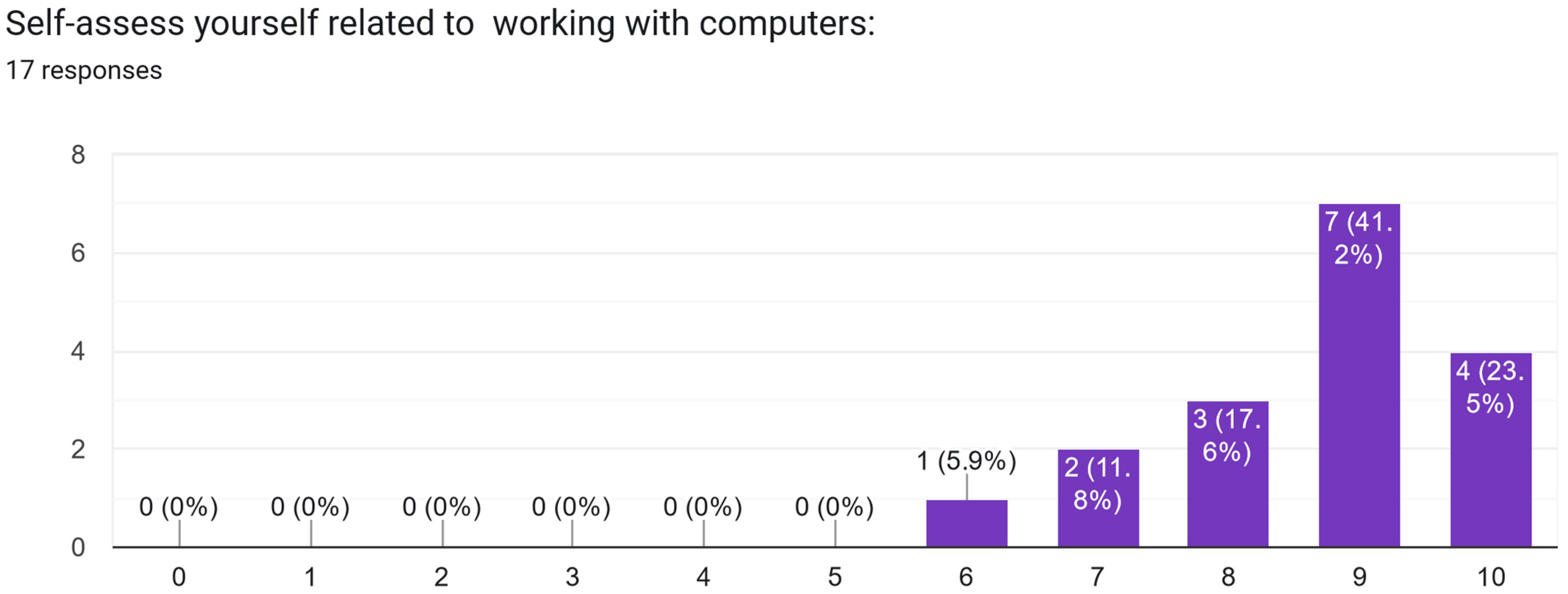

- Working with computers: over 80% of students believe they know how to operate computers.

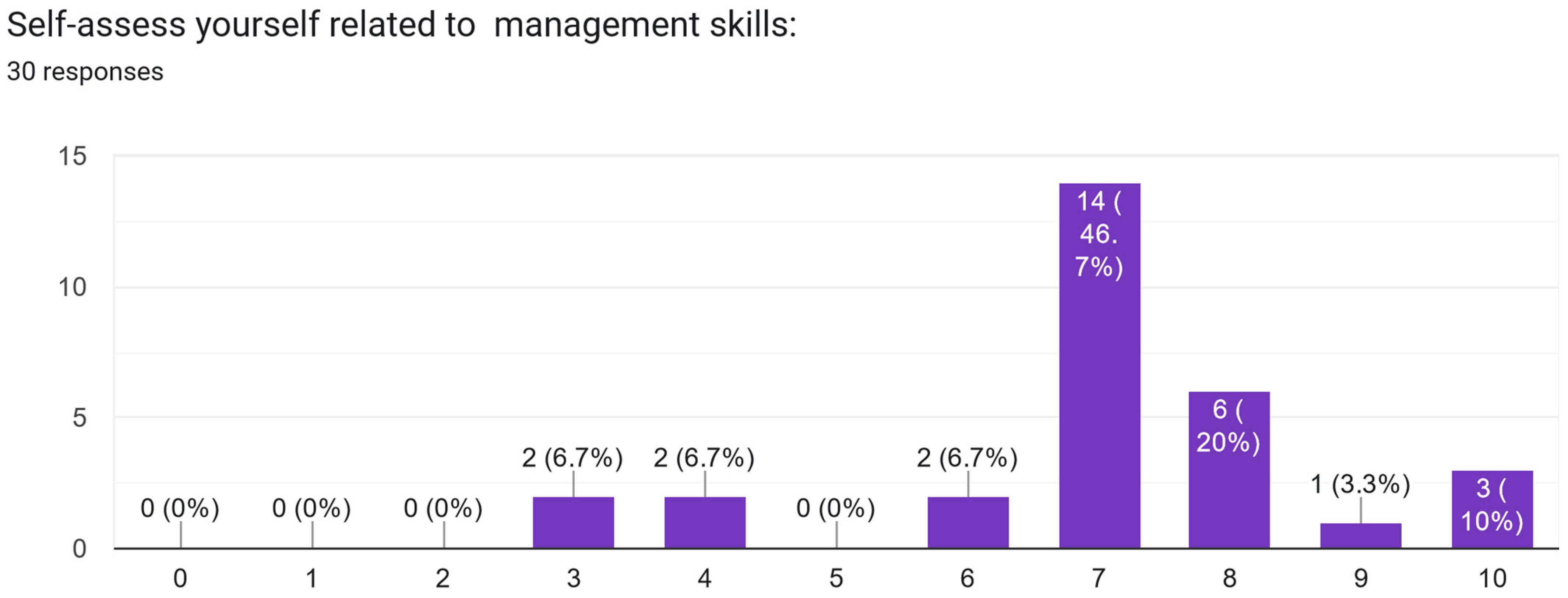

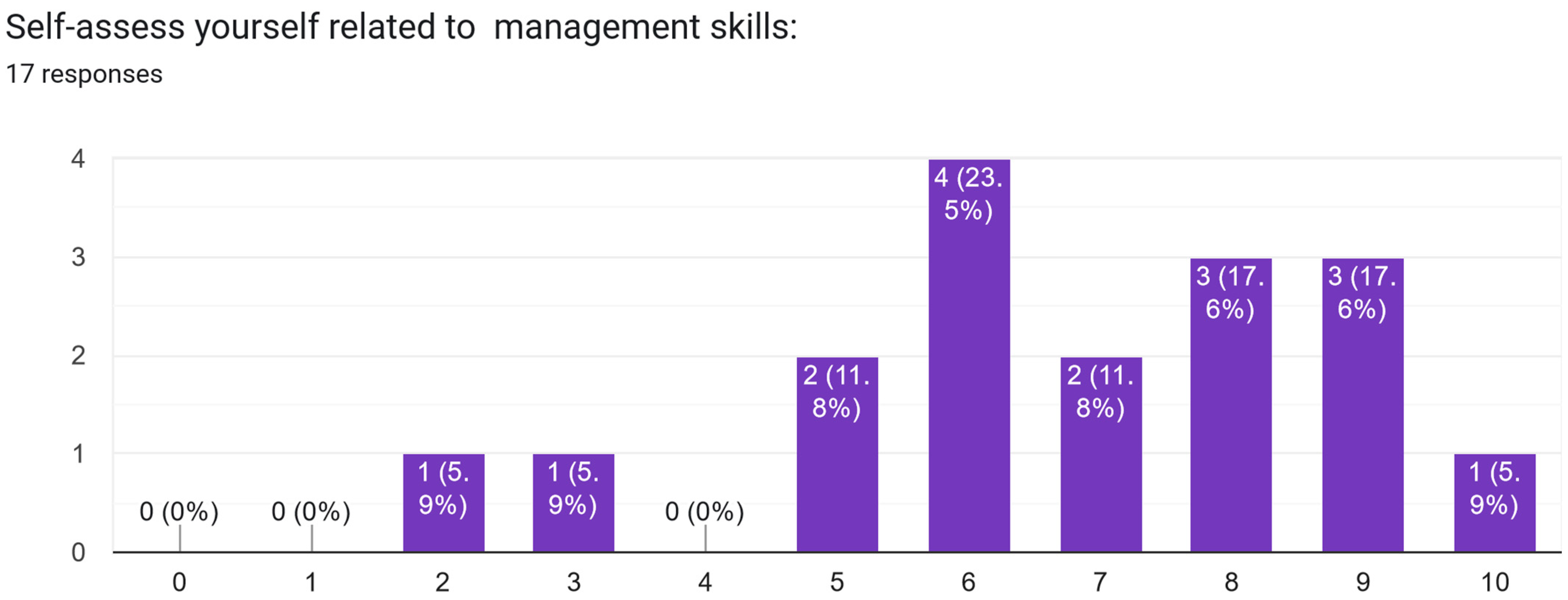

- Management: over 80% of respondents believe they have good or very good management skills.

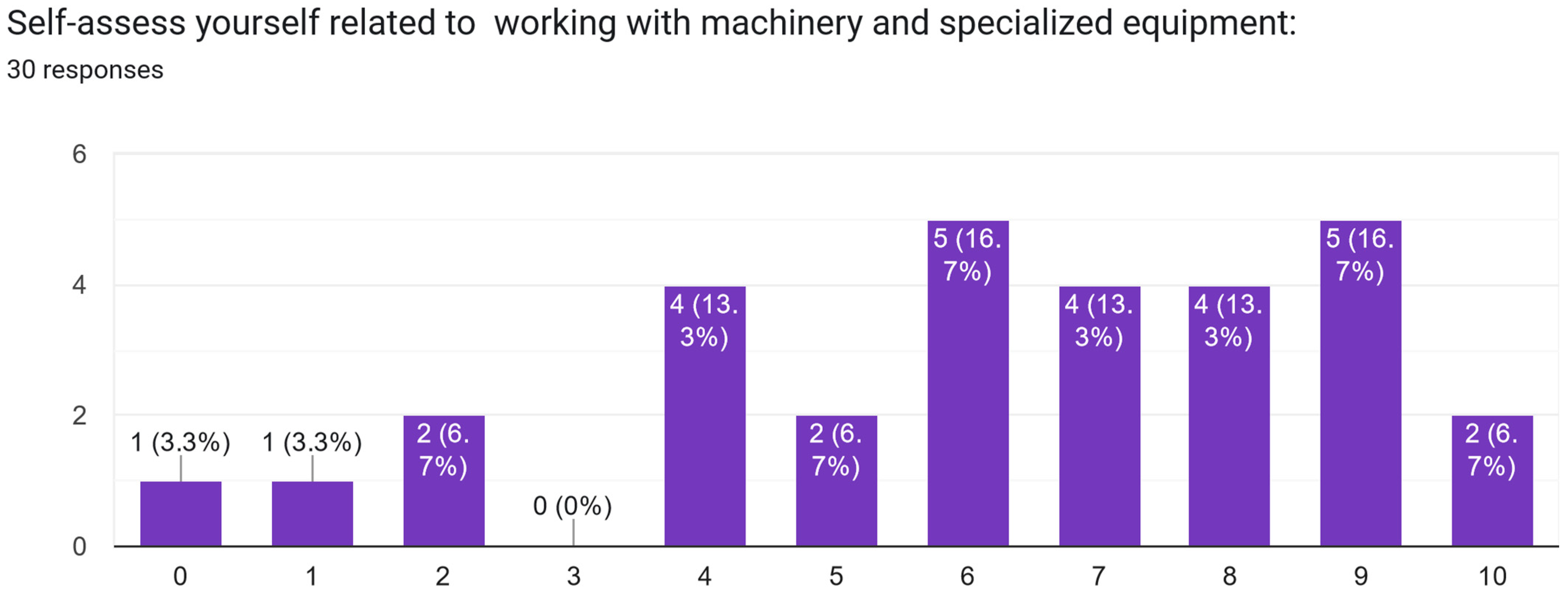

- Working with specialized machinery and equipment: over 74% of respondents believe they have the necessary skills to work with specialized machinery and equipment.

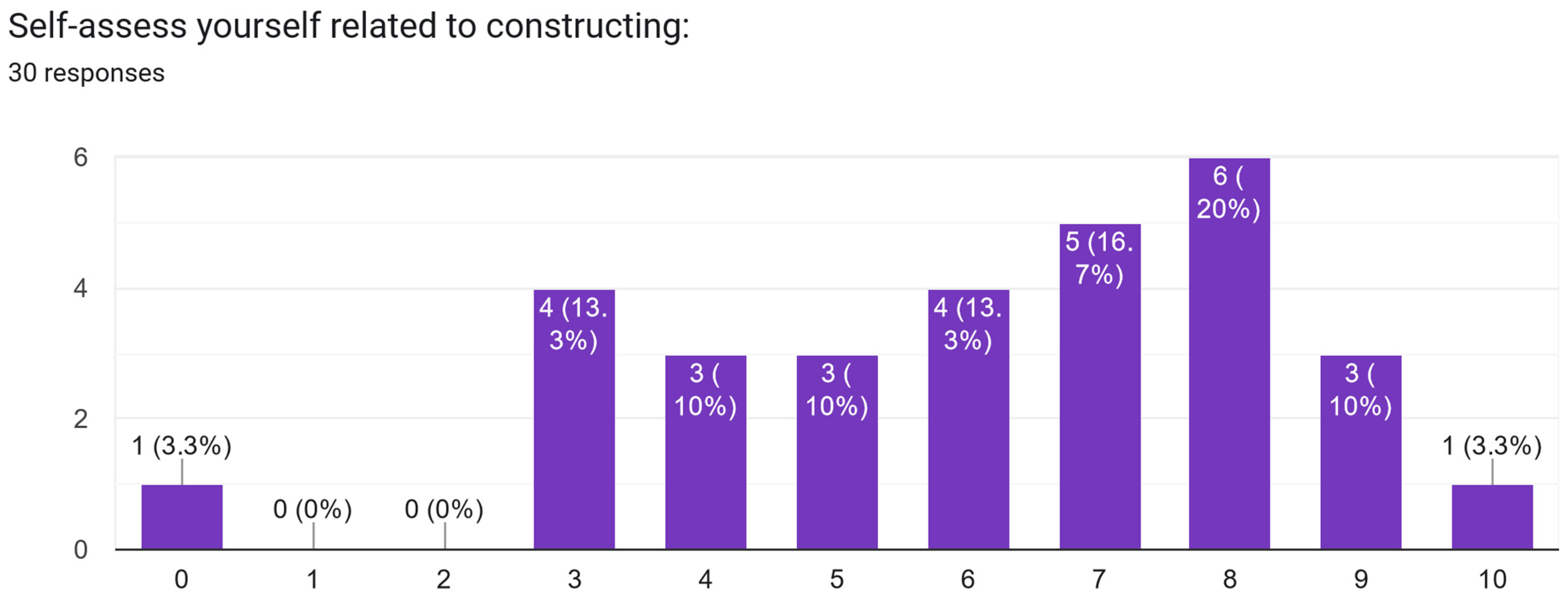

- Construction: over 74% of respondents self-assess their construction skills as satisfactory.

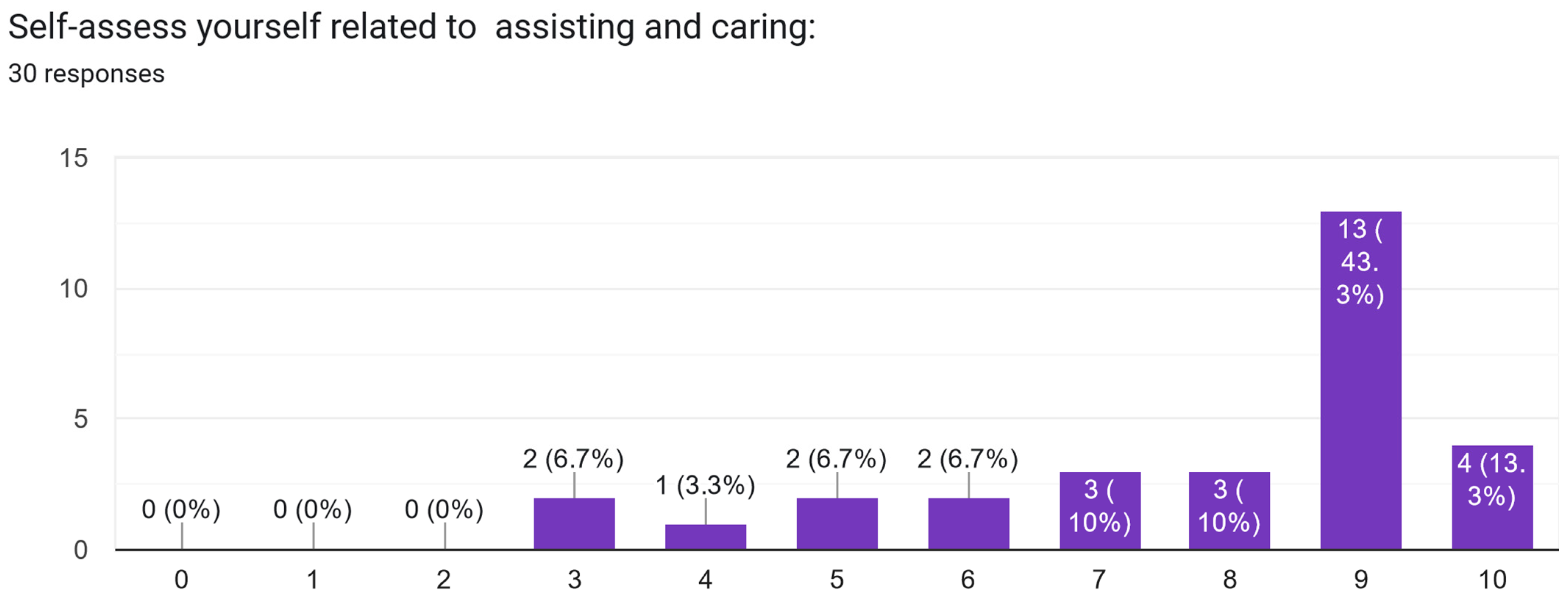

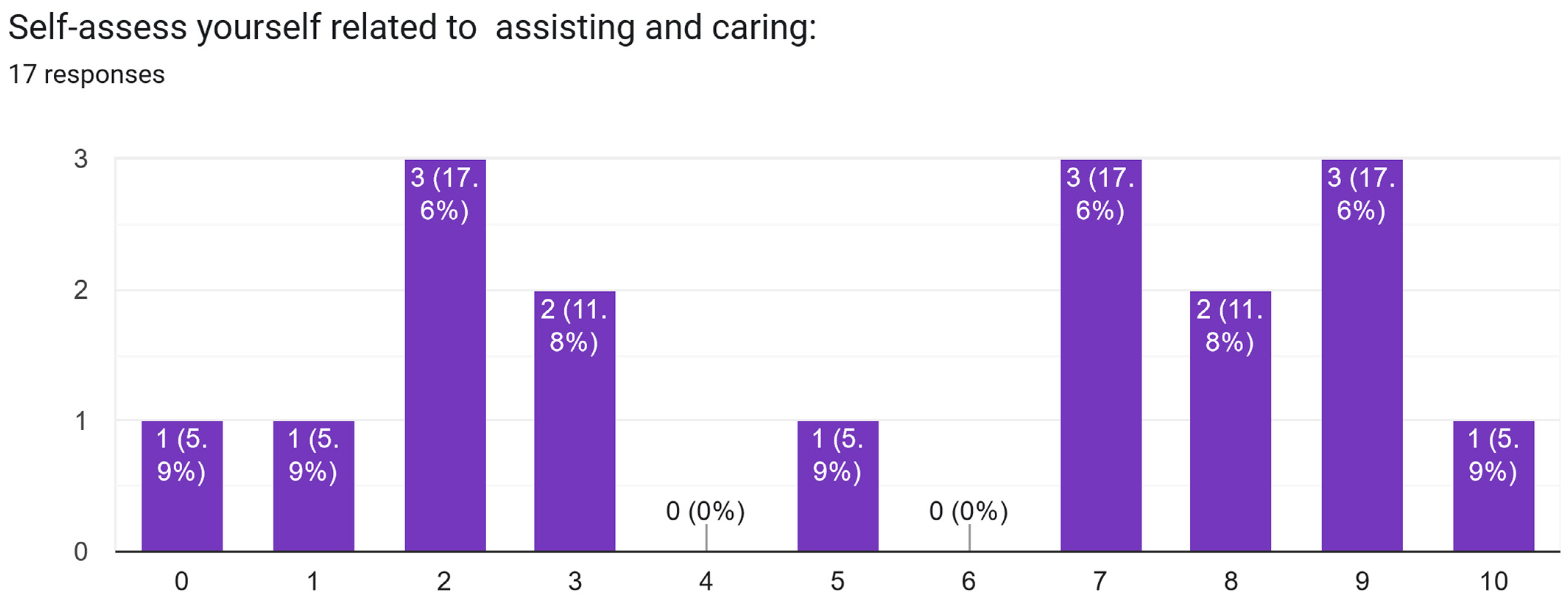

- Support and care: 90% rate their support and care skills as high or very high (over 56% of respondents rate them as very high).

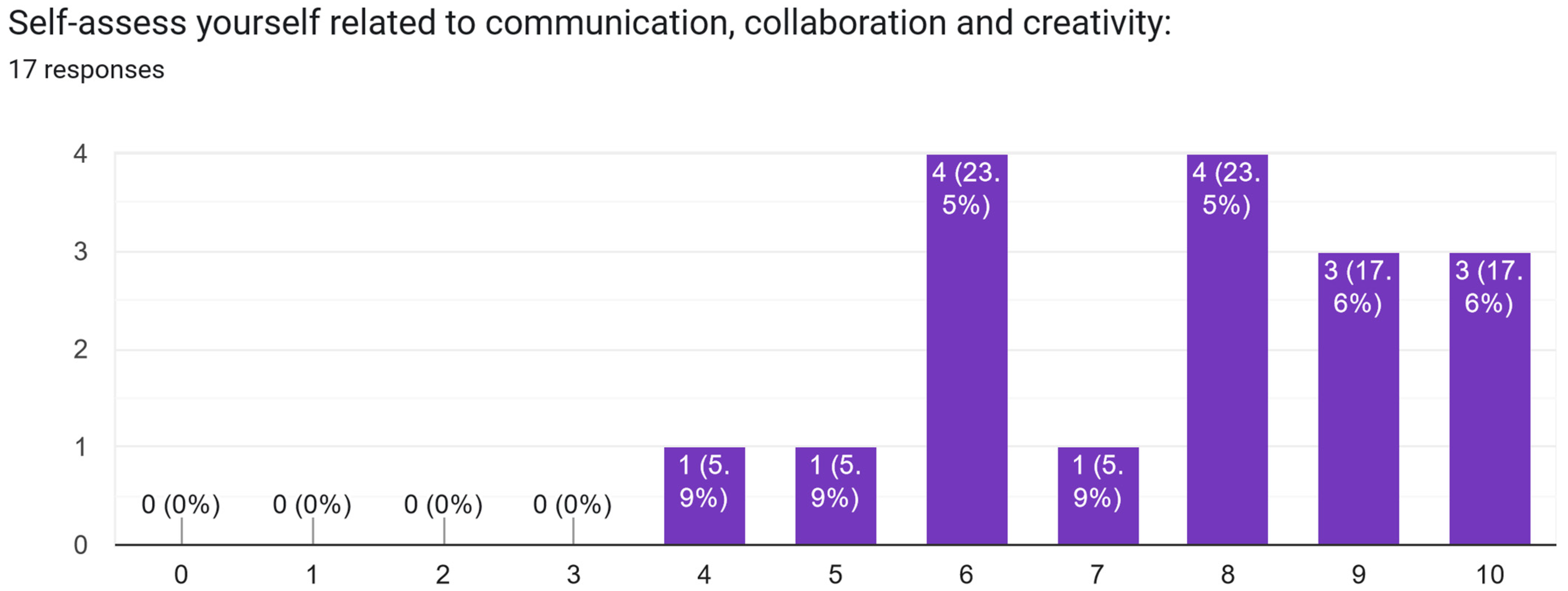

- Communication, collaboration, and creativity: over 96% believe they have high communication, collaboration, and creativity skills.

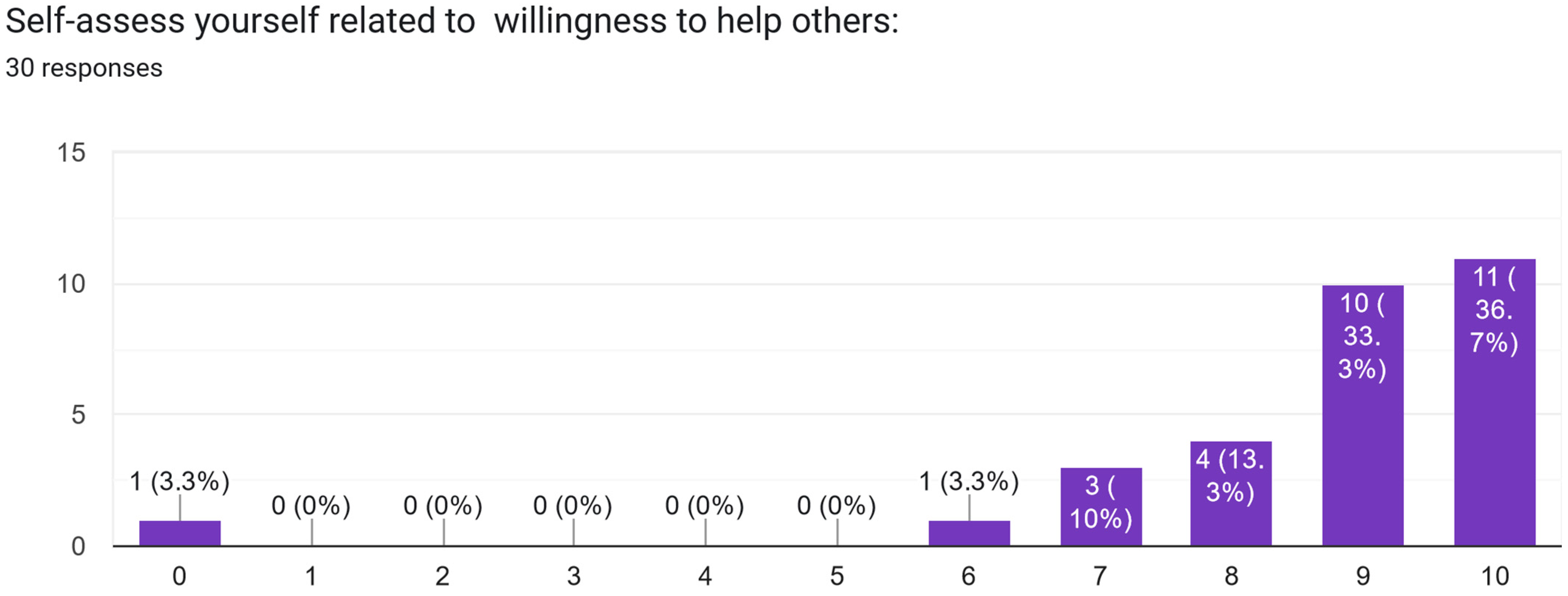

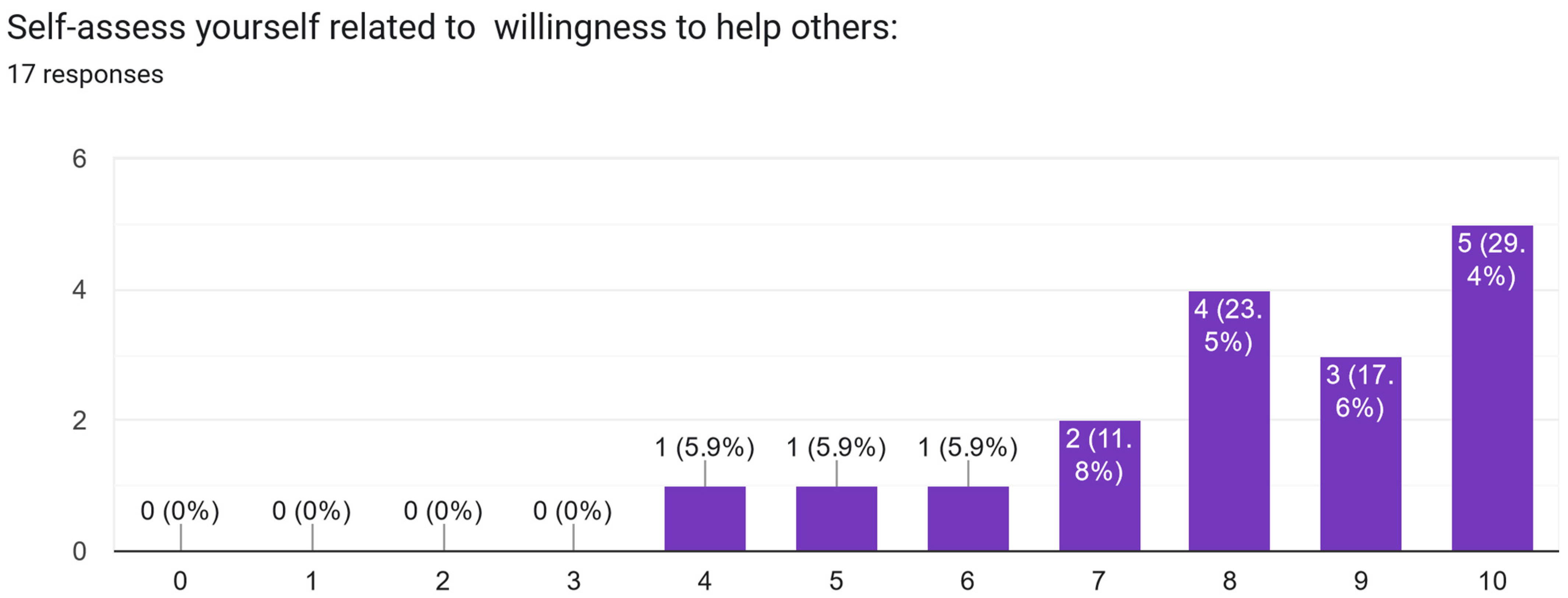

- Interests related to motivation to help others: over 96% of respondents say they are eager to help others.

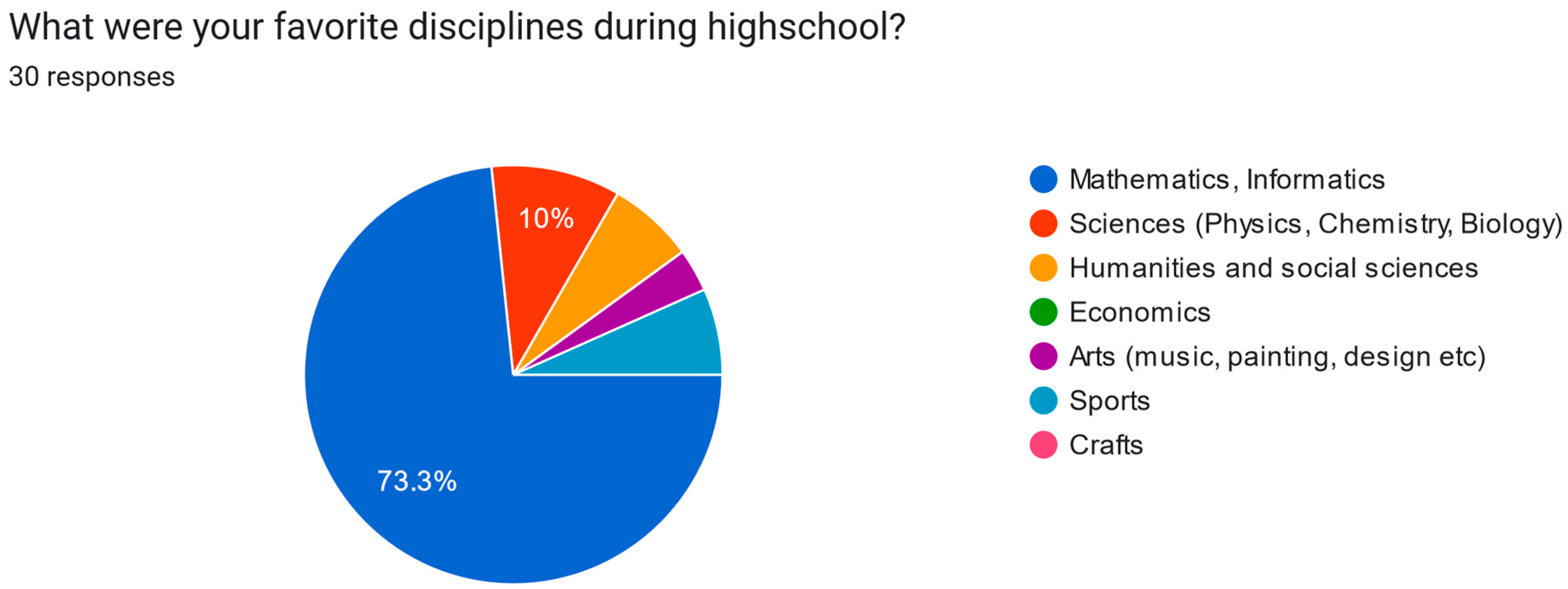

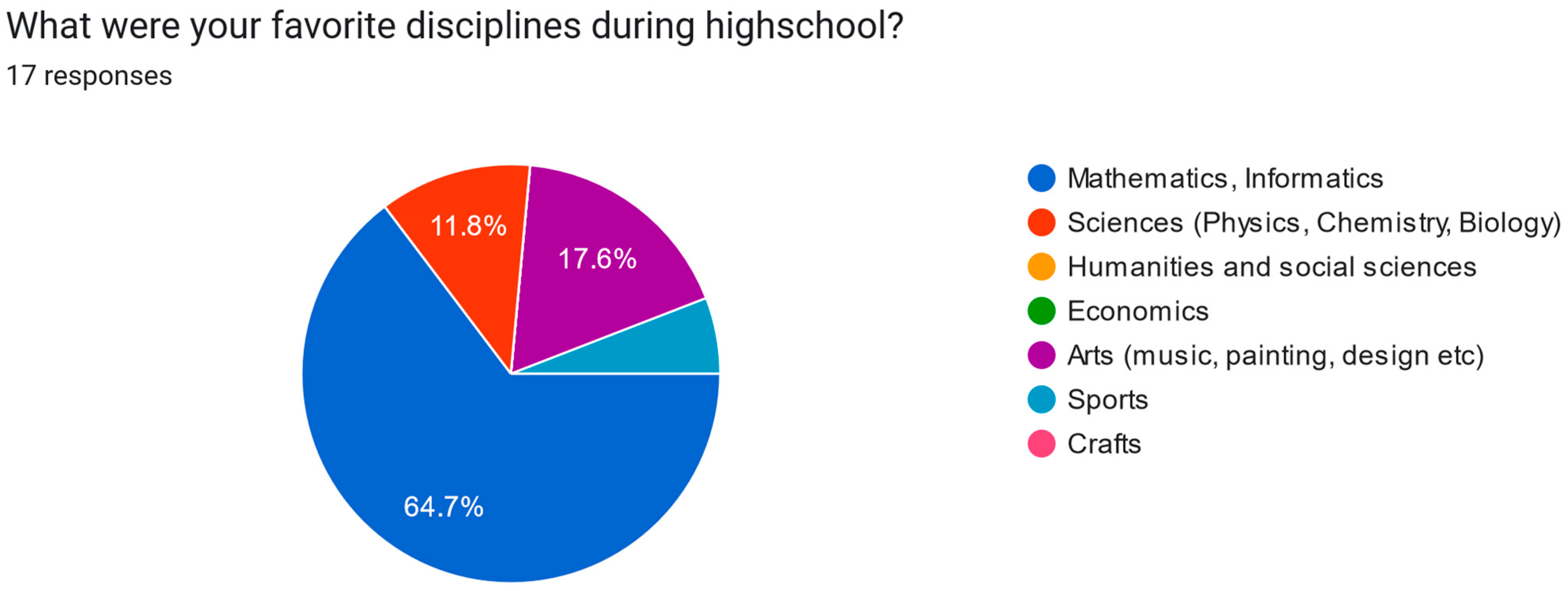

- Preferred subjects in high school: over 73% of surveyed students chose mathematics/computer science as their preferred subjects during high school; 10% preferred the sciences (physics, chemistry, biology); the lowest percentages were recorded for arts and sports, and no respondent chose economic or creative fields.

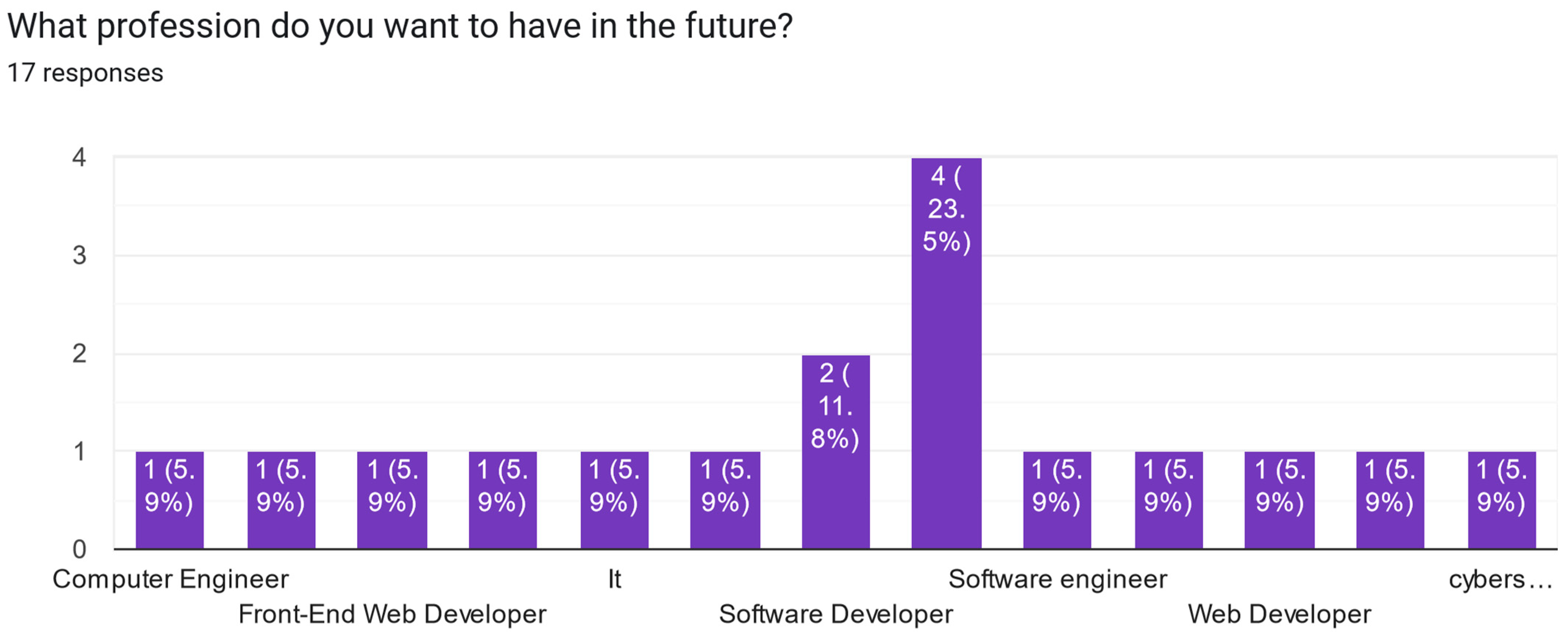

- Future profession: the most desired profession was programmer (50% of respondents); 16% of respondents chose cybersecurity engineer as their desired profession.

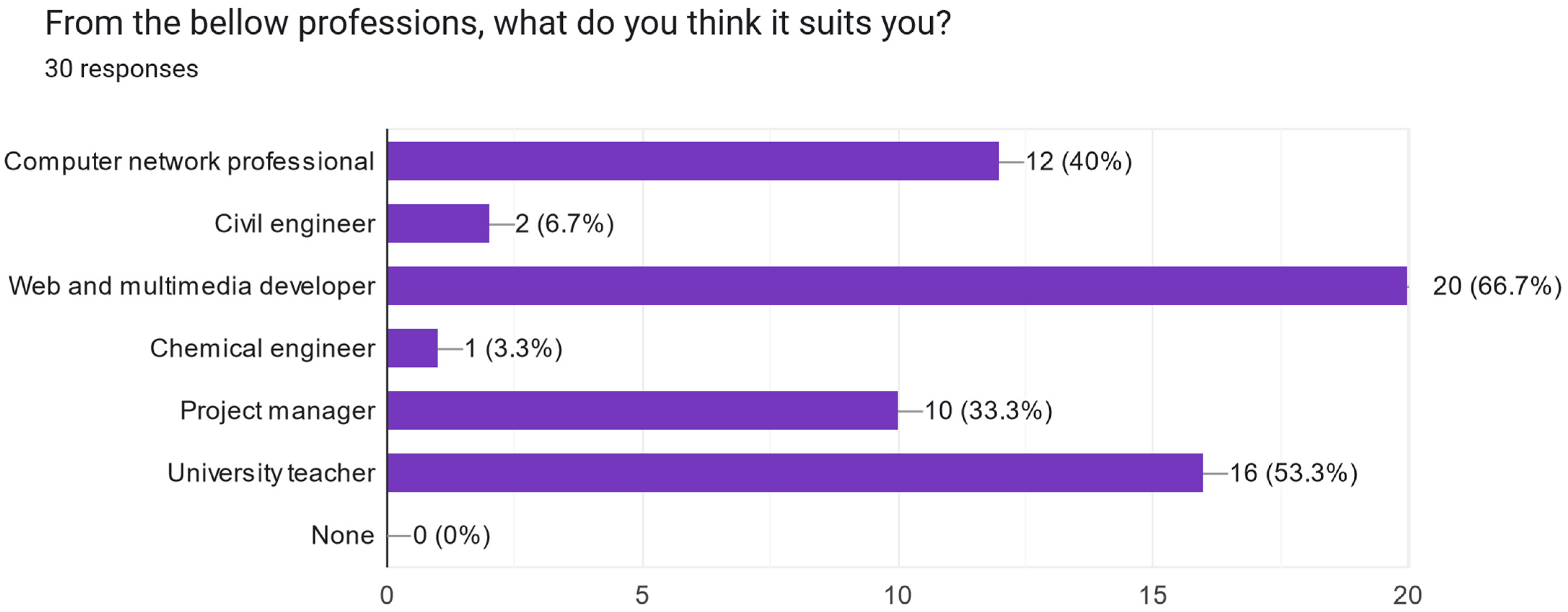

- Matching with the six professions modeled within the CareProfSys system: the profession with which most of the surveyed students identified was web programmer (66.7%), followed by university professor (over 53%) and network engineer (40%).

- For the web programmer, the reasons relate to choosing a profession with a future, a passion for computers/programming, an interest in cybersecurity, and a problem-solving mindset;

- For the university professor, the reasons are the perception of it as a prestigious profession, the desire to share accumulated knowledge, the pleasure of helping others and working with people, and management and communication skills;

- For the network engineer, the motivations are working with computers, a preference for learning and performing practical activities, enjoyment of programming, a calm temperament, and concern for cybersecurity.

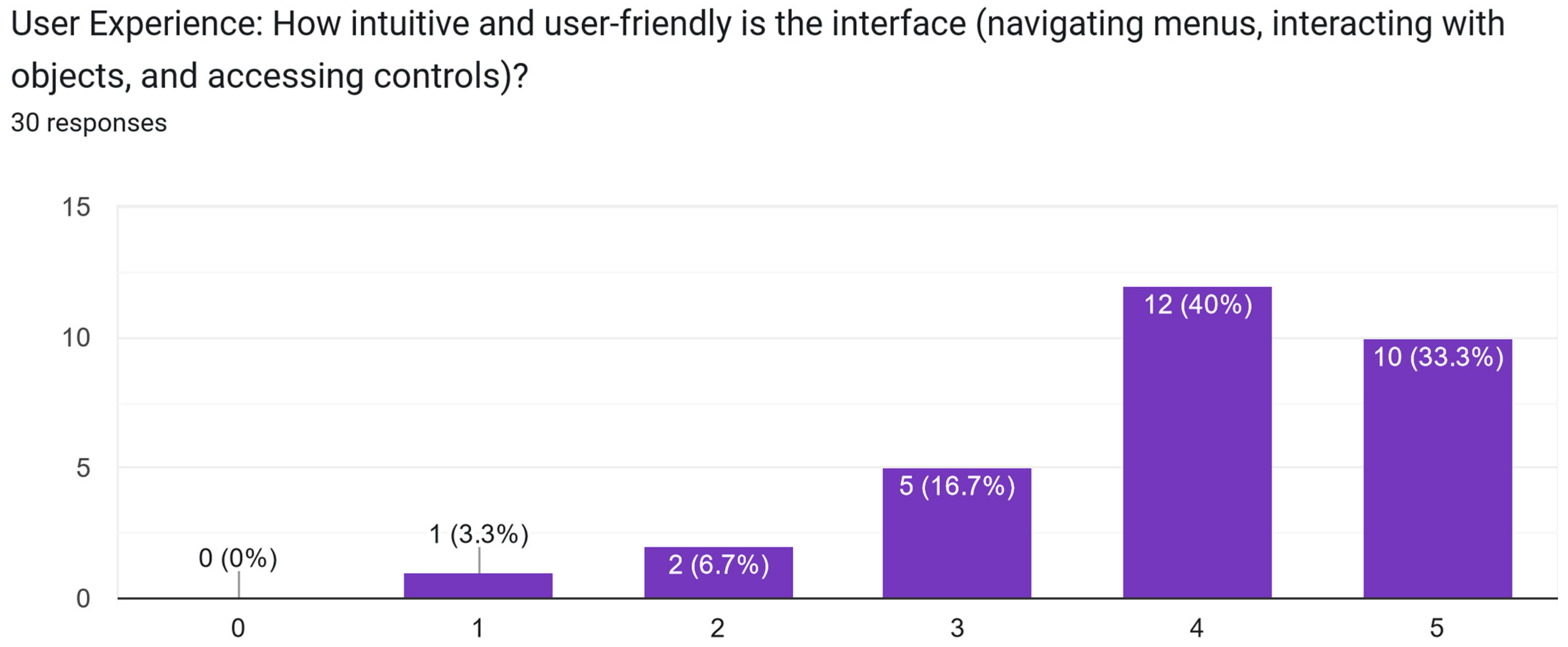

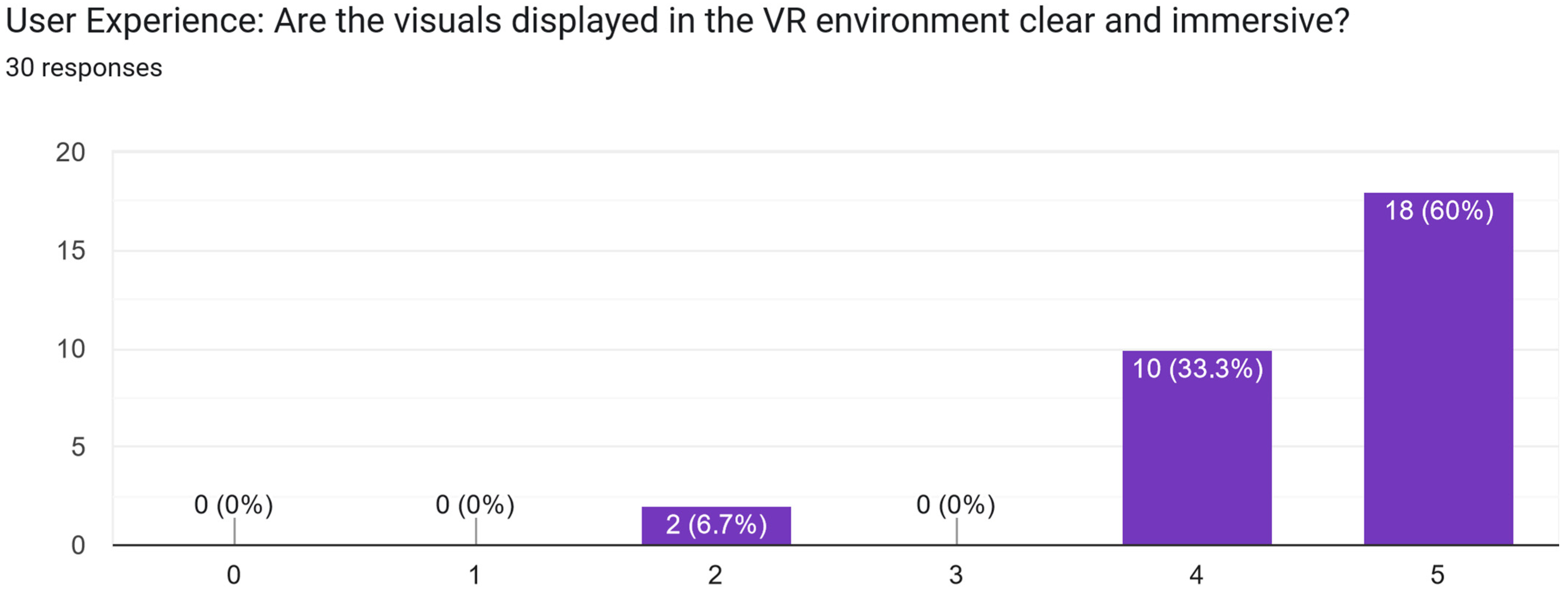

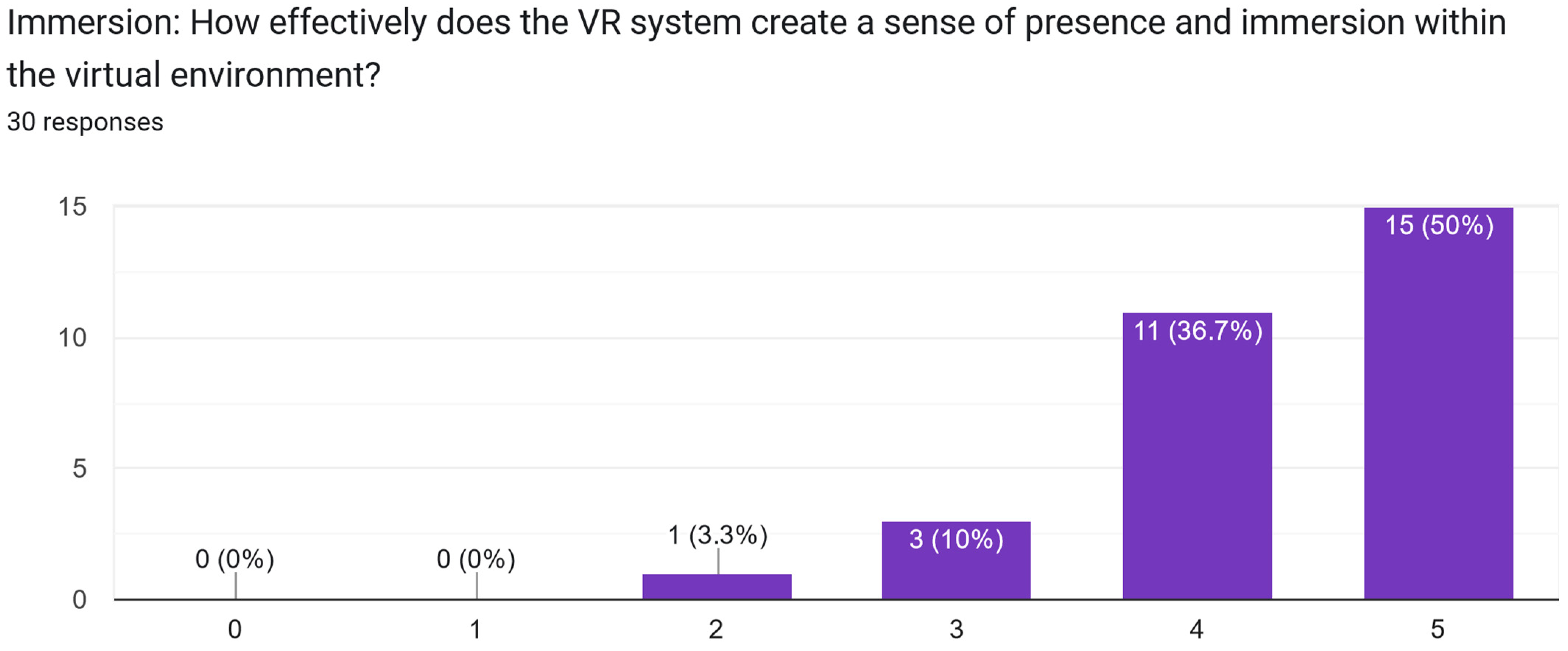

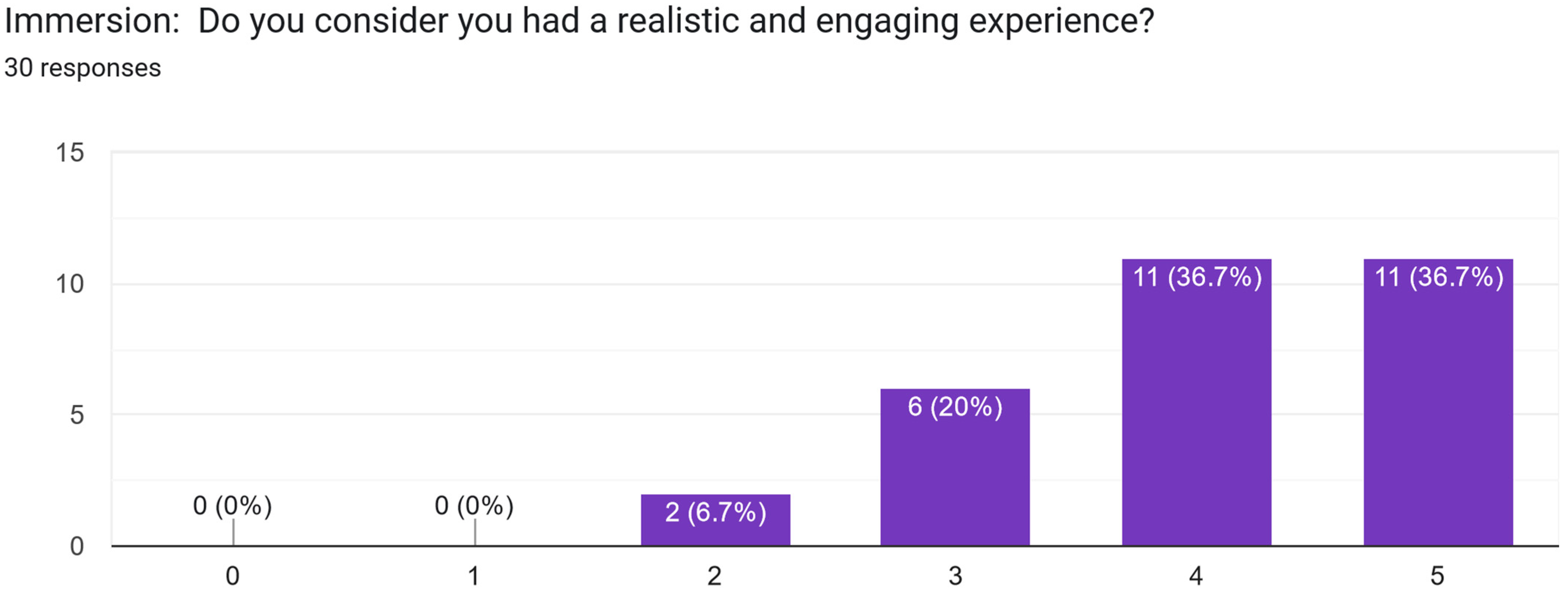

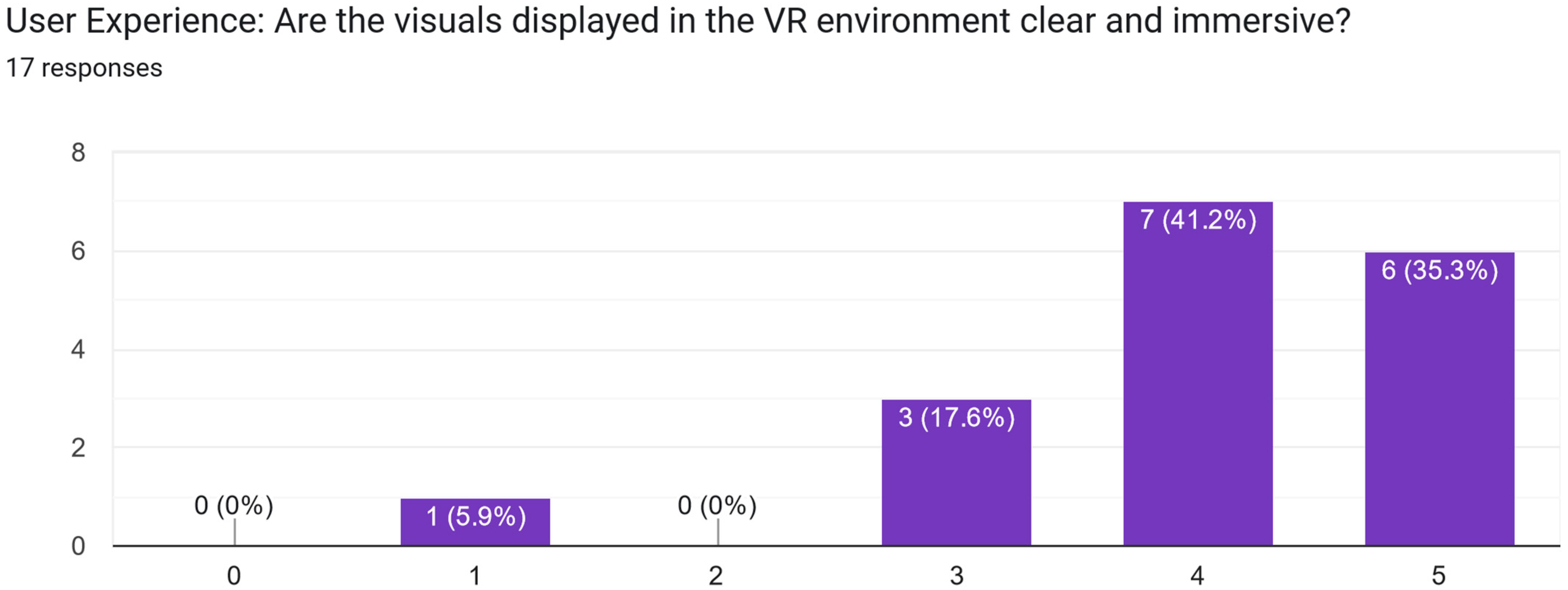

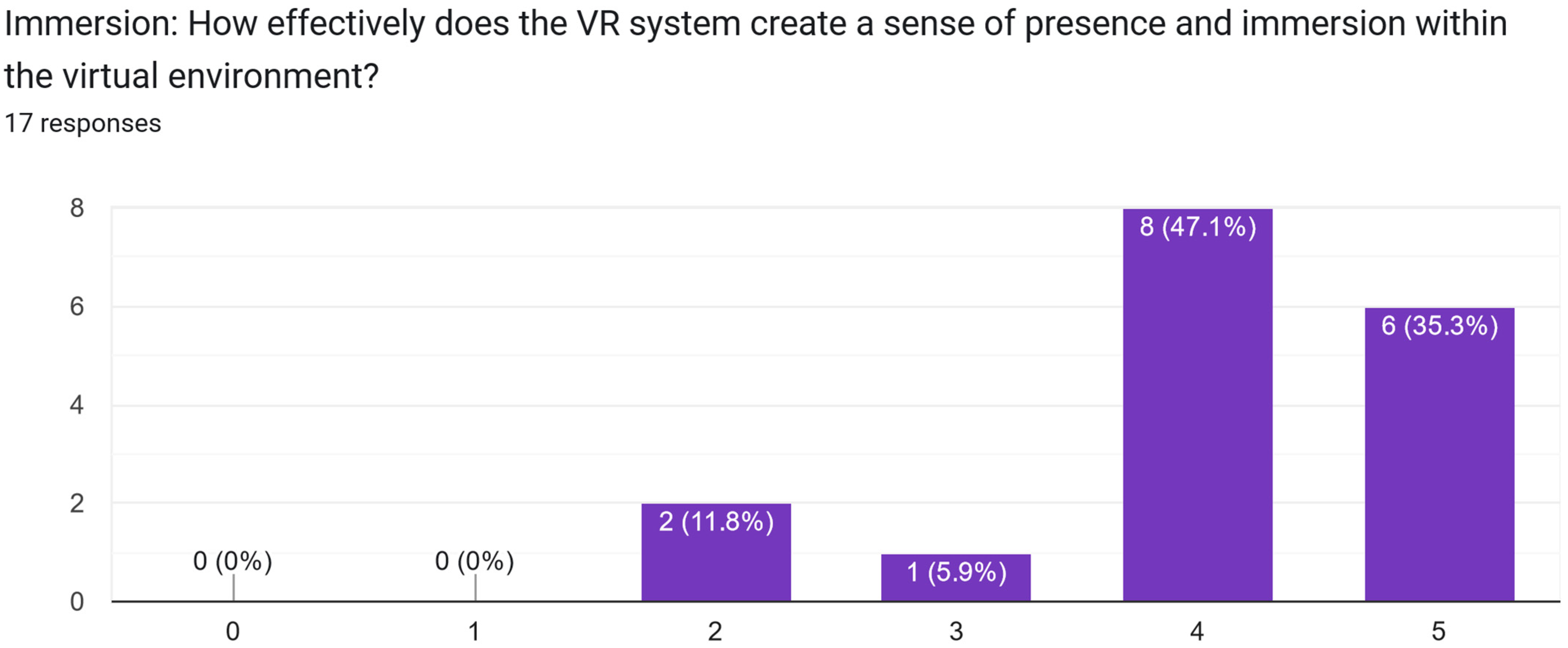

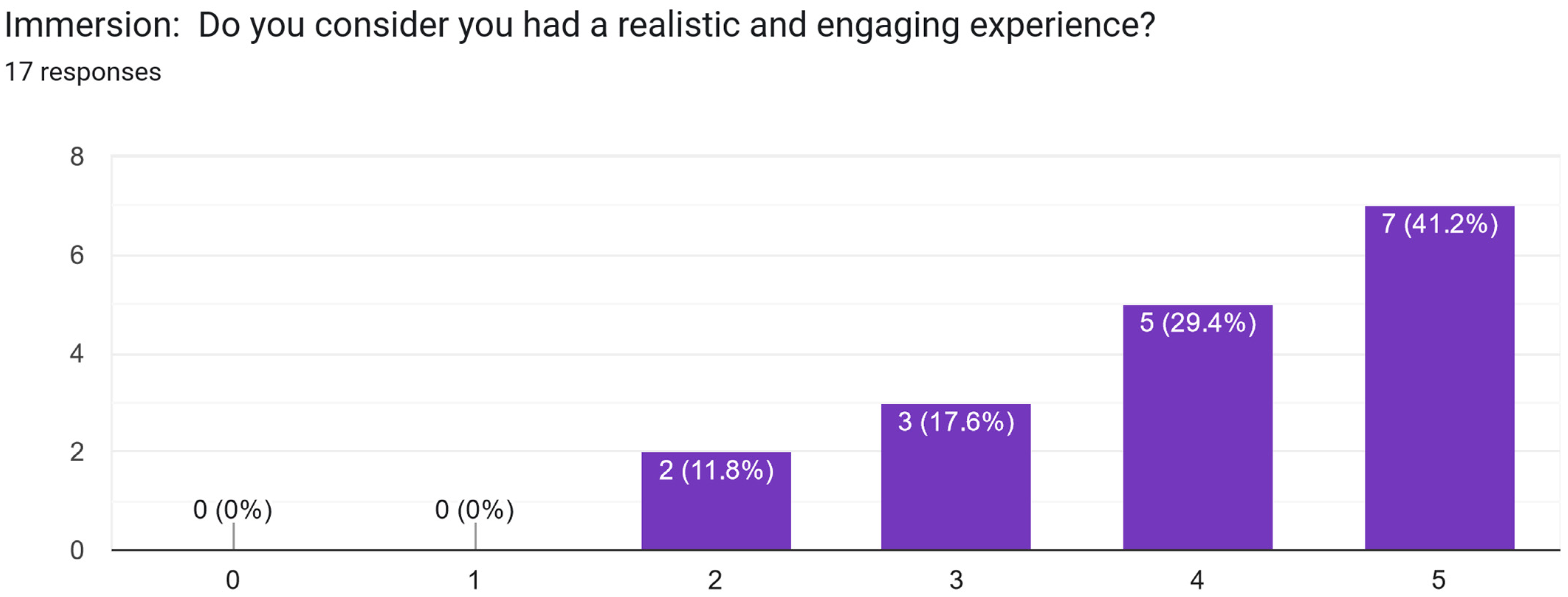

5.1.2. Results of the Feedback Questionnaire

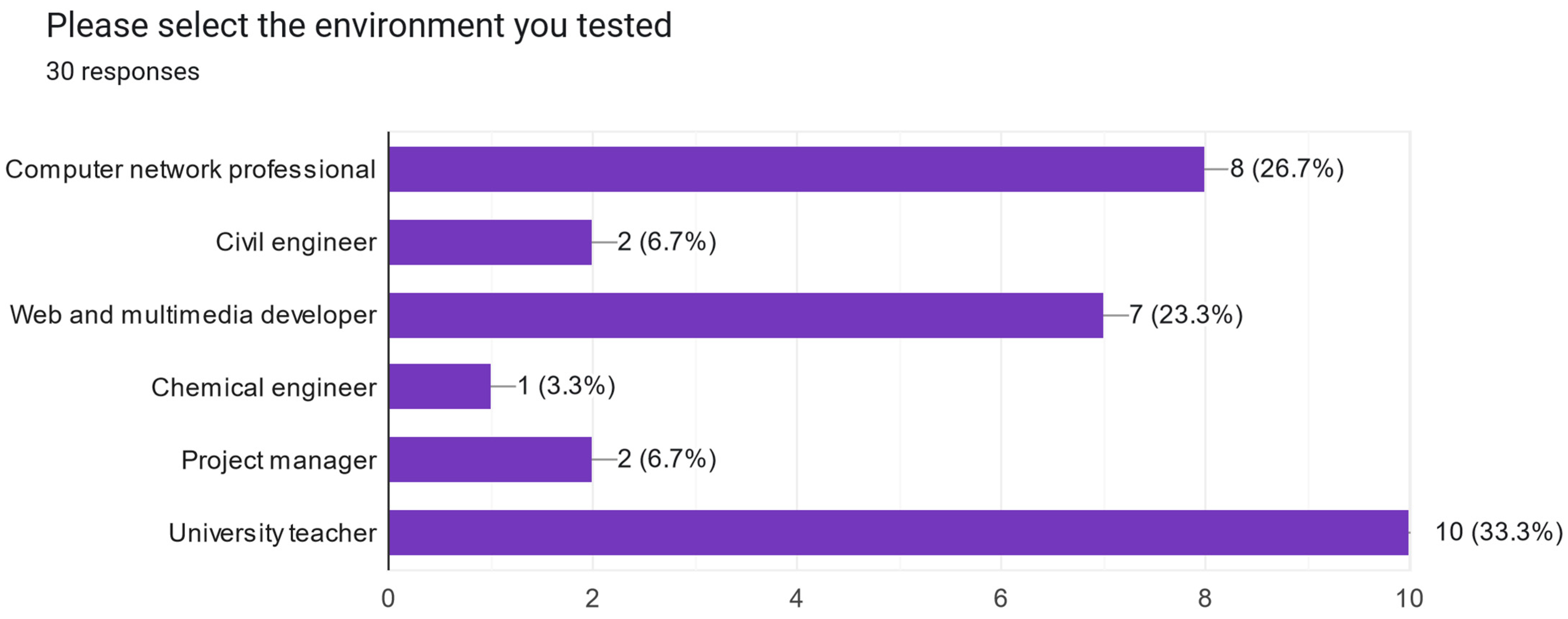

- Scenario chosen for testing: the most tested scenario was that of the university professor (33.3%); the least tested was that of the chemical engineer (3.3%).

- Future prospects regarding the tested profession: over 80% of responding students believed that they could practice the tested profession in the future.

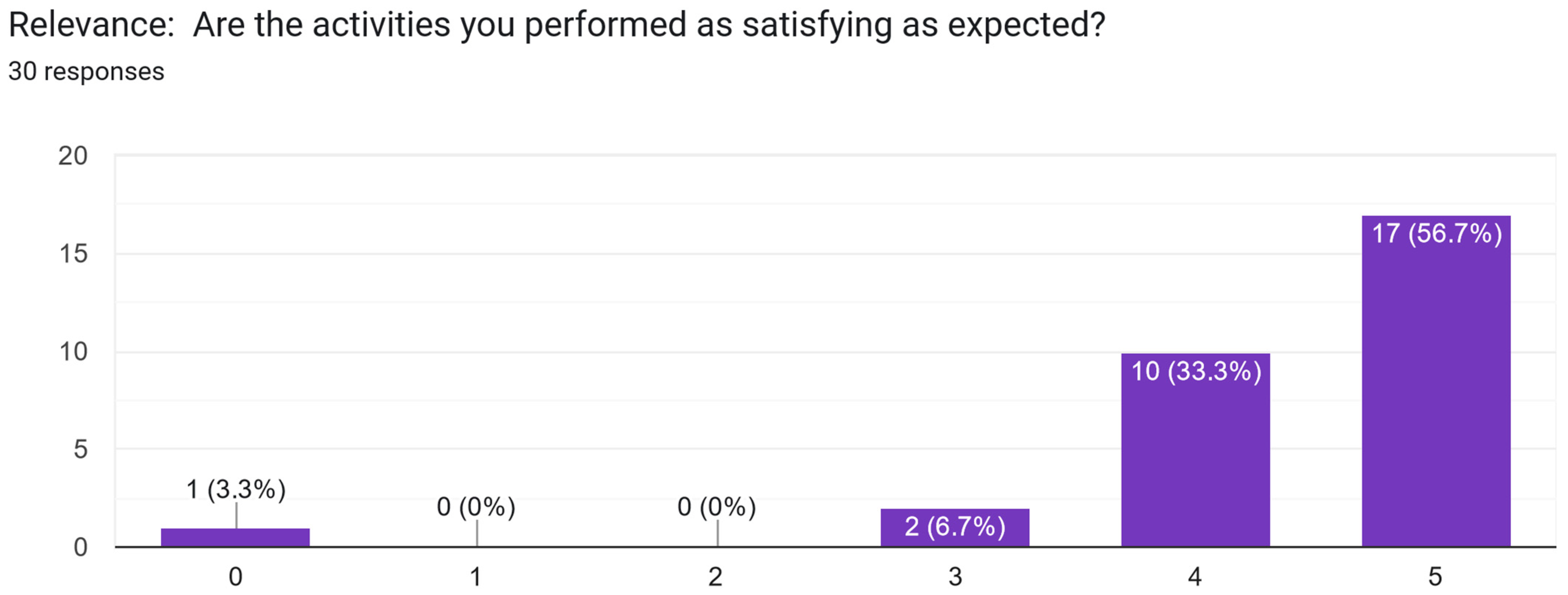

- Satisfaction with the activities carried out in the scenario chosen for testing: 90% of respondents found these experiences satisfactory or very satisfactory.

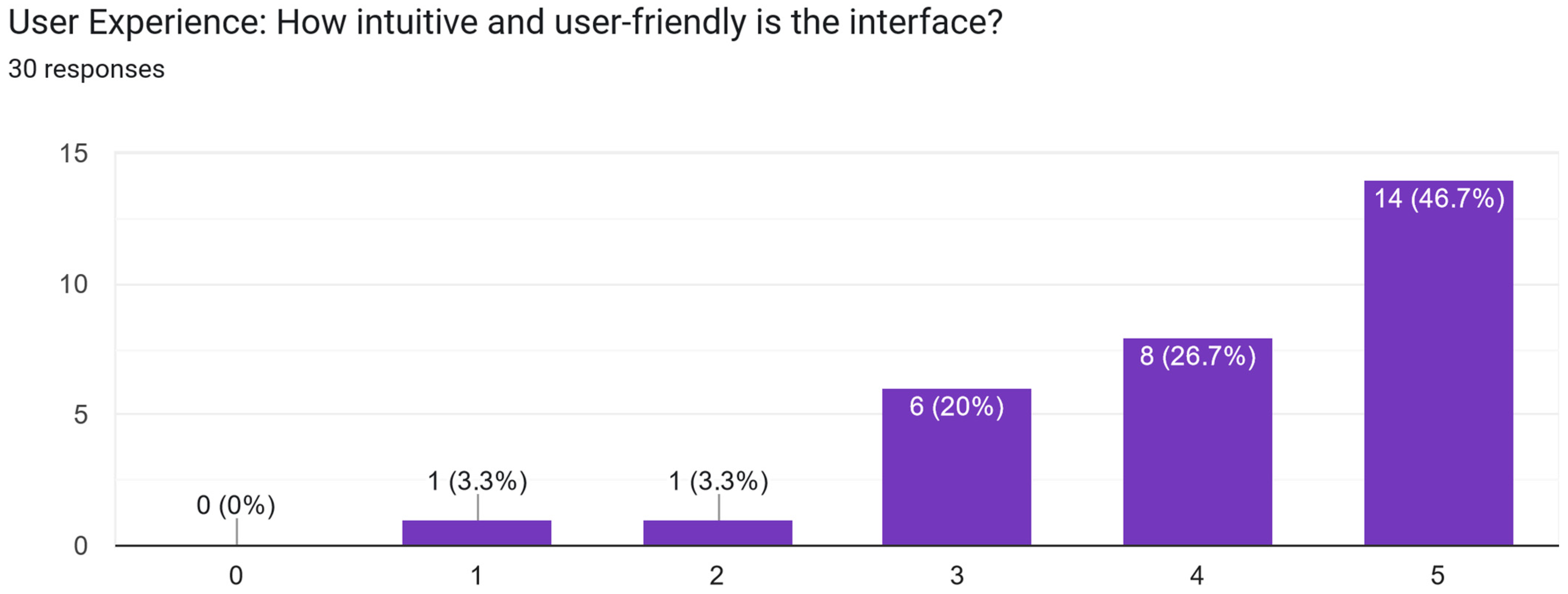

- Interface perceived as intuitive and easy to use: over 95% of respondents believed that the interface was intuitive and easy to use.

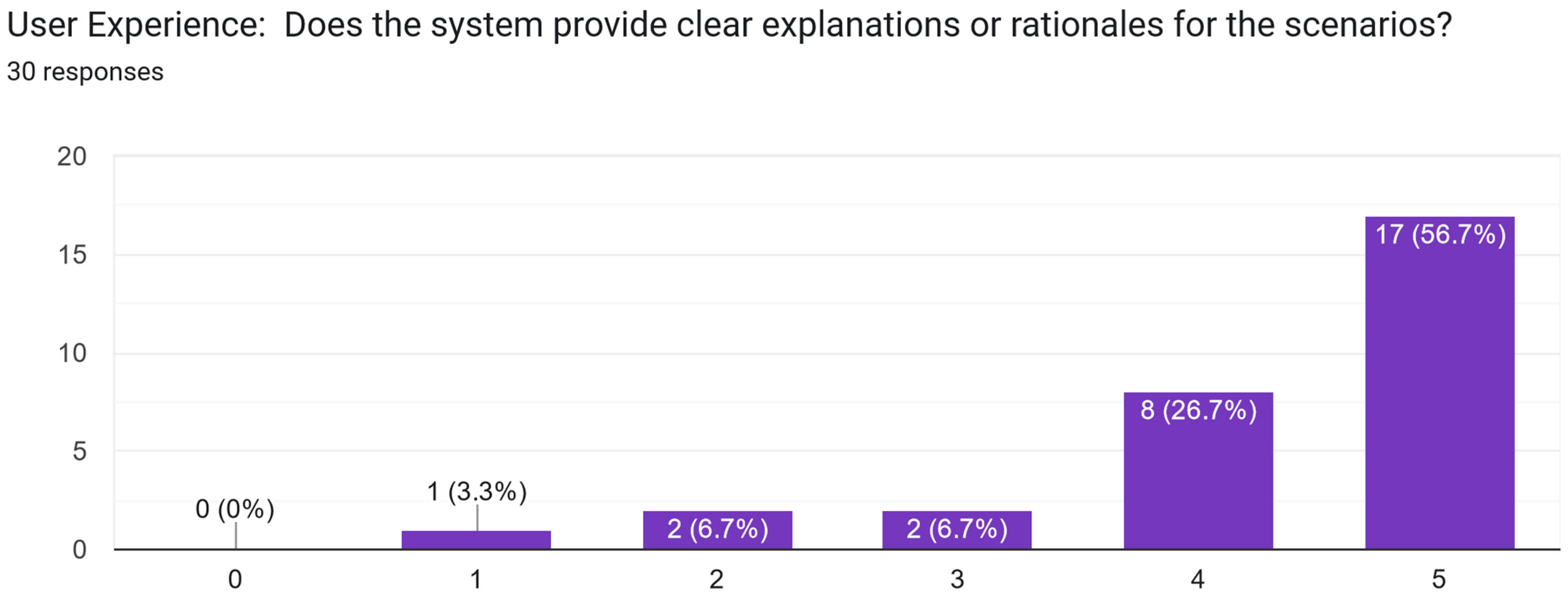

- Clarity of the system’s reasoning and explanations: 90% of respondents believed they had received clear and sufficient explanations from the system.

- Ease of use of the interface (navigating menus, interacting with objects, accessing functionalities): 90% of respondents considered these features easy to use.

- Clarity and immersiveness of the visual part of the system: over 93% of respondents rated these qualities positively.

- Perceived realism and immersion: over 73% of respondents considered the experience realistic and immersive.

- Distractions and technical limitations that interrupted VR immersion, as identified by respondents:

- Hardware limitations caused by sensor position.

- Getting accustomed to the virtual environment.

- Getting used to the joystick.

- Dizziness induced by joystick-based movement.

- Consideration of unforeseen situations: adjustment of tone of voice and emotional intelligence.

- Inaccurate placement of objects.

- Difficulty in using the controllers.

- VR headset quality.

- Problems when colliding with objects.

- A suggestion to introduce gravity.

- Responsiveness of the system to commands and user movements: over 90% of the surveyed students rated the system as responsive to their commands and movements.

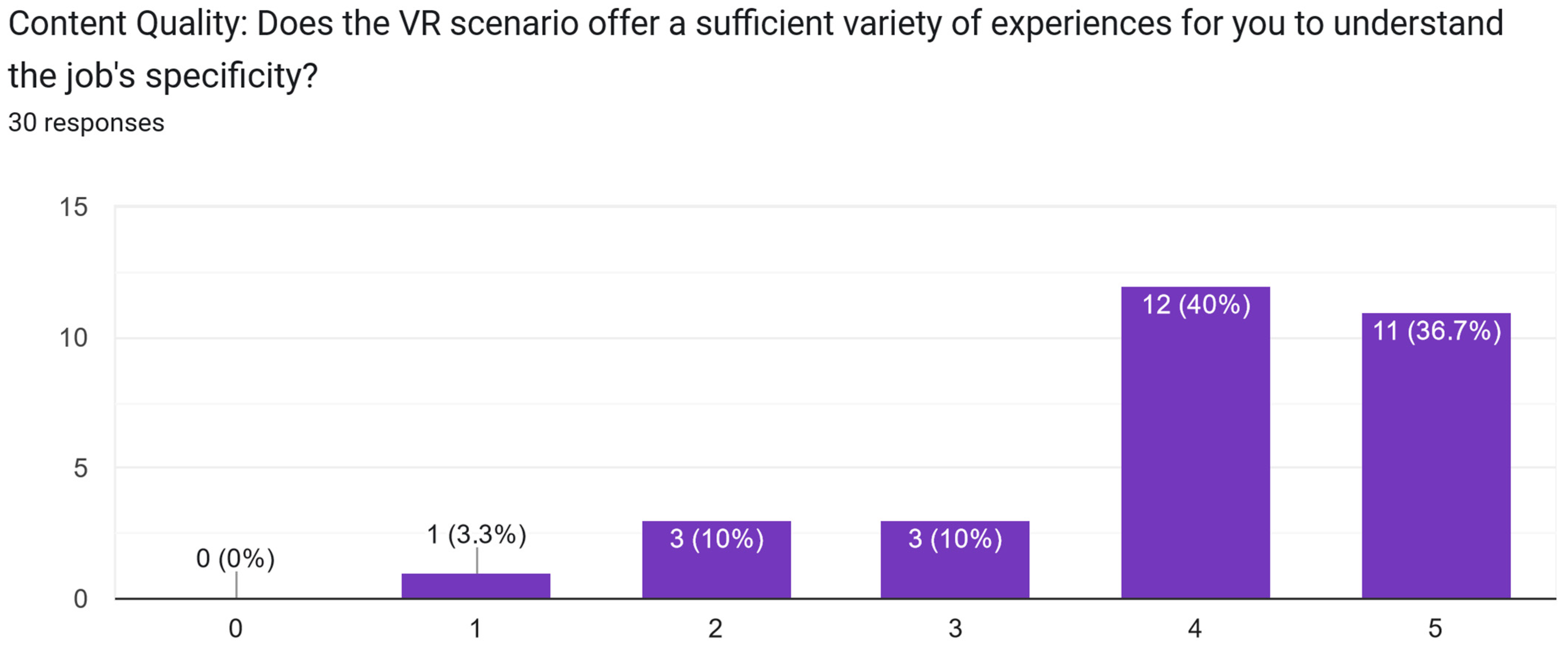

- Variety of experiences to form a clear opinion about the job: over 76% believed they could draw on this experience when choosing a profession in the future.

- Comfort and safety in using VR equipment: 6 of the 12 responses received identified the following types of risk: hitting walls or other objects in the room, difficulty reading text for visually impaired people, limited free space in the test room, motion sickness, pre-existing neurological conditions such as epilepsy, dizziness, and self-injury due to lack of experience with the VR system.

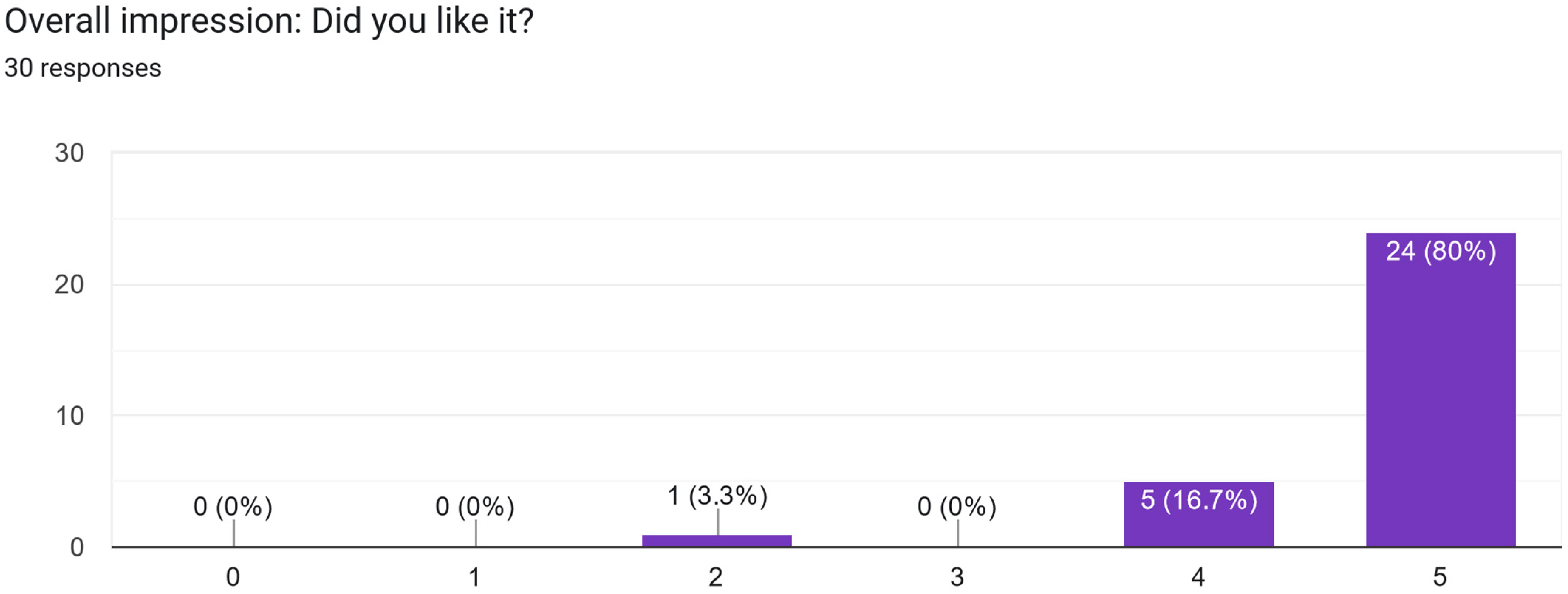

- General impression: over 96% of respondents rated this experience as positive.

5.1.3. Results from the VR Simulation Performance Recording Module

5.1.4. Results from the Final Interview

5.2. Second Round of Experiments

5.2.1. Results of the Introductory Self-Assessment Questionnaire

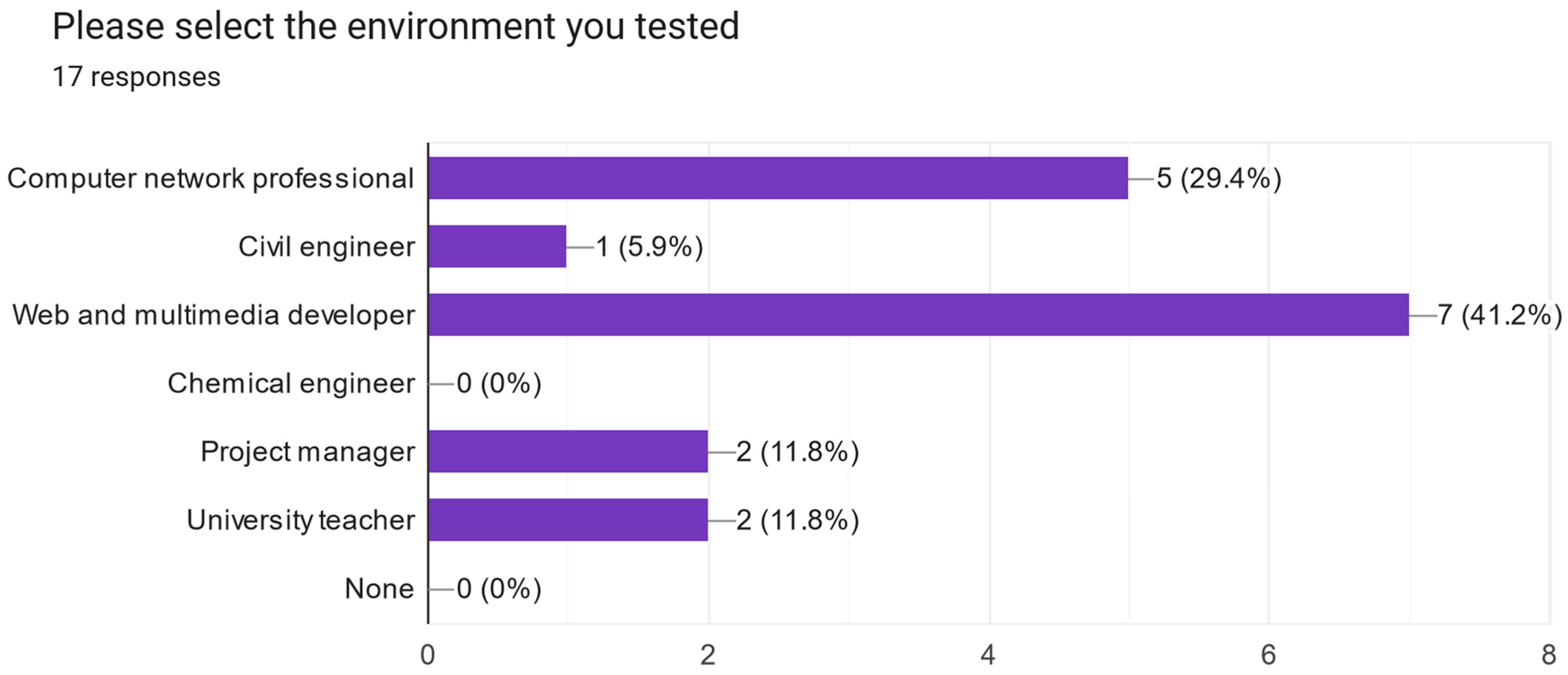

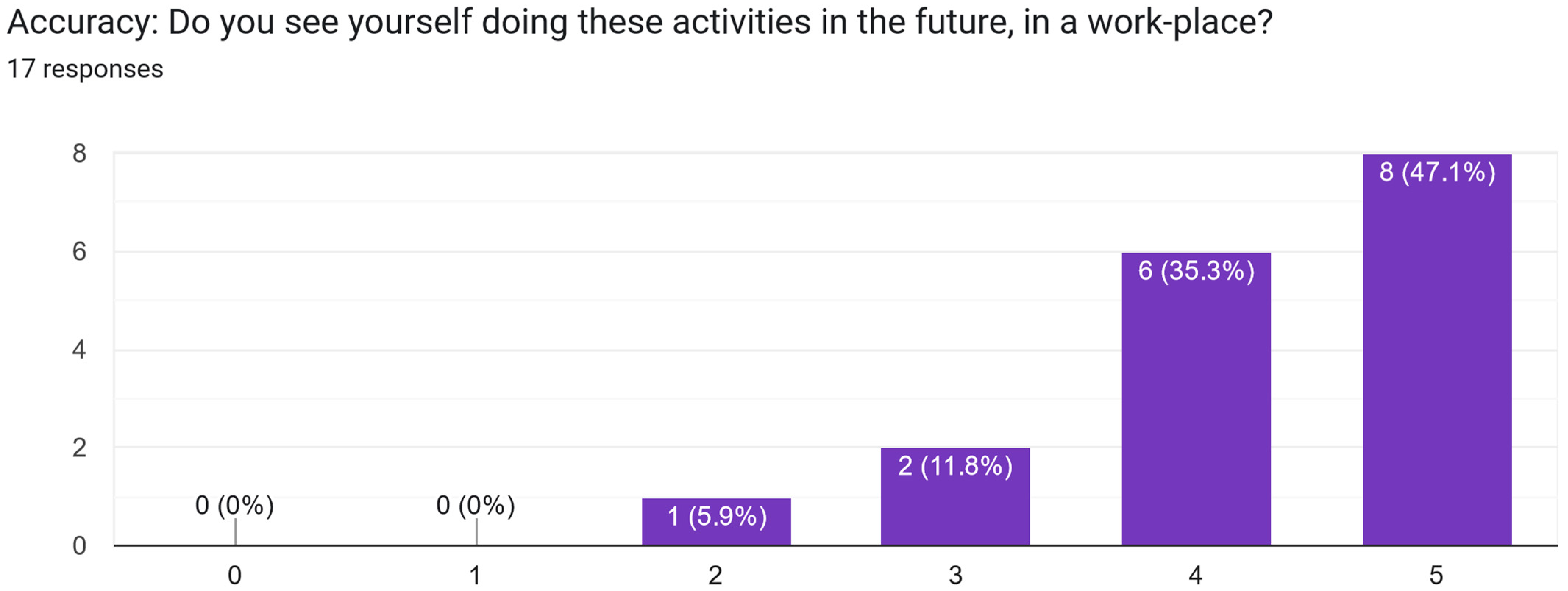

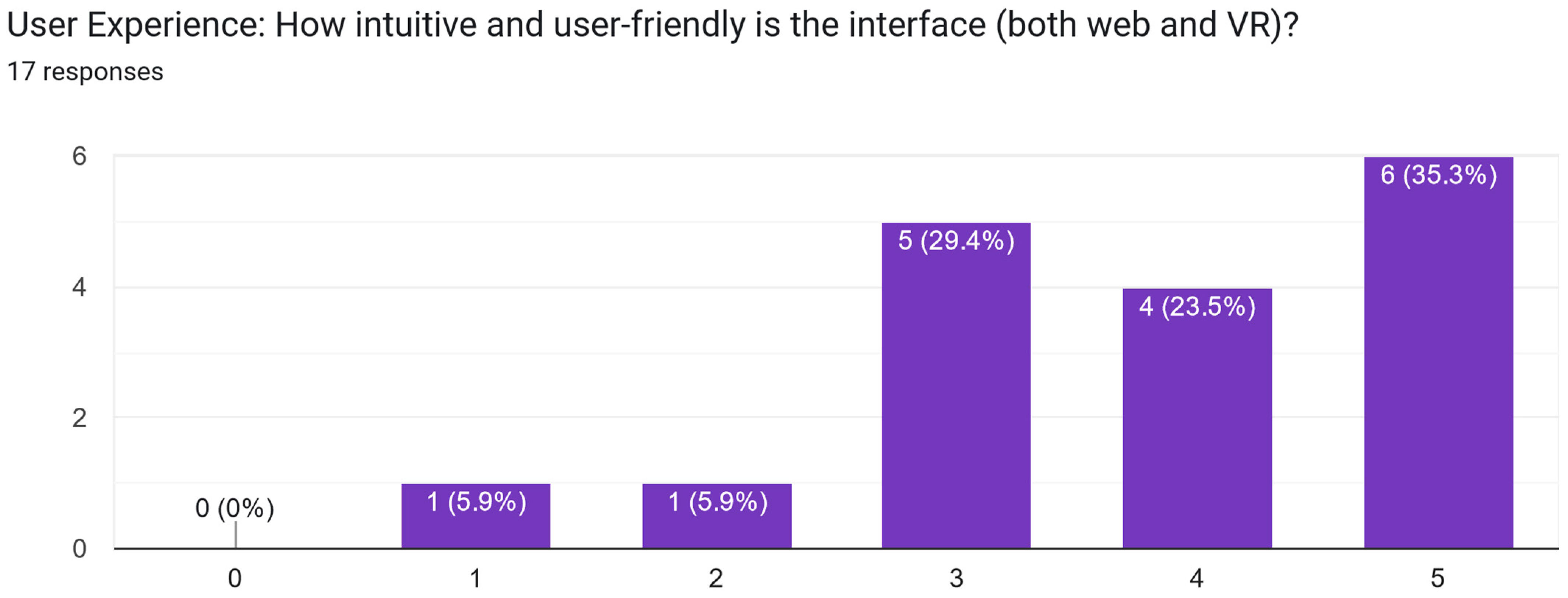

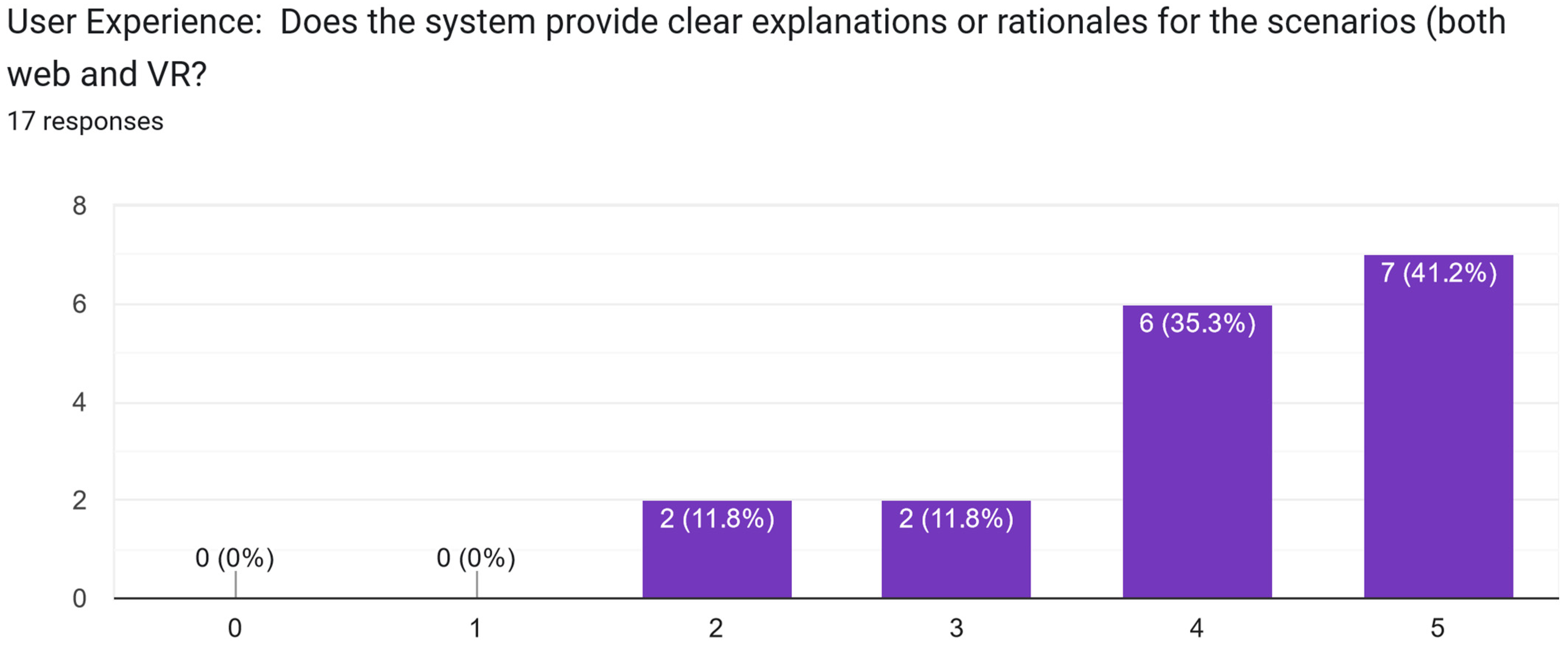

5.2.2. Results of the Feedback Questionnaire

5.2.3. Results from the VR Simulation Performance Recording Module

5.2.4. Results from the Final Interview

6. Discussion

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

Appendix C

Appendix D

| Term | Description |
|---|---|
| Internet Protocol Address (IP) | A unique identifier of a computer’s network interface, used to access the local network or the Internet. An IPv4 address is 32 bits long and consists of four 8-bit numbers, such as 192.255.255.255; IPv4 offers a limited number of addresses. The newer IPv6 uses 128-bit addresses written in hexadecimal and was designed with support for Internet Protocol Security (IPsec). Both types of addresses can be used in a network configuration |
| ping | A utility command used to test the connection between two devices |
| ipconfig | A utility command that displays the currently configured interfaces when run without arguments; arguments can be used to configure the given interfaces |
| Network mask | A 32-bit number that separates the network part of an IP address from the host part. In a local network, IPs should have the same network address to allow communication |
| Routing Information Protocol (RIP) | A dynamic routing protocol that automatically establishes the best route between configured networks, using the number of hops between networks as its metric |
| Switch | A network device used to connect multiple hosts in a Local Area Network (LAN). The devices’ IPs require the same network address |
| Router | A network device used to connect multiple LANs. In this case, the devices can have different network addresses |
| Media Access Control address (MAC) | The physical address of the network interface controller; it is associated with the IP address to correctly locate the device in a local network |
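The hop-count idea behind RIP can be illustrated with a small distance-vector simulation in which routers repeatedly adopt a neighbor’s route whenever it is shorter by hop count. This is a deliberately simplified sketch, not a full RIP implementation (no timers, split horizon, triggered updates, or route withdrawal), and the topology names are hypothetical, loosely modeled on the hard level’s three routers in a line.

```python
INFINITY = 16  # in RIP, a metric of 16 hops means "unreachable"


def rip_converge(connected: dict[str, set[str]], links: dict[str, set[str]]) -> dict:
    """Simplified RIP-style distance-vector exchange, repeated until tables stop changing.

    connected: router -> networks attached directly to it (metric 1 in this sketch)
    links: router -> neighboring routers it exchanges tables with
    Returns router -> {network: (hop_count, next_hop_router, or None if direct)}.
    """
    tables = {r: {net: (1, None) for net in nets} for r, nets in connected.items()}
    changed = True
    while changed:
        changed = False
        for router, neighbors in links.items():
            for neighbor in neighbors:
                # The neighbor advertises its table; each route costs one extra hop via it
                for net, (hops, _) in tables[neighbor].items():
                    candidate = min(hops + 1, INFINITY)
                    best = tables[router].get(net, (INFINITY, None))[0]
                    if candidate < best:
                        tables[router][net] = (candidate, neighbor)
                        changed = True
    return tables


# Hypothetical topology: R1 - R2 - R3 in a line, one LAN per router
connected = {
    "R1": {"LAN1", "link12"},
    "R2": {"LAN2", "link12", "link23"},
    "R3": {"LAN3", "link23"},
}
links = {"R1": {"R2"}, "R2": {"R1", "R3"}, "R3": {"R2"}}
tables = rip_converge(connected, links)
print(tables["R1"]["LAN3"])  # (3, 'R2'): R1 -> R2 -> R3 -> LAN3
```

Because only strictly shorter routes replace existing ones, each router ends up with the minimum hop count to every network, which is the “best route” in RIP’s sense.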

| Term | Description |
|---|---|
| Safety helmet | A helmet used to prevent head injuries caused by objects falling from a height |
| Identification badge | A document for identifying authorized personnel at the workplace |
| Unauthorized items | Objects that are not allowed during working hours (e.g., alcoholic beverages) |
| Occupational safety regulations | Rules that must be followed for the proper and safe conduct of work activities |

| Term | Description |
|---|---|
| Header | The top section of a website that consistently appears on all pages. Usually located above any other content on the site, it contains elements such as the logo, website menu, and other important information |
| Content | The content of a website refers to all elements used to communicate your message on a website. Website content is typically divided into two main categories: web copy or body copy, which refers to written text, and multimedia content, which includes images, videos, and audio |
| Footer | The bottom section of content on a web page. Typically, it contains copyright notices, a link to a privacy policy, site map, logo, contact information, social media icons, and an email signup form |
| Social media icons | Social media icons are buttons that link to the social media pages associated with the website/organization for promotion purposes |

| Term | Description |
|---|---|
| Reagent | “Chemical substance that undergoes a specific reaction in the presence of a certain ion or group of ions” (definition from DexOnline) |
| Blood sugar | The concentration of glucose in the blood |
| Blood sugar levels | Unit of measurement: mg/dL. Low: <65 mg/dL; normal: 65–110 mg/dL; high: >110 mg/dL |
| Analyzer | An instrument that performs chemical analyses |
| Test tube | A glass tube used in the laboratory |
| Pipette | A device equipped with a pump for extracting small quantities (e.g., drops) of liquid |
| Stand | A device used to hold laboratory vessels (e.g., test tubes) in place |
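The thresholds in the blood sugar row map directly onto a three-way classification. This minimal sketch assumes readings are already in mg/dL and treats both ends of the 65–110 mg/dL range as normal, as the table's wording suggests. The function name is hypothetical.

```python
def classify_blood_sugar(mg_per_dl: float) -> str:
    """Classify a blood glucose reading (mg/dL) using the thresholds in the table."""
    if mg_per_dl < 65:
        return "low"
    if mg_per_dl <= 110:  # 65 to 110 inclusive counts as normal
        return "normal"
    return "high"


for reading in (58, 65, 98, 110, 142):
    print(reading, classify_blood_sugar(reading))
```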

| Term | Description |
|---|---|
| WBS (work breakdown structure) | A project management technique for breaking a project down into smaller, more manageable tasks |
| Gantt chart | A project management technique for graphically representing the schedule and duration of project tasks |
| WP (work package) | A structure that groups multiple activities or tasks of the same type; it is one of the main units into which a project is divided |
| Activity | A structure designed to monitor the progress of work packages, resource allocation, and time |
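The WBS, WP, and Gantt chart entries fit together. The WBS splits the project into work packages, each work package groups activities, and the Gantt chart draws each activity as a bar along a time axis. The text-only sketch below follows that structure. It assumes each activity has a known start day and a duration in days. All class names, activity names, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    name: str
    start: int     # start day, counted from 0
    duration: int  # duration in days


@dataclass
class WorkPackage:
    name: str
    activities: list[Activity]


def render_gantt(packages: list[WorkPackage]) -> list[str]:
    """Render a text Gantt chart: one row per activity, grouped by work package."""
    rows = []
    for wp in packages:
        rows.append(wp.name)
        for act in wp.activities:
            # Leading spaces place the bar at its start day; '#' marks each day.
            bar = " " * act.start + "#" * act.duration
            rows.append(f"  {act.name:<12}|{bar}")
    return rows


# Hypothetical two-package WBS for a small web project.
wbs = [
    WorkPackage("WP1 Analysis", [Activity("Requirements", 0, 3), Activity("Design", 3, 2)]),
    WorkPackage("WP2 Development", [Activity("Coding", 5, 4)]),
]
print("\n".join(render_gantt(wbs)))
```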

| Device | HTC Vive Cosmos Elite (HTC Corporation, New Taipei City, Taiwan) | Oculus Rift (Meta Platforms, Menlo Park, CA, USA) | Meta Quest (Meta Platforms, Menlo Park, CA, USA) | Meta Quest 2 (Meta Platforms, Menlo Park, CA, USA) |
|---|---|---|---|---|
| Helmet model |  |  |  |  |
| Controller model |  |  |  |  |
| Resolution | 2880 × 1700 pixels (combined for both eyes) | 2160 × 1200 pixels (combined for both eyes) | 2880 × 1600 pixels (combined for both eyes) | 3664 × 1920 pixels (combined for both eyes) |
| Horizontal field of view | 110 degrees | 87 degrees | 93 degrees | 97 degrees |
| Wireless? | No | No | Yes | Yes |
| Tracking type | Outside in (IR sensors) | Outside in (IR sensors) | Inside out | Inside out |
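The resolution row reports combined values for both eyes. If each eye receives half of the combined width at full height (a side-by-side layout, which is an assumption here), the per-eye figures follow by simple division. The short sketch below applies this to the four headsets in the table. The dictionary and function names are hypothetical.

```python
# Combined (both-eye) resolutions from the device table, as (width, height) in pixels.
HEADSETS = {
    "HTC Vive Cosmos Elite": (2880, 1700),
    "Oculus Rift": (2160, 1200),
    "Meta Quest": (2880, 1600),
    "Meta Quest 2": (3664, 1920),
}


def per_eye(width: int, height: int) -> tuple[int, int]:
    """Split a side-by-side combined resolution into the resolution for one eye."""
    return width // 2, height


for name, (w, h) in HEADSETS.items():
    ew, eh = per_eye(w, h)
    print(f"{name}: {ew} x {eh} per eye, {ew * eh:,} pixels per eye")
```

For example, the Meta Quest 2 figure of 3664 × 1920 corresponds to 1832 × 1920 pixels per eye.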

| Stage ID | Goal | Activities | Estimated Results | Duration |

|---|---|---|---|---|

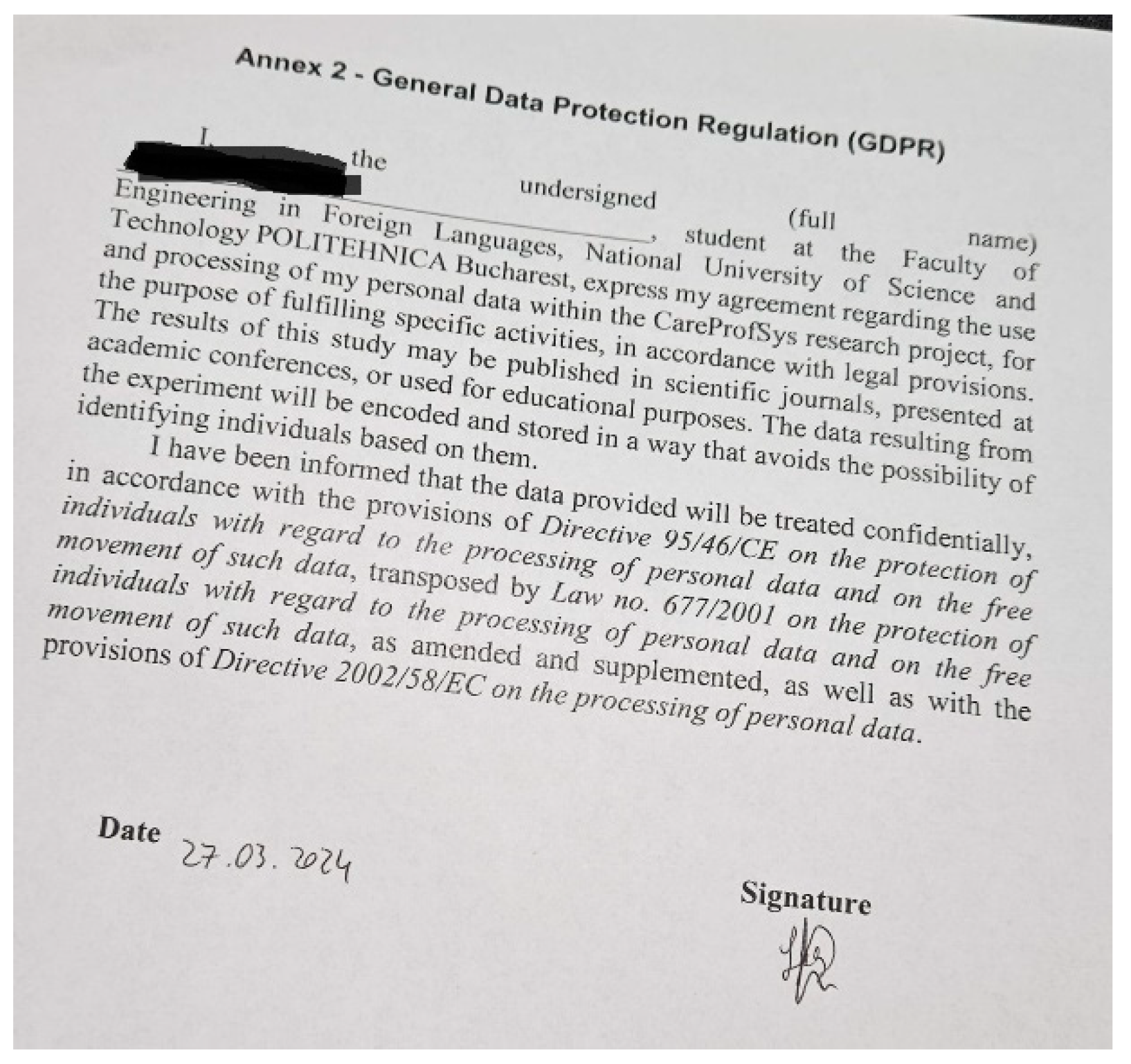

| Stage 1 | Experiment presentation and users’ consent | - Explanation of procedure and duration of experiment - Completion of informed consent form to take part in the experiment (available in Supplementary Materials) - GDPR Agreement Supplement (available in Supplementary Materials) - Filling in the introduction, self-assessment form (available in Supplementary Materials) | - User feedback - Informed consent, GDPR form | 5 min |

| Stage 2 | Testing the recommendation module | - Presentation of the recommender module and its functionalities - CV upload, social media profile, and feature exploration - Getting a career recommendation | - Career recommendations | 5 min |

| Stage 3 | VR Overview and Accommodation | (Only for users who have received one of the 6 career recommendations (civil engineer, chemical engineer, teacher, project manager, web systems designer, networking specialist) or who have evaluated themselves as having affinities towards one of the 6 jobs available in VR) - Read document to obtain basic theoretical knowledge for the recommended profession (available in Supplementary Materials) - System and hardware VR presentation - Helmet adjustment for users - Presentation of VR input for movement, action, and functionalities - Software configuration according to user profile (unique ID) | - Unique ID, tested scene | 5–10 min (extra time may be needed for people with no previous experience using VR) |

| Stage 4 | Explore VR scenarios | (Only for users who have received one of the 6 career recommendations (civil engineer, chemical engineer, teacher, project manager, web systems designer, networking specialist) or who have evaluated themselves as having affinities towards one of the 6 jobs available in VR) - The user must accomplish as many tasks as possible in the given time - All tests will start with the easiest difficulty level; if a level is successfully completed, the user will then move on to the next difficulty level (medium, then hard) | - Score according to the logic of each game - Mistakes (errors) in game logic - Real-time feedback to identify bugs and suggestions for improvements | 10 min |

| Stage 5 | Final feedback | - Filling in the questionnaire at the end of the tests, focused on usefulness and interview applied following the questionnaire. | - User feedback | 5–10 min |
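Two parts of the protocol can be made concrete. First, summing the stage durations estimates how long a session lasts. Second, the Stage 4 rule (start easy, move up after each successful level) is a small state transition. The Python sketch below covers both; the function names are hypothetical. The table does not say what follows a failed level, so keeping the user on the same level is an assumption. The upper bound is a plan, not a cap, because Stage 3 may take longer for users new to VR.

```python
from typing import Optional

# Duration ranges (minutes) per stage, taken from the table. Stages 3 and 4 apply
# only to users whose recommended or self-assessed job is available in VR.
STAGES = {
    "Stage 1": (5, 5),
    "Stage 2": (5, 5),
    "Stage 3": (5, 10),
    "Stage 4": (10, 10),
    "Stage 5": (5, 10),
}
LEVELS = ["easy", "medium", "hard"]


def session_minutes(with_vr: bool) -> tuple[int, int]:
    """Return the (minimum, maximum) planned session length in minutes."""
    skipped = set() if with_vr else {"Stage 3", "Stage 4"}
    kept = [rng for stage, rng in STAGES.items() if stage not in skipped]
    return sum(lo for lo, _ in kept), sum(hi for _, hi in kept)


def next_level(current: str, completed: bool) -> Optional[str]:
    """Return the next difficulty level to play, or None when nothing is left."""
    idx = LEVELS.index(current)
    if not completed:
        return current  # assumption: the table does not specify what follows a failure
    if idx + 1 < len(LEVELS):
        return LEVELS[idx + 1]
    return None  # hard level completed: no higher level to unlock


print(session_minutes(with_vr=True))   # planned range with the VR stages
print(session_minutes(with_vr=False))  # planned range without the VR stages
```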

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dascalu, M.-I.; Stanica, I.-C.; Bratosin, I.-A.; Uta, B.-I.; Bodea, C.-N. Virtual Reality for Career Development and Exploration: The CareProfSys Profiler System Case. Electronics 2024, 13, 2629. https://doi.org/10.3390/electronics13132629
