1. Introduction

Cloud computing has grown in popularity in recent years because of its capacity to provide a wide range of efficient internet-based services [1]. It has changed how companies handle their computing needs by offering flexible and scalable solutions that meet the increasing demands of modern applications [2]. Although cloud environments allocate resources and workloads efficiently, certain challenges arise. Load balancing is an essential technique that spreads incoming tasks or requests across many servers [3], which supports effective resource management, better system performance, and consistent reliability. The intricacies of load balancing in cloud environments stem from many factors, including dynamic workload fluctuations, resource diversity, evolving scalability needs, and varying application demands [4]. Static load-balancing methods, such as the round-robin and least-connection algorithms, cannot handle these issues: they fail to respond to changing conditions, to allocate resources effectively, and to meet the requirements of different applications [5]. As a result, resource usage is suboptimal and system performance degrades. To address these difficulties, researchers and practitioners have concentrated on meta-heuristic algorithms inspired by natural processes to achieve load balancing in cloud systems. These algorithms owe their versatility to the natural phenomena they mimic, as in swarm intelligence, genetic algorithms, and ant colony optimization, which is modeled on how ants search for food. This allows them to allocate resources as the workload changes and to share workloads as fairly as possible [6].

This paper comprehensively evaluates bio-inspired meta-heuristic algorithms for solving the cloud load-balancing problem. It discusses the observed hurdles, assesses the shortcomings of conventional approaches, and outlines the potential benefits of nature-inspired algorithms. These benefits include improved resource utilization, increased system capacity, and flexibility to meet various application requirements. The study elucidates the defining traits, merits, and relevance of these algorithms in cloud environments. Overall, this paper aims to highlight the value of nature-inspired meta-heuristic algorithms in resolving the cloud load-balancing problem by answering the following research questions.

RQ1: What is the purpose and significance of load balancing in cloud computing?

RQ2: How can meta-heuristic algorithms reformulate issues encountered in cloud computing?

RQ3: How do meta-heuristic algorithms facilitate cloud load balancing?

RQ4: What are the present and potential issues regarding cloud load balancing?

Load balancing is crucial in cloud settings because it directly affects the dependability, scalability, and efficiency of cloud services. An effective load-balancing strategy enables optimal resource use, reducing processing time and minimizing the risk of over-utilization and system breakdowns. The dynamic, decentralized, and distributed nature of cloud systems makes effective load balancing difficult to achieve, yet it is essential for maintaining performance and customer satisfaction. Although several studies and reviews of cloud load balancing exist, our study differs from them in the following respects.

Nature-inspired meta-heuristic focus: Unlike other research that primarily examines traditional load-balancing solutions, this study delves further into nature-inspired meta-heuristic algorithms. It examines their benefits, distinctive characteristics, and present use in cloud computing, providing a fresh viewpoint.

Comparative performance evaluation: Our approach involves surveying current meta-heuristic algorithms, conducting a thorough study, and comparing their performance using actual data obtained from case studies and experiments. This technique allows us to determine which algorithms are most suited for certain cloud resource load-balancing situations we have established.

Integration of heuristic initial solutions: Our study emphasizes the significance of using typical heuristic methods to provide initial solutions for meta-heuristics to enhance the overall optimization process. This hybrid technique has received little attention in the existing literature and represents a novel addition to the discipline.

The remainder of this paper is organized as follows.

Section 2 thoroughly analyzes the challenges related to load balancing in cloud systems, which primarily consist of dynamic workloads, resource heterogeneity, dynamic elasticity, and changes in application needs.

Section 3 offers a comprehensive introduction to bio-inspired load-balancing methods.

Section 4 provides the empirical results of our research.

Section 5 outlines potential avenues for further study.

Finally, Section 6 presents the conclusions.

2. Background

This section lays the foundation by introducing fundamental concepts and critical terms in cloud computing, load balancing within cloud environments, and meta-heuristic algorithms. We begin by outlining the essential characteristics of cloud computing, then exploring the pivotal role that load balancing assumes within this domain. Additionally, we delve into the significance and application of meta-heuristic algorithms in cloud load balancing.

2.1. Cloud Computing Characteristics

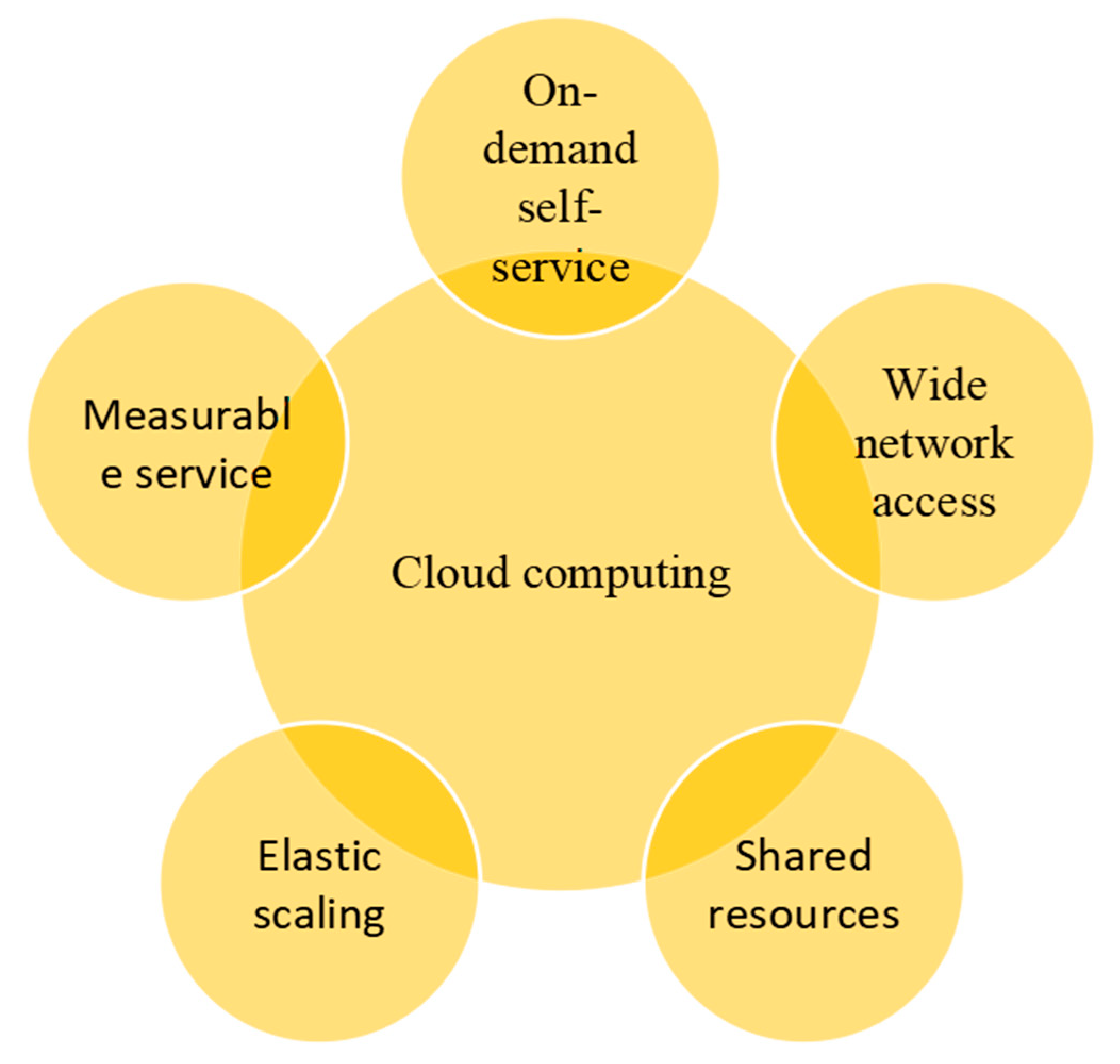

Cloud computing represents an adaptable and convenient approach characterized by its on-demand availability, expansibility, cost-effectiveness, virtualization, and constant accessibility. Renowned for its prowess in parallel computing, cloud computing offers a spectrum of services, including virtualized resources, metered resource consumption, on-demand access to computing resources, dynamic and elastic scalability, and pervasive computing that can be effortlessly provisioned and released. In this context, cloud resources and services encounter substantial uncertainty during provisioning. This uncertainty can manifest in diverse facets of storage, communication, and computational processes. To adeptly navigate this uncertainty, existing computing models can be tailored to accommodate this evolution, and novel resource management strategies can be devised. Managing the intricate infrastructure of cloud systems poses a formidable challenge. Essential issues encompass cost efficiency, performance consistency, Quality of Service (QoS), security, and reliability. Five key attributes characterize cloud computing: measurable service, elastic scaling, shared resources, wide network access, and on-demand self-service, collectively defining its transformative influence on contemporary computational paradigms (Figure 1) [7].

Measurable service: Cloud usage is meticulously measured and invoiced in alignment with consumption, fostering transparency and economical usage.

Elastic scaling: Cloud resources can be scaled up or down based on demand, guaranteeing optimal performance and responsiveness while accommodating varying workloads.

Shared resources: Cloud providers aggregate resources to cater to multiple users, facilitating efficient resource allocation and utilization across a diverse user community.

Wide network access: Cloud services are conveniently available through ubiquitous network connections, enabling users to engage with resources and applications from various devices and locations.

On-demand self-service: Cloud consumers can independently access and employ computing resources, such as processing capabilities and storage, without human involvement as provided by service providers via the Internet.

2.2. Role of Load Balancing in Cloud Computing

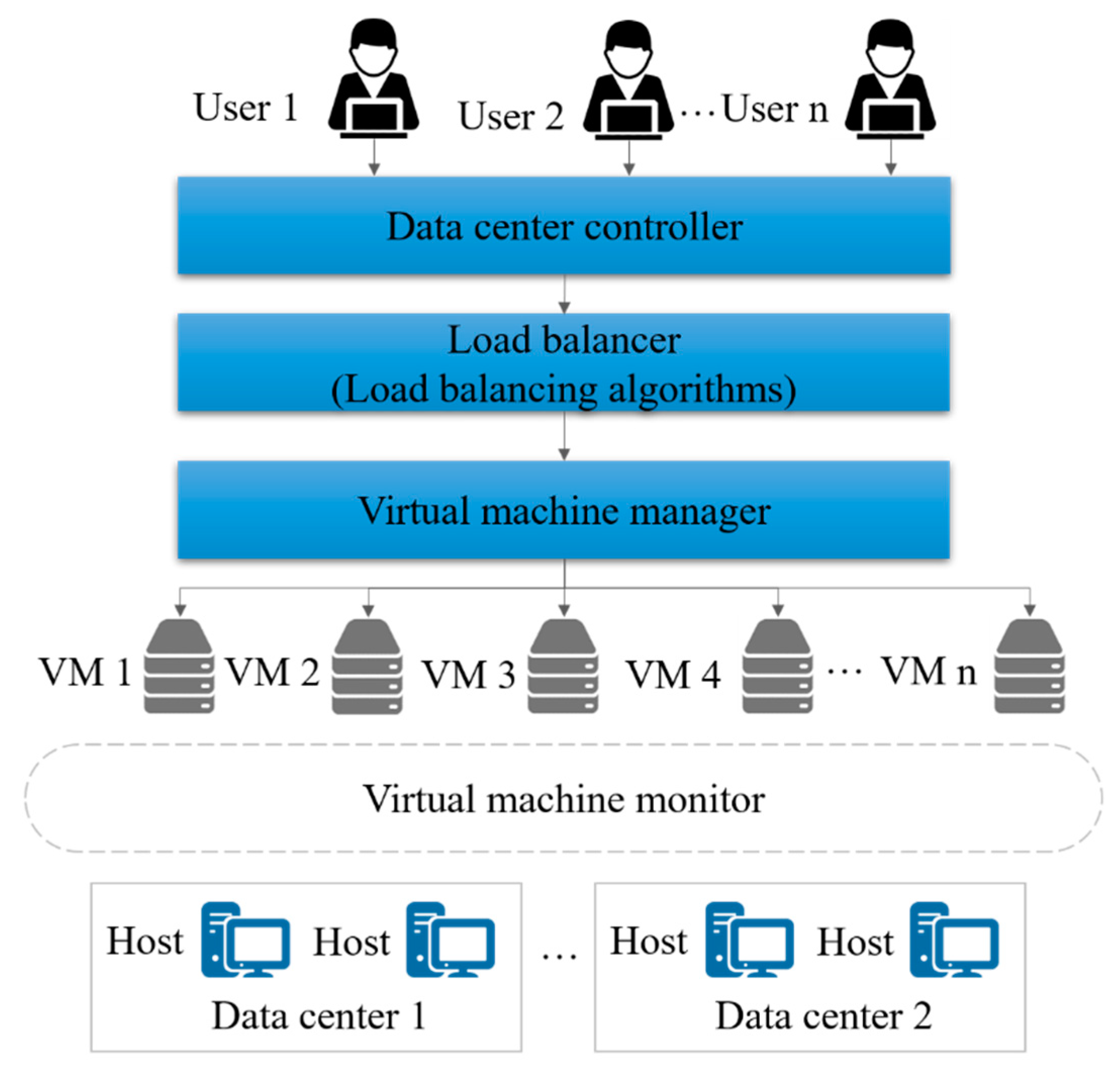

Figure 2 illustrates the load-balancing model, in which the load balancer is a pivotal component. The load balancer receives users’ requests and applies load-balancing strategies to distribute them equitably among Virtual Machines (VMs), determining the most suitable VM to serve each incoming request. Concurrently, the data center controller is responsible for task management, including submitting tasks for load balancing. The load balancer, in turn, employs load-balancing algorithms to allocate tasks to VMs that can handle them. Furthermore, the VM manager oversees the VMs themselves. Virtualization is a dominant technology within cloud computing, with the primary objective of sharing valuable hardware resources among VMs. VMs are software implementations of computers that run both operating systems and applications. They process requests submitted at random by a global user base, so the assignment of requests to VMs is of paramount significance. A pivotal concern arises when some VMs become excessively burdened while others remain idle or have minimal tasks. Such an imbalanced workload distribution can degrade QoS, and diminished QoS can cause dissatisfaction, potentially prompting users to leave the system for good.

The role of a hypervisor or VM Monitor (VMM) becomes pivotal in creating and managing VMs. The VMM facilitates essential operations that include multiplexing, storage, provision, and migration. These operations stand as integral requisites for effective load balancing. Pourghebleh and Hayyolalam [3] emphasize the need for load balancing to address two key functions: task scheduling and resource allocation. The convergence of these processes leads to increased resource utilization, high resource availability, energy conservation, reduced resource utilization costs, preservation of cloud computing’s elasticity, and reduced carbon emissions. Various metrics have been suggested for evaluating and directing load-balancing algorithms. These metrics offer valuable insights into load-balancing operation, productivity, and efficacy within a computing system. These metrics are summarized as follows:

Throughput: Throughput quantifies the rate at which processes or tasks are completed within the system over time. It serves as a measure of the system’s processing capacity and efficiency [8].

Makespan: Makespan is the latest completion time among all allocated tasks. It denotes the total time needed to complete all tasks and provides insight into system efficiency [9].

Response time: Response time captures the overall duration required to fulfill a given task, encompassing processing, communication, and queueing delays. It reflects the user experience regarding task completion speed [10].

Scalability: Scalability evaluates an algorithm’s ability to uniformly balance the workload across the system as the number of nodes increases. A highly scalable algorithm can effectively manage load distribution regardless of node count [11].

Migration time: Migration time is the time taken to move tasks from overloaded hosts to under-loaded ones. It impacts system responsiveness and resource utilization [12].

Fault tolerance: Fault tolerance assesses an algorithm’s capability to maintain load balancing under node or link failures. It ensures system stability and performance during challenging scenarios [13].

Imbalance degree: The degree of imbalance measures the disparity in workload distribution among VMs or nodes. A balanced workload allocation contributes to optimal system performance [14].

Carbon emission: Carbon emission measures the volume of carbon emitted by system resources. Load balancing is crucial in mitigating carbon emissions by redistributing loads and optimizing resource utilization [15].

Energy consumption: Energy consumption reflects the energy consumed throughout the network. Load balancing contributes to energy conservation by preventing overheating and optimizing resource usage [16].

Performance: Performance evaluates the overall efficiency and effectiveness of the system resulting from the use of a load-balancing approach. It considers various factors, including throughput, response time, and scalability [17].
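
As an illustration of how some of these metrics can be computed for a given task-to-VM assignment, the following minimal sketch evaluates makespan, throughput, and imbalance degree. The task lengths, VM speeds, and function names are hypothetical, and the imbalance formula (maximum minus minimum VM time, divided by the mean) is one commonly used definition that we assume here, not one fixed by the metrics above.

```python
from statistics import mean

def vm_finish_times(assignment, task_lengths, vm_speeds):
    """Return the total busy time of each VM when task i runs on VM assignment[i]."""
    finish = [0.0] * len(vm_speeds)
    for task, vm in enumerate(assignment):
        finish[vm] += task_lengths[task] / vm_speeds[vm]
    return finish

def makespan(finish):
    """Latest completion time over all VMs."""
    return max(finish)

def throughput(n_tasks, finish):
    """Tasks completed per unit of time over the whole schedule."""
    return n_tasks / makespan(finish)

def imbalance_degree(finish):
    """Assumed definition: (T_max - T_min) / T_avg over the VM busy times."""
    return (max(finish) - min(finish)) / mean(finish)

# Hypothetical workload: lengths in million instructions, speeds in MIPS.
task_lengths = [400, 200, 600, 300]
vm_speeds = [100, 200]
assignment = [0, 1, 1, 0]          # task index -> VM index

finish = vm_finish_times(assignment, task_lengths, vm_speeds)
print(finish, makespan(finish), throughput(len(task_lengths), finish),
      imbalance_degree(finish))
```

In this toy case the slower VM finishes last, so moving a task from it to the faster VM would lower both the makespan and the imbalance degree.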

2.3. Load-Balancing Challenges

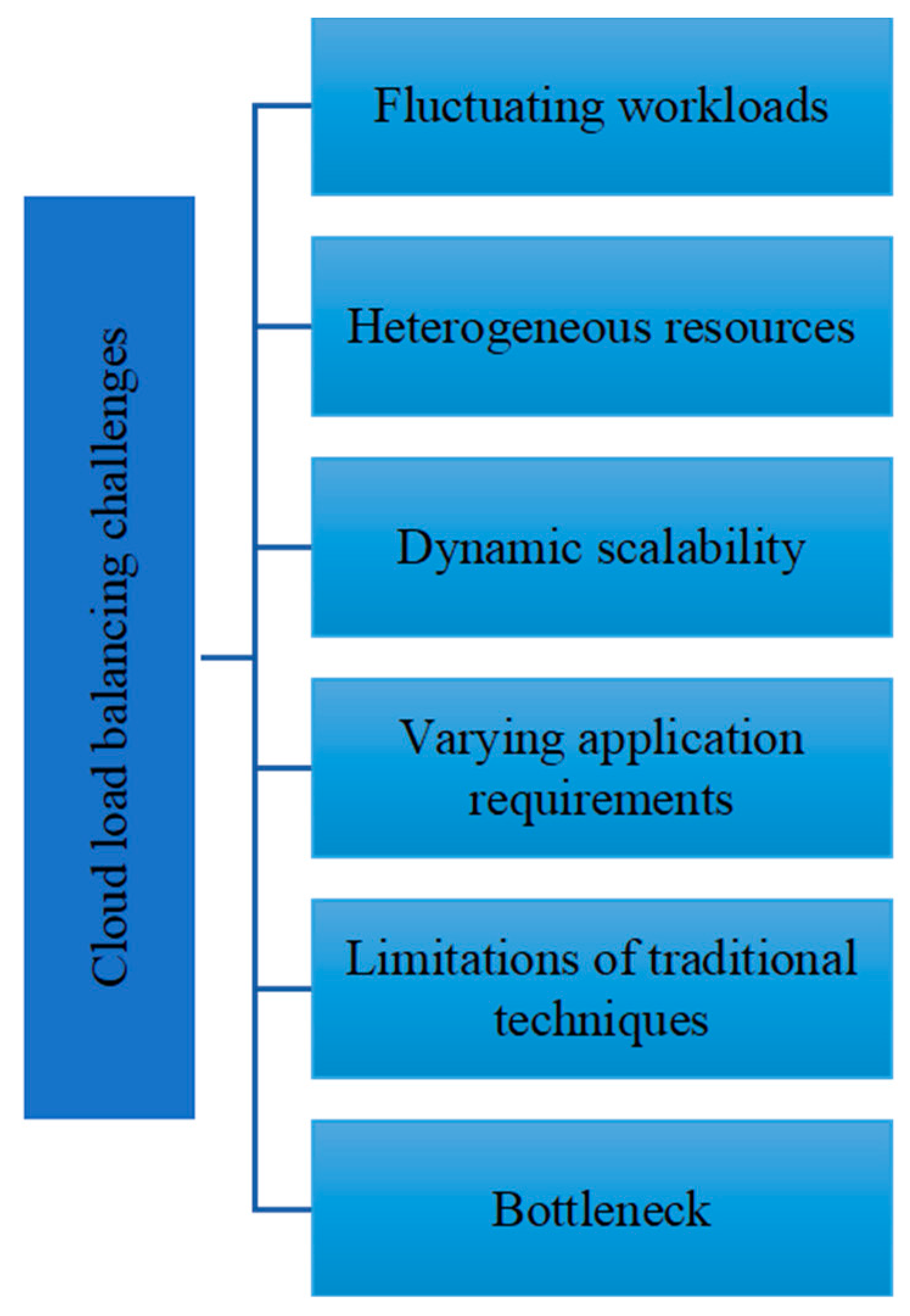

As shown in Figure 3, load balancing presents several challenges, each demanding innovative solutions to ensure efficient and effective workload distribution in cloud environments. These challenges encompass:

Fluctuating workloads: Cloud ecosystems encounter dynamic workload variations driven by diurnal patterns, seasonal demands, and abrupt surges in user engagement. Conventional load-balancing methods, which rely on static allocation strategies, struggle to accommodate these shifts. Consequently, specific servers may experience overload while others remain underutilized, resulting in suboptimal resource utilization and longer response times. Nature-inspired meta-heuristic algorithms offer a solution by dynamically adapting resource allocation in real time to prevailing workload conditions [18].

Heterogeneous resources: Cloud environments encompass a mix of resources, spanning diverse server types, storage devices, and networking components. These resources differ in their capabilities, performance attributes, and availability. Balancing workloads across this heterogeneity while accounting for varying capacities and performance levels is a formidable task. Conventional load-balancing approaches often treat all resources uniformly, yielding subpar resource allocation outcomes [19].

Dynamic scalability: A cardinal trait of cloud computing is dynamic scalability, which allows resources to scale in proportion to demand fluctuations. Load-balancing algorithms must handle this dynamic resource provisioning to ensure optimized performance and judicious resource usage. Traditional load-balancing techniques often scale resources suboptimally, which may lead to underutilization or overprovisioning. Nature-inspired meta-heuristic algorithms perform well in this context, adapting to evolving demands and recalibrating resource allocation accordingly [20].

Varying application requirements: Cloud-hosted applications have disparate performance requirements and Service Level Agreements (SLAs). Load-balancing algorithms confront the challenge of reconciling these diverse demands while optimizing resource allocation. Conventional approaches often emphasize resource usage, sidelining the distinctive needs of different applications. This oversight may result in SLA breaches and subpar application performance [21]. Nature-inspired meta-heuristic algorithms address this hurdle through multi-objective optimization. By weighing diverse performance metrics, these algorithms find solutions that cater to the needs of distinct applications.

Limitations of traditional techniques: Conventional load-balancing techniques, exemplified by round-robin, least-connection, and static partitioning, struggle with the intricacies of load balancing in the cloud. These approaches frequently lack adaptability, neglect real-time conditions, and fail to optimize resource allocation. For instance, static partitioning mandates fixed workload allotments to each server, producing imbalanced workloads and suboptimal resource utilization [22]. Nature-inspired meta-heuristic algorithms circumvent these limitations by using intelligent search and optimization mechanisms to find near-optimal solutions in dynamic, multifaceted cloud environments.

Bottleneck: The entire computing system can be affected if the network server fails. Thus, building suitable distributed models in which no individual node dominates the entire system is challenging [23].
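
The multi-objective weighing mentioned under varying application requirements can be sketched with a weighted-sum scalarization, which is one common approach and is assumed here purely for illustration. The metric names, bounds, weights, and candidate values below are hypothetical; all objectives are treated as quantities to be minimized.

```python
def weighted_fitness(metrics, weights, bounds):
    """Combine several minimization objectives into one score in [0, 1].

    Each metric is min-max normalized with the given bounds, then averaged
    using application-specific weights. Lower scores are better.
    """
    score = 0.0
    for name, weight in weights.items():
        low, high = bounds[name]
        normalized = (metrics[name] - low) / (high - low)
        score += weight * min(max(normalized, 0.0), 1.0)   # clamp to [0, 1]
    return score / sum(weights.values())

# Hypothetical normalization bounds for each objective.
bounds = {"makespan": (0, 10), "energy": (0, 100), "cost": (0, 20)}

# Two hypothetical candidate allocations.
candidate_a = {"makespan": 7.0, "energy": 50, "cost": 10}
candidate_b = {"makespan": 5.0, "energy": 80, "cost": 12}

# Hypothetical weight profiles for two kinds of application.
latency_sensitive = {"makespan": 0.7, "energy": 0.2, "cost": 0.1}
energy_sensitive = {"makespan": 0.2, "energy": 0.7, "cost": 0.1}

for profile in (latency_sensitive, energy_sensitive):
    best = min((candidate_a, candidate_b),
               key=lambda c: weighted_fitness(c, profile, bounds))
    print(best)
```

With these numbers the latency-sensitive profile prefers the second candidate and the energy-sensitive profile prefers the first, which shows how the same search can serve applications with different SLAs simply by changing the weights.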

2.4. Load-Balancing Policies

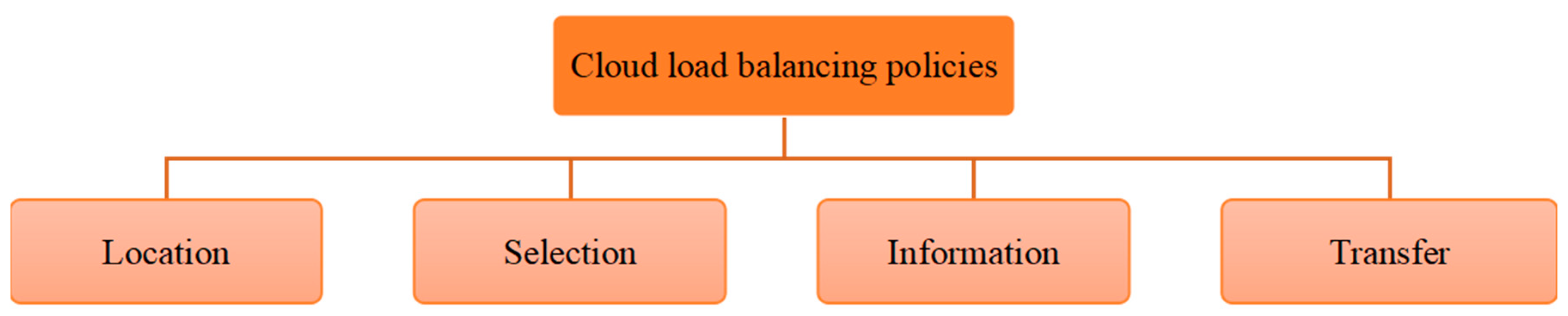

Several load-balancing policies are widely adopted in cloud computing, as illustrated in Figure 4. These policies encompass:

Location policy: This policy primarily focuses on identifying dormant or underutilized VMs and reallocating tasks to them for further processing. The process involves specifying crucial factors for migrating tasks through random selection, negotiation, and probing. The policy determines a target node and randomly transfers assigned work activities.

Selection policy: The selection policy delineates the work activities eligible for migration from one device to another. It emphasizes work activities with specific characteristics and structural attributes conducive to migration.

Information policy: Operating as a dynamic load-balancing strategy, the policy stores comprehensive information about resources within the system. This data is leveraged by various methods to guide their actions. The policy dictates methods for data collection, and nodes utilize the Agent technique to gather data. Different types of information policies exemplify this approach, such as supply, routine, and state change policies.

Transfer policy: This policy defines the scenarios in which tasks are migrated between network devices, termed the target device. This policy employs two methods for recognizing tasks to be migrated: all-recent and last-obtained. Incoming tasks follow the last-obtained method, while the latest procedure uses the all-recent method. The transfer policy identifies whether a task can be migrated and the suitable procedure to execute, such as task rescheduling.

2.5. Meta-Heuristic Algorithms

Alongside the rapid growth of information technology, many optimization problems are surfacing across diverse domains. Most real-world optimization problems are NP-hard, meaning that no polynomial-time algorithm for solving them exactly is known. Consequently, exact mathematical methods can handle only small instances, leaving larger-scale problems intractable. Researchers have therefore turned to approximation methods to find viable solutions within given time constraints. These approximation techniques fall under two principal categories, heuristics and meta-heuristics, both of which commonly rely on randomization. They differ in their degree of problem dependency. Heuristics are more problem-specific than meta-heuristics, which restricts them to a narrow subset of problems. In contrast, meta-heuristics apply across a wide range of optimization problems because they treat the problem being optimized as a “black box”.

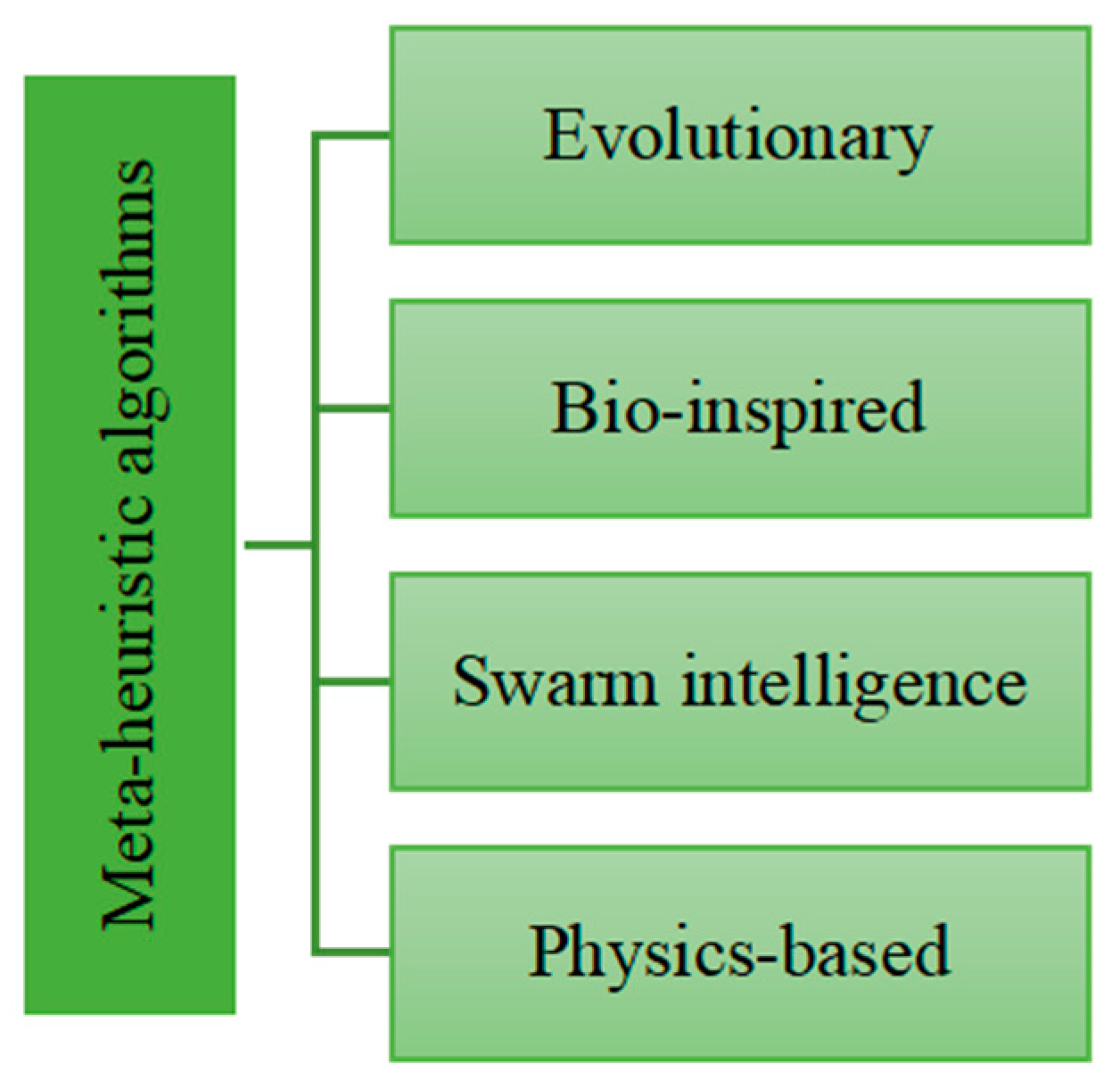

The recent literature has presented an array of nature-inspired meta-heuristic algorithms, including evolutionary algorithms, bio-inspired algorithms, swarm intelligence, and physics-based algorithms (Figure 5). These innovative methodologies leverage insights from natural processes, phenomena, and behaviors to navigate complex optimization landscapes, paving the way for effective and efficient solutions across an extensive spectrum of real-world optimization problems.

Evolutionary Algorithms (EAs): These algorithms are inspired by natural evolution. They include techniques like Differential Evolution (DE), Evolution Strategies (ESs), Genetic Algorithms (GAs), and Genetic Programming (GP). EAs maintain a population of potential solutions and iteratively evolve them through selection, crossover, and mutation operators. Over time, the population improves through the principle of survival of the fittest [24].

Bio-inspired algorithms: These algorithms draw inspiration from biological processes and phenomena. They often mimic the behavior of living organisms. One example is the Artificial Immune System (AIS), which simulates the human immune mechanism to solve optimization problems. Other examples include the Hormone-Inspired Optimization Algorithm and the Bacterial Foraging Optimization Algorithm [25].

Swarm intelligence: These algorithms take inspiration from the collective behavior of decentralized and self-organized systems, particularly the behavior of social animals like bees, ants, and birds. Particle Swarm Optimization (PSO) is a well-known example, in which individuals (particles) in a population adjust their positions according to their own experience and the experience of the entire swarm to find optimal solutions [26].

Physics-based algorithms: These algorithms take inspiration from physics principles to solve optimization problems. They often simulate physical phenomena like gravity, force, and energy to guide the optimization process. An example is the Gravitational Search Algorithm (GSA), where solutions are attracted to better solutions, like particles in a gravitational field [27].
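
To make the swarm-intelligence description concrete, the sketch below implements a basic global-best PSO in which each particle is pulled toward its own best position and the swarm’s best position. It is a minimal illustration, not a tuned implementation: the inertia weight `w`, the acceleration coefficients `c1` and `c2`, the search bounds, and the sphere test function are all assumptions chosen for brevity.

```python
import random

def sphere(x):
    """Toy objective to minimize; the optimum is 0 at the origin."""
    return sum(v * v for v in x)

def pso(objective, dim=2, n_particles=10, iters=50,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    pos = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                      # personal best positions
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]     # swarm best
    history = [gbest_val]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + pull toward own experience + pull toward swarm experience
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
        history.append(gbest_val)
    return gbest, gbest_val, history

best_pos, best_val, history = pso(sphere)
print(best_pos, best_val)
```

For load balancing, the continuous position would have to be mapped to a task-to-VM assignment, for example by rounding each coordinate to a VM index; that encoding is a design choice left open here.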

The proliferation of metaphor-based algorithms in the optimization field has sparked considerable debate, as many of these algorithms are perceived as minor variations of well-established techniques without substantial novelty. The work by Camacho-Villalón et al. [28] underscores this issue by critically examining several such algorithms, including the grey wolf, moth-flame, whale, firefly, bat, and antlion algorithms. This study recognizes the necessity of distinguishing between truly innovative approaches and those that merely repackage existing methods under new metaphors. To address these concerns, our study comprehensively assesses meta-heuristic algorithms with a specific focus on their practical application and performance in cloud load balancing. We have conducted a thorough analysis of the literature to identify the genuinely innovative elements and enhancements these algorithms provide. Moreover, our work evaluates the efficiency and efficacy of these algorithms through empirical analysis and examines their theoretical foundations and possible benefits in dynamic cloud systems. Our goal is to provide an unbiased viewpoint that recognizes the constraints pointed out by earlier criticisms, while also illustrating the tangible advantages that certain algorithms may provide in particular situations.

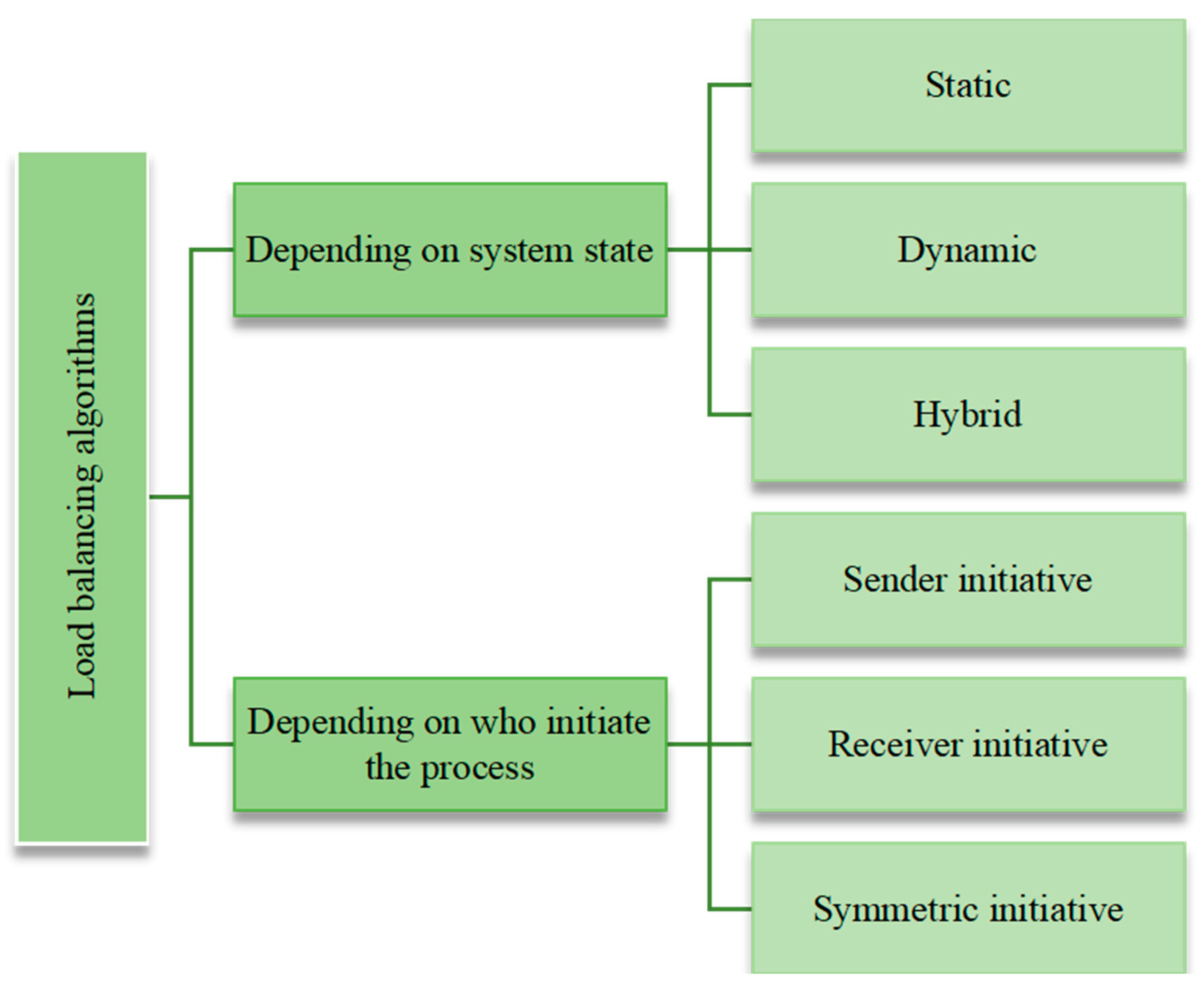

2.6. Classification of Load-Balancing Algorithms

As shown in Figure 6, load-balancing techniques can be categorized based on different criteria, including system state and the initiator of the load-balancing process. These algorithms can be divided into three groups depending on the system state: static, dynamic, and hybrid. In static load balancing, the distribution of tasks or resources is set before the execution of the application and remains constant. This approach assumes that the system’s workload and resource availability remain relatively constant during the application’s execution. Static load-balancing algorithms are simple to use but may not adapt well to dynamic changes in the system. Dynamic load-balancing algorithms continuously monitor the system’s current state and adapt the distribution of tasks or resources in real time. These algorithms suit systems where the workload and resource availability vary significantly during execution. Dynamic load-balancing techniques often use metrics and heuristics to dynamically decide task/resource allocation. Hybrid load-balancing combines elements of both static and dynamic approaches. It allows initial static allocation to establish a baseline distribution, then employs dynamic adjustments to respond to changing system conditions. This approach aims to strike a balance between the simplicity of static methods and the adaptability of dynamic ones.
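
The contrast between static and dynamic allocation can be seen in a small sketch. Round-robin stands in for a static policy that ignores the current state, and a least-loaded rule stands in for a dynamic policy that consults it. The task costs and function names are hypothetical.

```python
from itertools import cycle

def round_robin(task_costs, n_vms):
    """Static: tasks are dealt to VMs in a fixed cyclic order, ignoring load."""
    loads = [0.0] * n_vms
    for cost, vm in zip(task_costs, cycle(range(n_vms))):
        loads[vm] += cost
    return loads

def least_loaded(task_costs, n_vms):
    """Dynamic: each task goes to the VM with the smallest load so far."""
    loads = [0.0] * n_vms
    for cost in task_costs:
        vm = min(range(n_vms), key=loads.__getitem__)   # ties go to the lowest index
        loads[vm] += cost
    return loads

# Hypothetical workload that alternates heavy and light tasks.
tasks = [8, 1, 8, 1, 8, 1]
print(round_robin(tasks, 2))    # [24.0, 3.0]
print(least_loaded(tasks, 2))   # [17.0, 10.0]
```

On this alternating workload the fixed order sends every heavy task to the same VM, whereas the state-aware rule keeps the two loads closer. A hybrid scheme in the sense described above could start from the round-robin placement and then rebalance using the least-loaded rule.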

Load-balancing algorithms can also be classified based on who initiates the load-balancing process. In sender-initiated load balancing, the sender (the entity responsible for distributing tasks or resources) decides how to distribute the workload. This approach is often used when the sender better understands the system’s state and resource availability. Sender-initiated load balancing can be more proactive in managing system resources. In receiver-initiated load balancing, the receiver (the entity receiving the tasks or resources) plays a more active role in determining how to balance the load. This approach is useful when the receiver can provide feedback on workload and resource availability, allowing for more localized load-balancing decisions. Symmetric initiative load balancing involves both senders and receivers participating in the load-balancing process. This approach aims to balance sender and receiver knowledge, making decisions collaboratively. Symmetric load balancing can be effective in systems where both ends have valuable insights into the workload and resource conditions.
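
The difference between the three initiation schemes lies in which side triggers a transfer. The following sketch assumes simple threshold rules, with hypothetical `high` and `low` thresholds and one unit of work moved per trigger; real systems would use richer state and negotiation.

```python
def sender_initiated(loads, high):
    """Overloaded nodes push one unit of work to the currently lightest node."""
    loads = loads[:]
    for i in range(len(loads)):
        if loads[i] > high:
            target = min(range(len(loads)), key=loads.__getitem__)
            if target != i:
                loads[i] -= 1
                loads[target] += 1
    return loads

def receiver_initiated(loads, low):
    """Underloaded nodes pull one unit of work from the currently heaviest node."""
    loads = loads[:]
    for i in range(len(loads)):
        if loads[i] < low:
            source = max(range(len(loads)), key=loads.__getitem__)
            if source != i and loads[source] > 0:
                loads[i] += 1
                loads[source] -= 1
    return loads

def symmetric(loads, low, high):
    """Both sides may trigger a transfer: push first, then pull."""
    return receiver_initiated(sender_initiated(loads, high), low)

print(sender_initiated([9, 4, 5], high=8))     # [8, 5, 5]
print(receiver_initiated([9, 4, 5], low=3))    # [9, 4, 5]
print(receiver_initiated([6, 1, 5], low=3))    # [5, 2, 5]
print(symmetric([9, 1, 5], low=3, high=8))     # [7, 3, 5]
```

In the first input only the overloaded node acts, so the receiver-initiated rule leaves the loads unchanged; in the third call only the idle node acts. The symmetric variant reacts to both conditions.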

3. Meta-Heuristic Algorithms for Cloud Load Balancing

Traditional load-balancing methods, while straightforward, often fall short when confronted with complex and highly uncertain scenarios. To address these more intricate challenges, adopting meta-heuristic methods has become prevalent. A meta-heuristic method can be characterized as an iterative procedure that steers the process of exploration and exploitation within the search space. In contrast to conventional techniques, meta-heuristic methods exhibit higher adaptability and robustness, making them well-suited for tackling the intricate and unpredictable nature of certain problems, such as load balancing in dynamic and uncertain environments. These methods provide a versatile framework that enables effective navigation through complex solution spaces, leading to enhanced performance and optimized outcomes. This section reviews recent load-balancing techniques and specifies their important features. We survey selected methods based on nature-inspired meta-heuristic algorithms under the following categories: ant colony optimization (ACO), artificial bee colony (ABC), GA, PSO, bat algorithm (BA), whale optimization algorithm (WOA), simulated annealing (SA), biogeography-based optimization (BBO), firefly algorithm (FA), and grey wolf optimizer (GWO).

Tables 1–10 compare the reviewed methods in tabular form.

Although we provide a brief literature review of the meta-heuristic algorithms under consideration, a more in-depth understanding of each is required, taking into account its primary benefits over other approaches. Herein, we evaluate the features of each algorithm in terms of adjustability, rate of convergence, computational complexity, and dynamic workload handling. For example, conventional algorithms such as round-robin or least-connection are straightforward to implement but respond poorly to intense and dynamic cloud workloads. In contrast, nature-inspired algorithms such as PSO and BA have proven more flexible in adapting to the dynamic distribution of resources. The BA, derived from the PSO algorithm, incorporates new exploration and exploitation mechanisms to improve its performance for specific problems. Various experiments and case studies have demonstrated the effectiveness of these meta-heuristic algorithms in handling cloud load-balancing problems, with lower time and resource consumption than conventional methods.

3.1. Ant Colony Optimization Algorithm

The ACO algorithm is a nature-inspired meta-heuristic algorithm based on the foraging behavior of ants. It mimics how real ants find the shortest path between their nest and food sources by leaving pheromone trails that guide their fellow ants. In ACO, a population of artificial ants iteratively explores solution spaces to optimize complex problems. Each ant constructs solutions by moving through solution components and depositing artificial pheromones on visited components. Solutions with higher pheromone concentrations attract more ants, leading to an iterative reinforcement process that converges toward optimal solutions [68]. In cloud load balancing, ACO is crucial in optimizing resource allocation among VMs in a dynamic and distributed environment. The cloud load-balancing problem involves distributing incoming workloads efficiently across available VMs to enhance resource utilization and minimize response times. ACO helps address this challenge by modeling VMs as potential solution components and workload distribution strategies as paths. By employing ACO, the cloud load-balancing process can dynamically adapt to changing workloads and VM availability, ensuring effective resource utilization and minimizing response times in a scalable and self-adjusting manner.
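
The mechanism described above can be sketched as a small Ant System for assigning tasks to VMs. This is a simplified illustration under stated assumptions, not the method of any paper reviewed below: pheromone is stored per (task, VM) pair, the heuristic term favors the VM that would finish the task earliest, every ant deposits an amount inversely proportional to its makespan, and the parameters `alpha`, `beta`, `rho`, the workload, and the function names are hypothetical.

```python
import random

def makespan(assignment, task_lengths, vm_speeds):
    """Latest VM completion time for a task -> VM assignment."""
    busy = [0.0] * len(vm_speeds)
    for task, vm in enumerate(assignment):
        busy[vm] += task_lengths[task] / vm_speeds[vm]
    return max(busy)

def aco_assign(task_lengths, vm_speeds, n_ants=10, iters=30,
               alpha=1.0, beta=2.0, rho=0.1, seed=0):
    rng = random.Random(seed)
    n_tasks, n_vms = len(task_lengths), len(vm_speeds)
    tau = [[1.0] * n_vms for _ in range(n_tasks)]   # pheromone per (task, VM)
    best, best_cost = None, float("inf")
    for _ in range(iters):
        solutions = []
        for _ in range(n_ants):
            busy = [0.0] * n_vms
            assignment = []
            for t in range(n_tasks):
                # Desirability: pheromone times inverse finish time on each VM.
                weights = [
                    tau[t][v] ** alpha
                    * (1.0 / (busy[v] + task_lengths[t] / vm_speeds[v])) ** beta
                    for v in range(n_vms)
                ]
                v = rng.choices(range(n_vms), weights=weights)[0]
                busy[v] += task_lengths[t] / vm_speeds[v]
                assignment.append(v)
            cost = max(busy)
            solutions.append((assignment, cost))
            if cost < best_cost:
                best, best_cost = assignment, cost
        # Evaporate, then let every ant reinforce the components it used.
        for t in range(n_tasks):
            for v in range(n_vms):
                tau[t][v] *= 1.0 - rho
        for assignment, cost in solutions:
            for t, v in enumerate(assignment):
                tau[t][v] += 1.0 / cost
    return best, best_cost

# Hypothetical workload: lengths in million instructions, speeds in MIPS.
lengths, speeds = [400, 200, 600, 300], [100, 200]
best, cost = aco_assign(lengths, speeds)
print(best, cost)
```

Shorter schedules deposit more pheromone, so later ants are biased toward the task placements that produced them, while evaporation keeps early choices from dominating.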

Gao and Wu [29] address the task distribution and coordination challenges in cloud computing for optimal resource utilization and load avoidance. They propose a novel ACO approach, employing forward–backward ant mechanisms and max–min rules to efficiently identify candidate nodes for load balancing. Their method incorporates pheromone initialization, updates based on physical resources, and task execution prediction. They define ant movement probabilities to accelerate the search, resulting in dynamic load balancing, reduced search times, and improved network performance in various load scenarios. Simulations demonstrate the effectiveness of their strategy in achieving efficient load distribution in cloud computing. Muteeh et al. [30] introduce the Multi-resource Load-Balancing Algorithm (MrLBA) within a cloud computing framework. Built on ACO, the algorithm prioritizes makespan and cost while ensuring a balanced system load. Experimental validation using benchmark workflows demonstrates that MrLBA significantly decreases execution time and cost. It achieves efficient resource utilization by maintaining a balanced distribution of load among available resources.

Xu et al. [31] focus on multidimensional resource load balancing across physical machines within a cloud computing platform. They utilize ant colony optimization to create an efficient algorithm for VM allocation, considering the problem’s NP-hard nature. They tailor ant colony optimization for VM allocation and enhance physical machine selection to avoid premature convergence or local optima. Extensive simulations showcase the effectiveness of their algorithm in achieving load balancing and enhancing resource utilization in cloud computing. Gabhane et al. [32] devise a unique hybrid method, combining ACO with Tabu search (TS) for multi-resource load balancing. They evaluate performance metrics, including makespan, average throughput, and total cost. The introduced ACOTS approach outperforms existing optimization methods like GA, PSO, ACO, and TS, demonstrating a 30% enhancement in data delivery compared to these algorithms. ACOTS is particularly effective in achieving rapid file delivery and processing.

Bui et al. [33] developed a VM provisioning solution that uses game theory to balance the interests of both service providers and customers. They utilize the ACO algorithm based on Nash equilibrium to approximate optimal or near-optimal solutions. To solve the game, experiments involve applying various ant algorithms, including the Ant System, Max–Min Ant System, and Ant Colony System. Simulation results reveal the use of coefficients for achieving load balancing in VM provisioning, which are contingent on the objectives of cloud computing service providers.

Ragmani et al. [

34] introduce an innovative hybrid algorithm merging Fuzzy logic and ACO to enhance load balancing in the cloud. Traditional load-balancing methods struggle with high requests and server fluctuations. This novel approach prioritizes load balancing and response time goals in the Cloud environment. ACO’s performance depends on parameter values optimized using Taguchi experimental design. Additionally, a Fuzzy module evaluates pheromone values to enhance calculation speed. Simulations in the CloudAnalyst platform validate the effectiveness of the Fuzzy–ACO algorithm, showcasing its superiority over other load-balancing techniques. Mohammadian et al. [

35] proposed a new load-balancing mechanism, LBAA, employing ant colony optimization and artificial bee colony algorithms. LBAA is designed to distribute the workload evenly across systems in data centers. Simulations demonstrate that this algorithm surpasses previous methods in response time, imbalance degree, makespan, and resource utilization, achieving improvements of up to 25%, 15%, 12%, and 10%, respectively.

Raghav and Vyas [

36] propose a hybrid method called “ACBSO,” merging ACO and bird swarm optimization techniques. Their comparative analysis against standalone ACO and bird swarm optimization shows the hybrid approach’s superiority. Using primary factors like makespan, throughput, fitness score, and resource consumption, simulation results indicate ACBSO’s improved performance. The CloudSim simulator is employed for these necessary simulations.

Minarolli [

37] offers a distributed task scheduling strategy using swarm intelligence. Schedulers on different nodes make local task allocation decisions, incorporating ant colony optimization and queue load data to address the delayed reward issue during high load conditions. Experiments in a simulated environment demonstrate superior outcomes compared to distributed scheduling based solely on ant colony or queue load information. Amer et al. [

38] introduce a hybrid multi-objective algorithm, SMO_ACO, to address scheduling challenges. Combining Spider Monkey Optimization (SMO) with ACO, the algorithm employs a fitness function encompassing four scheduling objectives: schedule length, execution cost, consumed energy, and resource utilization. Implemented using the CloudSim toolkit, SMO_ACO’s performance is evaluated across varying workloads and compared with recent algorithms. The results demonstrate its efficiency in resource allocation, cloud performance enhancement, and increased profits.

3.2. Artificial Bee Colony Algorithm

The Artificial Bee Colony (ABC) algorithm draws inspiration from the foraging behavior of honeybee colonies. ABC uses three types of artificial bees: employed bees, onlooker bees, and scout bees. Employed bees search the neighborhood of the promising solutions they are assigned to, exploiting the information stored in them, while onlooker bees select solutions to refine based on their fitness values. Scout bees introduce diversity by randomly searching unexplored regions. Through this combination of exploitation by employed and onlooker bees and exploration by scout bees, the algorithm iteratively refines solutions to converge toward optimal or near-optimal solutions [

69]. The ABC algorithm is vital in efficiently distributing incoming workloads across VMs in cloud load balancing. This process aims to optimize resource utilization, minimize response times, and enhance the overall performance of cloud-based services. By mapping the problem of load balancing onto the ABC framework, the algorithm treats potential load distribution solutions as food sources, and the bees’ interactions simulate the exploration of these solutions. ABC’s ability to balance exploration and exploitation makes it well-suited for dynamically adjusting the allocation of workloads to VMs based on changing demand patterns, ultimately leading to improved resource utilization and reduced latencies in cloud-based applications.
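The mapping described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in any of the surveyed works: each food source is a hypothetical task-to-VM assignment, the objective is makespan, and the names `abc_balance`, `limit`, and `n_sources` are illustrative choices.

```python
import random

def makespan(assign, lengths, speeds):
    """Completion time of the busiest VM for a task-to-VM assignment."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def abc_balance(lengths, speeds, n_sources=10, limit=5, iters=100, seed=0):
    """Simplified ABC: food sources are assignments, cost is makespan (assumed)."""
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    new_source = lambda: [rng.randrange(n_vms) for _ in range(n_tasks)]
    cost = lambda s: makespan(s, lengths, speeds)
    sources = [new_source() for _ in range(n_sources)]
    trials = [0] * n_sources
    best = min(sources, key=cost)[:]

    def try_neighbor(i):
        # Move one randomly chosen task to a random VM; keep the move only if it helps.
        cand = sources[i][:]
        cand[rng.randrange(n_tasks)] = rng.randrange(n_vms)
        if cost(cand) < cost(sources[i]):
            sources[i], trials[i] = cand, 0
        else:
            trials[i] += 1

    for _ in range(iters):
        for i in range(n_sources):                      # employed bees
            try_neighbor(i)
        weights = [1.0 / (1.0 + cost(s)) for s in sources]
        picks = rng.choices(range(n_sources), weights=weights, k=n_sources)
        for i in picks:                                 # onlooker bees favor fitter sources
            try_neighbor(i)
        current = min(sources, key=cost)
        if cost(current) < cost(best):                  # remember the best before scouting
            best = current[:]
        for i in range(n_sources):                      # scout bees replace stagnant sources
            if trials[i] > limit:
                sources[i], trials[i] = new_source(), 0
    return best, cost(best)
```

Calling `abc_balance(task_lengths, vm_speeds)` returns an assignment list and its makespan. The single-task move is only one possible neighborhood; the surveyed hybrids use richer operators.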

Kruekaew and Kimpan [

39] present the MOABCQ approach, an independent task scheduling method for cloud computing. It combines a multi-objective task scheduling optimization utilizing the Artificial Bee Colony Algorithm (ABC) with the reinforcement learning technique Q-learning. This hybrid method aims to optimize scheduling, enhance resource utilization, maximize VM throughput, and achieve load balancing across VMs by considering makespan, cost, and resource utilization. Performance comparisons were conducted in CloudSim on three datasets against existing algorithms, including Max–Min, FCFS, HABC_LJF, Q-learning, MOPSO, and MOCS. Results demonstrate that the MOABCQ approach outperforms these algorithms in makespan, cost, imbalance degree, throughput, and resource utilization.

Kruekaew and Kimpan [

40] introduce HABC, a novel approach merging ABC and heuristic scheduling algorithms for improved VM scheduling in cloud computing. HABC aims to minimize makespan and enhance load balance in homogeneous and heterogeneous environments. Comparisons with other swarm intelligence algorithms, including ACO, PSO, and IPSO, demonstrate HABC’s superiority in VM scheduling management, as confirmed through CloudSim-based experiments.

Kumar and Chaturvedi [

41] introduce the Improved Cuckoo Search + Artificial Bee Colony (ICSABC) algorithm to enhance load balancing. This bio-inspired approach optimizes load distribution across VMs for efficient scheduling, which is crucial in cloud computing, where users pay based on resource usage. The algorithm computes the best task-to-VM mapping, factoring in VM processing speed and workload length. Experimental results demonstrate that ICSABC achieves superior average VM load distribution, offering high accuracy and low complexity compared to existing methods.

Janakiraman and Priya [

42] introduce an innovative approach, the IABC-MBOA-LB, for optimizing resource allocation in cloud environments. This method combines the global exploration abilities of ABC with the local exploitation potential of the Monarchy Butterfly Optimization Algorithm (MBOA). The focus is on efficiently assigning user tasks to VMs while addressing network and computing resource challenges. The IABC-MBOA-LB framework tackles issues like task-finishing delays and fragmentation by strategically utilizing network and computing resources for improved allocation. Through extensive simulations, the approach is proven effective. Results demonstrate its superiority in minimizing load variance, makespan, connection deviations, imbalance degree, and maximizing throughput. This advantage is maintained across tasks and VM quantities, highlighting its efficacy in enhancing resource allocation and load balancing.

Tabagchi Milan et al. [

43] presented an innovative technique grounded in artificial bee behavior, aiming to improve QoS metrics and reduce energy consumption in green computing. The method treats tasks removed from overloaded VMs as honey bees and considers them migration candidates, giving precedence to lower-priority tasks. Implemented in the CloudSim framework, the technique remarkably enhances QoS, reduces makespan, and minimizes energy usage compared to alternatives. This empirical evidence underscores the approach’s potential to enhance performance metrics and promote energy-efficient computing practices simultaneously.

Sefati and Halunga [

44] introduce the Artificial Bee Colony and Genetic Algorithm (ABCGA), a two-step meta-heuristic algorithm for optimized service selection in cloud computing. Initially, GA selects services based on fitness. If suitable, services are presented to the ABC algorithm for personalized user selection. Using CloudSim simulations, the proposed ABCGA method is rigorously evaluated, demonstrating reliability, availability, and cost-effectiveness improvements. These findings support the potential of ABCGA in optimizing service selection and allocation within cloud computing environments.

3.3. Genetic Algorithm

GA is a powerful meta-heuristic optimization technique inspired by natural evolution. In GA, a population of potential solutions is evolved over generations through genetic operators such as selection, crossover, and mutation. Solutions with higher fitness, evaluated based on an objective function, are more likely to be selected for reproduction, mimicking the principle of survival of the fittest. Crossover combines genetic information from parent solutions to create new offspring, while mutation introduces small random changes. This iterative process emulates the evolutionary cycle, gradually improving the population’s overall fitness and converging toward optimal or near-optimal solutions [

70]. GA addresses load balancing by representing different load distribution strategies as potential solutions within the population. The algorithm refines these solutions through successive generations to adapt to varying workloads and VM availability. GA’s ability to explore diverse solutions and exploit promising ones makes it well-suited for adapting load distribution strategies in real time, ultimately leading to improved performance and responsiveness of cloud-based services.
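As a rough illustration of the selection, crossover, and mutation cycle described above, the sketch below evolves task-to-VM assignments toward a lower makespan. The encoding (one gene per task holding a VM index), tournament selection, one-point crossover, and all parameter values are assumptions made for this example and are not taken from the surveyed papers.

```python
import random

def makespan(assign, lengths, speeds):
    """Completion time of the busiest VM for a task-to-VM assignment."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def ga_balance(lengths, speeds, pop_size=20, generations=100, p_mut=0.1, seed=0):
    """Simplified GA; assumes at least two tasks and pop_size >= 2."""
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    cost = lambda c: makespan(c, lengths, speeds)
    pop = [[rng.randrange(n_vms) for _ in range(n_tasks)] for _ in range(pop_size)]

    def tournament():
        # Binary tournament: the fitter of two random individuals reproduces.
        a, b = rng.sample(pop, 2)
        return a if cost(a) <= cost(b) else b

    for _ in range(generations):
        children = [min(pop, key=cost)[:]]          # elitism: carry over the best
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            cut = rng.randrange(1, n_tasks)         # one-point crossover
            child = p1[:cut] + p2[cut:]
            for g in range(n_tasks):                # mutation: reassign a task at random
                if rng.random() < p_mut:
                    child[g] = rng.randrange(n_vms)
            children.append(child)
        pop = children
    best = min(pop, key=cost)
    return best, cost(best)
```

Because the best chromosome is copied unchanged into each new generation, the best makespan found never gets worse from one generation to the next.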

Makasarwala and Hazari [

45] developed a cloud computing load-balancing approach using the GA. Their innovation lies in initializing the population by prioritizing requests based on time-based priority, mirroring real-world scenarios. This incorporation of request priority aims to enhance the algorithm’s real-world applicability. Simulations conducted using the CloudAnalyst framework demonstrate the approach’s superiority over existing methods. By factoring in real-world priority considerations, the proposed method offers enhanced authenticity and practicality to cloud computing load balancing, contributing to its notable performance improvement.

Saadat and Masehian [

46] proposed an innovative hybrid intelligent load-balancing approach, integrating the GA module with a Fuzzy Logic module. The GA module optimizes load distribution through randomized job arrangement, while the Fuzzy Logic module evaluates server states based on RAM and CPU queues. A constructed objective function captures complex relationships. Input variables like satisfaction degree and service times inform service availability output. Computational experiments validate the approach’s efficacy, notably achieving superior solutions faster than planned. This swift optimization enhances user satisfaction, highlighting the potential of this hybrid intelligent approach to elevate cloud computing load balancing.

Gulbaz et al. [

47] present the “Balancer Genetic Algorithm” (BGA), a load-balancing scheduler aimed at improving both makespan and load balancing in computing systems. Inefficiencies caused by uneven resource utilization are tackled by BGA’s load-balancing mechanism, which considers the actual VM load measured in million instructions (MI). With an awareness of the complexity of makespan optimization and load balancing, the authors adopt a multi-objective optimization approach to enhance both metrics simultaneously. Comprehensive experimentation covers workload distributions and batch sizes, confirming BGA’s effectiveness. Compared to state-of-the-art methods, BGA significantly improves metrics like makespan, throughput, and load balancing. These findings underscore BGA’s potential to optimize computing system performance and address load-balancing challenges.

3.4. Particle Swarm Optimization Algorithm

The PSO algorithm simulates the social behavior of bird flocks or fish schools. In PSO, a population of particles represents potential solutions to an optimization problem. Each particle adjusts its position in the solution space based on its own best-known solution and the group’s collective knowledge. Particles communicate by sharing information about their local best solutions, enabling them to navigate towards optimal or near-optimal solutions over iterations. The algorithm balances exploration and exploitation, as particles are guided by their experience and the influence of more successful particles in the swarm [

71]. PSO addresses cloud load balancing by treating different load distribution configurations as particle positions. By iteratively adjusting these positions based on the collective experience of the particles, PSO adapts to changing workload patterns and VM availability. The algorithm’s ability to exploit local and global information makes it well-suited for dynamically optimizing load distribution strategies, ultimately enhancing the performance and responsiveness of cloud-based applications.
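The position and velocity updates described above can be sketched as follows. This is an illustrative sketch only: the continuous encoding (one coordinate per task, truncated to a VM index by the hypothetical `decode` helper), the inertia weight `w`, and the acceleration coefficients `c1` and `c2` are assumptions, not settings from the surveyed studies.

```python
import random

def makespan(assign, lengths, speeds):
    """Completion time of the busiest VM for a task-to-VM assignment."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def decode(position, n_vms):
    """Map each continuous coordinate to a VM index by truncation."""
    return [min(max(int(x), 0), n_vms - 1) for x in position]

def pso_balance(lengths, speeds, n_particles=15, iters=100,
                w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    cost = lambda x: makespan(decode(x, n_vms), lengths, speeds)
    xs = [[rng.uniform(0, n_vms) for _ in range(n_tasks)] for _ in range(n_particles)]
    vs = [[0.0] * n_tasks for _ in range(n_particles)]
    pbest = [x[:] for x in xs]                  # each particle's own best position
    gbest = min(pbest, key=cost)[:]             # best position found by the swarm
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(n_tasks):
                r1, r2 = rng.random(), rng.random()
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])   # cognitive pull
                            + c2 * r2 * (gbest[d] - xs[i][d]))     # social pull
                xs[i][d] = min(max(xs[i][d] + vs[i][d], 0.0), n_vms - 1e-9)
            if cost(xs[i]) < cost(pbest[i]):
                pbest[i] = xs[i][:]
                if cost(pbest[i]) < cost(gbest):
                    gbest = pbest[i][:]
    return decode(gbest, n_vms), cost(gbest)
```

Truncating a continuous position is the simplest way to reuse the standard update rule on a discrete assignment problem; binary and other discrete PSO variants, such as those surveyed below, modify the update itself instead.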

Pradhan and Bisoy [

48] have introduced an innovative approach for task scheduling in cloud environments. Their method, LBMPSO (Load Balancing Modified PSO), optimizes makespan and resource utilization through enhanced communication between tasks and data center resources. The strategy is executed using the CloudSim simulator. Notably, simulation outcomes underscore the superiority of the proposed technique in minimizing makespan and maximizing resource utilization compared to existing methods.

Alguliyev et al. [

49] introduce a novel load-balancing technique, αPSO-TBLB, that employs task-based load balancing in the cloud. The method optimizes task migration from overloaded VMs to suitable counterparts, aiming to minimize task execution and transfer times. Experimental validation using CloudSim and JSwarm tools demonstrates its effectiveness in achieving optimal task scheduling and equitable task distribution among VMs, reducing time consumption for task-to-VM assignments.

Mapetu et al. [

50] present an optimized binary PSO algorithm for efficient task scheduling and load balancing within cloud computing. The algorithm focuses on low time complexity and cost. Their approach involves defining an objective function to minimize completion time differences among diverse VMs while adhering to introduced optimization constraints. The particle position updating mechanism is tailored to load balancing. The proposed algorithm outperforms existing heuristic and meta-heuristic algorithms through experimentation, effectively enhancing task scheduling and load distribution.

Malik and Suman [

51] introduce a novel approach, the hybrid Lateral Wolf and Particle Swarm Optimization (LW-PSO), to address task scheduling challenges and ensure optimal load distribution across VMs in cloud computing. This method aims to optimize VM selection and parallel task scheduling for reduced response times. By employing Lateral Wolf (LW) for parallel task scheduling and PSO to obtain optimal solutions, the approach ensures balanced VM loads, eliminating overload or underutilization. LW calculates the Fitness Value (FV), which guides PSO in updating the particles’ positions and velocities. This process optimally assigns tasks to VMs. Results demonstrate the proposed method’s superiority over existing systems by analyzing key factors, including load, processor use, turnaround time, response time, runtime, and memory.

3.5. Bat Algorithm

BA draws inspiration from the echolocation behavior of bats. Each virtual bat represents a potential solution to an optimization problem in BA. Bats fly through the solution space, emitting ultrasonic pulses (representing solutions) and adjusting their positions based on their previous experience and the best solutions found. Bats are guided by their echolocation (local search) and the echolocation of other bats (global search), enabling them to explore and exploit the solution space in search of optimal or near-optimal solutions. The algorithm’s key features include frequency tuning and loudness adjustment to balance exploration and exploitation [

72]. By mapping the load-balancing problem onto the BA framework, virtual bats correspond to different load distribution configurations. The algorithm refines these configurations through successive iterations based on individual and collective experiences, adapting to changing workload demands and VM availability. BA’s ability to simultaneously explore diverse solutions and intensively exploit promising ones makes it well-suited for addressing dynamic load-balancing challenges in cloud environments, ultimately leading to improved performance and responsiveness of cloud-based services.
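A compact sketch of the frequency, loudness, and pulse-rate mechanics is given below. It is a simplified variant written for illustration: the velocity is pulled toward the current best solution scaled by a random frequency (sign conventions differ across implementations), and the encoding, the base pulse rate `r0`, and the parameters `alpha` and `gamma` are assumptions rather than values from the surveyed works.

```python
import math
import random

def makespan(assign, lengths, speeds):
    """Completion time of the busiest VM for a task-to-VM assignment."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def bat_balance(lengths, speeds, n_bats=15, iters=100, f_min=0.0, f_max=2.0,
                alpha=0.9, gamma=0.9, r0=0.5, seed=0):
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    clip = lambda x: min(max(x, 0.0), n_vms - 1e-9)
    decode = lambda pos: [int(x) for x in pos]       # truncate to a VM index
    cost = lambda pos: makespan(decode(pos), lengths, speeds)
    xs = [[clip(rng.uniform(0, n_vms)) for _ in range(n_tasks)] for _ in range(n_bats)]
    vs = [[0.0] * n_tasks for _ in range(n_bats)]
    loud = [1.0] * n_bats                            # loudness A_i
    rate = [0.0] * n_bats                            # pulse emission rate r_i
    best = min(xs, key=cost)[:]
    for t in range(1, iters + 1):
        mean_loud = sum(loud) / n_bats
        for i in range(n_bats):
            freq = f_min + (f_max - f_min) * rng.random()      # frequency tuning
            vs[i] = [v + (b - x) * freq for v, x, b in zip(vs[i], xs[i], best)]
            cand = [clip(x + v) for x, v in zip(xs[i], vs[i])]
            if rng.random() > rate[i]:
                # Local random walk around the best solution found so far.
                cand = [clip(b + rng.uniform(-1, 1) * mean_loud) for b in best]
            if rng.random() < loud[i] and cost(cand) <= cost(xs[i]):
                xs[i] = cand
                loud[i] *= alpha                               # bat grows quieter
                rate[i] = r0 * (1 - math.exp(-gamma * t))      # and pulses more often
            if cost(xs[i]) < cost(best):
                best = xs[i][:]
    return decode(best), cost(best)
```

As loudness decays and the pulse rate rises, a bat accepts fewer moves and performs fewer local walks, which shifts the search from exploration toward exploitation over time.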

Sharma et al. [

52] utilized the bat algorithm as a load-balancing technique to fulfill load-balancer objectives. They implemented this algorithm using the Parallel Processing toolbox in MATLAB and contrasted the outcomes against round-robin and Fuzzy GSO methods. The evaluation focused on response time and task count. The study acknowledged that load balancing, involving job migration among VMs, impacts response time. Future work aims to enhance this by developing a job migration algorithm.

Ullah and Chakir [

53] are dedicated to enhancing task distribution within cloud computing’s VMs through load-balancing techniques. Their approach involves modifying the bat algorithm’s fitness function value within the load balancer section to optimize task allocation. Algorithm iterations are followed by task distribution among VMs, with further modifications to the bat search process at the dimension level. This revised approach is referred to as the modified bat algorithm. The algorithm’s performance is assessed using throughput, makespan, imbalance degree, and processing time. Notably, the proposed algorithm outperforms standard techniques, significantly boosting the accuracy and efficiency of cloud data centers.

Zheng and Wang [

54] propose an innovative approach to enhancing cloud computing service quality through a hybrid multi-objective bat algorithm. To counter local minimum convergence, they categorize the bat population and employ the back-propagation algorithm based on mean square error and conjugate gradient method to amplify search direction and pulse emission rate. Incorporating a random walk using Lévy flight further bolsters global search capability, optimizing the solution. Simulations demonstrate the algorithm’s superiority over multi-objective ant colony optimization, genetic, particle swarm, and cuckoo search algorithms, specifically regarding makespan, imbalance degree, throughput, and cost, with a slight advantage over multi-objective ant colony optimization and genetic algorithms.

3.6. Whale Optimization Algorithm

WOA takes inspiration from the hunting behavior of humpback whales. In WOA, each potential solution is a “whale” in the solution space, and the algorithm simulates whales’ movement patterns as they search for prey. Whales exhibit exploration through random movements and exploitation by homing in on prey locations. WOA employs these principles through mathematical equations that guide the movement of whales, encouraging a balance between exploration and exploitation. The algorithm iteratively refines the positions of whales to converge toward optimal or near-optimal solutions [

73]. WOA addresses cloud load balancing by mapping different load distribution strategies to whale movements. The algorithm adjusts these strategies through successive iterations based on the collective experience of the “whales,” adapting to changing workloads and VM availability. WOA’s ability to combine exploratory and exploitative search behaviors makes it well-suited for dynamically optimizing load distribution in cloud environments, ultimately enhancing the performance and responsiveness of cloud-based applications.
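The movement equations mentioned above are commonly written as an encircling step, a random search step, and a spiral step. The sketch below follows that common formulation with per-whale scalar coefficients; the continuous encoding of assignments, the spiral constant `b`, and the function name `woa_balance` are assumptions made for illustration.

```python
import math
import random

def makespan(assign, lengths, speeds):
    """Completion time of the busiest VM for a task-to-VM assignment."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def woa_balance(lengths, speeds, n_whales=15, iters=100, b=1.0, seed=0):
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    clip = lambda x: min(max(x, 0.0), n_vms - 1e-9)
    decode = lambda pos: [int(x) for x in pos]       # truncate to a VM index
    cost = lambda pos: makespan(decode(pos), lengths, speeds)
    xs = [[clip(rng.uniform(0, n_vms)) for _ in range(n_tasks)] for _ in range(n_whales)]
    best = min(xs, key=cost)[:]
    for t in range(iters):
        a = 2.0 * (1 - t / iters)                    # decreases linearly from 2 to 0
        for i in range(n_whales):
            coef_a = 2 * a * rng.random() - a
            coef_c = 2 * rng.random()
            if rng.random() < 0.5:
                # |A| < 1: encircle the best whale; otherwise explore around a random whale.
                target = best if abs(coef_a) < 1 else rng.choice(xs)
                new = [clip(tg - coef_a * abs(coef_c * tg - x))
                       for tg, x in zip(target, xs[i])]
            else:
                # Spiral (bubble-net) move around the best whale.
                ell = rng.uniform(-1, 1)
                new = [clip(abs(bs - x) * math.exp(b * ell) * math.cos(2 * math.pi * ell) + bs)
                       for bs, x in zip(best, xs[i])]
            xs[i] = new
            if cost(new) < cost(best):
                best = new[:]
    return decode(best), cost(best)
```

The shrinking coefficient `a` is what moves the population from exploration (large steps toward random whales) to exploitation (small steps around the best whale).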

To enhance cloud performance, Ramya and Ayothi [

55] proposed the Hybrid Dingo and Whale Optimization Algorithm-Based Load Balancing Mechanism (HDWOA-LBM). This method leverages the hunting behavior of dingoes (symbolizing tasks) pursuing VMs as prey. The process integrates exploration and exploitation inspired by the Dingo Optimization Algorithm (DOA), utilizing chasing, approaching, encircling, and harassing tactics. Whale optimization benefits improve DOA’s exploitation phase, balancing local and global search trade-offs. Simulation experiments using CloudSim demonstrate significant advantages: 21.28% enhanced throughput, 25.42% increased reliability, 22.98% reduced makespan, and 20.86% better resource allocation. HDWOA-LBM performs comparably with other intelligent load-balancing techniques.

Strumberger et al. [

56] introduce a hybrid whale optimization algorithm to tackle cloud resource scheduling challenges. This novel approach addresses the balance between exploration and exploitation, improving upon weaknesses in the original algorithm. Through extensive simulations on both standard benchmarks and real/artificial datasets using CloudSim, the hybrid algorithm consistently outperforms the original version and other heuristics and meta-heuristics. The study proves the algorithm’s efficacy in enhancing cloud resource scheduling and refining the original whale optimization implementation.

Ni et al. [

57] introduce a multi-objective task scheduling approach for cloud computing using a three-layer model based on whale-Gaussian cloud optimization. Their strategy, GCWOAS2, minimizes task completion time while optimizing resource utilization and load balancing. This is achieved through opposition-based learning, adaptive search range expansion, and a whale optimization algorithm based on Gaussian clouds. The proposed GCWOA algorithm combines these techniques to escape local optima and attain globally optimal scheduling. Experimental results demonstrate improved task completion time, VM load balance, and resource utilization compared to other meta-heuristic algorithms.

3.7. Simulated Annealing Algorithm

The SA algorithm is a stochastic global optimization method inspired by the analogy between the annealing of solids and the general approach to solving combinatorial optimization problems [

74]. The procedure can be outlined through the ensuing stages:

Stage 1: Initialize the process by assigning a suitably large initial temperature T0 > 0 and setting the annealing iteration counter k = 0. Initialize a solution x(i) and estimate its corresponding energy value.

Stage 2: Determine whether the predetermined cycle termination criteria have been fulfilled at the current temperature. If so, proceed to Stage 3. If not, randomly generate a new solution x(j) within the neighborhood N(x(i)) of the current point and compute the energy difference ΔE = E(x(j)) − E(x(i)), where E(·) denotes the energy value. If the new solution has lower energy (ΔE < 0), update x(i) = x(j). Otherwise, calculate the acceptance probability p = exp(−ΔE/Tk), the standard Metropolis rule. If p exceeds a random number n drawn uniformly from the interval (0, 1), update x(i) = x(j). In either case, return to the start of Stage 2.

Stage 3: Compute Tk+1 = d(Tk) using an annealing (cooling) equation d(Tk) and set k = k + 1. Evaluate whether the overall termination criterion is satisfied. If so, conclude the calculation and output the result. If not, return to Stage 2 for further iterations.
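The three stages can be sketched as follows for a task-to-VM assignment problem. Several details are assumptions made for illustration: energy is taken to be the makespan, the neighborhood moves a single task, acceptance uses the standard Metropolis rule p = exp(−ΔE/T), cooling is geometric with d(T) = alpha · T, and the run stops once the temperature falls below `t_min`.

```python
import math
import random

def makespan(assign, lengths, speeds):
    """Energy function (assumed): completion time of the busiest VM."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def sa_balance(lengths, speeds, t0=100.0, t_min=1e-3, alpha=0.9, inner=50, seed=0):
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    # Stage 1: initial temperature, initial solution, and its energy.
    temp = t0
    x = [rng.randrange(n_vms) for _ in range(n_tasks)]
    e = makespan(x, lengths, speeds)
    best, best_e = x[:], e
    while temp > t_min:                       # overall termination check (Stage 3)
        for _ in range(inner):                # Stage 2: fixed number of trials per temperature
            y = x[:]
            y[rng.randrange(n_tasks)] = rng.randrange(n_vms)   # neighbor of x
            e_new = makespan(y, lengths, speeds)
            delta = e_new - e
            # Accept improvements always, worse moves with probability exp(-delta / temp).
            if delta <= 0 or math.exp(-delta / temp) > rng.random():
                x, e = y, e_new
                if e < best_e:
                    best, best_e = x[:], e
        temp *= alpha                         # Stage 3: cooling, T_{k+1} = alpha * T_k
    return best, best_e
```

At high temperature almost every move is accepted, so the search roams widely; as the temperature drops, worse moves are rejected more often and the search settles into a low-makespan assignment.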

Sabar and Song [

58] propose a novel technique for load balancing, combining simulated annealing (SA) with grammatical evolution (GE). SA is effective but reliant on manual parameter tuning. To address this, the authors introduce GE to adjust SA’s cooling schedule and neighborhood structures dynamically. This adaptive approach enhances solutions by tailoring SA to the evolving search landscape. They apply this to the Google machine reassignment problem, demonstrating its superiority over state-of-the-art algorithms in achieving load balancing.

Hanine and Benlahmar [

59] focus on achieving workload balance among VMs through a two-phase method. The first phase sets operational thresholds for VMs, defining overload points. In the second phase, tasks are allocated using an enhanced version of the “simulated annealing (SA)” meta-heuristic algorithm. They notably refine SA’s acceptance probability, optimizing task allocation with fewer iterations than standard SA.

Kumar et al. [

60] introduce a simulated annealing-based framework for job scheduling to minimize execution time and ensure load balance across VMs. Implemented in CloudSim, their algorithm yields optimal solutions, outperforming algorithms like FCFS, min-min, RR, and Iterative Improvement. Notably, it significantly reduces job schedule execution times, demonstrating its effectiveness.

3.8. Biogeography-Based Optimization Algorithm

BBO is an evolutionary approach inspired by biogeography, the study of the geographical distribution of species. The algorithm has two principal operators: migration and mutation. BBO has several characteristics that set it apart from other meta-heuristic algorithms. It is notably stable and shows strong convergence properties. Its migration operator preserves and improves solutions across generations. In addition, emigration and immigration rates are adapted dynamically based on the fitness of each solution. Each individual in the population is assigned its own mutation rate, in contrast to the traditional GA, which applies a uniform mutation operator across the population. Applying a diverse range of evolutionary operators to individuals further reinforces the algorithm’s effectiveness, which has been reported to surpass that of alternative algorithms [

75].
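The migration and mutation operators can be illustrated with a short sketch. It assumes a linear, rank-based migration model (good habitats emigrate more and immigrate less), keeps a small elite unchanged, and, as a simplification of the per-individual mutation described above, applies one uniform mutation rate `p_mut`. All names and parameter values are illustrative.

```python
import random

def makespan(assign, lengths, speeds):
    """Completion time of the busiest VM for a task-to-VM assignment."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def bbo_balance(lengths, speeds, n_habitats=20, generations=100,
                p_mut=0.05, n_elite=2, seed=0):
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    cost = lambda h: makespan(h, lengths, speeds)
    pop = [[rng.randrange(n_vms) for _ in range(n_tasks)] for _ in range(n_habitats)]
    n = n_habitats
    mu = [(n - k) / n for k in range(n)]      # emigration rate by rank (best habitat first)
    lam = [1.0 - m for m in mu]               # immigration rate by rank
    for _ in range(generations):
        pop.sort(key=cost)                    # rank habitats, best first
        new_pop = [h[:] for h in pop]
        for k in range(n_elite, n):           # elite habitats are left untouched
            for d in range(n_tasks):
                if rng.random() < lam[k]:
                    # Migration: copy feature d from a habitat chosen by emigration rate.
                    src = rng.choices(range(n), weights=mu, k=1)[0]
                    new_pop[k][d] = pop[src][d]
                if rng.random() < p_mut:      # mutation: random reassignment
                    new_pop[k][d] = rng.randrange(n_vms)
        pop = new_pop
    best = min(pop, key=cost)
    return best, cost(best)
```

Poorly ranked habitats have a high immigration rate, so they are largely rebuilt from features of good habitats, while well-ranked habitats change little.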

Ghobaei-Arani [

61] introduced a resource provisioning strategy focused on workload clustering for heterogeneous cloud applications. They combine BBO and K-means clustering to classify workloads under QoS criteria. Additionally, Bayesian learning determines suitable resource provisioning actions aligned with QoS demands. Simulation results demonstrate the approach’s effectiveness in reducing delay, SLA violations, cost, and energy consumption compared to alternative methods. This confirms its superiority in optimizing cloud application execution.

Bouhank and Daoudi [

62] present the Non-dominated Ranking Biogeography Optimization (NRBBO) algorithm to reduce power consumption and resource wastage during server VM placement. Using synthetic data, they demonstrate NRBBO’s effectiveness through empirical evaluation. NRBBO excels in efficiency, convergence, and solution coverage for VM placement compared to other multi-objective approaches. This emphasizes its proficiency in enhancing optimization outcomes, convergence rates, and solution coverage for VM-related challenges.

3.9. Firefly Algorithm

FA is an efficient meta-heuristic technique that has proven effective on a variety of engineering optimization problems, as is evident in the contemporary literature. FA draws inspiration from the flashing behavior of fireflies. The algorithm generates solutions stochastically and treats them as virtual fireflies, emulating the functions that flashing serves in nature. Fireflies use their flashes for many purposes, including locating prey, attracting suitable mates, and signaling danger. This process also governs the interplay between male and female fireflies. Notably, fireflies exhibit distinct flashing patterns, determined by the rate and interval of their light emissions, which play a pivotal role in attracting mates and conveying information [

76].
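In the commonly used formulation of FA, a firefly moves toward any brighter firefly with an attractiveness that decays with distance, plus a small random step. The sketch below applies that rule to task-to-VM assignment, with brightness taken as low makespan; the continuous encoding and the parameters `beta0`, `gamma`, and `alpha` are assumptions chosen for illustration.

```python
import math
import random

def makespan(assign, lengths, speeds):
    """Completion time of the busiest VM for a task-to-VM assignment."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def firefly_balance(lengths, speeds, n_fireflies=15, iters=50,
                    beta0=1.0, gamma=0.1, alpha=0.5, seed=0):
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    clip = lambda x: min(max(x, 0.0), n_vms - 1e-9)
    decode = lambda pos: [int(x) for x in pos]       # truncate to a VM index
    cost = lambda pos: makespan(decode(pos), lengths, speeds)
    xs = [[clip(rng.uniform(0, n_vms)) for _ in range(n_tasks)]
          for _ in range(n_fireflies)]
    best = min(xs, key=cost)[:]
    for _ in range(iters):
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                if cost(xs[j]) < cost(xs[i]):        # firefly j is brighter than i
                    r2 = sum((a - b) ** 2 for a, b in zip(xs[i], xs[j]))
                    beta = beta0 * math.exp(-gamma * r2)   # attractiveness decays with distance
                    xs[i] = [clip(a + beta * (b - a) + alpha * (rng.random() - 0.5))
                             for a, b in zip(xs[i], xs[j])]
                    if cost(xs[i]) < cost(best):
                        best = xs[i][:]
    return decode(best), cost(best)
```

The absorption coefficient `gamma` controls how local the attraction is: large values make distant fireflies nearly invisible to each other, so the swarm splits into subgroups that search different regions.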

Devaraj et al. [

63] introduce FIMPSO, a novel load-balancing algorithm merging FA and Improved Multi-Objective PSO (IMPSO). FA minimizes the search space, while IMPSO refines responses. IMPSO selects the global best particle using a minimal distance criterion from a point to a line. FIMPSO achieves balanced load distribution, enhanced resource utilization, and reduced task response times. Simulations affirm FIMPSO’s superiority, yielding impressive metrics: average response time of 13.5 ms, peak CPU utilization of 98%, memory at 93%, 67% reliability, 72% throughput, and a makespan of 148, outperforming alternatives.

RM et al. [

64] present the Energy Efficient Cloud-Based Internet of Everything (EECloudIoE) framework, a pioneering effort to integrate expansive domains and provide valuable services to end-users. The architecture prioritizes energy efficiency via a two-step optimization process. Firstly, diverse IoT networks are clustered using the Wind Driven Optimization Algorithm, enhancing energy efficiency. Then, optimized cluster heads are chosen for each cluster based on FA, effectively reducing data traffic over non-clustering strategies. Rigorous comparisons against state-of-the-art approaches confirm EECloudIoE’s superiority, extending lifetimes and significantly reducing traffic burdens.

Sekaran et al. [

65] introduce the Dominant Firefly Algorithm, a novel meta-heuristic approach to optimize task distribution among cloud server VMs. The focus is improving cloud server response efficiency for enhanced mobile learning (m-learning) system accuracy. The method targets load imbalance in cloud servers to elevate m-learning user experiences. Innovations include Cloud-Structured Query Language (SQL) use and mobile device querying for seamless content delivery. Effective load-balancing techniques are emphasized, showcasing their potential to boost throughput and response times in mobile and cloud environments.

3.10. Grey Wolf Optimizer

GWO stands out as a widely referenced swarm intelligence (SI) algorithm that draws inspiration from the structured leadership and collaborative hunting dynamics that grey wolves exhibit in the natural world. Rooted in a population-based framework, this algorithm organizes the entire population based on the leadership of the top three performing wolves. The GWO algorithm has garnered extensive attention and application across diverse domains. It has proven effective in addressing a spectrum of optimization challenges. Its efficacy across such varied problem domains underscores its versatility and potential as a powerful optimization tool [

77].
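The leadership of the top three wolves can be made concrete with a short sketch. It follows the commonly used GWO update, in which every wolf moves to the average of three positions estimated from the three best wolves. The continuous encoding of assignments and all parameter values are assumptions made for this example, which also requires at least three wolves.

```python
import random

def makespan(assign, lengths, speeds):
    """Completion time of the busiest VM for a task-to-VM assignment."""
    load = [0.0] * len(speeds)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def gwo_balance(lengths, speeds, n_wolves=15, iters=100, seed=0):
    rng = random.Random(seed)
    n_tasks, n_vms = len(lengths), len(speeds)
    clip = lambda x: min(max(x, 0.0), n_vms - 1e-9)
    decode = lambda pos: [int(x) for x in pos]       # truncate to a VM index
    cost = lambda pos: makespan(decode(pos), lengths, speeds)
    xs = [[clip(rng.uniform(0, n_vms)) for _ in range(n_tasks)] for _ in range(n_wolves)]
    best = min(xs, key=cost)[:]
    for t in range(iters):
        a = 2.0 * (1 - t / iters)                    # decreases linearly from 2 to 0
        # The three best wolves (often called alpha, beta, delta) lead the pack.
        leaders = [w[:] for w in sorted(xs, key=cost)[:3]]
        for i in range(n_wolves):
            new = []
            for d in range(n_tasks):
                estimate = 0.0
                for leader in leaders:
                    coef_a = 2 * a * rng.random() - a
                    coef_c = 2 * rng.random()
                    estimate += leader[d] - coef_a * abs(coef_c * leader[d] - xs[i][d])
                new.append(clip(estimate / 3))       # average of the three estimates
            xs[i] = new
            if cost(new) < cost(best):
                best = new[:]
    return decode(best), cost(best)
```

Averaging over three leaders rather than following a single best solution is what distinguishes this update from the encircling step of WOA and tends to reduce premature convergence on one candidate.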

Gohil and Patel [

66] propose a hybrid cloud computing load-balancing strategy, combining GWO and PSO algorithms. The approach improves system performance and resource utilization equity. Compared to Harmony Search, artificial bee colony, PSO, and GWO, the hybrid strategy exhibits enhanced convergence rates and implementation simplicity. Research experiments yield promising results, suggesting its potential to advance cloud load balancing significantly. This innovative combination holds promise for optimizing cloud computing environments.

Sefati et al. [

67] utilize the GWO algorithm to ensure effective load balancing by leveraging resource reliability. The approach involves identifying available and occupied nodes using GWO. Computation follows to establish individual thresholds and fitness functions for each node. The method is evaluated through CloudSim-based simulations, demonstrating its superiority over alternatives. It reduces costs and response times, optimizing resource allocation and load balancing. The approach generates optimal solutions, establishing it as a robust solution for cloud-based load-balancing challenges.

4. Discussion

Nature-inspired meta-heuristic algorithms have gained significant attention in various optimization and decision-making problems, including load balancing in cloud computing. These algorithms draw inspiration from natural processes and behaviors observed in biological, physical, and social systems to develop optimization techniques that efficiently solve complex problems. Regarding load balancing in cloud computing, nature-inspired meta-heuristic algorithms offer several advantages and play a crucial role.

Complex optimization: Load balancing in cloud computing involves distributing tasks and workloads across multiple servers or VMs to ensure efficient resource utilization and reduced response times. This task is often a complex optimization problem that requires finding optimal or near-optimal solutions. Nature-inspired algorithms provide powerful optimization techniques to tackle these challenges.

Global search: Cloud environments can have numerous variables and constraints, making it challenging to find the best solution. Nature-inspired algorithms, such as genetic algorithms, particle swarm optimization, and ant colony optimization, are designed to perform global searches in the solution space, helping to find solutions that traditional algorithms might miss.

Flexibility and adaptability: Nature-inspired algorithms are often designed to adapt and evolve, mimicking the ability of natural systems to adapt to changing environments. In cloud computing, workloads and resource availability can vary dynamically. These algorithms can help adapt load-balancing strategies to changing conditions effectively.

Parallelism and scalability: Cloud environments are inherently parallel and scalable. Many nature-inspired algorithms can be easily parallelized, allowing them to leverage the distributed nature of cloud computing resources. This makes them well-suited for addressing load-balancing challenges in large-scale cloud environments.

Multi-objective optimization: Load balancing often involves optimizing multiple objectives simultaneously, such as minimizing response time, maximizing resource utilization, and minimizing energy consumption. Nature-inspired algorithms can handle multi-objective optimization, allowing cloud administrators to find trade-offs among different goals.

Dynamic nature: Some nature-inspired algorithms, like particle swarm optimization, model candidate solutions as particles that move continuously through the solution space. This iterative movement suits load balancing in cloud computing, where workloads and resources change over time.

Exploration and exploitation: Nature-inspired algorithms strike a balance between exploration (searching for new and unexplored areas of the solution space) and exploitation (refining solutions in promising regions). This is vital for finding optimal or near-optimal solutions to load-balancing problems.

Heuristic solutions: Load-balancing problems are often NP-hard, meaning that no polynomial-time algorithm is known for finding an optimal solution, so exact methods become impractical for large instances. Nature-inspired algorithms provide heuristic solutions that can efficiently find good solutions even for highly complex and large-scale load-balancing instances.

Domain-agnostic: Nature-inspired algorithms are generally domain-agnostic and can be applied to various problems, including load balancing in cloud computing. They can adapt to different system architectures and characteristics.
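Several of the points above (global search, multi-objective optimization, and the balance between exploration and exploitation) can be illustrated with a minimal particle swarm optimization sketch for assigning tasks to VMs. This is an illustration under stated assumptions, not a method taken from any of the surveyed papers: the weighted-sum fitness, the weights 0.7 and 0.3, the rounding-based decoding of continuous positions, and all function and parameter names are hypothetical choices made for the example.

```python
import random

def fitness(assignment, task_len, vm_speed, w_time=0.7, w_balance=0.3):
    """Weighted sum of makespan and load imbalance (lower is better).
    The two weights are illustrative assumptions."""
    finish = [0.0] * len(vm_speed)
    for task, vm in enumerate(assignment):
        finish[vm] += task_len[task] / vm_speed[vm]
    makespan = max(finish)
    imbalance = makespan - min(finish)
    return w_time * makespan + w_balance * imbalance

def decode(position, n_vms):
    """Map a continuous particle position to one VM index per task."""
    return [min(n_vms - 1, max(0, int(x))) for x in position]

def pso(task_len, vm_speed, n_particles=20, iters=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    n, m = len(task_len), len(vm_speed)

    def score(p):
        return fitness(decode(p, m), task_len, vm_speed)

    # Random initial positions spread particles over the whole search space.
    pos = [[rng.uniform(0, m) for _ in range(n)] for _ in range(n_particles)]
    vel = [[0.0] * n for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_f = [score(p) for p in pos]
    g = min(range(n_particles), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(n):
                r1, r2 = rng.random(), rng.random()
                # Inertia keeps exploring; the two attraction terms exploit
                # the particle's own best and the swarm's best positions.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(m - 1e-9, max(0.0, pos[i][d] + vel[i][d]))
            f = score(pos[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = pos[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = pos[i][:], f
    return decode(gbest, m), gbest_f
```

For example, `pso([4, 8, 2, 6, 10, 3], [1.0, 2.0, 1.0])` returns a task-to-VM assignment together with its fitness value. Changing `w_time` and `w_balance` shifts the trade-off between response time and balance, which is one simple way to express multiple objectives in a single score.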

Meta-heuristic algorithms can also use a simpler heuristic algorithm to find an initial solution, which then serves as the starting point for further optimization. For example, online and portfolio scheduling can be used to determine the initial solution for meta-heuristic algorithms. Online scheduling algorithms make decisions as tasks arrive, using the current state of the system to assign tasks to servers. In addition to tracking resource availability and workload conditions, these algorithms order tasks with the goal of achieving the best performance on metrics such as response time and completion rate. Examples of online scheduling techniques include:

Earliest Deadline First (EDF): Tasks are prioritized according to their deadlines, with the earliest deadlines receiving the highest priority. This strategy is effective in time-sensitive situations where meeting deadlines is essential.

Least Laxity First (LLF): Similar to EDF, LLF orders jobs by their laxity (also called slack time), which is the time remaining before a job's deadline minus its remaining execution time. Jobs with the least laxity receive the highest priority, which promotes timely completion.

First-Fit Decreasing (FFD): Tasks are sorted in descending order of size, and each task is assigned to the first resource with enough capacity to accommodate it. This heuristic packs jobs efficiently within limited resources, reduces fragmentation, and improves resource use, although it does not guarantee an optimal packing.

Best-Fit Decreasing (BFD): Like FFD, BFD sorts tasks in descending order of size, but it assigns each task to the resource that would have the least remaining capacity after the assignment. The objective of this strategy is to reduce the amount of unused space and improve packing efficiency.

Greedy algorithms: These algorithms make locally optimal decisions at each stage in the expectation of approaching a global optimum. For instance, a greedy load balancer may allocate each incoming job to the server with the lowest current load, with the objective of gradually achieving load balance.
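The heuristics listed above can be sketched in a few lines each. The following is a minimal illustration, assuming tasks are described by hypothetical `deadline` and `remaining` fields, that every bin (resource) has the same capacity, and that no task is larger than one bin; the function names are chosen for this example only.

```python
def edf_order(tasks):
    """Earliest Deadline First: sort by absolute deadline."""
    return sorted(tasks, key=lambda t: t["deadline"])

def llf_order(tasks, now=0):
    """Least Laxity First: laxity = deadline - now - remaining run time."""
    return sorted(tasks, key=lambda t: t["deadline"] - now - t["remaining"])

def fit_decreasing(sizes, capacity, best_fit=False):
    """FFD (best_fit=False) or BFD (best_fit=True) bin packing.
    Returns the free capacity left in each opened bin.
    Assumes every size is <= capacity."""
    free = []
    for size in sorted(sizes, reverse=True):
        candidates = [i for i, f in enumerate(free) if f >= size]
        if not candidates:
            free.append(capacity)      # open a new bin
            i = len(free) - 1
        elif best_fit:
            i = min(candidates, key=lambda j: free[j])  # tightest fit
        else:
            i = candidates[0]          # first bin that fits
        free[i] -= size
    return free

def greedy_least_loaded(task_lens, n_servers):
    """Assign each arriving task to the currently least-loaded server."""
    loads = [0] * n_servers
    assignment = []
    for length in task_lens:
        s = min(range(n_servers), key=loads.__getitem__)
        loads[s] += length
        assignment.append(s)
    return assignment, loads
```

With tasks A (deadline 10, remaining 2) and B (deadline 12, remaining 9), EDF runs A first while LLF runs B first, because B has a laxity of 3 against 8 for A. For sizes `[12, 9, 9, 2]` and capacity 20, FFD leaves free capacities `[6, 2]` and BFD leaves `[8, 0]`. An assignment produced by `greedy_least_loaded` is one plausible candidate for the initial solution that a meta-heuristic then refines.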

Portfolio scheduling entails managing a diverse set of scheduling policies and dynamically selecting the most appropriate policy for the current state of the system. This technique capitalizes on the advantages of different policies, adjusting to fluctuating workloads and the availability of resources. The main characteristics of portfolio scheduling are:

Dynamic policy selection: The scheduler assesses many policies in real time and selects the one that most effectively aligns with the present workload and system condition. This flexibility improves efficiency and the usage of resources.

Policy portfolio: The portfolio comprises a varied range of scheduling policies, including round-robin, least-connection, and FCFS. This enables the scheduler to seamlessly transition between policies as required in order to optimize performance.
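The two characteristics above can be sketched as a selector that evaluates every policy in the portfolio on the pending tasks and keeps the one with the best score. This is a simplified sketch under assumptions: makespan is used as the only selection criterion, the portfolio holds just two policies, and the names `select_policy` and `PORTFOLIO` are hypothetical.

```python
def round_robin(tasks, n_servers):
    """Cycle through servers regardless of task length."""
    return [i % n_servers for i in range(len(tasks))]

def least_loaded(tasks, n_servers):
    """Send each task to the server with the lowest accumulated load."""
    loads = [0.0] * n_servers
    assignment = []
    for length in tasks:
        s = min(range(n_servers), key=loads.__getitem__)
        loads[s] += length
        assignment.append(s)
    return assignment

def makespan(tasks, assignment, n_servers):
    """Completion time of the busiest server."""
    loads = [0.0] * n_servers
    for length, s in zip(tasks, assignment):
        loads[s] += length
    return max(loads)

PORTFOLIO = {"round_robin": round_robin, "least_loaded": least_loaded}

def select_policy(tasks, n_servers, portfolio=PORTFOLIO):
    """Simulate each policy on the pending tasks and pick the best one."""
    scores = {name: makespan(tasks, policy(tasks, n_servers), n_servers)
              for name, policy in portfolio.items()}
    best = min(scores, key=scores.get)
    return best, scores
```

For the pending tasks `[9, 1, 1, 1]` on two servers, round-robin yields a makespan of 10 and the least-loaded policy yields 9, so the selector picks `least_loaded`. Calling `select_policy` again whenever the workload changes gives the dynamic switching described above; a practical scheduler would likely also weigh the cost of evaluating each policy.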

The comparison of meta-heuristic algorithms for cloud load balancing shows that each approach has its own inherent advantages and limitations. The ACO algorithm is well suited to complicated routing and resource allocation problems, which it manages through pheromone-based communication, but it converges slowly and is highly sensitive to its parameter settings. The ABC algorithm explores the solution space well and can escape local optima; however, it is more effective at coarse tuning than at fine-tuning and offers no guarantee of finding the global optimum. GA generalizes well and is versatile across different types of problems, but it may require substantial computational resources and can suffer from problem-specific premature convergence.

PSO exhibits a high convergence rate and is easy to implement, yet its primary disadvantage is that it can become stuck at a local optimum. BA combines stochastic, adaptive search with frequency modulation, but it can struggle in noisy conditions and complex state spaces. WOA models the social behavior of humpback whales to explore and exploit the search space, but it may converge slowly and is prone to premature convergence. SA offers a well-founded, temperature-based exploration mechanism but takes longer to converge than other meta-heuristic algorithms. The migration and mutation operators of the BBO algorithm can search for solutions efficiently; however, BBO may face problems with computational complexity and initial parameter setting. While FA demonstrates strong global search properties and communicates through varying light intensities, it is sensitive to poor parameter tuning and prone to premature convergence. GWO provides a structured and precise search process that models grey wolf exploration and exploitation, but it may converge slowly in certain situations and requires parameter tuning. In any case, the choice of algorithm depends on the specific requirements of the application, the characteristics of the task to be solved, and the capacity of the available computational resources.

5. Open Issues and Future Directions

While nature-inspired algorithms excel at optimization, their scalability to handle large-scale cloud environments with numerous resources and workloads remains challenging. Adapting these algorithms to handle the increased complexity becomes crucial as cloud systems grow. In addition, cloud environments are highly dynamic, with workload fluctuations and resource variations. Adapting nature-inspired algorithms to real-time changes and ensuring they can effectively respond to dynamic load balancing requirements is an ongoing challenge. Many load-balancing scenarios involve conflicting objectives, such as response time, energy efficiency, and resource utilization. Designing nature-inspired algorithms that efficiently handle multi-objective optimization in cloud load balancing remains an active research area. Combining multiple nature-inspired algorithms or integrating them with traditional optimization techniques and machine learning approaches could lead to more robust and effective load-balancing solutions. However, finding the right balance between these approaches and optimizing their interaction is still an open question. Nature-inspired algorithms often have several parameters that need to be configured. Finding optimal parameter values for specific cloud load-balancing scenarios is a challenge, and automated methods for parameter tuning are an ongoing area of research.

Future research could focus on developing adaptive nature-inspired algorithms that can autonomously adjust their behavior based on real-time changes in workload, resource availability, and system conditions. With the rise of edge and fog computing, extending nature-inspired algorithms to optimize load balancing in distributed environments that span cloud data centers and edge devices presents a new frontier. Integrating machine learning techniques with nature-inspired algorithms could enhance their performance in learning from historical data and predicting future load patterns, leading to more proactive load-balancing strategies. As energy efficiency becomes a critical concern in cloud computing, future research could focus on designing nature-inspired algorithms that explicitly consider energy consumption in load-balancing decisions.

Load-balancing strategies should consider security and privacy concerns. Future work could explore how nature-inspired algorithms can contribute to load-balancing solutions that ensure data confidentiality, integrity, and compliance with privacy regulations. Quantum-inspired meta-heuristic algorithms are an emerging area. Exploring their potential for load balancing in cloud computing could open new avenues for tackling complex optimization challenges. While many nature-inspired algorithms are extensively studied in simulations, translating their benefits into real-world cloud environments with practical constraints and considerations is an important next step. Investigating collaborative approaches where multiple cloud providers work together to balance workloads and share resources efficiently could lead to more holistic and effective load-balancing strategies.

6. Conclusions

This review examined load balancing in cloud computing, with an emphasis on nature-inspired meta-heuristic techniques. Load-balancing algorithms based on swarming, genetic evolution, and foraging have demonstrated the potential to reinvent load-balancing solutions by exploiting collective intelligence. This work provided a comprehensive study of various nature-inspired meta-heuristic algorithms for load balancing, along with their strengths and weaknesses. One of its key contributions is the comparison of the algorithms, both individually and as a group, which provides insight into the circumstances in which each algorithm excels. The practical aspects of using such load-balancing algorithms were illustrated through an evaluation of response time, throughput, resource utilization, and scalability. Load-balancing schemes built on nature-inspired meta-heuristic algorithms show great promise as cloud computing advances and influences other technologies. A common characteristic of these algorithms is that they bring principles observed in nature into computing, which serves to enhance resource management.