Material Attribute Estimation as Part of Telecommunication Augmented Reality, Virtual Reality, and Mixed Reality System: Systematic Review

Abstract

1. Introduction

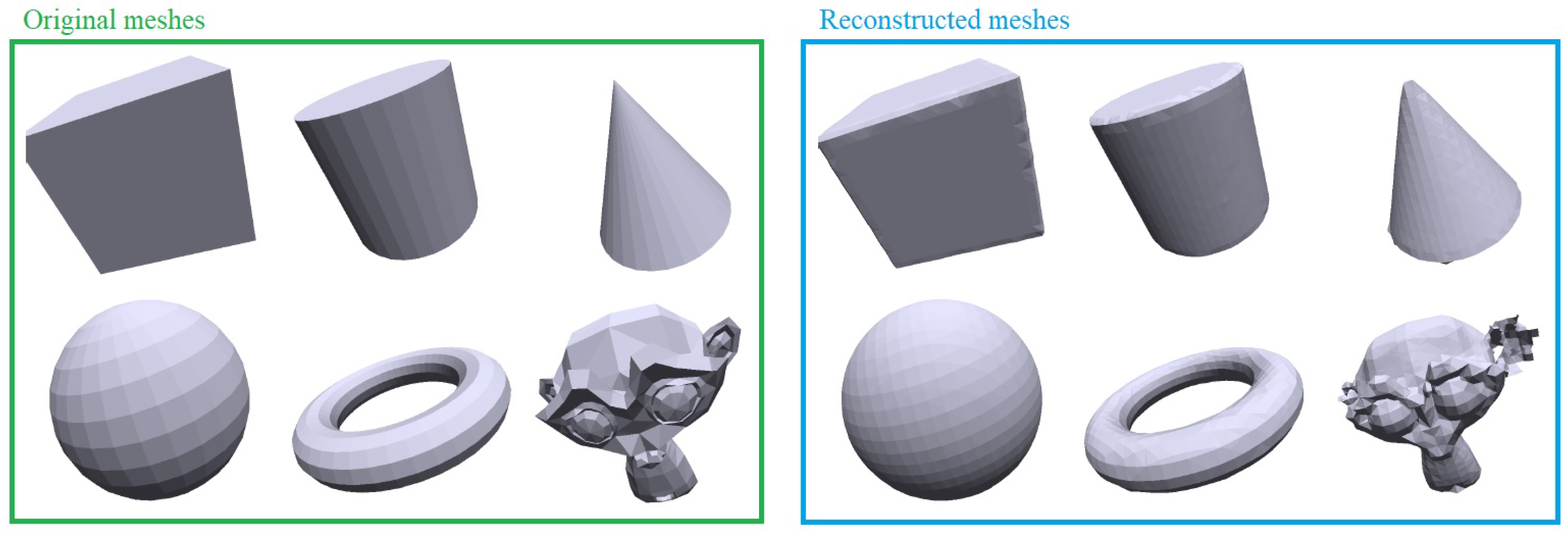

2. Object Representation

3. Visual Attribute Grouping and Estimation

3.1. Material Visual Attributes: Reflectance-Related

3.2. Material Visual Attributes: Surface Property Estimation

3.3. Visual Material Attributes: Texture Analysis

3.4. Material Datasets

4. Conceptional Realization through Tactile Internet

4.1. Realization

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| AE | AutoEncoder |
| AR | Augmented reality |
| BRDF | Bidirectional Reflectance Distribution Function |
| CNN | Convolutional neural network |
| DDM | Denoising diffusion model |
| FFT | Fast Fourier transform |
| GAN | Generative Adversarial Network |
| GMM | Gaussian Mixture Model |
| HandDiff | Hand motion synthesis network |
| KLD-adaptive filter | Kalman adaptive filter |
| MAE | Material attribute estimation |
| MC | Monte Carlo |
| MLP | Multilayer Perceptron |
| MR | Mixed reality |
| NeILF | Neural incident light field |
| NeRF | Neural radiance field |
| OBB | Oriented bounding box |
| PCL | Point Cloud Library |
| SDF | Signed-distance field |
| SG | Spherical Gaussian |
| SSD | Single Shot Multi-Box Detector |
| SVBRDF | Spatially Varying Bidirectional Reflectance Distribution Function |
| TI | Tactile Internet |
| TIM | Tactile Internet Metric |
| ToF | Time of Flight |
| TrajDiff | Trajectory synthesis algorithm |
| UDP | User Datagram Protocol |
| VR | Virtual reality |
| YOLO | You Only Look Once |

References

1. Corsini, M.; Dellepiane, M.; Ponchio, F.; Scopigno, R. Image-to-geometry registration: A mutual information method exploiting illumination-related geometric properties. Comput. Graph. Forum 2009, 28, 1755–1764.
2. Vineet, V.; Rother, C.; Torr, P. Higher order priors for joint intrinsic image, objects, and attributes estimation. Adv. Neural Inf. Process. Syst. 2013, 26. Available online: https://proceedings.neurips.cc/paper_files/paper/2013/file/8dd48d6a2e2cad213179a3992c0be53c-Paper.pdf (accessed on 18 June 2024).
3. Cellini, C.; Kaim, L.; Drewing, K. Visual and haptic integration in the estimation of softness of deformable objects. i-Perception 2013, 4, 516–531.
4. Yoon, Y.; Moon, D.; Chin, S. Fine tactile representation of materials for virtual reality. J. Sens. 2020, 2020, 1–8.
5. Li, Z.; Weng, L.; Zhang, Y.; Liu, K.; Liu, Y. Texture recognition based on magnetostrictive tactile sensor array and convolutional neural network. AIP Adv. 2023, 13, 105302.
6. Barreiro, H.; Torres, J.; Otaduy, M.A. Natural tactile interaction with virtual clay. In Proceedings of the 2021 IEEE World Haptics Conference (WHC), Montreal, QC, Canada, 6–9 July 2021; pp. 403–408.
7. Boss, M.; Braun, R.; Jampani, V.; Barron, J.T.; Liu, C.; Lensch, H. NeRD: Neural reflectance decomposition from image collections. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 12684–12694.
8. Chen, W.; Litalien, J.; Gao, J.; Wang, Z.; Fuji Tsang, C.; Khamis, S.; Litany, O.; Fidler, S. DIB-R++: Learning to predict lighting and material with a hybrid differentiable renderer. Adv. Neural Inf. Process. Syst. 2021, 34, 22834–22848.
9. Zhang, J.; Yao, Y.; Li, S.; Liu, J.; Fang, T.; McKinnon, D.; Tsin, Y.; Quan, L. NeILF++: Inter-Reflectable Light Fields for Geometry and Material Estimation. arXiv 2023, arXiv:2303.17147.
10. Kroep, K.; Gokhale, V.; Simha, A.; Prasad, R.V.; Rao, V.S. TIM: A Novel Quality of Service Metric for Tactile Internet. In Proceedings of the ACM/IEEE 14th International Conference on Cyber-Physical Systems (with CPS-IoT Week 2023), San Antonio, TX, USA, 9–12 May 2023; pp. 199–208.
11. Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2023, 82, 9243–9275.
12. Richardt, C.; Tompkin, J.; Wetzstein, G. Capture, reconstruction, and representation of the visual real world for virtual reality. In Real VR–Immersive Digital Reality: How to Import the Real World into Head-Mounted Immersive Displays; Springer: Berlin/Heidelberg, Germany, 2020; pp. 3–32.
13. Bargmann, S.; Klusemann, B.; Markmann, J.; Schnabel, J.E.; Schneider, K.; Soyarslan, C.; Wilmers, J. Generation of 3D representative volume elements for heterogeneous materials: A review. Prog. Mater. Sci. 2018, 96, 322–384.
14. Zeng, X.; Vahdat, A.; Williams, F.; Gojcic, Z.; Litany, O.; Fidler, S.; Kreis, K. LION: Latent point diffusion models for 3D shape generation. arXiv 2022, arXiv:2210.06978.
15. Hasselgren, J.; Hofmann, N.; Munkberg, J. Shape, light, and material decomposition from images using Monte Carlo rendering and denoising. Adv. Neural Inf. Process. Syst. 2022, 35, 22856–22869.
16. Wu, H.; Hu, Z.; Li, L.; Zhang, Y.; Fan, C.; Yu, X. NeFII: Inverse Rendering for Reflectance Decomposition with Near-Field Indirect Illumination. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 4295–4304.
17. Liang, Y.; Wakaki, R.; Nobuhara, S.; Nishino, K. Multimodal material segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19800–19808.
18. Achlioptas, P.; Diamanti, O.; Mitliagkas, I.; Guibas, L. Learning representations and generative models for 3D point clouds. In Proceedings of the International Conference on Machine Learning PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 40–49.
19. Sharma, P.; Philip, J.; Gharbi, M.; Freeman, W.T.; Durand, F.; Deschaintre, V. Materialistic: Selecting Similar Materials in Images. arXiv 2023, arXiv:2305.13291.
20. Lagunas, M.; Malpica, S.; Serrano, A.; Garces, E.; Gutierrez, D.; Masia, B. A similarity measure for material appearance. arXiv 2019, arXiv:1905.01562.
21. Baars, T. Estimating the Mass of an Object from Its Point Cloud for Tactile Internet. Bachelor’s Thesis, Delft University of Technology, Delft, The Netherlands, 2022.
22. Standley, T.; Sener, O.; Chen, D.; Savarese, S. image2mass: Estimating the mass of an object from its image. In Proceedings of the Conference on Robot Learning PMLR, Mountain View, CA, USA, 13–15 November 2017; pp. 324–333.
23. Armeni, I.; He, Z.Y.; Gwak, J.; Zamir, A.R.; Fischer, M.; Malik, J.; Savarese, S. 3D scene graph: A structure for unified semantics, 3D space, and camera. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5664–5673.
24. Tavazza, F.; DeCost, B.; Choudhary, K. Uncertainty prediction for machine learning models of material properties. ACS Omega 2021, 6, 32431–32440.
25. Sun, C.; Cai, G.; Li, Z.; Yan, K.; Zhang, C.; Marshall, C.; Huang, J.B.; Zhao, S.; Dong, Z. Neural-PBIR reconstruction of shape, material, and illumination. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 18046–18056.
26. Li, X.; Dong, Y.; Peers, P.; Tong, X. Modeling surface appearance from a single photograph using self-augmented convolutional neural networks. ACM Trans. Graph. (ToG) 2017, 36, 1–11.
27. Wu, T.; Li, Z.; Yang, S.; Zhang, P.; Pan, X.; Wang, J.; Lin, D.; Liu, Z. HyperDreamer: Hyper-realistic 3D content generation and editing from a single image. In Proceedings of the SIGGRAPH Asia 2023 Conference Papers, Sydney, Australia, 12–15 December 2023; pp. 1–10.
28. Boss, M.; Jampani, V.; Kim, K.; Lensch, H.; Kautz, J. Two-shot spatially-varying BRDF and shape estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3982–3991.
29. Rodriguez-Pardo, C.; Kazatzis, K.; Lopez-Moreno, J.; Garces, E. NeuBTF: Neural fields for BTF encoding and transfer. Comput. Graph. 2023, 114, 239–246.
30. Huang, Y.H.; Cao, Y.P.; Lai, Y.K.; Shan, Y.; Gao, L. NeRF-Texture: Texture synthesis with neural radiance fields. In Proceedings of the ACM SIGGRAPH 2023 Conference Proceedings, Los Angeles, CA, USA, 6–10 August 2023; pp. 1–10.
31. Nagai, T.; Matsushima, T.; Koida, K.; Tani, Y.; Kitazaki, M.; Nakauchi, S. Temporal properties of material categorization and material rating: Visual vs non-visual material features. Vis. Res. 2015, 115, 259–270.
32. Schwartz, G.; Nishino, K. Recognizing material properties from images. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 1981–1995.
33. Chen, Y.; Chen, R.; Lei, J.; Zhang, Y.; Jia, K. TANGO: Text-driven photorealistic and robust 3D stylization via lighting decomposition. Adv. Neural Inf. Process. Syst. 2022, 35, 30923–30936.
34. Zhou, X.; Hasan, M.; Deschaintre, V.; Guerrero, P.; Sunkavalli, K.; Kalantari, N.K. TileGen: Tileable, controllable material generation and capture. In Proceedings of the SIGGRAPH Asia 2022 Conference Papers, Daegu, Republic of Korea, 6–9 December 2022; pp. 1–9.
35. Liao, C.; Sawayama, M.; Xiao, B. Crystal or jelly? Effect of color on the perception of translucent materials with photographs of real-world objects. J. Vis. 2022, 22, 6.
36. Yuan, W.; Wang, S.; Dong, S.; Adelson, E. Connecting look and feel: Associating the visual and tactile properties of physical materials. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5580–5588.
37. Deschaintre, V.; Gutierrez, D.; Boubekeur, T.; Guerrero-Viu, J.; Masia, B. The Visual Language of Fabrics; Technical Report. ACM Trans. Graph. 2023, 42, 4.
38. Su, S.; Heide, F.; Swanson, R.; Klein, J.; Callenberg, C.; Hullin, M.; Heidrich, W. Material classification using raw time-of-flight measurements. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3503–3511.
39. Xue, J.; Zhang, H.; Dana, K.; Nishino, K. Differential angular imaging for material recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 764–773.
40. Fleming, R.W. Visual perception of materials and their properties. Vis. Res. 2014, 94, 62–75.
41. Zhou, X.; Hasan, M.; Deschaintre, V.; Guerrero, P.; Hold-Geoffroy, Y.; Sunkavalli, K.; Kalantari, N.K. PhotoMat: A material generator learned from single flash photos. In Proceedings of the ACM SIGGRAPH 2023 Conference Proceedings, Los Angeles, CA, USA, 6–10 August 2023; pp. 1–11.
42. Rodriguez-Pardo, C.; Dominguez-Elvira, H.; Pascual-Hernandez, D.; Garces, E. UMat: Uncertainty-Aware Single Image High Resolution Material Capture. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5764–5774.
43. Vecchio, G.; Deschaintre, V. MatSynth: A Modern PBR Materials Dataset. arXiv 2024, arXiv:2401.06056.
44. Liu, I.; Chen, L.; Fu, Z.; Wu, L.; Jin, H.; Li, Z.; Wong, C.M.R.; Xu, Y.; Ramamoorthi, R.; Xu, Z.; et al. OpenIllumination: A multi-illumination dataset for inverse rendering evaluation on real objects. Adv. Neural Inf. Process. Syst. 2024, 36.
45. Stuijt Giacaman, W. Efficient Meshes from Point Clouds for Tactile Internet. Bachelor’s Thesis, Delft University of Technology, Delft, The Netherlands, 2022.
46. Yang, H. Acquiring Material Properties of Objects for Tactile Simulation through Point Cloud Scans. Bachelor’s Thesis, Delft University of Technology, Delft, The Netherlands, 2022.
47. Holland, O.; Steinbach, E.; Prasad, R.V.; Liu, Q.; Dawy, Z.; Aijaz, A.; Pappas, N.; Chandra, K.; Rao, V.S.; Oteafy, S.; et al. The IEEE 1918.1 “tactile internet” standards working group and its standards. Proc. IEEE 2019, 107, 256–279.
48. Shimada, S.; Mueller, F.; Bednarik, J.; Doosti, B.; Bickel, B.; Tang, D.; Golyanik, V.; Taylor, J.; Theobalt, C.; Beeler, T. MACS: Mass conditioned 3D hand and object motion synthesis. arXiv 2023, arXiv:2312.14929.
49. Chen, Y. Tracking Physics: A Virtual Platform for 3D Object Tracking in Tactile Internet Applications. Bachelor’s Thesis, Delft University of Technology, Delft, The Netherlands, 2023.
50. Bassetti, D.; Brechet, Y.; Ashby, M. Estimates for material properties. II. The method of multiple correlations. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 1998, 454, 1323–1336.
51. Davis, A.; Bouman, K.L.; Chen, J.G.; Rubinstein, M.; Durand, F.; Freeman, W.T. Visual vibrometry: Estimating material properties from small motion in video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5335–5343.
52. Papastamatiou, K.; Sofos, F.; Karakasidis, T.E. Calculating material properties with purely data-driven methods: From clusters to symbolic expressions. In Proceedings of the 12th Hellenic Conference on Artificial Intelligence, Corfu, Greece, 7–9 September 2022; pp. 1–9.
53. Danaci, E.G.; Ikizler-Cinbis, N. Low-level features for visual attribute recognition: An evaluation. Pattern Recognit. Lett. 2016, 84, 185–191.
54. Farkas, L.; Vanclooster, K.; Erdelyi, H.; Sevenois, R.; Lomov, S.V.; Naito, T.; Urushiyama, Y.; Van Paepegem, W. Virtual material characterization process for composite materials: An industrial solution. In Proceedings of the 17th European Conference on Composite Materials, Munich, Germany, 26–30 June 2016; pp. 26–30.
55. Takahashi, K.; Tan, J. Deep visuo-tactile learning: Estimation of tactile properties from images. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8951–8957.
56. Luo, S.; Bimbo, J.; Dahiya, R.; Liu, H. Robotic tactile perception of object properties: A review. Mechatronics 2017, 48, 54–67.
57. Choi, M.H.; Wilber, S.C.; Hong, M. Estimating material properties of deformable objects by considering global object behavior in video streams. Multimed. Tools Appl. 2015, 74, 3361–3375.
58. Trémeau, A.; Xu, S.; Muselet, D. Deep Learning for Material recognition: Most recent advances and open challenges. arXiv 2020, arXiv:2012.07495.
59. Fu, H.; Jia, R.; Gao, L.; Gong, M.; Zhao, B.; Maybank, S.; Tao, D. 3D-FUTURE: 3D furniture shape with texture. Int. J. Comput. Vis. 2021, 129, 3313–3337.
60. Ahmadabadi, A.A.; Jafari, H.; Shoorian, S.; Moradi, Z. The application of artificial neural network in material identification by multi-energy photon attenuation technique. Nucl. Instruments Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2023, 1051, 168203.
61. Han, X.; Wang, Q.; Wang, Y. Ball Tracking Based on Multiscale Feature Enhancement and Cooperative Trajectory Matching. Appl. Sci. 2024, 14, 1376.

| Authors | Geometric Shape Estimation | Material Attribute Estimation |
|---|---|---|
| Bargmann et al. [13] | Experimental and computational methods (serial sectioning, tomography, simulation) | N/A |
| Zeng et al. [14] | Joint optimization with physically based rendering | Physically based material model |
| Chen et al. [8] | Differentiable rendering framework (deferred shading) | Monte Carlo integration, spherical Gaussians |
| Liang et al. [17] | Imaging modalities (RGB, polarization, NIR) | Behavior analysis of materials (reflection, refraction, absorption) |
| Achlioptas et al. [18] | Deep autoencoder network, generative models (GANs, GMMs) | N/A |
| Sharma et al. [19] | Multi-scale encoder trained on synthetic renderings | Similarity to the material at the query pixel location |
| Lagunas et al. [20] | Deep learning architecture with a novel loss function | Human similarity judgments |
| Baars [21] | Surface meshing of a point cloud, neural network towers | N/A |
| Richardt et al. [12] | Geometric modeling, mesh generation, surface reconstruction | Physically based material model |
| Zhang et al. [9] | Signed distance field representation, BRDF field, neural incident light fields | Texture datasets, fabric attribute understanding |
| Corsini et al. [1] | Multimodal image registration, scene understanding, object recognition | Reflectance, depth, illumination terms optimization |
| Yoon et al. [4] | Image filtering techniques (fast Fourier transform) | Vibration modeling based on texture patterns |
| Standley et al. [22] | Image-based mass estimation | N/A |
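The comparison above lists Monte Carlo integration and spherical Gaussians (SGs) as the material and lighting machinery of Chen et al. [8]. The sketch below is not that method; it only illustrates why SGs are attractive. An SG lobe G(v) = μ·exp(λ(v·ξ − 1)), with amplitude μ, sharpness λ, and unit axis ξ, integrates over the sphere in closed form to 2πμ/λ·(1 − e^(−2λ)), whereas a generic integrand has to be estimated by Monte Carlo sampling. The lobe parameterization and all function names are illustrative assumptions.

```python
import math
import random


def sg_eval(v, axis, sharpness, amplitude):
    """Spherical Gaussian lobe G(v) = amplitude * exp(sharpness * (dot(v, axis) - 1)).

    v and axis are assumed to be unit 3-vectors given as tuples.
    """
    cos_angle = sum(a * b for a, b in zip(v, axis))
    return amplitude * math.exp(sharpness * (cos_angle - 1.0))


def sg_integral(sharpness, amplitude):
    """Closed-form integral of one SG lobe over the whole unit sphere."""
    return 2.0 * math.pi * amplitude / sharpness * (1.0 - math.exp(-2.0 * sharpness))


def sample_uniform_sphere(rng):
    """Uniformly distributed unit direction (pdf = 1 / (4 * pi))."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)


def mc_sphere_integral(f, n_samples, seed=0):
    """Monte Carlo estimate of the integral of f over the sphere: mean of f / pdf."""
    rng = random.Random(seed)
    total = sum(f(sample_uniform_sphere(rng)) for _ in range(n_samples))
    return 4.0 * math.pi * total / n_samples


if __name__ == "__main__":
    axis = (0.0, 0.0, 1.0)
    exact = sg_integral(sharpness=5.0, amplitude=1.0)
    estimate = mc_sphere_integral(lambda v: sg_eval(v, axis, 5.0, 1.0), 100_000)
    print(f"closed form: {exact:.4f}  Monte Carlo: {estimate:.4f}")
```

With 10^5 uniform samples the Monte Carlo estimate should land within a few percent of the closed form, while the SG formula gives the exact value at constant cost; this is the practical reason SG mixtures are used to approximate lighting and reflectance lobes in differentiable renderers.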

| Shape | 3D Model | Geometry | Surface Area | Volume |
|---|---|---|---|---|
| Sphere |  | 1 curved surface, 0 edges, 0 vertices |  |  |
| Cube |  | 6 faces, 12 edges, 8 vertices |  |  |
| Cylinder |  | 2 faces, 1 curved surface, 2 edges, 0 vertices |  |  |
| Pyramid |  | 4 faces, 6 edges, 4 vertices |  |  |
| Cone |  | 1 face, 1 curved surface, 1 edge, 0 vertices |  |  |
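The standard surface-area and volume formulas for the primitives in the shape table above can be written as a short sketch, together with Euler's formula V − E + F = 2, which the polyhedral rows satisfy (cube: 8 − 12 + 6; pyramid: 4 − 6 + 4). The sketch assumes that the "Pyramid" row, with 4 faces, 6 edges, and 4 vertices, is a regular tetrahedron of edge length a; the function names are hypothetical.

```python
import math

# Each function returns (surface_area, volume) for the given dimensions.


def sphere(r):
    return 4.0 * math.pi * r**2, 4.0 / 3.0 * math.pi * r**3


def cube(a):
    return 6.0 * a**2, a**3


def cylinder(r, h):
    # two circular faces plus the lateral (curved) surface
    return 2.0 * math.pi * r * (r + h), math.pi * r**2 * h


def cone(r, h):
    # one circular base plus the lateral surface with slant height sqrt(r^2 + h^2)
    slant = math.hypot(r, h)
    return math.pi * r * (r + slant), math.pi * r**2 * h / 3.0


def regular_tetrahedron(a):
    # assumption: the 4-face "pyramid" is regular, i.e. 4 equilateral triangles of edge a
    return math.sqrt(3.0) * a**2, a**3 / (6.0 * math.sqrt(2.0))


def euler_characteristic(vertices, edges, faces):
    # equals 2 for any convex polyhedron
    return vertices - edges + faces


if __name__ == "__main__":
    for name, (area, volume) in [("sphere", sphere(1.0)), ("cone", cone(3.0, 4.0))]:
        print(f"{name}: area={area:.3f}, volume={volume:.3f}")
```

Multiplying such a volume by an assumed density gives a first-order mass estimate, which is the quantity that image2mass [22] predicts directly from images; choosing the density for a given material is outside this sketch.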

| Attribute Group | Attribute | Estimation Methods | Evaluation Techniques |
|---|---|---|---|
| Reflectance-related | Albedo | SVBRDF-net [26], NeRD [7], MLPs, spherical Gaussians, diffusion priors, semantic segmentation [27] | Realistic image synthesis, BRDF parameter estimation, perceptual evaluation |
|  | SVBRDF | Cascaded network architectures [28], MLPs, spherical Gaussians, autoencoders, neural textures, renderers [29], NeRF [30] | SVBRDF prediction accuracy, perceptual evaluation |
| Surface Properties | Roughness | Scoring method [31], differentiable rendering [8], manual annotation [32], classifier training [33], SVBRDF-net [26] | Roughness estimation accuracy, perceptual evaluation |
|  | Metallicity | Similar estimation methods [31], NeRD [7], StyleGAN2 [34] | Metallicity perception evaluation, joint optimization of shape, BRDF, and luminosity |
|  | Translucency | Behavioral tasks [35], diffusion, semantic segmentation, and material estimation models [27] | Translucency estimation accuracy, perceptual evaluation |
|  | Emissivity | Human labeling and measurements [36], scene division into mesostructure textures and basic shapes [30] | Direct measurements, perceptual evaluation |
| Texture Analysis | Pattern Recognition | Image filtering techniques [4], semantic understanding of natural language descriptions [37] | Pattern analysis accuracy, semantic understanding evaluation |
|  | Structural Analysis | Image processing techniques, machine learning algorithms | Structural analysis accuracy, machine learning model evaluation |
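The reflectance and surface-property rows of the table above (albedo, roughness, metallicity) are usually consumed together by a microfacet BRDF. The sketch below shows one common variant of the "metallic-roughness" model for a single color channel: a GGX normal distribution, a Schlick-GGX Smith masking term, and Schlick's Fresnel approximation. It illustrates how estimated attribute maps can be turned into shading; it is not the model of any specific method cited in the table. The 0.04 dielectric reflectance, the choice k = α/2, and the (1 − F)(1 − metallic) diffuse weighting are assumptions.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(a):
    length = math.sqrt(_dot(a, a))
    return tuple(x / length for x in a)


def ggx_ndf(n_dot_h, alpha):
    """GGX (Trowbridge-Reitz) normal distribution function; alpha = roughness**2."""
    a2 = alpha * alpha
    d = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * d * d)


def smith_g1(n_dot_x, k):
    """Schlick-GGX approximation of the Smith masking term for one direction."""
    return n_dot_x / (n_dot_x * (1.0 - k) + k)


def fresnel_schlick(cos_theta, f0):
    """Schlick's approximation of Fresnel reflectance."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def brdf_terms(n, l, v, albedo, roughness, metallic):
    """Return (diffuse, specular) for one color channel.

    n, l, v are unit vectors (normal, light, view); albedo, roughness and
    metallic are the per-pixel attributes an estimator would predict.
    """
    n_dot_l = _dot(n, l)
    n_dot_v = _dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return 0.0, 0.0  # light or viewer below the surface
    h = _normalize(tuple(a + b for a, b in zip(l, v)))  # half vector
    n_dot_h = max(_dot(n, h), 0.0)
    v_dot_h = max(_dot(v, h), 0.0)

    alpha = max(roughness, 1e-3) ** 2  # clamp to avoid a degenerate delta lobe
    k = alpha / 2.0  # assumed convention for the Schlick-GGX k
    f0 = 0.04 * (1.0 - metallic) + albedo * metallic  # dielectrics reflect about 4%
    f = fresnel_schlick(v_dot_h, f0)
    g = smith_g1(n_dot_l, k) * smith_g1(n_dot_v, k)
    specular = ggx_ndf(n_dot_h, alpha) * g * f / (4.0 * n_dot_l * n_dot_v)
    diffuse = (1.0 - metallic) * (1.0 - f) * albedo / math.pi  # metals have no diffuse
    return diffuse, specular


def brdf(n, l, v, albedo, roughness, metallic):
    diffuse, specular = brdf_terms(n, l, v, albedo, roughness, metallic)
    return diffuse + specular
```

Translucency and emissivity, also listed in the table, fall outside this surface-reflection model and would need a subsurface-scattering or emission term on top of it.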

|  | PhotoMat [41] | UMat [42] | MatSynth [43] | OpenIllumination [44] |
|---|---|---|---|---|
| Data | 2D | 2D | 2D | 3D |
| Materials | Real dataset of flash material photos with hidden material maps (albedo, roughness, normals, etc.) | Textile materials such as crepe, jacquard, fleece, and leather | Realistic materials such as wood, stone, metal, and fabric | Various materials, including metals, plastics, fabrics, and ceramics |
| Material Categories | N/A | 14 families of textile materials | N/A | N/A |
| Dataset Size | N/A | 2000 | N/A | N/A |
| Material Attributes | Albedo, roughness, normals, etc. | Texture, color, pattern, fabric type, material thickness | Reflectance, roughness, texture, color, surface finish | Reflectance, roughness, surface normals, texture, color |
| Method | Conditional relightable GAN for material images in the RGB domain, plus a BRDF parameter estimator | U-Net generator within a GAN framework for image-to-image translation | Designed to support modern learning-based techniques for material-related tasks | Combination of physically based rendering and machine learning for material synthesis |
| Dataset Details | Uses a relightable generator to produce material images under conditional light source locations | Employs several loss functions, including pixel-wise, adversarial, style, and frequency losses | Comprises a large collection of non-duplicate, high-quality, high-resolution realistic materials | Focuses on generating 3D models of various materials |
| Data Augmentation | Random cropping of real photos with flash highlights to obtain images with varied highlight locations | Patch-based training; affine transforms, random rescales, rotations, and intensity changes; random erasing for regularization | Material blending, rotation, cropping; environment illumination variations for renders | N/A |
| Uncertainty | Training strategy avoids baking highlights into neural materials, preventing a mismatch with lighting conditions | Uncertainty quantification mechanism applied to each per-map estimate | N/A | N/A |
| Evaluation | Ability to produce realistic material images and to estimate BRDF parameters | Evaluated on various criteria including categories, tags, creation methodology, and stationarity | Evaluated on various criteria including categories, tags, creation methodology, and stationarity | N/A |
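The UMat and MatSynth columns above list cropping, rotations, and random erasing among their augmentations. For material data, geometric augmentations must be applied identically to every map of a sample; otherwise albedo, roughness, and normal pixels no longer correspond. The sketch below (NumPy, hypothetical function names, and a simplified constant-fill variant of random erasing) shows a paired random crop and random erasing intended for the input photo only. It is not the pipeline of either dataset.

```python
import numpy as np


def paired_random_crop(maps, size, rng):
    """Crop the same size x size window from every map of one sample.

    maps: list of arrays (H, W) or (H, W, C) that share H and W,
    e.g. [photo, albedo, roughness, normals].
    """
    h, w = maps[0].shape[:2]
    if any(m.shape[:2] != (h, w) for m in maps):
        raise ValueError("all maps must share the same height and width")
    if size > h or size > w:
        raise ValueError("crop size larger than the maps")
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    return [m[top:top + size, left:left + size].copy() for m in maps]


def random_erasing(image, rng, max_frac=0.25, fill=0.0):
    """Overwrite one random rectangle of the image with a constant value.

    Applied to the network input only, never to the target material maps.
    """
    out = image.copy()
    h, w = out.shape[:2]
    eh = int(rng.integers(1, max(1, int(h * max_frac)) + 1))
    ew = int(rng.integers(1, max(1, int(w * max_frac)) + 1))
    top = int(rng.integers(0, h - eh + 1))
    left = int(rng.integers(0, w - ew + 1))
    out[top:top + eh, left:left + ew] = fill
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    photo = rng.random((256, 256, 3))
    roughness = rng.random((256, 256))
    photo_crop, rough_crop = paired_random_crop([photo, roughness], 128, rng)
    photo_crop = random_erasing(photo_crop, rng)
    print(photo_crop.shape, rough_crop.shape)
```

Rotations are left out on purpose: rotating a normal map also requires rotating the stored normal vectors, not just the pixel grid.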

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Christoff, N.; Tonchev, K. Material Attribute Estimation as Part of Telecommunication Augmented Reality, Virtual Reality, and Mixed Reality System: Systematic Review. Electronics 2024, 13, 2473. https://doi.org/10.3390/electronics13132473
