Abstract

The inconsistency between classification and regression is a common problem in object detection. It can lead to undetected objects, false detections, and overlapping regression boxes in the detection results. It was determined that this inconsistency is mainly caused by feature coupling and by the lack of information interaction between the detection heads. In this study, the spatial invariance of fully connected layers was exploited, together with their ability to fit the data distribution, and a feature fusion module (FFM) was proposed to enhance the model's feature extraction. This study also considered the inconsistency between the loss functions and proposed a regression loss function (RMAE), based on the mean absolute error (MAE), to improve localization quality. Furthermore, to address the lack of information interaction between the detection heads, an inconsistency loss function (Lin) was added on top of the feature fusion module. To evaluate the effectiveness of these methods, the proposed feature fusion network (FMRNet) was trained with RetinaNet as the baseline. The experimental results demonstrated that FMRNet surpassed the accuracy of several existing detectors and confirmed that the proposed methods alleviate the problems of undetected objects, false detections, and overlapping regression boxes.

1. Introduction

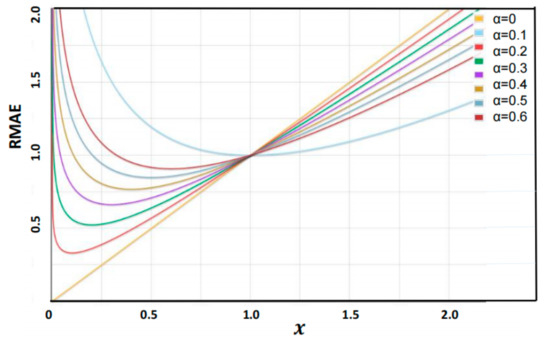

Object detection is an important research topic because of its broad range of applications. Classic one-stage and two-stage detectors, such as YOLO [1], SSD [2,3], RetinaNet [4], and Faster R-CNN [5], have achieved good performance, but their generalization ability is insufficient. Most detection systems share a common problem: the same detection head is used for both the classification and regression tasks. Generally, the two tasks are trained independently but combined during inference. In this study, this problem was investigated with the aim of strengthening the correlation between classification and regression. First, the advantages and disadvantages of current methods were analyzed. Then, FMRNet was proposed, with RetinaNet as the baseline. Specifically, a feature fusion module and an inconsistency loss function were designed to suppress the inconsistency between classification and regression. In addition, the limitations of the mean absolute error were analyzed, and a regression loss function was proposed to improve localization quality. Figure 1 plots the RMAE function and shows its relationship to the MAE.

Figure 1.

Proposed RMAE, which adds a factor to the standard MAE; for a particular value of this factor, the loss is equivalent to the MAE. Unlike the MAE, RMAE is differentiable at 0; it is larger than the MAE for errors below 1 and smaller than the MAE for errors above 1. It therefore produces different gradients for different samples, which helps improve regression quality.

Several methods address the inconsistency between classification and regression from different perspectives. Recent approaches include feature disentanglement, re-weighting, and intersection over union (IoU)-based methods.

Most feature disentanglement methods, such as the critical feature capturing network (CFC-Net) [6] and Double-Head R-CNN [7], learn disentangled features for each task. This approach is simple and widely adopted because the features most suitable for each task can be obtained easily. However, such models cannot extract features sufficiently. Moreover, they cannot map targets of different categories well onto a linear space, which results in undetected objects. The detection heads also lack fused feature information, and model over-fitting may occur. To address these issues, this study considered two characteristics of fully connected layers: (1) fully connected layers map distributed features to a sample space with spatial invariance; and (2) multi-level fully connected layers enhance the fitting of the data distribution for nonlinear problems without changing the sizes of the feature maps. In addition, convolution can extract targeted sub-features. Based on these benefits for classification, this study proposed an FFM that considers both fully connected layer information and convolutional features during feature fusion.

To address the lack of information exchange between the detection heads and model over-fitting, some detectors use re-weighting, such as the HarmonicDet loss [8]. Re-weighting enables the two branches to supervise and promote each other during training, producing consistent predictions in which the top classification and localization results co-occur at inference. Similarly, classification-aware regression loss (CARL) [9] was designed to optimize the two branches simultaneously, and dual-weighting label assignment (DWLA) [10] follows a related idea. Although these approaches perform well on the inconsistency problem, they ignore feature coupling. Therefore, this study proposed an extra inconsistency loss function based on feature fusion, which considers not only feature entanglement but also model over-fitting.

Because classification confidence is used as the ranking metric during non-maximum suppression (NMS) [11], localization information tends to be lacking, which may lead to inconsistency between classification and regression. A traditional remedy is IoU-guided NMS [12]. In addition, probabilistic anchor assignment with IoU prediction (PAA) [13] uses the IoU to represent localization quality, and generalized focal loss V1 (GFL V1) [14] adopts a joint classification-IoU representation that produces continuous quality estimates in the range 0–1 for the corresponding category. However, although such methods alleviate these issues, they do not consider the limitations of shared features, and false detections may occur because of the lack of information interaction. As a result, such methods are not widely adopted.
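To illustrate why score-ranked NMS ignores localization quality, the following minimal pure-Python sketch implements standard greedy NMS with a plain IoU function. It is a generic textbook version, not the procedure of any cited paper; the names `iou` and `greedy_nms` and the (x1, y1, x2, y2) box format are choices made here for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    # Intersection over union of two axis-aligned boxes.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float], iou_thr: float = 0.5) -> List[int]:
    # Boxes are ranked purely by classification score; localization quality
    # plays no role, so a better-localized box with a lower score is suppressed.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_thr for k in keep):
            keep.append(i)
    return keep
```

For example, with boxes `[(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]` and scores `[0.6, 0.9, 0.8]`, box 0 overlaps box 1 with IoU 0.81 and is removed only because its score is lower, regardless of which of the two is better aligned with the object.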

In this study, FMRNet was introduced, which includes the FFM, RMAE, and Lin. Extensive experiments on the Microsoft COCO (MS COCO) dataset showed that the proposed methods outperform the baseline, indicating improved detection performance. The main contributions of this study are as follows: (1) the proposed FFM captures fused features, which benefit downstream tasks; (2) RMAE alleviates the inconsistency between loss functions and improves localization quality; and (3) Lin increases the mutual supervision between the detection heads, which could help alleviate model over-fitting.

2. Related Work

Besides the three main approaches described above, other methods address the inconsistency between the classification and regression tasks, usually by combining an extra branch, localization quality, and feature fusion. The problem was first identified by IoU-Net [12], which found that regression boxes with high confidence scores could still yield poor localization. To address this, IoU-Net adopted the IoU as a localization confidence and combined the classification score and the regression score for classification. This alleviated the problem, but misalignment still existed at each spatial point.

Localization quality is often used to address the inconsistency problem, and its applications are diverse and widespread. Some methods, such as Fitness NMS [15], IoU-Net, MS R-CNN [16], and IoU-aware [17], introduce an extra branch to estimate localization quality in the form of an IoU or centerness score. Classification and localization are trained independently but used together during inference; however, unreliable regression scores still exist. The IoU-balance method [18] differs from re-weighting methods in that, rather than introducing an extra branch, it assigns weights to the classification task based on the IoU. This strengthens the correlation between the classification and regression tasks and helps ensure high consistency between them. However, the approach is still limited because it does not change the classification loss function during training.

Object detection performance can also be improved from a feature perspective, and feature fusion has been used in related fields. Mask-refined R-CNN (MR R-CNN) [19] considers the relationship between pixels at object edges, which are prone to misclassification. To improve the generalization ability of the model and the detection accuracy for small targets, a multi-layer convolution feature fusion algorithm (MCFF) [20] was proposed. Syncretic-NMS [21], an instance segmentation method, merges neighboring boxes that are strongly correlated with the corresponding bounding boxes. Mutual supervision (MuSu) [22] breaks the convention of using the same training samples for the two heads of dense detectors. Dynamic anchor learning (DAL) [23] uses a newly defined matching degree to comprehensively evaluate the localization potential of anchors, alleviating the inconsistency between classification confidence and localization accuracy. Task-aligned one-stage object detection (TOOD) [24] considers the spatial misalignment between the predictions of the two tasks and explicitly aligns them in a learning-based manner. Decoupled Faster R-CNN (DeFRCN) [25] adopts a multi-task decoupling approach for few-shot object detection.

3. Materials and Methods

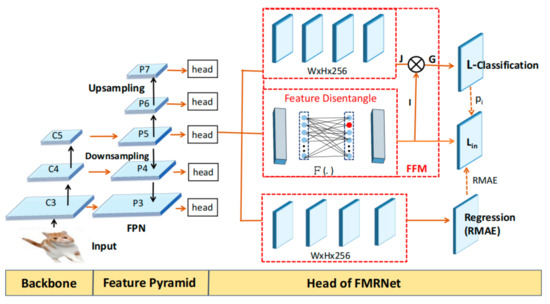

This section introduces the proposed FMRNet, which adds a feature fusion module and new loss functions to RetinaNet. The structure is detailed in Figure 2. As shown in the figure, the model consists of three main components: (1) the FFM, which extracts fused features that benefit the classification task; (2) RMAE, which improves regression performance; and (3) the inconsistency loss function, which enhances the interaction between the detection heads and mitigates model over-fitting.

Figure 2.

The proposed FMRNet model. The figure shows the feature disentanglement of the detection heads, the output of the fully connected layers, the output of the convolution, and the output of the feature fusion module. The inconsistency loss combines information from the regression and classification losses to mitigate inconsistency in the detection heads.

3.1. Feature Fusion Module

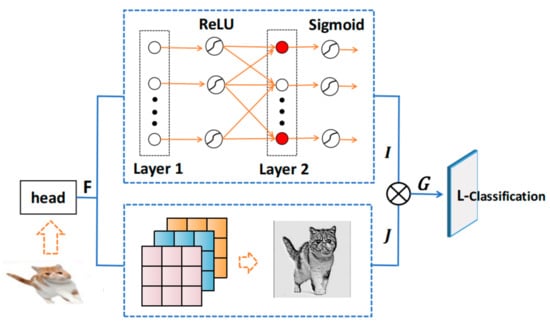

To solve the feature coupling problem and take advantage of the spatial characteristics of fully connected heads, this study considered three factors: (1) fully connected layers map distributed features to the sample space, which reduces the effect of feature location on classification; (2) multi-level fully connected layers, with activation functions in the middle layers, can fit the data distribution, which helps alleviate model over-fitting; and (3) convolutional feature fusion preserves target sub-features and facilitates classification. Therefore, a feature fusion module was added. As shown in Figure 3, the FFM includes two stages: feature disentanglement and feature fusion. The red points in layer 2 indicate activated neurons, and the other points indicate inactive neurons. Activation functions are applied between layers.

Figure 3.

Diagram of the proposed FFM, showing the results of the fully connected layers, the convolutional features, and the FFM output G, which are combined by multiplication. Red neurons indicate activated features; activation functions are applied between layers; ● denotes omitted neurons.

Feature Disentanglement

Specifically, this module takes the feature F output by each FPN level as input. A very small sub-network is used to implement feature disentanglement. It has two fully connected layers, followed by ReLU and sigmoid activations, respectively, and its output is the feature disentanglement result of the fully connected branch.

Here, ReLU and sigmoid are the activation functions of the two layers, the two weight matrices are the parameters of the fully connected layers, and their sizes are determined by the input channel dimension and the channel dimension of the middle layer.
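Written out, a two-layer fully connected mapping of the kind described above has the familiar squeeze-and-excitation-like form below. This is a sketch in notation chosen here for illustration, not necessarily the paper's exact equation: z is a C-dimensional descriptor derived from F (how F is reduced to z is not specified in the text), δ is ReLU, σ is sigmoid, W1 and W2 are the weights, and C′ is the middle-layer channel dimension.

```latex
\mathbf{s} = \sigma\!\left( W_2 \, \delta\!\left( W_1 \mathbf{z} \right) \right),
\qquad
W_1 \in \mathbb{R}^{C' \times C}, \quad
W_2 \in \mathbb{R}^{C \times C'}, \quad
\mathbf{z}, \mathbf{s} \in \mathbb{R}^{C}
```

Because σ maps each entry of s into (0, 1), s can act as a per-channel weight when it is later multiplied with other features.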

For the convolutional branch, the classification subnet of RetinaNet was preserved, with its parameters shared across all pyramid levels. Given an input feature map with C channels from a pyramid level, four 3 × 3 convolutional layers with ReLU activations were applied. The resulting convolutional features were then fused with the output of the fully connected branch by multiplication (Figure 3) to obtain the FFM output.
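One plausible reading of the fusion in Figure 3 can be sketched in pure Python as follows. The assumptions are stated loudly: global average pooling squeezes each input channel to one value, the convolutional-branch output is taken as given (the four 3 × 3 convolutions are not implemented), and "multiplication" is interpreted as scaling each convolutional channel by the matching fully connected weight. The names `fc_branch` and `ffm` are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]      # shape (out_features, in_features)
FeatureMap = List[List[float]]  # C channels, each flattened to H*W values


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def sigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def linear(weight: Matrix, v: Vector) -> Vector:
    # Fully connected layer without bias.
    return [sum(w * x for w, x in zip(row, v)) for row in weight]


def fc_branch(feature: FeatureMap, w1: Matrix, w2: Matrix) -> Vector:
    # Assumption: global average pooling squeezes each channel to one value.
    pooled = [sum(ch) / len(ch) for ch in feature]
    hidden = relu(linear(w1, pooled))   # C -> C' (middle layer)
    return sigmoid(linear(w2, hidden))  # C' -> C, one weight in (0, 1) per channel


def ffm(feature: FeatureMap, conv_feature: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    # Assumed fusion: scale every convolutional channel by its FC-branch weight.
    gate = fc_branch(feature, w1, w2)
    return [[g * x for x in ch] for g, ch in zip(gate, conv_feature)]
```

With all-zero weights every gate equals sigmoid(0) = 0.5, so the fused output is simply half the convolutional feature; trained weights would instead emphasize some channels and suppress others.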

3.2. Regression Loss and Inconsistency Loss

This study observed undetected objects, false detections, and overlapping regression boxes, as shown in Figure 5. The causes of these issues were analyzed as follows: (1) the feature fusion module led to model over-fitting, which resulted in false detections; (2) the classification loss can handle hard samples to some extent, but the MAE is insensitive to hard samples, and this inconsistency caused undetected objects; and (3) samples at nearby spatial locations have similar features, which caused overlapping regression boxes. To address these issues, RMAE and Lin were introduced.

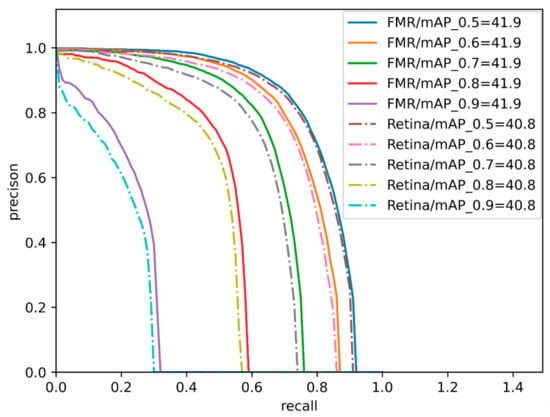

Figure 4.

Precision–recall (PR) curves on MS COCO by ResNeXt-64x8d-101-FPN backbone.

3.2.1. Regression Loss Function

The detection results were analyzed with attention to the model's performance on the regression task. As shown in Figure 1, the MAE has a gradient of constant magnitude for all samples and is not differentiable at zero. RMAE was proposed to overcome these disadvantages and improve regression performance, which benefits the simultaneous optimization of classification and regression. Because the inconsistency between the loss functions led to undetected objects, this study derived RMAE from the MAE to improve localization quality. The MAE is defined as

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left| f(x_i) - y_i \right|$$

where f(xi) is the model's estimated probability, yi denotes the ground truth, and n is the number of samples. For compactness, the absolute-error term was rewritten in terms of a single variable x, and the values were normalized to (0, 1) by Softmax.

This study added logarithmic terms to the MAE to obtain the proposed RMAE.

A hyperparameter controls the curvature of the RMAE curve (Figure 1); in practice, it was set to 0.2 (Table 1). RMAE resolves the non-differentiability of the MAE at zero and produces different gradients for different samples during training. According to this analysis, it also alleviates the problem of overlapping regression boxes.
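To make the MAE drawbacks described above concrete, here is a minimal pure-Python sketch of Softmax normalization, the MAE, and the MAE derivative. The function names are illustrative, not from the paper. It shows that an almost-correct sample and a badly wrong one receive gradients of the same magnitude, 1/n, and that the derivative is undefined at zero error; RMAE is designed to remove both properties.

```python
import math
from typing import List, Optional


def softmax(logits: List[float]) -> List[float]:
    # Map raw scores to (0, 1); subtracting the max keeps exp() numerically stable.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mae(preds: List[float], targets: List[float]) -> float:
    # Mean absolute error: (1/n) * sum(|f(x_i) - y_i|).
    n = len(preds)
    return sum(abs(p - y) for p, y in zip(preds, targets)) / n


def mae_grad(pred: float, target: float, n: int) -> Optional[float]:
    # Derivative of |pred - target| / n with respect to pred.
    # Its magnitude is always 1/n, whatever the size of the error,
    # and it is undefined (returned as None) when pred == target.
    diff = pred - target
    if diff == 0.0:
        return None
    return math.copysign(1.0 / n, diff)
```

For n = 2, `mae_grad(0.95, 1.0, 2)` and `mae_grad(0.1, 1.0, 2)` both return -0.5, so an easy sample and a hard sample are pushed with exactly the same strength.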

3.2.2. Inconsistency Loss

Because of the lack of interaction information between the detection heads, Lin was added after the FFM. Specifically, the fused features were used for classification to obtain high classification confidence, and the RMAE loss improved localization quality. To ensure high consistency between classification and regression, Lin was constructed by combining the classification confidence with the regression loss: the regression loss was retained and weighted by a transformed version of the classification confidence.

Here, pi denotes the classification confidence after feature fusion, a fixed constant was used in the weighting term, and the regression term is the RMAE loss. Equation (6) shows a positive correlation between the regression loss and the classification confidence. In other words, the classification task is supervised by the regression loss, which achieves mutual supervision between the two tasks.
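The general mechanism of Lin, a per-sample regression loss weighted by a function of the classification confidence, can be sketched as follows. This is a minimal illustration under an explicit assumption: `weight_fn` is a hypothetical placeholder for the paper's transformation of the confidence, which is not reproduced here, and the function name is invented for this sketch.

```python
from typing import Callable, List


def confidence_weighted_regression_loss(
    confidences: List[float],
    reg_losses: List[float],
    weight_fn: Callable[[float], float],
) -> float:
    """Average of per-sample regression losses, each scaled by weight_fn(p_i).

    weight_fn is a hypothetical placeholder for the transformation of the
    classification confidence; it is not the published formula.
    """
    if len(confidences) != len(reg_losses):
        raise ValueError("confidences and reg_losses must have the same length")
    weighted = [weight_fn(p) * loss for p, loss in zip(confidences, reg_losses)]
    return sum(weighted) / len(weighted)
```

In an autograd framework, keeping the confidence attached to the computation graph (rather than detaching it) is what would let the regression loss also send gradients into the classification branch; whether the original implementation does this is not stated.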

Based on the above analysis, the total loss of the model consists of three components, which are weighted equally:

$$L = L_{FL} + L_{in} + L_{RMAE}$$

where L_FL denotes the focal loss, L_in is the inconsistency loss function, and L_RMAE denotes the RMAE regression loss.
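For reference, the focal loss retained from RetinaNet can be sketched for a single binary prediction as follows, using RetinaNet's usual defaults (alpha = 0.25, gamma = 2). The equal-weight sum of the three terms reflects the statement that each loss is treated fairly; that reading is an assumption of this sketch, and the function names are illustrative.

```python
import math


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Binary focal loss for one prediction, as in RetinaNet.

    p: predicted probability of the positive class, in (0, 1).
    y: 1 for a positive sample, 0 for a negative one.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t)^gamma down-weights well-classified (easy) samples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def total_loss(l_fl: float, l_in: float, l_rmae: float) -> float:
    # Assumed equal weighting of the three loss terms.
    return l_fl + l_in + l_rmae
```

With gamma = 0 and alpha = 1 the focal loss reduces to ordinary cross-entropy, for example log 2 at p = 0.5; with the defaults, a confident positive (p = 0.9) contributes far less than an uncertain one (p = 0.6).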

4. Experiments

This section presents the experimental settings and main results. Ablation experiments were conducted to quantitatively analyze the effectiveness of each module in the proposed FMRNet. All experiments were conducted on the challenging MS COCO dataset, using its training set of 118 k images and its validation set of 5 k images. The training set was used for training, and performance was reported on the validation and test sets. The standard COCO-style AP metric was adopted, which averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05. For all experiments, this study used ResNets [26] of depth 50 or 101 with a feature pyramid network (FPN) [27]. The proposed FMRNet was compared with common one-stage and two-stage detection methods.
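The COCO-style averaging described above can be sketched as follows: ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, with the final AP being the mean of the per-threshold AP values. In the official evaluation (pycocotools `COCOeval`), each per-threshold AP is itself averaged over categories using 101 interpolated recall points; here it is abstracted as a caller-supplied function `ap_at_threshold`, a hypothetical name.

```python
from typing import Callable, List


def coco_iou_thresholds() -> List[float]:
    # 0.50, 0.55, ..., 0.95 (10 thresholds); rounding removes float drift.
    return [round(0.50 + 0.05 * k, 2) for k in range(10)]


def coco_style_ap(ap_at_threshold: Callable[[float], float]) -> float:
    # COCO AP = mean of the AP values computed at each IoU threshold.
    thresholds = coco_iou_thresholds()
    return sum(ap_at_threshold(t) for t in thresholds) / len(thresholds)
```

Because the strict thresholds (0.85 to 0.95) count as much as the lenient ones, this metric rewards precise localization, which is where improved regression quality is expected to show up.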

RetinaNet was used as the baseline, and its focal loss was retained. FMRNet was trained with the standard 1× (12 epochs) and 1.5× (18 epochs) schedules on an RTX 3090 GPU. Stochastic gradient descent (SGD) [28] was adopted with a momentum of 0.9 and a weight decay of 0.0001. The maximum learning rate was set to 0.001 for the backbone and 0.01 for the other parts, and a linear decay strategy was used to adjust the learning rate. The batch size was set to 32, and the maximum number of epochs was 18. Random flipping was used for data augmentation. The hyperparameters of FMRNet were determined experimentally. RMAE introduces a new hyperparameter that controls the curvature of the function (Figure 1); to find its best value, it was tuned on MS COCO. Table 1 shows that most AP metrics reach their optimal values when it is set to 0.2.
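The optimizer settings above can be sketched in pure Python. The peak learning rates, momentum, and weight decay come from the text; decaying linearly all the way to zero and the absence of warm-up are assumptions of this sketch, since neither end point nor warm-up is specified. The update rule follows the form used by PyTorch's `torch.optim.SGD` with no dampening and no Nesterov momentum.

```python
from typing import Dict, Tuple

# Peak learning rates per parameter group, as stated in the text.
BASE_LR: Dict[str, float] = {"backbone": 0.001, "others": 0.01}
MOMENTUM = 0.9
WEIGHT_DECAY = 0.0001


def linear_decay_lr(base_lr: float, step: int, total_steps: int, final_lr: float = 0.0) -> float:
    # Straight-line interpolation from base_lr (step 0) to final_lr (last step).
    # Assumption: the schedule decays all the way to final_lr = 0.
    frac = min(max(step / total_steps, 0.0), 1.0)
    return base_lr + (final_lr - base_lr) * frac


def lrs_at(step: int, total_steps: int) -> Dict[str, float]:
    # Learning rate of every parameter group at a given iteration.
    return {group: linear_decay_lr(lr, step, total_steps) for group, lr in BASE_LR.items()}


def sgd_step(w: float, grad: float, velocity: float, lr: float) -> Tuple[float, float]:
    # SGD with momentum and L2 weight decay (no dampening, no Nesterov).
    d = grad + WEIGHT_DECAY * w
    velocity = MOMENTUM * velocity + d
    return w - lr * velocity, velocity
```

Halfway through training, for example, the backbone would run at 0.0005 and the heads at 0.005, keeping the 10:1 ratio between the two groups throughout.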

Table 1.

Hyperparameter experiment results of the FFM (ResNet-50 backbone). All experiments were performed with the MMDetection framework [29] and validated on MS COCO minival.

4.1. Main Experimental Results

Table 2 shows the performance of common two-stage and one-stage detection algorithms. In general, two-stage detectors achieved better results than one-stage detectors because of the limitations of one-stage detection. The proposed model was tested with different numbers of epochs under the MMDetection framework, and its performance on the validation set improved as the number of epochs increased.

Table 2.

Comparison results between various detectors (single-model and single-scale results) on MS COCO test-dev. All experiments were performed with the MMDetection framework. R, ResNet; X, ResNeXt; HG, Hourglass.

Table 2 details the performance of FMRNet with 12 and 18 epochs. Compared with the above methods, FMRNet improved accuracy. For example, with ResNeXt-64x8d-101-FPN as the backbone, FMRNet outperformed the baseline by 1.1 mAP points (41.9 vs. 40.8). We also compared related methods: FMRNet outperformed Double-Head R-CNN by 0.4 mAP points (41.9 vs. 41.5) and obtained further improvements of 1.3 mAP points (41.9 vs. 40.6) and 0.7 mAP points (41.9 vs. 41.2) over other related methods in Table 2. These results support the effectiveness of FMRNet in addressing the inconsistency between the two tasks. However, a gap remains relative to the state-of-the-art TOOD (46.7 mAP), which is not included in Table 2. Compared with two-stage methods, FMRNet achieved 5.1 points more than the top-performing Faster R-CNN model based on Inception-ResNet-v2-TDM. In summary, FMRNet achieved better performance than both the one-stage and two-stage detection methods listed in Table 2.

Precision–recall (PR) curves were used to compare FMRNet with the baseline, as shown in Figure 4. The solid lines represent FMRNet, and the dashed lines represent RetinaNet. The IoU threshold, which distinguishes positive from negative samples, was set to 0.5, 0.6, 0.7, 0.8, and 0.9. As the IoU threshold increases, the gap between FMRNet and the baseline widens, which further demonstrates the improvement of the proposed network over the original RetinaNet.
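How an IoU threshold turns ranked detections into a PR curve can be sketched as follows. This is a simplified illustration, not the COCO evaluation code: each detection is assumed to carry the IoU with the ground-truth box it was already matched to (one-to-one matching done beforehand), and `pr_points` is a hypothetical name.

```python
from typing import List, Tuple


def pr_points(detections: List[Tuple[float, float]], num_gt: int, iou_thr: float) -> List[Tuple[float, float]]:
    """Precision-recall points for one class at one IoU threshold.

    detections: (score, iou) pairs, where iou is the overlap with the matched
    ground-truth box (simplifying assumption: matching is already one-to-one).
    A detection counts as a true positive when iou >= iou_thr.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    points: List[Tuple[float, float]] = []
    for _, iou in ranked:
        if iou >= iou_thr:
            tp += 1
        else:
            fp += 1
        points.append((tp / (tp + fp), tp / num_gt))  # (precision, recall)
    return points
```

Raising `iou_thr` turns loosely localized detections into false positives, which lowers the curve; a detector with better-localized boxes therefore loses less at strict thresholds, consistent with the widening gap described above.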

4.2. Ablation Studies

For all ablation studies, an image scale of 800 pixels was used for training and testing. The contribution of each component of FMRNet was investigated. The baseline for these experiments uses the focal loss and the MAE; the proposed RMAE is motivated by improving regression performance rather than by resolving inconsistency. A 1× schedule (12 epochs) without multi-scale training was adopted, and the mean average precision (mAP) for different object sizes was used as the evaluation criterion. The ablation experiments, shown in Table 3, were designed to test the contribution of each module of FMRNet and were conducted with a ResNet-50 backbone on ATSS [36]. Each component was disabled by replacing it with a vanilla counterpart in order to quantify its effect.

Table 3.

Ablation study on ResNet-50 backbone. All experiments were performed with the MMDetection framework and validated on MS COCO minival.

Table 3 details how each component contributes to the performance of FMRNet. The second row is the baseline, and "✓" indicates that the method in that column is used; in the RMAE column, "✓" means that RMAE replaces the MAE of the baseline. Adding the FFM to the baseline improved the precision from 35.7% to 36.3% (row 3). Replacing the MAE with RMAE raised it from 35.7% to 36.2% (rows 2 and 4). Adding Lin raised it to 36.5% (row 5). Adding the FFM and replacing the MAE with RMAE raised it to 36.5% (row 6). Replacing the MAE with RMAE and adding Lin raised it to 36.6% (row 7). Adding the FFM and Lin raised it to 36.5% (rows 2 and 8). Comparing rows 3 and 6, rows 5 and 7, and rows 8 and 9 shows that replacing the MAE with RMAE consistently improved precision, indicating that RMAE is better than the MAE. Finally, using the FFM, RMAE, and Lin together increased the precision from 35.7% to 36.8% (row 9). These results demonstrate the effectiveness of FMRNet and show that each proposed component improves the baseline.

4.3. Detection Results

Datasets may present various difficulties, including class imbalance, shadows, and blurring, which can lead to undetected objects, false detections, and overlapping regression boxes. To compare the effectiveness of the proposed components, the detection results of RetinaNet and FMRNet were visualized, and representative examples were selected from the test results. As shown in Figure 5, the skis were not detected, and the background was detected as foreground. This study analyzed the inconsistency between the classification and regression tasks as well as the regression quality, and proposed corresponding solutions to these problems. The detection results of the baseline and FMRNet were then compared on MS COCO. As shown in Figure 6, the above issues were alleviated, which confirms the effectiveness of the proposed method.

Figure 5.

The detection results on MS COCO by RetinaNet-ResObj with ResNet-50-FPN show some problems, including undetected objects, false detection, and overlapping of regression boxes.

Figure 6.

Qualitative results on the minival of MS COCO (ResNet-50 used as the backbone).

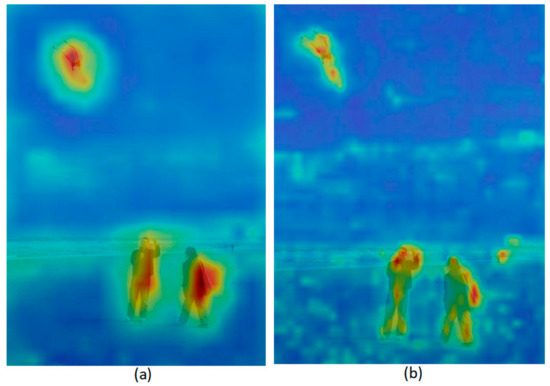

Heat maps highlight the image regions that are important to a detector and provide a visual interpretation of its behavior. To evaluate how well FMRNet focuses on relevant image regions, heat maps of FMRNet and the baseline were visualized separately using the trained weights. As shown in Figure 7, the heat map of FMRNet concentrates on the important regions, whereas the highlighted areas of the baseline are scattered. This suggests that the baseline's features are used less effectively by the downstream tasks, and that FMRNet improves the feature quality for the classification and regression tasks.

Figure 7.

Visualization of heat map. ResNet-50 is used as the backbone. (a) The heat map of FMRNet. (b) The heat map of the baseline.

We performed speed tests with the ResNet-50 backbone using the trained weights, with the image size fixed at 1280 × 800. Table 4 reports the FLOPs and parameter counts of the baseline and FMRNet. There was almost no difference in speed between the two models on images of the same size, and the number of parameters did not change.

Table 4.

Comparison of test results for operation speed.

Table 5 compares the processing time of the baseline and FMRNet with ResNet-50 as the backbone, using 2000 images of the same size. The baseline took 9.9 s, whereas FMRNet took 9.1 s. Therefore, FMRNet improves accuracy without increasing the computational cost.
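The totals in Table 5 convert directly into per-image latency and throughput. The short sketch below does this arithmetic for the two reported times (9.9 s and 9.1 s for 2000 images); the helper names are illustrative.

```python
def per_image_ms(total_seconds: float, num_images: int) -> float:
    # Average latency per image in milliseconds.
    return 1000.0 * total_seconds / num_images


def throughput(total_seconds: float, num_images: int) -> float:
    # Images processed per second.
    return num_images / total_seconds


def relative_time_reduction(old_seconds: float, new_seconds: float) -> float:
    # Fraction of the original processing time that was saved.
    return (old_seconds - new_seconds) / old_seconds


if __name__ == "__main__":
    NUM_IMAGES = 2000
    for name, seconds in [("baseline", 9.9), ("FMRNet", 9.1)]:
        print(f"{name}: {per_image_ms(seconds, NUM_IMAGES):.2f} ms/image, "
              f"{throughput(seconds, NUM_IMAGES):.1f} images/s")
```

This gives about 4.95 ms per image (roughly 202 images/s) for the baseline and 4.55 ms per image (roughly 220 images/s) for FMRNet, a time reduction of about 8%.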

Table 5.

Comparison of test results for time performance.

5. Conclusions

In this study, FMRNet was proposed to alleviate the inconsistency between detection heads; it includes an FFM, RMAE, and an inconsistency loss. The FFM performs feature fusion from the perspective of feature decoupling, which addresses feature coupling and insufficient feature extraction. The inconsistency loss provides mutual supervision and information interaction between the heads. By considering the inconsistency between loss functions, RMAE was proposed to improve regression performance. Finally, extensive experiments verified that the proposed FMRNet achieves good results.

Author Contributions

Methodology, M.Z.; writing—original draft preparation, M.Z.; writing—review, X.T.; supervision, X.T. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the National Natural Science Foundation of China under grant No. 61966029 and the Natural Science Foundation of Ningxia under grant No. 2021AAC03102.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

No new data were created. The MS COCO dataset used for training and evaluation is available at http://cocodataset.org.

Conflicts of Interest

The authors declare that they have no conflicts of interest to report regarding the present study.

References

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. arXiv 2015, arXiv:1512.02325.

- Fu, C.-Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Qi, M.; Lingjuan, M.; Zhiqiang, Z.; Yunpeng, D. Cfc-net: A critical feature capturing network for arbitrary-oriented object detection in remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14. [Google Scholar]

- Wu, Y.; Chen, Y.; Yuan, L.; Liu, Z.; Wang, L.; Li, H.; Fu, Y. Rethinking classification and localization for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10183–10192. [Google Scholar]

- Keyang, W.; Lei, Z. Reconcile prediction consistency for balanced object detection. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 3611–3620. [Google Scholar]

- Hang, C.Y.; Kai, C.; Change, L.C.; Dahua, L. Prime sample attention in object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11580–11588. [Google Scholar]

- Shuai, L.; Hang, H.C.; Huang, L.R.; Lei, Z. A dual weighting label assignment scheme for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9377–9386. [Google Scholar]

- Jan, H.; Rodrigo, B.; Bernt, S. Learning non-maximum suppression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6469–6477. [Google Scholar]

- Borui, J.; Xuan, L.R.; Yuan, M.J.; Tete, X.; Yuning, J. Acquisition of localization confidence for accurate object detection. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 784–799. [Google Scholar]

- Kang, K.; Seok, L.H. Probabilistic anchor assignment with iou prediction for object detection. arXiv 2020, arXiv:2007.08103. [Google Scholar]

- Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. Adv. Neural Inf. Process. Syst. 2020, 33, 21002–21012. [Google Scholar]

- Tychsen, S.L.; Lars, P. Improving object localization with fitness nms and bounded iou loss. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6877–6885. [Google Scholar]

- Jin, H.Z.; Chao, H.L.; Chao, G.Y.; Chang, H.; Gang, W.X. Mask scoring r-cnn. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 6402–6411. [Google Scholar]

- Wu, S.; Li, X.; Wang, X. IoU-aware single-stage object detector for accurate localization. Image Vis. Comput. 2019, 97, 103911.

- Wu, S.; Yang, J.; Wang, X.; Li, X. IoU-balanced loss functions for single-stage object detection. Pattern Recognit. Lett. 2019, 156, 96–103.

- Zhang, Y.; Chu, J.; Leng, L.; Miao, J. Mask-Refined R-CNN: A Network for Refining Object Details in Instance Segmentation. Sensors 2020, 20, 1010.

- Chu, J.; Guo, Z.; Leng, L. Object Detection Based on Multi-Layer Convolution Feature Fusion and Online Hard Example Mining. IEEE Access 2018, 6, 19959–19967.

- Chu, J.; Zhang, Y.; Li, S.; Leng, L.; Miao, J. Syncretic-NMS: A merging non-maximum suppression algorithm for instance segmentation. IEEE Access 2020, 8, 114705–114714.

- Gao, Z.; Wang, L.; Wu, G. Mutual supervision for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3621–3630.

- Ming, Q.; Zhou, Z.; Miao, L.; Zhang, H.; Li, L. Dynamic anchor learning for arbitrary-oriented object detection. arXiv 2020, arXiv:2012.04150.

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned one-stage object detection. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3490–3499.

- Qiao, L.; Zhao, Y.; Li, Z.; Qiu, X.; Wu, J.; Zhang, C. DeFRCN: Decoupled Faster R-CNN for Few-Shot Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 8661–8670.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Loshchilov, I.; Hutter, F. SGDR: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983.

- Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J. MMDetection: Open MMLab detection toolbox and benchmark. arXiv 2019, arXiv:1906.07155.

- Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A.; Fischer, I.; Wojna, Z.; Song, Y. Speed/accuracy trade-offs for modern convolutional object detectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3296–3297.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Shrivastava, A.; Sukthankar, R.; Malik, J.; Gupta, A. Beyond skip connections: Top-down modulation for object detection. arXiv 2016, arXiv:1612.06851.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE Transactions on Pattern Analysis and Machine Intelligence, Piscataway, NJ, USA, 4 December 2017.

- Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. FoveaBox: Beyond anchor-based object detector. IEEE Trans. Image Process. 2020, 29, 7389–7398.

- Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Volume 128, pp. 642–656.

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9756–9765.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).