Reinforcement Learning Based Dual-UAV Trajectory Optimization for Secure Communication

Abstract

:1. Introduction

2. System Model

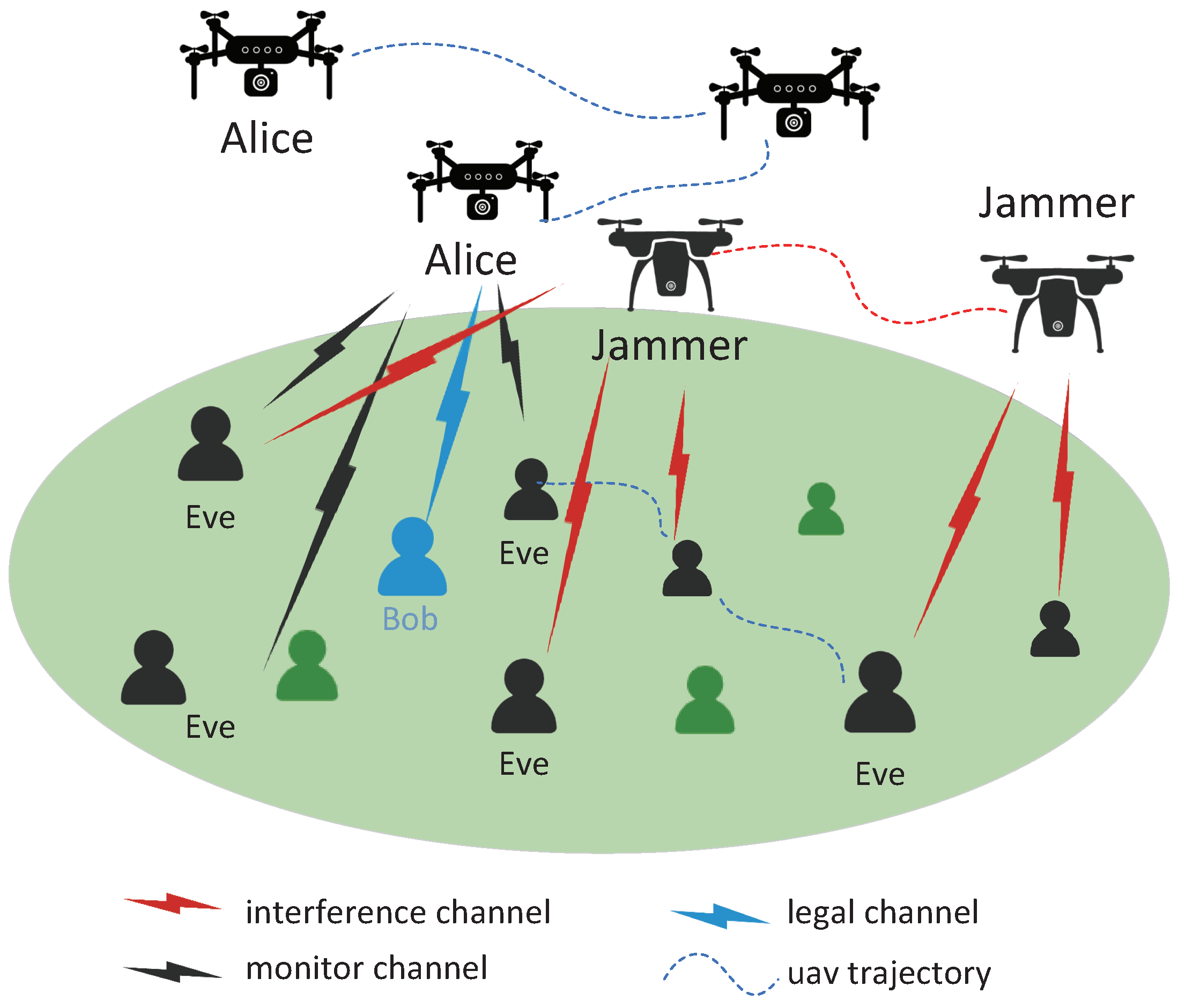

2.1. Network Model

2.2. Mobility Model

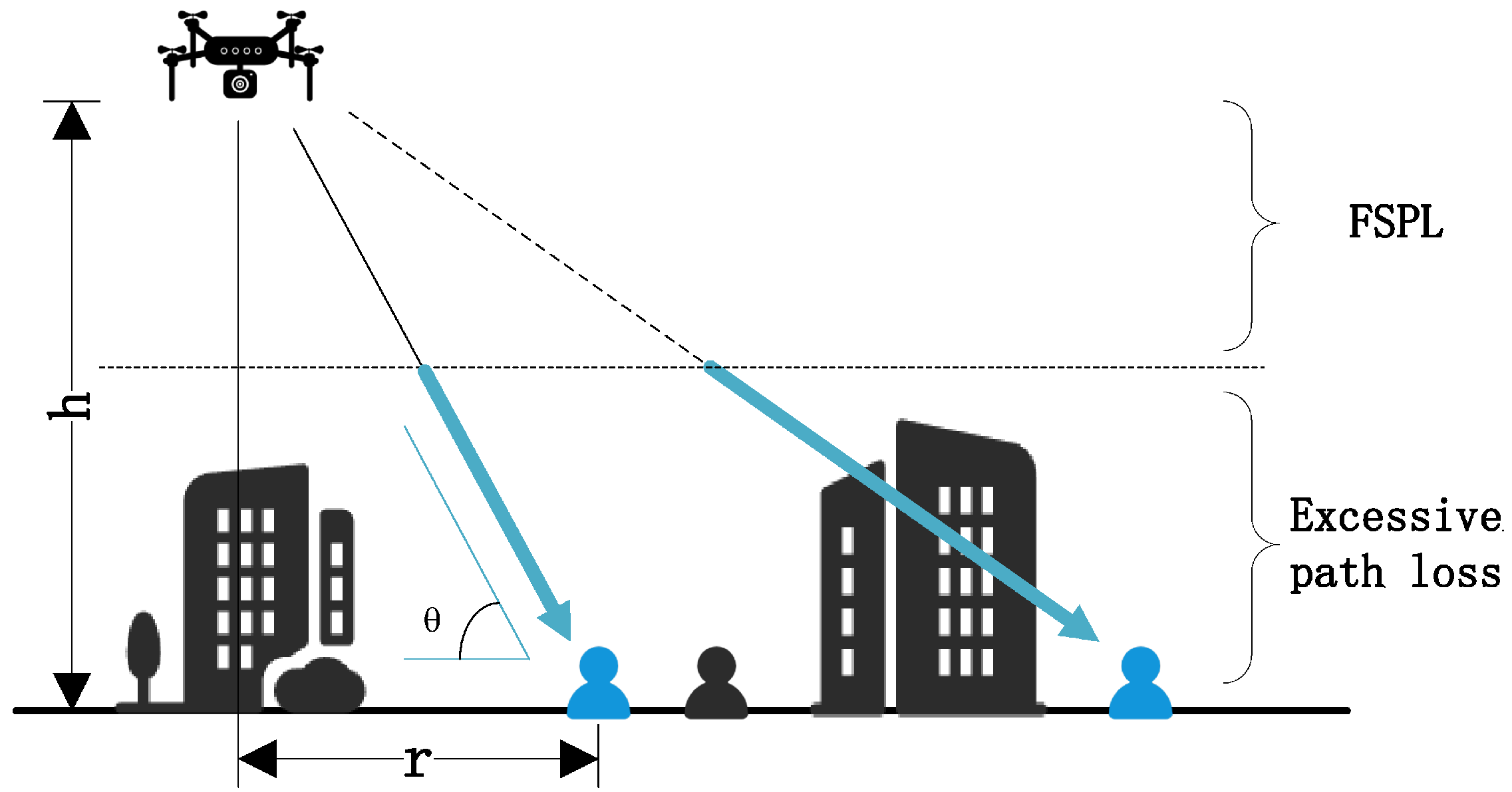

2.3. Transmission Model

3. System Problem Formulation and Markov Decision Process

3.1. Problem Formulation

3.2. Markov Decision Process

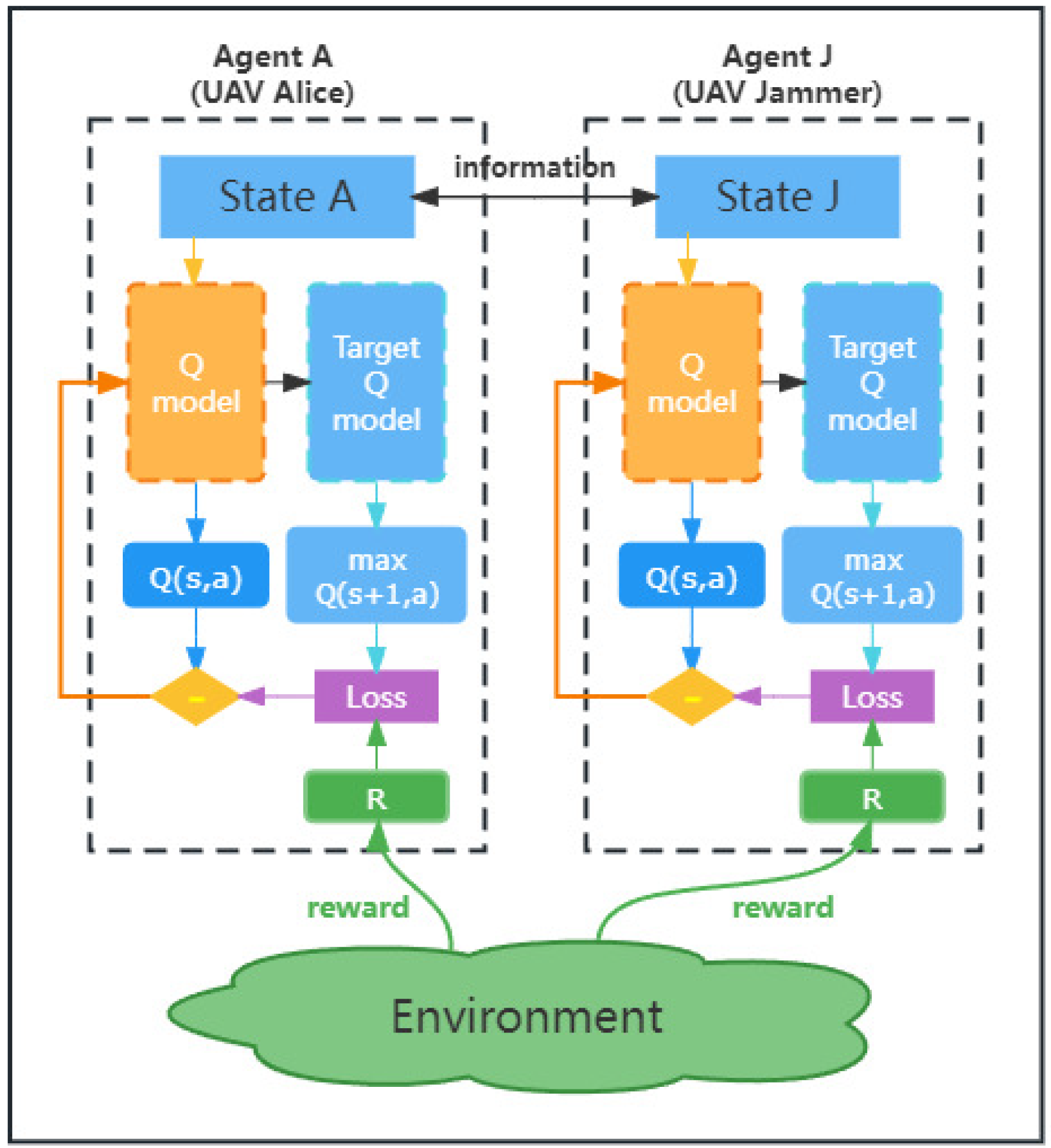

- Each UAV can be regarded as an agent. An independent model is used to solve the MDP problem for each UAV. However, because the agent can be influenced by other agents, from its own point of view, other agents make the environment unstable. Therefore, UAVs need to communicate during the flight to inform each other of the position information. Then they take action and get environmental rewards according to the position information of all agents. In this paper, we only consider two agents, named Alice and Jammer. The complete model structure is shown in Figure 3.

- is the state space; includes the location of each UAV, Bob, and Eves. The coordinates of Alice and Jammer are described as , ; the coordinate of Bob is . The coordinate of Eve e is , .

- is the action space, which contains the moving speed of the UAV at each moment and its transmitting power. To reduce the complexity of the network model, we discretize the speed into a set . The speed of Alice and Jammer in each time slot is denoted as , , and the transmitting power is and , , .

- denotes the state-transition probability. As it is difficult to predict , we adopt the model-free RL method to address the above MDP problem.

- is the reward space. For all UAVs, the reward obtained in each time slot consists of the scenario penalty, the energy penalty, the information secrecy rate reward, and the distance reward. The information secrecy rate reward is equal for each agent. For the scene penalty, due to the complex arrangement of tall buildings and vegetation in the city, the free flight space of the UAV is limited, so we need to give the UAV an environmental penalty when the UAV wants to fly away from the limited area. The coordinate of the UAV i is denoted as , . Then the scenario penalty iswhere C is a constant. Since the battery capacity of the UAV is finite, it is necessary to consider the energy cost of the UAV without wireless charging. The energy consumption penalties of Alice and Jammer are defined as and . The transmitting powers of Alice and Jammer in the current time slot are denoted as and . Therefore, the energy penalty can be given bywhere and are hyperparameters. In the initial state of the model, Alice may be far away from Bob, which makes the information secrecy rate very small. The too-small reward leads to the model being unable to learn the features of the environment effectively. To avoid this, we introduce a distance penalty and define the distance penalty for Alice and Jammer as and . We use the average of the distances as the reward, as shown in (16). The distance reward according to the Euclidean distance from the UAV to the target can be expressed aswhere , are the distance reward factors of Alice and Jammer. Thus, the total reward function for each UAV can be given bywhere and are the reward for Alice and Jammer separately.

3.3. Double DQN

| Algorithm 1 A DDQN-based optimization algorithm for dual UAVs. |

|

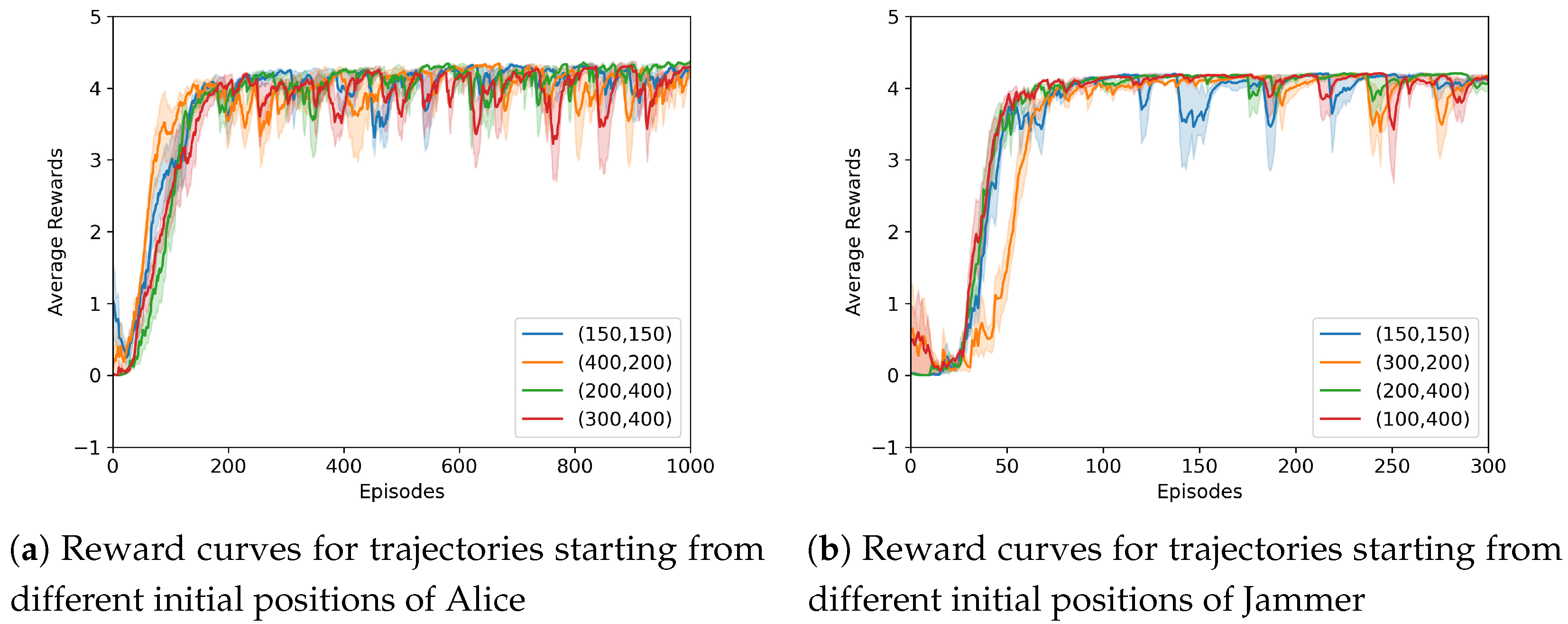

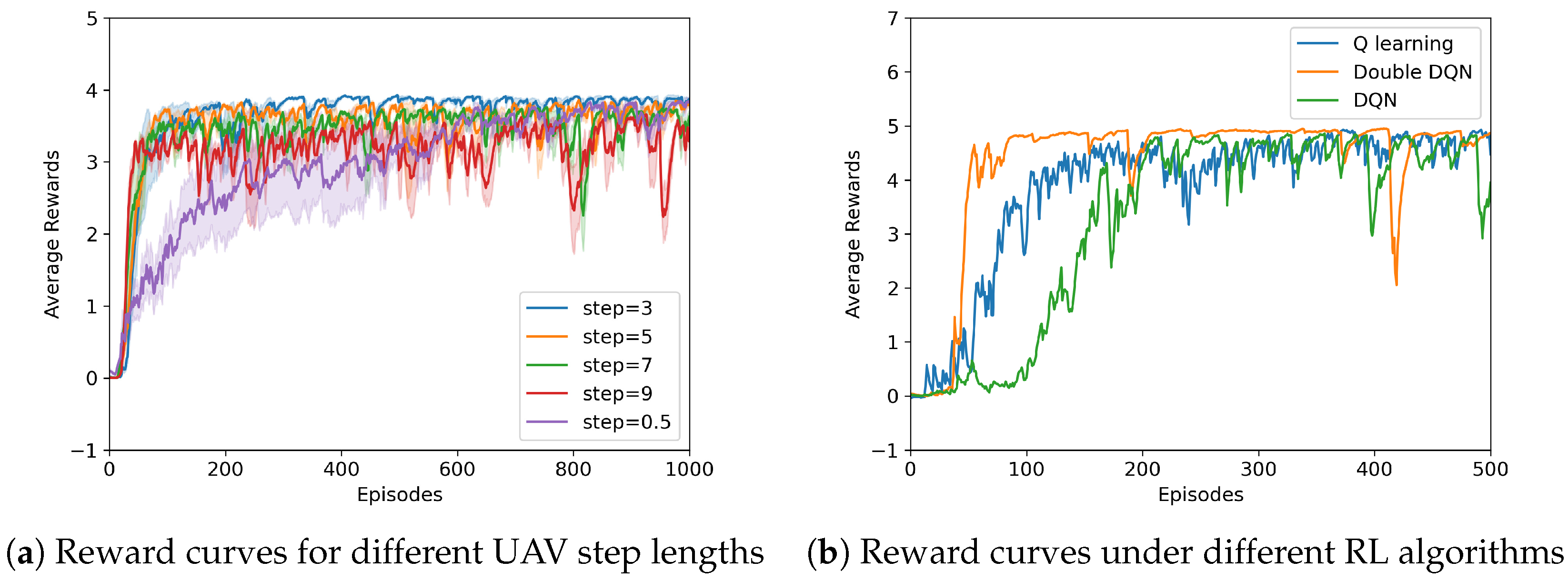

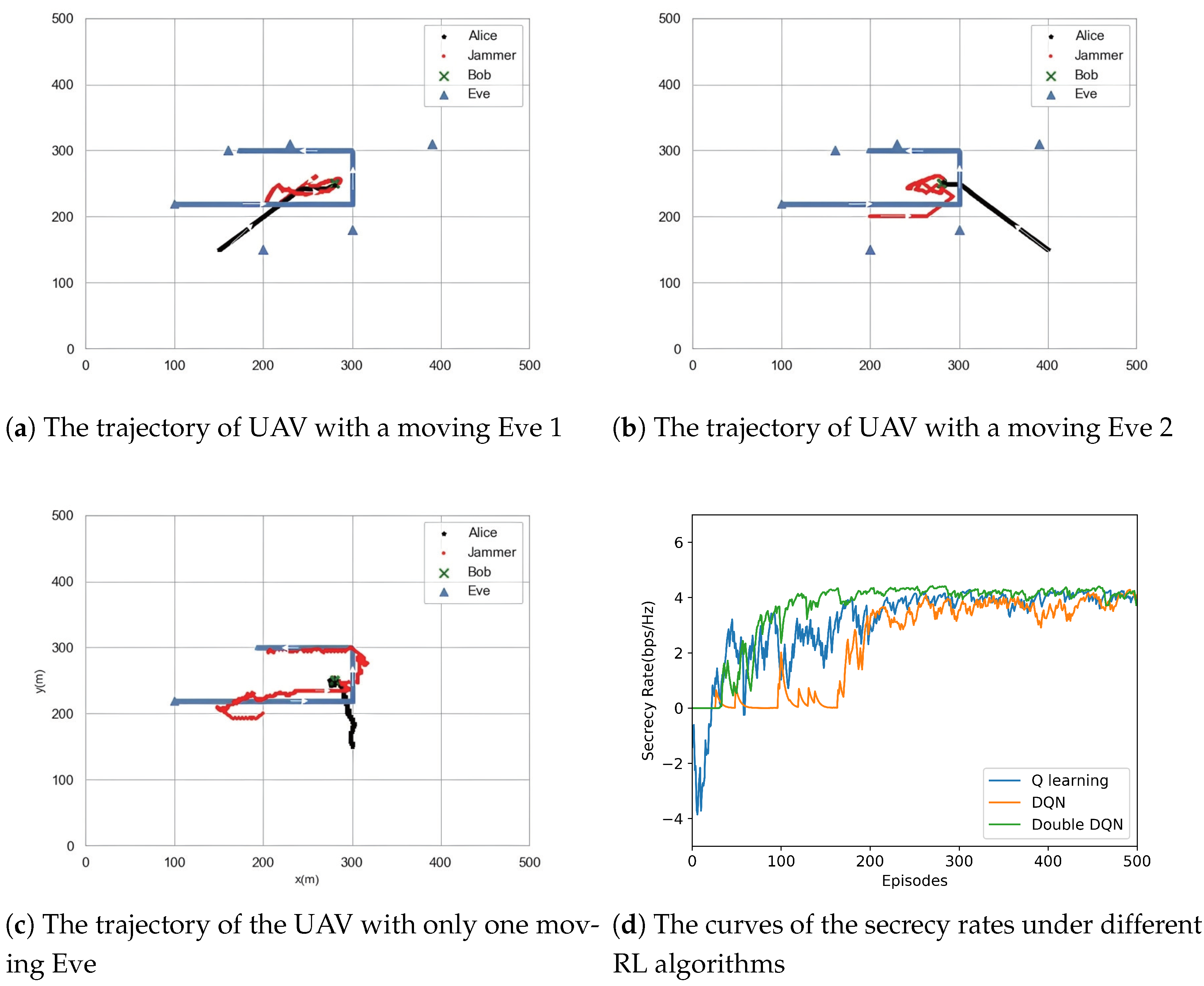

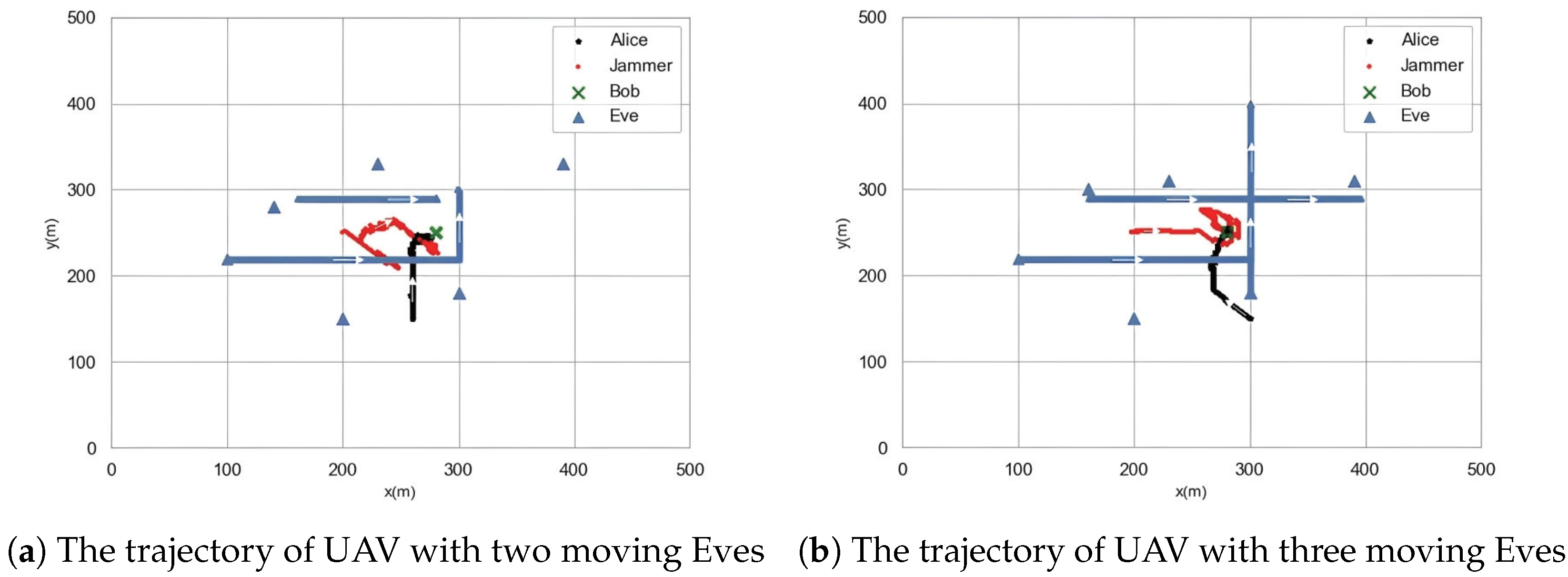

4. Simulation Results and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tang, F.; Kawamoto, Y.; Kato, N.; Liu, J. Future intelligent and secure vehicular network toward 6G: Machine-learning approaches. Proc. IEEE 2019, 108, 292–307. [Google Scholar] [CrossRef]

- Zhao, N.; Lu, W.; Sheng, M.; Chen, Y.; Tang, J.; Yu, F.R.; Wong, K.K. UAV-assisted emergency networks in disasters. IEEE Wirel. Commun. 2019, 26, 45–51. [Google Scholar] [CrossRef]

- Cheng, F.; Zhang, S.; Li, Z.; Chen, Y.; Zhao, N.; Yu, F.R.; Leung, V.C. UAV trajectory optimization for data offloading at the edge of multiple cells. IEEE Trans. Veh. Technol. 2018, 67, 6732–6736. [Google Scholar] [CrossRef]

- Zhong, C.; Yao, J.; Xu, J. Secure UAV communication with cooperative jamming and trajectory control. IEEE Commun. Lett. 2018, 23, 286–289. [Google Scholar] [CrossRef]

- Sun, X.; Ng, D.W.K.; Ding, Z.; Xu, Y.; Zhong, Z. Physical layer security in UAV systems: Challenges and opportunities. IEEE Wirel. Commun. 2019, 26, 40–47. [Google Scholar] [CrossRef]

- Lu, H.; Zhang, H.; Dai, H.; Wu, W.; Wang, B. Proactive eavesdropping in UAV-aided suspicious communication systems. IEEE Trans. Veh. Technol. 2018, 68, 1993–1997. [Google Scholar] [CrossRef]

- Xiao, L.; Lu, X.; Xu, T.; Zhuang, W.; Dai, H. Reinforcement learning-based physical-layer authentication for controller area networks. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2535–2547. [Google Scholar] [CrossRef]

- Mao, Q.; Hu, F.; Hao, Q. Deep learning for intelligent wireless networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2018, 20, 2595–2621. [Google Scholar] [CrossRef]

- Zhang, G.; Wu, Q.; Cui, M.; Zhang, R. Securing UAV communications via joint trajectory and power control. IEEE Trans. Wirel. Commun. 2019, 18, 1376–1389. [Google Scholar] [CrossRef]

- Cai, Y.; Wei, Z.; Li, R.; Ng, D.W.K.; Yuan, J. Joint trajectory and resource allocation design for energy-efficient secure UAV communication systems. IEEE Trans. Commun. 2020, 68, 4536–4553. [Google Scholar] [CrossRef]

- Wang, Y.; Chen, L.; Zhou, Y.; Liu, X.; Zhou, F.; Al-Dhahir, N. Resource allocation and trajectory design in UAV-assisted jamming wideband cognitive radio networks. IEEE Trans. Cogn. Commun. Netw. 2020, 7, 635–647. [Google Scholar] [CrossRef]

- Li, Y.; Zhang, R.; Zhang, J.; Yang, L. Cooperative jamming via spectrum sharing for secure UAV communications. IEEE Wirel. Commun. Lett. 2019, 9, 326–330. [Google Scholar] [CrossRef]

- Zhou, X.; Wu, Q.; Yan, S.; Shu, F.; Li, J. UAV-enabled secure communications: Joint trajectory and transmit power optimization. IEEE Trans. Veh. Technol. 2019, 68, 4069–4073. [Google Scholar] [CrossRef]

- Razaviyayn, M. Successive Convex Approximation: Analysis and Applications. Ph.D. Thesis, University of Minnesota, Minneapolis, MN, USA, 2014. [Google Scholar]

- Zhang, J. A Q-learning based Method f or Secure UAV Communication against Malicious Eavesdropping. In Proceedings of the 2022 14th International Conference on Computer and Automation Engineering (ICCAE), Brisbane, Australia, 25–27 March 2022; pp. 168–172. [Google Scholar] [CrossRef]

- Fu, F.; Jiao, Q.; Yu, F.R.; Zhang, Z.; Du, J. Securing UAV-to-vehicle communications: A curiosity-driven deep Q-learning network (C-DQN) approach. In Proceedings of the 2021 IEEE International Conference on Communications Workshops (ICC Workshops), Montreal, QC, Canada, 14–23 June 2021; pp. 1–6. [Google Scholar]

- Zhang, Z.; Zhang, Q.; Miao, J.; Yu, F.R.; Fu, F.; Du, J.; Wu, T. Energy-efficient secure video streaming in UAV-enabled wireless networks: A safe-DQN approach. IEEE Trans. Green Commun. Netw. 2021, 5, 1892–1905. [Google Scholar] [CrossRef]

- Zhang, Y.; Mou, Z.; Gao, F.; Jiang, J.; Ding, R.; Han, Z. UAV-enabled secure communications by multi-agent deep reinforcement learning. IEEE Trans. Veh. Technol. 2020, 69, 11599–11611. [Google Scholar] [CrossRef]

- Ye, H.; Li, G.Y.; Juang, B.H.F. Deep reinforcement learning based resource allocation for V2V communications. IEEE Trans. Veh. Technol. 2019, 68, 3163–3173. [Google Scholar] [CrossRef]

- Deng, D.; Li, X.; Menon, V.; Piran, M.J.; Chen, H.; Jan, M.A. Learning-based joint UAV trajectory and power allocation optimization for secure IoT networks. Digital Commun. Netw. 2022, 8, 415–421. [Google Scholar] [CrossRef]

- Liu, C.; Zhang, Y.; Niu, G.; Jia, L.; Xiao, L.; Luan, J. Towards reinforcement learning in UAV relay for anti-jamming maritime communications. Digital Commun. Netw. 2022. [Google Scholar] [CrossRef]

- Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30. [Google Scholar]

- Mukherjee, A.; Swindlehurst, A.L. Jamming games in the MIMO wiretap channel with an active eavesdropper. IEEE Trans. Signal Process. 2012, 61, 82–91. [Google Scholar] [CrossRef]

- Mukherjee, A.; Swindlehurst, A.L. Detecting passive eavesdroppers in the MIMO wiretap channel. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 2809–2812. [Google Scholar]

- Al-Hourani, A.; Kandeepan, S.; Lardner, S. Optimal LAP altitude for maximum coverage. IEEE Wirel. Commun. Lett. 2014, 3, 569–572. [Google Scholar] [CrossRef]

- Bloch, M.; Barros, J.; Rodrigues, M.R.; McLaughlin, S.W. Wireless information-theoretic security. IEEE Trans. Inf. Theory 2008, 54, 2515–2534. [Google Scholar] [CrossRef]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602. [Google Scholar]

| Notation | Definition |

|---|---|

| T | Total flight time |

| Number of time slots | |

| t | Index of time slots |

| Path loss of ATG | |

| Free space path loss | |

| Distance between the UAV and the ground receiver | |

| Height of the UAV | |

| f | Carrier frequency of the system |

| c | Speed of light |

| Value of excessive path loss | |

| Propagation group | |

| Channel path loss | |

| Probability of LoS | |

| Probability of NLoS | |

| Angle between the UAV and the ground user | |

| Path loss between Alice and Bob | |

| Path loss between Alice (Jammer) and Eve | |

| Path loss between Jammer and Alice | |

| Data rate of legitimate channel | |

| Data rate of wiretap channel | |

| Secrecy rate of the system | |

| Power of the natural Gaussian noise | |

| E | Number of Eves |

| Transmitting power of Alice | |

| Artificial noise power generated by Jammer | |

| State space of MDP | |

| Action space of MDP | |

| State-transition probability of MDP | |

| Reward space of MDP |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qian, Z.; Deng, Z.; Cai, C.; Li, H. Reinforcement Learning Based Dual-UAV Trajectory Optimization for Secure Communication. Electronics 2023, 12, 2008. https://doi.org/10.3390/electronics12092008

Qian Z, Deng Z, Cai C, Li H. Reinforcement Learning Based Dual-UAV Trajectory Optimization for Secure Communication. Electronics. 2023; 12(9):2008. https://doi.org/10.3390/electronics12092008

Chicago/Turabian StyleQian, Zhouyi, Zhixiang Deng, Changchun Cai, and Haochen Li. 2023. "Reinforcement Learning Based Dual-UAV Trajectory Optimization for Secure Communication" Electronics 12, no. 9: 2008. https://doi.org/10.3390/electronics12092008

APA StyleQian, Z., Deng, Z., Cai, C., & Li, H. (2023). Reinforcement Learning Based Dual-UAV Trajectory Optimization for Secure Communication. Electronics, 12(9), 2008. https://doi.org/10.3390/electronics12092008