Abstract

Machine translation has made remarkable progress in recent years. Its importance has grown with the need to understand information available on the internet in different languages and with the expansion of international trade. Faster computing, driven by advances in hardware, and the easy availability of monolingual and bilingual data are the main factors behind this success. This paper surveys machine translation models from the earliest systems to the current state of the art, providing a solid understanding of the different architectures together with a comparative evaluation and future directions for the translation task. Because statistical machine translation, neural machine translation, and hybrid models are the most frequently used types of machine translation, it is essential to understand how each one works. To understand the advantages and disadvantages of the various approaches, an in-depth comparison of several models on a variety of benchmark datasets is necessary. The accuracy of translations from multiple models is compared using metrics such as the BLEU, TER, and METEOR scores.

1. Introduction

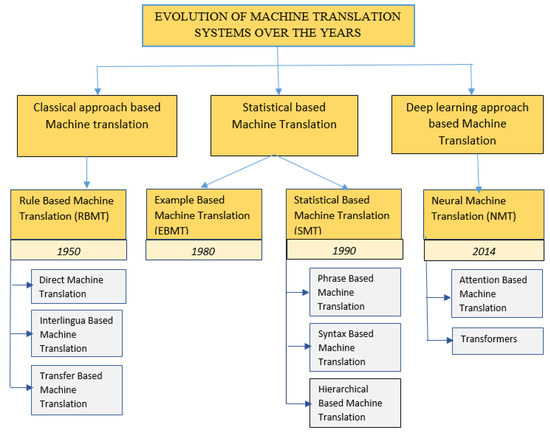

Machine translation is a sub-field of natural language processing that aims to translate automatically from one natural language to another. The need of multilingual people to understand, share, and exchange ideas on subjects of common interest gave birth to the field of “Machine Translation”. The concept of automating translation was first conceived in 1933 by Peter Petrovich Troyanskii [1], who presented his vision at the Academy of Sciences; his work was limited to preliminary discussions only. Later, in 1946, A. D. Booth and Warren Weaver [2] revived the idea of automating translation at the Rockefeller Foundation. Since then, many advances in both computing power and methodology have improved translation quality. The evolution of machine translation systems over the years is shown as a timeline in Figure 1. The approaches adopted so far for the machine translation task fall into three main categories, as shown in Figure 2.

Figure 1.

The evolution of machine translation systems over the years.

Figure 2.

The taxonomy is a representation of the evolving machine translation systems over the years.

The rule-based machine translation (RBMT) system was the first commercial translation system and follows a knowledge-driven approach [3]. It consists of two major components, namely rules and a lexicon. The generation rules are hand-crafted from linguistic knowledge, so there is an extensive dependency on linguistic data such as bilingual dictionaries, grammars, and morphological dictionaries. Various rule-based translation systems have been developed, such as OpenLogos MT (Scott and Barreiro, 2009) [4], Apertium (Forcada, 2006) [5] and Grammatical Framework (Ranta, 2011) [6].

The example-based machine translation (EBMT) system is also known as “memory-based translation”. It was proposed as a solution to overcome the limitations of RBMT. The idea was first conceived by Nagao in 1981, when he presented his paper “Translation by Analogy”, which was published three years later in 1984 [7]. EBMT is at times linked with translation memory because the two gained popularity at roughly the same time. The main idea of EBMT centers on the example database and the bilingual corpora that are managed in the translation memory. In the 1990s, two translation paradigms received much attention from the research community because of the positive results they demonstrated: example-based machine translation and statistical machine translation (SMT). Some initial works that helped EBMT gain recognition are the treatment of example selection by approximate input–output sentence matching (Sato and Nagao 1990) [8] and the example-based translation of technical computer terms (Sato 1993) [9].

The statistical machine translation (SMT) system follows a data-driven method and is based on probabilistic models. The statistical approach was first used to translate French sentences into English (Peter F. Brown 1990) [10]. Subsequent work includes a model that assigns a probability to each possible word-to-word alignment between bilingual sentence pairs (Peter F. Brown 1993) [11], discriminative models based on maximum entropy that capture sentence features (Och 2002) [12], translation models based on bilingual phrases that model local context (Manning 2000) [13], and the construction of meaningful sentences using a statistical approach to semantic parsing (Wong & Mooney 2006) [14]. These models have been widely used despite data sparsity being an issue. Various algorithms address the issues of machine translation, such as RNN encoding–decoding within the existing log-linear SMT framework, discourse-label-enabled coherence in SMT, transfer learning, machine translation using a self-attention mechanism, SMT based on unsupervised training and iterative refinement algorithms, neural machine translation using adversarial augmentation, machine translation using reinforcement learning, and the continuous semantic augmentation (CSANMT) paradigm for neural machine translation. The model types involved include a baseline, NMT (LSTM), NMT (transformer), hybrid (NMT + SMT), rule-based MT, phrase-based MT, neural CRF, neural Seq2Seq, and an ensemble of three models. In RNN encoding–decoding for log-linear SMT, one recurrent neural network (RNN) encodes the source sentence and a second RNN decodes it into the translation. SMT models that use this approach have shown considerable improvements in performance. When discourse-level information such as discourse labels is incorporated into SMT models, the coherence and fluency of the translations can be significantly improved.
Transfer learning is a strategy that trains a model on a large amount of data from one language pair and then uses that pre-trained model to initialize the weights for another language pair. For example, a model could first be built using Spanish–English data. This can be a significant advantage when training data for the target language pair are scarce. A machine translation model with a self-attention mechanism can concentrate on different parts of its input when producing a translation, which has the potential to improve translation quality. SMT methods that rely on unsupervised training and iterative refinement algorithms can improve translation quality by first training models on monolingual rather than parallel data; this is useful when parallel data are difficult to obtain. To produce more stable models, the adversarial augmentation approach to neural machine translation (NMT) uses adversarial training: the model is trained to generate translations that a discriminator network finds difficult to distinguish from human translations. Another approach to NMT uses a reinforcement learning framework to fine-tune translation performance, with a reward signal that represents the quality of the translations used during training. The continuous semantic augmentation model, also used in NMT, represents the meaning of words and phrases in a continuous semantic space in order to improve the accuracy and naturalness of translations.
In general, the available data, the available computational resources, and other considerations such as time constraints all play a part in choosing which method is ultimately used. An attention-based model consists of three components: an encoder, a decoder, and an attention mechanism. During decoding, the attention mechanism focuses on specific parts of the input sequence. These models reach state-of-the-art performance on a wide variety of benchmark datasets and have been shown to perform well even on very long input sequences. In models based on reinforcement learning, the translation model is improved iteratively under a reward function so that it produces translations of higher quality. Such models, which are trained with a mixture of supervised and reinforcement learning techniques, have been shown to handle difficult translation tasks well. Transformer models process input sequences using self-attention, which makes them useful for a wide variety of natural language processing applications, including machine translation. In recent years, transformer models have gained popularity because they improve on the previous state of the art on various benchmark datasets.
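As an illustration of how an attention mechanism focuses on parts of the input during decoding, the following is a minimal sketch in plain Python. It assumes scaled dot-product scoring, which is one common choice and is not specified in the text above; the function names `softmax` and `attend` and the toy vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [x / z for x in exps]

def attend(query, keys, values):
    """Return (weights, context) for one decoder query over encoder states.

    Assumption: scores are scaled dot products between the query and each key.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # The context vector is the attention-weighted average of the values.
    context = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
    return weights, context

# Toy encoder states (hypothetical numbers): the query is most similar to key 0.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, context = attend([1.0, 0.0], keys, values)
```

In this toy case the first input position receives the largest weight, so the context vector is dominated by the first value vector.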

In later years, following the massive success of deep learning methods in various applications, researchers applied these methods to translation tasks; they proved successful and eventually became the de facto standard in academia and industry [15]. This success revolutionized the field, and a new translation paradigm named “neural machine translation” (NMT) was developed. NMT uses neural networks; the first works to build a single neural network and tune it jointly to maximize translation performance were by Bahdanau and Sutskever. More recently, a completely different architecture was proposed by Google in the paper “Attention Is All You Need”, which changed the dynamics of the NLP field. The attention mechanism used in that paper focuses on the relevant parts of the sentence during translation and has become the de facto approach in most models.

This survey aims to highlight the significant approaches adopted for machine translation. In the survey tables, the performance metrics reported are BLEU score, NIST, and accuracy. BLEU, which stands for “Bilingual Evaluation Understudy”, is based on the geometric mean of n-gram precisions and is the most widely used metric for measuring machine-translated text against professional human translations, on a scale of 0 to 1. A value near 1 indicates more overlap between the human and machine translations. The second metric is accuracy, defined as the ratio of correctly predicted observations to total observations; it is mostly used for the classical approaches. Another metric used by some researchers is NIST, a close derivative of BLEU that weights each n-gram by how informative it is. The survey is organized around these metrics. The major contributions of this paper are:

- (i) A critical review of existing literature.

- (ii) A comparative analysis of deep learning enabled methods.
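To make the BLEU metric described in this section concrete, the following is a minimal sketch of a sentence-level BLEU computation. It assumes a single reference translation, whitespace-tokenized input, and no smoothing (the score is 0 if any n-gram order has no match). It includes the brevity penalty from the standard BLEU definition, which the text above does not discuss. The function names are hypothetical.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU on a 0-to-1 scale against one reference."""
    precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        # Clipped counts: a candidate n-gram is credited at most as often
        # as it occurs in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if total == 0 or overlap == 0:
            return 0.0
        precisions.append(overlap / total)
    # Brevity penalty discourages candidates shorter than the reference.
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the n-gram precisions.
    return bp * math.exp(sum(math.log(p) for p in precisions) / max_n)

reference = "the cat is on the mat".split()
candidate = "the cat sat on the mat".split()
score = bleu(candidate, reference, max_n=2)
```

For this pair the unigram precision is 5/6 and the bigram precision is 3/5, so with `max_n=2` the score is the square root of 0.5, roughly 0.71. An identical candidate and reference score 1.0.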

2. Classical Approach-Based Machine Translation

The earliest machine translation systems were based on the classical approach, which uses hand-crafted linguistic grammar rules. These rules are extracted from bilingual dictionaries of the source and target languages; they cover language-specific syntactic, semantic, morphological, and orthographic regularities and form the basis for translation. This approach depends heavily on linguistic theory; hence, it is resource-intensive. This section discusses rule-based machine translation, transfer-based machine translation, and interlingua-based machine translation.

2.1. Rule-Based Machine Translation

Rule-based machine translation, better known as “RBMT”, is based on a knowledge-driven approach. The translation system relies on a handcrafted lexicon and grammar rules, developed by experts over time, that play a major role in the various phases of translation. The complete translation process involves three phases, namely analysis, transfer, and generation, which require three modules: a source language parser, a source-to-target language transfer module, and a target language generator [16]. In the first phase, intensive linguistic analysis of the source sentence extracts essential information such as word senses, parts-of-speech (POS) tags, morphology, and named entities. In the second phase (lexical transfer), bilingual dictionaries are used to translate each source root word into the corresponding target language root word, followed by the translation of suffixes [17]. In the third and final phase, a word generator corrects the gender of the translated words and then enforces local and long-distance agreement. The functional flow of RBMT is shown in Figure 3.

Figure 3.

RBMT functional flow.
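The three phases of RBMT (analysis, transfer, generation) can be sketched with a toy English-to-Spanish pipeline. Everything here is an illustrative assumption and is not taken from any cited system: the four-word lexicon, the dictionaries `ANALYSIS`, `TRANSFER`, and `DET_FORMS`, and the single adjective-noun reordering rule. Real systems rely on far larger hand-built resources.

```python
# Hypothetical analysis lexicon: English surface form -> (lemma, POS, number).
ANALYSIS = {
    "the": ("the", "DET", None),
    "red": ("red", "ADJ", None),
    "house": ("house", "NOUN", "sg"),
    "houses": ("house", "NOUN", "pl"),
}
# Hypothetical bilingual dictionary on root words: lemma -> (Spanish lemma, noun gender).
TRANSFER = {"the": ("el", None), "red": ("rojo", None), "house": ("casa", "f")}
DET_FORMS = {("m", "sg"): "el", ("f", "sg"): "la", ("m", "pl"): "los", ("f", "pl"): "las"}

def analyse(sentence):
    """Phase 1: look up lemma, POS tag and number (unknown words raise KeyError)."""
    return [dict(zip(("lemma", "pos", "number"), ANALYSIS[w]))
            for w in sentence.lower().split()]

def transfer(tokens):
    """Phase 2: translate root words, then apply one structural rule."""
    out = []
    for tok in tokens:
        lemma, gender = TRANSFER[tok["lemma"]]
        out.append({**tok, "lemma": lemma, "gender": gender})
    # Assumed rule: English ADJ NOUN becomes Spanish NOUN ADJ.
    for i in range(len(out) - 1):
        if out[i]["pos"] == "ADJ" and out[i + 1]["pos"] == "NOUN":
            out[i], out[i + 1] = out[i + 1], out[i]
    return out

def generate(tokens):
    """Phase 3: inflect each word so it agrees with the noun in gender and number."""
    noun = next(t for t in tokens if t["pos"] == "NOUN")
    gender, number = noun["gender"], noun["number"]
    plural = "s" if number == "pl" else ""
    words = []
    for tok in tokens:
        if tok["pos"] == "DET":
            words.append(DET_FORMS[(gender, number)])
        elif tok["pos"] == "ADJ":
            # "rojo" -> "roj" + gender ending + plural ending
            words.append(tok["lemma"][:-1] + ("a" if gender == "f" else "o") + plural)
        else:
            words.append(tok["lemma"] + plural)
    return " ".join(words)

def translate(sentence):
    return generate(transfer(analyse(sentence)))
```

With this toy lexicon, `translate("the red houses")` yields `"las casas rojas"`: the noun's gender and number, found during analysis and transfer, drive the agreement applied during generation.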

Though SMT systems produce more fluent translations, RBMT is an appropriate choice for a low-resourced language because it pays attention to the details of the original content (G. Abercrombie 2016) [18].

2.1.1. Direct Machine Translation

The world’s first-generation translation systems were based on the “direct machine translation” approach, also known as literal translation, word-based translation, or dictionary translation. It relies on rules that are specific to a source–target language pair to carry out the translation. The direct approach forms the base of the Bernard Vauquois pyramid [19]. Such systems are easy to build for closely related languages. SYSTRAN (Wheeler, 1984) [20], a typical direct translation system, was initially developed only for translating Russian to English; the model was later refined to include other language pairs and became a major influence in machine translation. Other exemplary systems are PAHO (Leon, 1985) [21] and ANUSAARAKA (Bharati et al., 1997) [22]. The approach works at the word level: the source text is translated directly into the target language without passing through an intermediate representation. For analyzing the source and generating the target, such systems require a large bilingual dictionary and a monolithic program; hence, this approach is bilingual and unidirectional.

- Issues

- This approach works at the lexical level and does not account for the structural relationship between words. At the lexical level, frequent mistranslation and inappropriate syntax can be observed.

- The linguistic and computational naivety of this approach, which reflects the unsophisticated treatment of linguistics in early MT projects, was recognized as an issue in the late 1950s. A translation system developed this way is specific to one language pair and cannot be adapted to other language pairs.
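The direct approach and its first issue can be illustrated with a minimal word-for-word sketch. The small French-to-English dictionary and the function name `direct_translate` are hypothetical and serve only as an illustration.

```python
# Hypothetical French -> English bilingual dictionary (illustrative entries only).
DICTIONARY = {
    "la": "the",
    "maison": "house",
    "bleue": "blue",
    "est": "is",
    "petite": "small",
}

def direct_translate(sentence):
    """Replace each source word by its dictionary entry, keeping source word order.

    Words missing from the dictionary are copied through unchanged.
    """
    return " ".join(DICTIONARY.get(word, word) for word in sentence.lower().split())

same_order = direct_translate("la maison est petite")  # word order happens to match
wrong_order = direct_translate("la maison bleue")      # adjective stays after the noun
```

The first sentence comes out as "the house is small". The second comes out as "the house blue", because a purely lexical system has no notion of the structural relationship between the noun and its adjective.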

2.1.2. Interlingua-Based Machine Translation

The interlingua approach sits at the top of the pyramid and introduces an intermediate representation of the source text. Translation involves two phases: in the first phase, the source language is translated into an abstract, language-independent representation called the interlingua; in the second phase, the interlingua is expressed using the lexical and syntactic rules of the target language. Much work has been done to improve translation quality. Successfully implemented systems include CETA (Vauquois, 1975) [23], TRANSLATOR (Nirenburg et al., 1987) [24] and ANGLABHARTI (Sinha et al., 1995) [25]. Other work includes the BIAS analyzer, which incorporates the interlingua model to provide a unified method of knowledge reasoning (Yusuf, 1992) [26]; the design and development of KANT, a knowledge-based machine translation system (Lonsdale, 1994) [27]; and the creation of dictionaries based on lexical conceptual structure for use in foreign language tutoring (FLT) and interlingua MT (Dorr, 1997) [28].

- Issues

- The main issue lies in defining an interlingua representation that keeps the meaning of the original sentence intact.

2.1.3. Transfer-Based Machine Translation

The transfer approach generates the translation from an intermediate representation that captures the meaning of the original sentence. Because of the structural differences between the source and target languages, transfer systems are decomposed into three phases. (1) The first phase is the “analysis phase”, in which the source language (SL) parser produces a syntactic representation of the source sentence. (2) The second phase translates this syntactic representation into an equivalent target language-oriented representation. (3) In the final phase, the target text is generated. Transfer translation systems include EUROTRA (Arnold and des Tombe, 1987) [29], METAL (Bennett, 1982) [30] and the SHAKTI machine translation system (Bharati et al., 2003) [31]. Designing the rules for rule-based systems consumes much time and human effort, so attempts were made to generate the rules automatically in the form of transfer rules, including inflection rules, conjugation rules, and word-level correspondence rules. Agglutinative languages present particular linguistic features that are costly to curate in a bilingual environment, so an attempt was made to generate transfer rules from small or medium-sized corpora [32].

- Issues

- Compilation of dictionaries makes one walk through the minefield of copyright issues (F. Bond et al., 2005).

- Lack of good dictionaries. Building a new dictionary consumes much time and human effort.

- Inefficiency in adapting to a new domain and keeping up with changing technology. Difficulty in dealing with rule interactions in big systems, ambiguity, and idiomatic expressions. Sometimes the meaning of the source sentence is lost.

3. Statistical Approach-Based Machine Translation System

Statistical approaches perform much better than the classical approach and offer an alternative to it. The two main statistical approaches to machine translation are Example-Based Machine Translation (EBMT) and Statistical Machine Translation (SMT). The translation is produced by statistical models whose parameters are estimated from bilingual parallel-aligned text corpora, which form the basis for translation. However, the approach may not be practically feasible for zero- or low-resourced languages.

3.1. Example-Based Machine Translation (EBMT)

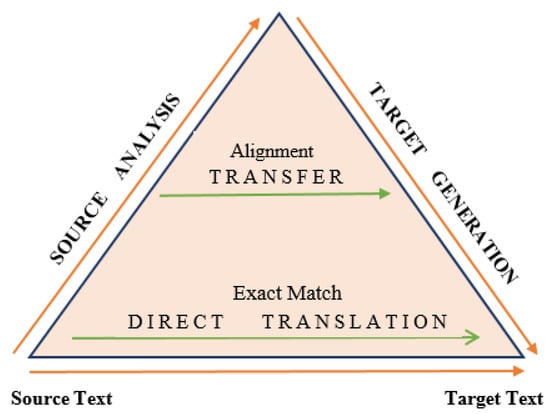

Example-Based Machine Translation (EBMT) is a corpus-based, i.e., data-driven, approach that sits between RBMT and SMT. The first paper was published by Nagao (1984) [7], and the idea is to use example translations as a reference. The Vauquois pyramid of the classical approach has been adapted for EBMT with the addition of alignment and exact match, as shown in Figure 4. Makoto Nagao captured the essence of example-guided machine translation in his statement: “A man does not translate a sentence by performing linguistic analysis, but rather does the translation by segmenting the sentence into fragmental phrases which are translated into target language phrases and finally ordering them into one line sentence [33]. The translation of every phrase is done on grounds of analogy translation principle with proper examples as references”. He identified three components that are essential to EBMT: (1) matching fragments against a database of real examples; (2) identifying the corresponding translation fragments; and (3) recombining the corresponding translation fragments to produce the target text.

Figure 4.

The “Vauquois Pyramid” adapted for EBMT [34].

Perhaps it is didactic to take the familiar pyramid diagram (Vauquois, 1968) and superimpose EBMT on it. For an EBMT system, the sentence is the ideal translation unit, a feature it shares with translation memory technology. If no translation for the exact sentence is found, EBMT uses a similarity metric to find the best-matching examples. The source sentence analysis phase of traditional machine translation is replaced by matching the input against the example set. Once a relevant example is selected, the corresponding fragments in the target are selected, a step termed “alignment” or “adaptation”. The chosen fragments are then recombined to form the target text. In some implementations, the matching and recombination phases use techniques similar to those of the analysis and generation phases of traditional machine translation. One place where the pyramid does not fit EBMT is the relation between “direct translation” and “exact match”. The two are alike in requiring the least analysis; from another perspective, however, an exact match is a “perfect representation” that requires no adaptation, and hence it could be located at the top of the pyramid.
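The matching step described above, in which a similarity metric retrieves the closest stored example when no exact match exists, can be sketched as follows. This sketch covers retrieval only, not alignment or recombination. The similarity metric is assumed to be the ratio from Python's `difflib.SequenceMatcher` applied to word lists, which is not a metric used by any cited system. The two-entry English-to-German example base is hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical example base of (source sentence, stored translation) pairs.
EXAMPLES = [
    ("he buys a notebook", "er kauft ein Notizbuch"),
    ("she reads the newspaper", "sie liest die Zeitung"),
]

def best_example(sentence, examples=EXAMPLES):
    """Return (source, translation, similarity) of the closest stored example."""
    tokens = sentence.split()

    def score(pair):
        # Word-level similarity in [0, 1]; 1.0 means an exact match.
        return SequenceMatcher(None, tokens, pair[0].split()).ratio()

    source, target = max(examples, key=score)
    return source, target, score((source, target))

source, target, similarity = best_example("he buys a book")
```

Here the input shares three of its four words with the first example, so that example is retrieved with a similarity of 0.75. A full EBMT system would then adapt the retrieved translation by replacing the fragment that corresponds to the unmatched word.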

Since EBMT is a corpus-based approach, the parallel-aligned corpus is at its heart. The question then arises of how large the corpus should be. Although data are crucial for translation, no report so far establishes the required size, but the few experiments conducted convey the importance of data. One experiment by Mima et al. (1998) [35] showed how translation quality improved as more examples were added to the database. In a test case on the Japanese adnominal particle construction, 774 examples were loaded into the database in increments of 100. Translation accuracy rose visibly, from 30 percent with 100 examples to 65 percent. Though the improvement was linear, it was observed that beyond some limit, adding more examples did not further increase translation quality.

The main knowledge base for EBMT stems from examples and generalized examples, which bypasses the knowledge acquisition bottleneck found in RBMT. One criterion for what counts as EBMT might be whether the examples are used at run time; however, this criterion would rule out statistical methods, in which, although rules are not derived from examples in the traditional sense, the example database is not consulted at run time [36]. The example-based approach offers a little relief from “structure-preserving translation”, but some issues remain and are listed below. A brief survey of example-based machine translation is shown in Table 1. The survey table describes the papers that formed a significant part of its evolution, highlighting the proposed methodology, techniques used, training data, and performance measured by BLEU score and accuracy.

Table 1.

Survey for example-based machine translation.

- Issues

- EBMT is suitable for sub-languages. Once the right corpus is located, the problem becomes one of alignment: identifying, at a finer granularity than the sentence, the fragments that correspond to each other. EBMT does not compose structure, so the source language structure is not imposed on the target language structure.

- Another issue is that storing examples as complex structures incurs a large computational expense.

3.2. Statistical Machine Translation

SMT is a machine translation paradigm based on probability theory, which holds that the chance of an event depends on the variables likely to influence it. The initial works address the translation of individual sentences; many acceptable translations are possible, and the selection among them is largely a matter of taste. The objective of SMT is to translate a source sentence $f$ into a target sentence $e$. Every translation pair is assigned a probability $P(e \mid f)$, interpreted as the probability that a translator produces $e$ when given $f$. The chance of error is minimized by selecting the sentence $\hat{e}$ that maximizes this conditional probability, which by Bayes’ rule is equivalent to maximizing $P(e)\,P(f \mid e)$ over the possible translations [10]:

$$\hat{e} = \arg\max_{e} P(e \mid f) = \arg\max_{e} P(e)\, P(f \mid e) \qquad (1)$$

This approach to modeling translation is known as the noisy channel framework, of which the IBM models are an instance. The framework comprises two components: the translation model $P(f \mid e)$ and the language model $P(e)$.
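The interplay of the two components can be sketched with a toy decoder that scores each candidate translation $e$ by the product of a language model score $P(e)$ and a translation model score $P(f \mid e)$ and keeps the best one. The candidate list and all probabilities below are hypothetical numbers chosen for illustration; a real decoder searches a very large space of candidates.

```python
# Hypothetical scores for three candidate translations e of one source sentence f.
CANDIDATES = {
    "the house is small": {"lm": 0.020, "tm": 0.30},   # fluent and faithful
    "small the is house": {"lm": 0.0001, "tm": 0.30},  # right words, not fluent
    "the house is big":   {"lm": 0.020, "tm": 0.01},   # fluent, wrong meaning
}

def decode(candidates):
    """Return the candidate e that maximizes P(e) * P(f | e)."""
    return max(candidates, key=lambda e: candidates[e]["lm"] * candidates[e]["tm"])

best = decode(CANDIDATES)
```

The language model penalizes the scrambled candidate and the translation model penalizes the unfaithful one, so only the candidate that is both fluent and faithful obtains a high product.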

- Language Model

The language model computes the probability of a word given all the words that precede it. Consider a sentence $e$ decomposed into $m$ words, $e = e_1 e_2 \ldots e_m$. Its probability is the product of the conditional probabilities of each word given its history, $P(e) = \prod_{j=1}^{m} P(e_j \mid e_1, \ldots, e_{j-1})$. Because the number of possible histories is enormous, this yields far too many parameters to estimate efficiently or realistically. Hence, a bigram language model considers only the immediately preceding word as history, and a trigram model considers the two preceding words [11].
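A bigram language model of the kind described above can be sketched in a few lines. The sketch assumes maximum-likelihood estimates without smoothing, so any unseen bigram gives a sentence probability of zero. The sentence boundary markers `<s>` and `</s>`, the function names, and the three-sentence training corpus are illustrative assumptions.

```python
from collections import Counter

def train_bigram(sentences):
    """Count histories and bigrams over sentences padded with boundary markers."""
    histories, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        histories.update(tokens[:-1])              # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))
    return histories, bigrams

def sentence_prob(sentence, histories, bigrams):
    """P(e) as a product of P(word | previous word), estimated by relative frequency."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        if histories[prev] == 0:
            return 0.0  # unseen history
        prob *= bigrams[(prev, word)] / histories[prev]
    return prob

corpus = ["the house is small", "the house is big", "the book is small"]
histories, bigrams = train_bigram(corpus)
p = sentence_prob("the house is small", histories, bigrams)
```

On this corpus, "house" follows "the" in two of three cases and "small" follows "is" in two of three cases, while every other transition in the sentence is certain, so `p` equals 2/3 × 2/3 = 4/9.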

- Translation Model

When a sentence is translated, the words in the source text generate the words in the target text, so we say there is a word-to-word alignment. Sometimes, however, a source word generates two or more words in the target language, and sometimes it generates no word at all. The general correspondence between the words of the two sentences is what we term an alignment. Two notions derived from alignment, fertility and distortion, help in building a translation system [47]. Fertility is the number of target words generated by a source word. Distortion describes how far a target word moves from the position of the source word that generated it: when a source word and its corresponding target word or words appear in the same part of the sentence, for example both near the beginning or both near the end, the words are said to be translated roughly undistorted.
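Fertility can be read directly off an alignment, as the following minimal sketch shows. It follows the noisy-channel convention in which each word of $e$ generates zero or more words of $f$. The English-French sentence pair and its alignment are an illustrative assumption, and `fertilities` is a hypothetical helper name.

```python
from collections import Counter

e_words = ["And", "the", "program", "has", "been", "implemented"]
f_words = ["Le", "programme", "a", "été", "mis", "en", "application"]
# alignment[j] is the index in e_words of the word that generated f_words[j].
alignment = [1, 2, 3, 4, 5, 5, 5]

def fertilities(e_words, alignment):
    """Number of f words generated by each e word (0 if it generates none)."""
    counts = Counter(alignment)
    return [counts[i] for i in range(len(e_words))]

fert = fertilities(e_words, alignment)
```

In this example "implemented" has fertility 3 because it generates "mis en application", "And" has fertility 0 because it generates nothing, and every other word has fertility 1.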

IBM models proved to be a significant landmark in the history of machine translation. In the late 1980s, the seminal work of researchers at the IBM Watson Research Center revived the statistical approach to machine translation. In 1990, Brown et al. reported their first experimental results for French–English translation using statistical techniques. The proposed model attained 48% accuracy, with 5% of the sentences translated exactly as in the reference translation. Further discussion concluded that the model alleviates about 60% of the manual work of translation. The model introduced the concept of word-by-word alignment: each word acts as a translation unit, and probabilities are calculated over a word alignment $a$ between a pair of sentences $(f, e)$, which shows the origin in $e$ of each word in $f$. Equation (1) is modified to integrate the alignment variable $a$, so that the translation model becomes $P(f \mid e) = \sum_{a} P(f, a \mid e)$.

For IBM Model 1 and IBM Model 2, $P(f, a \mid e)$ is decomposed into three probability distributions: (1) over the target sentence length, (2) over the alignment configuration of the alignment model, and (3) over the target sentence, given the source sentence and the alignment configuration. The core difference between IBM Models 1 and 2 lies in how they compute alignment probabilities. IBM Model 1 assumes a uniform distribution over alignments, while IBM Model 2 uses a zero-order alignment model in which the alignments at different positions are independent of each other. For IBM Models 3, 4, and 5, $P(f, a \mid e)$ is decomposed so that fertilities are parameterized directly. The fertility of each word in $e$ is computed along with the set of words in $f$ that it generates.

For IBM Models 3 and 4, the probability mentioned above is further decomposed into fertility, distortion, and translation probabilities. The core difference between IBM Models 3 and 4 lies in the formulation of the distortion probabilities. Model 3 assumes zero-order distortion probabilities, meaning that the probability depends only on the current position and on the lengths of the e and f sentences, whereas IBM Model 4 parameterizes the distortion probabilities in two parts: (1) placing the head of each word, and (2) placing the rest of the words. However, both models fail to focus on the events of interest and hence are deficient. IBM Model 5, the last model in the IBM series, is intended to avoid this deficiency by reformulating the alignment model of its immediate predecessor. Each of the five IBM models decomposes the translation model in a specific way. Each distribution models its events well, but there is still scope for improving the models’ capacity.
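Of the five models, IBM Model 1 is the simplest to sketch because its alignment distribution is uniform, which leaves only the lexical translation table to estimate. The following minimal sketch trains that table with expectation-maximization (EM). It makes simplifying assumptions: there is no NULL word, the table is keyed as `t[(f, e)]` to approximate $P(f \mid e)$, and the three-sentence German-English corpus is a toy illustration. The function name is hypothetical.

```python
from collections import defaultdict

def train_ibm_model1(corpus, iterations=10):
    """corpus: list of (f_words, e_words) pairs. Returns t[(f, e)] ~ P(f | e)."""
    f_vocab = {f for f_sent, _ in corpus for f in f_sent}
    # Uniform initialization of the lexical translation table.
    t = defaultdict(lambda: 1.0 / len(f_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected number of times e generates f
        total = defaultdict(float)  # expected number of words generated by e
        for f_sent, e_sent in corpus:
            for f in f_sent:
                # E-step: share one unit of mass for f among the words of e_sent
                # in proportion to the current t(f | e).
                norm = sum(t[(f, e)] for e in e_sent)
                for e in e_sent:
                    frac = t[(f, e)] / norm
                    count[(f, e)] += frac
                    total[e] += frac
        # M-step: renormalize the expected counts for each e.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t

corpus = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]
t = train_ibm_model1(corpus, iterations=20)
```

Although no alignments are given, the co-occurrence pattern alone drives the table toward pairing "das" with "the", "haus" with "house", "buch" with "book", and "ein" with "a". EM finds a local optimum of the likelihood, which for Model 1 is also the global one.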

To improve the capacity and accuracy of statistical models, Vogel proposed a new alignment model based on Hidden Markov Models (HMM) that captures strong localization effects between the language pairs. The translation model is decomposed into HMM alignment probabilities and translation probabilities, and the alignment probabilities are made to depend on the relative position of the word alignment. SMT systems produce more fluent translations than RBMT. Furthermore, the error rate of SMT is 29%, considerably lower than the 62% of classical RBMT. The use of bilingual words significantly improves the translation quality (2002). The phrase-based approach is better than the single-word approach because the phrase acts as a single translation unit that captures contextual meaning.

- Issues

- Difficult to apply to agglutinative languages.

- The construction of a corpus is expensive due to limited resources. SMT does not work well with language pairs that have different word orders.

The three predominant types of statistical machine translation built in the past are:

3.2.1. Phrase-Based SMT Systems

Phrase-based models are an advancement over word-based ones in which phrases are the basic unit of translation. A phrase is a contiguous sub-sequence of words in a sentence, which allows a model to learn local word reorderings, deletions, and insertions that are sensitive to the local context. The process starts by segmenting the input sentence into phrases that act as atomic units of translation; each phrase is translated, and the translated phrases are reordered in the target language. To perform phrase translation, phrases are extracted by training statistical alignment models and computing the word alignment of the training corpus in both directions. The union of both alignments gives a symmetrical word alignment matrix, the starting point for phrase extraction. A hidden variable is introduced to utilize phrases in the translation model, and one-to-one phrase alignment is used to obtain phrase translations. Many researchers have tried to improve translation quality by advancing the state of the art with these models. Phrase-based systems were refined by using alignment templates (Och et al. 1999) [48]; a joint probability model was proposed in which translations between phrases are learned without a word alignment model (Marcu & Wong, 2002) [49]; phrases longer than three words were found to add little to performance (Koehn, 2003) [50]; and some refinements to the baseline, such as phrase and word penalties, were introduced. The survey on phrase-based machine translation is shown in Table 2. The table highlights the important works of the phrase-based approach and describes the proposed methodology, the techniques used along with the training data, and the performance evaluated with the BLEU and NIST metrics.

Table 2.

Survey for phrase-based statistical machine translation.
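The phrase extraction step described in this subsection can be sketched as follows. Given a symmetrized word alignment, the sketch collects every phrase pair that is consistent with it, meaning that no word inside the pair is aligned to a word outside it. This is a simplified version of the commonly used consistency criterion: it does not extend phrases over unaligned words, and the function name, the `max_len` limit, and the French-English example are illustrative assumptions.

```python
def extract_phrases(src, tgt, alignment, max_len=3):
    """Return the set of (source phrase, target phrase) pairs consistent with
    `alignment`, a set of (source index, target index) links."""
    pairs = set()
    for i1 in range(len(src)):
        for i2 in range(i1, min(i1 + max_len, len(src))):
            # Target positions linked to the source span [i1, i2].
            tgt_pos = [j for (i, j) in alignment if i1 <= i <= i2]
            if not tgt_pos:
                continue
            j1, j2 = min(tgt_pos), max(tgt_pos)
            if j2 - j1 >= max_len:
                continue
            # Consistency: no target word in [j1, j2] may link outside [i1, i2].
            if any(j1 <= j <= j2 and not (i1 <= i <= i2) for (i, j) in alignment):
                continue
            pairs.add((" ".join(src[i1:i2 + 1]), " ".join(tgt[j1:j2 + 1])))
    return pairs

src = ["la", "maison", "bleue"]
tgt = ["the", "blue", "house"]
alignment = {(0, 0), (1, 2), (2, 1)}  # maison-house and bleue-blue cross over
pairs = extract_phrases(src, tgt, alignment)
```

The pair ("maison bleue", "blue house") is extracted, which lets the model memorize the local reordering as a unit. The span "la maison" is rejected because its target side would have to include "blue", which is aligned to a word outside the span.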

3.2.2. Syntax-Based SMT System

The syntax-based model exploits syntactic structure in the input to the channel. The decoder builds a parse tree for the target sentence given the source sentence. The procedure starts from the context-free grammar of the target language obtained from the training corpus, and the channel applies certain operations to the tree nodes, namely reordering, inserting, and translating, each conditioned on features of the node and independent of the others. The IBM-MT system (1988) used statistical techniques that facilitated a complex glossary of fixed correspondences at fixed locations. However, this TM could not model structural or syntactic aspects for languages with different word orders (alignment score = 0.431); this issue was overcome in 2001, when the channel model was made to accept a parse tree on which it could perform these operations, and the alignment score rose to 0.582. The survey for syntax-based machine translation is shown in Table 3.

Table 3.

Survey for Syntax based machine translation.

3.2.3. Hierarchical Based SMT Systems

There are some key issues: (1) A target word can be aligned to only a single source word, which leads to modeling issues. For instance, “teitadake” is translated into English as “could you,” but SMT allows only “could” to be mapped, and “you”, being unmapped, is treated as having zero fertility. (2) Various parameters rely on the EM algorithm for training, which can optimize the log-likelihood of a model over a bilingual corpus, but this algorithm is not guaranteed to find the globally best solution. (3) The success of SMT also relies on the search system (decoder), which should induce the source string from a sequence of target words by using clues from a large number of parameters. To overcome such issues, a hierarchical phrase alignment approach was proposed (Imamura, 2001) [66], which aims to acquire translation knowledge automatically from a bilingual corpus without syntactic annotation. It computes the correspondence of sub-trees between the source and target sentence parse trees based on partial parse results. The survey on hierarchical-based machine translation is shown in Table 4.

Table 4.

Survey of hierarchically based machine translation.

4. Deep Learning Approach-Based Machine Translation System

Deep learning approaches, when incorporated into machine translation tasks, showed promising results, revolutionized the natural language processing field, and became the mainstream approach in industry and academia [69]. With a suitable architecture, the system automatically learns features from the training data. The approach requires minimal domain knowledge and has achieved outstanding performance in various complex tasks. In comparison to the previously mentioned approaches, the deep learning approach can produce better translations by capturing long-term dependencies and removing unnecessary preprocessing and feature engineering steps [70].

4.1. Neural Machine Translation (NMT)

Experiments showed that continuous representations of linguistic units yield state-of-the-art results for large-scale translation tasks such as English-to-French and English-to-German translation. In 1997, Neco and Forcada [71] proposed a modification of Pollack’s RAAM called RHAM (Recursive Hetero-Associative Memory), which consists of two feed-forward networks, namely an “encoder” and a “decoder”, and which could also be used to learn general translations from examples. Later, in 2003, a neural network-based language model was developed by Yoshua Bengio to alleviate the data sparsity issue of SMT [72]. In 2013, a neural machine translation system was formally introduced (Kalchbrenner and Blunsom, 2013) [73]; unlike other translation models, which consist of various separately designed components, NMT is an attempt to build a translation model with a single large neural network. Similar works have been done by (Sutskever et al., 2014) [74] and (Cho et al., 2014a) [75]. The model has received much attention over the last couple of years because of its conceptual simplicity and minimal domain knowledge requirement. The approach has aided in modeling continuous representations of linguistic units. It has also shown promising results when applied to other domains of research such as computer vision (Krizhevsky, 2012) [76], speech recognition (Hinton et al.) [77], character recognition (Ling et al., 2015) [78], etc. Its tremendous rise in performance and overall technological advances have made it state-of-the-art.

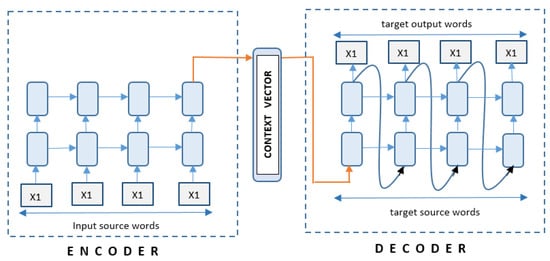

4.2. RNN Encoder-Decoder Framework

The neural machine translation system architecture consists of two core parts: an encoder and a decoder which are implemented using recurrent neural networks (RNNs). The neural network components for machine translation are shown in Figure 5. This architecture forms the base for most sequence-to-sequence models, and here, we discuss the general form of encoder–decoder architecture.

Figure 5.

Basic encoder–decoder framework.

- Encoder RNN

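An RNN is a neural network that takes a variable-length sequence of the source text $x = (x_1, \ldots, x_T)$ as input and produces a sequence of hidden-state vectors $(h_1, \ldots, h_T)$. At each time step $t$, the hidden state is updated by

$$h_t = f(h_{t-1}, x_t)$$

where f is a non-linear transformation function. An RNN can be trained to predict a distribution over the next word $x_t$ given the words that precede it by applying a softmax function over the vocabulary V at every time step.

- Decoder RNN

The main goal of the decoder is to predict the next word in the target sentence given the previously predicted words and the context vector c, which is a fixed-sized vector that holds the meaning of the source sentence. The decoder operates in a similar way to the encoder; it also maintains a hidden state $s_t$ at every time step t, which is updated as:

$$s_t = q(s_{t-1}, y_{t-1}, c)$$

where q is a non-linear function. To find the target word, the decoder's conditional distribution is calculated as:

$$p(y_t \mid y_1, \ldots, y_{t-1}, c) = g(y_{t-1}, s_t, c)$$

where g is a non-linear function that outputs a probability for each word in the vocabulary.

The encoder and the decoder of the NMT system are trained together to maximize the conditional log-likelihood

$$\max_{\theta} \frac{1}{N} \sum_{n=1}^{N} \log p_{\theta}\left(y^{(n)} \mid x^{(n)}\right)$$

where $\theta$ denotes the model parameters and $(x^{(n)}, y^{(n)})$ are the N source–target sentence pairs of the training set.

The basic encoder–decoder framework has been extended by replacing the RNN cells of the encoder with bidirectional recurrent networks (Bi-RNN) while keeping the other variables as they are, and the conditional probability is computed as:

$$p(y_i \mid y_1, \ldots, y_{i-1}, x) = g(y_{i-1}, s_i, c_i)$$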

Unlike the above-mentioned framework, the probability for each target word is conditioned on a distinct context vector. The survey conducted for neural-based machine translation is shown in Table 5. The survey table highlights the research work that forms a significant part of the evolution of neural-based MT describing the proposed methodology, techniques used, and the training data and performance evaluated on the BLEU metric.
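The encoder and decoder updates described in this section can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the non-linear functions are taken to be a tanh layer, the context vector is taken to be the last encoder state, and all sizes, weight names (`W_xh`, `W_hh`, `W_ys`, `W_ss`, `W_cs`, `W_out`), and random inputs are hypothetical; no training is performed.

```python
import math
import random


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def add(*vectors):
    return [sum(vals) for vals in zip(*vectors)]


def tanh_vec(v):
    return [math.tanh(x) for x in v]


def softmax(v):
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
EMB, HID, VOCAB = 4, 5, 6                       # hypothetical sizes
W_xh, W_hh = rand_matrix(HID, EMB, rng), rand_matrix(HID, HID, rng)   # encoder weights
W_ys, W_ss = rand_matrix(HID, EMB, rng), rand_matrix(HID, HID, rng)   # decoder weights
W_cs, W_out = rand_matrix(HID, HID, rng), rand_matrix(VOCAB, HID, rng)


def encode(xs):
    """Update the hidden state from (h_{t-1}, x_t); the last state is used as context c."""
    h = [0.0] * HID
    for x in xs:
        h = tanh_vec(add(matvec(W_xh, x), matvec(W_hh, h)))
    return h


def decode_step(s_prev, y_prev, c):
    """New decoder state from (s_{t-1}, y_{t-1}, c), then a softmax over the vocabulary."""
    s = tanh_vec(add(matvec(W_ys, y_prev), matvec(W_ss, s_prev), matvec(W_cs, c)))
    return s, softmax(matvec(W_out, s))


source = [[rng.uniform(-1, 1) for _ in range(EMB)] for _ in range(3)]  # 3 embedded words
c = encode(source)
s, probs = decode_step([0.0] * HID, [0.0] * EMB, c)
print(len(c), round(sum(probs), 6))
```

Because the whole source sentence is compressed into the single vector `c`, the sketch also makes the fixed-size bottleneck of the basic framework visible.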

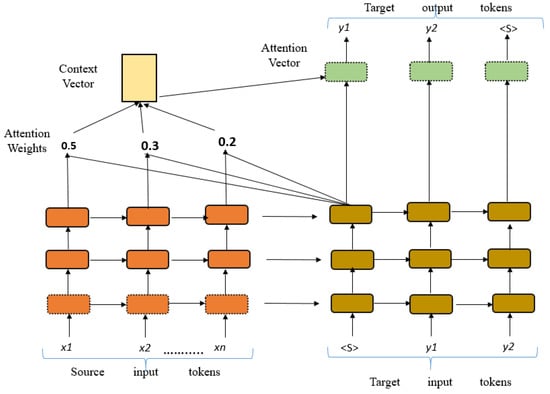

4.3. Integrated Attention Layer-Based NMT

The basic encoder–decoder model compresses the meaning of the original sentence into a fixed-sized vector, better known as a summary vector or a context vector. However, as the length of the sentence increases, the model fails to encode the information efficiently into the context vector, leading to the loss of relevant information. In order to remove this bottleneck, Bahdanau et al. (2014a) [79] extended the baseline with an attention mechanism, an intermediate component between the encoder and the decoder whose main objective is to provide word alignment information (word correlation) dynamically during translation. It allows the model to learn alignments between various modalities and to search automatically for the parts of the source sentence that are relevant for predicting the target words. Their work showed that the source sentence need not be encoded into a single fixed-sized vector; rather, at each decoding time step, the decoder can look at all the fixed-length vectors computed for each word, called annotation vectors, to generate tokens in the target language. The diagrammatic representation of the attention mechanism can be seen in Figure 6. From a mathematical perspective, the conditional probability of each target word is defined as

$$p(y_i \mid y_1, \ldots, y_{i-1}, x) = g(y_{i-1}, s_i, c_i)$$

where $c_i$ is a context vector and $s_i$ is the RNN hidden state at time step i, computed as:

$$s_i = q(s_{i-1}, y_{i-1}, c_i)$$

Figure 6.

The attention mechanism provides additional alignment information rather than just using fixed sized context vector.

The context vector $c_i$ depends on a sequence of annotations $(h_1, \ldots, h_{T_x})$ and is computed as a weighted sum of those annotations $h_j$:

$$c_i = \sum_{j=1}^{T_x} \alpha_{ij} h_j$$

where the attention weights $\alpha_{ij}$ represent a probabilistic alignment showing how words in the source and target languages can be aligned to generate target words. The weight for each annotation $h_j$ is calculated as:

$$\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k=1}^{T_x} \exp(e_{ik})}$$

where $e_{ij} = a(s_{i-1}, h_j)$ is the alignment model, which must be computed before the attention weights. The alignment score $e_{ij}$ measures the relevance of the j-th word in the source sentence to the i-th target word. It is computed for all source words to generate each target word. The proposed model uses a bidirectional RNN (Bi-RNN) for the encoder and a unidirectional RNN for the decoder; Bahdanau et al. trained their model on the ACL WMT ’14 English–French parallel corpora. They experimented with source sentences of varying length and showed that the performance of the conventional NMT model drops when the source sentence length exceeds 30. With their proposed approach, however, the model continues to achieve good performance even on long sentences.
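The weighted-sum computation described above can be sketched as follows. The alignment scores are passed in directly rather than produced by a learned alignment model, and the annotation vectors are toy values; both are assumptions made only for illustration.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(scores, annotations):
    """Normalise alignment scores into attention weights and return the
    weighted sum of the annotation vectors (the context vector)."""
    weights = softmax(scores)
    dim = len(annotations[0])
    context = [sum(w * h[k] for w, h in zip(weights, annotations)) for k in range(dim)]
    return weights, context


# Toy annotations for a three-word source sentence (hypothetical values).
annotations = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Equal scores give uniform weights, so the context is the plain average.
weights, context = attention_context([0.0, 0.0, 0.0], annotations)
print(weights, context)

# A dominant score concentrates the context on one annotation.
sharp_weights, sharp_context = attention_context([10.0, 0.0, 0.0], annotations)
print(sharp_weights[0] > 0.99)
```

A separate context vector is computed for every target position, which is what distinguishes this scheme from the single fixed-size context of the basic framework.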

The attention architecture is classified into two types: global attention, which looks at all source positions, and local attention, which looks at only a subset of the source positions during the decoding phase. During this phase, the common step in both types is to take the target hidden state $h_t$ as input and derive a context vector $c_t$ that helps predict the target word $y_t$. The target hidden state $h_t$ and the context vector $c_t$ are concatenated to produce an attentional hidden state:

$$\tilde{h}_t = \tanh\left(W_c [c_t; h_t]\right)$$

The attentional hidden state $\tilde{h}_t$ is passed through a softmax layer to produce the predictive distribution, i.e., to find the most probable word from the vocabulary:

$$p(y_t \mid y_{<t}, x) = \operatorname{softmax}\left(W_s \tilde{h}_t\right)$$

- Global Attention

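The architecture that implements global attention considers all encoder hidden states $\bar{h}_s$ to derive a context vector. In order to compute the context vector $c_t$, a variable-length alignment vector $a_t$, whose size equals the number of source time steps, is first computed:

$$a_t(s) = \operatorname{align}(h_t, \bar{h}_s) = \frac{\exp\left(\operatorname{score}(h_t, \bar{h}_s)\right)}{\sum_{s'} \exp\left(\operatorname{score}(h_t, \bar{h}_{s'})\right)}$$

The content-based function used to compare the two states is referred to as the score:

$$\operatorname{score}(h_t, \bar{h}_s) = \begin{cases} h_t^{\top} \bar{h}_s & \text{(dot)} \\ h_t^{\top} W_a \bar{h}_s & \text{(general)} \\ v_a^{\top} \tanh\left(W_a [h_t; \bar{h}_s]\right) & \text{(concat)} \end{cases}$$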
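As an illustration of global attention, the sketch below scores a target hidden state against every encoder state and normalises the scores into an alignment vector. Only the dot and general (bilinear) score variants are shown; the matrix `W_a` and the toy states are hypothetical stand-ins for learned parameters and real hidden states.

```python
import math


def dot_score(h_t, h_s):
    """Dot-product score between a target state and a source state."""
    return sum(a * b for a, b in zip(h_t, h_s))


def general_score(h_t, h_s, W_a):
    """Bilinear score: h_t^T W_a h_s."""
    projected = [sum(w * x for w, x in zip(row, h_s)) for row in W_a]
    return dot_score(h_t, projected)


def global_alignment(h_t, encoder_states, score=dot_score):
    """Alignment vector with one weight per source position."""
    raw = [score(h_t, h_s) for h_s in encoder_states]
    m = max(raw)
    exps = [math.exp(r - m) for r in raw]
    total = sum(exps)
    return [e / total for e in exps]


encoder_states = [[1.0, 0.0], [0.0, 1.0]]     # toy source hidden states
alignment = global_alignment([1.0, 0.0], encoder_states)
print(alignment)   # the first source position receives the larger weight

identity = [[1.0, 0.0], [0.0, 1.0]]
print(general_score([1.0, 2.0], [3.0, 4.0], identity) == dot_score([1.0, 2.0], [3.0, 4.0]))
```

With an identity matrix the general score reduces to the dot score, which is a convenient sanity check when experimenting with other choices of `W_a`.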

- Local Attention

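The above-mentioned approach is costly for longer sequences because all source words have to be attended to in order to predict each target word. To improve upon it, the local attention-based architecture chooses to attend to only a subset of the source positions. To achieve this, the architecture produces an aligned position $p_t$ for each target word. To compute the context vector $c_t$, a fixed-dimensional alignment vector $a_t \in \mathbb{R}^{2D+1}$ is generated over the window $[p_t - D, p_t + D]$ of source positions, where the aligned position is chosen as either

$$p_t = t \quad \text{or} \quad p_t = S \cdot \operatorname{sigmoid}\left(v_p^{\top} \tanh(W_p h_t)\right)$$

where $p_t = t$ is the monotonic alignment, $S$ is the source sentence length, $D$ is the half-width of the window, and $W_p$ and $v_p$ are model parameters. In both types of architecture, attention decisions are made independently at each time step, although alignment decisions should ideally take past alignment information into account.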
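A minimal sketch of the local attention window is given below. It assumes that alignment weights over the source positions are already available and that they are re-weighted by a Gaussian centred on the aligned position with standard deviation D/2, as in the predictive variant of local attention; the function name and the toy inputs are hypothetical.

```python
import math


def local_attention_weights(align_weights, p_t, D):
    """Keep only positions inside [p_t - D, p_t + D] and favour those near p_t
    with a Gaussian of standard deviation D / 2."""
    sigma = D / 2.0
    last = len(align_weights) - 1
    lo, hi = max(0, int(p_t) - D), min(last, int(p_t) + D)
    weights = [0.0] * len(align_weights)
    for s in range(lo, hi + 1):
        weights[s] = align_weights[s] * math.exp(-((s - p_t) ** 2) / (2 * sigma ** 2))
    return weights


# Ten source positions with equal alignment weights, aligned position 4, window half-width 2.
local = local_attention_weights([0.1] * 10, p_t=4, D=2)
print([round(w, 4) for w in local])
```

Only 2D + 1 source positions contribute to the context vector, so the cost per target word no longer grows with the source sentence length.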

Table 5.

Survey for neural machine translation systems.


| Year | Aim | Dataset | Results |
|---|---|---|---|
| 2014 [75] | To show that longer sentences lead to performance degradation, using two models: RNN Encoder–Decoder and gated recursive convolutional neural network | English-to-French translation with a bilingual parallel corpus | BLEU scores for RNNenc = 27.81 and Moses = 33.08 |
| 2015 [80] | To present minimum risk training (MRT) for optimizing model parameters with respect to arbitrary evaluation metrics for end-to-end NMT (gated RNN) | Evaluated on 3 translation tasks: Chinese–English, English–French, and English–German | MRT performed best for English–French translation (BLEU = 34.23); English–German translation (BLEU = 20.45) |
| 2015 [81] | To investigate the embeddings learned by NMT models | word corpus of English–German & English–German sentence pairs extracted from WMT’14 | RNNenc and RNNsearch outperformed alternative embedding-learning architectures; RNNenc & RNNsearch = 0.93 TOEFL |
| 2016 [82] | To propose a new interactive attention mechanism that keeps track of the interaction history and improves performance | NIST Chinese–English translation task | Out of 3 chosen systems (RNNsearch, NN-Cover-80, NMT-IA-80), NMT-IA-80 performed best with average score = 36.44 |
| 2015 [83] | A stronger neural network joint model (NNJM) that summarizes useful information through a convolutional architecture, which can augment the n-gram target language model | LDC data where sentence length is up to 40 words; vocabulary limited to 20K words for English and Chinese | The proposed model achieved a gain of +1.08 BLEU over the previous NNJM model |
| 2017 [84] | A simplified architecture based on a succession of convolutional layers that encodes source information simultaneously, in contrast to an RNN | WMT’16 English–Romanian; WMT’15 English–German; WMT’14 English–French | Single-layer CNN + positional embeddings (30.4 BLEU) outperforms unidirectional LSTM encoder (27.4 BLEU) & BiLSTM |
| 2018 [85] | To simplify NMT, an addition–subtraction twin-gated recurrent network (ATR) with simplified recurrent units is proposed, which is more transparent than LSTM/GRU | WMT’14 English–German and English–French translation tasks | Compared with previous models, ATR yields 21.99 BLEU; after integrating a context-aware encoder (ATR + CA), 22.7 BLEU, outperforming (Buck et al., 2014) by 2 BLEU; 2.41/3.26 BLEU gain over existing LSTM |
| 2020 [86] | To propose a novel gated recurrent unit (GRU)-based gated attention model (GAtt) for NMT that enables translation-sensitive source representations, which contribute to discriminative context vectors | Case-insensitive (Chinese–English) and WMT’17 case-sensitive (English–German) | GAtt achieved 24.38 BLEU and GAtt-Inv achieved 26.63 BLEU, outperforming RNNsearch |

- Issues

- Domain adaptation: A well-recognized challenge in translation; words in different domains have different translations and meanings. The development of NMT targets specific use cases; hence, methods for domain adaptation should be developed.

- Data sufficiency: NMT emerged as a promising approach, but some experiments reported poor results under low-resourced conditions. It is a challenge to provide machine translation solutions for an arbitrary language where there are no or little parallel corpora available.

- Vocabulary coverage: This issue can severely affect the translation quality because the NMT can choose to have candidate words in its vocabulary (frequent words) while the remaining are considered out-of-vocabulary (OOV). This challenge exists due to the proper nouns and non-usage of many verbs. So in practical implementation, the unknown or rare words are replaced by the “UNK” token, which ultimately hurts the semantic aspect of the text.

- Sentence length: NMT is not able to handle very long sentences, and upon translation, the meaning is lost.
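The vocabulary coverage issue listed above can be made concrete with a small sketch: a vocabulary is restricted to the most frequent words, and every other word is replaced by the “UNK” token. The toy corpus, the vocabulary size, and the function names are hypothetical.

```python
from collections import Counter


def build_vocab(sentences, size):
    """Keep only the `size` most frequent words of the corpus."""
    counts = Counter(word for sentence in sentences for word in sentence.split())
    return {word for word, _ in counts.most_common(size)}


def replace_oov(sentence, vocab, unk="UNK"):
    """Replace every out-of-vocabulary word with the UNK token."""
    return " ".join(word if word in vocab else unk for word in sentence.split())


corpus = ["the cat sat", "the dog sat", "the cat ran"]
vocab = build_vocab(corpus, size=3)
print(sorted(vocab))
print(replace_oov("the dog ran", vocab))
```

Two of the three words in the last sentence are lost, which shows how quickly the meaning degrades when rare words fall outside the vocabulary.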

4.4. Transformers

The transformer has emerged as one of the most influential innovations in the history of machine translation. It is a fully attention-based neural machine translation paradigm proposed by (Vaswani et al., 2017) [87]. Transformers have exhibited exemplary performance on a wide range of natural language processing (NLP) tasks such as text classification, question-answering, text summarization, machine translation, etc. They have become indispensable due to salient features such as their ability to model long-term dependencies, since they can attend to complete sentences; their support for parallel processing, unlike recurrent networks; and the minimal inductive bias required by their design. Moreover, their design allows the processing of multiple modalities such as text, speech, images, and videos, and their scalability allows them to scale to large-capacity networks and datasets. Because of these features and the breakthrough results, researchers in other domains have become interested in extending this research and advancing their products and services by integrating transformer models. Table 6 compares various neural-based methodologies adopted for translation tasks on English–French pairs. Other popular models that have further advanced the research include GPT (Generative Pre-Trained Transformer) [88], BERT (Bidirectional Encoder Representations from Transformers) [89], and T5 (Text-to-Text Transfer Transformer) [90]. Transformer models have also been successfully applied to a variety of tasks in the vision domain such as object detection [91], image super-resolution [92], image segmentation [93], visual question-answering, etc. [94,95,96,97,98].

In [99], multi-scenario text generation is considered, in which a language model responds consistently yet flexibly to multiple inputs. Meta-reinforcement learning (meta-RL) may help language models adapt quickly to new situations by using their existing knowledge. A meta-RL-based scenario-based text generation method helps a language model generate more realistic replies to a wide range of inputs. To ensure that the language model can respond well in many situations, the scenarios, reward function, and training data must be carefully considered when creating such a system. In ChatGPT’s training process, both supervised and unsupervised techniques were used. A huge text corpus was used in conjunction with self-supervised learning, a form of unsupervised learning, to train the model.

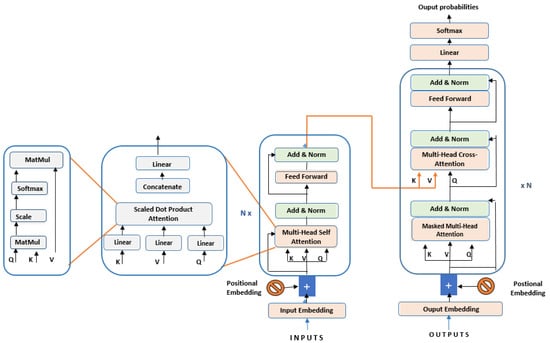

The transformer architecture eliminated the use of RNN and CNN frameworks and relies solely on an intensive form of attention called self-attention. The transformer architecture and its components are shown in Figure 7. Attention has become a primary component rather than an auxiliary component in text feature extraction. The transformer is a feed-forward model that follows the same encoding–decoding fundamentals but realizes them differently. Its core components (multi-head attention, layer normalization, self-attention, etc.) have changed the traditional translation pipeline. The significant architectural differences are discussed below.

Figure 7.

A well established standard transformer architecture [87].

Table 6.

Comparison of previously adopted various methodologies scaled on BLEU Score for the translation purpose on English–French language pairs.


| Author | Methodology | BLEU Score |
|---|---|---|
| Cho et al., 2014 [75] | Gated CNN | 22.94 |
| | RNNenc | 27.03 |
| | Moses | 35.40 |
| Shen et al., 2016 [80] | Gated RNN with Search-MRT | 31.30 |
| | Gated RNN with Search-MRT + PostUnk | 34.23 |
| Gehring et al., 2017 [84] | BiLSTM | 34.3 |
| | 2 layered BiLSTM | 35.3 |
| | Deep Convolutional (8/4) | 34.6 |
| Zhang et al., 2018 [85] | RNNSearch + ATR (twin gated RNN) + BPE | 36.89 |
| | RNNSearch + ATR (twin gated RNN) + CA + BPE | 37.88 |
| | RNNSearch + ATR (twin gated RNN) + CA + BPE + Ensemble | 39.06 |
| Liu et al., 2020 [100] | 6L Encoder and 6L Decoder (Default Transformer) | 41.5 |
| | 60L Encoder and 12L Decoder (Admin) | 43.8 |
| Liu et al., 2021 [101] | Confidence aware scheduled sampling target denoising | 42.97 |

- (1) Input Form

Input to the transformer is handled differently from recurrent models. First, the input passes through an input embedding layer, which transforms the one-hot encoded representation of every word into a d-dimensional embedding. From these representations, the transformer creates three vectors for each position, namely the Query, Key, and Value vectors. Because self-attention on its own is invariant to the order of the sequence, a positional encoding mechanism is then applied to inject information about sequence order. The positional encoding is added to the input embedding at the bottom of the encoder and decoder stacks. Position functions are applied to calculate the positional encoding; in [87], sine and cosine functions are chosen.
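The sine and cosine positional encoding can be sketched as follows, assuming the formulation of [87] in which even dimensions use the sine and odd dimensions use the cosine of the position scaled by a geometric progression of wavelengths. The function name and the small sizes are chosen only for illustration.

```python
import math


def positional_encoding(num_positions, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd dimensions,
    with the angle pos / 10000^(i / d_model) for the even index i of each pair."""
    pe = [[0.0] * d_model for _ in range(num_positions)]
    for pos in range(num_positions):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


pe = positional_encoding(num_positions=3, d_model=4)
for row in pe:
    print([round(v, 4) for v in row])
```

Each row is added element-wise to the embedding of the word at that position, so two identical words at different positions receive different input vectors.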

- (2) Encoder–decoder blocks

The encoder component is constructed by stacking six identical encoder blocks. Each encoder block has two sub-layers, a self-attention layer and a feed-forward layer, each of which is equipped with a residual connection followed by layer normalization. The decoder is somewhat more complicated. Like the encoder, the decoder component is constructed by stacking six identical decoder blocks, where each block consists of three sub-layers: masked self-attention, encoder–decoder attention, and a feed-forward layer, each again equipped with a residual connection followed by layer normalization. The bottom attention layer of each decoder block is masked to prevent positions from attending to subsequent positions.
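The masking of the decoder's self-attention can be illustrated with a short sketch. It assumes the common realisation in which disallowed scores are set to negative infinity before the softmax, so that they receive zero weight; the function names and the toy scores are hypothetical.

```python
import math


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask_row):
    """Softmax in which masked-out positions receive exactly zero weight."""
    masked = [s if keep else float("-inf") for s, keep in zip(scores, mask_row)]
    m = max(masked)
    exps = [math.exp(s - m) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]


mask = causal_mask(3)
row = masked_softmax([0.5, 0.5, 0.5], mask[1])   # attention weights of the second position
print(mask)
print(row)
```

The second position distributes its attention over the first two positions only, so the prediction for a target word cannot depend on words that have not yet been generated.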

- (3) Multi-head attention

Multi-head attention comprises multiple blocks of self-attention in order to capture complex relationships among the elements of the input sequence. The self-attention mechanism, also called intra-attention, became widely recognized after its application in the transformer, where it was refined by adding “multi-headed” attention. This gives the model the ability to focus on different positions. Multi-headed attention runs scaled dot-product attention multiple times in parallel, and the independent attention outputs are concatenated and transformed linearly. Table 7 shows the survey of transformer-adopted architectures for translation tasks.
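A compact sketch of scaled dot-product attention and its multi-head variant is shown below. To keep it short, the learned projection matrices for queries, keys, values, and the output are omitted (effectively assumed to be identity), so each head simply attends over its own slice of the feature dimensions; this simplification and all names are assumptions made for illustration.

```python
import math


def softmax(v):
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """For every query, weight the values by softmax(q . k / sqrt(d_k))."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out


def multi_head_self_attention(X, num_heads):
    """Split the feature columns into heads, attend within each head, concatenate.
    Learned projections are omitted here (assumed identity)."""
    d_model = len(X[0])
    assert d_model % num_heads == 0
    d_head = d_model // num_heads
    heads = []
    for h in range(num_heads):
        Xh = [row[h * d_head:(h + 1) * d_head] for row in X]
        heads.append(scaled_dot_product_attention(Xh, Xh, Xh))
    return [sum((head[i] for head in heads), []) for i in range(len(X))]


X = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]   # two positions, d_model = 4
out = multi_head_self_attention(X, num_heads=2)
print([[round(v, 4) for v in row] for row in out])
```

Because each head sees a different slice of the representation, the heads can produce different attention patterns over the same sequence, which is the property the text attributes to multi-headed attention.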

Table 7.

Survey for transformer based NMT.

In the field of natural language processing, machine translation is handled by a number of well-known algorithms. Even though NMT has been shown to be effective, any choice of algorithm should be made only after careful assessment of both the task and the dataset at hand. When evaluating approaches, performance comparisons on datasets such as WMT can be informative, but it is also necessary to take into account other aspects such as processing requirements.

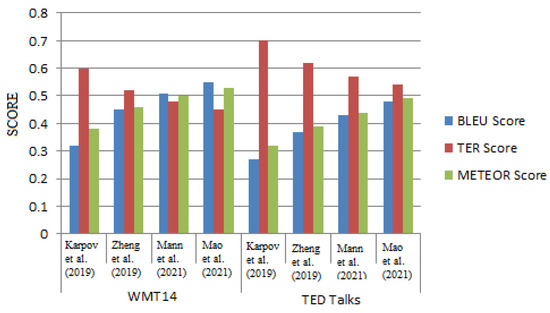

In this investigation, we analyze and compare the performance of four different machine translation algorithms by using the BLEU score, the TER (Translation Error Rate) score, and the METEOR (Metric for Evaluation of Translation with Explicit Ordering) score as shown in Figure 8. These scores are based on two different datasets. The performance on the TED Talks dataset is, in general, lower than the performance on the WMT14 dataset, and the performance of various techniques varies significantly depending on the metric that is being utilized.

Figure 8.

A comparative analysis [108,109,110,111].
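To make the BLEU metric used in this comparison more concrete, the following sketch computes a simplified sentence-level score from clipped n-gram precisions and a brevity penalty. It assumes a single reference, whitespace tokenization, and no smoothing, so its values are not comparable with the corpus-level scores reported in the tables and figures; the function names are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """Geometric mean of clipped n-gram precisions times a brevity penalty."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0          # unsmoothed: any empty precision gives zero
        log_precisions.append(math.log(overlap / total))
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


reference = "the cat sat on a mat"
print(sentence_bleu("the cat sat on a mat", reference))
print(sentence_bleu("the cat sat on the mat", reference))
print(sentence_bleu("dogs bark loudly at night", reference))
```

TER and METEOR follow different principles (edit distance and alignment with stemming and synonyms, respectively), which is one reason why rankings of systems can differ between metrics.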

5. Conclusions

Machine translation is a complex problem, and many systems have been developed as a result of research. The field has evolved from translation requiring human intervention to completely automated translation, with each approach having its benefits and drawbacks. The rule-based approach was widely used to develop early machine translation systems for language pairs that are close to each other in terms of syntactic, semantic, and morphological regularities. Translation depends purely on rules designed manually by linguists, as the rules play an integral part during the various stages of translation. However, the quality of translation can only be maintained by enriching the system with robust rules that keep up with linguistic phenomena, which incurs high customization costs. The approach is incapable of handling ambiguity due to poor word sense disambiguation (WSD) and lacks fluency because the morphological analyzer processes the text word by word. The statistical machine translation approach came after the rule-based approach and was the dominant approach in the 1990s and 2000s; it utilizes parallel aligned bilingual text corpora, the inclusion of which brought dramatic improvements in the quality of the translations produced. The crux of the approach is to use translation and language models to maximize the likelihood of translating a source text into the target text. The approach outperformed the rule-based approach on many factors: SMT could better handle function words and phrases using memory-based training, produces fluent translations, and is cost-effective. However, the integration of various components resulted in a complex architecture, and the diverse and complex nature of natural language makes it difficult for the approach to capture all translation regularities manually. Another severe challenge is data sparsity, as the approach learns poor estimates of model parameters for low-count events.
In recent years, however, deep learning approaches to translation have outperformed the statistical approach at international MT evaluation events. The deep learning approach has been able to alleviate data sparsity to a considerable extent by replacing symbolic representations with distributed representations. Rather than ignoring most of the history and considering only the previous words to find the best word alignment, RNN-based models can compute alignment scores directly. This allows NMT to learn representations from data without the explicit need to design features that capture translation regularities. Despite its many advantages, deep learning suffers from some limitations: it is hard to explain the internal workings of a neural network, which therefore acts as a black box. Another issue is that NMT is data-hungry, and languages with limited or no data yield poor translations or none at all. Problems with out-of-vocabulary words and with the history held in vector values also persist. This paper provides a holistic overview of machine translation systems developed using classical, statistical, and deep learning approaches. Every approach has its merits and demerits and is suitable for particular scenarios. Translation systems have evolved considerably over the years. SMT systems evolved swiftly, but the incorporation of neural networks into machine translation systems has fueled the progress of natural language processing technologies and paved a new path to advancement.

6. Survey, Application and Future Works on Machine Translation

Over the years, considerable advances have been made in machine translation due to the integration of deep learning models. Many challenges still need to be addressed, and these challenges direct research towards better solutions for NMT and transformer-based systems. We list the challenges here. Transformer-based NMT systems are vulnerable to noise and break easily when noise occurs in the input. This issue is understudied and needs to be resolved, although research has been conducted to address it by fine-tuning the parameters [112]. Furthermore, there is a need to modify the transformer structure, as the stacking of blocks incurs high latency, while reducing latency may lower performance; hence, effective efforts should be made to restructure the models and the attention computation in order to accelerate model convergence and minimize latency [113]. Some scaled-up models have led to large performance improvements, such as BERT with 340M parameters and T5 with 11B parameters (2019). Core approaches for efficient machine translation include knowledge distillation, quantization, pruning, and efficient attention.

- Knowledge Distillation

A high-capacity teacher network predicts soft target labels for a small student network to learn from, hence distilling knowledge from the larger network into the smaller one (Hinton et al., 2015) [114]. In NLP, knowledge distillation has been applied in different ways depending on the stage at which it is used. For the pre-training task, DistilBERT, a distilled version of BERT (Sanh et al., 2019) [115], was proposed, and Wu et al. [116] leveraged BERT to encode contextual information by aggregating features. Here, knowledge is transferred from teacher to student during language modeling with the task of predicting the masked token; the intermediate feature maps are also aligned by means of a loss based on the distance between teacher and student layers. In knowledge distillation for fine-tuning (Turc et al., 2019) [117], the student is first pre-trained with language modeling, and distillation is then performed on downstream tasks. Liang et al. [118] tried to improve the student model for the translation task by training a teacher using multiple sub-networks to produce various output variants. To improve upon NMT, Jooste et al. [119] employed sequence-level knowledge distillation on small-sized student models to distill knowledge from large teacher models.
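The soft-label idea can be sketched as a loss between the temperature-softened output distributions of teacher and student. The sketch assumes the common formulation with a Kullback–Leibler divergence scaled by the squared temperature; the logits and the temperature value are hypothetical, and the hard-label term that is usually added is omitted.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)   # soft targets from the teacher
    q = softmax(student_logits, temperature)   # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
print(distillation_loss([4.0, 1.0, 0.5], teacher))   # identical outputs
print(distillation_loss([0.5, 1.0, 4.0], teacher))   # student disagrees with teacher
```

A higher temperature flattens the teacher distribution, so the student also learns from the relative probabilities of the incorrect classes.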

- Quantization

Quantization is a method of compressing neural networks by reducing the precision of the weights. This can speed up inference, since tensor cores in GPUs run 4-bit and 8-bit arithmetic faster than 32-bit arithmetic. Q8BERT (Zafrir et al., 2019) [120] and Q-BERT (Shen et al., 2019) [121] reduce the precision of the weights by scaling and clustering them around a set of quantized values, and DQ-BART [122] jointly adopted quantization and distillation to reduce memory requirements and latency.
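As a simple illustration of reduced-precision weights, the sketch below applies symmetric 8-bit quantization with a single scale per weight vector. This is only one of several possible schemes and is not claimed to be the method of the cited works; the function names and weights are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0   # fall back to 1.0 for all-zero input
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers."""
    return [v * scale for v in q]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print([round(abs(a - b), 5) for a, b in zip(weights, restored)])   # per-weight rounding error
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half a quantization step.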

- Pruning

Pruning is a compression approach applicable to NLP models in general; the idea is to mask out weights that have low magnitude and do not contribute much to the output. The core intent of pruning is first to train a massive neural network, then to mask out weights, and finally to reach a sparse sub-network that does all the heavy lifting of the neural network (Brix et al., 2020) [123]. Behnke and Heafield [124] applied pruning to neural networks to speed up inference and utilized group lasso regularization to help prune entire heads and feed-forward connections, allowing the model to speed up by 51%.
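Magnitude-based masking can be sketched as follows. The example prunes individual weights of a flat list, which is simpler than the structured pruning of whole heads mentioned above; the function name, weights, and sparsity level are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

In practice the remaining weights are usually fine-tuned after pruning to recover any lost accuracy.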

- Efficient Attention

In the transformer architecture, the quadratic bottleneck of attention, i.e., the N × N multiplication of the query matrix with the key matrix, has been reduced to linear or near-linear cost by Big Bird (Zaheer et al., 2020) [125], linear transformer (Katharopoulos et al., 2020) [126], linformer (Wang et al., 2020) [127], performer (Choromanski et al., 2020) [128], and reformer (Kitaev et al., 2020) [129]. More efficient attention means that longer input sequences can be fed to transformer models. These models achieve this by going beyond the dense attention matrix. The methods can be divided into (1) data-independent patterns, such as the sparse transformer (Child et al., 2019) [130] from OpenAI, which breaks the computation down into blocks and executes them along diagonal and strided patterns, and (2) data-dependent patterns, which cluster the values in some way and perform the multiplication only where it is required.
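The way a linear attention variant avoids the N × N matrix can be sketched with a kernel feature map. The example assumes the feature map elu(x) + 1 used in the linear transformer and non-causal attention; it accumulates key–value summaries once and reuses them for every query, and a quadratic reference implementation of the same kernel is included only to check the result. All names and toy inputs are hypothetical.

```python
import math


def phi(x):
    """Feature map elu(x) + 1, which is strictly positive."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def linear_attention(Q, K, V):
    d_k, d_v = len(K[0]), len(V[0])
    # Accumulate S = sum_j phi(k_j) v_j^T and z = sum_j phi(k_j) in one pass over the keys.
    S = [[0.0] * d_v for _ in range(d_k)]
    z = [0.0] * d_k
    for k, v in zip(K, V):
        fk = phi(k)
        for a in range(d_k):
            z[a] += fk[a]
            for b in range(d_v):
                S[a][b] += fk[a] * v[b]
    out = []
    for q in Q:
        fq = phi(q)
        norm = sum(fq[a] * z[a] for a in range(d_k))
        out.append([sum(fq[a] * S[a][b] for a in range(d_k)) / norm for b in range(d_v)])
    return out


def quadratic_reference(Q, K, V):
    """Same kernelised attention computed with explicit pairwise similarities."""
    out = []
    for q in Q:
        sims = [sum(a * b for a, b in zip(phi(q), phi(k))) for k in K]
        total = sum(sims)
        out.append([sum(s * v[b] for s, v in zip(sims, V)) / total for b in range(len(V[0]))])
    return out


Q = [[0.2, -0.4], [1.0, 0.5], [-0.3, 0.8]]
K = [[0.1, 0.9], [-0.7, 0.3], [0.6, -0.2]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
fast, slow = linear_attention(Q, K, V), quadratic_reference(Q, K, V)
print(fast)
```

The summaries `S` and `z` have a size that depends only on the feature dimensions, so the cost grows linearly with the sequence length instead of quadratically.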

- Application of MT

Machine translation systems are consistently being employed in many business areas due to their high accuracy levels. Applications of machine translation can be classified into two broad categories which are B2B industry-specific machine translation and B2C machine translation [131].

- B2B industry-specific machine translation applications are adopted by business enterprises of different sizes and rely on domain-specific training data. In contrast, generic translators rely on generic data, as their models are trained on it. Some businesses offer customizable solutions across various domains and some across specific domains. The industries served include military, financial, education, healthcare, legal, etc. A few examples of B2B machine translation systems are Kantan MT, SYSTRAN, Lingua Custodia (finance), SDL government, etc.

- B2C customer-based machine translation applications are generic applications that are meant for individuals (students, travelers, etc.). These applications perform text-to-text, speech-to-text, and image-to-text translation. The biggest players in B2C are Google Translate, where all capabilities are available under a single platform, and Facebook translate, and they are available in the form of a cloud service-independent application [132]. The wearable voice translator ‘ili’ is a small device that travelers can carry and that translates speech instantly. E-commerce platforms have integrated machine translation services into their websites to provide easy access for global customers. Furthermore, as virtual meetings became common due to COVID-19, simultaneous translation (ST) was integrated into video conferences to enhance the virtual experience for learners by providing real-time translation into their respective native languages.

However, machine translation applications are still evolving; they learn language patterns and behavior from the data they are trained on, and improving their accuracy is a continuous process.

The success of neural machine translation systems and transformers is not limited to NLP; they have also been applied successfully in other fields, one of which is computational chemistry. Published work has shown the successful use of these systems in chemical reaction prediction and retrosynthesis prediction, owing to their ability to capture complex, non-linear dependencies between various factors. In reaction prediction, the main goal is to predict the product of an organic reaction. The problem can be posed as a hybrid modeling problem that combines reaction templates with an end-to-end machine learning architecture to encode the reaction, discover the transformation, and predict the outcome [133]. To predict the reaction product, Mann and Venkatasubramanian exploited grammar as an ontology in a reaction prediction framework [134]; Schwaller et al. [135] used a multi-head attention transformer model that required no manually crafted rules and could precisely predict chemical transformations; and, in similar work, Nam and Kim [136] implemented a GRU-NMT+ parser that takes reactions as input, tokenizes them into a list of parentheses, closure numbers, atoms, and bonds, and applies an attention layer to search for relevant information in the encoder at every decoding step.

Another core challenge in chemical synthesis is retrosynthesis prediction for a desired molecule that satisfies chemical reaction feasibility, property constraints, and commercial availability of precursors [137]. Retrosynthetic analysis involves selecting the most appropriate pathways from a large number of candidate decomposition pathways. Many techniques have been used, such as manually encoded synthesis methods and template-based methods, but they failed to predict precisely [108]. To overcome this issue, molecules are represented as text so that NMT models can translate between reactants and products. Zheng et al. [109] built a self-corrected retrosynthesis predictor using a transformer, Mann and Venkatasubramanian [110] used a grammar-based NMT model for prediction, Mao et al. [111] used a graph-enhanced transformer that exploits both graphical and sequential molecular information, and Schwaller et al. used the Molecular Transformer coupled with a hyper-graph exploration strategy.
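To make the text-based framing concrete, the following is a minimal sketch of how a reaction written as a SMILES string might be split into the kinds of tokens mentioned above (atoms, bonds, branch parentheses, ring-closure numbers) and turned into a source/target pair for a sequence-to-sequence model. The token pattern and the function names `tokenize_smiles` and `reaction_to_pair` are assumptions made for illustration; they are not the tokenizers used in the cited works, and the pattern covers only a simplified subset of SMILES.

```python
import re
from typing import List, Tuple

# Hypothetical token pattern (illustrative only, simplified SMILES subset).
SMILES_TOKEN = re.compile(
    r"(\[[^\]]+\]"          # bracket atoms such as [NH4+] or [C@@H]
    r"|Br|Cl"               # two-letter atoms, matched before single letters
    r"|[BCNOSPFI]"          # one-letter aliphatic atoms
    r"|[bcnosp]"            # aromatic atoms
    r"|\(|\)"               # branch parentheses
    r"|%\d{2}|\d"           # ring-closure numbers
    r"|[=#\-+\\/:~@?*$.])"  # bonds, stereo marks, and the '.' separator
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a SMILES string into atom, bond, branch, and ring-closure tokens."""
    tokens = SMILES_TOKEN.findall(smiles)
    # Refuse input containing characters the pattern does not cover,
    # rather than silently dropping them.
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens


def reaction_to_pair(rxn: str, retro: bool = False) -> Tuple[List[str], List[str]]:
    """Turn 'reactants>agents>products' into (source, target) token lists.

    Forward prediction maps reactants to products; with retro=True the
    direction is reversed, as in retrosynthesis prediction.
    """
    parts = rxn.split(">")
    if len(parts) != 3:
        raise ValueError("expected 'reactants>agents>products'")
    reactants, _agents, products = parts
    src, tgt = tokenize_smiles(reactants), tokenize_smiles(products)
    return (tgt, src) if retro else (src, tgt)


if __name__ == "__main__":
    # Esterification of ethanol with acetic acid, written as reaction SMILES.
    source, target = reaction_to_pair("CCO.CC(=O)O>>CC(=O)OCC")
    print(" ".join(source))
    print(" ".join(target))
```

In this framing the token lists play the role of source and target sentences, so an encoder-decoder model with attention can be trained on them much as it would be on a parallel corpus.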

Author Contributions

Conceptualization, S.S., M.D. and P.S.; data curation, S.S., M.D. and P.S.; formal analysis, V.S. and S.K.; investigation, S.S., M.D. and P.S.; project administration, V.S., S.K. and J.K.; resources, S.S., M.D. and P.S.; software, S.S., M.D. and P.S.; supervision, M.D.; validation, S.S., M.D. and P.S.; visualization, S.S., M.D. and P.S.; writing—original draft, S.S., M.D. and P.S.; writing—review and editing, V.S., S.K. and J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly supported by the Technology Development Program of MSS [No. S3033853] and by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (No. 2020R1I1A3069700).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hutchins, J.; Lovtskii, E. Petr Petrovich Troyanskii (1894–1950): A forgotten pioneer of mechanical translation. Mach. Transl. 2000, 15, 187–221.

- Weaver, W. Translation. Mach. Transl. Lang. 1955, 14, 10.

- Lehrberger, J.; Bourbeau, L. Machine Translation: Linguistic Characteristics of MT Systems and General Methodology of Evaluation; John Benjamins Publishing: Amsterdam, The Netherlands, 1988; Volume 15.

- Scott, B.; Barreiro, A. OpenLogos MT and the SAL Representation Language. 2009. Available online: https://aclanthology.org/2009.freeopmt-1.5 (accessed on 12 October 2022).

- Ramírez Sánchez, G.; Sánchez-Martínez, F.; Ortiz Rojas, S.; Pérez-Ortiz, J.A.; Forcada, M.L. Opentrad Apertium Open-Source Machine Translation System: An Opportunity for Business and Research; Aslib: Bingley, UK, 2006.

- Ranta, A. Grammatical Framework: Programming with Multilingual Grammars; CSLI Publications, Center for the Study of Language and Information: Stanford, CA, USA, 2011; Volume 173.

- Nagao, M. A framework of a mechanical translation between Japanese and English by analogy principle. Artif. Hum. Intell. 1984, 1, 351–354.

- Sato, S.; Nagao, M. Toward memory-based translation. In Proceedings of the COLING 1990 Volume 3: Papers Presented to the 13th International Conference on Computational Linguistics, Gothenburg, Sweden, 18–19 May 1990.

- Satoshi, S. Example-based translation of technical terms. In Proceedings of the Fifth International Conference on Theoretical and Methodological Issues in Machine Translation, Kyoto, Japan, 14–16 July 1993; pp. 58–68.

- Brown, P.F.; Cocke, J.; Della Pietra, S.A.; Della Pietra, V.J.; Jelinek, F.; Lafferty, J.; Mercer, R.L.; Roossin, P.S. A statistical approach to machine translation. Comput. Linguist. 1990, 16, 79–85.

- Brown, P.F.; Della Pietra, S.A.; Della Pietra, V.J.; Mercer, R.L. The mathematics of statistical machine translation: Parameter estimation. Comput. Linguist. 1993, 19, 263–311.

- Och, F.J.; Ney, H. Discriminative training and maximum entropy models for statistical machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 10–11 July 2002; pp. 295–302.

- Manning, C.; Schutze, H. Foundations of Statistical Natural Language Processing; MIT Press: Cambridge, MA, USA, 1999.

- Wong, Y.W.; Mooney, R. Learning for semantic parsing with statistical machine translation. In Proceedings of the Human Language Technology Conference of the NAACL, Main Conference, New York, NY, USA, 10–11 June 2006; pp. 439–446.

- Jean, S.; Cho, K.; Memisevic, R.; Bengio, Y. On using very large target vocabulary for neural machine translation. arXiv 2014, arXiv:1412.2007.

- Nirenburg, S. Knowledge-based machine translation. Mach. Transl. 1989, 4, 5–24.

- Cohen, W.W. Fast effective rule induction. In Machine Learning Proceedings 1995; Elsevier: Amsterdam, The Netherlands, 1995; pp. 115–123.

- Abercrombie, G. A Rule-based Shallow-transfer Machine Translation System for Scots and English. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), Portoroz, Slovenia, 23–28 May 2016; pp. 578–584.

- Vauquois, B. A survey of formal grammars and algorithms for recognition and transformation in mechanical translation. In Proceedings of the IFIP Congress (2), Edinburgh, UK, 5–10 August 1968; Volume 68, pp. 1114–1122.

- Wheeler, P.J. Changes and improvements to the European Commission's Systran MT system 1976/84. Terminologie 1984, 1, 25–37.

- Vasconcellos, M.; León, M. SPANAM and ENGSPAN: Machine translation at the Pan American Health Organization. Comput. Linguist. 1985, 11, 122–136.

- Bharati, A.; Chaitanya, V.; Kulkarni, A.P.; Sangal, R. Anusaaraka: Machine translation in stages. Vivek-Bombay 1997, 10, 22–25.

- Vauquois, B. La Traduction Automatique à Grenoble. Number 24, Dunod. 1975. Available online: https://aclanthology.org/J85-1003.pdf (accessed on 11 December 2022).

- Gale, W.A.; Church, K.W.; Yarowsky, D. Using bilingual materials to develop word sense disambiguation methods. In Proceedings of the International Conference on Theoretical and Methodological Issues in Machine Translation, Montreal, QC, Canada, 25–27 June 1992; pp. 101–112.

- Sinha, R.; Sivaraman, K.; Agrawal, A.; Jain, R.; Srivastava, R.; Jain, A. ANGLABHARTI: A multilingual machine aided translation project on translation from English to Indian languages. In Proceedings of the 1995 IEEE International Conference on Systems, Man and Cybernetics. Intelligent Systems for the 21st Century, Vancouver, BC, Canada, 22–25 October 1995; Volume 2, pp. 1609–1614.

- Yusuf, H.R. An analysis of Indonesian language for interlingual machine-translation system. In Proceedings of the COLING 1992 Volume 4: The 14th International Conference on Computational Linguistics, Nantes, France, 23–28 August 1992.

- Lonsdale, D.; Mitamura, T.; Nyberg, E. Acquisition of large lexicons for practical knowledge-based MT. Mach. Transl. 1994, 9, 251–283.

- Dorr, B.J.; Marti, A.; Castellon, I. Spanish EuroWordNet and LCS-Based Interlingual MT. In Proceedings of the MT Summit Workshop on Interlinguas in MT, San Diego, CA, USA, 28 October 1997.

- Varile, G.B.; Lau, P. Eurotra practical experience with a multilingual machine translation system under development. In Proceedings of the Second Conference on Applied Natural Language Processing, Austin, TX, USA, 21–22 August 1988; pp. 160–167.

- Bennett, W.S. The LRC machine translation system: An overview of the linguistic component of METAL. In Proceedings of the 9th Conference on Computational Linguistics, Volume 2, Prague, Czech Republic, 5–10 July 1982; pp. 29–31.

- Bharati, R.M.; Reddy, P.; Sankar, B.; Sharma, D.; Sangal, R. Machine translation: The shakti approach. Pre-conference tutorial. ICON 2003, 1, 1–7.

- Varga, I.; Yokoyama, S. Transfer rule generation for a Japanese-Hungarian machine translation system. In Proceedings of the Machine Translation Summit XII, Ottawa, ON, Canada, 26–30 August 2009.

- Sumita, E.; Hitoshi, H. Experiments and prospects of example-based machine translation. In Proceedings of the 29th Annual Meeting of the Association for Computational Linguistics, Berkeley, CA, USA, 18–21 June 1991; pp. 185–192.

- Somers, H. Example-based machine translation. Mach. Transl. 1999, 14, 113–157.

- Mima, H.; Iida, H.; Furuse, O. Simultaneous interpretation utilizing example-based incremental transfer. In Proceedings of the 36th Annual Meeting of the Association for Computational Linguistics and 17th International Conference on Computational Linguistics, Volume 2, Montreal, QC, Canada, 10–14 August 1998; pp. 855–861.

- Nirenburg, S.; Domashnev, C.; Grannes, D.J. Two approaches to matching in example-based machine translation. In Proceedings of the 5th International Conference on Theoretical and Methodological Issues in Machine Translation (TMI-93), Kyoto, Japan, 14–16 July 1993.

- Furuse, O.; Iida, H. Constituent boundary parsing for example-based machine translation. In Proceedings of the COLING 1994 Volume 1: The 15th International Conference on Computational Linguistics, Kyoto, Japan, 16–18 August 1994.

- Cranias, L.; Papageorgiou, H.; Piperidis, S. A matching technique in example-based machine translation. arXiv 1995, arXiv:cmp-lg/9508005.

- Grefenstette, G. The World Wide Web as a resource for example-based machine translation tasks. In Proceedings of the ASLIB Conference on Translating and the Computer, London, UK, 16–17 November 1999; Volume 21, pp. 517–520.

- Brown, R.D. Example-based machine translation in the pangloss system. In Proceedings of the COLING 1996 Volume 1: The 16th International Conference on Computational Linguistics, Copenhagen, Denmark, 5–9 August 1996.

- Sumita, E. Example-based machine translation using DP-matching between word sequences. In Proceedings of the ACL 2001 Workshop on Data-Driven Methods in Machine Translation, Toulouse, France, 7 July 2001.

- Brockett, C.; Aikawa, T.; Aue, A.; Menezes, A.; Quirk, C.; Suzuki, H. English-Japanese example-based machine translation using abstract linguistic representations. In Proceedings of the COLING-02: Machine Translation in Asia, Stroudsburg, PA, USA, 1 September 2002.

- Watanabe, T.; Sumita, E. Example-based decoding for statistical machine translation. In Proceedings of the Machine Translation Summit IX, New Orleans, LA, USA, 18–22 September 2003; pp. 410–417.

- Imamura, K.; Okuma, H.; Watanabe, T.; Sumita, E. Example-based machine translation based on syntactic transfer with statistical models. In Proceedings of the COLING 2004: 20th International Conference on Computational Linguistics, Geneva, Switzerland, 23–27 August 2004; pp. 99–105.

- Aramaki, E.; Kurohashi, S.; Kashioka, H.; Kato, N. Probabilistic model for example-based machine translation. In Proceedings of the MT Summit X, Phuket, Thailand, 13–15 September 2005; pp. 219–226.

- Armstrong, S.; Way, A.; Caffrey, C.; Flanagan, M.; Kenny, D. Improving the Quality of Automated DVD Subtitles via Example-Based Machine Translation. 2006. Available online: https://aclanthology.org/2006.tc-1.9.pdf (accessed on 10 December 2022).

- Brown, P.F.; Cocke, J.; Della Pietra, S.A.; Della Pietra, V.J.; Jelinek, F.; Mercer, R.L.; Roossin, P. A statistical approach to language translation. In Proceedings of the Coling Budapest 1988 Volume 1: International Conference on Computational Linguistics, Budapest, Hungary, 22–27 August 1988.

- Och, F.J.; Tillmann, C.; Ney, H. Improved alignment models for statistical machine translation. In Proceedings of the 1999 Joint SIGDAT Conference on Empirical Methods in Natural Language Processing and Very Large Corpora, Hong Kong, China, 7–8 October 1999.

- Marcu, D.; Wong, D. A phrase-based, joint probability model for statistical machine translation. In Proceedings of the 2002 Conference on Empirical Methods in Natural Language Processing (EMNLP 2002), Philadelphia, PA, USA, 6–7 July 2002; pp. 133–139.