Abstract

In this paper, we study the problem of traffic signal control in general intersections by applying a recent reinforcement learning technique. Nowadays, traffic congestion and road usage are increasing significantly as more and more vehicles enter the same infrastructures. New solutions are needed to minimize travel times or maximize the network capacity (throughput). Recent studies embrace machine learning approaches that have the power to aid and optimize the increasing demands. However, most reinforcement learning algorithms fail to be adaptive regarding goal functions. To this end, we provide a novel successor feature-based solution to control a single intersection to optimize the traffic flow, reduce the environmental impact, and promote sustainability. Our method allows for flexibility and adaptability to changing circumstances and goals. It supports changes in preferences during inference, so the behavior of the trained agent (traffic signal controller) can be changed rapidly during the inference time. By introducing the successor features to the domain, we define the basics of successor features, the base reward functions, and the goal preferences of the traffic signal control system. As our main direction, we tackle environmental impact reduction and support prioritized vehicles’ commutes. We include an evaluation of how our method achieves a more effective operation considering the environmental impact and how adaptive it is compared to a general Deep-Q-Network solution. Aside from this, standard rule-based and adaptive signal-controlling technologies are compared to our method to show its advances. Furthermore, we perform an ablation analysis on the adaptivity of the agent and demonstrate a consistent level of performance under similar circumstances.

1. Introduction

Nowadays, people rely on artificial intelligence (AI) in more and more areas of life. From medical applications [1,2], through industrial settings [3], to household gadgets [4], most products already use or will use some machine learning (ML) or AI technique. This is also true for transportation, where researchers, stakeholders, companies, and investors see great potential in autonomous vehicles (AVs), smart infrastructures, and intelligent traffic signals [5,6]. Among others, safety, security, and efficiency are the main motivations for developing such systems. AI techniques can be built into systems that extrapolate and generalize to situations that have not been encountered before, unlike their classic rule-based predecessors [2]. This is especially fitting in the case of reinforcement learning (RL), where the agents learn by interaction. There is a vast number of successful applications in the literature where agents trained with RL show superhuman performance [7,8,9]. From simple games such as those of the Atari domain, to more complicated strategic quests such as [10] or the complex game of Go, researchers have demonstrated that powerful results can be generated from scratch. In domains where the possible situations are endless and no complete, representative dataset exists, supervised learning (SL) methods perform poorly. This is why RL algorithms may give a better solution in a traffic scenario, be it commuting cars or the supporting infrastructure.

With rapid population growth and urbanization, the traffic demand is steadily rising in metropolises worldwide, which causes more traffic jams and thus wastes people’s time. Existing intersections and infrastructure must become highly efficient to satisfy the rising needs of modern traffic. To tackle this problem, intelligent traffic lights and signal control devices were used in the US as early as the 1970s, and AI-based solutions were introduced in 2012 [11]. Thanks to modern technological advances, rapidly accelerated communication, and connectivity options, traffic control devices can be efficiently inserted into our daily commute. Apart from the vehicle-to-anything (V2X) communication, where the vehicles communicate with the infrastructure, a smart intersection without communication also holds promise in optimizing existing road network throughput, emergency, and priority vehicle allowance, reducing waiting times, and allowing more efficient flows, fewer traffic jams, and optimized emissions. In the city of Surrey in Canada [12], for instance, the traffic throughput is managed by a central control center with adaptive control signal periods.

Accordingly, different types of systems can be developed based on the infrastructural possibilities. Solutions to optimize a traffic flow in a neighborhood based on some requirements have been published and applied with different approaches in recent years [13,14,15]. However, most of the current working algorithms are rule-based, which are limited in their adaptivity, the most desired attribute of AI. These algorithms optimize for a particular goal forged into the training or coding at the programming time of the algorithms. Standard DRL also has a single or multi-objective function but is not adaptive to new tasks emerging after training. Even with a multi-objective target function, it can only be optimized for multiple goals at once (e.g., optimize traffic signal scheduling and timing to ameliorate traffic congestion at moderately and heavily busy single or multiple intersections), and we are unable to change it after training [16,17,18]. In the field of RL, recent works provide an adaptable framework for training agents, where the preferences can be altered even after the agent is deployed [19].

In this work, we intend to tackle the problem of traffic signal control (TSC) with RL methods to make the flows effective, environmentally friendly, and sustainable. Moreover, utilizing successor features (SFs), we provide an adaptive framework where the flow can be controlled based on our preferences after deploying the trained model. In some sustainable scenarios, we show how our proposed method compares to the classical rule-based and more advanced traffic-based algorithms. In addition to the classical, already applied solutions, we compare our solution to a Deep-Q-Network (DQN) agent to emphasize why our method is more viable and flexible. The novelty in our solution lies in the combination of the different reward signals and the possible adaptation to the current preferences of the network flow. Unlike other works, our multi-objective learning can be personalized and is not fixed to a value during training. With this, we present a more viable option for adaptive TSC. To the best of our knowledge, we are the first to report SF usage in the domain of TSC.

Our goal is to show that SFs can be used in traffic signal problems. The paper proceeds as follows: Section 2 reviews the recent research literature in RL and TSC. The methodology is provided in Section 3, where SFs, Generalized Policy Evaluation (GPE), and Generalized Policy Improvement (GPI) are detailed. Section 4 describes our experimental settings and the ablation study setup. In Section 5, we highlight the results of our method and show its advantages compared to classical, adaptive, and deep RL techniques. Finally, Section 6 summarizes our paper and outlines future work possibilities.

2. Related Work

Traffic congestion is a persistent issue that affects urban areas and harms individuals and society. The trend of flocking to urban areas further accelerates these adverse outcomes over time. For instance, in 2020, traffic congestion cost Americans over USD 101 billion in lost productivity and wasted over 1.7 billion gallons of fuel. Over 18 million tons of harmful greenhouse gases were also attributed to traffic congestion [20]. More troubling, these figures are this low only because of the pandemic: the years before 2020 showed almost double the amount of waste and lost productivity. Reducing congestion would substantially benefit the economy, the environment, and society. One way to do this is by improving signalized intersections, a common cause of traffic bottlenecks in cities, and thus TSC plays a vital role in urban traffic management. A significant amount of research is being done on TSC systems to improve traffic flow at intersections by adjusting traffic phase splits. As the complexity and size of the control problem increase, classic approaches lack the required computational resources to provide a real-time solution. Hence, besides the classic control applications, adaptive methods started appearing in traffic control in recent decades. New solutions became feasible as sensing and control hardware advanced. Among the modern control approaches are ML-based solutions. From the domain of ML, as stated above, RL has been the preferred unsupervised technique for accomplishing a fully dynamic TSC. Recent developments in RL, specifically deep RL, have made it possible to efficiently handle high-dimensional input data such as images or LiDAR measurements. This allows the agent to learn a state abstraction and a policy approximation directly from the input states. These advances have led to outstanding performance in various transportation-related domains and have potential for real-time application and for solving complex sequential decision-making problems [21,22].

There is a vast amount of research building around TSC and RL synergy. The following papers justify the usage of RL in this domain.

The suitable algorithms in the TSC problem have expanded along with the advances in RL. Some have originated from the value-based and policy-based solutions, such as DQN and Policy Gradient (PG), and evolved to the Actor-Critic approaches. Generally, the methods and solutions target three main areas of learning.

State-space: One is the state and action space representation, where authors work on their environment descriptions fed to the agent. In [23], the authors developed a state representation by utilizing Convolutional Neural Networks (CNN). Their method is called discrete traffic state encoding (DTSE). The authors of [24] utilized high-resolution event-based data for state representation, outperforming fixed-time and actuated controllers in queue length and total delay metrics. In [25], for decision-making, the authors construct signal groups and represent them as individual agents that operate on the intersection level. As for action space modification, an interesting example is shown in [26]. The authors developed an agent with a hybrid action space that combines discrete and continuous actions. Additionally, they compared their solution to deep reinforcement learning (DRL) algorithms with discrete and continuous action spaces and fixed-time control. The results showed that the proposed method outperformed all other methods in average travel time, queue length, and average waiting time.

Communication: The second area is communication. Connecting vehicles to the infrastructure results in valuable data that cannot be wasted. In [27], the researchers introduced an algorithm that builds on communication between the traffic signals and the traffic monitoring sensors in complex real-world networks. Recent advancements in this field have taken advantage of V2X technologies, which involve collaboration between vehicles and infrastructure to reduce delay and carbon emissions [28,29,30].

Rewarding: The last concept that RL practitioners consider is the rewarding concept. From the aspect of performance, rewarding is one of the most influential components of RL. It can ruin or advance the same algorithms by falsely assigning credit to non-relatable actions. In single TSC problems, the environment is the road traffic condition. The agent controls the traffic signal based on preset objectives, including reducing emissions, fuel consumption stops, delay times, managing vehicle density, queue lengths, increasing speed, and increasing throughput. Most traditional traffic control methods are single-objective, which means that they focus on optimizing a single parameter, e.g., [31]. However, the literature presents various multi-objective RL solutions to the TSC problem by selecting and optimizing these metrics. Multi-objective RL is comparable to single-objective RL in terms of time complexity since it only requires extending the reward signal with additional components. This means that significant performance improvements can be achieved at a low computational cost, unlike with traditional algorithms. However, with a more complex reward signal, the agent has a more challenging task. It is more difficult to decipher the environment and the results of the control signals.

The authors of [32] present an algorithm tailored to three traffic scenarios, where adaptation occurs offline based on the vehicle count in a given time frame. In [33], the authors introduced a dynamically changing discount factor in the DQN setting, modifying the target value of the Bellman equation. The authors of [34] decompose the original RL problem into sub-problems, thus leading to straightforward RL goals. A new rewarding concept is investigated by [35] with the DQN algorithm, combined with a coordination algorithm for controlling multiple intersections. Others, such as Ref. [36], proposed a Multi-Agent Advantage Actor-Critic (A2C) algorithm for solving the multi-intersection TSC problem. Most recently, Ref. [37] proposed a novel rewarding concept and compared its performance with the most common rewarding strategies in the literature. We refer the reader to [38,39,40] for other examples in the domain. Most similar to our proposed method are [17,18]. They present a multi-objective RL-TSC algorithm in which the single-objective reward is replaced with a scalarized signal, a weighted sum of the reward components. Their objectives are one for light and one for heavy traffic. The onus is on the user to select weightings that will give good results. They showed a reduced convergence time and a decreased average delay in the network compared to a single-objective approach. While performance gains appear, selecting appropriate values for the weightings of rewards can be a time-consuming task, especially if there are various demands in the intersections. In [31], the authors extended their work further, considering a total of seven different parameters in the reward function.

The problem with the above-mentioned methods is that the agent has to solve the Markov Decision Process (MDP) and reveal the underlying reward components, which can be complex and error-prone. In contrast, by default, our solution decomposes the different reward functions, resulting in a more straightforward setting for training. Moreover, the composition of different reward components is a matter of preference change. The method can be quickly adapted to not only one or two setups but any arbitrary settings. We intend to propose a novel approach to the problem, where the different multi-objectives can be decomposed into individual ones and later, based on the inferencing preference, combined for a more tailored behavior. As a remark, one should mention the approaches based on Multi-Agent RL (MARL), traffic networks consisting of multiple intersections, where an independent RL agent controls the signals. These cases are much more relevant to real-world TSC problems than the Single-Agent RL (SARL) cases. From 2000, Ref. [41] was one of the most significant early works in Multi-Agent RL-TSC. The model-based approach clearly outperformed fixed-time controllers at high levels of network saturation. Although there are MARL algorithms in the literature, we limit our current work to the single-agent setup because we want to argue that the SFs can indeed be used with TSC as well.

Limitations: Various assumptions are often made to simplify the simulation process to make the optimization problem manageable. However, these assumptions often do not reflect real-world situations in which traffic conditions are influenced by a range of factors, such as driver behavior, interactions with vulnerable road users (e.g., pedestrians, cyclists), weather, and road conditions. These factors can hardly be fully described in a traffic model [40].

Based on the aforementioned works, we also restrict our optimization problem to random traffic flows and discard any vulnerable road users (pedestrians, cyclists, or such). The intersection allows only straight and right-turn movements. There is no signal for the left turn; thus, the flows cannot block one another. It should be noted that many of the proposed reward definitions above rely on information that is easy to obtain in a simulation but quite difficult or impractical to obtain in a real-world setting using current technologies. Data about ATWT, ATT, fuel usage, or emissions could not be collected reliably without some form of vehicle-to-junction (V2J) communication [42].

Contribution: As shown above, it has been presented several times that classical control algorithms can be beaten by RL technology on TSC. They can minimize the delay and improve the average throughput of the traffic intersection [14,15,37].

However, our goal is not to show this once again but to provide significant development in the domain of adaptivity and information decomposition and representation. Thus, after presenting our algorithm and methodology in Section 3, we compare our method to the DQN agent as most of the aforementioned works measure their solution to the original DQN agent and some fixed-time controller. Thus, our evaluation is also designed based on these works. Finally, we provide insight into how and why our method is preferable to previous ones.

3. Methodology

In RL, we distinguish an actor (agent) and its surroundings (an environment), which interact continuously. The agent selects an action based on the current state of the environment, and this action changes the environment's state. In response, the agent receives feedback (reward), and the new state indicates the effect of the applied action. In the case of a TSC setting, the states might be lane occupancy, flow rate, standing vehicles, or lamp states. The state generally contains valuable information about the environment that helps the agent to make decisions. RL agents aim to observe the changes and their effects and devise a policy to maximize the rewards. Rewards, typically scalar signals, describe the goodness of the selected action. Due to the abstraction of the reward signal, RL opens the door for solving problems where the exact right answer is unknown; only an approximate estimate is given. One might imagine RL training as similar to human learning. We learn based on the interaction and its consequences. Moreover, judging the goodness of a complete process comes naturally because evaluating each step and assessing exactly which move was good or bad is intractable. By using the trial and error mechanism, RL agents explore their options of interactions and come to a conclusion about how best to act in a specific state. On the other hand, one must mention the credit assignment problem, which can hinder the training. Deciding what the optimal action is depends on the planning horizon, long-term strategy, the problem itself, and the available action possibilities.

3.1. Markov Decision Processes

MDPs are used for the formulation of RL problems. It is a mathematical framework for decision-making in a situation where the system’s output (environment) is partially randomized but depends on the current action. Suppose that an MDP system has the Markov property. In this case, it means that the conditional probability distribution of the following state of a random process depends only on the current state, given all the previous states [43]. This implies that the state should contain all information needed to draw this probability and also helps us to make decisions based on the current observable state.

An MDP is usually characterized by a 4-element tuple, $\langle \mathcal{S}, \mathcal{A}, p, r \rangle$. Interactions create episodes. At each time step $t$, an RL agent manipulates the environment with an arbitrary action $a_t \in \mathcal{A}$. This results, for every $t$, in an observation as state $s_{t+1} \in \mathcal{S}$, and a reward $r_{t+1}$. The agent’s goal is to maximize the sum of the expected rewards along the interactions of the episodes.

As discussed above, multi-objective RL has several reward functions, but, during the steps, they are compressed into a scalar value by weighting the reward components according to a preference. One can think of these reward functions as different tasks, and, thus, the goal of RL is to find a policy $\pi$ that maximizes the value of every state–action pair for a given reward component (task) $r$:

$$Q^{\pi}_{r}(s,a) = \mathbb{E}^{\pi}\left[\sum_{i=0}^{\infty}\gamma^{i}\, r(S_{t+i}, A_{t+i}, S_{t+i+1}) \,\middle|\, S_t = s, A_t = a\right], \qquad (1)$$

where $\mathbb{E}^{\pi}[\cdot]$ denotes the expectation over the trajectories induced by $\pi$, and $\gamma \in [0,1)$ is the discount factor, used for discounting future rewards. Having (1), the next step is to introduce the formulation of GPE and GPI with SFs.
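As a minimal illustration of the discounted sum inside Equation (1), the sketch below computes the return of one sampled reward sequence; averaging such returns over many trajectories that start from the same state–action pair would give a Monte Carlo estimate of the Q-value. The function name `discounted_return` and the sample rewards are illustrative assumptions and do not come from our experiments.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**i * r_i over one sampled trajectory."""
    total = 0.0
    # Iterate backwards so every step is a single multiply-add.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Hypothetical per-step rewards (e.g., negative standing vehicle counts).
sample_rewards = [-3.0, -2.0, -1.0]
print(discounted_return(sample_rewards, gamma=0.9))
```

A smaller discount factor shrinks the contribution of late rewards, which is why the planning horizon of the agent depends on $\gamma$.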

3.2. SFs, GPE, and GPI

Successor Features (SFs) were formalized to aid multi-objective RL algorithms, where the reward functions are complicated. Their utilization helps to decompose complex reward functions into simpler ones, meanwhile enabling the exchange of information between tasks and promoting the reuse of the skills [19]. This section briefly introduces the method and provides the necessary equations without the full deduction. We refer the reader to [19] for a more detailed description.

First, we choose $\phi: \mathcal{S} \times \mathcal{A} \times \mathcal{S} \to \mathbb{R}^{d}$ as our arbitrarily selected feature function vector, where each element is a “feature”. It is chosen so that it expresses our goal tasks, as described in Section 4.2. Then, according to [44], with any arbitrary preference vector $w \in \mathbb{R}^{d}$, we can define the reward function as

$$r_{w}(s,a,s') = \phi(s,a,s')^{\top} w. \qquad (2)$$

Basically, Equation (2) results in a scalar value that is a weighted sum of the multi-objective reward components, where $w$ is the preference vector. In general RL, this multiplication is included inside the environment, where the different components of the reward are weighted in a hard-coded manner and thus cannot be changed rapidly. However, using (2), the reward function values can be modified instantly by simply changing $w$.
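The effect of Equation (2) can be sketched in a few lines: the same feature vector yields different scalar rewards depending only on the preference vector. The feature values and the helper name `reward` below are hypothetical and serve only as an illustration.

```python
def reward(features, w):
    """Scalar reward as the inner product of features and preferences."""
    if len(features) != len(w):
        raise ValueError("features and preferences must have equal length")
    return sum(f * wi for f, wi in zip(features, w))


# Hypothetical feature values of one transition (negated costs).
phi = [-0.4, -0.7, -0.2, -0.5, -0.1]

w_all = [1, 1, 1, 1, 1]   # every component weighted equally
w_last = [0, 0, 0, 0, 1]  # only the last component matters

print(reward(phi, w_all))   # sum of all components
print(reward(phi, w_last))  # last component only
```

No retraining or environment change is involved in switching from `w_all` to `w_last`; only the vector passed to the function differs.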

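Following [19], the SFs are defined as the expected values of the discounted feature functions along a trajectory when acting on policy $\pi$. This yields Equation (3):

$$\psi^{\pi}(s,a) = \mathbb{E}^{\pi}\left[\sum_{i=0}^{\infty}\gamma^{i}\, \phi(S_{t+i}, A_{t+i}, S_{t+i+1}) \,\middle|\, S_t = s, A_t = a\right]. \qquad (3)$$

Substituting (2) into (1) and using (3) gives

$$Q^{\pi}_{w}(s,a) = \psi^{\pi}(s,a)^{\top} w. \qquad (4)$$

$Q^{\pi}_{w}(s,a)$ characterizes the same Q-function as (1) does; however, additionally, it is parametrized by $w$, and, most favorably, it is simplified to the inner product $\psi^{\pi}(s,a)^{\top} w$. In terms of dimensionality, for $n$ policies, $\psi \in \mathbb{R}^{n \times d}$ and $w \in \mathbb{R}^{d \times k}$, where $d$ is the number of feature functions and $k$ is the number of policies (training tasks). Using (4) as an efficient form of GPE, we can thus rapidly calculate the value function for all policies. For a deeper explanation of the deduction and proof of (4), see [19].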
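A minimal sketch of GPE with SFs, assuming the SF vectors for the current state are already available (for example, as network outputs): every Q-value is a single inner product, so evaluating all policies under a new preference requires no further learning. The nested-list layout `psi[i][a]` and the numbers are assumptions made for this example.

```python
def gpe(psi, w):
    """Q-values of every policy and action for preference w.

    psi[i][a] is the d-dimensional SF vector of policy i for action a
    in the current state; the result q[i][a] is its inner product with w.
    """
    return [
        [sum(p * wi for p, wi in zip(psi_a, w)) for psi_a in psi_i]
        for psi_i in psi
    ]


# Hypothetical SFs: 2 policies, 2 actions, d = 2 features.
psi = [
    [[-1.0, -5.0], [-2.0, -1.0]],  # policy 0
    [[-3.0, -4.0], [-4.0, -0.5]],  # policy 1
]

print(gpe(psi, [1.0, 0.0]))  # only the first feature matters
print(gpe(psi, [0.5, 0.5]))  # both features weighted equally
```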

The last missing piece of the method is GPI. In this context, following Barreto et al., the improved policy is

$$\pi(s) \in \underset{a}{\arg\max}\, \max_{i}\, Q^{\pi_i}_{w}(s,a) = \underset{a}{\arg\max}\, \max_{i}\, \psi^{\pi_i}(s,a)^{\top} w. \qquad (5)$$

Basically, we evaluate the value function of each state–action pair over all policies (GPE). (In [44], Theorem 1 shows that Equation (5) is a legitimate form of GPI.) Based on (5), we choose the action with the highest value.
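The action selection of Equation (5) can be sketched as a maximum over policies followed by a maximum over actions. The SF values and the action indexing (0 for EW, 1 for NS) below are hypothetical; ties are broken in favor of the first candidate found, which is an arbitrary choice of this sketch.

```python
def gpi_action(psi, w):
    """Return (action, value) maximizing psi[i][a] . w over policies i and actions a."""
    best_action, best_value = None, float("-inf")
    for psi_i in psi:  # loop over policies
        for action, psi_a in enumerate(psi_i):  # loop over actions
            q = sum(p * wi for p, wi in zip(psi_a, w))
            if q > best_value:
                best_action, best_value = action, q
    return best_action, best_value


# Hypothetical SFs: 2 policies, 2 actions (0 = EW, 1 = NS), d = 2 features.
psi = [
    [[-1.0, -5.0], [-2.0, -1.0]],  # policy 0
    [[-3.0, -4.0], [-4.0, -0.5]],  # policy 1
]

print(gpi_action(psi, [1.0, 0.0]))  # preference on the first feature
print(gpi_action(psi, [0.0, 1.0]))  # preference on the second feature
```

With the first preference the best value comes from policy 0, with the second from policy 1, which illustrates how a change of preference alone can switch both the deciding policy and the chosen action.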

The following section deals with the environment and provides the details of the applied neural networks and training settings.

4. Experiments

This work aims to show that SFs are also an excellent choice in terms of multi-objective RL for TSC. Thus, we aim to set up a simple environment with simple agents and emphasize the advantages gained by utilizing the SF methodology.

4.1. Environment

To formulate a TSC problem in the literature, several different simulators are used, e.g., PARAMICS [45], Simulation of Urban MObility (SUMO) [46], or AnyLogic [47]. Similar to [23], we prefer SUMO due to its accessibility and high-fidelity capabilities.

4.1.1. Intersection

A single 4-way intersection was created with a length of 500 m for each lane. At the end of each lane, random vehicle flow is generated, either uniformly or asymmetrically. A traffic light controls the intersection. As reasoned above, the cars can turn right or go straight. The simulation runs at 1 Hz, and the agents can interact every 15 s to allow some transition between the east–west and north–south directions and ensure that there is an actual traffic flow after a decision. A decision can be either opening the east–west (EW) lanes or the north–south (NS) lanes. To highlight the adaptivity of the SF method, a prioritized vehicle (PV) is inserted onto the road at a random position and a random time. The occupancy of each lane represents the scenario at every step. The work [23] uses SUMO as well, but selects a discretized state space with grids that shows how occupied each cell is. Instead of cells, we use the lanes, which promotes a fair comparison to classic rule-based optimizing algorithms. The lanes are further divided into 100 m chunks so that our RL-based agents can better understand the incoming flow. The location of the prioritized vehicle is indicated by its current lane and its position in that lane. In the 22-element-long state space, every value is normalized between 0 and 1.
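One way to arrive at a 22-element state is sketched below, under the assumption that four incoming lanes are each split into five 100 m chunks (20 occupancy values) and that the PV adds two values (lane code and normalized position). This decomposition, the lane identifiers, and the encoding of the PV lane are illustrative assumptions and not a description of the exact implementation.

```python
LANES = ("N", "E", "S", "W")  # hypothetical lane identifiers
LANE_LENGTH_M = 500.0
CHUNK_LENGTH_M = 100.0
CHUNKS_PER_LANE = int(LANE_LENGTH_M / CHUNK_LENGTH_M)  # 5 chunks per lane


def clip01(value):
    """Clamp a value to the interval [0, 1]."""
    return min(max(value, 0.0), 1.0)


def build_state(chunk_occupancy, pv_lane=None, pv_position_m=0.0):
    """Assemble the normalized state vector.

    chunk_occupancy maps each lane id to a list of occupancy fractions,
    one per 100 m chunk. pv_lane is a lane id, or None if no PV is present.
    """
    state = []
    for lane in LANES:
        values = chunk_occupancy[lane]
        if len(values) != CHUNKS_PER_LANE:
            raise ValueError(f"lane {lane} needs {CHUNKS_PER_LANE} chunks")
        state.extend(clip01(v) for v in values)
    if pv_lane is None:
        state.extend([0.0, 0.0])  # assumed encoding for "no PV in the scene"
    else:
        state.append((LANES.index(pv_lane) + 1) / len(LANES))
        state.append(clip01(pv_position_m / LANE_LENGTH_M))
    return state


empty = {lane: [0.0] * CHUNKS_PER_LANE for lane in LANES}
print(len(build_state(empty, pv_lane="E", pv_position_m=250.0)))
```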

4.1.2. Measures

To monitor the performance of the different methods in the environment, we selected a few metrics from the available set of the simulator so that the state of the environment can be characterized. The most important metrics include the emissions (e.g., CO), fuel consumption, total delay, and the currently standing vehicles. Note: the simulator calculates the emissions from the fuel consumption; thus, only one is meaningful. Additionally, to accentuate the SF-based method’s adaptivity, we monitor the total waiting time of the emitted PV ($t_{PV}$).

From these metrics, we created a reward function with the following components: $r_{CO}$, $r_{fuel}$, $r_{delay}$, and $r_{stand}$ are the negative values of the CO emissions, fuel consumption, delay, and standing vehicle count of each time step, respectively, and $r_{PV}$ is the negative value of $t_{PV}$. The resulting reward function is the sum of these.

4.2. Agents

Our method combines the described reward functions during evaluation and training time, which has several advantages. In this section, we introduce the other agents that our agent competes against.

Fixed time: The first is a standard fixed-time TSC. The signals are switched periodically after 15 s, with a red–yellow transition of 3 s.
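The fixed-time baseline can be sketched as a pure function of the simulation time. The sketch assumes that each 3 s transition follows a 15 s green phase, giving a 36 s cycle; the cycle layout and the phase labels are assumptions for illustration only.

```python
GREEN_S = 15      # green duration per direction, in seconds
TRANSITION_S = 3  # red-yellow transition between directions
CYCLE_S = 2 * (GREEN_S + TRANSITION_S)  # assumed full cycle: 36 s


def fixed_time_phase(t):
    """Return the signal phase at simulation second t (1 Hz steps)."""
    offset = t % CYCLE_S
    if offset < GREEN_S:
        return "EW_green"
    if offset < GREEN_S + TRANSITION_S:
        return "EW_to_NS"
    if offset < 2 * GREEN_S + TRANSITION_S:
        return "NS_green"
    return "NS_to_EW"


for second in (0, 15, 18, 33, 36):
    print(second, fixed_time_phase(second))
```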

Classic: A more sophisticated and traffic-optimizing algorithm is provided by SUMO [46]. The Classic TSC sees the incoming flow from 100 m and decides on the next lamp state to optimize the delay of all participants. The shortest time is set to 15 s to align with the other methods.

DQN: A baseline agent is also created to compare our method to a general existing RL solution. A DQN agent [48] is created with a standard 2-layer MLP network to estimate each action’s Q-values. The state space is the described 22-element-long vector, and the best action is selected based on an $\epsilon$-greedy policy. The reward function for DQN is the sum of all components: $r = r_{CO} + r_{fuel} + r_{delay} + r_{stand} + r_{PV}$.
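The $\epsilon$-greedy selection mentioned above can be sketched as follows. The function name, the `rng` parameter, and the Q-values are assumptions made for this example; the exploration schedule of the trained agents is not shown here.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    # Exploit: index of the largest Q-value (first one on ties).
    return max(range(len(q_values)), key=lambda a: q_values[a])


# Hypothetical Q-values for the two actions (0 = EW, 1 = NS).
q = [0.1, 0.9]
print(epsilon_greedy(q, epsilon=0.0))  # always greedy
print(epsilon_greedy(q, epsilon=1.0))  # always random
```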

SF base: The difference from the DQN agent lies in calculating the Q-values. They are not the output of the MLP; instead, as described in (4), the network predicts the SF values $\psi$, from which, with a preference vector $w$ ($Q = \psi^{\top} w$), the Q-values are calculated, and, using (5), we select the best action. This is the essence of our approach. We can modify our training targets with a simple change in $w$. During training, our rewards serve as the features ($\phi$), and the preferences are one-hot vectors for each reward component, e.g., $w_1 = [1, 0, 0, 0, 0]$. This results in 5 policies, from which we uniformly select one for each episode. Thus, $\pi_1$ is optimized for CO emission minimization, and $\pi_5$ minimizes the total waiting time of the PV. In this way, all policies have only one objective, one goal, which helps the training, as shown in Section 5. During the evaluation, we can set $w$ arbitrarily, and thus weight the reward components according to our current preference and combine the knowledge of the learned policies. Since each policy has an opinion about the best action, the best of all policies will be selected. The performance guarantees for this are derived in [19]. The all-optimizing agent $SF_{all}$ is evaluated with $w = [1, 1, 1, 1, 1]$ to align the objectives with the summed reward $r$ and become comparable to DQN, which, by default, learns on this reward combination.

The PV-focused agent $SF_{PV}$ uses the same network and weights but is evaluated with $w = [0, 0, 0, 0, 1]$, focusing only on the PV. The agent $SF_{stand}$ has $w = [0, 0, 0, 1, 0]$, trying to minimize the number of vehicles standing. The parameters for both training agents are shown in Table 1.
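The training and evaluation preferences described above can be sketched as plain vectors. The ordering of the components (CO, fuel, delay, standing vehicles, PV waiting time) is an assumption based on the order in which the reward components are listed, and all identifiers are hypothetical.

```python
import random

# Assumed ordering of the five reward components (features).
FEATURES = ("co", "fuel", "delay", "standing", "pv_wait")


def one_hot(index, size):
    """Preference vector that selects a single reward component."""
    return [1.0 if i == index else 0.0 for i in range(size)]


# One training task (and thus one policy) per reward component.
TRAIN_PREFERENCES = [one_hot(i, len(FEATURES)) for i in range(len(FEATURES))]


def sample_training_task(rng=random):
    """Uniformly pick the policy index and preference for the next episode."""
    index = rng.randrange(len(TRAIN_PREFERENCES))
    return index, TRAIN_PREFERENCES[index]


# Evaluation-time preferences; no retraining is needed to switch between them.
W_ALL = [1.0] * len(FEATURES)
W_PV = one_hot(FEATURES.index("pv_wait"), len(FEATURES))
W_STANDING = one_hot(FEATURES.index("standing"), len(FEATURES))

print(sample_training_task())
print(W_ALL, W_PV, W_STANDING)
```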

Table 1.

Training parameters.

5. Results

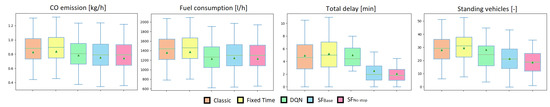

First, we look at a uniform flow distribution and compare the rule-based and learning algorithms by using boxplots in Figure 1. Each agent is given 1000 random episodes, and their performance is compared with the metrics of CO, fuel consumption, total delay, and standing vehicles. Each boxplot represents the average values over these 1000 random episodes, which are identical for every agent. With the help of the boxplots, we can see the mean (green triangle) and the median (green line) of the data. Meanwhile, the boxes span the lower and upper quartiles (Q1–Q3), and the whiskers mark the minimum and maximum values. The lower the values and the smaller the boxes are, the better.

Figure 1.

Comparison of classic (orange), fixed-time (yellow), DQN (green), and SF-based approaches (blue and magenta). Each boxplot refers to the average values and their distribution based on 1000 randomized scenarios with uniform flow. The compared metrics are the average of CO emissions, fuel consumption, total delay, and standing vehicles. The SF-based agent preferences are $w = [1, 1, 1, 1, 1]$ and $w = [0, 0, 0, 1, 0]$.

In Figure 1, values are as expected; the fixed-time agent scores the worst in each metric, followed by the classic agent. Although classic optimizes for the delay, the DQN and SF techniques perform better, as was shown before in the literature. The difference between the all-optimizing SF agent ($SF_{all}$) and DQN is marginal in CO and fuel usage, even though the SF agent did not explicitly learn to optimize the objective generated by $w = [1, 1, 1, 1, 1]$. A more apparent advantage is observable when comparing the total delays of an episode. Both SF agents reduce the average delay minutes of the network. Note: the standing-vehicle agent ($SF_{stand}$) tries to optimize the number of vehicles halting in the scene. Interestingly, despite the different perspectives, it performs almost as well as $SF_{all}$. The reason lies in our method. Each policy has its SF prediction, and when combined with $w$, each has an idea of the best action. Furthermore, the standing-vehicle policy knows how to minimize the halting, but in situations when the scene is already optimal for this policy, the others can contribute to the decision, as Equation (5) suggests. This performs similarly to the all-optimizing $SF_{all}$. In regard to performing better than DQN, we can state that the complex reward function of five components leads the DQN agent to optimize for the one with the highest magnitude: fuel consumption. Table 2 indicates the mean values of Figure 1 and displays the percentage of the improvement compared to the fixed-time baseline. We can observe that DQN has the best score in fuel consumption as anticipated, but is closely followed by the SF agents. Meanwhile, the other metrics show a more significant difference in favor of our method. Table 2 also highlights the PV total waiting time average among the 1000 episodes, and it is clear that the methods allow the PV to pass approximately four times faster on average.
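The percentage of improvement relative to a baseline can be computed as the relative reduction of a lower-is-better metric. The sketch below uses this common definition as an assumption; the numbers are hypothetical and are not values from Table 2.

```python
def improvement_percent(baseline, value):
    """Relative reduction with respect to the baseline, in percent.

    Positive results mean that `value` is better (lower) than `baseline`.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (baseline - value) / baseline


# Hypothetical mean total delays (minutes) of a baseline and an agent.
print(improvement_percent(baseline=200.0, value=150.0))
```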

Table 2.

Comparison of the performance between the different solutions in case of uniform flows.

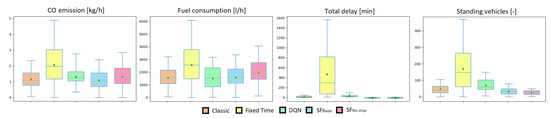

As an ablation of the possibilities using SF-based agents, we investigated 1000 scenarios using asymmetric flows. This meant that either the EW or the NS lanes had drastically more incoming vehicles. As seen in Figure 2, the metrics of the fixed-time agent suffered the most. This can also be seen in Table 3. It is natural that switching between the directions equally under an uneven load results in the worst performance. Our attention is guided toward our learning methods. DQN falters the most in standing vehicles because, as mentioned before, it learns to optimize primarily for fuel consumption. The all-optimizing agent $SF_{all}$ could perform the best in all metrics except fuel. Visibly, the standing-vehicle agent $SF_{stand}$ could achieve the smallest variance and mean regarding standing vehicles, but all other metrics were affected. Due to the long queues trying to pass the intersections, the PV waiting time $t_{PV}$ increased for all agents, but the PV-focused agent $SF_{PV}$ could keep it small. In reality, as the prioritized vehicle entered the scene, it opened the route and allowed all preceding cars to pass. This caused some degradation in other metrics, but it seems reasonable and acceptable.

Figure 2.

Comparison of classic (orange), fixed-time (yellow), DQN (green), and SF-based approaches (blue and magenta). Each boxplot shows the average values and their distribution based on 1000 randomized scenarios with asymmetric flows. The compared metrics are the average CO emissions, fuel consumption, total delay, and standing vehicles. The SF-based agent preferences are and .

Table 3.

Comparison of the performance between the different solutions in case of asymmetric flows.

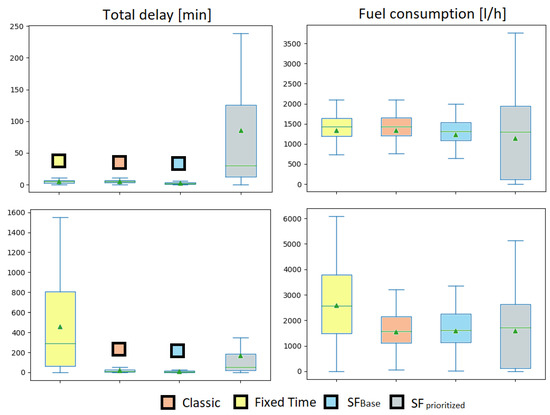

Lastly, Figure 3 shows how selected metrics change when evaluating the policy that is interested only in the prioritized vehicle. The worsening of total delay and fuel consumption is significant in both the uniform and asymmetric cases, but managed to achieve . In the case of uneven loads, the outperformed the baseline. This indicates that with our adaptive preferences, we could allow the prioritized vehicles to move when needed and optimize the network emissions otherwise. By using SF values to estimate the Q values, we gained a method to set the traffic preferences adaptively on the fly. We can change the preferences at any time and obtain a more suitable local solution for the intersection. Additionally, our method needs no supplementary sensors, since it relies on loop detectors. The PV's location measurement can be based on GPS and internet communication with the traffic control system.
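The step from SF values to Q values can be written out briefly. The notation below follows the standard successor-feature formulation and is an assumption here; the symbols may differ from those used in the earlier sections of this paper. If the reward is linear in the feature vector, the action value of a policy under any preference is the inner product of its successor features with the weight vector:

```latex
% Assumed notation: \phi = feature (reward component) vector,
% \mathbf{w} = preference weights, \psi^{\pi} = successor features of policy \pi.
\begin{align}
r_{\mathbf{w}}(s,a,s') &= \boldsymbol{\phi}(s,a,s')^{\top}\mathbf{w}, \\
\boldsymbol{\psi}^{\pi}(s,a) &= \mathbb{E}^{\pi}\!\left[\sum_{i=0}^{\infty}\gamma^{i}\,\boldsymbol{\phi}_{t+i+1}\,\middle|\,S_t=s,\ A_t=a\right], \\
Q^{\pi}_{\mathbf{w}}(s,a) &= \mathbb{E}^{\pi}\!\left[\sum_{i=0}^{\infty}\gamma^{i}\,\boldsymbol{\phi}_{t+i+1}^{\top}\mathbf{w}\,\middle|\,S_t=s,\ A_t=a\right]
 = \boldsymbol{\psi}^{\pi}(s,a)^{\top}\mathbf{w}.
\end{align}
```

Because the weight vector enters only through the final inner product, a new preference can be evaluated without relearning the successor features.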

Figure 3.

Comparison of different methods with our solution when the prioritized vehicle passing has high importance. Top row: metrics for 1000 episodes with uniform traffic. Bottom row: metrics for 1000 episodes with asymmetric flows.

6. Conclusions

In summary, our work emphasizes the adaptivity of our method, which uses SFs for TSC optimization. By introducing reward components or features, we can design multi-objective RL that can be used to train agents for various target tasks.

To the best of our knowledge, we are the first to use SFs in TSC optimization and have successfully demonstrated their advantages over other baselines. Our approach allows for the alteration of a trained agent's behavior within the range of the SF combinations simply by adjusting the weight vector. This means that we can prioritize different objectives, such as queue length or total CO emissions, without a significant performance deterioration.

We have thoroughly tested our method in different scenarios and shown that it outperforms known algorithms for optimizing a single four-way intersection. Additionally, our approach remains capable of adapting to new and personalized preferences without requiring the agent to be retrained. Furthermore, we demonstrate that prioritized vehicles can be given the right of way when necessary, thus helping services such as public transport or emergency vehicles.

Moving forward, we plan to extend our method to complex networks and multiple intersections with cooperation between them. An exciting direction for future research is investigating the objective preferences of individual networks, which poses significant challenges but also has high potential. Furthermore, we aim to broaden our method and explore how a general optimizing network could recognize different scenarios and select preferences based on them.

Overall, our work provides yet another powerful ML-based method for optimizing traffic at intersections, with promising applications for real-world traffic management systems.

Author Contributions

Conceptualization, S.A. and T.B.; methodology, S.A. and L.S.; software, L.S.; writing—original draft preparation, L.S.; writing—review and editing, T.B.; visualization, L.S. and T.B.; supervision, S.A. All authors have read and agreed to the published version of the manuscript.

Funding

The research reported in this paper is part of project no. BME-NVA-02, implemented with the support provided by the Ministry of Innovation and Technology of Hungary from the National Research, Development and Innovation Fund, financed under the TKP2021 funding scheme. The research was also supported by the KDP-2021 program of the Ministry for Innovation and Technology from the source of the National Research, Development and Innovation Fund.

Data Availability Statement

Data are available from the authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fatemi, M.; Killian, T.W.; Subramanian, J.; Ghassemi, M. Medical Dead-ends and Learning to Identify High-Risk States and Treatments. In Advances in Neural Information Processing Systems; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2021; Volume 34, pp. 4856–4870.

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly accurate protein structure prediction with AlphaFold. Nature 2021, 596, 583–589.

- Ibarz, J.; Tan, J.; Finn, C.; Kalakrishnan, M.; Pastor, P.; Levine, S. How to train your robot with deep reinforcement learning: Lessons we have learned. Int. J. Robot. Res. 2021, 40, 698–721.

- Ahmad, T.; Madonski, R.; Zhang, D.; Huang, C.; Mujeeb, A. Data-driven probabilistic machine learning in sustainable smart energy/smart energy systems: Key developments, challenges, and future research opportunities in the context of smart grid paradigm. Renew. Sustain. Energy Rev. 2022, 160, 112128.

- Kelley, S.B.; Lane, B.W.; Stanley, B.W.; Kane, K.; Nielsen, E.; Strachan, S. Smart Transportation for All? A Typology of Recent U.S. Smart Transportation Projects in Midsized Cities. Ann. Am. Assoc. Geogr. 2020, 110, 547–558.

- Manfreda, A.; Ljubi, K.; Groznik, A. Autonomous vehicles in the smart city era: An empirical study of adoption factors important for millennials. Int. J. Inf. Manag. 2021, 58, 102050.

- Fawzi, A.; Balog, M.; Huang, A.; Hubert, T.; Romera-Paredes, B.; Barekatain, M.; Novikov, A.; Ruiz, F.J.R.; Schrittwieser, J.; Swirszcz, G.; et al. Discovering faster matrix multiplication algorithms with reinforcement learning. Nature 2022, 610, 47–53.

- Meta Fundamental AI Research Diplomacy Team (FAIR); Bakhtin, A.; Brown, N.; Dinan, E.; Farina, G.; Flaherty, C.; Fried, D.; Goff, A.; Gray, J.; Hu, H.; et al. Human-level play in the game of Diplomacy by combining language models with strategic reasoning. Science 2022, 378, 1067–1074.

- Wurman, P.R.; Barrett, S.; Kawamoto, K.; MacGlashan, J.; Subramanian, K.; Walsh, T.J.; Capobianco, R.; Devlic, A.; Eckert, F.; Fuchs, F.; et al. Outracing champion Gran Turismo drivers with deep reinforcement learning. Nature 2022, 602, 223–228.

- Vinyals, O.; Babuschkin, I.; Chung, J.; Mathieu, M.; Jaderberg, M.; Czarnecki, W.M.; Dudzik, A.; Huang, A.; Georgiev, P.; Powell, R.; et al. AlphaStar: Mastering the Real-Time Strategy Game StarCraft II. 2019. Available online: https://www.deepmind.com/blog/alphastar-mastering-the-real-time-strategy-game-starcraft-ii (accessed on 10 February 2023).

- Smart Traffic Signals Cut Air Pollution in Pittsburgh. 2019. Available online: https://web.archive.org/web/20131010211917/http://www.mccain-inc.com/news/industry-news/its-solutions/769-smart-traffic-signals-cut-air-pollution-in-pittsburgh.html (accessed on 10 February 2023).

- Lam, J.K.; Eng, P.; Petrovic, S.; Craig, P. 2013 TAC Annual Conference Adaptive Traffic Signal Control Pilot Project for the City of Surrey. In Proceedings of the 2013 Transportation Association of Canada (TAC) Conference and Exhibition, Winnipeg, MB, Canada, 22–25 September 2013.

- Wang, Y.; Yang, X.; Liang, H.; Liu, Y. A review of the self-adaptive traffic signal control system based on future traffic environment. J. Adv. Transp. 2018, 2018, 1096123.

- El-Tantawy, S.; Abdulhai, B.; Abdelgawad, H. Multiagent reinforcement learning for integrated network of adaptive traffic signal controllers (MARLIN-ATSC): Methodology and large-scale application on downtown Toronto. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1140–1150.

- Abdulhai, B.; Pringle, R.; Karakoulas, G.J. Reinforcement learning for true adaptive traffic signal control. J. Transp. Eng. 2003, 129, 278–285.

- Rasheed, F.; Yau, K.L.A.; Noor, R.M.; Wu, C.; Low, Y.C. Deep Reinforcement Learning for Traffic Signal Control: A Review. IEEE Access 2020, 8, 208016–208044.

- Khamis, M.A.; Gomaa, W. Enhanced multiagent multi-objective reinforcement learning for urban traffic light control. In Proceedings of the 2012 11th International Conference on Machine Learning and Applications, Boca Raton, FL, USA, 12–15 December 2012; Volume 1, pp. 586–591.

- Brys, T.; Pham, T.T.; Taylor, M.E. Distributed learning and multi-objectivity in traffic light control. Connect. Sci. 2014, 26, 65–83.

- Barreto, A.; Hou, S.; Borsa, D.; Silver, D.; Precup, D. Fast reinforcement learning with generalized policy updates. Proc. Natl. Acad. Sci. USA 2020, 117, 30079–30087.

- Schrank, D. Published by The Texas A&M Transportation Institute with Cooperation from INRIX. 2021. Available online: https://static.tti.tamu.edu/tti.tamu.edu/documents/mobility-report-2021.pdf (accessed on 10 February 2023).

- Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Al Sallab, A.A.; Yogamani, S.; Pérez, P. Deep reinforcement learning for autonomous driving: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 23, 4909–4926.

- Fehér, Á.; Aradi, S.; Bécsi, T. Fast Prototype Framework for Deep Reinforcement Learning-based Trajectory Planner. Period. Polytech. Transp. Eng. 2020, 48, 307–312.

- Genders, W.; Razavi, S. Using a Deep Reinforcement Learning Agent for Traffic Signal Control. arXiv 2016, arXiv:1611.01142.

- Wang, S.; Xie, X.; Huang, K.; Zeng, J.; Cai, Z. Deep reinforcement learning-based traffic signal control using high-resolution event-based data. Entropy 2019, 21, 744.

- Jin, J.; Ma, X. A group-based traffic signal control with adaptive learning ability. Eng. Appl. Artif. Intell. 2017, 65, 282–293.

- Bouktif, S.; Cheniki, A.; Ouni, A. Traffic signal control using hybrid action space deep reinforcement learning. Sensors 2021, 21, 2302.

- McKenney, D.; White, T. Distributed and adaptive traffic signal control within a realistic traffic simulation. Eng. Appl. Artif. Intell. 2013, 26, 574–583.

- Feng, Y.; Yu, C.; Liu, H.X. Spatiotemporal intersection control in a connected and automated vehicle environment. Transp. Res. Part C Emerg. Technol. 2018, 89, 364–383.

- Xu, B.; Ban, X.J.; Bian, Y.; Li, W.; Wang, J.; Li, S.E.; Li, K. Cooperative method of traffic signal optimization and speed control of connected vehicles at isolated intersections. IEEE Trans. Intell. Transp. Syst. 2018, 20, 1390–1403.

- Yu, C.; Feng, Y.; Liu, H.X.; Ma, W.; Yang, X. Integrated optimization of traffic signals and vehicle trajectories at isolated urban intersections. Transp. Res. Part B Methodol. 2018, 112, 89–112.

- Khamis, M.A.; Gomaa, W. Adaptive multi-objective reinforcement learning with hybrid exploration for traffic signal control based on cooperative multi-agent framework. Eng. Appl. Artif. Intell. 2014, 29, 134–151.

- Houli, D.; Zhiheng, L.; Yi, Z. Multiobjective Reinforcement Learning for Traffic Signal Control Using Vehicular Ad Hoc Network. EURASIP J. Adv. Signal Process. 2010, 2010, 1–7.

- Wan, C.H.; Hwang, M.C. Value-based deep reinforcement learning for adaptive isolated intersection signal control. IET Intell. Transp. Syst. 2018, 12, 1005–1010.

- Tan, T.; Bao, F.; Deng, Y.; Jin, A.; Dai, Q.; Wang, J. Cooperative deep reinforcement learning for large-scale traffic grid signal control. IEEE Trans. Cybern. 2019, 50, 2687–2700.

- Van der Pol, E.; Oliehoek, F.A. Coordinated deep reinforcement learners for traffic light control. In Proceedings of the Learning, Inference and Control of Multi-Agent Systems (at NIPS 2016), Barcelona, Spain, 5–10 December 2016; Volume 8, pp. 21–38.

- Chu, T.; Wang, J.; Codecà, L.; Li, Z. Multi-agent deep reinforcement learning for large-scale traffic signal control. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1086–1095.

- Kővári, B.; Pelenczei, B.; Aradi, S.; Bécsi, T. Reward Design for Intelligent Intersection Control to Reduce Emission. IEEE Access 2022, 10, 39691–39699.

- Eom, M.; Kim, B.I. The traffic signal control problem for intersections: A review. Eur. Transp. Res. Rev. 2020, 12, 1–20.

- Farazi, N.P.; Ahamed, T.; Barua, L.; Zou, B. Deep Reinforcement Learning and Transportation Research: A Comprehensive Review. arXiv 2020, arXiv:2010.06187.

- Wei, H.; Zheng, G.; Gayah, V.; Li, Z. Recent advances in reinforcement learning for traffic signal control: A survey of models and evaluation. ACM SIGKDD Explor. Newsl. 2021, 22, 12–18.

- Wiering, M.A. Multi-agent reinforcement learning for traffic light control. In Machine Learning: Proceedings of the Seventeenth International Conference (ICML’2000), Stanford, CA, USA, 29 June–2 July 2000; Morgan Kaufmann: Stanford, CA, USA, 2000; pp. 1151–1158.

- Mannion, P.; Duggan, J.; Howley, E. An Experimental Review of Reinforcement Learning Algorithms for Adaptive Traffic Signal Control. In Autonomic Road Transport Support Systems; Springer: Berlin/Heidelberg, Germany, 2016; pp. 47–66.

- Sutton, R.S.; Precup, D.; Singh, S. Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning. Artif. Intell. 1999, 112, 181–211.

- Barreto, A.; Dabney, W.; Munos, R.; Hunt, J.J.; Schaul, T. Successor features for transfer in reinforcement learning. In Advances in Neural Information Processing Systems; Massachusetts Institute of Technology: Cambridge, MA, USA, 2017; Volume 30.

- Paramics 3D Traffic Microsimulation & Modelling Software for Transport–SYSTRA. Available online: https://www.paramics.co.uk/en/ (accessed on 10 February 2023).

- Lopez, P.A.; Behrisch, M.; Bieker-Walz, L.; Erdmann, J.; Flötteröd, Y.P.; Hilbrich, R.; Lücken, L.; Rummel, J.; Wagner, P.; Wießner, E. Microscopic Traffic Simulation using SUMO. In Proceedings of the 21st IEEE International Conference on Intelligent Transportation Systems, Maui, HI, USA, 4–7 November 2018.

- Road Traffic Simulation Software—AnyLogic Simulation Software. Available online: https://www.anylogic.com/road-traffic/ (accessed on 10 February 2023).

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. 2013. Available online: http://xxx.lanl.gov/abs/1312.5602 (accessed on 10 February 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).